Abstract

Community college students often are unaware of the stakes involved in their performance on placement exams, used to sort the students into their first math and English courses. In our randomized experiment of approximately 13,000 newly admitted community college students, we test whether informing students, via a supplemental notification letter, about the implications of placement exam scores influences their placement exam participation, preparation, and performance. We also test whether message framing (loss-framed vs. gain-framed) alters the effect of the information on study outcomes. We find that neither type of message has an effect on placement exam preparation or performance. We find limited evidence to suggest that the loss-framed message may have a small effect on the avoidance of placement exams by increasing the likelihood of students’ submitting alternative evidence of academic preparation. Overall, our evidence suggests that students’ prior knowledge about the ramifications of placement exams is not enough to improve performance. Colleges should consider alternative means of assessing college readiness, such as measures of high school achievement, and should provide students who still have to take placement exams with detailed information about test content and study materials before testing.

Introduction

Prior to enrolling in their first courses, most newly admitted community college students must demonstrate preparation for college-level coursework. Students typically are directed to a series of placement exams designed to evaluate proficiency in reading, writing, and math. Based largely on their exam performance, students are assigned either to college-level courses that contribute to earning a postsecondary credential, or to one of several levels of developmental coursework that aims to teach basic skills and competencies necessary for success in college-level coursework. These placement decisions have meaningful consequences for students’ likelihood of completing college (Bahr 2008, 2009; Bailey et al. 2010), but students often are unaware of the high stakes prior to taking the placement tests (Fay et al. 2013; Hodara et al. 2012).

Observing the significance of this issue, Achieving the Dream’s Developmental Education Initiative (DEI) has prioritized an important, unaddressed question within developmental education research, namely “Do efforts to better prepare students and increase awareness of the high-stakes nature of placement tests lead to higher scores and better predictive value?” (Burdman 2012, p. viii). The purpose of this study is to directly address the question posed by DEI. Specifically, we assess the impact of a supplemental notification letter, designed to raise awareness of the implications of placement exams, on students’ participation, preparation, and performance on these exams.

Background

Developmental Education

Community colleges serve as an entry point to higher education for millions of students, admitting nearly all students who choose to apply (Bahr and Gross 2016). In order to match the promise of access with a commitment to fostering success, institutions typically assess new students’ skills in math and English before placing them in the curriculum. Skill assessment has most often been done with standardized placement exams, such as Accuplacer®, Compass®, or Asset® (Burdman 2012; Fields and Parsad 2012; Hughes and Scott-Clayton 2011).

As a result of this assessment, more than half of students who enroll in a community college are placed in developmental coursework in math, reading, writing, or some combination of the three (Attewell et al. 2006; Bailey et al. 2010). Students referred to developmental coursework are required to advance successfully through one to four levels of remedial courses before being permitted to enroll in credit-bearing courses in that subject (Bettinger et al. 2013).

Contrary to the success-fostering intent of developmental education, research unfortunately paints a dismal picture of the educational prospects of students who are referred to developmental coursework. For example, only 33% of students referred to developmental math and 46% of students referred to developmental reading complete the required developmental sequence (Bailey et al. 2010). These figures drop to 17% and 29%, respectively, when students are referred three or more levels below college-readiness (Bailey et al. 2010). Not completing a developmental sequence greatly reduces students’ chances of earning a postsecondary credential or successfully transferring to a four-year institution (Bahr 2008, 2009). Thus, while developmental education may provide to some students the foundational skills necessary to succeed in college (Lesik 2006; Moss and Yeaton 2006, 2013; Moss et al. 2014), many experience developmental education as a stumbling block in their path to a college degree.

Placement Testing

Given the high stakes involved in placement decisions, much attention is being directed by researchers, policymakers, and practitioners to the quality and accuracy of placement exams (Bahr 2016; Bahr et al. in press; Belfield and Crosta 2012; Burdman 2012; Hodara et al. 2012; Scott-Clayton 2012). While placement exams are seemingly efficient sorting tools, evidence suggests that standardized exams are weak predictors of success in college-level courses (Bahr 2016; Belfield and Crosta 2012; Scott-Clayton 2012). In part, this may be due to misalignment between exam content and the requirements of college-level courses in some academic and career pathways. For instance, standardized placement exams in math typically assess students’ readiness for college-level algebra, which is knowledge that is not required in all programs of study (Hodara et al. 2012). Concerns about high rates of placement error have led some researchers to question the use of a single measure of readiness, as embodied in placement exams (Bahr et al. in press; Hodara et al. 2012).

In addition, evidence indicates that students’ experience of the placement process contributes to elevated placement error. Students often are unaware of the consequences of their performance on placement exams and, therefore, do not adequately prepare (Fay et al. 2013; Hodara et al. 2012; Venezia et al. 2010). Furthermore, students often describe placement exams as disconnected from their prior academic preparation, not allowing them to clearly demonstrate their college readiness or to express their academic aspirations and goals. These feelings may be magnified when the placement process centers on an appointment with a computer rather than with an academic advisor. Academic advisors report that students frequently are frustrated once they realize the implications of their scores (Safran and Visher 2010). Some students seek retesting, but many are unaware of options to challenge placement exam results (Venezia et al. 2010). Clearly, more can be done by colleges to ensure that students are equipped with the information that they need to successfully navigate the placement process, including ensuring that, prior to taking the placement exams, students grasp the implications of their performance.

Conceptual Framework: The Influence of Notifications and Message Framing on Awareness

Research on how communication strategies affect individual decision-making processes and consequent behavior often distinguishes between message content that is framed in terms of gains and message content that is framed in terms of losses (Tversky and Kahneman 1981, 1986). Gain-framed messages focus on the positive outcomes (i.e., benefits, rewards) that may result from specified behaviors, while loss-framed messages emphasize risk and possible negative outcomes (i.e., costs, consequences).

Scholars have found inconsistent results when comparing the effects of gain- and loss-framed messages (Maheswaran and Meyers-Levy 1990). Some studies indicate that messages explaining the positive attributes of products or the benefits of particular behaviors are more persuasive than messages highlighting the costs or risks of opposing behaviors (e.g., Rothman et al. 1993). For example, Levin and Gaeth (1988) found that study participants who were told that ground beef was 75% lean tended to rate the product higher on several attributes (e.g., quality, taste) than did participants who were told that the ground beef was 25% fat.

Other studies suggest that messages that foreground costs (i.e., losses) influence behavior more consistently than do messages that focus on benefits. To illustrate, Banks et al. (1995) found that a negatively framed video was more persuasive in influencing women to obtain a mammogram than was a comparable positively framed video. Similarly, Meyerowitz and Chaiken (1987) found that women were more likely to conduct breast self-exams at home if they were warned of the negative consequences of not doing so, as compared with women who were told about the benefits of self-exams.

Still other studies have found that message framing is inconsequential (e.g., Lauvey and Rubin 1990). However, much of the prior work on this subject has focused on health, advertising, and consumption behavior (e.g., Block and Keller 1995; Grewal et al. 1994; Witte and Allen 2000). Few studies have explored whether message framing is important for influencing student behavior and educational outcomes in a higher education context.

Once exposed to a gain- or loss-framed message, message recipients will decide on a course of action or inaction. Rational choice theory suggests that students will make decisions that maximize their utility (e.g., satisfaction, happiness), given the information at their disposal (DesJardins and Toutkoushian 2005). Drawing on this theory, one would expect that community college students who are given information about the high stakes involved in placement testing will adjust their behavior to minimize their costs and maximize their gains. For example, underprepared students may decide not to matriculate if the information leads them to believe that, given their own assessment of their academic readiness, the time and money costs associated with enrollment outweigh the benefits. Another possibility is that students may decide to submit alternative evidence of their academic readiness, such as ACT scores, thereby eliminating the risk of performing poorly on the placement exams and consequently being placed in developmental coursework. Still another possibility is that students choose to study and prepare for placement exams to ensure the best possible scores and the best possible curricular placement from which to begin college. Thus, the decision students face is multifaceted.

When individuals must navigate complex decision-making processes in organizational contexts (Jones 1999; March 1994; Simon 1991), bounded rationality can help us better comprehend their actions. Bounded rationality asserts that decisions arise from two sources: (1) the external environment and the demands or incentives it presents, and (2) the internal make-up of the individual, which includes the individual’s cognitive architecture and the procedural limitations that affect how the individual navigates decision-making processes that occur over long periods of time.

Bounded choice models view the environment as being fundamentally more complex than assumed in rational choice models. This complexity can contribute to a decision-maker’s uncertainty about the probability of certain outcomes and ambiguity about the nature of the problem, like the connection between placement exam performance and long-term academic outcomes (e.g., college graduation). According to March (1994), more information is not likely to provide additional clarity to decision-makers who are navigating environments that are subjectively ambiguous in nature.

In constructing this study, we anticipated that many community college students perceive the assessment and placement process to be abstruse. Further, we anticipated that providing students with additional information about the potential ramifications of placement test results would make the assessment and placement process more transparent. Thus, we expected that a supplementary notification letter would interact with students’ rational decision-making framework to facilitate behaviors that maximize the probability of favorable educational outcomes.

Research Questions

In this study, we use data from a randomized controlled trial of a large sample of newly admitted community college students to test the effect of a low-cost, supplemental notification letter on students’ placement exam participation, preparation, and performance. The letter content highlighted the implications of students’ placement test scores on the financial costs of college and time required to complete a credential. Further, we examine whether framing the notification in terms of gains or losses (benefits or costs, respectively) alters the effect of the letter on student behavior. To organize our inquiry, we pose three research questions and address each in the sequence that our student sample progressed through the placement process:

1. Does receiving information about the consequences of placement exam scores influence students’ participation in the placement process?
2. Does receiving information about the consequences of placement exam scores influence students’ amount or level of preparation for the placement exams?
3. Does receiving information about the consequences of placement exam scores improve students’ placement exam performance?

Data and Methods

Institutional Context

Data for this study were collected at a large, multi-campus, suburban community college in the Midwest, hereafter referred to as the Study Community College (SCC). SCC served more than 24,000 students in the Fall term of 2014 (Ginder et al. 2015). Just under one-third (29%) of students enrolled full-time, 57% were female, and 34% were underrepresented minorities (the other 66% comprised 59% white, 3% unknown race/ethnicity, and 4% non-resident students).

Verification of College Readiness

Newly admitted students at SCC receive an admittance letter that welcomes them to the institution and notifies them of their responsibility to verify college readiness in math and English prior to being allowed to register for college-level courses in these subjects. SCC accepts several methods of verification, in addition to the option of demonstrating readiness on college-administered placement exams. In mathematics, students may submit ACT scores that exceed a specified threshold, documentation of a score of 3 or higher on the Advanced Placement (AP) exam in Calculus AB or BC, evidence of prior completion of the prerequisite math course at another accredited postsecondary institution within the past 3 years, or evidence of completion of a bachelor’s degree or a higher-level credential from an accredited U.S. postsecondary institution. In English, students may submit ACT, SAT, or CLEP scores that exceed specified thresholds, documentation of a score of 3 or higher on the AP exam in English, evidence of prior completion of an equivalent college-level English composition course at another accredited postsecondary institution, or evidence of completion of an associate’s degree or a higher-level credential from an accredited U.S. postsecondary institution.

Students who do not verify college readiness through one of these methods are required to take a placement exam in math, English, or both. During the period of time addressed in this study, SCC administered the Compass® placement exam developed by ACT, Inc. Compass is a commercially available, computer-administered exam that provides scores to quantify students’ skills in math and English. Students may either schedule an appointment to take the necessary exam or take it during walk-in hours at a campus testing center.

Students who achieve sufficiently high placement exam scores are deemed to have verified college readiness and may proceed with enrolling in college-level math and English courses. Students who do not achieve sufficiently high scores are referred to developmental coursework. Students with very low English placement exam scores also are required to meet with an academic advisor prior to enrolling in courses.

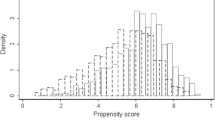

Sample Selection and Random Allocation Process

As noted earlier, we focus on newly admitted community college students. Between July 30, 2013, and July 28, 2014, a total of 13,175 students were admitted to SCC. Of these, we dropped six students due to an administrative error that resulted in double admission. In addition, we dropped 144 students who did not report their age at the time of application, as well as 40 additional students who did not report their gender. Lastly, we dropped 111 students for whom the campus to which they applied was missing, resulting in a final analytical sample of 12,874 students (98% of the original sample).
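The exclusions above can be checked with a few lines of arithmetic. The sketch below, with hypothetical variable names, subtracts each exclusion from the 13,175 admitted students and reports the share retained in the analytical sample.

```python
# Sample attrition as described in the text: start from all admitted
# students and subtract each exclusion in turn.
admitted = 13_175
exclusions = {
    "double admission (administrative error)": 6,
    "missing age": 144,
    "missing gender": 40,
    "missing campus of application": 111,
}

analytic_sample = admitted - sum(exclusions.values())
retained_share = analytic_sample / admitted

print(analytic_sample)          # size of the analytical sample
print(f"{retained_share:.0%}")  # share of the original sample retained
```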

Since SCC uses a rolling application process, students were assigned randomly to conditions throughout the study. At least twice each week, the registrar’s office approved applicants for admission, processed admittance letters in batch form, and sent the letters via U.S. mail. Over the course of the study, there were 93 batches of admittance letters sent by SCC. Due to seasonal variation in the number of applications received, batch size ranged from 10 to 565 students, with an average of 142 students per batch. Of note, the admittance letter contained no information about the implications of students’ placement exam scores; rather, it simply indicated the need to verify college readiness and offered college-administered placement exams as a means to do so.

We randomly assigned each admitted student in each batch to one of three conditions—one of two treatment groups or the control group. To maximize statistical power and control for unobserved student characteristics that vary by season, the random assignment into conditions forced three groups of approximately equal size within each batch (Alferes 2012; Shadish et al. 2002), with groups differing in size by no more than one student and only when the number of students in a batch was not a multiple of three.
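The within-batch allocation rule can be illustrated with a short sketch. The text does not describe the software or exact procedure used, so the function below (`assign_batch`, a hypothetical name) is only one way to satisfy the stated constraints: groups within a batch differ in size by at most one student, and only when the batch size is not a multiple of three. Both the conditions that receive leftover students and the pairing of students with conditions are left to a seeded random number generator.

```python
import random
from collections import Counter

CONDITIONS = ("control", "loss-framed", "gain-framed")


def assign_batch(student_ids, rng):
    """Randomly assign one admittance batch to three near-equal groups.

    Group sizes differ by at most one student, and only when the batch
    size is not a multiple of three.
    """
    base, remainder = divmod(len(student_ids), len(CONDITIONS))
    # Each condition gets `base` slots; the leftover 0-2 slots go to
    # conditions drawn at random without replacement.
    labels = [c for c in CONDITIONS for _ in range(base)]
    labels += rng.sample(CONDITIONS, remainder)
    rng.shuffle(labels)  # random pairing of students with slots
    return dict(zip(student_ids, labels))


rng = random.Random(2013)  # fixed seed for a reproducible illustration
batch = [f"student_{i}" for i in range(142)]  # a batch of average size
print(Counter(assign_batch(batch, rng).values()))
```

Running the rule separately for each of the 93 batches, rather than once over the whole year, is what keeps seasonal differences in applicants balanced across conditions.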

Intervention

Within 1 day of sending the standard admittance letter, the two treatment groups were sent one of two versions of a letter that stressed the high stakes associated with their placement exam scores. To increase the likelihood that students would review the letter, we sent the letter in an official college envelope (addressed from the administrative office) and on college letterhead. Given that the letters were sent very near the time that students were receiving a formal decision about admittance to the college, we expected students’ receptiveness to college communication to be high. However, we did not verify receipt of the intervention letter, nor did we have any method of measuring students’ comprehension of the letter content. We did, however, survey students to see if they recalled receiving the intervention letter and also asked about various behaviors that may have been affected by receiving and reading the letters (discussed later).

For one treatment group, the content of the additional letter focused on the benefits of obtaining high placement exam scores, such as allowing the student to enroll directly in college-level courses, reducing the total financial cost of attendance, and shortening time to completion (see Appendix 1). We refer to the content of this letter as the gain-framed message.

The second treatment group received a similar letter, the content of which focused on the costs associated with obtaining low placement exam scores, such as preventing a student from enrolling directly in college-level courses, increasing the total financial cost of attendance, and lengthening time to completion (see Appendix 2). We refer to the content of this letter as the loss-framed message. The third group of students—the control group—received only the standard admittance letter.

Importantly, to ensure that students who were required to take a placement exam received the intervention prior to testing, we randomized students into conditions before applicant demographic information and evidence of alternative academic preparation (e.g., ACT scores) were available. As a result, students who were later excluded from the analysis due to missing data received the intervention, as did students who ultimately were not required to complete a placement exam because they were able to verify college readiness through other means. After dropping cases with missing data, the treatment and control groups remained nearly equal in size, with 4283 students (33.3%) assigned to the loss-frame treatment group, 4314 students (33.5%) assigned to the gain-frame treatment group, and 4277 students (33.2%) assigned to the control group.

Dependent Variables

We divide our dependent variables into three groups corresponding to our research questions, addressing participation in the placement process, preparation for the placement exams, and performance on those exams.

Participation

Two nominal variables were constructed from administrative records to describe students’ participation in the Compass English and math exams. The variable for English took on five values, as follows:

1. took the Compass English exam (n = 4851; 38%),
2. sought to verify college readiness in English by submitting an alternative standardized English score (n = 308; 2%),
3. both took the Compass English exam and submitted an alternative standardized English score (n = 108; 1%),
4. took only the English language proficiency exam (n = 681; 5%), and
5. did not participate in the English placement process (n = 6926; 54%).

Of the 6926 students who did not participate in the English placement process, 79% (5440 students) had not enrolled in SCC by the summer term of 2015, 2 years after beginning the intervention and 1 year after concluding it.

The variable for participation in math placement took on four values, as follows:

1. took the Compass math exam (n = 4227; 33%),
2. submitted an alternative standardized math score (n = 1496; 12%),
3. both took the Compass math exam and submitted an alternative standardized math score (n = 692; 5%), and
4. did not participate in the math placement process (n = 6459; 50%).

Of the 6459 students who did not participate in the math placement process, 83% (5368 students) had not enrolled in SCC by the summer term of 2015. Overall, of those students who opted to participate in the placement process by sitting for a placement test and/or submitting an alternative score, 3.3% submitted scores for only math or English.

Although the majority of students who did not participate in the math and English placement process did not enroll in SCC, the reverse is true of those who participated in either or both subject placement exams. Nearly three-quarters (74%; n = 5528) of the 7460 students who took a placement exam enrolled in SCC, and most (96%; n = 5307) of these students did so within 1 year of applying to SCC. Enrollment versus non-enrollment did not differ significantly by treatment condition (χ² [2] = 1.32; p = 0.51).

Preparation

To address preparation, we administered a survey to students who participated in a placement exam (n = 7460). This survey was administered as part of the placement exam process, on the same computer, immediately after students logged in for the exam but before they attempted it. Consequently, data on preparation were unavailable for students who did not participate in a placement exam. Furthermore, some students who took the placement exam chose not to respond to some or all of the survey questions. In total, 5244 students (70%) responded to at least one survey question.

Note that, for 23% of the survey respondents (n = 1224), two or more sets of survey responses were recorded. Multiple sets of responses occurred when a student took two or more Compass placement exams on different dates or at different times, rather than taking all required placement exams in a single sitting. In such cases, we used the first set of survey responses recorded for the student because these responses were temporally nearest to the receipt of the treatment.

Three dependent variables were drawn from the survey questions. Students were asked whether they recalled receiving the notification letter, specifically, “Did you receive a letter from [SCC] regarding the importance of this exam for determining in which courses you may enroll?” In total, 98% of students who responded to the survey answered this question (n = 5153), with 58% reporting that they received the letter (n = 2998) and 42% reporting that they did not receive the letter (n = 2155). Students assigned to either treatment group were significantly more likely than were students in the control group to report receiving the intervention letter, both when examined by each treatment group separately (χ² [2] = 8.66; p = 0.01) and when treatment groups were pooled (χ² [1] = 8.58; p = 0.003). Upon closer examination, these differences were modest, with the treatment groups being approximately 4 percentage points more likely than the control group to report receiving the letter. Finally, we tested whether students who participated in the placement process and reported receiving the letter differed demographically from the larger group of students assigned to the treatment. We found no significant differences in age (t [3408] = 1.38; p = 0.17), gender (χ² [1] = 0.44; p = 0.51), or campus of application (χ² [4] = 1.56; p = 0.82) between the two groups.
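The p-values reported for these chi-square tests can be recovered from each statistic and its degrees of freedom. The helper below is an illustrative sketch, not the authors' code; it relies on closed forms of the chi-square upper tail that hold for 1 degree of freedom and for even degrees of freedom, which covers every χ² test in this paragraph. Because the text reports rounded p-values, small differences in the last digit are expected.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X >= x) for a chi-square variable.

    Closed forms only: df = 1 via the complementary error function,
    and even df via exp(-x/2) * sum_{k < df/2} (x/2)^k / k!.
    """
    if df == 1:
        return math.erfc(math.sqrt(x / 2))
    if df > 0 and df % 2 == 0:
        half = x / 2
        return math.exp(-half) * sum(half**k / math.factorial(k) for k in range(df // 2))
    raise ValueError("this sketch handles df = 1 or even df only")


# Statistics and degrees of freedom reported in this subsection.
for stat, df in [(8.66, 2), (8.58, 1), (0.44, 1), (1.56, 4)]:
    print(f"chi2[{df}] = {stat}: p = {chi2_sf(stat, df):.3f}")
```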

Students also were asked to report how prepared they were to take the placement exam, specifically, “How prepared are you to take this placement test?” Ninety-nine percent of survey respondents answered this question (n = 5170), with 4% reporting that they were not prepared (n = 225), 12% reporting that they were somewhat unprepared (n = 642), 59% reporting that they were somewhat prepared (n = 3056), and 24% reporting that they were well prepared (n = 1247). The last relevant survey question asked students to estimate the number of hours that they spent preparing for the placement exam. Again, 99% of survey respondents answered this question (n = 5173). They reported studying from 0 to 9 h, with a mean of 1.8 h (s = 2.3).

Performance

We drew on administrative records of placement exam scores to test the effect of the treatment on student performance. For English performance, we combined students’ scores on the Compass writing and reading subtests, consistent with SCC’s placement practice. SCC requires that students achieve a combined score of 150 or higher to be placed into college-level English. Among the 4959 students who took the Compass English exam, either alone (n = 4851) or in combination with submitting alternative standardized evidence of readiness (n = 108), the average combined score was 172.5 (s = 13.5) for students who met the college-level placement threshold and 97.4 (s = 34.4) for those who scored below it.
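SCC's English placement rule, as described above, reduces to summing two subtest scores and comparing the total with 150. The function below is a hypothetical sketch of that rule only: it collapses every below-threshold outcome into a single "developmental" label, although students may be referred to one of several developmental levels, it ignores the advisor-meeting requirement for very low scores, and the scores in the usage line are invented.

```python
COLLEGE_LEVEL_ENGLISH_CUTOFF = 150  # combined reading + writing score required at SCC


def english_placement(reading, writing):
    """Return (combined score, placement) under a simplified two-way rule.

    Hypothetical simplification: every score below the cutoff is labeled
    "developmental" regardless of how far below the cutoff it falls.
    """
    combined = reading + writing
    if combined >= COLLEGE_LEVEL_ENGLISH_CUTOFF:
        return combined, "college-level"
    return combined, "developmental"


print(english_placement(reading=80, writing=70))  # invented scores
```

Because placement depends only on the sum, the analysis treats the combined score as the single English performance outcome.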

Due to the adaptive testing algorithms used in the Compass math placement exam, analyzing the effect of the intervention on math performance was more complicated. Students were assessed through a branching series of subtests that began with high school algebra. A student’s performance on the high school algebra problems determined whether s/he remained in this placement area or was shifted down to the pre-algebra subtest or up to the college algebra subtest, and then possibly further up into a trigonometry subtest. The highest-level subtest that a student was able to complete was used by the college as the placement domain, providing the score used to place the student in the math curriculum. At SCC, this is the only score that is maintained in the administrative database. For example, in the database, students who have a pre-algebra score do not have algebra, college algebra, or trigonometry scores. Likewise, students who have an algebra score do not have pre-algebra, college algebra, or trigonometry scores, and so on (see Footnote 1).

Consequently, for the 4919 students who took the Compass math placement exam, it was necessary to analyze the effect of the treatment separately for students who placed in each math domain. More specifically, we analyzed the effect of the treatment on math performance among lower-achieving students with pre-algebra subtest scores (n = 2910; mean = 27.7 points; s = 8.9; range of 16–84) separately from the effect of the treatment among higher-achieving students with Compass algebra subtest scores (n = 1811; mean = 35.4; s = 10.8; range of 15–89). We did not analyze the effect of the treatment on math performance among very-high-achieving students with placement domains of college algebra or trigonometry due to small analytical subsamples: just 67 students placed in the college algebra domain, and only 131 students placed in the trigonometry domain.
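Why the math domains must be analyzed separately can be seen in a small sketch of the stored record. The code assumes, as a simplification, that a student's routing ends in a single domain and that only that domain's score is kept, as the text reports for SCC's database; the function names, routing paths, and scores are hypothetical. The 1 to 4 domain codes follow the ordinal coding the study uses for the placement-domain outcome.

```python
# Compass math placement domains, lowest to highest. The 1-4 codes match
# the ordinal placement-domain outcome (pre-algebra = 1 ... trigonometry = 4).
DOMAINS = ("pre-algebra", "algebra", "college algebra", "trigonometry")


def stored_record(routing_path):
    """Reduce an adaptive test session to the single record a database keeps.

    routing_path: list of (domain, score) pairs in the order administered.
    Assumption (hypothetical): the domain where routing ends is the placement
    domain, and every other subtest score is discarded.
    """
    if not routing_path:
        raise ValueError("student has no math subtest results")
    domain, score = routing_path[-1]
    return {"domain": domain, "domain_code": DOMAINS.index(domain) + 1, "score": score}


def scores_by_domain(records):
    """Split stored scores into per-domain subsamples for separate analyses."""
    return {d: [r["score"] for r in records if r["domain"] == d] for d in DOMAINS}


# Hypothetical students: one routed down, one staying in algebra, one routed up.
paths = [
    [("algebra", 18), ("pre-algebra", 31)],
    [("algebra", 42)],
    [("algebra", 70), ("college algebra", 46)],
]
records = [stored_record(p) for p in paths]
print(scores_by_domain(records))
```

Each student contributes a score to exactly one domain list, so a pre-algebra score and an algebra score are never observed for the same student and cannot be pooled on one scale.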

It is possible, though, that the effect of the treatment on math performance is such that treated students were more likely to place in a higher (or lower) math domain, which would be unobserved when each math domain is analyzed separately. To test this possibility, we estimate the effect of the treatment on students’ math placement domain, using an ordinal outcome variable and including all four domains (n = 4919; pre-algebra = 1, algebra = 2, college algebra = 3, trigonometry = 4).

Independent Variables

The primary independent variable of interest in this study is the treatment, coded as a three-category nominal variable: control (= 0), loss-framed message (= 1), and gain-framed message (= 2). Because many students apply to SCC but do not enroll, the selection of statistical controls was limited to elements collected in the short application for admission. Accordingly, our analysis incorporated students’ self-reported age, gender, and the campus to which each student applied, all collected in the admission application. As noted earlier, students with missing data on age, gender, or campus of application were dropped from the analysis. Students’ race/ethnicity, also collected at the time of application, was not included in our analysis because data on this variable were missing for 88% of the sample.

Methods of Analysis

Data were analyzed and results are presented in the sequence in which students progressed through the assessment process. First, students made decisions about placement test participation. Then, for students who chose to take the placement test, a survey was administered asking questions about their preparation. Finally, for students who completed a placement test, we analyzed their performance.

Participation

We used multinomial logistic regression to test the effect of the intervention on students’ mode of participation in the placement testing process. In the case of the five-category nominal variable representing participation in English placement testing, we set exclusive participation in the Compass English exam (i.e., no submission of alternative evidence of college readiness) as the baseline outcome category. Likewise, for participation in the math placement testing process, we set exclusive participation in the Compass math exam as the baseline outcome category. Estimates from the logistic models were converted to predicted probabilities of participation to provide a measure of absolute difference between groups, as opposed to presenting differences relative to the comparison group (Graubard and Korn 1999). In both models, we controlled for sex (male, female, missing), age (identity, square, and cube to account for nonlinear relationships), and campus of application.
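The conversion from multinomial logit estimates to predicted probabilities can be sketched as follows. Each non-baseline outcome has its own coefficient vector, the baseline (exclusive Compass participation) has a linear predictor fixed at zero, and probabilities follow from a softmax over the outcomes. The text does not say whether probabilities were computed at covariate means or averaged over students; the second helper averages over the sample with every student set to one condition, which is one common choice. Both function names are hypothetical, and coefficients would come from a fitted model.

```python
import numpy as np


def mnl_probabilities(X, betas):
    """Predicted outcome probabilities from a baseline-category multinomial logit.

    X     : (n, p) design matrix (intercept, treatment dummies, controls).
    betas : (p, K - 1) coefficients, one column per non-baseline outcome.
    Returns an (n, K) array whose column 0 is the baseline outcome.
    """
    eta = X @ betas                                      # log-odds vs. baseline
    eta = np.column_stack([np.zeros(X.shape[0]), eta])   # baseline fixed at 0
    eta = eta - eta.max(axis=1, keepdims=True)           # guard against overflow
    expd = np.exp(eta)
    return expd / expd.sum(axis=1, keepdims=True)


def average_probabilities(X, betas, treat_cols, active_col=None):
    """Average predicted probabilities with every student set to one condition.

    treat_cols : indices of the treatment dummy columns in X.
    active_col : dummy to switch on (None means control: all dummies 0).
    """
    Xc = np.array(X, dtype=float, copy=True)
    Xc[:, treat_cols] = 0.0
    if active_col is not None:
        Xc[:, active_col] = 1.0
    return mnl_probabilities(Xc, betas).mean(axis=0)
```

Subtracting the control-condition vector from a treatment-condition vector gives the absolute (percentage-point) differences between groups that the text describes, instead of odds ratios relative to the baseline outcome.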

Preparation

Our analyses of the survey data were restricted to students who took a placement exam because the survey was administered electronically immediately before the placement exam, at the same sitting. We used logistic regression to test the effect of the intervention on the probability that a student reported receiving the intervention letter. We used ordinal logistic regression to test the effect of the intervention on self-reported readiness for the Compass placement exam. We used ordinary least squares (OLS) regression to test the effect of the intervention on self-reported hours of preparation for the Compass placement exam. Again, we controlled for sex, age, the square of age, the cube of age, and campus of application.

Performance

We used OLS regression to test the effect of the intervention on scores achieved on the Compass English exam, the Compass pre-algebra subtest, and the Compass algebra subtest. We used ordinal logistic regression to test the effect of the treatment on students’ likelihood of placing in a higher or lower math domain. As in previous models, we controlled for sex, age (identity, square, and cube), and campus of application.
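An ordinal (proportional-odds) logistic model treats the math domains as ordered categories separated by cutpoints and lets the treatment shift a single latent index. The sketch below uses a common parameterization, P(Y ≤ j) = logistic(τ_j − x′b), so that a larger index moves probability toward higher domains. The domain order follows the Results (pre-algebra, algebra, college algebra, trigonometry); the cutpoints and the shift of 0.15 are hypothetical values, not estimates from this study.

```python
import math

# Math placement domains in ascending order, as named in the Results.
DOMAINS = ("pre-algebra", "algebra", "college algebra", "trigonometry")


def logistic(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def ordered_logit_probs(xb: float, cutpoints: list) -> list:
    """Category probabilities under a proportional-odds (ordinal logit) model.

    Uses P(Y <= j) = logistic(tau_j - xb), so a larger xb moves probability
    toward higher domains.
    """
    if len(cutpoints) != len(DOMAINS) - 1:
        raise ValueError("need one cutpoint per boundary between domains")
    if any(a >= b for a, b in zip(cutpoints, cutpoints[1:])):
        raise ValueError("cutpoints must be strictly increasing")
    cumulative = [logistic(t - xb) for t in cutpoints] + [1.0]
    # Differences of adjacent cumulative probabilities give each category's probability.
    return [cumulative[0]] + [cumulative[j] - cumulative[j - 1]
                              for j in range(1, len(cumulative))]


# Hypothetical cutpoints and treatment shift (not estimates from this study).
cutpoints = [0.0, 1.8, 3.0]
control = ordered_logit_probs(0.0, cutpoints)
gain_frame = ordered_logit_probs(0.15, cutpoints)
for name, p0, p1 in zip(DOMAINS, control, gain_frame):
    print(f"{name:>16}: control {p0:.3f}  gain-frame {p1:.3f}")
```

Because one coefficient governs every boundary, this model assumes the treatment shifts all domain boundaries by the same amount on the log-odds scale; the separate logistic models reported in the Results relax that assumption one boundary at a time.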

Potential Limitations of the Data

This study benefits from a large and diverse sample of students, strengthening its capacity to inform policy and practice pertaining to student placement in institutions of similar size and student composition. Still, such policies and practices vary considerably across institutions. Therefore, caution should be exercised in generalizing our findings to institutions that are markedly different from SCC.

In addition, SCC’s admissions application was the only source of data for students in this study who did not participate in placement testing. The brevity of this instrument, combined with the optional nature of some of its elements (e.g., race/ethnicity), limited the number of covariates available for our analysis. Nevertheless, random assignment of our treatment greatly reduces the importance of such covariates, as compared with nonexperimental studies.

Finally, as noted earlier, students at SCC may seek exemption from placement exams by submitting selected alternative standardized test scores (e.g., ACT, SAT), documentation of adequate performance on certain AP exams, or evidence of completion of equivalent levels of coursework in math or English at other accredited postsecondary institutions. The data used in this study include alternative standardized exam scores submitted by students, but do not include information on AP exams or prior completion of relevant courses. Very few students (less than 1%) attempted to submit these other forms of alternative placement evidence, however. Hence, we expect that the impact on our findings would be negligible even if this information were available.

Results

The distributions of sex, age, and campus of application at key stages of this study are presented in Table 1. Tests of imbalance in the distribution of these characteristics by treatment condition reveal no significant differences within the stages shown in Table 1. This finding provides assurance that any observed effects of the treatment are not due to differential selection on any of the variables for which we have data. Additional balance tests (not shown), in which cases with missing data were coded to missing categories rather than dropped from the analysis, further confirmed that the distribution of student characteristics did not differ significantly across treatment conditions.

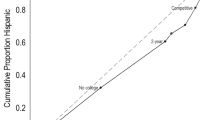
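The text does not name the specific balance test used. For a categorical characteristic such as sex or campus of application, one common choice is Pearson's chi-square test of independence between treatment condition and the characteristic; the sketch below assumes that test (a continuous characteristic such as age would need a different test, e.g., a one-way ANOVA, not shown). The p-value uses the closed-form chi-square survival function built by the recurrence Q(x | ν + 2) = Q(x | ν) + (x/2)^(ν/2) e^(−x/2) / Γ(ν/2 + 1). The counts are hypothetical.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X > x) for a chi-square variable with df degrees of freedom."""
    if x <= 0:
        return 1.0
    # Start from df = 1 (via erfc) or df = 0 (zero), then step up by 2.
    if df % 2:
        q, nu = math.erfc(math.sqrt(x / 2)), 1
    else:
        q, nu = 0.0, 0
    while nu < df:
        # Add (x/2)^(nu/2) * exp(-x/2) / Gamma(nu/2 + 1), computed on the log scale.
        q += math.exp((nu / 2) * math.log(x / 2) - x / 2 - math.lgamma(nu / 2 + 1))
        nu += 2
    return min(q, 1.0)


def pearson_chi2(table):
    """Pearson chi-square test of independence for an r x c table of counts.

    Rows are treatment conditions; columns are categories of a characteristic.
    Returns (statistic, degrees of freedom, p-value).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df, chi2_sf(stat, df)


# Hypothetical female/male counts in the control, loss-frame, and gain-frame arms.
counts = [[2300, 2000], [2280, 2020], [2310, 1990]]
stat, df, p = pearson_chi2(counts)
print(f"chi2 = {stat:.2f}, df = {df}, p = {p:.3f}")
```

Under random assignment, a large p-value is the expected result; a small one would flag a chance imbalance worth adjusting for.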

Participation

Results for our multinomial logistic regression analysis of the five-category nominal variable representing participation in English placement testing and the four-category nominal variable representing participation in math placement testing are presented in Table 2. In Table 3, we provide predicted probabilities of each mode of participation in English and math placement testing for a typical student in our sample (the modal categories of female, 19 years of age, and applying to Campus E).

The findings indicate that, as compared with students who did not receive a letter (the control group), students who received the loss-framed letter had a significantly higher relative risk of submitting an alternative exam score in English rather than taking only the Compass English exam. Practically speaking, however, this difference between the control and loss-frame treatment groups is quite small: the loss-frame group is predicted to be just one percentage point more likely to submit an alternative exam score.
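Why a statistically significant relative risk ratio can correspond to a difference of only about one percentage point follows from the form of the multinomial model. Writing A for submitting an alternative exam score only and C for the Compass-only baseline, with subscripts T and 0 for the loss-frame and control groups:

```latex
\begin{aligned}
\mathrm{RRR} &= e^{\beta_{\text{loss}}}
  = \frac{P_T(A)\,/\,P_T(C)}{P_0(A)\,/\,P_0(C)} \\[4pt]
P_T(A) &\approx \mathrm{RRR}\cdot P_0(A)
  \qquad \text{if } P_T(C) \approx P_0(C) \\[4pt]
\Delta P(A) &= P_T(A) - P_0(A) \approx (\mathrm{RRR} - 1)\,P_0(A)
\end{aligned}
```

As a purely hypothetical illustration (not the study's estimates), a control-group probability of 4% combined with a relative risk ratio of 1.25 implies a difference of roughly 0.25 × 4 = 1 percentage point. When the outcome is uncommon and the baseline category dominates, even a sizable relative effect moves the absolute probability very little.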

The findings for math are similar. As compared with the control group, students who received the loss-framed message had a higher relative risk of submitting alternative exam scores for math, whether alone or in conjunction with taking the Compass math exam, versus only taking the Compass math exam. Again, the practical differences between the control and loss-frame treatment groups are quite small, amounting to a one- to two-percentage-point greater likelihood of submitting an alternative exam score among students in the loss-frame group.

Preparation

Results of our tests of the effect of the intervention on self-reported receipt of the treatment, self-reported readiness for the exam, and self-reported hours of preparation are presented in Table 4. We find that both the loss- and gain-frame groups were significantly more likely than the control group to report receiving the letter that constituted the treatment. Although the difference between control and treated students was substantively small, this finding is reassuring because a null finding could indicate that students disregarded the supplemental letter. Yet, despite this reassuring evidence, neither the loss- nor the gain-framed message had a significant effect on self-reported readiness or self-reported hours of preparation.

Performance

We find no evidence of a significant effect of the treatment on either English or math placement exam scores (see Table 4). For each of the three Compass scores considered here, neither the gain- nor the loss-framed message has a statistically significant effect.

However, we find a small, significant effect of the gain-framed message on students’ likelihood of advancing up a math domain, indicating an improvement in math placement exam performance. Upon examining this effect more closely using a series of logistic regression models (not shown), we find that students who received the gain-framed message were significantly more likely than students in the control group to place in the algebra domain rather than the pre-algebra domain (b = 0.170; p = 0.033). A typical student (modal sex, age, and campus of application) who received the gain-framed treatment is predicted to be four percentage points more likely to place in the algebra domain rather than the pre-algebra domain, relative to a peer who did not receive the treatment. No such effect is observed for the loss-framed message, nor is an effect of either treatment observed at either of the two other math domain boundaries (i.e., algebra vs. college algebra; college algebra vs. trigonometry).
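The link between the reported coefficient (b = 0.170) and a predicted difference of about four percentage points can be checked with a short calculation. The text does not report the typical student's baseline probability of placing in algebra rather than pre-algebra, so the sketch treats that probability as an input: the gain-framed message adds b on the log-odds scale, and the implied percentage-point change depends on where the baseline sits. The function name `pp_change` is hypothetical.

```python
import math


def logit(p: float) -> float:
    return math.log(p / (1.0 - p))


def logistic(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def pp_change(baseline_p: float, b: float) -> float:
    """Change in predicted probability when a log-odds coefficient b is added."""
    if not 0.0 < baseline_p < 1.0:
        raise ValueError("baseline probability must lie strictly between 0 and 1")
    return logistic(logit(baseline_p) + b) - baseline_p


B_GAIN = 0.170  # reported coefficient: algebra vs. pre-algebra, gain frame vs. control
for p0 in (0.2, 0.35, 0.5, 0.65, 0.8):
    print(f"baseline {p0:.2f}: {100 * pp_change(p0, B_GAIN):+.1f} percentage points")
```

The change is largest near a baseline of 0.5, where it is approximately b/4, or about 4.2 percentage points; the roughly four-point difference reported for the typical student falls within this bound.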

Heterogeneity Analysis

Another possibility we considered is that the effect of our treatment varies by gender and age. For example, average gender differences in academic experiences may influence how students perceive the supplemental information, resulting in different responses to the treatment. Similarly, older students may respond to the treatment differently than younger students do because of concerns about time away from school and rusty skills. Older students also may have less access than younger students to alternative test scores that would allow them to bypass placement exams.

To investigate these possibilities, we re-estimated all models, first interacting the treatment with gender and then, in a separate set of models, interacting the treatment with age (identity, square, and cube). The results (not shown) are largely consistent with our previous findings with two exceptions. First, with respect to gender, males who received the loss-framed letter exhibited a small but statistically significant increase of approximately one-quarter of an hour (0.28 h) in self-reported preparation for the placement exams, relative to males in the control group. This effect is not observed among females, and it is not observed for either males or females who received the gain-framed letter.

With respect to age, older students who received the gain-framed letter and who placed in the pre-algebra domain experienced a small but statistically significant increase in their math score. The effect is greatest among students in their early to mid-40s, but even at its peak it amounts to only a two-point increase relative to the control group. No such age-related effects were observed among students who received the loss-framed letter or among students who placed in the algebra domain.
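In a linear (OLS) model, interacting the treatment with the cubic age terms makes the treatment effect itself a cubic function of age, which is how an effect can peak at a particular age. The sketch below shows how the interaction columns could be formed and how the implied effect could be traced across an age grid. The function names, the 18 to 70 age grid, and the coefficient values are hypothetical, and the code assumes covariate rows use the dummy names `loss_frame`, `gain_frame`, `age`, `age_sq`, and `age_cu`.

```python
def add_interactions(row: dict,
                     treatments=("loss_frame", "gain_frame"),
                     moderators=("age", "age_sq", "age_cu")) -> dict:
    """Return a copy of `row` with treatment-by-moderator product columns added."""
    out = dict(row)
    for t in treatments:
        for m in moderators:
            out[f"{t}_x_{m}"] = row[t] * row[m]
    return out


def effect_at_age(age: float, b_main: float, b_age: float,
                  b_age_sq: float, b_age_cu: float) -> float:
    """Treatment effect at a given age when treatment is interacted with age, age^2, age^3.

    b_main is the treatment main effect; the other arguments are the
    interaction coefficients. Valid for a linear model such as OLS on scores.
    """
    return b_main + b_age * age + b_age_sq * age ** 2 + b_age_cu * age ** 3


def peak_age(coefs, ages=range(18, 71)):
    """Age (on a hypothetical integer grid) at which the implied effect is largest."""
    return max(ages, key=lambda a: effect_at_age(a, *coefs))


# Hypothetical coefficients, for illustration only.
coefs = (-3.0, 0.10, 0.002, -0.00004)
best = peak_age(coefs)
print(best, round(effect_at_age(best, *coefs), 2))
```

The gender interactions follow the same pattern with a single moderator, for example `add_interactions(row, moderators=("female",))`.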

Sensitivity of Results to Alternative Model Specifications

We conducted several additional analyses to test the sensitivity of our results to alternative specifications of the models, seeking to address the most critical threats to the validity of our findings. First, in order to test the effect of simply receiving a message about the consequences of placement exam scores (without regard to its framing), we pooled the two treatment conditions into a single treatment group and re-estimated our main analyses. Substantively speaking, the results (not shown) differ only in that the effect of the pooled treatment on the likelihood of submitting alternative evidence of English readiness is not statistically significant, whereas we found that the effect of the loss-framed letter was a significant (though small) increase in the likelihood of submitting such alternative evidence.

Second, to ensure that our findings are not influenced by the loss of the few students who did not report their age or gender, or who were missing information for the campus of application, we recoded all missing values on gender and campus to a missing category on these variables, rather than dropping these cases. For individuals who did not report their age, we used the mean age of the subsample for each specific analysis and then created an additional dummy indicator of age imputation. Handling missing data in this manner allowed us to retain all students in the analyses. The results (not shown) again aligned closely with our main analyses, lending confidence in our findings.
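The missing-data rules in this sensitivity analysis can be sketched as a recoding step applied to each analysis subsample. The function name `recode_missing` and the record layout (dictionaries with `sex`, `campus`, and `age` keys, where `None` marks a missing value) are hypothetical assumptions; the rules themselves follow the text: an explicit "missing" category for sex and campus, and subsample-mean imputation of age plus an imputation indicator.

```python
def recode_missing(records: list) -> list:
    """Apply the sensitivity-analysis missing-data rules to one analysis subsample.

    Missing sex or campus becomes an explicit "missing" category; missing age is
    replaced by the subsample's mean observed age and flagged with a dummy.
    """
    observed_ages = [r["age"] for r in records if r.get("age") is not None]
    if not observed_ages:
        raise ValueError("no observed ages to compute a mean from")
    mean_age = sum(observed_ages) / len(observed_ages)
    recoded = []
    for r in records:
        age_missing = r.get("age") is None
        recoded.append({
            **r,
            "sex": r["sex"] if r.get("sex") is not None else "missing",
            "campus": r["campus"] if r.get("campus") is not None else "missing",
            "age": mean_age if age_missing else r["age"],
            "age_imputed": int(age_missing),  # dummy indicator of age imputation
        })
    return recoded


# Hypothetical subsample of three students.
sample = [
    {"id": 1, "sex": "female", "campus": "E", "age": 19},
    {"id": 2, "sex": None, "campus": "B", "age": 25},
    {"id": 3, "sex": "male", "campus": None, "age": None},
]
for r in recode_missing(sample):
    print(r)
```

Because the mean is computed inside the function, calling it separately on each analysis subsample reproduces the text's rule of using the mean age of the subsample for each specific analysis.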

Third, we used instrumental variable analysis to determine whether treatment delivery (i.e., students’ reported receipt of the letter) altered the estimated effect of the intervention on the number of hours studied, as well as on scores for the English, pre-algebra, and algebra tests. For these analyses, we applied a two-stage least squares regression model that included a binary random assignment variable as the instrument, as well as all previously mentioned covariates. These results (not shown) confirm that receipt of the letter did not significantly affect any of the test scores or hours of preparation.
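With a single binary instrument (random assignment to receive a letter), a single endogenous regressor (reported receipt), and no covariates, two-stage least squares reduces to the Wald ratio: the intention-to-treat difference in the outcome divided by the difference in reported receipt between assigned and unassigned students. The sketch below omits the covariates that the analysis included, so it is a simplified illustration rather than the specification used here, and the data are made up. It allows control students to report receiving a letter, which the Discussion notes may have occurred.

```python
def mean(xs):
    xs = list(xs)
    if not xs:
        raise ValueError("empty group")
    return sum(xs) / len(xs)


def wald_iv(z, d, y):
    """IV estimate with one binary instrument and no covariates (equivalent to 2SLS).

    z: random assignment (1 = sent a letter, 0 = control)
    d: treatment received (1 = reported receiving the letter)
    y: outcome, e.g., hours of preparation or a placement score
    """
    if not (len(z) == len(d) == len(y)):
        raise ValueError("z, d, and y must have equal length")
    assigned = [i for i, zi in enumerate(z) if zi == 1]
    control = [i for i, zi in enumerate(z) if zi == 0]
    itt = mean(y[i] for i in assigned) - mean(y[i] for i in control)
    first_stage = mean(d[i] for i in assigned) - mean(d[i] for i in control)
    if first_stage == 0:
        raise ValueError("instrument does not shift receipt; IV estimate undefined")
    return itt / first_stage


# Made-up data: one assigned student did not report receipt; one control student did.
z = [1, 1, 1, 1, 0, 0, 0, 0]
d = [1, 1, 1, 0, 1, 0, 0, 0]
y = [5, 5, 5, 3, 4, 3, 3, 3]
print(wald_iv(z, d, y))
```

Under the usual IV assumptions, this ratio is interpreted as the effect among students whose receipt of the letter depends on their assignment. A small first-stage difference, like the modest gap in reported receipt described in the Results, makes the ratio imprecise.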

Finally, we re-estimated our analyses of placement exam preparation and performance, limiting the sample to untreated students and treated students who reported receiving the letter that constituted the treatment. The results (Table 5) indicate that treated students—both the gain-frame and loss-frame groups—who reported receiving the letter also reported significantly higher levels of readiness and a significantly greater number of hours of preparation for the placement exams, as compared with the control group. However, these effects do not translate into stronger performance on either the English or math placement exams, nor does the significant, positive effect of the gain-framed message on math placement domain remain.

Ancillary Analyses

Our findings also raise several questions worthy of further investigation. Among these, the large fraction of students who do not enroll in community college after being admitted raises the question of whether our treatment influenced students’ decisions in this regard. To test this possibility, we constructed two dichotomous outcome variables that capture enrollment in SCC. The first captures whether a student enrolled in SCC at any point within the time horizon of our administrative data, which ends with the summer 2015 term (n = 12,874; 45.9% enrolled; 54.1% did not enroll). The second captures whether a student enrolled in SCC within one year of applying to SCC (n = 12,874; 43.7% enrolled; 56.3% did not enroll). We used logistic regression to test the effect of the intervention on each of these outcomes, employing the same statistical controls as in our main analyses. The results (not shown) indicate that neither the gain- nor the loss-framed message had any effect on students’ decisions to enroll in SCC.

Our main analyses indicated that the loss-framed message increased the likelihood that students would submit alternative evidence of college readiness, whether in conjunction with taking the placement exam (as observed with math) or instead of taking the placement exam (as observed with both math and English), relative to taking the placement exam alone. We do not know, however, whether the treatment increased the likelihood that college-ready students would submit evidence of their readiness. Such an effect presumably would result in a distribution of submitted ACT scores that differed between students in the treatment and control groups. To test this possibility, we used OLS regression to analyze variation in students’ submitted ACT math scores (n = 2188) as a function of the three-category treatment variable and our previous set of statistical controls. We did the same with students’ ACT English and reading scores (n = 401). The results (not shown) indicate no significant differences in ACT scores between the control group and either of the treatment groups.

Finally, we disaggregated the effect of the treatment on English exam performance into its reading and writing components, using OLS regression to analyze the reading and writing subtest scores separately rather than as a composite. The results (not shown) indicated a small but statistically significant negative effect of the loss-framed message on reading performance, as compared with the control group. However, the estimated difference of 1.3 points is not practically significant given the 99-point range of the exam. Otherwise, the findings align with our main analyses.

Discussion

Community colleges across the country are implementing changes to their assessment processes in an effort to ensure that new students are accurately placed in the curriculum. Many of these reforms seek to address one or more of three primary concerns. First, students often lack an understanding of the placement process and are unprepared for it (Fay et al. 2013; Hodara et al. 2012; Safran and Visher 2010; Venezia et al. 2010). Second, the content of placement exams is sometimes misaligned with the college-level curriculum (Hodara et al. 2012; Hughes and Scott-Clayton 2011). Third, the single measure of placement used by many community colleges, a standardized placement exam, provides an assessment of students’ readiness for college-level coursework that frequently is incomplete and inaccurate (Bahr 2016; Bahr et al. in press; Belfield and Crosta 2012; Hodara et al. 2012; Hughes and Scott-Clayton 2011; Scott-Clayton 2012).

This study focuses on the first of these concerns. We designed and implemented a low-cost intervention that provided information to students about the implications of placement exam scores for the financial cost of attending college and the time required to complete a credential. The central objective of this intervention was to improve students’ preparation and performance on placement exams. In addition, we tested whether framing the message as gains (e.g., lower cost and shorter time in college resulting from college-level placement) or losses (e.g., higher cost and longer time in college resulting from remedial placement) alters the effect of the information on student behavior and outcomes.

Overall, we found that informing students about the ramifications of placement test results had minimal effect on student behavior. However, we did find some evidence suggesting that the loss-framed message may motivate students to try to bypass the placement exam by submitting potentially substitutive exam scores (e.g., ACT scores). In the case of math, recipients of the loss-framed letter were more likely both to submit only alternative scores and to submit alternative scores in conjunction with taking the placement exam; this pattern suggests that their alternative scores were not always sufficient to allow them to bypass the placement exam. Additional analyses support this interpretation: we found no significant differences between treated and control groups in the ACT math, English, and reading scores submitted by students.

However, the letters sent to treated students did not explicitly mention the option of bypassing placement exams by submitting alternative evidence of readiness. The effects observed here might have been larger had this information been conveyed to students. More generally, research is needed on the extent to which students are aware of, and take advantage of, opportunities to demonstrate college readiness through means other than placement exams. Interventions designed to raise students’ awareness of such opportunities and, specifically, to help college-ready students bypass placement exams likely would reduce rates of placement in remedial coursework and ultimately improve college completion rates.

In the survey administered when students took the placement exam, recipients of both the gain- and loss-framed messages reported receiving the letter at significantly higher rates than did students in the control group, but the differences between treated and control groups were relatively small. We speculate that students in the control group may have confused the admission letter, which briefly mentions the placement process, with the intervention letter. Future research might avoid this confound by using letter-tracking strategies (e.g., certified letters that require a signature), which have been found to be an effective method of verifying letter receipt (Yeaton and Moss in press). Unfortunately, we do not observe a significant difference between the treatment and control groups in self-reported preparation, except for a small increase of approximately one-quarter of an hour among males who received the loss-framed treatment.

We do not find evidence of an effect of the treatment on English or math placement exam scores specifically. However, we do find a small positive effect of the gain-framed message on students’ math placement domain, indicating that recipients of this message performed slightly better on the math placement exam than did the control group.

Overall, our study indicates that arming students with information about the importance of placement exam scores has only very limited influence on behavior. Alone, it is insufficient to produce substantive improvement in student preparation and performance on placement exams. In addition to advising students about why they should prepare for placement exams, it may be important for institutions also to provide students with clear guidance about how to prepare for placement exams, as well as the level of readiness needed to be successful in a given content area.

In fact, SCC offers prospective students the opportunity to take practice exams and engage in interactive online tutorials. Directing students to these resources and clearly explaining the relationship between study time and exam performance might have increased the effectiveness of our intervention. Furthermore, conveying information to students through multiple modes of communication, such as text messages, which are a cost-effective means of providing information to students (Lenhart 2012), may prove more effective than sending a single letter.

Finally, it is important to consider how information about the importance of the placement testing process may differ in institutional settings that have engaged in reform efforts to improve the accuracy of placement. Institutions that have taken steps to design custom placement exams that are closely aligned with the content and expectations of the college-level curriculum (e.g., Hodara et al. 2012) may be better equipped to provide information to students about the placement testing process, how the exam articulates with an institution’s standard of college readiness, and the explicit steps students can take to be academically prepared for and successful in college.

Moreover, institutions that assess students with multiple measures of college readiness (e.g., Bahr et al. in press; Hodara et al. 2012) can greatly reduce their reliance on high-stakes placement exams, instead drawing on alternative sources of information, such as high school achievement. This comprehensive approach to assessment allows for a more nuanced understanding of students’ academic strengths and weaknesses than a single cut score on a placement exam offers, helping to ensure that students are placed more accurately into the college curriculum. In fact, shifting away from placement exams and toward multiple measures assessment may be the best way to improve the accuracy of student placement in the math and English curricula (Bahr et al. in press).

Conclusion

Placement exams are designed to assess academic readiness for college so that students can be placed at a level within the curriculum that maximizes their chances of success. Students who are assessed below college-ready standards too often fail to emerge from the developmental curriculum and, when they do, often spend more time and money in pursuit of a college degree than do their peers who are placed at the college level. Given the high-stakes nature of placement testing in many institutions, additional steps should be taken to ensure that students are aware of the consequences of their placement exam scores and are equipped with the resources needed to maximize their performance. Our study was designed to answer directly the question of whether raising students’ awareness about the implications of their placement exams has an effect on key student outcomes, including placement exam preparation and performance. The fact that the intervention tested here did not lead to substantive improvements in student preparation for or performance on placement exams indicates that institutions need to provide direct and comprehensive guidance to students throughout the placement process, to provide study and other support materials to help students prepare for the exams, and, ideally, to not rely solely on a single high-stakes placement exam but instead draw on multiple measures of college readiness. Doing so will ensure that students can engage actively as partners in the assessment process and improve the accuracy of student placement.

Notes

For the small number of students who took the math placement exam more than once, we used the first (earliest) math score because it was nearest temporally to the receipt of the treatment. The one exception to this rule was a very small number of instances in which students took the math placement exam twice on a single day and that day was also the earliest date on which they attempted the math placement exam. In such cases, we used the highest math domain and corresponding score achieved on that date, which may or may not be the first score recorded for that date.
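The selection rule in this note can be expressed as a small function. The attempt layout, a list of (test date, domain, score) tuples per student, and the function name are hypothetical; the domain order follows the Results. The note does not say how to break a tie between two same-day attempts in the same domain, so the sketch assumes the higher score is used.

```python
from datetime import date

# Math domains in ascending order, as named in the Results.
DOMAIN_RANK = {"pre-algebra": 0, "algebra": 1, "college algebra": 2, "trigonometry": 3}


def select_math_attempt(attempts):
    """Pick the math placement attempt used in the analysis.

    attempts: iterable of (test_date, domain, score) tuples for one student.
    Rule from the note: use the earliest test date; if several attempts fall on
    that date, use the highest domain and its score. Ties within a domain are
    broken by the higher score (an assumption; the note does not say).
    """
    attempts = list(attempts)
    if not attempts:
        return None
    first_day = min(a[0] for a in attempts)
    same_day = [a for a in attempts if a[0] == first_day]
    return max(same_day, key=lambda a: (DOMAIN_RANK[a[1]], a[2]))


# Hypothetical student with two attempts on the first day and a later retake.
history = [
    (date(2014, 8, 1), "algebra", 40),
    (date(2014, 8, 1), "pre-algebra", 70),
    (date(2014, 9, 1), "trigonometry", 50),
]
print(select_math_attempt(history))
```

In this example, the later trigonometry attempt is ignored because it does not fall on the earliest date, and the algebra attempt is chosen over the same-day pre-algebra attempt because algebra is the higher domain.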

References

Alferes, V. R. (2012). Methods of randomization in experimental design (Vol. 171). Thousand Oaks, CA: Sage Publications.

Attewell, P., Lavin, D., Domina, T., & Levey, T. (2006). New evidence on college remediation. Journal of Higher Education, 77, 886–924.

Bahr, P. R. (2008). Does mathematics remediation work? A comparative analysis of academic attainment among community college students. Research in Higher Education, 49, 420–450.

Bahr, P. R. (2009). Revisiting the efficacy of postsecondary remediation: The moderating effects of depth/breadth of deficiency. Review of Higher Education, 33, 177–205.

Bahr, P. R. (2016). Replacing placement tests in Michigan’s community colleges. Ann Arbor: University of Michigan.

Bahr, P. R., Fagioli, L. P., Hetts, J., Hayward, C., Willett, T., Lamoree, D., Newell, M. A., Sorey, K., & Baker, R. B. (in press). Improving placement accuracy in California’s community colleges using multiple measures of high school achievement. Community College Review.

Bahr, P. R., & Gross, J. (2016). Community colleges. In M. N. Bastedo, P. G. Altbach, & P. J. Gumport (Eds.), American higher education in the 21st century (4th ed., pp. 462–502). Baltimore: Johns Hopkins University Press.

Bailey, T., Jeong, D. W., & Cho, S. (2010). Referral, enrollment, and completion in developmental education sequences in community colleges. Economics of Education Review, 29, 255–270.

Banks, S. M., Salovey, P., Greener, S., Rothman, A. J., Moyer, A., Beauvais, J., et al. (1995). The effects of message framing on mammography utilization. Health Psychology, 14, 178–184.

Belfield, C. R., & Crosta, P. M. (2012). Predicting success in college: The importance of placement tests and high school transcripts (CCRC Working Paper No. 42). New York: Columbia University.

Bettinger, E. P., Boatman, A., & Long, B. T. (2013). Student supports: Developmental education and other academic programs. The Future of Children, 23, 93–115.

Block, L. G., & Keller, P. A. (1995). When to accentuate the negative: The effects of perceived efficacy and message framing on intentions to perform a health-related behavior. Journal of Marketing Research, 32, 192–203.

Burdman, P. (2012). Where to begin? The evolving role of placement exams for students starting college. Boston: Jobs for the Future. Retrieved from Jobs for the Future website: http://www.jff.org/publications/where-begin-evolving-role-placement-exams-students-starting-college.

DesJardins, S. L., & Toutkoushian, R. K. (2005). Are students really rational? The development of rational thought and its application to student choice. Higher Education: Handbook of Theory and Research (pp. 191–240). Berlin: Springer.

Fay, M. P., Bickerstaff, S., & Hodara, M. (2013). Why students do not prepare for math placement exams: Student perspectives (CCRC Brief No. 57). New York: Columbia University.

Fields, R., & Parsad, B. (2012). Tests and cut scores used for student placement in postsecondary education: Fall 2011. Washington, DC: National Assessment Governing Board. Retrieved from https://www.nagb.org/content/nagb/assets/documents/commission/researchandresources/test-and-cut-scores-used-for-student-placement-in-postsecondary-education-fall-2011.pdf.

Ginder, S. A., Kelly-Reid, J. E., & Mann, F. B. (2015). Enrollment and Employees in Postsecondary Institutions, Fall 2014; and Financial Statistics and Academic Libraries, Fiscal Year 2014: First Look (Provisional Data) (NCES 2016-005). U.S. Department of Education. Washington, DC: National Center for Education Statistics. Retrieved from http://nces.ed.gov/pubsearch.

Graubard, B. I., & Korn, E. L. (1999). Predictive margins with survey data. Biometrics, 55, 652–659.

Grewal, D., Gotlieb, J., & Marmorstein, H. (1994). The moderating effects of message framing and source credibility on the price-perceived risk relationship. Journal of Consumer Research, 21, 145–153.

Hodara, M., Jaggars, S. S., & Karp, M. M. (2012). Improving developmental education assessment and placement: Lessons from community colleges across the country (CCRC Working Paper No. 51). New York: Columbia University.

Hughes, K. L., & Scott-Clayton, J. (2011). Assessing developmental assessment in community colleges. Community College Review, 39, 327–351.

Jones, B. D. (1999). Bounded rationality. Annual Review of Political Science, 2, 297–321.

Lauver, D., & Rubin, M. (1990). Message framing, dispositional optimism, and follow-up for abnormal Papanicolaou tests. Research in Nursing & Health, 13, 199–207.

Lenhart, A. (2012). Teens, smartphones & texting. Pew Internet & American Life Project, 21, 1–34.

Lesik, S. A. (2006). Applying the regression-discontinuity design to infer causality with non-random assignment. The Review of Higher Education, 30, 1–19.

Levin, I. P., & Gaeth, G. J. (1988). How consumers are affected by the framing of attribute information before and after consuming the product. Journal of Consumer Research, 15, 374–378.

Maheswaran, D., & Meyers-Levy, J. (1990). The influence of message framing and issue involvement. Journal of Marketing Research, 27, 361–367.

March, J. G. (1994). Primer on decision making: How decisions happen. New York: Simon and Schuster.

Meyerowitz, B. E., & Chaiken, S. (1987). The effect of message framing on breast self-examination attitudes, intentions, and behavior. Journal of Personality and Social Psychology, 52, 500–510.

Moss, B. G., & Yeaton, W. H. (2006). Shaping policies related to developmental education: An evaluation using the regression-discontinuity design. Educational Evaluation and Policy Analysis, 28, 215–229.

Moss, B. G., & Yeaton, W. H. (2013). Evaluating effects of developmental education for college students using a regression discontinuity design. Evaluation Review, 37, 370–404.

Moss, B. G., Yeaton, W. H., & Lloyd, J. (2014). Estimating the effectiveness of developmental mathematics by embedding a randomized experiment within a regression discontinuity design. Educational Evaluation and Policy Analysis, 36, 170–185.

Rothman, A. J., Salovey, P., Antone, C., Keough, K., & Martin, C. D. (1993). The influence of message framing on intentions to perform health behaviors. Journal of Experimental Social Psychology, 29, 408–433.

Safran, S., & Visher, M. G. (2010). Case studies of three community colleges: The policy and practice of assessing and placing students in developmental education courses. New York: Columbia University.

Scott-Clayton, J. (2012). Do high-stakes exams predict college success? (CCRC Working Paper No. 41). New York: Columbia University.

Shadish, W. R., Cook, T. D., & Campbell, D. T. (2002). Experimental and quasi-experimental designs for generalized causal inference. Boston: Houghton Mifflin.

Simon, H. A. (1991). Bounded rationality and organizational learning. Organization Science, 2, 125–134.

Tversky, A., & Kahneman, D. (1981). The framing of decisions and the psychology of choice. Science, 211, 453–458.

Tversky, A., & Kahneman, D. (1986). Rational choice and the framing of decisions. The Journal of Business, 59, 251–278.

Venezia, A., Bracco, K. R., & Nodine, T. (2010). One-shot deal? Students’ perceptions of assessment and course placement in California’s community colleges. San Francisco, CA: WestEd.

Witte, K., & Allen, M. (2000). A meta-analysis of fear appeals: Implications for effective public health campaigns. Health Education and Behavior, 27, 591–615.

Yeaton, W. H., & Moss, B. G. (in press). A multiple-design, experimental strategy: Academic probation warning letter’s impact on student achievement. The Journal of Experimental Education.

Acknowledgements

An earlier version of this study was presented at the 2016 annual meeting of the Association for the Study of Higher Education in Columbus, Ohio. The authors thank the Editor and anonymous referees of the journal Research in Higher Education for feedback on this work. In addition, the authors thank the administration of the study college for support of this investigation, and also thank Susan Dynarski (University of Michigan) for the suggestion that ultimately led to this investigation.

Appendices

Appendix 1: Gain-Frame Message

College letterhead

Date

Student ID

Student Name

Address

Dear XXXX,

Recently, you received a letter of admittance to Midwest Community College with instructions regarding the course placement process that determines your eligibility to enroll in college-level courses. If you do not have an ACT score that satisfies the course placement requirement, you will need to complete the COMPASS® placement test.

This placement test is very important. Achieving a placement test score that is at or above a college-ready level will allow you to enroll directly in college-level, subject-related courses, instead of enrolling in developmental courses, which do not count toward a degree or certificate and generally do not transfer to a four-year postsecondary institution. In addition, enrolling directly in college-level courses can reduce the total cost of attending college because developmental courses cost as much as $249.20 per three-credit course (within-district rate). Finally, students who enroll directly in college-level courses typically spend about one less semester in college to complete a credential, as compared with students who need to take developmental courses. Therefore, you are encouraged to achieve the highest score that you are able on the COMPASS® placement test.

Sincerely,

XXXX

Executive Director of Enrollment Services

Appendix 2: Loss-Frame Message

College letterhead

Date

Student ID

Student Name

Address

Dear XXXX,

Recently, you received a letter of admittance to Midwest Community College with instructions regarding the course placement process that determines your eligibility to enroll in college-level courses. If you do not have an ACT score that satisfies the course placement requirement, you will need to complete the COMPASS® placement test.

This placement test is very important. Achieving a placement test score that is below a college-ready level will prevent you from enrolling in college-level, subject-related courses until you have completed successfully the developmental course prerequisites. Developmental course credits do not count toward a degree or certificate and generally do not transfer to a 4-year postsecondary institution. In addition, developmental courses can cost as much as $249.20 per three-credit course (within-district rate). Finally, students who need to take developmental courses typically spend about one additional semester in college to complete a credential, as compared with students who do not need to take developmental courses. Therefore, you are encouraged to achieve the highest score that you are able on the COMPASS® placement test.

Sincerely,

XXXX

Executive Director of Enrollment Services

About this article

Cite this article

Moss, B.G., Bahr, P.R., Arsenault, L. et al. Knowing is Half the Battle, or Is It? A Randomized Experiment of the Impact of Supplemental Notification Letters on Placement Exam Participation, Preparation, and Performance. Res High Educ 60, 737–759 (2019). https://doi.org/10.1007/s11162-018-9536-9

DOI: https://doi.org/10.1007/s11162-018-9536-9