Abstract

This paper estimates Bayesian Vector Autoregressive (BVAR) models, both spatial and non-spatial (univariate and multivariate), for the twenty largest states of the US economy, using quarterly data over the period 1976:Q1–1994:Q4; and then forecasts one-to-four quarters-ahead real house price growth over the out-of-sample horizon of 1995:Q1–2006:Q4. The forecasts are evaluated by comparing them with those from an unrestricted classical Vector Autoregressive (VAR) model and the corresponding univariate variant of the same. Finally, the models that produce the minimum average Root Mean Square Errors (RMSEs), are used to predict the downturns in the real house price growth over the recent period of 2007:Q1–2008:Q1. The results show that the BVARs, in whatever form they might be, are the best performing models in 19 of the 20 states. Moreover, these models do a fair job in predicting the downturn in 18 of the 19 states.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

This paper estimates Bayesian Vector Autoregressive (BVAR) models, both spatial and non-spatial (univariate and multivariate), for the twenty largest states (in terms of population) of the US economyFootnote 1, using quarterly data over the period 1976:Q1–1994:Q4; and then forecasts one-to-four quarters ahead real house price growth over the 48 quarters out-of-sample forecast horizon of 1995:Q1–2006:Q4. The forecasts are then evaluated by comparing them with the ones generated from an unrestricted classical Vector Autoregressive (VAR) model and the corresponding univariate variant of the same. Finally, the models that produce the minimum average Root Mean Square Errors (RMSEs), or in other words the “optimal” models, are used to predict the downturns in the real house price growth over the recent period of 2007:Q1–2008:Q1.

House price downturn in the last few quarters have been of concern in the US housing market. After an initial boom in the late 1990’s, and even into the early 2000’s, activity in the US housing market has of late waned (NAR 2006). Booms and busts in house prices can have significant impact on the confidence of consumer expenditure, and in turn, on the financial markets. Stock and Watson (2003) pointed to the role of asset prices in forecasting inflation, and have highlighted the dominance of house prices in this regard. Thus, a concern to all stakeholders is whether the growth in house prices is predictable. As such, the need to design models that can forecast house prices timely, and their possible turns, is of paramount importance. In this regard, the VARs, simply based on the variables of interest, which in our case are the real house price growths of the twenty largest US states, could be of tremendous value. Even though these models lack information about the possible fundamentals that might be affecting the housing market, they can be very handy when it comes to providing preliminary indication about where the variables of interest might be heading (Gupta and Das (2008) and Das et al. (2008)). The fact that VARs, especially BVARs, are quite well-suited in predicting turning points of macroeconomic variables has recently been substantiated by Dua and Ray (1995), Dua and Miller (1996), Del Negro (2001), Gupta and Sichei (2006), Gupta (2006, 2008), Zita and Gupta (2008) and Banerji et al. (2008), amongst others. Moreover, as indicated by Rapach and Strauss (2007, 2008), Gupta and Das (2008) and Das et al. (2008), it is also important to account for the effects of the house price of neighbouring states in predicting the house price of a specific state, and herein lies the rationale for using Spatial BVAR (SBVAR) models based on the Minnesota priorsFootnote 2, over and above the standard VAR and BVAR models, for forecasting house price.

To the best of our knowledge, this is the first attempt to predict the recent downturns in the real house price growth for the twenty largest states of the US economy. The remainder of the paper is organized as follows: “VARs, BVARs and SBVAR: Specification and Estimation” outlines the details of the structure and the estimation of the VAR, BVARs and the SBVAR comprising of the real house price growth of the twenty largest US states, while “VARs, BVARs and SBVAR Models for Forecasting House Prices in the Twenty largest US States” discusses the layout of the models, “Evaluation of Forecast Accuracy” compares the accuracy of the out-of-sample forecasts generated from the alternative models, and “Predicting the Recent Downturn” is devoted to analyzing the ability of the “optimal” modelFootnote 3 to predict the turning point in the real house price growth. Finally, “Conclusions” concludes and considers possible areas of future research by highlighting the limitations of this study.

VARs, BVARs and SBVAR: Specification and Estimation

The discussion in this section relies heavily on LeSage (1999), Gupta and Sichei (2006), Gupta (2006, 2007, 2008), Gupta and Das (2008) and Das et al. (2008).

The Vector Autoregressive (VAR) model, though ‘atheoretical’, is particularly useful for forecasting purpose. An unrestricted VAR model, as suggested by Sims (1980), can be written as follows:

where y is a (n × 1) vector of variables being forecasted, which in our case is the annualized real house price growth rates of the twenty largest states of the US economy; A(L) is a (n × n) polynomial matrix in the backshift operator L with lag length p, i.e., \(A\left( L \right) = A_1 L + A_2 L^2 + \ldots \ldots \ldots \ldots \ldots . + A_p L^p \) ; A0 is a (n × 1) vector of constant terms, and ɛ is a (n × 1) vector of error terms. We assume that \(\varepsilon \sim N\left( {0,\sigma ^2 I_n } \right)\), where I n is a n × n identity matrix.

Note the VAR model generally uses equal lag length for all the variables of the model. A drawback of VAR models is that many parameters need to be estimated, some of which may be insignificant. This problem of overparameterization, resulting in multicollinearity and a loss of degrees of freedom, leads to inefficient estimates and possibly large out-of-sample forecasting errors. One solution often adapted, is simply to exclude the insignificant lags based on statistical tests. Another approach is to use a near VAR, which specifies an unequal number of lags for the different equations.

However, an alternative approach to overcoming this overparameterization, as described in Litterman (1981), Doan et al. (1984), Todd (1984), Litterman (1986), and Spencer (1993), is to use a Bayesian VAR (BVAR) model. Instead of eliminating longer lags, the Bayesian method imposes restrictions on these coefficients by assuming that they are more likely to be near zero than the coefficients on shorter lags. However, if there are strong effects from less important variables, the data can override this assumption. The restrictions are imposed by specifying normal prior distributions with zero means and small standard deviations for all coefficients, with the standard deviation decreasing as the lags increase. The exception to this for the coefficient of the first own lag of a variable that has a mean of unity. Litterman (1981) used a diffuse prior for the constant term. This prior is popularly referred to as the ‘Minnesota prior’ due to its development at the University of Minnesota and the Federal Reserve Bank at Minneapolis.

Formally, as discussed above, the means and variances of the Minnesota prior take the following form:

where β i denotes the coefficients associated with the lagged dependent variables in each equation of the VAR, while β j represents any other coefficient. In the belief that lagged dependent variables are important explanatory variables, the prior means corresponding to them are set to unity. However, for all the other β j coefficients in a particular equation of the VAR, a prior mean of zero is assigned to suggest that these variables are less important to the model.

The prior variances \(\sigma _{\beta _i }^2 \) and \(\sigma _{\beta _j }^2 \)specify uncertainty about the prior means \(\overline \beta _i = 1\), and \(\overline \beta _j = 0\), respectively. Because of the overparameterization of the VAR, Doan et al. (1984) suggested a formula to generate standard deviations as a function of small number of hyperparameters w, d, and a weighting matrix f(i, j). This approach allows the forecaster to specify individual prior variances for a large number of coefficients based on only a few hyperparameters. The specification of the standard deviation of the distribution of the prior imposed on variable j in equation i at lag m, for all i, j and m, defined as S 1 (i, j, m), can be specified as follows:

with f(i, j) = 1, if i = j and k ij otherwise, with (0 ≤ k ij ≤ 1); g(m) = m −d, d > 0. Note that \(\widehat\sigma _i \) is the estimated standard error of the univariate autoregression for variable i. The ratio \({{\widehat\sigma _i } \mathord{\left/ {\vphantom {{\widehat\sigma _i } {\widehat\sigma _j }}} \right. \kern-\nulldelimiterspace} {\widehat\sigma _j }}\) scales the variables to account for differences in the units of measurement and hence, causes specification of the prior without consideration of the magnitudes of the variables. The term w indicates the overall tightness and is also the standard deviation on the first own lag, with the prior getting tighter as we reduce the value. The parameter g(m) measures the tightness on lag m with respect to lag 1, and is assumed to have a harmonic shape with a decay factor of d, which tightens the prior on increasing lags. The parameter f(i, j) represents the tightness of variable j in equation i relative to variable i, and by increasing the interaction, i.e., the value of k ij, we can loosen the prior.Footnote 5

In the literature, the values of overall tightness (w) are 0.1, 0.2, while those of the lag decay (d) are 1.0 and 2.0, with k ij = 0.5, which implies a weighting matrix (F) of the following form:

Since, the lagged dependant variable in each equation is thought to be important, F imposes \(\overline \beta _i = 1\) loosely, while, given that the β j coefficients are associated with variables presumed to be less important, the weighting matrix F imposes the prior means of zero more tightly on the coefficients of the other variables in each equation. Given that the Minnesota prior treats all variables in the VAR, except for the first own-lag of the dependent, in an identical manner, quite a few number of attempts have been made to alter this fact. Some studies have suggested relying more on the results from the loose versions of the prior (Dua and Ray (1995) and LeSage (1999)). Alternatively, LeSage and Pan (1995) have suggested the construction of the weight matrix based on the First-Order Spatial Contiguity (FOSC), which simply implies the creation of a non-symmetric F matrix that emphasizes the importance of the variables from the neighboring states more than that of the non-neighboring states. LeSage and Pan (1995) suggests the use of a value of unity on not only the diagonal elements of the weight matrix, as in the Minnesota prior, but also in place(s) that correspond to the variable(s) from other state(s) with which the specific state in consideration have common border(s). However, for the elements in the F matrix that corresponds to variable(s) from state(s) that are not immediate neighbor(s), LeSage and Pan (1995) propose a value of 0.1. We consider a simple example to understand the specification of the F matrix better. Suppose there are three states, namely, A, B and C. More importantly, suppose, A shares its borders with both B and C, however, B and C are both direct neighbors of only A. Given this, with the three states ordered alphabetically, the F matrix obtained based on FOSC looks as follows:

Based on the same idea presented above, and referring to the map of the USFootnote 6, given in Fig. 1, one can easily design the F matrix based on the FOSC prior, by the alphabetical orderingFootnote 7 of the twenty largest US states, namely, AZ, CA, FL, GA, IL, IN, MA, MD, MI, MO, NC, NJ, NY, OH, PA, TN, TX, VA, WA and WI. The formal representation of the F matrix has been presented in the Appendix of the paper.

The intuition behind this asymmetric F matrix is based on our lack of belief on the prior means of zero imposed on the coefficient(s) for price(s) of the neighboring state(s). Instead we believe that these variables do have an important role to play, hence, to express our lack of faith in the prior means of zero, we assign a larger prior variance, by increasing the weight values, to these prior means on the coefficients for the variables of the neighboring states. This in turn, allows the coefficients on these variables to be determined based more on the sample and less on the prior.

The BVARs and the SBVAR, based on the FOSC prior, are estimated using Theil’s (1971) mixed estimation technique. Specifically, suppose we denote a single equation of the VAR model as: \(y_1 = X\beta + \varepsilon _1 \), with \(Var\left( {\varepsilon _1 } \right) = \sigma ^2 I\), then the stochastic prior restrictions for this single equation can be written as:

Note, \(Var\left( u \right) = \sigma ^2 I\) and the prior means M ijm and σ ijm take the forms shown above for the Minnesota prior and for the FOSC prior. With (4) written as:

the estimates for a typical equation are derived as follows:

Essentially, the method involves supplementing the data with prior information on the distribution of the coefficients. The number of observations and degrees of freedom are increased by one in an artificial way, for each restriction imposed on the parameter estimates. The loss of degrees of freedom due to over- parameterization associated with a classical VAR model is therefore, not a concern in the BVARs and SBVAR.

VARs, BVARs and SBVAR Models for Forecasting House Prices in the Twenty Largest US States

Given the specification of the priors as in “VARs, BVARs and SBVAR: Specification and Estimation”, we estimate BVARs and a SBVAR model based on the FOSC prior for real house price growth of the twenty largest US states over the period 1976:01–1994:04, using quarterly data. Then we compute the out-of-sample one- through four-quarters-ahead forecasts for the period of 1995:01–2006:04, and compare the forecast accuracy relative to that of the forecasts generated by an unrestricted VAR and the univariate versions of the VAR, or alternatively, autoregressive (AR) models, and the BVARs (for the same set of priors). The choice of the 48 quarters out-of-sample horizon is motivated by the fact that marked differences were observed in housing price growth across U.S. regions since the mid-1990’s (Rapach and Strauss (2007, 2008)). Once we obtain the “optimal” model, i.e., the specific model for a particular state that produces, on average, the lowest Root Mean Squared Errors (RMSEs) over the period of 1995:01–2006:04, we use it to forecast ex ante and check whether the model in consideration could have predicted the downturn in the real house price growth over the period of 2007:01–2008:01.Footnote 8 As pointed above, the only variables included in the models are the real house price growth of the twenty largest US States. The U.S. state-level nominal housing price data, obtained from the Freddie Mac, consists of quarterly observations from 1975:1–2006:4. Using matched transactions on the same property over time to account for quality changes, the Conventional Mortgage Home Price Index (CMHPI) of the Freddie Mac provides a means for measuring the typical price inflation for houses within the U.S. The data used by Freddie Mac consists of both purchase and refinance-appraisal transactions, and consists of over 33 million homes. A real housing price series is obtained by dividing the state-level CMHPI by the personal consumption expenditure (PCE) deflator obtained from the Bureau of Economic Analysis (BEA). All data are in their seasonally adjusted form in order to, inter alia, address the fact that, as pointed out by Hamilton (1994:362), the Minnesota-type priors are not well suited for seasonal data. Ultimately, we compute the annualized growth rates for the real house prices by multiplying 400 to the differences in the natural logs of the same.

In each equation of the VARs, classical or Bayesian, spatial or non-spatial (univariate or multivariate), there are 41 parameters including the constant, given the fact that the model is estimated with two lagsFootnote 9 of each variable. Note Sims et al. (1990) indicate that, with the Bayesian approach entirely based on the likelihood function, the associated inference does not need to take special account of nonstationarity, since the likelihood function has the same Gaussian shape regardless of the presence of nonstationarity. Given this, we do not formally report the tests of stationarity.Footnote 10

The twenty-variable alternative BVAR models are estimated for an initial prior for the period of 1976:01–1994:04 and then, we forecast from 1995:01 through to 1995:04. Since we use two lags, the initial two quarters of the sample, 1976:01–1976:02, are used to feed the lags. We generate dynamic forecasts, as would naturally be achieved in actual forecasting practice. The models are re-estimated each quarter over the out-of-sample forecast horizon in order to update the estimate of the coefficients, before producing the four-quarters-ahead forecasts. This iterative estimation and four-steps-ahead forecast procedure was carried out for 48 quarters, with the first forecast beginning in 1995:01. This experiment produced a total of 48 one-quarter-ahead forecasts, 48-two-quarters-ahead forecasts, and so on, up to 48 4-step-ahead forecasts. We use the Kalman filter algorithm in RATSFootnote 11, for this purpose. The RMSEsFootnote 12 for the 48, quarter one through quarter four forecasts are then calculated for the twenty real house price growth rates of the models. The average of the RMSE statistic values for one- to four-quarters-ahead forecasts for the period 1995:01–2006:04 are then examined. Identical steps are followed to generate the forecasts from the univariate and the multivariate forms of the VAR models, besides the BVARs, spatial and non-spatial (univariate and multivariate) for alternative values of the hyperparameters defining the priors. The model that produces the lowest average RMSE value is selected as the ‘optimal’ model for a specific state, and is then used to generate ex ante forecasts over the period of 2007:01–2008:01 to check whether that specific model could have predicted the recent downturn in real house price growth for a particular state.

Evaluation of Forecast Accuracy

In this section, we evaluate the accuracy of forecasts generated by the BVAR models, both spatial and non-spatial (univariate and multivariate), by comparing the RMSEs from the out-of-sample forecasts of these models with the same set of statistics generated from an unrestricted-multivariate classical VAR and the univariate VARs. In Table 1, we compare the average RMSEs of one- to four-quarters-ahead out-of-sample-forecasts for the period of 1995:01–2006:04, generated by the univariate VAR, the VAR, five alternative univariate BVARs, five alternative multivariate BVARs, and the SBVAR based on the FOSC prior. At this stage, a elaborate on the choice of the evaluation criterion for the out-of-sample forecasts generated from Bayesian models. As Zellner (1986: 494) points out, the ‘optimal’ Bayesian forecasts will differ depending upon the loss function employed and the form of predictive probability density function. In other words, Bayesian forecasts are sensitive to the choice of the measure used to evaluate the out-of-sample forecast errors. However, Zellner (1986) points out that the use of the mean of the predictive probability density function for a series is optimal relative to a squared error loss function and the Mean Squared Error (MSE), and hence, the RMSE is an appropriate measure to evaluate performance of forecasts, when the mean of the predictive probability density function is used. This is exactly what we do in Table 1 when we use the average RMSEs over the one- to four-quarter-ahead forecasting horizon. The conclusions from Table 1 are summarized as follows:

-

(i)

There does not exist a unique ‘optimal’ model that performs the best in terms of lowest average RMSE for all the twenty states. Similar results were also obtained by Gupta and Das (2008) and Das et al. (2008) while analyzing the South African housing market;

-

(ii)

However, except for MA, in the remaining of the 19 of the 20 states, where the univariate VAR or the AR(2) model does the best, the BVARs, spatial or non-spatial (univariate or multivariate) outperforms the classical variants of the univariate and multivariate VARs;

-

(iii)

Specifically, the univariate BVAR3 (w = 0.1, d = 1.0, k ij = 0.001) outperforms all other models for IL, TN and WI, while, the univariate BVAR4 (w = 0.2, d = 2.0) is ‘optimal’ for AZ, TX and WA. The multivariate BVAR3 (w = 0.1, d = 1.0, k ij = 0.5) does the best for NC and VA, with the multivariate BVAR1 (w = 0.3, d = 0.5, k ij = 0.5) outperforming the alternative models for CA, FL, IN, MI, MO and OH, while the multivariate BVAR5 (w = 0.1, d = 2.0, k ij = 0.5) is the best-suited model for forecasting NJ. Finally, for GA, MD, NY and PA, the SBVAR model based on the FOSC prior is the outright performer;

-

(iv)

The athoeretical nature of the VARs make it quite difficult, if not impossible, to interpret the results, i.e., why is it the models perform in the way they do? However, given the basic structure of the VARs, univariate or multivariate, classical or Bayesian (spatial or non-spatial), we can make the following additional observations: (a) Generally, for the states where the univariate VAR and the univariate BVARs do the best, it essentially implies that in those states, what matters most in determining the current real house price growth rate is the own lagged real house price growth rate. Moreover, given that it is generally the tight priored univariate BVARs that stand out amongst that specific class of models, is indicative of the fact that most of the variance in the current real house price growth rate comes from the first own lag of the same. In this regard, it is not surprising to see univariate BVAR4 doing the best for AZ, TX and WA — the states that do not share their borders with any of the other states in the country; (b) In addition, for states where the multivariate BVARs are the optimal models, the majority of them (six out of nine) are the loose priored ones. This indicates that, lagged real house price growth rates of all the other states matter, besides the one in question plays a role in determining the current real house price growth rate, and; (c) Finally, for the states, where the SBVAR model stands out, namely, GA, MD, NY and PA, they are generally the ones with very prominent neighbors, as well as bordered by states, whose real house price growth rate, in turn, is determined mainly by itself, i.e., either by univariate BVARs or relatively tight priored multivariate BVARs.

In summary, what stands out though, is the fact that the BVARs, in whatever form they might be are the best performing models in majority of the cases, when compared to the classical variant of the univariate and multivariate VARs. Overall, the most loose priored multivariate BVAR does the best for six states, followed by the SBVAR for four cases. Two of the relatively tight priored univariate BVARs are the best performing models for three additional cases each, while the three of the remaining four cases are shared between the two relatively tight multivariate BVARs, with the univariate classical VAR standing out for just one state.

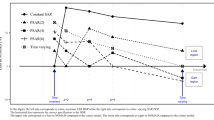

Predicting the Recent Downturn

This section is devoted to analyzing the capability of the ‘optimal’ model in predicting the recent downturn in the real house price growth rate of the 20 largest US states over the ex ante forecast period of 2007:01–2008:01.Footnote 13 As can be seen from the Forecast 1 curves in the Figs. 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20 and 21, the optimal models do quite well for GA, IL, MO, NC, NY, OH, PA, TN, TX, VA, WA and WI, and only partially well for AZ, CA, FL, IN, MD and MI. Overall, except for NJ and MA, the ‘optimal’ model corresponding to a specific state does reasonably well in tracking the downturn. Note the optimal model for MA was the univariate VAR, so the ‘optimal’ Bayesian models are well-equipped in predicting the downturn in 18 of the 19 cases, with the exception of NJ. However, in all the cases, where the ‘optimal’ models predicted the downturn correctly, they tended to under-predict the size of the decline in the real house price growth rate. This is not surprising, especially when one takes into account of the fact that though lagged values of house prices contains useful information, the recent downturn in the housing market has to do with the adverse effects on the fundamentals affecting the house prices. Nevertheless, the ability of the ‘optimal’ models, majority of which are Bayesian in nature, in predicting the recent downturn cannot be disregarded. These models, as shown here, could be used efficiently (in the sense that not too much information is required) to obtain a preliminary and prompt feel about where a specific variable might be headed, and hence, as noted by Del Negro (2001), can be of immense importance to policy makers.

It must be realized, that the choice of 2007:01 as the starting point for predicting the downturn was motivated by the fact, that it was the (uniform) period before which all the states have peaked in terms of their respective real house price growth rate. But, it must be emphasized, that one might tend to attribute the ability of the models in predicting the downturn correctly, to the fact that the peaks in the real house price growth rate had already occurred before 2007:01, and hence, the data contains information beyond the actual turning points. Given this, we decided to search for the peaks in the real house price growth rate, and repeat the exercise by starting our downturn predictions from a period before (or much closer to) the turning point. Based on our calculations, the real house price growth rate of 16 of the 20 states peaked at on or after 2004:03. The exceptions being IN, MI, OH and TX where the real house price growth rate peaked at 2001:01, 1996:02, 1995:02 and 2001:01 respectively. Given this, we revisit the ability of the ‘optimal’ models in predicting the downturn starting from 2004:01. The fact that the peaks for IN, MI, OH and TX, were reached quite early into the out-of-sample horizon, the downturn predictions were started from 2004:01 — a period beyond which majority of the states registered their highest real house price growth rates.

Based on the curve Forecast 2 in Figs. 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20 and 21, we observe the ‘optimal’ models to be essentially well-equipped in picking up the downward trend in the data. Interestingly, however, the ‘optimal’ models tend to overestimate the downturn until the more recent periods of sharp fall in the growth rates. Beyond 2006:04, the performance of the ‘optimal’ models, not surprisingly, are qualitatively quite similar to their performances observed, when one starts from 2007:01.Footnote 14

Conclusions

This paper estimates Bayesian Vector Autoregressive (BVAR) models, both spatial and non-spatial (univariate and multivariate), for twenty largest states of the US economy, using quarterly data over the period 1976:Q1–1994:Q4; and then forecasts one-to-four quarters ahead real house price growth over the 48 quarters out-of-sample forecast horizon of 1995:Q1–2006:Q4. The forecasts are then evaluated by comparing them with the ones generated from an unrestricted classical Vector Autoregressive (VAR) model and the corresponding univariate variant of the same. Finally, the models that produce the minimum average Root Mean Square Errors (RMSEs) are used to predict the downturns in the real house price growth over the recent period of 2007:Q1–2008:Q1.

In general, we draw the following conclusions: (a) The BVARs, in whatever form they might be, i.e., spatial or non-spatial (univariate or multivariate) are the best performing models in 19 of the 20 states considered, when compared to the classical variant of the univariate and multivariate VARs; (b) The ‘optimal’ Bayesian models do a fair job in predicting the downturn in 18 of the 19 states for which they produced the minimum RMSEs on average; (c) However, the ‘optimal’ models were always found to under-predict the size of the decline in the real house price growth rate, this perhaps, being an indication of the importance of the adverse effects of fundamentals on real house prices, over and beyond the information contained in the past house prices. Given this, an immediate extension of this study would be to analyze how well the Autoregressive Distributed Lag (ARDL) models developed by Rapach and Strauss (2007, 2008) would compare in predicting the turning points in the data. Moreover, an alternative approach would be to develop a Dynamic Factor Model (DFM) as in Das et al. (2008) that would allow for the role of large number of potential predictors, or one might want to delve into designing a large-scale BVAR model, along the lines of Gupta and Kabundi (2008a), which would allow for not only the role of large number of fundamentals affecting the real house price growth but also for asymmetric interaction from national-, regional and state-level (neighboring or non-neighboring) variables, and; (d) Finally, overall, the ability of the atheoretical Bayesian models, as quick and preliminary predictors of real house price growth rate cannot be disregarded.

At this stage, we point out at least two limitations to using a Bayesian approach for forecasting. Firstly, as it is clear from Table 1, the forecast accuracy is sensitive to the choice of the priors. So if the prior is not well specified, an alternative model used for forecasting may perform better. Secondly, in case of the Bayesian models, one requires to specify an objective function, for example the average RMSEs, to search for the ‘optimal’ priors, which in turn, needs to be optimized over the period for which we compute the out-of-sample forecasts. However, there is no guarantee that the chosen parameter values specifying the prior will continue to be ‘optimal’ beyond the period for which it was selected. Besides the fact that by 2007:01 all the states had attained their peaks for their respective real house price growth rates, this is another reason to why we decided to look for the ability of the ‘optimal’ models in predicting the downturn in the real house price growth rate, starting at 2007:01. Recall that the optimality of the priors were based on the minimum average value of one- to four-quarters-ahead RMSEs for the out-of-sample horizon of 1995:01–2006:04. Nevertheless, the importance of the Bayesian forecasting models, spatial or non-spatial (univariate or multivariate), cannot be underestimated. This has been widely proven in the forecasting literatureFootnote 15, and is also vindicated by our current study, which indicates the suitability of the Bayesian models in forecasting and predicting the turning points in the real house price growth rates of 20 largest states of the US economy.

Notes

Based on the 2000 census, these states, in alphabetical order, are: Arizona (AZ), California (CA), Florida (FL), Georgia (GA), Illinois (IL), Indiana (IN), Massachusetts (MA), Maryland (MD), Michigan (MI), Missouri (MO), North Carolina (NC), New Jersey (NJ), New York (NY), Ohio (OH), Pennsylvania (PA), Tennessee (TN), Texas (TX), Virginia (VA), Washington (WA) and Wisconsin (WI).

See "VARs, BVARs and SBVAR: Specification and Estimation" for further details.

A model is said to be optimal if it on average produces the minimum value for the specific statistic measuring the out-of-sample forecast performance. See "VARs, BVARs and SBVAR Models for Forecasting House Prices in the Twenty largest US States" and "Evaluation of Forecast Accuracy" for further details.

For an illustration, see Dua and Ray (1995).

The source for the US map is: http://www.submittheoffer.com/images/usa.gif.

It must however be pointed out, that alternative ordering of the twenty largest US states do not affect our final results in any way.

Based on our calculations of the real house price growth, we found all the 20 states under consideration to witness a decline in the real house price growth from 2007:01 onwards.

The choice of 2 lags is based on the unanimity of the sequential modified LR test statistic, Akaike information criterion (AIC), the final prediction error (FPE) criterion, and the Hannan-Quinn (HQ) criterion.

However, using the Augmented Dickey-Fuller (ADF), the Phillips-Perron (PP), Dickey-Fuller-Genaralized Least Squares (DF-GLS), and the Kwiatkowski-Phillips-Schmidt-Shin (KPSS) tests, all the twenty real house price growth rates were found to be integrated of order 1, i.e., I(1).

All statistical analysis was performed using WINRATS, version 7.0.

Note that if A t+n denotes the actual value of a specific variable in period t + n and t F t+n is the forecast made in period t for t + n, the RMSE statistic can be defined as \(\sqrt {\frac{1}{N}\sum {\left( {A_{t + n} - _t F_{t + n} } \right)^2 } } \). For n = 1, the summation runs from 1995:01–1995:04, and for n = 2, the same covers the period of 1995:02–1996:01, and so on.

The period 2007:01–2008:01 is dubbed ex ante simply because our sample for the models end at 2006:04.However, given the availability of data till 2008:01, we can compare the future forecasts from the model with the original data.

The downturn predictions are based on the “Forecast” command in RATS, which performs a recursive estimation of the models by updating the estimates of the coefficients based on additional data that is created through the one-step-ahead future forecast. This is why, when the starting point of the forecasting exercise is different, the downturn prediction for the period of 2007:01–2008:01 is not exactly identical for a particular model.

For example, Amirizadeh and Todd (1984), Kuprianov and Lupoletti (1984), Hoen et al. (1984), Hoen and Balazsy (1985), Kinal and Ratner (1986), Gruben and Long (1988a, b), LeSage (1990), Gruben and Hayes (1991), Shoesmith (1992, 1995), Dua and Ray (1995), Dua and Smyth (1995), Dua and Miller (1996), Dua et al. (1999), Gupta and Sichei (2006), Gupta (2006, 2007, 2008), Liu and Gupta (2007), Zita and Gupta (2008), Banerji et al. (2008), Das et al. (2008), Gupta and Das (2008), Gupta and Kabundi (2008a, b), and Liu et al. (2007, 2008).

References

Amirizadeh, H., & Todd, R. M. (1984). More growth ahead for ninth district states. Quarterly Review, Federal Reserve Bank of Minneapolis, Fall.

Banerji, A., Dua, P., & Miller, S. M. (2008). Performance evaluation of the New Connecticut leading employment index using lead profiles and BVAR models. Journal of forecasting, 25(6), 415–437. doi:10.1002/for.996.

Das, S., Gupta, R., & Kabundi, A. (2008). Is a DFM Well-suited for forecasting regional house price inflation? Working Paper No. 200814, Department of Economics, University of Pretoria.

Del Negro, M. (2001). Turn, turn, turn: predicting turning points in economic activity. Economic Review, Second Quarter, Federal Reserve Bank of Atlanta.

Doan, T. A., Litterman, R. B., & Sims, C. A. (1984). Forecasting and conditional projections using realistic prior distributions. Econometric reviews, 3(1), 1–100. doi:10.1080/07474938408800053.

Dua, P., & Ray, S. C. (1995). A BVAR model for the connecticut economy. Journal of forecasting, 14(3), 167–180. doi:10.1002/for.3980140303.

Dua, P., & Miller, S. M. (1996). Forecasting and analyzing economic activity with coincident and leading indexes: the case of Connecticut. Journal of forecasting, 15(7), 509–526. doi:10.1002/(SICI)1099-131X(199612)15:7<509::AID-FOR631>3.0.CO;2-G.

Dua, P., & Smyth, D. J. (1995). Forecasting U. S. home sales using BVAR models and survey data on households’ buying attitude for homes. Journal of forecasting, 14(3), 217–227. doi:10.1002/for.3980140306.

Dua, P., Miller, S. M., & Smyth, D. J. (1999). Using leading indicators to forecast U. S. home sales in a Bayesian vector autoregressive framework. Journal of Real Estate Finance and Economics, 18(2), 191–205. doi:10.1023/A:1007718725609.

Gupta, R. (2006). Forecasting the South African economy with VARs and VECMs. South African Journal of Economics, 74(4), 611–628. doi:10.1111/j.1813-6982.2006.00090.x.

Gupta, R. (2007). Forecasting the South African economy with Gibbs sampled BVECMs. South African Journal of Economics, 75(4), 631–643. doi:10.1111/j.1813-6982.2007.00141.x.

Gupta, R. (2008). Bayesian methods of forecasting inventory investment in South Africa. Forthcoming South African Journal of Economics.

Gupta, R., & Das, S. (2008). Spatial Bayesian methods for forecasting house prices in six metropolitan areas of South Africa. South African Journal of Economics, 76(2), 298–313. doi:10.1111/j.1813-6982.2008.00191.x.

Gupta, R., & Kabundi, A. (2008a). Forecasting macroeconomic variables using large datasets: dynamic factor model vs large-scale BVARs. Working Paper No. 200816, Department of Economics, University of Pretoria.

Gupta, R., & Kabundi, A. (2008b). A dynamic factor model for forecasting macroeconomic variables in South Africa. Working Paper No. 200815, Department of Economics, University of Pretoria.

Gupta, R., & Sichei, M. M. (2006). A BVAR model for the South African economy. South African Journal of Economics, 74(3), 391–409. doi:10.1111/j.1813-6982.2006.00077.x.

Gruben, W. C., & Hayes, D. W. (1991). Forecasting the Louisiana economy. Economic Review (March), 1–16.

Gruben, W. C., & Long, W. T. III (1988a). Forecasting the Texas economy: application and evaluation of a systematic multivariate time-series model outlook for 1989. Economic Review (January), 11–25.

Gruben, W. C., & Long, W. T. III (1988b). The New Mexico economy: outlook for 1989. Economic Review (November), 21–29.

Hamilton, J. D. (1994). Time series analysis, Second Edition. Princeton: Princeton University Press.

Hoen, J. G., & Balazsy, J. J. (1985). The Ohio economy: a time-series analysis. Economic Review, Federal Reserve Bank of Cleveland, Third Quarter, 25–36.

Hoen, J. G., Gruben, W. C., & Fomby, T. B. (1984). Time series forecasting model of Texas economy: a Comparison. Economic Review (May), 11–23.

Kinal, T., & Ratner, J. (1986). A VAR forecasting model of a regional economy: its construction and comparison. International Regional Science Review, 10(2), 113–126. doi:10.1177/016001768601000202.

Kuprianov, A., & Lupoletti, W. (1984). The economic outlook for the fifth district states in 1984: forecasts from vector autoregression models. Economic Review, Federal Reserve Bank of Richmond, February, 12–23.

LeSage, J. P., & Pan, Z. (1995). Using spatial contiguity as Bayesian prior information in regional forecasting models. International Regional Science Review, 18(1), 33–53. doi:10.1177/016001769501800102.

LeSage, J. P. (1999). Applied Econometrics Using MATLAB, http://www.spatial-econometrics.com.

Litterman, R. B. (1981). A Bayesian procedure for forecasting with vector autoregressions. Working Paper, Federal Reserve Bank of Minneapolis.

Litterman, R. B. (1986). Forecasting with Bayesian vector autoregressions — five years of experience. Journal of Business and Economic Statistics, 4(1), 25–38. doi:10.2307/1391384.

Liu, G., & Gupta, R. (2007). A small-scale DSGE model for forecasting the South African economy. South African Journal of Economics, 75(2), 179–193. doi:10.1111/j.1813-6982.2007.00118.x.

Liu, G., Gupta, R., & Schaling, E. (2007). Forecasting the South African economy: a DSGE-VAR approach. Working Paper No. 200724, Department of Economics, University of Pretoria.

Liu, G., Gupta, R., & Schaling, E. (2008). A new keynesian DSGE model for forecasting the South African economy. Forthcoming Journal of Forecasting.

NAR, National Association of Realtors. (2006). Existing-Home Sales Slip in April, (http://www.realtor.org/PublicAffairsWeb.nsf/Pages/EHS06April) Accessed on June 8, 2006.

Rapach, D. E., & Strauss, J. K. (2007). Forecasting real housing price growth in the eighth district States. Federal Reserve Bank of St. Louis. Regional Economic Development, 3(2), 33–42.

Rapach, D. E., & Strauss, J. K. (2008). Differences in housing price forecast ability across U.S. states (Forthcoming). International Journal of Forecasting.

Shoesmith, G. L. (1992). Co-integration, error correction and medium-term regional VAR forecasting. Journal of forecasting, 11(1), 91–109. doi:10.1002/for.3980110202.

Shoesmith, G. L. (1995). Multiple cointegrating vectors, error correction, and litterman’s model. International Journal of Forecasting, 11(4), 557–567. doi:10.1016/0169-2070(95)00613-3.

Sims, C. A. (1980). Macroeconomics and reality. Econometrica, 48(1), 1–48. doi:10.2307/1912017.

Sims, C. A., Stock, J. H., & Watson, M. W. (1990). Inference in linear time series models with some unit roots. Econometrica, 58(1), 113–144. doi:10.2307/2938337.

Spencer, D. E. (1993). Developing a Bayesian vector autoregression model. International Journal of Forecasting, 9(3), 407–421. doi:10.1016/0169-2070(93)90034-K.

Stock, J. H., & Watson, M. W. (2003). Forecasting output and inflation: the role of asset prices. Journal of Economic Literature, 41(3), 788–829. doi:10.1257/002205103322436197.

Theil, H. (1971). Principles of econometrics. New York: John Wiley.

Todd, R. M. (1984). Improving economic forecasting with Bayesian vector autoregression. Quarterly Review, Federal Reserve Bank of Minneapolis, Fall, 18–29.

Zellner, A. (1986). A tale of forecasting 1001 series: the Bayesian knight strikes again. International Journal of Forecasting, 2(4), 494–494. doi:10.1016/0169-2070(86)90094-4.

Zita, S. E., & Gupta, R. (2008). Modelling and forecasting the metical-rand exchange rate. Forthcoming ICFAI Journal of Monetary Economics.

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

The following is the design of the F matrix for the twenty largest US states based on the FOSC prior:

Rights and permissions

About this article

Cite this article

Gupta, R., Das, S. Predicting Downturns in the US Housing Market: A Bayesian Approach. J Real Estate Finan Econ 41, 294–319 (2010). https://doi.org/10.1007/s11146-008-9163-x

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11146-008-9163-x