Abstract

This study investigated the component skills underlying reading fluency in a heterogeneous sample of 527 eighth grade students. Based on a hypothesized measurement model and successive testing of nested models, structural equation modeling revealed that naming speed, decoding, and language were uniquely associated with reading fluency. These findings suggest that the ability to access and retrieve phonological information from long-term storage is the most important factor in explaining individual differences in reading fluency among adolescent readers. The abilities to process meaning and decode novel words were smaller but reliable contributors to reading fluency in adolescent readers.

The reading skills of students in secondary school are attracting increased scrutiny (Biancarosa & Snow, 2004). A recent survey indicates that over five million adolescents in the United States are not able to adequately read or understand the textbooks, teaching materials, or assignments used in their core academic classes (Perie, Grigg, & Donahue, 2005). According to Perie et al. (2005), 26% of middle school and high school students struggle to understand uncomplicated, short literacy tasks such as following written instructions including the entering of personal information on a form or locating the time of an event on a posted schedule. About 10% of adolescents leave elementary school as non-readers (Curtis, 2002). For minority students and those living in poverty, the statistics are even more alarming (Biancarosa & Snow, 2004). Thus, many secondary students struggle to meet the basic academic demands of middle school and high school because they have not mastered basic reading skills.

While considerable attention has focused on the key skills that beginning readers need to become proficient readers, far less attention has focused on the correlates of adolescent literacy and how to best teach adolescent struggling readers. This may be because the reading problems of adolescent struggling readers are more diverse and frequently greater in number and severity than those observed in elementary struggling readers (Vaughn et al., in press). Specifically, some secondary students may struggle with the same reading-related component skills as elementary students (e.g., the alphabetic principle, decoding, fluency), whereas other secondary students may struggle because of the cumulative effect of inadequate reading experience (e.g., scant vocabulary, limited background knowledge, and insufficient comprehension strategies) (Biancarosa & Snow, 2004). In addition, a small but significant proportion of adolescent struggling readers will present with significant deficits in language skills despite adequate development of word reading and fluency skills (Catts, Adlof, & Weismer, 2006; Leach, Scarborough, & Rescorla, 2003). Thus, despite the significant variation in the nature and severity of reading difficulties experienced by adolescent struggling readers, little research has focused on the factors underlying reading proficiency in adolescent readers.

One factor believed to underlie reading proficiency in both beginning readers and adolescent readers is fluency. The development of reading fluency is considered a critical component of general reading ability given its high association with improved reading comprehension (Chard, Vaughn, & Tyler, 2002; Fuchs, Fuchs, Hosp, & Jenkins, 2001; Fuchs, Fuchs, & Maxwell, 1988). Reading fluency is generally defined as the ability to read single words quickly and accurately both in and out of context (Jenkins, Fuchs, van den Broek, Espin, & Deno, 2003; Fuchs et al., 2001). This conceptualization of reading fluency is supported by research which indicates that sight word reading and text reading fluency are highly correlated. For example, Torgesen, Wagner, and Rashotte (1998) found that both sight word efficiency and phonemic decoding efficiency subtests of the test of word reading efficiency correlated 0.80 with the rate and accuracy scores of the Gray Oral reading test. Also, regression based analyses show that the additional contribution of other variables over and above sight word and non-word reading efficiency is very small (Torgesen, Rashotte, & Alexander, 2001). Because sight word efficiency and reading fluency are highly correlated and little variance remains unexplained when sight word efficiency predicts reading fluency, it can be argued that reading words and reading text share many of the same underlying skills and represent the same latent construct (Fletcher, Lyon, Fuchs, & Barnes, 2006).

Previous research suggests that four factors are primarily involved in the establishment of reading fluency: word reading accuracy, naming speed, working memory, and language comprehension (Berninger, Abbott, & Vermeulen, 2002; Russell, 2002; Torgesen et al., 2001; Wolf & Cohen, 2001). The first factor, word reading accuracy, refers to the ability to identify or decode words correctly (Torgesen & Hudson, 2006). According to Ehri (1997), a reader may use several methods to read words correctly. For example, readers may transform a word’s letters to sounds and blend them to obtain a pronunciation, chunk letters and blend them to obtain a pronunciation, read words by sight, identify words by analogy to known words, or use context and background knowledge to guess the word’s pronunciation and meaning. Use of these methods requires knowledge of the alphabetic principle (e.g., knowledge of how graphemes map onto phonemes), the ability to blend sounds to obtain the word’s pronunciation, the ability to store and retrieve a large number of words from memory, and the ability to use the meaning of text to facilitate word recognition (Ehri & McCormick, 1998; Torgesen & Hudson, 2006; Tunmer & Chapman, 1996). Further, research into the predictive relationship between word reading accuracy and reading fluency has found small but significant independent contributions of word reading accuracy to reading fluency for beginning readers (Bowers, 1993; Geva & Zadeh, 2006; Torgesen et al., 2001). Thus, word reading accuracy appears to play an important role in the establishment of reading fluency.

The second factor, language comprehension, refers to the reader’s ability to construct meaning. Language comprehension might affect fluency in one of two ways. First, readers might adjust their reading rate in order to more fully understand what has just been read. Individual differences might be caused by variations in readers’ abilities to process different text types (e.g., technical, expository, narrative, persuasion, classification), make inferences, incorporate background knowledge, process sentence structures, and make connections between the meanings of words and sentences (Torgesen & Hudson, 2006). Second, previous research suggests that words are read faster in context than in isolation (Jenkins et al., 2003; Stanovich, 1980) and that for beginning readers and struggling readers, context aids word identification (Ben-Dror, Pollatsek, & Scarpati, 1991; Bowey, 1985; Pring & Snowling, 1986). Previous research into the predictive relationship between reading fluency and language comprehension has found small but significant independent contributions of language to reading fluency for beginning readers (Geva & Zadeh, 2006; Torgesen et al., 2001). For older readers, language comprehension may account for a greater portion of the variance in reading fluency, since the majority of these readers are reading for meaning rather than learning to read. Thus, it seems likely that language comprehension underlies reading fluency among adolescent readers.

The third factor, naming speed, as assessed by rapid automatized naming (RAN) tasks, requires speeded naming of serially presented stimuli such as letters and objects (Misra, Katzir, & Wolf, 2004). Previous research has shown that naming speed accounts for unique variance in reading fluency (Bowers, 1993; Geva & Zadeh, 2006; Schatschneider, Fletcher, Francis, Carlson, & Foorman, 2004; Torgesen, Wagner, Rashotte, Burgess, & Hecht, 1997). Although it has been demonstrated that naming speed’s contribution is unique and independent, Bowers (1993) found that its contribution was smaller than that of word reading abilities. This finding emphasizes the significant role decoding plays in beginning readers who are mastering grapheme–phoneme correspondences and building a sight word vocabulary. However, little is known about the relationship between naming speed and reading fluency in adolescent readers, because studies examining this relationship have been conducted exclusively on samples of beginning readers. It is therefore possible that naming speed accounts for greater variance in adolescent readers, given that there is less variability in word reading abilities at this point in reading development.

The fourth factor influencing how quickly one reads words is the reader’s working memory capacity, or the ability to simultaneously store and manipulate information during complex tasks (Baddeley, 2000). It is assumed that working memory is controlled by a limited capacity attentional system (Baddeley, 2000). That is, a task can only be completed if the required cognitive demand does not exceed the resources available to the individual. In the case of reading, LaBerge and Samuels (1974) propose that when the pronunciations and meanings of words are recognized automatically, fewer cognitive resources are used to decode words and greater cognitive resources can be allocated to the comprehension of text. In such instances, decoding and comprehension are executed simultaneously. However, struggling readers frequently use the full extent of their available cognitive resources for the decoding of words. To fully understand text, the reader must then switch attentional resources from decoding to comprehension. Such alternating is resource costly and slow, and it often interferes with comprehension and compromises reading fluency (Breznitz, 2006). Previous research has found that working memory uniquely contributed to reading fluency after controlling for word reading accuracy, word reading efficiency, and naming speed (Russell, 2002). Thus, it seems likely that working memory will play either a direct or indirect role in the establishment of reading fluency.

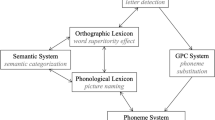

Given that individual differences in reading fluency are likely associated with multiple factors (Torgesen & Hudson, 2006), a model of reading fluency is provided in Fig. 1 to illustrate the multi-component nature of this skill. Specifically, four cognitive factors are hypothesized to influence reading fluency: (1) decoding; (2) naming speed; (3) language comprehension; and (4) working memory. Figure 1 also includes nonverbal intelligence. Previous studies have found that nonverbal intelligence and general reading ability correlate at about .40 (Fletcher, Denton, & Francis, 2005). Thus, in order to account for some of the individual differences that may or may not be associated with fluency, nonverbal intelligence was included as a covariate.

Although prior work provides the basis for hypothesizing this model of reading fluency (Bowers, 1993; Geva & Zadeh, 2006; Katzir et al., 2006; Russell, 2002; Torgesen et al., 2001), this body of research primarily involves elementary school children with reading difficulties and has not examined the nature of reading fluency in a large heterogeneous sample of adolescent readers who present the full range of reading ability. Because 26% of all adolescents in the United States read below the basic levels of reading proficiency and 10% leave elementary school as non-readers, there is a great need to understand which skills predict reading fluency among adolescent readers (e.g., Alliance for Excellent Education, 2003; Grigg, Daane, Jin, & Campbell, 2003; Hock & Deshler, 2003; Kirsch, Jungeblut, Jenkins, & Kolstad, 1993; Thernstrom & Thernstrom, 2003). In addition, previous studies have primarily used correlational or regression analyses. Traditional multiple regression procedures maximize prediction of a dependent variable by assigning weights to predictors. However, multiple regression does a poor job of sorting out the relative importance of variables (Kline, 2005). Further, most prior work included sight word reading efficiency as an independent variable (Katzir, 2002; Russell, 2002; Torgesen & Hudson, 2006). In these instances, sight word efficiency accounted for most of the unique variance in reading fluency, leaving little opportunity to identify skills that account for individual differences in reading fluency operationally defined as word level and text level fluency. Therefore, the present study sought to evaluate the extent to which the abilities illustrated in Fig. 1 accounted for unique variance in reading fluency among adolescent readers. The study extends previous research on the component skills that underlie reading fluency abilities by using a large, heterogeneous sample of adolescent readers and a latent variable approach.
In addition, the study tests the extent to which sight word reading and fluency of connected text represent the same underlying construct. Based on previous research, we hypothesized that decoding, naming speed, and language comprehension would all uniquely account for variance in reading fluency whereas working memory and nonverbal intelligence would share variance with all other factors in the model. Finally, we hypothesized that sight word reading and fluency of connected text would be highly correlated and represent a single construct in adolescent readers.

Method

Participants

The students in this investigation were selected from a population-based sample of 7,218 monolingual English-speaking students who participated in an epidemiological study of specific language impairments among kindergarten children in Iowa (see Tomblin et al., 1997). Following the completion of this epidemiological investigation, a subsample of children was recruited to participate in a longitudinal study of the language, literacy, and cognitive abilities of children (see Tomblin, Zhang, Weiss, Catts, & Ellis Weismer, 2004). Specifically, all students who failed the kindergarten language screening and diagnostic language battery as well as a random sample of children who passed both assessment batteries were invited to participate in the longitudinal study. Non-monolingual English speakers, children with autism or mental retardation, and children with sensory deficits or neurological disorders were excluded. In all, the sample included 123 children with language impairments; 103 children with nonverbal cognitive impairments; 102 children with language and nonverbal cognitive impairments; and 276 children without language or nonverbal cognitive impairments (Tomblin et al., 2004). The present study is based on data collected at the eighth grade follow up. Data were obtained from 527 of the 604 children. The language and nonverbal cognitive abilities of these 527 students as measured in kindergarten did not differ significantly from those of students who did not participate in the longitudinal study (Catts, Adlof, & Hogan, 2005).

Materials

The measures included in this study represented a subset of language, literacy, and nonverbal cognitive measures obtained from a larger assessment battery (see Table 1). Tasks commonly used to assess reading fluency and its component skills (decoding, language comprehension, working memory, naming speed, and nonverbal cognitive abilities) were selected for this investigation in order to test the model of reading fluency shown in Fig. 1. Multiple measures of each construct were included so that the relations among latent abilities could be examined independent of task-specific factors or measurement error (Kline, 2005). In the next section, we describe each specific task or assessment according to the latent construct it indexed.

Decoding

Latent decoding ability was indexed by the Woodcock reading mastery test-revised (WRMT-R; Woodcock, 1987) word attack and word identification subtests. The word attack subtest assessed participants’ ability to apply phonic and structural analysis skills in order to pronounce nonsense words in isolation. The word identification subtest of the WRMT-R assessed participants’ ability to read high and low frequency real words in isolation. Split-half reliability for both subtests exceeds .90.

Language comprehension

Latent language comprehension ability was indexed by the Peabody picture vocabulary test-revised (PPVT-R; Dunn & Dunn, 1987), the comprehensive receptive and expressive vocabulary test-revised (CREVT-R; Wallace & Hammill, 1994), two measures from the qualitative reading inventory-2 (QRI-2; Leslie & Caldwell, 1995), and the concepts and directions and recalling sentences subtests from the clinical evaluation of language fundamentals-3 (CELF-3; Semel, Wiig, & Secord, 1995).

The PPVT-R assessed participants’ receptive vocabulary skill. The split half reliability of the PPVT-R is .96. The CREVT-R assessed semantic abilities. For this test, the participant was presented a series of 10 pictures depicting familiar themes. He/she was asked to define words characterized in the picture. Alternate form reliability is .83.

Two passages of eighth grade reading difficulty from the QRI-2 assessed participants’ listening comprehension abilities. Specifically, participants listened to two audio taped passages (200–400 words in length) presented via headphones and then responded to 10 comprehension questions (i.e., five implicit questions and five explicit questions) presented orally by the examiner. Alternate form reliability is .80.

The CELF-3 concepts and directions subtest assessed abilities that include the understanding, recalling, and execution of oral commands containing syntactic structures that increase in length and complexity. The participant was required to identify pictured geometric objects in response to commands spoken by the examiner (e.g., Before you point to the little, white triangle, point to the little squares). Test–retest reliability is .69. The CELF-3 recalling sentences subtest assessed the recall and reproduction of sentences of increasing length and syntactic complexity. The participant was required to repeat sentences spoken by the examiner. Split-half reliability is .87.

Naming speed

Latent naming speed ability was indexed by the Rapid Letter Naming subtest of the comprehensive test of phonological processing (CTOPP; Torgesen, Wagner, & Rashotte, 2000), Form A, and two unstandardized measures of naming speed. The CTOPP rapid letter naming subtest asks participants to name 72 English letters as quickly and accurately as possible. The two unstandardized measures of naming speed require participants to name as many letters (or colors) as quickly and as accurately as possible in 15 seconds. The score on each of the two unstandardized measures was the number of letters or colors accurately identified in 15 seconds (Compton, Olson, DeFries, & Pennington, 2002). The test–retest reliabilities of rapid automatized naming (RAN)-Letters and RAN-Colors are .72 and .89, respectively.

Working memory

Latent working memory ability was indexed by two subtests from the competing language processing task (CLPT; Gaulin & Campbell, 1994), the Auditory Working Memory subtest from the Woodcock–Johnson-III test of cognitive abilities (WJ-III; Woodcock, McGrew, & Mather, 2001), and a nonword repetition task.

For the first listening span task adapted from the CLPT, the examiner stated a set of three-word sentences. The student indicated whether each was true or false. After hearing all sentences within a set (i.e., 1–6) and specifying if they were true or false, the student was required to recall the last word of each sentence in the order presented. The second listening span task adapted from the CLPT presented the student with 10 sets of sentences. The student indicated whether each was grammatical or agrammatical. Following the presentation of all sentences within the set (n = 2–6), the participant was required to recall the last word of each sentence in the order presented.

For the WJ-III auditory working memory subtest, the participant was presented an audio-recorded string of digits and words via headphones. The participant was required to reorder the information, repeating the objects first in sequential order followed by the digits in sequential order. Split-half reliability is .84.

Phonological short-term memory was assessed with a nonword repetition task. All children were administered all 16 items. Four sets of four nonwords were presented via headphones. One set was presented for each nonword length (i.e., one to four syllables). Syllables did not correspond to those comprising English words (Dollaghan & Campbell, 1998). Participants’ phonological short-term memory scores were calculated in terms of percentage of consonants correctly produced.

Nonverbal cognitive abilities

Latent nonverbal cognitive ability was indexed by the block design and picture completion subtests of Wechsler intelligence scale for children-III (WISC-III; Wechsler, 1991). The average internal consistency reliability coefficients for the Picture Completion and Block Design subtests are .77 and .69, respectively.

Reading fluency

Latent reading fluency ability was indexed by the test of word reading efficiency (TOWRE; Torgesen et al., 1998) sight word efficiency and phonemic decoding efficiency subtests, the Gray Oral reading test-3, and the words correct per minute for passage 7 of the GORT-3. Sight word efficiency assessed the number of real words that could be accurately read within 45 s. The phonemic decoding efficiency subtest assessed the number of pronounceable nonwords that could be accurately identified within 45 s. The test–retest reliability exceeds .84 for both subtests.

The Gray Oral reading test-3 (GORT-3; Wiederholt & Bryant, 1992) was administered to evaluate oral reading rate and accuracy. The participant was required to read aloud 2–12 passages as quickly and as accurately as possible. Following each passage, the participant was required to respond to five comprehension questions. For the purposes of this investigation, the Rate score, which represents the time in seconds required to read the passage and the number of oral reading errors, was used in the analyses. Split-half reliability was .92.

Passage 7 of the GORT-3 was also administered to all participants following the standardized administration of the GORT-3. Passage 7 is 129 words in length and has a difficulty level of 1090 Lexiles (Lexile Framework for Reading, 2005). For this task, the participant was directed to read the passage as quickly and as accurately as possible. The reading fluency raw score for passage 7 was the number of words read correctly in 1 min. Reading fluency standard scores were obtained by calculating z-scores based on the 527 participants, weighted for prevalence of language and/or cognitive impairments in Iowa kindergarteners.

Procedures

Testing

Participants were individually tested during two 2-h sessions. All examiners possessed either a bachelor’s or master’s degree in speech-language pathology or education. Examiners certified in speech-language pathology administered all language measures. All examiners completed an extensive training program conducted by the investigators regarding test administration and scoring procedures for each task within the assessment battery. Testing was completed in specially designed vans that were parked at the participants’ public schools or homes.

Standardizing scores

To aid analyses and to put all scores on a common metric, all scores from the language, literacy, and nonverbal cognition measures were converted to z-scores based on the present sample means and standard deviations.

Weighting scores

As previously noted, the present sample was composed of four qualitatively different groups of children. Approximately 50% of the sample consisted of students with three different types of language and/or nonverbal cognitive impairments, and the remaining 50% consisted of typically developing students. Thus, the sample contained a larger proportion of students with language and/or nonverbal impairments than exists in the general population. This overrepresentation of language and/or cognitive impairments could potentially bias results and limit the extent to which findings regarding the language and literacy abilities of adolescents could be generalized to the general population. To correct for this bias, the likelihood that a student with his or her gender, language abilities, and nonverbal cognitive abilities would have participated in the representative sample investigated in the epidemiological study was calculated (Tomblin et al., 1997). Each student’s score was then weighted accordingly. Although this sample of 527 eighth grade students contained more students with language and/or nonverbal cognitive impairments than is typically observed in the general population, the scores of these students were given proportionally less weight to ensure that the results more closely represented the population of adolescents at large (see also Catts, Fey, & Zhang 1999, 2001 for further description of the weighting procedures).
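The effect of such weighting on standardized scores can be illustrated with a minimal sketch. It assumes that each student carries a single weight that is smaller for oversampled groups; the scores, the weights, and the function name `weighted_z_scores` are hypothetical, and the actual procedure described by Tomblin et al. (1997) and Catts et al. (1999, 2001) may differ in its details.

```python
import math

def weighted_z_scores(scores, weights):
    """Standardize scores against a weighted mean and weighted SD."""
    total = sum(weights)
    mean = sum(w * x for x, w in zip(scores, weights)) / total
    var = sum(w * (x - mean) ** 2 for x, w in zip(scores, weights)) / total
    sd = math.sqrt(var)
    return [(x - mean) / sd for x in scores]

# Hypothetical raw scores. The first two students stand in for an
# oversampled group, so they receive smaller (assumed) weights.
scores = [82.0, 88.0, 101.0, 110.0]
weights = [0.4, 0.4, 1.6, 1.6]
z = weighted_z_scores(scores, weights)
```

With equal weights the function reduces to ordinary z-scores (using the population form of the standard deviation), so the weights only matter when groups are sampled at different rates.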

Statistical approach

The major aim of the present study was to assess the influences of individual differences in language, literacy, and cognitive abilities on individual differences in reading fluency abilities. For a number of reasons, confirmatory factor analysis (CFA) and structural equation modeling (SEM) represent the most appropriate method to examine shared and specific effects of language, literacy, and cognition in explaining individual differences in reading fluency. First, CFA seeks to determine the goodness of fit of the a priori model of reading fluency. Second, SEM allows a more precise description of the specific variance that each language, literacy, and cognitive ability contributes to reading fluency since all abilities are evaluated simultaneously. Similarly, SEM offers information concerning the specific, unique contributions of the different factors to reading fluency.

Missing data and outliers

Three of the 527 cases were missing a small amount of data. Missing data were replaced using expectation maximization (EM). The weighted means and standard deviations for observed variables are included with the correlation matrix shown in Table 2. Scores were generally in the average range, with some in the low average range, and correlations were moderate for methodologically related skills.

Examination of the distributional properties of the raw data indicated that most raw scores and standard scores showed some moderate departures from normality. Fewer than 2% of cases were univariate outliers, defined as scores on any task greater than 3.0 standard deviations from the mean. Approximately 2% of the cases were multivariate outliers, defined by a Mahalanobis distance significant at p < .001. Although the use of non-normal data may distort relations among the variables of a model and reduce model fit, analyses conducted with and without outliers did not differ significantly. Thus, the skew and kurtosis were not corrected and outlying cases were not deleted from the sample. EQS (Bentler, 1995) was used to perform CFA and SEM of the raw data to evaluate the adequacy of the model shown in Fig. 1.
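The two outlier definitions can be sketched as follows. This is an illustration only: it uses two variables rather than the full battery, toy data rather than study data, and hypothetical function names. For two variables the chi-square cutoff at p = .001 has a closed form, because the upper-tail probability with 2 degrees of freedom is exp(-x / 2).

```python
import math

def univariate_outliers(values, cutoff=3.0):
    """Indices of values more than `cutoff` sample SDs from the mean."""
    n = len(values)
    mean = sum(values) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / (n - 1))
    return [i for i, v in enumerate(values) if abs(v - mean) / sd > cutoff]

def mahalanobis_sq_2d(points):
    """Squared Mahalanobis distance of each (x, y) point from the centroid."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    # Sample covariance matrix entries (n - 1 denominator).
    sxx = sum((p[0] - mx) ** 2 for p in points) / (n - 1)
    syy = sum((p[1] - my) ** 2 for p in points) / (n - 1)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / (n - 1)
    det = sxx * syy - sxy ** 2
    result = []
    for x, y in points:
        dx, dy = x - mx, y - my
        # Quadratic form with the 2x2 inverse covariance written out.
        result.append((syy * dx * dx - 2 * sxy * dx * dy + sxx * dy * dy) / det)
    return result

# Chi-square critical value for df = 2 at p = .001 (about 13.82).
CRITICAL_DF2 = -2.0 * math.log(0.001)

# Toy data: 20 unremarkable cases plus one extreme case at index 20.
corners = [(1.0, 1.0), (1.0, -1.0), (-1.0, 1.0), (-1.0, -1.0)] * 5
points = corners + [(10.0, 0.0)]

d2 = mahalanobis_sq_2d(points)
multivariate_flags = [i for i, d in enumerate(d2) if d > CRITICAL_DF2]
univariate_flags = univariate_outliers([p[0] for p in points])
```

In this toy example both rules flag only the last case. With more than two variables the cutoff would come from a chi-square table or a statistics library, with degrees of freedom equal to the number of variables.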

Results

Evaluation and comparison of measurement models

Preliminary confirmatory factor analysis of measurement models

CFA of the data tested whether the established dimensionality, shown in Fig. 1, and factor loading pattern fit this heterogeneous sample of adolescent readers. CFAs were conducted on covariance matrices with z-scores weighted to represent the general population of eighth grade students. Factor variances or factor disturbances were fixed to 1.0 to identify the models and standardize the factors so that factor covariances were readily interpretable. Better model fits were indicated by (a) small chi-square values relative to the number of degrees of freedom, (b) large probability values (i.e., >.05) associated with the model chi-square, (c) values for a comparative fit index (CFI) greater than .95, and (d) values for a root mean square residual less than .08 (Bentler, 1995).
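For reference, the comparative fit index is commonly defined as shown here, where $\chi^2_M$ and $df_M$ belong to the tested model and $\chi^2_B$ and $df_B$ to the baseline (independence) model. The baseline values are not reported in this section, so the expression is offered as a general definition and not as a way to reproduce the reported CFI values; EQS may apply robust corrections that are not shown.

$$
\mathrm{CFI} = 1 - \frac{\max\left(\chi^2_M - df_M,\ 0\right)}{\max\left(\chi^2_M - df_M,\ \chi^2_B - df_B,\ 0\right)}
$$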

Figure 1 illustrates a model of reading fluency (Model 1). Model 1 hypothesizes that reading fluency abilities of adolescents are largely driven by four core skills after controlling for nonverbal cognition: decoding, language comprehension, naming speed, and working memory. Model 1 operationally defines reading fluency as the ability to read words quickly and accurately both in and out of context (Jenkins et al., 2003) and suggests that the skills underlying word reading fluency and text reading fluency are largely similar. This operationalization was supported by a separate CFA that found a high correlation between latent word reading fluency and latent text reading fluency (.98). Therefore, in the primary analyses measures of word reading fluency and text reading fluency both indexed the latent ability of reading fluency.

Three alternate nested measurement models (see Table 3) were also tested in order to determine if the Model 1 fit indices could be improved with slight alterations. First, latent variable research by Shaywitz, Fletcher, and Shaywitz (1996) suggests that sentence span measures similar to the CELF-3 concepts and directions and recalling sentences more reliably index working memory than language comprehension. To test this notion, Model 2a examined if model fit could be improved by allowing both CELF-3 subtests to cross load on working memory and language factors. Model 2b tested whether model parsimony could be further improved without a decrement in model fit by dropping the CELF-3 subtest scores from the language factor and loading them solely on the Working Memory factor. The final measurement model, Model 3, evaluated if model fit could be further improved by correlating the residual variances of the two working memory subtests adapted from the CLPT since both utilized the same administration procedures.

Table 3 shows that Model 1, a six factor measurement model including decoding, naming speed, language comprehension, working memory, nonverbal cognition, and reading fluency, fit the data well (S-Bχ2 [df = 155] = 423.46, p < .001, CFI = .951). Model 2a evaluated the effect of allowing the CELF-3 subtest scores (i.e., concepts and directions and recalling sentences) to cross load on both the language comprehension factor and the working memory factor relative to Model 1. Table 3 indicates that Model 2a, which included the two cross loadings, fit (S-Bχ2 [df = 153] = 341.76, p < .001, CFI = .966) significantly better than Model 1 (S-Bχ2 difference = 81.7, p < .001). Model 2b (Table 3), in which the CELF-3 subtests indexed only working memory, fit worse than Model 2a (S-Bχ2 [df = 155] = 362.08, p < .001, CFI = .962). Therefore, we retained the best fitting measurement model, Model 2a, which was a six-factor model with the CELF-3 recalling sentences and concepts and directions subtests allowed to load on both language comprehension and working memory.

The next alternative model (see Model 3 in Table 3) tested whether the residual variances of the two listening span measures should be allowed to correlate freely. We had hypothesized that allowing the residual variances of these two measures to correlate would further improve the fit of Model 2a because the measures are very similar in design and procedures. Indeed, allowing the residual variances of the two Listening Span subtest scores to correlate resulted in an improvement in model fit (S-Bχ2 [df = 152] = 315.28, p < .001, CFI = .967).
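The nested-model comparisons can be retraced with a simple chi-square difference calculation on the reported values. This sketch is a simplification: a proper Satorra–Bentler scaled difference test needs scaling correction factors that are not reported here, so the plain differences below are only approximate. The function names are hypothetical, and the tail probability is implemented only for the 1 and 2 degree-of-freedom cases that occur in these comparisons.

```python
import math

def chi2_sf(x, df):
    """Upper-tail chi-square probability; closed forms for df = 1 or 2."""
    if df == 1:
        return math.erfc(math.sqrt(x / 2.0))
    if df == 2:
        return math.exp(-x / 2.0)
    raise ValueError("this sketch only handles df = 1 or df = 2")

def difference_test(chi_restricted, df_restricted, chi_full, df_full):
    """Unscaled chi-square difference between two nested models."""
    diff = chi_restricted - chi_full
    ddf = df_restricted - df_full
    return diff, ddf, chi2_sf(diff, ddf)

# (chi-square, df) pairs as reported in the text.
model_1 = (423.46, 155)
model_2a = (341.76, 153)
model_2b = (362.08, 155)
model_3 = (315.28, 152)

comparisons = {
    "Model 1 vs. Model 2a": difference_test(*model_1, *model_2a),
    "Model 2b vs. Model 2a": difference_test(*model_2b, *model_2a),
    "Model 2a vs. Model 3": difference_test(*model_2a, *model_3),
}

for label, (diff, ddf, p) in comparisons.items():
    print(f"{label}: difference = {diff:.2f}, df = {ddf}, p = {p:.2g}")
```

The first comparison reproduces the reported difference of 81.7 on 2 degrees of freedom, and all three differences fall well beyond the p < .001 threshold under this unscaled approximation.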

In summary, Satorra-Bentler chi-square difference tests and comparisons of standardized fit indices determined that the best fitting measurement model (i.e., Model 3, Table 3) was a six-factor model with the CELF-3 concepts and directions and recalling sentences subtests cross loading on the language comprehension and working memory factors and the residual variances of the two listening span measures allowed to freely correlate. Table 4 displays the standardized factor loadings of Model 3. Table 4 shows that the concepts and directions and recalling sentences subtests of the CELF-3 more reliably load on the working memory factor than the language comprehension factor. Additionally, rapid letter naming (e.g., CTOPP RAN-letters and 15-second naming of letters) is a better indicator of the naming speed factor than color naming. However, this could simply be a function of having two letter naming tasks and only one object naming task. Factor intercorrelations show that decoding is highly correlated with working memory (.68), language comprehension (.70), and reading fluency (.80). Language comprehension is highly correlated with nonverbal cognition (.72), working memory (.68), and moderately with reading fluency (.68). Further, naming speed correlates highly with reading fluency (.81).

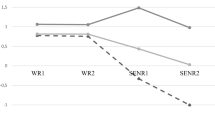

Primary structural equation models

Structural equation modeling is a statistical procedure that incorporates estimation of models with regressions among factors. In this investigation, two structural models were tested to examine the skills underlying reading fluency. In the first structural model latent reading fluency was indexed by the GORT-3 rate score, reading fluency score on GORT-3 passage 7, TOWRE sight word efficiency, and TOWRE phonemic decoding efficiency. This reading fluency factor was regressed on five component skill factors: decoding, working memory, language comprehension, naming speed, and nonverbal cognition (see Fig. 2). This structural model, Model 4, fit the data well (S-Bχ2 [df = 152] = 334.01, p < .001, CFI = .967; RMSEA = .048). This structural equation model entered all predictors simultaneously, paralleling simultaneous regression but with latent independent variables and a latent dependent variable. In Table 4, the standardized factor loadings of this structural model are displayed.

Model 4 operationally defined reading fluency as the ability to quickly and accurately read words in and out of context (Jenkins et al., 2003). In order to demonstrate that the skills underlying fluency for words and text also underlie fluency for connected text alone, a second structural model was tested. In this structural model, latent reading fluency was indexed only by measures of connected text fluency, i.e., the GORT-3 rate score and the reading fluency score on GORT-3 passage 7. This reading fluency factor was regressed on the same component skill factors: decoding, working memory, language comprehension, naming speed, and nonverbal cognition. This structural model, Model 5, also fit the data quite well (S-Bχ2 [df = 117] = 178.44, p < .001, CFI = .986; RMSEA = .032). In Table 4, the standardized factor loadings of this structural model are displayed.
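As a rough check on the fit statistics reported for Models 4 and 5, the RMSEA point estimate can be recomputed from the chi-square, its degrees of freedom, and the sample size. The sketch below uses the common formula sqrt(max(χ2 − df, 0) / (df × (N − 1))) and assumes that the sample of 527 students given in the abstract is the analysis N and that the scaled chi-square is simply plugged into the formula. Both are assumptions, since the text does not state how the software computed RMSEA. The function name `rmsea` is illustrative.

```python
import math


def rmsea(chi_square, df, n):
    """RMSEA point estimate: sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    return math.sqrt(max(chi_square - df, 0.0) / (df * (n - 1)))


N = 527  # sample size from the abstract; assumed to be the analysis N

print(round(rmsea(334.01, 152, N), 3))  # Model 4 (word and text fluency)
print(round(rmsea(178.44, 117, N), 3))  # Model 5 (text fluency only)
```

Under these assumptions the two values round to .048 and .032, which agrees with the RMSEA values reported for Model 4 and Model 5.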

In both structural models, neither nonverbal cognition nor working memory accounted for unique variance in reading fluency among adolescent readers. Thus, the relation of nonverbal cognition and working memory with reading fluency in adolescents is accounted for by variance they have in common with decoding, language comprehension, and naming speed. Findings also indicated that after controlling for the effects of nonverbal cognition and working memory, decoding, language comprehension, and naming speed accounted for unique variance in reading fluency. Specifically, when reading fluency was indexed by measures of word fluency and text fluency, naming speed accounted for the largest proportion of variance in reading fluency, uniquely accounting for 28% of the variance. Decoding uniquely accounted for 15% of the variance in reading fluency. Language comprehension uniquely accounted for 5% of the variance in reading fluency. Further, the model itself accounted for 88% of the total variance in reading fluency, with 40% representing shared variance. When reading fluency was indexed solely by measures of text fluency, the same pattern of findings emerged. Naming speed accounted for 25% of the variance in reading fluency, decoding accounted for 10%, and language comprehension accounted for 8.5% of the variance in reading fluency. The model accounted for 83% of the total variance in reading fluency, with 39.5% representing shared variance.
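The variance figures above follow a simple decomposition: total variance explained equals the sum of the unique contributions plus the variance the predictors share. A minimal sketch of that arithmetic, using only the percentages reported in the text (the function and variable names are illustrative):

```python
def shared_variance(total_pct, unique_pcts):
    """Shared variance = total variance explained minus the sum of unique contributions."""
    return total_pct - sum(unique_pcts.values())


# Unique variance (%) per predictor, as reported in the text.
model_4 = {"naming speed": 28.0, "decoding": 15.0, "language comprehension": 5.0}
model_5 = {"naming speed": 25.0, "decoding": 10.0, "language comprehension": 8.5}

print(shared_variance(88.0, model_4))  # 40.0 (word and text fluency)
print(shared_variance(83.0, model_5))  # 39.5 (text fluency only)
```

Nonverbal cognition and working memory are left out of the dictionaries because the text reports no unique contribution for either factor.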

Discussion

The present study examined the extent to which a latent variable model of reading fluency might account for individual differences in reading fluency among adolescent readers. These findings show that after controlling for nonverbal cognition, the ability to decode text, language comprehension, and naming speed largely explained individual differences in reading fluency among adolescent readers. Decoding, language comprehension, and naming speed account for a significant portion of the unique variance in reading fluency. No unique effects of nonverbal cognition and working memory were revealed. Further, this model accounted for greater than 80% of the variance in reading fluency.

The results of this study clearly show that decoding, the ability to identify words accurately, is an important component of reading fluency. In order to secure complete representations of words in memory (e.g., spelling, pronunciation, and meaning) the reader needs sufficient familiarity with the sounds letters make, how to segment words into phonemes and blend sounds to generate the word’s pronunciation, and how to identify words that are spelled irregularly (Ehri, 1997). Finally, readers must practice mapping the pronunciation of a word onto its spelling (Ehri, 1997). These fundamental components of word reading accuracy maintain their significance even in later stages of reading development.

Language comprehension also accounted for unique variance in reading fluency. Language comprehension, or the ability to process meaning, helps readers construct mental representations of the text (Kintsch & van Dijk, 1978). According to Kintsch and van Dijk (1978), the mental representations generated by the reader represent semantic interpretations of the text. These mental representations are stored in memory for subsequent use. Uses include comprehension monitoring, inference making, understanding figurative language, defining words, and figuring out unknown words (Cragg & Nation, 2006; Nation, Clarke, Marshall, & Durand, 2004; Perfetti, Marron, & Foltz, 1996; Yuill & Oakhill, 1991). Interestingly, language comprehension played a greater role in explaining individual differences in reading fluency when reading fluency was indexed solely by measures of connected text. This suggests that language comprehension may contribute to students’ ability to read fluently because the activation of meaning facilitates the identification of words (Jenkins et al., 2003) and helps the reader to understand what was read (Torgesen et al., 2006).

Although the results of the study show that decoding ability and language comprehension play important roles in the establishment of reading fluency among adolescent readers, naming speed as measured by RAN tasks was the factor most uniquely related to reading fluency. RAN accounted for approximately 25% of the variance in reading fluency, over and above language comprehension and decoding ability. This finding differs from results of studies that have evaluated the relative importance of naming speed among beginning readers. For example, several studies of beginning readers that provide zero order correlational data have demonstrated that decoding abilities are more highly correlated with reading fluency than naming speed (Geva & Zadeh, 2006; Schatschneider et al., 2004). In addition, regression analyses conducted by Bowers (1993) revealed that in grades 2 and 4, decoding accounted for greater unique variance in reading fluency than naming speed (after controlling for phonological awareness abilities). Collectively, these findings suggest that for beginning readers the most limiting factor is word reading abilities. That is, at this stage in reading development, readers are primarily focused on learning to read words accurately and building a sight word vocabulary. Results of this study, however, suggest that for adolescent readers, the most limiting factor is associated with naming speed as measured by RAN tasks. By adolescence, individual differences in decoding impact reading fluency ability to a much lesser degree and factors related to individual differences in RAN tasks impact reading fluency ability to a much greater degree.

This naturally leads one to consider what RAN tasks measure. Early hypotheses regarded RAN performance as an index of phonological processing abilities (Wagner & Torgesen, 1987). This account proposed that RAN tasks evaluated one’s ability to access phonological codes from long-term storage. In support of this view, several studies have demonstrated that RAN, phonological memory, and phonological awareness are moderately to highly correlated skills that share a significant proportion of variance (Anthony et al., 2006; Anthony, Williams, McDonald, & Francis, 2007; Schatschneider, Carlson, Francis, Foorman, & Fletcher, 2002; Wagner, Torgesen, Laughon, Simmons, & Rashotte, 1993; Wagner, Torgesen, & Rashotte, 1997, 1999). Wolf and Bowers (1999), however, stress that although RAN and phonological awareness share many similar underlying processes, RAN is not exclusively a phonologically based factor. They hypothesize that performance on RAN tasks, such as rapid automatized naming of letters, is related to the rate at which a reader can activate orthographic patterns from print. This is supported by evidence that the unique contribution of naming speed to reading was greater (than that of phonological awareness) for orthographic awareness tasks (Manis, Doi, & Bhadha, 2000). Alternatively, others suggest that RAN tasks tap one’s general speed of processing. According to this hypothesis, poor performance on RAN tasks reflects slowing within the central nervous system (Nicholson & Fawcett, 1999; Tallal, Miller, Jenkins, & Merzenich, 1997; Wolff, 1993). It follows from this view that poor readers will have deficiencies on any task involving speed or serial processing of linguistic and nonlinguistic information (Catts, Gillespie, Leonard, Kail, & Miller, 2002). Others suggest that RAN tasks may also tap executive functions that involve response inhibition and dual task processing (Clarke, Hulme, & Snowling, 2005). According to this explanation, students with reading disabilities have a difficult time engaging executive processes while reading. It follows that readers’ poor performance on RAN tasks is not due to inaccurate retrieval of phonological codes, but rather to poor control over the cognitive processes required to carry out phonological retrieval. This explanation is supported by studies demonstrating that students with reading disabilities also present difficulties with response inhibition and dual task processing (Purvis & Tannock, 2000; Savage, Cornish, Manly, & Hollis, 2006).

In sum, numerous explanations have been offered concerning what is measured by RAN tasks. Unfortunately, there is still little consensus among investigators about the nature of these tasks. Therefore, our understanding of the unique contributors to fluency might be advanced further by looking to factors associated with RAN, such as general processing speed and executive function, rather than RAN itself. Such an approach might also have more direct implications for intervention.

Limitations

Although the findings of this study show that word reading accuracy, naming speed, and language comprehension are the primary skills underlying reading fluency, these results should be viewed as preliminary and requiring replication. There are other factors that should be included in future studies to more fully examine the validity of this model of reading fluency and to more precisely explain individual differences in reading fluency. For example, speed of processing may be facilitated by reading practice. This study did not survey students’ reading history in order to better understand the nature of processing speed differences. Also, we did not have assessments available to measure other nonlinguistic aspects of speed of processing, even though naming speed is widely accepted as a measure of general speed of processing (Catts et al., 2001; Kail & Hall, 1994). Finally, text difficulty was not manipulated to determine the extent to which reading fluency abilities and skills underlying reading fluency vary as a function of text difficulty. It is likely that decoding plays a more critical role when text difficulty increases, whereas language comprehension plays a more critical role when text difficulty decreases.

Implications

In short, the findings of the present study suggest that if a reader is to attain fluency, he or she must first have adequate word reading accuracy skills. The reader must develop strong grapheme–phoneme mapping skills such that infrequent presentations of a given word in text lead to strong representations of the word in memory. Second, the results of this study suggest that reading fluency is also influenced by one’s ability to process language for meaning. By teaching comprehension strategies that help students gain an understanding of words (i.e., vocabulary) and improve sentence and text comprehension, teachers can help students better comprehend connected text and read it fluently. Third, the results of this study suggest that naming speed as measured by RAN tasks plays a significant role in the establishment of reading fluency. Because there is little consensus regarding what RAN tasks measure, it is unreasonable to suggest that interventions should include rapid automatized naming activities. Instead, interventions should include ample opportunity to practice reading connected text. Practice likely results in higher quality phonological and orthographic representations in memory, which supports more accurate and potentially faster verbal processing (i.e., access of phonological and orthographic codes from memory) during reading.

References

Alliance for Excellent Education. (2003). The alliance for excellent education’s first annual American high school policy conference: Challenges confronting high schools: Literacy, adequacy, and equity. Washington, DC: Author.

Anthony, J. L., Williams, J. M., McDonald, R., Corbitt-Shindler, D., Carlson, C. D., & Francis, D. J. (2006). Phonological processing and emergent literacy in Spanish speaking preschool children. Annals of Dyslexia, 56, 239–270.

Anthony, J. L., Williams, J. M., McDonald, R., & Francis, D. J. (2007). Phonological processing and emergent literacy in younger and older preschool children. Annals of Dyslexia, 57, 113–137.

Baddeley, A. (2000). Short-term and long-term working memory. In E. Tulving & F. I. M. Craik (Eds.), The Oxford handbook of memory (pp. 77–92). New York, NY: Oxford University Press Inc.

Ben-Dror, I., Pollatsek, A., & Scarpati, S. (1991). Word identification in isolation and in context by college dyslexic students. Brain and Language, 40, 471–490.

Bentler, P. M. (1995). EQS structural equations program manual. Encino, CA: Multivariate Software.

Berninger, V., Abbott, R. D., & Vermeulen, K. (2002). Comparison of faster and slower responders to early intervention in reading: Differentiating feature of their language profiles. Learning Disabilities Quarterly, 25, 59–76.

Biancarosa, G., & Snow, C. E. (2004). Reading next—a vision for action and research in middle and high school literacy: A report to Carnegie Corporation of New York. Washington, DC: Alliance for Excellent Education.

Bowey, J. A. (1985). Contextual facilitation in children’s oral reading in relation to grade and decoding skill. Journal of Experimental Child Psychology, 40, 23–48.

Bowers, P. G. (1993). Text reading and rereading: Determinants of fluency beyond word recognition. Journal of Reading Behavior, 25, 133–153.

Breznitz, Z. (2006). Fluency in reading: Synchronization of processes. Mahwah, NJ: Lawrence Erlbaum Associates.

Catts, H. W., Adlof, S., & Hogan, T. P. (2005). Are specific language impairments and dyslexia distinct disorders? Journal of Speech, Language, and Hearing Research, 48, 1378–1396.

Catts, H. W., Adlof, S., & Weismer, S. E. (2006). Language deficits in poor comprehenders: A case for the simple view of reading. Journal of Speech, Language, and Hearing Research, 49, 278–293.

Catts, H., Fey, M. E., & Zhang, X. (1999). Language basis of reading and reading disabilities: Evidence from a longitudinal investigation. Scientific Studies of Reading, 3, 331–361.

Catts, H. W., Fey, M. E., & Zhang, X. (2001). Estimating the risk of future reading difficulties in kindergarten children: A research-based model and its clinical implementation. Language, Speech, and Hearing Services in Schools, 31, 38–50.

Catts, H. W., Gillespie, M., Leonard, L., Kail, R. V., & Miller, C. (2002). The role of speed of processing, rapid naming, and phonological awareness in reading achievement. Journal of Learning Disabilities, 35, 32–50.

Chard, D. J., Vaughn, S., & Tyler, B. J. (2002). A synthesis of research on effective interventions for building reading fluency with elementary students with learning disabilities. Journal of Learning Disabilities, 35, 386–406.

Clarke, P., Hulme, C., & Snowling, M. (2005). Individual differences in rapid automatized naming and reading: A response timing analysis. Journal of Research in Reading, 28, 73–86.

Compton, D. L., Olson, R. K., DeFries, J. C., & Pennington, B. F. (2002). Comparing the relationships among two different versions of alphanumeric rapid automatized naming and word level reading skills. Scientific Studies of Reading, 6, 343–368.

Cragg, L., & Nation, K. (2006). Exploring written narrative in children with poor reading comprehension. Educational Psychology, 26, 55–72.

Curtis, M. B. (2002). Adolescent reading: A synthesis of research. Boston: Lesley College, The Center for Special Education.

Dollaghan, C., & Campbell, T. (1998). Nonword repetition and child language impairment. Journal of Speech, Language, and Hearing Research, 41, 1136–1146.

Dunn, L. M., & Dunn, L. M. (1987). Peabody picture vocabulary test (3rd ed.). Circle Pines, MN: AGS Publishing.

Ehri, L. C. (1997). Sight word learning in normal readers and dyslexics. In B. Blachman (Ed.), Foundations of reading acquisition and dyslexia: Implications for early intervention (pp. 163–189). Mahwah, NJ: Lawrence Erlbaum Associates.

Ehri, L., & McCormick, S. (1998). Phases of word learning: Implications for instruction with delayed and disabled readers. Reading and Writing Quarterly: Overcoming Learning Difficulties, 14, 135–163.

Fletcher, J. M., Denton, C. D., & Francis, D. J. (2005). Validity of alternate approaches for the identification of learning disabilities: Operationalizing unexpected underachievement. Journal of Learning Disabilities, 38, 545–552.

Fletcher, J. M., Lyon, G. R., Fuchs, L., & Barnes, L. (2006). Learning disabilities: Integrating science, practice, and policy. New York: Guilford Press.

Fuchs, L. S., Fuchs, D., Hosp, M. K., & Jenkins, J. R. (2001). Oral reading fluency as an indicator of reading competence: A theoretical, empirical, and historical analysis. Scientific Studies of Reading, 5, 239–256.

Fuchs, L. S., Fuchs, D., & Maxwell, L. (1988). The validity of informal reading comprehension measures. Remedial and Special Education, 9, 20–28.

Gaulin, C., & Campbell, T. (1994). Procedure for assessing verbal working memory in normal school-age children: Some preliminary data. Perceptual and Motor Skills, 79, 55–64.

Geva, E., & Zadeh, Z. Y. (2006). Reading efficiency in native English-speaking and English-as-a-second language children: The role of oral proficiency and underlying cognitive-linguistic processes. Scientific Studies of Reading, 10, 31–57.

Grigg, W. S., Daane, M. C., Jin, Y., & Campbell, J. R. (2003). The nation’s report card: Reading 2002 (NCES 2003–521). Washington, DC: National Center for Education Statistics.

Hock, M. F., & Deshler, D. D. (2003). Adolescent literacy: Ensuring that no child is left behind. Principal Leadership, 13, 55–61.

Jenkins, J. R., Fuchs, L. S., van den Broek, P., Espin, C., & Deno, S. L. (2003). Accuracy and fluency in list and context reading of skills and reading disabled groups: Absolute and relative performance levels. Learning Disabilities Research and Practice, 18, 237–245.

Kail, R., & Hall, L. K. (1994). Processing speed, naming speed, and reading. Developmental Psychology, 30, 949–954.

Katzir, T. (2002). Multiple pathways to dysfluent reading: A developmental—componential investigation of the development and breakdown of fluent reading. Unpublished dissertation. Tufts University.

Katzir, T., Youngsuk, K., Wolf, M., O’Brien, B., Kennedy, B., Lovett, M., & Morris, R. (2006). Reading fluency: The whole is more than the parts. Annals of Dyslexia, 56, 51–82.

Kintsch, W., & van Dijk, T. (1978). Toward a model of text comprehension and production. Psychological Review, 85, 363–394.

Kirsch, I. S., Jungeblut, A., Jenkins, L., & Kolstad, A. (1993). Adult literacy in America. A first look at the findings of the National Adult Literacy Survey. (NCES 1993–275). U. S. Department of Education, Office of Educational Research and Improvement. Washington, D.C: U.S. Government Printing Office.

Kline, R. B. (2005). Principles and practice of structural equation modeling (2nd ed.). New York: The Guilford Press.

LaBerge, D., & Samuels, S. J. (1974). Toward a theory of automatic information processing in reading. Cognitive Psychology, 6, 293–323.

Leach, J. M., Scarborough, H. S., & Rescorla, L. (2003). Late-emerging reading disabilities. Journal of Educational Psychology, 95, 211–224.

Leslie, L., & Caldwell, J. (1995). Qualitative reading inventory-II. New York: Addison-Wesley.

Lexile (2005). Lexile framework for reading [computer software]. Durham, NC: MetaMetrics, Inc.

Manis, F. R., Doi, L. M., & Bhadha, B. (2000). Naming speed, phonological awareness, and orthographic knowledge in second graders. Journal of Learning Disabilities, 33, 325–333.

Mather, N., & Woodcock, R. W. (2001). Examiner’s manual. Woodcock–Johnson III tests of cognitive abilities. Itasca, IL: Riverside Publishing.

Misra, M., Katzir, T., & Wolf, M. (2004). Neural systems for rapid automatized naming in skilled readers: Unraveling the RAN-reading relationship. Scientific Studies of Reading, 8, 241–256.

Nation, K., Clarke, P., Marshall, C. M., & Durand, M. (2004). Hidden language impairments in children: Parallels between poor reading comprehension and specific language impairment. Journal of Speech, Language, and Hearing Research, 41, 199–211.

Nicholson, R. I., & Fawcett, A. J. (1999). Developmental dyslexia: The role of the cerebellum. Dyslexia, 5, 155–172.

Perfetti, C. A., Marron, M. A., & Foltz, P. W. (1996). Sources of comprehension failure: Theoretical perspectives and case studies. In C. Cornoldi & J. Oakhill (Eds.), Reading comprehension difficulties: Processes and intervention (pp. 137–165). Mahwah, NJ: Lawrence Erlbaum Associates.

Perie, M., Grigg, W. S., & Donahue, P. L. (2005). The nation’s report card: Reading 2005 (NCES 2006–451). U.S. Department of Education, Institute of Education Sciences, National Center for Education Statistics. Washington, DC: U.S. Government Printing Office.

Pring, L., & Snowling, M. (1986). Developmental changes in word recognition: An information-processing account. Quarterly Journal of Experimental Psychology: Human Experimental Psychology, 38, 395–418.

Purvis, K. L., & Tannock, R. (2000). Phonological processing, not inhibition, differentiates ADHD and reading disability. Journal of the American Academy of Child and Adolescent Psychiatry, 39, 485–494.

Russel, E. D. (2002). An examination of the nature of reading fluency. Unpublished dissertation. University of Rhode Island.

Savage, R., Cornish, K., Manly, T., & Hollis, C. (2006). Cognitive processes in children’s reading and attention: The role of working memory, divided attention, and response inhibition. British Journal of Psychology, 97, 365–385.

Schatschneider, C., Carlson, C. D., Francis, D. J., Foorman, B. R., & Fletcher, J. M. (2002). Relationship of rapid automatized naming and phonological awareness in early reading development: Implications for the double deficit hypothesis. Journal of Learning Disabilities, 35, 245–256.

Schatschneider, C., Fletcher, J. M., Francis, D. J., Carlson, C. D., & Foorman, B. R. (2004). Kindergarten prediction of reading skills: A longitudinal comparative analysis. Journal of Educational Psychology, 96, 265–282.

Semel, E., Wiig, E., & Secord, W. (1995). Clinical evaluation of language fundamentals-3. San Antonio, TX: Psychological Corporation.

Shaywitz, S. E., Fletcher, J. M., & Shaywitz, B. A. (1996). A conceptual model and definition of dyslexia: Findings emerging from the Connecticut Longitudinal Study. In J. H. Beitchman, N. Cohen, M. M. Konstantareas, & R. Tannock (Eds.), Language, learning, and behavior disorders: Developmental, biological, and clinical perspectives (pp. 199–223). New York, NY: Cambridge University Press.

Stanovich, K. E. (1980). Toward an interactive-compensatory model of individual differences in the development of reading fluency. Reading Research Quarterly, 16, 32–71.

Tallal, P., Miller, S. L., Jenkins, W. M., & Merzenich, M. W. (1997). The role of temporal processing in developmental language-based learning disorders: Research and clinical implications. In B. Blachman (Ed.), Foundations of reading acquisition (pp. 49–66). Hillsdale, NJ: Erlbaum.

Thernstrom, A. M., & Thernstrom, S. (2003). No excuses: Closing the racial gap in learning. New York: Simon and Schuster.

Tomblin, J. B., Records, N., Buckwalter, P., Zhang, X., Smith, E., & O’Brien, M. (1997). Prevalence of specific language impairment in kindergarten children. Journal of Speech and Hearing Research, 40, 1245–1260.

Tomblin, J. B., Zhang, X., Weis, A., Catts, H., & Weismer, S. (2004). Dimensions of individual differences in communication skills among primary grade children. In M. Rice & S. Warren (Eds.), Developmental language disorders: From phenotypes to etiologies (pp. 53–76). Mahwah, NJ: Lawrence Erlbaum Associates.

Torgesen, J., & Hudson, R. (2006). Reading fluency: Critical issues for struggling readers. In S. J. Samuels & A. Farstrup (Eds.), Reading fluency: The forgotten dimension of reading success. Newark, DE: International Reading Association.

Torgesen, J. K., Rashotte, C. A., & Alexander, A. W. (2001). Principles of fluency instruction in reading: Relationships with established empirical outcomes. In M. Wolf (Ed.), Dyslexia, fluency, and the brain (pp. 333–356). Parkton, MD: York Press.

Torgesen, J., Wagner, R., & Rashotte, C. (1998). Test of word reading efficiency. Austin, TX: Pro-Ed.

Torgesen, J., Wagner, R., & Rashotte, C. (2000). Comprehensive test of phonological processing. Austin, TX: Pro-Ed.

Torgesen, J. K., Wagner, R. K., Rashotte, C. A., Burgess, S., & Hecht, S. (1997). Contributions of phonological awareness and rapid automatic naming ability to the growth of word-reading skills in second- to fifth-grade children. Scientific Studies of Reading, 1, 161–185.

Tunmer, W., & Chapman, J. (1996). Language prediction skill, phonological recoding ability, and beginning reading. In C. Hulme & R. M. Joshi (Eds.), Reading and spelling: Development and disorder. Mahwah, NJ: Lawrence Erlbaum Associates.

Vaughn, S., Fletcher, J. M., Francis, D., Denton, C., Wanzek, J., Cirino, P., Barth, A. E., & Romain, M. (in press). Response to intervention with older students with reading difficulties. Learning and Individual Differences.

Wagner, R., & Torgesen, J. K. (1987). The nature of phonological processing and its causal role in the acquisition of reading skills. Psychological Bulletin, 101, 192–212.

Wagner, R. K., Torgesen, J. K., Laughon, P., Simmons, K., & Rashotte, C. A. (1993). Development of young readers’ phonological processing abilities. Journal of Educational Psychology, 85, 83–103.

Wagner, R. K., Torgesen, J. K., & Rashotte, C. A. (1999). Comprehensive test of phonological processing. Austin, TX: Pro-Ed.

Wagner, R. K., Torgesen, J. K., Rashotte, C. A., Hecht, S. A., Barker, T. A., Burgess, S. R., Donahue, J., & Garon, T. (1997). Changing relations between phonological processing abilities and word-level reading as children develop from beginning to skilled readers: A 5-year longitudinal study. Developmental Psychology, 33, 468–479.

Wallace, G., & Hammill, D. (1994). Comprehensive receptive and expressive vocabulary test. Austin, TX: Pro-Ed.

Wechsler, D. (1991). Wechsler intelligence scale for children-III. San Antonio, TX: The Psychological Corporation.

Wiederholt, J. L., & Bryant, B. R. (1992). Gray oral reading test-3. Austin, TX: Pro-Ed.

Wolf, M., & Bowers, P. G. (1999). The double-deficit hypothesis for the developmental dyslexias. Journal of Educational Psychology, 91, 415–438.

Wolf, M., & Katzir-Cohen, T. (2001). Reading fluency and its intervention. Scientific Studies of Reading, 5, 211–239.

Wolff, P. H. (1993). Impaired temporal resolution in developmental dyslexia. Annals of the New York Academy of Sciences, 682, 87–103.

Woodcock, R. (1987). Woodcock reading mastery tests—revised. Circle Pines, MN: American Guidance Service.

Woodcock, R. W., McGrew, K. S., & Mather, N. (2001). Woodcock–Johnson III test of cognitive abilities. Itasca, IL: Riverside Publishing.

Yuill, N., & Oakhill, J. V. (1991). Children’s problems in text comprehension. Cambridge: Cambridge University Press.

Cite this article

Barth, A.E., Catts, H.W. & Anthony, J.L. The component skills underlying reading fluency in adolescent readers: a latent variable analysis. Read Writ 22, 567–590 (2009). https://doi.org/10.1007/s11145-008-9125-y
