Abstract

Purpose

This study seeks to estimate clinically significant change in quality of life (QOL) appraisal by comparing changes in appraisal over 6 months of follow-up in people with multiple sclerosis who experienced no change in symptoms versus those with worsening symptoms. This estimation is important for enabling valid interpretation of longitudinal change, both in terms of ensuring the comparison of scores and for response shift estimation.

Methods

This is a secondary analysis of longitudinal data (N = 859) of participants in the North American Research Committee on Multiple Sclerosis registry. Patient groupings were characterized on the basis of self-reported symptom change over 6 months of follow-up and compared in terms of their responses to the QOL Appraisal Profile. Bonferroni adjustments reduced the type I error rate, and interpretation was restricted to those comparisons with small or larger effect sizes using Cohen’s criteria.

Results

The Symptoms Unchanged group evidenced more change in Frame of Reference goal delineation themes and Combinatory Algorithm, whereas the Symptoms Worse group evidenced more change in Sampling of Experience and Standards of Comparison items. The group differences were, however, not large or statistically significant in most cases, likely due to being under-powered to detect interaction effects.

Conclusions

Many aspects of appraisal are relatively constant in the context of unchanging and changing symptom experience, but symptom changes led patients to make substantial shifts in what experiences they sample when thinking about their health-related QOL. These underlying cognitive processes may help people to maintain homeostasis in their perceived QOL.

Introduction

Understanding how individuals appraise their health-related quality of life (QOL) is important to evaluate meaningful change over time. When individuals experience a health state change, they may change their internal standards (i.e., recalibration), values (i.e., reprioritization), or meaning (i.e., reconceptualization) of the target construct one is asking them to self-report [1, 2]. These response shifts are to be expected with evaluative constructs, which are assessed by idiosyncratic rather than objective criteria [3]. Evaluative ratings of QOL are products of an appraisal process, where individuals must consider what QOL means to them, what experiences they have had that are relevant to QOL, how experiences compare to other circumstances or outcomes, and the relative importance of different experiences [4]. Although philosophers and clinicians have long noted response shift phenomena [5–7], clinical investigators have in the past decade recognized that response shift effects not only may obfuscate important treatment-related changes, but may indeed be a desired effect in rehabilitation and psychosocial interventions [8–10]. Response shift has now been studied and recognized to affect adaptation to a wide range of health conditions, including multiple sclerosis (MS) [11–14], cancer [9, 15–27], stroke [28, 29], diabetes [30, 31], geriatrics [32–34], palliative care [35–38], dental disorders [39], HIV/AIDS [40, 41], and orthopedics [42].

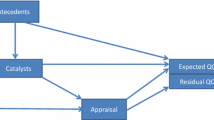

Past research has documented that there are substantial differences in how QOL is appraised within and between individuals. There are two primary reasons to study appraisal processes. First, to ensure comparability of QOL scores within or between individuals, one would need to know that the same appraisal parameters were emphasized. This is called a contingent true score [3]. Predicting QOL scores within or between individuals is contingent on being able to measure changes in appraisal due to maturation and aging, changes in health-related function, personal resources (psychosocial, financial, etc.), other life events, and random fluctuations. Second, one studies appraisal processes to estimate response shifts over time: one can examine how much changes in appraisal explain the discrepancy between expected and observed QOL [4]. This “discrepancy” can be thought of as the residuals in a regression model, reflecting unexplained variance in the outcome. This examination relies on the assumption that the change in appraisal is meaningful. It is, however, unknown what part is random fluctuation, what part is due to maturation and aging, and what part is due to health-related changes in function. It would be helpful to have an estimate of clinically significant change in appraisal due to health-related changes in function to enable valid interpretation of such change, both in terms of ensuring the comparison of valid contingent true scores and for response shift estimation. A way to get at such an estimate is to look at change in appraisal over time in patients who have stable health in comparison with those who do not have stable health.

Characterizing signal versus noise in appraisal changes

We focus on the four appraisal processes described in the Rapkin and Schwartz [4] response shift model: (1) Frame of Reference: What life domains are relevant to an individual’s QOL? (2) Sampling of Experience: What experiences does the individual consider pertinent within these domains? (3) Standards of Comparison: What points of reference does the individual use to evaluate these experiences? and (4) Combinatory Algorithm: What is the relative importance attributed to these different evaluations in forming an overall QOL rating? These appraisal processes determine perceived QOL. Appraisal measures have been used to understand instances when ratings of QOL differ appreciably from expected values—for example, when an individual’s QOL ratings remain stable or even improve despite worsening health status.

Background on multiple sclerosis

The present work involves people with MS, the most prevalent chronic progressive neurological disease among young adults worldwide. In the USA, there are approximately 400,000 cases of MS, with an incidence of nearly 200 new cases each week [43]. The illness course is generally characterized as either relapsing–remitting (85 %) or progressive (15 %) [43]. Recent therapeutic advances in treating this autoimmune disease have, however, transformed both treatment options and the natural history of MS [44, 45]. When used early in the course of disease [44], disease-modifying therapies are leading to increasing numbers of people with MS who are experiencing stable health without progression of disability. Recent meta-analyses suggest that the typical effects of therapeutic agents are generally small (e.g., ES = 0.20), using standard clinical outcome measures (e.g., Expanded Disability Status Scale (EDSS) [46] or the MS Functional Composite [47]). Such small effect sizes are thus not easily detected [48, 49]. Further, as patient-reported outcome measures become increasingly standard in MS clinical trials, the detection of treatment effects depends on the outcome measure’s reliability, validity, and responsiveness. Person factors, such as response shift phenomena, may mitigate the reliability, validity, and responsiveness of health-related QOL measures. Thus, understanding how appraisal processes fluctuate in stable and non-stable patients is relevant to efficacy and comparative effectiveness studies of current and new disease-modifying therapies.

Hypotheses

We expect fluctuations in appraisal over time to occur. We expect appraisal to be influenced by health state changes and other life events. We thus hypothesize that appraisal processes will exhibit fluctuation of lesser magnitude in stable patients than in non-stable patients.

Methods

Sample

This project involved secondary analysis of stable and non-stable subsamples selected from an existing longitudinal data set of 859 people who provided data in a supplemental survey administered to the North American Research Committee on Multiple Sclerosis (NARCOMS) registry population [50]. This self-report registry includes over 36,000 individuals aged 18 or over who report clinician-diagnosed MS. Semi-annual survey updates using either paper or secure web-based survey forms capture data on demographics, disease characteristics, disability, treatments, and access to healthcare providers. Potential candidates for the study were selected from those NARCOMS registry participants who completed the latest two semi-annual update surveys online and resided in the USA. All participants took part voluntarily in a web-based add-on survey after they completed the Spring 2010 and Fall 2010 semi-annual updates. This study was approved by the New England Institutional Review Board, and NARCOMS is also reviewed by the IRB at the University of Alabama at Birmingham. All participants provided written informed consent.

Measures

Variables identifying stable patients

An overall symptom transition item querying perceived change over the past 6 months was included, ranging from 1 (much worse) to 7 (much better), with a score of four indicating no change. This item was included in the last data collection time point, i.e., 6 years after study entry.

Primary outcome variable

Cognitive appraisal processes were assessed using the QOL Appraisal Profile [4]. This measure includes open-ended (i.e., qualitative) and multiple-choice questions to assess the four appraisal parameters described above. Frame of Reference was assessed using eight open-ended text items that asked participants to describe: (a) what QOL means to them at this time (QOL Definition); and (b) concerns that were most important to their current life satisfaction (Goal Delineation), specifically: (1) What are the main things you want to accomplish? (2) What are the main problems that you want to solve? (3) What situations do you want to prevent or avoid? (4) What things do you want to keep the same as they are now? (5) What things do you want to accept as they are? (6) What demands and responsibilities do you want to let go of or reduce? (7) Of the things that you just mentioned, which ones did you think about the most when filling out this questionnaire? Close-ended Likert-scaled items were used to assess Sampling of Experience (14 items, each ranging from 0 to 4), Standards of Comparison (8 items, each ranging from 0 to 4), and Combinatory Algorithm (16 items each ranging from 1 to 3). The QOL Appraisal Profile was administered at the last two time points, i.e., five-and-a-half and 6 years following study entry.

Statistical analysis

Creating stable subsample

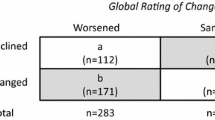

We identified patients who endorsed “Symptoms Unchanged” (i.e., a score of 4 on the 1–7 scale) or “Symptoms Worse” (i.e., a score of ≤3) over the past 6 months on the follow-up Symptom Transition item. The Symptoms Unchanged group was used as the referent group (coded as “0”), and the Symptoms Worse group was coded as “1.” A two-sample test of proportions was used to compare the demographic characteristics of the Symptoms Unchanged and Symptoms Worse subgroups.
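The grouping rule and the subgroup comparison can be illustrated with a minimal sketch. It assumes the transition item is scored from 1 (much worse) to 7 (much better), as described under Measures, and it assumes that respondents reporting improvement (scores of 5 or more) fall outside both groups, which the text does not state explicitly. The function names are hypothetical, and the pooled z-test shown here is one common form of a two-sample test of proportions, not necessarily the exact routine used in the analysis.

```python
import math


def code_symptom_group(transition_score):
    """Map a 1-7 symptom transition score to an analysis group code.

    Returns 0 for Symptoms Unchanged (score of 4), 1 for Symptoms Worse
    (score of 3 or less), and None for any other score (assumption:
    improved respondents are not part of either group).
    """
    if transition_score == 4:
        return 0
    if 1 <= transition_score <= 3:
        return 1
    return None


def two_proportion_z_test(count_a, n_a, count_b, n_b):
    """Two-sided z-test for a difference between two independent proportions."""
    p_a = count_a / n_a
    p_b = count_b / n_b
    # Pooled proportion under the null hypothesis of equal proportions.
    pooled = (count_a + count_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / standard_error
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

For example, `two_proportion_z_test(60, 100, 40, 100)` compares two hypothetical subgroups in which 60 % and 40 % of 100 respondents share a characteristic; these counts are illustrative and are not taken from Table 1.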

Coding the QOL appraisal profile

The open-ended data were coded by at least two trained coders, and inter-rater agreement was moderately high (mean kappa 0.65). For this analysis, we conservatively used only those codes on which both raters agreed in order to reduce random variation in the data. This approach reduced the number of different QOL themes attributed to different individuals. The QOL Definition text data were coded according to a standardized protocol [51] with themes originally derived from an extensive sorting procedure [52]. QOL Definition themes were coded as “0” if they were not reflected in the individual’s written text response and “1” if they were reflected in the individual’s written text response. Goal themes were rated on a series of seven items describing goal content, such as goals related to interpersonal involvement, roles, mood states, and health. For each of an individual’s goal statements, items were coded as “0” if they were not relevant to the individual’s written text response and “1” if they were reflected in the individual’s written text response. The seven goal items were summed, resulting in scores that ranged from 0 to 7 for each of the goal theme codes.
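The coding steps described above can be sketched as follows. This is an illustration under stated assumptions rather than the study's actual procedure: it treats each theme as a 0/1 code from exactly two raters, it reads "codes on which both raters agreed" as keeping a theme only when both raters marked it present, and all function names are hypothetical.

```python
def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning binary (0/1) codes.

    Undefined (division by zero) when chance agreement equals 1, for
    example when both raters assign the same code to every response.
    """
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    share_a = sum(rater_a) / n  # proportion coded 1 by rater A
    share_b = sum(rater_b) / n  # proportion coded 1 by rater B
    # Agreement expected by chance from the raters' marginal proportions.
    expected = share_a * share_b + (1 - share_a) * (1 - share_b)
    return (observed - expected) / (1 - expected)


def consensus_codes(rater_a, rater_b):
    """Keep a theme as present (1) only when both raters coded it present."""
    return [1 if a == 1 and b == 1 else 0 for a, b in zip(rater_a, rater_b)]


def goal_theme_score(item_codes):
    """Sum one theme's 0/1 codes across the seven goal items (range 0-7)."""
    return sum(item_codes)
```

With `rater_a = [1, 1, 0, 0]` and `rater_b = [1, 0, 0, 0]`, the consensus rule keeps only the first response as reflecting the theme, which shows how the conservative approach reduces the number of themes attributed to individuals.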

Fluctuations in appraisal over time

Multi-level mixed-effects regression models [54] were used to investigate group, time, and group-by-time effects in response to the QOL Appraisal Profile over the 6 months of follow-up [53]. For continuous outcomes (e.g., those QOL Appraisal scores based on Likert-scaled items), multi-level mixed-effects linear regression models were computed [53]. For dichotomous outcomes (e.g., the coded open-ended QOL Definition data), multi-level mixed-effects logistic regression models were computed [54].
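One way to write such models, offered as a sketch and not as the exact specification fitted here, is given below. It assumes a random intercept for each participant only; the random-effects structure is not reported in the text. The symbols are introduced for illustration: $Y_{ij}$ is the appraisal response of participant $i$ at time point $j$, $G_i$ is the group code (0 = Symptoms Unchanged, 1 = Symptoms Worse), and $T_{ij}$ indicates the follow-up time point.

```latex
% Linear model for continuous (Likert-scaled) appraisal items
Y_{ij} = \beta_0 + \beta_1 G_i + \beta_2 T_{ij} + \beta_3 (G_i \times T_{ij}) + u_i + \varepsilon_{ij},
\qquad u_i \sim N(0, \sigma_u^2), \quad \varepsilon_{ij} \sim N(0, \sigma^2)

% Logistic model for dichotomous (coded open-ended) themes
\operatorname{logit} \Pr(Y_{ij} = 1 \mid u_i) = \beta_0 + \beta_1 G_i + \beta_2 T_{ij} + \beta_3 (G_i \times T_{ij}) + u_i
```

In this notation, $\beta_2$ captures change over time in the referent (Symptoms Unchanged) group, and $\beta_3$ is the group-by-time interaction, that is, the extent to which change over time differs between the two groups.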

Multiple comparisons

Past research on the QOL Appraisal Profile has revealed that the item responses do not reduce to composite scores that are consistent within or between patient groups over time [51, 55]. Consequently, we do not believe that it is proper to utilize data reduction strategies such as principal components analysis. Rather, we work with the item-level data within appraisal parameters and use the findings for descriptive purposes [55]. This approach leads to an inflated type I error rate due to the large number of comparisons. When discussing prevalence of fluctuations, we will present both raw (unadjusted) and Bonferroni-adjusted results [56] to account for multiple comparisons. When inferring difference in the content of appraisal processes, we will only interpret those comparisons with effect sizes characterized by Cohen [57] to be at least of small magnitude (i.e., ≥0.20). This approach will thus provide an indication of the “noise” in estimating the prevalence of appraisal fluctuations over time while only interpreting the robust group differences. We then evaluated whether adjusting for important covariates that distinguished the groups modified the findings; in this case, we only examined those comparisons that were statistically significant after Bonferroni adjustment.
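The two screening rules can be sketched in a few lines. The sketch assumes a standardized mean difference with a pooled standard deviation as the effect size; the text does not say how effect sizes were computed, and the family size used for the Bonferroni adjustment is likewise an assumption. Combining both rules in one check is an illustrative choice, and the function names are hypothetical.

```python
import math


def bonferroni_threshold(alpha, n_comparisons):
    """Per-comparison significance threshold under a Bonferroni adjustment."""
    return alpha / n_comparisons


def cohens_d(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Standardized mean difference using the pooled standard deviation."""
    pooled_variance = (
        (n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2
    ) / (n_a + n_b - 2)
    return (mean_a - mean_b) / math.sqrt(pooled_variance)


def is_interpretable(p_value, effect_size, alpha, n_comparisons, min_effect=0.20):
    """True when a comparison passes both the Bonferroni-adjusted threshold
    and Cohen's criterion for at least a small effect (0.20 by default)."""
    passes_p = p_value < bonferroni_threshold(alpha, n_comparisons)
    passes_effect = abs(effect_size) >= min_effect
    return passes_p and passes_effect
```

For a hypothetical family of 14 item-level comparisons at alpha = 0.05, the adjusted threshold is about 0.0036, so a comparison with p = 0.01 would count as a raw (unadjusted) finding but not as a Bonferroni-adjusted one.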

Stata Release 12 [58] was used for all analyses.

Results

Sample

Table 1 shows the sample demographic characteristics for the study sample, as well as for the Symptoms Unchanged (N = 343) and Symptoms Worse (N = 374) subgroups. Participants in the study sample had a mean age of 55 years, and 74 % of the participants were female, which is consistent with the gender distribution in MS [59]. Less than half of the sample was employed, with a median annual household income between $50,000 and $100,000 in the whole sample. Ninety-seven percent of participants reported living in a private home. In terms of health behaviors, 59 % endorsed drinking alcohol monthly or less often, and 88 % were non-smokers. The median body mass index characterized the sample as overweight (body mass index of 25–29.9), with 30 % in the overweight category (over 25) and 29 % in the obese category (over 30).

A comparison of subgroup differences (Table 1) suggests that the Symptoms Unchanged subgroup had a higher proportion of females (p < 0.0001), was younger (p < 0.02), was more likely to be employed full-time, and was less likely to be unemployed (p < 0.003 and 0.001, respectively). These variables were entered as covariates in final models that were statistically significant even after Bonferroni correction. Models that adjusted for significant demographic covariates identified in Table 1 did not modify the findings described below (data not shown).

Frame of reference

Prevalence

Both groups evidenced statistically significant appraisal change in their QOL Definition with regard to two themes (Table 2). With regard to goal delineation themes, the Symptoms Unchanged group evidenced changes in six themes, whereas the Symptoms Worse group evidenced changes in two themes (Table 3). These within-group changes reflected small to moderate effect sizes (ES range 0.21–0.51). There were no significant group-by-time interactions for Frame of Reference, in terms of either QOL Definition (Table 2) or Goal Delineation (Table 3).

Content

Both groups evidenced changes in QOL Definition such that Contentment and Family/Friends were mentioned more often in the follow-up than at baseline (Table 2). These changes reflected small effect sizes (ES range 0.25–0.32). Goal delineation themes that changed in the Symptoms Unchanged patients reflected content related to community involvement, financial concerns, independent functioning, interpersonal relationships, living situations/housing, and MS-related goals. In contrast, the Symptoms Worse group evidenced change in goal delineation themes related to only financial concerns and interpersonal relationships (Table 3). These changes reflected small to moderate effect sizes (ES range 0.21–0.51).

Sampling of experience

Prevalence

Patients in the Symptoms Unchanged group evidenced changes in one Sampling of Experience item, whereas patients in the Symptoms Worse group evidenced changes in four Sampling of Experience items. There were four significant group-by-time interactions for Sampling of Experience (Table 4), although none of these effects met Cohen’s criteria [57] for even small effect sizes.

Content

Patients in the Symptoms Unchanged group were more likely to focus on their MS over time, whereas patients in the Symptoms Worse group were less likely to think about the worst moments, recall recent episodes, focus on their MS, or take into account what their doctor told them (Table 4). Significant group-by-time interactions revealed differences in change by group regarding thinking about the worst moments, recalling recent episodes, focusing on MS, and trying to communicate the seriousness of the condition (Table 4).

Standards of comparison

Prevalence

There were no changes over time in Standards of Comparison in the Symptoms Unchanged group, whereas the Symptoms Worse group evidenced a change in one item, reflecting an effect size smaller than Cohen’s criterion (Table 5). There were no significant group-by-time interactions for Standards of Comparison (Table 5).

Content

The Symptoms Unchanged patients showed no change in Standards of Comparison. The Symptoms Worse group were less likely to emphasize a time in their past before their MS (Table 5).

Combinatory algorithm

Prevalence

The Symptoms Unchanged group evidenced change on one item related to how they combine information to answer QOL questions, reflecting an effect size smaller than Cohen’s criterion. The Symptoms Worse group did not evidence change on any item assessing this appraisal process. There were no significant group-by-time interactions for Combinatory Algorithm (Table 6).

Content

The Symptoms Unchanged patients showed an increase in focusing on things they are proud of, whereas the Symptoms Worse patients did not evidence fluctuation in any combinatory algorithm item (Table 6).

Discussion

Understanding the base rate for appraisal changes over time is an important first step to operationalizing response shifts on the basis of appraisal changes in studies of health-related QOL. This study is the first to our knowledge to examine fluctuations in appraisal over time in chronically ill patients characterized by symptom change over the past 6 months. The sample included large enough numbers of patients in each group and balanced group sizes to permit robust statistical inference about within-group patterns, but the study is under-powered to detect between-group differences in patterns of change. We found that while there are differences between the groups in changes in appraisal over time, the differences are neither large nor statistically significant in most cases. This null finding is likely due to being under-powered to detect interaction effects. For example, in the Frame of Reference analyses of goal delineation changes, the Symptoms Unchanged group evidenced change in three times the number of themes as compared to the Symptoms Worse group, but there were no significant group-by-time interaction effects. A power analysis of one comparison, where there was a significant change over time in Community Involvement in one group but not the other, revealed only 35 % power.

Although there were within-group changes detected within each appraisal process, the prevalence of changes varied by appraisal process: sometimes we found more change in the Symptoms Unchanged group (e.g., Frame of Reference goal delineation themes, Combinatory Algorithm), and other times, we found more changes in the Symptoms Worse group (e.g., Sampling of Experience items, Standards of Comparison). Thus, there appear to be many aspects of appraisal that are relatively constant in the context of unchanging and changing symptom experience, but there are other aspects of appraisal that appear to differentiate the groups. In our study, symptom changes led patients to make substantial shifts in what experiences they sample when thinking about their health-related QOL.

Considering our findings in light of the idea of a contingent true score [3], it would seem that the two comparison groups had a similar contingent true score in terms of Frame of Reference QOL Definition, Standards of Comparison, and Combinatory Algorithm, that is, three of the five aspects of appraisal measured. This finding would suggest meaningfully comparable scores over time in terms of these underlying appraisal processes.

The changes in Sampling of Experience appraisals are, however, different by group. Thus, the health state changes are associated with differential change in this particular appraisal process. This idea is consistent with the Rapkin and Schwartz theoretical model of response shift [4], which operationalizes response shift as an epiphenomenon that is inferred when changes in appraisal explain the discrepancy between expected and observed outcome scores. Future research should evaluate whether the Rapkin and Schwartz [4] operationalization of response shift is viable given this item-based use of the QOL Appraisal Profile. Although findings from this study serve to validate the profile as a measure of the constructs in the Rapkin and Schwartz model, there is still a need to determine how appraisal assessment can be improved so it behaves more like a psychometric tool.

These findings have implications for the responsiveness of patient-reported outcome tools. We know from research on clinically significant change that responsiveness is context-specific. In other words, the magnitude of responsiveness estimates depends on the sample, the intervention, the measures, the period of follow-up, etc. [60]. Based on our findings, both Symptoms Unchanged and Symptoms Worse patients are undergoing notable and distinct changes in different aspects of appraisal processes. It is likely that an outcome tool’s responsiveness is affected by these appraisal changes, and also likely that the minimally important difference of a tool will vary as a function of underlying appraisal processes [61].

It is possible that the appraisal parameters most amenable to change among Symptoms Unchanged patients are relevant to maintaining homeostasis in perceived QOL and a QOL “set point” [62]. Our finding that healthier (Symptoms Unchanged) patients showed greater variability in goal delineation (Frame of Reference) suggests that when symptoms are more stable, people have a broader and more diverse array of goals that they consider when thinking about quality of life. In contrast, sicker (Symptoms Worse) people have more limited foci for their goals but more varied experience sampling. Consequently, they are forced to restrict their goals but think about more varied aspects of their experience in order to adapt to changing circumstances.

Although this study breaks new ground in our understanding of base rate and health-related appraisal changes, the limitations of this study should be acknowledged. First, the data are entirely self-reported, so triangulating patient- and clinician-reported or biological indicators of disease progression is not possible. Second, the analyses are descriptive and many comparisons were computed. Although we reduced the type I error rate by using a Bonferroni adjustment for multiple comparisons, there were still a number of “comparison families” [63] investigated, so a risk remains of finding differences due to chance. The study was also under-powered to detect group-by-time interactions so our findings are likely conservative estimates. The robustness of our findings would thus need to be tested in other independent samples. Finally, the study focused on a sample of chronically ill people and included people with unchanged and worsened symptom experience over 6 months. It is possible that the nature of the sample affected the results. To assess the generalizability of our findings, future research would need to replicate the study on stably healthy people as compared to a group who becomes sick.

In summary, there are notable changes in appraisal among MS patients whose health state is changing, but there are also appraisal fluctuations in stable patients. These fluctuations may reflect how individuals maintain a QOL “set point,” and may provide insight into how variability is necessary for maintaining healthy, dynamic systems [64]. Future research might replicate our study in other patient samples and investigate whether specific patterns of change in appraisal are associated with better or more adaptive QOL outcomes. Meaningful changes in appraisal among patients with worsening symptom experience may reflect response shifts [52], as well as other relevant constructs such as coping flexibility [64], neurocognitive challenges, existential angst, and measurement error [65]. Future research should consider these alternative hypotheses when designing the study so that contrasting hypotheses/theories can be tested.

References

Sprangers, M. A., & Schwartz, C. E. (1999). Integrating response shift into health-related quality of life research: A theoretical model. Social Science and Medicine, 48(11), 1507–1515.

Schwartz, C. E., & Sprangers, M. A. (1999). Methodological approaches for assessing response shift in longitudinal health-related quality-of-life research. Social Science and Medicine, 48(11), 1531–1548.

Schwartz, C. E., & Rapkin, B. D. (2004). Reconsidering the psychometrics of quality of life assessment in light of response shift and appraisal. Health and Quality of Life Outcomes, 2, 16.

Rapkin, B. D., & Schwartz, C. E. (2004). Toward a theoretical model of quality-of-life appraisal: Implications of findings from studies of response shift. Health and Quality of Life Outcomes, 2(1), 14.

Aristotle, Nicomachean Ethics 384-322 BC.

Socrates, The Republic, Book IX. 469-399 BC.

Albrecht, G. L., & Devlieger, P. J. (1999). The disability paradox: High quality of life against all odds. Social Science and Medicine, 48(8), 977–988.

Schwartz, C. E., & Sprangers, M. A. G. (1999). Introduction to symposium on the challenge of response shift in social science and medicine. Social Science and Medicine, 48(11), 1505–1506.

Schwartz, C. E., et al. (1999). An evaluation of a psychosocial intervention for survivors of childhood cancer: Paradoxical effects of response shift over time. Psychooncology, 8(4), 344–354.

Schwartz, C. E., et al. (2007). Response shift theory: Important implications for measuring quality of life in individuals with disability. Archives of Physical Medicine and Rehabilitation, 88, 529–536.

Becker, H., et al. (2000). Goal attainment scaling to measure individual change in intervention studies. Nursing Research, 49(3), 176–180.

Evers, K. J., & Karnilowicz, W. (1996). Patient attitude as a function of disease state in multiple sclerosis. Social Science and Medicine, 43(8), 1245–1251.

Paterson, B. L. (2003). The koala has claws: Applications of the shifting perspectives model in research of chronic illness. Qualitative Health Research, 13(7), 987–994.

Schwartz, C. E., & Sendor, M. (1999). Helping others helps oneself: Response shift effects in peer support. Social Science and Medicine, 48(11), 1563–1575.

Bernhard, J., et al. (1999). Quality of life as subjective experience: Reframing of perception in patients with colon cancer undergoing radical resection with or without adjuvant chemotherapy. Swiss Group for Clinical Cancer Research (SAKK). Annals of Oncology, 10(7), 775–782.

Bernhard, J., et al. (2001). Response shift in the perception of health for utility evaluation: An explorative investigation. European Journal of Cancer, 37(14), 1729–1735.

Boyd, N. F., et al. (1990). Whose utilities for decision analysis? Medical Decision Making, 10(1), 58–67.

Breetvelt, I. S., & Van Dam, F. S. (1991). Underreporting by cancer patients: The case of response-shift. Social Science and Medicine, 32(9), 981–987.

Cella, D., Hahn, E. A., & Dineen, K. (2002). Meaningful change in cancer-specific quality of life scores: Differences between improvement and worsening. Quality of Life Research, 11(3), 207–221.

Chapman, G. B., et al. (1998). Prostate cancer patients’ utilities for health states: How it looks depends on where you stand. Medical Decision Making, 18(3), 278–286.

Hagedoorn, M., Sneeuw, K. C., & Aaronson, N. K. (2002). Changes in physical functioning and quality of life in patients with cancer: Response shift and relative evaluation of one’s condition. Journal of Clinical Epidemiology, 55(2), 176–183.

Jansen, S. J. T., et al. (2001). Response shift in quality of life measurement in early-stage breast cancer patients undergoing radiotherapy. Quality of Life Research, 9, 603–615.

Kagawa-Singer, M. (1993). Redefining health: Living with cancer. Social Science and Medicine, 37(3), 295–304.

Oort, F. J., Visser, M. R. M., & Sprangers, M. A. G. (2005). An application of structural equation modeling to detect response shifts and true change in quality of life data from cancer patients undergoing invasive surgery. Quality of Life Research, 14, 599–609.

Rees, J., et al. (2005). The measurement of response shift in patients with advanced prostate cancer and their partners. Health and Quality of Life Outcomes, 3(21), 1–8.

Sprangers, M. A., et al. (1999). Revealing response shift in longitudinal research on fatigue—the use of the thentest approach. Acta Oncologica, 38(6), 709–718.

Tederous-Williams, M. (2003). Response shift in women who have been pregnant with cancer. Quality of Life Research, 12(7), 783.

Ahmed, S., et al. (2005). Using the patient generated index to evaluate response shift post-stroke. Quality of Life Research, 14, 2247–2257.

Ahmed, S., et al. (2005). Change in quality of life in people with stroke over time: True change or response shift? Quality of Life Research, 14, 611–627.

Postulart, D., & Adang, E. M. (2000). Response shift and adaptation in chronically ill patients. Medical Decision Making, 20(2), 186–193.

Wikby, A., et al. (1993). Coping behavior and degree of discrepancy between retrospective and prospective self-ratings of change in quality of life in Type 1 diabetes mellitus. Diabetic Medicine, 10, 851–854.

Daltroy, L. H., et al. (1999). Discrepancies between self-reported and observed physical function in the elderly: The influence of response shift and other factors. Social Science and Medicine, 48(11), 1549–1561.

Heidrich, S. M., & Ryff, C. D. (1993). The role of social comparisons processes in the psychological adaptation of elderly adults. Journal of Gerontology, 48(3), 127–136.

Rijken, M., et al. (1995). Subjective well-being of elderly women: Conceptual differences between cancer patients, women suffering from chronic ailments and healthy women. British Journal of Clinical Psychology, 34, 289–300.

Rees, J., et al. (2004). Measuring quality of life in patients with advanced cancer. European Journal of Palliative Care, 11(3), 104–106.

Schwartz, C. E., et al. (2004). Measuring patient treatment preferences in end-of-life care research: Applications for advance care planning interventions and response shift research. Journal of Palliative Medicine, 7(2), 233–245.

Schwartz, C. E., et al. (2002). Early intervention in planning end-of-life care with ambulatory geriatric patients: Results of a pilot trial. Archives of Internal Medicine, 162(14), 1611–1618.

Schwartz, C. E., et al. (2005). Evaluation of the Missoula-VITAS quality of life index—revised: Research tool or clinical tool? Journal of Palliative Medicine, 8(1), 121–135.

Ring, L., Höfer, S., Heuston, F., Harris, D., & O’Boyle, C. A. (2005). Response shift masks the treatment impact on patient reported outcomes (PROs): The example of individual quality of life in edentulous patients. Health and Quality of Life Outcomes, 3, 55.

Li, Y., & Rapkin, B. (2009). Classification and regression tree analysis to identify complex cognitive paths underlying quality of life response shifts: A study of individuals living with HIV/AIDS. Journal of Clinical Epidemiology, 62, 1138–1147.

Rapkin, B. D., Schwartz, C. E., & Sprangers, M. A. G. (2000). Personal goals and response shifts: Understanding the impact of illness and events on the quality of life of people living with AIDS. In C. E. Schwartz & M. A. G. Sprangers (Eds.), Adaptation to changing health: Response shift in quality-of-life research (pp. 53–71). Washington, D.C.: American Psychological Association.

Razmjou, H., et al. (2006). Response shift in outcome assessment in patients undergoing total knee arthroplasty. Journal of Bone & Joint Surgery, 88(12), 2590–2595.

NMSS. (2005). Multiple sclerosis information sourcebook. New York, NY: Information Resource Center and Library of the National Multiple Sclerosis Society.

Rammohan, K. W., & Shoemaker, J. (2010). Emerging multiple sclerosis oral therapies. Neurology, 74(Supplement 1), S47–S53.

Tremlett, H., et al. (2010). New perspectives in the natural history of multiple sclerosis. Neurology, 74, 2004–2015.

Kurtzke, J. F. (1983). Rating neurologic impairment in multiple sclerosis: An expanded disability status scale (EDSS). Neurology, 33(11), 1444–1452.

Cutter, G. R., et al. (1999). Development of a multiple sclerosis functional composite as a clinical trial outcome measure. Brain, 122, 101–112.

Filippini, G., et al. (2003). Interferons in relapsing remitting multiple sclerosis: A systematic review. Lancet, 361, 545–552.

Rice, G. P. A., et al. (2001). Interferon in relapsing-remitting multiple sclerosis. Cochrane Database of Systematic Reviews, 4, Article No CD002002. doi:10.1002/14651858.CD002002.

NARCOMS. (2013). North American Research Committee on Multiple Sclerosis (NARCOMS) Registry. Available from: http://narcoms.org/.

Rapkin, B. D., et al. (2011). User manual for the quality of life appraisal profile. Concord, MA: DeltaQuest Foundation, Inc.

Bobinski, J., & Lipinski, M. (2009). EORTC risk tables—a new diagnostic tool in urology. Pol Merkur Lekarski, 27(162), 524–528.

Laird, N. M., & Ware, J. H. (1982). Random-effects models for longitudinal data. Biometrics, 38, 963–974.

Hedeker, D. (2003). A mixed-effects multinomial logistic regression model. Statistics in Medicine, 22(9), 1433–1446.

Schwartz, C. E., et al. (2013). Cognitive reserve and appraisal in multiple sclerosis. Multiple Sclerosis and Related Disorders, 2, 36–44.

Miller, R. G. (1981). Simultaneous statistical inference (2nd ed.). Berlin: Springer.

Cohen, J. (1992). A power primer. Psychological Bulletin, 112, 155–159.

StataCorp. (2011). Stata statistical software: Release 12. College Station, TX: StataCorp LP.

Matthews, W. B. (Ed.). (1991). McAlpine’s multiple sclerosis (2nd ed.). New York: Churchill Livingstone.

Beaton, D. E., et al. (2001). A taxonomy for responsiveness. Journal of Clinical Epidemiology, 54(12), 1204–1217.

Finkelstein, J. A., Quaranto, B. R., & Schwartz, C. E. (2013). Threats to the internal validity of spinal surgery outcome assessment: Recalibration response shift or implicit theories of change? Applied Research in Quality of Life. doi:10.1007/s11482-013-9221-2.

Schwartz, C. E., & Sprangers, M. A. (2009). Reflections on genes and sustainable change: Toward a trait and state conceptualization of response shift. Journal of Clinical Epidemiology, 62(11), 1118–1123.

Miller, R. G. J. (1991). Simultaneous statistical inference. New York: Springer.

Schwartz, C. E., et al. (1998). Self-reported coping behavior in health and disease: Assessment with a card sort game. Behavioral Medicine, 24(1), 41–44.

Schwartz, C. E., & Daltroy, L. H. (1999). Learning from unreliability: The importance of inconsistency in coping dynamics. Social Science and Medicine, 48(5), 619–631.

Acknowledgments

The authors had full access to the original data. Part of the data collected for this work was provided in the context of a Consortium of MS Centers/Global MS Registry Visiting Scientist Fellowship to Dr. Schwartz, which was supported through a Foundation of the Consortium of Multiple Sclerosis Centers grant from EMD Serono, Inc. CMSC/Global MS Registry is supported by the Consortium of Multiple Sclerosis Centers and its Foundation. We thank Gary Cutter, Ph.D., Stacey Cofield, Ph.D., and Rita Bode, Ph.D., for data management services early in the project and Ruth Ann Marrie, MD, Ph.D., and Robert Fox, MD for helpful comments on an earlier draft of this manuscript.

Cite this article

Schwartz, C.E., Quaranto, B.R., Rapkin, B.D. et al. Fluctuations in appraisal over time in the context of stable versus non-stable health. Qual Life Res 23, 9–19 (2014). https://doi.org/10.1007/s11136-013-0471-0

DOI: https://doi.org/10.1007/s11136-013-0471-0