Abstract

Purpose

Patient-reported outcomes (PROs) are used increasingly for individual patient management. Identifying which PRO scores require a clinician’s attention is an ongoing challenge. Previous research used a needs assessment to identify EORTC-QLQ-C30 cutoff scores representing unmet needs. This analysis attempted to replicate the previous findings in a new and larger sample.

Methods

This analysis used data from 408 Japanese ambulatory breast cancer patients who completed the QLQ-C30 and Supportive Care Needs Survey-Short Form-34 (SCNS-SF34). Applying the methods used previously, SCNS-SF34 item/domain scores were dichotomized as no versus some unmet need. We calculated area under the receiver operating characteristic curve (AUC) to evaluate QLQ-C30 scores’ ability to discriminate between patients with no versus some unmet need based on SCNS-SF34 items/domains. For QLQ-C30 domains with AUC ≥ 0.70, we calculated the sensitivity, specificity, and predictive value of various cutoffs for identifying unmet needs. We hypothesized that compared to our original analysis, (1) the same six QLQ-C30 domains would have AUC ≥ 0.70, (2) the same SCNS-SF34 items would be best discriminated by QLQ-C30 scores, and (3) the sensitivity and specificity of our original cutoff scores would be supported.

Results

The findings from our original analysis were supported. The same six domains with AUC ≥ 0.70 in the original analysis had AUC ≥ 0.70 in this new sample, and the same SCNS-SF34 item was best discriminated by QLQ-C30 scores. Cutoff scores were identified with sensitivity ≥0.84 and specificity ≥0.54.

Conclusion

Given these findings’ concordance with our previous analysis, these QLQ-C30 cutoffs could be implemented in clinical practice and their usefulness evaluated.


Introduction

The use of patient-reported outcome (PRO) measures in clinical practice for individual patient management involves having a patient complete a questionnaire about his/her functioning and well-being and providing that patient’s scores to his/her clinician to inform care and management [1, 2]. The procedure is analogous to laboratory tests that inform the clinician about the patient’s health—the difference being that PROs are based on scores from patient-reported questionnaires rather than values from chemical or microscopic analyses. The use of PROs for individual patient management has been consistently shown to improve clinician–patient communication [3–6]. It has also been shown to improve detection of problems [6–9], affect management [5], and improve patient outcomes, such as symptom control, health-related quality-of-life, and functioning [3, 10, 11].

Although we have demonstrated that PROs can effectively identify the issues that are bothering patients the most [12], an ongoing challenge to the use of PROs in clinical practice is determining which scores require a clinician’s attention. That is, after patients complete the PRO questionnaire, their responses are scored and a score report is generated. However, for clinicians reviewing the scores, it is not intuitive which scores represent a problem that should motivate action. Various methods have been applied to assist with score interpretation, including providing the mean score for the general population for comparison [3] or highlighting scores using the lowest quartile from the general population as a cutoff [13]. However, these methods do not actually reflect whether a score represents an unmet need from the perspective of the patient, which would require a clinician’s attention.

To address this issue, in a previous study, we used the Supportive Care Needs Survey-Short Form (SCNS-SF34) to determine cutoff scores on the European Organization for Research and Treatment of Cancer (EORTC) Quality of Life Questionnaire-Core 30 (QLQ-C30) that identify unmet needs [14]. We demonstrated that QLQ-C30 scores can discriminate between patients with and without unmet needs; however, the study was conducted in a limited sample (n = 117) of breast, prostate, and lung cancer patients from a single institution. The present analysis was undertaken to attempt to replicate the findings using a new and larger sample.

Patients and methods

Research design and data source

The objective of this study was to test the replicability of the QLQ-C30 cutoff scores from our previous study. To address this objective, we conducted a secondary analysis of data originally collected in the validation study of the Japanese version of the Supportive Care Needs Survey-Short Form (SCNS-SF34-J). The methods of this Japanese study have been reported previously [15]. Briefly, ambulatory breast cancer patients were recruited from the Oncology, Immunology and Surgery outpatient clinic of Nagoya City University Hospital. Inclusion criteria were diagnosis of breast cancer, age at least 20 years, awareness of cancer diagnosis, and Eastern Cooperative Oncology Group (ECOG) performance status of 0–3. Exclusion criteria were severe mental or cognitive disorders or inability to understand Japanese. Participants were selected at random using a list of visits and a random number table to limit the number of patients enrolled each day.

After providing written consent, subjects completed a paper survey that included the SCNS-SF34-J (validated in the parent study [15]) and the Japanese version of the EORTC-QLQ-C30 (described below). In addition to these PRO questionnaires, the survey included basic sociodemographic questions. Patients were instructed to return the completed survey to the clinic the following day, and follow-up by telephone was used to clarify inadequate answers. The attending physician provided ECOG performance status, and information on cancer stage and treatments was abstracted from the patients’ medical records.

The SCNS-SF34 was originally developed by investigators in Australia to identify unmet needs cancer patients have in five domains: physical and daily living, psychological, patient care and support, health system and information, and sexual [16, 17]. The 34-item questionnaire uses five response options: 1 = not applicable, 2 = satisfied, 3 = low unmet need, 4 = moderate unmet need, and 5 = high unmet need and a recall period of the “last month.” To calculate domain scores, we averaged the scores of the items within the domain; thus, domain scores >2.0 reflected some level of unmet need.
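The domain scoring and the "some unmet need" threshold described above can be sketched as follows. This is a minimal illustration, not the study's code: the function names and the example responses are hypothetical, and only the averaging rule and the >2.0 threshold come from the text.

```python
from statistics import mean

VALID_RESPONSES = (1, 2, 3, 4, 5)  # 1 = not applicable ... 5 = high unmet need


def scns_domain_score(item_responses):
    """Average the 1-5 item responses within one SCNS-SF34 domain."""
    if not item_responses:
        raise ValueError("at least one item response is required")
    if any(r not in VALID_RESPONSES for r in item_responses):
        raise ValueError("responses must be integers from 1 to 5")
    return mean(item_responses)


def has_unmet_need(score, threshold=2.0):
    """Scores above 2.0 indicate some unmet need; scores <= 2.0 indicate none."""
    return score > threshold


# Hypothetical responses for one domain (illustrative only, not study data).
responses = [2, 3, 4, 2, 2]
domain_score = scns_domain_score(responses)
print(domain_score, has_unmet_need(domain_score))
```

The same `has_unmet_need` check applies to a single item, because an item response of 3 or more already exceeds the threshold.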

The QLQ-C30 [18] is a cancer health-related quality-of-life questionnaire that has been widely used in clinical trials and investigations using PROs for individual patient management [3, 6, 11, 19]. It includes five function domains (physical, emotional, social, role, and cognitive), eight symptoms (fatigue, pain, nausea/vomiting, constipation, diarrhea, insomnia, dyspnea, and appetite loss), as well as global health/quality-of-life and financial impact. Subjects respond on a four-point scale from “not at all” to “very much” for most items. Most items use a “past week” recall period. Raw scores are linearly converted to a 0–100 scale, so that higher scores reflect better functioning on the function domains and greater symptom burden on the symptom domains. The Japanese version of the QLQ-C30 has been validated previously [20].
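The linear conversion to a 0–100 scale can be sketched as below. The article does not spell out the formula; this sketch assumes the standard approach in the EORTC scoring manual (mean of the items, rescaled by the item range, and reversed for function domains). The function name and the `kind` labels are hypothetical.

```python
# Item range = highest minus lowest response option.
# Assumption: four-point items (range 3) for function and symptom scales,
# seven-point items (range 6) for the global health/QOL scale.
ITEM_RANGE = {"function": 3, "symptom": 3, "global": 6}


def qlq_c30_scale_score(item_responses, kind):
    """Convert the raw item responses of one scale to a 0-100 score."""
    if kind not in ITEM_RANGE:
        raise ValueError("kind must be 'function', 'symptom', or 'global'")
    raw = sum(item_responses) / len(item_responses)  # raw score = item mean
    fraction = (raw - 1) / ITEM_RANGE[kind]          # 0 = lowest, 1 = highest
    if kind == "function":
        # Reverse so that higher scores mean better functioning.
        return (1 - fraction) * 100
    # Symptoms: higher = more burden. Global health/QOL: higher = better.
    return fraction * 100


# A function scale answered "a little" (2) on every item.
print(round(qlq_c30_scale_score([2, 2], "function"), 1))
```

Under this assumed formula, a two-item function scale answered "a little" on both items scores about 66.7.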

The Japanese study was approved by the Institutional Review Board and Ethics Committee of Nagoya City University Graduate School of Medical Sciences [15]. A de-identified dataset was provided to the Johns Hopkins investigators for this analysis, which was exempted from review by the Johns Hopkins School of Medicine Institutional Review Board.

Analyses

The data were analyzed using the methods applied in the original study using the SCNS-SF34 to identify cutoff scores on the QLQ-C30 that represent unmet need [14]. First, we dichotomized the SCNS-SF34 item and domain scores into no unmet need (scores ≤ 2.0) versus some unmet need (scores > 2.0). We then tested the ability of QLQ-C30 domain scores to discriminate between patients with and without an unmet need using the SCNS-SF34 domains and items we tested in our previous analysis (see Table 1 for a summary of the SCNS-SF34 items/domains tested for each QLQ-C30 domain). Variables for the discriminant analysis were selected to correspond as closely as possible to the content of the QLQ-C30 domains. In some cases, the content was quite similar (e.g., pain on the QLQ-C30 and pain on the SCNS-SF34). For a few QLQ-C30 domains, there was no SCNS-SF34 item or domain with similar content. In these cases we used a generic SCNS-SF34 item such as “feeling unwell a lot of the time.”

The discriminative ability of each QLQ-C30 domain score was summarized using the area under the receiver operating characteristic (ROC) curve (AUC). The AUC summarizes the ability of QLQ-C30 scores to discriminate between patients with and without a reported unmet need. Higher AUCs indicate better discriminative ability. For the domains with AUC ≥ 0.70, we then calculated the sensitivity and specificity, as well as the positive and negative predictive values, associated with various QLQ-C30 cutoff scores. We used a threshold of AUC ≥ 0.70 because Hosmer and Lemeshow suggest that values below 0.70 represent poor discrimination, between 0.70 and 0.80 represent acceptable discrimination, and above 0.80 represent excellent discrimination [21]. It was also the standard used for our previous analysis [14]. We hypothesized that compared to our original analysis, (1) the same QLQ-C30 domains would have AUC ≥ 0.70, (2) the same SCNS-SF34 items would be best discriminated by the QLQ-C30 and thus provide the highest AUC, and (3) the sensitivity and specificity of our original cutoff scores would be supported. Analyses were performed using statistical freeware R version 2.15.1.
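As an illustration of what the AUC measures, the sketch below computes it as the proportion of patient pairs (one with an unmet need, one without) in which the patient with the unmet need has the worse QLQ-C30 score, counting ties as one half. This pairwise (Mann-Whitney) formulation is a standard equivalent of the area under the ROC curve. It is not the R code used in the study, and the function name and example scores are hypothetical.

```python
def auc(scores_with_need, scores_without_need, higher_is_worse=True):
    """Pairwise AUC: chance that a patient with an unmet need scores 'worse'
    than a patient without one (ties count as one half).

    higher_is_worse: True for symptom domains, False for function domains
    and global health/QOL, where lower scores are worse.
    """
    if not scores_with_need or not scores_without_need:
        raise ValueError("both groups must contain at least one score")
    wins = 0.0
    for a in scores_with_need:
        for b in scores_without_need:
            if a == b:
                wins += 0.5
            elif (a > b) == higher_is_worse:
                wins += 1.0
    return wins / (len(scores_with_need) * len(scores_without_need))


# Hypothetical fatigue scores (illustrative only, not study data).
fatigue_with_need = [67, 44, 33, 56]
fatigue_without_need = [0, 11, 33, 22]
print(auc(fatigue_with_need, fatigue_without_need, higher_is_worse=True))
```

An AUC of 0.5 corresponds to chance-level discrimination and 1.0 to perfect separation of the two groups.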

Results

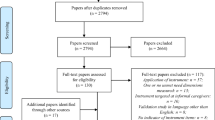

The sample has been described previously [15]. Briefly, from a pool of 420 potential participants, 12 were excluded due to declining participation (n = 7), cognitive deficits (n = 2), advanced disease (n = 1), and failure to respond after consenting (n = 2). The study sample included 408 subjects with a mean age of 56 years, 100 % female, 76 % married, and 45 % employed full- or part-time. The ECOG performance status was 0 for 90 % of the sample; the clinical stage was I or II for 71 %; 93 % had received surgery, 44 % chemotherapy, and 39 % radiation; and the median time from diagnosis was 701 days (range 11–17,915 days). Complete data were available for all 408 subjects, with the exception of one participant who was missing a single SCNS-SF34 item. That observation was excluded from analyses that required that item.

Table 1 shows which SCNS-SF34 items/domains were used to evaluate the discriminative ability for each QLQ-C30 domain, as well as the resulting AUCs both from our original analysis [14] and from this replication analysis. The AUCs were largely similar between studies. As hypothesized, the same six QLQ-C30 domains with AUCs ≥ 0.70 in the original analysis had AUCs ≥ 0.70 in the replication sample. Further, the SCNS-SF34 item that was best discriminated by the QLQ-C30 with the highest AUC in the original analysis also had the highest AUC in the replication sample. The following QLQ-C30 domain–SCNS-SF34 item pairings were used: physical function–work around the home (AUC = 0.74), role function–work around the home (AUC = 0.70), emotional function–feelings of sadness (AUC = 0.75), pain–pain (AUC = 0.74), fatigue–lack of energy/tiredness (AUC = 0.75), and global health/QOL–feeling unwell a lot of the time (AUC = 0.76).

Using these pairings, we evaluated the sensitivity, specificity, and predictive value of various cutoff scores on the QLQ-C30 (Table 2). Again, the results were largely similar between the original analysis and this replication sample. Examples of cutoff scores (sensitivity, specificity) from the replication sample are as follows: physical function <90 (0.85, 0.65); role function <90 (0.85, 0.62); emotional function <90 (0.84, 0.60); global health/QOL < 70 (0.86, 0.56); pain >10 (0.93, 0.54); and fatigue >30 (0.86, 0.62). Thus, each domain had at least one cutoff score with sensitivity ≥0.84 and specificity ≥0.54. This means that patients who reported unmet needs in a domain were identified correctly at least 84 % of the time and that patients who reported no unmet needs in a domain were identified correctly at least 54 % of the time using these cutoffs. In general, the negative predictive values (NPVs) associated with these cutoffs were higher than the positive predictive values (PPVs), with the NPVs ranging from 0.86 to 0.94 and PPVs ranging from 0.33 to 0.58. This means that if a patient was identified by the cutoff as not having an unmet need in a domain, 86–94 % of the time they did not report an unmet need and that if a patient was identified by the cutoff as having an unmet need, 33–58 % of the time they actually did report an unmet need. While we describe these cutoff scores for illustrative purposes, the specific cutoff scores used in a given application should be determined based on the relative importance of sensitivity and specificity.
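The four measures discussed above follow directly from a two-by-two table of cutoff result against reported unmet need. The sketch below uses hypothetical counts, chosen only to give round numbers; they are not taken from Table 2.

```python
def cutoff_metrics(tp, fn, fp, tn):
    """Sensitivity, specificity, PPV, and NPV from 2x2 counts.

    tp: flagged by the cutoff and reported an unmet need
    fn: not flagged but reported an unmet need
    fp: flagged but reported no unmet need
    tn: not flagged and reported no unmet need
    """
    return {
        "sensitivity": tp / (tp + fn),  # share of unmet needs that are flagged
        "specificity": tn / (tn + fp),  # share of no-need patients not flagged
        "ppv": tp / (tp + fp),          # share of flagged patients with a need
        "npv": tn / (tn + fn),          # share of unflagged patients without one
    }


# Hypothetical counts for 200 patients, 50 of whom report an unmet need.
metrics = cutoff_metrics(tp=40, fn=10, fp=60, tn=90)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

With these hypothetical counts, only a quarter of patients report an unmet need, so even a cutoff with 0.80 sensitivity yields a much lower PPV than NPV. This is the same pattern as the NPV and PPV ranges reported above.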

Discussion

This analysis was undertaken to test the generalizability of the findings from our previous study which evaluated the ability of different cutoff scores on the QLQ-C30 to identify patients with an unmet need in a given domain. Such cutoff scores facilitate the interpretation of PROs used clinically for individual patient management by helping clinicians determine which scores deserve further attention. Currently, there are few guides available to help clinicians determine which PRO scores represent a problem. For example, in PatientViewpoint, the PRO webtool used at Johns Hopkins [13, 22], we highlight in yellow QLQ-C30 domain scores representing the lowest quartile based on published general population norms [23] as an indication to the clinician reviewing the report that the patient may be having a problem in this area. However, these cutoff scores using distributions of the data are not empirically based on whether the score is likely to represent a problem from the patient’s perspective. For example, the results from this analysis suggest that domain scores <90 on role or emotional function likely represent a patient-reported unmet need. However, at our institution, we are currently using cutoff scores <66.7 for these two domains, based on the population distribution of scores. This means that our current cutoffs are missing patients with unmet needs with scores between 67 and 90. Based on the results of this analysis, we will explore changing the cutoffs to those presented here to highlight QLQ-C30 scores for the clinician’s attention.
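The gap between the two sets of cutoffs can be illustrated with a small flagging sketch. The cutoff values (<66.7 and <90) are those mentioned above for role and emotional function. The patient scores, dictionary keys, and function name are hypothetical, and this is not PatientViewpoint's actual implementation.

```python
# Cutoffs for function domains, where lower scores are worse.
DISTRIBUTION_CUTOFFS = {"role_function": 66.7, "emotional_function": 66.7}
NEEDS_BASED_CUTOFFS = {"role_function": 90, "emotional_function": 90}


def flag_function_domains(domain_scores, cutoffs):
    """Return the function domains whose score falls below its cutoff."""
    return [
        domain
        for domain, score in domain_scores.items()
        if domain in cutoffs and score < cutoffs[domain]
    ]


# Hypothetical patient with scores between the two sets of cutoffs.
patient = {"role_function": 83.3, "emotional_function": 75.0}
print(flag_function_domains(patient, DISTRIBUTION_CUTOFFS))
print(flag_function_domains(patient, NEEDS_BASED_CUTOFFS))
```

A patient like this one would not be highlighted under the distribution-based cutoffs but would be under the needs-based cutoffs.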

Our findings should be interpreted in the context of the study’s strengths and limitations. First, the approach of using the SCNS-SF34 to identify QLQ-C30 cutoff scores only works well for the six QLQ-C30 domains where there is content overlap between the SCNS-SF34 and QLQ-C30. For the domains without a corresponding SCNS-SF34 item to use for comparison, we do not have indicators of appropriate cutoffs. Future research could address this issue by using items similar in format to the SCNS-SF34 but covering the content of the relevant QLQ-C30 domains for which no data are currently available. Also, the SCNS-SF34 uses a recall period of the “past month,” whereas the QLQ-C30 generally uses a recall period of the “past week.” Ideally, the comparison between scores would be made with questionnaires that use the same recall period. The study design used in both the current sample and the original analysis was cross-sectional, so while absolute cutoff scores can be identified, important changes in scores are not addressed. Research from longitudinal studies using both the QLQ-C30 and SCNS-SF34 could explore changes in scores representing an unmet need.

Notably, this validation sample used QLQ-C30 and SCNS-SF34 data collected using the Japanese versions of the questionnaires. That we found such similarity between our original analysis and the current sample, despite differences in language and culture, suggests that these findings are robust. The Japanese study provided a new sample in which to test our original cutoffs, with about three and a half times as many patients (408 vs. 117). However, it enrolled only breast cancer patients, whereas our original analysis included three different cancer types (breast, prostate, and lung). Also, the Japanese sample included women with a wide range of time since diagnosis (11–17,915 days). The symptom burden for women who had completed treatment years previously may be lower than for women in active treatment. Nevertheless, given the substantial concordance between this replication sample and our original sample, we believe there is adequate evidence to support implementing these cutoffs in PatientViewpoint and other applications of the QLQ-C30 being used in clinical practice.

The next important step will be to evaluate whether clinicians and patients find these cutoffs helpful. A key consideration is which cutoff to use. We presented several example cutoff scores for illustrative purposes here, but the cutoff scores appropriate for a specific application depend on the relative importance of sensitivity and specificity. That is, the more patients with unmet needs a cutoff score identifies (true positives), the more patients without an unmet need it will also flag (false positives). Thus, it is important to consider the implications of false positives versus false negatives.
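One possible way to make this trade-off explicit is to set a minimum acceptable sensitivity and then choose, among the candidate cutoffs that meet it, the one with the highest specificity. The sketch below shows that selection rule. It is an assumption for illustration, not a rule the study applied, and the function names and data are hypothetical.

```python
def sens_spec(scores, needs, cutoff, higher_is_worse):
    """Sensitivity and specificity of one cutoff.

    Assumes the data contain at least one patient with and one without
    an unmet need; otherwise a division by zero would occur.
    """
    flagged = [(s > cutoff) if higher_is_worse else (s < cutoff) for s in scores]
    tp = sum(1 for f, n in zip(flagged, needs) if f and n)
    fn = sum(1 for f, n in zip(flagged, needs) if not f and n)
    fp = sum(1 for f, n in zip(flagged, needs) if f and not n)
    tn = sum(1 for f, n in zip(flagged, needs) if not f and not n)
    return tp / (tp + fn), tn / (tn + fp)


def pick_cutoff(scores, needs, candidates, min_sensitivity, higher_is_worse=True):
    """Among cutoffs meeting the sensitivity floor, return the one with the
    highest specificity as (cutoff, sensitivity, specificity), or None."""
    best = None
    for cutoff in candidates:
        sens, spec = sens_spec(scores, needs, cutoff, higher_is_worse)
        if sens >= min_sensitivity and (best is None or spec > best[2]):
            best = (cutoff, sens, spec)
    return best


# Hypothetical emotional function scores (lower is worse) and unmet-need flags.
scores = [100, 92, 83, 75, 67, 58, 92, 100, 83, 50]
needs = [False, False, True, True, True, True, False, False, False, True]
print(pick_cutoff(scores, needs, [70, 80, 90], 0.84, higher_is_worse=False))
```

Raising `min_sensitivity` favors catching more unmet needs at the cost of more false positives. Lowering it does the reverse, which may matter more where each flag triggers a costly action.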

In general, the use of PROs for individual patient management involves helping the clinician identify problems the patient may be experiencing and facilitating a focused discussion of PRO topics that might otherwise go unaddressed. This is essentially a screening function. We therefore expect follow-up of a “positive” score based on the cutoff to involve the clinician simply asking the patient about the issue and determining whether there is something that can and should be done to address any unmet needs. Given that this requires a minimal effort, it may be appropriate to favor high sensitivity over high specificity. However, it is also important to avoid alert fatigue, a phenomenon that leads to clinician inattention to potential problems and resistance to the tools in general. In addition, if the cutoff scores were to be applied by, for example, generating an automatic page to the clinician, then false positives would be much more problematic. Another issue is how to address PRO scores representing an unmet need. In previous research, we developed a range of suggestions for how to address issues identified by PRO questionnaires [24]. However, it is important to consider resource and reimbursement limitations for certain services (e.g., psychosocial services, home care), as well as their effectiveness, before implementing them as part of care pathways. Consideration of how these cutoff scores will be applied in practice will help determine the appropriate compromise between sensitivity and specificity.

In summary, this analysis was conducted to replicate our original analysis to determine whether specific cutoff scores effectively identify patients with unmet needs. For the QLQ-C30 domains with appropriate SCNS-SF34 content matches, our findings from the original analysis were largely supported. This suggests that these cutoff scores could be applied in practice, with an evaluation of their effectiveness from the clinician and patient perspectives. Specifically, it will be important to see how clinicians actually respond when presented with information from PROs using these (or other appropriate) cutoffs and whether the information helps increase clinicians’ awareness of unmet needs. Further research is also needed to identify cutoff scores for QLQ-C30 domains without SCNS-SF34 content matches, as well as to identify changes in scores that represent unmet need. In the meantime, the results for these six domains provide critical guidance to clinicians interpreting PRO reports on which scores require their attention.

Abbreviations

- AUC: Area under the curve
- ECOG: Eastern Cooperative Oncology Group
- EORTC-QLQ-C30: European Organization for the Research and Treatment of Cancer Quality of Life Questionnaire Core 30
- NPV: Negative predictive value
- PPV: Positive predictive value
- PRO: Patient-reported outcome
- ROC: Receiver operating characteristic
- SCNS-SF34: Supportive Care Needs Survey-Short Form-34

References

Snyder, C. F., & Aaronson, N. K. (2009). Use of patient-reported outcomes in clinical practice. The Lancet, 374, 369–370.

Greenhalgh, J. (2009). The applications of PROs in clinical practice: What are they, do they work, and why? Quality of Life Research, 18, 115–123.

Velikova, G., Booth, L., Smith, A. B., et al. (2004). Measuring quality of life in routine oncology practice improves communication and patient well-being: A randomized controlled trial. Journal of Clinical Oncology, 22, 714–724.

Berry, D. L., Blumenstein, B. A., Halpenny, B., et al. (2011). Enhancing patient-provider communication with the electronic self-report assessment for cancer: A randomized trial. Journal of Clinical Oncology, 29, 1029–1035.

Santana, M. J., Feeny, D., Johnson, J. A., et al. (2010). Assessing the use of health-related quality of life measures in the routine clinical care of lung-transplant patients. Quality of Life Research, 19, 371–379.

Detmar, S. B., Muller, M. J., Schornagel, J. H., Wever, L. D. V., & Aaronson, N. K. (2002). Health-related quality-of-life assessments and patient-physician communication. A randomized clinical trial. Journal of the American Medical Association, 288, 3027–3034.

Greenhalgh, J., & Meadows, K. (1999). The effectiveness of the use of patient-based measures of health in routine practice in improving the process and outcomes of patient care: A literature review. Journal of Evaluation in Clinical Practice, 5, 401–416.

Marshall, S., Haywood, K., & Fitzpatrick, R. (2006). Impact of patient-reported outcome measures on routine practice: A structured review. Journal of Evaluation in Clinical Practice, 12, 559–568.

Haywood, K., Marshall, S., & Fitzpatrick, R. (2006). Patient participation in the consultation process: A structured review of intervention strategies. Patient Education and Counseling, 63, 12–23.

Cleeland, C. S., Wang, X. S., Shi, Q., et al. (2011). Automated symptom alerts reduce postoperative symptom severity after cancer surgery: A randomized controlled clinical trial. Journal of Clinical Oncology, 29, 994–1000.

McLachlan, S.-A., Allenby, A., Matthews, J., et al. (2001). Randomized trial of coordinated psychosocial interventions based on patient self-assessments versus standard care to improve the psychosocial functioning of patients with cancer. Journal of Clinical Oncology, 19, 4117–4125.

Snyder, C. F., Blackford, A. L., Aaronson, N. K., et al. (2011). Can patient-reported outcome measures identify cancer patients’ most bothersome issues? Journal of Clinical Oncology, 29, 1216–1220.

Snyder, C. F., Blackford, A. L., Wolff, A. C., et al. (2012). Feasibility and value of PatientViewpoint: a web system for patient-reported outcomes assessment in clinical practice. Psycho-Oncology. doi:10.1002/pon.3087.

Snyder, C. F., Blackford, A. L., Brahmer, J. R., et al. (2010). Needs assessments can identify scores on HRQOL questionnaires that represent problems for patients: an illustration with the Supportive Care Needs Survey and the QLQ-C30. Quality of Life Research, 19, 837–845.

Okuyama, T., Akechi, T., Yamashita, H., et al. (2009). Reliability and validity of the Japanese version of the short-form supportive care needs survey questionnaire (SCNS-SF34-J). Psycho-Oncology, 18, 1003–1010.

Bonevski, B., Sanson-Fisher, R. W., Girgis, A., et al. (2000). Evaluation of an instrument to assess the needs of patients with cancer. Cancer, 88, 217–225.

Sanson-Fisher, R., Girgis, A., Boyes, A., et al. (2000). The unmet supportive care needs of patients with cancer. Cancer, 88, 226–237.

Aaronson, N. K., Ahmedzai, S., Bergman, B., et al. (1993). The European organization for research and treatment of cancer QLQ-C30: a quality-of-life instrument for use in international clinical trials in oncology. Journal of the National Cancer Institute, 85, 365–376.

Velikova, G., Brown, J. M., Smith, A. B., & Selby, P. J. (2002). Computer-based quality of life questionnaires may contribute to doctor-patient interactions in oncology. British Journal of Cancer, 86, 51–59.

Kobayashi, K., Takeda, F., Teramukai, S., et al. (1998). A cross-validation of the European organization for research and treatment of cancer QLQ-C30 (EORTC QLQ-C30) for Japanese with lung cancer. European Journal of Cancer, 34, 810–815.

Hosmer, D. W., & Lemeshow, S. (2000). Applied Logistic Regression (2nd ed.). Chichester, New York: Wiley.

Snyder, C. F., Jensen, R., Courtin, S. O., Wu, A. W., & Website for Outpatient QOL Assessment Research Network. (2009). PatientViewpoint: A website for patient-reported outcomes assessment. Quality of Life Research, 18, 793–800.

Fayers, P. M., Weeden, S., Curran, D., & on behalf of the EORTC Quality of Life Study Group. (1998). EORTC QLQ-C30 Reference Values. Brussels: EORTC (ISBN: 2-930064-11-0).

Hughes, E. F., Wu, A. W., Carducci, M. A., & Snyder, C. F. (2012). What can I do? Recommendations for responding to issues identified by patient-reported outcomes assessments used in clinical practice. Journal of Supportive Oncology, 10, 143–148.

Acknowledgments

This analysis was supported by the American Cancer Society (MRSG-08-011-01-CPPB). The original data collection was supported in part by Grants-in-Aid for Cancer Research and the Third Term Comprehensive 10-Year Strategy for Cancer Control from the Ministry of Health, Labour and Welfare, Japan. Drs. Snyder and Carducci are members of the Sidney Kimmel Comprehensive Cancer Center at Johns Hopkins (P30CA006973). The funding sources had no role in study design, data collection, analysis, interpretation, writing, or decision to submit the manuscript for publication.

Conflict of interest

The authors report no conflict of interest.


About this article

Cite this article

Snyder, C.F., Blackford, A.L., Okuyama, T. et al. Using the EORTC-QLQ-C30 in clinical practice for patient management: identifying scores requiring a clinician’s attention. Qual Life Res 22, 2685–2691 (2013). https://doi.org/10.1007/s11136-013-0387-8


DOI: https://doi.org/10.1007/s11136-013-0387-8