Abstract

Background: Methods based on item response theory (IRT) that can be used to examine differential item functioning (DIF) are illustrated. An IRT-based approach to the detection of DIF was applied to physical function and general distress item sets. DIF was examined with respect to gender, age and race. The method used for DIF detection was the item response theory log-likelihood ratio (IRTLR) approach. DIF magnitude was measured using the differences in the expected item scores, expressed as the unsigned probability differences, and calculated using the non-compensatory DIF index (NCDIF). Finally, impact was assessed using expected scale scores, expressed as group differences in the total test (measure) response functions.

Methods: The example used to illustrate the methods came from a study of 1,714 patients with cancer or HIV/AIDS. The measure contained 23 items measuring physical functioning ability and 15 items addressing general distress, all scored in the positive direction.

Results: The substantive findings were of relatively small magnitude DIF. In total, six physical function items showed DIF of relatively larger magnitude (expected item score differences greater than the cutoff) across the three comparisons: “trouble with a long walk” (race), “vigorous activities” (race, age), “bending, kneeling, stooping” (age), “lifting or carrying groceries” (race), “limited in hobbies, leisure” (age), and “lack of energy” (race). None of the general distress items evidenced high magnitude DIF, although “worrying about dying” showed some DIF with respect to both age and race after adjustment.

Conclusions: The fact that many physical function items showed DIF with respect to age, even after adjustment for multiple comparisons, indicates that the instrument may be performing differently for these groups. While the magnitude and impact of DIF at the item and scale levels were minimal, caution should be exercised in the use of subsets of these items, as might occur with selection for clinical decisions or computerized adaptive testing. The selection of anchor items and the criteria for DIF detection, including the integration of significance and magnitude measures, remain issues requiring investigation. Further research is needed regarding the criteria and guidelines appropriate for DIF detection in the context of health-related items.



Introduction

The purpose of this paper is to illustrate methods based on item response theory (IRT) that can be used to examine differential item functioning (DIF). The companion paper by Crane and colleagues [1] illustrates the use of the ordinal logistic regression (OLR) approaches of Swaminathan and Rogers [2], Zumbo [3] and Crane et al. [4]; some of the analyses in that paper were based on a modified IRT approach. Several other methods for DIF detection are reviewed in a recent special issue of Medical Care [5], and the advantages and disadvantages of the different methods are also reviewed in that issue [6]. While a discussion of these issues is beyond the scope of this paper, several simulation studies reviewed in that special issue support the use of the IRTLR approach to DIF detection.

This paper applies an IRT-based approach to the detection of DIF in physical function and general distress item sets. DIF was examined with respect to gender, age and race. These demographic variables were selected on theoretical grounds: DIF analyses should be performed with respect to variables that are hypothesized to affect the relationship between the item response and the ability (disability) targeted for study. Previous studies have identified DIF in measures of affective disorder [7–9] and physical function [10–12] with respect to one or more of the three background variables examined.

The DIF detection method described in this paper is the IRT log-likelihood ratio (IRTLR) approach [13, 14]. DIF magnitude was assessed using the differences in expected item scores, expressed as the unsigned probability differences [15], and calculated using the non-compensatory DIF (NCDIF) index [16, 17]. Finally, impact was assessed using expected scale scores, expressed as group differences in the total test (measure) response functions. These latter functions show the extent to which DIF cancels at the scale level (DIF cancellation). The measures, sample and background are described in the paper by Crane and colleagues [1], and this information is briefly summarized in the Methods section.

Definition of DIF: DIF analysis in the context of health-related constructs involves three factors: item response, disability (ability) level and subgroup membership; the research question is how item response is related to disability for different subgroups. The relationship implied by this question is often defined in terms of item parameters, so that DIF analysis frequently examines differences in these parameters. DIF analysis is concerned with the question of whether or not the likelihood of item (category) endorsement is equal across subgroups. A key issue is whether the method used is conditional or non-conditional; only conditional methods that take disability/ability into account are acceptable. The necessity of a conditioning variable has been illustrated by Dorans and Holland [18] and Dorans and Kulick [19], in the context of Simpson’s [20] paradox. They provide examples showing that if two groups differ in their overall distributions of ability, an item will appear to favor the group with more functional ability. However, examination of differences in proportions endorsing an item (claiming independence in function) at different ability levels or groupings can actually show a reverse pattern. Thus, as pointed out by Dorans and Kulick [19], it is important to compare the comparable, by controlling for disability/ability before examining differences in performance between groups on an item.
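The Simpson’s paradox argument can be made concrete with a small numerical sketch. All parameters and ability distributions below are made up for illustration: one binary item with identical two-parameter logistic (2PL) parameters in both groups (so, by construction, there is no DIF), administered to two groups whose ability distributions differ. The unconditional endorsement rates differ even though the conditional ones are identical, which is why only conditional comparisons are acceptable.

```python
import math

def icc_2pl(theta, a, b):
    """2PL item characteristic curve: probability of endorsement at ability theta."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))

# Hypothetical item WITHOUT DIF: both groups share identical parameters (a, b).
PARAMS = {"group_1": (1.5, 0.0), "group_2": (1.5, 0.0)}

# Three discrete ability levels and hypothetical group weights (each list sums to 1).
THETAS = [-1.0, 0.0, 1.0]
WEIGHTS = {
    "group_1": [0.5, 0.3, 0.2],  # concentrated at lower ability
    "group_2": [0.2, 0.3, 0.5],  # concentrated at higher ability
}

# Conditional comparison: endorsement probability at each fixed ability level.
conditional = {g: [icc_2pl(t, *PARAMS[g]) for t in THETAS] for g in PARAMS}

# Unconditional comparison: endorsement averaged over each group's ability distribution.
marginal = {g: sum(w * p for w, p in zip(WEIGHTS[g], conditional[g])) for g in PARAMS}

print(conditional["group_1"] == conditional["group_2"])  # True: no DIF at any level
print(round(marginal["group_1"], 3), round(marginal["group_2"], 3))  # 0.405 0.595
```

The item "favors" the higher-ability group by roughly .19 in the unconditional comparison, purely because of the difference in ability distributions.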

Another key issue is the nature of the conditioning variable. IRT disability/ability estimates are often used because observed scores (typically used in logistic regression) may not be adequate proxies for latent health status, and may result in false DIF detection, particularly with shorter scales (see Millsap and Everson [21]). However, logistic regression methods do not need to be limited to the use of observed scores. Latent conditioning variables can be used, as was done in the companion paper [1].

DIF in the context of IRT: A basic concept in IRT is that a set of items is being used to measure an underlying attribute (also called a trait or state, e.g., a health condition, state of emotional distress, functional ability, disability or disorder); the central concern is how the item responses are related to the trait. For the example presented in this paper, the underlying attributes are scored in the positive direction, and reflect physical functional ability and positive emotional state (lack of general distress).

Different models are used for binary (dichotomous) items than for ordered categorical (polytomous) items. A mixture of such items was used in the scales analyzed. The following discussion pertains to binary items; the model for polytomous items is explained in the Appendix. The expectation is that respondents who are not disabled would be more likely than those who are disabled to respond asymptomatically (in a non-symptomatic direction) to an item measuring ability. Conversely, a person with disability is expected to have a lower probability of responding in a non-disabled direction to the item. The curve that relates the probability of an item response to the underlying health condition measured by the item set is known as an item characteristic curve (ICC). In some forms of the model, this curve can be characterized by two parameters: a discrimination parameter (denoted as a) that is proportional to the slope of the curve, and a location (also called difficulty, or severity) parameter (denoted as b) that is the point of inflection of the curve (for the two-parameter model, the ability level at which the probability of endorsement is .5). (See also the Appendix.) Under the IRT model illustrated in the figures in this paper, an item shows DIF if people from different subgroups but at the same functional ability level have unequal probabilities of endorsement. For example, in the absence of DIF, African-American people with mild disability should have the same chance of a given response to a particular physical functioning ability item as do White people with mild disability. Put another way, the absence of DIF is demonstrated by ICCs that are the same for each group of interest.
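As a minimal sketch of the binary ICC just described (assuming the plain logistic metric, i.e., without the 1.7 scaling constant that some programs apply, and hypothetical parameter values), the following checks numerically that the probability of endorsement is .5 at θ = b and that the slope there equals a/4, so it grows in proportion to a.

```python
import math

def icc_2pl(theta, a, b):
    """2PL item characteristic curve (logistic metric; no 1.7 scaling constant assumed)."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))

def slope_at(theta, a, b, h=1e-5):
    """Central-difference estimate of the ICC slope at theta."""
    return (icc_2pl(theta + h, a, b) - icc_2pl(theta - h, a, b)) / (2 * h)

a, b = 2.0, 0.5                    # hypothetical item parameters
p_at_b = icc_2pl(b, a, b)          # 0.5: b is the inflection point
slope_at_b = slope_at(b, a, b)     # about a / 4 = 0.5: steeper curve for larger a
```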

Description of the Model

The following analyses were conducted using a mixture of the two-parameter logistic (for binary items) and graded (for polytomous, ordered response category items) item response models (see Hambleton et al. [22]; Lord [23]; Lord and Novick [24]; Samejima [25]). Important first steps in the analyses (not presented here) include examination of model assumptions (such as unidimensionality) and model fit. These analyses were conducted prior to release of these data sets, and provided evidence of essential unidimensionality.

Example of the Model: An example is shown in Fig. 1. The curves for two self-identified race groups for the item “trouble with a long walk” represent the relationship between the probability of a positive (unimpaired) response and physical functioning ability. The fact that the curves are not identical and that there is space (area) between them indicates that some DIF is present. In this example, the curves are parallel and do not cross, which is called “uniform” or “unidirectional” DIF. To read the figure, locate the value .5 on the x (ability) axis, also referred to as theta (θ), and draw a vertical line; its intersection with each ICC, read off the y axis (probability of response), gives the probability of item endorsement for individuals at that ability level. For example, the probability of a randomly selected African-American person of above average physical function (θ = .5) responding that s/he has no trouble “with a long walk” is higher (.62) than for a randomly selected White person (.44) at the same ability level. Specifically, at this ability level (θ = .5), the DIF results in a difference in response probabilities of .18. In fact, across much of the ability continuum, African-American respondents are more likely than White respondents of the same ability level to endorse the category “no trouble”, resulting in a difference in the areas under the curves for the two groups. It takes more ability for Whites than for African-Americans to claim that they have no trouble with a long walk. For example, a probability of .62 for Whites corresponds to a higher ability level (theta closer to 1.0) than for African-Americans.
Thus, this item is not performing in the same manner for both groups, and model-based significance tests indicated that this item exhibited DIF: it maximally discriminates (separates ability levels) at higher levels of functional ability for Whites as contrasted with African-Americans. This also is demonstrated by the higher b (or severity) parameter estimate for White respondents (.61) than for African-American respondents (.35).
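The uniform DIF pattern in Fig. 1 can be sketched with two 2PL curves that share a discrimination parameter and differ only in b. The b values below are the severity estimates quoted above for “trouble with a long walk”; the common a is a hypothetical placeholder because its estimate is not reported here, so the probabilities computed will not necessarily reproduce the values read from Fig. 1. What the sketch does show exactly is that with equal a’s the two curves are horizontal shifts of one another, so the additional ability Whites need to reach any given probability equals b(White) − b(African-American) = .26 at every probability level.

```python
import math

def icc_2pl(theta, a, b):
    """2PL item characteristic curve (logistic metric)."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))

def theta_for_prob(p, a, b):
    """Inverse ICC: ability at which the endorsement probability equals p."""
    return b + math.log(p / (1.0 - p)) / a

A_COMMON = 1.8              # hypothetical shared discrimination (not reported in the text)
B_WHITE, B_AA = 0.61, 0.35  # severity estimates quoted for "trouble with a long walk"

theta = 0.5
p_white = icc_2pl(theta, A_COMMON, B_WHITE)
p_aa = icc_2pl(theta, A_COMMON, B_AA)
gap_at_theta = p_aa - p_white   # positive: the AA curve lies above the White curve

# With equal a's, the extra ability needed by Whites is the same at every probability level.
shifts = [theta_for_prob(p, A_COMMON, B_WHITE) - theta_for_prob(p, A_COMMON, B_AA)
          for p in (0.2, 0.5, 0.62, 0.9)]
```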

This difference is also apparent in the raw data, and can be illustrated by examining the crosstabulation between item response and race classification for a selected observed score level. For example, moving from the latent variable model just discussed to the more familiar sum score, it is observed that for raw sum score levels 26–32 on the Physical Function scale (reflecting above average physical function), 31.6% of African-American persons, as contrasted with 17.2% of White persons, responded that they had “no trouble” with a long walk. In the absence of DIF, it would be expected that these percentages would be roughly equal. In educational testing this would be regarded as an easier item for African-American people, because more African-American people responded that they had “no trouble”, or “got it right”. However, this interpretation makes little sense in health and mental health assessment, in which speaking of item severity is more appropriate. (It is also noted that the practice of scoring symptom scales in the positive health direction, as was done in these analyses, might result in some confusion; however, because the scale had been used in this fashion, the decision was made to score the items to conform to past applications.) Formulas and illustrative calculations are shown in the Appendix.
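The raw-data check described above (the percentage of each group endorsing “no trouble” within a band of sum scores) can be sketched as follows. The records are invented toy data, not the study data, and the function name is hypothetical; recall that the sum score is only an observed proxy for the latent trait.

```python
from collections import defaultdict

def endorsement_by_group(records, low, high):
    """Percent of each group endorsing the item among respondents with sum score in [low, high].

    records: iterable of (sum_score, group, endorsed) tuples; endorsed is True/False.
    """
    counts = defaultdict(lambda: [0, 0])  # group -> [number endorsing, number in band]
    for score, group, endorsed in records:
        if low <= score <= high:
            counts[group][1] += 1
            if endorsed:
                counts[group][0] += 1
    return {g: 100.0 * e / n for g, (e, n) in counts.items() if n > 0}

# Hypothetical toy records (NOT the study data).
toy = [
    (27, "AA", True), (29, "AA", False), (31, "AA", True), (30, "AA", False),
    (26, "W", False), (28, "W", True), (32, "W", False), (30, "W", False),
    (15, "W", True),  # outside the 26-32 band, so it is ignored
]
pct = endorsement_by_group(toy, 26, 32)   # {"AA": 50.0, "W": 25.0}
```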

The item shown in Fig. 1 has equal discrimination (a) parameters because this graphic reflects the result from IRTLR where the discrimination parameters were found to be equivalent, and were constrained to be equal in the final analysis. Figure 2 is an example of non-uniform DIF for an item with three response categories, where the curves cross. The curve associated with level 3, “not limited at all walking one block”, shows that the probability of response is higher for African-Americans than for Whites at lower levels of ability, but the reverse is observed for higher levels of ability.

A point of clarification is that when the a parameters are freely estimated, they will almost never be exactly identical; the test for non-uniform DIF therefore determines whether or not constraining the a parameters to be equal makes the likelihood of the model statistically significantly worse, which would indicate DIF. For this example, the a parameter estimates were not exactly equal for the two groups originally (a = 3.53 for Whites and 2.64 for African-Americans) and, in fact, were significantly different, indicating non-uniform DIF, prior to the Bonferroni [26] correction. Thus, in this particular case the actual a’s were used in the plots in Fig. 2 in order to illustrate the basic points about non-uniform DIF.
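To illustrate non-uniform DIF in a three-category item, the sketch below computes graded response model category probabilities (Samejima’s model, in the plain logistic metric) for two groups that differ only in the a parameter. The a values are those quoted above for “walk one block”; the two thresholds are hypothetical and are assumed equal across groups purely for illustration. Under these assumptions, the top-category curves cross at the upper threshold: the flatter curve (African-Americans) is higher below it and lower above it, matching the pattern described for Fig. 2.

```python
import math

def boundary(theta, a, b_k):
    """Graded model: probability of responding in category k or higher."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b_k)))

def grm_category_probs(theta, a, bs):
    """Probabilities of the len(bs) + 1 ordered categories; bs must be increasing."""
    cum = [1.0] + [boundary(theta, a, b) for b in bs] + [0.0]
    return [cum[k] - cum[k + 1] for k in range(len(bs) + 1)]

A_WHITE, A_AA = 3.53, 2.64   # a estimates quoted in the text
THRESHOLDS = [-1.0, 0.0]     # hypothetical, shared by both groups for illustration only

low, high = -0.5, 0.5
# Probability of the top category ("not limited at all") for (White, African-American).
top_low = (grm_category_probs(low, A_WHITE, THRESHOLDS)[-1],
           grm_category_probs(low, A_AA, THRESHOLDS)[-1])
top_high = (grm_category_probs(high, A_WHITE, THRESHOLDS)[-1],
            grm_category_probs(high, A_AA, THRESHOLDS)[-1])
```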

Although the Bonferroni [26] method was used to adjust for multiple comparisons, other approaches, for example, Benjamini–Hochberg (B-H) [27, 28], have been recommended as more powerful for adjustment (see Steinberg [29]; Thissen et al. [30]; and Orlando et al. [31] for examples). For this example, there were few differences in results between the two approaches; thus the Bonferroni method was used for consistency with the approach used in the companion paper.
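The two multiple-comparison adjustments can be contrasted on a set of hypothetical item-level p values. Bonferroni compares each p value with α/m; Benjamini–Hochberg sorts the p values, finds the largest rank k with p(k) ≤ kα/m, and flags the k smallest. The sketch below is a plain implementation of these textbook definitions, not the code of any DIF program.

```python
def bonferroni(pvalues, alpha=0.05):
    """Flag each test whose p value is at most alpha / m."""
    m = len(pvalues)
    return [p <= alpha / m for p in pvalues]

def benjamini_hochberg(pvalues, alpha=0.05):
    """Step-up false discovery rate procedure; returns flags in the original order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    k_max = 0
    for rank, i in enumerate(order, start=1):
        if pvalues[i] <= rank * alpha / m:
            k_max = rank          # largest rank meeting its threshold
    flags = [False] * m
    for rank, i in enumerate(order, start=1):
        flags[i] = rank <= k_max
    return flags

p = [0.001, 0.004, 0.012, 0.03, 0.2]   # hypothetical item-level p values
print(bonferroni(p))           # [True, True, False, False, False]
print(benjamini_hochberg(p))   # [True, True, True, True, False]
```

With five tests, Bonferroni uses a single threshold of .01, whereas Benjamini–Hochberg lets the threshold rise with rank, which is the source of its greater power.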

IRTLR modeling: IRTLR is a nested model comparison approach used to identify items exhibiting DIF. A compact (more parsimonious) model (model 1), in which all parameters of the studied item (together with those of the anchor items) are constrained to be equal across groups, is tested against an augmented model [2] in which one or more parameters of the studied item are freed to be estimated separately for the two groups. The difference in −2 log-likelihoods (−2LL) of the two nested models, G2, is distributed approximately as chi-square, and is evaluated for significance with degrees of freedom equal to the difference in the number of parameters estimated in the two models. For example, the G2 statistic would have 2 degrees of freedom for each tested item from a 2PL model (i.e., for binary items with difficulty (severity) and discrimination parameters constrained equal vs. estimated freely for the two groups). For the graded response model, the degrees of freedom increase with the number of b (difficulty or severity) parameters estimated. (There is one fewer b estimated than there are response categories.) It is noted that IRTLR is based on a hierarchical structure, such that b parameters are tested for uniform DIF only if the tests of the a parameters are not significant. Tests of b parameters are performed with the a parameters constrained to be equal; in that context, if the a parameters are found to differ, further tests of the b parameters are not warranted. The rationale is that if the slopes are not equal, then the curves must cross, and the threshold parameter is useful only for testing whether the crossing point is near the threshold (in which case the test is not significant) or not (in which case the test is significant).
This can be contrasted with one of the OLR approaches examined by Crane and colleagues [1], in which log-likelihood tests of both non-uniform and uniform DIF are examined in a two-step procedure.
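The likelihood-ratio comparison itself is simple arithmetic once each nested model has been fitted. In the sketch below, the −2LL values and parameter counts are hypothetical stand-ins for output from IRT software; the chi-square upper-tail probability is computed with the standard closed form for integer degrees of freedom, so the example needs only the Python standard library.

```python
import math

def chi2_sf(x, df):
    """Upper-tail chi-square probability P(X > x) for a positive integer df (closed form)."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)^i / i!
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= half / i
            total += term
        return math.exp(-half) * total
    # Odd df: erfc(sqrt(x/2)) + sqrt(2/pi) * exp(-x/2) * sum_r sqrt(x)^(2r-1) / (1*3*...*(2r-1))
    term, total = math.sqrt(x), 0.0
    for r in range(1, (df - 1) // 2 + 1):
        total += term
        term *= x / (2 * r + 1)
    return math.erfc(math.sqrt(half)) + math.sqrt(2.0 / math.pi) * math.exp(-half) * total

def lr_test(neg2ll_compact, neg2ll_augmented, k_compact, k_augmented):
    """G2 = difference in -2LL between nested models; df = number of freed parameters."""
    g2 = neg2ll_compact - neg2ll_augmented
    df = k_augmented - k_compact
    return g2, df, chi2_sf(g2, df)

# Hypothetical fits for a binary item: compact (a and b equal) vs. augmented (a and b free).
g2, df, p = lr_test(neg2ll_compact=1000.0, neg2ll_augmented=989.0, k_compact=10, k_augmented=12)
```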

Anchor Items: If no prior information about DIF in the item set is available, initial DIF estimates can be obtained by treating each item as a “studied” item, while using the remainder as “anchor” items. Anchor items are assumed to be without DIF, and are used to estimate theta (ability) and to link the two groups compared in terms of ability. This process of log-likelihood comparisons is performed iteratively for each item. (See the Steps in the analyses below for an illustration.)

While one recommendation (see Thissen [14]) is to reject as anchor items all items meeting the criteria in 1a below, this can result in the selection of a very small anchor set for some comparisons. As discussed below, our view was that a somewhat larger anchor set would be preferable for this example. Anchor item selection is an area that requires additional research. While as few as one anchor item could be used, in general more anchor items may be associated with less conceptual drift in terms of the construct measured, and one simulation study found that a larger number of anchor items (10 as contrasted with 4 or 1) resulted in greater power for DIF detection (Wang et al. [32]).

Steps in the analyses

Presented below is an example of the use of IRTLR. The following procedures for performing the analyses are adapted from Orlando et al. [31]. Examples of the use of IRTLR can be found in Orlando and Marshall [33] and Teresi et al. [34].

DIF detection

A general description of the steps is provided below; comments refer to the physical function example shown in the tables and graphs.

Identification of anchor items

1a. The first comparison is between a model with all parameters constrained to be equal for any two comparison groups, including the studied item, and a model with separate estimation of all parameters for the studied item. IRTLRDIF is designed using stringent criteria for DIF detection, so that if any model comparison results in a chi-square value greater than 3.84 (d.f. = 1), indicating that at least one parameter differs between the two groups at the .05 level, the item is assumed to have DIF. The results are then reviewed so that the chi-square statistic is evaluated using the correct degrees of freedom, which are dependent on the number of response categories for an item. Non-DIF items are selected as anchor items.

As an example, the G2 for the overall test of all parameters equal versus all free for one of the studied items was 3.9 (4 d.f.); the G2 for the a’s was .1 (1 d.f.), and the G2 for the b’s was 3.8 (3 d.f., corresponding to the three b’s estimated for a four-category item). Note that the overall G2 is the sum of those for the a’s and b’s because the models are nested. Note also that 3.84 (1 d.f.) is the threshold used to screen whether any parameter evidences DIF, on the assumption that all of the DIF could be concentrated in a single parameter.
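The two-stage screening of step 1a can be sketched as follows, using the numbers from the example just given for a four-category item. The 3.84 screen flags the item because one comparison exceeds it, but re-evaluating the overall G2 against the chi-square critical value for the correct 4 degrees of freedom (one a plus three b’s) does not, so the item remains an anchor candidate. The function names are hypothetical, and the critical values are the standard .05 chi-square quantiles.

```python
SCREEN_CUTOFF = 3.84  # chi-square (1 df) at the .05 level: the stringent screen of step 1a
CHI2_CRIT_05 = {1: 3.841, 2: 5.991, 3: 7.815, 4: 9.488, 5: 11.070, 6: 12.592}

def flagged_by_screen(g2_values):
    """Screen: flag the item if ANY model comparison exceeds 3.84."""
    return any(g2 > SCREEN_CUTOFF for g2 in g2_values)

def dif_with_correct_df(g2_overall, n_categories):
    """Re-evaluate the overall test: df = 1 slope + (n_categories - 1) thresholds."""
    df = n_categories
    return g2_overall > CHI2_CRIT_05[df]

# Example from the text: overall 3.9 (4 df), a's 0.1 (1 df), b's 3.8 (3 df); four categories.
g2_overall, g2_a, g2_b = 3.9, 0.1, 3.8
screened = flagged_by_screen([g2_overall, g2_a, g2_b])   # True: 3.9 > 3.84
confirmed = dif_with_correct_df(g2_overall, 4)           # False: 3.9 < 9.488
is_anchor_candidate = not confirmed
```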

1b. If there is any DIF, further model comparisons are performed. For the two-parameter model, the a parameter (referred to as the slope or discrimination) is constrained to be equal, and the b parameter (referred to as difficulty, location, threshold or severity) is estimated freely; this model is compared to that with both a and b parameters estimated freely (for all other items the parameters are constrained to be equal for both groups). This is a test of DIF in the a parameter.

The same procedure is followed for the tests of DIF in the b parameters. In all models, the parameters of the anchor items are constrained to be equal across groups, and the a parameter of the tested item is also constrained to be equal. Two models are compared, one in which the b’s are the same for both groups and one in which they differ. The resulting G2 tests for DIF in the b parameters, with the a parameters constrained equal and the b parameters free to be estimated as different. This G2 is obtained by subtracting the G2 for the test of the a parameters from the overall G2 evaluating any difference: G2(b) = G2(all equal vs. all free) − G2(a).

For example, for item 5 (trouble with a long walk), the overall G2 for all equal vs. all parameters free is 11.0, with the DIF observed for the b parameter (G2 = 9.7), while the G2 for the test of the a parameter was 1.3. In the current analyses of race groups, 13 items out of 23 physical function items were identified as anchor items.
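The partition and the a-first hierarchy of step 1b can be sketched as below, using the G2 values reported for item 5. The number of response categories for this item is not restated here, so the sketch is run under both a binary and a four-category assumption; the decision (uniform DIF in b) is the same in either case. Function and key names are hypothetical.

```python
CHI2_CRIT_05 = {1: 3.841, 2: 5.991, 3: 7.815, 4: 9.488, 5: 11.070}  # .05 critical values

def step_1b(g2_overall, g2_a, n_categories):
    """Partition the overall G2 and apply the a-first hierarchy.

    g2_overall: all parameters equal vs. all free; g2_a: a equal vs. a free.
    """
    g2_b = g2_overall - g2_a      # G2 for the b's, with a constrained equal
    df_b = n_categories - 1
    if g2_a > CHI2_CRIT_05[1]:
        # Slopes differ: curves cross, so the b's are not tested further
        return {"g2_a": g2_a, "g2_b": None, "decision": "non-uniform DIF (a)"}
    if g2_b > CHI2_CRIT_05[df_b]:
        return {"g2_a": g2_a, "g2_b": g2_b, "decision": "uniform DIF (b)"}
    return {"g2_a": g2_a, "g2_b": g2_b, "decision": "no DIF"}

# Item 5 ("trouble with a long walk"): overall G2 = 11.0, G2 for a = 1.3.
as_binary = step_1b(11.0, 1.3, n_categories=2)        # hypothetical category count
as_four_cat = step_1b(11.0, 1.3, n_categories=4)      # hypothetical category count
```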

Purification of the anchor set

2. Even after anchor items have been identified with IRTLRDIF, some of them may still show DIF. All of the candidate anchor items are therefore evaluated again, following the procedures described in step 1 (but only for the anchor items), in order to exclude any additional items with DIF and to finalize the anchor set. At each step of the purification process, ability estimates (θ) are based on the anchor set used at that stage; the studied item is also included in the theta estimate. As an example, for the gender comparisons, 10 anchor items were originally identified; at the stage 2 confirmation step, two additional items with DIF were removed from the anchor set; these are shown in Table 2.
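A minimal sketch of the purification loop, under the assumption that it repeats until no further anchor is flagged. The callable `has_dif(item, anchors)` is a hypothetical wrapper around a model comparison such as the one in step 1; in practice it would refit the IRT models with the given anchors. The toy version below simply flags two fixed items, mirroring the gender example in which 10 candidate anchors were reduced to 8.

```python
def purify_anchors(candidates, has_dif):
    """Re-test each candidate anchor against the other current anchors until none is flagged.

    has_dif(item, anchors) is a hypothetical callable returning True if the item shows DIF
    when the listed items are used as the anchor set.
    """
    anchors = list(candidates)
    while True:
        flagged = [item for item in anchors
                   if has_dif(item, [x for x in anchors if x != item])]
        if not flagged:
            return anchors
        anchors = [x for x in anchors if x not in flagged]

# Toy stand-in for the model comparison: two fixed items always show DIF.
CONTAMINATED = {"q2", "q5"}
toy_has_dif = lambda item, anchors: item in CONTAMINATED

final_anchors = purify_anchors([f"q{i}" for i in range(1, 11)], toy_has_dif)
```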

Final DIF detection

3. After the anchor item set is defined, all of the remaining (non-anchor) items are evaluated for DIF against this anchor set. Some items identified as having DIF in earlier stages of the analyses can convert to non-DIF with the use of a purified anchor; however, these converted items are not added to the anchor pool for further iterative purification. At this point in the analyses of the general distress item set, one non-anchor item was no longer found to have DIF. Items with G2 values indicative of DIF at this last stage are subject to adjustment of p values for multiple comparisons, in order to reduce over-identification of items with DIF. For this example, the Bonferroni method was used: the nominal significance level is divided by the number of items, and an item is flagged only if its p value falls below this adjusted level.

Final parameter estimation and adjustment for multiple comparisons

4. The final model for a studied scale was estimated using MULTILOG, and all items were included in this model. In this final model specification, parameter estimates for the anchor items, as well as for items in which no DIF was observed, are set to be equal across groups (using a command for all equal or fixed); for the items exhibiting DIF in either the a or b parameters, item parameters are estimated as different (freed) for the two groups. Specifically, if the DIF is only in the a parameter, the a is estimated as different, together with the b’s. (As explained above, IRTLRDIF tests the b parameters with the a constrained to be equal; thus, once DIF in the a parameter is found to be significant, no further test of the b parameter(s) is performed, and the b parameter(s) are set to be different.) If the DIF is in the b parameter, only the b parameter is estimated as different.
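The final-model rule of step 4 can be written as a small decision function. It records only which parameters are set equal or freed across groups; it is not MULTILOG syntax, and the function name and labels are hypothetical.

```python
def final_spec(dif_in, n_categories):
    """Group-specific ("free") vs. common ("equal") parameters for one item in the final model.

    dif_in: None for anchors and items without DIF, "a" for DIF in the slope,
    "b" for DIF in the threshold(s) only.
    """
    n_b = n_categories - 1
    if dif_in is None:
        return {"a": "equal", "b": ["equal"] * n_b}
    if dif_in == "a":
        # The b's were never tested once a differed, so they are freed along with a
        return {"a": "free", "b": ["free"] * n_b}
    if dif_in == "b":
        return {"a": "equal", "b": ["free"] * n_b}
    raise ValueError("dif_in must be None, 'a' or 'b'")
```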

The final parameter estimates and their standard errors were obtained from applications of MULTILOG. Theta estimates at this point are based on the entire item set with parameters estimated as described above. These thetas can be used in the evaluation of DIF magnitude and impact, described below. An area for study is the identification of the best theta estimate for use when individual ability estimates are to be used, e.g., in computerized adaptive testing or for construction of a “DIF-free” theta estimate for use in analyses of relationships among variables.

Evaluation of DIF magnitude

5. Following these analyses, graphs of item response functions are useful in examining magnitude of DIF. The magnitude of DIF refers to the degree of difference in item performance between or among groups, conditional on the trait or state being examined. Examination of the magnitude of DIF has been based on evaluation of theoretically invariant parameters or statistics flowing from a model, such as the odds ratio.

Expected item scores can be examined as measures of magnitude. An expected item score is the sum of the weighted (by the response category value) probabilities of scoring in each of the possible categories for the item.
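As a sketch of the expected item score for a graded item, assuming categories are scored 0, 1, ..., m − 1 (other category values can be passed in explicitly): the category probabilities are obtained as differences of adjacent cumulative curves, and each is weighted by its category value. Function names and parameter values are hypothetical.

```python
import math

def grm_category_probs(theta, a, bs):
    """Graded response model category probabilities; bs are increasing thresholds."""
    cum = [1.0] + [1.0 / (1.0 + math.exp(-a * (theta - b))) for b in bs] + [0.0]
    return [cum[k] - cum[k + 1] for k in range(len(bs) + 1)]

def expected_item_score(theta, a, bs, values=None):
    """Sum of category values weighted by their probabilities (default values 0..m-1)."""
    probs = grm_category_probs(theta, a, bs)
    if values is None:
        values = range(len(probs))
    return sum(v * p for v, p in zip(values, probs))

# A binary item scored 0/1: the expected score equals the endorsement probability.
es_binary = expected_item_score(0.0, 1.2, [0.0])   # 0.5 at theta = b
```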

6. A method for quantifying the difference in the average expected item scores is the non-compensatory DIF (NCDIF) index (the average squared difference in expected item scores for a given individual as a member of the focal group and as a member of the reference group) used by Raju and colleagues [16]. (See also Chang and Mazzeo [35], who demonstrated that items with identical IRFs or expected scores have equivalent item category response functions under certain polytomous response models, including the graded response model used here. The implication of this work is that the IRF invariance assumptions that permit DIF detection generalize from binary items to some of the more commonly used polytomous response models.)

In essence, this method estimates the expected score an individual would obtain if s/he were scored based on the parameters and ability estimates for group X, and then based on the ability and parameter estimates for group Y. (See the Appendix.) The advantage of this magnitude measure is that NCDIF is based on the actual distribution of individual estimated thetas, rather than on an arbitrary range of ability. While chi-square tests of significance are available, these were found to be too sensitive, over-identifying DIF. Cutoff values established through simulations [36, 37] provide an estimate of the magnitude of item-level DIF. For example, for dichotomous items the NCDIF cutoff is 0.006; for polytomous items with three response options it is 0.024; for four response options, 0.054; for five, 0.096; and for six, 0.150. Use of this method requires that thetas be estimated separately for each group, and equated together with the item parameters prior to calculation of expected item scores. (Equating constants are purified iteratively if DIF is detected.)
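A minimal sketch of the NCDIF calculation for one item, assuming that the focal-group thetas and both sets of item parameters are already on a common metric. The expected score uses the graded-model identity E[X] = Σ P(X ≥ k) for categories scored 0 to m − 1; because group differences in expected scores do not change when all category values are shifted by a constant, the choice of 0-based scoring does not affect NCDIF. The cutoffs are those quoted above; function names are hypothetical and this is not DFITP5’s code.

```python
import math

# NCDIF cutoffs quoted in the text, keyed by number of response options.
NCDIF_CUTOFF = {2: 0.006, 3: 0.024, 4: 0.054, 5: 0.096, 6: 0.150}

def expected_score(theta, a, bs):
    """Graded-model expected score, categories scored 0..m-1: sum over k of P(X >= k)."""
    return sum(1.0 / (1.0 + math.exp(-a * (theta - b))) for b in bs)

def ncdif(focal_thetas, focal_params, reference_params):
    """Mean squared gap in expected item score, evaluated at each focal-group member's theta.

    focal_params and reference_params are (a, [b_1, ..., b_(m-1)]) on a common metric.
    """
    gaps = [expected_score(t, *focal_params) - expected_score(t, *reference_params)
            for t in focal_thetas]
    return sum(g * g for g in gaps) / len(gaps)

def exceeds_cutoff(value, n_options):
    return value > NCDIF_CUTOFF[n_options]

# Hypothetical binary item: focal b shifted by 0.5, one focal respondent at theta = 0.
v = ncdif([0.0], (1.0, [0.5]), (1.0, [0.0]))   # about 0.015, above the 0.006 cutoff
```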

Evaluation of impact of DIF

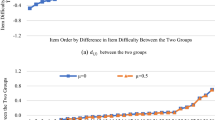

7. Expected item scores (see Fig. 3) can be summed to produce an expected scale score, which provides evidence regarding the effect of the DIF on the total score (see Fig. 4). Group differences in these test response functions provide measures of impact.

Methods

Measures

Twenty-three physical functioning ability items and 15 general distress items were analyzed. These items were selected from a larger item set taken from four measures described elsewhere in this special issue and in the companion paper. The process by which the items were selected included exploratory and confirmatory factor analyses; these methods are described elsewhere in this special issue. The 23 physical function items were scored in the positive direction, so that a high score indicated better physical function. The 15 general distress items were also scored in the positive direction, so that a high score was indicative of positive affect.

Sample

Data were collected as part of the Quality of Life Evaluation in Oncology Project funded by the National Cancer Institute (RO1 CA 60068, David Cella PI). The study sample consisted of patients with cancer or HIV/AIDS. Data were analyzed with respect to age, gender and race. The sample sizes used in the analyses shown in the Figures and Tables were 236 African-Americans and 1324 Whites, 719 females and 914 males, and 1183 younger (less than 66 years of age) and 449 older subjects.

Software

The software used was IRTLRDIF, developed by Thissen [14] and available on his website, and MULTILOG (Thissen [13]). The IRTLR approach to DIF detection is discussed in Thissen et al. [38]. IRTLRDIF can be used for the analyses performed in the first steps, followed by application of MULTILOG.

Follow-up examination of magnitude of item-level DIF was conducted using expected item scores and area statistics. These expected scores can be plotted for different values of theta using software such as EXCEL (see Fig. 3 and the Appendix).

Additionally the non-compensatory DIF index of Raju (Raju and colleagues [16]; Flowers and colleagues [17]) contained in DFIT (Raju [39]) was examined. (See also Collins et al. [40] and Morales and colleagues [41] for examples.) In order to assess DIF magnitude, Raju’s program DFITP5 was used. To run this program, it is necessary to run MULTILOG separately for the two groups under study, and then to place the parameter estimates for the two groups on the same metric. (When thetas and item parameters are obtained separately for each group, they have to be equated in order to be on the same metric scale. Equating is performed iteratively; originally no DIF is assumed; however, if DIF is detected, the item showing DIF is excluded from the equating algorithm.) For this purpose, Baker’s EQUATE program [42] was used in an iterative fashion. In the first run, all items in the scale were used as the anchor set. Next, the program DFITP5 was run, and those items with values above the recommended cut-off for NCDIF were excluded from the anchor set for the next run of the EQUATE program. The equating constants resulting from this second run of EQUATE were the ones used for the final run of DFITP5, to evaluate DIF magnitude.
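Equating places the separately estimated parameters on one metric. Baker’s EQUATE program implements its own equating procedure, which is not reproduced here; as a simpler stand-in, the sketch below uses mean/sigma linking on the b parameters, with an option to exclude items flagged for DIF, mirroring the iterative exclusion described above. The data and function names are hypothetical.

```python
from statistics import mean, pstdev

def mean_sigma_constants(b_source, b_target, exclude=()):
    """Mean/sigma linking constants (A, B) such that b_target is about A * b_source + B.

    Items whose indices are in `exclude` (e.g., flagged for DIF) are left out.
    """
    skip = set(exclude)
    keep = [i for i in range(len(b_source)) if i not in skip]
    src = [b_source[i] for i in keep]
    tgt = [b_target[i] for i in keep]
    A = pstdev(tgt) / pstdev(src)
    B = mean(tgt) - A * mean(src)
    return A, B

def to_target_metric(a, b, theta, A, B):
    """Rescale a source-group slope, threshold and ability onto the target metric."""
    return a / A, A * b + B, A * theta + B

# Hypothetical thresholds from separate calibrations; the last item has DIF.
b_focal = [0.0, 0.5, 1.0, 1.5, 0.2]
b_reference = [-1.0, 0.0, 1.0, 2.0, 3.0]

A_all, B_all = mean_sigma_constants(b_focal, b_reference)         # first pass: all items
A, B = mean_sigma_constants(b_focal, b_reference, exclude=(4,))   # re-run without the DIF item
```

Excluding the DIF item recovers the linear relation shared by the other four items (A = 2, B = −1), whereas the first pass is distorted by it.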

Impact of DIF on the total score was examined using test response functions. The method for integration of magnitude and impact measures with significance testing is an area requiring further research.

Results

Example of IRTLR using items measuring physical functioning and general distress

Tables 1 through 6 show the final result for the physical function and general distress item sets. The tables show the anchor items without DIF, and the studied items with separately estimated parameters for the two groups. This result represents the final analyses, so that if no new DIF was observed in any of the prior iterative purification stages, the a′s are estimated as the same. Tables 7 and 8 show the summary results, including the analyses of magnitude. Figures 5 through 9 show the expected item and scale scores for items that were significant after Bonferroni correction, depicting DIF magnitude and impact, respectively.

Physical function

As shown in Table 1, prior to adjustment for multiple comparisons, 13 anchor items were identified and 10 items showed DIF with respect to race (summarized in Table 7), three of them with non-uniform DIF. (One item, “walk one block”, was borderline, p = .051.) After adjustment, six items showed DIF (Table 1), four with relatively higher magnitude (NCDIF: expected item score difference values above cutoff) (Table 7). Specifically, after the adjustment, the six items that evidenced uniform DIF were “trouble with a long walk”, “lack of energy”, “able to work”, “vigorous activities”, “lifting or carrying groceries” and “walk more than a mile”. The item “walk one block” also showed significant non-uniform DIF using IRTLRDIF prior to, but not after, the Bonferroni correction for multiple comparisons. Four of these items evidenced a relatively large magnitude of DIF: “long walk”, “lack of energy”, “vigorous activity” and “lifting or carrying groceries”. Most of these items were more severe indicators for White than for African-American respondents; the exception was “lifting or carrying groceries”, which was a more severe indicator for African-Americans. For example, the expected item scores (Fig. 5) show that for most items the solid curve for Whites is below the curve for African-Americans, indicating that, conditional on functional status, White respondents are on average less likely to respond that they are capable of performing the task. The reverse pattern is observed for the curve for “lifting or carrying groceries”.

The analysis based on gender initially identified 8 anchor items and 15 items with DIF (Table 2); after purification, 12 items with DIF were identified, all with uniform DIF. After adjustment, four items with uniform DIF were identified: items 3, 11, 12 and 16 (“difficulty with personal care”, “short of breath”, “lack of energy” and “problems lifting or carrying groceries”; see Table 7). None of these evidenced DIF of high magnitude. (One item, “strenuous activities”, identified before the Bonferroni correction as evidencing uniform DIF, was also identified as having higher magnitude DIF; however, its value was just over the threshold.) As shown in Fig. 6, most of these items were more severe indicators for males than for females; the exception was “lifting or carrying groceries”, which was a more severe indicator for females. For this item, on average, females need somewhat more physical capability than males to report that they have little difficulty “lifting or carrying groceries”.

Numerous items (14 of 23) evidenced DIF with respect to age, even after the Bonferroni adjustment (see Table 7); however, only three were of high magnitude: “limited in hobbies, leisure activities”, “vigorous activities”, and “bending, kneeling and stooping”. The direction of DIF was mixed: some items (e.g., “vigorous activities”) were more severe indicators for older persons, and others (e.g., “able to work”) for younger persons. Note that “bending, kneeling, stooping” showed non-uniform DIF for age and was a relatively poor discriminator for younger people (a = .33) compared with older persons (a = 1.78); that is, the item was only weakly related to physical function among younger people. Similarly, “shortness of breath” was not a well-discriminating item in general.
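The practical meaning of the contrast between a = .33 and a = 1.78 can be seen from the slope of a boundary response function. Writing P*(θ) for the boundary probability of the logistic form used in the Appendix (assumed here to carry no 1.7 scaling constant), its slope is greatest at θ = b, where it equals a/4:

\[
\frac{d}{d\theta}\,\frac{1}{1+\exp[-a(\theta-b)]} = a\,P^{*}(\theta)\,[1-P^{*}(\theta)] \le \frac{a}{4},
\qquad
\text{younger: } \frac{.33}{4} \approx .08,
\qquad
\text{older: } \frac{1.78}{4} \approx .45 .
\]

Under this sketch, the cumulative response probability changes at a rate of at most about .08 per unit of θ for younger respondents, compared with up to about .45 for older respondents, so responses to the item carry much less information about physical function in the younger group.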

Based on our prior experience examining DIF in health-related applications, the DIF in the physical function item set was of relatively low magnitude for race and gender; somewhat more DIF was evidenced with respect to age. About 60% of the items showed age DIF, even after Bonferroni correction, and it was difficult to obtain an anchor set. Originally, three items without DIF were identified, and several iterations were necessary to obtain a final anchor set: further testing indicated that all three of these items evidenced DIF, and a different three-item anchor set was produced. Because the DIF was in different directions, it cancelled out overall at the scale level; however, use of individual items outside the context of the scale, for example in computerized adaptive testing, could be problematic for individual assessment. Evaluation of the impact of DIF using the test response functions (shown in Fig. 4 for race, Fig. 6 for gender, and Fig. 7 for age) indicates that the impact of DIF on the test score is trivial.

General distress

Examination of the general distress item set for DIF based on race showed that six anchor items were initially identified (see Table 4). Eight of the 9 items originally identified with DIF still evidenced DIF after purification, but before Bonferroni correction. After correction only two showed DIF, both uniform: “worry about dying” and “felt worried” (see Table 8). Neither item demonstrated high magnitude DIF. Although the direction was mixed, the indicators were somewhat more severe for African-Americans than for Whites, indicating that more positive mental health was required for endorsement of the item at most response levels. (However, inspection of Fig. 8 shows that the difference was small.) Seven anchor items were used in the analyses of gender DIF (see Table 5). After purification, six of 15 items showed DIF for gender; however, only two were significant after Bonferroni adjustment (“able to enjoy life” and “content with my quality of life”), and neither demonstrated high magnitude DIF. As shown in Fig. 8, the indicators were more severe for men. (This can also be seen in Table 5, where the b (severity) parameters are higher for males than for females.)

Age comparisons identified seven items with DIF in the first iteration (see Table 6) and six after purification but before correction (Table 8); all had uniform DIF except “content with my quality of life”. After correction, two items showed uniform DIF: “worry about dying” and “felt calm and peaceful”. “Worry about dying” was a more severe indicator for the younger cohort. Items that discriminated less well than others for most groups were “content with my quality of life”, “worry about dying” and, for women, “able to enjoy life”. The magnitude of DIF was not large, and the impact was trivial (Figs. 8, 9).

Summary of findings: The analyses presented above were intended to illustrate the IRTLRDIF procedures and the calculation of magnitude and impact measures. Substantively, the DIF in the item sets was of relatively small magnitude. Examination of the expected item scores and calculation of NCDIF for the race comparison identified four items of higher magnitude: three related to mobility and physical functioning (“trouble with a long walk”, “vigorous activities” and “lifting or carrying”) and “lack of energy”. Four items were identified with gender DIF after adjustment for multiple comparisons, none of high magnitude. Three items showed higher magnitude DIF for the age comparisons: “limited in hobbies, leisure activities”, “vigorous activities” and “bending, kneeling, stooping”. In total, six items showed relatively larger magnitude of DIF with respect to physical function across the three comparisons: “trouble with a long walk” (race), “vigorous activities” (race, age), “bending, kneeling, stooping” (age), “lifting or carrying groceries” (race), “limited in hobbies, leisure” (age), and “lack of energy” (race). None of the general distress items evidenced high magnitude DIF, although “worrying about dying” showed some DIF with respect to both age and race after adjustment.

Discussion

The fact that many physical function items showed DIF with respect to age, even after Bonferroni adjustment, indicates that the instrument may be performing differently for these groups. While the magnitude and impact of DIF at the item and scale levels were minimal, caution should be exercised in the use of subsets of these items, as might occur with selection for clinical decisions or for computerized adaptive testing. In the companion paper, Crane and colleagues [1] also found that the impact of DIF was small; however, they concluded that DIF related to race on the General Distress scale could affect some individuals.

Comparison of OLR and IRTLR results

The findings from the two analyses (OLR and IRTLR) of the General Distress scale agree in terms of the number of items identified before and after adjustment, and with respect to DIF magnitude; however, there is some disagreement about which individual items were identified. Using the OLR method, six items were identified as having DIF based on significance tests after Bonferroni correction; using the IRTLR approach, five items were observed to have DIF after Bonferroni correction. After DIF magnitude was considered, the OLR method identified two items with DIF: “worry about dying” and “content with quality of life”. These two items were also identified with DIF using the IRTLR approach; however, none of the items evidenced high magnitude DIF using the DFIT NCDIF criteria.

Crane and colleagues [1] identified 14 items with DIF in the Physical Functioning scale using significance tests with Bonferroni adjustment; the IRTLR approach identified 16. Incorporating a DIF magnitude measure into the OLR modeling procedure resulted in the identification of five items. Use of the DFIT magnitude adjustment in the context of the IRT approach reduced the number of identified items to seven; however, only two items were common to both methods: “limited in hobbies” and “bending, kneeling, stooping”. Both sets of analyses demonstrated minimal impact of DIF; however, Crane and colleagues [1] observed that there could be DIF impact associated with race on the General Distress scale for some individuals.

Caveats: As with all parametric models, lack of model fit can result in errors in DIF detection, as can inadequate purification in the selection of anchor items. In addition, although not discussed here, a necessary first step is examination of dimensionality. If the assumption of unidimensionality (made by most IRT models used in DIF detection) is not met, DIF detection will be inaccurate. While extensive tests of dimensionality were conducted, and the item sets were selected to be essentially unidimensional, an open question remains: how unidimensional must an item set be for IRT DIF methods to be appropriate? Typically, violations of assumptions or poor model fit will lead to false DIF detection; it is therefore important to select the correct model before applying DIF methods. As mentioned above, the selection of anchor items and the criteria for DIF detection, including the integration of significance and magnitude measures, remain issues requiring investigation. Additionally, which purified theta is best to use requires study. Further research is needed regarding the criteria and guidelines appropriate for DIF detection in the context of health-related items. Different magnitude measures and procedures for flagging salient DIF may have contributed to the discrepancies in DIF detection between the two methods; further simulation studies are needed. Despite these caveats, the IRTLR method has been used frequently to detect DIF in educational and psychological assessment measures, and as such is a relatively mature method. While many DIF detection methods exist, both methods presented in these two companion papers can be recommended for the evaluation of health and quality-of-life measures, because both allow the identification of non-uniform DIF, which may be of concern in such measures.

References

Crane, P. K., Gibbons, L. E., Ocepek-Welikson, K., Cook, K., Cella, D., Narasimhalu, K., Hays, R., & Teresi, J. (2007). A comparison of two sets of criteria for determining the presence of differential item functioning using ordinal logistic regression (this issue).

Swaminathan, H., & Rogers, H. J. (1990). Detecting differential item functioning using logistic regression procedures. Journal of Educational Measurement, 27, 361–370.

Zumbo, B. D. (1999). A handbook on the theory and methods of differential item functioning (DIF): Logistic regression modeling as a unitary framework for binary and Likert-type (ordinal) item scores. Ottawa, Canada: Directorate of Human Resources Research and Evaluation, Department of National Defense. Retrieved from http://www.educ.ubc.ca/faculty/zumbo/DIF/index.html.

Crane, P. K., van Belle, G., & Larson, E. B. (2004). Test bias in a cognitive test: Differential item functioning in the CASI. Statistics in Medicine, 23, 241–256.

Teresi, J. A., Stewart, A. L., Morales, L., & Stahl, S. (2006). Measurement in a multi-ethnic society. Special Issue of Medical Care, 44(Suppl. 3), S1–S210.

Teresi, J. A. (2006). Different approaches to differential item functioning in health applications: Advantages, disadvantages and some neglected topics. Medical Care, 44, S152–S170.

Cole, S. R., Kawachi, I., Maller, S. J., Munoz, R. F., & Berkman, L. F. (2000). Test of item-response bias in the CES-D scale: Experiences from the New Haven EPESE study. Journal of Clinical Epidemiology, 53, 285–289.

Gallo, J. J., Cooper-Patrick, L., & Lesikar, S. (1998). Depressive symptoms of Whites and African-Americans aged 60 years and older. Journal of Gerontology, 53B, 277–285.

Mui, A. C., Burnette, D., & Chen, L. M. (2001). Cross-cultural assessment of geriatric depression: A review of the CES-D and GDS. Journal of Mental Health and Aging, 7, 137–164.

Fleishman, J. A., & Lawrence, W. F. (2003). Demographic variation in SF-12 scores: True differences or differential item functioning? Medical Care, 41(Suppl), 75–86.

Gelin, M. N., Carleton, B. C., Smith, M. A., & Zumbo, B. D. (2004). The dimensionality and gender differential item functioning of the Mini-Asthma Quality-of-Life Questionnaire (MINIAQLQ). Social Indicators Research, 68, 81.

Roorda, L. D., Roebroeck, M. E., van Tilburg, T., Lankhorst, G. J., Bouter, L. M., & Measuring Mobility Study Group (2004). Measuring activity limitations in climbing stairs: Development of a hierarchical scale for patients with lower-extremity disorders living at home. Archives of Physical Medicine and Rehabilitation, 85, 967–971.

Thissen, D. (1991). MULTILOG™ user’s guide: Multiple, categorical item analysis and test scoring using item response theory. Chicago: Scientific Software Inc.

Thissen, D. (2001). IRTLRDIF v2.0b; Software for the computation of the statistics involved in item response theory likelihood-ratio tests for differential item functioning. Available on Dave Thissen’s web page.

Camilli, G., & Shepard, L. A. (1994). Methods for identifying biased test items. Thousand Oaks, CA: Sage Publications.

Raju, N. S., van der Linden, W. J., & Fleer, P. F. (1995). IRT-based internal measures of differential functioning of items and tests. Applied Psychological Measurement, 19, 353–368.

Flowers, C. P., Oshima, T. C., & Raju, N. S. (1999). A description and demonstration of the polytomous DFIT framework. Applied Psychological Measurement, 23, 309–326.

Dorans, N. J., & Holland, P. W. (1993). DIF detection and description: Mantel-Haenszel and standardization. In P. W. Holland & H. Wainer (Eds.), Differential item functioning (pp. 35–66). Hillsdale, NJ: Lawrence Erlbaum Associates.

Dorans, N. J., & Kulick, E. (2006). Differential item functioning on the MMSE: An application of the Mantel Haenszel and Standardization procedures. Medical Care, 44 (Suppl. 3), S107–S114.

Simpson, E. H. (1951). The interpretation of interaction in contingency tables. Journal of the Royal Statistical Society (Series B), 13, 238–241.

Millsap, R. E., & Everson, H. T. (1993). Methodology review: Statistical approaches for assessing measurement bias. Applied Psychological Measurement, 17, 297–334.

Hambleton, R. K., Swaminathan, H., & Rogers, H. J. (1991). Fundamentals of item response theory. Newbury Park, California: Sage Publications Inc.

Lord, F. M. (1980). Applications of item response theory to practical testing problems. Hillsdale, New Jersey: Lawrence Erlbaum.

Lord, F. M., & Novick, M. R. (1968). Statistical theories of mental test scores. Reading, Massachusetts: Addison-Wesley Publishing Co.

Samejima, F. (1969). Estimation of latent ability using a response pattern of graded scores. Psychometrika Monograph Supplement No. 17. Richmond, VA: William Byrd Press.

Bonferroni, C. E. (1936). Teoria statistica delle classi e calcolo delle probabilità. Pubblicazioni del R Istituto Superiore di Scienze Economiche e Commerciali di Firenze, 8, 3–62.

Williams, V. S. L., Jones, L. V., & Tukey, J. W. (1999). Controlling error in multiple comparisons, with examples from state-to-state differences in educational achievement. Journal of Educational and Behavioral Statistics, 24, 42–69.

Benjamini, Y., & Hochberg, Y. (1995). Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society, Series B, 57, 289–300.

Steinberg, L. (2001). The consequences of pairing questions: Context effects in personality measurement. Journal of Personality and Social Psychology, 81, 332–342.

Thissen, D., Steinberg, L., & Kuang, D. (2002). Quick and easy implementation of the Benjamini-Hochberg procedure for controlling the false discovery rate in multiple comparisons. Journal of Educational and Behavioral Statistics, 27, 77–83.

Orlando-Edelen, M., Thissen, D., Teresi, J. A., Kleinman, M., & Ocepek-Welikson, K. (2006). Identification of differential item functioning using item response theory and the likelihood-based model comparison approach: Application to the Mini-mental status examination. Medical Care, 44, S134–S142.

Wang, W. C., Yeh, Y. L., & Yi, C. (2003). Effects of anchor item methods on differential item functioning detection with likelihood ratio test. Applied Psychological Measurement, 27, 479–498.

Orlando, M., & Marshall, G. N. (2002). Differential item functioning in a Spanish Translation of the PTSD Checklist: Detection and evaluation of impact. Psychological Assessment, 14, 50–59.

Teresi, J., Kleinman, M., & Ocepek-Welikson, K. (2000). Modern psychometric methods for detection of differential item functioning: Application to cognitive assessment measures. Statistics in Medicine, 19, 1651–1683.

Chang, H.-H., & Mazzeo, J. (1994). The unique correspondence of the item response function and item category response functions in polytomously scored item response models. Psychometrika, 59, 391–404.

Fleer, P. F. (1993). A Monte Carlo assessment of a new measure of item and test bias. [dissertation] Illinois Institute of Technology. Dissertation Abstracts International 54-04B, 2266.

Flowers, C. P., Oshima, T. C., & Raju, N. S. (1995). A Monte Carlo assessment of DFIT with dichotomously-scored unidimensional tests. [dissertation] Atlanta, GA: Georgia State University.

Thissen, D., Steinberg, L., & Wainer, H. (1993). Detection of differential item functioning using the parameters of item response models. In P. W. Holland & H. Wainer (Eds.), Differential item functioning (pp. 123–135). Hillsdale, NJ: Lawrence Erlbaum Inc.

Raju, N. S. (1999). DFITP5: A Fortran program for calculating dichotomous DIF/DTF [computer program]. Chicago: Illinois Institute of Technology.

Collins, W. C., Raju, N. S., & Edwards, J. E. (2000). Assessing differential item functioning in a satisfaction scale. Journal of Applied Psychology, 85, 451–461.

Morales, L. S., Flowers, C., Gutiérrez, P., Kleinman, M., & Teresi, J. A. (2006). Item and scale differential functioning of the Mini-Mental Status Exam assessed using the DFIT methodology. Medical Care, 44, S143–S151.

Baker, F. B. (1995). EQUATE 2.1: Computer program for equating two metrics in item response theory [Computer program]. Madison: University of Wisconsin, Laboratory of Experimental Design.

Cohen, A. S., Kim, S.-H., & Baker, F. B. (1993). Detection of differential item functioning in the graded response model. Applied Psychological Measurement, 17, 335–350.

Oshima, T. C., Raju, N. S., & Nanda, A. O. (2006). A new method for assessing the statistical significance in the differential functioning of items and tests (DFIT) framework. Journal of Educational Measurement, 43, 1–17.

Acknowledgements

The authors thank Douglas Holmes, Ph.D. for his review of several versions of this manuscript. The authors also thank three anonymous reviewers and the editor for their helpful comments related to an earlier version of this manuscript. These analyses were conducted on behalf of the Statistical Coordinating Center to the Patient Reported Outcomes Information System (PROMIS) (AR052177). Funding for analyses was provided in part by the National Institute on Aging, Resource Center for Minority Aging Research at Columbia University (AG15294), and by the National Cancer Institute through the Veteran’s Administration Measurement Excellence and Training Resource Information Center (METRIC). An earlier version of this paper was presented at the National Institutes of Health Conference on Patient Reported Outcomes, Bethesda, June, 2004.

Appendix

Illustration: Calculation of Boundary and Category Response Functions, Expected Item and Scale Scores and Non-compensatory Differential Item Functioning (DIF) Indices: Polytomous items with ordinal response categories

This appendix is an illustration of the calculation of several indices used in determining the presence, magnitude and impact of DIF using item response theory. Illustrations can also be found in Collins et al. [40]; Orlando-Edelen et al. [31]; Thissen et al. [38]; and Thissen [13, 14].

Boundary response functions: The graded response model of Samejima [25], which assumes ordinal categories, can be used to model polytomous items (see also Cohen et al. [43]). The model is based on a series of cumulative dichotomies, yielding cumulative probabilities of responding in a category or higher. One models the probability that a randomly selected individual with a specific level of physical functioning will respond in category k or higher. The boundary response function defines the cumulative probability of scoring in category k or higher:

\[ P^{*}_{ik}(\theta) = \frac{1}{1 + \exp[-a_i(\theta - b_{ik})]} \]

where \(a_i\) is the discrimination parameter of item i and \(b_{ik}\) is the threshold (severity) parameter for boundary k.

For an item with k categories there are k−1 such dichotomies. For a three-category item scored 0, 1, 2, the first cumulative dichotomy is between people who selected response 0 and those who selected response 1 or 2. The second cumulative dichotomy is between those who selected 0 or 1 and those who selected 2. To illustrate using the example shown in Fig. 2, calculations are presented for θ = −1.0.

The probability of category 0 or higher = 1 (because everyone scores 0 or higher).

For category 1: P(x = 1 or higher) = 1/{1 + exp[−a(θ −bk1)]}

For Whites: P(x = 1 or higher) = 1/{1 + exp[−3.53((−1)−(−.90))]} = .4127

For African-Americans: P(x = 1 or higher) = 1/{1 + exp[−2.64((−1)−(−1.19))]} = .6228

For category 2:

P(x = 2 or higher; here there is no higher category) = 1/{1 + exp[−a(θ − bk2)]}

For Whites: P(x = 2 or higher) = 1/{1 + exp[−3.53((−1)−(−.07))]} = .0360

For African-Americans: P(x = 2 or higher) = 1/{1 + exp[−2.64((−1)−(−.01))]} = .0683
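The boundary calculations above can be reproduced with a short Python sketch. The function name `boundary_prob` is illustrative, not part of IRTLRDIF or any other package; the a and b values are those used in the worked example. Because the parameters are reported to two decimals, some results differ from the text in the fourth decimal place (for example, the second White boundary comes out at about .0362 rather than .0360).

```python
import math


def boundary_prob(theta, a, b):
    """Graded response model boundary function: P(X >= k | theta) for threshold b = b_k."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


# Item parameters from the worked example (same item, two groups).
PARAMS = {
    "White": {"a": 3.53, "b": [-0.90, -0.07]},
    "African-American": {"a": 2.64, "b": [-1.19, -0.01]},
}

theta = -1.0
for group, p in PARAMS.items():
    # One cumulative probability per threshold: P(X >= 1), then P(X >= 2).
    cumulative = [boundary_prob(theta, p["a"], b_k) for b_k in p["b"]]
    print(group, [round(c, 4) for c in cumulative])
```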

Category response functions: The boundary formula above does not give the probability of responding in a specific category; to obtain this probability, the boundary probability for the next-higher category is subtracted. Boundary response functions are usually reported because they have a consistent form across levels; category response functions do not, and are more difficult to compare and interpret. Note also that for a binary item, the category response function for the second category (category 1 for an item coded 0, 1) is the same as the item response (boundary response) function.

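To obtain the category response probability P(x = k), subtract the probability of responding in a higher category. Writing \(P^{*}_{ik}(\theta)\) for the boundary (cumulative) probability of responding in category k or higher on item i, and \(P_{ik}(\theta)\) for the probability of responding in category k:

\[ P_{ik}(\theta) = P^{*}_{ik}(\theta) - P^{*}_{i,k+1}(\theta) \]

For k = 0: \(P_{i0}(\theta) = P^{*}_{i0}(\theta) - P^{*}_{i1}(\theta) = 1 - P^{*}_{i1}(\theta)\)

For k = 1: \(P_{i1}(\theta) = P^{*}_{i1}(\theta) - P^{*}_{i2}(\theta)\)

For k = 2: \(P_{i2}(\theta) = P^{*}_{i2}(\theta) - 0 = P^{*}_{i2}(\theta)\)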

For θ = −1.0:

For Whites: \(P_{i0} = 1 - .4127 = .5873\)

For African-Americans: \(P_{i0} = 1 - .6228 = .3772\)

For Whites: \(P_{i1} = .4127 - .0360 = .3767\)

For African-Americans: \(P_{i1} = .6228 - .0683 = .5545\)

For Whites: \(P_{i2} = .0360\)

For African-Americans: \(P_{i2} = .0683\)
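The subtraction step can be sketched in Python by padding the cumulative probabilities with 1 (for category 0 or higher) and 0 (beyond the top category) and differencing adjacent values. Function names are hypothetical, and the sketch assumes the thresholds are in increasing order, as the graded response model requires, so that every category probability is non-negative.

```python
import math


def boundary_prob(theta, a, b):
    """P(X >= k | theta) under the graded response model."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def category_probs(theta, a, thresholds):
    """P(X = k | theta) for k = 0..m-1, where m = len(thresholds) + 1.

    Cumulative probabilities are padded with P(X >= 0) = 1 and P(X >= m) = 0,
    and adjacent values are differenced. Assumes increasing thresholds.
    """
    cumulative = [1.0] + [boundary_prob(theta, a, b) for b in thresholds] + [0.0]
    return [cumulative[k] - cumulative[k + 1] for k in range(len(thresholds) + 1)]


white = category_probs(-1.0, 3.53, [-0.90, -0.07])
african_american = category_probs(-1.0, 2.64, [-1.19, -0.01])
print("White:", [round(p, 4) for p in white])
print("African-American:", [round(p, 4) for p in african_american])
# The differences telescope, so each set of category probabilities sums to 1.
print("Sums:", round(sum(white), 10), round(sum(african_american), 10))
```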

The category response functions (CRFs) do not share a common shape, because they are no longer cumulative. A person at a given theta level has a separate probability of responding in each category. The category response function gives the probability that a randomly selected individual at, say, θ = 0 (average ability) will respond in category k.

Computing expected item and test scores: Expected item and test (scale) scores can be computed for both dichotomous and polytomous items. Lord and Novick and Birnbaum [24, p. 386] introduced the notion of true scores in the context of IRT. A person’s true score is their expected score, expressed in terms of probabilities for binary items and in terms of weighted probabilities for polytomous items. The test characteristic curve described by Lord and Novick relates the true score (the expected test score) to theta.

For a dichotomous item scored 0 and 1, the expected or true score is simply the probability of scoring in the ‘1’ category given an individual’s estimated ability, \(P_i(\theta_s)\), where \(P_i(\theta_s)\) is the probability that subject s scores ‘1’ on item i.

(Here it is assumed that c (guessing) parameters are estimated at 0).

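For a polytomous item in graded response form, the expected score is the sum of the weighted probabilities of scoring in each of the possible categories for the item. For an item with 5 response categories coded 0 to 4, for example, the expected score \(ES_i(\theta_s)\) of subject s on item i would be:

\[ ES_i(\theta_s) = 0 \cdot P_{i0}(\theta_s) + 1 \cdot P_{i1}(\theta_s) + 2 \cdot P_{i2}(\theta_s) + 3 \cdot P_{i3}(\theta_s) + 4 \cdot P_{i4}(\theta_s) \]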

For a graded response item with k categories there are k−1 estimated b parameters, so in the example above four boundary probabilities are computed.

Recall that in the graded response model, the boundary response function defines the cumulative probability of scoring in category k or higher:

\[ P^{*}_{ik}(\theta_s) = \frac{1}{1 + \exp[-a_i(\theta_s - b_{ik})]} \]

Thus, the probability of scoring above category k must be subtracted out in order to obtain the probability of scoring in the category. (This was illustrated in the previous section.)

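For example, for a three-category item the probability of responding in category 1 is \(P_{i1}(\theta_s) = 1/\{1 + \exp[-a_i(\theta_s - b_{i1})]\} - 1/\{1 + \exp[-a_i(\theta_s - b_{i2})]\}\).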

As an example, the expected or true score for a person as a member of the African-American group, at a given θ, is the sum of the weighted probabilities of scoring in each of the possible categories, coded 0, 1, 2.

A true or expected score for an individual of mild disability (θ = −1.0) as a member of the White group would be:

\[ 0(.5873) + 1(.3767) + 2(.0360) = .4487 \]

A true or expected score for an individual of mild disability (θ = −1.0) as a member of the African-American group would be:

\[ 0(.3772) + 1(.5545) + 2(.0683) = .6911 \]
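The weighted sum can be sketched in Python by reusing the boundary and category calculations (function names are hypothetical). The sketch also checks a property of the graded model that follows from the subtraction step: the expected item score equals the sum of the boundary probabilities, e.g. .4127 + .0360 = .4487 for Whites at θ = −1.0.

```python
import math


def boundary_prob(theta, a, b):
    """P(X >= k | theta) under the graded response model."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def category_probs(theta, a, thresholds):
    """P(X = k | theta), obtained by differencing padded cumulative probabilities."""
    cumulative = [1.0] + [boundary_prob(theta, a, b) for b in thresholds] + [0.0]
    return [cumulative[k] - cumulative[k + 1] for k in range(len(thresholds) + 1)]


def expected_item_score(theta, a, thresholds):
    """Expected (true) item score: sum over categories of k * P(X = k | theta)."""
    return sum(k * p for k, p in enumerate(category_probs(theta, a, thresholds)))


es_white = expected_item_score(-1.0, 3.53, [-0.90, -0.07])
es_aa = expected_item_score(-1.0, 2.64, [-1.19, -0.01])
print(round(es_white, 4), round(es_aa, 4))

# Equivalent shortcut for graded items: the sum of the boundary probabilities.
shortcut = sum(boundary_prob(-1.0, 3.53, b) for b in [-0.90, -0.07])
print(abs(shortcut - es_white) < 1e-12)
```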

The expected test score for a subject with estimated ability θ is simply the sum of the expected item scores for that individual. Plots of expected scores against theta can then be constructed for given values of theta. Individual expected scores are used in the calculation of magnitude and impact indices discussed below.
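The expected test score can be sketched as a sum of expected item scores. The two-item parameter sets below are hypothetical, not estimates from this study: item 1 is constructed to be a more severe indicator for the focal group and item 2 a less severe one by exactly the same amount. This illustrates how DIF in opposite directions can cancel in the test response function, as described for the physical function scale, while remaining visible at the item level. Real DIF would rarely cancel this exactly.

```python
import math


def boundary_prob(theta, a, b):
    """P(X >= k | theta) under the graded response model."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def expected_item_score(theta, a, thresholds):
    # For graded items, the expected score equals the sum of the boundary probabilities.
    return sum(boundary_prob(theta, a, b) for b in thresholds)


def expected_test_score(theta, items):
    """Test response function: sum of expected item scores at a given theta."""
    return sum(expected_item_score(theta, item["a"], item["b"]) for item in items)


# Hypothetical parameters: in the focal group, item 1's thresholds shift up by 0.2
# and item 2's shift down by 0.2.
reference = [{"a": 1.5, "b": [-1.0, 0.0]}, {"a": 1.5, "b": [-0.8, 0.2]}]
focal = [{"a": 1.5, "b": [-0.8, 0.2]}, {"a": 1.5, "b": [-1.0, 0.0]}]

for theta in (-2.0, -1.0, 0.0, 1.0):
    item1_gap = (expected_item_score(theta, focal[0]["a"], focal[0]["b"])
                 - expected_item_score(theta, reference[0]["a"], reference[0]["b"]))
    test_gap = expected_test_score(theta, focal) - expected_test_score(theta, reference)
    print(f"theta={theta:+.1f}  item 1 gap={item1_gap:+.3f}  test gap={test_gap:+.3f}")
```

Each item shows a nonzero gap between groups, but the test-level gap is zero at every θ, which is why item-level use (for example in adaptive testing) can be affected even when scale-level impact is trivial.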

Computing Non-Compensatory Differential Item Functioning (NCDIF): Two measures developed by Raju and colleagues [16] are based on IRT (see also Flowers et al. [17]): compensatory and non-compensatory DIF, or CDIF and NCDIF. NCDIF is more similar to DIF indices such as the area statistics and Lord’s chi-square, in that it assumes that all items in the test other than the studied item are unbiased. CDIF does not make this assumption. The advantage of these measures over Lord’s chi-square and the area statistics, such as Raju’s signed and unsigned area statistics, is that they are based on the actual distribution of the ability estimates within the group for which bias is to be estimated, rather than on the entire theoretical range of theta. If, for instance, most members of the focal group fall within the range of theta from −1 to 0, rather than between −1 and +1 on the continuum, the area statistics will give an inaccurate estimate of DIF.

NCDIF is computed exactly like the unsigned probability difference of Camilli and Shepard [15]. For each subject in the focal group, two expected scores are computed: one based on the subject’s ability estimate and the estimated a, b and c parameters for the focal group, and the other based on the same ability estimate and the estimated a, b and c parameters for the reference group. Each subject’s difference score (d) is squared, and these squared differences are summed over all subjects (j = 1, …, n) and divided by n to obtain NCDIF.

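As an example, NCDIF for item i is the average squared difference between the true or expected scores for an individual (s) as a member of the focal group (F) and as a member of the reference group (R). Using the example shown above for a person at θ = −1.0, with expected item scores of .6911 under the African-American parameters and .4487 under the White parameters, and treating African-Americans as the focal group (the sign of d does not affect NCDIF):

\[ d_s = .6911 - .4487 = .2424, \qquad d_s^{2} = (.2424)^{2} \approx .0588 \]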

This squared difference is then summed across the n focal-group members and averaged:

\[ \mathrm{NCDIF}_i = \frac{1}{n} \sum_{s=1}^{n} d_s^{2} \]

This value is the NCDIF, which (as mentioned above) is also the unsigned probability difference (UPD) illustrated by Camilli and Shepard [15]. NCDIF is thus the average squared difference between the true (expected) item scores computed under the two groups’ parameters, and provides a measure of DIF magnitude. New methods for determining NCDIF cutoffs for binary items have recently been described [44].
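NCDIF for a single polytomous item can be sketched in Python as follows. Function names are hypothetical, the c parameters are taken as 0 as assumed above, and African-Americans are treated as the focal group. At θ = −1.0 the two expected item scores are about .691 (African-American parameters) and .449 (White parameters), so a single focal-group member at that θ gives d² ≈ .059. The last two calls use hypothetical, evenly spaced focal-group θ values in two different ranges to illustrate the point made above: NCDIF depends on where the focal group’s ability estimates actually lie, not on the full theoretical θ range.

```python
import math


def boundary_prob(theta, a, b):
    """P(X >= k | theta) under the graded response model."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def expected_item_score(theta, a, thresholds):
    # Graded response model: expected score = sum of boundary probabilities.
    return sum(boundary_prob(theta, a, b) for b in thresholds)


def ncdif(focal_thetas, focal_params, reference_params):
    """Mean over focal-group members of the squared difference between the expected
    item score under focal-group and under reference-group item parameters."""
    total = 0.0
    for theta in focal_thetas:
        d = (expected_item_score(theta, *focal_params)
             - expected_item_score(theta, *reference_params))
        total += d * d
    return total / len(focal_thetas)


def grid(lo, hi, n=201):
    """Evenly spaced hypothetical theta values in [lo, hi]."""
    return [lo + (hi - lo) * i / (n - 1) for i in range(n)]


focal = (2.64, [-1.19, -0.01])      # African-American parameters: (a, thresholds)
reference = (3.53, [-0.90, -0.07])  # White parameters

print(round(ncdif([-1.0], focal, reference), 4))            # one person at theta = -1.0
print(round(ncdif(grid(-1.5, -0.5), focal, reference), 4))  # focal group where DIF is large
print(round(ncdif(grid(0.5, 1.5), focal, reference), 4))    # focal group where DIF is small
```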

Cite this article

Teresi, J.A., Ocepek-Welikson, K., Kleinman, M. et al. Evaluating measurement equivalence using the item response theory log-likelihood ratio (IRTLR) method to assess differential item functioning (DIF): applications (with illustrations) to measures of physical functioning ability and general distress. Qual Life Res 16 (Suppl 1), 43–68 (2007). https://doi.org/10.1007/s11136-007-9186-4

DOI: https://doi.org/10.1007/s11136-007-9186-4