Abstract

This study examines whether inequality between universities in research output is increasing in the context of higher education reform in South Korea based on the New Public Management (NPM) regime. Recent reforms in higher education sectors around the world illustrate a number of characteristics of NPM, with performance-based funding standing out among them. Performance-based funding has raised several concerns, especially about unintended consequences of the reforms such as a widening gap in the research activities of universities. We provide an exploratory case study of the South Korean higher education system, where performance-based funding programs are pervasive, using a novel panel dataset comprising all the general four-year universities (n = 184) in 2009–2015. The descriptive analysis of the temporal trend of research output inequality among universities shows that the answer to whether the gap is widening depends greatly on the choice of inequality index. We report conflicting results between the ‘relative’ and ‘absolute’ inequality indices when applied to the dataset. Our findings are followed by a discussion of the measurements of inequality and their axioms regarding the institutional Matthew effect, which suggests that more consideration should be given to the nature of the data and the context.


1 Introduction

With the increasing contribution of academic research to innovation, universities are frequently touted as the key institution in the knowledge-based economy because of their capacity for generating knowledge, discovery, and invention. Given this, governments around the world have entered into an implicit social contract with universities, expecting them to take up functions beyond education, such as R&D and entrepreneurial activities (Altbach and Salmi 2011; Etzkowitz 2004), and providing financial support for these functions in the form of research funding. Yet, even though their importance as one of the central axes of the Triple Helix of innovation is recognized more than ever, universities are strained by increasing competition with one another and by the pressure to improve performance (Geiger 2004).

Higher education governance reforms inspired by New Public Management (NPM) and reinforced by university rankings are regarded as the main culprit of intensified tension between and within universities of different capacities and various functions (Münch 2016). In the name of public accountability and efficiency, the NPM-inspired reforms introduced around the world have drawn on competitive performance-based funding as an instrument to enhance university performance (Geuna and Rossi 2015; Hicks 2012; de Boer et al. 2007).Footnote 1 Under performance-based funding, most universities except a handful of elite institutions have come under strain from greater financial pressure, which has led to debates and concerns about the unintended consequences of NPM-inspired reforms in the higher education sector (Auranen and Nieminen 2010; Krücken 2014; Leišyte 2016; Münch 2013).

One of the debates on the impact of performance-based funding attracting the interest of a broad spectrum of scholars and policymakers is whether or not stratification and inequality between universities are intensifying (Myklebust 2016; Beerkens 2013; Hillman 2016; Jongbloed and Lepori 2015). Several studies have looked into this issue, for the sake of the description of the phenomenon itself (Lau and Rosen 2016), or due to its association with other issues, such as the allocative efficiency of funding (Zhi and Meng 2016) and a reproduction of social stratification (Davies and Zarifa 2012). Existing studies, however, tend to focus on inequality in terms of an allocation of financial resources, with only a few exceptions such as Halffman and Leydesdorff (2010), although the stratification of universities based on research performance inequality has been a critical topic.

This study takes the question posed by Beerkens (2013) as a starting point: “is competitive funding likely to concentrate research in fewer universities or perhaps, on the contrary, level the playing field?” Most previous studies have examined universities’ research input, as it is directly related to performance-based funding. It is also meaningful, however, to switch the focus from research input to output, because the latter is another core element of performance-based funding and its analysis can illustrate the actual structure of knowledge production. In an effort to answer whether competitive funding concentrates research in a few universities, this study examines the degree and trend of inequality in university research performance in South Korea, a country where performance-based schemes are pervasive at every level of government funding distribution.

Our exploratory analysis of the longitudinal trends of inequality in university research output utilizes a novel dataset containing all universities in Korea (n = 184) for 7 years (2009–2015) subject to governmental performance-based funding. It makes two significant contributions to the existing research. First, we use the publication count data considering the size of the universities and coauthored papers, which gives a more accurate account of the extent of research activities in each university. Second, our analysis refines the methods of describing the potential stratification of universities by employing different types of inequality indices. While this research does not explore the causal links underlying inequality in research performance among universities directly, it addresses one of the most important issues that need to be tackled in any causal analysis of university research performance gaps, namely, whether such gaps are widening or not.

One of the key results is the longitudinal pattern of inequality showing opposite trends depending on the index of inequality. Inequality in research output turns out to diminish over time when analyzed with a ‘relative’ inequality index widely used in previous studies, whereas it shows an upward trend when analyzed with an ‘absolute’ inequality index. The answer to whether a gap is widening among universities thus hinges on the measure of inequality chosen for analysis. While it may not be surprising to find different results with different indicators of inequality, it is notable that the existing literature has rarely employed the absolute inequality index which could give an opposite result.

Our supplementary analysis of the mobility of universities in the research output ranking over time, as well as of the strata of universities, helps to explain the contradictory findings between the two indices of inequality with a more detailed account of the research production structure. We dwell on these findings further by discussing the different features of the two indices of inequality and why the absolute index may be a more appropriate measure of inequality in the context of university performance comparison.

2 Literature review

2.1 Institutional Matthew effect of research performance

Discussions on inequality in the realms of scientific endeavors and practices are several decades old. Prior observations of the skewed distribution of scientific publications and citations (Lotka 1926; Price 1963) are often summarized as the ‘Matthew effect,’ a term coined by Robert K. Merton. Often expressed as ‘the rich get richer, the poor get poorer,’ the Matthew effect refers to the cumulative advantage that eminent scientists receive in the reward system of the scientific community in the form of prestigious reputation and high recognition, plentiful resources, and high productivity (Merton 1968). Empirical studies of the Matthew effect have found that inequality in publication counts grows as cohorts get older (Allison et al. 1982; Allison and Stewart 1974). Much effort has been made to unearth the various factors underlying the differential productivity of scientists, such as the effect on productivity inequality of incentive systems that directly reward publications (Kim and Bak 2016).

In addition to those studies examining the Matthew effect at an individual level, there has also been considerable interest in the Matthew effect at an institutional level. Research focusing on the institutional level has looked into the effects of prestigious institutional affiliation on the maintenance of high faculty productivity even with the control of the selection bias (Allison and Long 1990) and the increasing stratification of universities suspected to result from competitive pressures due to performance-based funding and university ranking (Münch 2013). Many studies of the institutional Matthew effect have found resource allocation among universities to be becoming more unequal or at least maintaining high skewness (Davies and Zarifa 2012; Lau and Rosen 2016; Wu 2015; Xie 2014). Notably, these studies tend to focus mostly on one side of the story, i.e., the inputs such as research funds or endowments.

More recently scholars have moved forward to touch upon the output side of the institutional Matthew effect, testing the effects of university stratification and inequality in resources, finding no evidence of positive externalities of stratification (Zhi and Meng 2016). Münch and Schäfer (2014) examined whether the UK succeeded in improving its universities in the world university rankings by adopting the performance-based funding in comparison with Germany that had a different funding scheme, finding that UK’s plan proved to be ineffective. While some of the studies of the institutional Matthew effect consider inequality in research output such as the one comparing the degree of inequality between research input and output which shows more skewed distributions in the input side (Shibayama 2011; Ville et al. 2006), there is very little research that directly addresses the development of inter-university inequality in research output.

Since the core of the Matthew effect as a cumulative advantage lies in the circular mechanism of reinforcement, where more input leads to more output which feeds back into more input, it is crucial to examine more closely the degree of inequality in research performance among universities in order to better understand the structure of university stratification. Furthermore, output measures such as publication counts are more direct indicators of performance than input measures like resources and thus form the very basis of performance-based funding. Yet only a few studies on the institutional Matthew effect deal with inequality among universities in research output.

Due to the scant amount of research and its varying contexts, it is difficult to derive a firm conclusion about the degree of the institutional Matthew effect in terms of research performance. Studies of the Australian case show conflicting results, with Ville et al. (2006) reporting decreasing inequality among universities and Beerkens (2013) finding a widening gap between them. Analyzing the top 194 US universities, Brint and Carr (2017) find inequality in publication numbers unchanged over time. A study of the Dutch case by Moed et al. (1999) finds a narrowing gap among Dutch universities from the 1980s to the 1990s. Halffman and Leydesdorff (2010) obtained a similar result for the Top 500 universities in the Academic Ranking of World Universities (ARWU), though each country showed a different trend over time. Given the dearth of previous research, our study explores the institutional Matthew effect of research as a basis for further discussion on how the research structure in the higher education sector has changed over time in the context of the recent NPM-driven performance-based funding system.

2.2 Korean higher education funding policy

In this study, we examine the Korean higher education reform as the case of the NPM-inspired performance-based funding scheme potentially exacerbating inequality among universities in research performance. As some scholars have pointed out, there are significant international differences and path-dependent patterns in the performance-based funding system, one of the most widespread instruments of NPM-based higher education reforms across the countries (Auranen and Nieminen 2010; De Boer et al. 2007; Davies and Zarifa 2012; Hicks 2012). This is no wonder given that NPM is not a theory or analytical method but rather an umbrella term including several characteristics (Broucker and De Wit 2015). In the following, we briefly provide an overview of the recent Korean higher education reform concentrating on how the funding policy is designed and implemented (see Yu (2014) for a comprehensive review of the reform).

It was the “May 31st Education Reform Plan” in 1995 in which the Korean government first introduced the NPM principles with competitive performance-based funding programs in higher education (Byun 2008). With this reform, performance-based funding programs started to take a greater portion of the total budget for higher education. The so-called ‘special purpose’ funding tied to performance, as opposed to ‘general purpose’ funding, increased progressively from the mid-2000s, finally becoming dominant in the allocation of funding for higher education by the late 2000s (Ban 2017; Kim 2014; Shin 2012). Hence, as its name indicates, the universities receiving the funding were held accountable for the proper use to achieve the designated purpose. This provides a reason that some of the recent studies examining the governance structure of Korean higher education characterize it as “decentralized centralization” in which the government gives autonomy to universities while controlling them with performance-based funding allocation (Shin 2011).

The Brain Korea 21 Program (BK21) undertaken during the years of 1999–2005 is noteworthy in this regard. As the first prominent competitive performance-based funding program, it is considered as a precursor for various funding programs that exist today. The Korean government utilized BK21 to induce Korean universities to pursue academic excellence and thereby transform them into research universities (Park and Leydesdorff 2010; Shin 2009).

While it was becoming a global phenomenon to use the massive funding to incentivize universities, the Korean government’s promotion of research universities was more strategic and goal-oriented (see Hur and Bessey 2013 for a comparison with Germany). One of the key features of BK21 was “concentration by selection,” echoed by successive funding programs such as the 2nd Phase BK21 (2006–2012), the World Class University Program (2008–2012, shortened as WCU) and the BK21 Plus Program (2013–2019, shortened as BK21+). These programs have all aimed at specific numeric targets such as the elevation of ten research universities into the World’s Top 200 (NABO 2011) or three elite universities into the World’s Top 30 (MEST 2010). They allocated government funding more favorably to the elite universities (Shin 2009).

These performance-based funding programs have wielded powerful influence since their introduction, with the Korean government directing more funding to top-tier universities. According to the National Research Foundation of Korea (NRF), the Korean government is the primary sponsor of university R&D activities (74.8%). Moreover, the top 20 universities account for 62% of the total public research funding distributed to universities, a share that has remained unchanged in the last 3 years. Furthermore, the list of those top 20 universities itself has been almost the same, with each year seeing at most one university changed on the list (NRF 2015).

This highly concentrated funding structure of universities since the introduction of performance-based funding programs may be characterized as “stratification by classification,” as specified in official government documents such as the Ten-Year Basic Plan for Higher Education Funding announced in 2010. This plan classifies universities into four tiers based on several parameters, including the size of external grants and SCI publications. Among about 200 general 4-year universities, the top tier of 20 universities would be cultivated into world-class universities, the second-tier of about 50 universities would be promoted to focus on industrial collaboration and education, the third-tier universities would be concentrating on lifelong education, and the last tier would be excluded from university funding programs and put on the list of merger or closure (MEST 2010a). This stratification by classification seems to have led to the “specialization by stratification,” as each tier has evolved to conduct functions of quite different foci. In such effort to assign specific roles to different groups of universities, the governmental reforms of higher education in South Korea have in a sense aimed to create inequality in research capacities among universities.

Such a stratified structure of university funding has raised serious concerns about inequality among universities, to the point that the institutional Matthew effect is considered a fait accompli by critics of the performance-based funding system (Ban 2017; Han and Kim 2017). Yet there is little empirical research on the effects of performance-based funding on inequality among South Korean universities, and even a description of the phenomenon has rarely been attempted with actual data.

2.3 Inequality measurements: relative versus absolute

Although our main focus is the inequality and stratification of research among universities, it is important to review some prior work on inequality measurement in economics, as much of the theoretical development on inequality relates to income inequality. Among the several issues in inequality measurement, we focus on the debate between relative and absolute inequality measures, as our research is interested in the temporal trend of inequality. In fact, all frequently used inequality measures can be categorized as either relative or absolute, with the measures in each category sharing similar characteristics, which means that the result is generalizable even if we use only one index for each category (Chakravarty and Lugo 2016).

The decades-long debate over whether relative or absolute indices are the proper measures of inequality starts from the recognition that the indices are not value-free. Rather, different normative assumptions are embedded in the different axioms of these summary measures of inequality (Kolm 1976). Relative inequality measures are based on the scale invariance axiom: they remain constant if the attribute of every entity changes by the same proportion. Absolute measures, meanwhile, follow the translation invariance axiom: adding the same absolute amount to the attribute of every entity does not affect inequality. In short, the absolute measure of inequality is size-dependent, whereas the relative measure is not. Thus, there is no inherent reason to expect the two to be correlated; in fact, they can be considered measures of different aspects of a distribution, with the absolute measure related to differences in the size of the entities under comparison and the relative measure related to differences in the relative positions of entities within the distribution.
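The two axioms can be checked numerically. The sketch below is illustrative only: it assumes the common definition of the absolute Gini as the mean pairwise absolute difference divided by two (equivalently, the relative Gini multiplied by the mean), and the sample values are hypothetical rather than taken from the dataset.

```python
def absolute_gini(values):
    """Absolute Gini: sum of all pairwise |y_i - y_j| divided by 2 * n^2 (assumed definition)."""
    n = len(values)
    total = sum(abs(a - b) for a in values for b in values)
    return total / (2 * n * n)


def relative_gini(values):
    """Relative Gini: the absolute Gini divided by the mean of the distribution."""
    mean = sum(values) / len(values)
    return absolute_gini(values) / mean


# Hypothetical publications-per-faculty figures for four universities.
y = [0.1, 0.2, 0.4, 0.9]
scaled = [2.0 * v for v in y]    # every university doubles its output
shifted = [v + 0.5 for v in y]   # every university adds the same amount

# Scale invariance: the relative index ignores equiproportional growth,
# while the absolute index doubles along with the values.
print(relative_gini(y), relative_gini(scaled))
print(absolute_gini(y), absolute_gini(scaled))

# Translation invariance: the absolute index ignores equal additions,
# while the relative index falls because the mean has risen.
print(absolute_gini(y), absolute_gini(shifted))
print(relative_gini(y), relative_gini(shifted))
```

Under these assumptions, the same change in a distribution can leave one index untouched while moving the other, which is why the two need not agree on the direction of a trend.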

As for income, relative inequality measures have dominated both academic and practical statistics for several reasons (Milanovic 2016, pp. 27–29). However, Amiel and Cowell (1999) and others have shown that a considerable number of people do not agree with the concept of relative inequality implied by the underlying axioms of the measurement, including the scale invariance axiom. As the two inequality measures have shown entirely opposite trends for global inequality, the debate seems to have been revitalized (Atkinson and Brandolini 2010; Niño-Zarazúa and Roope 2016; Ravallion 2014).

In contrast to the debate on relative versus absolute inequality in economics, research on the Matthew effect among scientists or universities has not shown much interest in the issue of inequality measurement. This is largely because much of the existing research on the Matthew effect follows the methodology of Paul D. Allison and his colleagues, pioneers of empirical research on the Matthew effect, which draws on relative inequality measures because their scale-invariant feature allows inter-disciplinary comparison of scientific productivity (Allison and Stewart 1974; Allison 1980). Thereafter, although the research was expanded to comparisons of inequality at the national, institutional, and individual levels, relative inequality indices such as the Gini coefficient and the modified coefficient of variation (Allison et al. 1982) continued to dominate studies of inequality.

This dominance of relative inequality indices was mainly due to the nature of the datasets used in previous studies, which was consistent with relative inequality. When analyzing inter-institutional inequality and stratification, for example, scholars tended to calculate the total number of publications from a university (Brint and Carr 2017; Halffman and Leydesdorff 2010; Moed et al. 1999; Sin 2011; Ville et al. 2006; Zheng et al. 2008). Such totals depend heavily on university size, and the relative inequality index corrects for this size effect through its scale invariance. However, when total publication counts are used to measure the gap between universities, they are of limited use for cross-institutional comparison, because the result is likely to reflect size differences rather than real differences in research performance. In addition, prior research on inequality among universities has not considered the fractional counting of multi-author publications, which can lead to potential bias (Stephan and Levin 1991).

In the following exploratory analysis, we examine whether inequality in research output among universities has been rising in recent years in South Korea. Our analysis complements previous research by applying both relative and absolute inequality indices, taking into account the size of universities, and using fractional counts of publications with multiple authors.

3 Data and methods

The data for our empirical investigation come from 184 universities in South Korea covering 7 years (2009–2015) available from the Korean Council for University Education (KCUE) under the Ministry of Education. The KCUE database on universities is utilized as a basis for the Ministry of Education to evaluate and accredit universities as well as to allocate funding with the performance-related criteria.

In order to measure inequality in research output, we use the most common output measure, publication counts per full-time faculty. Among the four categories of publications in the KCUE database,Footnote 2 the publications indexed in KCI (Korea Citation Index) and SCI/SCOPUS are used for this analysis, as they are the most critical indicators for performance-based research funding allocation in addition to being the most commonly used evaluation measure around the world (Geuna and Rossi 2015, Ch. 5). Because research output is calculated per faculty member rather than as the total number of publications of a university, which depends heavily on university size, this number can be regarded as the degree of research activity or research productivity of the corresponding university.

Also, we draw on the fractional counting of co-authored papers, which considers the differential contribution of each author: the contribution of a lead or corresponding author is counted as 2 / (n + 2) and that of other authors as 1 / (n + 2), with n being the total number of authors, capped at 15 for papers with more than 15 authors. Fractional counting is an important methodological improvement given the rise of collaborative research in recent years. While there is a concern that fractional counting disincentivizes research collaboration because it gives a smaller weight to individual contributions (Leydesdorff and Park 2017; Park et al. 2016), we use it both because it follows the official publication counting measure of KCUE and NRF and for two further reasons. First, fractional counting can ameliorate the problems of inflation and double counting in assessing the volume of publications (Stephan and Levin 1991). Second, as our analysis aims to measure institutional capacity rather than to gauge the degree of networking or linkages among researchers, fractional counting suits our analytical purpose.Footnote 3
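The weighting rule above can be sketched as follows. This is a minimal illustration rather than the official KCUE or NRF procedure: the function names are hypothetical, and the treatment of a paper's lead and corresponding authors as two distinct people is an assumption made so that the weights of a paper sum to one.

```python
from fractions import Fraction

AUTHOR_CAP = 15  # n is capped at 15 for papers with more than 15 authors


def author_weight(n_authors, is_lead_or_corresponding):
    """Weight of one author: 2 / (n + 2) for a lead or corresponding author, else 1 / (n + 2)."""
    if n_authors < 1:
        raise ValueError("a paper needs at least one author")
    n = min(n_authors, AUTHOR_CAP)
    numerator = 2 if is_lead_or_corresponding else 1
    return Fraction(numerator, n + 2)


def institution_share(n_authors, key_authors, other_authors):
    """Fractional count credited to an institution for one paper (hypothetical helper).

    key_authors: the institution's lead or corresponding authors on the paper.
    other_authors: the institution's remaining authors on the paper.
    """
    return (key_authors * author_weight(n_authors, True)
            + other_authors * author_weight(n_authors, False))


# A four-author paper with one lead and one corresponding author, all from one university.
print(institution_share(4, key_authors=2, other_authors=2))
# The same paper when the university employs only the lead author and one co-author.
print(institution_share(4, key_authors=1, other_authors=1))
```

Exact fractions are used so that the per-paper shares can be summed across a university's publications without rounding drift before dividing by the number of full-time faculty.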

South Korea has over 400 post-secondary institutions, which are classified into two types. One is general 4-year universities, and the other is various sorts of colleges including industrial colleges, teachers’ colleges, junior colleges, and cyber colleges. This analysis includes only the general universities and industrial colleges (n = 193), as their research output data are recorded by the accreditation system. The final number of universities in the dataset comes down to 184 excluding those institutions that were shut down or merged during our study period.

Given the longitudinal dimension of our dataset, we apply the following method to capture the temporal trend of inequality in research output among universities. We first calculate relative and absolute Gini coefficients for the distribution of each publication count for each year. There are two approaches to calculating the relative Gini coefficient. One is to sum all differences between entities as in Eq. (1), and the other is to calculate how far the overall distribution is from perfect equality as in Eq. (2). As the two formulae are equivalent (Kimura 1994), the choice would not make any differenceFootnote 4:

where n represents the number of universities, y is an attribute (i.e., publication counts) of each entity i and j, \(\mu\) is the mean value of each subset of data, and \(\phi\) is a cumulative sum of the attributes up to the i-th entity with the attributes of entities ordered in ascending order.Footnote 5
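For reference, one standard way to write the two formulations with the symbols defined above is sketched below. The exact form of the cumulative-sum expression and the definition of the absolute Gini coefficient as the mean times the relative coefficient are assumptions based on common usage, with \(\phi_i = \sum_{k=1}^{i} y_k\) computed on values sorted in ascending order.

\[
G_{\mathrm{rel}} = \frac{1}{2 n^{2} \mu} \sum_{i=1}^{n} \sum_{j=1}^{n} \left| y_i - y_j \right|
\]

\[
G_{\mathrm{rel}} = \frac{n + 1}{n} - \frac{2}{n^{2} \mu} \sum_{i=1}^{n} \phi_i
\]

\[
G_{\mathrm{abs}} = \mu \, G_{\mathrm{rel}} = \frac{1}{2 n^{2}} \sum_{i=1}^{n} \sum_{j=1}^{n} \left| y_i - y_j \right|
\]

As a quick check with \(y = (1, 3)\), so that \(n = 2\), \(\mu = 2\) and \(\phi = (1, 4)\): the first expression gives \(4 / 16 = 0.25\), the second gives \(1.5 - 10/8 = 0.25\), and the absolute coefficient is \(2 \times 0.25 = 0.5\).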

In addition to comparing the trends of university research output inequality with two Gini coefficients, we also check the mobility of each university’s ranking in the annual research performance per faculty. Since the Gini coefficient summarizes a distributional structure, it cannot identify the dynamics within the structure.Footnote 6 We make up for this limitation by examining the mobility of universities in terms of the university ranking in research output, which would show how universities are stratified and to what extent. Mobility is examined by the rank–rank slope, which is simply the regression coefficient of the rank for a given year on those of the previous year. Borrowed from Lau and Rosen (2016), this measure is often used to examine inter-generational (e.g., parent–children) mobility (Chetty et al. 2014). The rank–rank slope close to one implies near-perfect immobility of the structure, i.e., an indication of rigid stratification among universities.
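The rank–rank slope described above can be sketched in a few lines. The function names and sample values are hypothetical, and averaging the ranks of tied universities is an assumption, as the text does not say how ties are handled.

```python
def ranks(values):
    """Return 1-based ranks (1 = highest value); tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: -values[i])
    result = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the block while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        average_rank = (pos + end) / 2 + 1
        for k in range(pos, end + 1):
            result[order[k]] = average_rank
        pos = end + 1
    return result


def ols_slope(x, y):
    """Slope of the ordinary least squares regression of y on x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    return sxy / sxx


def rank_rank_slope(previous_year, current_year):
    """Regress this year's ranks on the previous year's ranks."""
    return ols_slope(ranks(previous_year), ranks(current_year))


# Hypothetical publications per faculty for five universities in two consecutive years.
previous = [0.90, 0.50, 0.30, 0.20, 0.10]
current = [1.00, 0.45, 0.50, 0.22, 0.12]
print(rank_rank_slope(previous, current))
```

A slope of one means that every university kept its position, while values closer to zero mean that last year's rank says little about this year's.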

In addition to the mobility of universities with regard to research performance indicators, we further explore the trends of these indicators for each stratum with the following procedure. First, we rank the universities for each indicator by summing 7 years of the data. Second, fixing the ranks and strata of each university, we calculate the slope of the over-time changes of each indicator for each university with the Ordinary Least Squares (OLS) method. The average values of the slopes are then compared across universities of different ranks and strata.
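The stratum procedure can be sketched as below. The panel values, university labels, and function names are hypothetical, and strata are assumed to be equal-sized groups cut from the ranking of summed output, with at least as many universities as strata.

```python
def ols_slope(x, y):
    """Slope of the ordinary least squares regression of y on x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    return sxy / sxx


def stratum_mean_slopes(panel, years, n_strata):
    """Average yearly growth slope per stratum, listed from the top stratum down.

    panel maps a university label to its list of yearly values (same order as years).
    """
    # Step 1: rank universities by their output summed over the whole period.
    ordered = sorted(panel, key=lambda u: sum(panel[u]), reverse=True)
    # Step 2: fit a separate time trend for each university.
    slopes = {u: ols_slope(years, panel[u]) for u in panel}
    # Step 3: average the slopes within each fixed stratum.
    size = len(ordered)
    means = []
    for s in range(n_strata):
        members = ordered[s * size // n_strata:(s + 1) * size // n_strata]
        means.append(sum(slopes[u] for u in members) / len(members))
    return means


# Hypothetical publications per faculty for four universities over three years.
years = [2009, 2010, 2011]
panel = {
    "U1": [1.00, 1.10, 1.20],
    "U2": [0.80, 0.85, 0.90],
    "U3": [0.20, 0.21, 0.22],
    "U4": [0.10, 0.10, 0.10],
}
print(stratum_mean_slopes(panel, years, n_strata=2))
```

Fixing the strata on the summed ranking before fitting the slopes keeps each group's membership constant, so a difference in average slopes reflects differential growth rather than universities moving between groups.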

4 Results

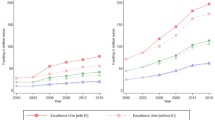

The first-cut results are presented in Table 1 and Fig. 1 in terms of descriptive statistics. Several points are worth noting. Although Korean universities publish more in domestic journals than in international journals, because publishing in domestic journals is relatively less competitive, they have succeeded in raising their visibility in global academia in recent years: the mean number of SCI publications per full-time faculty increased about 1.77-fold from 2009 to 2015. This prominent growth, compared with KCI publication trends that remained constant, indicates that Korean universities have been putting much more effort into SCI publications.

Also, some degree of inequality in the distribution of scientific publications among universities is readily visible in the two boxplots in Fig. 1. While the standard deviation of KCI publications has largely remained the same, the standard deviation of SCI publications shows significant growth, especially on the upper side, which suggests a more dispersed distribution.

Figure 2 shows the results on the relative and absolute Gini coefficients for each year of domestic and SCI publications. There are noteworthy findings from the trends of the Gini coefficients over time. First, there is greater inequality in international publications than in domestic publications among Korean universities with the relative Gini coefficient for the former hovering above 0.50 throughout the 7 years. Second, while both relative and absolute Gini coefficients remain nearly constant in the distribution of KCI publications, SCI publications show the opposite trends for the two inequality indices. The relative Gini coefficient has decreased, whereas the absolute Gini coefficients have increased over time, almost mirroring each other in the opposite direction. In the discussion section, we will delve into this result more at length.Footnote 7

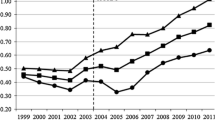

In Fig. 3, we plot the rank–rank slopes with their 95% confidence intervals. The closer the slope is to one, the more immobile the universities’ positions in the distribution are. Again, the same contrast between SCI and KCI publications is visible here. The slope coefficients are nearly 0.95 for SCI publications with a narrow confidence interval, whereas they are smaller for KCI publications. It is quite evident that there is strong immobility in the international research output ranking of universities compared with the domestic research output ranking. In other words, the structure of university research is highly stratified with respect to international publications, as universities rarely change their relative positions in the rankings of international publications.

As the rank–rank slope results above show, the rankings of universities by SCI publication counts remain largely constant, which makes it straightforward to fix the strata of universities. In Table 2, we track each stratum for SCI publications, whose ranking distribution seems to be largely fixed. Table 2 shows the average slope of publication counts over the period 2009–2015 for universities stratified into quintiles, quartiles, and certain top and bottom percentages. The results are very similar across the three calculations. The rate of growth in SCI publications is much higher in upper-strata universities. For instance, over the 7 years, the growth of SCI publications is 0.03 for the top 20% of universities but only 0.001 for the bottom 20%. This is indicative of a widening gap in universities’ research output. Together with the findings from the absolute Gini index, these results reveal an ongoing institutional Matthew effect in terms of publication counts in Korean higher education.

Integrating the results of the rank–rank slope and the trend of each stratum in Fig. 3 and Table 2, we can explain why the different inequality measures show opposite trends. To simplify the problem, let us treat the top 30% and the bottom 70% of Table 2c as University A and University B, respectively. University A’s faculty wrote 0.03 more papers on average over the 7 years, while University B’s faculty wrote only 0.01 more over the same period. The absolute gap thus grows by 0.02 publications, which increases absolute inequality. However, the same change means a reduction in relative inequality, because the percent change in research performance of University A’s faculty is smaller than that of University B’s faculty; the former published more than three times as much as the latter in the starting year of the study period.
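The two-university simplification can be worked through numerically. The growth figures of 0.03 and 0.01 come from the text; the starting levels of 0.40 and 0.10 are hypothetical values chosen only so that University A starts at more than three times University B, and the absolute Gini is assumed to equal the relative Gini times the mean.

```python
def two_unit_gini(a, b):
    """Return (relative, absolute) Gini coefficients for a two-entity distribution."""
    mean = (a + b) / 2
    # With n = 2, the sum of all pairwise |y_i - y_j| is 2 * |a - b|,
    # so dividing by 2 * n^2 = 8 leaves |a - b| / 4.
    absolute = abs(a - b) / 4
    relative = absolute / mean
    return relative, absolute


# Hypothetical starting levels (A is four times B); growth figures from the text.
start_a, start_b = 0.40, 0.10
end_a, end_b = start_a + 0.03, start_b + 0.01

rel_start, abs_start = two_unit_gini(start_a, start_b)
rel_end, abs_end = two_unit_gini(end_a, end_b)

print("percent growth A:", 100 * (end_a - start_a) / start_a)
print("percent growth B:", 100 * (end_b - start_b) / start_b)
print("relative Gini:", rel_start, "->", rel_end)
print("absolute Gini:", abs_start, "->", abs_end)
```

With these inputs the absolute coefficient rises while the relative coefficient falls, reproducing in miniature the opposite trends discussed above.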

5 Discussion

This study has explored the institutional Matthew effect in South Korean universities, motivated by growing concern about the unintended consequences of NPM-driven funding schemes for university research. Our descriptive analysis examined the degree of inequality in research output among 184 South Korean universities over 7 years (2009–2015). It is the first report on the Korean case, which is valuable given the small number of previous studies on the topic.

The main contributions of this paper are twofold. First, we used a dataset covering the research output of the 184 South Korean universities, which accounts for the size of each university and the co-authors of each publication. By using fractional publication counts per full-time faculty member, our analysis complements previous research that overlooked the proper adjustment of the data and the measurements. Second, we applied multiple inequality measures with different characteristics, namely the relative and absolute Gini coefficients. As Atkinson (2013) points out, a different subject of inquiry into inequality deserves a different measurement. Our paper brings this measurement issue to the study of research inequality among universities. One of its central findings concerns the contradictory results on how unequally publications in prestigious outlets such as SCI journals are distributed across universities. Different measures give different results not only in magnitude but also in direction: the relative and the absolute inequality measures show trends of opposite sign.

Which measure, then, is appropriate for tracking the trend of inequality in university research performance over time? Since there is nothing technically wrong with relative and absolute Gini coefficients showing different results, what we need to discuss is which measure (or which axiom) better reflects the nature of the data at hand.
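
The axioms at issue are scale invariance, which the relative Gini satisfies, and translation invariance, which the absolute Gini satisfies. The sketch below illustrates both with the standard definitions and hypothetical per-faculty counts. It is an illustration only, not the code used for the analysis.

```python
def absolute_gini(values):
    """Absolute Gini: half the mean absolute difference over all pairs."""
    n = len(values)
    total_diff = sum(abs(a - b) for a in values for b in values)
    return total_diff / (2 * n * n)


def relative_gini(values):
    """Relative Gini: absolute Gini divided by the mean."""
    return absolute_gini(values) / (sum(values) / len(values))


base = [0.10, 0.20, 0.40]            # hypothetical counts per faculty
scaled = [x * 1.1 for x in base]     # every university grows by 10%
shifted = [x + 0.05 for x in base]   # every university adds 0.05 papers

# Proportional growth: relative Gini unchanged, absolute Gini rises.
rel_change_scaled = relative_gini(scaled) - relative_gini(base)
abs_change_scaled = absolute_gini(scaled) - absolute_gini(base)

# Equal additions: absolute Gini unchanged, relative Gini falls.
abs_change_shifted = absolute_gini(shifted) - absolute_gini(base)
rel_change_shifted = relative_gini(shifted) - relative_gini(base)
```

Choosing between the two measures therefore amounts to deciding whether equal proportional growth or equal absolute growth should count as leaving inequality unchanged.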

In fact, there are equally legitimate reasons to use relative and absolute inequality indices in the current context of university performance evaluation. On the one hand, absolute inequality measures may be preferred to relative ones because of the typical S-curve growth of scientists’ research activities. Our fractional count of publications per faculty captures the average level of research activity per faculty member in a university more precisely. Because a professor cannot write dozens of papers in a single year on their own without collaboration, this fractional count cannot become extremely large. It is easy to increase publications by 10% at a low level of performance, but it becomes harder to do so at a high level. Even if resources are concentrated on the top universities, they cannot keep improving their quantitative research output proportionally, which implies that the scale invariance axiom is hard to apply to university publication data.

For instance, in our dataset, the rate of increase in publications of the most productive 10% of universities (0.0329) was smaller than that of the next 10% (0.0331). Growth already seems to be faltering in the top stratum. This is in line with other evidence showing that the returns to resources in the production of scientific research tend to decrease at the top universities (Shibayama 2011; Zhi and Meng 2016).

On the other hand, the scale invariance axiom can still apply to inter-university comparison for the same reason that Paul D. Allison pointed out. If each university specializes in different disciplines, then university publications reflect not only differences in institutional factors but also differences between disciplines. Fortunately, our dataset excludes several distinctive higher education institutions, such as the STEM-specialized KAIST and GIST, so we may not need to worry much about this possibility. Still, as long as universities show distinct disciplinary strengths, relative inequality measures may make sense.

On a final note, we need to take into account the specific context of Korean higher education reforms, epitomized by performance-based programs including BK21 and ensuing programs such as the WCU and BK21+. In addition to these programs, which mainly seek academic excellence and are thus highly skewed toward top-tier universities, the government has provided a different kind of program, still performance-based, for lower-tier universities, most notably the Leaders in Industry-university Cooperation Program (LINC), 2012–2016, now succeeded by LINC+.

Scholars of Korean higher education policy have raised serious concerns about performance-based funding programs such as BK21 and LINC. For one, the stratification of Korean universities, reinforced by performance-based funding, has resulted in quite different levels of institutional autonomy across strata. The very top-tier universities (about the top 10%), which benefit from excellence-driven programs such as BK21, enjoy more autonomy along with generous government funding. At the same time, the next-tier universities are increasingly pressured to shift their research policies in more pro-industry directions to obtain LINC funding, even if they aspire to be identified as research universities (Shin and Kim 2012; Lim 2015; Kwon 2012).

Universities below the very top tier but still in the first quarter of the distribution are in relatively favorable conditions because they can obtain funding from one or both of the main programs (BK21 or LINC). They can use those investments to improve their research, industry cooperation, and education capacity. However, their autonomy from the government is limited, as they have to compromise their policies and identities to some extent (Han 2015; Kim and Bak 2016; DGUGSPress 2013).

Lower-tier universities compete fiercely for the small amount of funding available from LINC or other minor funding programs. Many do not even apply for BK21 because they know that they do not meet its research performance requirements. As the most serious victims of the institutional Matthew effect and the performance-based funding system, they try to secure government funding by reshaping their identities to fit these programs. It is no wonder that these universities have very little institutional autonomy (Kwon 2013; Bae 2013; Park 2013).

In the age of government-funded science, the relationship between the government and universities is hardly symmetrical. This is all the more so in South Korea, which has long been governed under a developmental regime of heavy government involvement in almost every sector. No university is fully autonomous or independent from government funding policy, yet there are significant differences in how strongly universities perceive themselves to be deprived of institutional autonomy. The victims of the institutional Matthew effect in particular find it much harder to maintain their institutional identity and financial sustainability (Kim 2013; Park 2015; Lee and Choi 2016).

These concerns about the identity of lower-tier universities and the competitive pressures they face might explain the modest increase in their research output. Because scientific publications count in performance evaluation, these universities are forced to publish anyway. They cannot concentrate on research, however, because they can be sure of neither research funding nor an identity as a research institution. In the current situation, where the South Korean government evaluates universities by absolute counts of publications rather than by relative growth in publications, it makes more sense to use the absolute measure of inequality.

In short, the reality of unequal distribution of research output among the Korean universities is that universities are not competing on an equal footing but in a highly skewed distribution of resources, reputations, and institutional autonomy. The reality seems to be much closer to what we find from the trends calculated using the absolute Gini coefficient. Despite the overall growth, the mobility of universities in the ranking is limited, and their strata are growing apart as we have shown.

In this study, we mainly addressed the measurement of inequality in research activity among universities. We also reviewed Korean higher education policy, focusing on NPM governance reforms as represented by performance-based funding, and discussed at length the situation of universities in the context of these reforms. This review and discussion were intended to support the argument that the type of inequality perceived by key stakeholders in the higher education sector, in the given policy context, is closer to absolute inequality. Clearly, further research is needed to demonstrate the causal relations between the policy and the development of inequality. Future studies may use our study as a basis to reveal and confirm the factors underlying such causal relationships.

Notes

Performance-based funding here refers to the various funding schemes based on performance, such as the evaluation-, competition-, selection-, and assessment-based funding analyzed in the previous literature.

The four categories of journals classified by the NRF are (i) KCI (the citation index for domestic academic journals created by NRF), (ii) non-KCI domestic journals, (iii) SCI/SCOPUS (including SSCI, A&HCI, SCIE), and (iv) non-SCI/SCOPUS international journals.

In our dataset, publication counts are rounded to three decimal places.

While the relative Gini coefficient ranges from zero (perfect equality) to one (perfect inequality), there is no upper bound for absolute Gini coefficients which depend on the mean value of the given attribute.

One of the axioms for inequality measures is ‘anonymity’ so that the measures only refer to distribution of attributes without assigning or identifying the attributes to particular members of the group.

The gradations on the right side of the figures are for the absolute Gini coefficients. They are obtained by multiplying the left-side gradations, which are for the relative Gini coefficients, by the average of the mean values for each publication category.

References

Allison, P.D.: Inequality and scientific productivity. Soc. Stud. Sci. 10, 163–179 (1980)

Allison, P.D., Long, J.S.: Departmental effects on scientific productivity. Am. Sociolog. Rev. 55, 469–478 (1990)

Allison, P.D., Stewart, J.A.: Productivity differences among scientists: evidence for accumulative advantage. Am. Sociolog. Rev. 39, 596–606 (1974)

Allison, P.D., Long, J.S., Krauze, T.K.: Cumulative advantage and inequality in science. Am. Sociolog. Rev. 47, 615–625 (1982)

Altbach, P.G., Salmi, J. (eds.): The Road to Academic Excellence: The Making of World-Class Research Universities. World Bank, Washington, DC (2011)

Amiel, Y., Cowell, F.: Thinking about inequality. Cambridge University Press, Cambridge (1999)

Atkinson, A.B.: Health Inequality, Health Inequity, and Health Spending. In: Eyal, N., Hurst, S.A., Norheim, O.F., Wikler, D. (eds.) Inequalities in Health: Concepts, Measures, and Ethics, pp. 27–36. Oxford University Press, New York (2013)

Atkinson, A.B., Brandolini, A.: On analyzing the world distribution of income. World Bank Econ. Rev. 24, 1–37 (2010)

Auranen, O., Nieminen, M.: University research funding and publication performance—an international comparison. Res. Policy 39, 822–834 (2010)

Bae, M.: Regional universities show different responses to BK21 Plus initiative. Joongdo Ilbo. Retrieved from http://www.joongdo.co.kr/jsp/article/article_view.jsp?pq=201304080250 (2013). Accessed 31 July 2016 (in Korean)

Ban, S.-J.: Issues and tasks of government initiated financial support programs on universities. J. Educ. Policy 1, 139–160 (2017). (in Korean)

Beerkens, M.: Competition and concentration in the academic research industry: an empirical analysis of the sector dynamics in Australia 1990–2008. Sci. Public Policy. 40, 157–170 (2013)

Brint, S., Carr, C.E.: The scientific research output of U.S. research universities, 1980–2010: continuing dispersion, increasing concentration, or stable inequality? Minerva (2017). https://doi.org/10.1007/s11024-017-9330-4

Broucker, B., De Wit, K.: New public management in higher education. In: Huisman, J., de Boer, H., Dill, D.D., Souto-Otero, M. (eds.) The Palgrave International Handbook of Higher Education Policy and Governance, pp. 57–75. Palgrave Macmillan UK, London (2015)

Byun, K.: New public management in Korean higher education: is it reality or another fad? Asia Pacific Educ. Rev. 9, 190–205 (2008)

Chakravarty, S., Lugo, M.A.: Multidimensional indicators of inequality and poverty. In: Adler, M.D., Fleurbaey, M. (eds.) The Oxford Handbook of Well-Being and Public Policy, pp. 246–285. Oxford University Press, New York (2016)

Chetty, R., Hendren, N., Kline, P., Saez, E.: Where is the land of opportunity? The geography of intergenerational mobility in the United States. Q. J. Econ. 129, 1553–1623 (2014)

Davies, S., Zarifa, D.: The stratification of universities: structural inequality in Canada and the United States. Res. Soc. Stratif. Mobil. 30, 143–158 (2012)

de Boer, H., Enders, J., Schimank, U.: On the Way towards New Public Management? The Governance of University Systems in England, the Netherlands, Austria, and Germany. In: Jansen, D. (ed.) New Forms of Governance in Research Organizations, pp. 137–152. Springer, Dordrecht (2007)

de Solla Price, D.J.: Little Science, Big Science. Columbia University Press, New York (1963)

DGUGSPress.: Comment on BK21 Plus initiative winner selection result. DGUGSpress. Retrieved from http://www.dgugspress.com/news/articleView.html?idxno=1116 (2013). Accessed 31 July 2016 (in Korean)

Etzkowitz, H.: The evolution of the entrepreneurial university. Int. J. Technol. Glob. 1, 64–77 (2004)

Geiger, R.L.: Knowledge and Money: Research Universities and the Paradox of the Marketplace. Stanford University Press, Stanford (2004)

Geuna, A., Rossi, F.: The University and the Economy: Pathways to Growth and Economic Development. Edward Elgar Publishing, Cheltenham (2015)

Halffman, W., Leydesdorff, L.: Is inequality among universities increasing? Gini coefficients and the Elusive Rise of Elite Universities. Minerva 48, 55–72 (2010)

Han, H.: Jeonbuk Univ, Education-Research-Globalization three elements… Winning 7 funding initiatives. Segye Ilbo. Retrieved from http://www.segye.com/content/html/2015/11/26/20151126003204.html (2015). Accessed 31 July 2016 (in Korean)

Han, J., Kim, S.: How rankings change universities and academic fields in Korea. Korean J. Sociolog. 51, 1–37 (2017). (in Korean)

Hicks, D.: Performance-based university research funding systems. Res. Policy 41, 251–261 (2012)

Hillman, N.: Why Performance-Based College Funding Doesn’t Work. The Century Foundation. Retrieved from https://tcf.org/content/report/why-performance-based-college-funding-doesnt-work/ (2016). Accessed 8 Nov 2017

Hur, J.-Y., Bessey, D.: A comparison of higher education reform in South Korea and Germany. Asia Pacific Educ. Rev. 14, 113–123 (2013)

Jongbloed, B., Lepori, B.: The funding of research in higher education: mixed models and mixed results. In: Huisman, J., de Boer, H., Dill, D.D., Souto-Otero, M. (eds.) The Palgrave International Handbook of Higher Education Policy and Governance, pp. 439–462. Palgrave Macmillan UK, London (2015)

Kim, Y.: “Government annihilates small-strong univ.”…President of Sogang Univ. resists to their drop out of BK21. Yonhapnews. Retrieved from http://news.naver.com/main/read.nhn?mode=LSD&mid=sec&sid1=102&oid=001&aid=0006436542 (2013). Accessed 31 July 2016 (in Korean)

Kim, H.-H.: An Analysis of Government Funding Policy and the Finance Structure of Universities in Korea. Seoul National University, Ph.D. Diss (2014). (in Korean)

Kim, D.H., Bak, H.-J.: How do performance-based incentives influence inequality among scientists’ research performance? In: 2016 APPAM International Conference, pp. 1–30. London (2016)

Kimura, K.: A micro-macro linkage in the measurement of inequality: another look at the Gini coefficient. Qual. Quant. 28, 83–97 (1994)

Kolm, S.-C.: Unequal inequalities. I. J. Econ. Theory 12, 416–442 (1976)

Krücken, G.: Higher education reforms and unintended consequences: a research agenda. Stud. High. Educ. 39, 1439–1450 (2014)

Kwon, H.: LINC “We can select more ‘site-adhered’ rather than ‘technological innovation based’”. The Professors Times. Retrieved from http://www.kyosu.net/news/articleView.html?idxno=24857 (2012). Accessed 31 July 2016 (in Korean)

Kwon, H.: BK21 Plus Why did applications converged on ‘small project team’?. The Professors Times. Retrieved from http://www.kyosu.net/news/articleView.html?idxno=27503 (2013). Accessed 31 July 2016 (in Korean)

Lau, Y., Rosen, H.S.: Are universities becoming more unequal? Rev. High. Educ. 39, 479–514 (2016)

Lee, E., Choi, Y.: “Demanding STEM and also liberal arts…Whose tune should we dance to”. Donga Ilbo. Retrieved from http://news.donga.com/3/all/20160328/77244561/1 (2016). Accessed 31 July 2016 (in Korean)

Leišytė, L.: New public management and research productivity—a precarious state of affairs of academic work in The Netherlands. Stud. High. Educ. 41, 828–846 (2016)

Leydesdorff, L., Park, H.W.: Full and fractional counting in bibliometric networks. J. Informetr. 11, 117–120 (2017)

Lim, K.: University funding initiatives, SNU 46.5 billion won ranked at the 1st. The Korea Economic Daily. Retrieved from http://www.hankyung.com/news/app/newsview.php?aid=2015100964141&intype=1 (2015). Accessed 31 July 2016. (in Korean)

Lotka, A.: The frequency distribution of scientific productivity. J. Wash. Acad. Sci. 16, 317–323 (1926)

Merton, R.K.: The Matthew effect in science: the reward and communication systems of science are considered. Science 159, 56–63 (1968)

MEST (Ministry of Education, Science and Technology).: Future Korea opening with creative talents and advancement of science and technology. In: 2011 Operational Report, 17 December 2010b. (in Korean)

MEST (Ministry of Education, Science and Technology).: Ten-Year Basic Plan for Higher Education Funding. (2010a). (in Korean)

Milanovic, B.: Global Inequality: A New Approach for the Age of Globalization. Harvard University Press, Cambridge (2016)

Moed, H.F., van Leeuwen, T.N., Visser, M.S.: Trends in publication output and impact of universities in The Netherlands. Res. Eval. 8, 60–67 (1999)

Münch, R.: Academic Capitalism: Universities in the Global Struggle for Excellence. Routledge, Abingdon (2013)

Münch, R.: Academic capitalism. In: Thompson, W.R. (ed.) Oxford Research Encyclopedia of Politics. Oxford University Press, USA (2016)

Münch, R., Schäfer, L.O.: Rankings, diversity and the power of renewal in science. A comparison between Germany, The UK and The US. Eur. J. Educ. 49, 60–76 (2014)

Myklebust, J.P., Dobson, I.R.: Academics question pressure to merge universities. University World News. Retrieved from http://www.universityworldnews.com/article.php?story=20160916170857191 (2016)

NABO (National Assembly Budget Office).: Evaluation of World Class University (WCU) program. Program Evaluation 11–11. (2011). (in Korean)

Niño-Zarazúa, M., Roope, L., Tarp, F.: Global inequality: relatively lower, absolutely higher. Rev. Income Wealth (2016). https://doi.org/10.1111/roiw.12240

NRF (National Research Foundation of Korea).: 2015 University research activity survey analysis report. (2015). (in Korean)

Park, C.: Daejeon universities show lukewarm reactions to ‘BK21 Plus’…‘a pie in the sky’. News1 Korea. Retrieved from http://news1.kr/articles/?1141770 (2013). Accessed 31 July 2016 (in Korean)

Park, Y.: Government-led reform makes universities as private institutions for getting a job. The Korea Economic Daily. Retrieved from http://www.hankyung.com/news/app/newsview.php?aid=2015040797391&intype=1 (2015). Accessed 31 July 2016 (in Korean)

Park, H.W., Leydesdorff, L.: Longitudinal trends in networks of university-industry-government relations in South Korea: the role of programmatic incentives. Res. Policy 39, 640–649 (2010)

Park, H.W., Yoon, J., Leydesdorff, L.: The normalization of co-authorship networks in the bibliometric evaluation: the government stimulation programs of China and Korea. Scientometrics 109, 1017–1036 (2016)

Ravallion, M.: Income inequality in the developing world. Science 344, 851–855 (2014)

Shibayama, S.: Distribution of academic research funds: a case of Japanese national research grant. Scientometrics 88, 43–60 (2011)

Shin, J.C.: Building world-class research university: the Brain Korea 21 project. High. Educ. 58, 669–688 (2009)

Shin, J.C.: South Korea: decentralized centralization–fading shared governance and rising managerialism. In: Locke, W., Cummings, W.K., Fisher, D. (eds.) Changing Governance and Management in Higher Education, pp. 321–342. Springer, Netherlands (2011)

Shin, J.C.: Higher education development in Korea: western university ideas, Confucian tradition, and economic development. High. Educ. 64, 59–72 (2012)

Shin, H., Kim, B.: Korea University and Yonsei University give up LINC initiative, ‘Why?’. Daily University News Network. Retrieved from http://news.unn.net/news/articleView.html?idxno=107813 (2012). Accessed 31 July 2016 (in Korean)

Sin, S.-C.J.: Longitudinal trends in internationalisation, collaboration types, and citation impact: a bibliometric analysis of seven LIS journals (1980–2008). J. Libr. Inf. Stud. 9, 27–49 (2011)

Stephan, P.E., Levin, S.G.: Inequality in scientific performance: adjustment for attribution and journal impact. Soc. Stud. Sci. 21, 351–368 (1991)

Ville, S., Valadkhani, A., O’Brien, M.: The distribution of research performance across Australian Universities, 1992–2003, and its implications for “Building Diversity”. Aust. Econ. Pap. 45, 343–361 (2006)

Wu, J.: Distributions of scientific funding across universities and research disciplines. J. Informetr. 9, 183–196 (2015)

Xie, Y.: “Undemocracy”: inequalities in science. Science 344, 809–810 (2014)

Yu, H.: Korean education in changing economic and demographic contexts. In: Park, H., Kim, K. (eds.) Korean Education in Changing Economic and Demographic Contexts, pp. 97–113. Springer, Singapore (2014)

Zeileis, A.: Ineq: measuring inequality, concentration, and poverty. R package version 0.2-13. https://CRAN.R-project.org/package=ineq. (2014)

Zheng, M., Junpeng, Y., Cheng, S., Zhiyu, H., Zhenglu, Y., Yuntao, P., Yishan, W.: Using Lorenz Curve and Gini Coefficient to reflect the inequality degree of S & T publications: an examination of the institutional distribution of publications in China and other Countries. In: Proceedings of Fourth International Conference on Webometrics, Informetrics and Scientometrics & Ninth COLLNET Meeting, pp. 1–9 (2008)

Zhi, Q., Meng, T.: Funding allocation, inequality, and scientific research output: an empirical study based on the life science sector of Natural Science Foundation of China. Scientometrics 106, 603–628 (2016)

Cite this article

Jeon, J., Kim, S.Y. Is the gap widening among universities? On research output inequality and its measurement in the Korean higher education system. Qual Quant 52, 589–606 (2018). https://doi.org/10.1007/s11135-017-0652-y

DOI: https://doi.org/10.1007/s11135-017-0652-y