Abstract

By adopting information and communications technology to deliver instruction and facilitate learning, course management systems (CMSs) offer an alternative capability to enhance management practices. Based on innovation diffusion theory, this study explores CMS effectiveness (EF) and reliability (RL), and considers both perceived innovative attributes (IA) and demographic characteristics. This study also examines the moderating effect of complexity (CX) and the mediating effect of function evaluation (FE) on the causal relationship between IA and outcome variables (i.e., EF, RL). The analysis also includes the differential effects of three types of CMSs and gender differences. Participants were 238 undergraduates majoring in business or management who volunteered to complete an online survey. Results show that perceived IA affect RL and EF, but not FE. CX moderates the effect of perceived IA on RL, but does not moderate the effects of perceived IA on FE and EF. EF, but not FE, appears to mediate the effects of perceived IA on RL. There is no significant difference in model fit between genders, but there is a significant difference among the three types of CMS solution. Conclusions and implications for future research are offered for program leaders and practitioners.

1 Introduction

Management education has expanded and been discussed considerably as a form of professional development to enhance managerial practices. Academic scholars and organizational practitioners have been seeking to bridge a gap that exists ostensibly because the two parties look at managerial practices from different perspectives. To understand the potential issues, recent studies have focused on management education (Baldwin et al. 2011; Bartunek and Egri 2012; Egri 2012; Engwall 2007; Ireland 2012; Yen-Chun Jim et al. 2010), specifically the issues of value (Aldag 2012; Greve 2012; Moosmayer 2012), gender dissonance (Kelan and Jones 2010; Tella and Mutula 2008), and knowledge management systems (Martins and Kellermanns 2004; Redpath 2012). The utilization of knowledge management systems assisted by information and communications technologies (ICTs) in management (business) education is the focus of this study. Since colleges and universities increasingly offer courses online, students need skills to succeed in these relatively innovative ICT-supported learning environments (Wadsworth et al. 2007). Technological innovations often fail because researchers pay too much attention to technical or product-related features, and tend to ignore user acceptance, which is one of the most important parameters (Verdegem and Marez 2011). However, relatively little attention has been given to the role of perceived IA in explaining why technologies are used (or not) (Braak and Tearle 2007). Fulk and Gould (2009) called for published research to report in great detail the features of technologies and the contexts in which they and their users are situated during the period of the study. Alias and Zainuddin (2005) posited that general diffusion theories should be used to bridge instructional theories. The social construction viewpoint often treats ICTs as an objective and external force affecting organizational structure (Rogers 1995). 
More recent work of Rogers (2003) has emphasized the importance of understanding the attributes of an innovation, asserting that the perception of these attributes has a significant effect on predicting the future adoption of that particular innovation.

1.1 Course management systems (CMSs)

CMSs have become a set of tools that support instruction and learning, especially given their ability to generate informed predictions for the likely adoption of IS/IT. Morgan (2003) reported that, in both pedagogical impact and institutional resource consumption, course management systems form the academic system equivalent of enterprise resource planning (ERP) systems (p. 8). Morgan (2003) also noted that the introduction of the enterprise-level CMS in higher education begins a new and important journey of a thousand miles (p. 85). In its simplest form, a CMS allows an instructor to post information on the Web without having to know HTML or other computer languages, and provides users with a set of tools and a framework to develop online course content. A CMS also facilitates subsequent teaching and management (Lane 2008; Trotter 2008). Morgan (2003) posited that making CMSs available to faculty members and students raises the following questions: which products to adopt, how to provide them to faculty, and how to maximize their effectiveness (p. 15). Users of media and technologies tend to be goal-oriented and purposive. In an empirical study of 730 faculty and instructional staff in the University of Wisconsin system, Morgan (2003) reported that faculty members tend to use static tools for storing syllabi and class materials, posting announcements, and dealing with administrative tasks, in addition to the gradebook, assessment, and discussion group tools. The active initiative of users plays an intervening role in the use of media or technology in a learning arena. CMSs are playing an increasingly critical role in meeting the academic needs of higher education (Morgan 2003). In the context of electronic courseware adoption (i.e., CMSs), faculty members are often selective in using specific functions of the system to fulfill their needs (Park et al. 2007). 
At the same time, faculty members are likely to actively evaluate the functions to assess their value for improving teaching performance. Users who favorably evaluate CMS functions are more likely to use the technology, and have a stronger behavioral intention to keep using it, than those who do not (Park et al. 2007). The motives and strategies of those who adopt CMSs, particularly in educational settings, have been an issue for years, even though the use of CMSs in higher education is not clear or well-researched (Casmar and Peterson 2002). Though there are many examples in higher education where the use of CMSs is well-acknowledged and fully embedded into courses, their use is still patchy in certain aspects and not yet fully confirmed (Jacobsen 2000).

Shen et al. (2006) proposed a model of how social influences (i.e., subjective norms) shape online learners’ perceptions toward usage of course delivery systems, based on the Theory of Reasoned Action (TRA). They indicated that a better understanding of the factors that influence perceived usefulness and perceived ease of use in online learning has the potential to improve the design and implementation of learning systems. Based on the Technology Acceptance Model, Martins and Kellermanns (2004) developed and tested a model predicting business school students’ acceptance of a Web-based course management system, i.e., WebCT. Their findings revealed that students were more likely to perceive an innovative CMS as useful when they perceived greater performance incentives as a changing motivator to use the system. Martins and Kellermanns (2004) also suggested that future research could explore the effects of student participation in the decision to use a particular CMS on student acceptance of the system. Concerning which product to adopt as the major solution, Malikowski (2008) pointed out that many CMSs from different vendors tend to offer the same core features, but each system labels the features differently. In their study on bridging CMS technology and learning theory, Malikowski et al. (2007) suggested that CMSs should categorize features by their function, instead of their name. The core and most frequently used CMS features are (a) posting and transmitting information and material relevant to the courses, (b) recording learning performance, (c) administering quizzes/surveys, (d) managing group collaboration asynchronously, and (e) managing and scheduling course-related resources/events (Berking and Gallagher 2011; Malikowski et al. 2006). System characteristics directly affect user beliefs and acceptance of technology in different contexts (Davis et al. 1989; Lau and Woods 2009; Selim 2003). 
Introducing user perception of technology effectiveness, Abdalla (2007) proposed the technology effectiveness model (TEM) and validated the model with e-Blackboard learning at one university. Results suggested that ease of use and usefulness positively influenced students’ attitudes towards the system, which in turn determined the technology’s effectiveness.

CMSs are sometimes labeled as learning management systems (LMSs) within the user community, but they are distinctly different in the sense that they do not deliver the core learning experiences; those are provided live in classrooms (Berking and Gallagher 2011). Berking and Gallagher (2011) defined LMSs as optimized for the delivery of learner-led and embedded learning in an asynchronous communication format. That is, LMSs are used primarily in the business and government training community, while CMSs are most commonly used in higher education. Because CMSs are designed more for education than for training, there is likely no point in comparing their advantages and disadvantages. Examples of CMSs include Blackboard\(^{{\circledR }}\), WebCT\(^{{\circledR }}\), eCollege\(^{{\circledR }}\), Moodle (open source), Scholar360\(^{{\circledR }}\), WebStudy\(^{{\circledR }}\), Wisdom Master\(^{{\circledR }}\) (a domestic solution in Taiwan), and others developed by academic organizations. LMS examples include Moodle (open source), Oracle Learning Management\(^{{\circledR }}\), Google CloudCourse, CompanyCollege LMS\(^{{\circledR }}\), SAP Enterprise Learning\(^{{\circledR }}\), and SCORM Cloud\(^{{\circledR }}\). Specifically, Wisdom Master\(^{{\circledR }}\) is a platform programmed by SUN NET Technology. Currently, Wisdom Master\(^{{\circledR }}\) serves as the major CMS solution for more than 70% of colleges and universities in Taiwan. This study intends to examine three types of CMS solution: international (e.g., Blackboard\(^{{\circledR }}\)), domestic (e.g., Wisdom Master\(^{{\circledR }}\)), and university self-developed.

1.2 Innovation diffusion theory (IDT)

Diffusion of innovation should be research-based, and one of the best known researchers is Everett Rogers (Romiszowski 2004). His work has provided a theoretical basis for much of the research in the field of innovation diffusion. Rogers (2003) defined diffusion as the process by which an innovation is communicated through certain channels over time among the members of a social system (p. 5). Innovation diffusion can then be considered to be the reasons why, and the process through which, an innovation is adopted by people in a specific setting or community (Braak and Tearle 2007, p. 2967). Innovation diffusion theory offers insights into different aspects of this process. Rogers (1995) identified and structured his work on four aspects of innovation diffusion: the diffusion process, individual human characteristics that affect people’s adoption of an innovation, people’s perceptions of an innovation’s characteristics, and the pattern of the changing rate of adoption of an innovation as it passes through different stages. Rogers (1995) posited the following:

The diffusion research literature indicates that much effort has been spent in studying “people” differences in innovativeness (that is, in determining the characteristics of the different adopter categories) but that relatively little effort has been devoted to analyzing “innovation” differences (that is, in investigating how the properties of innovations affect their rate of adoption). This latter type of research can be of great value in predicting people’s reactions to an innovation. (p. 204)

Diffusion of an innovation occurs through a five-step process: knowledge, persuasion, decision, implementation, and confirmation (Rogers 1995). Persuasion is the stage in which the individual becomes motivated to get to know the innovation and actively seeks information about it (Rogers 1995). That is, perceptions of an innovation’s characteristics form in the persuasion stage. Building on Rogers’s early work in 1983, Dearing and Meyer (1994) proposed a set of eleven attributes of an innovation profile to determine the degree of innovation adoption. Rogers (1995) identified five innovative attributes (IA) that he postulated affect an innovation’s adoption: relative advantage (RA), compatibility (CP), complexity (CX), observability (OB), and trialability. Of these five attributes, RA, CX, and CP are primarily associated with decisions of either adoption or rejection. Considering the research context, this study considers the IA proposed by Rogers (1995) and Dearing and Meyer (1994).

Following Rogers’s views of both 1995 and 2003, the current study adopts a production utilization standpoint to validate whether the proposed IA differ among ICT-assisted environments (i.e., CMSs). Oh et al. (2003) theorized that IA, as antecedents of attitudes toward technology, are mediated through perceived ease of use and perceived usefulness. In a study about developing an innovation scale of computer attributes for learning, Braak and Tearle (2007) found that those IA have a significant effect on ICT use for learning (i.e., CMSs). Chang and Tung (2008) combined innovation diffusion theory and the technology acceptance model, adding two external variables (perceived system quality and computer self-efficacy), to examine behavioral intentions to use online learning course websites. They found that CP, perceived usefulness, perceived ease of use, perceived system quality, and computer self-efficacy were critical factors.

1.3 Gendered difference: dissonance and consistency

A number of empirical studies have documented differential gender attitudes toward the use of computing technologies (Caspi et al. 2006; Chou et al. 2011; Ding et al. in press; Kay and Lauricella 2011; Lin et al. 2012). Earlier research indicated that females tend to express negative attitudes and less confidence toward technology use in computer-mediated learning environments (Dambrot 1985; Gutek and Bikson 1985; Neuman 1991; Reinen and Plomp 1997). To evaluate gender differences in ICT attitudes, Broos (2005) administered a quantitative study (\(n=1,058\)) to a sample of participants aged 18 years or older. Broos (2005) reported that gender differences appeared in three aspects of attitudes towards new communications technology: computer anxiety, computer liking, and internet attitude. Males were generally more self-assured about ICTs, whereas females revealed an attitude of hesitation. Moreover, the influence of prior experience with computers works differently for males and females. Baloglu and Çevik (2008) proposed that there may be different levels of gender anxiety now than previously, especially in an era when computers are more commonly integrated into our daily lives. Bulter et al. (2005) reported that females demonstrated greater career-related IT skills than males in basic computer skills, spreadsheet programs, database programs, and website creation. The female students in the study demonstrated greater IT skills than the males, placed higher value on those skills, demonstrated greater use of computer-mediated platforms for learning (e.g., Blackboard\(^{{\circledR }}\)), and outperformed males in academic achievement in an online setting (DeNeui and Dodge 2006). Males and females also differ in the daily time they spend using the Internet for communication, e-mailing, chatting, information access, downloading, and entertainment (Akman and Mishra 2010).

Contrary to these findings of gender differences in ICT acceptance, recent research presents opposing results. Kay and Lauricella (2011) examined whether the use of laptops in higher education classrooms is disproportionately advantageous to males and disadvantageous to females in two types of behavior: on-task behaviors [note-taking, academic activities, instant messaging (IM)] and off-task behaviors (e-mail, IM, games, movies, distractions). They found no gender differences with respect to IM for academic purposes. However, regarding off-task behavior, females were more distracted by their peers’ use of laptops than males, whereas males reported that they played significantly more games during class. Previous research on the gender differences in ICT perceptions and acceptance of ICT-assisted learning has reported mixed results. Therefore, this study assumes that gender difference is no longer rooted in the availability of ICT access, but in gender traits such as user intention and behaviors. The gender issue has shifted from a focus on broad-stroke, holistic differences to more specific considerations such as specific domain knowledge acquisition and instructional methodology (Lin and Overbaugh 2009). Malikowski et al. (2006) found that traditions and norms affect LMS adoption more than course size or program type. However, organizational factors such as course discipline, course type (mandatory or elective), class size, staff size, instructor status, and timing of the course within the study program remain mostly neglected in the context of LMS use and satisfaction (Naveh et al. 2010). In the current environment of rapidly evolving ICT applications, it is even more important to develop a comprehensive framework to understand the antecedents and corresponding contexts of technology acceptance in management education. Therefore, this study investigates the causal relationship between IA and CMS reliability (RL) among college student users. 
This study considers five of the proposed antecedents as IA, and considers the remaining four as more essential components of CMS functionality attributes.

2 Materials and methods

To determine the role of CMS innovation in the effectiveness and RL of CMSs, this study considers both the moderating effect of CX and the intervening effect of function evaluation (FE) on the causal relationship between IA and outcome variables. This study also examines the differences in effects produced by different CMSs and genders.

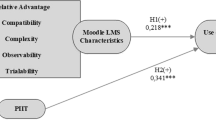

Structural equation modeling (SEM) is a commonly used approach to regression analysis and factor analysis (Eye et al. 2003). This study applies SEM to validate the hypothesized overall model (Fig. 1). Because all variables adopt the same numerical measurement scale (i.e., a Likert scale of 1–7), no transformation of scale is used. A composite score of perceived IA was created by summing RA, economic advantage (EA), CP, OB, and divisibility (DV). Perceived IA is hypothesized to have an effect on FE, effectiveness (EF), and RL. Each of these effects is hypothesized to be moderated by CX, so an interaction term (i.e., the product of IA and CX) is created to examine the moderating effect of CX.

2.1 Participants and procedures

The participants were a purposive sample of 238 college students enrolled in management courses offered via CMSs (i.e., Wisdom Master\(^{{\circledR }}\), Blackboard\(^{{\circledR }}\), and self-developed) in Taiwan. The demographic composition of the participants was 59.2% (\(n=141\)) males and 40.8% (\(n=97\)) females. Of the participants, 43.3% (\(n=103\)) were taking courses via Wisdom Master\(^{{\circledR }}\) (abbreviated as WM and coded as CMS 1), 17.2% (\(n=41\)) were using Blackboard\(^{{\circledR }}\) (abbreviated as Bb and coded as CMS 2), and 39.5% (\(n=94\)) were using their university’s self-developed CMS (abbreviated as SD and coded as CMS 3). The set of online scales was administered by the researchers via Google Docs\(^{\text{TM}}\) and took about 10–15 minutes to complete. The students were invited to respond to the scales on a voluntary basis. No course credits or other rewards were given to the participants. The participants were assured of confidentiality. Due to the use of a non-random sample, generalization of the results of this study is limited to similar groups.

2.2 Measures

2.2.1 Perceived innovative attributes

Following prior studies (Braak 2001; Braak and Goeman 2003), this study accounts for the nature of the innovation as the adoption of CMS use for learning in higher education. The scale, which consists of the five attributes and 14 items, was expected to be strongly related to the actual use of computers for learning. The questionnaire measured how much students used computers for learning (see Appendix 1). The participants rated their perception level on a 7-point Likert scale, ranging from strongly disagree (1) to strongly agree (7). The internal consistency of the scale is .97.

2.2.2 Scale of function evaluation (FE)

Eight items from the scale measured self-efficacy beliefs, phrased as individuals’ judgments of their perceived ability to use the CMS to produce overall attainments, as opposed to accomplishing specific sub-tasks. A sample item is “I feel confident in using online discussion board.” The items were rated on a 7-point Likert scale, with an internal consistency of .93.

2.2.3 Scale of reliability (RL)

Two items from the scale were adapted to the conceptual definition of being consistent in communication. Verdegem and Marez (2011) defined RL as a dimension of perceived risk that is not covered by other determinants (p. 413). The items are: (1) using CMS for learning is better than not using it; (2) using CMS for course management is better than not using it. The items were rated on a 7-point Likert scale, with an internal consistency of .90.

2.2.4 Scale of effectiveness (EF)

Two items from the scale were adapted to the conceptual definition of being relatively capable of achieving an ideal state. The items are: (1) CMS assists me to learn effectively; (2) CMS assists me to fulfill course requirement effectively. The items were rated on a 7-point Likert scale, with an internal consistency of .89.

2.2.5 Scale of complexity (CX)

Two items from the scale were adapted to the conceptual definition of how easy the system is to understand and to use. The items are: (1) CMS is relatively easy to understand; (2) CMS is user-friendly. The items were rated on a 7-point Likert scale, with an internal consistency of .87.
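
The internal consistency values reported for these scales are presumably Cronbach’s alpha coefficients; this is an assumption based on the mention of Cronbach’s alpha in the Results section. A minimal sketch of how such a coefficient can be computed from item-level responses, assuming complete data, is given below. The function name and the demonstration scores are hypothetical and are not the study data.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for one scale.

    items: list of k lists, one per item, each holding one score per
    respondent (complete data assumed).
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(scores) != n for scores in items):
        raise ValueError("every item needs one score per respondent")
    # Total scale score for each respondent.
    totals = [sum(scores[i] for scores in items) for i in range(n)]
    # Sum of the individual item variances.
    sum_item_var = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - sum_item_var / variance(totals))


# Hypothetical responses of four students to a two-item, 7-point scale.
demo_items = [[1, 2, 3, 4], [2, 1, 4, 3]]
demo_alpha = cronbach_alpha(demo_items)
```

With only two items per scale, as for RL, EF, and CX, alpha depends entirely on the correlation between the two items.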

3 Results and discussion

Each composite score was centered by its mean before analysis, following the recommendation of Kenny and Judd (1984) that raw scores be mean-centered prior to the formation of product terms and formal analysis. Correlations among the five perceived IA (i.e., RA, EA, CP, OB, and DV) are all above .75 and contribute to a Cronbach’s alpha of .94. Factor analysis shows that each of these five domains has a factor loading of at least .9 on one factor, as expected if they represent a latent construct of perceived IA. This study adopts a composite score of IA rather than a latent factor of IA (i.e., a latent construct reflected by RA, EA, CP, OB, and DV). This decision reflects the small sample size of the current study, which can induce upward bias and overestimated parameter values when testing a latent interaction effect (Sun et al. 2011). The correlations among variables ranged from approximately .6 to .9 (Table 1).
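
The construction of the composite score and the interaction term can be sketched as follows. This is an illustration only, under the assumption that each subscale score is available per respondent; the function names and the demonstration values are hypothetical and do not come from the study data.

```python
from statistics import mean


def center(values):
    """Subtract the sample mean from every value."""
    m = mean(values)
    return [v - m for v in values]


def build_interaction(subscales, cx):
    """Return centered IA, centered CX, and their product term.

    subscales: dict mapping a subscale name (e.g., "RA") to a list of
    scores, one per respondent.
    cx: list of complexity scores, one per respondent.
    """
    n = len(cx)
    # Composite IA: sum of the subscale scores for each respondent.
    ia = [sum(scores[i] for scores in subscales.values()) for i in range(n)]
    ia_c = center(ia)
    cx_c = center(cx)
    # The product term is formed after centering, not before.
    product = [a * b for a, b in zip(ia_c, cx_c)]
    return ia_c, cx_c, product


# Hypothetical scores for three respondents on two of the subscales.
demo_ia, demo_cx, demo_product = build_interaction(
    {"RA": [1, 2, 3], "EA": [1, 2, 3]}, [3, 5, 7]
)
```

Forming the product from centered scores reduces the correlation between the interaction term and its components, which is the usual motivation for centering first.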

Jaccard and Choi (1996) indicated that if the interaction term consists of a single variable (as would be the case in a single product term between continuous variables), the approximate sample size needed to achieve a power of .8 at \(\alpha \) = .05 is 130. Although this is a ballpark estimate in SEM-based regression analysis, the current study (with a sample size of 238) addresses the issue of statistical power by using a single interaction term rather than five product terms (i.e., each of RA, EA, CP, OB, and DV multiplied by CX) to represent the latent construct of interaction between perceived IA and CX.

3.1 Moderating effect of complexity

Based on the full model in Fig. 1, Table 2 summarizes the results for Model 1 (with both CX and interaction of IA*CX), Model 2 (with CX as a moderator), and Model 3 (with no CX as a moderator). Perceived IA and CX consistently had statistically significant effects on FE, effectiveness, and RL at an alpha level of .05. Effectiveness also had a statistically significant effect on RL.

Including CX and IA*CX significantly improved model fit, with the p-values for changing \(\chi ^{2}\) being \(<\).0001 (Model 3–2) and .0152 (Model 2–1), respectively. However, only by including the interaction effect (i.e., IA*CX) is it possible to achieve an acceptable model fit of \(\chi ^{2} = .389\) (df = 1, \(p = .5326\)), a root mean square error of approximation (RMSEA) close to zero, and Bentler–Bonett Normed Fit Index (NFI) close to one. This moderating effect is also shown by a statistically significant path estimate of \(-\).109 from the interaction term of IA*CX to RL. CX acted as a moderator of the effect of perceived IA on RL. However, CX failed to moderate the effects of perceived IA on FE and effectiveness, as shown by the very small path estimates of IA*CX to FE (.003) and IA*CX to EF (.009) respectively. To preserve more degrees of freedom for subsequent analyses, the remainder of this study uses a reduced model that does not specify these two paths (i.e., IA*CX to FE and IA*CX to EF).
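
The model comparisons above rest on the chi-square distribution. The sketch below shows how an upper-tail p-value and a nested-model difference test can be obtained using only the Python standard library; the function names are hypothetical, and the closed-form sums used here hold for integer degrees of freedom only. It is a generic illustration, not the software used in the study.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X >= x) for a chi-square variable.

    Uses closed-form finite sums, so df must be a positive integer.
    """
    if x < 0 or df < 1:
        raise ValueError("x must be >= 0 and df a positive integer")
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) times a finite Poisson-type sum.
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= half / i
            total += term
        return math.exp(-half) * total
    # Odd df: complementary error function plus a finite correction sum.
    total = math.erfc(math.sqrt(half))
    term = math.sqrt(2.0 * x / math.pi) * math.exp(-half)
    for i in range(1, (df - 1) // 2 + 1):
        total += term
        term *= x / (2 * i + 1)
    return total


def chi2_difference_test(chi2_constrained, df_constrained, chi2_free, df_free):
    """Compare a constrained model with the less constrained model nesting it."""
    delta_chi2 = chi2_constrained - chi2_free
    delta_df = df_constrained - df_free
    return delta_chi2, delta_df, chi2_sf(delta_chi2, delta_df)


# Fit statistic reported in the text: chi-square = .389 with df = 1.
p_model_fit = chi2_sf(0.389, 1)
```

Evaluating the reported fit statistic this way gives a p-value of about .533, in line with the reported .5326 once rounding of the chi-square value is taken into account.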

3.2 Mediating effect of effectiveness and function evaluation

This section examines the roles of FE and effectiveness as mediators of the effect of perceived innovation attributes on RL. Based on a reduced model (i.e., excluding paths of IA*CX to FE and IA*CX to EF from the diagram in Fig. 1), Table 3 summarizes the results for Model 4 (reduced model), Model 5 (reduced model using only FE as a mediator), Model 6 (reduced model using only EF as a mediator), and Model 7 (reduced model excluding both FE and EF as mediators). Figure 2 shows that perceived IA and CX consistently had statistically significant effects on FE, effectiveness, and RL at an alpha level of .05. Effectiveness also had a statistically significant effect on RL.

Using FE, effectiveness, or a combination of these factors as mediators of the effect of perceived innovation attributes on RL produced acceptable model fits, as indicated by an RMSEA value close to zero and a Bentler–Bonett NFI close to one in Models 4, 5, and 6. Not using FE and effectiveness as mediators adversely affected model fit, with Model 7 showing a p-value for the change of \(\chi ^{2}\) as \(<\) .0001. RL was more affected by effectiveness (with path estimates from EF to RL = .372 and R-square in RL as .76 in Models 4 and 6) than by FE (with a path estimate from FE to RL = \(-\).044 and R-square in RL as .73 in Model 5). When including EF as a mediator of the effect of perceived IA on RL, the path estimate of IA to RL dropped from .583 in Model 7 to .285 in Models 4 and 6. When including only FE as a mediator, the same path had no substantial change of estimates (i.e., .594 in Model 5 compared to .583 in Model 7). Effectiveness appears to be a stronger mediator than FE for the effect of perceived IA on RL.

Perceived IA consistently had significant effects on effectiveness (i.e., path estimates of IA \(\rightarrow \) EF = .785 in Model 4 and .767 in Model 6) at an alpha level of .05, even when Model 4 used FE as a mediator of the effect of IA on EF. FE does not appear to mediate the effect of perceived IA on effectiveness.
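
As a rough cross-check of the mediation pattern, the reported path estimates can be combined with the product-of-coefficients approach. This approach is not the procedure used in this study, which compares nested models, and the sketch assumes that the reported estimates are on a common scale. The variable names are hypothetical; the numbers are the Model 6 and Model 7 estimates quoted in the text.

```python
def indirect_effect(a, b):
    """Product-of-coefficients estimate of an indirect effect."""
    return a * b


# Path estimates quoted in the text.
a_ia_to_ef = 0.767        # IA -> EF in Model 6
b_ef_to_rl = 0.372        # EF -> RL in Models 4 and 6
direct_ia_to_rl = 0.285   # IA -> RL with EF as a mediator
total_model_7 = 0.583     # IA -> RL with no mediators (Model 7)

indirect = indirect_effect(a_ia_to_ef, b_ef_to_rl)
implied_total = direct_ia_to_rl + indirect
share_mediated = indirect / implied_total
```

Under these assumptions the indirect effect through EF is about .285, so the implied total of about .570 is close to the Model 7 estimate of .583, and roughly half of the effect of IA on RL runs through effectiveness. The two totals need not match exactly, because the models also include CX and the interaction term.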

3.3 Differences among three types of CMS users

This section compares two model fits from fitting any two CMS groups as the same model or independent models. Table 4 shows that p-values for change of model fits are .022 for CMS 1 versus CMS 2, \(<\) .001 for CMS 1 versus CMS 3, and .006 for CMS 2 versus CMS 3. All values of \(\Delta \)CFI, \(\Delta \)Gamma hat, and \(\Delta \)McDonald’s NCI exceeded \(-\)0.01, \(-\)0.001, and \(-\)0.02 respectively, which suggests rejecting the null hypothesis of invariance based on the simulation study of Cheung and Rensvold (2002). In other words, each CMS group appears to use a unique structural model for predicting RL. When CMS groups were estimated as independent models, 87, 72, and 67% of the variance in RL for CMS 1, 2, and 3 was explained, respectively. When fitting any two CMS groups as independent models, RMSEA consistently dropped close to zero, unlike the RMSEA of fitting them as the same model. Since models with no cross-group constraint fitted reasonably well, no further exploration of weak measurement invariance was pursued (Meredith 1993). For CMS 1, CX had the greatest effect on RL with a path estimate of CX \(\rightarrow \) RL as .462. For CMS 2, perceived IA had the greatest effect on RL with a path estimate of IA \(\rightarrow \) RL as .436. For CMS 3, effectiveness had the greatest effect on RL with a path estimate of EF \(\rightarrow \) RL as .553.

3.4 Differences between genders

This section compares two model fits from fitting two gender groups as the same model or independent models. Table 5 below shows that the p-value for change of model fits is .953. In other words, there was no statistically significant difference between gender groups in terms of the structural models used to predict RL. Although \(\Delta \)Gamma hat \(>-\)0.001 and \(\Delta \)McDonald’s NCI \(>-\)0.02 suggested rejecting the null hypothesis of invariance based on the Cheung and Rensvold (2002) study, path estimates between gender groups did not appear to be drastically different. The proportions of variance in FE, EF, and RL also appeared comparable between gender groups.

3.5 Level of reliability in various groups of CMS, gender, and interaction of IA*CX

As illustrated in Table 6, this section summarizes average RL scores in different groups of CMS, genders, and interaction using centered-by-mean IA*CX (i.e., Group 1: both IA and CX are positive; Group 2: both IA and CX are negative; Group 3: IA is positive and CX is negative; Group 4: IA is negative and CX is positive). Because the scale of CX is coded to conceptually define the ease of understanding and using course management systems, a higher value of CX represents greater ease. For Group 1, CMS 1 had the highest average RL of 2.22, followed by 2.16 for CMS 2 and 1.86 for CMS 3. However, in Groups 2 and 3 (i.e., when both IA and CX were below average or only CX was below average), subjects in CMS 1 had the lowest average RL of \(-\)2 and .24, respectively. The average RL in CMS 1 might be more sensitive than CMS 2 and CMS 3 to changes in the levels of IA and CX. This observation supports the results in Table 4, because CMS 1 is the only group that produced significant estimates on both paths of IA \(\rightarrow \) RL and CX \(\rightarrow \) RL.
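
The four-group classification described above can be sketched as follows. The function names and the demonstration rows are hypothetical. The text does not say how a score exactly at the mean is handled, so this sketch leaves such cases unclassified as an assumption.

```python
from collections import defaultdict
from statistics import mean


def ia_cx_group(ia_centered, cx_centered):
    """Map mean-centered IA and CX scores to Groups 1-4 as defined in the text."""
    if ia_centered > 0 and cx_centered > 0:
        return 1
    if ia_centered < 0 and cx_centered < 0:
        return 2
    if ia_centered > 0 and cx_centered < 0:
        return 3
    if ia_centered < 0 and cx_centered > 0:
        return 4
    # A score exactly at the mean: handling not specified in the source.
    return None


def mean_rl_by_group(rows):
    """rows: iterable of (ia_centered, cx_centered, rl) tuples."""
    buckets = defaultdict(list)
    for ia, cx, rl in rows:
        group = ia_cx_group(ia, cx)
        if group is not None:
            buckets[group].append(rl)
    return {g: mean(values) for g, values in sorted(buckets.items())}


# Hypothetical centered scores, not the study data.
demo_rows = [
    (1.0, 2.0, 2.0), (0.5, 1.0, 1.0),  # Group 1
    (-1.0, -1.0, -2.0),                # Group 2
    (1.0, -0.5, 0.5),                  # Group 3
    (-1.0, 0.5, 0.0),                  # Group 4
    (0.0, 1.0, 9.0),                   # at the IA mean, left unclassified
]
demo_means = mean_rl_by_group(demo_rows)
```

The same grouping can be repeated within each CMS type or gender by filtering the rows first, which is how a breakdown like the one in Table 6 would be assembled.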

For Group 4, when attributes were perceived as less innovative but easier to understand or use, female subjects in CMS 3 had the lowest average RL of \(-\).36, while subjects in all other CMS groups had positive average RL. The exact opposite trend appeared in Group 3, in which female subjects of CMS 3 had the highest average RL of 1.64, when attributes were perceived as innovative but more complex. This suggests that RL perceived by female subjects in CMS 3 might be more affected by perceived IA than by CX. This observation echoes the results of Table 5, in which female subjects typically have a stronger path estimate on IA \(\rightarrow \) RL (i.e., .299) than on CX \(\rightarrow \) RL (i.e., .233). However, this observation requires further verification from future studies, as there were only two female subjects from CMS 3 in Group 3 and three female subjects from CMS 3 in Group 4.

4 Conclusion and discussion

The purpose of this study was to validate the proposed model of causal relationships among the abovementioned attributes. The findings are as follows. Perceived IA consistently affected FE, effectiveness, and RL. Yet, FE did not appear to mediate the effect of perceived IA on effectiveness. The effect of IA on RL was mediated by effectiveness, but not FE. CX acted as a moderator of the effect of perceived IA on RL, but did not moderate the effects of perceived IA on FE and effectiveness. Each CMS group used a unique structural model for predicting RL. These findings revealed no gender effect in terms of the structural models for predicting RL.

Despite the potential of CMSs for learning, many teaching faculty use CMSs merely as a delivery mechanism for communicating course content and material (Vovides et al. 2007). Teaching faculty tend to be the lead facilitators of CMS usage, and students are more likely to be recipients. The participants of this study were college students, who are recipients in terms of the CMS application. Therefore, their perception of the kind of CMS solution might be confounded by the instruction. To control for this factor, we purposively invited intact groups taught by faculty with similar andragogy. Still, because the study set out to understand the effectiveness of CMSs, it remains unclear how much students actually benefit from the aid of ICTs. Osborn (2010) suggested that instructors presenting tutorial materials via a CMS (i.e., Blackboard®) should provide external incentives to maximize learning. He stressed that this tactic is especially critical for general education courses or classes in which learners are not intrinsically motivated to learn. In the context of computer-mediated communication (CMC) attribution, Braak (2001) hypothesized that the higher the perceived congruency between the attributes of CMC as an innovation and familiarity of teaching practice, the more likely teachers are to use CMC. In other words, the perceived attributes of CMC are strongly related to technological innovativeness and attitudes towards using CMC.

Another related issue of prime importance for the acceptance of CMSs (at least in higher education) is the workload that they create for students and staff (Romiszowski 2004). As initiatives are put in place to extend and integrate the use of CMSs for instruction and learning in higher education, it is important that relevant instruments be made available to help predict or indicate likely adoption patterns, and hence highlight areas for pre-emptive action (Braak and Tearle 2007). Moreover, Shen et al. (2006) reported that the social nature of the online learning course plays a mediating role in the use of the technology; still, much research is needed to articulate how social influences interact with other antecedents and under what circumstances social beliefs are more or less influential. The use of ICTs does not guarantee andragogy or effective instruction. By attempting to combine ICT diffusion theories with the instructional theories employed in educational settings, this study provides useful implications for educators, scholars, and practitioners.

References

Abdalla, I.: Evaluating effectiveness of e-blackboard system using tam framework: a structural analysis approach. AACE J. 15(3), 279–287 (2007)

Akman, I., Mishra, A.: Gender, age and income differences in internet usage among employees in organizations. Comput. Hum. Behav. 26(3), 482–490 (2010). doi:10.1016/j.chb.2009.12.007

Aldag, R.J.: Bump it with a trumpet: on the value of our research to management education. Acad. Manag. Learn. Education 11(2), 285–292 (2012)

Alias, N.A., Zainuddin, A.M.: Innovation for better teaching and learning: adopting the learning management system. Malays. Online J. Instr. Technol. 2(2), 27–40 (2005)

Baldwin, T.T., Pierce, J.R., Joines, R.C., Farouk, S.: The elusiveness of applied management knowledge: a critical challenge for management educators. Acad. Manag. Learn. Education 10(4), 583–605 (2011). doi:10.5465/amle.2010.0045

Baloglu, M., Çevik, V.: Multivariate effects of gender, ownership, and the frequency of use on computer anxiety among high school students. Comput. Hum. Behav. 24(6), 2639–2648 (2008). doi:10.1016/j.chb.2008.03.003

Bartunek, J.M., Egri, C.P.: Introduction: can academic research be managerially actionable? what are the requirements for determining this? Acad. Manag. Learn. Education 11(2), 244–246 (2012)

Berking, P., Gallagher, S.: Choosing a learning management system. http://creativecommons.org/licenses/by-nc-sa/3.0/ (2011). Accessed 20 Feb 2012

Braak, V.J.: Factors influencing the use of computer mediated communication by teachers in secondary schools. Comput. Education 36(1), 41–57 (2001)

Braak, V.J., Goeman, K.: Differences between general computer attitudes and perceived computer attributes: development and validation of a scale. Psychol. Rep. 92(2), 655–660 (2003)

Braak, V.J., Tearle, P.: The computer attributes for learning scale (CALS) among university students: scale development and relationship with actual computer use for learning. Comput. Hum. Behav. 23(6), 2966–2982 (2007)

Broos, A.: Gender and information and communication technologies (ICT) anxiety: male self-assurance and female hesitation. CyberPsychol. Behav. 8, 21–31 (2005)

Bulter, R., Ryan, R., Chao, T.: Gender and technology in the liberal arts: aptitudes, attitudes, and skills acquisition. J. Inf. Technol. Education 4, 347–362 (2005)

Casmar, S., Peterson, N.: Personal factors influencing faculty’s adoption of computer technology: a model framework. http://coe.sdsu.edu/scasmar/site2002.pdf (2002). Accessed 20 Feb 2012

Caspi, A., Chajut, E., Saporta, K.: Participation in class and in online discussions: gender differences. Comput. Education 50(3), 718–724 (2006)

Chang, S.-C., Tung, F.-C.: An empirical investigation of students’ behavioural intentions to use the online learning course websites. British J. Educational Technol. 39(1), 71–83 (2008)

Cheung, G.W., Rensvold, R.B.: Evaluating goodness-of-fit indexes for testing measurement invariance. Struct. Equ. Model. 9(2), 233–255 (2002)

Chou, C., Wu, H.-C., Chen, C.-H.: Re-visiting college students’ attitudes toward the internet-based on a 6-T model: gender and grade level difference. Comput. Education 56(4), 939–947 (2011). doi:10.1016/j.compedu.2010.11.004

Dambrot, F.H.: The correlates of sex differences in attitudes toward and involvement with computers. J. Vocat. Behav. 27(1), 71–86 (1985)

Davis, F.D., Bagozzi, R.P., Warshaw, P.R.: User acceptance of computer technology: a comparison of two theoretical models. Manag. Sci. 35, 982–1003 (1989)

Dearing, J.W., Meyer, G.: An exploratory tool for predicting adoption decisions. Sci. Commun. 16(1), 43–57 (1994)

DeNeui, D.L., Dodge, T.L.: Asynchronous learning networks and student outcomes: the utility of online learning components in hybrid courses. J. Instr. Psychol. 33(4), 256–259 (2006)

Ding, N., Bosker, R.J., Harskamp, E.G.: Exploring gender and gender pairing in the knowledge elaboration processes of students using computer-supported collaborative learning. Comput. Education. doi:10.1016/j.compedu.2010.06.004 (in press)

Egri, C.P.: Introduction: developing conversations about management education. Acad. Manag. Learn. Education 11(1), 124–124 (2012). doi:10.5465/amle.2012.0031

Engwall, L.: The anatomy of management education. Scand. J. Manag. 23(1), 4–35 (2007). doi:10.1016/j.scaman.2006.12.003

Fulk, J., Gould, J.J.: Features and contexts in technology research: a modest proposal for research and reporting. J. Comput. Mediat. Commun. 14(3), 764–770 (2009). doi:10.1111/j.1083-6101.2009.01469.x

Greve, H.R.: Correctly assessing the value of our research to management education. Acad. Manag. Learn. Education 11(2), 272–277 (2012)

Gutek, B.A., Bikson, T.K.: Differential experiences of men and women in computerized offices. Sex Roles 13(3), 123–136 (1985)

Ireland, R.D.: Management research and managerial practice: a complex and controversial relationship. Acad. Manag. Learn. Education 11(2), 263–271 (2012)

Jaccard, J., Choi, K.W.: LISREL Approaches to Interaction Effects in Multiple Regression. Sage Publications, Thousand Oaks, CA (1996)

Jacobsen, D. M.: Examining technology adoption patterns by faculty in higher education. Paper presented at the ACEC2000: learning technologies, teaching and the future of schools, Melbourne, Australia, 6–9 July 2000.

Kay, R.H., Lauricella, S.: Gender differences in the use of laptops in higher education: a formative analysis. J. Educational Comput. Res. 44(3), 361–380 (2011)

Kelan, E.K., Jones, R.D.: Gender and the MBA. Acad. Manag. Learn. Education 9(1), 26–43 (2010)

Kenny, D.A., Judd, C.M.: Estimating the nonlinear and interactive effects of latent variables. Psychol. Bull. 96, 201–210 (1984)

Lane, L.M.: Toolbox or trap? course management systems and pedagogy. EDUCAUSE Q. 31(2), 4–6 (2008)

Lau, S.-H., Woods, P.C.: Understanding learner acceptance of learning objects: the role of learning object characteristics and individual differences. British J. Educational Technol. 40(6), 1059–1075 (2009)

Lin, M.-C., Tutwiler, M.S., Chang, C.-Y.: Gender bias in virtual learning environments: an exploratory study. British J. Educational Technol. 43(2), E59–E63 (2012). doi:10.1111/j.1467-8535.2011.01265.x

Lin, S., Overbaugh, R.C.: Computer-mediated discussion, self-efficacy, and gender. British J. Educational Technol. 40(6), 999–1013 (2009)

Malikowski, S.R.: Factors related to breadth of use in course management systems. Internet High. Education 11(2), 81–86 (2008). doi:10.1016/j.iheduc.2008.03.003

Malikowski, S.R., Thompson, M.E., Theis, J.G.: External factors associated with adopting a CMS in resident college courses. Internet High. Education 9(3), 163–174 (2006). doi:10.1016/j.iheduc.2006.06.006

Malikowski, S.R., Thompson, M.E., Theis, J.G.: A model for research into course management systems: bridging technology and learning theory. J. Educational Comput. Res. 36(2), 149–173 (2007)

Martins, L.L., Kellermanns, F.W.: A model of business school students’ acceptance of a web-based course management system. Acad. Manag. Learn. Education 3(1), 7–26 (2004)

Meredith, W.: Measurement invariance, factor analysis and factorial invariance. Psychometrika 58(4), 525–543 (1993)

Moosmayer, D.C.: A model of management academics’ intentions to influence values. Acad. Manag. Learn. Education 11(2), 155–173 (2012)

Morgan, G.: Faculty use of course management systems, vol. 2. EDUCAUSE Center for Applied Research, Boulder, CO (2003)

Naveh, G., Tubin, D., Pliskin, N.: Student LMS use and satisfaction in academic institutions: the organizational perspective. Internet High. Education 13(3), 127–133 (2010). doi:10.1016/j.iheduc.2010.02.004

Neuman, D.: Naturalistic inquiry and the perseus project. Comput. Humanit. 25(4), 239–246 (1991)

Oh, S., Ahn, J., Kim, B.: Adoption of broadband Internet in Korea: the role of experience in building attitudes. J. Inf. Technol. 18(4), 267–280 (2003)

Osborn, D.: Do print, web-based, or blackboard integrated tutorial strategies differentially influence student learning in an introductory psychology class? J. Instr. Psychol. 37(3), 247 (2010)

Park, N., Lee, K.M., Cheong, P.H.: University instructors’ acceptance of electronic courseware: an application of the technology acceptance model. J. Comput. Mediat. Commun. 13(1), (2007). Article 9

Redpath, L.: Confronting the bias against on-line learning in management education. Acad. Manag. Learn. Education 11(1), 125–140 (2012). doi:10.5465/amle.2010.0044

Reinen, I.J., Plomp, T.: Information technology and gender equality: a contradiction in terminis? Comput. Education 28, 65–78 (1997)

Rogers, E.M.: Diffusion of Innovations, 4th edn. Simon and Schuster, New York (1995)

Rogers, E.M. (ed.): Diffusion of Innovations, 5th edn. Free Press, New York (2003)

Romiszowski, A.J.: How’s the e-learning baby? factors leading to success or failure of an educational technology innovation. Educational Technol. 44(1), 5–27 (2004)

Selim, H.M.: An empirical investigation of student acceptance of course websites. Comput. Education 40(4), 343–360 (2003)

Shen, D., Laffey, J., Lin, Y., Huang, X.: Social influence for perceived usefulness and ease of use of course delivery systems. J. Interact. Online Learn. 5(3), 270–282 (2006)

Sun, S., Konold, T.R., Fan, X.: Effects of latent variable nonnormality and model misspecification on testing structural equation modeling interactions. J. Exp. Education 79, 231–256 (2011)

Tella, A., Mutula, S.M.: Gender differences in computer literacy among undergraduate students at the University of Botswana: implications for library use. Malays. J. Libr. Inf. Sci. 13(1), 59–76 (2008)

Trotter, A.: Blackboard vs. Moodle, Education Week’s Digital Directions (2008). 21

Verdegem, P., De Marez, L.: Rethinking determinants of ICT acceptance: towards an integrated and comprehensive overview. Technovation 31(8), 411–423 (2011). doi:10.1016/j.technovation.2011.02.004

von Eye, A., Spiel, C., Wagner, P.: Structural equations modeling in developmental research concepts and applications. Methods Psychol. Res. Online 8(2), 75–112 (2003)

Vovides, Y., Sanchez-Alonso, S., Mitropoulou, V., Nickmans, G.: The use of e-learning course management systems to support learning strategies and to improve self-regulated learning. Educational Res. Rev. 2(1), 64–74 (2007). doi:10.1016/j.edurev.2007.02.004

Wadsworth, L.M., Husman, J., Duggan, M.A., Pennington, M.N.: Online mathematics achievement: effects of learning strategies and self-efficacy. J. Dev. Education 30(3), 6–14 (2007)

Yen-Chun Jim, W.U., Shihping, H., Lopin, K.U.O., Wen-Hsiung, W.U.: Management education for sustainability: a web-based content analysis. Acad. Manag. Learn. Education 9(3), 520–531 (2010). doi:10.5465/amle.2010.53791832

Acknowledgments

The authors gratefully acknowledge the support of this research through a grant (99-2511-S-142-012-98WFA0D00038) from the National Science Council of Taiwan.

Additional information

A preliminary version of this paper was presented at the 2012 Hawaii International Conference on Education, Jan 5–8.

Cite this article

Lin, S., Shih, TH. & Chuang, SH. Validating innovating practice and perceptions of course management system solutions using structural equation modeling. Qual Quant 48, 1601–1618 (2014). https://doi.org/10.1007/s11135-013-9864-y

DOI: https://doi.org/10.1007/s11135-013-9864-y