Abstract

DEA models are not amenable to differential arguments for extreme efficient units. Consequently, function representations of the approximating technology are not differentiable in the usual sense. Dually, this nondifferentiability is manifested by multiple optima to the Charnes et al. (Eur J Oper Res 2:429–444, 1978) DEA problem. This paper shows how a “calculus” can be applied to DEA, and, in particular, how this “calculus” resolves the resulting weight choice problem uniquely. The “calculus” is based on the concept of willingness to pay and well-known results in the convex analysis literature (Rockafellar, Convex analysis, 1970) for directional derivatives and their associated superdifferentials.

A central folk wisdom of efficiency analysis is that Data Envelopment Analysis (DEA) models are not amenable to differential arguments. This belief emerges because DEA technologies are conservative approximations derived as appropriate hulls of observed input and output data. Those “hulling” operations inevitably introduce “kinks” into the resulting frontiers of the enveloped data. These kinks, in turn, are typically inherited by function representations of the DEA technology.

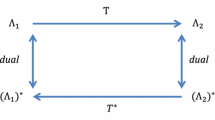

This lack of smoothness has limited the adoption of DEA and closely related methods by economists and others not specializing in efficiency analysis. But, in and of itself, it is not inherently important, particularly because it typically only occurs on sets of measure zero. Problems arise for efficiency analysis, however, because those sets of measure zero frequently correspond to “extreme” efficient points for the conservative approximation to the technology. These are precisely the points on which efficiency analysts frequently focus. And, as is well-known, “kinks” in primal (quantity) space map into “flats” in dual (price) space (McFadden 1978). Thus, the most familiar manifestation of this lack of differentiability is the lack of unique multipliers (shadow prices) for “extreme” efficient units. This multiplicity of multipliers for efficient units has led some to suggest that specific criteria should be developed for selecting appropriate multipliers from among the many contained in the dual “flat” corresponding to the primal “kink” associated with “extreme” efficient units (Färe and Korhonen 2004; Cooper et al. 2007).

This paper demonstrates that this desired set of criteria is straightforward. First, however, it shows how generalized differential arguments can be applied to DEA representations of technologies. Because one cannot always think in terms of the usual notion of a derivative, a different differential concept must be used. That concept is the directional derivative. The convexity properties of DEA technologies ensure that directional derivatives almost always exist (even at traditionally nondifferentiable points associated with primal “kinks”). And, once properly understood, directional derivatives can be used in much the same fashion, with almost exactly the same intuition, as more usual differential arguments. As we show below, these derivatives also offer a unique and economically compelling solution to the shadow pricing problem associated with DEA technologies.

In what follows we first introduce our approach by way of a simple example that captures its essence. Then we introduce a general representation of the technology and the differential concept that we use. We show how that differential concept always yields computable and unique shadow prices (subject to an obvious normalization). Then we move on to the special case of DEA technologies and show how our concepts resolve some of the common problems that arise from lack of differentiability there.

1 An example

Let x denote a single input and y denote a single output. Assume that there exists a single observation (x, y) = (1, 1). The DEA technology that satisfies nonincreasing returns to scale and is consistent with this observation is

\[T=\left\{\left(x,y\right)\in {\mathbb{R}}_{+}^{2}:z\cdot 1\ge y,\;z\cdot 1\le x,\;0\le z\le 1\right\},\]

where z is the intensity variable. This technology is illustrated in Fig. 1.

The graph of this technology is smooth everywhere but at the points (0, 0) and (1, 1). Unfortunately, (1, 1) is often the most interesting point because it is “extreme” efficient. But at (1, 1), the graph of T has infinitely many supporting hyperplanes with slopes in the range [0, 1]. From a dual perspective, this is, perhaps, noted most clearly by recognizing that the constraints dual to (z1 ≥ y, z1 ≤ x ) in the Charnes et al. (1978) formulation of the DEA problem require that for all \(\left(w,p\right)\in {\mathbb{R}}_{+}^{2}\)

Each of these hyperplanes defines a potentially legitimate shadow price for the technology. Therein lies the problem usually encountered in DEA analyses.

Notice, however, that for appropriate movements, the relevant shadow prices associated with T are clear cut. For example, starting at (1, 1), what would a rational decisionmaker be willing to pay for an extra unit of x if T were the true technology? The answer is zero because such changes will not bring about any output growth. Conversely, if one asked how much a rational decisionmaker facing this T must be compensated for a one unit decrease in the input to keep him indifferent to being at (1, 1), the answer would be 1. In what follows, we show how to appropriately generalize these simplified concepts of willingness to pay and willingness to accept in terms of directional derivatives to make differential analysis of DEA technologies both easy and economically meaningful.
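The two shadow prices in this example can be checked numerically. The following is a minimal Python sketch, assuming the technology can be summarized by the frontier y = min(x, 1) (a reading of the single observation (1, 1) under nonincreasing returns to scale and free disposal). The names `frontier` and `one_sided_slopes` and the step size `h` are hypothetical and chosen only for illustration.

```python
def frontier(x):
    """Largest output attainable from input x, assuming y <= min(x, 1)."""
    return min(x, 1.0)


def one_sided_slopes(f, x, h=0.25):
    """Return (right slope, left slope) of f at x from one-sided difference quotients."""
    right = (f(x + h) - f(x)) / h   # value of one more unit of input
    left = (f(x) - f(x - h)) / h    # compensation required for one less unit
    return right, left


# Evaluate at the "extreme" efficient point (1, 1).
willingness_to_pay, willingness_to_accept = one_sided_slopes(frontier, 1.0)
print(willingness_to_pay, willingness_to_accept)
```

Because this frontier is piecewise linear, any step no larger than one gives the exact one-sided slopes, which is why a coarse step suffices here. The two values are the endpoints of the range [0, 1] of supporting slopes at (1, 1).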

2 Basics

We concentrate on input-based representations of the technology, while noting that our arguments extend directly to both output-based and graph-based representations of the technology with virtually no changes in the argument (Footnote 1). The technology is represented by a continuous input correspondence, \(V:{\mathbb{R}}_{+}^{M}\rightarrow 2^{{{\mathbb{R}}_{+}^{N}}},\) that maps points in output space, denoted by \(y\in {\mathbb{R}}_{+}^{M},\) into subsets of the input space, \({\mathbb{R}}_{+}^{N},\) and is defined as

\[V\left(y\right)=\left\{x\in {\mathbb{R}}_{+}^{N}:x\text{ can produce }y\right\}.\]

We assume that the image of the correspondence, V(y), is convex for all \(y\in{\mathbb{R}}_{+}^{M}\) and exhibits free disposability of inputs, so that \(V\left(y\right)+{\mathbb{R}}_{+}^{N}\subset V\left(y\right)\) for all \(y\in{\mathbb{R}}_{+}^{M}\) (Footnote 2).

In what follows, we rely heavily on directional derivatives. Because these are closely related geometrically to the concept of a directional distance function, we use directional distance functions as our function representations of the technology. The directional input distance function for \(g\in {\mathbb{R}}_{+}^{N}\backslash 0^{N}\) (results are symmetric for directional output distance functions) is defined by

\[D\left(x,y,g\right)=\max \left\{\beta \in {\mathbb{R}}:x-\beta g\in V\left(y\right)\right\}\]

if there exists β such that x − βg ∈ V(y) and −∞ otherwise. Given our assumptions on V(y), D(x, y, g) is nondecreasing and concave in x and satisfies (Chambers et al. 1996) the translation property

\[D\left(x+\alpha g,y,g\right)=D\left(x,y,g\right)+\alpha ,\quad \alpha \in {\mathbb{R}},\qquad (1)\]

and the representation property

\[D\left(x,y,g\right)\ge 0\Leftrightarrow x\in V\left(y\right).\qquad (2)\]

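Because D(x, y, g) is concave in x (Rockafellar 1970, Theorem 23.1), its (one-sided) directional derivative

\[D^{\prime}\left(x,y,g;x^{0}\right)=\lim_{\lambda \downarrow 0}\frac{D\left(x+\lambda x^{0},y,g\right)-D\left(x,y,g\right)}{\lambda}\]

is a superlinear (positively linearly homogeneous and concave) function of \(x^{0}\) with \(D^{\prime}\left(x,y,g;0\right)=0\) and

\[-D^{\prime}\left(x,y,g;-x^{0}\right)\ge D^{\prime}\left(x,y,g;x^{0}\right).\]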

Moreover,

\[D^{\prime}\left(x+\beta g,y,g;x^{0}\right)=D^{\prime}\left(x,y,g;x^{0}\right)\]

for all \(\beta \in {\mathbb{R}}.\) Thus, directional derivatives for directional distance functions are translation invariant in the direction defining the directional distance function.

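The superdifferential of D in x, which we denote as ∂D(x, y, g), is defined as

\[\partial D\left(x,y,g\right)=\left\{v\in {\mathbb{R}}^{N}:D\left(x,y,g\right)+v^{\prime}\left(\tilde{x}-x\right)\ge D\left(\tilde{x},y,g\right)\text{ for all }\tilde{x}\right\}.\]

By basic results (Rockafellar 1970, Theorems 23.3 and 23.4),

\[D^{\prime}\left(x,y,g;x^{0}\right)=\min \left\{v^{\prime}x^{0}:v\in \partial D\left(x,y,g\right)\right\},\]

or equivalently,

\[\partial D\left(x,y,g\right)=\left\{v:v^{\prime}x^{0}\ge D^{\prime}\left(x,y,g;x^{0}\right)\text{ for all }x^{0}\right\}.\]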

When D(x, y, g) is differentiable in x, ∂D(x, y, g) is the singleton set {∇D(x, y, g)}, where ∇D(x, y, g) denotes the gradient of D(x, y, g) in x. Conversely, when ∂D(x, y, g) is a singleton set, D(x, y, g) is differentiable in x (Rockafellar 1970). Therefore, when D(x, y, g) is differentiable in x, \(D^{\prime}\left(x,y,g;x^{0}\right)\) is the inner product of the gradient and \(x^{0}\), or more compactly

\[D^{\prime}\left(x,y,g;x^{0}\right)=\nabla D\left(x,y,g\right)^{\prime}x^{0}.\qquad (6)\]

When D is differentiable everywhere but on a set of Lebesgue measure zero, ∂D(x, y, g) is computable using ∇D(x, y, g). Clarke (1983, Theorem 2.5.1) shows that if \(\Upgamma \subset{\mathbb{R}}^{Q}\) is a set of (Lebesgue) measure zero such that D(x, y, g) is differentiable on its complement, \(\Upgamma ^{C}\subset{\mathbb{R}}^{Q},\) then

\[\partial D\left(x,y,g\right)=\text{co}\left\{\lim \nabla D\left(x^{j},y,g\right):x^{j}\rightarrow x,\;x^{j}\in \Upgamma ^{C}\right\},\]

where co{} denotes the convex hull of the indicated set.

Using these results establishes:

Lemma 1

(Chambers et al. 2004; Chambers and Quiggin 2007) If v ∈ ∂D(x, y, g), then

\[v^{\prime}g=1\]

and v ∈ ∂D(x + βg, y, g) for all \(\beta \in{\mathbb{R}}.\)

Proof

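By (4),

\[D^{\prime}\left(x,y,g;g\right)=1,\]

while a symmetric argument establishes:

\[D^{\prime}\left(x,y,g;-g\right)=-1.\]

Therefore if v ∈ ∂D(x, y, g), by (5)

\[v^{\prime}g\ge 1\quad \text{and}\quad -v^{\prime}g\ge -1,\]

which gives the first part. The second part now follows from the fact that \(D^{\prime}\left(x+\beta g,y,g;x^{0}\right)=D^{\prime}\left(x,y,g;x^{0}\right)\) for all \(\beta \in {\mathbb{R}}.\) \(\hfill\square\)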

Lemma 1 establishes two important facts that have important economic implications. First, the inner product of any element of ∂D(x, y, g) and g must equal one. As we shall see below, economically this reflects the fact that ∂D(x, y, g) contains the shadow prices of the inputs normalized by the shadow value of the numeraire bundle, g. Second, superdifferentials of directional distance functions are invariant to translations of the input vector in the direction of g.
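Both facts can be checked numerically on a toy technology. The sketch below is only illustrative: it assumes a hypothetical input set V(y) = {x : x ≥ a} and a strictly positive direction g, for which the directional distance function reduces to the smallest ratio (x_i − a_i)/g_i. The names `distance` and `gradient`, the vectors `a`, `g`, `x`, and the step `h` are assumptions, not objects from the paper.

```python
def distance(x, a, g):
    """D(x, y, g) = max{beta : x - beta*g >= a} for the assumed set V(y) = {x : x >= a}."""
    return min((xi - ai) / gi for xi, ai, gi in zip(x, a, g))


def gradient(x, a, g, h=0.125):
    """Forward-difference gradient of D in x; exact here because D is piecewise linear."""
    base = distance(x, a, g)
    grad = []
    for i in range(len(x)):
        bumped = list(x)
        bumped[i] += h
        grad.append((distance(bumped, a, g) - base) / h)
    return grad


a = (1.0, 1.0)   # hypothetical minimal input requirement
g = (1.0, 2.0)   # numeraire bundle
x = (3.0, 2.0)   # a point where D is differentiable

v = gradient(x, a, g)
inner = sum(vi * gi for vi, gi in zip(v, g))      # should equal one

alpha = 0.75
shifted = tuple(xi + alpha * gi for xi, gi in zip(x, g))
v_shifted = gradient(shifted, a, g)               # should equal v
print(v, inner, v_shifted)
```

At this point the gradient is the only element of the superdifferential, so the check covers the differentiable case only. At a kink one would repeat the computation for each adjacent linear piece and take the convex hull.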

To see precisely how Lemma 1 relates to shadow pricing results, note first that given input prices \(w\in {\mathbb{R}}_{++}^{N},\) the cost function associated with V(y) is defined:

\[c\left(w,y\right)=\min_{x}\left\{w^{\prime}x:x\in V\left(y\right)\right\}\]

if V(y) is nonempty and ∞ otherwise (Footnote 3). So long as there exists an x such that x − βg ∈ V(y) for some β, Chambers et al. (1996) have shown that by the representation property (2)

\[c\left(w,y\right)=\min_{x}\left\{w^{\prime}x-D\left(x,y,g\right)w^{\prime}g\right\}.\qquad (8)\]

Take any solution to (8) and denote it by x*. In what follows, we term it efficient or cost-efficient. Now consider the directional derivative of (8) in an arbitrary direction, \(x^{0}\), away from x*:

\[w^{\prime}x^{0}-D^{\prime}\left(x^{\ast},y,g;x^{0}\right)w^{\prime}g.\qquad (9)\]

If x* is optimal, this expression must be nonnegative in all possible directions, whence

\[\frac{w^{\prime}x^{0}}{w^{\prime}g}\ge D^{\prime}\left(x^{\ast},y,g;x^{0}\right)\qquad (10)\]

for all \(x^{0}\). Using (5) thus establishes that \(\frac{w}{w^{\prime}g}\in \partial D\left(x^{\ast},y,g\right).\) When attention is restricted to efficient points, ∂D(x*, y, g) must contain all the viable normalized shadow or virtual prices for D at x*. Naturally, when weighted by the elements of the numeraire vector, these virtual prices sum to one by Lemma 1. (Importantly, as we show below, this constraint on shadow prices is always inherited by the Charnes et al. (1978) DEA formulation of D(x, y, g).)

There are several things to note. First, by taking \(x^{0}=g\) while applying (4) to (9), one obtains

\[w^{\prime}g-D^{\prime}\left(x^{\ast},y,g;g\right)w^{\prime}g=0.\]

Hence, translations of x* in the direction of g yield no change in the objective function for (8). Thus, as demonstrated by Chambers (2001), if x* is a solution to (8) then so is any translation of it in the direction of g. This solution indeterminacy is resolved by setting D(x*, y, g) = 0 to ensure that x* is on the frontier of V(y).

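Second, letting \(e_{i}\) denote the ith element of the usual orthonormal basis, it follows from (10) that for an efficient point x*

\[\frac{w_{i}}{w^{\prime}g}\ge D^{\prime}\left(x^{\ast},y,g;e_{i}\right).\]

Thus, any normalized price at which x* is efficient is an upper bound for \(D^{\prime}\left(x^{\ast},y,g;e_{i}\right)\). Hence, \(D^{\prime}\left(x^{\ast},y,g;e_{i}\right)\) measures what an efficient decisionmaker would be willing to pay for one extra unit of \(x_{i}\). For that reason, we refer to \(D^{\prime}\left(x^{\ast},y,g;e_{i}\right)\) as the willingness to pay for a unit of \(x_{i}\) (denominated in units of the numeraire commodity bundle g). Symmetrically, now consider a movement in the direction of \(-e_{i}\). Intuitively, this can be associated with the sale of one unit of \(x_{i}\). We have:

\[-\frac{w_{i}}{w^{\prime}g}\ge D^{\prime}\left(x^{\ast},y,g;-e_{i}\right),\]

whence

\[\frac{w_{i}}{w^{\prime}g}\le -D^{\prime}\left(x^{\ast},y,g;-e_{i}\right),\]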

and noting that

then establishes that it is appropriate to refer to \(-D^{\prime}\left(x^{\ast},y,g;-e_{i}\right)\) as the willingness to accept for a unit of \(x_{i}\).

By the properties of the directional derivative:

\[-D^{\prime}\left(x^{\ast},y,g;-e_{i}\right)\ge D^{\prime}\left(x^{\ast},y,g;e_{i}\right).\]

The divergence between the willingness to accept and the willingness to pay emerges from the fact, illustrated in our initial example, that a unit operating at a kink point on an efficient frontier evaluates the acquisition of extra units of an input and the sale of some units of an input differently. Moreover, as a simple buy-low, sell-high intuition would suggest, the selling price in such instances should be at least as large as the buying price.

Even for such units, however, although there are potentially infinitely many shadow prices for \(x_{i}\), there are only two economically relevant shadow prices, the shadow buying price and the shadow selling price. These prices can diverge because of the nonsmoothness of the technology. But even though they diverge, they are unique. On the other hand, units facing a smooth technology, at the margin, are willing to buy and sell inputs at the same unique shadow price.

More generally, we shall refer to \(-D^{\prime}\left(x^{\ast},y,g;-x^{0}\right)\) as the willingness to accept for \(x^{0}\) at x* and \(D^{\prime}\left(x^{\ast},y,g;x^{0}\right)\) as the willingness to pay. \(D^{\prime}\left(x^{\ast},y,g;x^{0}\right)\) is superlinear (positively linearly homogeneous and superadditive) in \(x^{0}\). Positive linear homogeneity implies that a renormalization of the units of \(x^{0}\) leads to an equivalent renormalization of the willingness to pay. If \(x^{0}=x^{1}+x^{2}\), superadditivity implies that the gap between willingness to accept and willingness to pay for \(x^{0}\) is no larger than the sum of the respective gaps for \(x^{1}\) and \(x^{2}\). Superadditivity also has important implications for the shadow pricing of inputs. It implies

More generally, because \(x^{0}=\sum_{n=1}^{N}x_{n}^{0}e_{n},\) superadditivity requires that

\[D^{\prime}\left(x^{\ast},y,g;x^{0}\right)\ge \sum_{n=1}^{N}x_{n}^{0}D^{\prime}\left(x^{\ast},y,g;e_{n}\right).\]

In evaluating the value of a small move in the direction of \(x^{0}>0^{N}\), it is improper to take each \(x_{n}^{0}\), multiply it by \(D^{\prime}\left(x^{\ast},y,g;e_{n}\right)\), and then sum. This only provides a lower bound for the true willingness to pay. Depending upon their desired use, the multipliers selected to weight inputs can differ. Thus, while the selection criterion offered by the directional derivative always yields a unique willingness to pay for any move, the actual input weights chosen may change as different moves are considered. One particularly important manifestation emerges when \(x^{0}=x^{\ast}\). Then the rule for multiplier selection is

\[D^{\prime}\left(x^{\ast},y,g;x^{\ast}\right)=\min \left\{v^{\prime}x^{\ast}:v\in \partial D\left(x^{\ast},y,g\right)\right\}.\]

Thus, the appropriate multipliers belong to arg min {v′x* : v ∈ ∂D(x*, y, g)}. Similarly,

However, if the technology is smooth at x, ∂D(x, y, g) = {∇D(x, y, g)}, and inputs have “unique” weights regardless of the direction of the move. Expression (6) then implies

Before applying these results, it is important to emphasize that the interpretation of \(D^{\prime}\left(x^{\ast},y,g;x^{0}\right)\) as willingness to pay or of \(-D^{\prime}\left(x^{\ast},y,g;-x^{0}\right)\) as willingness to accept is only sensible under the presumption that x* is efficient. That, however, does not mean that \(D^{\prime}\left(x^{\ast},y,g;x^{0}\right)\) is irrelevant if x* is not efficient. Instead its interpretation needs to be modified. If x* is not efficient, then just as in the usual calculus, \(D^{\prime}\left(x^{\ast},y,g;x^{0}\right)\) measures how the directional distance function will change as a result of a small move in the direction of \(x^{0}\). But it is not then appropriate to identify \(D^{\prime}\left(x^{\ast},y,g;x^{0}\right)\) with a willingness to pay. As a practical matter, however, when x* is not DEA “extreme” efficient, \(D^{\prime}\left(x^{\ast},y,g;x^{0}\right)\) will be linear in \(x^{0}\) (and not just superlinear) because ∂D(x*, y, g) is, in most practical instances, a singleton for inefficient units. And while that singleton set is often referred to as containing a vector of shadow prices, it is now important to distinguish the programming notion of a shadow price from the economic notion of a shadow price. The economic notion always presumes efficiency, while the programming notion does not. Hence, those elements of ∂D(x*, y, g) are more properly thought of simply as gradients (presuming uniqueness) or supergradients when x* is not efficient.
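The difference between summing input-by-input valuations and valuing a whole move can be illustrated with a small numerical sketch. It assumes a hypothetical directional distance function D(x, y, g) = min(x_1 − 1, x_2 − 1), which corresponds to g = (1, 1) and an input set {x : x ≥ (1, 1)}. The function names, the two evaluation points, and the step `h` are illustrative assumptions rather than the paper's notation.

```python
def distance(x, floor=(1.0, 1.0)):
    """Assumed D(x, y, g) for g = (1, 1) and input set {x : x >= floor}."""
    return min(xi - fi for xi, fi in zip(x, floor))


def one_sided(x, direction, h=2.0 ** -10):
    """One-sided difference quotient of D at x along `direction`."""
    moved = [xi + h * di for xi, di in zip(x, direction)]
    return (distance(moved) - distance(x)) / h


def naive_sum(x, direction):
    """Weight each coordinate of the move by the unit-direction derivative and add up."""
    total = 0.0
    for n, dn in enumerate(direction):
        e_n = [1.0 if i == n else 0.0 for i in range(len(x))]
        total += dn * one_sided(x, e_n)
    return total


kink = (1.0, 1.0)     # frontier point where D is not differentiable
smooth = (3.0, 1.0)   # frontier point where D is differentiable
move = (2.0, 1.0)     # a nonnegative direction

print(one_sided(kink, move), naive_sum(kink, move))
print(one_sided(smooth, move), naive_sum(smooth, move))
```

At the kink the naive sum understates the value of the move, while at the differentiable point the two numbers coincide, in line with the lower-bound statement above.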

3 Applying the calculus to DEA models

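Suppose that one is given a data set \(\left(x^{k},y^{k}\right)\) for k = 1, 2, ..., K, where K is the number of observations. Then the constant returns to scale free disposal hull of the data is:

\[T\left(K\right)=\left\{\left(x,y\right):\sum_{k=1}^{K}z_{k}x^{k}\le x,\;\sum_{k=1}^{K}z_{k}y^{k}\ge y,\;z\in {\mathbb{R}}_{+}^{K}\right\}.\]

The corresponding directional distance function for a particular (x, y) is given by

\[D^{T\left(K\right)}\left(x,y,g\right)=\max \left\{\beta :\sum_{k=1}^{K}z_{k}x^{k}\le x-\beta g,\;\sum_{k=1}^{K}z_{k}y^{k}\ge y,\;z\in {\mathbb{R}}_{+}^{K}\right\}.\]

To clarify the linkage between our concept of willingness to pay and DEA representations of the technology, rewrite this directional distance function in its Charnes et al. (1978) dual formulation as

\[D^{T\left(K\right)}\left(x,y,g\right)=\min_{\left(w,p\right)}\left\{w^{\prime}x-p^{\prime}y:p^{\prime}y^{k}-w^{\prime}x^{k}\le 0,\;k=1,\ldots ,K,\;w^{\prime}g\ge 1\right\},\qquad (11)\]

where, as usual, \(\left(w, p\right)\in{\mathbb{R}}_{+}^{N+M}\) denote the multipliers (shadow prices) of x and y, respectively. In this version of the optimization problem, Lemma 1 is directly reflected in the multiplier normalization constraint, w′g ≥ 1. Denote the solution set to (11) by:

\[\left\{\left(w^{\ast},p^{\ast}\right)\right\}=\arg \min \left\{w^{\prime}x-p^{\prime}y:p^{\prime}y^{k}-w^{\prime}x^{k}\le 0,\;k=1,\ldots ,K,\;w^{\prime}g\ge 1\right\}.\]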

By our earlier arguments and the normalization requirement (w′g ≥ 1),

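Thus, the best DEA estimate of the willingness to pay for a small unit increase in \(x_{i}\) is

\[\min \left\{w_{i}:\left(w,p\right)\in \left\{\left(w^{\ast},p^{\ast}\right)\right\}\right\}.\]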

Similarly, the best estimate of the willingness to accept for a small unit decrease in \(x_{i}\) is given by max {\(w_{i}\) : (w, p) ∈ {(w*, p*)}}, while the corresponding willingness to pay for and willingness to accept a marginal perturbation of x in the direction \(x^{0}\) (and \(-x^{0}\)) are given, respectively, by min {\(w^{\prime}x^{0}\) : (w, p) ∈ {(w*, p*)}} and max {\(w^{\prime}x^{0}\) : (w, p) ∈ {(w*, p*)}}.
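These min and max selections are straightforward to compute once the optimal input multipliers are known. The sketch below assumes that the optimal multiplier set is a bounded polytope, normalized so that w′g = 1, and that its vertices have already been obtained from an LP solver. A linear function attains its extremes over such a set at a vertex, so scanning the vertices is enough. The function names and the vertex list are hypothetical; the vertices (1, 0) and (0, 1) are chosen only as an illustration with g = (1, 1).

```python
def inner(w, direction):
    """Inner product w'x0."""
    return sum(wi * di for wi, di in zip(w, direction))


def willingness_to_pay(vertices, direction):
    """min of w'x0 over the optimal multiplier set, evaluated at its vertices."""
    return min(inner(w, direction) for w in vertices)


def willingness_to_accept(vertices, direction):
    """max of w'x0 over the optimal multiplier set, evaluated at its vertices."""
    return max(inner(w, direction) for w in vertices)


# Hypothetical vertices of the optimal input multipliers, each satisfying w'g = 1 for g = (1, 1).
vertices = [(1.0, 0.0), (0.0, 1.0)]

e1, e2 = (1.0, 0.0), (0.0, 1.0)
move = (2.0, 1.0)

print(willingness_to_pay(vertices, e1), willingness_to_accept(vertices, e1))
print(willingness_to_pay(vertices, move), willingness_to_accept(vertices, move))
```

The same multiplier set yields different selected weights for different moves: the minimizing vertex for `e1` is (0, 1), while for `move` it is again (0, 1) but with value 1, and the maximizing vertex is (1, 0) in both cases.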

We illustrate with a simple example. There are two observations with two inputs and one output given by x 1 = x 2 = y = 1 and x 1 = 3, x 2 = 2, and y = 2. By (11), for g = (1, 1)

Thus, D T(K)(1, 1, 1, (1, 1) ) = 0, and at this point

The willingness to pay for a small increase in \(x_{i}\) is given by

while the willingness to accept a small decrease in \(x_{i}\) is given by

The willingness to pay for a small perturbation in the direction \(x^{0}>0\) is

while the willingness to accept a small movement in the direction \(-x^{0}\) is

To illustrate the superlinearity of willingness to pay, notice that

To illustrate the computation of \(D^{\prime}\left(x^{\ast},y,g;x^{0}\right)\) for an inefficient unit, let us consider the point (3, 2, 2). Under constant returns to scale, this unit is clearly inefficient. Taking the directional vector to be g = (1, 0), one obtains:

with

Because (3, 2, 2) is not on the efficient frontier, it is inappropriate to interpret (w*, p*) in this instance in terms of willingness to pay or willingness to accept, but \(D^{T\left(K\right)^{\prime}}\left(3,2,2,\left(1,0\right);x^{0}\right)\) still exists and is given uniquely by \(x_{1}^{0}.\) Now, however, it simply measures how \(D^{T\left(K\right)}\left(3,2,2,\left(1,0\right)\right)\) changes as we move in the direction \(x^{0}\).

Taking the directional vector to be (1, 1) or (0, 1) gives \(D^{T\left(K\right)}\left(3,2,2,g\right)=0\) with (w*, p*) = (0, 1, 1), whence \(D^{T\left(K\right)^{\prime}}\left(3,2,2,g;x^{0}\right)=x_{2}^{0}.\)
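The numbers in this example can be reproduced without an LP library. The following is a sketch, not the authors' procedure: it solves the primal problem (maximize β subject to the constant returns to scale hull constraints) for the two observations by enumerating candidate vertices in the three unknowns (z_1, z_2, β) with exact rational arithmetic, and it approximates directional derivatives by a one-sided difference quotient. The function names, the step `h`, and the restriction to exactly two observations are assumptions made for brevity.

```python
from fractions import Fraction
from itertools import combinations

# Observations from the text: inputs (1, 1) with output 1, inputs (3, 2) with output 2.
DATA = (((1, 1), 1), ((3, 2), 2))


def det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(A, b):
    """Solve A v = b by Cramer's rule; return None when A is singular."""
    d = det3(A)
    if d == 0:
        return None
    return [det3([row[:j] + [b[i]] + row[j + 1:] for i, row in enumerate(A)]) / d
            for j in range(3)]


def directional_distance(x, y, g, data=DATA):
    """max beta s.t. z1*x^1 + z2*x^2 + beta*g <= x, z1*y^1 + z2*y^2 >= y, z >= 0."""
    (xa, ya), (xb, yb) = data
    # Each row encodes coeffs . (z1, z2, beta) <= rhs.
    rows = [([xa[0], xb[0], g[0]], x[0]),
            ([xa[1], xb[1], g[1]], x[1]),
            ([-ya, -yb, 0], -y),
            ([-1, 0, 0], 0),
            ([0, -1, 0], 0)]
    rows = [([Fraction(c) for c in a], Fraction(r)) for a, r in rows]
    best = None
    for trio in combinations(rows, 3):
        v = solve3([a for a, _ in trio], [r for _, r in trio])
        if v is None:
            continue
        feasible = all(sum(c * vi for c, vi in zip(a, v)) <= r for a, r in rows)
        if feasible and (best is None or v[2] > best):
            best = v[2]
    return best  # None means no feasible vertex was found


def directional_derivative(x, y, g, direction, h=Fraction(1, 1000)):
    """One-sided difference quotient of D in x along `direction` (assumes h is small enough)."""
    base = directional_distance(x, y, g)
    moved = [xi + h * di for xi, di in zip(x, direction)]
    shifted = directional_distance(moved, y, g)
    if base is None or shifted is None:
        return None
    return (shifted - base) / h


# Efficient unit (1, 1, 1) with g = (1, 1): buying versus selling the first input.
wtp_1 = directional_derivative((1, 1), 1, (1, 1), (1, 0))
wta_1 = -directional_derivative((1, 1), 1, (1, 1), (-1, 0))
# Inefficient unit (3, 2, 2) with g = (1, 1): response to a move in direction (5, 7).
slope = directional_derivative((3, 2), 2, (1, 1), (5, 7))
print(wtp_1, wta_1, slope)
```

Vertex enumeration is used here only because the problem has three unknowns; for realistic data sets a standard LP solver would be the natural choice. Because the distance function is piecewise linear in x, the difference quotient is exact as long as the step does not cross a breakpoint, which is an assumption behind the default `h`.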

4 Conclusion

DEA models, as formulated by Charnes et al. (1978), are not amenable to differential arguments for “extreme” efficient units. The reason, of course, is that in the primal input–output space, these points coincide with “kinks” in the approximating technology. Consequently, function representations of the approximating technology are not there differentiable in the usual sense. Dually, this nondifferentiability is manifested by the existence of alternate optima to the Charnes et al. (1978) DEA problem, and, in turn, it naturally raises the question of which weights to use in evaluating the relative importance of inputs and outputs. This problem is more than just an idle theoretical curiosity because as Cooper et al. (2007) show, the presence of alternate optima yields different evaluations of the relative importance of inputs and outputs for efficient units depending upon the software used to analyze the data.

This paper shows how a “calculus” can be applied to DEA, and, in particular, how this “calculus” resolves this weight choice problem uniquely. While not frequently used within the DEA literature, the “calculus” is based on well-known results in the convex analysis literature (Rockafellar 1970; Clarke 1983) for directional derivatives and their associated superdifferentials. Here, we have shown by example how these results can be applied to resolve the shadow price (weight choice) problem. But it is also clear that under suitable convexity assumptions on the graph of the technology, parallel methods can be used to calculate the elasticity of scale. In fact, we close this paper by noting that the elasticity of scale, as usually defined, is the ratio of a directional derivative to an observed output level. Thus, when properly extended, our “calculus” easily covers that case as well.

Notes

For output-based representations, arguments analogous to those below yield unique output multipliers, while for graph-based representations, analogous arguments yield input and output weights.

This is a weaker set of conditions than is usually imposed on DEA representations of technologies. Hence, the general differential developments here apply to a broader class of technologies than DEA.

It is known that the efficient subset in DEA models is bounded. By the specification of the DEA technology, the associated input set is always closed. Hence, using a DEA representation of the technology, c(w, y) can be specified in terms of \(w\in{\mathbb{R}}_{+}^{N}.\)

References

Chambers RG (2001) Consumers’ surplus as an exact and superlative cardinal welfare measure. Int Econ Rev 42:105–120

Chambers RG, Chung Y, Färe R (1996) Benefit and distance functions. J Econ Theory 70:407–419

Chambers RG, Färe R, Quiggin J (2004) Jointly radial and translation homothetic preferences: generalized constant risk aversion. Econ Theory 23:689–699

Chambers RG, Quiggin J (2007) Dual approaches to the analysis of risk aversion. Economica 74:189–213

Charnes A, Cooper WW, Rhodes E (1978) Measuring the efficiency of decision making units. Eur J Oper Res 2:429–444

Clarke FH (1983) Optimization and nonsmooth analysis. Wiley, New York

Cooper WW, Ruiz JL, Sirvent I (2007) Choosing weights from alternative optimal solutions of dual multiplier models. Eur J Oper Res 180:443–458

Färe R, Korhonen P (2004) Finding unique weights for efficient units in data envelopment analysis. Manuscript, Oregon State University

McFadden D (1978) Cost, revenue, and profit functions. In: Fuss M, McFadden D (eds) Production economics: a dual approach to theory and applications. North-Holland, Amsterdam

Rockafellar RT (1970) Convex analysis. Princeton University Press, Princeton

Cite this article

Chambers, R.G., Färe, R. A “calculus” for data envelopment analysis. J Prod Anal 30, 169–175 (2008). https://doi.org/10.1007/s11123-008-0104-8

DOI: https://doi.org/10.1007/s11123-008-0104-8