Abstract

A substantial challenge in improving public health is how to facilitate the local adoption of evidence-based interventions (EBIs). To do so, an important step is to build local stakeholders’ knowledge and decision-making skills regarding the adoption and implementation of EBIs. One EBI delivery system, called PROSPER (PROmoting School-community-university Partnerships to Enhance Resilience), has effectively mobilized community prevention efforts, implemented prevention programming with quality, and consequently decreased youth substance abuse. While these results are encouraging, another objective is to increase local stakeholder knowledge of best practices for adoption, implementation and evaluation of EBIs. Using a mixed methods approach, we assessed local stakeholder knowledge of these best practices over 5 years, in 28 intervention and control communities. Results indicated that the PROSPER partnership model led to significant increases in expert knowledge regarding the selection, implementation, and evaluation of evidence-based interventions. Findings illustrate the limited programming knowledge possessed by members of local prevention efforts and the difficulty of complete knowledge transfer, and they highlight one method for cultivating that knowledge.


Research on the effectiveness of evidence-based preventive interventions (EBIs) has shown their potential to reduce risk factors and promote positive development in youth (Mihalic et al. 2004; O’Connell et al. 2009; Spoth et al. 2008). Despite this evidence, EBIs have yet to be widely utilized by local communities (Wandersman and Florin 2003; Ringwalt et al. 2009). As a result, there have been few demonstrations of their potential public health impact at the community level. This diminished reach is believed to be due, in part, to the inadequate capacity of local service delivery systems to disseminate evidence-based prevention programs (Spoth and Greenberg 2005). In particular, it is likely that local stakeholders have insufficient knowledge regarding how to adopt, implement, and evaluate these programs (i.e., programming knowledge), which is often a substantial barrier to local program utilization (Adelman and Taylor 2003; Wandersman et al. 2008).

Community-based, comprehensive prevention approaches implemented through locally based coalitions have become increasingly popular to address behavioral health problems such as violence, substance abuse, and teenage pregnancy (see Roussos and Fawcett 2000). The growing popularity of a comprehensive community approach is due to developments in both theory and practice (e.g., Communities that Care–Hawkins et al. 2009; The PROSPER (PROmoting School-community-university Partnerships to Enhance Resilience) Delivery System–Spoth and Greenberg 2005, 2011). Further, policy makers recognize that discrete programs are rarely sufficient to alter community-wide prevalence rates (Butterfoss et al. 1993). Such community-focused initiatives have recently demonstrated their ability to improve local capacity and facilitate high-quality program delivery (Hawkins et al. 2009; Spoth et al. 2007b; Wandersman et al. 2008). A fundamental assumption of these approaches is that knowledge of effective programs and their operations is an important component of capacity building (Feinberg et al. 2004; Gomez et al. 2005). As part of this process, local teams are provided technical assistance (TA) with the goal of building the teams’ capacities to select, implement, and monitor EBIs. While TA is a valuable tool for supporting team functioning (e.g., planning, communication, recruitment–O’Donnell et al. 2000), it is unclear whether this support successfully develops the team members’ knowledge of prevention programming.

This paper evaluates the knowledge of prevention programming exhibited by community stakeholders participating in a randomized-control trial of the PROSPER model. A mixed methods approach is used to describe and evaluate community leader knowledge. Our analysis includes both a qualitative description of leaders’ programming knowledge, and a quantitative evaluation of knowledge development among PROSPER participants relative to leaders in control communities.

Prevention Systems & Technical Assistance

Wandersman et al. (2008) have created a useful conceptual model to characterize the components and needs of prevention diffusion. This model identifies systems vital to delivery and sustainability of EBIs. Of particular relevance here are prevention delivery systems–at the level of practitioners and providers–and corresponding prevention support systems. Specifically, the prevention support system provides TA in order to build the capacity of local delivery system infrastructures, which in turn sustain program implementation (for review, see Johnson et al. 2004; Wandersman et al. 2008).

Prevention systems, which operate under a community-partnership model, often develop collaborative relationships with technical support systems (e.g., Cooperative Extension System [CES], NGOs) and community entities (e.g., local schools and agencies) to share resources and address public health issues (Hawkins et al. 2002; Spoth and Greenberg 2005). Representatives from diverse groups are sought to form teams that coordinate EBI implementation and foster local collaboration. Training and TA are provided to facilitate adoption, implementation, and evaluation of EBIs by local teams. While general team functioning is often improved by the delivery of TA (Feinberg et al. 2002), relatively little work has evaluated how TA and team maturation may improve team members’ expertise and capacity (e.g., Feinberg et al. 2002; Riggs et al. 2008), and none has sought to qualitatively assess its impact on membership knowledge across time.

The PROSPER project combines prevention support and delivery systems that build sustainable partnerships among communities, schools and land-grant universities to promote diffusion of EBIs. PROSPER utilizes two existing service delivery systems–embedded cooperative extension agents and local public schools–to implement school- and family-based EBIs. The CES, which is located in over 3,000 US counties, has a variety of mechanisms for the delivery of TA to its local extension agents (see Backer and Rogers 1999). Both the public schools and the CES have wide reach, but historically lacked the programming capacity to faithfully deliver EBIs pertaining to substance-abuse prevention (Molgaard 1997; Spoth et al. 2004). The partnership with cooperative extension and the support from its extensive infrastructure are salient, unique features of PROSPER that distinguish it from other prevention delivery systems (e.g., Communities that Care, Getting to Outcomes, Common Language). Other distinguishing features concern differences in (1) local community team size, composition, and functions; (2) intervention menus; and (3) strategies for conducting ongoing, proactive technical assistance.

PROSPER builds local capacity in part through the activities of university prevention and extension faculty and prevention coordinators, who are CES or prevention-oriented university staff dedicated to providing TA to PROSPER communities. PROSPER teams are co-led by the local CES agent and a school representative and also include 5–15 additional members representing various community interests (e.g., parents, law enforcement, faith-based institutions, and substance abuse and mental health agencies). The team recruits participants, implements interventions, monitors implementation fidelity, and carries out resource generation and sustainability planning activities. Additionally, teams select from a menu of evidence-based programs and choose a family-based program to implement in 6th grade and a school-based program to implement in 7th grade.

Each prevention coordinator delivers TA to three or four prevention teams (about 1 day a week devoted to each team). Ongoing, proactive TA addresses a variety of programming issues including program selection, planning for program delivery, monitoring implementation, assessing program effectiveness, supporting resource generation, and promoting team sustainability (Spoth et al. 2007a). TA was provided through a variety of mediums, including biweekly phone calls, onsite consulting, electronic correspondence and learning community meetings (see Spoth et al. 2004; Spoth and Greenberg 2011).

Through this framework, PROSPER has effectively mobilized community prevention efforts, enhanced family and youth protective factors and decreased youth substance abuse (relative to comparison communities–Redmond et al. 2009; Spoth et al. 2007c, 2011). In addition, PROSPER programs have served over 10,000 youth (Spoth et al. 2007a), were implemented with high levels of fidelity (i.e., average of 90% program adherence), and were sustained for up to 6 consecutive years (Spoth et al. 2007b, under review).

Knowledge of Prevention Programming

While it is expected that PROSPER community team members will become more knowledgeable as they receive TA, there has been no empirical assessment of what knowledge, if any, has actually been gained. Greater understanding of community leaders’ knowledge and how that knowledge develops during involvement in coordinated prevention efforts might inform future capacity-building processes. Here, we consider three of the most salient areas of programming knowledge–adoption, implementation, and evaluation–and further explore specific knowledge domains within these areas (i.e., knowledge of prevention program information sources, standards of evidence, methods for assuring program fidelity, and program evaluation techniques).

Finding and evaluating the best-fitting EBIs has become increasingly complicated for local community prevention teams, given that terms such as science-based, scientifically-tested, and research-based can be deceptive and claims of effectiveness are sometimes exaggerated. One type of resource that has assisted communities in finding EBIs consists of lists of programs with substantial evidence demonstrating program effectiveness (e.g., Mihalic et al. 2001; SAMHSA 2011). To further understand the sources local leaders use to find EBIs, we solicited their knowledge of these lists and other sources of prevention programs (i.e., sources of program).

The second knowledge domain considers the standards of evidence used by local leaders to determine whether a program is effective and appropriate for their community. Prevention scientists have previously developed formal standards of evidence that can be used to guide discussion of this knowledge domain (e.g., Flay et al. 2005; Hallfors and Godette 2002). Ideally, program effectiveness is determined using randomized-control, time-series, or matched-control designs with long-term follow-ups that examine whether programs yield significant improvements in distal public health outcomes (Flay et al. 2005).

A third domain of knowledge concerns the mechanics of implementing EBIs. This domain includes issues such as participant recruitment and program delivery (Durlak and Dupre 2008). For instance, program delivery requires an understanding of how to ensure programs are faithfully replicated (i.e., fidelity assurance). In addition, this domain involves local leaders’ recognition of the importance of training, the reasons for maintaining fidelity, and methods for monitoring program adherence (e.g., Aarons et al. 2009; Durlak 1998; Fixsen et al. 2005).

Lastly, program evaluation is essential to assessing the impact of local prevention efforts and increasingly required by funding agencies (Wandersman and Florin 2003). Scientifically-sound and skillful program evaluations can serve as a tool for soliciting funding and thus are integral to future sustainability. Because of the importance of ongoing program evaluation for sustaining program infrastructure and delivery, PROSPER provides TA to build teams’ capacity to assess the impact of their own local efforts.

This report summarizes findings on changes in knowledge of local leaders from the pretest, at the time of PROSPER team formation, to the ongoing operations and sustainability phase 5 years later. By the fifth project year, teams have implemented both family- and school-focused evidence-based interventions for 3 years, and have already raised local sustainability funds to continue program delivery. These activities illustrate increases in the sustainability of local efforts as the teams mature.

Methods

Community Recruitment

The PROSPER project recruited 14 communities in Iowa and 14 communities in Pennsylvania based upon four criteria that included (1) school district enrollment between 1,301 and 5,200 students, (2) at least 15% of families eligible for reduced-cost lunch, (3) a maximum of 50% of the adult population could be employed or attending a college or university, and (4) the community could not be involved in other university-affiliated, youth-focused prevention initiatives. Participating communities were predominantly rural and had a median household income of $37,070. Communities were matched by geographic location and size; each pair of communities was randomized into intervention and control conditions. Detailed descriptions of community recruitment, selection and randomization are available in previous reports (see Spoth et al. 2004, 2007c).
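The matched-pair randomization described above can be illustrated with a minimal Python sketch. It assumes only what the text states (communities are paired, then one member of each pair is assigned to each condition); the community labels, the seed, and the function name `randomize_matched_pairs` are hypothetical and are not taken from the project's actual procedure.

```python
import random


def randomize_matched_pairs(pairs, seed=None):
    """Assign one community in each matched pair to each condition."""
    rng = random.Random(seed)
    assignment = {}
    for first, second in pairs:
        # A fair coin flip decides which member of the pair receives the intervention.
        if rng.random() < 0.5:
            intervention, control = first, second
        else:
            intervention, control = second, first
        assignment[intervention] = "intervention"
        assignment[control] = "control"
    return assignment


# Hypothetical labels; the study matched communities on geographic location and size.
pairs = [("IA-01", "IA-02"), ("IA-03", "IA-04"), ("PA-01", "PA-02")]
assignment = randomize_matched_pairs(pairs, seed=2002)
```

Randomizing within pairs guarantees that the two conditions end up with the same number of communities and are balanced on the matching variables.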

Participants

We conducted annual interviews with PROSPER team members and, in control communities, comparable individuals representing the community sectors involved in PROSPER teams. The sample of PROSPER team members and control community respondents analyzed in this report included 422 participants who engaged in at least 1 year of data collection. PROSPER team members included local Cooperative Extension, school, substance abuse and mental health agency representatives, as well as other community stakeholders. Our goal in recruiting participants within the control communities was to sample the equivalent of key team members who would have served on a PROSPER team had they received the partnership-based model.

Participation rates for the PROSPER teams and control site counterparts were high across all years. Over 98% of those who were recruited for the survey participated. In the 14 intervention communities, the average number of respondents per team across all years was 16.9 (ranging from 12 to 24). In the 14 control communities, the average number of respondents per community was 10.8 (ranging from 9 to 17). The final sample included 271 PROSPER community leaders and 151 control community leaders.

Procedure

Participants in both the intervention and control conditions were interviewed annually to assess their programming knowledge in each domain. Specifically, participants’ knowledge of program sources, standards of evidence and program evaluation were assessed from pretest–at the time the teams were formed–to 4 years later; knowledge of fidelity assurance was assessed from pretest to 6 years later. After completing the annual interview, respondents received monetary compensation for their participation ($20).

Measures

Interviews included four open-ended questions concerning programming knowledge. To elicit knowledge of program sources, participants were asked: “If someone asked you for the names of a couple of good prevention programs for youth, where would you go to research effective prevention programs?” To elicit knowledge of standards of evidence, participants responded to: “What kinds of information do you look for to decide if a program is backed by good research?” To elicit knowledge of fidelity assurance, participants were asked: “How can you ensure effective implementation of a prevention program–that is, make sure it was delivered the way it was designed?” To elicit knowledge of program evaluation participants were asked: “What are the best ways to decide if a prevention program is working well in your community?” A follow-up solicitation was employed to prompt participants to expand upon and clarify their initial responses.

Methods of Analyses

The study utilized an across-stage mixed model design (see Johnson and Onwuegbuzie 2004) to evaluate the programming knowledge of community leaders. Qualitative analyses were used to organize and describe the community leaders’ programming knowledge as well as to dichotomize responses based upon level of programming expertise. Then quantitative analyses were used to determine if PROSPER team members were significantly more knowledgeable of prevention programming than community leaders in the control condition.

Qualitative Analyses

Participant responses were coded using a grounded theory analytical approach (GT–see Creswell 2009; Glaser 1992; Johnson and Onwuegbuzie 2004). Through these analyses, categories were created, compared to the data, refined, re-compared and then re-refined. This iterative process facilitated the development of knowledge categories for each of the four knowledge domains (Table 1). Coding occurred within the three traditional stages of GT (i.e., open, axial and selective) (Strauss and Corbin 1998). During open coding, responses were fractured into discrete units (i.e., indicators). Distinct categories began to appear during analysis of the first wave and were fleshed out during subsequent waves. The axial coding phase focused on assessing the relationships between different concepts and coalescing indicators into more general categories. Selective coding was then used to synthesize the conceptual model of each knowledge domain around a core category (Strauss and Corbin 1998). Saturation appeared to occur after analyzing the first two waves of data, and was tested by analyzing the remainder of the waves with a focus on revealing cases incongruent with the induced categories. Coding was conducted by doctoral students on the project, and evaluation of inter-rater reliability indicated high agreement, with Cohen’s kappa coefficients ranging between .89 and .98 across knowledge domains. Kappa coefficients provide an estimate of percentage agreement between the raters, adjusted for chance agreement (Landis and Koch 1977).
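To make the chance adjustment concrete, the following Python sketch computes Cohen's kappa for two coders from its standard definition, (observed agreement minus chance agreement) divided by (1 minus chance agreement). The two code lists are invented for illustration and are not study data; the function name `cohens_kappa` is likewise only illustrative.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: sum over labels of the product of the raters' marginal proportions.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_chance = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_chance == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical codes (0 = non-expert, 1 = expert) for ten responses.
coder_1 = [1, 0, 0, 1, 0, 0, 1, 0, 0, 0]
coder_2 = [1, 0, 0, 1, 0, 0, 0, 0, 0, 0]
kappa = cohens_kappa(coder_1, coder_2)
```

In this invented example the coders agree on 9 of 10 responses (90%), but because most responses are non-expert, chance agreement is already 62%, so kappa is noticeably lower than raw agreement.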

We employed a dichotomous coding procedure to develop coding categories. The dichotomous categories distinguished between the highest level of programming expertise and all other responses within the four different knowledge domains (0 = non-expert level, 1 = expert level). These ratings were used in the subsequent quantitative analyses. We utilized a variety of techniques to increase our findings’ reliability and validity, guided by criteria summarized in the literature (see Pope et al. 2000; Strauss and Corbin 1992), including longitudinal data collection (credibility), triangulation of information by sampling diverse groups (transferability), blind cross-validation by experts in the field (trustworthiness), protection of participant confidentiality (dependability), observation of change across time (reactivity management), and implementation of formal interview protocols to reduce bias (confirmability).
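As a rough illustration of the dichotomous coding step, the Python sketch below maps a response's coded categories to a 0/1 expert score and then tabulates the percentage of expert-level responses by condition and wave. The category labels, the rule that any single expert-level category earns a score of 1, and the sample records are assumptions made for the example rather than the project's actual codebook or data.

```python
from collections import defaultdict

# Hypothetical labels treated as expert-level within one knowledge domain.
EXPERT_CATEGORIES = {
    "implementation_monitoring",
    "facilitator_training",
    "program_adherence",
}


def expert_score(categories):
    """Return 1 if any coded category is expert-level, otherwise 0."""
    return int(any(category in EXPERT_CATEGORIES for category in categories))


def percent_expert(records):
    """Percentage of expert-level responses for each (condition, wave) cell."""
    totals = defaultdict(int)
    experts = defaultdict(int)
    for condition, wave, categories in records:
        totals[(condition, wave)] += 1
        experts[(condition, wave)] += expert_score(categories)
    return {cell: 100.0 * experts[cell] / totals[cell] for cell in totals}


# Invented records: (condition, wave, categories assigned during coding).
records = [
    ("PROSPER", 1, ["team_meetings"]),
    ("PROSPER", 1, ["implementation_monitoring"]),
    ("PROSPER", 2, ["facilitator_training", "team_meetings"]),
    ("control", 1, ["participant_fit"]),
    ("control", 2, ["common_mission"]),
]
summary = percent_expert(records)
```

A table of this shape (condition by wave) is the kind of descriptive summary that a binary expertise score makes possible before any model is fitted.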

Quantitative Analyses

To analyze the coded measures of programming expertise, logistic multilevel models were used to examine differences between conditions across time (categorical). These models were tested using the PROC GLIMMIX procedure in SAS 9.1 (SAS Institute Inc. 2004), which evaluated the binary knowledge outcome measure for the expert responses. PROC GLIMMIX allows a link function, which for dichotomous outcome data is the logit, and error terms for non-normally distributed dependent variables (in this case binomial). This procedure was used to analyze the three-level, nested design of the model, with individuals nested within communities and each individual measured across time (Littell et al. 2006, p. 729). PROC GLIMMIX utilizes a full information maximum likelihood approach (FIML) to account for missing data and capitalizes on all available information in the sample. “State” (i.e., Iowa and Pennsylvania) was included in the model as a time-invariant covariate (i.e., fixed effect). Interaction terms were added to the model, with a Condition × State term to account for possible differences between the control and PROSPER communities in the two states, a Condition × Time term to account for possible differences between the control and PROSPER communities at different points in time (the term of interest), and a three-way interaction term (Condition × Time × State). We also examined a number of error structures using information criterion fit indices (i.e., AIC and BIC), a procedure that provides optimal inferences for the model’s fixed effects.
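One way to write down a model of this form is sketched below. The notation is ours, not the authors': $Y_{tij}$ is the 0/1 expert score at wave $t$ for individual $i$ in community $j$, $C_j$ is condition, $S_j$ is state, and $T_t$ are wave indicators. The random intercepts $u_j$ and $v_{ij}$ are one plausible error structure, included as an assumption because the text reports comparing several structures without naming the one selected. Only the terms named in the text appear; the main effects of condition and time are assumed.

```latex
\operatorname{logit}\,\Pr(Y_{tij} = 1)
  = \beta_0
  + \beta_1 C_j
  + \beta_2 S_j
  + \sum_{t} \beta_{3t} T_t
  + \beta_4 \, C_j S_j
  + \sum_{t} \beta_{5t} \, C_j T_t
  + \sum_{t} \beta_{6t} \, C_j T_t S_j
  + u_j + v_{ij},
\qquad
u_j \sim N(0, \sigma_u^2), \quad v_{ij} \sim N(0, \sigma_v^2)
```

Under this sketch, the coefficients $\beta_{5t}$ carry the Condition × Time effect of interest: they describe how the change in the log-odds of an expert response from pretest to wave $t$ differs between PROSPER and control communities.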

Results

Qualitative Results

Qualitative analyses of community prevention programming knowledge provided robust insights into how EBIs are adopted, implemented, and evaluated. Categories describing leaders’ knowledge of prevention program sources, standards of evidence, methods of fidelity assurance, and program evaluation are presented below.

Source of Program

A variety of information sources were nominated by local stakeholders. Sources for program information could be differentiated by type, with program sources originating from a variety of fields including education, criminal justice/law enforcement, academia, health services, and prevention. Another dimension of program source knowledge was whether the source of program information came from a local, state or national information network. Lastly, “quality” described whether the source endorsed the use of research evidence in evaluating programs. Examples of sources ranged from “Ask my local school counselor” or “the internet” to “consult the Blueprint’s Website.” An expert knowledge score was given to individuals’ responses that nominated a specific authoritative source of EBIs (e.g., Safe & Drug Free Schools, Safe Communities Program, CDC, IBS Blueprints for Violence Prevention, and the National Registry of Evidence-based Programs & Practices).

Standards of Evidence

Community leaders offered a wide array of standards on which they based their program adoption decisions. Categories could be broadly separated into the program’s characteristics and the program’s analytic outcomes. Program characteristics included the program fit (e.g., “Does it meet the needs of our community?”), customer satisfaction (“Ask the teachers if they like it”), credibility (“Does it come from a trusted source?”), and whether it was on a prevention list. The types of analytic outcomes community leaders considered were the evaluation design (“Was there a control group?”, “Was there follow-up data?”), the degree of replication and relevance (“Have the findings been shown in a community like ours?”), and the results of outcome analysis (“Did it lead to a change in the kids’ behavior?”). An expert knowledge score was given if individuals’ responses indicated evaluation of program effectiveness was based upon one of three factors: (1) research design quality (e.g., inquiry into whether comparison groups, a longitudinal design, representative sample sizes were used), (2) program outcome data and statistical analyses of the program, or (3) the program’s presence on an authoritative prevention list (e.g., Blueprints, NREPP/SAMHSA).

Fidelity Assurance

The question concerning fidelity assurance led to a variety of responses regarding how to maintain implementation quality. Responses were grouped into four meta-categories: factors or methods related to organization, facilitators, program selection and implementation. Organizational methods suggested for maintaining fidelity included organizational communication (“regular team meetings”) and adoption of clear organizational goals (“agree on a common mission”). Many community leaders maintained they could ensure fidelity through hiring and training capable facilitators as well as through clear expectations that facilitators adhere to all protocols (“By following the model with fidelity”). Stakeholders also reported selecting effective programs that had adequate organizational or participant fit (“right program for our kids”) as a method believed to maintain program fidelity. Lastly, responses described how fidelity could be assured by evaluating implementation quality through solicitation of facilitators (“check in with facilitators”), participant interviews (“ask participants if they like the program, if they think it helps”) and monitoring programs through direct observations of program delivery as they were implemented. An expert knowledge score was given if a response described a specific method for assuring fidelity that included implementation monitoring, high-quality facilitator training, or strict program adherence.

Program Evaluation

Community knowledge of program evaluation was coded into four categories: assessment, outcome analysis, program congruity, and participant engagement. ‘Assessment’ responses ranged from those that sought to passively assess the program (e.g., anecdotal evidence), casually solicit participant feedback (“ask the kids,” “talk to the police”), deploy an instrument to formally measure participants (“collect data,” “survey parents”), or carry out planned evaluations. Outcome measures ranged from non-specific to program-specific outcomes. For instance, some stakeholders focused on ‘program congruity’ with local needs (“What are teachers and kids saying?” “Is it affordable?”), while others focused specifically on aspects of ‘participant engagement’ (“Are they coming to the program?”, “They vote with their feet”). An expert knowledge score was given if a response provided a specific, high-quality method for evaluating program effectiveness, including instrument deployment (i.e., surveys, interviews), evaluation of change in outcomes (i.e., observed increases in positive outcomes, decreases in negative outcomes), or planned evaluations (i.e., randomized control, time-series, or matched-control designs with long-term follow-ups).

Quantitative Results

Table 2 displays the percentage of participants who possessed ‘expert-level’ knowledge by intervention group and time period. To examine whether there were any pretest differences on the four dichotomized expertise variables, and how those variables changed across time, a logistic multilevel analysis that considered time as a categorical variable (repeated-measures ANOVA) was conducted. No pretest or state-level differences were found between conditions. Table 3 displays the multilevel logistic regression findings; the significance of the Condition × Time interaction terms indicates whether there is differential change in expertise between conditions.

Source of Program

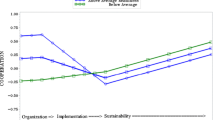

As Table 3 indicates, fewer than a tenth of local stakeholders could nominate a quality source of EBIs at baseline. Although the proportion of respondents nominating an authoritative source of EBIs showed an upward trend (Fig. 1a), this change was not significant, F (3, 689) = 2.48, p = .06. Moreover, PROSPER and control communities did not significantly differ in expertise regarding program sources across time (Table 3).

Standards of Evidence

About one-fifth of stakeholders held expert-level standards of evidence at baseline. A significant Condition × Time effect was found regarding knowledge of “Standards of Evidence” between the intervention and control conditions, F (3, 707) = 3.12, p = .025. As shown in Table 3 and Fig. 1b, PROSPER team members showed significant improvement in expert knowledge between pretest and the second year, t (712) = 2.28, p < .023, while control stakeholders showed no change across time.

Fidelity Assurance

Less than one-fifth of stakeholders described expert methods of fidelity assurance at baseline. A significant Condition × Time effect was found regarding knowledge of “Fidelity Assurance” between the intervention and control conditions, F (5, 1075) = 4.46, p < .001. As shown in Table 3 and Fig. 1c, there was a significant increase in expertise among PROSPER team members between the pretest and the second year of involvement in the project, t (1222) = 3.84, p < .01, and again between the fourth and fifth year of the project, t (846) = −5.14, p < .001, while control stakeholders showed no change across time.

Program Evaluation

Slightly more than a tenth of stakeholders described expert methods of program evaluation at baseline. A significant Condition × Time effect was found regarding knowledge of “Program Evaluation” between the intervention and control conditions, F (3, 731) = 2.60, p = .05. As shown in Table 3 and Fig. 1d, there was a significant increase in expertise among PROSPER team members between the baseline measurement and the beginning of the second year of involvement in the project, t (758) = −4.15, p < .001, while control stakeholders showed no change across time.

Discussion

Utilizing a randomized-control trial at the community level, this study investigated the effects of the PROSPER partnership model on local knowledge of prevention programming. Employing a series of open-ended questions, we assessed community leaders’ knowledge of program selection, implementation and evaluation. More specifically, we assessed local knowledge of evidence-based prevention programming sources, standards of program evidence, methods of fidelity assurance, and program evaluation.

Analyses of the participants’ “expert” knowledge revealed that understanding of the selection, implementation and evaluation of EBIs increased significantly and meaningfully among PROSPER community leaders across time. Specifically, a greater proportion of PROSPER community leaders than their counterparts in the control communities demonstrated expertise at the final year of measurement–4 to 6 years after pretest–in knowledge of “standards of evidence” (38% vs. 20.7%), “fidelity assurance” (47.2% vs. 9.9%), and “program evaluation” (38.2% vs. 14.6%).

However, there was no significant impact on PROSPER team members’ knowledge of prevention program sources (e.g., the CSAP, SAMHSA, Blueprints lists) compared to control communities (15.4% vs. 11%). This may, in part, be due to the fact that within the PROSPER model community leaders are provided with brief menus of pre-screened and project-approved EBIs. Consequently, since team members were not required to search for quality sources of prevention programming, there were likely limited opportunities to learn about sources of information regarding high-quality effective programs. The general pattern of findings, nonetheless, provides support for the effectiveness of the PROSPER project in cultivating programming expertise in community leaders.

Previous reports demonstrate the impact of PROSPER programming on student reports of substance abuse outcomes at 1.5 years (end of 8th grade) and 4.5 years (end of 11th grade) post-intervention (Spoth et al. 2007c, 2008, 2011), as well as on mediating factors including substance abuse attitudes, family warmth and communication (Redmond et al. 2009). In addition, observations of program implementation demonstrated sustained high fidelity for both family- and school-based interventions (Spoth et al. 2007c, 2011). Findings also indicated the viability of the PROSPER model as long as 3 years after federal funding for program delivery was withdrawn, as all 14 PROSPER sites were able to generate funding to continue high-quality programming (Perkins et al. 2011).

While we have not yet conducted a complete economic analysis, a recent single-state cost analysis of PROSPER estimated the cost of forming and supporting a local PROSPER team over time to be between $81,488 and $106,224 per year. In turn, the fiscal costs to cultivate local stakeholder knowledge–to the levels shown in these analyses–were estimated to range from $7,793 to $8,192 per year. Importantly, analyses suggested greater cost efficiencies when programs were delivered using the PROSPER model, as compared to implementation without a formal delivery and support system, with an estimated societal net benefit worth between $6,307 and $6,377 per person, in the case of the family program (Crowley et al. 2011).

The project’s data collection utilized an open-ended set of interview questions to assess the participants’ knowledge of prevention. This approach led to a rich set of responses. By then employing a grounded theory approach, these qualitative analyses described leaders’ knowledge in a more refined way than quantitative procedures alone could accomplish. Such analysis led us to induce categories of “expert” knowledge that we believe to be more accurate than simply providing interviewees with multiple-choice items that may elicit greater response bias. It was by using these categories that more sophisticated, multilevel analyses could be employed with a greater degree of confidence. Therefore, we believe that the use of a mixed methods approach provided greater insight and more accurate inferences than could be gained by utilizing either method in isolation.

Responses from interviews were categorized in order to describe the types of information participants possess regarding evidence-based prevention programming. The categories indicate that community leaders draw on a wide variety of knowledge sources when making decisions about program adoption, implementation, and evaluation. These findings indicate that few of the sources, standards, and methods advocated by prevention scientists are known to most community leaders. This is part of the “disconnect” that is often noted between current research knowledge and what is known to, and utilized by, policymakers (Brownson et al. 2006). In fact, at the pretest in 2002, very few community leaders had any ‘expert knowledge’ regarding aspects of evidence-based prevention programs, and it appears that most local decision-making regarding programming is done with limited knowledge of scientific principles of evidence. Thus, there is still a significant gap between science and its translation to local action (Wandersman et al. 2008) that requires new models of communication and technical assistance to support community leaders (O’Connell et al. 2009). This gap may be wider in rural areas than in urban areas, but at present there are no commensurate data from urban stakeholders.

Whereas the PROSPER model of proactive TA clearly led to much higher rates of expert knowledge than were found in the control communities, even after 5 years, fewer than half of the team members interviewed showed an expert level of knowledge. Thus, even within a program that has been effective both in increasing community expertise and delivering significant improvements in youth outcomes, there is still substantial need for further team member and community education on evidence-based programming. While this percentage of expertise may not be ideal, it is also unrealistic to assume that all team members would develop such knowledge. In fact, the benefit of a coalition or team approach is that participants can pool their knowledge and expertise; thus, it may be sufficient for only some team members to hold the requisite expertise, although it would be important for the team as a whole to value such knowledge.

Although we seek to illustrate the value of using mixed methods to harvest robust data that are then rigorously evaluated, a limitation of this approach is that many elements of the qualitative analysis cannot be further analyzed using quantitative methods. For instance, an important issue in diffusion of prevention programs is the degree to which program adaptation is appropriate. While local leaders’ responses reflected the importance of this question, available data did not allow us to further assess community leaders’ knowledge of program adaptation with quantitative approaches.

This study shows that most rural and small-town community leaders involved in prevention programming for youth have limited expertise regarding evidence-based prevention programming. There is clearly still a large information gap in translating science to practice. Nonetheless, the analyses indicate that the PROSPER model was effective in cultivating community-level prevention programming expertise. These findings support growing evidence that demonstrates the value of robust training and proactive TA, such as that provided in PROSPER, for successful program diffusion. It should be noted that this study could have probed more deeply into the knowledge base of local leaders with further qualitative analyses, but instead focused on utilizing a mixed methods approach to assess our research questions. While past work has provided evidence of PROSPER’s effectiveness in diffusing EBIs, this analysis demonstrates that PROSPER also increases knowledge of how to adopt, implement, and evaluate evidence-based prevention programs. Consequently, further work should seek to demonstrate an empirical link between knowledge cultivated by prevention systems, such as PROSPER, and the successful diffusion of evidence-based prevention programs.

References

Aarons, G., Sommerfeld, D., Hecht, D., Silovsky, J., & Chaffin, M. (2009). The impact of evidence-based practice implementation and fidelity monitoring on staff turnover. Journal of Consulting and Clinical Psychology, 77, 270–280.

Adelman, H., & Taylor, L. (2003). On sustainability of project innovations as systemic change. Journal of Educational and Psychological Consultation, 14, 1–25.

Backer, T., & Rogers, E. (1999). Dissemination best practices workshop briefing paper: State-of-the-art review on dissemination research and dissemination partnership. Encino, CA: NCAP.

Brownson, R., Royer, C., Ewing, R., & McBride, T. (2006). Researchers and policymakers: Travelers in parallel universes. American Journal of Preventive Medicine, 30, 164–172.

Butterfoss, F., Goodman, R., & Wandersman, A. (1993). Community coalitions for prevention and health promotion. Health Education Research, 8, 315–330.

Creswell, J. (2009). Research design: Qualitative, quantitative, and mixed methods approaches. Thousand Oaks, CA: Sage.

Crowley, M., Jones, D., Greenberg, M., Feinberg, M., & Spoth, D. (2011). Resource consumption of a dissemination model for prevention programs: The PROSPER partnership model. Journal of Adolescent Health. (in press)

Durlak, J. (1998). Why program implementation is important. Journal of Prevention & Intervention in the Community, 17, 5–18.

Durlak, J., & DuPre, E. (2008). A review of research on the influence of implementation on program outcomes and the factors affecting implementation. American Journal of Community Psychology, 41, 327–350.

Feinberg, M., Greenberg, M., Osgood, D., Anderson, A., & Babinski, L. (2002). The effects of training community leaders in prevention science. Evaluation and Program Planning, 25, 245–259.

Feinberg, M., Greenberg, M., & Osgood, D. (2004). Readiness, functioning, and effectiveness in community prevention coalitions. American Journal of Community Psychology, 33, 163–176.

Fixsen, D., Naoom, S., Blase, K., Friedman, R., & Wallace, F. (2005). Implementation research: A synthesis of the literature. Tampa: University of South Florida, Louis de la Parte Florida Mental Health Institute, National Implementation Research Network.

Flay, B., Biglan, A., Boruch, R., Castro, F., Gottfredson, D., Kellam, S., et al. (2005). Standards of evidence: Criteria for efficacy, effectiveness and dissemination. Prevention Science, 6, 151–175.

Glaser, B. (1992). Basics of grounded theory analysis. Mill Valley, CA: Sociology Press.

Gomez, B., Greenberg, M., & Feinberg, M. (2005). Sustainability of community coalitions: An evaluation of Communities That Care. Prevention Science, 6, 199–202.

Hallfors, D., & Godette, D. (2002). Will the “Principles of Effectiveness” improve prevention practice? Health Education Research, 17, 461–470.

Hawkins, J., Catalano, R., & Arthur, M. (2002). Promoting science-based prevention in communities. Addictive Behaviors, 27, 951–976.

Hawkins, D., Oesterle, S., Brown, E., Arthur, M., Abbott, R., Fagan, A., et al. (2009). Results of a type 2 translational research trial to prevent adolescent drug use and delinquency. Archives of Pediatrics & Adolescent Medicine, 163, 789–793.

Johnson, K., Hays, C., Center, H., & Daley, C. (2004). Building capacity and sustainable prevention innovations: A sustainability planning model. Evaluation and Program Planning, 27, 135–149.

Johnson, R., & Onwuegbuzie, A. (2004). Mixed methods research: A research paradigm whose time has come. Educational Researcher, 33, 14–26.

Landis, R., & Koch, G. (1977). The measurement of observer agreement for categorical data. Biometrics, 33, 159–174.

Littell, R., Milliken, G., Stroup, W., Wolfinger, R., & Schabenberger, O. (2006). SAS for mixed models (2nd ed.). Cary, NC: SAS Institute Inc.

Mihalic, S., Irwin, K., Elliott, D. S., Fagan, A., & Hansen, D. (2001). Blueprints for violence prevention. Bulletin. Washington, DC: U.S. Department of Justice, Office of Justice Programs, Office of Juvenile Justice and Delinquency Prevention.

Mihalic, S., Irwin, K., Fagan, A., Ballard, D., & Elliott, D. (2004). Successful program implementation: Lessons from Blueprints. Electronic report. U.S. Department of Justice, Office of Justice Programs. Retrieved August 11, 2011, from http://www.ojp.usdoj.gov.

Molgaard, V. (1997). The extension service as key mechanism for research and services delivery for prevention of mental health disorders in rural areas. American Journal of Community Psychology, 25, 515–544.

O’Connell, M., Boat, T., & Warner, K. (Eds.). (2009). Mental, emotional, and behavioral disorders among young people. Washington, DC: National Academy.

O’Donnell, L., Scattergood, P., Adler, M., San Doval, A., Barker, M., Kelly, J., et al. (2000). The role of technical assistance in the replication of effective HIV interventions. AIDS Education and Prevention, 12, 99–111.

Perkins, D., Feinberg, M., Greenberg, M., Johnson, L., Chilenski, S., Mincemoyer, C., et al. (2011). Team factors that predict to sustainability indicators for community-based prevention teams. Evaluation and Program Planning, 34, 283–291.

Pope, C., Ziebland, S., & Mays, N. (2000). Analysing qualitative data. BMJ, 320, 114–116.

Redmond, C., Spoth, R., Shin, C., Schainke, L., Greenberg, M., & Feinberg, M. (2009). Long-term protective factor outcomes of evidence-based interventions implemented by community teams through community-university partnership. Journal of Primary Prevention, 30, 513–530.

Riggs, N., Nakawatase, M., & Pentz, M. (2008). Promoting community coalition functioning: Effects of project STEPP. Prevention Science, 9, 63–72.

Ringwalt, C., Vincus, A., Ennett, S., Hanley, S., Bowling, J., & Rohrbach, L. (2009). The prevalence of evidence-based substance use prevention curricula in U.S. middle schools in 2005. Prevention Science, 10, 33–40.

Roussos, S., & Fawcett, S. (2000). A review of collaborative partnerships as a strategy for improving community health. Annual Review of Public Health, 21, 369–402.

SAS Institute Inc. (2004). SAS/STAT 9.1 User’s Guide. Cary, NC: SAS Institute Inc.

Spoth, R., & Greenberg, M. (2005). Toward a comprehensive strategy for effective practitioner-scientist partnerships and larger-scale community benefits. American Journal of Community Psychology, 35, 107–126.

Spoth, R., & Greenberg, M. (2011). Impact challenges in community science-with-practice. American Journal of Community Psychology, 48, 106–119.

Spoth, D., Greenberg, M., Bierman, K., & Redmond, C. (2004). PROSPER community-university partnership model for public education systems. Prevention Science, 5, 31–39.

Spoth, R., Clair, S., Greenberg, M., Redmond, C., & Shin, C. (2007a). Toward dissemination of evidence-based family interventions. Journal of Family Psychology, 21, 137–145.

Spoth, R., Guyll, M., Lillehoj, C., Redmond, C., & Greenberg, M. (2007b). PROSPER study of evidence-based intervention implementation quality by community-university partnerships. Journal of Community Psychology, 35, 981–999.

Spoth, R., Redmond, C., Shin, C., Greenberg, M., Clair, S., & Feinberg, M. (2007c). Substance use outcomes at 18 months past baseline from the PROSPER community-university partnership trial. American Journal of Preventive Medicine, 32, 395–402.

Spoth, R., Greenberg, M., & Turrisi, R. (2008). Preventive interventions addressing underage drinking. Pediatrics, 121, 311–336.

Spoth, R., Redmond, C., Clair, S., Shin, C., Greenberg, M., & Feinberg, M. (2011). Preventing substance misuse through community health partnerships and evidence-based interventions. American Journal of Preventive Medicine, 40, 440–447.

Strauss, A., & Corbin, J. (1992). Basics of qualitative research: Techniques and procedures for developing grounded theory. Thousand Oaks, CA: Sage.

Strauss, A., & Corbin, J. (1998). Basics of qualitative research: Techniques and procedures for developing grounded theory. Thousand Oaks, CA: Sage.

Substance Abuse and Mental Health Services Administration (2011). National Registry of Effective Programs. Retrieved August 11, 2011, from http://nrepp.samhsa.gov/.

Wandersman, A., & Florin, P. (2003). Community interventions and effective prevention. American Psychologist, 58, 441–448.

Wandersman, A., Duffy, J., Flaspohler, P., Noonan, R., Lubell, K., Stillman, L., et al. (2008). Bridging the gap between prevention research and practice. American Journal of Community Psychology, 41, 171–181.

Additional information

Work on this paper was supported by research grant R01 DA 013709 from the National Institute on Drug Abuse.

Cite this article

Crowley, D.M., Greenberg, M.T., Feinberg, M.E. et al. The Effect of the PROSPER Partnership Model on Cultivating Local Stakeholder Knowledge of Evidence-Based Programs: A Five-Year Longitudinal Study of 28 Communities. Prev Sci 13, 96–105 (2012). https://doi.org/10.1007/s11121-011-0250-5

DOI: https://doi.org/10.1007/s11121-011-0250-5