Abstract

An extensive literature addresses citizen ignorance, but very little research focuses on misperceptions. Can these false or unsubstantiated beliefs about politics be corrected? Previous studies have not tested the efficacy of corrections in a realistic format. We conducted four experiments in which subjects read mock news articles that included either a misleading claim from a politician, or a misleading claim and a correction. Results indicate that corrections frequently fail to reduce misperceptions among the targeted ideological group. We also document several instances of a “backfire effect” in which corrections actually increase misperceptions among the group in question.

“It ain’t what you don’t know that gets you into trouble. It’s what you know for sure that just ain’t so.”

-Mark Twain

A substantial amount of scholarship in political science has sought to determine whether citizens can participate meaningfully in politics. Recent work has shown that most citizens appear to lack factual knowledge about political matters (see, e.g., Delli Carpini and Keeter 1996) and that this deficit affects the issue opinions that they express (Althaus 1998; Kuklinski et al. 2000; Gilens 2001). Some scholars respond that citizens can successfully use heuristics, or information shortcuts, as a substitute for detailed factual information in some circumstances (Popkin 1991; Sniderman et al. 1991; Lupia 1994; Lupia and McCubbins 1998).Footnote 1

However, as Kuklinski et al. (2000, p. 792) point out, there is an important distinction between being uninformed and being misinformed. Advocates of heuristics typically assume that voters know they are uninformed and respond accordingly. But many citizens may base their policy preferences on false, misleading, or unsubstantiated information that they believe to be true (see, e.g., Kuklinski et al. 2000, p. 798). Frequently, such misinformation is related to one’s political preferences. For instance, after the U.S. invasion of Iraq, the belief that Iraq had weapons of mass destruction before the invasion was closely associated with support for President Bush (Kull et al. 2003).

From a normative perspective, it is especially important to determine whether misperceptions, which distort public opinion and political debate, can be corrected. Previous research in political science has found that it is possible to change issue opinions by directly providing relevant facts to subjects (Kuklinski et al. 2000; Gilens 2001; Howell and West 2009). However, such authoritative statements of fact (such as those provided by a survey interviewer to a subject) are not reflective of how citizens typically receive information. Instead, people typically receive corrective information within “objective” news reports pitting two sides of an argument against each other, which is significantly more ambiguous than receiving a correct answer from an omniscient source. In such cases, citizens are likely to resist or reject arguments and evidence contradicting their opinions—a view that is consistent with a wide array of research (e.g. Lord et al. 1979; Edwards and Smith 1996; Redlawsk 2002; Taber and Lodge 2006).

In this paper, we report the results of two rounds of experiments investigating the extent to which corrective information embedded in realistic news reports succeeds in reducing prominent misperceptions about contemporary politics. In each of the four experiments, which were conducted in fall 2005 and spring 2006, ideological subgroups failed to update their beliefs when presented with corrective information that runs counter to their predispositions. Indeed, in several cases, we find that corrections actually strengthened misperceptions among the most strongly committed subjects.

Defining Misperceptions

To date, the study of citizens’ knowledge of politics has tended to focus on questions like veto override requirements for which answers are clearly true or false (e.g. Delli Carpini and Keeter 1996). As such, studies have typically contrasted voters who lack factual knowledge (i.e. the “ignorant”) with voters who possess it (e.g. Gilens 2001). But as Kuklinski et al. (2000) note, some voters may unknowingly hold incorrect beliefs, especially on contemporary policy issues on which politicians and other political elites may have an incentive to misrepresent factual information.Footnote 2

In addition, the factual matters that are the subject of contemporary political debate are rarely as black and white as standard political knowledge questions. As Kuklinski et al. write (1998, p. 148), “Very often such factual representations [about public policy] are not prior to or independent of the political process but arise within it. Consequently, very few factual claims are beyond challenge; if a fact is worth thinking about in making a policy choice, it is probably worth disputing.” We must therefore rely on a less stringent standard in evaluating people’s factual knowledge about politics in a contemporary context. One such measure is the extent to which beliefs about controversial factual matters square with the best available evidence and expert opinion. Accordingly, we define misperceptions as cases in which people’s beliefs about factual matters are not supported by clear evidence and expert opinion—a definition that includes both false and unsubstantiated beliefs about the world.

To illustrate the point, it is useful to compare our definition with Gaines et al. (2007), an observational study that analyzed how students update their beliefs about the war in Iraq over time. They define the relevant fact concerning Iraqi WMD as knowing that weapons were not found and describe the (unsupported) belief that Iraq hid or moved its WMD before the U.S. invasion as an “interpretation” of that fact. Our approach is different. Based on the evidence presented in the Duelfer Report, which was not directly disputed by the Bush administration, we define the belief that Saddam moved or hid WMD before the invasion as a misperception.

Previous Research on Corrections

Surprisingly, only a handful of studies in political science have analyzed the effects of attempts to correct factual ignorance or misperceptions.Footnote 3 First, Kuklinski et al. (2000) conducted two experiments attempting to counter misperceptions about federal welfare programs. In the first, which was part of a telephone survey of Illinois residents, randomly selected treatment groups were given either a set of relevant facts about welfare or a multiple-choice quiz about the same set of facts. These groups and a control group were then asked for their opinions about two welfare policy issues. Kuklinski and his colleagues found that respondents had highly inaccurate beliefs about welfare generally; that the least informed people expressed the highest confidence in their answers; and that providing the relevant facts to respondents had no effect on their issue opinions (nor did it in an unreported experiment about health care). In a later experiment conducted on college students, they asked subjects how much of the national budget is spent on welfare and how much should be spent. Immediately afterward, the experimental group was provided with the correct answer to the first question. Unlike the first experiment, this more blunt treatment did change their opinions about welfare policy.

In addition, Bullock (2007) conducted a series of experiments examining the effects of false information in contemporary political debates. The experiments employed the belief perseverance paradigm from psychology (cf. Ross and Lepper 1980), in which a treatment group is presented with a factual claim that is subsequently discredited. The beliefs and attitudes of the treatment group are then compared with those of a control group that was not exposed to the false information. All three experiments found that treated subjects differed significantly from controls (i.e. showed evidence of belief perseverance) and that they perversely became more confident in their beliefs. In addition, two experiments concerning events in contemporary politics demonstrated that exposure to the discredited information pushed party identifiers in opposing (partisan) directions.

Three other studies considered the effects of providing factual information on issue-specific policy preferences. Gilens (2001) also conducted an experiment in which survey interviewers provided relevant facts to subjects before asking about their opinions about two issues (crime and foreign aid). Like the second Kuklinski et al. experiment (but unlike the first one), he found that this manipulation significantly changed respondents’ issue opinions. (His study focused on factual ignorance and did not investigate misinformation as such.) Similarly, Howell and West (2009) showed that providing relevant information about education policy alters public preferences on school spending, teacher salaries, and charter schools. Finally, Sides and Citrin (2007) find that correcting widespread misperceptions about the proportion of immigrants in the US population has little effect on attitudes toward immigration.

While each of these studies contributes to our understanding of the effect of factual information on issue opinions, their empirical findings are decidedly mixed. In some cases, factual information was found to change respondents’ policy preferences (Kuklinski et al. 2000 [study 2]; Bullock 2007; Gilens 2001; Howell and West 2009), while in other cases it did not (Kuklinski et al. 2000 [study 1]; Sides and Citrin 2007). This inconsistency suggests the need for further research.

We seek to improve on previous studies in two respects. First, rather than focusing on issue opinions as our dependent variable, we directly study the effectiveness of corrective information in causing subjects to revise their factual beliefs. It is difficult to interpret the findings in several of the studies summarized above without knowing if they were successful in changing respondents’ empirical views. Second, we present corrective information in a more realistic format. The corrective information in these studies was always presented directly to subjects as truth. Under normal circumstances, however, citizens are rarely provided with such definitive corrections. Instead, they typically receive corrective information in media reports that are less authoritative and direct. As a result, we believe it is imperative to study the effectiveness of corrections in news stories, particularly given demands from press critics for a more aggressive approach to fact-checking (e.g. Cunningham 2003; Fritz et al. 2004).

Theoretical Expectations

Beliefs about controversial factual questions in politics are often closely linked with one’s ideological preferences or partisan beliefs. As such, we expect that the reactions we observe to corrective information will be influenced by those preferences. In particular, we draw on an extensive literature in psychology that shows humans are goal-directed information processors who tend to evaluate information with a directional bias toward reinforcing their pre-existing views (for reviews, see Kunda 1990 and Molden and Higgins 2005). Specifically, people tend to display bias in evaluating political arguments and evidence, favoring those that reinforce their existing views and disparaging those that contradict their views (see, e.g., Lord et al. 1979; Edwards and Smith 1996; Taber and Lodge 2006).

As such, we expect that liberals will welcome corrective information that reinforces liberal beliefs or is consistent with a liberal worldview and will disparage information that undercuts their beliefs or worldview (and likewise for conservatives).Footnote 4 Like Wells et al. (2009), we therefore expect that information concerning one’s beliefs about controversial empirical questions in politics (i.e. factual beliefs) will be processed like information concerning one’s political views (i.e. attitudes). This claim is consistent with the psychology literature on information processing but inconsistent with general practice in political science, which has tended to separate the study of factual and civic knowledge from the study of political attitudes.

There are two principal mechanisms by which information processing may be slanted toward preserving one’s pre-existing beliefs. First, respondents may engage in a biased search process, seeking out information that supports their preconceptions and avoiding evidence that undercuts their beliefs (see, e.g., Taber and Lodge 2006). We do not study the information search process in the present study, which experimentally manipulates exposure to corrective information rather than allowing participants to select the information to which they are exposed. We instead focus on the process by which citizens accept or reject new information after it has been encountered. Based on the perspective described above, we expect that citizens are more likely to generate counter-arguments against new information that contradicts their beliefs than information that is consistent with their preexisting views (see, e.g., Ditto and Lopez 1992; Edwards and Smith 1996; Taber and Lodge 2006). As such, they are less likely to accept contradictory information than information that reinforces their existing beliefs.

However, individuals who receive unwelcome information may not simply resist challenges to their views. Instead, they may come to support their original opinion even more strongly—what we call a “backfire effect.”Footnote 5 For instance, in a dynamic process tracing experiment, Redlawsk (2002) finds that subjects who were not given a memory-based processing prime came to view their preferred candidate in a mock election more positively after being exposed to negative information about the candidate.Footnote 6 Similarly, Republicans who were provided with a frame that attributed prevalence of Type 2 diabetes to neighborhood conditions were less likely to support public health measures targeting social determinants of health than their counterparts in a control condition (Gollust et al. 2009). What produces such an effect? While Lebo and Cassino interpret backfire effect-type results as resulting from individuals “simply view[ing] unfavorable information as actually being in agreement with their existing beliefs” (2007, p. 723), we follow Lodge and Taber (2000) and Redlawsk (2002) in interpreting backfire effects as a possible result of the process by which people counterargue preference-incongruent information and bolster their preexisting views. If people counterargue unwelcome information vigorously enough, they may end up with “more attitudinally congruent information in mind than before the debate” (Lodge and Taber 2000, p. 209), which in turn leads them to report opinions that are more extreme than they otherwise would have had.

It is important to note that the account provided above does not imply that individuals simply believe what they want to believe under all circumstances and never accept counter-attitudinal information. According to Ditto and Lopez (1992, p. 570), preference-inconsistent information is likely to be subjected to greater skepticism than preference-consistent information, but individuals who are “confronted with information of sufficient quantity or clarity… should eventually acquiesce to a preference-inconsistent conclusion.” The effectiveness of corrective information is therefore likely to vary depending on the extent to which the individual has been exposed to similar messages elsewhere. For instance, as a certain belief becomes widely viewed as discredited among the public and the press, individuals who might be ideologically sympathetic to that belief will be more likely to abandon it when exposed to corrective information.Footnote 7

As the discussion above suggests, we expect significant differences in individuals’ motivation to resist or counterargue corrective information about controversial factual questions. Defensive processing is most likely to occur among adherents of the ideological viewpoint that is consistent with or sympathetic to the factual belief in question (i.e. liberals or conservatives depending on the misperception).Footnote 8 Centrists or adherents of the opposite ideology are unlikely to feel threatened by the correction and would therefore not be expected to process the information in a defensive manner. We therefore focus on ideology as the principal between-subjects moderator of our experimental treatment effects.

Specifically, we have three principal hypotheses about how the effectiveness of corrections will vary by participant ideology:

Hypothesis 1 (Ideological Interaction):

The effect of corrections on misperceptions will be moderated by ideology.

Hypothesis 2a (Resistance to Corrections):

Corrections will fail to reduce misperceptions among the ideological subgroup that is likely to hold the misperception.

Hypothesis 2b (Correction Backfire):

In some cases, the interaction between corrections and ideology will be so strong that misperceptions will increase for the ideological subgroup in question.

To fix these ideas mathematically, we define our dependent variable Y as a measure of misperceptions (in practice, a five-point Likert scale in which higher values indicate greater levels of agreement with a statement of the misperception). We wish to estimate the effect of a correction treatment to see if it will reduce agreement with the misperception. However, we expect that the marginal effect of the correction will vary with ideology, which we define as the relevant measure of predispositions in a general political context (in practice, a seven-point Likert scale from “very liberal” to “very conservative”). Thus, we must include an interaction between ideology and the correction in our specification. Finally, we include a control variable for political knowledge, which is likely to be negatively correlated with misperceptions, to improve the efficiency of our statistical estimation. We therefore estimate the following equation:

\[ Y = \beta_{0} + \beta_{1} \times \text{Correction} + \beta_{2} \times \text{Ideology} + \beta_{3} \times \text{Correction} \times \text{Ideology} + \beta_{4} \times \text{Knowledge} \qquad (1) \]

Using Eq. 1, we can formalize the three hypotheses presented above. Hypothesis 1, which predicts that the effect of the correction will be moderated by ideology, implies that the coefficient for the interaction between correction and ideology will not equal zero (\( \beta_{3} \ne 0 \)).Footnote 9 Hypothesis 2a, which predicts that the correction will fail to reduce misperceptions among the ideological subgroup that is likely to hold the misperception, implies that the marginal effect of the correction will not be statistically distinguishable from zero for the subgroup (\( \beta_{1} + \beta_{3} \times \text{Ideology} = 0 \) for liberals or conservatives). Alternatively, Hypothesis 2b predicts that the correction will sometimes increase misperceptions for the ideological subgroup in question, implying that the marginal effect will be greater than zero for the subgroup (\( \beta_{1} + \beta_{3} \times \text{Ideology} > 0 \)).
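
As a worked illustration, assume that Eq. 1 is the linear interaction specification implied by the coefficient labels above, with \( \beta_{1} \) on the correction indicator and \( \beta_{3} \) on its interaction with ideology. Because the correction is a binary treatment, its marginal effect at a given ideology score is the difference in predicted misperceptions between the correction and no-correction conditions, holding ideology and knowledge fixed:

\[ E[Y \mid \text{Correction} = 1] - E[Y \mid \text{Correction} = 0] = \beta_{1} + \beta_{3} \times \text{Ideology} \]

If ideology is centered so that it runs from −3 (very liberal) to 3 (very conservative), as it is coded later for Study 1, the quantities relevant to Hypotheses 2a and 2b at the endpoints and midpoint of the scale would be:

\[ \text{Ideology} = -3: \; \beta_{1} - 3\beta_{3} \qquad \text{Ideology} = 0: \; \beta_{1} \qquad \text{Ideology} = 3: \; \beta_{1} + 3\beta_{3} \]

Under this coding, \( \beta_{1} \) alone is the effect of the correction for centrists, and a positive \( \beta_{3} \) means the correction becomes less effective (or backfires) as subjects become more conservative.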

Finally, we discuss two other factors that may influence the extent of information processing, both of which are considered in the empirical analysis below. First, participants may be more likely to counter-argue against corrective information based on the perceived importance of the issue, which is consistent with experimental results showing that perceived importance increases systematic processing of messages (see, e.g., Chaiken 1980).Footnote 10 Second, findings such as those of Taber and Lodge (2006) might suggest political knowledge as a potential moderator. We see two potential ways that political knowledge may affect the effectiveness of corrections. On the one hand, individuals with more knowledge may be better able to resist new information or provide counterarguments; on the other hand, they may also be more likely to recognize corrective information as valid and update their beliefs accordingly. As such, we include knowledge as a control variable but make no strong prediction regarding its effects as a moderator.

Research Design

To evaluate the effects of corrective information, we conducted four experiments in which subjects read mock newspaper articles containing a statement from a political figure that reinforces a widespread misperception. Participants were randomly assigned to read articles that either included or did not include corrective information immediately after a false or misleading statement (see Appendix for the full text of all four articles). They were then asked to answer a series of factual and opinion questions.

Because so little is known about the effectiveness of corrective information in contemporary politics, we designed the experiments to be as realistic as possible in capturing how citizens actually receive information about politics. First, we focus on controversial political issues from contemporary American politics (the war in Iraq, tax cuts, and stem cell research) rather than the hypothetical stories commonly found in psychology research (e.g. Johnson and Seifert 1994). As a result, our experiments seek to correct pre-existing misperceptions rather than constructing them within the experiment. While this choice is likely to make misperceptions more difficult to change, it increases our ability to address the motivating concern of this research: correcting misperceptions in the real world. In addition, we test the effectiveness of corrective information in the context of news reports, one of the primary mechanisms by which citizens acquire information. In order to maximize realism, we constructed the mock news articles using text from actual articles whenever possible.

Given our focus on pre-existing misperceptions, it is crucial to use experiments, which allow us to disentangle the correlations between factual beliefs and opinion that frustrate efforts to understand the sources of real-world misperceptions using survey research (e.g. Kull et al. 2003). For instance, rather than simply noting that misperceptions about Iraqi WMD are high among conservatives (a finding which could have many explanations), we can randomize subjects across conditions (avoiding estimation problems due to pre-existing individual differences in knowledge, ideology, etc.) and test the effectiveness of corrections for that group and for subjects as a whole.

A final research design choice was to use a between-subjects design in which we compared misperceptions across otherwise identical subjects who were randomly assigned to different experimental conditions. This decision was made to maximize the effect of the corrections. A within-subjects design in which we compared beliefs in misperceptions before and after a correction would anchor subjects’ responses on their initial response, weakening the potential for an effective correction or a backfire effect.

The experiments we present in this paper were all conducted in an online survey environment with undergraduates at a Catholic university in the Midwest.Footnote 11 Study 1, conducted in fall 2005, tests the effect of a correction on the misperception that Iraq had WMD immediately before the war in Iraq. Study 2, which was conducted in spring 2006, includes a second version of the Iraq WMD experiment as well as experiments attempting to correct misperceptions about the effect of tax cuts on revenue and federal policy toward stem cell research.

As noted above, we define misperceptions to include both false and unsubstantiated beliefs about the world. We therefore consider two issues (the existence of Iraqi WMD and the effect of the Bush tax cuts on revenue) in which misperceptions are contradicted by the best available evidence, plus a third case (the belief that President Bush “banned” stem cell research) in which the misperception is demonstrably incorrect.

Study 1: Fall 2005

The first experiment we conducted, which took place in fall 2005, tested the effect of a correction embedded in a news report on beliefs that Iraq had weapons of mass destruction immediately before the U.S. invasion. One of the primary rationales for war offered by the Bush administration was Iraq’s alleged possession of biological and chemical weapons. Perhaps as a result, many Americans failed to accept or did not find out that WMD were never found inside the country. This misperception, which persisted long after the evidence against it had become overwhelming, was closely linked to support for President Bush (Kull et al. 2003).Footnote 12 One possible explanation for the prevalence of the WMD misperception is that journalists failed to adequately fact-check Bush administration statements suggesting the U.S. had found WMD in Iraq (e.g. Allen 2003). As such, we test a correction condition embedded in a mock news story (described further below) in which a statement that could be interpreted as suggesting that Iraq did have WMD is followed by a clarification that WMD had not been found.

Another plausible explanation for why Americans were failing to update their beliefs about Iraqi WMD is fear of death in the wake of the September 11, 2001 terrorist attacks. To test this possibility, we drew on terror management theory (TMT), which researchers have suggested may help explain responses to 9/11 (Pyszczynski et al. 2003). TMT research shows that reminders of death create existential anxiety that subjects manage by becoming more defensive of their cultural worldview and hostile toward outsiders. Previous studies have found that increasing the salience of subjects’ mortality increased support for President Bush and for U.S. military interventions abroad among conservatives (Cohen et al. 2005; Landau et al. 2004; Pyszczynski et al. 2006) and created increased aggressiveness toward people with differing political views (McGregor et al. 1998), but the effect of mortality salience on both support for misperceptions about Iraq and the correction of them has not been tested. We therefore employed a mortality salience manipulation to see if it increased WMD misperceptions or reduced the effectiveness of the correction treatment.

Method

130 participantsFootnote 13 were randomly assigned to one of four treatments in a 2 (correction condition) × 2 (mortality salience) design.Footnote 14 The Appendix provides the full text of the article that was used in the experiment. Subjects in the mortality salience condition were asked to “Please briefly describe the emotions that the thought of your own death arouses in you” and to “Jot down, as specifically as you can, what you think will happen to you as you physically die and once you are physically dead.” (Controls were asked versions of the same questions in which watching television was substituted for death.)
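
The text says only that participants were "randomly assigned," so the procedure below is an assumption rather than a description of what was done. As a minimal sketch, it draws each factor of the 2 × 2 design independently for every subject. The condition labels, the function name `assign_conditions`, and the seed are hypothetical and chosen for illustration.

```python
import random
from collections import Counter

# Hypothetical labels for the two crossed factors in the 2 x 2 design.
CORRECTION = ("no correction", "correction")
MORTALITY = ("control (television)", "mortality salience")


def assign_conditions(n_subjects, seed=None):
    """Independently randomize each subject on both factors.

    Assumption: simple (unblocked) randomization, so the four cells
    will generally not be of exactly equal size.
    """
    rng = random.Random(seed)  # local generator keeps the draw reproducible
    return [
        (rng.choice(CORRECTION), rng.choice(MORTALITY))
        for _ in range(n_subjects)
    ]


# 130 is the number of participants reported for Study 1; the seed is arbitrary.
assignments = assign_conditions(130, seed=2005)
for cell, count in sorted(Counter(assignments).items()):
    print(cell, count)
```

A blocked design that shuffles a balanced list of cell labels would equalize cell sizes instead. The source does not say which approach was used.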

After a distracter task, subjects were then asked to read a mock news article attributed to the Associated Press that reports on a Bush campaign stop in Wilkes-Barre, PA during October 2004. The article describes Bush’s remarks as “a rousing, no-retreat defense of the Iraq war” and quotes a line from the speech he actually gave in Wilkes-Barre on the day the Duelfer Report was released (Priest and Pincus 2004): “There was a risk, a real risk, that Saddam Hussein would pass weapons or materials or information to terrorist networks, and in the world after September the 11th, that was a risk we could not afford to take.” Such wording may falsely suggest to listeners that Saddam Hussein did have WMD that he could have passed to terrorists after September 11, 2001. In the correction condition, the story then discusses the release of the Duelfer Report, which documents the lack of Iraqi WMD stockpiles or an active production program immediately prior to the US invasion.Footnote 15

After reading the article, subjects were asked to state whether they agreed with this statement: “Immediately before the U.S. invasion, Iraq had an active weapons of mass destruction program, the ability to produce these weapons, and large stockpiles of WMD, but Saddam Hussein was able to hide or destroy these weapons right before U.S. forces arrived.”Footnote 16 Responses were measured on a five-point Likert scale ranging from “strongly disagree” (1) to “strongly agree” (5).Footnote 17

Results

The results from Study 1 largely support the backfire hypothesis, as shown by two regression models that are presented in Table 1.Footnote 18

Model 1 estimates the effect of the correction treatment; a centered seven-point ideology scale ranging from strongly liberal (−3) to strongly conservative (3); an additive five-question scale measuring political knowledge using conventional factual questions (Delli Carpini and Keeter 1996); and the mortality salience manipulation. As expected, more knowledgeable subjects were less likely to agree that Iraq had WMD (p < 0.01) and conservatives were more likely to agree with the statement (p < 0.01). We also find that the correction treatment did not reduce overall misperceptions and the mortality salience manipulation was statistically insignificant.Footnote 19
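
The predictors described above could be constructed along the following lines. This is a sketch under stated assumptions: the raw ideology item is assumed to be coded 1 (strongly liberal) to 7 (strongly conservative), each knowledge item is assumed to be scored as correct or incorrect, and all function names are hypothetical.

```python
def center_ideology(raw_score):
    """Map an assumed raw 1-7 self-placement onto the centered -3..3 scale."""
    if raw_score not in range(1, 8):
        raise ValueError("ideology must be an integer from 1 to 7")
    return raw_score - 4  # 4 (the midpoint) becomes 0


def knowledge_scale(correct_flags):
    """Additive scale: the number of the five factual questions answered correctly."""
    flags = list(correct_flags)
    if len(flags) != 5:
        raise ValueError("expected exactly five knowledge items")
    return sum(1 for flag in flags if flag)


def interaction_term(correction, centered_ideology):
    """Product term for the ideology-by-correction interaction (correction is 0 or 1)."""
    return correction * centered_ideology


# Example: a subject one step right of center who received the correction
# and answered three of the five knowledge questions correctly.
ideology = center_ideology(5)
print(ideology, knowledge_scale([True, True, False, True, False]),
      interaction_term(1, ideology))
```

Centering puts the zero point of the scale at the ideological midpoint, so the coefficient on the correction in an interaction model is the estimated effect for centrists rather than for a value outside the observed range.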

In Model 2, we test whether the effect of the correction is moderated by subjects’ political views by including an interaction between ideology and the treatment condition. As stated earlier, our hypothesis is that the correction will be increasingly ineffective as subjects become more conservative (and thus more sympathetic to the claim that Iraq had WMD). When we estimate the model, the interaction term is significant (p < 0.01), suggesting that the effect of the correction does vary by ideology.Footnote 20

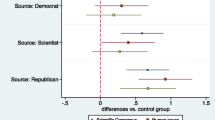

Because interaction terms are often difficult to interpret, we follow Brambor et al. (2006) and plot the estimated marginal effect of the correction and the 95% confidence interval over the range of ideology in Fig. 1.
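
The quantities plotted in such a figure can be computed directly from the fitted coefficients and their covariance matrix. In a linear interaction model, the marginal effect of the correction at ideology score z is b1 + b3·z, with variance Var(b1) + z²·Var(b3) + 2z·Cov(b1, b3). The sketch below applies these formulas over the centered ideology scale. The coefficient and variance values are hypothetical placeholders, not the estimates reported in Table 1, and the 1.96 multiplier assumes a normal approximation for the 95% interval.

```python
import math


def marginal_effect(b1, b3, var_b1, var_b3, cov_b1_b3, ideology, z_crit=1.96):
    """Return (effect, lower, upper) for the correction at one ideology score."""
    effect = b1 + b3 * ideology
    variance = var_b1 + ideology ** 2 * var_b3 + 2 * ideology * cov_b1_b3
    std_error = math.sqrt(variance)
    return effect, effect - z_crit * std_error, effect + z_crit * std_error


# Hypothetical values for illustration only; NOT taken from the paper.
B1, B3 = 0.0, 0.5
VAR_B1, VAR_B3, COV_B1_B3 = 0.04, 0.01, 0.0

print("ideology  effect  95% CI")
for ideology in range(-3, 4):  # -3 (very liberal) to 3 (very conservative)
    effect, lower, upper = marginal_effect(
        B1, B3, VAR_B1, VAR_B3, COV_B1_B3, ideology
    )
    print(f"{ideology:+d}  {effect:+.2f}  [{lower:+.2f}, {upper:+.2f}]")
```

An interval that lies entirely above zero at a given ideology score corresponds to a backfire effect for that subgroup. An interval that contains zero corresponds to a correction with no statistically distinguishable effect.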

For very liberal subjects, the correction worked as expected, making them more likely to disagree with the statement that Iraq had WMD compared with controls. The correction did not have a statistically significant effect on individuals who described themselves as liberal, somewhat left of center, or centrist. But most importantly, the effect of the correction for individuals who placed themselves to the right of center ideologically is statistically significant and positive. In other words, the correction backfired—conservatives who received a correction telling them that Iraq did not have WMD were more likely to believe that Iraq had WMD than those in the control condition.Footnote 21 (The interpretation of other variables does not change in Model 2.)

What are we to make of this finding? One possible interpretation, which draws on the persuasion literature, would point to source credibility as a possible explanation—conservatives are presumably likely to put more trust in President Bush and less trust in the media than other subjects in the sample (though we do not have data to support this conjecture). However, it is not clear that such an interpretation can explain the observed data in a straightforward way. Subjects in the no-correction and correction conditions both read the same statement from President Bush. Thus the backfire effect must be the result of the experimentally manipulated correction. If subjects simply distrusted the media, they should simply ignore the corrective information. Instead, however, conservatives were found to have moved in the “wrong” direction—a reaction that is hard to attribute to simple distrust. We believe the result is consistent with our theoretical account of goal-directed information processing.

Study 2: Spring 2006

In spring 2006, we conducted a series of additional experiments designed to extend our findings and test the generality of the backfire effect found in Study 1. We sought to assess whether it generalizes to other issues as well as other ideological subgroups (namely, liberals). The latter question is especially important for the debate over whether conservatism is uniquely characterized by dogmatism and rigidity (Greenberg and Jonas 2003; Jost et al. 2003a, b).

Another goal was to test whether the backfire effect was the result of perceived hostility on the part of the news source. Though we chose the Associated Press as the source for Study 1 due to its seemingly neutral reputation, it is possible that conservatives rejected the correction due to perceived media bias. There is an extensive literature showing that partisans and ideologues tend to view identical content as biased against them (Arpan and Raney 2003; Baum and Gussin 2007; Christen et al. 2002; Gunther and Chia 2001; Gunther and Schmitt 2004; Gussin and Baum 2004, 2005; Lee 2005; Vallone et al. 1985). Perceptions of liberal media bias are especially widespread in the U.S. among Republicans (Eveland and Shah 2003; Pew Research Center for the People & the Press 2005). These perceptions could reduce acceptance of the correction or create a backfire effect.Footnote 22 To assess this possibility, we manipulated the news source as described below.

In Study 2, we used a 2 (correction) × 2 (media source) design to test corrections of three possible misperceptions: the beliefs that Iraq had WMD when the U.S. invaded, that tax cuts increase government revenue, and that President Bush banned stem cell research. (The Appendix presents the wording of all three experiments.) By design, the first two tested misperceptions held predominantly by conservatives and the third tested a possible liberal misperception.Footnote 23

In addition, we varied the source of the news articles, attributing them to either the New York Times (a source many conservatives perceive as biased) or FoxNews.com (a source many conservatives perceive as favorable). 197 respondents participated in Study 2.Footnote 24 Interestingly, we could not reject the null hypothesis that the news source did not change the effect of the correction in each of the three experiments (results available upon request).Footnote 25 As such, it is excluded from the results reported below. We discuss this surprising finding further at the end of the results section.

Method—Iraq WMD

In our second round of data collection, we conducted a modified version of the experiment from Study 1 to verify and extend our previous results. For the sake of clarity, we simplified the stimulus and manipulation for the Iraq WMD article, changed the context from a 2004 campaign speech to a 2005 statement about Iraq, and used a simpler question as the dependent variable (see Appendix for exact wording).

Results—Iraq WMD

Regression analyses for the second version of the Iraq WMD experiment, which are presented in Table 2, differ substantially from the previous iteration.Footnote 26

Model 1 indicates that the WMD correction again fails to reduce overall misperceptions. However, we again add an interaction between the correction and ideology in Model 2 and find a statistically significant result. This time, however, the interaction term is negative—the opposite of the result from Study 1. Unlike the previous experiment, the correction made conservatives more likely to believe that Iraq did not have WMD.

It is unclear why the correction was effective for conservatives in this experiment. One possibility is that conservatives may have shifted their grounds for supporting the war in tandem with the Bush administration, which sought to distance itself over time from the WMD rationale for war. Nationally, polls suggested a decline in Republican beliefs that Iraq had WMD before the war by early 2006, which may have been the result of changes in messages promoted by conservative elites.Footnote 27 In addition, the correlation between belief that George Bush “did the right thing” in invading Iraq and belief in Iraqi WMD declined among conservative experimental participants from 0.51 in Study 1 to 0.36 in Study 2. This shift was driven by the reaction to the correction; the correlation between the two measures among conservatives increased in Study 1 from 0.41 among controls to 0.72 in the correction condition, whereas in Study 2 it decreased from 0.54 to 0.10.Footnote 28 Another possibility is that conservatives placed less importance on the war in spring 2006 than they did in fall 2005 and were thus less inclined to counterargue the correction (a supposition for which we find support in national pollsFootnote 29). Finally, it is possible that the results may have shifted due to minor wording changes (i.e. the shift in the context of the article from the 2004 campaign to a 2005 statement might have made ideology less salient in answering the question about Iraqi WMD, or the simpler wording of the dependent variable might have reduced ambiguity that allowed for counter-arguing).

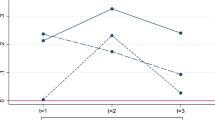

Even though a backfire effect did not take place among conservatives as a group, we conducted a post hoc analysis to see if conservatives who are the most intensely committed to Iraq would still persist in resisting the correction. Model 3 therefore includes a dummy variable for those respondents who rated Iraq as the most important problem facing the country today as well as the associated two- and three-way interactions with ideology and the correction condition. This model pushes the data to the limit since only 34 respondents rated Iraq the most important issue (including eight who placed themselves to the right of center ideologically). However, the results are consistent with our expectations—there is a positive, statistically significant interaction between ideology, the correction, and issue importance, indicating that the correction failed for conservatives who viewed Iraq as most important. To illustrate this point, Fig. 2 plots how the marginal effect of the correction varies by ideology, separating subjects for whom Iraq is the most important issue from those who chose other issues:

As the figure illustrates, the correction was effective in reducing misperceptions among conservatives who did not select Iraq as the most important issue, but its effects were null for the most strongly committed conservatives (the estimated marginal effect just misses statistical significance at the 0.10 level in the positive [wrong] direction).Footnote 30
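The two lines plotted in Fig. 2 follow from the three-way interaction in Model 3. As a sketch, the model can be written with labels chosen here for illustration rather than taken from the paper: $C$ is the correction indicator, $I$ is ideology, and $M$ indicates that a respondent named Iraq the most important problem. Other covariates are omitted for brevity.

$$
Y = \beta_0 + \beta_1 C + \beta_2 I + \beta_3 M + \beta_4 CI + \beta_5 CM + \beta_6 IM + \beta_7 CIM + \varepsilon
$$

$$
\frac{\partial Y}{\partial C} = \beta_1 + \beta_4 I + \beta_5 M + \beta_7 IM =
\begin{cases}
\beta_1 + \beta_4 I, & M = 0 \\
(\beta_1 + \beta_5) + (\beta_4 + \beta_7)\, I, & M = 1
\end{cases}
$$

Under this parameterization, a positive three-way coefficient $\beta_7$ means the effect of the correction rises more steeply with conservatism among respondents for whom $M = 1$ than among those for whom $M = 0$.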

Method—Tax Cuts

The second experiment in Study 2 tests subjects’ responses to the claim that President Bush’s tax cuts stimulated so much economic growth that they actually had the effect of increasing government revenue over what it would otherwise have been. The claim, which originates in supply-side economics and was frequently made by Bush administration officials, Republican members of Congress, and conservative elites, implies that tax cuts literally pay for themselves. While such a response may be possible in extreme circumstances, the overwhelming consensus among professional economists who have studied the issue—including the 2003 Economic Report of the President and two chairs of Bush’s Council of Economic Advisers—is that this claim is empirically implausible in the U.S. context (Hill 2006; Mankiw 2003; Milbank 2003).Footnote 31

Subjects read an article on the tax cut debate attributed to either the New York Times or FoxNews.com (see Appendix for text). In all conditions, it included a passage in which President Bush said “The tax relief stimulated economic vitality and growth and it has helped increase revenues to the Treasury.” As in Study 1, this quote—which states that the tax cuts increased revenue over what would have otherwise been received—is taken from an actual Bush speech. Subjects in the correction condition received an additional paragraph clarifying that both nominal tax revenues and revenues as a proportion of GDP declined sharply after Bush’s first tax cuts were enacted in 2001 (he passed additional tax cuts in 2003) and still had not rebounded to 2000 levels by either metric in 2004. The dependent variable is agreement with the claim that “President Bush’s tax cuts have increased government revenue” on a Likert scale ranging from strongly disagree (1) to strongly agree (5).

Results—Tax Cuts

The two regression models in Table 3 indicate that the tax cut correction generated another backfire effect.

In Model 1, we find (as expected) that conservatives are more likely to believe that tax cuts increase government revenue (p < 0.01) and more knowledgeable subjects are less likely to do so (p < 0.10). More importantly, the correction again fails to cause a statistically significant decline in overall misperceptions. As before, we again estimate an interaction between the treatment and ideology in Model 2. The effect is positive and statistically significant (p < 0.05), indicating that conservatives who received the treatment were significantly more likely to agree with the statement that tax cuts increased revenue than conservatives in the non-correction condition.
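The Model 2 specification described above, an OLS regression with a correction × ideology interaction, can be sketched in a few lines. This is an illustration only and not the authors' analysis code. The data are synthetic and noise-free, the coefficient values in `TRUE_BETA` are invented, the ideology coding from -3 to 3 is an assumption, and the political knowledge control is left out. The positive interaction is chosen only to mirror the direction reported for the tax cut experiment.

```python
# Hypothetical sketch: OLS with a correction x ideology interaction.
# All data are synthetic; nothing here comes from the study's dataset.

def solve(a, b):
    """Solve the linear system a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def ols(rows, y):
    """Return OLS coefficients from the normal equations (X'X) b = X'y."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return solve(xtx, xty)


def design_row(correction, ideology):
    """Columns: intercept, correction dummy, ideology, correction x ideology."""
    return [1.0, float(correction), float(ideology), float(correction * ideology)]


def marginal_effect(beta, ideology):
    """Effect of the correction at a given ideology value: b_correction + b_interaction * ideology."""
    return beta[1] + beta[3] * ideology


# Assumed coefficients (intercept, correction, ideology, interaction) on a 1-5 agreement scale.
TRUE_BETA = [3.0, -0.2, 0.3, 0.25]

# One synthetic subject per cell: control/correction by ideology from -3 (very liberal) to 3.
subjects = [(c, i) for c in (0, 1) for i in range(-3, 4)]
X = [design_row(c, i) for c, i in subjects]
y = [sum(b * x for b, x in zip(TRUE_BETA, row)) for row in X]

beta_hat = ols(X, y)
for ideology in (-3, 0, 3):
    print(ideology, round(marginal_effect(beta_hat, ideology), 3))
```

With these invented coefficients the estimated effect of the correction is negative for the most liberal synthetic subjects and positive for the most conservative ones, which is the pattern the text describes as a backfire effect. A real analysis would also need standard errors for each marginal effect, which this sketch does not compute.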

Figure 3 displays how the marginal effect of the correction varies by ideology.

As in the first Iraq experiment, the correction increases misperceptions among conservatives, with a positive and statistically significant marginal effect for self-described conservative and very conservative subjects (p < 0.05).Footnote 32 This finding provides additional evidence that efforts to correct misperceptions can backfire. Conservatives presented with evidence that tax cuts do not increase government revenues ended up believing this claim more fervently than those who did not receive a correction. As with Study 1, this result cannot easily be explained by source effects alone.

Method—Stem Cell Research

While previous experiments considered issues on which conservatives are more likely to be misinformed, our expectation was that many liberals hold a misperception about the existence of a “ban” on stem cell research, a claim that both Senator John Kerry and Senator John Edwards made during the 2004 presidential campaign (Weiss and Fitzgerald 2004). In fact, while President Bush limited federal funding of stem cell research to stem cell lines created before August 2001, he did not place any limitations on privately funded research (Fournier 2004).

In the experiment, subjects read a mock news article attributed to either the New York Times or FoxNews.com that reported statements by Edwards and Kerry suggesting the existence of a stem cell research “ban.” In the treatment condition, a corrective paragraph was added to the end of the news story explaining that Bush’s policy does not limit privately funded stem cell research. The dependent variable is agreement that “President Bush has banned stem cell research in the United States” on a scale ranging from “strongly disagree” (1) to “strongly agree” (5). (See Appendix for wording.)

Results—Stem Cell Research

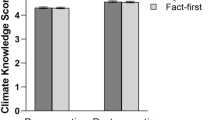

Table 4 reports results from two regression models that offer support for the resistance hypothesis.

In Model 1, we find a negative overall correction effect (p < 0.10), indicating that subjects who received the correction were less likely to believe that Bush banned stem cell research. We also find that subjects with more political knowledge were less likely to agree that a ban existed (p < 0.10). In Model 2, we again interact the correction treatment with ideology. The interaction is in the expected direction (negative) but just misses statistical significance (p < 0.14).

However, as Brambor et al. point out (2006, p. 74), it is not sufficient to consider the significance of an interaction term on its own. The marginal effects of the relevant independent variable need to be calculated for substantively meaningful values of the modifying variable in an interaction. Thus, as before, we estimate the marginal effect of the correction by ideology in Fig. 4.
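For reference, the quantity being plotted can be written out explicitly. The notation below is a sketch introduced here, not the paper's own: $C$ is the correction indicator, $I$ is ideology, and control variables are omitted.

$$
Y = \beta_0 + \beta_1 C + \beta_2 I + \beta_3 (C \times I) + \varepsilon
$$

$$
\frac{\partial Y}{\partial C} = \beta_1 + \beta_3 I
$$

$$
\widehat{\mathrm{se}}\!\left(\frac{\partial Y}{\partial C}\right) = \sqrt{\mathrm{Var}(\hat\beta_1) + I^2\,\mathrm{Var}(\hat\beta_3) + 2I\,\mathrm{Cov}(\hat\beta_1, \hat\beta_3)}
$$

Because the standard error depends on $I$ and on the covariance between the two estimates, the marginal effect can be statistically distinguishable from zero at some values of ideology even when $\hat\beta_3$ on its own is not.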

The figure shows that the stem cell correction has a negative and statistically significant marginal effect on misperceptions among centrists and individuals to the right of center, but fails to significantly reduce misperceptions among those to the left of center. Thus, the correction works for conservatives and moderates, but not for liberals. In other words, while we do not find a backfire effect, the effect of the correction is again neutralized for the relevant ideological subgroup (liberals). This finding provides additional evidence that the effect of corrections is likely to be conditional on one’s political predispositions.

Results—News Media Manipulation

As we briefly mention above, the news source manipulation used in the experiments in Study 2 did not have significant effects. These manipulations, which randomly attributed the articles to either Fox News or the New York Times, were included in order to determine if perceived source biases were driving the results observed in Study 1. However, Wald tests found that including news source indicator variables and the corresponding two- and three-way interactions with the correction treatment and participant ideology did not result in a statistically significant improvement in model fit for any of the experiments in Study 2 (details available upon request).
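The general form of a Wald test for a set of added terms can be sketched as follows. The text does not report which variant was used (chi-square or F), so this is the textbook statistic rather than a description of the authors' exact procedure. Here $\hat\beta$ is the coefficient vector of the expanded model, $\hat V$ is its estimated covariance matrix, and $R$ is a matrix that selects the $q$ coefficients on the news source indicator and its interactions.

$$
H_0 : R\beta = 0
$$

$$
W = (R\hat\beta)^{\top} \left[ R \hat V R^{\top} \right]^{-1} (R\hat\beta) \;\overset{a}{\sim}\; \chi^2_q \quad \text{under } H_0
$$

Failing to reject $H_0$ means the data do not show that the source terms jointly improve on the model that omits them.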

How should we interpret these results, which differ from previous research on source effects in the persuasion literature? One possibility is that the news source manipulation, which consisted of changing the publication title listed at the top of the article (see Appendix), was simply too subtle. Perhaps a more visually striking reminder of the source of the article would have had a more significant effect. Similarly, the sources quoted within the news stories (e.g. President Bush, the Duelfer Report) may be the relevant ones for the purposes of comparison with previous findings. If we had manipulated the sources of the competing claims rather than the source of the news article, our results would likely have been different. Finally, it is possible that the lack of significant source effects is a more general property of two-sided message environments—Hartman and Weber (2009) find that the source framing effects observed in a one-sided message environment were no longer significant in a two-sided message environment.

Conclusion

The experiments reported in this paper help us understand why factual misperceptions about politics are so persistent. We find that responses to corrections in mock news articles differ significantly according to subjects’ ideological views. As a result, the corrections fail to reduce misperceptions for the most committed participants. Even worse, they actually strengthen misperceptions among ideological subgroups in several cases. Additional results suggest that these conclusions are not specific to the Iraq war; not related to the salience of death; and not a reaction to the source of the correction.

Our results thus contribute to the literature on correcting misperceptions in three important respects. First, we provide a direct test of corrections on factual beliefs about politics and show that responses to corrections about controversial political issues vary systematically by ideology. Second, we show that corrective information in news reports may fail to reduce misperceptions and can sometimes increase them for the ideological group most likely to hold those misperceptions. Finally, we establish these findings in the context of contemporary political issues that are salient to ordinary voters.

The backfire effects that we found seem to provide further support for the growing literature showing that citizens engage in “motivated reasoning.” While our experiments focused on assessing the effectiveness of corrections, the results show that direct factual contradictions can actually strengthen ideologically grounded factual beliefs—an empirical finding with important theoretical implications. Previous research on motivated reasoning has largely focused on the evaluation and usage of factual evidence in constructing opinions and evaluating arguments (e.g. Taber and Lodge 2006). By contrast, our research—the first to directly measure the effectiveness of corrections in a realistic context—suggests that it would be valuable to directly study the cognitive and affective processes that take place when subjects are confronted with discordant factual information. Two recent articles take important steps in this direction. Gaines et al. (2007) highlight the construction of interpretations of relevant facts, including those that may be otherwise discomforting, as a coping strategy, while Redlawsk et al. (forthcoming) argue that motivated reasoners who receive sufficiently incongruent information may become anxious and shift into more rational updating behavior.

It would also be helpful to test additional corrections of liberal misperceptions. Currently, all of our backfire results come from conservatives—a finding that may provide support for the hypothesis that conservatives are especially dogmatic (Greenberg and Jonas 2003; Jost et al. 2003a, b). However, there is a great deal of evidence that liberals (e.g. the stem cell experiment above) and Democrats (e.g., Bartels 2002, pp. 133–137; Bullock 2007; Gerber and Huber 2010) also interpret factual information in ways that are consistent with their political predispositions. Without conducting more studies, it is impossible to determine if liberals and conservatives react to corrections differently.Footnote 33

In addition, it would be valuable to replicate these findings with non-college students or a representative sample of the general population. Testing the effectiveness of corrections using a within-subjects design would also be worthwhile, though achieving meaningful results may be difficult for reasons described above. In either case, researchers must be wary of changing political conditions. Unlike other research topics, contemporary misperceptions about politics are a moving target that can change quickly (as the difference between the Iraq WMD experiments in Study 1 and Study 2 suggests).

Future work should seek to use experiments to distinguish the conditions under which corrections reduce misperceptions from those under which they fail or backfire. Many citizens seem unwilling to revise their beliefs in the face of corrective information, and attempts to correct those mistaken beliefs may only make matters worse. Determining the best way to provide corrective information will advance understanding of how citizens process information and help to strengthen democratic debate and public understanding of the political process.

Notes

For instance, Jerit and Barabas (2006) show that the prevalence of misleading statements about the financial status of Social Security in media coverage of the issue significantly increased the proportion of the public holding the false belief that the program was about to “run out of money completely.”

In the experimental design sections below, we provide details about specific corrections and why one ideological group is likely to resist them while the other is likely to be more welcoming.

Backfire effects have also been observed as a result of source partisanship mismatches (Kriner and Howell n.d.; Hartman and Weber 2009) or contrast effects in frame strength (Chong and Druckman 2007). The increase in support for the death penalty observed in Peffley and Hurwitz (2007) when whites are told that the death penalty is applied in a discriminatory fashion against blacks could also be interpreted as a backfire effect. However, we focus on the type of backfire effect identified by Chong and Druckman as “occur[ring] in response to strong frames on highly accessible controversial issues that provoke counterarguing by motivated partisan or ideological individuals” (641).

Meffert et al. (2006) find a very similar result in another simulated campaign experiment.

We report evidence of this phenomenon below in the Study 2 experiment concerning the belief that Iraq had weapons of mass destruction immediately before the U.S. invasion.

Of course, another possible moderator is partisanship, which is highly correlated with ideology in contemporary American politics. The results reported below are substantively almost identical when partisanship is used as a moderator instead (details available upon request).

The signs of the coefficients will vary in practice depending on whether misperceptions about the issue are more likely among liberals or conservatives.

We examine such an explanation in the Iraq WMD portion of Study 2 below.

Participants, who received course credit for participation, signed up via an online subject pool management system for students in psychology courses and were provided with a link that randomly assigned them to treatment conditions. Standard caveats about generalizing from a convenience sample apply. In terms of external validity, college students are more educated than average and may thus be more able to resist corrections (Zaller 1992). However, college students are also known to have relatively weak self-definition and poorly formed attitudes and to be relatively easily influenced (Sears 1986)—all characteristics that would seem to reduce the likelihood of resistance and backfire effects. In addition, as Druckman and Nelson note (2003, p. 733), the related literatures on framing, priming and agenda-setting have found causal processes that operate consistently in student and non-student samples (Kühberger 1998, p. 36; Miller and Krosnick 2000, p. 313). For a general defense of the use of student samples in experimental research, see Druckman and Kam (2010).

Evidence on WMD did not change appreciably after the October 2004 release of the Duelfer Report. No other relevant developments took place until June 2006, when two members of Congress promoted the discovery of inactive chemical shells from the Iran–Iraq War as evidence of WMD (Linzer 2006).

68 percent of respondents in Study 1 were female; 62 percent were white; 56 percent were Catholic. For a convenience sample, respondents were reasonably balanced on both ideology (48 percent left of center, 27 percent centrist, 25 percent right of center) and partisanship (27 percent Republican or lean Republican, 25 percent independent, 48 percent Democrat or lean Democrat).

The experiment was technically a 3 × 2 design with two types of corrections, but we omit the alternative correction condition here for ease of exposition. Excluding these data does not substantively affect the key results presented in this paper.

While President Bush argued that the report showed that Saddam “retained the knowledge, the materials, the means and the intent to produce” WMD, he and his administration did not dispute its conclusion that Iraq did not have WMD or an active weapons program at the time of the U.S. invasion (Balz 2004).

Again, the wording of this dependent variable reflects our definition of misperceptions as beliefs that are either provably false or contradicted by the best available evidence and consensus expert opinion. As we note earlier, it is not possible to definitively disprove the notion that Saddam had WMD and/or an active WMD program immediately prior to the U.S. invasion, but the best available evidence overwhelmingly contradicts that claim.

We use a Likert scale rather than a simple binary response for the dependent variable in each of our studies because we are interested in capturing as much variance as possible in subjects’ levels of belief in various misperceptions of interest. This variance is especially important to understanding the effect of corrections, which may change people’s level of agreement or disagreement with a claim without necessarily switching them from one side of the midpoint to another. In addition, when subjects are pushed from one side of the midpoint to another, the Likert scale allows us to capture the magnitude of the change.

OLS regression models are used in this paper to facilitate interpretation and the construction of marginal effect plots. Results are substantively identical with ordered probit (available upon request).

In addition, interactions between mortality salience and the correction condition were not statistically significant (results available upon request). As such, we do not discuss mortality salience further.

This interaction was not moderated by political knowledge. When we estimated models with the full array of interactions between knowledge, ideology, and corrections, we could not reject the null hypothesis that the model fit was not improved for any of the studies in this paper (results available upon request).

The raw data are especially compelling. The percentage of conservatives agreeing with the statement that Iraq had weapons of mass destruction before the US invasion increased from 32% in the control condition to 64% in the correction condition (n = 33). By contrast, for non-conservatives, agreement went from 22% to 13% (n = 97).

We also conducted an experiment correcting a claim made by Michael Moore in the movie “Fahrenheit 9/11” that the war in Afghanistan was motivated by Unocal’s desire to build a natural gas pipeline through the country. All results of substantive importance to this paper were insignificant. The full wording and results of this experiment are available upon request.

62 percent of respondents to Study 2 were women; 59 percent were Catholic; and 65 percent were white. The sample was again reasonably balanced for a convenience sample on both ideology (52 percent left of center, 17 percent centrist, 31 percent right of center) and partisanship (46 percent Democrat or lean Democrat, 20 percent independent, 33 percent Republican or lean Republican).

Three-way interactions between news source, the correction, and ideology were also insignificant (results available upon request).

Each of the statistical models in Study 2 excludes two subjects with missing data (one failed to answer any dependent variable questions and the other did not report his ideological self-identification).

65 subjects from Study 1 were asked this question, which was added to the instrument partway through its administration.

CBS and CBS/New York Times polls both show statistically significant declines in the percentage of conservatives who called Iraq the most important issue facing the country between September 2005 and May 2006 (details available upon request).

The estimated marginal effect of the correction is positive and statistically significant for liberals who did not choose Iraq as the most important issue. However, this effect is not clear in the raw data. Among subjects who placed themselves to the left of center, a two-sided t-test (unequal variance) cannot reject the null hypothesis that misperception levels of the correction and control groups are equal (p < 0.28).

Factcheck.org offers an excellent primer on the claim that the Bush tax cuts increased government revenue (Robertson 2007).

Again, the raw data are compelling. The percentage of conservatives agreeing with the statement that President Bush's tax cuts have increased government revenue went from 36 to 67% (n = 60). By contrast, for non-conservatives, agreement went from 31 to 28% (n = 136).

It is plausible, for instance, that the stem cell misperception failed to provoke a backfire effect because it was less salient to liberals than the WMD and tax cut misperceptions were for conservatives. Also, conservatives may have been more motivated to defend claims made by President Bush than liberals were to defend statements made by the Democratic Party’s defeated presidential ticket.

References

Allen, M. (2003). Bush: ‘We Found’ banned weapons. Washington Post. May 31, 2003. Page A1.

Althaus, S. L. (1998). Information effects in collective preferences. American Political Science Review, 92(3), 545–558.

Arpan, L. M., & Raney, A. A. (2003). An experimental investigation of news source and the Hostile Media Effect. Journalism and Mass Communication Quarterly, 80(2), 265–281.

Balz, D. (2004). Candidates use arms report to make case. Washington Post, October 8, 2004.

Bartels, L. M. (2002). Beyond the running tally: Partisan bias in political perceptions. Political Behavior, 24(2), 117–150.

Baum, M. A., & Gussin, P. (2007). In the eye of the beholder: How information shortcuts shape individual perceptions of bias in the media. Quarterly Journal of Political Science, 3, 1–31.

Baum, M. A., & Groeling, T. (2009). Shot by the messenger: Partisan cues and public opinion regarding national security and war. Political Behavior, 31(2), 157–186.

Brambor, T., Clark, W. R., & Golder, M. (2006). Understanding interaction models: Improving empirical analyses. Political Analysis, 14, 63–82.

Bullock, J. (2007). Experiments on partisanship and public opinion: Party cues, false beliefs, and Bayesian updating. Ph.D. dissertation, Stanford University.

Chaiken, S. (1980). Heuristic versus systematic information processing and the use of source versus message cues in persuasion. Journal of Personality and Social Psychology, 39(5), 752–766.

Chong, D., & Druckman, J. N. (2007). Framing public opinion in competitive democracies. American Political Science Review, 101(4), 637–655.

Christen, C. T., Kannaovakun, P., & Gunther, A. C. (2002). Hostile media perceptions: Partisan assessments of press and public during the 1997 United Parcel Service Strike. Political Communication, 19(4), 423–436.

Cohen, F., Ogilvie, D. M., Solomon, S., Greenberg, J., & Pyszczynski, T. (2005). American Roulette: The effect of reminders of death on support for George W. Bush in the 2004 presidential election. Analyses of Social Issues and Public Policy, 5(1), 177–187.

Cunningham, B. (2003). Re-thinking objectivity. Columbia Journalism Review July/August 2003, 24–32.

Delli Carpini, M. X., & Keeter, S. (1996). What Americans know about politics and why it matters. New Haven: Yale University Press.

Ditto, P. H., & Lopez, D. F. (1992). Motivated skepticism: use of differential decision criteria for preferred and nonpreferred conclusions. Journal of Personality and Social Psychology, 63(4), 568–584.

Druckman, J. N., & Kam, C. D. (2010). Students as experimental participants: A defense of the ‘Narrow Data Base.’ In J. N. Druckman, D. P. Green, J. H. Kuklinski, & A. Lupia (Eds.), Handbook of experimental political science. New York: Cambridge University Press.

Druckman, J. N., & Nelson, K. R. (2003). Framing and deliberation: How citizens’ conversations limit elite influence. American Journal of Political Science, 47(4), 729–745.

Edwards, K., & Smith, E. E. (1996). A disconfirmation bias in the evaluation of arguments. Journal of Personality and Social Psychology, 71(1), 5–24.

Eveland, W. P., Jr., & Shah, D. V. (2003). The impact of individual and interpersonal factors on perceived media bias. Political Psychology, 24(1), 101–117.

Fournier, R. (2004). First Lady Bashes Kerry Stem Cell Stance. Associated Press August 9, 2004.

Fritz, B., Keefer, B., & Nyhan, B. (2004). All the President’s spin: George W. Bush, the media and the truth. New York: Touchstone.

Gaines, B. J., Kuklinski, J. H., Quirk, P. J., Peyton, B., & Verkuilen, J. (2007). Interpreting Iraq: Partisanship and the meaning of facts. Journal of Politics, 69(4), 957–974.

Gerber, A. S., & Huber, G. A. (2010). Partisanship, political control, and economic assessments. American Journal of Political Science, 54(1), 153–173.

Gilens, M. (2001). Political ignorance and collective policy preferences. American Political Science Review, 95(2), 379–396.

Gollust, S. E., Lantz, P. M., & Ubel, P. A. (2009). The polarizing effect of news media messages about the social determinants of health. American Journal of Public Health, 99(12), 2160–2167.

Greenberg, J., & Jonas, E. (2003). Psychological motives and political orientation–the left, the right, and the rigid: comment on Jost et al. (2003). Psychological Bulletin, 129(3), 376–382.

Gunther, A. C., & Chia, S. C.-Y. (2001). Predicting pluralistic ignorance: The hostile media perception and its consequences. Journalism and Mass Communication Quarterly, 78(4), 688–701.

Gunther, A. C., & Schmitt, K. (2004). Mapping boundaries of the hostile media effect. Journal of Communication, 54(1), 55–70.

Gussin, P., & Baum, M. A. (2004). In the eye of the beholder: An experimental investigation into the foundations of the hostile media phenomenon. Paper presented at the 2004 meeting of the American Political Science Association, Chicago, IL.

Gussin, P., & Baum, M. A. (2005). Issue bias: How issue coverage and media bias affect voter perceptions of elections. Paper presented at the 2005 meeting of the American Political Science Association, Washington D.C.

Hartman, T. K., & Weber, C. R. (2009). Who said what? The effects of source cues in issue frames. Political Behavior, 31(4), 537–558.

Hill, P. (2006). House or Senate shake-up likely to end tax cuts. Washington Times October 5, 2006.

Howell, W. G., & West, M. R. (2009). Educating the public. Education Next, 9(3), 41–47.

Jerit, J., & Barabas, J. (2006). Bankrupt rhetoric: How misleading information affects knowledge about social security. Public Opinion Quarterly, 70(3), 278–303.

Johnson, H. M., & Seifert, C. M. (1994). Sources of the continued influence effect: When misinformation in memory affects later inferences. Journal of Experimental Psychology: Learning, Memory, and Cognition, 20(6), 1420–1436.

Jost, J. T., Glaser, J., Kruglanski, A. W., & Sulloway, F. J. (2003a). Political conservatism as motivated social cognition. Psychological Bulletin, 129(3), 339–375.

Jost, J. T., Glaser, J., Kruglanski, A. W., & Sulloway, F. J. (2003b). Exceptions that prove the rule-using a theory of motivated social cognition to account for ideological incongruities and political anomalies: Reply to Greenberg and Jonas (2003). Psychological Bulletin, 129(3), 383–393.

Kriner, D., & Howell, W. G. (N.d.). Political elites and public support for war. Unpublished manuscript.

Kühberger, A. (1998). The influence of framing on risky decisions: A meta-analysis. Organizational Behavior and Human Decision Processes, 75(1), 23–55.

Kuklinski, J. H., & Quirk, P. J. (2000). Reconsidering the rational public: cognition, heuristics, and mass opinion. In A. Lupia, M. D. McCubbins, & S. L. Popkin (Eds.), Elements of reason: Understanding and expanding the limits of political rationality. London: Cambridge University Press.

Kuklinski, J. H., Quirk, P. J., Schweider, D., & Rich, R. F. (1998). ‘Just the Facts, Ma’am’: Political facts and public opinion. Annals of the American Academy of Political and Social Science, 560, 143–154.

Kuklinski, J. H., Quirk, P. J., Jerit, J., Schweider, D., & Rich, R. F. (2000). Misinformation and the currency of democratic citizenship. The Journal of Politics, 62(3), 790–816.

Kull, S., Ramsay, C., & Lewis, E. (2003). Misperceptions, the media, and the Iraq war. Political Science Quarterly, 118(4), 569–598.

Kunda, Z. (1990). The case for motivated reasoning. Psychological Bulletin, 108(3), 480–498.

Landau, M. J., Solomon, S., Greenberg, J., Cohen, F., Pyszczynski, T., Arndt, J., et al. (2004). Deliver us from evil: The effects of mortality salience and reminders of 9/11 on support for President George W. Bush. Personality and Social Psychology Bulletin, 30(9), 1136–1150.

Lau, R. R., & Redlawsk, D. (2001). Advantages and disadvantages of cognitive heuristics in political decision making. American Journal of Political Science, 45(4), 951–971.

Lebo, M. J., & Cassino, D. (2007). The aggregated consequences of motivated ignorance and the dynamics of partisan presidential approval. Political Psychology, 28(6), 719–746.

Lee, T.-T. (2005). The liberal media myth revisited: An examination of factors influencing perceptions of media bias. Journal of Broadcasting & Electronic Media, 49(1), 43–64.

Linzer, D. (2006). Lawmakers cite weapons found in Iraq. Washington Post June 22, 2006.

Lodge, M., & Taber, C. S. (2000). Three steps toward a theory of motivated political reasoning. In A. Lupia, M. D. McCubbins, & S. L. Popkin (Eds.), Elements of reason: Understanding and expanding the limits of political rationality. London: Cambridge University Press.

Lord, C. G., Ross, L., & Lepper, M. R. (1979). Biased assimilation and attitude polarization: The effects of prior theories on subsequently considered evidence. Journal of Personality and Social Psychology, 37(11), 2098–2109.

Lupia, A. (1994). Shortcuts versus encyclopedias: Information and voting behavior in California insurance reform elections. American Political Science Review, 88(1), 63–76.

Lupia, A., & McCubbins, M. D. (1998). The democratic dilemma: Can citizens learn what they need to know? New York: Cambridge University Press.

Mankiw, G. (2003). Testimony before the U.S. Senate Committee on Banking, Housing, and Urban Affairs. May 13, 2003.

McGregor, H. A., Lieberman, J. D., Greenberg, J., Solomon, S., Arndt, J., Simon, L., et al. (1998). Terror management and aggression: Evidence that mortality salience motivates aggression against worldview-threatening others. Journal of Personality and Social Psychology, 74(3), 590–605.

Meffert, M. F., Chung, S., Joiner, A. J., Waks, L., & Garst, J. (2006). The effects of negativity and motivated information processing during a political campaign. Journal of Communication, 56, 27–51.

Milbank, D. (2003). For Bush tax plan, a little inner dissent. Washington Post February 16, 2003. Page A4.

Miller, J. M., & Krosnick, J. A. (2000). News media impact on the ingredients of presidential evaluations: Politically knowledgeable citizens are guided by a trusted source. American Journal of Political Science, 44(2), 301–315.

Molden, D. C., & Higgins, E. T. (2005). Motivated thinking. In K. J. Holyoak & R. G. Morrison (Eds.), The Cambridge handbook of thinking and reasoning. New York: Cambridge University Press.

Peffley, M., & Hurwitz, J. (2007). Persuasion and resistance: Race and the death penalty in America. American Journal of Political Science, 51(4), 996–1012.

Pew Research Center for the People & the Press (2005). Public more critical of press, but goodwill persists: Online newspaper readership countering print losses. Poll conducted June 8–12, 2005 and released June 26, 2005. Downloaded December 6, 2008 from http://people-press.org/reports/pdf/248.pdf.

Popkin, S. (1991). The reasoning voter. Chicago: University of Chicago Press.

Priest, D., & Pincus, W. (2004). U.S. ‘Almost All Wrong’ on weapons. Washington Post October 7, 2004.

Program on International Policy Attitudes (2004). PIPA-knowledge networks poll: Separate realities of Bush and Kerry supporters. Downloaded December 30, 2009 from http://www.pipa.org/OnlineReports/Iraq/IraqRealities_Oct04/IraqRealities%20Oct04%20quaire.pdf.

Program on International Policy Attitudes (2006). Americans on Iraq: Three years on. Downloaded December 30, 2009 from http://www.worldpublicopinion.org/pipa/pdf/mar06/USIraq_Mar06-quaire.pdf.

Pyszczynski, T., Abdollahi, A., Solomon, S., Greenberg, J., Cohen, F., & Weise, D. (2006). Mortality salience, martyrdom, and military might: The great satan versus the axis of evil. Personality and Social Psychology Bulletin, 32(4), 525–537.

Pyszczynski, T., Solomon, S., & Greenberg, J. (2003). In the wake of 9/11: The psychology of terror. Washington, DC: American Psychological Association.

Redlawsk, D. (2002). Hot cognition or cool consideration? Testing the effects of motivated reasoning on political decision making. Journal of Politics, 64(4), 1021–1044.

Redlawsk, D. P., Civettini, A. J. W., & Emmerson, K. M. (Forthcoming). The affective tipping point: Do motivated reasoners ever ‘Get It’? Political Psychology.

Robertson, L. (2007). Supply-side spin. Factcheck.org. June 11, 2007. Downloaded December 6, 2008 from http://www.factcheck.org/taxes/supply-side_spin.html.

Ross, L., & Lepper, M. R. (1980). The perseverance of beliefs: Empirical and normative considerations. In R. A. Shweder (Ed.), Fallible judgment in behavioral research: New directions for methodology of social and behavioral science (Vol. 4, pp. 17–36). San Francisco: Jossey-Bass.

Sears, D. O. (1986). College sophomores in the laboratory: Influences of a narrow data base on social psychology’s view of human nature. Journal of Personality and Social Psychology, 51(3), 515–530.

Shani, D. (2006). Knowing your colors: Can knowledge correct for partisan bias in political perceptions? Paper presented at the annual meeting of the Midwest Political Science Association.

Shapiro, R. Y., & Bloch-Elkon, Y. (2008). Do the facts speak for themselves? Partisan disagreement as a challenge to democratic competence. Critical Review, 20(1–2), 115–139.

Sides, J., & Citrin, J. (2007). How large the huddled masses? The causes and consequences of public misperceptions about immigrant populations. Paper presented at the 2007 annual meeting of the Midwest Political Science Association, Chicago, IL.

Sniderman, P. M., Brody, R. A., & Tetlock, P. E. (1991). Reasoning and choice: Explorations in political psychology. New York: Cambridge University Press.

Taber, C. S., & Lodge, M. (2006). Motivated skepticism in the evaluation of political beliefs. American Journal of Political Science, 50(3), 755–769.

Vallone, R. P., Ross, L., & Lepper, M. R. (1985). The hostile media phenomenon: Biased perception and perceptions of media bias in coverage of the ‘Beirut Massacre’. Journal of Personality and Social Psychology, 49(3), 577–585.

Wells, C., Reedy, J., Gastil, J., & Lee, C. (2009). Information distortion and voting choices: The origins and effects of factual beliefs in initiative elections. Political Psychology, 30(6), 953–969.

Weiss, R., & Fitzgerald, M. (2004). Edwards, first lady at odds on stem cells. Washington Post August 10, 2004.

Zaller, J. (1992). The nature and origins of mass opinion. New York: Cambridge University Press.

Additional information

A previous version of this paper was presented at the 2006 annual meeting of the American Political Science Association. We thank anonymous reviewers, the editors, and audiences at the University of North Carolina at Chapel Hill, Northwestern University, and the Duke Political Science Graduate Student Colloquium for valuable feedback.

Appendix

Study 1 (WMD): News Text

Wilkes-Barre, PA, October 7, 2004 (AP)—President Bush delivered a hard-hitting speech here today that made his strategy for the remainder of the campaign crystal clear: a rousing, no-retreat defense of the Iraq war.

Bush maintained Wednesday that the war in Iraq was the right thing to do and that Iraq stood out as a place where terrorists might get weapons of mass destruction.

“There was a risk, a real risk, that Saddam Hussein would pass weapons or materials or information to terrorist networks, and in the world after September the 11th, that was a risk we could not afford to take,” Bush said.

[Correction]

While Bush was making campaign stops in Pennsylvania, the Central Intelligence Agency released a report that concludes that Saddam Hussein did not possess stockpiles of illicit weapons at the time of the U.S. invasion in March 2003, nor was any program to produce them under way at the time. The report, authored by Charles Duelfer, who advises the director of central intelligence on Iraqi weapons, says Saddam made a decision sometime in the 1990s to destroy known stockpiles of chemical weapons. Duelfer also said that inspectors destroyed the nuclear program sometime after 1991.

[All subjects]

The President travels to Ohio tomorrow for more campaign stops.

Study 1 (WMD): Dependent Variable

Immediately before the U.S. invasion, Iraq had an active weapons of mass destruction program, the ability to produce these weapons, and large stockpiles of WMD, but Saddam Hussein was able to hide or destroy these weapons right before U.S. forces arrived.

- Strongly disagree [1]
- Somewhat disagree [2]
- Neither agree nor disagree [3]
- Somewhat agree [4]
- Strongly agree [5]

Study 2, Experiment 1 (WMD): News Text

[New York Times/FoxNews.com]

December 14, 2005

During a speech in Washington, DC on Wednesday, President Bush maintained that the war in Iraq was the right thing to do and that Iraq stood out as a place where terrorists might get weapons of mass destruction.

“There was a risk, a real risk, that Saddam Hussein would pass weapons or materials or information to terrorist networks, and in the world after September the 11th, that was a risk we could not afford to take,” Bush said.

[Correction]

In 2004, the Central Intelligence Agency released a report that concludes that Saddam Hussein did not possess stockpiles of illicit weapons at the time of the U.S. invasion in March 2003, nor was any program to produce them under way at the time.

[All subjects]

The President travels to Ohio tomorrow to give another speech about Iraq.

Study 2, Experiment 1 (WMD): Dependent Variable

Immediately before the U.S. invasion, Iraq had an active weapons of mass destruction program and large stockpiles of WMD.

- Strongly disagree [1]
- Somewhat disagree [2]
- Neither agree nor disagree [3]
- Somewhat agree [4]
- Strongly agree [5]

Study 2, Experiment 2 (Tax Cuts): News Text

[New York Times/FoxNews.com]

August 6, 2005

President George W. Bush urged Congress to make permanent the tax cuts enacted during his first term and draft legislation to bolster the Social Security program, after the lawmakers return from their August break.

“The tax relief stimulated economic vitality and growth and it has helped increase revenues to the Treasury,” Bush said in his weekly radio address. “The increased revenues and our spending restraint have led to good progress in reducing the federal deficit.”

The expanding economy is helping reduce the amount of money the U.S. government plans to borrow from July through September, the Treasury Department said on Wednesday. The government will borrow a net $59 billion in the current quarter, $44 billion less than it originally predicted, as a surge in tax revenue cut the forecast for the federal budget deficit.

The White House’s Office of Management and Budget last month forecast a $333 billion budget gap for the fiscal year that ends Sept. 30, down from a record $412 billion last year.

[Correction]