Abstract

Teacher education institutions conduct information and communications technology (ICT) courses to prepare preservice teachers (or initial teacher education candidates) to support their teaching practice with appropriate ICT tools. ICT course evaluations based on preservice teachers’ perceptions of course experiences are limited in indicating the kinds of ICT integration knowledge, or technological pedagogical content knowledge (TPACK), that preservice teachers have gained throughout the course. Preservice teachers’ ICT course experiences have been found to influence their intentions to integrate ICT, but their influence on TPACK perceptions, if better understood, can inform teacher education institutions about the design of ICT courses. This study describes the design and validation of an ICT course evaluation instrument that examines preservice teachers’ perceptions of ICT course experiences and TPACK. Hierarchical regression analysis was performed on survey results collected from a graduating cohort of 869 Singapore preservice teachers who had undergone a compulsory ICT course during their teacher training program. These preservice teachers were being prepared to teach different subject areas at primary schools, secondary schools, and junior colleges (postsecondary institutions for 17- to 19-year-olds) in Singapore. The regression model showed that preservice teachers’ perceived TPACK was first influenced by their perceptions of course experiences that supported the development of intermediary TPACK knowledge components such as technological knowledge and technological pedagogical knowledge. The methodological implications for the design of ICT course evaluation surveys and the practical applications of survey results to the refinement of ICT course curriculum are discussed.

1 Introduction

Teacher education institutions typically conduct information and communications technology (ICT) courses for preservice teachers (or initial teacher education candidates) to teach them strategies for supporting their teaching practice with appropriate ICT tools (Kleiner et al. 2007). The evaluation of ICT courses for preservice teachers has traditionally been based upon preservice teachers’ perceptions of their course experiences with respect to course content, course delivery, and learning environment. The technological pedagogical content knowledge (TPACK) framework of Mishra and Koehler (2006) shifted the focus of ICT course evaluations towards TPACK surveys that assess preservice teachers’ knowledge for integrating ICT into their lesson activities, that is, their knowledge for ICT integration. This is because the TPACK framework provided a theoretical explanation for the long-standing observation that ICT courses instructing preservice teachers about technological skills alone do not adequately prepare them to integrate ICT into their lesson activities (Brush and Saye 2009; Moursund and Bielefeldt 1999; Pierson 2001). The framework proposes that K-12 teachers draw upon their technological knowledge, pedagogical knowledge, and content knowledge as the three basic knowledge sources when integrating ICT into their lesson activities. They also make connections among these three knowledge sources to derive four other types of knowledge, termed technological content knowledge, technological pedagogical knowledge, pedagogical content knowledge, and TPACK. These seven types of knowledge constitute teachers’ knowledge base for ICT integration.

Studies from the USA, Taiwan, and Singapore show that some educational institutions in these countries are using the seven TPACK constructs to design surveys for evaluating both preservice and in-service teachers’ learning through ICT courses (Chai et al. 2010; Graham et al. 2009; Koh et al. 2010; Schmidt et al. 2009). This study examines how TPACK surveys can be integrated with surveys of preservice teachers’ ICT course experiences for a more holistic evaluation of ICT courses. TPACK surveys evaluate the knowledge perceptions of teachers but not their ICT course experiences, which are the main conduit used by teacher education institutions to develop teachers’ TPACK. A more comprehensive evaluation of ICT courses therefore can be achieved if both aspects are included. Additionally, their relationships need to be examined so that insights can be derived for course improvement.

The development of TPACK surveys is still in its early stages, and construct validation of these surveys is still lacking. This paper contributes to the development of ICT course evaluation by describing the design and validation of a survey instrument that examines both preservice teachers’ perceptions of course experiences and their TPACK perceptions. The survey results examined were obtained from a cohort of 869 graduating preservice teachers who were trained to teach at the primary, secondary, and junior college levels in Singapore schools (junior colleges provide postsecondary education for 17- to 19-year-olds). A hierarchical regression analysis was then used to model the contribution of course experience and TPACK constructs to preservice teachers’ TPACK. The implications of these results for ICT course design, as well as the methodological issues associated with TPACK-focused ICT course evaluation, are discussed.

2 Approaches to ICT course evaluation

Two broad approaches have been used to design instruments for the evaluation of ICT courses for preservice teachers.

2.1 Course experience in ICT course evaluation

Before the formation of the TPACK framework, preservice teachers’ perceptions of their ICT course experiences during teacher education were used as a basis for ICT course evaluation. These typically include preservice teachers’ perceptions of the learning environment, course content, and course delivery they experienced during their ICT courses. The use of course content, course delivery, and learning environment as factors in ICT course evaluations can be linked to technology adoption models. Technology adoption theories provide a lens for understanding how and why an individual adopts innovations by revealing the undergirding cognitive, emotional, and contextual conditions (Straub 2009). Take, for instance, Rogers’ Innovation Diffusion Theory (IDT; Rogers 1995). This theory outlines a five-stage process for understanding individual adoption: (1) awareness, (2) persuasion, (3) decision, (4) implementation, and (5) confirmation. ICT courses expose preservice teachers to different types of technologies, as well as their strengths and weaknesses, during teacher education. These courses are designed to shape stages 1 and 2 of this process by enhancing preservice teachers’ self-efficacy and attitudes towards technology adoption. These factors have a positive influence on preservice teachers’ decisions to integrate computers into their lessons when they transition from teacher trainees to full-fledged school teachers (Wozney et al. 2006). As a result, they can also affect the preservice teachers’ subsequent stages of ICT adoption.

The Technology Acceptance Model (TAM) is another model for examining technology adoption. In contrast with IDT, which analyzes the type of adoption environment, TAM focuses on the innovation itself (Davis 1989). Two perceived characteristics have been identified as important, namely, perceived ease of use and perceived usefulness. While perceived ease of use refers to the “degree to which a person believes that using a particular system would be free of effort” (Davis 1989, p. 320), perceived usefulness is defined as “the degree to which a person believes that using a particular system would enhance his or her job performance” (Davis 1989, p. 320). Course content, delivery, and learning environment are factors that will directly influence teachers’ perceived ease of use. Teachers’ perceptions of the use of a technological tool in the classroom are influenced by the depth to which the features and affordances of that tool are addressed in a course. Likewise, the conditions of a learning environment that facilitate the testing of a tool will also influence the perceived ease of use. Some studies of Singapore preservice teachers (e.g., Teo et al. 2009) showed that these factors influenced their computer attitudes. Therefore, the examination of preservice teachers’ perceptions about ICT course content, course delivery, and learning environment can reveal the nature of experiences that influence their decisions about integrating ICT into their teaching practices.

Studies such as Wozney et al. (2006) and Teo et al. (2009) showed that the evaluation of ICT course experiences perceived by preservice teachers can provide some indication of their intention to integrate ICT. However, the adoption of TPACK as a framework for ICT evaluation by some educational institutions (see Chai et al. 2010; Graham et al. 2009; Schmidt et al. 2009) points to the need to examine the kinds of knowledge about ICT integration that teachers perceive themselves to have developed throughout ICT courses. Therefore, it is worthwhile to consider this aspect in ICT course evaluations.

2.2 ICT Course Evaluations with TPACK Surveys

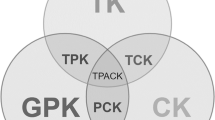

The TPACK framework was built upon Shulman’s (1986) conception of pedagogical content knowledge, which considered teachers’ knowledge of teaching practices as a unique knowledge form built upon their pedagogical knowledge and content knowledge. In a similar fashion, Mishra and Koehler (2006) proposed that the integration of teachers’ technological knowledge, pedagogical knowledge, and content knowledge can be considered as TPACK (see Fig. 1).

Fig. 1 TPACK framework, as depicted by Mishra and Koehler (2006, p. 1025)

The seven types of teacher ICT integration knowledge used in the construction of TPACK surveys are defined as follows:

1. Technological knowledge (TK): knowledge of technology tools.

2. Pedagogical knowledge (PK): knowledge of teaching methods.

3. Content knowledge (CK): knowledge of subject matter.

4. Technological pedagogical knowledge (TPK): knowledge of using technology to implement teaching methods.

5. Technological content knowledge (TCK): knowledge of subject matter representation with technology.

6. Pedagogical content knowledge (PCK): knowledge of teaching methods with respect to subject matter content.

7. Technological pedagogical content knowledge (TPACK): knowledge of using technology to implement constructivist teaching methods for different types of subject matter content.
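The seven knowledge types listed above correspond to the seven non-empty combinations of the three basic sources (technological, pedagogical, content). The following is a minimal illustrative sketch of that structure; the single-letter keys and the `LABELS` mapping are an encoding assumed here for illustration and are not part of the survey instrument.

```python
from itertools import combinations

# Hypothetical encoding: T = technological, P = pedagogical, C = content.
# The labels are the abbreviations used in the list of knowledge types.
LABELS = {
    ("T",): "TK",
    ("P",): "PK",
    ("C",): "CK",
    ("T", "P"): "TPK",
    ("T", "C"): "TCK",
    ("P", "C"): "PCK",
    ("T", "P", "C"): "TPACK",
}


def tpack_constructs():
    """Enumerate every non-empty combination of the three base sources."""
    order = ("T", "P", "C")
    found = []
    for size in range(1, len(order) + 1):
        for subset in combinations(order, size):
            found.append(LABELS[subset])
    return found


constructs = tpack_constructs()
print(constructs)
# ['TK', 'PK', 'CK', 'TPK', 'TCK', 'PCK', 'TPACK']
```

Three basic sources give 2³ − 1 = 7 constructs, which is why a TPACK survey is expected to yield a seven-factor structure.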

Early studies in this area attempted to trace teachers’ TPACK development through qualitative analysis of their learning behaviors during ICT courses (e.g., Koehler et al. 2007), which was insightful. TPACK surveys can be used to complement qualitative TPACK studies because they are comparatively easier to replicate across large cohorts, which can inform teacher educators about the broad trends and patterns in teachers’ TPACK development. Despite these advantages, the lack of validated TPACK surveys has limited their widespread adoption in ICT course evaluation. To date, several TPACK surveys in the areas of pre K-6 education, science education, e-learning facilitation, and web-based resource use have been developed (Archambault and Crippen 2009; Lee and Tsai 2010; Schmidt et al. 2009). The internal reliabilities of these instruments have been established, but construct validation has proved more challenging. Koh et al. (2010) administered Schmidt et al.’s survey to a large sample of 1,185 Singapore preservice teachers and managed to validate the CK and TK items using factor analysis. The technology-related factors of TPK, TCK, and TPACK loaded together as one factor whereas the PK and PCK items loaded as another. These results were largely similar to the findings reported by Archambault and Barnett (2010) and Lee and Tsai (2010).

Chai et al. (2011) found better construct validation with a TPACK for Meaningful Learning Survey that was customized to assess an ICT course that emphasized constructivist pedagogical approaches. The items for TK, PK, CK, TPK, and TPACK were validated whereas the TCK and PCK items merged with other constructs. Chai et al. (2011) added the stem “Without using technology” to better distinguish the PCK items from the technology-related factors. Factor analysis of the survey results collected from 214 Singapore preservice teachers successfully yielded the seven TPACK factors as postulated by Mishra and Koehler (2006). Therefore, such approaches to the design of TPACK survey items can be considered for ICT course evaluation.

While TPACK surveys can reveal teachers’ perceptions of their ICT integration knowledge, the major TPACK surveys reviewed above have nevertheless omitted the examination of preservice teachers’ perceptions of ICT course experiences. The linkages between preservice teachers’ perceptions of course experiences and their TPACK have yet to be thoroughly examined. To do so, there is a need for survey instruments that capture preservice teachers’ satisfaction with course delivery, course content, and supporting environment, as well as their TPACK perceptions. Such an instrument will make ICT course evaluations more useful because it enables teacher education institutions to track how their ICT course content, course delivery, and learning environment contribute to the development of preservice teachers’ knowledge for ICT integration, that is, their TPACK. This study attempts to construct such a survey by adapting items from both the TPACK for Meaningful Learning Survey and the Technology Acceptance Model survey by Teo et al. (2009).

2.3 Research Focus

Given the above analysis, this study therefore seeks to examine:

1. The construct validity of an ICT course evaluation that assesses factors related to both Course Experience and TPACK of Singapore preservice teachers

2. Singapore preservice teachers’ perception of course experience and TPACK with respect to an ICT course

3. The contributions of course experience and TPACK factors to Singapore preservice teachers’ TPACK

3 Methodology

This study uses a cross-sectional survey design to obtain information from a large pool of participants at a single point in time (Creswell 2005). Participants are preservice teachers from Singapore’s only nationally recognized teacher training institute, which prepares preservice teachers to teach at primary, secondary, and junior college levels through three kinds of programs. The Diploma in Education (DipEd) program is a 2-year course that prepares preservice teachers without a degree to teach in primary schools. The Post-Graduate Diploma in Education (PGDE) program prepares preservice teachers who are degree holders to teach at primary schools, secondary schools, and junior colleges. The PGDE program is therefore further divided into three levels: the PGDE (primary), the PGDE (secondary), and the PGDE (junior college). The Bachelor programs are 4-year undergraduate degrees in Education for the training of preservice teachers to teach at primary and secondary schools. To obtain reasonably representative survey results, a compulsory ICT course that prepares preservice teachers to integrate ICT into their lesson design was used as the subject matter for eliciting participants’ perceptions. Preservice teachers from the DipEd and PGDE programs were chosen to take part in the survey, giving a participation rate of about three quarters of the total preservice teacher population at the institute. All the preservice teachers undergo the ICT course in their first year of study. Those from the Bachelor programs were dropped from the study because there was a 3-year gap between their attendance of the ICT course and the survey administration. Because of this time lag, the preservice teachers from these programs may not have had strong recall of their ICT course experiences. The researchers felt that these preservice teachers’ perceptions of the ICT course may have been compromised and therefore did not select them for the study.

3.1 The ICT Course

All preservice teachers need to attend the ICT course at the National Institute of Education in Singapore. The 12-week course is named “ICT for Meaningful Learning”. Preservice teachers meet weekly for 2-hour tutorial sessions. The course comprises two parts. The first part is 4 weeks long and consists of tutor-led discussions incorporating preservice teachers’ peer teaching to learn the five dimensions of meaningful learning, which are adapted from Howland et al. (2012). The five dimensions are: (1) engaging prior knowledge, (2) learning by doing, (3) real-world contexts, (4) collaborative learning, and (5) self-directed learning. Understanding these dimensions allows preservice teachers to move on to the second part of the course, which focuses on designing lessons using ICT. In this part, preservice teachers select three ICT tools from a suite of nine tools that include interactive whiteboards, Hot Potatoes™ for developing computer-based quizzes, as well as web-based tools such as blogs, podcasts, and the Knowledge Forum™. These tools are selected in consultation with their tutor. Preservice teachers then learn how to apply the selected tools to design a technology-enabled lesson for a topic from their teaching subject based on the five dimensions they learned earlier. The highlight of the lesson design activity is for preservice teachers to demonstrate their abilities in integrating technologies, pedagogy, and content knowledge to deliver a well-planned classroom lesson. In short, it is the TPACK of the preservice teachers that the course aims to develop.

3.2 Sample

The sample for this study consists of preservice teachers from the DipEd and PGDE programs. Despite the subdivision of the PGDE program, both DipEd and PGDE programs conducted the same ICT course requiring preservice teachers to go through the same course content and activities.

In total, 869 student teachers from both programs took part in the survey, representing a participation rate of about 75%. The demographics of the participants are given in Table 1.

3.3 Administration of the Survey

The survey, printed in hard copy, was administered at the end of the last semester before the preservice teachers’ graduation from their respective programs. It was conducted in a lecture hall under the supervision of course coordinators and two administrative staff. The role of the coordinators was merely to explain the purpose of the survey and to answer queries. Preservice teachers were given the choice of opting out of the survey, and their participation in the survey was entirely voluntary. Table 1 shows that 289 preservice teachers opted out of the survey. After the survey, responses were digitized and coded using SPSS.
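The reported participation rate can be checked from the two counts given in the text. The sketch below assumes that the eligible pool is simply the 869 participants plus the 289 who opted out; the function name is hypothetical.

```python
def participation_rate(participants: int, opted_out: int) -> float:
    """Share of eligible preservice teachers who completed the survey."""
    # Assumption: eligible pool = those who took part + those who opted out.
    eligible = participants + opted_out
    return participants / eligible


rate = participation_rate(participants=869, opted_out=289)
print(f"{rate:.1%}")  # 75.0%
```

Under that assumption, 869 of 1,158 eligible preservice teachers responded, which agrees with the rate of about 75% reported for the sample.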

3.4 Instrumentation

The survey instrument comprises two sections. The first section comprises 14 questions on three constructs of course experience: course delivery, course content, and course environment. These were adapted from the Technology Acceptance Model survey by Teo et al. (2009), which was validated through confirmatory factor analysis of survey responses obtained from 483 Singapore preservice teachers who attended the same ICT course at the said institution. The items were formulated on the basis of the course aims and requirements, and the questions were adapted by a team of lecturers who had taught the course for several years. The purpose of this section is to find out preservice teachers’ satisfaction with the course.

The next section comprises 30 questions taken from the TPACK for Meaningful Learning Survey by Chai et al. (2011). This survey was chosen because its items for the seven TPACK constructs were successfully validated through confirmatory factor analysis of responses from 336 Singapore preservice teachers who were attending the ICT course in Chai et al.’s study. It addressed the five dimensions of meaningful learning covered in the course. All items on the survey were rated on a seven-point Likert-type scale with the following options: 1, strongly disagree; 2, disagree; 3, slightly disagree; 4, neither agree nor disagree; 5, slightly agree; 6, agree; and 7, strongly agree. The survey was validated by the course departmental head, who is well versed in the content of the ICT course, and another senior member of the department; both have many years of survey experience. Differences in opinion about survey items were resolved through negotiation.

3.5 Data Analysis

The first research focus of this study was to examine the construct validity of the instrument. The internal reliability of the instrument was first assessed by computing its Cronbach’s alpha. Following the approach recommended by Hair et al. (2010), confirmatory factor analysis was carried out using AMOS 20 to validate the constructs for the Course Experience scale and the TPACK scale that were defined a priori. The standardized regression weights, average variance extracted, and model fit indices were used to establish construct validity.
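As an illustration of the internal reliability check, the following minimal sketch computes Cronbach’s alpha from item-level ratings using the standard formula based on item variances and the variance of respondents’ total scores. The ratings shown are hypothetical and are not taken from the survey data; the study itself used SPSS rather than this code.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for one scale.

    item_scores: list of respondents, each a list of item ratings
    (e.g. 1-7 Likert codes), all of equal length.
    """
    k = len(item_scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in item_scores]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in item_scores])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical ratings from five respondents on a three-item scale.
responses = [
    [5, 6, 5],
    [4, 4, 5],
    [6, 7, 6],
    [3, 4, 3],
    [5, 5, 6],
]
alpha = cronbach_alpha(responses)
print(round(alpha, 2))  # 0.92
```

Alpha approaches 1 when the items of a scale move together across respondents, which is why it is read as an index of internal consistency.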

For the second research focus, it was hypothesized that there would be significant differences in how teachers perceive the factors that define course experience and TPACK. This was examined through repeated-measures ANOVA. The possibility of significant relationships between the course experience factors and the TPACK constructs was first examined using Pearson’s correlations. Following this, regression analysis was carried out to examine the impact of students’ perceptions of course experience and TPACK factors on the dependent variable of TPACK. This addressed the third research focus. It was hypothesized that these factors would have a significant positive influence on preservice teachers’ perception of TPACK.

4 Results

4.1 Research focus 1—Construct validity of instrument

The overall reliability of the instrument was high (α = 0.97). All items for the three constructs of course experience loaded significantly with standardized regression weights of at least 0.70, as specified by Hair et al. (2010; see Table 2). The average variance extracted was 0.67 and satisfactory model fit was obtained (χ² = 353.93, χ²/df = 4.92, p < 0.0001, TLI = 0.96, CFI = 0.97, RMSEA = 0.067 (LO90 = 0.060, HI90 = 0.074), SRMR = 0.03). The internal reliabilities of these constructs were high (course delivery, α = 0.89; course content, α = 0.94; support/environment, α = 0.89).
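For readers unfamiliar with the average variance extracted (AVE), the sketch below shows how it is commonly computed for one construct: as the mean of the squared standardized loadings. The loadings used are hypothetical and do not come from Table 2.

```python
def average_variance_extracted(loadings):
    """AVE for one construct: mean of the squared standardized loadings."""
    if not loadings:
        raise ValueError("at least one loading is required")
    return sum(value * value for value in loadings) / len(loadings)


# Hypothetical standardized loadings for a four-item construct.
hypothetical_loadings = [0.78, 0.82, 0.85, 0.80]
ave = average_variance_extracted(hypothetical_loadings)
print(round(ave, 2))  # 0.66

# With every loading at the 0.70 floor, AVE cannot fall below 0.49.
floor_ave = average_variance_extracted([0.70] * 5)
```

This also shows why the 0.70 loading criterion matters: when every item meets it, the construct explains at least about half of the variance in its items.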

For the TPACK scale, items TK1 (I have technical skills to use computers effectively), TK6 (I am able to use social media (e.g., Blog, Wiki, and Facebook)), and TPACK4 (I can provide leadership in helping others to coordinate the use of content, technologies, and teaching approaches at my school and/or district) were removed as their loadings were less than 0.70. The other 27 items loaded significantly according to the seven-factor structure theorized by Mishra and Koehler (2006; see Table 3). The average variance extracted was 0.71 and satisfactory model fit was obtained (χ² = 1,535.14, χ²/df = 5.12, p < 0.0001, TLI = 0.93, CFI = 0.94, RMSEA = 0.069 (LO90 = 0.065, HI90 = 0.072), SRMR = 0.04). The internal reliabilities of these factors were high (CK, α = 0.92; PK, α = 0.91; PCK, α = 0.91; TK, α = 0.89; TPK, α = 0.92; TCK, α = 0.89; TPACK, α = 0.94). Therefore, construct validation of the survey was established for both the course experience and TPACK scales.
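The reported RMSEA values can be approximately reproduced from the chi-square statistics with the usual point-estimate formula, RMSEA = sqrt(max(χ² − df, 0) / (df × (N − 1))). In the sketch below, the degrees of freedom (72 and 300) are not stated in the text; they are inferred here by dividing each χ² by its reported χ²/df ratio and rounding, so they should be read as assumptions.

```python
from math import sqrt


def rmsea(chi_square: float, df: int, n: int) -> float:
    """Point estimate of RMSEA using the (N - 1) convention."""
    return sqrt(max(chi_square - df, 0.0) / (df * (n - 1)))


# Assumed df values: 353.93 / 4.92 is about 72; 1535.14 / 5.12 is about 300.
course_experience = rmsea(353.93, df=72, n=869)
tpack_scale = rmsea(1535.14, df=300, n=869)
print(round(course_experience, 3), round(tpack_scale, 3))  # 0.067 0.069
```

Both estimates match the reported values to three decimal places, which suggests the fit statistics for the two scales are internally consistent.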

4.2 Research focus 2—Perceptions of course experience and TPACK

Preservice teachers’ ratings for the three aspects of course experience were positive as their mean ratings were close to or above 5 on the seven-point scale (course delivery: M = 5.21, SD = 0.90; course content: M = 4.93, SD = 1.03; support/environment: M = 5.30, SD = 0.89). A repeated-measures ANOVA with a Greenhouse–Geisser correction determined that there were statistically significant differences among preservice teachers’ mean ratings for the three aspects of course experience (F (1.89, 1636.00) = 126.42, p < 0.001), which supported the hypothesis.

Post hoc tests using the Bonferroni correction showed that the preservice teachers’ rating of course delivery was significantly higher than that for course content, whereas their rating for course content was significantly lower than that for support/environment. Therefore, the preservice teachers were more satisfied with course delivery than with course content. Their rating for support/environment was also significantly higher than that for course delivery, suggesting that they were more satisfied with the support/environment than with course delivery.
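To make the Bonferroni correction concrete, the sketch below enumerates the pairwise comparisons among a set of factors and divides a family-wise significance level by their number. The family-wise level of 0.05 is an assumption made for illustration; the text does not state the level used.

```python
from itertools import combinations


def bonferroni_pairs(factors, family_alpha=0.05):
    """List all pairwise comparisons and the per-comparison alpha."""
    pairs = list(combinations(factors, 2))
    return pairs, family_alpha / len(pairs)


pairs, per_test_alpha = bonferroni_pairs(
    ["course delivery", "course content", "support/environment"]
)
print(len(pairs), round(per_test_alpha, 4))  # 3 0.0167

# The same helper applied to the seven TPACK constructs.
tpack_pairs, tpack_alpha = bonferroni_pairs(
    ["CK", "PK", "PCK", "TK", "TPK", "TCK", "TPACK"]
)
print(len(tpack_pairs))  # 21
```

With seven constructs there are 21 pairwise comparisons, so each individual test is held to a much stricter threshold than with the three course experience factors.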

The preservice teachers were also confident of the different aspects of their TPACK as the mean ratings of these factors were above 5 on the seven-point scale (CK: M = 5.37, SD = 0.93; PK: M = 5.19, SD = 0.80; PCK: M = 5.23, SD = 1.00; TK: M = 5.18, SD = 1.05; TPK: M = 5.21, SD = 0.84; TCK: M = 5.08, SD = 0.99; TPACK: M = 5.26, SD = 0.85). A repeated-measures ANOVA with a Greenhouse–Geisser correction determined that there were statistically significant differences among preservice teachers’ mean ratings for the seven aspects of TPACK (F (4.67, 4055.02) = 18.18, p < 0.001). Using the Bonferroni correction, post hoc tests showed that the preservice teachers were most confident of their CK as it was significantly higher than all the other five TPACK constructs. The preservice teachers were largely confident about teaching with and without ICT because there were no significant differences among their ratings of PK, PCK, TK, TPK, and TPACK. Preservice teachers’ perception of PK was significantly higher only than their perception of TCK. However, within the technology-related factors, the preservice teachers were least confident of their TCK as it was rated significantly lower than TK, TPK, and TPACK. Preservice teachers were equally confident of their TK, TPK, and TPACK as there were no significant differences among these variables. These results indicated that preservice teachers were generally confident about their TK as well as about using ICT-related pedagogies (TPK and TPACK). However, they were not as confident about the use of content-specific subject technology tools (TCK).
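The fractional degrees of freedom in the F statistics arise because the Greenhouse–Geisser correction multiplies both degrees of freedom by an estimated sphericity parameter ε. The sketch below recovers the ε implied by a reported numerator df and recomputes the corrected degrees of freedom; the function names are hypothetical, and the sample size of 869 is assumed to apply without missing cases.

```python
def implied_epsilon(corrected_df1: float, k: int) -> float:
    """Greenhouse-Geisser epsilon implied by a reported numerator df."""
    return corrected_df1 / (k - 1)


def corrected_dfs(epsilon: float, k: int, n: int):
    """Corrected (df1, df2) for a one-way repeated-measures ANOVA
    with k within-subject conditions and n respondents."""
    df1 = epsilon * (k - 1)
    df2 = epsilon * (k - 1) * (n - 1)
    return df1, df2


# Seven TPACK constructs, reported numerator df of 4.67.
eps_tpack = implied_epsilon(4.67, k=7)
df1, df2 = corrected_dfs(eps_tpack, k=7, n=869)
print(round(eps_tpack, 3), round(df1, 2), round(df2, 2))
```

Under these assumptions, ε is roughly 0.78 and the recomputed denominator df is close to the reported 4055.02; the small gap is consistent with the numerator df having been rounded to two decimal places.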

4.3 Research focus 3—Relationship between perceived course experience and TPACK perceptions

Table 4 suggests possible relationships between course experience factors and TPACK factors. Course delivery and support/environment generally have moderate positive correlations with the TPACK factors, with coefficients largely ranging from 0.30 to 0.60 (see Fraenkel and Wallen 2003). Among these, the correlation with TPACK was the highest. Comparatively, TPACK is more strongly correlated with the TPACK factors than with the course experience factors. CK, PK, TK, TPK, and TCK, in particular, had strong positive correlations of 0.60 and above. These results could imply that course content, course delivery, and support/environment functioned as the conditions that support preservice teachers in fostering TPACK, whereas TPACK is more strongly influenced by how teachers integrate knowledge within the course context. These findings were therefore used to guide the development of a hierarchical multiple linear regression model with TPACK as the dependent variable. Using the Enter method, course content, course delivery, and support/environment were defined as the first block of independent variables. According to Mishra and Koehler (2006), TK, PK, and CK are the three basic sources of TPACK. These foster intermediate knowledge sources such as TPK and PCK that are integrated to form TPACK. Therefore, TK, PK, and CK were entered as the second block of independent variables while PCK, TCK, and TPK were entered as the third block to study the effects of these groups of variables more clearly. The regression model was significant and explained 79% of the total model variance (see Table 5).

The R² of model 1 showed that the course experience factors accounted for 35% of the model variance. Model 2 showed that the addition of the basic knowledge sources of TK, PK, and CK accounted for another 26% of the model variance, whereas the addition of the intermediate variables raised the explained variance by a further 18%.
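The block-wise logic of a hierarchical regression can be sketched as follows: predictors are entered cumulatively in blocks, and the increase in R² after each block is attributed to that block. This is a dependency-free illustration on synthetic data only; it does not use the survey responses, the helper names are hypothetical, and the study itself would have relied on a statistics package rather than this code.

```python
import random


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def r_squared(predictors, y):
    """R^2 of an OLS fit of y on the predictor columns plus an intercept."""
    rows = [[1.0] + [col[i] for col in predictors] for i in range(len(y))]
    p = len(rows[0])
    # Normal equations: (X'X) beta = X'y.
    xtx = [[sum(r[j] * r[k] for r in rows) for k in range(p)] for j in range(p)]
    xty = [sum(r[j] * yi for r, yi in zip(rows, y)) for j in range(p)]
    beta = solve(xtx, xty)
    fitted = [sum(bj * rj for bj, rj in zip(beta, r)) for r in rows]
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1 - ss_res / ss_tot


def hierarchical_r2(blocks, y):
    """Enter blocks cumulatively; return (R^2, delta R^2) after each block."""
    entered, steps, previous = [], [], 0.0
    for block in blocks:
        entered.extend(block)
        r2 = r_squared(entered, y)
        steps.append((r2, r2 - previous))
        previous = r2
    return steps


# Synthetic data only: three blocks of three standard-normal predictors.
rng = random.Random(0)
n = 200


def column():
    return [rng.gauss(0, 1) for _ in range(n)]


block1 = [column() for _ in range(3)]  # stands in for course experience
block2 = [column() for _ in range(3)]  # stands in for TK, PK, CK
block3 = [column() for _ in range(3)]  # stands in for PCK, TCK, TPK
y = [
    0.3 * block1[0][i] + 0.4 * block2[0][i] + 0.6 * block3[0][i]
    + rng.gauss(0, 0.5)
    for i in range(n)
]

steps = hierarchical_r2([block1, block2, block3], y)
for index, (r2, delta) in enumerate(steps, start=1):
    print(f"model {index}: R2 = {r2:.2f}, delta R2 = {delta:.2f}")
```

The increments always sum to the final R², which mirrors the reported results: 0.35 + 0.26 + 0.18 = 0.79.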

Analysis of model 3 shows that among the factors for course experience, the preservice teachers perceived course content to be the significant predictor of their TPACK whereas the other two factors were not significant. The preservice teachers perceived all the TPACK factors to be significant predictors. Interestingly, PCK was significant but negative. Among these, the perceived effects of TPK and TCK were the largest whereas the effects of TK, PK, CK, and course content were substantially smaller. Therefore, the hypothesis that course experience and TPACK factors have a significant positive influence on TPACK is largely supported in terms of TPACK factors (except for PCK) and partially supported for course experience factors.

5 Discussion

5.1 ICT course design

The preceding results showed that this graduating cohort of Singapore preservice teachers had higher ratings for course delivery and course environment. Nevertheless, the regression model revealed that course content emphasizing practical examples and hands-on ICT integration assignments was most influential for their TPACK development. The variance explained by the hierarchical regression model also suggests that such course activities serve as effectual conditions for teachers to draw upon their basic knowledge sources of TK, PK, and CK to further develop intermediate knowledge sources such as TPK and TCK. The addition of intermediate variables added 18% to the total model variance, which was substantial. The technology-related intermediate variables of TPK and TCK in turn made the largest contribution to TPACK in the final model. This finding supports the contention that TPACK is best developed through design experiences as these present preservice teachers with concrete scenarios in which to integrate different TPACK factors (Koehler and Mishra 2005; Koehler et al. 2007). While there is a need to emphasize design experiences in the ICT course, these results do not imply that course delivery and course environment can be ignored because these factors influenced preservice teachers’ computer adoption attitudes in some ICT evaluation studies. For instance, activities that are longer in duration (contact hours plus follow-up), engage teachers meaningfully in relevant activities, and provide access to new technologies for teaching and learning have all been found to be important conditions in providing quality learning experiences for teachers (Adelman et al. 2002; Porter et al. 2000; Sparks 2002). The preservice teachers could have considered the design experiences to be more related to their TPACK formation. It is unclear, however, if negative relationships with TPACK would occur if preservice teachers’ satisfaction with these factors were very low.

Two aspects need to be addressed with respect to improving the specific ratings for the factors of course experience and TPACK that surfaced from the ANOVA results. The preservice teachers were generally positive about their ICT course experiences; however, their ratings for course content were slightly lower than those for the two other aspects. There are two possible reasons for this. First, preservice teachers attended the course with the expectation of learning how to use specific technology, such as video editing software. The course, however, focused on the integration of technology into instruction, thereby creating some disjunction in preservice teachers’ perceptions of the course content. Second, learning about technology integration cannot be separated from student-centered pedagogies that emphasize analytical thinking and problem solving (Bransford et al. 2000; Goldman et al. 2006). This is in contrast with teacher-directed instruction, which emphasizes the memorization of facts and the practice of procedures. Therefore, unless preservice teachers are convinced of the student-centered paradigm of teaching and learning, the course content will remain challenging for some. To improve preservice teachers’ learning about student-centered pedagogies, a more participative approach in the form of reciprocal teaching has been introduced into the course. Through the process of peer teaching, the intent is to deepen preservice teachers’ understanding of student-centered pedagogies and hopefully transform their epistemology (Divaharan et al. 2011).

Their ratings for the TPACK factors suggest that the preservice teachers were equally confident of their TK, TPK, and TPACK. TPACK is related to the design of ICT lessons for specific content and topics whereas TPK is related to the knowledge of technologically supported pedagogies, which is more general (Cox and Graham 2009). As the course was designed to have preservice teachers learn about technology integration by experiencing technology (learn by doing), these results suggest that the course pedagogies employed have successfully supported preservice teachers in transferring general models of TPK into the context of their teaching subjects (TPACK).

In spite of this, the course content has since been improved by expanding opportunities for preservice teachers to design topic-specific lesson ideas. Lesson ideas differ from a lesson plan in their scope of design: the focus is on a single activity rather than on the entire lesson (presumably made up of a series of activities). Additionally, preservice teachers engage in such design activities multiple times over the course rather than as a one-time assessment. The rationale is to engage preservice teachers in a knowledge creation mode where they start thinking about how technology can be infused into specific topics at the onset of their career (Divaharan et al. 2011).

5.2 ICT course evaluation

The study results show that for the preservice teachers in this study, both course experience factors and TPACK factors are relevant for the evaluation of this ICT course. The course experience factors accounted for about 35 % of the regression model variance. These were conditions that facilitated the development of the TPACK factors, which in turn contributed the other 44 % of the regression model variance. ICT evaluations that focus on TPACK assessments alone gain insight only into preservice teachers’ knowledge perceptions (e.g., Schmidt et al. 2008, 2009). Evaluations that focus on preservice teachers’ course experiences may capture some aspects of their perceived learning, but the use of standardized TPACK items not only provides a stronger theoretical grounding for the assessment of preservice teachers’ ICT integration knowledge; it also allows for comparisons to be made across cohorts and different types of ICT courses. This is an area where TPACK surveys have yet to be exploited. Some colleges of education have also integrated the instruction of ICT integration into methods courses (Kleiner et al. 2007). Since the TPACK factors also include PK, CK, and PCK, such course evaluations can also support the evaluation of both the technological and nontechnological aspects of methods courses.

The statistical relationships between course experiences and TPACK can be used to inform the design of qualitative evaluation studies that examine how specific ICT instructional strategies foster TPACK. An open online feedback channel has since been instituted where preservice teachers can comment on the course content as well as on the process of learning. This channel is open throughout the 12-week course and, depending on the type of feedback, follow-up actions with the preservice teachers may be taken.

5.3 Methodological issues

Methodologically, the study results support the current view that TPACK is a complex construct still needing validation (Archambault and Barnett 2010; Cox and Graham 2009). This study adds further evidence to the results of the study by Chai et al. (2011) with 214 preservice teachers, which has been the only one reporting validation of the seven-construct TPACK survey. While researchers have suggested that teachers’ inexperience with ICT integration and teaching practices could have resulted in their inability to distinguish between TPK, TCK, and TPACK (Koh et al. 2010; Lee and Tsai 2010), this study shows the contrary. Cox and Graham (2009) further suggested that when teachers become very familiar with using certain ICT tools, the TPACK associated with these tools may become absorbed into teachers’ PCK. It did not appear that the graduating preservice teachers were experiencing these effects. Nevertheless, this is an area needing more examination as comparisons of TPACK profiles between preservice and in-service teachers have yet to be published.

On the other hand, there is also a need to consider if the item design had an impact on the results. Specifically, the negative relationship between PCK and TPACK could be because the PCK items emphasized the stem, “Without using technology”. This wording may have led the respondents to perceive the PCK items as contradictory to technology-related pedagogies. These results could also suggest that these graduating preservice teachers may still be grappling with the demands of the constructivist-oriented dimensions of meaningful learning adapted from Howland et al. (2012) for this course. This kind of pedagogical dissonance (Windschitl 2002) could be expected as studies of in-service teachers have found that even though various ICT tools can be exploited for constructivist-oriented lessons, teachers still use them to support their current teaching practices, which are largely focused on information transmission (Gao et al. 2009; Lim and Chai 2008; Starkey 2010). More consideration needs to be given to retesting and revalidating the PCK items in view of these observations.

5.4 Limitations and future directions

This study has only been carried out with graduating preservice teachers in Singapore. The findings are not generalizable to beginning preservice teachers, beginning teachers, or experienced teachers. Therefore, an area of future research would be to replicate the study with different groups of teachers, both within and outside Singapore. A longitudinal study that follows a cohort of teachers through preservice training and in-service professional development can also be considered. The changing relationship between teachers’ perceptions of course satisfaction and TPACK throughout their career could then be better understood.

Secondly, this study only examined one hierarchical regression model of preservice teachers’ TPACK development where course experience factors are first entered, followed by TK, PK, and CK, and finally by TPK and PCK. This model was largely based on the postulations of Mishra and Koehler (2006). Koh and Divaharan (2011) found that preservice teachers are able to consider factors related to TPK and TPACK as they begin to learn an ICT tool. In reality, all TPACK factors could also emerge simultaneously from students’ course experiences. Additionally, the interaction among course experience and TPACK factors could possibly influence teachers’ perception of TPACK. These are limitations of this study. Different models of preservice teachers’ TPACK formation, as well as the interactions among factors, could be examined and compared in future studies through structural equation modeling or regression models.
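The blockwise entry described above can be illustrated with a minimal sketch. It is not the analysis code used in this study: the data are synthetic, the block names and sizes are placeholders, and the helper functions `r_squared` and `hierarchical_r2` are hypothetical names introduced only for this illustration. The sketch enters predictor blocks in a fixed order and reports the cumulative R² and the increment (ΔR²) contributed at each step.

```python
import numpy as np


def r_squared(X, y):
    """Return R^2 of an ordinary least squares fit of y on X (intercept added)."""
    design = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ coef
    ss_res = float(residuals @ residuals)
    ss_tot = float(((y - y.mean()) ** 2).sum())
    return 1.0 - ss_res / ss_tot


def hierarchical_r2(blocks, y):
    """Enter predictor blocks in order.

    Returns a list of (block name, cumulative R^2, delta R^2) tuples,
    one per entry step.
    """
    steps = []
    entered = []
    previous = 0.0
    for name, X in blocks:
        entered.append(X)
        current = r_squared(np.column_stack(entered), y)
        steps.append((name, current, current - previous))
        previous = current
    return steps


# Synthetic data for illustration only (assumed, not the survey data).
rng = np.random.default_rng(0)
n = 500
course = rng.normal(size=(n, 3))                            # course experience factors
basic = 0.5 * course[:, :1] + rng.normal(size=(n, 3))       # stand-ins for TK, PK, CK
intermediate = 0.5 * basic[:, :2] + rng.normal(size=(n, 2))  # intermediate factors
tpack = (
    0.3 * course.sum(axis=1)
    + 0.3 * basic.sum(axis=1)
    + 0.5 * intermediate.sum(axis=1)
    + rng.normal(size=n)
)

blocks = [
    ("course experience", course),
    ("basic knowledge", basic),
    ("intermediate knowledge", intermediate),
]
steps = hierarchical_r2(blocks, tpack)
for name, cumulative, delta in steps:
    print(f"{name}: R2 = {cumulative:.3f}, delta R2 = {delta:.3f}")
```

An alternative entry order can be compared simply by reordering `blocks`. Because the predictor blocks are correlated, the ΔR² attributed to a block depends on the step at which it is entered, which is one reason why comparing different models, as suggested above, is informative.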

Thirdly, it is not clear if the merging of TCK, TPK, and TPACK items was due to item design or preservice teachers’ characteristics. Therefore, besides replicating the study with different groups of teachers as suggested earlier, these items could also be redesigned and tested separately. Since TPACK deals with ICT integration knowledge for specific subject topics, the design of TPACK items with respect to specific subject topics and pedagogical approaches could also be considered. This may improve the specificity of the TCK, TPK, and TPACK items, which will in turn improve their construct validity.

6 Conclusion

This study analyzed the relationship between Singapore preservice teachers’ perceptions of course satisfaction and TPACK with respect to an ICT course they attended. Its hierarchical regression model found that the preservice teachers’ perceptions of TPACK were first influenced by their perceptions of course experiences, which in turn influenced their perceptions of the intermediary TPACK constructs. This suggests a plausible process of preservice teachers’ TPACK development. The results also suggest that incorporating both course experiences and TPACK measures into ICT course evaluation can yield deeper insights into the contributions of course activities to preservice teachers’ development of ICT integration knowledge. This is an area that can be further considered for ICT course evaluation.

References

Adelman, N., Donnelly, M. B., Dove, T., Tiffany-Morales, J., Wayne, A., & Zucker, A. (2002). The integrative studies of educational technologies: Professional development and teachers’ use of technology. Arlington, VA: SRI International.

Archambault, L. M., & Barnett, J. H. (2010). Revisiting technological pedagogical content knowledge: Exploring the TPACK framework. Computers & Education, 55(4), 1656–1662. doi:10.1016/j.compedu.2010.07.009.

Archambault, L. M., & Crippen, K. (2009). Examining TPACK among K-12 online distance educators in the United States. Contemporary Issues in Technology and Teacher Education, 9(1). Retrieved from http://www.citejournal.org/vol9/iss1/general/article2.cfm

Bransford, J. D., Brown, A. L., Cocking, R. R., Donovan, M. S., & Pellegrino, J. W. (Eds.). (2000). How people learn: Brain, mind, experience, and school (Expanded ed.). Washington, DC: National Academy Press.

Brush, T., & Saye, J. W. (2009). Strategies for preparing preservice social studies teachers to integrate technology effectively: Models and practices. Contemporary Issues in Technology and Teacher Education, 9(1), 46–59.

Chai, C. S., Koh, J. H. L., & Tsai, C. C. (2010). Facilitating preservice teachers’ development of technological, pedagogical, and content knowledge (TPACK). Educational Technology and Society, 13(4), 63–73.

Chai, C. S., Koh, J. H. L., & Tsai, C. C. (2011a). Exploring the factor structure of the constructs of technological, pedagogical, content knowledge (TPACK). The Asia-Pacific Education Researcher, 20(3), 595–603.

Chai, C. S., Koh, J. H. L., Tsai, C. C., & Tan, L. W. L. (2011b). Modeling primary school pre-service teachers’ technological pedagogical content knowledge (TPACK) for meaningful learning with information and communication technology (ICT). Computers & Education, 57(1), 1184–1193. doi:10.1016/j.compedu.2011.01.007.

Cox, S., & Graham, C. R. (2009). Diagramming TPACK in practice: Using an elaborated model of the TPACK framework to analyze and depict teacher knowledge. TechTrends, 53(5), 60–69.

Creswell, J. W. (2005). Educational research: Planning, conducting, and evaluating quantitative and qualitative research. New Jersey: Pearson Education.

Davis, F. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13, 319–340.

Divaharan, S., Lim, W. Y., & Tan, S. C. (2011). Walk the talk: Immersing preservice teachers in the learning of ICT tools for knowledge creation. Australasian Journal of Educational Technology, 27(8), 1304–1318.

Fraenkel, J. R., & Wallen, N. E. (2003). How to design and evaluate research in education. New York, NY: McGraw-Hill.

Gao, P., Choy, D., Wong, A. F. L., & Wu, J. (2009). Developing a better understanding of technology based pedagogy. Australasian Journal of Educational Technology, 25(5), 714–730.

Goldman, S. R., Lawless, K., Pellegrino, J. W., & Plants, R. (2006). Technology for testing and learning with understanding. In J. M. Cooper (Ed.), Classroom teaching skills (8th ed., pp. 185–234). Boston: Houghton Mifflin.

Graham, C. R., Burgoyne, N., Cantrell, P., Smith, L., St Clair, L., & Harris, R. (2009). Measuring the TPACK confidence of inservice science teachers. TechTrends, 53(5), 70–79.

Hair, J. F., Jr., Black, W. C., Babin, B. J., Anderson, R. E., & Tatham, R. L. (2010). Multivariate data analysis: A global perspective (7th ed.). Upper Saddle River, NJ: Pearson Education.

Howland, J. L., Jonassen, D., & Marra, R. M. (2012). Meaningful learning with technology (4th ed.). Boston, MA: Allyn & Bacon.

Kleiner, B., Thomas, N., & Lewis, L. (2007). Educational technology in teacher education programs for initial licensure. Washington, DC: National Center for Education Statistics.

Koehler, M. J., & Mishra, P. (2005). What happens when teachers design educational technology? The development of technological pedagogical content knowledge. Journal of Educational Computing Research, 32(2), 131–152.

Koehler, M. J., Mishra, P., & Yahya, K. (2007). Tracing the development of teacher knowledge in a design seminar: Integrating content, pedagogy and technology. Computers & Education, 49(3), 740–762.

Koh, J. H. L., & Divaharan, S. (2011). Developing pre-service teachers’ technology integration expertise through the TPACK-Developing Instructional Model. Journal of Educational Computing Research, 44(1), 35–58.

Koh, J. H. L., Chai, C. S., & Tsai, C. C. (2010). Examining the technology pedagogical content knowledge of Singapore pre-service teachers with a large-scale survey. Journal of Computer Assisted Learning, 26(6), 563–573.

Lee, M. H., & Tsai, C. C. (2010). Exploring teachers’ perceived self efficacy and technological pedagogical content knowledge with respect to educational use of the World Wide Web. Instructional Science, 38, 1–21.

Lim, C. P., & Chai, C. S. (2008). Teachers’ pedagogical beliefs and their planning and conduct of computer-mediated classroom lessons. British Journal of Educational Technology, 39(5), 807–828.

Mishra, P., & Koehler, M. J. (2006). Technological pedagogical content knowledge: A framework for teacher knowledge. Teachers College Record, 108(6), 1017–1054.

Moursund, D., & Bielefeldt, T. (1999). Will new teachers be prepared to teach in a digital age? A national survey on information technology in teacher education. Santa Monica, CA: Milken Family Foundation.

Pierson, M. E. (2001). Technology integration practice as a function of pedagogical expertise. Journal of Research on Computing in Education, 33(4), 413–430.

Porter, A. C., Garet, M. S., Desimone, L., Yoon, K. S., & Birman, B. F. (2000). Does professional development change teaching practice? Results from a three-year study (U.S. Department of Education Report No. 2000–04). Washington, D.C.: U.S. Department of Education.

Rogers, E. M. (1995). Diffusion of innovations. New York: Simon and Schuster.

Schmidt, D. A., Sahin, E. B., Thompson, A., & Seymour, J. (2008). Developing effective technological pedagogical and content knowledge (TPACK) in PreK-6 teachers. Paper presented at the Society for Information Technology and Teacher Education International Conference 2008, Chesapeake, VA.

Schmidt, D. A., Baran, E., Thompson, A. D., Mishra, P., Koehler, M. J., & Shin, T. S. (2009). Technological pedagogical content knowledge (TPACK): The development and validation of an assessment instrument for preservice teachers. Journal of Research on Technology in Education, 42(2), 123–149.

Shulman, L. S. (1986). Those who understand: Knowledge growth in teaching. Educational Researcher, 15(2), 4–14. doi:10.3102/0013189x015002004.

Sparks, D. (2002). Designing powerful professional development for teachers and principals. Oxford, OH: National Staff Development Council.

Starkey, L. (2010). Teachers’ pedagogical reasoning and action in the digital age. Teachers and Teaching: Theory and Practice, 16(2), 233–244.

Straub, E. T. (2009). Understanding technology adoption: Theory and future directions for informal learning. Review of Educational Research, 79(2), 625–649.

Teo, T., Lee, C. B., Chai, C. S., & Choy, D. (2009a). Modelling pre-service teachers’ perceived usefulness of an ICT-based student-centred learning (SCL) curriculum: A Singapore study. Asia Pacific Education Review, 10(4), 535–545.

Teo, T., Lee, C. B., Chai, C. S., & Wong, S. L. (2009b). Assessing the intention to use technology among pre-service teachers in Singapore and Malaysia: A multigroup invariance analysis of the Technology Acceptance Model (TAM). Computers & Education, 53(3), 1000–1009.

Windschitl, M. (2002). Framing constructivism in practice as the negotiation of dilemmas: An analysis of the conceptual, pedagogical, cultural, and political challenges facing teachers. Review of Educational Research, 72(2), 131–175.

Wozney, L., Venkatesh, V., & Abrami, P. (2006). Implementing computer technologies: Teachers’ perceptions and practices. Journal of Technology and Teacher Education, 14(1), 173–207.

Koh, J.H.L., Woo, HL. & Lim, WY. Understanding the relationship between Singapore preservice teachers’ ICT course experiences and technological pedagogical content knowledge (TPACK) through ICT course evaluation. Educ Asse Eval Acc 25, 321–339 (2013). https://doi.org/10.1007/s11092-013-9165-y