Abstract

A probabilistic technique is developed to assess the hazard from meteotsunamis. Meteotsunamis are unusual sea-level events, generated when the speed of an atmospheric pressure or wind disturbance is comparable to the phase speed of long waves in the ocean. A general aggregation equation is proposed for the probabilistic analysis, based on previous frameworks established for both tsunamis and storm surges, incorporating different sources and source parameters of meteotsunamis. Parameterization of atmospheric disturbances and numerical modeling are performed for the computation of maximum meteotsunami wave amplitudes near the coast. A historical record of pressure disturbances is used to establish a continuous analytic distribution of each parameter as well as the overall Poisson rate of occurrence. A demonstration study is presented for the northeast U.S. in which only isolated atmospheric pressure disturbances from squall lines and derechos are considered. For this study, Automated Surface Observing System stations are used to determine the historical parameters of squall lines from 2000 to 2013. The probabilistic equations are implemented using a Monte Carlo scheme, where a synthetic catalog of squall lines is compiled by sampling the parameter distributions. For each entry in the catalog, ocean wave amplitudes are computed using a numerical hydrodynamic model. Aggregation of the results from the Monte Carlo scheme yields a meteotsunami hazard curve that plots the annualized rate of exceedance with respect to maximum event amplitude for a particular location along the coast. Results from using multiple synthetic catalogs, resampled from the parent parameter distributions, yield mean and quantile hazard curves. Further refinements and improvements for probabilistic analysis of meteotsunamis are discussed.


1 Introduction

Meteorologically induced tsunamis, termed meteotsunamis (Monserrat et al. 2006), can cause harbor damage and injuries, as exemplified by a recent event along the northeast U.S. Atlantic coast on June 13, 2013. This event as well as the Boothbay (Maine) meteotsunami in 2008 (Vilibić et al. 2013) and the Daytona Beach (Florida) event in 1992 (Churchill et al. 1995; Sallenger et al. 1995) are three U.S. examples of many meteotsunamis around the world that have proved to be hazardous. Other locations where meteotsunamis have notably struck include the Balearic Islands (Monserrat et al. 1991), the Adriatic Sea (Vilibić et al. 2004), Japan (Hibiya and Kajiura 1982; Asano et al. 2012), and the Kuril Islands (Rabinovich and Monserrat 1998). Current understanding of meteotsunamis comes from both theoretical/numerical studies (e.g., Vilibić 2008; Vennell 2010) and careful examination of case study observations (Rabinovich and Monserrat 1996; Monserrat et al. 2006). These previous studies have identified several atmospheric causes of meteotsunamis, as well as several wave propagation resonance conditions that must be satisfied for meteotsunami amplitudes to be significant.

As more is learned about the conditions in which meteotsunamis are formed, a question that arises is how might this phenomenon be assessed from a natural hazards perspective? One approach is the design storm concept in which a characteristic storm or small set of storms is used to determine the surge levels at a coastal site (see Resio et al. 2009). Another approach is based on probabilistic analysis, in which natural hazard severity is estimated for a given probability of exceedance. This approach is expanding in the assessment of flood hazards, in general (U.S. Nuclear Regulatory Commission 2013), and phenomena such as tsunami (Geist and Parsons 2006) and storm surge (Resio et al. 2009), in particular.

In this paper, we propose a framework for the probabilistic analysis of meteotsunamis. In the next section, we provide a brief background of meteotsunamis and the causes of resonant amplification. This is followed by the Probabilistic Framework section in which we indicate the assumptions inherent in probabilistic analysis and we develop an aggregation equation for meteotsunamis, as well as one specific to squall lines as examined in the demonstration study. The next three sections describe a demonstration study using (1) ASOS station data used to determine squall line parameters and their distributions, (2) numerical modeling of meteotsunami waves, and (3) results for several locations along the northeast U.S. coastline. We then discuss how probabilistic analysis of meteotsunamis can be further refined and improved.

2 Background

Meteotsunamis are caused by a variety of atmospheric phenomena, including atmospheric gravity waves, frontal passages, and squall lines that rapidly propagate over the ocean, primarily across continental shelves (Monserrat et al. 2006; Vilibić et al. 2013). Isolated pressure jumps, such as those associated with squall lines and derechos, are primarily considered in this study, to demonstrate how probabilistic analysis is developed from general equations for a specific type of meteotsunami. A comprehensive probabilistic analysis of meteotsunamis would include all other types of atmospherically induced events.

A key factor in the generation of meteotsunamis is the speed of the pressure disturbance relative to the phase speed of long waves in the ocean. When the speed of the pressure disturbance approaches the long-wave speed, the coupled wave becomes amplified: an effect termed Proudman resonance (Proudman 1929; Lamb 1932). Amplification from Proudman resonance increases with time. When the translation speed (U) equals the long-wave phase speed (Froude number Fr = 1), Hibiya and Kajiura (1982) derived the theoretical increase in amplitude Δη for a simple atmospheric pressure model as

\[\Delta \eta = \frac{\Delta \eta^{*}}{W}\frac{x_{f}}{2} \tag{1}\]

where W is the horizontal distance of the linear increase in pressure at the front, \(x_{f}\) is the distance traveled by the front (fetch), and Δη* is the barometric amplitude given in terms of the pressure change Δp by Δη* = −Δp/(ρg). Proudman resonance can also be written as a function of time (Vilibić 2008) for Fr = 1:

\[\Delta \eta = \frac{\Delta \eta^{*}}{W}\frac{Ut}{2} \tag{2}\]

Therefore, the fetch or duration of the disturbance is critical in generating significant meteotsunami waves. Vilibić (2008) shows that for atmospheric disturbances propagating over a sloping shelf (i.e., varying Fr), Proudman resonance is more significant for large disturbance widths relative to the shelf width, whereas there is a greater separation of coupled and decoupled waves for narrow disturbances. Proudman resonance also selects a specific frequency range of ocean waves, as indicated by Monserrat et al. (2006).
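The two quantities that control Proudman resonance, the Froude number and the barometric amplitude, can be evaluated with a few lines of code. The following is a minimal sketch rather than part of the original analysis; the seawater density of 1025 kg/m³ and the function names are assumptions made for illustration.

```python
import math

G = 9.81        # gravitational acceleration, m/s^2
RHO = 1025.0    # assumed seawater density, kg/m^3 (not given in the text)

def froude_number(speed_ms: float, depth_m: float) -> float:
    """Ratio of disturbance speed U to the long-wave phase speed sqrt(g*h)."""
    return speed_ms / math.sqrt(G * depth_m)

def barometric_amplitude(delta_p_pa: float) -> float:
    """Barometric amplitude eta* = -dp / (rho * g), in metres."""
    return -delta_p_pa / (RHO * G)

def resonant_depth(speed_ms: float) -> float:
    """Water depth at which Fr = 1 for a given disturbance speed."""
    return speed_ms ** 2 / G

if __name__ == "__main__":
    # Illustrative values only: a 22 m/s disturbance over 50 m of water
    print(f"Fr = {froude_number(22.0, 50.0):.2f}")
    print(f"eta* for +1 hPa = {barometric_amplitude(100.0) * 100:.2f} cm")
    print(f"Resonant depth for 22 m/s = {resonant_depth(22.0):.1f} m")
```

Under these assumptions, a disturbance moving at about 22 m/s is close to resonance over water roughly 50 m deep, which is typical of continental shelves.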

Other resonant effects can amplify meteotsunamis. Disturbances propagating parallel to the coast excite fundamental mode edge waves (Greenspan 1956). In terms of edge wave excitation, meteotsunamis are similar to earthquake and landslide tsunami sources located along the continental shelf, although the geologic sources appear to excite a wider range of near-field edge wave modes (Carrier 1995; Fujima et al. 2000; Lynett and Liu 2005; Geist 2012). In many of the locations where meteotsunamis were damaging, harbor resonance (e.g., Raichlen and Lee 1992) was a critical factor. Several studies highlight the use of spectral analysis to examine many of these effects (e.g., Rabinovich and Monserrat 1998; Vilibić et al. 2005; Monserrat et al. 2006; Asano et al. 2012).

Accompanying ocean waves coupled during Proudman resonance are different types of decoupled waves. Vennell (2007) demonstrates that as the coupled disturbance passes over bathymetric discontinuities (e.g., shelf edge, ridges, canyons, etc.) or coastlines, fully or partially reflected phases are generated. Propagation of waves sub-parallel to the shelf edge may generate resonant shelf modes (Monserrat et al. 2006; Vennell 2010). As an atmospheric disturbance moves into deeper water, small amplitude waves are decoupled and propagate ahead of the disturbance. Finally, for supercritical disturbances moving faster than the ocean phase speed (Fr > 1), a barotropic wake is generated (Mercer et al. 2002).

3 Probabilistic framework

A probabilistic approach to assessing the hazard from meteotsunamis entails analyzing the occurrence of events in time. The null hypothesis of meteotsunami occurrence is that events are randomly distributed in time according to a stationary Poisson process. Under this assumption, the time between events, termed the inter-event time (τ), is independent of the history of past events and is exponentially distributed according to \(f\left( \tau \right) = \lambda e^{ - \lambda \tau }\), where \(f\left( \tau \right)\) is the probability density function of τ and λ is the rate parameter. The probability that one or more meteotsunamis with amplitude \(A > A_{0}\) will occur in time T is then given by \(P\left( {A > A_{0} } \right) = 1 - e^{ - \lambda T}\). In many flood applications, the objective is to determine annualized probabilities (T = 1 year), termed the annual exceedance probability (AEP). In addition, for small values of λ in units of year\(^{-1}\), AEP ≈ λ, because \(1 - e^{ - \lambda } \approx \lambda\) when λ ≪ 1.
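The relation between the Poisson rate and the exceedance probability can be checked numerically. The sketch below evaluates \(1 - e^{ - \lambda T}\) for a low and a high rate; the function name is chosen here for illustration.

```python
import math

def exceedance_probability(rate_per_year: float, years: float = 1.0) -> float:
    """Probability of one or more events in `years` for a Poisson process."""
    # -expm1(-x) equals 1 - exp(-x) but keeps precision for very small rates
    return -math.expm1(-rate_per_year * years)

if __name__ == "__main__":
    for rate in (0.001, 0.01, 0.1, 1.0, 3.0):
        aep = exceedance_probability(rate)
        print(f"lambda = {rate:5.3f} /yr  ->  AEP = {aep:.5f}")
```

At a rate of 0.01 per year the AEP differs from λ by about half a percent, whereas at a few events per year the AEP saturates near 1 and the two quantities must be reported separately.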

Because the historical record of meteotsunamis in any given region is small and because small meteotsunamis are not routinely identified in tsunami catalogs (Rabinovich 2012), the data that form the basis of our probabilistic analysis are the meteorological conditions (parameterized by source vector ψ) that could give rise to meteotsunamis. A maximum wave amplitude is derived from numerical simulation of ocean waves from numerous combinations of the meteorological conditions. We plot a meteotsunami hazard curve by calculating the Poisson exceedance rate as a function of maximum event amplitude for a given location along the shoreline. As described below, this is similar to both probabilistic tsunami hazard analysis (PTHA) and probabilistic storm surge forecasting that develops hazard assessments from parameterization and sampling of source processes. The calculated number of meteotsunamis above a certain threshold can then be compared to the number of historically observed meteotsunamis as a check of the probabilistic calculations.

The probabilistic framework for analyzing meteotsunami hazards is developed from the probabilistic analysis of both tsunamis and hurricane storm surge. PTHA aggregates tsunami amplitude or runup from various locations, source types, and source parameters using a numerical propagation model (e.g., Geist and Parsons 2006; Geist et al. 2009). For each source type, there is a dominant parameter that measures source potency, such as seismic moment for earthquakes and volume for landslides. Most other source parameters scale with the source potency parameter. Although probabilistic storm surge analysis also relies on a numerical hydrodynamic model, there are several parameters, aside from location, that affect storm surge severity that are evaluated according to their joint probability (Resio et al. 2009; Toro et al. 2010). The initial framework for probabilistic analysis of meteotsunamis can include different sources, a numerical hydrodynamic model, and considerations of all parameters that influence near-shore wave amplitudes. The joint probability method can be further expanded to include other sources of sea-level fluctuations, such as from tides and other surges, in addition to meteotsunamis (Pugh and Vassie 1978; Rabinovich et al. 1992).

To compute the annualized Poisson exceedance rate λ for meteotsunamis, the joint probability of atmospheric parameters \(f\left( {\psi_{i} } \right)\) for each meteotsunami source represented by index i (e.g., atmospheric gravity wave or squall line) is integrated with the probability of wave amplitude being exceeded for each combination of source parameters \(P\left( {A > A_{0} |\psi_{i} } \right)\):

\[\lambda \left( {A > A_{0} } \right) = \sum\limits_{i} {\nu_{i} \int {P\left( {A > A_{0} |\psi_{i} } \right)f\left( {\psi_{i} } \right){\text{d}}\psi_{i} } } \tag{3}\]

where \(\nu_{i}\) is the annual rate of occurrence for a particular atmospheric source over the entire range of its parameters. In PTHA, sources are commonly restricted in space to major fault zones (earthquakes) or continental/island margins (landslides) (ten Brink et al. 2014) and the aggregation equations include a second integral over location. In contrast, the source parameter vector for meteotsunami hazards \(\left( {\psi_{i} } \right)\) includes a distributed location parameter (e.g., shoreline crossing coordinate), similar to what is specified in probabilistic storm surge analysis. The source parameter vector is general, in that it can range from kinematic parameters, to the parameters used in high-resolution mesoscale modeling (e.g., Horvath and Vilibić 2014). The latter is analogous to PTHA that uses dynamic fault rupture models to determine the coupled system of solid earth seismic and ocean waves in place of conventional kinematic earthquake parameters (Geist and Oglesby 2013).

For the demonstration study presented in this paper, only a single source, isolated pressure disturbances from squall lines, is initially considered, so that the summation and source type index (i) in the above general aggregation equation are dropped. Furthermore, it is initially assumed that the source parameters are independent so that the joint probability distribution is given by the product of each marginal distribution:

\[\lambda \left( {A > A_{0} } \right) = \nu \iiiint {P\left( {A > A_{0} |\Delta p,U,T_{f} ,L} \right)f\left( {\Delta p} \right)f\left( U \right)f\left( {T_{f} } \right)f\left( L \right){\text{d}}\Delta p\,{\text{d}}U\,{\text{d}}T_{f} \,{\text{d}}L} \tag{4}\]

where Δp is the maximum pressure change, U the translation speed, \(T_{f}\) the period, and L the length of the disturbance. Each of these parameters can be determined from historical weather station and radar data as described in Sect. 4 below.

The term \(P\left( {A > A_{0} |\Delta p,U,T_{f} ,L} \right)\) is computed from numerical modeling and includes natural variability in one or more of the parameters, termed aleatory uncertainty. If aleatory uncertainty is not included, \(P\left( {A > A_{0} |\Delta p,U,T_{f} ,L} \right)\) is given by the Heaviside function \(H\left[ {A_{\text{comp}} \left( {\Delta p,U,T_{f} ,L} \right) - A_{0} } \right]\) (cf. Resio et al. 2009), where \(A_{\text{comp}} \left( {\Delta p,U,T_{f} ,L} \right)\) is the amplitude computed from the numerical model.

Because meteotsunami wave amplitudes can be accurately computed using numerical methods (Vilibić 2005), the above integral equation is implemented using Monte Carlo methods. The probability distribution associated with each source parameter is randomly sampled to construct a synthetic catalog of relevant atmospheric events. Methods are available to sample most of the common distributions (Press et al. 2007). Calculations using multiple, resampled synthetic catalogs result in mean and quantile hazard curves.
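The Monte Carlo aggregation can be sketched as follows. When the exceedance term is a Heaviside function, the integral reduces to counting the catalog entries whose computed amplitude exceeds \(A_{0}\) and dividing by the catalog duration. Everything numerical in this sketch is an assumption made for illustration: `toy_amplitude` is a hypothetical stand-in for the hydrodynamic model, the sampling distributions are placeholders, and the 14-year duration simply corresponds to 2000 through 2013.

```python
import math
import random

def toy_amplitude(delta_p_hpa, speed_ms):
    """Hypothetical stand-in for the hydrodynamic model (not from the paper)."""
    near_resonance = 1.0 / (0.2 + abs(1.0 - speed_ms / 22.0))
    return 0.01 * abs(delta_p_hpa) * near_resonance

def hazard_curve(amplitudes, thresholds, years):
    """Annual exceedance rate for each threshold A0.

    Counts the events with A > A0 (Heaviside exceedance term) and divides
    by the catalog duration in years.
    """
    return [sum(a > a0 for a in amplitudes) / years for a0 in thresholds]

def mean_and_quantiles(curves, q_low=0.05, q_high=0.95):
    """Pointwise mean and nearest-rank quantiles across several catalogs."""
    mean, low, high = [], [], []
    for values in zip(*curves):
        ordered = sorted(values)
        last = len(ordered) - 1
        mean.append(sum(ordered) / len(ordered))
        low.append(ordered[min(last, int(q_low * len(ordered)))])
        high.append(ordered[min(last, int(q_high * len(ordered)))])
    return mean, low, high

if __name__ == "__main__":
    rng = random.Random(1)
    thresholds = [0.05, 0.1, 0.2, 0.4]
    curves = []
    for _ in range(20):                        # twenty synthetic catalogs
        amps = [toy_amplitude(rng.gauss(2.0, 1.0),               # placeholder
                              rng.lognormvariate(math.log(18.0), 0.3))
                for _ in range(116)]           # 116 events per catalog
        curves.append(hazard_curve(amps, thresholds, years=14.0))
    mean, low, high = mean_and_quantiles(curves)
    for a0, m, lo, hi in zip(thresholds, mean, low, high):
        print(f"A0={a0:.2f} m  mean={m:.2f}/yr  5%={lo:.2f}  95%={hi:.2f}")
```

In an actual application, `toy_amplitude` would be replaced by a call to the numerical wave model for each catalog entry, which is where nearly all of the computational cost lies.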

As an alternative to Monte Carlo sampling, continuous response functions have been developed for hurricane storm surge forecasting (Irish et al. 2009, 2011). The response function is specific to a shoreline coordinate and is evaluated based on the critical source parameters (e.g., central pressure and pressure radius in the case of hurricane storm surge). The advantage of using these response functions is that they are continuous analytic functions that obviate sampling errors associated with the discrete method, especially important for extreme hazard values (Irish et al. 2009).

Various sources of uncertainty are incorporated into the hazard curves using different methods based on a distinction between epistemic and aleatory uncertainty. Epistemic uncertainty is also referred to as knowledge uncertainty that can be reduced by the collection of new data, whereas aleatory uncertainty relates to the natural or stochastic uncertainty inherent in the physical system. In PTHA, epistemic uncertainty is included through the use of logic trees (Annaka et al. 2007), whereas aleatory uncertainty is included through the \(P\left( {A > A_{0} |\psi_{i} } \right)\) term (Geist et al. 2009). In PTHA, for example, the variability of slip within an earthquake rupture zone translates to normally distributed near-shore tsunami amplitudes (e.g., Parsons and Geist 2009). For storm surge forecasting, parametric and modeling uncertainty are included with special error terms in the aggregation equation (Resio et al. 2009).

4 Data

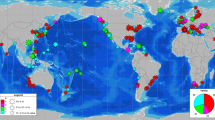

The study region to demonstrate the probabilistic analysis is the northeast Atlantic coast of the U.S. (Fig. 1). Observations from the U.S. Automated Surface Observing System (ASOS) were used to determine parameters of long-lasting squall lines (Rotunno et al. 1988) and derechos along the Atlantic coast. Derechos are common in the eastern U.S. in the summer months and are defined by Bluestein (1993) as mesoscale convective systems that produce straight-line wind gusts greater than 26 m/s over a length of 400 km or greater. The ASOS stations are located at most airports throughout the U.S. Table 1 lists parameters for the squall lines used in the probabilistic analysis of meteotsunamis starting on January 1, 2000. Prior to this, observations were only recorded once per hour at each ASOS station instead of once per minute (prior to 1990, they were recorded once every 3 h). Since squall lines propagate quickly, the once per hour observations make it impossible to accurately calculate the period of the pressure jump. Determination of the parameters is described below.

Doppler radar data were used to determine where the most intense part of the squall line crossed the coastline, and the pressure jump was measured on the nearest ASOS station. Many of the squall lines had weak tornadoes embedded in them. These tornadoes had no effect on the pressure data. The pressure drop inside these weak tornadoes is so localized that they could pass 30 m from the ASOS tower and the pressure sensor would not be able to detect them. Length measurements were taken from radar data, simply by measuring approximately how long the squall lines were from end to end. Because of coarse resolution in the radar data, these measurements were rounded to the nearest multiple of 50 km. Because there are sometimes missing data points in the ASOS time series, nominally sampled at 1-min intervals, the period of the pressure jump is rounded to the nearest multiple of 5 min.

Speed measurements likely have the highest error. The U.S. National Weather Service measures speed of storms from radar data by calculating the propagation distance over time (in comparison, satellite data are used for hurricanes). However, because of the coarse resolution in the radar data, there is significant error. Speed measurements are rounded to the nearest multiple of 5 km/h (and then converted to m/s), and the error is within at most ±5 m/s. Slower moving squall lines are at a higher risk for larger speed errors.

Probability distributions were estimated using the maximum likelihood method for the meteotsunami source parameters listed in Table 2. For each parameter, both normal and lognormal distributions were tested. The distribution with the better fit was chosen as the optimal parameter distribution. The Kolmogorov–Smirnov test was used to determine whether the optimal distribution could be rejected at the 95 % confidence level. The model distributions for each parameter passed this test (p value given in Table 2).
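The fitting and testing procedure can be sketched with the Python standard library alone. The sketch below estimates normal and lognormal parameters by maximum likelihood, compares log-likelihoods, and evaluates a Kolmogorov–Smirnov statistic with an asymptotic p value. The function names are chosen here, and the p-value approximation follows the commonly used asymptotic series with a small-sample correction; it is not necessarily the procedure used to produce Table 2.

```python
import math
from statistics import NormalDist

def fit_normal(data):
    """Maximum likelihood estimates (mean, std with 1/n) for a normal model."""
    n = len(data)
    mu = sum(data) / n
    sigma = math.sqrt(sum((x - mu) ** 2 for x in data) / n)
    return mu, sigma

def fit_lognormal(data):
    """MLE of the log-space mean and std; requires strictly positive data."""
    return fit_normal([math.log(x) for x in data])

def loglik_normal(data, mu, sigma):
    dist = NormalDist(mu, sigma)
    return sum(math.log(dist.pdf(x)) for x in data)

def loglik_lognormal(data, mu_log, sigma_log):
    dist = NormalDist(mu_log, sigma_log)
    # Lognormal density = normal density of ln(x), divided by x
    return sum(math.log(dist.pdf(math.log(x))) - math.log(x) for x in data)

def ks_statistic(data, cdf):
    """Largest distance between the empirical CDF and the model CDF."""
    xs = sorted(data)
    n = len(xs)
    return max(max((i + 1) / n - cdf(x), cdf(x) - i / n)
               for i, x in enumerate(xs))

def ks_pvalue(d, n):
    """Asymptotic Kolmogorov p value with a small-sample correction."""
    root_n = math.sqrt(n)
    lam = (root_n + 0.12 + 0.11 / root_n) * d
    total = sum((-1) ** (k - 1) * math.exp(-2.0 * k * k * lam * lam)
                for k in range(1, 101))
    return min(1.0, max(0.0, 2.0 * total))

if __name__ == "__main__":
    # Illustrative sample only: evenly spaced quantiles of a normal model
    sample = [NormalDist(10.0, 2.0).inv_cdf((i + 0.5) / 50) for i in range(50)]
    mu, sigma = fit_normal(sample)
    d = ks_statistic(sample, NormalDist(mu, sigma).cdf)
    print(f"mu={mu:.2f} sigma={sigma:.2f} D={d:.3f} p={ks_pvalue(d, 50):.2f}")
```

Because both candidate models have two parameters, their log-likelihoods can be compared directly. Note that a p value computed with parameters estimated from the same data tends to be optimistic, so a passing result is a weak check rather than confirmation of the model.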

5 Numerical model

The numerical hydrodynamic model used in the Monte Carlo procedure for the probabilistic computation is based on the finite-difference approximation to the linear long-wave equations. This wave model is particularly efficient and can be parallelized for the large number of computations necessary for the probabilistic analysis. For a case study of similar scope, Hibiya and Kajiura (1982) indicate that the nonlinear advection term associated with the shallow-water wave equations, as well as bottom friction, wind stress, wave breaking, and effects of the Coriolis force can be ignored to first-order approximation. The influence of wind stress depends on both the speed and duration of sustained winds as discussed by Vilibić et al. (2005). The linear long-wave equations are implemented using the leap-frog finite-difference method (Aida 1969; Satake 2007), forced by the moving atmospheric disturbance. For the open ocean boundaries of the model, radiating boundary conditions are implemented (Reid and Bodine 1968). Computations are carried to the 5 m isobath, where a reflection boundary condition is imposed.

Forcing of the ocean waves is given by a moving pressure change oriented in a straight-line trajectory with the functional form \(\eta_{a} (x - Ut)\), where U is the horizontal speed of the pressure disturbance (Hibiya and Kajiura 1982; Vennell 2007). The finite-difference method is particularly sensitive to the irregularities in the forcing functions. For this study, we use the smooth spatial functions developed by Lynett and Liu (2005) for landslide tsunamis. In the above equations, 1 hPa of atmospheric pressure change translates to -1 cm of wave height. Other one-sided forcing functions for meteotsunamis were used in previous studies (Martinsen et al. 1979; Hibiya and Kajiura 1982; Mercer et al. 2002; Vennell 2007), whereas a one-cycle sine function (two-sided forcing) was investigated by Vilibić et al. (2005). Vilibić (2005) indicates the sensitivity of wave evolution to the shape of the forcing function.
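The behavior of a forced linear long-wave model can be illustrated in one dimension. The sketch below is a simplified stand-in for the two-dimensional model used in the study: it assumes uniform depth, reflecting walls at both ends (no radiating boundary), and a Gaussian equilibrium-level pulse in place of the smooth forcing functions of Lynett and Liu (2005). All parameter values and names are chosen for illustration.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2

def peak_amplitude(depth_m, speed_ms, amp_m=0.01, width_m=20e3,
                   n_cells=400, dx=1000.0, n_steps=600):
    """Peak |eta| from a 1-D linear long-wave model forced by a moving pulse.

    eta is stored at cell centres and u at cell faces (staggered grid); the
    two fields are advanced alternately in time, the leap-frog arrangement.
    """
    c = math.sqrt(G * depth_m)
    dt = 0.5 * dx / max(c, speed_ms)        # Courant number of at most 0.5
    eta = [0.0] * n_cells
    u = [0.0] * (n_cells + 1)               # end faces stay zero: walls
    x_start = 50e3                          # initial pulse position, m
    peak = 0.0
    for step in range(n_steps):
        centre = x_start + speed_ms * step * dt
        # Equilibrium (barometric) level eta_a(x - U t), Gaussian pulse
        eta_a = [amp_m * math.exp(-(((i + 0.5) * dx - centre) / width_m) ** 2)
                 for i in range(n_cells)]
        # Momentum: du/dt = -g d(eta - eta_a)/dx
        for i in range(1, n_cells):
            u[i] -= G * dt / dx * ((eta[i] - eta_a[i])
                                   - (eta[i - 1] - eta_a[i - 1]))
        # Continuity: d(eta)/dt = -h du/dx
        for i in range(n_cells):
            eta[i] -= depth_m * dt / dx * (u[i + 1] - u[i])
        peak = max(peak, max(abs(v) for v in eta))
    return peak

if __name__ == "__main__":
    depth = 50.0
    resonant = peak_amplitude(depth, math.sqrt(G * depth))   # Fr = 1
    slow = peak_amplitude(depth, 10.0)                       # Fr of about 0.45
    print(f"peak at Fr = 1: {resonant:.3f} m; peak for slow pulse: {slow:.3f} m")
```

With these settings the resonant case should grow to several times the 1 cm equilibrium amplitude over a fetch of about 300 km, whereas the slow disturbance stays close to the equilibrium amplitude.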

Results from the numerical hydrodynamic model and forcing function are compared to tide-gauge observations from the June 13, 2013, meteotsunami event along the northeast U.S. Atlantic coast. In Fig. 2, the maximum meteotsunami amplitude at the 5 m isobath is plotted as a function of latitude. The computed maximum amplitudes compare well with the observations at seven tide-gauge stations that had clear signals of the meteotsunami. The detided data were obtained from the National Tsunami Warning Center (http://wcatwc.arh.noaa.gov/previous.events/?p=06-13-13) and NOAA/PMEL (http://nctr.pmel.noaa.gov/eastcoast20130613/). For this event, decoupled phases reflected from the coastline, the continental shelf edge, and from canyons that incise the shelf edge (Fig. 3) appear to be important, as was also indicated by Pasquet and Vilibić (2013) for previous meteotsunamis along the U.S. Atlantic coast. It is likely that waves originating from a barotropic wake were generated in very shallow water to some extent (Mercer et al. 2002; Vilibić 2008). Sensitivity analysis of the parameters listed in Table 2 relative to the parameters associated with the 2013 meteotsunami indicates that the pressure change and speed of the atmospheric disturbance (relative to the continental shelf water depth) have the most influence on maximum wave amplitudes at the coast. Length, width, trajectory, and polarity of the disturbance have significant secondary effects on the waves as they approach the coast.

The Monte Carlo simulations using this numerical model were performed with region-specific information. First, squall line trajectories in this region generally fall between easterly and southeasterly headings. This is implemented as aleatory uncertainty by applying a uniform trajectory distribution between headings of 70° and 145° from north. Second, there does not appear to be a preferential latitude for shoreline crossing in the study region. Again, a uniform distribution is used to determine shoreline crossing position within the model domain: longitude 76°8′W–64°33′W and latitude 29°0′N–43°22′N (Fig. 1).

6 Results: U.S. Northeast Coast

To perform the Monte Carlo calculations, a synthetic catalog of events is compiled by sampling each of the parametric distributions listed in Table 2. For normal distributions, sampling is performed using the Box–Muller method (Box and Muller 1958). Lognormal distributions are sampled by a direct transformation of normal variates. Each parameter is sampled independently, consistent with Eq. (4). The sample size of the catalog is 116, the number of squall line events recorded in this region by ASOS stations from 2000 to 2013. For each entry in the synthetic catalog, numerical modeling is performed to determine the maximum amplitude at the 5 m isobath. Because some simulations yield zero or very low amplitudes at a given site, the source rate ν is calculated based on a minimum threshold amplitude (0.05 m in the demonstration study). Results are aggregated and displayed as a meteotsunami hazard curve that plots exceedance rate as a function of maximum amplitude at the site.
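The sampling step can be sketched as follows. The Box–Muller transform and the exponential transformation to lognormal variates are standard; the distribution parameters below are placeholders rather than the Table 2 values, and the choice of a lognormal model for speed is an assumption made only for this example. The heading range of 70° to 145° is the one stated for the study region.

```python
import math
import random

def box_muller(rng):
    """Return two independent standard normal variates from two uniforms."""
    u1 = 1.0 - rng.random()           # lies in (0, 1], which avoids log(0)
    u2 = rng.random()
    radius = math.sqrt(-2.0 * math.log(u1))
    angle = 2.0 * math.pi * u2
    return radius * math.cos(angle), radius * math.sin(angle)

def sample_normal(mu, sigma, rng):
    return mu + sigma * box_muller(rng)[0]

def sample_lognormal(mu_log, sigma_log, rng):
    """Lognormal variate as the exponential of a normal variate."""
    return math.exp(sample_normal(mu_log, sigma_log, rng))

def build_catalog(n_events, rng):
    """Sample each parameter independently for every synthetic squall line."""
    catalog = []
    for _ in range(n_events):
        catalog.append({
            "delta_p_hpa": sample_normal(2.0, 1.0, rng),              # placeholder
            "speed_ms": sample_lognormal(math.log(18.0), 0.3, rng),   # placeholder
            "heading_deg": rng.uniform(70.0, 145.0),   # uniform trajectory range
        })
    return catalog

if __name__ == "__main__":
    catalog = build_catalog(116, random.Random(0))
    print(len(catalog), "synthetic events; first entry:", catalog[0])
```

Passing an explicit `random.Random` instance keeps each synthetic catalog reproducible, which is convenient when many resampled catalogs are compared.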

The hazard curves from twenty different synthetic squall line catalogs are shown for Atlantic City, NJ in Fig. 4. For each catalog, synthetic squall lines cross the shoreline throughout the model domain (Fig. 1); the overall annual rate of squall lines in this region is identical to that observed by the ASOS stations (Table 1). The annualized exceedance rate (λ) is plotted along the left vertical axis. For high rates, λ is not equivalent to the AEP and therefore AEP is shown along the right vertical axis. There is substantial variation in the hazard curve for individual catalogs (light blue lines) at low exceedance probabilities and high amplitude. However, the mean hazard curve from all catalogs (heavy blue line) is smooth. The mean hazard curve can be characterized by a power-law relation for amplitudes between approximately 0.05 and 0.5 m and tapered for amplitudes greater than approximately 0.5 m. Quantile hazard curves can also be derived from the multiple synthetic catalogs.

Fig. 4 Empirical meteotsunami hazard curves for Atlantic City, NJ (log–log plot): exceedance rate as a function of maximum amplitude for an event. AEP given on right-hand scale. Light blue lines: results for individual synthetic catalogs (each catalog consists of 116 events). Heavy blue line: mean of all synthetic catalogs. Square indicates observed rate of meteotsunamis at Atlantic City (Rabinovich 2012)

The probabilistic computations can be checked against empirical observations of meteotsunamis at Atlantic City. Rabinovich (2012) indicates that there were 12 possible meteotsunamis among the strong seiche events detected at the Atlantic City tide-gauge stations from late 2007 through 2011. This results in an exceedance rate of approximately three events/year, not including uncertainty caused by the open time intervals before the first and after the last event. The minimum threshold for the trough-to-crest wave height is 30 cm, which we assume is approximately equivalent to a 15 cm amplitude threshold (solid square in Fig. 4). This value falls just below the computed hazard curve; it is possible that we may be slightly overestimating the rate of meteotsunamis according to our parameterization of the phenomenon. However, there may also be more than 12 meteotsunamis recorded during 2007–2011: Rabinovich (2012) indicates that more than 40 significant seiches were detected in this time period with wave heights greater than 30 cm (assumed amplitude of 15 cm). Overall, the computed hazard curve at Atlantic City is roughly consistent with tide-gauge observations, although there is large uncertainty in the empirical rates.

An analytic probability model can represent the mean hazard curve according to the following equation:

\[\lambda \left( {A > A_{0} } \right) = \nu \left[ {1 - F\left( {A_{0} } \right)} \right] \tag{5}\]

where \(1 - F\left( {A_{0} } \right)\) is the complementary cumulative distribution function (i.e., survival function) for the probability model. For many natural hazards, the tapered Pareto distribution (TPD) best fits the historical data (Geist and Parsons 2014). Shown in Fig. 5 are the TPD model (red line) and the generalized Pareto distribution (GPD) model (green line) fit to the mean hazard curve at four locations. The TPD is parameterized by the power-law exponent β and a corner amplitude \(A_{c}\), with the survival function given by

\[1 - F\left( {A_{0} } \right) = \left( {\frac{{A_{t} }}{{A_{0} }}} \right)^{\beta } \exp \left( {\frac{{A_{t} - A_{0} }}{{A_{c} }}} \right),\quad A_{0} \ge A_{t} \tag{6}\]

where \(A_{t}\) is the observation threshold. The form of the GPD survival function used in this study is given by

where γ is an additional shape parameter. Of the four locations, Montauk and Atlantic City have the lowest estimated power-law exponent, likely caused by the wide shelf where Proudman resonance can develop and multiple reflections that arise from the shelf edge and coastline around the New York Bight (Fig. 3). Conversely, the Chesapeake site has the highest power-law exponent and lowest corner amplitude of the four sites shown in Fig. 5. The GPD model tends to extrapolate to higher amplitudes at low exceedance rates than the TPD model, although the TPD and GPD models are similar for the Montauk site. Overall, the hazard curves appear to be affected by the shelf width near the site measured along the predominant squall line trajectory.
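The shape of a tapered Pareto hazard curve can be explored with a short sketch. It assumes the standard TPD survival function, \(\left( {A_{t} /A_{0} } \right)^{\beta } \exp \left[ {\left( {A_{t} - A_{0} } \right)/A_{c} } \right]\), scaled by the source rate ν. The parameter values are illustrative and are not the fitted values for any of the four sites.

```python
import math

def tpd_survival(a0, a_t, beta, a_c):
    """Tapered Pareto survival function 1 - F(A0) for A0 >= A_t."""
    if a0 < a_t:
        return 1.0                      # below the observation threshold
    return (a_t / a0) ** beta * math.exp((a_t - a0) / a_c)

def exceedance_rate(a0, nu, a_t, beta, a_c):
    """Annual exceedance rate: source rate times the survival function."""
    return nu * tpd_survival(a0, a_t, beta, a_c)

if __name__ == "__main__":
    # Illustrative parameters only (not values fitted in the study)
    nu, a_t, beta, a_c = 5.0, 0.05, 1.0, 0.5
    for a0 in (0.05, 0.1, 0.2, 0.5, 1.0):
        print(f"A0 = {a0:4.2f} m  rate = {exceedance_rate(a0, nu, a_t, beta, a_c):.4f} /yr")
```

Well below the corner amplitude the curve follows the power law with exponent β, and above it the exponential taper dominates, which matches the power-law and tapered regimes described for the mean hazard curve.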

Fig. 5 Extrapolated hazard curves using two probability models (log–log plot). Red line: tapered Pareto model (Geist and Parsons 2014). Green line: GPD model. Blue line: mean hazard curve from Monte Carlo simulations. Hazard curves for four locations are shown (see Fig. 1): a Chesapeake Bay Bridge tunnel, VA; b Ocean City, MD; c Atlantic City, NJ; and d Montauk, NY

7 Discussion

This study describes a framework for conducting probabilistic hazard assessments for meteotsunamis. This method complements PTHA designed for geologic sources (ten Brink et al. 2014) for a comprehensive assessment of long-wave coastal hazards. The demonstration study described in the previous section is a simple implementation of the probabilistic method. There are several areas, however, where improvements can be made for future case studies, such as the inclusion of other atmospheric sources, better representation of atmospheric and hydrodynamic processes, and a more comprehensive probabilistic treatment. Each of these topics is discussed briefly below.

The aggregation Eq. (3) is generalized to include meteotsunami sources in addition to pressure disturbances from squall lines. Although squall lines are a dominant source of meteotsunamis along the U.S. Atlantic coast, the focus on this particular atmospheric phenomenon is also because of the tractable parameterization as meteotsunami sources and the availability of the ASOS data necessary for estimating source parameter distributions. Atmospheric gravity waves are another common cause of meteotsunamis worldwide (Monserrat et al. 1991), as exemplified by the 2008 Boothbay event (Vilibić et al. 2013). It is more difficult to parameterize these sources, short of performing high-resolution mesoscale modeling (Renault et al. 2011; Vilibić et al. 2013; Horvath and Vilibić 2014). In addition, it is unclear if enough data are available to construct distributions of the parameters that control atmospheric gravity waves. It should be emphasized, however, that a comprehensive probabilistic analysis would include these and other sources of meteotsunamis.

Characterization of squall lines in the demonstration study is homogeneous and stationary. A more realistic representation would be to model the time-dependent nature of storm systems. This has been discussed with respect to hurricane storm surge in terms of bias by not including evolutionary features of storms (Resio et al. 2009). An alternative would be to perform storm-track modeling as discussed by Vickery et al. (2000) for hurricanes and by Rotunno et al. (1988) for squall lines. In addition, spatial heterogeneity in the pressure distribution could be considered as a source of aleatory uncertainty in probabilistic analysis.

The numerical modeling presented in this study approximates most of the important resonance mechanisms associated with meteotsunamis, including Proudman, shelf, and edge wave resonance. However, small-scale modeling of harbor resonance, which can further amplify meteotsunami waves (Monserrat et al. 2006; Vilibić et al. 2008), was not included. A nested-grid scheme can be employed to model the different scales of the waves from the open ocean to harbors (e.g., Vilibić et al. 2008). Runup also can be modeled using a nested-grid scheme, with other terms such as bottom friction and wave breaking necessary for near-shore simulations. Near-shore amplification and focusing may be important in simulating runup, noting the large runup at Barnegat, NJ from eyewitness observations for the June 13, 2013, meteotsunami relative to maximum amplitude readings from tide-gauge stations.

In addition to the event non-stationarity associated with the evolution of storm systems, there are also sources of non-stationarity associated with the event catalogs. For example, there may be a measurable effect on the probabilistic hazard curve caused by seasonality of the events, depending on the exceedance probabilities of interest. Long-term climate change and sea-level rise may also significantly affect the probability calculations (e.g., Tebaldi et al. 2012).

The distribution selected for any of the parameters may be strongly influenced by the number of data points available, particularly if the actual distribution is heavy tailed. Geist and Parsons (2014) demonstrate that natural hazards that follow a Pareto distribution may appear to have an artificially low upper limit, or even appear as a different distribution, owing to the effects of undersampling. In particular, although a normal distribution is used for the pressure differential associated with squall lines in the demonstration study, the actual distribution may have a heavier tail. For example, Resio et al. (2009) use the Gumbel distribution to model the pressure differential associated with hurricanes. Additional data may also indicate certain dependencies among parameters, so that the joint probability distribution is more complex than indicated here. The use of copulas (functions linking marginal distributions in a multivariate distribution) can greatly simplify calculations in these cases (e.g., Salvadori and De Michele 2004).
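
As an illustration of how a copula separates the dependence structure from the marginal distributions, the following Python sketch draws dependent pairs of pressure differential and disturbance speed using a Gaussian copula. This is a minimal sketch and not the method of the demonstration study: the choice of a Gaussian copula, the correlation `rho`, the Gumbel and normal marginals, and all numerical parameter values are hypothetical and serve only as an example.

```python
import math
import random
from statistics import NormalDist


def sample_gaussian_copula(n, rho, seed=0):
    """Draw n pairs of uniforms whose dependence follows a Gaussian copula."""
    rng = random.Random(seed)
    std_normal = NormalDist()
    eps = 1e-12  # keep uniforms strictly inside (0, 1)
    pairs = []
    for _ in range(n):
        # Correlated standard normals with correlation rho
        z1 = rng.gauss(0.0, 1.0)
        z2 = rho * z1 + math.sqrt(1.0 - rho * rho) * rng.gauss(0.0, 1.0)
        # Map each normal to a uniform through the standard normal CDF
        u1 = min(max(std_normal.cdf(z1), eps), 1.0 - eps)
        u2 = min(max(std_normal.cdf(z2), eps), 1.0 - eps)
        pairs.append((u1, u2))
    return pairs


def gumbel_inv_cdf(u, loc, scale):
    """Inverse CDF of the Gumbel (maximum) distribution."""
    return loc - scale * math.log(-math.log(u))


def sample_squall_parameters(n, rho=0.6, seed=0):
    """Dependent (pressure differential, speed) pairs.

    Hypothetical marginals: Gumbel pressure differential in hPa and
    normal disturbance speed in m/s. None of these values come from
    the ASOS analysis.
    """
    speed_dist = NormalDist(mu=20.0, sigma=5.0)
    samples = []
    for u1, u2 in sample_gaussian_copula(n, rho, seed):
        dp = gumbel_inv_cdf(u1, loc=2.0, scale=0.8)
        speed = speed_dist.inv_cdf(u2)
        samples.append((dp, speed))
    return samples
```

Because the marginals enter only through their inverse CDFs, a heavier-tailed marginal can be substituted for either parameter without changing the dependence model, which is the simplification that copulas offer in this setting.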

Overall, probabilistic hazard analysis strives for a mutually exclusive and collectively exhaustive characterization of the hazard. When numerical models are employed, however, there is a trade-off between an accurate representation of natural phenomena and computational performance. Various methods developed from storm surge forecasting, such as optimal sampling (Resio et al. 2009; Toro et al. 2010) and continuous response functions (Irish et al. 2011), may be applicable to meteotsunami probabilistic analysis.

8 Summary

A framework for the probabilistic analysis of meteotsunamis has been introduced. In its general form, the analysis aggregates the hazard from different possible sources (squall lines, atmospheric gravity waves, etc.) and uses a numerical wave propagation model for each source and parameter combination. The result is a meteotsunami hazard curve that plots the annual rate of exceedance (or AEP) as a function of the maximum event amplitude. A demonstration study for the northeast U.S. is developed for squall line sources only, in which meteorological parameters are determined from ASOS station observations. Probability distributions for each parameter are estimated, and a Monte Carlo approach is used to develop hazard curves from random sampling of the distributions and numerical linear long-wave modeling of the meteotsunami itself. The shape of the meteotsunami hazard curve is best fit by a tapered or generalized Pareto distribution. Site-to-site variations in the hazard curves appear to be caused by regional variations in the shelf width (affecting Proudman resonant amplification) and the orientations of the coastline and shelf edge (affecting decoupled reflections).
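
The Monte Carlo aggregation summarized above can be sketched in Python as follows. The sketch is illustrative only: `toy_amplitude` is a hypothetical analytic stand-in for the numerical hydrodynamic model, the parameter distributions and the Poisson rate are invented values rather than the ASOS-derived estimates, and the conversion from exceedance rate to AEP assumes a stationary Poisson process.

```python
import math
import random


def toy_amplitude(dp_hpa, speed_ms, depth_m=50.0):
    """Hypothetical stand-in for the hydrodynamic model (not the paper's model).

    Inverted-barometer response (about 0.01 m per hPa) scaled by a
    Proudman-type factor 1/|1 - Fr^2|, arbitrarily capped at 10.
    """
    froude = speed_ms / math.sqrt(9.81 * depth_m)
    gain = min(1.0 / max(abs(1.0 - froude ** 2), 1e-6), 10.0)
    return 0.01 * dp_hpa * gain


def synthetic_catalog(rate_per_year, years, rng):
    """Amplitudes of events arriving as a Poisson process over `years`."""
    amplitudes = []
    t = rng.expovariate(rate_per_year)  # exponential inter-arrival times
    while t < years:
        dp = max(rng.gauss(2.0, 0.8), 0.0)       # hypothetical, hPa
        speed = max(rng.gauss(20.0, 5.0), 1.0)   # hypothetical, m/s
        amplitudes.append(toy_amplitude(dp, speed))
        t += rng.expovariate(rate_per_year)
    return amplitudes


def exceedance_rate(amplitudes, years, threshold):
    """Annualized rate of events whose amplitude exceeds `threshold`."""
    return sum(a > threshold for a in amplitudes) / years


def annual_exceedance_probability(rate):
    """AEP for a stationary Poisson process with the given annual rate."""
    return 1.0 - math.exp(-rate)


def quantile(ordered, q):
    """Simple empirical quantile of an already sorted list."""
    return ordered[min(int(q * len(ordered)), len(ordered) - 1)]


def hazard_curves(thresholds, n_catalogs=50, rate_per_year=5.0,
                  years=1000.0, seed=0):
    """Mean, 5% and 95% hazard curves from resampled synthetic catalogs."""
    rng = random.Random(seed)
    curves = []
    for _ in range(n_catalogs):
        catalog = synthetic_catalog(rate_per_year, years, rng)
        curves.append([exceedance_rate(catalog, years, a) for a in thresholds])
    mean, low, high = [], [], []
    for column in zip(*curves):  # one column per amplitude threshold
        ordered = sorted(column)
        mean.append(sum(ordered) / len(ordered))
        low.append(quantile(ordered, 0.05))
        high.append(quantile(ordered, 0.95))
    return mean, low, high
```

For example, `hazard_curves([0.05, 0.1, 0.2])` returns three lists of annualized exceedance rates, one value per amplitude threshold, and `annual_exceedance_probability` converts any of those rates to an AEP. In a real application the call to `toy_amplitude` would be replaced by a run of the hydrodynamic model at the site of interest.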

References

Aida I (1969) Numerical experiments for the tsunami propagation—the 1964 Niigata tsunami and the 1968 Tokachi-Oki tsunami. Bull Earthq Res Inst 47:673–700

Annaka T, Satake K, Sakakiyama T, Yanagisawa K, Shuto N (2007) Logic-tree approach for probabilistic tsunami hazard analysis and its applications to the Japanese Coasts. Pure Appl Geophys 164:577–592

Asano T, Yamashiro T, Nishimura N (2012) Field observations of meteotsunami locally called “abiki” in Urauchi Bay, Kami-Koshiki Island, Japan. Nat Hazards 64:1685–1706

Bluestein HB (1993) Synoptic–dynamic meteorology in midlatitudes: observations and theory of weather systems, vol 2. Oxford University Press, Oxford, p 594

Box GEP, Muller ME (1958) A note on the generation of random normal deviates. Ann Math Stat 29:610–611

Carrier GF (1995) On-shelf tsunami generation and coastal propagation. In: Tsuchiya Y, Shuto N (eds) Tsunami: progress in prediction, disaster prevention and warning, vol 4. Kluwer, Dordrecht, pp 1–20

Churchill DD, Houston SH, Bond NA (1995) The Daytona Beach wave of 3–4 July 1992: a shallow-water gravity wave forced by a propagating squall line. Bull Am Meteorol Soc 76:21–32

Fujima K, Dozono R, Shigemura T (2000) Generation and propagation of tsunami accompanying edge waves on a uniform shelf. Coast Eng J 42:211–236

Geist EL (2012) Near-field tsunami edge waves and complex earthquake rupture. Pure Appl Geophys. doi:10.1007/s00024-012-0491-7

Geist EL, Oglesby DD (2013) Earthquake mechanics and tsunami generation. In: Beer M, Patelli E, Kougioumtzoglou I, Au IS-K (eds) Encyclopedia of Earthquake Engineering, Springer, New York

Geist EL, Parsons T (2006) Probabilistic analysis of tsunami hazards. Nat Hazards 37:277–314

Geist EL, Parsons T (2014) Undersampling power-law size distributions: effect on the assessment of extreme natural hazards. Nat Hazards 72:565–595

Geist EL, Parsons T, ten Brink US, Lee HJ (2009) Tsunami probability. In: Bernard EN, Robinson AR (eds) The sea, vol 15. Harvard University Press, Cambridge, pp 93–135

Greenspan HP (1956) The generation of edge waves by moving pressure distributions. J Fluid Mech 1:574–592

Hibiya T, Kajiura K (1982) Origin of the Abiki phenomenon (a kind of seiche) in Nagasaki Bay. J Oceanogr Soc Jpn 38:172–182

Horvath K, Vilibić I (2014) Atmospheric mesoscale conditions during the Boothbay meteotsunami: a numerical sensitivity study using a high-resolution mesoscale model. Nat Hazards. doi:10.1007/s11069-014-1055-1

Irish JL, Resio DT, Cialone MA (2009) A surge response function approach to coastal hazard assessment. Part 2: quantification of spatial attributes of response functions. Nat Hazards 51:183–205

Irish JL, Song YK, Chang K-A (2011) Probabilistic hurricane surge forecasting using parameterized surge response functions. Geophys Res Lett 38. doi:10.1029/2010GL046347

Lamb H (1932) Hydrodynamics, vol 6. Dover Publications, Mineola, p 768

Lynett PJ, Liu PL-F (2005) A numerical study of run-up generated by three-dimensional landslides. J Geophys Res 110. doi:10.1029/2004JC002443

Martinsen EA, Gjevik B, Röed LP (1979) A numerical model for long barotropic waves and storm surges along the western coast of Norway. J Phys Oceanogr 9:1126–1138

Mercer D, Sheng J, Greatbatch RJ, Bobanović J (2002) Barotropic waves generated by storms moving rapidly over shallow water. J Geophys Res 107. doi:10.1029/2001JC001140

Monserrat S, Ibbetson A, Thorpe AJ (1991) Atmospheric gravity waves and the “Rissaga” phenomenon. Q J R Meteorol Soc 117:553–570

Monserrat S, Vilibić I, Rabinovich AB (2006) Meteotsunamis: atmospherically induced destructive ocean waves in the tsunami frequency band. Nat Hazards Earth Syst Sci 6:1035–1051

Parsons T, Geist EL (2009) Tsunami probability in the Caribbean region. Pure Appl Geophys 165:2089–2116

Pasquet S, Vilibić I (2013) Shelf edge reflection of atmospherically generated long ocean waves along the central U.S. East Coast. Cont Shelf Res 66:1–8

Press WH, Teukolsky SA, Vetterling WT, Flannery BP (2007) Numerical recipes: the art of scientific computing, vol 3. Cambridge University Press, Cambridge, p 1256

Proudman J (1929) The effects on the sea of changes in atmospheric pressure. Geophys J Int 2:197–209

Pugh DT, Vassie JM (1978) Extreme sea levels from tide and surge probability. In: Coastal engineering proceedings, 16th international conference on coastal engineering, Hamburg, pp 911–930

Rabinovich AB (2012) Meteorological tsunamis along the east coast of the United States. In: Towards a meteotsunami warning system along the U.S. Coastline (TMEWS), Institute of Oceanography and Fisheries, Croatia, 7 pp. http://jadran.izor.hr/tmews/results/Report-Rabinovich-TGdata-catalogues-analysis.pdf

Rabinovich AB, Monserrat S (1996) Meteorological tsunamis near the Balearic and Kuril islands: descriptive and statistical analysis. Nat Hazards 13:55–90

Rabinovich AB, Monserrat S (1998) Generation of meteorological tsunamis (large amplitude seiches) near the Balearic and Kuril Islands. Nat Hazards 18:27–55

Rabinovich AB, Shevchenko GV, Sokolova SE (1992) An estimation of extreme sea levels in the northern part of the Sea of Japan. La Mer 30:179–190

Raichlen F, Lee JJ (1992) Oscillation of bays, harbors, and lakes. In: Herbich JB (ed) Handbook of coastal and ocean engineering, vol 3. Gulf Publishing Company, Houston, pp 1073–1113

Reid RO, Bodine BR (1968) Numerical model for storm surges in Galveston Bay. J Waterw Harb Division A.C.E. 94:33–57

Renault L, Vizoso G, Jansá A, Wilkin J, Tintoré J (2011) Toward the predictability of meteotsunamis in the Balearic Sea using regional nested atmosphere and ocean models. Geophys Res Lett 38. doi:10.1029/2011GL047361

Resio DT, Irish J, Cialone M (2009) A surge response function approach to coastal hazard assessment—part 1: basic concepts. Nat Hazards 51:163–182

Rotunno R, Klemp JB, Weisman ML (1988) A theory for strong, long-lived squall lines. J Atmos Sci 45:463–485

Sallenger AH, List JH, Gelfenbaum G, Stumpf RP, Hansen M (1995) Large wave at Daytona Beach, Florida, explained as a squall-line surge. J Coastal Res 11:1383–1388

Salvadori G, De Michele C (2004) Frequency analysis via copulas: theoretical aspects and applications to hydrological events. Water Resour Res 40. doi:10.1029/2004WR003133

Satake K (2007) Tsunamis. In: Kanamori H, Schubert G (eds) Treatise on geophysics, volume 4-earthquake seismology, vol 4. Elsevier, Amsterdam, pp 483–511

Tebaldi C, Strauss BH, Zervas CE (2012) Modelling sea level rise impacts on storm surges along US coasts. Environ Res Lett 7. doi:10.1088/1748-9326/7/1/014032

ten Brink US, Chaytor JD, Geist EL, Brothers DS, Andrews BD (2014) Assessment of tsunami hazard to the U.S. Atlantic margin. Mar Geol 353:31–54

Toro GR, Resio DT, Divoky D, Niedoroda AW, Reed C (2010) Efficient joint-probability methods for hurricane surge frequency analysis. Ocean Eng 37:125–134

U.S. Nuclear Regulatory Commission (2013) In: Nicholson TJ, Reed W (eds) Proceedings of the workshop on probabilistic flood hazard assessment (PFHA). NUREG/CP-0302, Rockville

Vennell R (2007) Long barotropic waves generated by a storm crossing topography. J Phys Oceanogr 37:2809–2823

Vennell R (2010) Resonance and trapping of topographic transient ocean waves generated by a moving atmospheric disturbance. J Fluid Mech 650:427–443

Vickery PJ, Skerlj PF, Twisdale LA (2000) Simulation of hurricane risk in the U.S. using empirical track model. J Struct Eng 126:1222–1237

Vilibić I (2005) Numerical study of the Middle Adriatic coastal waters’ sensitivity to the various air pressure travelling disturbances. Ann Geophys 23:3569–3578

Vilibić I (2008) Numerical simulations of the Proudman resonance. Cont Shelf Res 28:574–581

Vilibić I, Domijan N, Orlić M, Leder N, Pasarić M (2004) Resonant coupling of a traveling air pressure disturbance with the east Adriatic coastal waters. J Geophys Res 109. doi:10.1029/2004JC002279

Vilibić I, Domijan N, Čupić S (2005) Wind versus air pressure seiche triggering in the Middle Adriatic coastal waters. J Mar Syst 57:189–200

Vilibić I, Monserrat S, Rabinovich AB, Mihanović H (2008) Numerical modelling of the destructive meteotsunami of 15 June, 2006 on the coast of the Balearic Islands. Pure Appl Geophys 165:2169–2195

Vilibić I, Horvath K, Strelec Mohović N, Monserrat S, Marcos M, Amores Á et al (2013) Atmospheric processes responsible for generation of the 2008 Boothbay meteotsunami. Nat Hazards. doi:10.1007/s11069-013-0811-y

Acknowledgments

The authors appreciate the constructive comments during review of this paper by Alexander Rabinovich, Richard Signell, and an anonymous reviewer.

About this article

Cite this article

Geist, E.L., ten Brink, U.S. & Gove, M. A framework for the probabilistic analysis of meteotsunamis. Nat Hazards 74, 123–142 (2014). https://doi.org/10.1007/s11069-014-1294-1

DOI: https://doi.org/10.1007/s11069-014-1294-1