Abstract

The occurrence of several recent “extreme” earthquakes with their significant loss of life and the apparent failure to have been prepared for such disasters has raised the question of whether such events are accounted for in modern seismic hazard analyses. In light of the great 2011 Tohoku-Oki earthquake, were the questions of “how big, how bad, and how often” addressed in probabilistic seismic hazard analyses (PSHA) in Japan, one of the most earthquake-prone but most earthquake-prepared countries in the world? The guidance on how to properly perform PSHAs exists but may not be followed for a whole range of reasons, not all technical. One of the major emphases of these guidelines is that it must be recognized that there are significant uncertainties in our knowledge of earthquake processes and these uncertainties need to be fully incorporated into PSHAs. If such uncertainties are properly accounted for in PSHA, extreme events can be accounted for more often than not. This is not to say that no surprises will occur. That is the nature of trying to characterize a natural process such as earthquake generation whose properties also have random (aleatory) uncertainties. It must be stressed that no PSHA is ever final because new information and data need to be continuously monitored and addressed, often requiring an updated PSHA.


1 Introduction

In the past few years, the term “extreme event” has gained popularity in the lexicon of natural hazards, due to the recent and perceived rare occurrence of several particularly devastating earthquakes. Even more recently, “black swan” has become a common term to describe an event that is a surprise, has a major impact, and in hindsight is often inappropriately rationalized (Taleb 2010). The recent 2004 moment magnitude (M) 9.2 Sumatra and 2011 M 9.0 Tohoku-Oki, Japan earthquakes can be regarded as “extreme events” or “black swans” in terms of their size because in the past 200 years, fewer than 10 earthquakes have reached M 9.0 or greater (Table 1). Both earthquakes can also be regarded as extreme because of the significant loss of life (>200,000 and >20,000 deaths, respectively). However, we can also consider recent devastating earthquakes such as the 2010 M 7.0 Haiti earthquake as an extreme event with the loss of life at possibly more than 300,000 even though it was of moderate size. Thus, extreme events can be “extreme” either because of the resulting hazard or the resulting losses or both. It is important to distinguish between hazard and losses when considering extreme events and the fundamental questions of “how big, how bad, and how often” should be asked in the context of hazard and risk analyses.

In the session “Preparing for the extreme: quantifying the probabilities and uncertainties of extreme hazards” at the 2011 European Science Foundation (ESF) Conference Understanding Extreme Geohazards: The Science of the Disaster Risk Management Cycle, the question that was posed was “how do seismic risk assessments account for epistemic gaps at the upper end of the magnitude scale.” There are two components to this question: the methodology and the inputs into that methodology. This paper focuses on the methodology and attempts to answer how well the three fundamental questions of how big, how bad, and how often are included in modern seismic hazard analyses and subsequently seismic risk analyses. Were the three earthquakes mentioned above, certainly surprises albeit not rare events, included in or even contemplated in seismic hazard analyses and subsequent hazard mitigative action? These questions have been raised by not only the public and decision makers but by scientists themselves, particularly with regard to the 2011 Tohoku-Oki earthquake where Japan, the most advanced country in the world in terms of earthquake safety, was “caught off guard” and not prepared for the ensuing disaster (Fig. 1) (Geller 2011; Stein et al. 2011, 2012; Nöggerath et al. 2012).

Was the lack of preparedness due to the inability to predict the ensuing hazard because of a flaw in seismic hazard analysis or were there other reasons? The Fukushima nuclear power plant disaster is an example of the lack of Japanese preparedness, in this case by the Tokyo Electric Power Company (Nöggerath et al. 2012). The impact and the implications of the Fukushima incident have been far-reaching. For example, the U.S. Nuclear Regulatory Commission has recommended that the seismic hazard of all 104 nuclear power plants in the United States be re-evaluated.

As will be discussed, the failure to predict extreme events in PSHA is not a defect in methodology but a failure to capture the legitimate range of technically supportable interpretations among the entire informed technical community and the uncertainties in those interpretations. In the following, we review the components of modern PSHA and the guidelines for performing PSHAs properly, and discuss the apparent failure to predict the hazard from the 2011 Tohoku-Oki earthquake and seismic risk in the context of rare earthquakes.

2 Seismic hazard versus seismic risk

One of the issues in discussing both seismic hazard and seismic risk is that the two terms are often mistakenly used interchangeably. Hazard is the consequence of a natural event such as an earthquake, volcanic eruption, landslide, or flood. Earthquake hazards include strong ground shaking, liquefaction, ground deformation, tsunami, seiche, and seismically induced landslide. Seismic risk is the potential consequence (losses) of a seismic hazard. It is defined probabilistically as the product of hazard, vulnerability, and value. The potential loss of life, injuries, and economic losses are seismic risks.

Regions that have a high seismic hazard need not have a correspondingly high seismic risk. California is one such region. The 1994 M 6.7 Northridge, California earthquake that occurred at the northern edge of the Los Angeles metropolitan area resulted in only 57 deaths, although the economic losses ($20 billion) were high. In contrast, the slightly larger 2010 M 7.0 Haiti earthquake, which was a direct hit to the capital city of Port-au-Prince, resulted in possibly more than 300,000 deaths. The significant difference in losses is due to the contrasting levels of vulnerability and hence risk: in southern California, seismic hazard mitigation activities have been ongoing for decades, whereas in Haiti very little, if any, mitigation has taken place.

In Table 2, the ten deadliest earthquakes on record are listed. All of the impacted countries have a high seismic hazard and are highly vulnerable (high seismic risk). Three of the deadliest events occurred in China and three in the Middle East. Note that only one of the earthquakes (2004 Sumatra) is among the top ten largest events (Table 1) and only two earthquakes (1923 Tokyo and 2004 Sumatra) occurred along a subduction zone where the world’s largest events have occurred (Table 1).

3 Seismic hazard analysis

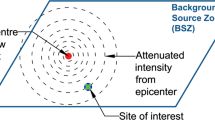

In modern seismic hazard analysis, the objective is to evaluate the severity of an earthquake hazard by characterizing the sources of damaging earthquakes in terms of the distribution of magnitudes they can produce (including the maximum events) and how frequently the events occur, and the relationship(s) between the earthquake characteristics and the hazard parameters (e.g., strength of ground shaking). Because there can be large epistemic uncertainties (see later discussion) in the input parameters such as maximum magnitude, the state-of-the-practice is to perform a PSHA (Fig. 2). Modern building codes such as the International Building Code are based on the results of a PSHA for strong ground shaking.

Fig. 2 The steps in performing a PSHA. Source: McGuire (2004)

The objective of a PSHA is to estimate the probability that a specified level of hazard will be exceeded (Fig. 2). That probability can be expressed on an annual basis or, for building codes, over an exposure period, for example, the 10 % probability of exceedance in 50 years. (The annual probability of exceedance can also be expressed in terms of a return period.) So to perform a PSHA, one needs to input the “how big” (the range of earthquakes up to the maximum earthquake) and the “how often” (the rate at which earthquakes of a given magnitude occur) (Fig. 2).
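The link between a probability of exceedance over an exposure period and a return period can be illustrated with a short calculation. The sketch below assumes that exceedances follow a Poisson process, which is the usual convention but is an assumption not stated in the text; the function name `return_period` is purely illustrative.

```python
import math

def return_period(prob_exceedance, exposure_years):
    """Return period (years) implied by a probability of exceedance
    over an exposure period, assuming Poisson (memoryless) occurrence."""
    # Poisson model: P = 1 - exp(-rate * t), so rate = -ln(1 - P) / t
    annual_rate = -math.log(1.0 - prob_exceedance) / exposure_years
    return 1.0 / annual_rate

# 10 % probability of exceedance in 50 years
print(round(return_period(0.10, 50.0)))
# 2 % probability of exceedance in 50 years
print(round(return_period(0.02, 50.0)))
```

Under this assumption, 10 % in 50 years corresponds to a return period of about 475 years and 2 % in 50 years to about 2,475 years.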

To answer “how bad” in terms of hazard (not risk), a relationship linking magnitude and distance to a hazard parameter is needed. In a PSHA for strong ground shaking, the relationships are called ground motion prediction equations (GMPE) (Fig. 2). GMPEs are models and no single model is the correct one because generally no single interpretation can represent a complex earth process. Hence, I prefer to call them ground motion prediction models to emphasize they have associated uncertainties.

PSHA is in contrast to deterministic seismic hazard analysis where the frequency or rate of earthquakes is not directly considered. Also called a scenario analysis, a deterministic analysis simply estimates how bad a hazard could be regardless of the probability of that earthquake occurring. Because modern society has moved to risk-informed decision-making where hazard needs to be expressed probabilistically, PSHA is generally required rather than deterministic analysis.

One of the major benefits of PSHA is that it allows for explicit treatment of uncertainty. It has now been recognized that there are two different types of uncertainty (SSHAC 1997). Epistemic uncertainty is due to the lack of present-day knowledge. For example, the maximum earthquake that a particular seismic source can produce has epistemic uncertainty. By definition, epistemic uncertainty can be reduced through further research and gathering of more and better data (SSHAC 1997). If the maximum earthquake has not been observed on a seismic source, its magnitude has to be estimated based on other data and so it will have epistemic uncertainty. If the largest earthquake were to occur, be observed and recognized as such, the epistemic uncertainty in the maximum earthquake can be reduced. Epistemic uncertainties in both input parameters and models are accommodated in PSHA through the use of logic trees where alternative interpretations are considered and weighted (Fig. 3).
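To make the logic tree idea concrete, the following is a minimal sketch of how weighted alternatives combine. All branch values and weights are hypothetical and chosen only for illustration; they do not come from any actual PSHA, and the helper names `enumerate_branches` and `weighted_mean` are my own.

```python
from itertools import product

def enumerate_branches(nodes):
    """Yield (values, weight) for every path through a logic tree.

    nodes: list of nodes; each node is a list of (value, weight)
    alternatives whose weights sum to 1.
    """
    for path in product(*nodes):
        values = tuple(value for value, _ in path)
        weight = 1.0
        for _, branch_weight in path:
            weight *= branch_weight  # path weight is the product of branch weights
        yield values, weight

def weighted_mean(nodes, fn):
    """Weighted mean of fn(*values) over all end branches."""
    return sum(weight * fn(*values) for values, weight in enumerate_branches(nodes))

# Hypothetical alternatives for one seismic source (illustration only)
mmax_node = [(8.0, 0.5), (8.5, 0.3), (9.0, 0.2)]   # maximum magnitude
rate_node = [(0.001, 0.6), (0.002, 0.4)]           # events per year
nodes = [mmax_node, rate_node]

total_weight = sum(weight for _, weight in enumerate_branches(nodes))
mean_mmax = weighted_mean(nodes, lambda mmax, rate: mmax)
weight_m9 = sum(weight for (mmax, _), weight in enumerate_branches(nodes) if mmax >= 9.0)

print(total_weight, mean_mmax, weight_m9)
```

In a real analysis, `fn` would be the full hazard calculation for each end branch. The point of the sketch is that a lower-weighted alternative such as M 9 still contributes to the result rather than being discarded.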

The second type of uncertainty is aleatory uncertainty (variability) or randomness. Theoretically, aleatory uncertainty cannot be reduced with more research or data (SSHAC 1997). For example, the rupture process of a future earthquake on a given fault is considered an aleatory uncertainty. Admittedly, the separation of epistemic and aleatory uncertainty is somewhat arbitrary and, in fact, a particular process or parameter may contain both types of uncertainties. It is important to distinguish between epistemic and aleatory uncertainty because they are treated differently in PSHA (SSHAC 1997).

4 PSHA guidelines

PSHA has become an increasingly important tool for providing the basis for design and safety-related decision-making at all levels of both the private sector and government. The level of sophistication applied to PSHA has increased dramatically over the past four decades since the technique was first introduced by Cornell (1968). As PSHA was implemented more and more in different forms in the United States for critical and important facilities, it became clear that it was time to establish more uniform and up-to-date guidelines for future studies.

In 1997, guidelines were established in the United States for the proper performance of PSHAs for critical facilities. The Senior Seismic Hazard Analysis Committee’s (SSHAC 1997) guidelines stress the importance of (1) proper and full incorporation of uncertainties and (2) inclusion of the range of diverse technical interpretations that are supported by data. I would suggest that if the philosophy behind these guidelines had been followed, the Tohoku-Oki earthquake would have been considered in PSHAs in Japan (see following discussion).

According to SSHAC (1997), the most important and fundamental fact that must be understood about a PSHA is that its objective can only be attained with significant uncertainty. The SSHAC (1997) guidelines state that the following should be sought in a properly executed PSHA project for a given difficult technical issue: (1) a representation of the legitimate range of technically supportable interpretations among the entire informed technical community, and (2) the relative importance or credibility that should be given to the differing hypotheses across that range.

Experts tend to underestimate uncertainties. They tend to act as proponents of a particular model rather than being true evaluators of all the available models. There are several reasons why this bias exists. One reason is that generally experts are so invested in their own model and convinced that their model is correct that they have not or will not adequately evaluate alternative models and interpretations and all sources of uncertainties. This process is called “anchoring.”

One key issue that often confronts teams of individuals performing PSHAs is the desire to reach consensus on model parameters. That can be particularly challenging if there is a really broad distribution of legitimate interpretations. However, SSHAC (1997) identifies different types of consensus and then concludes that one key source of difficulty is the failure to recognize that (1) there is not likely to be “consensus” (as the word is commonly understood) among the various experts; and (2) no single interpretation concerning a complex earth sciences issue is the “correct” one.

What constitutes a successful PSHA? SSHAC (1997) states that when independently applied by different groups, the PSHAs would yield “comparable” results, defined as results whose overlap is within the broad uncertainty bands that inevitably characterize PSHA results. For this to be true, the uncertainties in the methodology must be confronted and dealt with head-on. No PSHA analyst should attempt less, and no PSHA sponsor should accept less (SSHAC 1997). Regardless of the scale of the PSHA study, the goal remains the same: to represent the center, the body, and the range of technical interpretations that the larger informed technical community would have if they were to conduct the study.

One of the key issues in PSHAs is how long the results remain stable. A typical response to this question is that if the range of possible model interpretations and uncertainties has been properly accommodated in the PSHA, the hazard should be stable over time. Theoretically, future changes to the inputs into a PSHA due to new data or interpretations fall within the body and range of technical interpretations, and as such the inputs and hence results are judged to be stable. However, stability is very difficult to achieve in light of some very significant changes that have occurred in the earthquake sciences due to new research. In particular, every new earthquake seems to result in new lessons about the earthquake process. This in a sense reflects the relative immaturity of earthquake science. For example, the mean hazard as expressed on the U.S. National Seismic Hazard Maps developed by the U.S. Geological Survey changed by as much as 20–30 % from the 2002 to 2008 maps because of changes in the ground motion prediction models (Petersen et al. 2008). It appears that significant changes may also occur in the 2013 versions of the maps, again because of new ground motion models.

In my experience, in practice, PSHA results in the United States are at best only stable for one to two decades. Hence, the results of PSHAs should be considered to have limited stability, and the practice of monitoring advancements in earthquake science and their impacts on hazard by a project sponsor even after a PSHA has been completed is essential to the PSHA process. For critical and important facilities and structures, for example, nuclear power plants, a strong regulatory review process and periodic evaluation are critical.

5 Tohoku-Oki earthquake

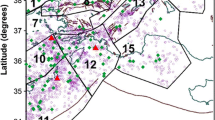

Was the 2011 Tohoku-Oki earthquake considered in seismic hazard analyses and mitigative actions in Japan? The dramatic overtopping of tsunami barriers was a clear indication that the size of the 2011 earthquake and its resulting tsunami were underestimated (Fig. 1). As pointed out by Geller (2011), the earthquake occurred in an area of the Japanese national earthquake hazard map that was designated as one of relatively low hazard. Many pronouncements by prominent Japanese seismologists indicate that a M 9 earthquake was believed to be very unlikely if not impossible along the northern Japan subduction zone because no such event had been observed in the 1,000-year historical record.

Although there was no well-documented case of a M 9 earthquake occurring along the Japan subduction zone, the 869 Jogan earthquake was suggested by Minoura et al. (2001) to have been similar to the 2011 event based on an evaluation of paleotsunami deposits. A number of publications and presentations addressed this possibility prior to 2008 (Nöggerath et al. 2012). Although the evidence was limited, why was this possibility not considered in the PSHA for the national hazard maps? A likely cause for this oversight was that many experts were anchored to the historical record. There has been an obvious trend in seismic hazard analyses worldwide including, to some extent, in the United States to rely too heavily on the historical earthquake record. In hindsight, the 1,000-year historical record did not cover a complete seismic cycle in northern Japan. If the SSHAC philosophy had been followed, this anchoring would have been avoided and more concerted efforts to incorporate the latest paleotsunami data would have been made.

What if no paleotsunami evidence had become available? It might still be argued that a M 9 earthquake should have been considered in PSHAs based on the evidence from other subduction zones worldwide where apparently “segmented” subduction zones have historically had multiple segments rupturing together in larger than anticipated earthquakes, such as the 2004 Sumatra earthquake (e.g., Satake and Atwater 2007). Similar to the 2011 Tohoku-Oki earthquake, there had been no known predecessor of the 2004 Sumatra earthquake in historical times. The portion of the Sunda subduction zone that ruptured during that event was thought to rupture in much smaller events (M < 8).

Three earthquakes in 1942 (M 7.9), 1958 (M 7.8), and 1979 (M 7.7) ruptured along a 500-km-long section of the South American subduction zone along the Ecuador-Colombia coast (Kanamori and McNally 1982). In 1906, all three segments ruptured together in a M 8.8 earthquake. If the 1906 earthquake had not been observed, the prevailing model might have been a segmented subduction zone with a low probability of multiple-segment ruptures such as the 1906 earthquake. The lack of consideration of great earthquakes in hazard analysis and mitigation can be viewed as the result of an over-reliance on the historical record with inadequate attention to the uncertainties.

If the available paleotsunami data had been taken more seriously by decision-makers and the uncertainties in estimating the largest possible earthquake on the northern Japan subduction zone had been better appreciated, then perhaps more effective mitigation measures would have been taken to reduce the losses incurred in the 2011 earthquake. Of course, the decision of whether to mitigate involves more than a knowledge of the hazard. It was not the inability to predict the hazard or losses in the 2011 Tohoku-Oki earthquake that was at fault here. No complicated mathematics were needed.

Subsequent to the ESF conference, several papers have been published that address this question. In a series of papers, Geller (2011) and Stein et al. (2011, 2012) delve into why probabilistic hazard maps can be flawed. In particular, the 2010 Japanese national seismic hazard map predicted significantly lower hazard in the Tohoku area compared to other parts of Japan, reflecting the general surprise among the Japanese scientific community when the 2011 Tohoku earthquake occurred. Geller (2011) stated that because the Japanese national hazard map utilized the well-known “characteristic” earthquake model and the related “seismic gap” model, “the hazard map and the methods used to produce it are flawed and should be discarded.”

However, the authors’ criticism is not focused so much on the methodology of producing hazard maps but on the inputs into the maps. In this case, the failure was on the part of those who provided the seismic source characterization input for the Tohoku region. They failed to recognize that (1) M 9 earthquakes were possible if multiple segments ruptured together and (2) paleotsunami evidence was already available that suggested that possibility (see preceding discussion). Stein et al. (2012) suggest two approaches to improving hazard maps: (1) the uncertainties in the maps should be assessed and clearly communicated to potential users, and (2) the maps should undergo rigorous and objective testing. In a rebuttal to the Stein et al. (2011) article, Hanks et al. (2012) conclude that “unforeseen earthquakes occur in unexpected places is a simple fact of life, not a failure of PSHA.”

6 Seismic risk

We know, by and large, that there will be extreme losses on a global scale in earthquake-prone areas that have a large concentrated population that is vulnerable because of poorly engineered buildings. We were aware of this situation in Haiti prior to the 2010 earthquake. Large earthquakes in 1700 and 1751 had previously destroyed Port-au-Prince. The history of destructive earthquakes in highly seismic and vulnerable countries (Table 2) indicates where future disasters will occur again.

Truly rare and destructive earthquakes are also possible and such extreme events present the greatest challenges to society in terms of risk-based decisions on seismic design and mitigation. Such rare earthquakes occur in intraplate (generally intracontinental) regions with low seismic strain rates and recurrence intervals of 10,000 years or more. These events are popularly referred to as “low probability, high consequence” events in the hazard/risk vocabulary. The issue that we face in terms of extreme events is not that we cannot estimate their hazard and the resulting risk but how should society allocate limited resources to mitigate their effects given more probable hazards and losses. Needless to say, the uncertainties in characterizing earthquake sources and processes are larger in the regions of low seismicity and infrequent large earthquakes.

What is a low probability event? In his book Seismic Hazard and Risk Analysis, McGuire (2004) states that when the annual probability of danger is below 1 in 100,000, it is often considered minuscule in comparison with other dangers and can be ignored. He cites as an example that the average risk of an individual fatality from extreme weather in the central and eastern United States is about 1 in 5 million and people still live in flood- and wind-prone areas. On the other hand, McGuire (2004) notes that probabilities of 1 in 100 are considered too high and hence efforts are made to reduce the risk. It is the middle ground between these extremes where decisions on what is an “acceptable” probability or risk need to be made. For example, couched in terms of hazard probabilities, ordinary buildings in most modern countries are designed to hazard levels of 1 in 475 (based on the now superseded Uniform Building Code). Currently, the United States has changed to an annual probability of 1 in 2,475 for its building code (International Building Code) to address lower probability earthquakes in the central and eastern United States. The recommendation for high-hazard dams, those that could result in the loss of life if they failed, is that they should be designed to hazard levels of 1 in 3,000 to 1 in 10,000 (USCOLD 1998). Nuclear power plants and other nuclear facilities are generally designed to hazard levels of 1 in 10,000 (US Nuclear Regulatory Commission).

An example of a rare earthquake is the 1887 Sonoran, Mexico earthquake of estimated M 7.5. The event was felt widely throughout the southwestern United States and northwestern Mexico with only a few reported deaths in the sparsely populated region (Bull and Pearthree 1988). The sources of the earthquake, the Pitaycachi, Teras, and Otates faults, had been dormant for more than 30,000 to 40,000 years prior to the 1887 event based on paleoseismic studies (Suter 2008). If such an earthquake were to occur again, the consequences would be much more severe than they were in 1887.

The moniker of a “low probability, high consequence” event has unfortunately been placed on earthquakes that are not really rare. They are often relatively frequent even at the human timescale. They are perceived as rare because they do not occur in a “lifetime” but in periods of multiple lifetimes. Were the 2004 Sumatra, 2010 Haiti, and 2011 Tohoku-Oki earthquakes low probability events? The answer is no. The 2004 earthquake was preceded by a similar characteristic earthquake 600 years ago (Monecke et al. 2008). Although the source of the 2010 Haiti earthquake is still unclear at this time, earthquakes of similar size had occurred in the region in the past few hundred years (Mann et al. 2008). Finally, the predecessor to 2011 may have been the 869 Jogan earthquake only 1,100 years prior.
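A rough calculation shows why such events are not low probability on a human timescale. The sketch below assumes a Poisson occurrence model, treats the intervals to the predecessor events quoted above (about 600 and 1,100 years) as mean recurrence intervals, and takes an 80-year lifetime. All three are simplifying assumptions made for illustration, and the function name is hypothetical.

```python
import math

def prob_at_least_one(recurrence_years, window_years):
    """Probability of at least one event in a time window, assuming
    Poisson occurrence with the given mean recurrence interval."""
    return 1.0 - math.exp(-window_years / recurrence_years)

LIFETIME_YEARS = 80.0  # assumed length of a human lifetime

# Recurrence intervals taken loosely from the predecessor events in the text
p_sumatra = prob_at_least_one(600.0, LIFETIME_YEARS)
p_tohoku = prob_at_least_one(1100.0, LIFETIME_YEARS)

print(f"Sumatra-type event in one lifetime: {p_sumatra:.1%}")
print(f"Tohoku-Oki-type event in one lifetime: {p_tohoku:.1%}")
```

Under these assumptions the chance of experiencing such an earthquake in a single lifetime is on the order of 10 %, which is comparable to the 10 % in 50 years level used for ordinary buildings.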

7 Conclusions

Are extreme earthquakes accounted for in modern seismic hazard analysis? Are the questions of how big, how bad, and how often adequately addressed in PSHA? At issue is the extent to which guidelines such as those espoused by SSHAC (1997) are adhered to. When a PSHA fails to adequately estimate future hazard, the problem is not the methodology but a failure to adequately incorporate the uncertainties in our knowledge of earthquake behavior. Even then, there is no guarantee that all extreme events will be recognized; there are always unanticipated surprises. We must recognize that the results of even the best PSHAs have a limited “warranty.” Although we seek stability in hazard predictions, the record suggests that hazard estimates may only be stable for one to two decades at best. It is imperative that we recognize no PSHA is ever final. Because our knowledge of earthquake processes continues to progress along a steep learning curve, new information and data need to be continuously monitored and addressed in updated PSHAs. Unfortunately, even when extreme events are predicted, decisions to mitigate their impacts will be complicated by economic, political, and societal reasons.

References

Bull WB, Pearthree PA (1988) Frequency and size of quaternary surface rupture of the Pitaycachi fault, northeastern Sonora, Mexico. Bull Seismol Soc Am 78:956–978

Cornell CA (1968) Engineering seismic risk analysis. Bull Seismol Soc Am 58:1583–1606

Geller RJ (2011) Shake-up time for Japanese seismology. Nature 472:407–409

Hanks TC, Beroza GC, Toda S (2012) Have recent earthquakes exposed flaws in or misunderstandings of probabilistic seismic hazard analysis? Seismol Res Lett 83:759–764

Kanamori H, McNally K (1982) Variable rupture mode of the subduction zone along the Ecuador-Colombia coast. Bull Seismol Soc Am 72:1241–1253

Mann P, Calais E, Demets C, Prentice CS, Wiggins-Grandison M (2008) Enriquillo-Plantain Garden strike-slip fault zone: a major seismic hazard affecting Dominican Republic, Haiti and Jamaica: 18th Caribbean geological conference (http://www.ig.utexas.edu/jsf/18_cgg/Mann3.htm)

McGuire RK (2004) Seismic hazard and risk analysis. Earthquake Engineering Research Institute, Oakland

Minoura K, Imamura F, Sugawara D, Kono Y, Iwashita T (2001) The 869 Jogan tsunami deposit and recurrence interval of large-scale tsunami on the Pacific coast of northeast Japan. J Nat Disaster Sci 23:83–88

Monecke K, Finger W, Klarer D, Kongko W, McAdoo BG, Moore AL, Sudrajat SU (2008) A 1,000-year sediment record of tsunami recurrence in northern Sumatra. Nature 455:1232–1234

Nöggerath J, Geller RJ, Gusiakov VK (2012) Fukushima: the myth of safety, the reality of geoscience. Bull Atomic Sci 67:37–46

Petersen MD, Frankel AD, Harmsen SC, Mueller CS, Haller KM, Wheeler RL, Wesson RL, Zeng Y, Boyd OS, Perkins DM, Luco N, Field EH, Wills CJ, Rukstales KS (2008) Documentation for the 2008 update of the United States National Seismic Hazard Maps. US Geological Survey Open-File Report 2008-1128, p 61

Satake K, Atwater BF (2007) Long-term perspectives on giant earthquakes and tsunamis at subduction zones. Earth Planet Sci 35:374–439

Senior Seismic Hazard Analysis Committee (SSHAC) (1997) Recommendations for probabilistic seismic hazard analysis: guidance on uncertainty and use of experts. US Nuclear Regulatory Commission, Vol 1, NUREG/CR-6372

Stein S, Geller RJ, Liu M (2011) Bad assumptions or bad luck: why earthquake hazard maps need objective testing. Seismol Res Lett 82:623–626

Stein S, Geller RJ, Liu M (2012) Why earthquake hazard maps often fail and what to do about it. Tectonophysics 562–563:1–25

Suter M (2008) Structural configuration of the Otates fault (Southern Basin and Range Province) and its rupture in the 3 May 1887 Mw 7.5 Sonora, Mexico, earthquake. Bull Seismol Soc Am 98:2879–2893

Taleb NN (2010) The Black Swan, Second Edition, The Impact of the Highly Improbable. Random House, New York

U.S. Committee on Large Dams (USCOLD) (1998) Updated guidelines for selecting parameters for dam projects. 2 appendices p 50

Acknowledgments

My thanks to Hans-Peter Plug, Stuart Marsh, and the ESF for their invitation to participate in the Conference on “Extreme Geohazards.” My appreciation to Melinda Lee for her assistance in the preparation of this paper, to Eliza Nemser and two anonymous reviewers for their reviews and to Editor-in-Chief T.S. Murty for his assistance.


Cite this article

Wong, I.G. How big, how bad, how often: are extreme events accounted for in modern seismic hazard analyses?. Nat Hazards 72, 1299–1309 (2014). https://doi.org/10.1007/s11069-013-0598-x
