Abstract

The main purpose of this paper is to document the challenges within spatial risk and vulnerability assessments in order to instigate an open discussion about opportunities for improvements in approaches to disaster risk. This descriptive paper identifies challenges to quality and acceptance and is based on a case study of a social vulnerability index of river floods in Germany. The findings suggest the existence of a vast range of challenges, most of which are typical for many spatial vulnerability assessments. Some of these challenges are obvious while others, such as the stigmatisation of ‘the vulnerable’ in vulnerability maps, are probably less often the subject of debate in the context of quantitative assessments. The discussion of challenges regarding data, methodology, concept and evaluation of vulnerability assessment may help similar interdisciplinary projects in identifying potential gaps and possibilities for improvement. Moreover, the acceptance of the outcomes of spatial risk assessments by the public will benefit from their critical and open-minded documentation.

1 Introduction

Disaster risk and vulnerability within the context of a specific hazard or multiple hazards is increasingly being quantified and mapped. Characterisation and identification of hazards, vulnerability and risk is still a major research objective in disaster risk research (International Council for Science 2008). Risk maps combine information about the hazard, the triggering factors and the impacts. Vulnerability indicators and indices have been developed for several areas of vulnerability including social (Cutter et al. 2003; Tapsell et al. 2002; Fekete 2010), economic (Meyer et al. 2007), environmental (Damm 2010) and other areas. Spatial overlay maps combining multiple information and analysis layers using geographic information systems (GIS) have become standard applications. However, maps developed on the basis of vulnerability and risk indicators may contain conceptual and methodological quality problems, as well as limitations in terms of their interpretation and acceptance by users.

This paper presents only a small proportion of the problems and challenges commonly associated with spatial risk assessments and deals mainly with the limitations of spatial and quantitative assessments. The author is aware of the rich body of literature on qualitative research methods (for example Bernard 2006) and the wide field of qualitative disaster risk and vulnerability studies (for instance Hewitt 1983; Wisner et al. 2004); however, the specific limitations and quality requirements for non-spatial risk and vulnerability assessments are beyond the scope of this paper.

Cartographic and data representation effects have been extensively documented for spatial assessments. These include the effects of spatial resolution (Meentemeyer 1989), heterogeneous representation units, such as the Modifiable Areal Unit Problem (Openshaw 1984), the traps of generalising about or stereotyping individuals (Robinson 1950), the ‘ecological fallacy’ (Meentemeyer 1989; Cao et al. 1997), and challenges related to the selection of scale type and spatial level (Gibson et al. 1998; Fekete et al. 2010). Context, which is often regarded as crucial for vulnerability assessments, can be blurred by aggregation (O’Brien et al. 2004). In many standard vulnerability index papers (see the literature review in Fekete 2009c), the limitations and challenges are only briefly presented and often not in a structured way. For instance, there is little in the literature that deals explicitly with the limitations of socio-economic indicators and ‘quantifiable vulnerability’ (King 2001: 153; Benson and Twigg 2004; Steinführer and Kuhlicke 2007; Schauser et al. 2010).

Often, risk or vulnerability maps are produced for the sake of providing scientific knowledge. Increasingly, this type of spatial assessment aims at providing ‘policy-relevant’ information. However, among scientists, little is known about how these maps or products are perceived by their users or the public. Vulnerability maps may be published and communicated with unanticipated and unintended reactions or even misinterpretation by their recipients. Despite these issues, there is a lack of applied research or recommendations about how these types of pitfalls in their use should be handled. The framework of the International Risk Governance Council (IRGC 2008: 16) and the risk management framework ISO/IEC 31010:2009 (ISO 2009) point out that there should be a risk framing phase before a risk assessment and an evaluation phase thereafter. Risk communication is necessary during all of these phases. Nevertheless, which aspects need to be considered before conducting an assessment?

Vulnerability maps, in particular, are currently stimulating earnest debate about their conceptual and methodological robustness and, in some cases, about their legitimacy. As many scientists may be unaware of the potential pitfalls in their use, or may not be equipped to handle them, this paper highlights key problem areas and offers a number of possible solutions. A desirable outcome would be that the paper triggers a discussion of the quality and acceptance aspects of spatial risk and vulnerability assessments. This may help to bridge the clichéd divide between social and natural scientists, between top–down and bottom–up approaches, and between qualitative and quantitative arguments. ‘Interdisciplinarity’, ‘transdisciplinarity’ and ‘multidisciplinarity’ are buzzwords in important research programmes (International Council for Science 2008), but their application in multi-disciplinary, multi-institutional or cross-cutting projects proves to be difficult. A number of challenges confronting successful interdisciplinary collaboration are addressed in this paper. The key objective of the paper, however, is to outline a number of quality and acceptance challenges in conducting spatial vulnerability or risk assessments which may be of use to other researchers.

1.1 Range and diversity of risk or vulnerability approaches

There is a considerable range and diversity of approaches to risk or vulnerability analyses. While this case study is a typical spatial, top–down approach using semi-quantitative indicators and GIS, the content, structure and products of other assessments can be quite different. For instance, quantitative approaches exist that focus solely on hazard modelling or on vulnerability measurements. These measurements can be conducted in almost any discipline or area including social, environmental, economic, political, institutional, physical, technical and psychological fields. Not only are risks researched in these areas, but also risk perception, risk communication and especially the links between hazard and vulnerability aspects.

All of these areas are also subject to qualitative research approaches. It exceeds the scope of this paper to provide examples from the literature or case studies for all types of hazards, be they natural, technical or human-induced hazards or for all types of vulnerability or risks. In fact, there are already a great number of texts that provide an entry into the topic, and it is not appropriate to list and treat them here as any discussion may be unjust and exclude certain authors. For this reason, this paper focuses on a specific case study and only very briefly sets the context to the work of the United Nations University—Institute for Environment and Human Security (UNU-EHS) which provides the case study’s conceptual setting. The book Measuring Risk (Birkmann 2006) provides a guide to the various quantitative and qualitative types of vulnerability and risk assessments.

2 The case of a social vulnerability index related to flood hazards

The currently prevailing culture in the scientific community is to publish only results that are successful. Similarly, there is a certain tendency to present only the positive aspects of any given empirical or theoretical approach. The following section analyses mainly the limitations of an assessment that was conducted by the author; its successes have been documented elsewhere already (Fekete 2010). The application of a degree of self-criticism and a demonstration of the range of limitations in the case study is intended to facilitate not just an awareness of differing aspects of quality and acceptance, but also to foster interdisciplinary communication.

The case study involved the development of a social vulnerability index of river floods. The problem-framing behind the development of the case study was largely connected to research actions suggested by the Hyogo Framework for Action (HFA 2007). The HFA calls for vulnerability and risk assessments for dealing with natural disasters and their societal consequences. Vulnerability and risk assessments are a key topic of the United Nations University Institute for Environment and Human Security (UNU-EHS). For instance, the UNU-EHS Expert Working Group on Measuring Vulnerability to Hazards of Natural Origin was active in setting up a research agenda on this topic and in conducting applied research (UNU-EHS/ADRC 2005).

An interdisciplinary research project was created to conduct research on this issue by three scientific institutions: UNU-EHS, the German Aerospace Centre (DLR) and the GeoResearch Centre (GFZ). The project, named DISFLOOD (2005–2009), integrated four PhD research studies and combined several layers of social and socio-ecological vulnerability data with flood hazard information using flood scenarios, hydrological event sets and remote sensing methodology. The ambition was to increase knowledge of a previously rather neglected aspect of flood risks, that of social vulnerability, and to make such complex information accessible and transparent to experts, policy makers and the public by using web-GIS. Moreover, a social vulnerability index was seen as desirable in specifically expanding existing damage assessments by capturing a range of exposure, susceptibility and capacity information about humans and society. The index was combined with thematic mapping in GIS to allow the visualisation of the information over large regions along the rivers Elbe and Rhine in Germany (Fekete 2010). As a result, the social vulnerability index map showed that, apart from exposure, other demographic factors help to identify regions with relatively higher or lower vulnerability to river floods. Certain regions or locations are visible that bear a relatively higher ‘hypothetical’ vulnerability. However, the main objective was to demonstrate the vulnerability concept and methodology through the use of this map.

2.1 Challenges related to data used in the vulnerability assessment

Typical problems of socio-economic indicators (King 2001) such as data quality, gaps and missing values, updating issues, data decay or normalisation were present during the development of the social vulnerability index.

2.1.1 Data gaps and data mining

Data mining was a major constraint in the production of the vulnerability assessment. At the time of the DISFLOOD project (2005–2009), census data covering the large regions along complete rivers in Germany was accessible only in aggregated form through federal statistics. These statistics are provided at county or municipality scale, and this in practice defined the resolution of the GIS analysis. Methods such as disaggregation were used only in a limited way, since they require the assumption of average values which do not reflect reality. For instance, in order to calculate the exposure to floods, a mean distribution of the population per settlement area had to be assumed, due to a lack of more precise data. Flood hazard maps were accessible for the river Rhine from an international commission (IKSR 2001). The integration of the Danube areas and some areas downstream on the river Elbe had to be dropped since the flood extent maps of HQ200 and greater events were not available until the end of the DISFLOOD project in 2009. Certain demographic data such as the proportion of immigrants per county could not be used as the information was available only in aggregated form at higher levels. Other information such as infrastructure locations or land cover cadastres had to be dropped as they were too costly for research institutions, especially for large regions. For some international project partners, the German system was incomprehensible, in that the project had to pay for data from official sources that had already been paid for by the taxpayers.
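The mean-distribution assumption described above can be made concrete with a minimal sketch. It assumes that residents are spread evenly over a county's settlement area, so that the exposed population is simply the population multiplied by the flooded share of that area. The function name, parameter names and figures are hypothetical and are not taken from the DISFLOOD data.

```python
def exposed_population(population, settlement_area_ha, flooded_settlement_area_ha):
    """Estimate exposed residents, assuming an even spread over the settlement area."""
    if settlement_area_ha <= 0:
        raise ValueError("settlement area must be positive")
    if not 0 <= flooded_settlement_area_ha <= settlement_area_ha:
        raise ValueError("flooded area must lie between 0 and the settlement area")
    # Share of the county's settlement area inside the flood extent
    flooded_share = flooded_settlement_area_ha / settlement_area_ha
    return population * flooded_share


# Hypothetical county: 120,000 residents, 3,000 ha of settlement area, 450 ha flooded
exposed = exposed_population(
    population=120_000,
    settlement_area_ha=3_000.0,
    flooded_settlement_area_ha=450.0,
)
print(round(exposed))
```

In this invented case 15 % of the settlement area is flooded, so about 18,000 residents are counted as exposed, regardless of where in the county people actually live. This is exactly the loss of realism that limited the use of disaggregation in the case study.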

The federal political structure of Germany posed a major constraint in data collection. While certain institutions were very helpful, there was a good deal of resentment and reluctance to provide data for national overviews. Since disaster risk management responsibility is positioned at the county and ‘Länder’ (Federal States) level, following a bottom–up principle, there was often open reluctance to acknowledge the need for cross-regional overviews of flood risks. The reasons appeared to stem from fears of interference with local sovereignty and of top–down regulative ambitions of the national authorities.

Data availability and accessibility are hidden denominators for deciding between data-rich small scale demonstration studies or limited data large-scale studies. The remote sensing data delivered land cover products which were limited in resolution to settlement areas of 25 ha minimum (CORINE land cover product). However, this data source was still more accessible in terms of financial resources than the higher resolution official land cover used by the federal agencies.

2.1.2 Criticism concerning data

Apart from the aspects described in the previous section, the data used in the case study has been subjected to criticism by scientific audiences. For instance, it has often been observed that the sizes of the counties differ widely, and this raises demands for data normalisation relative to the number of residents and settlement area per county.
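The demand for normalisation can be illustrated with a small sketch that expresses a raw count relative to the number of residents. The county names and figures are invented for illustration only.

```python
def per_thousand_residents(count, residents):
    """Express a raw count as a rate per 1,000 residents."""
    if residents <= 0:
        raise ValueError("number of residents must be positive")
    return 1000 * count / residents


# Hypothetical counties of very different size
counties = {
    "large_county": {"unemployed": 12_000, "residents": 200_000},
    "small_county": {"unemployed": 3_000, "residents": 30_000},
}

rates = {
    name: per_thousand_residents(c["unemployed"], c["residents"])
    for name, c in counties.items()
}
print(rates)
```

The larger county has four times as many unemployed residents in absolute terms, yet the smaller county has the higher rate. A map of raw counts and a map of rates would therefore rank the two counties in opposite order.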

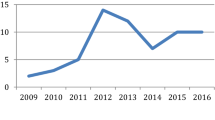

A problem with generating averaging overviews using demographic data is shown in Fig. 1, where the socio-economic indicator is composed of unemployment rates, education levels and apartment type per county in Germany. The whole eastern part of Germany is depicted as relatively vulnerable by using this indicator since the unemployment rate is generally higher there when compared to the average of German counties. This is an effect which still relates to the political situation after German re-unification. It is also an effect of data aggregation that resulted from the ambition of the researcher to set all counties in Germany relative to each other.

Another criticism has been that the vulnerability maps are static and do not include different temporal effects. The main purpose, however, was to develop the index and produce a pilot map. This static map was seen as a starting point for continuous monitoring by the preparation of an updated version of the index map every year. While demographic data is available for continuous updating, extreme flood events (fortunately) do not occur often enough to allow for time-series mapping.

2.2 Challenges regarding the methods used in the vulnerability assessment

Quality aspects are usually well described for most methods in both the natural and social sciences. The acceptance of methods, however, is an interdisciplinary challenge.

2.2.1 Indicators and aggregation

As a general rule, indicators can only explain a certain phenomenon or trend to a limited extent and cannot explain the whole picture. The main challenge is to derive a set of indicators which is on the one hand minimal and applicable, and on the other hand explains the phenomenon as clearly as possible. It is the artificial setting created by a statistical method and an index that is rejected by those who compare it with reality. A composite index simply shows patterns or areas where many general indications accumulate. It provides indications for assuming a potential vulnerability and no more. Validation with a real event might show that those patterns are correct; however, it provides no guarantee that this pattern will hold true in the next specific flood event. Still, it must be acknowledged that, especially for a topic as close to human life and to social issues as social vulnerability, a composite semi-quantitative index is difficult to accept. Indeed, many concerns about the aggregation of vulnerabilities or risk are highly justified. For similar methods such as the risk matrix, which involve similar aggregation and colour-coding, the critique and pitfalls of the aggregation of risks or risk factors are documented even in standards and norms such as ISO 31010:2009 (ISO 2009). Additional aspects of the indicators and aggregation are related to the evaluation of the final product and are therefore covered in later sections of the paper.

Many aspects of data aggregation or the composition of indicators are standard challenges for researchers. Guidelines on data aggregation techniques can be found in many texts (for example Nardo et al. 2005). The quality criteria of quantitative vulnerability indicators have been described, for example, by Benson and Twigg (2004: 55, 104) or Birkmann (2006). The fundamental issue, however, is whether the aggregation of indicators, or a composite index at all, is accepted. There are major concerns about the aggregation of indicators or even factors that appear to be incommensurable, such as combining age, income or housing type. While this is a standard technique in many demographic or economic indices, within risk and vulnerability research it appears to be a major issue. A general concern about any method of generalisation certainly applies here: aggregation levels out, builds averages and hides extreme values. The argument for aggregating several social vulnerability factors follows the notion that one negative factor can in principle be evened out by a positive factor. However, one specific factor cannot be directly compared with another factor in any given case. For example, old age as an indication of increased susceptibility to floods is not evened out by a high level of experience. Experience may even backfire where it suggests that the flood will never reach any higher than before and therefore that evacuation is not necessary.
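How averaging levels out extreme values can be shown with a minimal sketch. It standardises two indicators as z-scores and averages them with equal weights. This is one common way of building a composite index and is not necessarily the exact procedure of the case study. The indicator names and values are hypothetical, and both indicators are oriented so that higher values mean higher assumed vulnerability.

```python
from statistics import mean, pstdev


def z_scores(values):
    """Standardise values to mean 0 and (population) standard deviation 1."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def composite_index(indicators):
    """Equal-weight average of z-scores, one composite value per county."""
    standardised = [z_scores(column) for column in indicators.values()]
    return [mean(row) for row in zip(*standardised)]


# Hypothetical percentages for three counties A, B and C
indicators = {
    "residents_over_65": [10, 20, 30],
    "unemployment_rate": [30, 20, 10],
}
composite = composite_index(indicators)
print(composite)
```

Counties A and C each carry one extreme value, but the two indicators offset each other, so all three counties receive the same composite score as the entirely average county B. The composite map would show no pattern at all, whereas two separate indicator maps would.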

The critique by colleagues of the aggregation of vulnerability indicators in the case study is quite justified. It might have been more useful to present the indicators separately in order to avoid blurring the picture, since the extreme values of individual factors might be important in highlighting potential vulnerability. Moreover, it is questionable whether one factor or indicator such as age can be evened out by another factor. Methods and forms of representation other than averaging and composite indices should be considered for future studies. Such options are Pareto-ranking, z-scoring or other data aggregation techniques (Nardo et al. 2005; Simpson and Katirai 2006). However, normalisation can distort the resulting risk picture when z-scores highlight extreme values, and aggregation methods such as Pareto-ranking result in different risk patterns (Fekete 2010). Alternative methods for the representation of data may consist of individual indicators with related visualisation options. These could be individual maps or simply tables.
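As an illustration of one alternative to averaging, the following sketch ranks counties by Pareto dominance. A county dominates another if it scores at least as high on every indicator and strictly higher on at least one. Rank 1 is the set of counties not dominated by any other, and further ranks are obtained by removing each front in turn. This is a generic formulation and not necessarily the variant used in the cited studies. The county labels and scores are hypothetical.

```python
def dominates(a, b):
    """True if profile a is at least as high as b everywhere and higher somewhere."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_ranks(profiles):
    """Assign rank 1 to non-dominated profiles, then peel off successive fronts."""
    remaining = dict(profiles)
    ranks = {}
    rank = 1
    while remaining:
        front = [
            name
            for name, profile in remaining.items()
            if not any(
                dominates(other_profile, profile)
                for other, other_profile in remaining.items()
                if other != name
            )
        ]
        for name in front:
            ranks[name] = rank
            del remaining[name]
        rank += 1
    return ranks


# Hypothetical (age indicator, income indicator) scores, higher = more vulnerable
profiles = {"A": (0.9, 0.1), "B": (0.1, 0.9), "C": (0.5, 0.5), "D": (0.4, 0.4)}
ranks = pareto_ranks(profiles)
print(ranks)
```

Counties A, B and C all share the first rank although an equal-weight average would score them identically at 0.5, while D is ranked second because C exceeds it on both indicators. Extreme single-indicator profiles such as A and B are thus kept visible rather than averaged away.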

2.2.2 Weighting

The weighting of indicators was simply avoided, and this was intentional. Given the multiple unknowns regarding the importance of one demographic factor over another, there was no basis for robust weightings. Weighting would certainly have been a better option for topics that were more familiar and established than social vulnerability research was at that time. However, as some colleagues have responded, even the avoidance of weighting is in itself a certain kind of weighting, because not weighting means equal weighting within the aggregation of input variables or indicators. This may be a problem because it implicitly assumes, for instance, that the age of people has the same value as income. Although ethically this is very questionable, it is used within certain risk assessments which are predominantly quantitative. While there was no intention in the case study to pursue such comparisons, the equal weighting and aggregation of different demographic variables might have led to such an impression. Weighting in this case blends a methodological technique with its perception and acceptance by external observers such as a scientific audience or the users of such assessments.
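The point that not weighting amounts to equal weighting can be checked with a few lines. The sketch below uses a generic weighted average. The indicator values and the alternative weights are hypothetical.

```python
def weighted_index(values, weights):
    """Weighted average of indicator values; weights need not sum to 1."""
    if len(values) != len(weights):
        raise ValueError("values and weights must have the same length")
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(v * w for v, w in zip(values, weights)) / total_weight


# Hypothetical standardised scores for age, income and housing type
profile = [0.8, 0.2, 0.5]

unweighted = sum(profile) / len(profile)           # the 'no weighting' choice
equal_weights = weighted_index(profile, [1, 1, 1])  # identical by construction
age_emphasis = weighted_index(profile, [2, 1, 1])   # a different value judgement

print(unweighted, equal_weights, age_emphasis)
```

The unweighted mean and the equal-weight index coincide, so avoiding weights does not avoid the value judgement; it fixes it at parity between age, income and housing type.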

2.2.3 Subjectivity in statistics

During the development of the social vulnerability index it became evident that, especially during data and parameter selection, subjectivity is inherent even in supposedly objective statistical methods such as factor analysis or logistic regression (Nardo et al. 2005; Fekete 2010).

Factor analysis is a multivariate analysis technique used to identify information packaging by considering the interdependencies between all variables (Bernard 2006: 495). Factor analysis was used in this case study to identify profiles of social groups regarding certain characteristics such as income, gender, or age and to link these groups to context variables such as building type, urban or rural context and medical care. The objective in using factor analysis is to reduce the number of variables and to derive a set of variables that characterise social vulnerability aspects (Fekete 2010: 41). A degree of subjectivity in the selection of the variables and in concurrent steps was observed during this research process and documented (Fekete 2010, 2009c).
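The variable-reduction idea can be sketched in simplified form. The example below extracts only the first factor from a correlation matrix by power iteration, which corresponds to a principal-component style of extraction. It is a swapped-in simplification, not the factor analysis procedure of the case study, which would typically involve several factors and a rotation step in a statistics package. The variable names and toy data are hypothetical.

```python
import math
from statistics import mean, pstdev


def correlation_matrix(columns):
    """Pearson correlation matrix for a list of equally long variable columns."""
    standardised = []
    for column in columns:
        mu, sigma = mean(column), pstdev(column)
        if sigma == 0:
            raise ValueError("a variable without variance cannot be correlated")
        standardised.append([(v - mu) / sigma for v in column])
    n = len(columns[0])
    return [
        [sum(a * b for a, b in zip(zi, zj)) / n for zj in standardised]
        for zi in standardised
    ]


def first_factor_loadings(corr, iterations=500):
    """Loadings on the dominant component, found by power iteration."""
    k = len(corr)
    # Uneven start vector, to avoid starting orthogonal to the dominant direction
    vec = [1.0 / (i + 1) for i in range(k)]
    for _ in range(iterations):
        nxt = [sum(corr[i][j] * vec[j] for j in range(k)) for i in range(k)]
        norm = math.sqrt(sum(x * x for x in nxt))
        vec = [x / norm for x in nxt]
    eigenvalue = sum(
        vec[i] * sum(corr[i][j] * vec[j] for j in range(k)) for i in range(k)
    )
    return [v * math.sqrt(eigenvalue) for v in vec]


# Toy data for six counties (hypothetical variables)
residents_over_65 = [1, 2, 3, 4, 5, 6]
small_households = [2, 4, 6, 8, 10, 12]   # moves together with the first variable
new_buildings = [1, -1, 1, -1, 1, -1]     # largely unrelated to the other two

loadings = first_factor_loadings(
    correlation_matrix([residents_over_65, small_households, new_buildings])
)
print([round(x, 2) for x in loadings])
```

The two variables that move together load strongly on the same factor, while the third loads much more weakly. Which variables enter the matrix in the first place remains the analyst's decision, and replacing or dropping one of them changes the loadings, which is where the subjectivity noted above enters.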

Logistic regression analysis is used to explain differences between groups or to predict membership of groups (Fromm 2005). It was used in this case study to compare the social factors derived by the factor analysis with certain test categories. The test categories for flood impact are, for example, whether or not people had to leave their home and whether they had to seek emergency shelter in a real-case flood in 2002. With these dependent variables, certain independent variables such as age, income or job situation can be compared and tested for statistical significance (Fekete 2010: 52). When using this method, subjectivity is greatest in the initial selection of the input variables. The determination of suitable ‘test categories’, especially regarding social vulnerability, is problematic and more research is needed in this field (Fekete 2010, 2009c).
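The kind of test described above can be sketched as follows. The example fits a logistic regression by plain gradient descent, written out by hand for transparency rather than taken from the statistics package presumably used in the study, and it does not compute significance tests. The single predictor, the outcome and all values are hypothetical.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_probability(bias, weights, x):
    """Predicted probability of the outcome for one feature vector x."""
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))


def fit_logistic(features, outcomes, learning_rate=0.1, epochs=5000):
    """Fit intercept and coefficients by batch gradient descent on the log-loss."""
    n, k = len(features), len(features[0])
    bias, weights = 0.0, [0.0] * k
    for _ in range(epochs):
        grad_bias, grad_weights = 0.0, [0.0] * k
        for x, y in zip(features, outcomes):
            error = predict_probability(bias, weights, x) - y
            grad_bias += error
            for j, xi in enumerate(x):
                grad_weights[j] += error * xi
        bias -= learning_rate * grad_bias / n
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_weights)]
    return bias, weights


# Hypothetical households: standardised age score, and whether they had to leave home
age_score = [[-1.5], [-1.0], [-0.5], [0.0], [0.5], [1.0], [1.5], [-0.2], [0.2]]
had_to_leave = [0, 0, 0, 1, 0, 1, 1, 1, 0]

bias, weights = fit_logistic(age_score, had_to_leave)
print(bias, weights)
```

A positive coefficient indicates that, in this invented sample, a higher age score goes along with a higher predicted probability of having had to leave home. Choosing a different test category or a different set of input variables would change the result, which is the subjectivity the text refers to.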

The selection and composition of input variables was guided by the theoretical concept of vulnerability and the theoretical framework used. This is treated in the respective section below.

2.2.4 Simplicity and transparency

Certain statistical methods may influence the resulting risk index more than is expected; this is the case even for indices such as the Human Development Index (Lüchters and Menkhoff 1996). Therefore, some argue that risk and vulnerability indices should be created to be as simple and transparent as possible (Gall 2007). Especially in the field of applied science with target groups such as the public, media or policy makers, it is argued that simple methods should be emphasised, rather than the ambition of experts to generate more sophisticated methods (Gall 2007). In fact, this demand for simplicity and transparency could foster mutual acceptance of methods across disciplines.

Simplicity and transparency were the guiding principles in the selection of factor analysis, while logistic regression was selected to provide a sound and robust testing methodology for the validity of the identified social vulnerability indicators. Other methods such as Jackknife repetition tests (Backhaus et al. 2006: 454) and bootstrapping analyses with 1,000 repetitions (Moore and MacCabe 2006: 14–27) were used only to underpin this soundness. Simplicity and transparency were pursued when the complex information of several input variables was reduced by factor analysis to a few indicators. These indicators were displayed in the final product, both individually and in a composite form.
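The resampling checks mentioned above can be illustrated generically. The sketch below bootstraps the mean of a sample with 1,000 repetitions and computes leave-one-out (jackknife) means. It shows the two techniques in their simplest form and is not the specific test design of the case study. The sample values, the fixed seed and the percentile interval are assumptions made for illustration.

```python
import random
from statistics import mean


def bootstrap_means(sample, repetitions=1000, seed=0):
    """Means of resamples drawn with replacement, each as large as the sample."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    n = len(sample)
    return [mean(rng.choices(sample, k=n)) for _ in range(repetitions)]


def jackknife_means(sample):
    """Means of the sample with each observation left out once."""
    return [mean(sample[:i] + sample[i + 1:]) for i in range(len(sample))]


def percentile_interval(estimates, level=0.95):
    """Simple percentile interval from a list of resampled estimates."""
    ordered = sorted(estimates)
    lower = int((1 - level) / 2 * len(ordered))
    upper = max(lower, int((1 + level) / 2 * len(ordered)) - 1)
    return ordered[lower], ordered[upper]


# Hypothetical indicator values for ten counties
sample = [0.42, 0.55, 0.38, 0.61, 0.47, 0.50, 0.44, 0.58, 0.36, 0.53]

boot = bootstrap_means(sample)
low, high = percentile_interval(boot)
jack = jackknife_means(sample)
print(low, high, min(jack), max(jack))
```

A narrow bootstrap interval and jackknife means that barely move when single counties are left out suggest that an estimate does not hinge on a few observations.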

As it transpired, the intended transparency was hampered not only by the integration of a range of social aspects of vulnerability in one index. The maps depicting the individual components of the index and the indicators of social vulnerability were also limited in transparency, because they originated from the factor analysis. The factor analysis was used to see which patterns of commonalities arose mainly as a result of the statistical method itself, without too much guidance by the researcher. However, factor analysis leaves open to interpretation what the resulting artificial indicators actually represent.

Advancement of the methodologies was less of an ambition in this study, since its objective was rather to focus on social vulnerability and to document it in a simple and accessible manner. In terms of the use of GIS, typical effects of cartography and spatial statistics have been only very generally analysed using spatial autocorrelation tests (Fekete 2010). Applying ‘Maslow’s hammer’, however, having GIS at hand certainly tempted the author to search mainly for data that could be mapped.
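One widely used spatial autocorrelation statistic is Moran's I, sketched below in its global form. The source does not state which test was applied in the case study, so the choice of Moran's I is an assumption. The four counties along a river and their binary neighbour weights are hypothetical.

```python
def morans_i(values, weights):
    """Global Moran's I for values and a square spatial weights matrix."""
    n = len(values)
    mean_value = sum(values) / n
    deviations = [v - mean_value for v in values]
    weight_sum = sum(sum(row) for row in weights)
    variance_term = sum(d * d for d in deviations)
    if weight_sum == 0 or variance_term == 0:
        raise ValueError("weights and values must both show some variation")
    cross_products = sum(
        weights[i][j] * deviations[i] * deviations[j]
        for i in range(n)
        for j in range(n)
    )
    return (n / weight_sum) * (cross_products / variance_term)


# Four hypothetical counties in a row; 1 marks a shared border
neighbours = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]

clustered = morans_i([1, 1, 5, 5], neighbours)    # similar values side by side
alternating = morans_i([1, 5, 1, 5], neighbours)  # neighbours always differ
print(clustered, alternating)
```

Positive values indicate that neighbouring counties tend to have similar index values, negative values that they tend to differ. Part of a visible map pattern may therefore reflect spatial clustering of the input data rather than independent local evidence.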

‘if all you have is a hammer, everything looks like a nail’

Abraham Maslow

2.3 Challenges within the social vulnerability measurement concept and framework

When considering the issues of concept and frameworks, it seems that questions of quality are more questions of acceptance. For holistic frameworks, the completeness of sectors, factors, reaction chains, scales and context or system environment is key. For frameworks guiding the implementation or operationalisation of a theoretical concept, the objective is to narrow down all aspects to a manageable and simplified level. However, these aspects of quality may be regarded quite differently by different disciplines. The disciplinary focus and the intention of the observer are very influential in the development and application of the frameworks. Although completeness or applicability is one aspect, timeliness and trends in disaster risk science might be even more influential in how the frameworks are actually used or accepted.

2.3.1 Vulnerability as a concept

The vulnerability index aims at expanding the quality of explanation of what is actually measured. Conceptually, vulnerability expands the explanation of risk on the impact side by differentiating three conceptual vulnerability components: exposure, susceptibility and capacities. The theoretical background of the sustainability triangle (Birkmann 2006), socio-ecological systems (Turner et al. 2003) and integrated, multi-disciplinary and multi-sectoral views on different phases of the disaster cycle all contribute to embedding the vulnerability index and its role in the complex picture of disaster risk science. The applicability of the vulnerability index rests on the operationalisation of a complex concept and on the validation of the index. Potential strategies and measures that are derived from the social vulnerability index can be identified first by using the components of vulnerability: exposure, susceptibility or capacities. For instance, exposure could be reduced, or capacities improved. Second, vulnerability is embedded in a theoretical framework wherein the entry points for hazard impact, risk mitigation and preparedness for the different social, economic and ecological spheres are outlined (Fekete 2010: 21). This conceptual ‘corset’ improves the transferability of this approach to other cases and especially improves its integration with other studies on hazard or vulnerability factors. Real transferability and integration with other vulnerability sectors outside of the project, however, would still need to be shown. The limitations and challenges that remain are vast, as the previous sections have shown. Another constraint in the vulnerability approach is its close relationship to certain terminology and schools of thought that are in a state of flux. In the lively field of interdisciplinary disaster science, concepts, terms and ‘buzzwords’ appear and decay frequently. Therefore, the vulnerability index may well soon be superseded by fresher terms.

2.3.2 Operationalisation of vulnerability

Another challenge and achievement within the case study research was the application and operationalisation of vulnerability as a concept. The application of the ‘BBC model’ (Birkmann 2006) on social vulnerability or the ‘Turner et al. model’ (Turner et al. 2003) by the project partner on socio-economic vulnerability has been extensively documented (Fekete 2010; Damm 2010). Both models are descriptive conceptual frameworks that try to explicitly outline aspects of vulnerability and capacities and set them in the context of risks. Despite having the conceptual frameworks at hand, there was still a demand for an in-depth investigation of social vulnerability evidence, conceptual components, theoretical backgrounds, academic disciplines and measurement methods. Unfortunately, conceptual frameworks do not deliver handbooks for operationalisation. Furthermore, the setting and content of vulnerability aspects, data sources and so on vary for every case study. The conceptual splitting of vulnerability into the three widely used components of exposure, sensitivity/susceptibility and capacity (Adger 2006) was helpful in identifying and structuring factors of vulnerability that might otherwise have been overlooked. At the same time, this conceptual distinction has proved a major challenge. This is because susceptibility and capacities, exposure and hazard information, and also vulnerability and risk are difficult to delineate from each other. Referring to the observations made regarding aggregation in the previous sections of this paper, it may also be considered appropriate not to aggregate certain vulnerability components. Conceptual components such as exposure and hazard, or susceptibility and capacities, may contain redundant variables. Instead, each component could be displayed separately and conceived as an individual assessment that focuses on the same disaster topic, but from a different angle and with its own distinctive footprint. This may offer a way to join vulnerability/capacities assessments (Anderson and Woodrow 1998) with modern resilience approaches. Resilience approaches often contain variables and objectives very similar to those of vulnerability approaches (Turner et al. 2003; Tierney and Bruneau 2007). By dispensing with the ambition to aggregate all components in a quantitative way, different components could still be ‘measured’ and quantified, perhaps in a conceptually and methodologically more distinctive and sound manner. The consequence would be a new conceptual understanding of risk that is less integrative. It would conceptually be a more disintegrated or pluralised disaster risk assessment type.

2.3.3 Hazard-independent vulnerability

There is a debate about whether a set of parameters exists that describes social vulnerability independently of the hazard exposure (e.g. Schneiderbauer 2007). Within the project around the case study, it was finally agreed to call these parameters the ‘social susceptibility index’. This index includes susceptibility and capacity parameters. Red colours show areas with prevailing negative demographic profiles, indicating a relatively higher susceptibility. Green areas, on the other hand, indicate regions with prevailing positive factors, or capacities to cope with or adapt to the flood hazard. It was argued that this set of variables can be aggregated and displayed independently of any hazard information. The advantage is that this indicator set can be applied to any flood hazard, be it from small or large rivers, or be extended to coastal floods or flash floods. Demographic susceptibilities and capacities to cope with flood-related hazards can be found among all populations, provided that no specific variables are included that refer only to experiences with rivers or floods. This was the case for the variables used for the factor analysis in this study. This was necessary because no such statistical information was available for such large regions. For statistical validation, however, information from a real flood event could be used. This meant that only those regions that had been exposed to the flood in 2002 were validated (see section below on the validation). With this validation step, those variables that were shown to have had an effect in a real event were retained. Only these were represented in the resulting susceptibility, capacities or vulnerability maps, and therefore they contain demographic information about potential social vulnerability that might be applied to different future flood events.

Adding exposure information to the susceptibility index allows for the calculation of the overall vulnerability index. While this is a plausible approach, it might be argued that the combination of susceptibility and hazard information already represents a risk map. This flood disaster risk would, however, be determined only by a very limited range of hazard information: the flood area of a single flood scenario, an extreme flood event exceeding HQ200. Therefore, the term ‘risk’ was reserved for the integration of various vulnerability indices and hazard information types by all project partners of DISFLOOD.
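The two-step logic described here, a hazard-independent susceptibility index that is then overlaid with exposure information, can be illustrated with a minimal sketch. All function names, variable names and values are hypothetical, and the equal weighting, the min-max rescaling and the multiplication with an exposed share are assumptions made for illustration only; the case study derived and filtered its variables through factor analysis and validation, which this sketch does not reproduce.

```python
from statistics import mean, pstdev


def z_scores(values):
    """Standardise values to mean 0 and standard deviation 1 (needs some variation)."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]


def min_max(values):
    """Rescale values to the range 0..1 relative to the counties in the sample."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


def susceptibility_index(susceptibility_vars, capacity_vars):
    """Hazard-independent index: susceptibility variables add, capacity variables subtract.

    Equal weights are an assumption of this sketch, not the case study's method.
    """
    columns = [z_scores(v) for v in susceptibility_vars.values()]
    columns += [[-z for z in z_scores(v)] for v in capacity_vars.values()]
    return [sum(row) for row in zip(*columns)]


def vulnerability_index(susceptibility, exposed_share):
    """Overlay exposure: relative susceptibility times the exposed share of each county."""
    return [s * e for s, e in zip(min_max(susceptibility), exposed_share)]


# Hypothetical values for three counties A, B and C (not data from the study)
susceptibility_vars = {"share_elderly": [0.18, 0.25, 0.21]}
capacity_vars = {"income_index": [30.0, 22.0, 26.0]}
exposed_share = [0.10, 0.05, 0.00]  # share of settlement area in the flood scenario

ssi = susceptibility_index(susceptibility_vars, capacity_vars)
svi = vulnerability_index(ssi, exposed_share)
for name, s, v in zip("ABC", ssi, svi):
    print(f"county {name}: susceptibility {s:+.2f}, vulnerability {v:.3f}")
```

Because the rescaling is relative to the sample, the least susceptible county scores zero vulnerability regardless of its exposure, and an unexposed county scores zero regardless of its susceptibility. Both effects follow from the assumed aggregation rule rather than from the data, which is one reason why such choices need to be documented alongside the map.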

2.4 Internal evaluation of the vulnerability assessment

There are several ways of evaluating, verifying or validating spatial vulnerability information (Fekete 2009c). It can be done statistically with the same data set using statistical methods, and it can be done using a second, independent data set and testing it with the same method as the first one. Validation can be achieved by going into the field and ‘ground-truthing’ data and observations. However, evaluation is even more complex as it involves how the results are perceived by the end-users and others, whether they be an academic audience or the observed people themselves. This latter type of evaluation is referred to as ‘external acceptance’ and is treated in Sect. 2.5.

2.4.1 Statistics

The validation of the composite index and its components could be achieved by testing the demographic parameters used against a second, independent data set of a real flood event (Fekete 2009c). While this result is valid at the county level within the frame set by the data, methods and concept applied, it may not be applicable at the local level. The limitations of determining social vulnerability at the household level by general demographic factors have been demonstrated by an in-depth study of certain communities on the same river, the Elbe (Steinführer and Kuhlicke 2007). On the other hand, the benefits of comparing social vulnerability assessments at the local level with those at the regional level, using the same framework, have been shown in other cases (Fekete et al. 2010).

The selection of the administrative scale by using the county level was a compromise in data availability. Despite the harmonisation of the demographic data over the area size and population density, the resulting index map still has a tendency to highlight highly urbanised counties. While care has been taken to allow a balanced comparison with rural counties, there still is a tendency for typical social vulnerability parameters to become condensed in urban areas.

Some colleagues share the view that a ‘journal for non-significant results’ is missing. In this regard, the main near-misses of the social vulnerability index were, for example, its failure to prove that not only very old people but also very young people are highly vulnerable. In fact, no significant results could be obtained for young age groups concerning floods in the case study.

2.4.2 Validation by expert opinion

Weighting is a difficult topic, as can be observed in the case study (see previous section). A questionnaire was developed and distributed among fellow flood risk and social vulnerability researchers in order to collect feedback about the social vulnerability variables. Surveys and telephone interviews among experts in disaster risk management and in academia did not progress beyond the pre-testing phase. Too much scepticism was met in comparing one social factor with another (Fekete 2010). Moreover, experts versed in both social vulnerability and historic flood experiences were hard to find. They often did not feel confident enough to generalise local experiences to larger regions, such as all the counties along one major river. The challenges of incorporating expert feedback using Delphi methods, for example, are documented for other similar vulnerability and risk index approaches in Schmidt-Thomé (2006). Expert opinion other than that related to the validation questionnaire and telephone survey is treated in the sections below.

2.5 External acceptance and evaluation of the vulnerability assessment

The acceptance of risk assessments by other scientists, experts, decision makers and the public is very much dependent on the objective of these studies and their target group. This case study is similar to many other spatial large-scale risk assessments. The objective was to provide a general overview, whereas local context-specific studies intend to show vulnerabilities in more detail. Therefore, the selection of the size of the research area and the size of the units of analysis seems to be closely linked to the selection of the methodology and outcome (Fekete et al. 2010). This case study required an overview of whole river basins and an explicit and transparent visualisation of the results. Therefore, maps were selected as a representation method for risks. Risks, however, can be visualised or identified within spatial observation units only in a limited way (Egner and Pott 2010). For instance, county units are simply not precise enough to locate risks which are intrinsically connected to human beings.

2.5.1 Science as a target group

For the primary target group of the assessment, researchers, the objective was to find out whether spatial patterns of flood exposure and social vulnerabilities could be detected in the research area. The methodology had already been applied in other countries such as the USA, and the intention was to transfer this approach to Germany. Moreover, the aim was to find out whether social inequalities or demographic factors in Germany can contribute to disaster risks. At that time, there was great interest in the community in investigating social vulnerability because disasters such as the Indian Ocean tsunami in 2004 and Hurricane Katrina in 2005 revealed that certain social groups suffer more during disasters and afterwards than the general population. Spatial vulnerability analyses and interdisciplinary approaches were of special interest, and complied, for instance, with the Hyogo Framework for Action (HFA 2007). For the interdisciplinary exchange of information about hazards and vulnerabilities, the methodology of using indicators and GIS was found to be very useful in integrating various sources of data and different layers of results. Both qualitative and quantitative data can be merged.

One major criticism from the scientific community of the social vulnerability index focused upon the generalisation of social vulnerability by applying a general set of quantitative indicators to large areas or to whole population groups. Some researchers investigated the usefulness of the vulnerability assessment case study and provided a critique of the methodology, conceptual framework and applicability of the indicators (Schauser et al. 2010; Tapsell et al. 2010; Kuhlicke et al. 2011). One of the studies, comparing social vulnerability assessments in different European countries, suggests that social vulnerability is very context-specific. This study reveals problems associated with using vulnerability indicators at broad scales (Kuhlicke et al. 2011).

There is a long-standing debate between opposing opinions in the disaster science community on bottom-up versus top-down approaches. It is often related to spatial or scale issues (Fekete et al. 2010), and it largely favours qualitative over quantitative approaches. It is also strongly related to the contrast between locally based empirical research and regional overviews based on quantifiable and rather deterministic research methods.

In fact, the multilateral understanding and acceptance of terminology, methods and academic backgrounds between the project partners, as well as dialogue with the research community, proved to be the major challenge facing interdisciplinary research in this case study.

2.5.2 Decision makers as a target group

For the second target group of decision makers, public authorities and flood risk managers, the objective was to provide an easily comprehensible overview of hazards that do not stop at borders. In Germany, this was found to be a specific challenge due to its federal system. Responsibility for disasters in Germany lies at the county level, but also at the level of the Länder (federal states). This meant that the selection of county units and large regional overviews promised to be valuable for disaster risk managers and public authorities. However, few authorities at the local or regional level showed interest, while some expressed scepticism regarding the intentions behind regional overviews (see previous sections in the case study). At the national level, the nature of the federal system means that there is no authority in Germany specifically responsible for flood risk management.

For the target group of politicians, such maps were considered useful in explaining risks in a visually intuitive way. However, this places great responsibility on the map providers to produce correct and validated results. It also carries the risk of misunderstanding or even misuse. In hindsight, the effects of how the map would be used by various end-users were not known or investigated thoroughly. The main objective was to analyse scientifically the usefulness of the approach, to make current conceptual vulnerability frameworks applicable and to identify vulnerability. In order to receive funding and to meet the expectations of applied science, the descriptions of potential ‘end-users’ were a major issue at the outset. However, as the research and the implementation of its results consumed most of the attention, a thorough investigation of the pros and cons of its acceptability and usefulness for the target groups was not conducted.

2.5.3 Perceptions of ‘the vulnerable’—stereotypes?

One major concern related to the social vulnerability index is the stereotyping of ‘the vulnerable’ (Handmer 2003). Maps use, just like other methods, an averaging technique for spatial units. Stereotyping effects due to generalisation and loss of context have been recognised for a long time in geography and ecology (Robinson 1950; Meentemeyer 1989); in other disciplines, however, these hazards may be less familiar. For example, a county displayed in a deep red colour indicating high vulnerability suggests that the whole area of that county is highly vulnerable. This hides the diversity that exists in that county. Producing such maps is very much linked to risk communication, since such maps create widespread public interest and heated discussion. A good deal of care was taken in describing the specific context of society, its demographics and the evidence of river flood damage to people in Germany (Fekete 2009b, 2010). Yet the necessity of relating vulnerability to its context and the transferability of the index remain open questions that the study could not address without testing. A number of other studies have picked up the social vulnerability index and are about to evaluate its usefulness and transferability (Kuhlicke et al. 2011; Tapsell et al. 2010). Its critical evaluation and improvement by other researchers is highly important and welcomed, and it is hoped that a critical review of the index’s limitations will stimulate further developments in this field.

It is not only the decision makers and practitioners who may experience problems with the production of a vulnerability map. Interestingly, the mapped population responds quite differently to vulnerability or risk maps, depending on their cultural background and the purpose behind the maps. In some cases, the residents of a specific locality rejected their map unit being depicted in a red colour that indicated high vulnerability. The reasons for this vary; most people do not like to be stigmatised as ‘victims’, while their neighbours look ‘better’. While this reaction may be found in industrialised countries such as Germany, the reaction may be totally different in countries receiving development aid (Fekete 2009a). In such situations, some residents and politicians may demand ‘their’ map unit to be the ‘most vulnerable’ in order to receive funding for risk management activities.

3 Discussion of general limitations of spatial risk and vulnerability assessments

While this paper presents a descriptive analysis of only one case study of a vulnerability assessment, there are many issues that are valid for other vulnerability and risk assessments.

3.1 Pitfalls of transparency—‘hypothetical’ vulnerability

Even when transparency is achieved with best efforts, it should not be assumed that the information will be understood and used as intended. Transparency has two faces; it may help to understand results more easily but, at the same time, it can produce misunderstandings. Vulnerability maps provide good examples. Maps and GIS allow for the easy visualisation of highly complex issues. This reduction of complexity to visual images has a downside; a lack of knowledge by the audience about mapping effects, or a lack of transparent documentation and descriptions of the map, may lead to a false interpretation. In the worst case, maps may be misunderstood, misused or interpreted as a false reality or even as stigmatising certain areas or groups. A map represents space and observations; it does not aim at reproducing reality. The vulnerability index is based on the data available and is subject to several constraints. These are data constraints, aggregation and disaggregation techniques, and the objectives of the researcher, project partners and the users of the results.

The maps are not intended to suggest that every object within every square metre covered by a colour for high vulnerability is indeed highly vulnerable. The vulnerability depicted by the index map is a ‘relative’ vulnerability, or even more a ‘potential’, ‘hypothetical’, ‘estimated’ or ‘assumed’ vulnerability, as opposed to a ‘revealed’ vulnerability after a disastrous flood event (Fekete 2010).
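The point that a single colour per county conceals the diversity within it can be made concrete with a small numerical sketch. The household scores below are invented for illustration and the 0 to 100 scale is an assumption; the sketch only shows that two units with the same mapped average can differ completely in their internal spread.

```python
from statistics import mean, pstdev

# Hypothetical household-level susceptibility scores (0 = low, 100 = high)
county_a = [50, 50, 50, 50, 50, 50]  # homogeneous population
county_b = [10, 10, 10, 90, 90, 90]  # strongly mixed population


def summarise(scores):
    """Return the average that a map would show and the spread that it conceals."""
    return {
        "mapped_mean": mean(scores),
        "spread": pstdev(scores),
        "lowest": min(scores),
        "highest": max(scores),
    }


summary_a = summarise(county_a)
summary_b = summarise(county_b)
print("county A:", summary_a)
print("county B:", summary_b)
```

Both counties would receive the same colour on an index map, although half of the households in the second county score far above the mapped value and half far below it. Reporting a measure of spread next to the mapped value is one simple way of documenting this limitation.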

Maps of vulnerability may be good communication tools, but only when transparency is sound. Transparency in this sense involves not only the delivery of a good description and legend, but also the level of understanding of the readers of the maps. One solution might be that the producers of the maps address the process of risk communication to the end-users very closely. Another viewpoint may be that even misunderstandings and debates such as ‘stigmatisation’ by maps are better than no discussion about risks at all. In this sense, maps are not neutral, and they motivate people to take action.

3.2 The known unknowns—limitations of prediction

Some major limitations of any hazard or risk map are the unknowns that are not recorded by the maps. For example, earthquake maps display only the ‘known’ part of the information, such as fault lines or historic epicentres. Earthquake maps usually do not show historical earthquakes that occurred before monitoring started. In Germany, earthquake records date back around 500 years. In geological time spans, 500 years is not of great significance. The areas where earthquakes have not occurred during the past 500 years are not depicted as risky. But does this really guarantee that these areas are risk-free? This question has major implications, as many building codes and areas designated for the construction of power plants, for instance, are directed towards a safety target related to a recurrence period of 500 years. This limitation in capturing ‘unknowns’, or the disregard of certain factors simply because information is not available in a suitable form, is a typical feature of any quantitative assessment, and not just confined to earthquakes. Flood recurrence probability is recalculated each time another major flood disaster happens. So-called 100-year floods have occurred in Germany twice within a few years on the same river. This is not just a problem of flood hazard or risk calculation, but also of risk communication.
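The communication problem around ‘100-year floods’ can be illustrated with a short calculation. The sketch below assumes that each year is independent and that the annual exceedance probability is constant at 1/T, which is the textbook reading of a return period of T years and not a statement about how recurrence was calculated for any particular river; the function name is hypothetical.

```python
from math import comb


def prob_at_least(k, years, return_period):
    """Probability of at least k exceedances within `years` years.

    Assumes independent years with a constant annual probability of 1 / return_period
    (a binomial model), which real flood regimes only approximate.
    """
    p = 1.0 / return_period
    below_k = sum(comb(years, i) * p**i * (1 - p) ** (years - i) for i in range(k))
    return 1.0 - below_k


once_in_century = prob_at_least(1, 100, 100)  # at least one 100-year flood in 100 years
twice_in_decade = prob_at_least(2, 10, 100)   # at least two 100-year floods in 10 years
print(f"at least one in 100 years: {once_in_century:.1%}")
print(f"at least two in 10 years:  {twice_in_decade:.2%}")
```

Under these assumptions, a 100-year flood has a chance of roughly 63% of occurring at least once in a century, and two such floods within a decade remain possible, at about 0.4%. The term therefore describes an annual probability and not a guaranteed interval, and any estimate of that probability changes when new events are added to the record.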

The prediction of risk and vulnerability is limited because of the nature of uncertainty and the complexity of certain aspects. Flooding risk is non-linear, non-deterministic and contains chaotic features. This makes it difficult to predict the probability of future events. Predictions about social systems are difficult and hazardous, too (cf. Richardson 2005: 622). Even the usefulness of prediction or forecasting is disputable. For almost any recent disaster, for instance Hurricane Katrina or the events in Japan in 2011, studies emerge in the aftermath that had more or less predicted the disaster. But what impact did these predictions have? Prediction has even more pitfalls, such as the over- or underestimation of risks. The nuclear events in Fukushima in 2011 demonstrated once more that even an event with a calculated risk of less than 1:100,000 may occur twice within one generation, when viewed at the scale of global risk awareness.

3.3 Limitations of place-based risk measurement approaches

The combination of a multitude of vulnerability factors does not rule out that other, non-captured or unknown factors are equally important. Each disaster is different, and the continuous improvement of measurements and of the conceptual lens can only help to approximate, but never to predict with certainty, the extent, timing and characteristics of such events.

As another problem, externalised effects, dynamics and interdependencies are difficult to represent in a specific spatial location. For example, in a typical flood risk map the hazards and vulnerabilities existing within the observed boundaries are captured. The external effects such as upstream hazards are difficult to represent in a given observation unit downstream. Even more challenging are dynamic interdependencies such as where a power failure originating in one region affects remote regions elsewhere, but does not affect regions in-between.

Another feature is ‘inherited uncertainty’. Uncertainties in primary data are inherited by secondary data sets, and this is especially so for spatial approaches using GIS that are characterised by aggregating various sources of data. Uncertainties in the primary data might be ‘visible’ as missing values, ‘cloaked’ when averages are used, or even ‘hidden’ when numbers are based on assumptions, miscalculations or errors. Numbers are generally assumed to be reliable, and this hinders the detection of wrong values. In some instances, spatial data errors can easily be detected. For example, bordering topographic maps from different sources often exhibit discrepancies. The researcher using one data set or map must make an assumption, which will be inherited by the model and, in turn, by the resulting maps.
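One way of keeping inherited uncertainty ‘visible’ when values are averaged for a spatial unit is to carry simple provenance counts alongside the aggregate. The following is a minimal sketch under stated assumptions: the record layout, the labels "observed" and "estimated" and the function name are hypothetical and do not describe the data handling of the case study.

```python
from statistics import mean


def aggregate_with_provenance(records):
    """Average a unit's values while counting missing and estimated inputs.

    Each record is a (value, source) pair; value is None when missing and
    source is "observed" or "estimated" (labels assumed for this sketch).
    """
    present = [(value, source) for value, source in records if value is not None]
    values = [value for value, _ in present]
    return {
        "mean": mean(values) if values else None,
        "n_total": len(records),
        "n_missing": len(records) - len(present),
        "n_estimated": sum(1 for _, source in present if source == "estimated"),
    }


# Hypothetical municipality-level inputs for one county
records = [(12.0, "observed"), (None, "observed"), (15.0, "estimated"), (9.0, "observed")]
county_summary = aggregate_with_provenance(records)
print(county_summary)
```

The average alone would look like any other number on the map; the counts show that one of four inputs was missing and one was itself an estimate. Errors that are ‘hidden’ in the sense used above, such as miscalculations in the primary data, cannot be detected this way.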

4 Outlook

The above-mentioned aspects are just examples of the multitude of challenges associated with the spatial approach to measuring risks. The case study is an example of using a constructed space concept. The vulnerability areas are constructed entities designed for making risk ‘measurable’. This paper acknowledges that such concepts have many constraints. There is a need to advance existing frameworks that concern the role of the researcher or observer, who measures and at the same time imbues the results of what is being measured with perceptions. On the other hand, dealing with disaster risks and vulnerabilities is a practical challenge that often demands pragmatic and applied assessments and management. From a pragmatic point of view, even simplistic spatial assessments should not be abandoned, given that in many areas and for many risks no information at all is available. The value of maps should not be underestimated, as they provide a quick overview and first impression. However, their limitations and pitfalls should be more extensively documented and discussed.

GIS, maps and semi-quantitative indicators are tools for risk analysis and for risk communication. Risk and vulnerability maps, statistics or narratives can be quickly assembled and applied. But a thorough investigation of the content quality and transparent documentation are paramount for their acceptance by other disciplines or audiences. Risk maps or index ranges only provide an interface or a platform for communicating scientific assumptions about risks.

This paper can only be a starting point to trigger discussion. Disaster risk science needs to investigate more about what is important to communicate and how results are perceived by the public and decision makers. There is a dearth of studies and criteria to assess the acceptance of risk assessments. One key question for future investigations could be: How do we assess the quality of how scientists, decision and policy makers, media, and the public understand and perceive risk (and vulnerability) assessments?

Some statements that are useful for future investigations of quality criteria, the pitfalls of policy applications and for streamlining interdisciplinary approaches are:

- There is a demand for studies that assess the quality of their results and the application of these results by the users.
- Numbers and tables are as hazardous as maps in terms of their potential for misunderstandings and stigmatisation by end-users.
- A culture of critique needs to be developed for the review of data, methods and concepts, and more knowledge is necessary about how scientific outcomes can be used or misused.

References

Adger WN (2006) Vulnerability. Glob Environ Change 16:268–281

Anderson MB, Woodrow PJ (1998) Rising from the ashes: development strategies in times of disaster. Lynne Rienner, Boulder

Backhaus K, Erichson B, Plinke W, Weiber R (2006) Multivariate analysemethoden, 11th edn. Springer, Berlin

Benson C, Twigg J (2004) ‘Measuring mitigation’ methodologies for assessing natural hazard risks and the net benefits of mitigation—a scoping study. ProVention Consortium, Geneva

Bernard HR (2006) Research methods in anthropology. Qualitative and quantitative approaches, 4th edn. Altamira Press, Oxford

Birkmann J (ed) (2006) Measuring vulnerability to natural hazards: towards disaster resilient societies. United Nations University Press, Tokyo

Cao C, Lam NSN (1997) Understanding the scale and resolution effects in remote sensing and GIS. In: Quattrochi DA, Goodchild MF (eds) Scale in remote sensing and GIS. Lewis Publishers, Boca Raton, pp 57–72

Cutter SL, Boruff BJ, Shirley WL (2003) Social vulnerability to environmental hazards. Soc Sci Q 84(2):242–261

Damm M (2010) Mapping social-ecological vulnerability to flooding. A sub-national approach for Germany. Graduate Series (3). United Nations University—Institute for Environment and Human Security (UNU-EHS), Bonn

Egner H, Pott A (eds) (2010) Geographische Risikoforschung. Zur Konstruktion verräumlichter Risiken und Sicherheiten. Erdkundliches Wissen 147. Working group book. Stuttgart, Steiner Verlag

Fekete A (2009a) Report on “Disaster preparedness in South Caucasus”, Armenia. PN 07.2050.8-001.00 GTZ, Mar 2009, unpublished. Gesellschaft für Technische Zusammenarbeit (GTZ), Eschborn

Fekete A (2009b) The Interrelation of social vulnerability and demographic change in Germany. In: IHDP open meeting 2009, the 7th international science conference on the human dimensions of global environmental change, Bonn

Fekete A (2009c) Validation of a social vulnerability index in context to river-floods in Germany. Nat Hazards Earth Syst Sci 9:393–403

Fekete A (2010) Assessment of social vulnerability to river-floods in Germany. Graduate Series (4). United Nations University—Institute for Environment and Human Security (UNU-EHS), Bonn

Fekete A, Damm M, Birkmann J (2010) Scales as a challenge for vulnerability assessment. Nat Hazards 55(3):729–747. doi:10.1007/s11069-009-9445-5

Fromm S (2005) Binäre logistische Regressionsanalyse. Eine Einführung für Sozialwissenschaftler mit SPSS für Windows, Bamberger Beiträge zur empirischen Sozialforschung, Schulze, G.; Akremi, L., Bamberg

Gall M (2007) Indices of social vulnerability to natural hazards: a comparative evaluation. PhD dissertation, University of South Carolina

Gibson C, Ostrom E, Ahn TK (1998) Scaling issues in the social sciences. International Human Dimensions Program (IHDP). IHDP Working Paper No 1. Bonn

Handmer J (2003) We are all vulnerable. Aust J Emerg Manage 18(3):55–60

Hewitt K (1983) Interpretations of calamity: from the viewpoint of human ecology (The risks & hazards series, 1). Allen & Unwin Inc., Winchester

(IKSR) Internationale Kommission zum Schutz des Rheins (2001) Rheinatlas. International Commission for the Protection of the Rhine, Koblenz

International Council for Science (2008) A science plan for integrated research on disaster risk: addressing the challenge of natural and human-induced environmental hazards. Paris

International Organization for Standardization (2009) ISO/IEC 31010:2009. Risk management—risk assessment techniques. Geneva

(IRGC) International Risk Governance Council (2008) An introduction to the IRGC risk governance framework. International Risk Governance Council, Geneva

King D (2001) Uses and limitations of socioeconomic indicators of community vulnerability to natural hazards: data and disasters in Northern Australia. Nat Hazards 24:147–156

Kuhlicke C, Scolobig A, Tapsell S, Steinführer A, De Marchi B (2011) Contextualizing social vulnerability: findings from case studies across Europe. Nat Hazards. doi:10.1007/s11069-011-9751-6. Published online 02 Mar 2011

Lüchters G, Menkhoff L (1996) Human development as statistical artefact. World Dev 24(8):1385–1392

Meentemeyer V (1989) Geographical perspectives of space, time, and scale. Landsc Ecol 3(3/4):163–173

Meyer V, Haase D, Scheuer S (2007) GIS-based multicriteria analysis as decision support in flood risk management. Umwelt Forschungs Zentrum (UFZ) Discussion Papers 6/2007. Leipzig

Moore DS, McCabe GP (2006) Introduction to the practice of statistics. Freeman, New York

Nardo M, Saisana M, Saltelli A, Tarantola S, Hoffman A, Giovannini E (2005) Handbook on constructing composite indicators: methodology and user guide. OECD Statistics Working Paper. JT00188147, STD/DOC(2005)3

O’Brien K, Leichenko R, Kelkar U, Venema H, Aandahl G, Tompkins H et al (2004) Mapping vulnerability to multiple stressors: climate change and globalization in India. Glob Environ Change 14:303–313

Openshaw S (1984) The modifiable areal unit problem. Geo Books, Norwich

Richardson K (2005) The hegemony of the physical sciences: an exploration in complexity thinking. Futures 37:615–653

Robinson WS (1950) Ecological correlations and the behavior of individuals. Am Sociol Rev 15(3):351–357

Schauser I, Otto S, Schneiderbauer S, Harvey A et al. (2010) Urban regions: vulnerabilities, vulnerability assessments by indicators and adaptation options for climate change impacts—scoping study. The European Topic Centre on Air and Climate Change (ETC./ACC). ETC./ACC Technical Paper 2010/12. Dec 2010. AH Bilthoven

Schmidt-Thomé P (Ed) (2006) Final report. The spatial effects and management of natural and technological hazards in Europe—ESPON 1.3.1. ESPON—European Spatial Planning Observation Network

Schneiderbauer S (2007) Risk and vulnerability to natural disasters—from broad view to focused perspective. Doctoral thesis. FU Berlin

Simpson DM, Katirai M (2006) Indicator issues and proposed framework for a disaster preparedness index (DPi), Working Paper 06-03, Center for Hazards Research and Policy Development, University of Louisville

Steinführer A, Kuhlicke C (2007) Social vulnerability and the 2002 flood. Country Report Germany (Mulde River). Floodsite Report Number T11–07-08. UFZ Centre for Environmental Research, Leipzig

Tapsell SM, Penning-Rowsell EC, Tunstall SM, Wilson TL (2002) Vulnerability to flooding: health and social dimensions. Philos Trans R Soc A 360:1511–1525

Tapsell S, McCarthy S, Faulkner H, Alexander M (2010) Social vulnerability to natural hazards. CapHaz-Net WP4 Report, Flood Hazard Research Centre—FHRC, Middlesex University, London

Tierney K, Bruneau M (2007) Conceptualizing and measuring resilience: a key to disaster loss reduction. Transportation Research Board of the National Academies, May–June, 2007, pp 14–17

Turner BL, Kasperson RE, Matson PA, McCarthy JJ, Corell RW, Christensen L et al (2003) A framework for vulnerability analysis in sustainability science. Proc Natl Acad Sci USA 100(14):1–6

(UNISDR) United Nations International Strategy for Disaster Reduction Secretariat (2007) Hyogo framework for action 2005–2015: building the resilience of nations and communities to disasters. United Nations International Strategy for Disaster Reduction Secretariat, Geneva

(UNU-EHS/ADRC) United Nations University—Institute for Environment and Human Security, Asian Disaster Reduction Center (2005) Expert workshop. Measuring vulnerability. UNU-EHS, Kobe, Japan. In: Birkmann J (ed) Working Paper No. 1. UNU-EHS, Bonn

Wisner B, Blaikie P, Cannon T, Davis I (2004) At risk—natural hazards, people’s vulnerability and disasters, 2nd edn. Routledge, London

Acknowledgments

The author is especially grateful for the inspirations and critique of Christian Kuhlicke and Annett Steinführer, and the input of ideas and feedback provided by Stefan Kienberger and Niklas Gebert regarding this specific paper. The author is also indebted to the constructive feedback received from anonymous reviewers. This paper summarises experiences and work spanning more than 10 years, and there are numerous other people and institutions to thank for their support, encouragement, funding and ideas.

Cite this article

Fekete, A. Spatial disaster vulnerability and risk assessments: challenges in their quality and acceptance. Nat Hazards 61, 1161–1178 (2012). https://doi.org/10.1007/s11069-011-9973-7

DOI: https://doi.org/10.1007/s11069-011-9973-7