Abstract

In recent years, new remote sensing technologies, such as airborne and terrestrial laser scanning, have improved the detail and quality of topographic information, providing high-resolution, high-quality topographic data over large areas more effectively than other technologies. A new generation of high-resolution (≤3 m) digital terrain models (DTMs) is now available for different areas and is widely used by researchers, offering new opportunities for the scientific community. These data call for the development of a new generation of methodologies for the objective extraction of geomorphic features, such as channel heads, channel networks, bank geometry, debris-flow channels, debris-flow deposits, scree slopes, and landslide and erosion scars. A high-resolution DTM can detect the divergence/convergence of areas related to unchannelized/channelized processes in greater detail than a coarse DTM. In this work, we tested the performance of new methodologies for the objective extraction of geomorphic features related to shallow landsliding processes (landslide crowns) and bank erosion in a complex mountainous terrain. A procedure that automatically recognizes these geomorphic features can offer a strategic tool for mapping natural hazards and can ease the planning and assessment of alpine regions. The proposed methodologies are based on the detection of thresholds derived from the statistical analysis of the variability of landform curvature. The study was conducted on an area located in the Eastern Italian Alps, where an accurate field survey of shallow landsliding and erosive channelized processes and a high-quality set of both terrestrial and airborne laser scanner elevation data are available. The analysis was conducted using a high-resolution DTM and different smoothing factors for landform curvature calculation in order to test the most suitable scale of curvature calculation for the recognition of the selected features.
The results revealed that (1) curvature calculation is strongly scale-dependent, and an appropriate scale for derivation of the local geometry has to be selected according to the scale of the features to be detected; (2) such an approach is useful to automatically detect and highlight the location of shallow slope failures and bank erosion, and it can assist the interpreter/operator in correctly recognizing and delineating such phenomena. These results highlight opportunities but also challenges in fully automated methodologies for geomorphic feature extraction and recognition.


1 Introduction

In recent years, topographic survey techniques such as airborne and terrestrial laser scanning have improved the detail of terrain analysis (Ackerman 1999; Kraus and Pfeifer 2001; Briese 2004; Slatton et al. 2007; Tarolli et al. 2009). A new generation of high-resolution (≤3 m) digital terrain models (DTMs) is now widely available, offering new opportunities for the scientific community to use detailed representations of surface features. Some of the features that can be recognized may reflect erosion/deposition activity due to rainfall and/or tectonics. Their analysis can only be as accurate and detailed as the information derived from the topographic data. In the last few years, high-resolution topography has been used for the characterization and differentiation of landslide morphology and for the determination of the location and distribution of landslide activity (Chigira et al. 2004; McKean and Roering 2004; Glenn et al. 2006; Ardizzone et al. 2007; Booth et al. 2009), for geomorphological mapping of glacial landforms (Smith et al. 2006), and for the recognition of depositional features on alluvial fans (Staley et al. 2006; Frankel and Dolan 2007). Other works highlighted the suitability of high-resolution topography for the characterization of channel bed morphology (Cavalli et al. 2008), for water surface mapping (Höfle et al. 2009), and for the calculation of slope for headwater channel network analysis (Vianello et al. 2009). Trevisani et al. (2009) analyzed a LiDAR-derived DTM using variogram maps to characterize and compare different morphological features, showing that a geostatistical approach applied within a local search window can efficiently synthesize the spatial variability of topography, providing suitable “fingerprints” of surface morphology.

Other authors discussed the critical issues and limits of high-resolution DTMs for the numerical modeling of shallow landslides (Tarolli and Tarboton 2006; Tarolli and Dalla Fontana 2008). In recent years, some researchers have started to use high-resolution topography and landform curvature for channel network extraction (Lashermes et al. 2007; Tarolli and Dalla Fontana 2009; Passalacqua et al. 2010a, b; Pirotti and Tarolli 2010). They proposed semiautomatic procedures able to objectively detect thresholds in topographic curvature for channel network recognition, thus providing a valid alternative to classical methodologies for mapping channel networks. All these LiDAR-based works indicate the effectiveness of high-resolution elevation data and the related landform curvature maps for the analysis of land surface morphology, especially for the recognition of channelized processes. Nevertheless, alpine landscapes contain several other geomorphic features, such as those related to shallow landslides and debris-flow channels. Finding methodologies to automatically recognize these features is a challenge, and such methodologies would be a useful tool for natural hazard mapping and environmental planning in such regions. Shallow landsliding phenomena are critical since in steep, soil-mantled landscapes, they can generate debris flows that scour low-order channels, deposit large quantities of sediment in higher-order channels, and pose a significant hazard (Borga et al. 2002). In the past decades, landslide distribution has been estimated and mapped using GIS-based statistical models (Carrara 1983; Carrara et al. 1991, 1999; Carrara and Guzzetti 1995; Guzzetti et al. 1999). The most relevant advance for landslide hazard analysis is related to the morphometric variables (Pike 1988; Carrara et al. 1991; Carrara and Guzzetti 1995; Montgomery and Dietrich 1994; Pack et al. 1998) and to the estimation of the spatial variation of soil attributes (Moore et al. 1993; Dietrich et al. 1995) derived from digital terrain models (DTMs). In general, different approaches have been proposed to assess landslide hazards: (1) field analysis to recognize areas susceptible to landslides; (2) projection of future patterns of instability through analyses of landslide inventories (DeGraff 1985); (3) statistical quantitative approaches for medium-scale surveys or inventory-based methods (multivariate or bivariate statistical analysis) (Carrara 1983; Carrara et al. 1991; Carrara and Guzzetti 1995; Clerici et al. 2002); (4) stability ranking based on criteria such as slope, lithology, land form, or geologic structure (Hollingworth and Kovacs 1981; Montgomery et al. 1991); (5) physically based or process-based approaches for failure probability analysis (Hammond et al. 1992; Montgomery and Dietrich 1994; Pack et al. 1998; Borga et al. 2002; Crosta and Frattini 2003; Tarolli and Tarboton 2006), together with the modeling of the propagation of landslide phenomena such as falls and flows/avalanches to predict the potentially affected areas (Wieczorek et al. 1999; Iovine et al. 2003).

The aim of this work is to use high-resolution topography to automatically recognize landslide crowns and features related to bank erosion of channels. We tested the methods introduced by Tarolli and Dalla Fontana (2009), Pirotti and Tarolli (2010), and Lashermes et al. (2007), and we propose others based on the statistical analysis of variability to define objective thresholds of landform curvature for feature extraction. We sought the most suitable scale of curvature calculation for the recognition of the selected features. We suggest a guideline for such applications, also identifying the limits of, and future challenges for, automated methodologies for geomorphic feature extraction from high-resolution topography.

2 Study area

The study area, part of the Rio Cordon basin, covers 0.2 km², and it is located in the Dolomites, a mountain region in the Eastern Italian Alps (Fig. 1). The elevation ranges from 1,969 to 2,205 m above sea level (a.s.l.) with an average of 2,064 m a.s.l. The slope angle is 27.4° on average, with a maximum of 69.6°.

The area has a typical Alpine climate with a mean annual rainfall of about 1,100 mm. Precipitation occurs mainly as snowfall from November to April. Runoff is dominated by snowmelt in May and June, and summer and early autumn floods represent an important contribution to the flow regime. Several shallow landslides were triggered on steep screes at the base of cliffs during summer storm events (Tarolli et al. 2008). Soil thickness varies from 0.2–0.5 m on topographic spurs to as much as 1.5 m in topographic hollows. Vegetation covers 96.1% of the area and consists of high-altitude grassland (89.7% of the area) and sporadic tall forest (6.4%). The remaining 3.9% of the area is unvegetated talus deposits. Several field surveys were conducted during the past few years, including a LiDAR survey (data acquired during snow-free conditions in October 2006). The shallow landslides in the study area were documented by repeated surveys based on DGPS (differential global positioning system) ground observations in the period 1995–2001 (Dalla Fontana and Marchi 2003) and in summer 2008–2009. A further field survey was carried out in June 2010. Analysis of these data indicates that small, shallow debris-flow scars heal rapidly and that they are difficult to detect after as few as 3–4 years. About 68% of the surveyed landslides were triggered by a very intense and short-duration storm on September 14, 1994 (Lenzi 2001; Lenzi et al. 2004). The storm, with a duration of 6 h, caused the largest flood recorded during 20 years of observation on the Rio Cordon basin. Due to the short duration of the storm, few slope instabilities were observed on entirely soil-covered slopes, while several landslides were triggered on slopes just below rocky outcrops (Tarolli et al. 2008). An important new sediment source was formed on May 11, 2001, during an intense snowmelt event without rainfall following a snowy winter (Lenzi et al. 2003, 2004).
Soil saturation mobilized a shallow landslide (L1 in Figs. 1, 2a) covering an area of 1,905 m², which then turned into a mud flow moving along a small tributary (Fig. 2b). A 4,176 m³ debris fan was formed at the confluence with the Rio Cordon, supplying the main channel with fine sediments to be transported downstream (Lenzi et al. 2003). This new landslide area (Fig. 2a), triggered in 2001, is considered in this work; it is located in a neighboring area of the Rio Col Duro basin where steep slopes, a narrow valley, and ancient landslide deposits are present as well. Along the small tributary affected by the mud flow of 2001, several shallow landslides were mapped (L2, L3 in Figs. 1, 2b); in general, the whole tributary should be considered a likely unstable region since different slope failures were observed in the field during the last survey of early June 2010. During the same field campaign, other slope instabilities were also found close to the other two main landslide areas and along the channels.

2.1 LiDAR data specifications and DTM interpolation

The LiDAR data and high-resolution aerial photographs were acquired in October 2006 using an ALTM 3100 OPTECH and Rollei H20 Digital camera from a helicopter flying at an average altitude of 1,000 m above ground level during snow-free conditions. The flying speed was 80 knots, the scan angle 20°, and the pulse rate 71 kHz. The survey point density was specified to be greater than 5 points/m², recording up to 4 returns, including first and last. LiDAR point measurements were filtered into returns from vegetation and bare ground using the Terrascan™ software classification routines and algorithms. The absolute vertical accuracy, evaluated by a direct comparison between LiDAR and ground DGPS elevation points, was estimated in flat areas to be less than 0.3 m, an acceptable value for LiDAR analyses in the field of geomorphology (McKean and Roering 2004; Glenn et al. 2006; Frankel and Dolan 2007; Tarolli and Dalla Fontana 2009; Pirotti and Tarolli 2010). High-resolution digital aerial photographs at a resolution of 0.15 m were also collected during the same flight. The LiDAR bare ground data set was used to generate a DTM at 0.5 m resolution using the natural neighbor interpolator (Sibson 1981). Since a rougher and more realistic representation of morphology can also detect the smaller convergences/divergences that are critical for the recognition of morphological features (Pirotti and Tarolli 2010), we decided to use the natural neighbor interpolator in order to retain a rougher morphology, avoiding the smoothing effects of other methodologies such as spline or kriging.

3 Methods

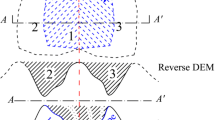

3.1 Landform curvature

Evans (1972, 1979, 1980) considers five terrain parameters that may be defined for any two-dimensional continuous surface. These correspond to groups of 0-, 1st-, and 2nd-order differentials, where the 1st- and 2nd-order functions have components in the xy and orthogonal planes. The DTM surface is approximated by a bivariate quadratic function of the form (Evans 1979):

\[ Z = ax^{2} + by^{2} + cxy + dx + ey + f \qquad (1) \]

where x, y, and Z are local coordinates, and a to f are quadratic coefficients. The coefficients in Eq. (1) can be solved within a moving window using simple combinations of neighboring cells. Other methods have also been proposed for the calculation of the various terrain parameters (e.g., Horn 1981; Travis et al. 1975; Zevenbergen and Thorne 1987). However, Evans’ (1979) method is one of the most precise, at least for first-order derivatives (Shary et al. 2002). While it might not be the best method for all applications, it performs well in the presence of elevation errors (Albani et al. 2004; Florinsky 1998). The standard method to solve Eq. (1) involves calculating the parameters of a central cell and its eight neighbors in a moving 3 × 3 cell window. The purpose of this fitting is to enable the easy calculation of the first and second derivatives of the surface, and these values can be used to calculate slope, aspect, and various curvatures. To perform terrain analysis across a variety of spatial scales, Wood (1996) solved the bi-quadratic equation using an n × n window with a local coordinate system (x, y, z) defined with the origin at the pixel of interest (central pixel).

For the purpose of this work, we decided to use landform curvature as a tool to recognize in detail the main geomorphic features related to erosion processes. Curvature is a second spatial derivative of the terrain elevations. It is one of the basic terrain parameters described by Evans (1979) and is commonly used in digital terrain analysis. In general, the most appropriate curvature form depends on the nature of the surface patch being modeled: computational and interpretive simplicity may dictate a single measure for an entire DTM. The two most frequently calculated forms are profile and plan curvature (Gallant and Wilson 2000). Profile curvature is the curvature of the surface in the steepest down-slope direction. It describes the rate of change of slope along a profile in the surface and may be useful to highlight convex and concave slopes across the DTM. Plan curvature is the curvature of a contour drawn through the central pixel. It describes the rate of change of aspect in plan across the surface and may be useful to define ridges, valleys, and slopes along the side of these features. Since these two measures involve the calculation of the slope vector, they remain undefined for quadratic patches with zero gradient (i.e., the planar components d and e are both zero). In such cases, alternative measures independent of slope and based solely on surface geometry need to be substituted. Evans (1979) suggests two measures, maximum curvature (convexity) and minimum curvature (concavity):

\[ C_{\max} = -a - b + \sqrt{(a - b)^{2} + c^{2}} \qquad (2) \]

\[ C_{\min} = -a - b - \sqrt{(a - b)^{2} + c^{2}} \qquad (3) \]

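Calculation of the first and second derivatives using a local window is scale-dependent. The derived parameters are only relevant to the resolution of the DTM and the neighboring cells used for calculation. Wood (1996) proposed a multiple-scale parameterization by generalizing the calculation for different window sizes. Equations (2) and (3) become:

\[ C_{\max} = k \cdot g \left( -a - b + \sqrt{(a - b)^{2} + c^{2}} \right) \qquad (4) \]

\[ C_{\min} = k \cdot g \left( -a - b - \sqrt{(a - b)^{2} + c^{2}} \right) \qquad (5) \]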

where g is the grid resolution of the DTM and k is the size of the moving window.
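
As an illustration of Eqs. (1) and (4), the following Python sketch fits the quadratic to a single k × k window by ordinary least squares and evaluates the generalized maximum curvature. It assumes the sign convention C max = k·g·(−a − b + √((a − b)² + c²)), in which positive values mark convex (ridge-like) cells, and a square window with an odd number of cells (k ≥ 3). The function names `fit_quadratic` and `c_max` are hypothetical and are not taken from the software used in this study.

```python
import math

def fit_quadratic(window, g):
    """Least-squares fit of Z = a*x^2 + b*y^2 + c*x*y + d*x + e*y + f to a
    square k x k window of elevations (k odd), cell size g, origin at the centre.
    The window is symmetric about the origin, so odd moments vanish and the
    normal equations split into small independent systems."""
    k = len(window)
    h = k // 2
    n = k * k
    sx2 = sx4 = sx2y2 = 0.0
    sz = sx2z = sy2z = sxyz = sxz = syz = 0.0
    for r, row in enumerate(window):
        y = (h - r) * g                  # first row is taken as the northern edge
        for col, z in enumerate(row):
            x = (col - h) * g
            sx2 += x * x
            sx4 += x ** 4
            sx2y2 += x * x * y * y
            sz += z
            sx2z += x * x * z
            sy2z += y * y * z
            sxyz += x * y * z
            sxz += x * z
            syz += y * z
    c = sxyz / sx2y2
    d = sxz / sx2
    e = syz / sx2                        # sum(y^2) equals sum(x^2) on a square window
    # a, b and f are coupled; solve for (a - b) and (a + b) separately.
    diff = (sx2z - sy2z) / (sx4 - sx2y2)
    total = (sx2z + sy2z - 2.0 * sx2 * sz / n) / (sx4 + sx2y2 - 2.0 * sx2 ** 2 / n)
    a = 0.5 * (total + diff)
    b = 0.5 * (total - diff)
    f = (sz - sx2 * total) / n
    return a, b, c, d, e, f

def c_max(window, g):
    """Generalized maximum curvature for one window (assumed form of Eq. 4)."""
    k = len(window)
    a, b, c, _, _, _ = fit_quadratic(window, g)
    return k * g * (-a - b + math.sqrt((a - b) ** 2 + c ** 2))

# Synthetic ridge Z = -x^2 sampled on a 5 x 5 window of a 0.5 m grid.
g = 0.5
ridge = [[-((col - 2) * g) ** 2 for col in range(5)] for _ in range(5)]
print(round(c_max(ridge, g), 6))
```

Applying `c_max` to every cell of a DTM with the window centred on that cell would give one curvature map per kernel size; the closed-form solution is used here only to keep the sketch free of external libraries.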

Equations (4) and (5) have been used for multi-scale terrain analysis (Wilson et al. 2007) and for morphometric feature parameterization (Eshani and Quiel 2008). A mean curvature derived from these two formulae was used by Pirotti and Tarolli (2010) for channel network extraction. Cavalli and Marchi (2008) applied the same generalization procedure to the plan curvature formulation for the characterization of surface morphology. In our case study, landslide crowns and features related to bank erosion correspond to convex slope breaks forming ridges within likely unstable areas. Since ridges are related to surface convexity, for this work we decided to use C max (Eq. 4) as optimal for feature recognition.

A progressively increasing moving window size was then considered for the calculation of curvature, in order to incorporate the majority of scale variations. The curvature was calculated using kernels of 3 × 3, 5 × 5, 7 × 7, 9 × 9, 15 × 15, 21 × 21, 23 × 23, 25 × 25, 29 × 29, 31 × 31, and 33 × 33 cells on the 0.5-m resolution DTM. We used these analyses to test the effectiveness of landform curvature maps with different smoothing factors for feature extraction. We did not consider moving windows greater than 33 cells (~16.5 m) because they result in an overly smoothed surface, which is less effective at reproducing suitable and detailed surface morphology.

3.2 Feature extraction

For the extraction of the analyzed features, we considered four methodologies based on the statistical analysis of the variability of C max: (1) multiples of the curvature standard deviation (as proposed by Tarolli and Dalla Fontana (2009) for channel head recognition and channel network extraction), (2) interquartile range, (3) median absolute deviation (MAD), and (4) quantile–quantile plot (Q–Q plot) defining a threshold of curvature (as proposed by Lashermes et al. 2007). These statistical parameters were evaluated for each curvature map calculated with different moving windows (Table 1). The extracted features were then filtered, selecting only those areas with slope values greater than 35°. We adopted this slope threshold because, according to laboratory analysis (Borga et al. 2002; Tarolli et al. 2008), it corresponds to the internal friction angle at which most of the slope instabilities in the area occur. For slope evaluation, we used the same background theory and kernels used for curvature calculation (Wood 1996) in order to guarantee consistency in the analysis.
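
The slope filter can be sketched as follows. The sketch assumes that slope is taken from the first-order coefficients d and e of the fitted quadratic as arctan(√(d² + e²)), a common formulation in the Evans and Wood framework, and that rasters are stored as nested lists. The helper names and the toy values are hypothetical.

```python
import math

def slope_degrees(d, e):
    """Slope angle from the first-order coefficients of the fitted quadratic."""
    return math.degrees(math.atan(math.hypot(d, e)))

def filter_by_slope(feature_mask, slope_deg, min_slope=35.0):
    """Keep extracted cells only where slope exceeds the threshold (35 degrees here)."""
    return [[bool(f) and s > min_slope for f, s in zip(frow, srow)]
            for frow, srow in zip(feature_mask, slope_deg)]

# Toy 2 x 2 example: extracted cells and their slope angles in degrees.
features = [[True, True], [False, True]]
slopes = [[40.0, 20.0], [50.0, 36.5]]
filtered = filter_by_slope(features, slopes)
print(filtered)
```

Only cells that are both extracted and steeper than the threshold survive, so a steep cell that was never extracted stays unmarked.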

3.2.1 Standard deviation

Tarolli and Dalla Fontana (2009) used threshold ranges identified as m-times the standard deviation (σ) of curvature as an objective method for hollow morphology recognition and channel network extraction from high-resolution topography. In our case, we consider m-times the σ of C max. The method can be summarized as follows:

\[ C_{\max} > m\,\sigma_{C_{\max}} \qquad (6) \]

where m is a real number.
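
A minimal sketch of this thresholding is given below. It assumes that the curvature raster is held as a nested list and that the population standard deviation is intended (whether the sample or the population form was used is not stated); `sigma_mask` is a hypothetical name and the values are invented for illustration.

```python
import statistics

def sigma_mask(cmax, m):
    """Flag cells whose C_max exceeds m times the standard deviation of the raster."""
    values = [v for row in cmax for v in row]
    thr = m * statistics.pstdev(values)
    return [[v > thr for v in row] for row in cmax], thr

# Toy curvature raster with one strongly convex cell.
cmax = [[0.0, 0.0], [0.0, 4.0]]
mask, thr = sigma_mask(cmax, 1.5)
print(mask, round(thr, 3))
```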

3.2.2 Interquartile range

Interquartile range (IQR) is a measure of statistical dispersion, being equal to the difference between the third and first quartiles. The IQR is essentially the range of the middle half (50%) of the data. Because it uses the middle 50%, the IQR is less affected by outliers or extreme values. The method identifies threshold values as m-times the IQR of C max, and it can be summarized as follows:

\[ C_{\max} > m\,{\text{IQR}}_{C_{\max}} \qquad (7) \]

where

\[ {\text{IQR}}_{C_{\max}} = Q_{3} - Q_{1} \qquad (8) \]

and \( Q_{3} \) and \( Q_{1} \) are the third and first quartiles.
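
The IQR-based threshold can be illustrated with the Python standard library. The sketch assumes the default "exclusive" quartile method of `statistics.quantiles`; other quartile conventions give slightly different values, and the convention used in this study is not stated. `iqr_threshold` is a hypothetical name and the values are invented.

```python
import statistics

def iqr_threshold(values, m):
    """Return m * (Q3 - Q1) using the default quartile method of statistics.quantiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    return m * (q3 - q1)

cmax_values = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
thr = iqr_threshold(cmax_values, 1.5)
extracted = [v for v in cmax_values if v > thr]
print(thr, extracted)
```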

3.2.3 Mean absolute deviation

The mean absolute deviation (MAD) of a data set is the average of the absolute deviations from the mean, and it is a summary statistic of statistical dispersion. It is a more robust estimator of scale than the sample variance or standard deviation since it is more resilient to outliers in a data set. In the standard deviation, the distances from the mean are squared, so on average, large deviations are weighted more heavily, and thus outliers can heavily influence it. In the MAD, the magnitude of the distances of a small number of outliers is irrelevant. The formula is expressed as follows:

\[ {\text{MAD}}_{C_{\max}} = \frac{1}{n} \sum_{i=1}^{n} \left| C_{\max,i} - \overline{C_{\max}} \right| \qquad (9) \]

where \( \overline{{C_{\max}}} \) is the mean of C max over the whole data set and n is the number of values. The adopted method for feature extraction identifies threshold ranges as m-times the MAD of C max, and it can be summarized as follows:

\[ C_{\max} > m\,{\text{MAD}}_{C_{\max}} \qquad (10) \]
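
The sketch below computes the mean absolute deviation about the mean, as defined in this section, next to the median absolute deviation about the median named in Sect. 3.2, so that the effect of a single extreme value on each can be compared. Both function names are hypothetical, and the sample values are invented for illustration.

```python
import statistics

def mean_abs_dev(values):
    """Average absolute deviation from the mean."""
    mu = statistics.fmean(values)
    return statistics.fmean(abs(v - mu) for v in values)

def median_abs_dev(values):
    """Median absolute deviation from the median."""
    med = statistics.median(values)
    return statistics.median(abs(v - med) for v in values)

# Four ordinary values and one extreme value.
sample = [1.0, 2.0, 3.0, 4.0, 100.0]
print(mean_abs_dev(sample), median_abs_dev(sample))

# Threshold in the form m * MAD, here with the mean-based statistic.
m = 2.0
thr = m * mean_abs_dev(sample)
print(thr)
```

With these invented values, the mean-based statistic is pulled upward by the single extreme value, while the median-based one is not, which is the behaviour usually meant when a deviation measure is described as robust.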

3.2.4 Quantile–quantile plot

The quantile–quantile plot (Q–Q plot) is a probability plot in which a variable (in our case landform curvature values, C max) is plotted against the standard normal deviate of the same exceedance probability. The deviation from a straight line indicates a deviation of the probability density function (pdf) from Gaussian. In the work of Lashermes et al. (2007) and Passalacqua et al. (2010a, b), Q–Q plots of landform curvature were used to objectively define curvature thresholds for channel network extraction. They suggested that the deviation from the normal distribution records an approximate break in which higher curvature values delineate well-organized valley axes and lower (but still positive) values record the disordered occurrence of localized convergent topography. In this work, we suggest that the deviation from the normal distribution recorded in the C max Q–Q plot (on the positive side, which, following Evans’ approach, corresponds to ridges and therefore to divergent topography) represents the likely threshold for feature extraction.

The adopted method identifies threshold ranges as m-times the Q–Q plot\(_{\text{thr}}\), and it can be summarized as follows:

\[ C_{\max} > m\,{\text{Q–Q plot}}_{\text{thr}} \qquad (11) \]

where the term “thr” refers to the break in the plot. Figure 3 shows an example of a Q–Q plot (Fig. 3b) for the curvature raster evaluated using a 9 × 9 moving window (Fig. 3a). In this example, the threshold for feature extraction (the break in the positive tail of the plot) corresponds to a C max value of 0.60832 (~0.61) m⁻¹. In Fig. 3c, features extracted using this threshold are shown.
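
The points of such a plot can be computed as in the sketch below. It assumes the plotting positions (i − 0.5)/n, one of several conventions in use, and reports each value's departure from the Gaussian reference line built from the sample mean and standard deviation. How the break itself was located is not specified here, so the sketch does not attempt to detect it; `qq_points` is a hypothetical name and the values are invented.

```python
from statistics import NormalDist, fmean, pstdev

def qq_points(values):
    """Return (standard normal deviate, value, departure from the Gaussian line)
    for each value, in ascending order of value."""
    data = sorted(values)
    n = len(data)
    mu, sd = fmean(data), pstdev(data)
    std_normal = NormalDist()
    points = []
    for i, v in enumerate(data):
        z = std_normal.inv_cdf((i + 0.5) / n)   # assumed plotting position
        points.append((z, v, v - (mu + sd * z)))
    return points

# Invented curvature values with a heavy positive tail.
for z, v, dep in qq_points([-0.2, -0.1, 0.0, 0.1, 0.9]):
    print(f"{z:6.3f} {v:5.2f} {dep:6.3f}")
```

A growing departure in the positive tail is the kind of deviation from the straight line that the threshold is read from.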

4 Results and discussion

In Fig. 4, maps of C max obtained with kernels of 3 × 3, 9 × 9, 15 × 15, and 29 × 29 cells are shown. These maps illustrate the effects of different window sizes on curvature calculation. The smallest window size (3 × 3, Fig. 4a) does not seem suitable for a good recognition of surface features, since only a limited area is investigated. With the 3 × 3 kernel, only the 8 nearest neighbors of each pixel are used for curvature calculation; therefore, the analysis is sensitive to any surface change caused by 1-m scale features (rocks, boulders, and non-significant morphology). Wider windows decrease such noise, resulting in a better recognition of surface morphology (Fig. 4b, c): ridges (which correspond to positive C max, red in the map) are recognized in detail. Larger windows, such as 29 × 29 cells (Fig. 4d), are less representative since the curvature map is too smooth. With such window sizes, the investigated area is too large, and morphological features located far from erosion areas may be included in the curvature analysis, leading to misinterpretation of erosional features. Based on these visual considerations, the best results are obtained with a kernel that is neither too small nor too large. These considerations are similar to those of Pirotti and Tarolli (2010), who suggest that the window size for curvature calculation has to be related to the size of the features to be detected. These authors suggest that the best ratio between information and noise, and thus the best results for channel network recognition, is found when the window size is about two to three times the maximum size of the investigated features.

Figure 5 shows features extracted using threshold values of 1, 2, 3, and 4 times the Q–Q plot\(_{\text{thr}}\). The curvature is calculated with a 9 × 9 moving window. We show these examples to illustrate the effect of the thresholding operation on geomorphic feature extraction.

A progressive increase in the value of m (Eq. 11) leads to better ridge recognition and a progressive decrease in noise on hillslopes. However, larger values of m also cause a progressive loss of information in the extracted features. A similar trend is also obtained with the other cutoff methodologies (Eqs. 6, 7, 10) proposed in this work.

4.1 Accuracy assessment of feature extraction

Understanding how to choose the best algorithm for a particular task is one of the keys to feature extraction. This section discusses how to select and evaluate algorithms and addresses how these choices can affect performance. The final product to test is a raster map segmented into two classes: during the thresholding process, individual pixels on the maps are marked as ‘object’ pixels (feature) if their value satisfies the thresholding formulation and as ‘non-object’ pixels otherwise.

Two significant statistical errors may occur during this classification process: type I error and type II error. The context is that there is a “null hypothesis” that corresponds to a default situation (in our case, that an area is not related to an erosional feature). The “alternative hypothesis” is, in our case, that the area is indeed related to an erosion process. The goal is to determine whether the extraction methodology has correctly discarded the null hypothesis in favor of the alternative. If the methodology rejects the null hypothesis when it is in fact true (non-erosion areas labeled as erosional features), a type I error (false positive) occurs; if it accepts the null hypothesis when it is false (actual erosional elements marked as non-erosional features), a type II error (false negative) occurs.

To assess how well the results represent the ground truth, the polylines of the landslide crowns and instability areas, digitized in the field by DGPS, were converted to a raster with a buffer of 2 m on each side, for a total width of 4 m. This buffer size was chosen because it soundly envelops the convex slope breaks around the erosion locations and accommodates the likely shift (<1 m) of the DTM with respect to the GPS ground survey due to horizontal accuracy. The resulting raster was used as the reference and compared with each of the results derived from each of the twelve window sizes and multiples of the \( \sigma_{{C_{\max } }} \), \( {\text{IQR}}_{{C_{\max } }} \), \( {\text{MAD}}_{{C_{\max } }} \) and Q–Q plot\(_{\text{thr}}\) as thresholds. The matching extracted areas are defined as true positives (TP), underlining the fact that the extraction method has correctly detected the features (the null hypothesis has been correctly discarded), while the non-matching extracted areas are considered false positives (FP) because the extracted features are not correct. The areas within the buffer that are not extracted by the methods are interpreted as false negatives (FN).
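
The comparison with the buffered reference can be sketched as follows. The sketch assumes a cell-by-cell comparison of boolean rasters and a circular buffer expressed in cells (2 m on a 0.5 m grid would be a radius of 4 cells; the toy example uses 1 cell). The function names and the toy rasters are hypothetical.

```python
def buffer_mask(mask, radius_cells):
    """Grow every True cell into a disc of the given radius (in cells)."""
    rows, cols = len(mask), len(mask[0])
    out = [[False] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            if not mask[i][j]:
                continue
            for di in range(-radius_cells, radius_cells + 1):
                for dj in range(-radius_cells, radius_cells + 1):
                    ii, jj = i + di, j + dj
                    inside = 0 <= ii < rows and 0 <= jj < cols
                    if inside and di * di + dj * dj <= radius_cells ** 2:
                        out[ii][jj] = True
    return out

def confusion_counts(extracted, reference):
    """Count true positives, false positives and false negatives cell by cell."""
    tp = fp = fn = 0
    for erow, rrow in zip(extracted, reference):
        for e, r in zip(erow, rrow):
            if e and r:
                tp += 1
            elif e:
                fp += 1
            elif r:
                fn += 1
    return tp, fp, fn

# Toy example: a surveyed line along the middle row, buffered by one cell.
line = [[False] * 5, [False] * 5, [True] * 5, [False] * 5, [False] * 5]
reference = buffer_mask(line, 1)
extracted = [[False] * 5,
             [True, True, False, False, False],
             [True, True, True, False, False],
             [False] * 5,
             [False, False, False, False, True]]
print(confusion_counts(extracted, reference))
```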

A quality evaluation of performance aims at finding the cutoff value that gives the best result according to the user’s expectations and that reduces the two types of potential mistakes (FP and FN) in the semiautomatic process (Lee et al. 2003). According to Heipke et al. (1997), the “goodness” of the final extraction results can be measured through an index (Eq. 12) that takes into account both the percentage of the reference data that is explained by the extracted areas and the percentage of correctly extracted features:

\[ \text{Quality} = \frac{\text{TP}}{\text{TP} + \text{FP} + \text{FN}} \qquad (12) \]

Quality therefore varies between 0, for an extraction with no overlap between extracted and observed features, and the optimum value of 1, for an extraction where these coincide perfectly. This kind of evaluation is not meant to assess the extraction and the matching results in an absolute way; rather, it is used to compare the results of different algorithms (Heipke et al. 1997).
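
A short sketch of this index follows, assuming the quality measure of Heipke et al. (1997) in its usual form, TP / (TP + FP + FN), with completeness TP / (TP + FN) and correctness TP / (TP + FP) as the two percentages it combines. The function name is hypothetical, and the counts are invented for illustration; they are not results of this study.

```python
def quality_measures(tp, fp, fn):
    """Completeness, correctness and quality from cell (or area) counts."""
    completeness = tp / (tp + fn) if tp + fn else 0.0
    correctness = tp / (tp + fp) if tp + fp else 0.0
    quality = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return completeness, correctness, quality

# Invented counts: 50 matching cells, 30 spurious cells, 20 missed cells.
print(quality_measures(50, 30, 20))
```

Because false positives and false negatives both enter the denominator, the quality value can never exceed either completeness or correctness.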

The evaluation of cutoff methodologies has been done considering quality results that have been compared according to the following factors:

- kernel size used for slope evaluation (\( k_s \)) (Fig. 6a)
- kernel size used for curvature evaluation (\( k_c \)) (Fig. 6b)
- choice of m for thresholding (Fig. 6c)

In terms of overall accuracy, all the methodologies classify the features most precisely when the maps are filtered using slope evaluated with a \( k_s \) of 5 (2.5 m); as \( k_s \) increases, the accuracy of pixel segmentation decreases (Fig. 6a). Regarding the choice of \( k_c \), the best performance is obtained when the kernel size for curvature evaluation reaches 10.5 m: quality is optimal for window sizes that are neither too small nor too large (Fig. 6b).

Regarding the choice of threshold (m), the segmentation algorithms using \( \sigma_{{C_{\max } }} \), \( {\text{IQR}}_{{C_{\max } }} \), and Q–Q plot\(_{\text{thr}}\) as measures of variability give almost the same optimal results when m ranges between 1.25 and 1.75 (Fig. 6c). When \( {\text{MAD}}_{{C_{\max } }} \) is used as the threshold, similar results are obtained for m ranging from 2 to 2.5 (Fig. 6c).

The best quality value over all combinations of \( k_s \), \( k_c \), and m is 0.21, and it is obtained with 1.5 \( {\text{IQR}}_{{C_{\max } }} \) on a 10.5 m kernel (\( k_c \) of 21), filtered using slope evaluated with \( k_s \) equal to 5. The other algorithms provide similar results (Fig. 6c), since their threshold values for feature extraction are similar (Table 1). The best results always refer to curvature maps evaluated with \( k_c \) equal to 21 and filtered according to slope evaluated with \( k_s \) of 5.

Figure 7a shows the map of features surveyed in the field, while Fig. 7b shows the features extracted under the best performance of our methodologies. In Fig. 7b, the features (red arrows) related to shallow landslide crowns and bank erosion are correctly labeled. In contrast, at the top of the study area, where the morphology is complex due to the presence of erratic boulders, our methodologies tend to recognize features where convex slope breaks are not related to instability. This explains the low value of the quality index (0.21).

Fig. 7 (a) Surveyed features, shown for comparison, and (b) geomorphic features corresponding to the best extraction among all proposed methodologies (threshold value of 1.5 IQR of C max calculated with a 21 × 21 cell moving window); red arrows indicate the extracted features that represent the main slope instabilities investigated

These results show that completely automated feature recognition is not fully reliable in areas with complex morphology. Nevertheless, this methodology can be considered a first and relatively fast approach to slope instability mapping when using high-resolution topography. As Fig. 7b shows, even if a significant number of false positives are detected, it is easy to discriminate the areas where the main instabilities are located, since these clearly show organized patterns of discontinuity, very similar to the investigated features (Fig. 7a).

5 Conclusions

This work analyzed four objective methodologies for the recognition of landslide crowns and bank erosion in a complex mountainous terrain through landform curvature. The methodologies use the statistical analysis of the variability of landform curvature to define a likely threshold for feature extraction. We also tested the suitability of different smoothing factors for landform curvature calculation and suggested the minimal standards required for such analyses. The extracted features were then compared with the field-surveyed features.

The results indicate that a threshold value of 1.5 IQR of maximum curvature (C max), calculated with a 21 × 21 cell moving window (10.5 m wide), is the best for the extraction of the analyzed features. These results are consistent with the considerations suggested in previous works on channel network extraction through landform curvature (Tarolli and Dalla Fontana 2009; Pirotti and Tarolli 2010). The window size for curvature calculation has to be related to the morphology and to the size of the features to be detected. Small window sizes are not suitable for a good recognition of surface features, since only a limited area is investigated. Very large window sizes are not representative either, since the curvature maps are too smooth and the calculation can reach morphological features located far from the areas actually subject to slope instability, leading to a misinterpretation of the extracted features. In our case, we found a window size of 10.5 m to be optimal for surface representation. Nevertheless, some noise related to the complex morphology of the upper part of the study area tends to affect the performance of our methods. It is important to note that the result related to the window size of 10.5 m is site specific, and it may change in areas with different morphology.
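The thresholding step summarized above can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: it assumes that the threshold is k times the interquartile range of the curvature values and that cells above it are flagged as candidate features. The 0.5 m cell size is inferred from the 21-cell, 10.5 m window, and the function names and the small grid are hypothetical.

```python
import math
from statistics import quantiles


def iqr_threshold(values, k=1.5):
    """Return k times the interquartile range of the non-NaN values."""
    finite = [v for v in values if not math.isnan(v)]
    q1, _, q3 = quantiles(finite, n=4, method="inclusive")
    return k * (q3 - q1)


def extract_features(curvature_grid, k=1.5):
    """Flag cells whose curvature exceeds the IQR-based threshold.

    curvature_grid: list of rows of floats (NaN marks no-data cells).
    Returns (mask, threshold); mask has the same shape as the grid.
    """
    flat = [v for row in curvature_grid for v in row]
    threshold = iqr_threshold(flat, k)
    mask = [[(not math.isnan(v)) and v > threshold for v in row]
            for row in curvature_grid]
    return mask, threshold


def window_width_m(n_cells, cell_size_m):
    """Ground width of a square moving window, in meters."""
    return n_cells * cell_size_m


# Hypothetical curvature values, for illustration only.
grid = [[0.0, 1.0, 2.0],
        [3.0, 10.0, float("nan")]]
mask, threshold = extract_features(grid)
print(threshold)                 # 3.0
print(window_width_m(21, 0.5))   # 10.5 (cell size inferred from the text)
```

In this toy grid only the cell with curvature 10.0 exceeds the threshold. On a real curvature map, the same mask would then be compared with the field-surveyed features.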

Automatic extraction of geomorphic features such as landslide crowns and bank erosion, based on thresholding operations, proved efficient in terms of time and valid for associating the shapes and patterns derived from high-resolution topography with the real topographic signature of earth surface processes on the ground. The approach, however, has some limits, especially in areas with complex morphology, where other surface features not related to slope instabilities are also detected. Nevertheless, this fast and preliminary interpretation could meet the requirements for emergency planning. Fully automatic feature extraction in areas with different morphologies and different feature sizes is a subject of future research. Another important issue that needs to be investigated is automatic object shape recognition, in order to avoid noise such as that found at the top of our study area. At this stage, the extraction of geomorphic features from airborne LiDAR data according to this approach can be considered for integration with models (i.e., terrain stability and erosion models) and can be used to interactively assist the interpreter/user in the task of shallow landslide and bank erosion hazard mapping.

References

Ackermann F (1999) Airborne laser scanning - present status and future expectations. ISPRS J Photogramm Remote Sens 54:64–67

Albani M, Klinkenberg B, Andison DW, Kimmins JP (2004) The choice of window size in approximating topographic surfaces from digital elevation models. Int J Geogr Inform Sci 18(6):577–593

Ardizzone F, Cardinali M, Galli M, Guzzetti F, Reichenbach P (2007) Identification and mapping of recent rainfall-induced landslides using elevation data collected by airborne LiDAR. Nat Hazards Earth Sys Sci 7(6):637–650

Booth AM, Roering JJ, Perron JT (2009) Automated landslide mapping using spectral analysis and high-resolution topographic data: Puget Sound lowlands, Washington, and Portland Hills, Oregon. Geomorphology 109:132–147. doi:10.1016/j.geomorph.2009.02.027

Borga M, Dalla Fontana G, Cazorzi F (2002) Analysis of topographic and climatic control on rainfall-triggered shallow landsliding using a quasi-dynamic wetness index. J Hydrol 268:56–71

Briese C (2004) Breakline modeling from airborne laser scanner data, Ph.D. Thesis. Institute of Photogrammetry and Remote Sensing, Vienna University of Technology

Carrara A (1983) A multivariate model for landslide hazard evaluation. Math Geol 15:403–426

Carrara A, Guzzetti F (eds) (1995) Geographical information systems in assessing natural hazards. Kluwer, Dordrecht, p 353

Carrara A, Cardinali M, Detti R, Guzzetti F, Pasqui V, Reichenbach P (1991) GIS Techniques and statistical models in evaluating landslide hazard. Earth Surf Process Landf 16:427–445

Carrara A, Guzzetti F, Cardinali M, Reichenbach P (1999) Use of GIS technology in the prediction and monitoring of landslide hazard. Nat Hazards 20(2–3):117–135

Cavalli M, Marchi L (2008) Characterisation of the surface morphology of an alpine alluvial fan using airborne LiDAR. Nat Hazards Earth Sys Sci 8:323–333. doi:10.5194/nhess-8-323-2008

Cavalli M, Tarolli P, Marchi L, Dalla Fontana G (2008) The effectiveness of airborne LiDAR data in the recognition of channel bed morphology. Catena 73:249–260. doi:10.1016/j.catena.2007.11.001

Chigira M, Duan FJ, Yagi H, Furuya T (2004) Using an airborne laser scanner for the identification of shallow landslides and susceptibility assessment in an area of ignimbrite overlain by permeable pyroclastics. Landslides 1(3):203–209

Clerici A, Perego S, Tellini C, Vescovi P (2002) A procedure for landslide susceptibility zonation by the conditional analysis method. Geomorphology 48:349–364

Crosta GB, Frattini P (2003) Distributed modelling of shallow landslide triggered by intense rainfall. Nat Hazards Earth Sys Sci 3:81–93

Dalla Fontana G, Marchi L (2003) Slope-area relationships and sediment dynamics in two alpine streams. Hydrol Process 17(1):73–87

DeGraff JV (1985) Using isopleth maps of landslide deposits as a tool in timber sale planning. Bull Assoc Eng Geol 22:445–453

Dietrich WE, Reiss R, Hsu ML, Montgomery DR (1995) A process-based model for colluvial soil depth and shallow landsliding using digital elevation data. Hydrol Process 9:383–400

Ehsani AH, Quiel F (2008) Geomorphometric feature analysis using morphometric parameterization and artificial neural networks. Geomorphology 99:1–12

Evans IS (1972) General geomorphology, derivatives of altitude and descriptive statistics. In: Chorley RJ (ed) Spatial analysis in geomorphology. Methuen & Co. Ltd, London, pp 17–90

Evans IS (1979) An integrated system of terrain analysis and slope mapping. Final report on grant DA-ERO-591–73-G0040. University of Durham, England

Evans IS (1980) An integrated system of terrain analysis and slope mapping. Zeitschrift für Geomorphologie Suppl-Bd 36:274–295

Florinsky IV (1998) Accuracy of local topographic variables derived from digital elevation models. Int J Geogr Inf Sci 12(1):47–61

Frankel KL, Dolan JF (2007) Characterizing arid-region alluvial fan surface roughness with airborne laser swath mapping digital topographic data. J Geophys Res Earth Surf 112:F02025. doi:10.1029/2006JF000644

Gallant JC, Wilson JP (2000) Primary topographic attributes. In: Wilson JP, Gallant J (eds) Terrain analysis: principles and applications. Wiley, New York, pp 51–85

Glenn NF, Streutker DR, Chadwick DJ, Thackray GD, Dorsch SJ (2006) Analysis of LiDAR-derived topographic information for characterizing and differentiating landslide morphology and activity. Geomorphology 73:131–148

Guzzetti F, Carrara A, Cardinali M, Reichenbach P (1999) Landslide hazard evaluation: a review of current techniques and their application in a multi-scale study, Central Italy. Geomorphology 31:181–216

Hammond CJ, Prellwitz RW, Miller SM (1992) Landslides hazard assessment using Monte Carlo simulation. In: Bell DH (ed) Proceedings of 6th international symposium on landslides, Christchurch, New Zealand, Balkema, vol 2. pp 251–294

Heipke C, Mayer H, Wiedemann C, Jamet O (1997) Automated reconstruction of topographic objects from aerial images using vectorized map information. Int Arch Photogramm Remote Sens 23:47–56

Höfle B, Vetter M, Pfeifer N, Mandlburger G, Stötter J (2009) Water surface mapping from airborne laser scanning using signal intensity and elevation data. Earth Surf Process Landf 34(12):1635–1649

Hollingworth R, Kovacs GS (1981) Soil slumps and debris flows: prediction and protection. Bull Assoc Eng Geol 18:17–28

Horn BKP (1981) Hill shading and the reflectance map. Proc IEEE 69(1):14–47

Iovine G, Di Gregorio S, Lupiano V (2003) Debris-flow susceptibility assessment through cellular automata modeling: an example from 15–16 December 1999 disaster at Cervinara and San Martino Valle Caudina (Campania, southern Italy). Nat Hazards Earth Sys Sci 3:457–468

Kraus K, Pfeifer N (2001) Advanced DTM generation from LIDAR data. Int Arch Photogramm Remote Sens 34(3/W4):23–35

Lashermes B, Foufoula-Georgiou E, Dietrich WE (2007) Channel network extraction from high resolution topography using wavelets. Geophys Res Lett 34:L23S04. doi:10.1029/2007GL031140

Lee S, Shan J, Bethel JS (2003) Class-guided building extraction from Ikonos imagery. Photogramm Eng Remote Sens 69(2):143–150

Lenzi MA (2001) Step-pool evolution in the Rio Cordon, Northeastern Italy. Earth Surf Process Landf 26:991–1008

Lenzi MA, Mao L, Comiti F (2003) Interannual variation of suspended sediment load and sediment yield in an Alpine catchment. Hydrol Sci J 48(6):899–915

Lenzi MA, Mao L, Comiti F (2004) Magnitude-frequency analysis of bed load data in Alpine boulder bed stream. Water Resour Res 40:W07201. doi:10.1029/2003WR002961

McKean J, Roering J (2004) Objective landslide detection and surface morphology mapping using high-resolution airborne laser altimetry. Geomorphology 57:331–351. doi:10.1016/S0169-555X(03)00164-8

Montgomery DR, Dietrich WE (1994) A physically based model for the topographic control on shallow landsliding. Water Resour Res 30:1153–1171

Montgomery DR, Wright RH, Booth T (1991) Debris flow hazard mitigation for colluvium-filled swales. Bull Assoc Eng Geol 28:303–323

Moore ID, Gessler PE, Nielsen GA, Peterson GA (1993) Soil attribute prediction using terrain analysis. Soil Sci Soc Am J 57:443–452

Pack RT, Tarboton DG, Goodwin CN (1998) The SINMAP approach to terrain stability mapping, 8th congress of the international association of engineering geology, Vancouver, British Columbia, Canada

Passalacqua P, Do Trung T, Foufoula-Georgiou E, Sapiro G, Dietrich WE (2010a) A geometric framework for channel network extraction from lidar: nonlinear diffusion and geodesic paths. J Geophys Res 115:F01002. doi:10.1029/2009JF001254

Passalacqua P, Tarolli P, Foufoula-Georgiou E (2010b) Testing space-scale methodologies for automatic geomorphic feature extraction from LiDAR in a complex mountainous landscape. Water Resour Res 46:W11535. doi:10.1029/2009WR008812

Pike RJ (1988) The geometric signature: quantifying landslide-terrain types from digital elevation models. Math Geol 20(5):491–511

Pirotti F, Tarolli P (2010) Suitability of LiDAR point density and derived landform curvature maps for channel network extraction. Hydrol Process 24:1187–1197. doi:10.1002/hyp.758

Shary PA, Sharaya LS, Mitusov AV (2002) Fundamental quantitative methods of land surface analysis. Geoderma 107:1–32

Sibson R (1981) A brief description of natural neighbor interpolation. In: Barnett V (ed) Interpreting multivariate data. Wiley, Chichester, pp 21–36

Slatton KC, Carter WE, Shrestha RL, Dietrich WE (2007) Airborne laser swath mapping: achieving the resolution and accuracy required for geosurficial research. Geophys Res Lett 34:L23S10. doi:10.1029/2007GL031939

Smith MJ, Rose J, Booth S (2006) Geomorphological mapping of glacial landforms from remotely sensed data: an evaluation of the principal data sources and an assessment of their quality. Geomorphology 76:148–165. doi:10.1016/j.geomorph.2005.11.001

Staley DM, Wasklewicz TA, Blaszczynski JS (2006) Superficial patterns of debris flow deposition on alluvial fans in Death Valley, CA using airborne laser swath mapping. Geomorphology 74:152–163

Tarolli P, Dalla Fontana G (2008) High resolution LiDAR-derived DTMs: some applications for the analysis of the headwater basins’ morphology. Int Arch Photogramm Remote Sens Spatial Inf Sci 36(5/C55):297–306

Tarolli P, Dalla Fontana G (2009) Hillslope to valley transition morphology: new opportunities from high resolution DTMs. Geomorphology 113:47–56. doi:10.1016/j.geomorph.2009.02.006

Tarolli P, Tarboton DG (2006) A new method for determination of most likely landslide initiation points and the evaluation of digital terrain model scale in terrain stability mapping. Hydrol Earth Sys Sci 10:663–677

Tarolli P, Borga M, Dalla Fontana G (2008) Analyzing the influence of upslope bedrock outcrops on shallow landsliding. Geomorphology 93:186–200

Tarolli P, Arrowsmith JR, Vivoni ER (2009) Understanding earth surface processes from remotely sensed digital terrain models. Geomorphology 113:1–3. doi:10.1016/j.geomorph.2009.07.005

Travis MR, Elsner GH, Iverson WD, Johnson CG (1975) VIEWIT computation of seen areas, slope and aspect for land use planning. PSW 11/1975, Pacific Southwest Forest and Range Experimental Station, Berkeley, California, USA

Trevisani S, Cavalli M, Marchi L (2009) Variogram maps from LiDAR data as fingerprints of surface morphology on scree slopes. Nat Hazards Earth Sys Sci 9:129–133. doi:10.5194/nhess-9-129-2009

Vianello A, Cavalli M, Tarolli P (2009) LiDAR-derived slopes for headwater channel network analysis. Catena 76:97–106. doi:10.1016/j.catena.2008.09.012

Wieczorek GF, Morrissey MM, Iovine G, Godt J (1999) Rock-fall potential in the Yosemite Valley, California. USGS Open File report 99-578

Wilson MFJ, O’Connell B, Brown C, Guinan JC, Grehan AJ (2007) Multiscale terrain analysis of multibeam bathymetry data for habitat mapping on the continental slope. Mar Geodes 30(1):3–35

Wood J (1996) The geomorphological characterisation of digital elevation models. Ph.D. Thesis, University of Leicester

Zevenbergen LW, Thorne C (1987) Quantitative analysis of land surface topography. Earth Surf Process Landf 12:47–56

Acknowledgments

This study was partly funded by the Italian Ministry of University and Research (GRANT PRIN 2005 “National network of experimental basins for monitoring and modelling of hydrogeological hazard”). Analysis resources were provided by the Interdepartmental Research Center for Cartography, Photogrammetry, Remote Sensing and GIS at the University of Padova (CIRGEO). The authors are grateful to Ian S. Evans for his helpful advice and constructive discussion. We thank the Guest Editors and two anonymous reviewers for their insightful comments, which improved our work.

Cite this article

Tarolli, P., Sofia, G. & Dalla Fontana, G. Geomorphic features extraction from high-resolution topography: landslide crowns and bank erosion. Nat Hazards 61, 65–83 (2012). https://doi.org/10.1007/s11069-010-9695-2
