Abstract

Usually the real-world (RW) datasets are imbalanced in nature, i.e., there is a significant difference between the number of negative and positive class samples in the datasets. Because of this, most of the conventional classifiers do not perform well on RW data classification problems. To handle the class imbalanced problems in RW datasets, this paper presents a novel density-weighted twin support vector machine (DWTWSVM) for binary class imbalance learning (CIL). Further, to boost the computational speed of DWTWSVM, a density-weighted least squares twin support vector machine (DWLSTSVM) is also proposed for solving the CIL problem, where, the optimization problem is solved by simply considering the equality constraints and by considering the 2-norm of slack variables. The key ideas behind the models are that during the model training phase, the training data points are given weights based on their importance, i.e., the majority class samples are given more importance compared to the minority class samples. Simulations are carried on a synthetic imbalanced and some real-world imbalanced datasets. The model performance in terms of F1-score, G-mean, recall and precision of the proposed DWTWSVM and DWLSTSVM are compared with support vector machine (SVM), twin SVM (TWSVM), least squares TWSVM (LSTWSVM), fuzzy TWSVM (FTWSVM), improved fuzzy least squares TWSVM (IFLSTWSVM) and density-weighted SVM for binary CIL. Finally, a statistical study is carried out based on F1-score and G-mean on RW datasets to verify the efficacy and usability of the suggested models.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

SVM is one of the most widely accepted supervised ML models that was developed by Cortes and Vapnik [1]. SVM utilizes the structural risk minimization principle (SRM) that helps to reduce the generalization error [2, 3] and achieves high generalization performance. The classical SVM adopts the convex Hinge loss function. SVM guarantees optimality due to the nature of convex optimization and proper tuning of the regularization term. SVM seeks an optimal hyperplane by separating two classes using support vectors. It has been implemented in many fields such as, speaker and language recognition [4], pattern recognition [5], spam categorization [6], multiview learning [7], disease prediction [8], suspended sediment load prediction [9] and many more. However, the basic drawback of SVM is that it is computationally expensive. Jayadeva et al. [10] introduced an effective TWSVM to trim down the computational cost of traditional SVM. TWSVM solves two smaller nonparallel SVM quadratic programming problems (QPPs) instead of solving a bigger QPP. TWSVM has been successfully implemented in an extensive range of real-life problems including clustering [11], large scale data classification [12], software defect prediction [13] and so on. Ding et al. [14] and Ding et al. [15] reported theoretical research and practical achievements in binary and multiclass TWSVM respectively. To expand the kernel selection range as well as the generalization performance of TWSVM, Ding et al. [16] suggested a novel wavelet TWSVM based on glowworm swarm optimization. Khemchandani et al., [17] introduced a novel fuzzy membership induced TWSVM model, known as fuzzy TWSVM (FTWSVM). In FTWSVM the fuzzy information is provided to the datapoints so that each datapoint can contribute differently during the learning process. FTWSVM has been applied in several fields including class imbalance learning (CIL) [18], vehicle detection [19], pattern classification [20] and many more. Kumar and Gopal [21] introduced a new TWSVM called as least-squares TWSVM (LSTSVM) technique. LSTSVM tries to solve two SVM type problems by using linear constraints instead of inequality constraints. Because of this, its training speed gets enhanced. LSTSVM was successfully utilized in the areas of activity recognition [22], pattern classification [23], ECG signal classification [24] and so on. Furthermore, to perk up the training pace of FTWSVM, Borah et al. [25] developed a 2-norm fuzzy least-squares TWSVM (IFLSTWSVM) that is based on LSTSVM and fuzzy SVM. IFLSTWSVM is capable of dropping the consequence of outliers and noise in dataset. In addition, it also provides high training speed because of solving the systems of linear equations.

In machine learning (ML), the research on class imbalance learning (CIL) is crucial. This is based on two observations: (1) the CIL problem is pervasive in wide range of domains of great importance in the ML community. Medical diagnosis, oil spill detection in satellite radar images, fraudulent calls detection, speech recognition, risk management, text classification are a few of them; and (2) most popular state−of-the art classification models have been reported to be inadequate while dealing with the CIL problem [26]. The tremendous challenges of the CIL problem, as well as its frequent occurrence in ML and data mining applications, has piqued the attention of researchers. Recently, Du et al. [27] suggested a novel joint imbalance classification and feature selection model (JICFS). JICFS uses the L1 norm and iterative scheme for improved generalization performance. Liu et al. [28] introduced a novel ensemble model called self-paced ensemble (SPE) for highly imbalanced data classification. Even when there are a lot of overlapping classes and a skewed distribution, SPE can lead to good results. Another approach called adaptive ensemble of classifiers using regularization (AER) was suggested by Wang et al. [29]. AER can diminish the overfitting problem and the model efficiently deals with the numerical instability problem. Elvan et al. [30] developed an oversampling method called class decomposition and synthetic minority oversampling (CDSMOTE) for CIL problem. Unlike other under-sampling methods that lose data, CDSMOTE method keeps the majority of class instances while drastically reducing their dominance, resulting in a more balanced dataset and thus better results. A Local Distribution-based Adaptive Minority Oversampling (LAMO) model was developed by Wang et al. [31]. LAMO first selects the informative borderline minority instances as sample seeds based on their neighbours and the appropriate class distribution, then captures the local distribution of each sample based on its Euclidean distances from the adjacent majority and minority instances. Few prominent literatures related to imbalance data classification can be found in [32,33,34].

Weighted TWSVM models are new in the literature which deals with the CIL problem. Shao et al., [35] suggested an efficient weighted Lagrangian TWSVM (WLTSVM) to solve the binary CIL problem. The experimented results prove the efficiency of the WLTSVM model for solving the binary CIL problem with high generalization ability and training speed. Tomar et al., [36] introduced a weighted LSTSVM (WLSTSVM) to solve the imbalanced learning problem. WLSTSVM assigns weights to different samples based on some specific equation and proves that it reduces the effect of noise [37]. Hua and Ding, [38] suggested a new weighted least square projection TWSVM with local information (LIWLSPTSVM). LIWLSPTSVM improved the ability to generalize by making full use of the data affinity association information. Cha et al., [39] suggested a new model known as density-weighted (DW) support vector data description (DWSVDD). In DWSVDD, the weight generating algorithm is inspired by the concept of density. The data pointwise density is dependent on the distribution frequencies of the target data via the k-nearest neighbor (k-NN) model. In SVDD, the weighted algorithm returns comparatively high weights for data of greater importance (majority class) and low weights for data of lesser importance (minority class). In real-world situations, data is not linearly separable, resulting in classification mistakes. Furthermore, due to the presence of skewed datasets, data processing becomes harder. Hazarika and Gupta [40] discovered that when dealing with CIL problems, the SVM's learnt classification boundary tends towards the majority class (MAJ) due to the majority class's dominant influence on learning, lowering the classifier's generalization ability [41, 42]. The same penalty of misclassification for each training point is one of the prime causes. Therefore, they proposed a density-weighted SVM (DSVM-CIL) model to ensure that the final classification hyperplane does not bend towards the MAJ class. However, the main flaw of the DSVM-CIL model is that it necessitates solving a large QPP to obtain the decision classifier. This makes the model computationally complex. Further one can observe that during the weight calculation the k parameter of the weighted algorithm is fixed to 5 which might not always lead to the best result. Hence to enhance the generalization ability of DSVM-CIL and to explore the k parameter selection range in the weighted algorithm, we introduce a density-weighted TWSVM (DWTWSVM) for binary CIL. The proposed DWTWSVM solves two smaller QPPs to find the resulting decision surface, rather than solving one large QPP as in the case of DSVM-CIL. Therefore, the generalization of the decision surface for minority class samples is enhanced by DWTWSVM. An alternative formulation of DWTWSVM called DWTWSVM-2 is also shown. Further to reduce the computational complexity of the DWTWSVM model, a DWLSTSVM is also proposed. DWLSTSVM solves a pair of equality constraints rather than solving a pair of equality constraints as in DWTWSVM. The least-squares solution is produced in the DWLSTSVM model by considering the 2-norm of slack variables and solving optimization problems using linear constraints, which improves training speed when compared to the DWTWSVM model. Moreover, an alternative formulation is also derived for the DWLSTSVM and we have named it DWLSTSVM-2. The key contributions are:

-

(a)

The first model, i.e., DWTWSVM for binary class imbalance learning have been proposed using two different formulations. In the first formulation, the density weights are assigned directly to the slack variables. In the second formulation, the weights are assigned to the objective function as well as to the slack variables.

-

(b)

Moreover, DWLSTSVM for binary class imbalance learning has been proposed using two different formulations. In the first formulation, the density weights are assigned directly to the slack variables. In the second formulation, the weights are assigned to the objective function as well as to the slack variables.

-

(c)

Statistical comparison has been carried out with SVM, TWSVM, FTWSVM, IFLSTWSVM and DSVM-CIL using two different statistical tests: Friedman test with Nemenyi statistics and McNemar Test.

-

(d)

A density weight (DW) concept is used to calculate the weight matrix. The relative density of each data point is based on the distribution of the density by the k-NN approach in the training set.

The rest of this paper is prepared as follows: Sect. 2 gives the background study of SVM, TWSVM, LSTSVM, FTWSVM, IFLSTWSVM and DSVM-CIL. In Sect. 3, the DWTWSVM and the DWLSTSVM models are proposed and explained. Section 4 shows the experimental outcomes and the analysis of these outcomes. Section 5 gives the conclusion of this work.

2 Related Models

2.1 The SVM Model

SVM [1] finds separating hyperplane \(\phi (x)^{t} \omega + e\beta = 0,\) where \(\phi (x)\) is the mapping function which maps the training data \(x \in R^{n}\) to a high dimensional space. Suppose the output is \(y = \left( {y_{1} , \ldots ,y_{m} } \right)\) and \(y_{i} \in \left\{ { - 1,\; + 1} \right\}\) are class labels of the training matrix X that is of \(m \times n\) size, where \(i = 1,2, \ldots ,m\). The unknowns \(\omega\) and \(\beta\) are determined by solving the following constrained problem:

where, e is the one’s vector and \(\xi \ge 0\) indicates and slack variable (SV).\(C > 0\) is the penalty parameter.

The dual form of (1) is obtained by using the Lagrangian multiplier (LM) and then solving it by the Karush–Kuhn–Tucker condition (KKT) which can be shown mathematically as:

where \(Y = diag(y)\) and \(\ell \ge 0\) is considered as the Lagrangian multiplier.

For any unknown instance, \(x \in \Re^{n},\) the decision function can be generated as:

2.2 The TWSVM Model

TWSVM [10] searches for two non-parallel hyperplanes: \(K(x^{t} ,H^{t} )\omega_{1} + e\beta_{1} = 0\) and \(K(x^{t} ,H^{t} )\omega_{2} + e\beta_{2} = 0\) where \(H = \{ X_{1} ;X_{2} \} ,X_{1}\) and \(X_{2}\) are the training matrices of sizes \(l \times n\) and \(k \times n\) respectively. l is the total datapoints belongs to “Class 1” and k is the total datapoints that belong to “Class 2” where \(m = l + k\) indicates the total samples and \(n\) indicates the dimension of the datapoints. Suppose, \({\mathbf{y}} = \left( {{\mathbf{y}}_{1} ,...,{\mathbf{y}}_{m} } \right)\) is the output vector. \(\omega_{1} ,\omega_{2} ,\beta_{1}\) and \(\beta_{2}\) are achieved by solving two optimization problems:

and

\(\psi\) and \(\xi\) represent the SV. \(C_{1} ,C_{2} > 0\) are the penalty parameters and \(K( \cdot )\) indicates the Gaussian kernel.

To dual of (4) and (5), their dual can be determined by using the LM and solving them by using the KKT condition. The Wolfe’s duals of (4) and (5) can be expressed as:

and

where \(\ell_{1} \ge 0\) and \(\ell_{2} \ge 0\) are LMs. Also, \(L = [K(X_{1} ,H^{t} )e]\) and \(M = [K(X_{2} ,H^{t} )e]\) represents the nonlinear kernel function. For any input sample \(x \in \;\Re^{n} ,\) the classifier may be presented as:

2.3 The FTWSVM model

FTWSVM [17] uses a weighted parameter that is based on fuzzy membership (mem). The function of the mem is on the basis of its distance from the centroid. The mem function may be articulated as \(mem = 1 - \frac{{d_{c} }}{{\max (d_{c} ) + \kappa }},\) where \(d_{c}\) represents the Euclidean distance from the centroid to each datapoint and \(\kappa\) indicates a small positive integer to achieve a non-zero denominator [39]. The primal problems of FTWSVM may be expressed as:

and

where F1 and F2 represents the mem values of the data samples.

The corresponding Wolfe’s dual of (9) and (10) may be obtained as:

and

The decision classifier for a new instance, \(x \in \Re^{n}\) can be determined similarly as (8).

2.4 The LSTSVM Model

LSTSVM [21] solves two optimization problems by simply changing the inequality constraints of TWSVM by equality constraint i.e.,

and

In LSTSVM, the unknown parameters \(\omega_{1} ,\;\omega_{2} ,\;\beta_{1}\) and \(\beta_{2}\) are directly obtained by simply replacing the values of \(\psi\) and \(\xi\) in the primal equations as:

and

By taking the gradient of (15) and (16) and further equating them to zero,\(\omega_{1} ,\omega_{2} ,\beta_{1}\) and \(\beta_{2}\) can be expressed in matrix form as:

and

where \(L = K(X_{1} ,H^{t} )e\) and \(M = K(X_{2} ,H^{t} )e.\)

The resultant classifier can be obtained similarly as (8).

2.5 The IFLSTWSVM Model

IFLSTWSVM [25] seeks for a separating hyperplane like LSTSVM and also considers the 2-norm of slack variables. The classifying hyperplane can be achieved by solving two systems of linear equations:

and

where \(S_{1} = \;diag(F_{1} )\) and \(S_{2} = \;diag(F_{2} ).\)

In IFLSTWSVM, the unknowns \(\omega_{1} ,\omega_{2}\) and \(\beta_{1} ,\beta_{2}\) are directly obtained by simply replacing the values of \(\psi\) and \(\xi\) in (17) and (18) and further equating them to zero as:

and

Taking the gradient of (19) and (20) and further equating them to zero, \(\omega_{1} ,\omega_{2}\) and \(\beta_{1} ,\;\beta_{2}\) can be expressed in matrix form as:

and

where \(L = K(X_{1} ,H^{t} )e\) and \(M = K(X_{2} ,H^{t} )e.\)

For a new sample, \(x \in \Re^{n}\) the decision function can be obtained similarly as (8).

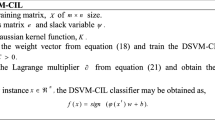

2.6 The DSVM-CIL Model

DSVM-CIL [40] seeks a separating hyperplane \(\phi (x)^{t} \omega + e\beta = 0\) in the input space. In DSVM-CIL, the density weights are directly multiplied with the SV. The separating hyperplane can be generated by solving the following problem of optimization:

D represents the DW, which is discussed in Sect. 3.

The Wolfe’s dual of (21) can be expressed as:

The unknowns \(\omega\) and \(\beta\) can be measured by differentiating it with respect to \(\ell\) and further equating to 0. For a new sample \(x \in \Re^{n} ,\) the decision function of DSVM-CIL can be generated as similar to (3).

3 Proposed Models

Cha et al., [39] have developed a new density-weighted SVDD to enhance the performance of traditional SVDD that was introduced by Tax and Duin, [43]. This weighted approach is based on the relative density of the datapoints are compared to the maximum k-NN distance of the dataset [44, 45]. Suppose, \(x_{p}\) and \(x_{p}^{k}\) denotes a datapoint and its kth-NN datapoint respectively and the distance between the two points is \(d(x_{p} ,x_{p}^{k} ).\) The DW [46] notion can be mathematically denoted as:

Density weights fall in between 0 and 1 i.e., \(0 < D < 1.\). The diagonal matrix from (23) can be written as:

In the feature space, the weight of the density can be calculated. However, the main motive is to determine the weight by illustrating the allocation of data in real space. DWTWSVM and DWLSTSVM utilize the non-linear kernel for high-dimensional data mapping for optimal data description. The data distribution could be modified in various techniques, and so differs from the initial data distribution. As a result, the DW in real space is estimated in the pursuit of a more applicable description that covers highly dense locations in the actual data distribution [39].

3.1 Proposed DWTWSVM

DWTWSVM seeks for a pair of non-parallel hyperplanes to maximize the margin of separation between two different classes and minimize its classification error. DWTWSVM applies the density weight to each datapoints according to their significance.

In the non-linear case, the primal problems of DWTWSVM may be shown as:

and

where \(0 < D_{1} < 1\) and \(0 < D_{2} < 1\) are the density weights for data samples derived from (23).

The Lagrange’s equations for (25) and (26) may be rewritten as:

and

where the vectors of LMs are donated by \(\alpha_{1} = \left( {\alpha_{11} , \ldots ,\alpha_{1q} } \right),\) \(\alpha_{2} = \left( {\alpha_{21} , \ldots ,\alpha_{2q} } \right),\) \(\gamma_{1} = \left( {\gamma_{11} , \ldots ,\gamma_{1q} } \right)\) and \(\gamma_{2} = \left( {\gamma_{21} , \ldots ,\gamma_{2q} } \right).\)

After implementing the Karush–Kuhn–Tucker condition on (27) and (28) by differentiating (27) and (28) with respect to \(\omega_{1} ,\omega_{2}\) and \(\beta_{1} ,\;\beta_{2}\) further equating to zero, the Wolfe’s dual of these equations may be obtained as:

and

where L and M can be expressed as \(L = [K(X_{1} ,H^{t} )e]\) and \(M = [K(X_{2} ,H^{t} )e].\)

Now, the values of \(\omega_{1} ,\;\omega_{2}\) and \(\beta_{1} ,\;\beta_{2}\) can be calculated as:

For an unknown example \(x \in \Re^{n}\), the classifier can be expressed similarly as (8)

3.2 Another Formulation for DWTWSVM (DWTWSVM-2)

Similar to DWTWSVM, DWTWSVM-2 also seeks for a pair of non-parallel hyperplanes to maximize the margin of separation between two different classes and minimize its classification error. However, DWTWSVM-2 assigns density weight to all samples rather than assigning it to the slack variables.

The primal problems of DWTWSVM-2 may be expressed as:

and

where d1,d2 and D1, D2 are the density weights for data samples derived from (23) and (24).

The Lagrange’s equations for (32) and (33) is:

and

where the vectors of Lagrange multipliers are expressed by \(\alpha_{1} = \left( {\alpha_{11} , \ldots ,\alpha_{1q} } \right),\alpha_{2} = \left( {\alpha_{21} , \ldots ,\alpha_{2q} } \right),\) \(\gamma_{1} = \left( {\gamma_{11} , \ldots ,\gamma_{1q} } \right)\) and \(\gamma_{2} = \left( {\gamma_{21} , \ldots ,\gamma_{2q} } \right).\)

Let, \(L = [d_{1} (K(X_{1} ,H^{t} )e],M = [d_{2} (K(X_{2} ,H^{t} )e]\) and \(u_{1} = \left[ \begin{gathered} \omega_{1} \hfill \\ \beta_{1} \hfill \\ \end{gathered} \right].\)

Now differentiating (34) with respect to \(\omega_{1}\) and \(\beta_{1}\) and further equating to zero, the Wolfe’s dual of (34) can be expressed as:

Similarly, the Wolfe’s dual of (35) can be expressed as:

where \(L = [K(X_{1} ,H^{t} )e]\) and \(M = [K(X_{2} ,H^{t} )e].\)

For an unknown sample, \(x \in \Re^{n}\), the class label may be determined as similar to (8).

3.3 Proposed DWLSTSVM

In DWLSTSVM, the diagonal matrix d, determined from (23) and (24) is directly multiplied by the slack variable and further considered the 2-norm of the SV instead of the 1-norm, which forms the strongly convex problem.

The primal problems of DWLSTSVM may be expressed as:

and

where the values of d1,d2 represents the density weights that are obtained from (23) and (24).

The equations, (38) and (39) can be expressed as:

and

where \(G = [K(X_{1} ,H^{t} )e],H = [K(X_{2} ,H^{t} )e],\) \(\nu_{1} = \left[ \begin{gathered} \omega_{1} \hfill \\ \beta_{1} \hfill \\ \end{gathered} \right]\) and \(\nu_{2} = \left[ \begin{gathered} \omega_{2} \hfill \\ \beta_{2} \hfill \\ \end{gathered} \right].\)

Now, by equating (40) and (41) to zero and by differentiating with respect to \(\omega_{1} ,\omega_{2}\) and \(\beta_{1} ,\beta_{2}\) it can be found that,

and

where \(\gamma_{1} = \frac{1}{{C_{1} }}\) and \(\gamma_{2} = \frac{1}{{C_{2} }}.\)

Remark 1

One can observe that both \((G^{t} d_{1} d_{1}^{t} G + \gamma_{1} H^{t} H + \delta I)^{ - 1}\) and \((M^{t} d_{2} d_{2}^{t} M + \gamma_{2} G^{t} G + \delta I)^{ - 1}\) are positive definite matrices because of the addition of extra regularization integer,\(\delta\) which makes DWLSTSVM more stable than LSTSVM.

Moreover, one can observe from (36) and (37) that the solution of DWLSTSVM requires matrix inversion twice. Hence, to further reduce its computational cost, the Sherman–Morrison–Woodbury (SMW) [36] is applied to measure (42) and (43) as:

and

where \(P = (L^{t} L + \delta I)^{ - 1}\) and \(Q = (M^{t} M + \delta I)^{ - 1} .\) Also \(J = Hd_{2}\) and \(R = Gd_{1} .\)

The non-linear DWLSTSVM classifier can be expressed as similar to (8).

3.4 Another Formulation for DWLSTSVM (DWLSTSVM-2)

In DWLSTSVM-2, the diagonal matrix d, determined from (23) and (24) is directly multiplied to the slack variable and further considered the 2-norm of slack variable instead of 1-norm, which makes the problem strongly convex.

The primal problems of DWLSTSVM-2 may be expressed as:

and

where the values of d1 and d2 represents the density weights that are obtained from (23) and (24). Equations (32) and (33) can be expressed as:

and

Now, by equating (46) to zero and by differentiating with respect to \(w_{1}\) and \(\beta_{1}\) we get

Consider, \(L = [d_{1} (K(X_{1} ,H^{t} )d_{1} e],M = [d_{2} (K(X_{2} ,H^{t} )d_{2} e]\) and \(v_{1} = \left[ \begin{gathered} w_{1} \hfill \\ \beta_{1} \hfill \\ \end{gathered} \right]\).

Combining (48) and (49) we get:

Rearranging the terms in (50) we get:

Similarly by solving (47) we found:

Equations (51) and (52) can be rewritten as:

and

The computational cost of (53) and (54) can be reduced by using the SMW principle as:

and

where \(P = (L^{t} L + \delta I)^{ - 1}\) and \(Q = (M^{t} M + \delta I)^{ - 1} .\)

and

where \(P = (L^{t} L + \delta I)^{ - 1}\) and \(Q = (M^{t} M + \delta I)^{ - 1} .\)

The non-linear DWLSTSVM-2 classifier can be expressed as similar to (8).

4 Experimental Setup, Numerical Results and Analysis

4.1 Graphical Representation Using Artificial Imbalanced Datasets

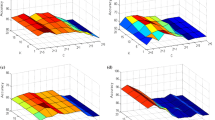

To visualize the behavior of the DWTWSVM model for different IRs, three different artificial datasets have been generated with IRs of 1, 5 and 10 respectively. The generated datasets follow Gaussian distribution with an equivalent probability such that \(x_{i} (y_{i} = 1) \approx N(\gamma_{1} ,\delta_{1} )\) and \(x_{i} (y_{i} = 1) \approx N(\gamma_{2} ,\delta_{2} ),\) where \(\gamma_{1} = [0.2, - 0.2],\gamma_{2} = [ - 0.2,0.2]\) and \(\delta_{1} = \delta_{2} = [0.15,0.15].\) The dataset with IR = 1 has a total of 100 simple, 50 of positive class and 50 of negative class. The dataset with IR = 5 and IR = 10 contains 250 and 500 negative samples respectively while the positive sample is fixed to 50. The linear kernel has been used for experimenting with these artificial imbalanced datasets. The datasets are normalized in between [0,1] before processing. We give more priority to the empirical risk rather than structural risk and set the parameter C = 1. The datasets and the classifiers obtained for different IRs using the TWSVM and the proposed DWTWSVM model is exposed in Fig. 1. One can observe that the DWTWSVM model is less sensitive to IRs of datasets compared to the TWSVM model.

4.2 Experiment on a KEEL Artificial Imbalanced Dataset and Real-World Datasets

This section explains the numerical simulations that are conducted on a synthetic imbalanced dataset and twenty-five real-world imbalanced datasets to measure the effectiveness and applicability of DWTWSVM and DWLSTSVM. The performances of the proposed DWTWSVM and DWLSTSVM are compared with SVM, TWSVM, FTWSVM, LSTSVM, IFLSTWSVM and DSVM-CIL. The simulations are done in MATLAB 2019b environment on a computer with 32 GB RAM, Intel Core i7 processor with a clock speed of 3.20 Ghz. To solve the QPP of SVM, TWSVM, FTWSVM, DSVM-CIL and DWTWSVM, the inbuilt “quadprog” function is used in MATLAB. Since the real-world datasets are generally not linearly separable hence, the non-linear kernel function is only considered as the kernel function in the experiments. The Gaussian kernel (GK) is used in this work. The GK may be expressed as: \(k(x_{l} ,x_{m} ) = - \exp ( - \mu ||x_{l} - x_{m} ||^{2} ),\) where \(x_{l}\) and \(x_{m}\) denotes any input data samples [47]. The optimal kernel parameter \(\mu\) is chosen from a wide range of \(\left\{ {2^{ - 5} , \ldots ,2^{5} } \right\}.\) The optimum C is chosen from \(\left\{ {10^{ - 5} , \ldots ,10^{5} } \right\}.\) Moreover, in the proposed DWTWSVM and DWLSTSVM, the optimum value of \(k\) is obtained from a range of {3, 5, 7, 9}. The optimum parameters, as well as the classifier performances, are determined using the typical5 fold cross-validation method. We perform the normalization as, \(\overline{x}_{ab} = \frac{{x_{ab} - x_{b}^{\min } }}{{x_{b}^{\max } - x_{b}^{\min } }},\) where \(x_{b}^{\min } = \mathop {\min }\limits_{a = 1, \ldots ,m} (x_{ab} )\) and \(x_{b}^{\min } = \mathop {\max }\limits_{a = 1, \ldots ,m} (x_{ab} )\) are the utmost and least values of the qth attribute of all input data \(x_{a}\) respectively. \(\overline{x}_{ab}\) is the normalized value of \(x_{ab}\) [48]. The imbalanced ratios (IR) of the datasets are calculated by using:

The performances of the algorithms are validated using their classification precision (Pr), recall (Rec), G-mean (GM) and F1-score. The Rec, Pr, GM and F1-scores can be measured as follows:

where TP, FP and FN denotes the true positive, false positive and false negative respectively [49].

To show the efficiency of the proposed models on the imbalanced dataset, experiments are conducted on an artificial imbalanced dataset. The dataset is obtained from KEEL imbalanced dataset repository [50] with 2 classes in which the data points are distributed arbitrarily and equally in 2D space. The data is highly imbalanced with an IR of 7. The precision, recall, F1-score, G-mean with their respective standard deviations and the total computational time in seconds (s) of the classifiers on each dataset are exhibited in Table 1.

The experimental results from Table 1 clearly indicate that the proposed DWTWSVM achieve higher generalization performance compared to other models for the artificial imbalanced dataset. It can be observed that our proposed DWTWSVM has obtained the best precision, recall, F1-score and Gmean. Also, our proposed DWLSTSVM has taken less computational time compared to SVM, TWSVM, FTWSVM, IFLSTWSVM, DSVM-CIL and DWTWSVM. The classifiers are portrayed in Fig. 2.

4.3 Experiment on Real-World Imbalanced Datasets

Moreover, to analyze the usefulness of the proposed models on RW datasets, numerical simulations are performed on twenty-five RW imbalanced datasets. These datasets are collected from the UCI machine learning repository [51]. The particulars of the datasets are presented in Table 2. We have classified the datasets into three types: (a) Low with IR less than 2, (b) Moderate with 2 < = IR < = 10 and (c) High with IR greater than 10.

The Rec, Pr, G-mean and F1-scores along with their standard deviations (SD) and training time are also tabulated in Table 3.

The following implications can be derived from Table 4:

Case1: In terms of F1-scores:

The proposed DWTWSVM and DWLSTSVM show the best results in 11 cases and 7 cases respectively out of 25 cases.

Case 2: In terms of GM:

The proposed DWTWSVM and DWLSTSVM reveal the best results in 13 cases and 6 cases respectively out of 25 cases.

Case 3: In terms of Pr:

DWTWSVM and DWLSTSVM show the best results in 12 and 10 cases respectively out of 25 cases.

Case 4: In terms of Rec:

DWTWSVM and DWLSTSVM perform best 11 and 8 cases respectively out of 25 cases.

Further, it can be concluded from Table 3 that the DWLSTSVM model takes less computation time in comparison with SVM, TWSVM, FTWSVM, DSVM-CIL and DWTWSVM models, while achieves similar computational speed as LSTSVM and IFLSTSVM. The average ranks (ARs) of these classifiers with respect to the F1-scores and GMs are portrayed in Tables 4 and 5.

In order to visualize the average values of the classifiers with respect to F1-score, Gmean, recall and precision, Fig. 3 is presented. The y-axis shows the average ranks on twenty–five real world datasets based on recall and precision. It is observable that the DWTWSVM shows the lowest average rank which reveals the efficacy of DWTWSVM models.

4.4 Friedman and Nemenyi Statistics on Imbalanced Real-World Datasets

As it can be observed from Tables 3, 4, and 5 that the proposed models do not show the best results in all of the cases, therefore statistical analysis for classifier comparison is carried out. Friedman test is a non-parametric statistical (NPS) method for model comparison [52]. To create the Friedman statistics, the ARs of the eight models for the twenty-five datasets are taken from Tables 4 and 5, where the rankings of the classifiers are reported for each dataset.

Case 1: Based on F1-score.

The Friedman test statistic can be formulated as:

Case 2: Based on G mean.

The Friedman test statistics can be obtained as:

The critical value \(C_{V}\) for \(F_{F}\) with (8–1) and (8–1) × (25–1) degrees of freedom is 2.78 for \(\alpha = 0.05\). As it can be noticed that, \(F_{F} > \;C_{V} ,\) therefore we can reject the null hypothesis. Additionally, the Nemenyi test is performed with \(p = 0.1\) as:

Few implications can be derived from the tests:

-

(a)

For F1-score: The differences between the ARs of the SVM, TWSVM, LSTSVM, IFLSTWSVM and DSVM-CIL with DWTWSVM are \(1.98,\;2.02,\;2.7,\;2.1\) and 2.14 which are higher than the CD. Hence, DWTWSVM outperforms SVM, TWSVM, LSTWSVM, IFLSTWSVM and DSVM-CIL in terms of generalization performances. However, the difference between the ARs of FTWSVM with DWTWSVM is \(\;1.58 < CD,\) which reveals no significant difference between the two models.

-

(b)

For Gmean: The differences between the ARs of the SVM, TWSVM, LSTSVM, IFLSTWSVM and DSVM-CIL with DWTWSVM are \(2.8558,\;2.0208,\;2.8125,\;2.3125\) and 2.25 which are larger than the CD. These indicate that DWTWSVM shows better performance compared to SVM, TWSVM, LSTWSVM, IFLSTWSVM and DSVM-CIL. Further, it can be noted that the difference between the ARs of FWTWSVM with DWTWSVM is \(\;1.7292 < CD,\) which reveals no significant difference between the two models.

To visualize the statistical analysis, the boxplot is presented in Figs. 4 and 5 where the x-axis shows the classifiers. In the y-axis, the upper quartile of each boxplot indicates the AR of the algorithms while the lower quartile shows the critical difference between the model and the best average rank model on the imbalanced RW datasets. The middle lines of the boxes denote the median of the upper and the lower limit. The dashed line from the point 1.926 indicates the CD line. It can be noticed from Figs. 4 and 5 that the lower limit of SVM, TWSVM, LSTSVM, IFLSTWSVM and DSVM-CIL falls above the CD line that reveals that DWTWSVM achieves the better generalization performance compared to SVM, TWSVM, LSTSVM, IFLSTWSVM and DSVM-CIL.

4.5 McNemar’s Test

McNemar’s test is an NPS method used on nominal data for deciding whether or not column and row marginal frequencies are the same. A sampling variance exists in many circumstances. Two proportions must be distinguished, considering that both proportions are not based on separate samples [53,54,55]. Therefore, we perform McNemar’s test to determine the difference between our best-suggested model, i.e., DWTWSVM and the other models on the same sample. The several p-values [56] obtained after McNemar's test are tabulated in Table 6 for a 90% level of significance when comparing the other classifiers with DWTWSVM.

One can observe from Table 6 that the proposed DWTWSVM shows significant results in 12, 24, 21, 24, 20, 11 and 20 cases out of 25 for SVM, TWSVM, LSTWSVM, FTWSVM, IFLSTWSVM, DSVM-CIL, and DWLSTSVM respectively.

To further demonstrate the results, the insensitivity plots of DWTWSVM and DWLSTSVM are shown for the Votes and WDBC datasets respectively on user-specified parameters, \((C,\mu )\) in Figs. 6 and 7. One can verify from Figs. 6 and 7 that both, DWTWSVM and DWLSTSVM are less sensitive to the user-specified values of C and \(\mu\).

Figures 8 and 9 shows the influence of k parameters on DWTWSVM and DWLSTSVM respectively on a few datasets. It is revealed from the figures that the F1-score do not drastically change for varying k value for both DWTWSVM and DWLSTSVM models.

5 Conclusion

This paper presents two new TWSVM models i.e., DWTWSVM and its 2 norm-based least squares model, DWLSTSVM to solve the binary class imbalance problem. Alternative formulations for DWTWSVM and DWLSTSVM are also presented. The DWTWSVM solves two smaller size density induced SVM problems where the density weights are directly multiplied by their slack variables. In the alternative formulation i.e., DWTWSVM-2, the density weights are multiplied to both, slack variables as well as the objective function. Moreover, DWLSTSVM performs faster in terms of computational speed than DWTWSVM while showing a similar type of generalization performance as DWTWSVM. Similar to DWTWSVM an alternate formulation of DWLSTSVM, i.e., DWLSTSVM-2 is also presented. In these proposed approaches, the adaptive weights are provided to all training data samples so that they can overcome the bias phenomenon of TWSVM and LSTSVM on imbalanced datasets. The results are established based on G-mean,\(F_{1}\)-scores, sensitivity and precision of the applied models on an artificial imbalanced dataset and twenty-five real-world imbalanced datasets. Experimental analysis has been carried out on an imbalanced artificial dataset and a few real-world datasets based on F1-score, G-mean, precision and recall. The results are compared with SVM, TWSVM, FTWSVM, IFLSTWSVM and DSVM-CIL. Experimental outcomes reveal that the proposed DWTWSVM can handle the class imbalance problem with high efficiency whereas the proposed DWLSTSVM performs faster than DWTWSVM and maintains an almost similar type of performance as DWTWSVM. However, the major limitations of DWTWSVM and DWLSTSVM is that the performances of these models depend on the selection of optimum parameters. In future, the selection of appropriate parameters for the models can be improved. Additionally, these models can also be applied to analyze noisy data classification problems. Moreover, as the present research is based on dealing with the binary class imbalanced learning problems. In future, it will be really interesting to extend these models for multiclass imbalanced learning problems.

References

Cortes C, Vapnik V (1995) Support-vector networks. Mach Learn 20(3):273–297

Wei CC (2012) Wavelet kernel support vector machines forecasting techniques: case study on water-level predictions during typhoons. Expert Syst Appl 39(5):5189–5199

Wang L, Gao C, Zhao N, Chen X (2019) A projection wavelet weighted twin support vector regression and its primal solution. Appl Intell, pp 1–21

Campbell WM, Campbell JP, Reynolds DA, Singer E, Torres-Carrasquillo PA (2006) Support vector machines for speaker and language recognition. Comput Speech Lang 20(2–3):210–229

Du SX, Wu TJ (2003) Support vector machines for pattern recognition. J-Zhejiang Univ Eng Sci 37(5):521–527

Drucker H, Wu D, Vapnik VN (1999) Support vector machines for spam categorization. IEEE Trans Neural Netw 10(5):1048–1054

Sun S, Xie X, Dong C (2018) Multiview learning with generalized eigenvalue proximal support vector machines. IEEE Trans Cybern 49(2):688–697

Anitha PU, Neelima G, Kumar YS (2019) Prediction of cardiovascular disease using support vector machine. J Innovat Electron Commun Eng 9(1):28–33

Hazarika BB, Gupta D, Berlin M (2020) A comparative analysis of artificial neural network and support vector regression for river suspended sediment load prediction. In: First international conference on sustainable technologies for computational intelligence, pp 339–349. Springer, Singapore

Jayadeva K, R., & Chandra, S. (2007) Twin support vector machines for pattern classification. IEEE Trans Pattern Anal Mach Intell 29(5):905–910

Wang Z, Shao YH, Bai L, Deng NY (2015) Twin support vector machine for clustering. IEEE Trans Neural Netw Learn Syst 26(10):2583–2588

Wang Z, Shao YH, Bai L, Li CN, Liu LM, Deng NY (2018) Insensitive stochastic gradient twin support vector machines for large scale problems. Inf Sci 462:114–131

Cao Y, Ding Z, Xue F, Rong X (2018) An improved twin support vector machine based on multi-objective cuckoo search for software defect prediction. Int J Bio-Inspired Comput 11(4):282–291

Ding S, Zhang N, Zhang X, Wu F (2017) Twin support vector machine: theory, algorithm and applications. Neural Comput Appl 28(11):3119–3130

Ding S, Zhao X, Zhang J, Zhang X, Xue Y (2019) A review on multi-class TWSVM. Artif Intell Rev 52(2):775–801

Ding S, An Y, Zhang X, Wu F, Xue Y (2017) Wavelet twin support vector machines based on glowworm swarm optimization. Neurocomputing 225:157–163

Khemchandani R, Jayadeva, Chandra S (2008) Fuzzy twin support vector machines for pattern classification. In: Mathematical programming and game theory for decision making (pp 131–142)

Gupta D, Richhariya B, Borah P (2018) A fuzzy twin support vector machine based on information entropy for class imbalance learning. Neural Comput Appl, pp 1–12

Cheon M, Yoon C, Kim E, Park M (2008) Vehicle detection using fuzzy twin support vector machine. In: SCIS & ISIS SCIS & ISIS 2008, pp 2043–2048. Japan Society for Fuzzy Theory and Intelligent Informatics

Chen SG, Wu XJ (2018) A new fuzzy twin support vector machine for pattern classification. Int J Mach Learn Cybern 9(9):1553–1564

Kumar MA, Gopal M (2009) Least squares twin support vector machines for pattern classification. Expert Syst Appl 36(4):7535–7543

Khemchandani R, Sharma S (2016) Robust least squares twin support vector machine for human activity recognition. Appl Soft Comput 47:33–46

Mir A, Nasiri JA (2018) KNN-based least squares twin support vector machine for pattern classification. Appl Intell 48(12):4551–4564

Tang H, Dong P, Shi Y (2019) A new approach of integrating piecewise linear representation and weighted support vector machine for forecasting stock turning points. Appl Soft Comput 78:685–696

Borah P, Gupta D, Prasad M (2018) Improved 2-norm based fuzzy least squares twin support vector machine. In: 2018 IEEE symposium series on computational intelligence (SSCI), pp 412–419. IEEE.

Sun Y, Wong AK, Kamel MS (2009) Classification of imbalanced data: a review. Int J Pattern Recognit Artif Intell 23(04):687–719

Du G, Zhang J, Luo Z, Ma F, Ma L, Li S (2020) Joint imbalanced classification and feature selection for hospital readmissions. Knowl-Based Syst 200:106020.

Liu Z, Cao W, Gao Z, Bian J, Chen H, Chang Y, Liu TY (2020) Self-paced ensemble for highly imbalanced massive data classification. In: 2020 IEEE 36th international conference on data engineering (ICDE), pp 841–852. IEEE

Wang C, Deng C, Yu Z, Hui D, Gong X, Luo R (2021) Adaptive ensemble of classifiers with regularization for imbalanced data classification. Inf Fusion 69:81–102

Elyan E, Moreno-Garcia CF, Jayne C (2021) CDSMOTE: class decomposition and synthetic minority class oversampling technique for imbalanced-data classification. Neural Comput Appl 33(7):2839–2851

Wang X, Xu J, Zeng T, Jing L (2021) Local distribution-based adaptive minority oversampling for imbalanced data classification. Neurocomputing 422:200–213

Tian Y, Bian B, Tang X, Zhou J (2021) A new non-kernel quadratic surface approach for imbalanced data classification in online credit scoring. Inf Sci 563:150–165

Koziarski M (2020) Radial-based undersampling for imbalanced data classification. Pattern Recogn 102:107262

Choi HS, Jung D, Kim S, Yoon S (2021) Imbalanced data classification via cooperative interaction between classifier and generator. IEEE Trans Neural Netw Learn Syst

Shao YH, Chen WJ, Zhang JJ, Wang Z, Deng NY (2014) An efficient weighted Lagrangian twin support vector machine for imbalanced data classification. Pattern Recogn 47(9):3158–3167

Tomar D, Singhal S, Agarwal S (2014) Weighted least square twin support vector machine for imbalanced dataset. Int J Database Theory Appl 7(2):25–36

Tomar D, Agarwal S (2015) Twin support vector machine: a review from 2007 to 2014. Egyptian Inf J 16(1):55–69

Hua X, Ding S (2015) Weighted least squares projection twin support vector machines with local information. Neurocomputing 160:228–237

Cha M, Kim JS, Baek JG (2014) Density weighted support vector data description. Expert Syst Appl 41(7):3343–3350

Hazarika BB, Gupta D (2020) Density-weighted support vector machines for binary class imbalance learning. Neural Comput Appl. https://doi.org/10.1007/s00521-020-05240-8

He H, Garcia EA (2009) Learning from imbalanced data. IEEE Trans Knowl Data Eng 21(9):1263–1284

Batuwita R, Palade V (2010) FSVM-CIL: fuzzy support vector machines for class imbalance learning. IEEE Trans Fuzzy Syst 18(3):558–571

Tax DM, Duin RP (2004) Support vector data description. Mach Learn 54(1):45–66

Golub GH, Van Loan CF (2013) Matrix computations (Vol 3). JHU Press, Baltimore

Dudani SA (1976) The distancE−weighted k-nearest-neighbor rule. IEEE Trans Syst Man Cybern 4:325–327

Gupta D, Natarajan N (2021) Prediction of uniaxial compressive strength of rock samples using density weighted least squares twin support vector regression. Neural Comput Appl, pp 1–8

Gupta D, Hazarika BB, Berlin M (2020) Robust regularized extreme learning machine with asymmetric Huber loss function. Neural Comput Appl. https://doi.org/10.1007/s00521-020-04741-w

Balasundaram S, Gupta D (2016) On optimization based extreme learning machine in primal for regression and classification by functional iterative method. Int J Mach Learn Cybern 7(5):707–728

Powers DM (2011) Evaluation: from precision, recall and F-measure to ROC, informedness, markedness and correlation

Alcalá-Fdez J, Fernández A, Luengo J, Derrac J, García S, Sánchez L, Herrera F (2011) Keel data-mining software tool: data set repository, integration of algorithms and experimental analysis framework. J Mult-Valued Log Soft Comput 17

Dua D, Graff C (2019) UCI Machine Learning Repository [http://archive.ics.uci.edu/ml]. Irvine, CA: University of California, School of Information and Computer Science, zuletzt abgerufen am: 14.09. 2019. Google Scholar

Demšar J (2006) Statistical comparisons of classifiers over multiple data sets. J Mach Learn Res 7:1–30

McNemar Q (1947) Note on the sampling error of the difference between correlated proportions or percentages. Psychometrika 12(2):153–157

Cardillo G (2007) McNemar test: perform the McNemar test on a 2x2 matrix. http://www.mathworks.com/matlabcentral/fileexchange/15472

Everitt BS (1992) The analysis of contingency tables. CRC Press

Eisinga R, Heskes T, Pelzer B, TeGrotenhuis M (2017) Exact p-values for pairwise comparison of Friedman rank sums, with application to comparing classifiers. BMC Bioinform 18(1):68

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors have no conflict of interests. The MATLAB codes for the proposed models can be found at: https://github.com/Barenya/densitycode

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Hazarika, B.B., Gupta, D. Density Weighted Twin Support Vector Machines for Binary Class Imbalance Learning. Neural Process Lett 54, 1091–1130 (2022). https://doi.org/10.1007/s11063-021-10671-y

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11063-021-10671-y