Abstract

We argue that some properties of sign language grammar have counterparts in non-signers’ intuitions about gestures, including ones that are probably very uncommon. Thus despite the intrinsic limitations of gestures compared to full-fledged sign languages, they might access some of the same rules. While gesture research often focuses on co-speech gestures, we investigate pro-speech gestures, which fully replace spoken words and thus often make an at-issue semantic contribution, like signs. We argue that gestural loci can emulate several properties of sign language loci (= positions in signing space that realize discourse referents): there can be an arbitrary number of them, with a distinction between speaker-, addressee- and third person-denoting loci. They may be free or bound, and they may be used to realize ‘donkey’ anaphora. Some gestural verbs include loci in their realization, and for this reason they resemble some ‘agreement verbs’ found in sign language (Schlenker and Chemla 2018). As in sign language, gestural loci can have rich iconic uses, with high loci used for tall individuals. Turning to plurality, we argue that repetition-based gestural nouns replicate several properties of repetition-based plurals in ASL (Schlenker and Lamberton 2019): unpunctuated repetitions provide vague information about quantities, punctuated repetitions are often semantically precise, and rich iconic information can be provided in both cases depending on the arrangement of the repetitions, an observation that extends to some mass terms. We further suggest that gestural verbs can give rise to repetition-based pluractional readings, as their sign language counterparts (Kuhn 2015a, 2015b; Kuhn and Aristodemo 2017). Following Strickland et al. (2015), we further argue that a distinction between telic and atelic sign language verbs, involving the existence of sharp boundaries, can be replicated with gestural verbs. 
Finally, turning to attitude and action reports, we suggest (following in part Lillo-Martin 2012) that Role Shift, which serves to adopt another agent’s perspective in sign language, has gestural counterparts. (An Appendix discusses possible gestural counterparts of ‘Locative Shift,’ a sign language operation in which one may co-opt a location-denoting locus to refer to an individual found at that location.)


1 Introduction

1.1 Goals

We argue in this piece that some non-trivial rules that constrain the use of signs (in particular in ASL [American Sign Language]) have analogues in some gestures in spoken language.Footnote 1 Thus there is in a sense a ‘gestural grammar’ which, despite severe limitations, shares some properties with sign language grammar.

While most gestural work focuses on co-speech gestures studied by way of corpora, we base our analysis on pro-speech gestures, which fully replace words (rather than accompanying them). Pro-speech gestures fulfill the grammatical and semantic role of full-fledged words; for this reason, they often lead to ungrammaticality if they are omitted.Footnote 2 The reason pro-speech gestures are a good point of comparison for signs is that, just like signs, they usually make at-issue contributions and must fulfill grammatical functions. By contrast, co-speech gestures are ‘parasitic’ on the words they co-occur with, and they were argued not to make at-issue contributions, possibly for this reason (Ebert and Ebert 2014; Schlenker 2018a, 2018b).Footnote 3 While we will use co-speech gestures to introduce discourse referents in gestural space, our focus will be on pro-speech gestures (also discussed in Slama-Cazacu 1976; Clark 1996, 2016; Fricke 2008; Ladewig 2011; Schlenker to appear; Tieu et al. 2019; see also Jouitteau 2004 and Ortega-Santos 2016; Ebert 2018).

To make things concrete, consider the example in (1) (here and throughout, we provide links to anonymized videos, also available in a separate folder; our consultants assessed the non-anonymized versions).

(1) Whenever I can hire [gesture: open hand on the right] [a mathematician] or [gesture: open hand on the left] [a sociologist], I pick [gesture: pointing towards the right].

Meaning: Whenever I can hire a mathematician or a sociologist, I pick the former.

(Video 3927, 1st sentence https://youtu.be/nU5xdXLV43c)

The first disjunct a mathematician is pronounced with an open hand on the right (glossed as [gesture image], and preceding the co-occurring expression, which is boldfaced) while the second disjunct a sociologist co-occurs with an open hand on the left (glossed as [gesture image]). We will argue that these are gestural counterparts of ‘loci,’ positions in signing space that instantiate discourse referents or variables (Lillo-Martin and Klima 1990). As a result, when the sentence-final object of pick is replaced with a pointing gesture towards the right (glossed as [gesture image]), we obtain a sentence that is acceptable, and has a ‘donkey’ reading on which the gestural ‘pronoun’ is dependent on the (non-c-commanding) existential quantifier. It is worth noting that in this case him or her could be ambiguous between the two antecedents, whereas the pointing gesture isn’t: it is rather clear that the gesture is not just a code for a word.

Pro-speech gestures of this type are arguably very uncommon, and their properties can be teased apart only by establishing which are intuitively acceptable and which are deviant. It is thus essential to extend to gestural work the method routinely used to collect data on spoken and signed languages, based on acceptability judgments on constructed examples. We will start by summarizing a case, involving person markers in gestural verbs, in which this method was validated with experimental means. We will then extend it to new areas involving loci (= positions in signing/gestural space corresponding to discourse referents), plurality, pluractionality, telicity, and context shift, thus offering a diverse sample of grammatical rules that constrain pro-speech gestures. Our data will be based on the introspective judgments of three (non-signing) linguists; we will focus on cases in which there is significant agreement among our consultants, and we will leave a (necessary) extension using experimental means for future research.

In the present context, we follow standard usage in formal studies of sign language (as summarized for instance in Sandler and Lillo-Martin 2006) and treat the phenomena discussed below (loci, plurals, pluractionals, telicity, context shift) as being ‘grammatical.’ One reason for this terminology is that these phenomena share detailed formal properties with counterparts that are uncontroversially considered as grammatical in spoken language. But it should be kept in mind that sign language phenomena typically have an iconic componentFootnote 4 that their spoken language counterparts lack, a point that has been discussed in detail in recent studies (e.g. Schlenker 2017a, 2018c). In addition, there is a debate within sign language linguistics to determine which rules should count as ‘grammatical’ and which should be seen as ‘gestural’ in nature (see for instance Liddell 2003 for a statement of the ‘gestural’ position). The reader who disagrees with our terminological choices need not be distracted by them: our goal is to show, irrespective of terminology, that signs and pro-speech gestures share detailed formal properties in areas that have not been precisely described up to this point.

1.2 Why study gestural grammar?

While there has been considerable work on the interaction between language and gestures, only recently have there been attempts to study the formal semantics of gestures, as well as aspects of their formal grammar (Lascarides and Stone 2009; Giorgolo 2010; Ebert and Ebert 2014; Schlenker 2018a; Schlenker and Chemla 2018). This research direction has intrinsic interest because gestures offer a rich source of new data for linguistics and allied fields, but it is also essential to a proper comparison between spoken and signed languages.

There is no doubt that sign languages are full-fledged languages with the same general grammatical and semantic properties as spoken languages (with some modality-specific specificities [e.g. Sandler and Lillo-Martin 2006]). But some researchers have raised the possibility that, along certain dimensions at least, sign languages might be expressively richer than spoken languages because they have the same logical spine but richer iconic resources (e.g. Schlenker 2018c, 2018e). Other researchers have countered that the role of iconicity in this comparison cannot be properly assessed unless co-speech gestures are taken into account; in the words of Goldin-Meadow and Brentari (2017), “sign should not be compared with speech — it should be compared with speech-plus-gesture.” Gestures are thus essential to a comparison between sign and speech.

But which gestures should the comparison focus on? It has been argued that even when co-speech gestures are re-integrated in the comparison, there remain systematic differences between the two modalities because the contributions made by co-speech gestures are usually not at-issue, whereas iconic modulations in sign languages often can be (Ebert and Ebert 2014; Schlenker 2018a, 2018b). By contrast, some gestures (‘pro-speech gestures’) that fully replace words (rather than accompanying them) make at-issue contributions, and for this reason they will play a prominent role in the present comparison between gestural and sign language grammar. But being gestures, they lack the conventional character, semantic richness, and sophisticated grammatical rules of sign languages; thus we can at best hope to uncover a ‘proto-grammar’ for gestures.

Still, gestures in general and pro-speech gestures in particular might be important to understand the origins of sign languages. It is noteworthy that homesigners, who grow up without access to sign language, do end up developing gestural languages that share some properties of sign languages (e.g. Goldin-Meadow 2003; Coppola et al. 2013; Abner et al. 2015b), although they are also expressively and communicatively far less rich (hence the importance, emphasized in much research, of providing deaf children with full access to sign language, e.g. Mellon et al. 2015). It is thus natural to ask whether pro-speech gestures might display some grammatical-like properties.

On the syntactic side, Goldin-Meadow et al. (2008) showed that hearing speakers asked to use gestures to silently represent complex actions preferentially adopted an SOV order (subject - object - verb, or actor - patient - action), irrespective of the syntax of their native language. Furthermore, they did so both in communicative tasks (gesturing an entire action for an audience) and in non-communicative tasks (involving the arrangement of transparencies representing an event and its participants), which suggests that the preference is cognitive in nature.

In this piece, we attempt to investigate the acceptability of pro-speech gestures using a different method: we embed them in full-fledged spoken sentences, so that the grammatical spine remains that of English, with gestures ‘imported’ to fulfill certain syntactic and semantic functions.

We do not claim that pro-speech gestures are common—quite the opposite. It is all the more striking that they must follow some rules that seem to have counterparts in sign language—which might suggest that the two cases share a common cognitive/linguistic origin.

1.3 Goals and limitations

We will thus argue that several non-trivial properties of sign language grammar can be found in non-signers’ intuitions about pro-speech gestures. Indirectly, then, they know some properties of sign language grammar (although they usually don’t know that they know them, as these properties have nothing to do with common and often incorrect representations of sign language in non-signers). These results should be seen in the context of a broader comparison between sign language and gestures, and in particular of the finding that there are clear connections between the iconicity of signs and of gestures (Ortega et al. 2017).

There are also important limitations to our enterprise. As we explain below, we base our discussion on the judgments of three consultants who are native speakers of American English. We consulted them by way of a survey with videos of a native speaker of American English. Sprouse and Almeida (2012, 2013), Sprouse et al. (2013) have argued for the general validity of introspective methods in standard linguistic judgments. Tieu et al. (2017, 2018) have largely confirmed with experimental means early semantic judgments on co-speech gestures that appeared in the literature (Schlenker 2018a). Tieu et al. (2019) have done the same for semantic judgments on pro-speech gestures. Closer to our topic, we will briefly review experimental results from Schlenker and Chemla (2018) that confirm introspective judgments on the acceptability of some pro-speech gestures.

Still, it is fair to note that introspective methods are less well established for gestures than for speech, and our own survey suggests that there is more variation across consultants than one might expect for standard speech data. While we will concentrate on areas of agreement among consultants, this theoretical study ought to be followed by detailed experimental work in the future: as in all consultant work, concentrating on areas of agreement among subjects comes with the risk that noisy data give rise, post hoc, to apparent data stability; systematic methods must be used at a later stage to reach firmer conclusions. But as is the case in linguistics generally, we believe that this experimental stage is most fruitful if preceded by the kind of detailed theoretical and analytical work we seek to develop in this piece.

1.4 Organization

The rest of this article is organized as follows. We provide necessary background pertaining to gestural typology and elicitation methods in Sect. 2 (transcription conventions appear in Appendix I). An initial case pertaining to the interaction of ellipsis and loci in gestural verbs is summarized in Sect. 3; it has the advantage of being simultaneously based on introspective judgments and on experimental results that largely validate them. We consider cases involving several nominal as well as temporal and modal loci (Sect. 4), and focus on their uses in donkey anaphora (Sect. 5) and on their interaction with iconic conditions (Sect. 6). We then turn to expressions of plurality (Sect. 7), pluractionality (Sect. 8) and telicity (Sect. 9). We end the paper with possible gestural counterparts of sign language Role Shift (Sect. 10), before drawing some conclusions (Sect. 11). (Appendix II discusses less stable data pertaining to ‘Locative Shift,’ and Supplementary Materials contain the raw results of our gestural survey.Footnote 5)

2 Background: Typology, methods and transcriptions

2.1 Gesture typologies

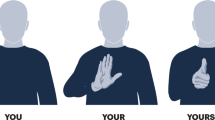

McNeill (2005) distinguishes between four types of gestures: iconic, metaphoric, deictic and beat. In a nutshell, iconic gestures resemble their denotations, metaphoric gestures may present “images of the abstract,” deictic gestures involve pointing (with the index finger, or other extensible body parts or held objects), while beats take “the form of the hand beating time.” Some authors, such as Giorgolo (2010), have a subcategory of ‘emblems,’ which are conventionalized gestures with a fixed meaning (e.g. the ‘thumb up’  gesture). We will primarily focus on iconic and ‘deictic’ gestures, although we will argue that some of the latter have anaphoric uses in addition to their deictic ones.


Schlenker (2018b) proposes a pragmatic typology in which different types of gestures make different types of semantic/pragmatic contributions depending in part on whether they are syntactically eliminable and they have a separate time slot. The main claim is that co-speech gestures and co-sign facial expressions, which co-occur with the words they modify, trigger (weak) presuppositions of a special sort, called ‘cosuppositions’; post-speech gestures and post-sign facial expressions, which follow the words they modify, trigger supplements, i.e. the same kind of contribution as appositive relative clauses; while pro-speech gestures, which fully replace some words, are very free in their semantic contributions and can in particular be at-issue (the same claim is made about iconic modulations of words).Footnote 6 We note that pro- and post-speech gestures are more natural if accompanied with an onomatopoeia, which might be because silent words are uncommon in spoken language, and/or because the onomatopoeia makes the iconic representation more complete.Footnote 7 We do not usually encode these, and the reader should try to pick all-purpose onomatopoeias that minimally affect the semantic contribution of the relevant gestures.

Finally, Schlenker (to appear) argues that a rich typology of linguistic inferences can be reproduced within pro- and post-speech gestures, and the main results are replicated with experimental means in Tieu et al. (2019). Specifically, the proposal is that pro-gestures can trigger scalar implicatures and associated phenomena (Blind Implicatures), presuppositions and associated phenomena (so-called ‘anti-presuppositions’ due to Maximize Presupposition), homogeneity inferences that are characteristic of definite plurals, as well as some expressive inferences that are characteristic of some pejorative terms. And as mentioned before, post-speech gestures trigger inferences that are very close to the supplements triggered by appositive relative clauses. By contrast with these earlier works, our focus in the present paper will be on grammatical properties of pro-speech gestures, although inferential judgments will sometimes be helpful to establish them.

2.2 Elicitation methods and notations

Sign language data are usually cited from earlier publications and were elicited by way of the Playback Method, described for instance in Schlenker et al. (2013) (see Appendix I for transcription conventions). When quantitative acceptability judgments appear (as superscripts) at the beginning of sign language sentences, they are on a 7-point scale, with 7 = best (references of the form (ASL, 7, 204) are to the videos on which the sentences were recorded).

Gestural data were obtained in two steps (here too, transcription conventions appear in Appendix I). In an initial phase, examples were constructed using the author’s judgments and those of linguists that were informally consulted (native speakers of American English who are not signers).Footnote 8 In a second phase, judgments were systematically checked by (i) creating videos of all examples, produced by a native speaker of American EnglishFootnote 9 (they are linked, in anonymized form, to the examples); (ii) asking three colleagues for systematic judgments: all three are native speakers of American English, none of them is a signer,Footnote 10 two have conducted research on gestures before, one of them hasn’t. We focus on areas of agreement among consultants, but the full survey and results can be found in the Supplementary Materials.

Acceptability scores (on a 7-point scale, with 7 = best) on English gestural data appear (as superscripts) at the beginning of examples. ‘Translations’ of gestures are justified by inferential judgments. When different consultants have different inferential judgments, we write ‘2/3 consultants’ to mean ‘2 consultants out of 3’ (since some consultants may offer several readings, the total may sum to more than 3). All raw data (acceptability as well as inferential judgments) can be found in the Supplementary Materials.

3 Initial case: Gestural agreement

Investigating gestures by way of acceptability judgments is not a fully accepted method yet, and thus we start with a brief summary of an initial case study in which introspective judgments were confirmed with experimental means.

3.1 Agreement verbs: Signs vs. gestures

Sign languages typically use positions in signing space, called ‘loci,’ to realize discourse referents (e.g. Sandler and Lillo-Martin 2006; Schlenker 2017a). Loci that denote elements of the discourse situation (including the signer and addressee) must correspond to their real position. Loci that correspond to other elements can be introduced in relatively arbitrary positions of the horizontal plane. One common way to realize pronouns is to ‘index,’ i.e. point towards, the relevant loci.

In ASL, some verbs, ‘agreement verbs’ (also called ‘directional verbs’ or ‘indicating verbs’), include loci in their realization. These have been argued to display the behavior of agreement markers (Lillo-Martin and Meier 2011), although alternative analyses have been offered as well (e.g. Liddell 2003; see Pfau et al. 2018 and Schembri et al. 2018 for a recent version of the debate). Schlenker and Chemla (2018) argue that agreement verbs have gestural counterparts. They further argue that the two constructions interact in similar ways with ellipsis. To introduce these findings, let us start by considering the ASL paradigm in (2), constructed around the agreement verb 1-GIVE-a or 1-GIVE-2.

(2)

a. 7 POSS-2 YOUNG BROTHER MONEY IX-1 1-GIVE-a. IX-2 IX-1 NOT.

‘Your younger brother, I would give money to. You, I wouldn’t.’

b. 4.7 POSS-2 YOUNG BROTHER MONEY IX-1 1-GIVE-a. IX-2 IX-1 NOT 1-GIVE-a.

c. 7 POSS-2 YOUNG BROTHER MONEY IX-1 1-GIVE-a. IX-2 IX-1 NOT 1-GIVE-2.

‘Your younger brother, I would give money to. You, I wouldn’t give money to.’ (ASL, 34, 1558; 4 judgments)

Here the verb GIVE is realized by way of a movement from the first person locus 1 to the third person locus a (hence: 1-GIVE-a) or to the second person locus 2 (1-GIVE-2). (2)b,c are controls without ellipsis: they establish, unsurprisingly, that a second person object must trigger second person object agreement. But (2)a shows that under ellipsis things are different: on the assumption that the missing verb is copied from the antecedent clause, its object agreement marker can be disregarded in the elided clause, since otherwise the copied verb 1-GIVE-a would take a second person object argument.
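The pattern in (2) can be restated as a toy model. This is only an illustrative sketch, not an analysis proposed in the text: the class `AgreementVerb` and the function `licensed` are hypothetical names, and graded acceptability is simplified to a yes/no outcome. The sketch assumes that an overt agreement verb must match the locus of its object, whereas a verb copied under ellipsis has its object agreement marker disregarded.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgreementVerb:
    """An agreement verb such as 1-GIVE-a: a stem plus subject and object loci."""
    stem: str
    subject_locus: str
    object_locus: str


def licensed(verb, object_locus, elided):
    """Toy licensing condition (an assumption of this sketch).

    Overt verb: its object agreement marker must match the object's locus.
    Elided (copied) verb: the object agreement marker is disregarded.
    """
    if elided:
        return True
    return verb.object_locus == object_locus


give_a = AgreementVerb("GIVE", "1", "a")  # 1-GIVE-a
give_2 = AgreementVerb("GIVE", "1", "2")  # 1-GIVE-2

# (2)b: overt 1-GIVE-a with a second person object (mismatch)
overt_mismatch = licensed(give_a, "2", elided=False)
# (2)c: overt 1-GIVE-2 with a second person object (match)
overt_match = licensed(give_2, "2", elided=False)
# (2)a: 1-GIVE-a copied under ellipsis with a second person object
ellipsis_copy = licensed(give_a, "2", elided=True)
```

In this sketch only the overt mismatch of (2)b fails the condition, mirroring the lower rating reported for that sentence.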

Related effects are well known in connection with phi-features of spoken language pronouns. This is illustrated in (3), where both the third person features and the feminine features of her are ignored under ellipsis.

(3)

[Uttered by a male speaker] In my study group,

a. Mary did her homework, and I did too.

⇒ available bound variable reading in the second clause

b. Mary did her homework, and I did her homework too.

⇒ no bound variable reading in the second clause

(Schlenker and Chemla 2018)

Now the crucial observation is that the ASL data can to some extent be replicated with gestural verbs in English. Things are somewhat complicated by the fact that something like the second person version seems to do double duty as a neutral form, and hence it is glossed as (-2) in parentheses. Still, using a third person form with a second person object yields deviance, as shown by the boldfaced examples in (4).Footnote 11

(4) Your brother, I am going to [gestural verb, third person form] (/ [gestural verb, (-2) form]), and then you, I am going to ??[gestural verb, third person form] / [gestural verb, (-2) form].

(from Schlenker and Chemla 2018)

Crucially, when the gestural predicate occurs (with a bound variable) under ellipsis-like constructions, third person locus specifications can be ignored, both in VP-ellipsis in the strict sense, as in (5)b, and in the ‘stripping’ construction in (5)a.

(5) Your brother, I am going to [gesture image] / [gesture image], and then

a. [‘stripping’] you, too.

b. [VP-ellipsis] you, I will as well.

Importantly, Schlenker and Chemla (2018) showed that an online experiment with naive subjects confirmed the introspective judgments reported here. Specifically, in cases similar to (5)a, copying a gestural verb with third person object agreement (e.g. [gesture image]) was acceptable under ellipsis, despite the fact that the object (you) is second person. By contrast, an overt control with the same mismatch (e.g. you, I am gonna [gesture image]) was less acceptable. Thus a third person agreement marker can to some extent be ignored in the course of ellipsis resolution. More generally, initial gestural judgments by linguists can be replicated with naive subjects.

3.2 Theoretical directions

There are three broad directions that one could explore to explain the gestural and sign language data (see Schlenker and Chemla 2018 and also Schlenker 2016a).

(i) Person-based analyses: One possibility is that (i) the gestural verbs in (5) have a third person object marker which can be disregarded, and that (ii) person features can be disregarded in ellipsis resolution and under ‘only’ if they result from agreement. The latter assumption is often made for phi-features more generally, for instance to account for the data in (3).

This analysis is insufficient to account for sign language data because there can be an unbounded number of third person loci: it seems that bound loci rather than person per se can be disregarded in the course of ellipsis resolution. As we will see below, this conclusion extends to gestural loci.

(ii) Variable-based analyses: An alternative is to take the loci to instantiate variables, and to allow for binding, as is represented in the gestural case in (6) for the sentence in (5)a: the locus variable a gets bound in the first part of the sentence, and the entire VP gets copied in the second part.

(6) Your brother, λa I am going to [gesture image], and then you too λa I am going to [gesture image].

(iii) Agreement-based views: For the sign language case, Kuhn (2015a), followed by Schlenker (2016a), argues that treating loci as variables is not enough, and that in some cases one needs a rule that allows some loci to be inherited by way of agreement. On such an analysis, the locus a in (5)a is taken to be disregarded by ellipsis resolution, as in (7).

(7) Analysis of (5)a

Your brother, λx_a I am going to [gesture image] x_a, and then you too λx I am going to [gesture image] x.

Without getting into details, we should mention that Schlenker and Chemla (2018) argue that the (complicated) ASL data that Kuhn (2015a) used to motivate his (agreement-based) conclusion can be replicated in the gestural case.Footnote 12
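As a rough illustration of the variable-based direction in (ii) only, the copied VP can be thought of as a function of the locus variable: the same function is reused in the elided clause and applied to a different argument. The sketch below is a simplification under stated assumptions. The function name `vp`, the placeholder verb label `"GESTURE"`, and the tuple encoding of events are hypothetical, and nothing here captures the agreement-based refinement in (iii).

```python
def vp(a):
    """Property corresponding to 'λa. I am going to GESTURE a'.

    The locus variable a is bound by the lambda, so its value is whatever
    argument the property is applied to. "GESTURE" is a placeholder label
    for the gestural verb, not a gloss taken from the source.
    """
    return ("speaker", "GESTURE", a)


# Antecedent clause: the property applies to 'your brother'.
antecedent_clause = vp("your brother")

# Elided clause: the same property is copied and applies to 'you'.
elided_clause = vp("you")
```

Because the locus is a bound variable in this sketch, copying the VP does not carry a fixed third person referent into the second clause.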

4 Simple loci

One striking observation in sign language is that there is no clear upper limit on the number of loci that can be simultaneously used besides limitations of performance; in this respect, loci sharply differ from rich discourse referent systems found in spoken language (see for instance Schlenker 2017a). Importantly, pronouns realized by way of locus indexing are constrained by some principles of Binding Theory, with some versions of reflexive and non-reflexive pronouns (e.g. Koulidobrova 2011; Sandler and Lillo-Martin 2006) and Strong Crossover effects (Schlenker and Mathur 2013).

We will now see that several properties of sign language loci can be replicated with pro-speech pointing in gestures (see for instance Cormier et al. 2013 for a comparison between sign language pronouns and co-speech pointing).

4.1 Nominal loci

Schlenker and Chemla (2018) only tested the existence of a single third person locus, and only did so by way of gestural agreement verbs. We will now go further and show that there can be several third person loci, and that they can be indexed by way of pro-speech pointing (see for instance Kendon 2004 for a general discussion of pointing in gestures, including the diversity of pointing methods that can be used).Footnote 13

The method we use here and below is the following: we use co-speech gestures to introduce three loci corresponding to John, Mary and Sam, and we do so by way of open hands placed in the relevant position. These loci are then retrieved by way of pro-speech pointing. It was noted in Schlenker and Chemla (2018) that acceptability of pro-speech gestures is a bit ameliorated when they are clause-final, and we follow suit in testing clause-final positions whenever this is possible.

(8) Yesterday I had a long conversation with [gesture: open hand] [John], then with [gesture: open hand] [Mary], then with [gesture: open hand] [Sam]. You know who the company’s gonna promote?

a. 6.7 [gesture: pointing]. = John

b. 6.3 [gesture: pointing]. = Mary

c. 6.7 [gesture: pointing]. = Sam

d. 6 [gesture: pointing]. = me

e. 6 [gesture: pointing]. = you

(Video 3845 https://youtu.be/KLpow-YBNRs)

The acceptability of all pointing patterns in (8) is high (between 6 and 7 on a 7-point scale). And depending on which locus is indexed by way of pro-speech pointing, we obtain five different meanings for the answer: three pointing patterns correspond to the three introduced loci, and two additional ones are obtained by pointing towards the speaker or addressee. It is worth noting again that pointing gestures don’t just go proxy for pronouns: if John and Sam are males, answering him in this context would be intolerably ambiguous, as it would be entirely unclear whether John or Sam is being intended. Gestural pointing lifts the ambiguity.
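The disambiguation point can be made concrete with a small sketch. It is only an illustration under stated assumptions: the locus labels `"a"`, `"b"`, `"c"`, the gender values, and the function names are hypothetical, and the gender of Mary is assumed for the sake of the example (the text only considers the case where John and Sam are males).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Referent:
    name: str
    gender: str  # illustrative feature, assumed for this sketch


# Hypothetical labels for the three loci established in (8).
LOCI = {
    "a": Referent("John", "m"),
    "b": Referent("Mary", "f"),
    "c": Referent("Sam", "m"),
}


def resolve_pointing(locus):
    """Pointing retrieves exactly the referent associated with the indexed locus."""
    return [LOCI[locus].name] if locus in LOCI else []


def resolve_pronoun(gender):
    """A gendered pronoun is compatible with every referent bearing that gender."""
    return [r.name for r in LOCI.values() if r.gender == gender]


pointing_candidates = resolve_pointing("a")  # one candidate
pronoun_candidates = resolve_pronoun("m")    # two candidates: ambiguous
```

Under these assumptions, pointing returns a single candidate while `him` returns two, which is the sense in which pointing does not merely stand in for a pronoun.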

Importantly, it appears to be difficult to establish an arbitrary locus for the addressee, which mirrors the observation that sign language loci denoting speech act participants (and more broadly deictic elements) preferably correspond to their real position.Footnote 14 An attempt is displayed in (9).

(9) Tomorrow the boss will have a conversation with [gesture: open hand] [you] and with [gesture: open hand] [John]. And you know who the company will promote?

a. 4.3 [gesture: pointing]. = you

b. 5.3 [gesture: pointing]. = John

c. 3.3 [gesture: pointing]. = you

(Video 3853 https://youtu.be/Ja_AhMkVhSA)

Here an open hand [gesture image] co-occurs with you (on the right) at the beginning of the sentence, while another open hand [gesture image] co-occurs with John. This is precisely the device used in (8) to establish loci, but now the result is degraded when you is indexed, be it in position [gesture image], as in (9)a, or in the addressee’s position [gesture image], as in (9)c (the sentence is more acceptable if the final gestural pointing refers to John, as in (9)b).Footnote 15

Sign language loci can be bound by quantifiers (e.g. Sandler and Lillo-Martin 2006; Schlenker et al. 2013). Does the same hold for gestural loci? For standard binding (under c-command), the only clear case we have involves existential quantifiers, as in (10); we will see in Sect. 5 that several other cases can be devised with donkey pronouns (hence without c-command).

(10)

Whenever there is a Board meeting, [gesture] [at least one manager] asks [gesture] [the CEO] to promote

a. 5.3 [gesture]. = him

b. 4 [gesture]. = himself [= the CEO]

c. 4 [gesture]. = you

d. 4 [gesture]. = me

(Video 3869 https://youtu.be/TvXOIF-ateI)

Ratings suggest that [gesture] (the pointing gesture in (10)a) can be dependent on the existential quantifier [gesture] [at least one manager], and inferential questions (displayed in the Supplementary Materials) suggest that the pointing gesture yields the intended reading. Other options are degraded. For [gesture] and [gesture] (the pointing gestures in (10)c,d), this might simply be because the loci [gesture] and [gesture] that were established before are idle.Footnote 16 For [gesture] (the pointing gesture in (10)b), this might be because of a violation of Condition B, as is found without gestures in: *I asked the CEO_i to promote him_i; but investigating this possibility would require a more sophisticated study.Footnote 17

Binding by every- and none-type quantifiers is, for our consultants, more degraded. But this might be due to the realization we picked: in the case of (12), two consultants explicitly mentioned that the example would improve if the locus-establishing open hand [gesture] just co-occurred with manager rather than with no manager; we leave this question for future research.

(11)

Whenever there is a Board meeting, [gesture] [every manager] asks [gesture] [the CEO] to promote

a. 4 [gesture]. = him

b. 4 [gesture]. = himself [= the CEO]

c. 3.7 [gesture]. = you

d. 3.7 [gesture]. = me

(Video 3867 https://youtu.be/2wRveOVe3ls)

(12)

Whenever there is a Board meeting, [gesture] [no manager] ever asks [gesture] [the CEO] to promote

a. 3.2 [gesture]. = him

b. 2.8 [gesture]. = himself [= the CEO]

c. 2.8 [gesture]. = you

d. 2.8 [gesture]. = me

(Video 3871 https://youtu.be/wv5sStoyZg4)

If pointing gestures can be bound under existential quantifiers, one might expect that they can also give rise to bound readings under ellipsis. The existence of such bound readings is attested in the literature on sign language (for ASL and LSF, see for instance numerous examples in Schlenker 2014). In our limited data, the preferred reading seems to be a strict one:

(13)

5.7 Whenever there is a Board meeting, the [gesture] [first manager] always asks the CEO to promote [gesture], and the [gesture] [second manager] does too!

Preferred reading: strict, i.e. the second manager always asks the CEO to promote the first manager (3/3 consultants; 1/3 consultants: ‘with difficulty,’ a bound variable reading is available)

(Video 3899 https://youtu.be/kzfXdbLL2cs)

Still, it is important to determine whether with the right context a bound variable reading emerges. This seems to be the case for some consultants but not others, as shown by the example in (14) and the individual results in (15): Consultants 2 and 3 allow for a bound reading in this scenario.

(14)

4.8 Whenever there is a Board meeting, the [gesture] [first] manager and the [gesture] [second] manager both look after their own interests. So the [gesture] [first manager] always asks the CEO to promote [gesture], and the [gesture] [second manager] does too!

2/3 consultants: strict reading; 2/3 consultants: bound reading

(Video 3897 https://youtu.be/6-1cKHiKjY0)

(15)

Acceptability and inferential results for (14)

Video 3897      Acceptability   Reading
Consultant 1    2.5             strict
Consultant 2    6               bound
Consultant 3    6               strict or, with some effort, bound
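As a quick consistency check, the overall score of 4.8 given in (14) can be related to the individual ratings in (15). The minimal sketch below assumes that a reported score is the arithmetic mean of the three consultants' ratings, rounded to one decimal; this averaging procedure is an assumption on our part and is not stated in this section, and the variable names are purely illustrative.

```python
# Individual acceptability ratings for (14), copied from the table in (15).
ratings = {"Consultant 1": 2.5, "Consultant 2": 6, "Consultant 3": 6}

# Assumption: the score reported in (14) is the arithmetic mean of the ratings.
mean_rating = sum(ratings.values()) / len(ratings)
rounded_mean = round(mean_rating, 1)

print(rounded_mean)
```

Under that assumption, the rounded mean coincides with the 4.8 reported in (14), while the spread between 2.5 and 6 shows how much the average conceals about individual variation.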

4.2 Agreement verbs revisited

Having seen that several third person gestural loci can be introduced in the same sentence, we should go back to the agreement data discussed in Sect. 3, and extend them to display the use of several third person loci. In (16)c, we replicate the observation that bound third person loci can be ignored under ellipsis resolution, but now in an example that involves several person loci; overt locus mismatch as in (16)b is less acceptable.

(16)

When I was a kid, I often got into fights with [gesture] [your brother], but/andFootnote 18 also with [gesture] [your sister]. One morning,

a. 6.3 your brother, I tried to [gesture], and then your sister, I tried to [gesture].

b. 4.5 your brother, I tried to [gesture], and then your sister, I tried to [gesture].

c. 5.7 your brother, I tried to [gesture], and then your sister too!

⇒ the speaker tried to slap the addressee’s sister

(Video 3905 https://youtu.be/BVSuyFsuj4o)

As a result, the findings of Sect. 3 cannot be interpreted in terms of a simple distinction between third and second person. Rather, it appears that loci (rather than just standard person markers) can be ignored in the course of ellipsis resolution (as mentioned in Sect. 3.2 in connection with sign language, there are several ways to explain this phenomenon).

As an extension (but without considering ellipsis), one could ask whether cases of gestural agreement can be found beyond the object case. A non-object case is found in (17); it may be analyzed in terms of either subject agreement or spatial agreement (both types are instantiated in sign language).Footnote 19 Deviance is obtained if the gestural verbs [gesture] (displaying a plane take-off) and [gesture] (displaying a helicopter take-off) originate from positions that do not correspond to those introduced with one plane and one helicopter respectively.Footnote 20

(17)

The company has [gesture] [one plane] and [gesture] [one helicopter]. When the plane

a. 6.3 [gesture], the noise is unbearable, but when the helicopter [gesture], less so.

b. 3.8 [gesture], the noise is unbearable, but when the helicopter [gesture], less so.

(Video 3917 https://youtu.be/OzgrrYKChI0)

In sum, the existence of loci, the person distinctions they display, and their behavior under ellipsis are reminiscent of sign language loci.

4.3 Temporal and modal loci

Schlenker (2013) argues that ASL loci can have temporal and modal uses, as is illustrated in (18) (it is uncontroversial that ASL loci can have locative uses as well, a point we revisit in Appendix II).

(18)

a. Context: Every week I play in a lottery.

7 IX-1 [SOMETIMES WIN]a. IX-1 [SOMETIMES LOSE]b. \(\hat{\overline{\mbox{IX-a}}}\) IX-1 HAPPY.

‘Sometimes I win. Sometimes I lose. Then [= when I win] I am happy.’ (ASL, 7, 204)

b. Context: The speaker is playing in a lottery.

6.8 NOW IX-1 [POSSIBLE RICH]a. [POSSIBLE SAME POOR]b. \(\hat{\overline{\mbox{IX-a}}}\) IX-1 LUCKY.

‘Now I might be rich. I might also still be poor. Then [= if I am rich] I am lucky.’ (ASL, 7, 196) (Schlenker 2013)

Can such uses be replicated with gestural loci? While we make no claim about the availability of these readings with index pointing (of the sort that was used above for nominal reference), full open hand pointing (palm up) is accepted by our consultants in the temporal case, as in (19), and to a lesser extent in the modal case, as in (20).

(19)

5.7 Every week John plays the lottery. Sometimes he [gesture] wins, and sometimes he [gesture] loses. And you know when I am nice to him? [gesture].

⇒ the speaker is nice to John when John wins

(Video 3921 https://youtu.be/m3Z9N8AFzoE)

(20)

5 John might [gesture] win, and he might [gesture] lose. And you know in what case I’ll be nice to him? [gesture].

⇒ the speaker will be nice to John in case John wins

(Video 3925 https://youtu.be/lltteeqgrIk)

Temporal and modal uses of gestural loci should be explored in future research. If index pointing is worse in this case than full hand pointing, it would have to be explained why, in particular in connection with the diverse modes of pointing discussed by Kendon (2004).

5 Dynamic loci

5.1 Initial cases

5.1.1 Symmetric cases with sign language and with gestural loci

Schlenker (2011b) argues that loci can be the overt realization of dynamic discourse referents, as in the theories of ‘donkey anaphora’ developed in dynamic semantics (e.g. Kamp 1981; Heim 1982). The argument was based on examples such as (21): each indefinite introduces a locus within the WHEN-clause, but affects the value of pronouns found in the main clause. The indexings in (21)a and (21)b are both relatively acceptable (with a preference for anaphoric links that follow linear order), as long as the two pronouns index different loci. This is expected on standard theories of loci-qua-indices because using the same locus in the subject and object position would yield an odd coreferential reading, which entails that a Frenchman wonders who he lives with.

(21)

WHEN [FRENCH MAN]a a,b-MEET [FRENCH MAN]b,

‘When a Frenchman meets a Frenchman,’

a. IX-a WONDER WHO IX-b LIVE WITH.

‘the former wonders who the latter lives with.’

b. ? IX-b WONDER WHO IX-a LIVE WITH.

‘the latter wonders who the former lives with.’

c. # IX-a WONDER WHO IX-a LIVE WITH.

‘the former wonders who the former lives with.’

d. # IX-b WONDER WHO IX-b LIVE WITH.

‘the latter wonders who the latter lives with.’

(ASL, i P1040945; Schlenker 2011b)

Because the indefinites do not c-command the pronouns, standard binding cannot apply in these configurations. Dynamic binding offers one possible analysis (e.g. Kamp 1981; Heim 1982). E-type theories (e.g. Heim 1990; Elbourne 2005) offer another, according to which the pronouns realize concealed definite descriptions. But the examples were selected to make such an analysis difficult. The reason is this: depending on the E-type theory under consideration, IX-a would have to be paraphrased as the Frenchman (Elbourne 2005), or the Frenchman that meets a Frenchman (Heim 1990), and IX-b would then be analyzed as the Frenchman, or the Frenchman that a Frenchman meets (these are called ‘bishop examples’ in the literature because the most famous cases involved a bishop meeting a bishop). Elbourne’s theory has the advantage of elegance: for him, he is represented as he_Frenchman, with ellipsis of Frenchman. But in the case at hand, ensuring that the two descriptions he and him (i.e. he_Frenchman and him_Frenchman) denote different individuals is non-trivial, as we will see when we discuss the theoretical consequences.

Can similar data be replicated with gestural loci? Basic cases of donkey anaphora are easy to construct, as in (22), which was already discussed in simplified form in (1).

(22)

Whenever I can hire [gesture] [a mathematician] or [gesture] [a sociologist], I pick

a. 6.7 [gesture]. (= the mathematician)

b. 6.7 [gesture]. (= the sociologist)

(Video 3927 https://youtu.be/nU5xdXLV43c)

Cases with symmetric antecedents can be created as well, as shown in (23). As in sign language, using different loci in subject position (co-occurring with he) and in object position (as a pro-speech gesture) yields a disjoint reference reading. Using the same locus twice yields a locally coreferential reading, which is degraded, probably for plausibility reasons, and possibly because of a Condition B effect. Be that as it may, the two patterns of indexing yield entirely different readings.Footnote 21

(23)

Whenever [gesture] [a bishop] meets [gesture] [a bishop],

a. 6 [gesture] he blesses [gesture], and then [gesture] he blesses [gesture].

⇒ the first bishop blesses the second bishop.Footnote 22

b. 4.5 [gesture] he blesses [gesture], and then [gesture] he blesses [gesture].

⇒ the first bishop blesses the first bishop.

(Video 3933 https://youtu.be/seR8COPvryU)

Finally, Elbourne (2005) noticed that when the two symmetric indefinite antecedents are conjoined in subject position, as in (24)b (which contrasts with the original ‘bishop’ example in (24)a), the result is degraded. He took this to be a positive result for his theory: with certain auxiliary assumptions, Elbourne could explain that the symmetric antecedents play entirely similar semantic roles as well, which makes it impossible for the pronoun-as-description to pick only one singular bishop (the idea was that in the subject-object case in (24)a, by contrast, the antecedent bishops play not just syntactically but also semantically distinct roles).

(24)

a. If a bishop meets a bishop, he greets him.

b. #If a bishop and a bishop meet, he greets him.

Schlenker (2011b) argues that this observation does not extend to ASL (and LSF) examples, as illustrated in (25).

(25)

WHEN SOMEONEa AND SOMEONEb LIVE TOGETHER, IX-a LOVE IX-b.

‘When someone and someone live together, the former loves the latter.’

(ASL, i P1040966; Schlenker 2011b)

Interestingly, pro- and co-speech pointing yields judgments that are closer to the sign language than to the spoken language data.Footnote 23 Thus in (26), we modify (23) so as to have conjoined antecedents. Unsurprisingly, without pointing, the examples in (26)a,b are very degraded. Importantly, a sharp amelioration is observed with pro-speech pointing as in (26)d, and also with co-speech pointing as in (26)c (the latter case is better—possibly because co-speech pointing is more standard than pro-speech pointing, and possibly also because the model’s realization of the pro-speech pointing didn’t include onomatopoeias, hence some completely silent ‘words’).

Notation: capitalized HE and HIM serve to encode phonological emphasis.

(26)

Whenever [gesture] [a bishop] and [gesture] [a bishop] meet,

a. 1 he blesses him, and then he blesses him.

b. 2.2 HE blesses HIM, and then HE blesses HIM.

c. 7 [gesture] HE blesses [gesture] HIM, and then [gesture] HE blesses [gesture] HIM.

d. 5.7 [gesture] HE blesses [gesture], and then [gesture] HE blesses [gesture].

(Video 3937 https://youtu.be/QCvtjh8a33I)

In sum, a contrast that was found between English and ASL can be replicated internal to English, using English-plus-gesture as a way to replicate a version of the ASL facts.

5.1.2 Theoretical consequences

In Schlenker (2011b, 2017a), the sign language data summarized above were used to suggest that (i) dynamic approaches predict the correct patterns of indexing in the case of donkey sentences, while (ii) E-type approaches in general, and Elbourne’s analysis in particular, are faced with a dilemma: either they are refuted by the sign language data, or they must be brought so close to the dynamic analysis that they might end up becoming a variant of it. As we will see, the structure of the argument straightforwardly extends to our gestural data.

Schematically, we displayed above some examples similar to (27)a, but with sign language or gestural loci that realize a structure akin to (27)b: each antecedent is associated with a distinct locus, and each pointing index also retrieves a distinct locus; if instead two indexes retrieve the same locus, a coreferential reading is obtained.

(27)

a. If a bishop meets a bishop, he blesses him.

b. If [a bishop]_x meets [a bishop]_y, he_x blesses him_y.

c. If [a bishop] meets [a bishop], he_bishop blesses him_bishop.

c′. If [a bishop] meets [a bishop], he_{bishop #1} blesses him_{bishop #2}.

The dynamic analysis can straightforwardly account for the data by positing that each indefinite introduces a separate variable. This allows each pronoun to depend on a different quantifier if \(he _{x}\) and \(him _{y}\) carry different variables (we could also have \(he _{y}\)/\(him _{x}\), but not \(he _{x}\)/\(him _{x}\) or \(he _{y}\)/\(him _{y}\): the pronouns must carry different variables to refer to different bishops, or else the sentence would be understood as involving self-blessingsFootnote 24).
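The role of distinct variables can be made concrete with a toy sketch. It is not an implementation of any of the cited dynamic theories: it merely assumes that the antecedent of (27)b outputs one variable assignment per ordered pair of distinct bishops (we simplify by treating every such pair as a meeting), and that a pronoun or pointing gesture looks up the value of the variable it carries. The function names and the two-bishop domain are hypothetical.

```python
from itertools import permutations


def antecedent_assignments(individuals):
    """'[a bishop]_x meets [a bishop]_y': each indefinite introduces its own
    variable, so the antecedent yields one assignment per ordered pair of
    distinct bishops (simplifying assumption: every such pair meets)."""
    return [{"x": b1, "y": b2} for b1, b2 in permutations(individuals, 2)]


def consequent(subject_var, object_var, assignment):
    """'he_subject blesses him_object': each pronoun retrieves the value of
    the variable (locus) it carries."""
    return (assignment[subject_var], "blesses", assignment[object_var])


bishops = ["bishop1", "bishop2"]  # hypothetical toy domain
assignments = antecedent_assignments(bishops)

# he_x blesses him_y: distinct variables, hence distinct bishops.
disjoint = [consequent("x", "y", g) for g in assignments]
# he_y blesses him_x: also distinct variables, with the roles reversed.
reverse = [consequent("y", "x", g) for g in assignments]
# he_x blesses him_x: same variable twice, hence self-blessing.
reflexive = [consequent("x", "x", g) for g in assignments]

print(disjoint)
print(reflexive)
```

In this toy model the disjoint and reflexive indexings yield different sets of blessing events, which is the contrast that overt loci make visible in (23).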

Since the E-type analysis does not allow for co-indexing without c-command, it cannot adopt the same solution. But in Elbourne’s influential theory, it can still establish a formal link between the pronouns and their antecedents: it is the formal link provided by ellipsis resolution. On this view, if an indefinite and a pointing sign/gesture share a locus, the elided NP realized by the pronoun is obtained by copying it from the antecedent indefinite. But in symmetric examples such as (27)a, analyzed as in (27)c, all we get in this way are two identical descriptions the bishop and the bishop, and further measures are needed to ensure that they can denote different individuals.

To address this problem, Elbourne starts by positing that the if-clause quantifies over extremely fine-grained situations—so fine-grained, in fact, that a situation <x, y, meet> in which x meets y is different from a situation <y, x, meet> in which y meets x (this is the sense in which the subject and object bishop antecedents are not just syntactically but also semantically distinct). Still, this is not quite enough: in order to obtain the right meaning, the pronouns must still be endowed with some additional material—perhaps provided by the context—to pick out different bishops in a given situation of the form <bishop1, bishop2, meet>. Even with the device of very fine-grained situations, the analysis in (27)c is insufficient because it does not specify which bishop each pronoun refers to; in (27)c′, the pronouns are enriched with the (stipulated) symbols #1 vs. #2, which are intended to pick out the ‘first’ or the ‘second’ bishop in <bishop1, bishop2, meet>.

But the question is how these index-like objects end up in the Logical Form. This presents a dilemma for Elbourne’s theory. If the symbols #1 and #2 are inherited by the mechanism of ellipsis resolution itself, we will end up with something very close to a dynamic analysis: the antecedents carry a formal index, and the pronouns recover the very index carried by their antecedent, as is illustrated in (28).

(28)

If [a bishop]_{#1} meets [a bishop]_{#2}, he_{bishop #1} blesses him_{bishop #2}.

On the other hand, if #1 and #2 are provided by a mechanism—possibly a contextual one—which is independent from NP ellipsis resolution, we lose the desired connection between interpretation and pointing: we make the incorrect prediction that pointing signs or gestures corresponding to he and him can index the same locus while inheriting #1 and #2 respectively (since these are now provided by the context, not by pointing itself). This is of course undesirable.

The argument was initially developed with sign language data, but it is striking that it can now be developed just as well with gestural data.

5.2 Refinements

Schlenker (2011b) discusses various more sophisticated examples, involving generalized quantifiers as well as antecedents under negation; both cases have interesting theoretical consequences for dynamic semantics. The first can be replicated with gestural pointing; it isn’t yet clear that the second can be.Footnote 25

5.2.1 Dynamic anaphora to generalized quantifiers

❑ Sign language vs. gestural data

Schlenker (2011b) argues that with generalized quantifiers, ASL and LSF loci give rise to readings that resemble those obtained in spoken language, with the difference that the anaphoric links are overt. On the intended reading of (29), they_i refers to the maximal set of linguists that meet psychologists, and similarly them_k denotes the maximal set of psychologists that some linguists meet. Similar readings carry over to ASL and LSF, as illustrated for ASL in (30). It is easy to see that similar facts hold of the pro-speech pointing gesture IX-b in (31), where it is preferably interpreted to refer to the psychologists present rather than to psychologists in general.

(29)

When [more than 10 linguists]_i meet [fewer than 4 psychologists]_k, they_i (each) criticize them_k.

(30)

IF LESS [THREE FRENCH PERSON HERE]a AND LESS [FIVE AMERICAN PERSON HERE]b, IX-arc-a WILL GREET-b IX-arc-b.

‘If less than three Frenchmen were here and less than five Americans were here, they [= the Frenchmen] would greet them [= the Americans].’ (ASL, 2, 117; Schlenker 2011b)

(31)

5.7 Whenever [gesture] [more than 10 linguists] meet [gesture] [fewer than 4 psychologists], [gesture] they criticize [gesture IX-b].

⇒ the linguists present criticize the psychologists present [3/3 consultants] (possible but less preferred [1/3 consultants]: the linguists present criticize psychologists in general)

(Video 3941 https://youtu.be/NzbFwZD_G_c)
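The 'maximal set' readings at stake in (29) and (31) can be illustrated with a small sketch. It assumes a single hypothetical meeting situation represented as a list of (linguist, psychologist) pairs, and it simplifies 'they criticize them' to every linguist in the first set criticizing every psychologist in the second; the function names and the toy data are ours and carry no theoretical weight.

```python
def maximal_sets(meetings):
    """Return the maximal set of linguists who meet psychologists and the
    maximal set of psychologists met by some linguist."""
    linguists = {linguist for linguist, _ in meetings}
    psychologists = {psychologist for _, psychologist in meetings}
    return linguists, psychologists


def antecedent_holds(linguists, psychologists):
    """'more than 10 linguists meet fewer than 4 psychologists'"""
    return len(linguists) > 10 and len(psychologists) < 4


# Hypothetical situation: 11 linguists each meet psychologists P1 and P2;
# psychologist P3 exists but is not present at the meeting.
all_psychologists = {"P1", "P2", "P3"}
meetings = [(f"L{i}", p) for i in range(11) for p in ("P1", "P2")]

they_i, them_k = maximal_sets(meetings)

if antecedent_holds(they_i, them_k):
    # Simplifying assumption: each linguist criticizes each psychologist present.
    criticisms = {(l, p) for l in they_i for p in them_k}
else:
    criticisms = set()

# Psychologists in general who are not picked up by the pointing gesture.
absent = all_psychologists - them_k
print(sorted(them_k), sorted(absent))
```

On the preferred reading reported in (31), the object pointing gesture behaves like `them_k` here: it picks out the psychologists present and leaves out a psychologist such as the hypothetical P3.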

❑ Theoretical consequences

These findings help address an important theoretical question for dynamic semantics: are discourse referents introduced by (i) all quantifiers (van den Berg 1996; Brasoveanu 2006; Nouwen 2003) or (ii) only existential ones (such as a man, two men, etc.; e.g. Kamp and Reyle 1993)? Kamp and Reyle (1993) postulated two different mechanisms of anaphora resolution for donkey anaphora: for existential quantifiers, they adopted a dynamic approach allowing for co-indexing without c-command. But for other quantifiers, they adopted a different mechanism, inspired by E-type theories, in which the dependent pronouns went proxy for definite descriptions. The sign language and gestural data discussed in this section suggest that no differential treatment is called for: loci appear to establish the same kind of formal and interpretive relation in the two cases, which argues for the homogeneous accounts developed by van den Berg, Brasoveanu and Nouwen, among others (for details in the context of sign language data, see Schlenker 2011b).

5.2.2 Dynamic anaphora across negation

❑ Sign language vs. gestural data

Schlenker (2011b) further argues that dynamic binding can occur across negation. The existence of such examples in English, as in (32), is not controversial, but their analysis is: the question is whether there is a formal anaphoric link between the donkey pronoun it and its antecedent an umbrella, despite the presence of the intervening negations. This question is of theoretical interest because early dynamic theories, such as Kamp (1981) and Heim (1982), predicted that negation should ‘break’ dynamic anaphoric links. Schlenker (2011b) argues that ASL examples make such a link visible, as in (33) and (34).

(32)

It is not true that John doesn’t have an umbrella. I have just seen it: it is red.

(33)

IX-1 NOT DOUBT SOMEONEa WILL GO MARS. IX-a WILL FAMOUS.

‘I don’t doubt that someone will go to Mars. He will be famous.’ (ASL, i P1040982; Schlenker 2011b)

(34)

IX-1 DOUBT [NO DEMOCRAT PERSON IX-open-handa]a WILL MATCH SUPPORT HEALTH CARE BILL WITH [REPUBLICAN PERSON]b. IX-1 THINK IX-a WILL a-GIVE-b A-LOT MONEY.

‘I don’t think no Democrat will cosponsor the healthcare bill with a Republican. I think he [= the Democrat] will give him [= the Republican] a lot of money.’ (ASL, 2, 229; Schlenker 2011b).

Schlenker (2011b) further argues that anaphora to a none-type quantifier is possible if the negative quantifier is itself under a negative operator, so that it ends up having existential force, as in (35)—or for that matter in (34) if one considers anaphora to no Democrat.

(35)

IX-1 DOUBT NO ONEa WILL GO MARS. IX-a WILL FAMOUS.

‘I don’t think no one will go to Mars. He [= the person who goes to Mars] will be famous.’ (ASL, i, P1040980; Schlenker 2011b)

Our attempt to replicate the sign language data with gestures starts with a paradigm that should be degraded: in (36), pro-speech pointing attempts to establish donkey anaphora to quantifiers that fail to have existence implications; on any theory, this should be degraded, and it is.

(36)

[gesture] [No Democrat] will strike a deal with [gesture] [a Republican], but we’ll have to give a lot of money to

a. 4 [gesture]. = the Democrats in general

b. 3.7 [gesture]. = the Republicans in general [3/3 consultants], or: the Republican or Republicans who strike a deal with Democrats [1/3 consultants]

(Video 3943 https://youtu.be/4-Al0spbN0A)

The question is whether this example gets significantly ameliorated upon the addition of a negation at the beginning, as in (37). There is in fact an amelioration, but it is less striking than one might wish. Still, it is worth noting that the inferential judgments change as well: for lack of a ‘donkey’ reading, pertaining to the Democrats and Republicans that performed the relevant action, our consultants primarily obtained in (36) readings involving the Democrats or Republicans in general. Things are different in (37), which has the hallmarks of a ‘donkey’ reading pertaining to the Democrats or Republicans who performed the relevant action.

(37)

It is not true that [gesture] [no Democrat] will strike a deal with [gesture] [a Republican], but we’ll have to give a lot of money to

a. 5 [gesture]. = the Democrat or Democrats who strike a deal with Republicans [3/3 consultants], or (less preferred) the Democrats in general [1/3 consultants]

b. 4.7 [gesture]. = the Republican or Republicans who strike a deal with Democrats [3/3 consultants], or (less preferred) the Republicans in general [1/3 consultants]

(Video 3947 https://youtu.be/DRSn9e6qKqs)

In English, a donkey pronoun can take as an antecedent a none-type quantifier found in a separate disjunct, as in (38). Schlenker (2011b) argues that this fact carries over to overt indexing in sign language, as in (39). As things stand, we have no evidence at all that this holds of gestural loci, as the judgments in (40) are rather degraded; in addition, inferential judgments are mixed.

(38)

Either there is no bathroom in this house or it is well hidden. (attributed to Barbara Partee; see also Geach 1962 and Evans 1977)

(39)

EITHER NO [DEMOCRAT IX-open-handa]a WILL MATCH SUPPORT HEALTH CARE BILL WITH [REPUBLICAN PERSON]b OR IX-a WILL a-GIVE-b A-LOT MONEY.

‘Either no Democrat will cosponsor the healthcare bill with a Republican, or he [= the Democrat] will give him [= the Republican] a lot of money.’ (ASL, 2, 230; Schlenker 2011b)

(40)

Either [gesture] [no Democrat] will strike a deal with [gesture] [a Republican], or we’ll have to give a lot of money to

a. 3.7 [gesture]. = the Democrats in general [2/3 consultants], or the Democrat or Democrats who strike a deal with Republicans [2/3 consultants]

b. 3.3 [gesture]. = the Republicans in general [2/3 consultants], or the Republican or Republicans who strike a deal with Democrats [2/3 consultants]

(Video 3949 https://youtu.be/xmC5rq_3pdg)

❑ Theoretical consequences

Our gestural data weakly replicate sign language data that suggested that negation need not ‘break’ anaphoric dependencies, and that even negative quantifiers such as No NP can introduce discourse referents. Such facts are theoretically important because they might argue for relatively unconstrained theories of the establishment and retrieval of discourse referents, as argued in recent dynamic semantics and related works (see for instance Brasoveanu 2010 and Schlenker 2011b). The general idea is that co-indexing is more liberally established than one might have thought, including across negation: it is thus possible to co-index it and an umbrella in (41). But a semantic condition is responsible for ‘filtering out’ undesirable anaphoric possibilities. In (41), one can take the problem to be that the index i denotes the maximal set of umbrellas that John has, but this set is asserted by the first sentence to be empty, hence a presupposition failure triggered by the pronoun \(\mathit{it} _{i}\).

(41)

#John doesn’t have [an umbrella]_i. It_i is red.

It is striking that not just sign language data but also gestural data might help bolster this conclusion. But it is also clear that much more work is needed: extant sign language data on this topic (from Schlenker 2011b) are from two languages (ASL and LSF) and two consultants; and gestural data are less than striking, as mentioned above.

Last, but not least, there is no theoretical reason why the case with disjunction in (40) should behave differently from that of negation in (37), but in our data (40) is rather degraded. On liberal theories of co-indexing, for which the deviance of (41) is due to a presupposition failure rather than to a lack of co-indexing, the effect of double negation can be reproduced with disjunction, as illustrated in (42)a.

(42)

a. Either John doesn’t have an umbrella, or it is broken.

b. Either John doesn’t have an umbrella, or his umbrella is broken.

This result is expected because theories of presupposition projection typically assume that a presupposition found in the second disjunct can be satisfied by the negation of the first disjunct (Beaver 2001; Schlenker 2008, 2009). This explains why the existence presupposition of his umbrella in (42)b is satisfied, and similarly in (42)a the maximal set of umbrellas that John owns can be presupposed to be non-empty. But on such liberal views of co-indexing, (40)a,b would be expected to be relatively acceptable, contrary to our data. A more thorough investigation should be conducted, possibly with experimental means.
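The filtering logic behind (41) and (42)a can be sketched in a toy possible-worlds model. The sketch assumes, in line with the liberal view of co-indexing described above, that it_i is defined only if the maximal set of umbrellas John has is non-empty throughout its local context, and that the local context of a second disjunct is the initial context updated with the negation of the first disjunct. The three worlds and all function names are hypothetical.

```python
def it_i_defined(local_context):
    """Presupposition of it_i: the maximal set of umbrellas John has is
    non-empty in every world of the (non-empty) local context."""
    return all(len(world["umbrellas"]) > 0 for world in local_context)


def has_no_umbrella(world):
    """'John doesn't have an umbrella'"""
    return len(world["umbrellas"]) == 0


# Hypothetical initial context with three candidate worlds.
worlds = [
    {"name": "w0", "umbrellas": []},
    {"name": "w1", "umbrellas": ["red umbrella"]},
    {"name": "w2", "umbrellas": ["broken umbrella"]},
]

# (41): the first sentence is asserted, so the pronoun is evaluated in a
# context that only contains worlds where John has no umbrella.
context_41 = [w for w in worlds if has_no_umbrella(w)]

# (42)a: the second disjunct is evaluated in the initial context updated
# with the negation of the first disjunct.
context_42a = [w for w in worlds if not has_no_umbrella(w)]

print(it_i_defined(context_41))   # presupposition failure expected
print(it_i_defined(context_42a))  # presupposition satisfied
```

In this model co-indexing is available in both cases; what distinguishes (41) from (42)a is solely whether the pronoun's presupposition is met in its local context.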

6 Iconic loci

6.1 Iconic loci in sign language

Schlenker et al. (2013) argue, following Liddell (2003) and Kegl (2004), that loci may simultaneously function as variables and as simplified pictures of their denotations: pointing signs can target high loci when the denoted individuals are tall (or powerful or important); and different agreement verbs target different parts of a ‘structured locus’ depending on their meaning (for Liddell, this was part of an argument that there are gestural elements in loci). Crucially, these examples display ‘iconicity in action’: if one talks about individuals that rotated in various positions, the targeted position gets rotated as well. In addition, Schlenker (2014) argues that the iconic specifications of loci behave like phi-features in that they can be ignored in the course of ellipsis resolution and in the ‘focus dimension’ under only, as in the English examples in (3) above.

The argument for ‘iconicity in action’ in Schlenker (2014) was based on ASL and LSF paradigms involving a short and a tall person training to be astronauts by being rotated in various positions (here we follow the summary from Schlenker 2017a). It will help to start from the diagram in (43)a, which displays two finger classifiers, one representing a tall astronaut (on the right) and the other representing a short astronaut (on the left). Four sentences were constructed in which the classifiers were rotated in four different ways, as seen in (43)b.

(43)

Tall vs. short person rotations: Schematic representation from the signer’s perspective

a. Two finger classifiers

b. 4 positions of the finger classifiers (in 4 sentences)

The initial goal was to show that (i) in ‘standing’ position, ‘tall person’ indexing could be higher than ‘short person’ indexing; but in addition, that (ii) the indexed position could rotate in accordance with the position of the denoted person on the assumption that there was a geometric projection between the structured locus and the denoted situation.

These points were tested by the discourse in (44), which makes reference to a tall and to a short individual, and explains that they were rotated as shown in (43), with \(\mathit{CL} _{a}\) and \(\mathit{CL} _{b}\) finger classifiers representing a tall and a short person respectively.

(44)

HAVE TWO ROCKET PERSON [ONE HEIGHT]a [ONE SHORT]b. THE-TWO-a,b PRACTICE DIFFERENT VARIOUS-POSITIONS [positions shown].

IX-a HEIGHT IX-b SHORT, CLa-[position]-CLb-[position].

‘There were two astronauts, one_a tall, one_b short. They trained in various positions [various positions shown]. They were in position __ [1 position shown].

a. IX-a_upper_part LIKE SELF-a_upper_part. IX-b_lower_part NOT.

The tall one liked himself. The short one didn’t.’

b. *IX-a_upper_part LIKE SELF-a_upper_part. IX-b_lower_part NOT LIKE SELF-b_upper_part.

[intended:] The tall one liked himself. The short one didn’t like himself.’

(ASL; 17, 178, 179, 180, and 181)

Let us focus for the moment on the boxed part of (44)a. In the ‘vertical position, heads up’ depicted in (43)b1, the reflexive SELF-a_upper_part targeted a position above the knuckles because the classifier \(\mathit{CL} _{a}\) denoted a tall person. But as different cases of rotation were considered, the finger classifiers rotated accordingly, and the ‘upper part’ of the locus indexed by SELF-a_upper_part did as well, as depicted in (43)b2, b3, b4.

In addition, the second (elided) sentence of (44)a was designed to test whether ASL ellipsis makes it possible to disregard height specifications, just as phi-features could be disregarded in the English example in (3). Analogously, in (44)a the antecedent VP includes a reflexive pronoun indexing the upper part of a locus, which is adequate to refer to a tall but not to a short person. Despite this apparent mismatch, the elided sentence is acceptable—unlike the overt counterpart in (44)b, which includes a reflexive SELF referring to a short person but with high specifications. Thus in ASL height specifications can be ignored by the mechanism that computes ellipsis resolution, just as is the case for gender features in (3) above. Whether one should conclude from this that height specifications are grammatical features is another matter, and Schlenker (2014) was cautious in this connection.

6.2 Iconic loci in gestures

Schlenker and Chemla (2018) argue (but without experimental data on this particular point) that loci in gestural verbs can display height differences. Thus in (45), one can use a high gestural locus to talk about a tall person.

(45)

Context: The speaker is of normal height, and is talking to a very short person, whose brother is very tall.

Your giant brother, I am going to [gesture] / [gesture], and then you, I am going to ?? [gesture] / [gesture].

Schlenker and Chemla (2018) further suggest that these specifications can be ignored under ellipsis, as shown in (46).

(46)

Context: The speaker is of normal height, and is talking to a very short person, whose brother is very tall.

Your giant brother, I am going to [gesture] / [gesture] / [gesture] / [gesture], and then

a. [‘stripping’] you, too.

Possible reading: you too, I will punch/slap.

b. [VP-ellipsis] you, I will as well.

Consider for instance the case in which the sentence involves a high locus with the gestural verb [gesture], unmarked for person. If the missing VP of (46)b were copied from the first sentence, we would obtain something like: you, I will [gesture] as well, where [gesture] is the elided gestural verb. But its high locus specification should yield deviance, since the addressee is short, not tall.

But do these examples display ‘iconicity in action’? To test this, we investigate cases comparable to (43)–(44), where rotated loci conveyed gradient information about the positions of the relevant individuals. In (47)a, high object agreement is acceptable for the person in upright position, and low object agreement is acceptable for the person in upside down position. If we try to apply high agreement for the person in upside down position, the result is deviant, as in (47)b. Finally, the ‘high’ specifications can be disregarded in the course of ellipsis resolution, as in (47)c. Footnote 26

(47)

When I was a kid, I often got into fights with your siblings. Once, in a space museum, they were both mock-training to become astronauts: [gesture] [your brother] was [gesture] rotating into all sorts of weird positions, and [gesture] [your sister] was [gesture] [doing the same thing]. I waited until your brother was very high, like [gesture] this, and your sister was very low, like [gesture] this. And then,

a. 6.3 your brother, I tried to [gesture], and your sister, I tried to [gesture].

b. 3.5 your brother, I tried to [gesture], and your sister, I tried to [gesture].

c. 5.7 your brother, I tried to [gesture], and your sister too!

(Video 3961 https://youtu.be/2y1Lc2JKeJQ)

These results only bear on the availability of high and low indexing with gestural verbs. A question for the future is whether they can be replicated with gestural pointing towards high and low positions; our current results are hard to interpret in this connection. Footnote 27

6.3 Theoretical conclusions

We conclude that gestural loci, just like sign language loci, can simultaneously behave as logical variables and as simplified pictures of their denotations. At this point, our conclusion only applies to loci that are part of gestural agreement verbs; loci that are accessed by full pointing gestures require more work.

Remarkably, iconic specifications on bound variables share the behavior of the phi-features in (3) in that they can be disregarded in the course of ellipsis resolution (and under only). While this could be explained by positing that such iconic specifications are grammatical features, a weaker conclusion is called for, as it has not been shown that only grammatical features can be disregarded in such environments (see Schlenker 2014 for a discussion of this theoretical issue in the context of sign language data).

A limitation must be noted. Our data need not be taken to involve locus rotation, as in the sign language example in (43): our gestural example is constructed in such a way that the male character is positioned high and the female character is positioned low. While this undoubtedly pertains to locus height, no locus rotation is needed to account for our observations. Thus it remains to be seen whether there is evidence for locus rotation when the absolute height of a gestural locus remains fixed. Footnote 28

7 Plurality

7.1 Three types of repetitions in ASL Footnote 29

Schlenker and Lamberton (2019) argue that three types of repetitions can be found in ASL (they follow in part Pfau and Steinbach 2006; Coppola et al. 2013; Abner et al. 2015b; see also Koulidobrova 2018). Punctuated repetitions are made of the discrete iteration of the same nominal sign in different parts of signing space. They are typically interpreted as providing precise information about the number of elements involved, one for each iteration. Footnote 30 Unpunctuated repetitions involve iterations with shorter and less distinct breaks between them, which makes the iterations harder to tell apart and sometimes harder to count (similar devices were investigated in homesigners by Coppola et al. 2013 and Abner et al. 2015b). Footnote 31 They provide vague information about the quantity of denoted objects, but a larger number of repetitions and quicker repetitions indicate larger quantities. Finally, continuous repetitions can be applied to some (but definitely not all) mass terms, in which case they indicate that an entire area or space was filled with the relevant substance; if several continuous repetitions are involved, they serve to refer to several such areas. In all three cases, the arrangement of the iterations can provide iconic information about the arrangement of the objects or substances.

To illustrate, let us consider the paradigm in (48), which contrasts a horizontal and a triangular arrangement of the repetitions, both punctuated and unpunctuated; pictures have been added to help the reader visualize the two shapes in key conditions. The horizontal version involves the repetition of the sign in a left-to-right row in front of the signer, with the shape: …; the triangular version involves repetition in the shape of a vertical triangle signed from left to right, with the shape: ∴ (the tip appears above, in the middle). There are clear truth-conditional differences between the two cases, and the iconic contribution is interpreted within the scope of the conditional, which suggests that it can be at-issue.Footnote 32

(48)

Context: The speaker will be renting the addressee’s apartment; he knows it contains trophies, but he hasn’t seen them.

POSS-2 APT IF HAVE ________, IX-1 ADD 20 DOLLARS.

‘If your apartment has ____, I will add $20.’

a. 7 [TROPHY TROPHY TROPHY]horizontal

____ = three trophies forming a line

⇒ if there are at least three trophies in a horizontal line, $20 will be added. Precise condition about numbers: no hesitation for the ‘exactly 3’ condition

b. 7 [TROPHY TROPHY TROPHY]triangle

____ = three trophies forming a triangle

⇒ if there are at least three trophies forming a triangle, $20 will be added. Precise condition about numbers: no hesitation for the ‘exactly 3’ condition