Abstract

Digitalization accumulates large volumes of data in a short period. Identifying suitable crops for cultivation is a common problem for agronomists in smart agriculture. The data generated through digitalization is of little use unless meaningful information is retrieved from it. Predicting decisions for unseen associations of attribute values from an existing information system is therefore challenging. This paper presents a model that hybridizes a fuzzy rough set, a real-coded genetic algorithm, and linear regression. The model works in two phases. In the first phase, the fuzzy rough set is used to remove superfluous attributes, whereas in the second phase, a real-coded genetic algorithm is used to predict the decision values of unseen instances by making use of linear regression. The proposed model is analyzed for its viability using an agricultural information system obtained from Krishi Vigyan Kendra of Thiruvannamalai district of Tamilnadu, India. Further, the accuracy of the proposed model is compared with existing techniques.


1 Introduction

Farmers in earlier times had difficulty cultivating a plant in a specific place because they were not aware of which plant was suitable for that place. They minutely measured the variations within the land and sprayed the required fertilizers to increase productivity. Smart agriculture is a recent technology adopted in agriculture to rapidly increase productivity. With a smart agriculture system, farmers can monitor the needs of the plant and spray pesticide accordingly for enhanced productivity. In today's digitalized world, it is essential to consider smart agriculture to automate and monitor agriculture-related activities. It is also essential to increase production to meet the needs of a growing population and its demand for natural resources. This, in turn, reduces the cost of the smart agriculture system. At the same time, identifying a suitable crop based on soil and water conditions while increasing productivity is another issue. To automate the process, agriculture data should be fed to the smart agriculture system so that farmers can understand which kind of plant, if cultivated in a given field, would increase productivity.

In the current scenario, digitalization is achieved with the help of the internet and many devices such as desktops and laptops. As a result, data is generated in volumes that cannot be managed in certain situations, and extracting meaningful information from it is critical. Data cleaning is another associated issue; it paves the way for improving efficiency with respect to time, storage, and accuracy. Additionally, handling uncertainties present in data is of prime concern. Further, attribute reduction helps in removing superfluous attributes from the information system. All these issues have driven recent research in information retrieval and make it challenging for researchers.

Initially, statistical techniques were employed in agriculture to handle uncertainties in information retrieval. The fuzzy set was introduced later to handle uncertainties with the help of a membership function [36]. However, designing a membership function is a critical issue and requires expertise. Further, the rough set [28] was introduced to handle uncertainties in information retrieval. It deals with the classification of elements based on an indiscernibility relation. However, an equivalence relation is not suitable for analyzing many problems. To handle such circumstances, the equivalence relation is generalized to a fuzzy equivalence relation, which leads to the fuzzy rough set and the rough fuzzy set [15]. Fuzzy-rough hybridization allowed researchers to refine it further to achieve better results for feature selection, classification, prediction, and clustering [6, 20,21,22, 35]. Similarly, in another direction, the equivalence relation is generalized to a fuzzy tolerance relation, which leads to the rough set on fuzzy approximation space (RSFAS). Besides, hesitation is introduced, which leads to the rough set on intuitionistic fuzzy approximation space with the help of an intuitionistic fuzzy proximity relation [2]. Similarly, the rough set is extended to the rough set on two universal sets [2, 25], where the equivalence relation is generalized to a binary relation. All these models have been analyzed over various problems and in different dimensions [1, 9, 19, 23, 26, 33]. However, these theories generate many decision rules, including both certain and approximate rules, which creates problems when they are implemented in real-life situations.

Similarly, the rough set has been hybridized with many techniques. The rough set is hybridized with formal concept analysis and domain intelligence to minimize the number of rules [2]. However, domain intelligence requires expertise, which is a limitation. Further, the rough set and neural network are integrated and analyzed over a smart agriculture information system [2, 14]. Again, RSFAS and formal concept analysis are combined and studied over marketing management [2]. Similarly, RSFAS is combined with Bayesian classification, soft set [2], and neural network [4, 10, 24]. The hybridization of rough set and neural network [2] is studied over smart agriculture for identification of suitable crops based on soil and water conditions. Similarly, the integration of RSFAS and neural network is studied over smart agriculture [4]. Besides, the intuitionistic fuzzy optimization technique is used in agriculture production [7, 27]. These applications perform prediction without optimizing the decision rules; that is, the prediction is based on the full set of certain and approximate rules.

Further, to optimize the rules, the rough set and binary-coded genetic algorithm (BCGA) are integrated and studied over smart agriculture [30]. When the information system contains many parameters, the bit representation of chromosomes in the encoding process becomes tedious. This approach also uses domain intelligence while studying the smart agriculture information system. To overcome the limitation of the encoding process, the rough set (RS) and real-coded genetic algorithm (RCGA) are integrated and studied over an agriculture system for smart crop identification based on climatic, water, and soil conditions [31]. However, the accuracy of that model is reduced, which motivates further research.

In view of the above analysis, this research work integrates the fuzzy-rough set, RCGA, and multiple linear regression. Initially, the fuzzy-rough set is used to remove the superfluous attributes, after which decision rules are generated. The prime advantage is that the fuzzy-rough methodology merges the vagueness of the fuzzy set with the indiscernibility concept of the rough set. Additionally, the parameters are processed without any domain intelligence. Here, the fuzzy-rough dependency degree identifies the dependency of a condition attribute on a decision attribute. The generated certain and approximate rules are passed to the RCGA to obtain optimized decision rules. The proposed model is analyzed over an agriculture information system for smart crop identification based on water and soil conditions obtained from Krishi Vigyan Kendra (KVK) and the soil testing laboratory of Tiruvannamalai, Tamilnadu, India.

The remainder of the article is organized as follows: Section 2 describes the foundations of the fuzzy-rough set. Section 3 focuses on regression analysis, followed by RCGA with its crossover and mutation operators in Section 4. The K-nearest neighbour (KNN) algorithm is presented in Section 5, followed by the proposed prediction model in Section 6. An empirical study on smart agriculture pertaining to crop identification based on water and soil conditions is presented in Section 7. Results and analysis of the empirical study are discussed in Section 8. Finally, the article concludes in Section 9.

2 Foundations of fuzzy rough set

Rough set data analysis [28] supports only categorical (discrete) parameter values. In a real-valued information system, it is necessary to perform discretization before any rough set analysis. However, discretization using fuzzy sets has not been exploited much. Therefore, in this article, we use the fuzzy rough set (FRS) for data reduction while analyzing an information system containing real values [16]. The prime concern in using FRS is to find the fuzzy indiscernibility classes without any discretization.

The basic notion of FRS is based on the fuzzy indiscernibility relation. The introduction of the fuzzy indiscernibility relation leads to fuzzy equivalence classes. The fuzzy indiscernibility relation on the universal set Z identifies the indistinguishability between two elements ui, uj ∈ Z. It is defined by μZ(ui, ui) = 1, μZ(ui, uj) = μZ(uj, ui), and μZ(ui, uk) ≥ μZ(ui, uj) ∧ μZ(uj, uk), where ui, uj, uk ∈ Z and μ represents the membership function. These conditions express fuzzy reflexivity, fuzzy symmetry, and fuzzy transitivity among elements and lead to the fuzzy equivalence class [ui]Z. Elements belonging to a fuzzy equivalence class [ui]Z assume membership values with respect to the given class, and these values lie in the interval [0,1]. The notion of a fuzzy equivalence class is the fundamental concept of FRS. Using it, the fuzzy lower and fuzzy upper approximations are defined.

Before we define fuzzy lower and upper approximations, let us define an information system, S. It is a 2-tuple S = (Z, P), where Z = {u1, u2, u3,⋯ , un} is the universe and P = {p1, p2, p3,⋯ , pm} is the set of parameters or attributes containing both conditions and decision. Let p ∈ P. The fuzzy equivalence classes induced by p ∈ P are given as Z/IND{p}. Let us assume Z/IND{p} = {Ap, Bp}. It means that the attribute p generates two fuzzy sets Ap and Bp. Let P = {p, q}, Z/IND{p} = {Ap, Bp}, and Z/IND{q} = {Aq, Bq}. The fuzzy indiscernibility classes generated by Z/IND{p, q} are obtained from the cartesian product of Z/IND{p} and Z/IND{q}. This is because an element may be a member of many equivalence classes. Thus, Z/IND{p, q} = {Ap ∩ Aq, Ap ∩ Bq, Bp ∩ Aq, Bp ∩ Bq}.

Let Z/P = {C1, C2, C3,⋯ , Ck} be the fuzzy equivalence classes generated by the parameters P. Let the target fuzzy set be Y. The fuzzy P-lower \((\mu _{\underline {P}Y}(u))\) and fuzzy P-upper \((\mu _{\overline {P}Y}(u))\) approximations are expressed through the membership of an individual element u ∈ Z. They are defined as:
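Assuming the standard fuzzy rough definitions (the form below is chosen to agree with the expressions evaluated in Section 2.1; the component sets \(F_j\) in (4) are notation introduced here for illustration), the two approximations and the class membership can be written as:

```latex
\begin{gather}
\mu_{\underline{P}Y}(u) = \sup_{C \in Z/P} \min\Bigl(\mu_{C}(u),\ \inf_{v \in Z} \max\bigl\{1 - \mu_{C}(v),\ \mu_{Y}(v)\bigr\}\Bigr) \tag{2} \\
\mu_{\overline{P}Y}(u) = \sup_{C \in Z/P} \min\Bigl(\mu_{C}(u),\ \sup_{v \in Z} \min\bigl\{\mu_{C}(v),\ \mu_{Y}(v)\bigr\}\Bigr) \tag{3} \\
\mu_{C_i}(u) = \min\bigl(\mu_{F_1}(u), \mu_{F_2}(u), \ldots, \mu_{F_r}(u)\bigr), \qquad C_i = F_1 \cap F_2 \cap \cdots \cap F_r \tag{4}
\end{gather}
```

Here each \(F_j\) is assumed to be one fuzzy class taken from the partition of a single attribute in P, so that (4) is the usual minimum-based fuzzy intersection.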

The ordered pair \((\underline {P}Y, \overline {P}Y)\) is known as an FRS, where the P-lower and P-upper approximations of an individual element of Y are defined using (2) and (3) respectively. Besides, the membership of an element u ∈ Z in an equivalence class Ci ∈ Z/P, i = 1,2,⋯ , k, is calculated by applying (4).

Another important concept of the rough set is attribute reduction, which allows unwanted attributes present in the decision system to be removed. The notion of parameter reduction is also extended to the fuzzy rough set. The fuzzy positive region is defined as the union of all fuzzy lower approximations of an element u ∈ Z. Assume \(P_{1}\subseteq P\) and \(P_{2}\subseteq P\). The elements of the P1-fuzzy positive region of P2 have the membership defined in (5). Similarly, the dependency of P2 on P1 is calculated by applying the dependency function defined in (6). It is denoted as \(\gamma _{P_{1}}(P_{2})\).
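Under the same assumption of standard fuzzy rough definitions, and consistent with the supremum and the division by the number of objects used in Section 2.1, the positive region and the dependency function can be written as:

```latex
\begin{gather}
\mu_{POS_{P_1}(P_2)}(u) = \sup_{Y \in Z/P_2} \mu_{\underline{P_1}Y}(u) \tag{5} \\
\gamma_{P_1}(P_2) = \frac{\sum_{u \in Z} \mu_{POS_{P_1}(P_2)}(u)}{|Z|} \tag{6}
\end{gather}
```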

In the traditional rough set, a reduct R is a subset of parameters that carries the same information as the whole attribute set P. It means that the degrees of dependency γR and γP are identical. If there is no uncertainty in the information system, then the degree of dependency in the case of the rough set is 1. However, in the case of an FRS, the degree of dependency is not equal to 1 even if the decision system has no uncertainty. This is because an element may be a member of many fuzzy equivalence classes. With this in mind, we now present the fuzzy rough quick reduct (FRQR) algorithm, which determines the dependency between the condition attributes and the decision. It starts with an empty set, includes one parameter at a time, and calculates γ until it approaches 1. It attempts to add an element to the reduct based on the degree of dependency. The algorithm terminates when including a new attribute no longer increases the degree of dependency. The following symbols are employed in the fuzzy rough quick reduct algorithm.

- Pc: Conditional attributes
- Pd: Decision attributes
- R: Reduct
- T: Temporary reduct
- γoptimal: The optimal value of the degree of dependency
- γinitial: Initial value of the degree of dependency
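The greedy search described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes each attribute is supplied as a list of fuzzy classes (one membership degree per object), that combined classes use the minimum, and that the lower approximation and dependency take the standard fuzzy rough form. The function names `combine`, `lower_approximation`, `dependency`, and `frqr` are hypothetical.

```python
from typing import Dict, List, Sequence

# A fuzzy partition is a list of fuzzy classes; each class stores one
# membership degree per object of the universe Z.
FuzzyPartition = List[List[float]]


def combine(partitions: Sequence[FuzzyPartition]) -> FuzzyPartition:
    """Cartesian product of partitions; a combined class takes the minimum."""
    combined = list(partitions[0])
    for part in partitions[1:]:
        combined = [[min(a, b) for a, b in zip(c1, c2)]
                    for c1 in combined for c2 in part]
    return combined


def lower_approximation(classes: FuzzyPartition, target: List[float]) -> List[float]:
    """Membership of every object in the fuzzy lower approximation of target."""
    # inf over v of max(1 - mu_C(v), mu_Y(v)), computed once per class C.
    infima = [min(max(1.0 - c_v, y_v) for c_v, y_v in zip(c, target))
              for c in classes]
    return [max(min(c[u], inf) for c, inf in zip(classes, infima))
            for u in range(len(target))]


def dependency(classes: FuzzyPartition, decision: FuzzyPartition) -> float:
    """Degree of dependency: mean membership in the fuzzy positive region."""
    lowers = [lower_approximation(classes, y) for y in decision]
    positive = [max(values) for values in zip(*lowers)]
    return sum(positive) / len(positive)


def frqr(conditions: Dict[str, FuzzyPartition], decision: FuzzyPartition) -> List[str]:
    """Greedy forward selection driven by the dependency degree."""
    reduct: List[str] = []
    gamma_best = 0.0
    while True:
        best_attr = None
        for name in conditions:
            if name in reduct:
                continue
            trial = combine([conditions[a] for a in reduct + [name]])
            gamma = dependency(trial, decision)
            if gamma > gamma_best:
                gamma_best, best_attr = gamma, name
        if best_attr is None:  # no attribute raises the dependency further
            return reduct
        reduct.append(best_attr)
```

Calling `frqr` with a dictionary of condition partitions and the decision partition returns the attribute names in the order they were added; the search stops as soon as no remaining attribute increases the dependency degree.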

2.1 A numerical illustration

This section demonstrates the computational procedure of the FRQR algorithm. Consider the decision system given in Table 1. The FRQR algorithm starts with an empty reduct set, adds one attribute at a time, and evaluates the dependency function. The dependency function is calculated by evaluating the lower approximation of the conditional parameters with respect to the decision parameter.

In the given example, Z = {u1, u2, u3,⋯, u9} and P = {P1, P2, P3, Pd}, where Pd is the decision attribute. The parameter P1 generates three classes, Z/P1 = {p11, p12, p13}, and the decision attribute has two decisions, Z/Pd = {Y1, Y2}. To compute the fuzzy rough quick reduct, the lower approximations are computed first. Consider the attribute set P = {P1} and the decision attribute Pd. To compute the fuzzy P-lower approximation of decision Y1, \(\mu _{\underline {P}Y_{1}}(u)\), we have to consider each C ∈ Z/P1 = {p11, p12, p13}. On considering C1 = {p11}, we first determine \(inf_{v\in Z}((1-\mu _{p_{11}}(v))\vee \mu _{Y_{1}}(v))\) over all objects v ∈ Z. The computation is given in Table 2. Further, we compute \(\mu _{p_{11}}(u) \wedge inf_{v\in Z}((1-\mu _{p_{11}}(v))\vee \mu _{Y_{1}}(v))\) for each u ∈ Z to find the membership value with which each object belongs to class C1 in the fuzzy lower approximation. Similarly, \(\mu _{p_{12}}(u) \wedge inf_{v\in Z}((1-\mu _{p_{12}}(v))\vee \mu _{Y_{1}}(v))\) and \(\mu _{p_{13}}(u) \wedge inf_{v\in Z}((1-\mu _{p_{13}}(v))\vee \mu _{Y_{1}}(v))\) are computed to find the membership value with which each object belongs to class C2 = {p12} and C3 = {p13} respectively. For better understanding, Tables 3 and 4 present the computation of the greatest lower bound for the classes p12 and p13 respectively.

From the computations in Tables 2, 3, and 4, it is clear that the object u1 belongs to the fuzzy P1-lower approximation of Y1, \(\underline {P_{1}}Y_{1}\), with the membership value

Similarly, it can be obtained as \(\mu _{\underline {P_{1}}Y_{2}}(u_{1}) = 0.4\). Further, we compute the fuzzy positive region of the object u1 using the union operation of fuzzy P1-lower approximation. It is defined as \(\mu _{{POS}_{P_{1}}(P_{d})}(u_{1})= Sup(\mu _{\underline {P_{1}}Y_{1}}(u_{1}), \mu _{\underline {P_{1}}Y_{2}}(u_{1}))\) = 0.4. Likewise, we get

Thus the degree of dependency of Pd on P1, \(\gamma _{P_{1}}(P_{d})\), is computed using (6). It is given as:

Similarly, the degree of dependency of Pd on P2, \(\gamma _{P_{2}}(P_{d})\), and the degree of dependency of Pd on P3, \(\gamma _{P_{3}}(P_{d})\), are obtained as \(\gamma _{P_{2}}(P_{d}) = \frac {3.0}{9}\) and \(\gamma _{P_{3}}(P_{d}) = \frac {3.1}{9}\) respectively. According to the computation, the degree of dependency of the parameter P1 is greater than the degrees of dependency of P2 and P3. Therefore, the reduct is R = {P1}. The search then begins once again according to the algorithm, and the degrees of dependency for {P1, P2} and {P1, P3} are computed. They are given as:

According to the computation, the degree of dependency has increased. Thus the reduct is revised as R = {P1, P2}. The search procedure continues, and the degree of dependency for {P1, P2, P3} is obtained as \(\gamma _{\{P_{1}, P_{2}, P_{3}\}}(P_{d}) = \frac {3.5}{9}\). Finally, the process terminates as there is no further increase in the degree of dependency. Therefore, the final reduct is R = {P1, P2}. It means that the attribute P3 is superfluous and can be eliminated.

3 Multivariate analysis

Regression techniques used for predictive analysis describe the relationship between independent variables and a dependent variable. In an information system, attributes are considered as independent variables, whereas the decision that is based on the attributes may be considered as the dependent variable. Regression has been applied to many real-life problems such as bankruptcy prediction, time series modelling, stock market analysis, and weather forecasting [3, 8, 17].

Multivariable regression analysis is an efficient procedure for finding the association between the elements and their parameters, which include both conditions and decision. Formally, it is expressed as an equation of the decision pd on the parameters {p1, p2, p3,⋯ , pm}, \(p_{d} = b_{0} + b_{1}p_{1} + b_{2}p_{2} + \cdots + b_{m}p_{m} + \epsilon \), where 𝜖 is the error term. Regression analysis only approximately fits the relationship between pi and pd, and so an error term is included. It is defined as the difference between the actual value (pd) and the predicted value \((\widehat {p_{d}})\), i.e., \(\epsilon = (p_{d} - \widehat {p_{d}})\).

The constants bi, 1 ≤ i ≤ m, are the regression coefficients of the parameters {p1, p2, p3,⋯ , pm}, and b0 is the intercept. The increase or decrease of the predicted variable pd is influenced by the regression coefficients.
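As an illustration of how the coefficients b0, b1, ..., bm might be estimated, the sketch below fits them by ordinary least squares using the normal equations. The least-squares criterion and the helper names `fit_linear_regression` and `predict` are assumptions made for this example; the text above does not state how the coefficients are obtained.

```python
from typing import List, Sequence


def fit_linear_regression(rows: Sequence[Sequence[float]],
                          targets: Sequence[float]) -> List[float]:
    """Return [b0, b1, ..., bm] minimising the sum of squared errors."""
    # Design matrix with a leading 1 so that b0 acts as the intercept.
    X = [[1.0, *row] for row in rows]
    k = len(X[0])
    # Normal equations (X^T X) b = X^T y, stored as an augmented matrix.
    A = [[sum(x[i] * x[j] for x in X) for j in range(k)]
         + [sum(x[i] * y for x, y in zip(X, targets))]
         for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot_row = max(range(col, k), key=lambda r: abs(A[r][col]))
        if abs(A[pivot_row][col]) < 1e-12:
            raise ValueError("attributes are linearly dependent")
        A[col], A[pivot_row] = A[pivot_row], A[col]
        pivot = A[col][col]
        A[col] = [value / pivot for value in A[col]]
        for r in range(k):
            if r != col:
                factor = A[r][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][k] for i in range(k)]


def predict(coeffs: Sequence[float], row: Sequence[float]) -> float:
    """Predicted decision value b0 + b1*p1 + ... + bm*pm."""
    return coeffs[0] + sum(b * p for b, p in zip(coeffs[1:], row))
```

The error term for an instance is then the actual decision value minus `predict(coeffs, row)`.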

4 Foundations of real-coded genetic algorithm

Optimization seeks the optimal solution, which either maximizes or minimizes the objective function depending on the problem undertaken. Several methods are available to obtain an optimal solution, and the genetic algorithm is one of them. It is a search-based optimization technique that follows the principle of natural selection. It starts with an encoding of chromosomes, and the collection of chromosomes is called the population. Each chromosome is evaluated for its fitness function value, which is either maximized or minimized. The fitness function is defined based on the problem undertaken and forms the core concept of the genetic algorithm. The genetic algorithm iterates towards this fitness function to obtain optimized results. After the fitness evaluation, reproduction operators, namely selection, crossover, and mutation, are applied. The reproduction operators produce a new population, and the genetic algorithm procedure starts again with the newly generated chromosomes. The chromosomal representation is determined by the problem undertaken. It can be either a binary-coded representation [30] or a real-coded representation [18]. The real-coded genetic algorithm (RCGA) operates on real values. In this article, we adopt the RCGA as our information system contains real values. The general structure of the genetic algorithm and the basic concepts of RCGA are explained below for completeness.

4.1 Selection

Selection plays an important role in the reproduction phase of the genetic algorithm. The best individuals from a population are selected based on the fitness function. In predictive models, the fitness function is considered as minimization of error. RCGA iterates over this minimization function and terminates when the error is negligible. Several methods are available for selection. However, popular selection operators are roulette wheel selection and tournament selection [11].

4.1.1 Roulette wheel selection

In this section, we discuss roulette wheel selection briefly. Roulette wheel selection comprises five steps, as discussed below [11].

1. Initially, the fitness of every chromosome is calculated. Based on the fitness, the average fitness, AG, is calculated using (9), where n refers to the size of the population and G(i) refers to the fitness of the ith chromosome.

$$ AG = \frac{1}{n} \sum\limits_{i=1}^{n} G(i) $$(9)

2. Secondly, for every chromosome, the expected count E(i) is calculated using (10).

$$ E(i) = G(i) / AG $$(10)

3. In the third step, the probability of every chromosome is computed using (11), where ip refers to the probability of the ith chromosome. Further, a particular chromosome is selected based on this probability.

$$ i_{p} = E(i)/n $$(11)

4. Compute the probability and then the cumulative probability of the selection.

5. The mating pool is created by generating random numbers between 0 and 1. These random numbers identify the particular strings to be selected.
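The five steps can be sketched as below. This is an illustrative reading of (9) to (11), assuming strictly positive fitness values where a larger value is better; when the fitness is an error to be minimised, it would first have to be transformed, which is not shown. The function names are hypothetical.

```python
import random
from bisect import bisect_left
from itertools import accumulate
from typing import List, Sequence


def roulette_probabilities(fitness: Sequence[float]) -> List[float]:
    """Selection probability of each chromosome following (9)-(11)."""
    n = len(fitness)
    average = sum(fitness) / n                  # AG, equation (9)
    expected = [g / average for g in fitness]   # E(i), equation (10)
    return [e / n for e in expected]            # i_p, equation (11)


def roulette_select(population: Sequence, fitness: Sequence[float],
                    rng=random) -> list:
    """Build a mating pool of the same size as the population."""
    cumulative = list(accumulate(roulette_probabilities(fitness)))
    pool = []
    for _ in population:
        r = rng.random()  # random number in [0, 1)
        # First chromosome whose cumulative probability reaches r; the
        # min() guards against rounding in the last cumulative value.
        index = min(bisect_left(cumulative, r), len(population) - 1)
        pool.append(population[index])
    return pool
```

Passing a seeded `random.Random` instance as `rng` makes the draw reproducible.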

4.1.2 Tournament selection

In tournament selection, chromosomes (individuals) compete with each other based on the fitness function. The individual with the best fitness value wins and survives to the next generation. The individuals that lose the tournament do not survive and are eliminated from the mating pool [11]. Out of the several tournament procedures available in the literature, binary tournament selection is popularly used for various applications. In view of our application, we have selected binary tournament selection for our problem. Further, these chromosomes go through reproduction, crossover, and mutation.
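A binary tournament can be sketched as follows. The sketch assumes that each individual is scored by an error to be minimised, so the lower error wins, and that one tournament is held per slot in the mating pool; both choices, and the name `binary_tournament`, are assumptions for illustration.

```python
import random
from typing import Sequence


def binary_tournament(population: Sequence, errors: Sequence[float],
                      rng=random) -> list:
    """Fill a mating pool by repeated two-way tournaments (lower error wins)."""
    pool = []
    for _ in population:
        # Draw two distinct competitors at random.
        i, j = rng.sample(range(len(population)), 2)
        winner = i if errors[i] <= errors[j] else j
        pool.append(population[winner])
    return pool
```

Because the two competitors are always distinct, the individual with the largest error can never win a tournament and therefore never enters the pool.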

4.2 Crossover

The exchange of properties between parents and children is carried out using the crossover operator. The offspring generated with the crossover operator is expected to inherit the best features of the parents. An RCGA has many crossover operators, such as Laplace crossover, flat crossover, simple crossover, arithmetic crossover, and BLX-α crossover. This article considers flat crossover, Laplace crossover, and simple crossover for analysis. These crossover operators are defined briefly below.

4.2.1 Laplace crossover

In Laplace crossover, the child chromosomes inherit the properties of their parent chromosomes by following the Laplace crossover procedure [12]. It is based on the crossover probability, which gives the rate at which crossover should be carried out. Consider the parent chromosomes shown in (12). Generate a random number α ∈ [0,1]. A random number β is then generated by inverting the Laplace distribution. It is defined in (13), where a is the location parameter and b is the scale parameter.

The offspring generated lie either far from or close to the parents, depending on the value of b. The offspring are far from the parents when the value of b is large and close to the parents when the value of b is small. The offspring generated are given as \({y^{1}_{i}}={p^{1}_{i}}+\beta \left |{p^{1}_{i}} - {p^{2}_{i}}\right |\) and \({y^{2}_{i}}={p^{2}_{i}}+\beta \left |{p^{1}_{i}} - {p^{2}_{i}}\right |\), where i indexes the genes of the chromosome. If the value of \({y^{1}_{i}}\) or \({y^{2}_{i}}\) lies outside the range of the corresponding parameter, then a random number within that range is generated instead [32]. The generated offspring is given in (14).

For example, consider parent chromosomes u1 = 0.3 0.2 0.5 0.1 0.2 and u2 = 0.5 0.3 0.6 0.8 0.3. Let us assume a = 0, b = 0.5, and let α = 0.2. On employing the above procedure, the offspring generated are y1 and y2, where
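The procedure can be sketched as follows. The sketch assumes the commonly used inverse of the Laplace distribution, β = a − b ln(α) for α ≤ 1/2 and β = a + b ln(α) otherwise, and draws a single α per crossover as described above; the function names and the `bounds` argument (one (lower, upper) pair per gene) are hypothetical.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple


def laplace_beta(alpha: float, a: float = 0.0, b: float = 0.5) -> float:
    """Laplace-distributed number from a uniform alpha (assumed inverse form)."""
    alpha = max(alpha, 1e-12)  # guard against log(0)
    if alpha <= 0.5:
        return a - b * math.log(alpha)
    return a + b * math.log(alpha)


def laplace_crossover(p1: Sequence[float], p2: Sequence[float],
                      bounds: Sequence[Tuple[float, float]],
                      a: float = 0.0, b: float = 0.5,
                      alpha: Optional[float] = None,
                      rng=random) -> Tuple[List[float], List[float]]:
    """Two offspring y_i = p_i + beta * |p1_i - p2_i|, repaired to the bounds."""
    if alpha is None:
        alpha = rng.random()
    beta = laplace_beta(alpha, a, b)
    children: Tuple[List[float], List[float]] = ([], [])
    for x1, x2, (lo, hi) in zip(p1, p2, bounds):
        spread = beta * abs(x1 - x2)
        for child, parent_gene in zip(children, (x1, x2)):
            gene = parent_gene + spread
            if not lo <= gene <= hi:
                # Out-of-range gene: redraw uniformly inside the range.
                gene = rng.uniform(lo, hi)
            child.append(gene)
    return children
```

For the example above, `laplace_crossover(u1, u2, [(0.0, 1.0)] * 5, a=0.0, b=0.5, alpha=0.2)` would apply the procedure with the stated parameters, assuming each gene ranges over [0, 1].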

4.2.2 Simple crossover

Simple crossover uses two parents and produces two offspring. In this crossover, a position within the length of the chromosome is chosen at random, and new offspring are produced by interchanging the genes after the specified position. On considering the parent chromosomes as defined in (12) and the random position as 2, the offspring produced are \(y_{1} = {p_{1}^{1}} {p_{2}^{1}} {p_{3}^{2}} {\cdots } {p_{n}^{2}}\) and \(y_{2} = {p_{1}^{2}} {p_{2}^{2}} {p_{3}^{1}} {\cdots } {p_{n}^{1}}\).

For example, consider parent chromosomes u1 = 0.3 0.2 0.5 0.1 0.2 and u2 = 0.5 0.3 0.6 0.8 0.3. Taking the random position as 2 and using simple crossover, the offspring produced are y1 = 0.3 0.2 0.6 0.8 0.3 and y2 = 0.5 0.3 0.5 0.1 0.2.
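A minimal sketch of simple (one-point) crossover, with the hypothetical name `simple_crossover` and the position passed in explicitly so that the example can be reproduced:

```python
from typing import List, Sequence, Tuple


def simple_crossover(p1: Sequence[float], p2: Sequence[float],
                     position: int) -> Tuple[List[float], List[float]]:
    """Swap the genes that follow the given position between two parents."""
    if not 0 < position < len(p1):
        raise ValueError("position must fall strictly inside the chromosome")
    y1 = list(p1[:position]) + list(p2[position:])
    y2 = list(p2[:position]) + list(p1[position:])
    return y1, y2


u1 = [0.3, 0.2, 0.5, 0.1, 0.2]
u2 = [0.5, 0.3, 0.6, 0.8, 0.3]
y1, y2 = simple_crossover(u1, u2, position=2)
# y1 == [0.3, 0.2, 0.6, 0.8, 0.3]
# y2 == [0.5, 0.3, 0.5, 0.1, 0.2]
```

In a full run the position would be drawn at random, for instance with `random.randrange(1, len(u1))`.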

4.2.3 Flat crossover

Flat crossover uses two parents and produces one offspring. Let u1 and u2 be two parent chromosomes as defined in (12). The offspring generated after flat crossover is given as \(y_{1} = {y_{1}^{1}} {y_{2}^{1}} {y_{3}^{1}}{\cdots } {y_{n}^{1}}\), where \({y_{i}^{1}}\) is a random number between \(\left [p_{i}^{1}, {p_{i}^{2}}\right ]\) for 1 ≤ i ≤ n.

For example, consider parent chromosomes u1 = 0.3 0.2 0.5 0.1 0.2 and u2 = 0.5 0.3 0.6 0.8 0.3. By using flat crossover, the offspring produced is given as y1 = 0.35 0.25 0.53 0.4 0.21.
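Flat crossover can be sketched in a few lines. The sketch assumes a uniform draw inside the interval spanned by the two parent genes; `flat_crossover` is a hypothetical name.

```python
import random
from typing import List, Sequence


def flat_crossover(p1: Sequence[float], p2: Sequence[float],
                   rng=random) -> List[float]:
    """One offspring whose genes lie between the corresponding parent genes."""
    return [rng.uniform(min(a, b), max(a, b)) for a, b in zip(p1, p2)]
```

Since every gene is drawn at random, repeated calls on the same parents generally give different offspring, each lying gene-wise between the two parents.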

4.3 Power mutation operator

The power distribution is employed in designing the power mutation operator. Power mutation is defined using (15), where q is a random number such that \(q = (\overline {u}- {p_{j}^{l}})/({p_{j}^{u}} - \overline {u})\). Here \({p_{j}^{l}} = Min(p_{j})\) and \({p_{j}^{u}} = Max(p_{j})\) represent the lower and upper values of the parameter pj respectively, and the chromosome drawn from the population is denoted as \(\overline {u}\). Another random number s is computed using the power distribution \(s = r^{1/h}\), where r ∈ [0,1] is a uniformly distributed random number and h is the index of the distribution, which varies from 0 to 1 [13].
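A sketch of power mutation for a single gene is given below. It assumes the commonly used form of the operator, in which the gene moves towards the lower bound when q is smaller than a second uniform random number and towards the upper bound otherwise; that rule, the default h, and the name `power_mutation` are assumptions made for illustration.

```python
import random


def power_mutation(x: float, lower: float, upper: float,
                   h: float = 0.25, rng=random) -> float:
    """Mutate one gene x in [lower, upper] using a power-distributed step."""
    if h <= 0:
        raise ValueError("h must be positive")
    s = rng.random() ** (1.0 / h)  # power-distributed number in [0, 1)
    r = rng.random()               # uniform number used for the direction
    # q compares the distance to the lower bound with the distance to the
    # upper bound; a gene sitting on the upper bound gives q = infinity.
    q = (x - lower) / (upper - x) if upper > x else float("inf")
    if q < r:
        return x - s * (x - lower)  # move towards the lower bound
    return x + s * (upper - x)      # move towards the upper bound
```

Because s lies in [0, 1), the mutated gene always stays inside [lower, upper].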

4.4 Elitism

The individuals having the best fitness values in the population are retained by the elitism operator, while the strings having the worst fitness values are discarded. It comprises the following steps.

1. The current population along with the next population are collected after the computing cycle.

2. The chromosomes of the double-sized population are sorted in non-decreasing order of their fitness value.

3. After the sorting, discard the bottom half of the population and preserve the upper half of the population for the next cycle.

4. The selected chromosomes are again passed to the real-coded genetic algorithm for further processing.
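The steps above can be sketched as follows, assuming the fitness is an error to be minimised so that the upper half of the ascending order holds the best chromosomes. The name `elitism` and the `error` callback are hypothetical.

```python
from typing import Callable, List, Sequence, TypeVar

Chromosome = TypeVar("Chromosome")


def elitism(current: Sequence[Chromosome], offspring: Sequence[Chromosome],
            error: Callable[[Chromosome], float]) -> List[Chromosome]:
    """Merge both populations and keep the best half (smallest error)."""
    merged = sorted(list(current) + list(offspring), key=error)
    return merged[:len(current)]


survivors = elitism([5.0, 1.0, 3.0], [4.0, 0.5, 6.0], error=lambda c: c)
# survivors == [0.5, 1.0, 3.0]
```

The toy call treats each number as its own error value, so the three smallest values survive.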

5 Lazy learning algorithm

The lazy learning algorithm, or K-nearest neighbor (KNN), is an instance-based non-parametric technique generally used for classification and regression. Using this algorithm, unknown instances are classified based on the known instances using a similarity measure. In KNN, the training samples are vectors in a multidimensional feature space. The feature space stores the vectors for analysis, with a class assigned to each vector. In classification, KNN stores the training data and assigns the unknown instance to a class based on a similarity measure. The similarity measure can be defined by using the Euclidean distance. KNN is also known as a lazy learner.

Consider a known instance ui = {pi1, pi2, pi3,⋯ , pim} and the offspring \(\hat {u}_{i} = \{\hat {p}_{i1}, \hat {p}_{i2}, \hat {p}_{i3}, \cdots , \hat {p}_{im}\}\). The known instance and the offspring are considered approximately equal if the Euclidean distance between ui and \(\hat {u}_{i}\) is very small. The Euclidean distance between ui and \(\hat {u}_{i}\) is defined in (16). The prime objective is to find an approximate match between the known instance and the offspring [34].

Further, for each chromosome, the square of the difference between the actual and approximate decision values is obtained. If pid is the actual decision and \(\hat {p}_{id}\) is the approximate decision value of the ith chromosome, then the difference \(G(i) = (p_{id}-\hat {p}_{id})\) provides the error of the ith chromosome. Additionally, the mean squared error (MSE) as defined in (17) is computed, and it becomes our fitness function. This fitness function is to be minimized to develop a better model.
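The distance and the fitness can be sketched as below. The sketch assumes the usual Euclidean distance and mean squared error, and it takes the approximate decision of an offspring to be the average decision of its k nearest known instances; that last rule and all function names are assumptions made for illustration.

```python
import math
from typing import List, Sequence, Tuple


def euclidean(u: Sequence[float], v: Sequence[float]) -> float:
    """Euclidean distance between two attribute vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def nearest_decision(offspring: Sequence[float],
                     known: Sequence[Tuple[Sequence[float], float]],
                     k: int = 1) -> float:
    """Average decision value of the k known instances closest to offspring."""
    ranked = sorted(known, key=lambda item: euclidean(item[0], offspring))
    nearest = ranked[:k]
    return sum(decision for _, decision in nearest) / len(nearest)


def mean_squared_error(actual: Sequence[float],
                       predicted: Sequence[float]) -> float:
    """Mean of the squared differences between actual and predicted values."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)
```

A genetic algorithm run would evaluate `mean_squared_error` over the population and treat smaller values as better.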

6 Proposed research design

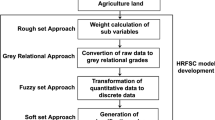

This section focuses on the proposed research design and the research methodology used to predict the decision of future instances. The agriculture information system contains continuous values. The attribute reduction policy of the rough set cannot be applied directly, as the rough set works with categorical values. If a discretization policy is employed, there is a loss of information. Therefore, the concept of the fuzzy rough set is used, and the fuzzy rough quick reduct algorithm is applied to find the reduct. This phase helps us to remove superfluous attributes. The proposed prediction system is built by hybridizing fuzzy rough sets, RCGA, regression analysis, and the k-nearest neighbour algorithm. The FRS is used for selecting the important features. It helps in removing the unwanted attributes and ultimately minimizes the computations. Further analysis of the reduced information system is carried out with the real-coded genetic algorithm. The information system is trained with a real-coded genetic algorithm to obtain an almost accurate prediction. The training process integrates the RCGA, multiple linear regression, and the k-nearest neighbour algorithm. The fitness function is defined based on regression analysis: the error between the predicted decision value and the actual value in the information system is taken as the fitness function. The proposed system, which consists of four modules, is depicted in Fig. 1. The modules are a data preparation module, an attribute selection module, a decision system training and testing module, and a decision validation module.

In the data preparation module, the data are properly structured based on the objective. Before further analysis, a sequence of data cleaning tasks such as consistency checks, noise removal, and data completeness checks is carried out on the structured data to ensure that the data are as accurate as possible. The feature selection module identifies the parameters that are superfluous using the FRQR algorithm. Once the superfluous attributes are identified, they are removed from the information system so as to minimize the computations. Finally, the data are divided into three parts: training, testing, and validation. The training data is analyzed using RCGA along with linear regression and the KNN algorithm for identification of the crop for cultivation. Further, the testing phase identifies the best combination of RCGA operators for decision making. The results obtained are then validated with the help of the validation data, and the accuracy of the model is obtained.

In the second phase of the proposed research design, various combinations of the RCGA arising from the selection and crossover operators are considered. As selection operators, we consider roulette wheel selection and tournament selection. Similarly, as crossover operators, we consider flat crossover, Laplace crossover, and simple crossover. However, the mutation operator is kept constant. The combinations considered for analysis are roulette wheel with Laplace crossover (RWLX), roulette wheel with flat crossover (RWFX), roulette wheel with simple crossover (RWSX), tournament selection with flat crossover (TSFX), tournament selection with Laplace crossover (TSLX), and tournament selection with simple crossover (TSSX). The fuzzy rough concept of the initial phase is hybridized with these combinations. The resulting methods considered for analysis are fuzzy rough roulette wheel with Laplace crossover (FRRWLX), fuzzy rough roulette wheel with flat crossover (FRRWFX), fuzzy rough roulette wheel with simple crossover (FRRWSX), fuzzy rough tournament selection with Laplace crossover (FRTSLX), fuzzy rough tournament selection with flat crossover (FRTSFX), and fuzzy rough tournament selection with simple crossover (FRTSSX). The performance of all these combinations is compared in terms of accuracy, success rate, and execution time. For better understanding, the proposed research design of the second phase is depicted in Fig. 2. The prime objective of this phase is to minimize the mean squared error as defined in (17).
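The six hybrid variants are simply the cross product of the two selection operators and the three crossover operators. A small sketch that enumerates their labels is shown below; the dictionaries and the function name `variant_labels` are illustrative only.

```python
from itertools import product
from typing import List

# Abbreviations as used in the text.
SELECTION = {"RW": "roulette wheel", "TS": "tournament selection"}
CROSSOVER = {"LX": "Laplace crossover", "FX": "flat crossover",
             "SX": "simple crossover"}


def variant_labels(prefix: str = "FR") -> List[str]:
    """Labels of every selection/crossover combination, e.g. FRRWLX."""
    return [prefix + s + c for s, c in product(SELECTION, CROSSOVER)]


labels = variant_labels()
# labels == ["FRRWLX", "FRRWFX", "FRRWSX", "FRTSLX", "FRTSFX", "FRTSSX"]
```

Calling `variant_labels(prefix="")` gives the labels of the combinations without the fuzzy rough phase.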

Besides, the proposed design has some advantages. In this model, we have used RCGA, which has certain advantages over BCGA [30]. In the case of BCGA, the search space grows when the data size is large, because of the bit representation of the attributes present in an information system. Also, more processing time is required for encoding and decoding the dataset in binary representation. In contrast, no processing is involved in real-coded genetic algorithms for encoding and decoding, since the chromosomes are framed with the real values of the decision system. Similarly, the FRS has advantages over the rough set. In rough set processing, a real-valued decision system is reduced to a qualitative decision system by some discretization process, which may lead to loss of information. The discretization process is not involved in the fuzzy rough set, and so it has advantages over the rough set while analyzing a real-valued information system.

7 Study on smart agriculture for crop suitability identification

This section analyzes the proposed model through a study on smart agriculture crop suitability identification. Agriculture is the prime occupation in India, and most people depend on it for their livelihood. The essential components of crop production are soil and water. However, farmers often do not know which crops can be cultivated on their land and which of them would provide good revenue for their hard work. Therefore, it is essential for farmers to be able to pick the crop to be cultivated at a particular place when the soil and water specifications are given. This helps to increase not only crop production but also the economy of India. Moreover, farmers are comfortable with this kind of prediction because it tells them which crop to cultivate on their land.

The present research work uses the classification and prediction techniques of data mining. The proposed model is tested for its accuracy and success rate using agricultural data obtained from the Krishi Vigyan Kendra (KVK) of Tiruvannamalai, Tamilnadu, India. A variety of crops such as paddy, tomato, and chillies are cultivated in every part of India. However, the growth of a crop depends mainly on soil and water. With this in view, we have restricted our analysis to the Tiruvannamalai district of Tamilnadu, India. Although a variety of crops are cultivated here, we focus only on the vegetable crops of this region. The 1039 villages in this district depend mainly on agriculture, and all 1039 villages are considered. A total of 4156 records were collected from the Tiruvannamalai Krishi Vigyan Kendra and soil testing laboratory. The parameters are soil component (P1), major soil nutrients (P2), minor soil nutrients (P3), water component (P4), and water nutrients (P5). The soil component includes soil electrical conductivity (p11) and soil pH (p12). The major soil nutrients are nitrogen (p21), phosphorous (p22), and potassium (p23), whereas the minor soil nutrients are ferrous (p31), zinc (p32), manganese (p33), and copper (p34). The water component includes water pH (p41) and water electrical conductivity (p42), whereas the water nutrients include calcium (p51), magnesium (p52), and sodium (p53). In total, 14 conditional attributes have a major influence on plant growth. The decision attribute (Pd) is the crop that is primarily cultivated at the corresponding place. The crops cultivated in these places are tapioca (8), little gourd (7), tomato (6), lady’s finger (5), bitter gourd (4), capsicum (3), chillies (2), snake gourd (1), and brinjal (0). The conditional and decision variables, their representation, and their ranges of values are furnished in Table 5. Table 6 presents a sample information system containing all conditional attributes and the decision.

7.1 Research methodology

The methodology involved in the analysis is discussed in this section. In the beginning, the information system is normalized to a form that is acceptable for analysis. The procedure uses unit-based normalization, as it restricts all data values to the interval between 0 and 1. The normalization is done using (18), where Min(pj) refers to the minimum value and Max(pj) refers to the maximum value of the attribute pj. The normalized information is converted back to its original form using (19).
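As an illustration of this step, the following minimal Python sketch assumes that (18) has the usual min-max form, (value − Min(pj)) / (Max(pj) − Min(pj)), and that (19) is its inverse. The function names and the sample values are hypothetical and are not taken from the dataset.

```python
def normalize(column):
    """Unit-based (min-max) scaling of one attribute to the interval [0, 1]."""
    lo, hi = min(column), max(column)
    if hi == lo:
        # A constant attribute carries no spread; map every value to 0.
        return [0.0 for _ in column]
    return [(value - lo) / (hi - lo) for value in column]


def denormalize(scaled, lo, hi):
    """Inverse mapping: recover original values from scaled ones."""
    return [s * (hi - lo) + lo for s in scaled]


# Hypothetical soil pH readings, used only to exercise the functions.
soil_ph = [5.5, 6.0, 7.5, 8.5]
scaled = normalize(soil_ph)
restored = denormalize(scaled, min(soil_ph), max(soil_ph))
```

The minimum and maximum of each attribute must be stored at normalization time, since the inverse mapping cannot be computed from the scaled values alone.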

Soon after normalization, feature selection is carried out using the fuzzy rough set. Further, the agricultural information system is partitioned into three segments, namely training, testing, and validation. The training and testing datasets together carry 60% of the data (30% each), while the validation dataset carries 40%. The training set of 30% of the data is used to build a prediction model. The real-coded genetic algorithm starts by framing the initial population. The conditional attributes retained after feature selection act as the chromosome. Whenever a crossover operator is applied, an offspring value may fall outside the range of the attribute and so may not be present in the dataset. To overcome this limitation, a random number is generated within the range of the dataset and used in its place.
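The crossover and range repair described above can be sketched as follows. The sketch assumes the Laplace crossover in the form commonly attributed to Deep and Thakur, with location parameter a and scale parameter b; the function name, the per-gene bounds argument, and the default values are illustrative assumptions rather than the authors' implementation.

```python
import math
import random


def laplace_crossover(parent1, parent2, bounds, a=0.0, b=0.1, rng=random):
    """Produce two offspring; out-of-range genes are redrawn uniformly in range."""
    child1, child2 = [], []
    for x1, x2, (lo, hi) in zip(parent1, parent2, bounds):
        u = 1.0 - rng.random()  # lies in (0, 1], so log(u) is always defined
        if u <= 0.5:
            beta = a - b * math.log(u)
        else:
            beta = a + b * math.log(u)
        spread = abs(x1 - x2)
        for child, parent_gene in ((child1, x1), (child2, x2)):
            gene = parent_gene + beta * spread
            if not lo <= gene <= hi:
                # Repair step: replace an out-of-range gene by a random in-range value.
                gene = rng.uniform(lo, hi)
            child.append(gene)
    return child1, child2


# Hypothetical normalized chromosomes with three genes each.
unit_bounds = [(0.0, 1.0)] * 3
offspring = laplace_crossover([0.1, 0.5, 0.9], [0.9, 0.5, 0.2], unit_bounds)
```

When both parents share the same gene value, the spread is zero and the offspring inherit that value unchanged, so this operator explores only along dimensions in which the parents differ.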

Further, the mutation operator and elitism are applied sequentially to the resulting offspring. The objective of applying the optional elitism operator is to improve the overall RCGA performance. When an unseen test instance is supplied, multiple linear regression evaluates it against the training set using the Euclidean distance as the similarity measure. Furthermore, the fitness function is defined based on the mean squared error (MSE), and the objective is to minimize the MSE. The training process is repeated until the MSE falls below 0.5 or the same MSE is obtained 10 times. The testing dataset of 30% is used to measure the performance of FRRWLX, FRRWFX, FRRWSX, FRTSLX, FRTSFX, and FRTSSX. Further, the best computational procedure is identified.
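The fitness measure and the stopping rule can be sketched as below. The sketch reads "10 times the same MSE" as ten consecutive generations with an unchanged MSE, which is an assumption; the function names and the tolerance are likewise hypothetical.

```python
def mse(actual, predicted):
    """Mean squared error between actual and predicted decision values."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def should_stop(mse_history, threshold=0.5, patience=10, tol=1e-12):
    """Stop when the latest MSE is below the threshold or has stalled."""
    if not mse_history:
        return False
    if mse_history[-1] < threshold:
        return True
    if len(mse_history) < patience:
        return False
    recent = mse_history[-patience:]
    # Stalled: the last `patience` generations all report the same MSE.
    return all(abs(m - recent[0]) <= tol for m in recent)
```

In a training loop, the MSE of the best chromosome would be appended to `mse_history` after each generation and `should_stop` checked before starting the next one.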

Finally, the proposed model is validated with the validation data of 40%. The validation is done by constructing a confusion matrix, which determines the accuracy of the proposed design based on positive predictive value (precision) and sensitivity (recall). Precision, recall, and accuracy take their standard forms, Precision = Tp / (Tp + Fp), Recall = Tp / (Tp + Fn), and Accuracy = (Tp + Tn) / (Tp + Tn + Fp + Fn), where Tp refers to true positive, Tn refers to true negative, Fp refers to false positive, and Fn refers to false negative.
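Because the decision attribute has several classes, the four counts are usually obtained per class by treating that class as positive and all others as negative. The following sketch shows this one-vs-rest computation on a small made-up example; the function names and the sample labels are hypothetical and do not come from the validation data.

```python
def confusion_matrix(actual, predicted, n_classes):
    """Rows index the actual class, columns index the predicted class."""
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for a, p in zip(actual, predicted):
        matrix[a][p] += 1
    return matrix


def class_metrics(matrix, c):
    """One-vs-rest precision, recall, and accuracy for class c."""
    total = sum(sum(row) for row in matrix)
    tp = matrix[c][c]
    fp = sum(row[c] for row in matrix) - tp   # predicted c, actually another class
    fn = sum(matrix[c]) - tp                  # actually c, predicted another class
    tn = total - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    accuracy = (tp + tn) / total
    return precision, recall, accuracy


# Made-up labels for three classes, used only to exercise the functions.
actual = [0, 0, 1, 1, 2, 2]
predicted = [0, 1, 1, 1, 2, 0]
cm = confusion_matrix(actual, predicted, 3)
precision_1, recall_1, accuracy_1 = class_metrics(cm, 1)
```

For class 1 in this toy example there are two true positives, one false positive, and no false negatives, so precision is 2/3 and recall is 1.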

8 Experimental analysis

The proposed model is analyzed on a computer with 16 GB RAM, an Intel Core i5-7200U CPU @ 2.50 GHz 2.70 GHz, and the 64-bit Windows 10 Pro operating system; Matlab is used for implementation. Initially, the information system is normalized using (18) before any analysis. Table 7 presents the normalized form of the sample decision system given in Table 6.

The normalized decision system is analyzed with the FRS to select the features. The degree of dependency is computed using (6). The degrees of dependency obtained on considering a single parameter are given below. It is clear from the analysis that the parameter P5 is the most significant. Next, we computed the degrees of dependency of the other parameters combined with P5. The degree of dependency of {P5, P3} is greater than that of {P5, P1}, {P5, P2}, {P5, P4}, and P5 alone. Therefore, {P5, P3} is selected. The degrees of dependency of {P5, P3, P1}, {P5, P3, P2}, and {P5, P3, P4} are then computed, and {P5, P3, P2} is selected as it has the greatest degree of dependency. To substantiate further, the degrees of dependency of {P5, P3, P2, P1} and {P5, P3, P2, P4} are computed. Both are less than the degree of dependency of {P5, P3, P2}, and so the process terminates.
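The selection procedure above is a greedy forward search: at each step the parameter that raises the degree of dependency the most is added, and the search stops when no addition improves it. A minimal sketch follows. The `dependency` callable stands in for (6), and the numbers in `TOY_DEPENDENCY` are placeholders chosen only to reproduce the order of choices described above; they are not the dependencies measured in this study.

```python
def greedy_reduct(attributes, dependency):
    """Forward selection driven by a dependency score on attribute subsets."""
    selected, best = [], 0.0
    remaining = list(attributes)
    while remaining:
        # Score every one-attribute extension of the current subset.
        scored = [(dependency(selected + [a]), a) for a in remaining]
        score, choice = max(scored, key=lambda pair: pair[0])
        if score <= best:
            break  # no extension improves the dependency, so terminate
        selected.append(choice)
        remaining.remove(choice)
        best = score
    return selected, best


# Placeholder scores (NOT the paper's values), keyed by attribute subset.
TOY_DEPENDENCY = {
    frozenset({"P1"}): 0.10, frozenset({"P2"}): 0.20, frozenset({"P3"}): 0.25,
    frozenset({"P4"}): 0.05, frozenset({"P5"}): 0.30,
    frozenset({"P5", "P1"}): 0.35, frozenset({"P5", "P2"}): 0.40,
    frozenset({"P5", "P3"}): 0.50, frozenset({"P5", "P4"}): 0.32,
    frozenset({"P5", "P3", "P1"}): 0.55, frozenset({"P5", "P3", "P2"}): 0.70,
    frozenset({"P5", "P3", "P4"}): 0.52,
    frozenset({"P5", "P3", "P2", "P1"}): 0.65,
    frozenset({"P5", "P3", "P2", "P4"}): 0.60,
}

reduct, score = greedy_reduct(
    ["P1", "P2", "P3", "P4", "P5"],
    lambda subset: TOY_DEPENDENCY[frozenset(subset)],
)
# reduct == ['P5', 'P3', 'P2'], score == 0.7
```

Only the subsets visited by the greedy search need a score, which is why the table lists 14 subsets rather than all 31 non-empty subsets of five parameters.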

From the above analysis, it is clear that the parameters P1 and P4 are superfluous and can be eliminated from further analysis. The chief parameters selected are P2, P3, and P5. The sub-parameters of P1 and P4 that are not considered for analysis are soil electrical conductivity (p11), soil pH (p12), water pH (p41), and water electrical conductivity (p42). Finally, the three parameters P2, P3, and P5, comprising 10 sub-parameters, are considered for obtaining the decisions and are passed to the RCGA for further processing.

8.1 Preliminary setup

In feature selection, we identify the chief parameters of the decision system. Further, for building the model, the decision system is divided into training, testing, and validation sets. The training and testing sets comprise 1247 (30%) elements each, whereas the validation set comprises 1662 (40%) elements. In the training phase of the RCGA, the parameters must be fixed in order to obtain good accuracy. Successful results are obtained with a mutation probability of 0.01, a crossover probability of 0.9, and Laplace crossover scale parameters of 0 and 0.1. Similarly, the tournament size is fixed at 3. However, these parameter settings depend on the application and need not be the same for all applications.
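For illustration, the two selection operators can be sketched as below for a minimization problem. The tournament size of 3 follows the setting stated above. The roulette wheel weights use 1 / (1 + error) to turn a smaller error into a larger selection probability, which is an assumed transform and not necessarily the one used in this study; the function names are hypothetical.

```python
import random


def tournament_select(population, errors, size=3, rng=random):
    """Pick `size` distinct individuals at random and return the lowest-error one."""
    contenders = rng.sample(range(len(population)), size)
    winner = min(contenders, key=lambda i: errors[i])
    return population[winner]


def roulette_select(population, errors, rng=random):
    """Pick one individual with probability proportional to 1 / (1 + error)."""
    weights = [1.0 / (1.0 + e) for e in errors]
    return rng.choices(population, weights=weights, k=1)[0]


# Hypothetical population of three chromosomes with their MSE values.
population = [[0.2, 0.4], [0.6, 0.1], [0.9, 0.9]]
errors = [0.8, 0.3, 1.5]
picked_by_tournament = tournament_select(population, errors)
picked_by_roulette = roulette_select(population, errors)
```

With a population of exactly three and a tournament size of 3, every individual competes, so the tournament always returns the chromosome with the lowest error; in a larger population the outcome depends on the random draw.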

8.2 Results and discussions

The results obtained from the six methodologies are analyzed in this section. The methodologies are compared with respect to prediction accuracy, success rate (successful runs), and average execution time. Let \(Sr_{i}\) denote the successful runs of the ith methodology; \(Tr_{i}\) the total runs of the ith methodology; \(Mt_{i}\) the minimal average time taken by the ith methodology; \(At_{i}\) the average time used by the ith methodology; \(Op_{i}\) the number of elements predicted correctly by the ith methodology; and \(To_{i}\) the total number of elements in the ith methodology. The performance estimation (PE) is defined in (20), where \({\beta _{1}^{i}}\), \({\beta _{2}^{i}}\), and \({\beta _{3}^{i}}\) are defined respectively in (21).

Additionally, the weights of the performance measures successful runs, execution time, and accuracy are \(w_{1}\), \(w_{2}\), and \(w_{3}\) respectively. The weights take values in the range [0, 1] such that \(w_{1} + w_{2} + w_{3} = 1\). For analyzing the methodologies, we consider \(w_{i} = k\) with \(w_{j} = (1 - k)/2\) for \(j \neq i\), \(1 \le i, j \le 3\), where k is a constant in [0, 1]. The testing dataset is used to compare the performance estimation PE of the six methodologies mentioned earlier. It is difficult to visualize the (6 × 3) combinations in a single graph when all three performance measures are considered for all the methodologies. To interpret the results clearly, two measures are given equal weights while the weight of the third measure is varied from 0 to 1.
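The weighting scheme can be sketched as follows. Since (20) and (21) are not reproduced here, the sketch assumes the commonly used form in which PE is the weighted sum of three ratios, namely successful runs over total runs, minimal average time over average time, and correctly predicted elements over total elements. This form, the function names, and the sample numbers are assumptions for illustration only.

```python
def weights(k, emphasised):
    """Return [w1, w2, w3] with w[emphasised] = k and the other two (1 - k) / 2."""
    w = [(1.0 - k) / 2.0] * 3
    w[emphasised] = k
    return w


def performance_estimate(sr, tr, mt, at, op, to, w):
    """Assumed form: PE = w1 * (sr / tr) + w2 * (mt / at) + w3 * (op / to)."""
    betas = (sr / tr, mt / at, op / to)
    return sum(wi * bi for wi, bi in zip(w, betas))


# Hypothetical figures for one methodology: 8 of 10 successful runs, an average
# time twice the minimal one, and 90 of 100 elements predicted correctly.
w = weights(0.5, emphasised=0)
pe = performance_estimate(8, 10, 2.0, 4.0, 90, 100, w)
print(round(pe, 4))  # 0.75
```

Sweeping k from 0 to 1 for each choice of the emphasised measure yields one curve per methodology, which corresponds to the three cases plotted in Fig. 3.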

In the first case, the weight of execution time is varied while accuracy and successful runs are given equal weights, and the performance estimation is obtained for all six methodologies. This case is shown in Fig. 3a. In the second case, the weight of success rate is varied while execution time and accuracy are given equal weights. It is depicted in Fig. 3b. In the final case, the weight of accuracy is varied while execution time and success rate are given equal weights. This case is shown in Fig. 3c.

Further, we have plotted the actual output against the predicted output for all the methodologies. Multiple linear regression and RCGA are employed for obtaining the prediction. The predicted output for the distinct decisions from 0 to 8, together with the actual output, is depicted for each methodology in Fig. 4a to f. On analyzing Figs. 3 and 4, it is found that FRRWLX has the minimal error rate. The error rates of the other methodologies, FRRWFX, FRRWSX, FRTSLX, FRTSFX, and FRTSSX, are higher than that of FRRWLX. This indicates that FRRWLX outperforms the other methodologies in terms of both performance and error rate.

8.3 Decision validation

Finally, the proposed model is validated using the validation dataset of 1662 elements. Prediction is carried out by inputting the elements without the decision attribute, and the elements are analyzed for prediction using FRRWLX. The predicted and actual decisions are compared, and the confusion matrix is constructed to validate the decision. Finally, the positive predictive value, sensitivity, and accuracy are calculated. Table 8 presents the various metrics of the methodology FRRWLX.

8.4 Comparison of FRRWLX with existing methodologies

The proposed methodology FRRWLX is compared with other techniques to show the merit of the proposed research. In this section, the accuracy of FRRWLX is compared with that of six different models. The six models taken for comparative analysis are Pawlak’s rough set (PRS) [28], association rule mining using rough set (ARMRS) of Rao and Mitra [29], rough set and Bayesian classification (RSBCL) [5], artificial neural network (ANN) [10], intuitionistic fuzzy optimization technique (IFOT) [7], and rough set real-coded genetic algorithm with roulette wheel selection and Laplace crossover (RSRWLC) of Rathi and Acharjya [31]. The empirical analysis data is used for the comparative analysis. The results obtained are presented in Table 9. It is evident that the proposed technique, FRRWLX, performs well as compared to the other techniques. The accuracies achieved by FRRWLX, RSRWLC, PRS, ARMRS, RSBCL, ANN, and IFOT are 95.51%, 93.5%, 89.89%, 90.94%, 87.47%, 90.44%, and 88.53% respectively. The developed methodology FRRWLX thus achieves an accuracy 2.01 percentage points higher than that of the RSRWLC model.

Further, FRRWLX is compared with RSRWLC [31] for its prediction accuracy, success rate, and execution time. The two methodologies are compared with respect to success rate in Fig. 5a, execution time in Fig. 5b, and prediction accuracy in Fig. 5c. It is interesting to note from these figures that FRRWLX outperforms RSRWLC.

9 Conclusion

This investigation integrates the fuzzy rough set with the RCGA. Initially, the fuzzy rough set FRQR algorithm is used to identify the superfluous attributes. These superfluous attributes are removed as they do not have any influence on the information system. Further, in the training phase of the decision system, RCGA is used for prediction, and the K-nearest neighbour (KNN) algorithm along with multiple linear regression is employed to minimize errors in the training and testing phases. Six different methodologies, FRRWLX, FRRWFX, FRRWSX, FRTSLX, FRTSFX, and FRTSSX, are analyzed for prediction. The agricultural dataset obtained from the Krishi Vigyan Kendra and soil testing laboratory of Tiruvannamalai district of Tamilnadu, India is considered for analysis. From the experimental results, it is found that the methodology FRRWLX performs well as compared to the other methodologies. Further, FRRWLX is compared with existing methodologies. It is found that FRRWLX has a prediction accuracy 2.01 percentage points higher than that of RSRWLC [31], which in turn has higher accuracy than ARMRS, PRS, RSBCL, ANN, and IFOT. Additionally, FRRWLX attains this higher accuracy with fewer features than RSRWLC. The proposed model helps in managing an agricultural farm in many ways. It helps in identifying the crops to be cultivated on a piece of land. Simultaneously, it can help analyze soil conditions and help farmers use the minimum amount of fertilizer on their land. Overall, the proposed model supports agricultural farm management with the help of information technology. Future work will focus on the integration of the fuzzy rough set with the binary coded genetic algorithm to get improved accuracy. Besides, the integration of soft set on fuzzy approximation space with the genetic algorithm could be explored.

References

Abed-Elmdoust A, Kerachian R (2012) Wave height prediction using the rough set theory. Ocean Eng 54:244–250

Acharjya DP, Abraham A (2020) Rough computing—A review of abstraction, hybridization and extent of applications. Eng Appl Artif Intell 96(11):103924. https://doi.org/10.1016/j.engappai.2020.103924

Adhikari R, Agrawal RK (2013) An introductory study on time series modeling and forecasting. LAP Lambert Academic Publishing, Germany

Anitha A, Acharjya DP (2017) Crop suitability prediction in Vellore district using rough set on fuzzy approximation space and neural network. Neural Comput Appl. https://doi.org/10.1007/s00521-017-2948-1

Acharjya DP, Roy D, Rahaman MA (2012) Prediction of missing associations using rough computing and Bayesian classification. Int J Intell Syst Appl 4(11):1–13

Bhatt RB, Gopal M (2005) On fuzzy-rough sets approach to feature selection. Pattern Recogn Lett 26(7):965–975

Bharati SK, Singh SR (2014) Intuitionistic fuzzy optimization technique in agricultural production planning: A small farm holder perspective. Int J Comput Appl 89(6):17–23

Chifurira R, Chikobvu D (2014) A weighted multiple regression model to predict rainfall patterns: principal component analysis approach. Mediterranean J Soc Sci 5(7):34–42

Cornelis C, De Cock M, Kerre EE (2003) Intuitionistic fuzzy rough sets: at the crossroads of imperfect knowledge. Expert Syst 20(5):260–270

Dahikar SS, Rode SV (2014) Agricultural crop yield prediction using artificial neural network approach. Int J Innov Res Electr Electron Instrum Control Eng 2(1):683–686

Deb K (2012) Optimization for Engineering Design: Algorithms and Examples. PHI Learning Pvt. Ltd, New Delhi

Deep K, Thakur M (2007) A new crossover operator for real coded genetic algorithms. Appl Math Comput 188(1):895–911

Deep K, Thakur M (2007) A new mutation operator for real coded genetic algorithms. Appl Math Comput 193(1):211–230

Demartini E, Gaviglio A, Bertoni D (2015) Integrating agricultural sustainability into policy planning: A geo-referenced framework based on Rough Set theory. Environ Sci Policy 54:226–239

Dubois D, Prade H (1990) Rough fuzzy sets and fuzzy rough sets. Int J Gen Syst 17(2-3):191–209

Dubois D, Prade H (1992) Putting rough sets and fuzzy sets together. In: Intelligent Decision Support. Kluwer Academic Publishers, Dordrecht, pp 203–232

Enke D, Grauer M, Mehdiyev N (2011) Stock market prediction with multiple regression, fuzzy type-2 clustering and neural networks. Procedia Comput Sci 6:201–206

Herrera F, Lozano M, Verdegay JL (1998) Tackling real-coded genetic algorithms: Operators and tools for behavioural analysis. Artif Intell Rev 12(4):265–319

Jensen R, Shen Q (2004) Fuzzy rough attribute reduction with application to web categorization. Fuzzy Sets Syst 141(3):469–485

Jensen R, Shen Q (2007) Fuzzy rough sets assisted attribute selection. IEEE Trans Fuzzy Syst 15(1):73–89

Jensen R, Shen Q (2009) New approaches to fuzzy rough feature selection. IEEE Trans Fuzzy Syst 17(4):824–838

Jensen R, Cornelis C (2011) Fuzzy rough nearest neighbour classification and prediction. Theor Comput Sci 412(42):5871–5884

Kuncheva LI (1992) Fuzzy rough sets: application to feature selection. Fuzzy Sets Syst 51(2):147–153

Liu J, Goering CE, Tian L (2001) A neural network for setting target corn yields. Trans ASAE 44(3):705

Liu G (2010) Rough set theory based on two universal sets and its applications. Knowl Based Syst 23(2):110–115

McKee TE (2000) Developing a bankruptcy prediction model via rough sets theory. Intell Syst Account Financ Manag 9(3):159–173

Papageorgiou EI, Markinos AT, Gemtos TA (2011) Fuzzy cognitive map based approach for predicting yield in cotton crop production as a basis for decision support system in precision agriculture application. Appl Soft Comput 11(4):3643–3657

Pawlak Z (1991) Rough Sets: Theoretical Aspects of Reasoning about Data. Kluwer Academic Publishers, Dordrecht

Rao DVJ, Mitra P (2005) A rough association rule based approach for class prediction with missing attribute values. In: Proceedings of the 2nd indian international conference on artificial intelligence, pp 2420–2431

Rathi R, Acharjya DP (2018) A rule based classification for agriculture vegetable production for Tiruvannamalai district using rough set and genetic algorithm. Int J Fuzzy Syst Appl 7(1):74–100

Rathi R, Acharjya DP (2018) A framework for prediction using rough set and real coded genetic algorithm. Arabian J Sci Eng 43(8):4215–4227

Singh A, Deep K (2015) Real coded genetic algorithm operators embedded in gravitational search algorithm for continuous optimization. Int J Intell Syst Appl 7(12):1–22

Sun B, Ma W, Liu Q (2013) An approach to decision making based on intuitionistic fuzzy rough sets over two universes. J Oper Res Soc 64(7):1079–1089

Tsoumakas G, Katakis I (2007) Multi label classification: An overview. Int J Data Warehousing Min 3(3):1–13

Wang X, Yang J, Teng X, Peng N (2005) Fuzzy rough set based nearest neighbor clustering classification algorithm. Fuzzy Systems and Knowledge Discovery, LNCS 3613. Springer, pp 370–373

Zadeh LA (1965) Fuzzy sets. Inf Control 8(3):338–353

Ethics declarations

Ethical approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Informed Consent

Informed consent was obtained from all individual participants included in the study.

Conflict of Interests

First Author declares that he has no conflict of interest. Second Author declares that he has no conflict of interest.

Cite this article

Acharjya, D.P., Rathi, R. An integrated fuzzy rough set and real coded genetic algorithm approach for crop identification in smart agriculture. Multimed Tools Appl 81, 35117–35142 (2022). https://doi.org/10.1007/s11042-021-10518-7
