Abstract

Multilevel image thresholding is an important step in multimedia tools for understanding and interpreting objects in the real world. The 1-D Masi entropy is relatively new in thresholding applications, but 1-D Masi entropy-based image thresholding fails to consider contextual information. To address this problem, we propose a 2-D Masi entropy-based multilevel image thresholding method that uses a 2-D histogram, which preserves contextual information during the thresholding process. The computational complexity of multilevel thresholding grows because of the exhaustive search process, and it can be reduced by a nature-inspired optimizer. In this work, we propose a leader Harris hawks optimization (LHHO) for multilevel image thresholding, which enhances the exploration capability of Harris hawks optimization (HHO). The increased exploration is achieved by an adaptive perching strategy during the exploration phase together with a leader-based mutation-selection step in each generation of Harris hawks. The performance of LHHO is evaluated on 23 standard classical benchmark functions and is found to be better than that of HHO. The LHHO is then employed to obtain optimal threshold values using the 2-D Masi entropy-based multilevel thresholding objective function. For the experiments, 500 images from the Berkeley segmentation dataset (BSDS 500) are considered. A comparative study with thresholding methods based on state-of-the-art algorithms is performed using the segmentation metrics peak signal-to-noise ratio (PSNR), structural similarity index (SSIM), and feature similarity index (FSIM). The experimental results reveal a remarkable difference in thresholding performance. For instance, the average PSNR values (computed over 500 images) at level 5 increase by 2% to 4% with 2-D Masi entropy compared with 1-D Masi entropy.

1 Introduction

Image segmentation is an important step in image processing, in which a set of meaningful homogeneous sub-regions is extracted [4]. Thresholding is one of the most popular approaches to image segmentation. It is generally founded on a similarity approach based on the pixel intensity and the corresponding histogram of an image. Several global thresholding methods are presented in the literature [13, 14, 45, 55, 56, 59, 74, 76] to segment the image and extract the patterns of interest. Thresholding is broadly classified into bi-level and multilevel approaches. Bi-level thresholding partitions the image into two different sub-regions, whereas multilevel image thresholding is an extension of bi-level thresholding in which the image is partitioned into more than two sub-regions. As bi-level thresholding does not provide the desired pattern for real-life problems, multilevel image thresholding is strongly recommended [7].

The histogram-based approach is the more popular one in thresholding-based image segmentation [55, 59], because we simply need to determine the threshold (intensity) values that partition the image into different sub-regions. Some valuable works in the literature on one-dimensional histogram-based thresholding are Otsu’s method [43], Kapur’s entropy [25], Tsallis entropy [48, 50, 64, 65], Rényi entropy [52], and Masi entropy [35]. To improve the segmentation performance, some researchers extended the one-dimensional histogram-based thresholding problem to a two-dimensional histogram-based thresholding problem, such as 2-D Otsu’s method [33], 2-D Tsallis entropy [57], 2-D Rényi entropy [53], 2-D Tsallis–Havrda–Charvát entropy [54], edge magnitude [46], and gray gradient [47]. As the number of thresholds increases in multilevel image thresholding, the complexity of the problem increases. To reduce the time complexity, some researchers suggested recursive algorithms [32, 49, 73] with the help of lookup tables. However, the computational time still increases [62] as the number of thresholds increases.

The time complexity of multilevel image thresholding can be reduced using a soft computing approach. Several such methods are discussed in the literature, and most of the popular techniques are based on nature-inspired algorithms. The genetic algorithm (GA) was used in [18] to find optimal threshold values from a histogram whose length was reduced by a wavelet transform. The Cuckoo search (CS) was used to obtain multilevel threshold values with Tsallis entropy [1], Kapur’s entropy [8], and edge magnitude [46], and showed an improvement in fitness value along with reduced computational time. The artificial bee colony (ABC) was employed to reduce the computational time needed to obtain the optimal multilevel thresholds by maximizing Kapur’s entropy in [21] and Tsallis entropy in [75]. The computational efficiency of minimum cross entropy-based multilevel image thresholding over the exhaustive search method was demonstrated using particle swarm optimization (PSO) in [72], honey bee mating optimization (HBMO) in [20], and the firefly algorithm (FA) in [22]. Differential evolution (DE) was employed with 2-D Tsallis entropy in [57] to obtain the optimal threshold values and was shown to be more effective than PSO, GA, and ABC based approaches. Ant colony optimization (ACO) was employed to optimize Otsu’s method in [78], and showed superior results over the exhaustive Otsu’s method in solution quality and processing time. The whale optimization algorithm (WOA) and moth-flame optimization (MFO) were used in [12] to obtain the optimal threshold values using Otsu’s method, and MFO was found to perform better than WOA. The wind driven optimization (WDO) was used to obtain the multilevel thresholds by maximizing Kapur’s entropy in [8]. The gray wolf optimizer (GWO) showed stability in obtaining the optimal threshold values using Otsu’s and Kapur’s entropy methods in [26] when compared with PSO and bacteria foraging optimization (BFO). The crow search algorithm (CSA) was employed to obtain the optimal threshold values by maximizing Otsu’s between-class variance in [11] and Kapur’s entropy in [66]. The krill herd optimization (KHO) [5] showed its superiority in obtaining the optimal threshold values when compared with BFO, PSO, GA, and MFO (using both Otsu’s and Kapur’s entropy-based multilevel image thresholding). The coral reef optimization (CRO) was employed with diagonal cross entropy (DCE) in [2] to obtain the optimal threshold values while preserving the edge information in the image. The Masi entropy-based multilevel image thresholding approach using the water cycle algorithm (WCA) was developed in [24], and demonstrated its superiority in quality and convergence rate when compared with Tsallis entropy.

The basic version of an optimization algorithm cannot be used for all types of problems. Researchers therefore hybridize or modify the basic version to improve its performance on specific optimization problems. An adaptive crossover bacterial foraging optimization algorithm (ACBFOA) [39] was proposed by combining the crossover mechanism of GA with the bacteria foraging optimization algorithm (BFOA) to enhance the search for the optimal solution, and was then applied to a face recognition problem. A hybrid Cuckoo search-gravitational search algorithm (CS-GSA) was proposed in [40] to increase the exploration capability of CS using the parameters of the gravitational search algorithm (GSA), and was then applied to recursive filter design, a signal processing application. A dividing-based many-objective evolutionary algorithm for large-scale feature selection (DMEA-FS) [28] was developed in the framework of the many-objective evolutionary algorithm (MaOEO) for feature selection. A whale optimization algorithm–differential evolution (WOA-DE) was proposed in [31] by replacing the exploration phase of WOA with the crossover and mutation operators of DE for better efficiency in 3D mesh modeling. A multi-objective sparse span array (MOSSA) algorithm was proposed in [29] based on the framework of multi-objective particle swarm optimization (MOPSO) for better antenna design. The biogeography-based optimization algorithm with elite learning (BBO-EL) was proposed in [9] by incorporating an elite learning operator based on PSO, and was successfully applied to multimodal medical image registration.

Some of the advancements in this framework and their application to multilevel image thresholding are discussed here. The comparative quantum particle swarm optimization (CQPSO) was proposed in [17] to enhance the convergence rate and overcome the dimensionality problem, and was employed to obtain the optimal thresholds efficiently using Otsu’s method. A modification of the swarming and reproduction behavior of BFOA led to a modified bacterial foraging algorithm (MBFO) [58], which shows better performance than BFOA, PSO, and GA based approaches for multilevel image thresholding using Kapur’s entropy. The Brownian distribution guided firefly algorithm (BDFA), in which FA uses the Brownian distribution, was employed to obtain the optimal threshold values based on Otsu’s method and shows better performance than FA in [63]. Hybridizing the reset strategy of CS with DE yields the hybrid differential evolution (HjDE) [38], which is used in Otsu’s method-based multilevel image thresholding to obtain the thresholds. A hybrid optimization algorithm, bird mating optimization–differential evolution (BMO-DE) [3], integrates DE into bird mating optimization (BMO) to increase the efficiency of Kapur’s entropy and Otsu’s method-based multilevel image thresholding. To increase the efficiency of Tsallis entropy in multilevel image thresholding, an improved thermal exchange optimization was employed in [67], which shows better performance than CSA, PSO, the flower pollination algorithm (FPA), and the bat algorithm (BA/BAT). The multi-objective differential evolution and firework algorithm for automatic simultaneous clustering and classification (MASCC-DE/FWA) [30] was developed by hybridizing the search strategies of DE and the firework algorithm (FWA) to demonstrate the advantage of treating image segmentation as a classification problem. This has motivated the authors to investigate a new multilevel image thresholding approach to enrich image segmentation techniques as an important component of multimedia tools and applications.

The focus of this work is to develop a 2-D Masi entropy-based multilevel image thresholding method that overcomes the disadvantage of the 1-D Masi entropy. The 1-D Masi entropy was successfully applied to bi-level thresholding in [42] and further extended to multilevel image thresholding in [23, 24, 27, 60]. The 1-D Masi entropy depends on the image histogram, which records only how often each gray level occurs in the image. The 1-D histogram therefore lacks contextual information, because it does not consider the spatial correlation between pixels [20]; this can be overcome by a 2-D histogram. This led us to extend the 1-D Masi entropy to a 2-D Masi entropy for multilevel image thresholding, which uses the 2-D histogram to obtain the Masi entropy. The 2-D Masi entropy-based multilevel image thresholding is an exhaustive search process of \( O\left({L}^{2K}\right) \) for K thresholds in an image with L gray levels, which is quite expensive [62]. This led us to a second development: finding an efficient optimizer, since the multilevel image thresholding problem can be treated as a specific optimization problem. For this purpose, we have chosen a recent metaheuristic algorithm, Harris hawks optimization (HHO), proposed in [19]. The HHO is inspired by the cooperative perching strategy of Harris hawks. As described in [19], the HHO shows quite impressive results when compared with some well-known optimization algorithms: GA [61], PSO [61], biogeography-based optimization (BBO) [61], FPA [69], GWO [37], BA/BAT [70], FA [15], CS [16], MFO [36], teaching-learning-based optimization (TLBO) [51], and DE [61].

However, the exploration behavior of Harris hawks depends on an equal perching chance (a probability of 0.5), so the exploration is limited and random. This has motivated us to use an adaptive perch probability, decided by the fitness of the Harris hawks, in the exploration stage. The second disadvantage of the HHO is that, from the middle of the search process onward, the escape energy is limited to within unity, which again limits the exploration. This second disadvantage is overcome by a leader-based mutation-selection approach. We therefore propose a leader Harris hawks optimization (LHHO) based on simultaneous mutation and crossover using the three best leader Harris hawks, along with an adaptive perch probability, to enhance the exploration. The LHHO inherently includes these two new ideas. The performance of the LHHO is evaluated using twenty-three well-known classical unimodal and multimodal benchmark test functions [39, 41, 71], and it shows significant improvement over the HHO due to enhanced exploration. This encourages us to employ the LHHO to find the optimal threshold values using the 2-D Masi entropy. To assess the performance of the proposed 2-D Masi entropy, the results are compared with those of the 1-D Masi entropy. For the experiments, 500 images from the Berkeley Segmentation Data Set (BSDS 500) [34] are considered. The segmented image obtained using the optimal threshold values is evaluated using well-known quantitative metrics: peak signal-to-noise ratio (PSNR) [1], feature similarity (FSIM) [77], and structure similarity (SSIM) [79]. The LHHO based multilevel image thresholding is compared with methods based on other state-of-the-art optimization algorithms, namely CS [1], PSO [72], FA [22], WDO [8], the sooty tern optimization algorithm (STOA) [10], and DE [57], as well as the HHO [19].

The main contributions of this work are as follows:

- A leader Harris hawks optimization (LHHO) is proposed to enhance the exploration capability of the HHO by adding an adaptive perch probability and a leader-based mutation-selection approach. The LHHO is evaluated on 23 well-known unimodal and multimodal benchmark functions, and shows significant improvement over the HHO.

- A 2-D Masi entropy-based multilevel image thresholding is suggested (an extension of the 1-D Masi entropy) that uses a 2-D histogram to take the contextual information into account during the thresholding process.

- An LHHO based multilevel image thresholding is proposed based on the 2-D Masi entropy, which is validated using the BSDS 500 dataset and various state-of-the-art optimization algorithms.

The rest of the paper is organized as follows. In Section 2, the 1-D Masi entropy and the HHO algorithm are briefly reviewed. The proposed leader Harris hawks optimization (LHHO) and its performance on benchmark functions are presented in Section 3. The 2-D Masi entropy-based thresholding approach is described in Section 4. The performance of the evolutionary 2-D Masi entropy-based multilevel image thresholding using the LHHO is presented in Section 5. Finally, the concluding remarks are drawn in Section 6.

2 Preliminaries

2.1 1-D Masi entropy-based image thresholding

A generalized entropy was introduced by Masi in [35], and was successfully applied to bi-level thresholding in [42] and to multilevel image thresholding in [23, 24, 27, 60]. The Masi entropy can handle both additive and non-extensive information in a physical system. Threshold selection for an image using Masi entropy mainly depends on the 1-D histogram.

Let us consider a gray level image I of dimension M × N, which has L gray levels in the range [0, L − 1]. The gray level value of the pixel at spatial coordinate (x, y) is represented as f(x, y), where x ∈ [1, 2, ⋯, M] and y ∈ [1, 2, ⋯, N]. Let ni represent the number of pixels with gray level value i, where i ∈ [0, 1, ⋯, L − 1]. Then the probability of each gray level can be represented as

\( {p}_i=\frac{n_i}{M\times N} \)

and it must satisfy

\( {\sum}_{i=0}^{L-1}{p}_i=1 \)

The bi-level thresholding consists of two classes, the foreground (Cf) and the background (Cb), which are separated using a threshold value t ∈ [1, 2, ⋯, L − 2]. Then the foreground class probability (ωf) and background class probability (ωb) are defined as

and

The foreground class Masi entropy (Hf) and background class Masi entropy (Hb) are calculated as

and

where r is the entropic parameter, which is set to 0.5.

The bi-level thresholding based on Masi entropy is described by

and the optimal threshold t∗ based on Masi entropy can be defined as

The authors in [23, 24, 27, 60] extended the bi-level thresholding to multilevel thresholding using Masi entropy, where the number of thresholds is more than one. Let us consider that the image is divided into K + 1 classes as C = {C0, C1, ⋯, CK − 1, CK}, which is a set of the foreground class C0, the intermediate classes Ci (i = 1, 2, ⋯, K − 1), and the background class CK, based on the K threshold values t1, t2, ⋯, tK. The gray level values of the different classes are defined as:

where 0 < t1 < t2 < ⋯ < tK < L − 1. In addition, we assign t0 = 0 and tK + 1 = L.
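The class definition can be illustrated with a short Python sketch. It assumes that class Cj holds the gray levels tj ≤ g < tj + 1 (a half-open convention chosen here to agree with t0 = 0 and tK + 1 = L); the function name `label_classes` is hypothetical and not part of the original method.

```python
import numpy as np

def label_classes(image, thresholds):
    """Map each pixel to a class index 0..K, given K sorted thresholds.

    Assumed convention: class j holds gray levels t_j <= g < t_{j+1},
    with t_0 = 0 and t_{K+1} = L.
    """
    t = np.asarray(thresholds)
    if np.any(np.diff(t) <= 0):
        raise ValueError("thresholds must be strictly increasing")
    # np.digitize returns j such that t[j-1] <= g < t[j], i.e. the class index.
    return np.digitize(image, t)

# Tiny 2 x 3 image segmented with K = 2 thresholds (three classes).
image = np.array([[0, 50, 99], [100, 180, 255]], dtype=np.uint8)
labels = label_classes(image, [100, 200])
```

Replacing each label by a representative gray level of its class (for example the class mean) would give the segmented image that the quality metrics are later computed on.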

The different class probability for multilevel thresholds are defined as

Then, the Masi entropy Hj for the jth class is calculated as:

The multilevel image thresholding based on Masi entropy is described by

and the optimal threshold \( \left\{{t}_1^{\ast },{t}_2^{\ast },\cdots, {t}_K^{\ast}\right\} \) based on Masi entropy can be defined as:

Finally, Eq. (13) is used as the fitness function to solve the multilevel image thresholding problem in [23, 24, 27, 60], although these authors suggest different optimization techniques to maximize the objective function in Eq. (13).
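As a minimal sketch of this objective, the following Python code sums per-class Masi entropies over a 1-D histogram. It assumes the commonly used form of the Masi entropy for a class with normalized probabilities q, namely log(1 − (1 − r) Σ q log q) / (1 − r), and half-open classes [tj, tj + 1); both are assumptions made for illustration, and the function names are hypothetical.

```python
import numpy as np

def masi_entropy_1d(hist, thresholds, r=0.5):
    """Sum of per-class Masi entropies for a 1-D gray-level histogram."""
    p = np.asarray(hist, dtype=float)
    p = p / p.sum()                       # p_i = n_i / (M * N)
    L = p.size
    edges = [0, *thresholds, L]           # t_0 = 0, t_{K+1} = L
    total = 0.0
    for lo, hi in zip(edges[:-1], edges[1:]):
        cls = p[lo:hi]
        w = cls.sum()                     # class probability
        if w <= 0.0:
            continue                      # empty class adds nothing (assumption)
        q = cls[cls > 0] / w              # skip empty bins: 0 * log 0 taken as 0
        total += np.log(1.0 - (1.0 - r) * np.sum(q * np.log(q))) / (1.0 - r)
    return total

def best_single_threshold(hist, r=0.5):
    """Exhaustive bi-level search over t in [1, L - 2]."""
    L = len(hist)
    return max(range(1, L - 1), key=lambda t: masi_entropy_1d(hist, [t], r))
```

For K = 1 the exhaustive search is cheap; for larger K the same `masi_entropy_1d` function would serve as the fitness that an optimizer maximizes.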

2.2 Harris hawks optimization (HHO)

Harris hawks optimization (HHO), a population-based nature-inspired optimization algorithm, was proposed in 2019 [19]. The HHO is inspired by the cooperative behavior and chasing style that Harris hawks use to capture prey, called the surprise pounce, which is also known as the “seven kills” strategy. In the seven kills strategy, several hawks attack the prey cooperatively from different directions and converge on the detected prey. Sometimes, some hawks also move suddenly to another nearby place to find new prey. Based on these behaviors of the Harris hawks, the HHO is modeled with an exploration phase and an exploitation phase.

Let a population of N hawks cooperatively search for the prey in the search area. We define Xi(t) as the current position vector of hawk i (i = 1, 2, ⋯, N) and Xprey(t) as the best position vector among all hawks in the search area, that is, the position vector of the prey, for the current generation t (0 < t ≤ tmax), where tmax is the maximum number of generations.

2.2.1 Exploration phase

The Harris hawks perch randomly at some location and wait to detect the prey, choosing between two strategies with an equal chance q. One strategy, used when q < 0.5, is based on the position of the other family members, in which the hawks are close enough to attack the prey. The other strategy, used when q ≥ 0.5, is that the Harris hawk perches on some tall tree and waits for the prey. These two strategies are modeled as:

where Xi(t + 1) is the next position vector of hawk i (i = 1, 2, ⋯, N) in generation t + 1, Xrandom(t) is the position vector of a randomly selected hawk in generation t, Xm(t) is the mean position vector of all N hawks in generation t, calculated as \( {X}_m(t)=\frac{1}{N}{\sum}_{i=1}^N{X}_i(t) \), LB is the lower bound of the search space, UB is the upper bound of the search space, and r1, r2, r3, r4, and q are random numbers in the range (0, 1).
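The two perching strategies can be sketched in Python as follows. The update expressions are the form commonly published for the HHO [19] and are stated here as an assumption, since they are not derived in this section; the function name `hho_explore` and the clipping to the search bounds are illustrative choices.

```python
import numpy as np

def hho_explore(X, i, x_prey, lb, ub, rng):
    """One exploration move for hawk i (assumed form of the HHO rule)."""
    r1, r2, r3, r4, q = rng.random(5)
    if q >= 0.5:
        # Perch on a random tall tree: move relative to a randomly chosen hawk.
        x_rand = X[rng.integers(len(X))]
        x_new = x_rand - r1 * np.abs(x_rand - 2.0 * r2 * X[i])
    else:
        # Perch based on the family: use the prey and the mean hawk position.
        x_mean = X.mean(axis=0)
        x_new = (x_prey - x_mean) - r3 * (lb + r4 * (ub - lb))
    return np.clip(x_new, lb, ub)  # keep inside [LB, UB] (an assumption)

rng = np.random.default_rng(0)
X = rng.uniform(-5.0, 5.0, size=(6, 3))   # 6 hawks in a 3-D search space
x_next = hho_explore(X, 0, X[1], -5.0, 5.0, rng)
```

Because q is drawn uniformly, each branch is taken with probability 0.5 regardless of how good the hawk currently is.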

2.2.2 The transition between the exploration and the exploitation phase

The transition between the exploration and the exploitation depends on the escaping energy E of the prey, which lies in the range (−2, 2) and can be modeled as:

where E0 is the initial escaping energy of the prey in the range of (−1, 1) calculated as:

The initial escaping energy 0 to −1 represents the prey that is physically flagging and 0 to 1 represents that the prey is strengthening. When the escaping energy |E| ≥ 1, the hawk tries to explore the prey location, and when |E| < 1, the hawk tries to exploit the nearby solutions.
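A minimal Python sketch of the energy schedule is given below. It assumes the linear decay E = 2 E0 (1 − t / tmax) with E0 = 2 · rand − 1, which matches the stated ranges of E and E0 but is an assumption about the exact form; the function name `escape_energy` is hypothetical.

```python
import numpy as np

def escape_energy(t, t_max, rng):
    """Escaping energy of the prey at generation t (assumed linear decay)."""
    e0 = 2.0 * rng.random() - 1.0          # initial energy E0 in (-1, 1)
    return 2.0 * e0 * (1.0 - t / t_max)    # E shrinks linearly toward 0

rng = np.random.default_rng(0)
t_max = 500
for t in (1, 125, 250, 375, 500):
    samples = np.array([escape_energy(t, t_max, rng) for _ in range(10_000)])
    # Fraction of draws with |E| >= 1, i.e. draws that would trigger exploration.
    print(t, float(np.mean(np.abs(samples) >= 1.0)))
```

Running the loop shows how the share of exploring draws changes as t grows, which is the behavior that the LHHO later sets out to compensate for.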

2.2.3 Exploitation phase

In the exploitation phase, the Harris hawks attack the intended prey, which was detected in the earlier phase, by performing the surprise pounce. Suppose the prey always tries to escape from the targeted area of the hawks with an escaping chance r. When r < 0.5, the prey successfully escapes, and when r ≥ 0.5, the prey fails to escape before the surprise pounce performed by the hawk. Based on the escaping energy E of the prey, the hawk performs a soft besiege (softly encircling the prey from various directions) when |E| ≥ 0.5, and a hard besiege (tightly encircling the prey from various directions) when |E| < 0.5. Based on the behavior of the prey and the chasing style of the hawk, the HHO can be modeled using four possible attacking strategies as described below.

- Soft besiege (r ≥ 0.5 and |E| ≥ 0.5)

In this attacking strategy, the prey cannot escape, although it has enough energy to do so. The hawk i (i = 1, 2, ⋯, N) softly encircles the prey until the prey is exhausted and then performs the surprise pounce, which can be modeled as:

where J represents the jump strength of the prey during the escaping from the hawk target area, r5 is a random number in the range (0, 1).

- Hard besiege (r ≥ 0.5 and |E| < 0.5)

In this attacking strategy, the prey cannot escape as it is already exhausted. The hawk i (i = 1, 2, ⋯, N) tightly encircles the prey and performs the surprise pounce, which can be modeled as:

- Soft besiege with progressive rapid dives (r < 0.5 and |E| ≥ 0.5)

In this attacking strategy, the prey has enough energy to escape, and the hawk softly encircles the prey before a surprise pounce. The prey uses Lévy flight (LF) [6, 68] movements to escape from the targeted area of the hawk.

Let the hawk i (i = 1, 2, ⋯, N) perform the soft besiege by evaluating the previous move and the prey location as:

Then, the hawk compares its current move with the previous move to decide whether it was worthwhile. If it was not, the hawk performs irregular, abrupt, and rapid dives while approaching the prey, which can be modeled as:

where S is a random vector of dimension 1 × D, D is the dimension of the problem, and LF [6, 68] is the Lévy flight function, which is calculated as:

where u and v are random variables in the range (0, 1), and β(=1.5) is a constant.

Then the hawk i (i = 1, 2, ⋯, N) positions are updated in the soft besiege with progressive rapid dives as:

- Hard besiege with progressive rapid dives (r < 0.5 and |E| < 0.5)

In this attacking strategy, the prey does not have enough energy to escape, and the hawk performs a hard besiege before the surprise pounce to catch the prey. This attacking strategy is similar to the soft besiege with progressive rapid dives, but here the hawk also considers the locations of the other hawks relative to the escaping prey. So, the update rule of the position vector in this attacking strategy is given as

where

2.2.4 Pseudocode of HHO

To begin, let D be the dimension of the problem, N the number of hawks employed in the search space, UB and LB the upper and lower boundaries of the search space, tmax the maximum number of iterations, and f the fitness function of the given problem. Then initialize the position vector of the ith hawk as \( {X}_i\left(t=1\right)=\left({x}_i^1,{x}_i^2,\cdots, {x}_i^D\right) \) for the D-dimensional problem at generation t = 1.
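The four attacking strategies of Section 2.2.3 can be sketched as a single Python update function. This is an illustrative sketch under several assumptions, not the pseudocode of [19]: the update expressions follow the commonly published form of the HHO, the Lévy step uses Mantegna's scheme with normally distributed draws (the text above describes u and v as random variables in (0, 1)), S is drawn uniformly, the fitness f is minimized, and boundary handling is omitted. The names `levy_flight` and `hho_exploit` are hypothetical.

```python
import math
import numpy as np

def levy_flight(dim, rng, beta=1.5):
    """Levy step via Mantegna's scheme (normal draws are an assumption)."""
    sigma = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
             / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)
    u = rng.normal(0.0, sigma, dim)
    v = rng.normal(0.0, 1.0, dim)
    return 0.01 * u / np.abs(v) ** (1 / beta)

def hho_exploit(X, i, x_prey, E, f, rng):
    """One exploitation move for hawk i when |E| < 1; f is minimized."""
    x = X[i]
    r = rng.random()                      # escaping chance of the prey
    J = 2.0 * (1.0 - rng.random())        # jump strength of the prey
    if r >= 0.5 and abs(E) >= 0.5:        # soft besiege
        return (x_prey - x) - E * np.abs(J * x_prey - x)
    if r >= 0.5:                          # hard besiege
        return x_prey - E * np.abs(x_prey - x)
    # Progressive rapid dives: the soft version is built around the hawk
    # itself, the hard version around the mean position of all hawks.
    ref = x if abs(E) >= 0.5 else X.mean(axis=0)
    Y = x_prey - E * np.abs(J * x_prey - ref)
    if f(Y) < f(x):
        return Y                          # the plain dive already improves
    Z = Y + rng.random(x.size) * levy_flight(x.size, rng)
    return Z if f(Z) < f(x) else x        # otherwise try the Levy dive

rng = np.random.default_rng(0)
X = rng.uniform(-5.0, 5.0, size=(8, 4))
sphere = lambda x: float(np.sum(x ** 2))
x_next = hho_exploit(X, 0, X[1], 0.3, sphere, rng)
```

In a full optimizer this function would be called for every hawk with |E| < 1, after which the prey position is refreshed from the best fitness in the population.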

3 The proposed leader Harris hawks optimization (LHHO)

The motivation for the development of the leader Harris hawks optimization (LHHO) comes from the exploration behavior of the Harris hawk. The disadvantage of the HHO is its limited exploration. The reason is the perching strategy, which depends on the equal chance q [19]. As described in the HHO algorithm [19], the hawks perch based on the position of other family members if q < 0.5, and otherwise perch on a random tall tree if q ≥ 0.5. This limitation can be overcome with an adaptive perch probability for each individual hawk.

Exploration phase (|E| ≥ 1): Let us define an adaptive perch probability (\( {p}_{ap}^i \)) of the ith hawk, which depends on three fitness values: f(Xi), the fitness of the current hawk with position vector Xi; f(Xprey), the fitness of the best-performing hawk with position vector Xprey; and f(Xworst), the fitness of the worst-performing hawk with position vector Xworst. Then the adaptive perch probability (\( {p}_{ap}^i \)) can be modeled as

Then the exploration phase can be modeled as

where Xm(t) is the mean position vector of the current population of N hawks.

Exploitation phase (|E| < 1): The exploitation phase can be modeled with four possible attacking strategies, described below, which are the same as in the HHO [19].

- Soft besiege (r ≥ 0.5 and |E| ≥ 0.5)

where J is the jump strength as in Eq. (18).

- Hard besiege (r ≥ 0.5 and |E| < 0.5)

- Soft besiege with progressive rapid dives (r < 0.5 and |E| ≥ 0.5)

The Yi and Zi can be calculated using Eq. (20) and Eq. (21), respectively.

- Hard besiege with progressive rapid dives (r < 0.5 and |E| < 0.5)

The Yi and Zi can be calculated using Eq. (25) and Eq. (26), respectively.

In the HHO algorithm, the transition between exploration and exploitation depends on the escape energy of the prey. The time-dependent behavior of the escape energy is presented in Fig. 1. We can observe that after 50% of the maximum iterations, the escape energy |E| is always below 1. This shows that the HHO algorithm performs only exploitation after 50% of the maximum iterations. The exploration is therefore restricted and, as a result, the search may become trapped in a local minimum. To supplement the HHO, a leader-based mutation-selection approach is proposed, which helps the algorithm to keep exploring until the end.
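The restriction can be checked with a short derivation, assuming the linear energy decay \( E=2{E}_0\left(1-t/{t}_{max}\right) \) with \( \left|{E}_0\right|<1 \) (an assumed form of the energy update, stated here only for this check). For \( t\ge {t}_{max}/2 \) the factor \( 2\left(1-t/{t}_{max}\right) \) is at most 1, so

\[ \left|E\right|=2\left|{E}_0\right|\left(1-\frac{t}{t_{max}}\right)\le \left|{E}_0\right|<1 \]

Under this assumption, the exploration branch (|E| ≥ 1) cannot be selected once t reaches tmax/2, whatever value E0 takes.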

Leader-based mutation-selection

Let us define the best hawk’s position vector \( {X}_{best}^t \), the second-best hawk’s position vector \( {X}_{best-1}^t \), and the third-best hawk’s position vector \( {X}_{best-2}^t \), based on the fitness function value of the new position vector X(new) among the N individual hawks. Then the new mutation position vector Xi(mut) for the ith hawk can be defined as

where rand is a random number in the range (0, 1).

Then the position vector for the next generation, Xi(t + 1), is obtained by the selection process described in Eq. (34). Similarly, Xprey is updated using Eq. (35).

3.1 Pseudocode of LHHO

In the beginning, assign the parameters N, D, UB, LB, tmax.

3.2 Performance evaluation of LHHO

To examine the performance of the proposed LHHO, it is compared with the HHO [19] on 23 well-known test functions (f1 − f23) chosen from the literature [39, 41, 71]. The test functions are divided into three groups, presented in Appendix 1: unimodal test functions (f1 − f7), multimodal test functions with varied dimension (f8 − f13), and multimodal test functions with fixed dimension (f14 − f23). A unimodal test function has a unique optimal solution, so the convergence rate is the more important criterion when validating an optimization algorithm. The multimodal test functions have many local minima, so avoiding local minima is the main concern. Moreover, the performance on unimodal test functions indicates the exploitation capability, whereas the performance on multimodal test functions indicates the exploration capability of the optimization algorithm.

The input parameters are chosen as N = 20, tmax = 500, and β = 1.5 for the performance evaluation of both algorithms. Each algorithm is run 100 times to obtain the best optimal value ‘Best’, the worst optimal value ‘Worst’, the average optimal value ‘Avg’, the standard deviation ‘Std’, and the average time ‘AvgTime’ in seconds. The best values are shown in boldface.
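The reported statistics can be gathered with a small harness like the Python sketch below. Everything named here is illustrative: `sphere` is a common unimodal benchmark used as a stand-in test function, `random_search` is a placeholder optimizer (not HHO or LHHO), and `summarize` is a hypothetical helper. The standard deviation uses NumPy's default population form, which is an assumption about how ‘Std’ is computed.

```python
import time
import numpy as np

def sphere(x):
    """Sphere function, a common unimodal benchmark (stand-in test function)."""
    return float(np.sum(x ** 2))

def random_search(f, dim, lb, ub, n_pop, t_max, rng):
    """Placeholder optimizer; swap in HHO or LHHO with the same signature."""
    best = np.inf
    for _ in range(t_max):
        pop = rng.uniform(lb, ub, (n_pop, dim))
        best = min(best, min(f(x) for x in pop))
    return best

def summarize(optimizer, f, runs=100, **kw):
    """Run the optimizer repeatedly and collect the five summary statistics."""
    rng = np.random.default_rng(0)
    values, times = [], []
    for _ in range(runs):
        start = time.perf_counter()
        values.append(optimizer(f, rng=rng, **kw))
        times.append(time.perf_counter() - start)
    v = np.array(values)
    return {"Best": v.min(), "Worst": v.max(), "Avg": v.mean(),
            "Std": v.std(), "AvgTime": float(np.mean(times))}

# Small demo budget; the text uses 100 runs with N = 20 and tmax = 500.
stats = summarize(random_search, sphere, runs=10,
                  dim=30, lb=-100.0, ub=100.0, n_pop=20, t_max=50)
```

Timing each run separately keeps ‘AvgTime’ comparable between algorithms that share the same population size and iteration budget.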

The statistical results of the unimodal test functions (f1 − f7) and the multimodal test functions with varied dimension (f8 − f13) for dimensions D = 30 and D = 100 are presented in Table 1 and Table 2, respectively. As is evident from Tables 1 and 2, the LHHO shows significant improvement over the HHO on all the unimodal test functions for all the statistical parameters. The convergence curves of three unimodal test functions are shown in Fig. 2. Among the multimodal test functions with varied dimension, f8, f12, and f13 show an improvement, while f9, f10, and f11 produce the same result in both the LHHO and the HHO. From the convergence curves of three multimodal test functions, shown in Fig. 3, it is observed that the LHHO converges in fewer iterations than the HHO. The convergence curves suggest that the LHHO is useful for function optimization.

The statistical results of the multimodal test functions with fixed dimension (f14 − f23) are presented in Table 3, and sample convergence curves are presented in Fig. 4. From the comparison in Table 3, it is seen that the statistical parameter ‘Best’ is the same for both the LHHO and the HHO. However, the statistical parameters ‘Avg’, ‘Worst’, and ‘Std’ of the LHHO are superior to those of the HHO, so the overall statistical performance of the LHHO is better than that of the HHO. The LHHO takes 35% more time than the HHO (for 500 iterations), which may be treated as the price of obtaining better optimal solutions. However, the LHHO converges faster in the sense that it needs fewer iterations than the HHO. Finally, the LHHO shows better results and convergence than the HHO because the adaptive perching strategy and the leader-based mutation-selection approach enhance the exploration while keeping the exploitation ability of the HHO unchanged.

4 The proposed 2-D Masi entropy-based multilevel image thresholding

In this section, we extend the idea of the 1-D Masi entropy [23, 24, 27, 60] to introduce a new 2-D Masi entropy-based multilevel image thresholding technique by utilizing the basic principle as described in Section 2.1 and the idea of 2-D histogram discussed in [44].

Here, we suggest an extension of the 1-D histogram of an image I of size M × N into a 2-D histogram. The local average g(x, y) of the gray level values of an image f(x, y) for a window of size w × w is formulated as:

Note that w < min(M, N) and \( \left\lfloor \frac{w}{2}\right\rfloor \) represents the integer part only.

The gray level values of the image f(x, y), and the local average g(x, y) of the gray level values are used to construct the 2-D histogram using the co-occurrences (nij).

Then the normalized 2-D histogram (pij) of the index (i, j) is approximated as:

\( {p}_{ij}=\frac{n_{ij}}{M\times N} \)

where M × N represents the image size. A pictorial representation of a 2-D histogram is presented in Fig. 5, which covers a square region of size L × L.
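The construction of the 2-D histogram can be sketched in Python as follows. The sketch assumes an odd window size w, edge replication at the image border, an integer-valued local average, and a layout in which rows index the gray level and columns index the local average; these are illustrative assumptions, and the function name `histogram_2d` is hypothetical.

```python
import numpy as np

def histogram_2d(image, w=3, levels=256):
    """Normalized 2-D histogram of (gray level, local average) pairs."""
    f = np.asarray(image, dtype=np.int64)
    half = w // 2
    padded = np.pad(f, half, mode="edge")       # border handling is an assumption
    # Sum the w x w neighbourhood by accumulating shifted copies of the image.
    acc = np.zeros_like(f)
    for dx in range(w):
        for dy in range(w):
            acc += padded[dx:dx + f.shape[0], dy:dy + f.shape[1]]
    g = acc // (w * w)                           # local average, integer part
    n = np.zeros((levels, levels), dtype=np.int64)
    np.add.at(n, (f.ravel(), g.ravel()), 1)      # co-occurrence counts n_ij
    return n / f.size                            # p_ij = n_ij / (M * N)

rng = np.random.default_rng(0)
p = histogram_2d(rng.integers(0, 256, size=(32, 32)))
```

In smooth regions the gray level and its local average are close, so most of the mass of `p` lies near the main diagonal, while edge and noise pixels fall away from it.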

The bi-level thresholding divides the image into two classes, known as the foreground class (Cf) and the background class (Cb), using the threshold point (s, t). Here, s is the local average threshold and t is the gray level threshold. The 2-D histogram plane for the bi-level thresholding is shown in Fig. 5a, in which the areas of main interest for thresholding are the 1st and 4th quadrants, as they represent the foreground and the background class information. The 2nd and 3rd quadrants generally contain edge and noise information only. The probability distributions of the foreground class φf and the background class φb are given as:

where i, j = 0, 1, ⋯, L − 1. Note that the contribution of 2nd and 3rd quadrants are negligible. Hence, φb(s, t) ≈ 1 − φf(s, t) *.

The 2-D Masi entropy of the foreground class (Hf(s, t)) and the background class (Hb(s, t)) are formulated as

and

where r is the entropic parameter set to 0.5.

The optimal threshold {s∗, t∗} for 2-D Masi entropy based bi-level thresholding can be formulated as a maximization criterion given as:

subject to the conditions 0 < s < L − 1, and 0 < t < L−1.
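The bi-level criterion can be sketched as follows. Several points are assumptions, because the displayed equations are not reproduced in this text: the class entropy takes the usual Masi form log[1 − (1 − r) Σ q log q] / (1 − r) applied to the class-normalized probabilities q = pij / φ, the criterion is taken to be the sum Hf + Hb, the two classes are the two diagonal blocks with half-open index ranges, and all function names are hypothetical.

```python
import numpy as np

def masi_entropy(block, r=0.5):
    """Masi entropy of one class; `block` holds the p_ij values of that class."""
    phi = block.sum()
    if phi <= 0:
        return 0.0  # convention chosen here for an empty class
    q = block[block > 0] / phi  # class-conditional probabilities
    return float(np.log(1.0 - (1.0 - r) * np.sum(q * np.log(q))) / (1.0 - r))

def bilevel_objective(p, s, t, r=0.5):
    # Assumption: the criterion is H_f + H_b over the two diagonal blocks,
    # with half-open index ranges [0, s) x [0, t) and [s, L) x [t, L).
    return masi_entropy(p[:s, :t], r) + masi_entropy(p[s:, t:], r)

def exhaustive_bilevel(p, r=0.5):
    """Try every (s, t) with 0 < s < L - 1 and 0 < t < L - 1."""
    L = p.shape[0]
    best_val, best_st = -np.inf, None
    for s in range(1, L - 1):
        for t in range(1, L - 1):
            val = bilevel_objective(p, s, t, r)
            if val > best_val:
                best_val, best_st = val, (s, t)
    return best_st, best_val

# Toy 4-level histogram with all mass on the diagonal.
p = np.diag([0.25, 0.25, 0.25, 0.25])
st, val = exhaustive_bilevel(p)
```

For the bi-level case the double loop costs only on the order of L² objective evaluations, so exhaustive search is still feasible.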

Multilevel image thresholding using the 2-D histogram for K threshold coordinates [s1t1, s2t2, ⋯, sKtK] [47, 57] is discussed here. The K threshold points separate the image into a set of K + 1 classes, \( C=\left\{{C}_0,{C}_1,\cdots, {C}_{K-1},{C}_K\right\} \). The 2-D histogram plane of the multilevel thresholding for K + 1 classes is shown in Fig. 5b, in which the diagonal quadrants are of main interest for thresholding. The other quadrants are ignored, as they consist of edge and noise components. The probability distribution of the K + 1 classes is expressed as:

where i, j = 0, 1, ⋯, L − 1, and \( {\sum}_{k=0}^K{\varphi}_k\approx 1 \).

Then, the 2-D Masi entropy for the kth class (Hk(s, t)) is formulated as:

where 0 ≤ k ≤ K, s0 = t0 = 0, \( {s}_{K+1}={t}_{K+1}=L-1 \), and the entropic parameter r is set to 0.5.

The optimal threshold \( \left\{{s}_1^{\ast }{t}_1^{\ast },{s}_2^{\ast }{t}_2^{\ast },\cdots, {s}_K^{\ast }{t}_K^{\ast}\right\} \) for 2-D Masi entropy-based multilevel image thresholding is formulated as a maximization criterion given as:

subject to the conditions 0 < s1 < s2 < ⋯ < sK < L − 1, and 0 < t1 < t2 < ⋯ < tK < L − 1.
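A minimal sketch of the multilevel objective follows, under stated assumptions: the criterion is taken to be the sum of the K + 1 class entropies over the diagonal blocks, the class entropy takes the usual Masi form on class-normalized probabilities, and blocks use half-open index ranges, so the last block ends at index L to include level L − 1. The function names are hypothetical.

```python
import numpy as np

def masi_entropy(block, r=0.5):
    """Masi entropy of one class; `block` holds the p_ij values of that class."""
    phi = block.sum()
    if phi <= 0:
        return 0.0  # convention chosen here for an empty class
    q = block[block > 0] / phi
    return float(np.log(1.0 - (1.0 - r) * np.sum(q * np.log(q))) / (1.0 - r))

def multilevel_objective(p, s, t, r=0.5):
    """Sum of class entropies over the K + 1 diagonal blocks of p."""
    L = p.shape[0]
    s, t = list(s), list(t)
    if len(s) != len(t):
        raise ValueError("s and t must hold the same number of thresholds")
    s_edges = [0] + s + [L]
    t_edges = [0] + t + [L]
    # Enforce 0 < s_1 < ... < s_K < L - 1 (and likewise for t).
    for e in (s_edges, t_edges):
        if any(a >= b for a, b in zip(e[:-1], e[1:])) or e[-2] >= L - 1:
            raise ValueError("thresholds must be strictly increasing inside (0, L - 1)")
    return sum(
        masi_entropy(p[s_edges[k]:s_edges[k + 1], t_edges[k]:t_edges[k + 1]], r)
        for k in range(len(s) + 1)
    )

# Toy 4-level histogram with all mass on the diagonal, K = 2 thresholds.
p = np.diag([0.25, 0.25, 0.25, 0.25])
value = multilevel_objective(p, s=[1, 2], t=[1, 2])
```

An optimizer would treat the 2K integers in `s` and `t` as the decision variables and maximize the returned value; infeasible orderings are rejected here by an exception, which is only one of several possible ways to handle the constraints.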

The maximization criterion presented in Eq. (46) requires an exhaustive search with \( O\left({L}^{2K}\right) \) computations, which grows exponentially as K increases. This cost can be avoided by using an optimizer that is fast and accurate enough to find the optimal solutions. Therefore, the newly proposed LHHO is used as a maximizer to obtain the optimal threshold values, with Eq. (46) as the objective function.
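The growth of the search space can be made concrete with a short count. The sketch below assumes the ordering constraints stated for Eq. (46): each of the s and t sequences picks K strictly increasing values from {1, ⋯, L − 2}, which gives a binomial count per axis. The function name `exhaustive_candidates` is hypothetical, and the power L^(2K) is printed alongside as the cruder upper bound.

```python
from math import comb

def exhaustive_candidates(L, K):
    """Number of feasible threshold sets under the ordering constraints."""
    # Each of s and t picks K strictly increasing values from {1, ..., L - 2}.
    return comb(L - 2, K) ** 2

for K in (1, 2, 3, 5):
    # Feasible sets versus the L^(2K) upper bound, for L = 256 gray levels.
    print(K, exhaustive_candidates(256, K), 256 ** (2 * K))
```

Even the exact count, which is smaller than L^(2K), grows far too quickly with K for exhaustive evaluation, which is the motivation for a population-based optimizer.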

5 Results and discussions

The proposed LHHO algorithm is used to obtain the optimal threshold values for multilevel image thresholding, and the suggested evolutionary multilevel image thresholding approach using 2-D Masi entropy is implemented. Some well-known evolutionary algorithms, namely HHO [19], CS [1], PSO [72], FA [22], WDO [8], STOA [10], and DE [57], are also used for comparison to support the claim. The 1-D Masi entropy-based multilevel image thresholding technique is also implemented for comparison. The performance is validated on the Berkeley segmentation dataset (BSDS 500) [34]. For the experiment, the parameters of the above evolutionary optimization methods are chosen as reported in the original works and are displayed in Table 4.

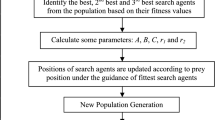

The BSDS 500 consists of 500 images and is an extended version of the BSDS 300 public benchmark dataset used for segmentation and boundary detection. It comprises 200 training images and 300 testing images, all of which are color images of dimension 481 × 321 or 321 × 481. We resize the BSDS 500 images to 256 × 256. These are used to evaluate the performance of the various optimization algorithms with the 2-D Masi and 1-D Masi entropy-based multilevel image thresholding techniques. The well-known performance metrics peak signal-to-noise ratio (PSNR) [1], feature similarity (FSIM) [77], and structural similarity (SSIM) [79] are used for the performance evaluation. Each algorithm is run 10 times independently for stability analysis. The block diagram of the evolutionary algorithm for multilevel image thresholding is shown in Fig. 6. The flowchart of the 2-D Masi entropy-based multilevel image thresholding using the LHHO is displayed in Fig. 7.
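Of the three metrics, PSNR is simple enough to state inline. The sketch below assumes 8-bit images (peak value 255) and the common definition 10 log10(peak² / MSE); the exact variant used in the experiments is not stated in this section, and the function name `psnr` is chosen here for illustration. FSIM and SSIM are more involved and are left to the cited references.

```python
import numpy as np

def psnr(original, segmented, peak=255.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    original = np.asarray(original, dtype=np.float64)
    segmented = np.asarray(segmented, dtype=np.float64)
    mse = np.mean((original - segmented) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(peak ** 2 / mse))

# Toy example: a flat image against a copy shifted by one gray level.
a = np.zeros((4, 4))
b = np.ones((4, 4))
value_db = psnr(a, b)
```

A higher PSNR means the thresholded image is closer to the original, so larger values are better in the comparisons that follow.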

A deeper insight into the statistical analysis is provided here. The 2-D Masi and 1-D Masi entropy-based multilevel image thresholding methods are optimization (maximization) problems, as described by Eqs. (13) and (46). Usually, the PSNR, FSIM, and SSIM metrics are used for result analysis. For a more detailed analysis, we supplement these with the average fitness value ‘favg’ and the standard deviation ‘Std’, both computed over 10 independent runs. Here, we use threshold levels K = 2, 3, and 5 for the analysis. As the multilevel image thresholding problem is a maximization problem, the highest average fitness value (favg) is calculated using the best fitness values among 10 independent runs. The other parameters, namely the average PSNR (PSNRavg), average FSIM (FSIMavg), and average SSIM (SSIMavg), are calculated from the best threshold values, i.e., those giving the best fitness value among 10 independent runs. Finally, these results are computed over 500 images, considering the best fitness values among 10 independent runs. The results compare favorably with those of the earlier algorithms, which encourages future applications.
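One possible reading of this per-image bookkeeping is sketched below. It is an interpretation, not the authors' procedure: favg is taken as the mean fitness over the runs, ‘Std’ as the population standard deviation (ddof = 0), and the quality metric is read off at the run with the highest fitness. The function name, dictionary keys, and numbers are made up for illustration.

```python
import numpy as np

def summarize_runs(fitness, psnr_values):
    """Summarize independent runs of one optimizer on one image."""
    fitness = np.asarray(fitness, dtype=float)
    best = int(np.argmax(fitness))  # maximization: largest fitness is best
    return {
        "f_avg": float(fitness.mean()),
        "std": float(fitness.std()),  # population Std (ddof=0), an assumption
        "f_best": float(fitness[best]),
        "psnr_best": float(psnr_values[best]),
    }

# Made-up numbers for three runs, only to show the call.
summary = summarize_runs([1.0, 3.0, 2.0], [20.0, 22.0, 21.0])
```

Averaging such per-image summaries over all images would then give dataset-level figures such as PSNRavg.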

The performance of the LHHO and the other optimization algorithms on all 500 images from BSDS 500 is presented in Table 5. From a comparison of the statistical parameters, the evolutionary 2-D Masi entropy-based multilevel image thresholding achieves better results than the 1-D Masi entropy-based multilevel image thresholding. For instance, an increase of about 2% to 4% is observed in the PSNRavg values for K = 5. A similar trend is observed for the other threshold levels. The reason behind such an improvement could be the inclusion of the contextual information. From Table 5, it is also seen that the LHHO-based multilevel image thresholding approach performs well compared with state-of-the-art optimization methods, and its ‘Std’ values are consistent. It should be mentioned that the computation over 500 images highlights its capability to solve image segmentation problems.

Exemplary results are provided here to illustrate the usefulness of the method for such applications. For a more in-depth analysis of the proposed method, three subjects from BSDS 500 with identification numbers 35049, 92014, and 159045 are used for the experiment. These examples are sufficient for analysis and interpretation. The sample subjects with the corresponding histograms are presented in Fig. 8. For the statistical parameter analysis, together with ‘favg’ and ‘Std’, we use the best fitness value fbest among 10 independent runs. The ‘Opt. Th.’ parameter is the optimal threshold value obtained using an optimizer. ‘PSNRbest’, ‘SSIMbest’, and ‘FSIMbest’ are the PSNR, SSIM, and FSIM values obtained by using the optimal threshold values. Good results depend primarily on the optimal threshold values, so the role of the optimizer is crucial. Other factors influencing the results are the contextual information and the inherent characteristics of the images. These factors account for the results presented here.

The statistical results for subject 35049 are presented in Table 6, while the results for subjects 92014 and 159045 are displayed in Tables 7 and 8, respectively. From Tables 6, 7, and 8, it is seen that the evolutionary 2-D Masi entropy-based method using the LHHO outperforms the other techniques. The corresponding thresholded images for subjects 35049, 92014, and 159045 are shown in Figs. 9, 10, and 11, respectively. The best thresholded image is the one in the top left corner, which corresponds to the evolutionary 2-D Masi entropy-based multilevel image thresholding using the LHHO. The different regions are clearly visible in it, because more visual information is retained. The convergence curves (fitness vs. iteration) for the threshold level K = 5 are shown in Figs. 12, 13, and 14 for subjects 35049, 92014, and 159045, respectively. The proposed built-in mechanisms drive the LHHO to a rapid convergence. Furthermore, the suggested algorithm takes fewer iterations than the other state-of-the-art methods. From this analysis, it is seen that the evolutionary 2-D Masi entropy-based multilevel image thresholding using the LHHO has potential for future applications in image processing.

The suggested LHHO shows better results than state-of-the-art optimizers because it incorporates the adaptive perching and mutation-selection mechanisms to enhance the exploration, while the exploitation remains unchanged. Owing to the quick dispersal of the positions of the Harris hawks in the search space, it converges towards the optimal solution rapidly, even with a smaller iteration count. Moreover, the results obtained using the 2-D Masi entropy-based method are better than those of the 1-D Masi technique, because the contextual information is incorporated.

6 Conclusions

In this work, a leader Harris hawks optimization (LHHO) algorithm is proposed to enhance the exploration capability of the HHO, without reducing its exploitation capacity, by introducing an adaptive perch probability and a leader-based mutation-selection approach. The LHHO showed better performance than the HHO on well-known benchmark functions for function optimization, both quantitatively (i.e., Best, Avg, Worst, and Std) and qualitatively (i.e., convergence curves). The only drawback is that the LHHO is computationally more expensive than the HHO, owing to the additional learning that takes place via the leader-based mutation-selection in search of a better optimal solution. However, the convergence is faster, because it takes fewer iterations than the HHO. The LHHO can be used to solve optimization problems in different fields of engineering. In this work, the LHHO is tested on a single-objective problem; it may be further extended to multi-objective problems. Possible future extensions of the LHHO include opposition-based learning, chaos-based phases, and general type-2 fuzzy-based learning.

Further, the LHHO is used in multilevel image thresholding to demonstrate its ability in image segmentation. For the image segmentation problem, a 2-D Masi entropy objective function (for multilevel image thresholding) is proposed in this work. The 2-D Masi entropy builds on the underlying principle of the 1-D Masi entropy and on the advantage of the 2-D histogram, which brings the contextual information into the thresholding process. An evolutionary 2-D Masi entropy-based multilevel image thresholding using the LHHO is then suggested. Our proposal yields better results than the 1-D Masi entropy-based method, because it extracts the contextual information efficiently. The various methods are compared using the well-known performance metrics PSNR, FSIM, and SSIM. State-of-the-art algorithms are also used to assess the effectiveness of the LHHO-based multilevel image thresholding; the comparison reveals that the LHHO obtains the optimal threshold values quickly in terms of the iteration count. In summary, the proposal provides efficient segmentation results and faster convergence, and it is attractive for ready implementation. The future scope of the work includes multispectral image analysis, image compression, object detection, biomedical image segmentation, and, more generally, any application that requires computational intelligence-based image segmentation.

References

Agrawal S, Panda R, Bhuyan S, Panigrahi BK (2013) Tsallis entropy based optimal multilevel thresholding using cuckoo search algorithm. Swarm Evol Comput 11:16–30. https://doi.org/10.1016/j.swevo.2013.02.001

Agrawal S, Panda R, Abraham A (2018) A novel diagonal class entropy-based multilevel image thresholding using coral reef optimization. IEEE Trans Syst Man Cybern Syst:1–9. https://doi.org/10.1109/TSMC.2018.2859429

Ahmadi M, Kazemi K, Aarabi A, Niknam T, Helfroush MS (2019) Image segmentation using multilevel thresholding based on modified bird mating optimization. Multimed Tools Appl 78:23003–23027. https://doi.org/10.1007/s11042-019-7515-6

Ayala HVH, dos Santos FM, Mariani VC, dos Coelho LS (2015) Image thresholding segmentation based on a novel beta differential evolution approach. Expert Syst Appl 42:2136–2142. https://doi.org/10.1016/j.eswa.2014.09.043

Baby Resma KP, Nair MS (2018) Multilevel thresholding for image segmentation using krill herd optimization algorithm. J King Saud Univ - Comput Inf Sci. https://doi.org/10.1016/j.jksuci.2018.04.007

Barthelemy P, Bertolotti J, Wiersma DS (2008) A levy flight for light. Nature 453:495–498

Bhandari A (2015) Tsallis entropy based multilevel Thresholding for colored satellite image segmentation using evolutionary algorithms. Expert Syst Appl 42:8707–8730. https://doi.org/10.1016/j.eswa.2015.07.025

Bhandari AK, Singh VK, Kumar A, Singh GK (2014) Cuckoo search algorithm and wind driven optimization based study of satellite image segmentation for multilevel thresholding using Kapur’s entropy. Expert Syst Appl 41:3538–3560. https://doi.org/10.1016/j.eswa.2013.10.059

Chen Y, He F, Li H, Zhang D, Wu Y (2020) A full migration BBO algorithm with enhanced population quality bounds for multimodal biomedical image registration. Appl Soft Comput 93:106335. https://doi.org/10.1016/j.asoc.2020.106335

Dhiman G, Kaur A (2019) STOA: a bio-inspired based optimization algorithm for industrial engineering problems. Eng Appl Artif Intell 82:148–174. https://doi.org/10.1016/j.engappai.2019.03.021

Education H, Shahabi F, Pourahangarian F, Beheshti H (2019) A multilevel image thresholding approach based on crow search algorithm and Otsu method. J Decis Oper Res 4:33–41. https://doi.org/10.22105/dmor.2019.88580

El Aziz MA, Ewees AA, Hassanien AE (2017) Whale optimization algorithm and moth-flame optimization for multilevel thresholding image segmentation. Expert Syst Appl 83:242–256. https://doi.org/10.1016/j.eswa.2017.04.023

Freixenet J, Muñoz X, Raba D et al (2002) Yet another survey on image segmentation: region and boundary information integration. Springer, Berlin Heidelberg, Berlin, Heidelberg, pp 408–422

Fu KS, Mui JK (1981) A survey on image segmentation. Pattern Recogn 13:3–16. https://doi.org/10.1016/0031-3203(81)90028-5

Gandomi AH, Yang X-S, Alavi AH (2011) Mixed variable structural optimization using firefly algorithm. Comput Struct 89:2325–2336. https://doi.org/10.1016/j.compstruc.2011.08.002

Gandomi A, Yang X-S, Alavi A (2013) Cuckoo search algorithm: a metaheuristic approach to solve structural optimization problems. Eng Comput 29:245. https://doi.org/10.1007/s00366-012-0308-4

Gao H, Xu W, Sun J, Tang Y (2010) Multilevel Thresholding for image segmentation through an improved quantum-behaved particle swarm algorithm. IEEE Trans Instrum Meas 59:934–946. https://doi.org/10.1109/TIM.2009.2030931

Hammouche K, Diaf M, Siarry P (2008) A multilevel automatic thresholding method based on a genetic algorithm for a fast image segmentation. Comput Vis Image Underst 109:163–175. https://doi.org/10.1016/j.cviu.2007.09.001

Heidari AA, Mirjalili S, Faris H, Aljarah I, Mafarja M, Chen H (2019) Harris hawks optimization: algorithm and applications. Futur Gener Comput Syst 97:849–872. https://doi.org/10.1016/j.future.2019.02.028

Horng M-H (2010) Multilevel minimum cross entropy threshold selection based on the honey bee mating optimization. Expert Syst Appl 37:4580–4592. https://doi.org/10.1016/j.eswa.2009.12.050

Horng M-H (2011) Multilevel thresholding selection based on the artificial bee colony algorithm for image segmentation. Expert Syst Appl 38:13785–13791. https://doi.org/10.1016/j.eswa.2011.04.180

Horng M-H, Liou R-J (2011) Multilevel minimum cross entropy threshold selection based on the firefly algorithm. Expert Syst Appl 38:14805–14811. https://doi.org/10.1016/j.eswa.2011.05.069

Jia H, Peng X, Song W et al (2019) Masi entropy for satellite color image segmentation using tournament-based Lévy multiverse optimization algorithm. Remote Sens 11:942. https://doi.org/10.3390/rs11080942

Kandhway P, Bhandari AK (2019) A water cycle algorithm-based multilevel Thresholding system for color image segmentation using Masi entropy. Circuits, Syst Signal Process 38:3058–3106. https://doi.org/10.1007/s00034-018-0993-3

Kapur JN, Sahoo PK, Wong AKC (1985) A new method for gray-level picture thresholding using the entropy of the histogram. Comput Vision, Graph Image Process 29:273–285. https://doi.org/10.1016/0734-189X(85)90125-2

Khairuzzaman AKM, Chaudhury S (2017) Multilevel thresholding using grey wolf optimizer for image segmentation. Expert Syst Appl 86:64–76. https://doi.org/10.1016/j.eswa.2017.04.029

Khairuzzaman AK, Chaudhury S (2019) Masi entropy based multilevel thresholding for image segmentation. Multimed Tools Appl 78:33573–33591. https://doi.org/10.1007/s11042-019-08117-8

Li H, He F, Liang Y, Quan Q (2020) A dividing-based many-objective evolutionary algorithm for large-scale feature selection. Soft Comput 24:6851–6870. https://doi.org/10.1007/s00500-019-04324-5

Li H, He F, Chen Y, Luo J (2020) Multi-objective self-organizing optimization for constrained sparse array synthesis. Swarm Evol Comput 58:100743. https://doi.org/10.1016/j.swevo.2020.100743

Li H, He F, Chen Y (2020) Learning dynamic simultaneous clustering and classification via automatic differential evolution and firework algorithm. Appl Soft Comput 96:106593. https://doi.org/10.1016/j.asoc.2020.106593

Liang Y, He F, Zeng X (2020) 3D mesh simplification with feature preservation based on whale optimization algorithm and differential evolution. Integr Comput Aided Eng 27:417–435. https://doi.org/10.3233/ICA-200641

Liao P-S, Chen T-S, Chung P-C (2001) A fast algorithm for multilevel Thresholding. J Inf Sci Eng 17:713–727

Liu J, Li W, Tian Y (1991) Automatic thresholding of gray-level pictures using two-dimension Otsu method. In: China., 1991 international conference on circuits and systems, vol 1, pp 325–327

Martin D, Fowlkes C, Tal D, Malik J (2001) A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In: Proceedings eighth IEEE international conference on computer vision, ICCV 2001, vol 2, pp 416–423

Masi M (2005) A step beyond Tsallis and Renyi entropies. Phys Lett A 338:217–224. https://doi.org/10.1016/j.physleta.2005.01.094

Mirjalili S (2015) Moth-flame optimization algorithm: a novel nature-inspired heuristic paradigm. Knowledge-Based Syst 89:228–249. https://doi.org/10.1016/j.knosys.2015.07.006

Mirjalili S, Mirjalili SM, Lewis A (2014) Grey wolf optimizer. Adv Eng Softw 69:46–61. https://doi.org/10.1016/j.advengsoft.2013.12.007

Mlakar U, Potočnik B, Brest J (2016) A hybrid differential evolution for optimal multilevel image thresholding. Expert Syst Appl 65:221–232. https://doi.org/10.1016/j.eswa.2016.08.046

Naik MK, Panda R (2016) A novel adaptive cuckoo search algorithm for intrinsic discriminant analysis based face recognition. Appl Soft Comput 38:661–675. https://doi.org/10.1016/j.asoc.2015.10.039

Naik MK, Samantaray L, Panda R (2016) A hybrid CS–GSA algorithm for optimization. In: Hybrid soft computing approaches: research and applications, pp 3–35

Naik MK, Wunnava A, Jena B, Panda R (2020) Nature-inspired optimization algorithm and benchmark functions: a literature survey. In: Bisht DCS, Ram M (eds) Computational Intelligence, 3rd edn. De Gruyter, Berlin, Boston, pp 1–26

Nie F, Zhang P, Li J, Ding D (2017) A novel generalized entropy and its application in image thresholding. Signal Process 134:23–34. https://doi.org/10.1016/j.sigpro.2016.11.004

Otsu N (1979) A threshold selection method from gray-level histograms. IEEE Trans Syst Man Cybern 9:62–66. https://doi.org/10.1109/TSMC.1979.4310076

Pal NR, Pal SK (1989) Entropic thresholding. Signal Process 16:97–108. https://doi.org/10.1016/0165-1684(89)90090-X

Pal NR, Pal SK (1993) A review on image segmentation techniques. Pattern Recogn 26:1277–1294. https://doi.org/10.1016/0031-3203(93)90135-J

Panda R, Agrawal S, Bhuyan S (2013) Edge magnitude based multilevel thresholding using cuckoo search technique. Expert Syst Appl 40:7617–7628. https://doi.org/10.1016/j.eswa.2013.07.060

Panda R, Agrawal S, Samantaray L, Abraham A (2017) An evolutionary gray gradient algorithm for multilevel thresholding of brain MR images using soft computing techniques. Appl Soft Comput 50:94–108. https://doi.org/10.1016/j.asoc.2016.11.011

Pavesic N, Ribaric S (2000) Gray level thresholding using the Havrda and Charvat entropy. In: 2000 10th Mediterranean Electrotechnical conference. Information technology and Electrotechnology for the Mediterranean countries. Proceed MeleCon (cat. No.00CH37099) 2:631–634

Peng-Yeng Y, Ling-Hwei C (1994) A new method for multilevel thresholding using symmetry and duality of the histogram. In: Proceedings of ICSIPNN ’94. International Conference on Speech, Image Processing and Neural Networks, vol 1, pp 45–48

Portes de Albuquerque M, Esquef IA, Gesualdi Mello AR, Portes de Albuquerque M (2004) Image thresholding using Tsallis entropy. Pattern Recogn Lett 25:1059–1065. https://doi.org/10.1016/j.patrec.2004.03.003

Rao RV, Patel V (2013) An improved teaching-learning-based optimization algorithm for solving unconstrained optimization problems. Sci Iran 20:710–720. https://doi.org/10.1016/j.scient.2012.12.005

Renyi A (1961) On measures of entropy and information. In: Proceedings of the fourth Berkeley symposium on mathematical statistics and probability, volume 1: contributions to the theory of statistics. University of California Press, Berkeley, Calif, pp 547–561

Sahoo PK, Arora G (2004) A thresholding method based on two-dimensional Renyi’s entropy. Pattern Recogn 37:1149–1161. https://doi.org/10.1016/j.patcog.2003.10.008

Sahoo PK, Arora G (2006) Image thresholding using two-dimensional Tsallis–Havrda–Charvát entropy. Pattern Recogn Lett 27:520–528. https://doi.org/10.1016/j.patrec.2005.09.017

Sahoo PK, Soltani S, Wong AKC (1988) A survey of thresholding techniques. Comput Vision, Graph Image Process 41:233–260. https://doi.org/10.1016/0734-189X(88)90022-9

Sankur B, Sezgin M (2001) Image thresholding techniques: a survey over categories. Pattern Recogn 34:1573–1583

Sarkar S, Das S (2013) Multilevel image Thresholding based on 2D histogram and maximum Tsallis entropy— a differential evolution approach. IEEE Trans Image Process 22:4788–4797. https://doi.org/10.1109/TIP.2013.2277832

Sathya PD, Kayalvizhi R (2011) Modified bacterial foraging algorithm based multilevel thresholding for image segmentation. Eng Appl Artif Intell 24:595–615. https://doi.org/10.1016/j.engappai.2010.12.001

Sezgin M, Sankur B (2004) Survey over image thresholding techniques and quantitative performance evaluation. J Electron Imaging 13:146–168. https://doi.org/10.1117/1.1631315

Shubham S, Bhandari AK (2019) A generalized Masi entropy based efficient multilevel thresholding method for color image segmentation. Multimed Tools Appl 78:17197–17238. https://doi.org/10.1007/s11042-018-7034-x

Simon D (2009) Biogeography-based optimization. Evol Comput IEEE Trans 12:702–713. https://doi.org/10.1109/TEVC.2008.919004

Song JH, Cong W, Li JJ (2017) A fuzzy C-means clustering algorithm for image segmentation using nonlinear weighted local information. J Inf Hiding Multimed Signal Process 8:578–588

Sri Madhava Raja N, Rajinikanth V, Latha K (2014) Otsu based optimal multilevel image Thresholding using firefly algorithm. J Model Simul Eng 2014:17–17. https://doi.org/10.1155/2014/794574

Tsallis C (1988) Possible generalization of Boltzmann-Gibbs statistics. J Stat Phys 52:479–487. https://doi.org/10.1007/BF01016429

Tsallis C (2001) Nonextensive statistical mechanics and its applications. Lect Notes Phys 560:3–98

Upadhyay P, Chhabra JK (2019) Kapur’s entropy based optimal multilevel image segmentation using crow search algorithm. Appl Soft Comput:105522. https://doi.org/10.1016/j.asoc.2019.105522

Xing Z, Jia H (2020) Modified thermal exchange optimization based multilevel thresholding for color image segmentation. Multimed Tools Appl 79:1137–1168. https://doi.org/10.1007/s11042-019-08229-1

Yang X-S (2010) Nature-inspired Metaheuristic algorithms

Yang X-S (2012) Flower pollination algorithm for global optimization. In: Durand-Lose J, Jonoska N (eds) Unconventional computation and natural computation: 11th international conference, UCNC 2012, Orléan, France, September 3–7, 2012. Proceedings. Springer Berlin Heidelberg, Berlin, Heidelberg, pp 240–249

Yang X-S, Gandomi A (2012) Bat algorithm: a novel approach for global engineering optimization. Eng Comput 29:464–483. https://doi.org/10.1108/02644401211235834

Yao X, Yong L, Guangming L (1999) Evolutionary programming made faster. Evol Comput IEEE Trans 3:82–102. https://doi.org/10.1109/4235.771163

Yin P-Y (2007) Multilevel minimum cross entropy threshold selection based on particle swarm optimization. Appl Math Comput 184:503–513. https://doi.org/10.1016/j.amc.2006.06.057

Yin P-Y, Chen L-H (1997) A fast iterative scheme for multilevel thresholding methods. Signal Process 60:305–313. https://doi.org/10.1016/S0165-1684(97)00080-7

Zaitoun NM, Aqel MJ (2015) Survey on image segmentation techniques. Procedia Comput Sci 65:797–806. https://doi.org/10.1016/j.procs.2015.09.027

Zhang Y, Wu L (2011) Optimal multi-level thresholding based on maximum Tsallis entropy via an artificial bee colony approach. Entropy 13:841–859

Zhang H, Fritts JE, Goldman SA (2008) Image segmentation evaluation: a survey of unsupervised methods. Comput Vis Image Underst 110:260–280. https://doi.org/10.1016/j.cviu.2007.08.003

Zhang L, Zhang L, Mou X et al (2011) FSIM: a feature similarity index for image quality assessment. IEEE Trans Image Process 20:2378–2386. https://doi.org/10.1109/TIP.2011.2109730

Zhiwei Y, Zhaobao Z, Xin Y, Xiaogang N (2005) Automatic threshold selection based on ant colony optimization algorithm. In: 2005 international conference on neural networks and brain, pp 728–732

Zhou W, Bovik AC, Sheikh HR, Simoncelli EP (2004) Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process 13:600–612. https://doi.org/10.1109/TIP.2003.819861

Appendix 1. Test functions

Cite this article

Naik, M.K., Panda, R., Wunnava, A. et al. A leader Harris hawks optimization for 2-D Masi entropy-based multilevel image thresholding. Multimed Tools Appl 80, 35543–35583 (2021). https://doi.org/10.1007/s11042-020-10467-7

DOI: https://doi.org/10.1007/s11042-020-10467-7