Abstract

Gait recognition is one of the most important techniques in application areas such as video-based surveillance, human tracking and medical systems. In this study, a novel Gabor wavelet based gait recognition algorithm is proposed, which consists of three steps. First, Gabor wavelet features at different orientations and scales are extracted from the gait energy image (GEI). Second, a two-directional two-dimensional principal component analysis ((2D)2PCA) method is employed to reduce the dimension of the feature space. The (2D)2PCA method minimizes the within-class distance and maximizes the between-class distance. Last, a multi-class support vector machine (SVM) is adopted to recognize different gaits. Experimental results on the CASIA gait database show that the proposed algorithm is generally robust and achieves higher recognition accuracy than existing methods.

1 Introduction

In recent years, gait recognition techniques have received wide attention in fields such as access control systems, medical diagnostics, security monitoring and criminal investigation [2, 4, 17, 22, 39]. Compared with other biometric recognition methods (such as fingerprint, palm print and face recognition), the most important advantage of gait recognition is that it is a contactless, non-invasive and effective human identification method [6, 8, 9, 21]. Moreover, the growing demand for human surveillance and identification systems is driving the development of accurate, efficient and robust gait recognition techniques [11, 14].

Conventional gait recognition methods usually consist of four main steps (pre-processing, gait-cycle detection, feature extraction and classification) and can be divided into two groups: model-free approaches [10, 26, 37] and model-based approaches [30, 31]. Model-based methods model human motion using physiological characteristics, describing gait dynamics and human kinematics with explicit features such as the stick-figure model [19, 20]. Model-free methods process gait sequences without an explicit body model; they usually analyze the motion characteristics of silhouettes and spatio-temporal shapes. A typical model-free gait recognition method consists of a gait-cycle detection algorithm, a set of training data, a feature extractor and a classifier.

In this study, a novel model-free gait recognition method is proposed. Gait features are extracted from GEIs using Gabor wavelets, the (2D)2PCA method is used to reduce the dimension of the feature matrix, and a multi-class SVM is employed to identify the gait sequences. Experimental results show that the proposed algorithm greatly reduces the feature matrix dimension while improving recognition accuracy. The main contributions of this paper are:

1) New gait representation. The Gabor wavelet based gait feature set is a new time-varying gait representation. Compared with existing methods that use GEIs directly as features, the Gabor wavelet based features contain more detailed scale and orientation information, which results in higher classification rates.

2) Effective dimension reduction algorithm. An effective dimension reduction algorithm is proposed to reduce the gait feature matrix dimension. In the experiments, training (2D)2PCA on Dataset B of the CASIA gait database takes about 40 min, whereas training traditional PCA takes 2–3 h.

3) High gait classification rates. Experimental results show that the proposed gait recognition algorithm is effective and produces much higher gait classification rates than existing methods.

2 Related works

Gait recognition has been an active research topic for the past decade. Huang et al. [12] presented a gait recognition method combining Gabor wavelets and the GEI, in which the Gabor wavelets are computed directly from the dataset. In contrast, the method proposed here extracts Gabor wavelet features from the GEI and employs (2D)2PCA dimension reduction to reduce the training time. Yoo et al. [36] classified gaits with a back-propagation neural network (BPNN), using a sequence of 2D stick-figure models extracted by topological analysis. Han and Bhanu [10] defined the GEI as the averaged silhouette image of a gait cycle and applied it to gait recognition to save storage space and computing time and to reduce the noise sensitivity of individual silhouette images. Ekinci and Aykut [7] proposed a gait recognition approach based on kernel PCA (KPCA). Liu and Tan [16] studied LDA subspaces to extract discriminative information from gait features under various viewing angles. Zeng et al. [38] proposed a deterministic learning (DL) theory with radial basis function (RBF) neural networks for gait recognition. Kejun et al. [15] applied sparsity preserving projection (SPP), originally used for face recognition [23], to gait recognition, and also implemented a kernel extension named kernel SPP (KSPP). Luo et al. [18] introduced a robust gait recognition system based on canonical correlation analysis (CCA). Tang et al. [24] sought the common view surface of gait images captured under significantly different viewing angles. Aggarwal et al. [1] presented a gait recognition framework that uses Zernike moment invariants to handle clothing and bag-carrying covariates. Chen et al. [3] designed a multimodal fusion framework for face and fingerprint images using a block-based feature-image matrix, which achieves better classification rates with low-dimensional multimodal biometric features.
Islam et al. [13] proposed a wavelet-based feature extraction method for gait recognition, using template matching for classification. Wu et al. [29] studied gait-based human identification using deep convolutional neural networks (CNNs); this is regarded as the first gait recognition work based on deep CNNs.

3 Methodology

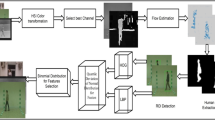

A typical gait recognition method involves three steps: detection of moving objects, feature extraction and gait recognition. Since moving object detection is a relatively mature technology [32, 33], this study focuses on the last two steps. For feature extraction, a novel Gabor wavelet based gait feature extraction algorithm is proposed; for gait recognition, a multi-class SVM based algorithm is presented. An overview of the proposed gait recognition framework is shown in Fig. 1.

3.1 Gabor wavelets based gait feature extraction

The gait energy image (GEI) is a cumulative energy image representing a complete gait cycle. The intensity of each pixel reflects how frequently (i.e., with what energy) the body occupies that position during the gait cycle. Given a sequence of binary gait silhouette images, the GEI is defined as:

\[ \mathrm{GEI}(x, y) = \frac{1}{N} \sum_{t=1}^{N} B_t(x, y) \]

where N is the number of frames in a gait cycle and \( B_t(x, y) \) is the value of pixel (x, y) in the t-th silhouette frame. Fig. 2 shows GEIs computed over complete gait cycles. Fig. 3 illustrates three different conditions, i.e., normal walking, carrying a bag and wearing a coat, at the 90° viewing angle.
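The GEI definition is a per-pixel mean over the frames of one cycle. The following is a minimal NumPy sketch, assuming the silhouettes have already been segmented, size-normalized and aligned (those steps are not shown). The function name `gait_energy_image` is hypothetical.

```python
import numpy as np

def gait_energy_image(silhouettes):
    """Average N aligned binary silhouettes B_t(x, y) of one gait cycle into a GEI."""
    frames = np.asarray(silhouettes, dtype=np.float64)  # shape (N, H, W)
    if frames.ndim != 3 or frames.shape[0] == 0:
        raise ValueError("expected a non-empty array of shape (N, H, W)")
    return frames.mean(axis=0)  # (1/N) * sum over t of B_t(x, y)

# Toy cycle: three 4 x 4 silhouettes with pixel values in {0, 1}.
cycle = np.zeros((3, 4, 4))
cycle[:, 1:3, 1] = 1.0  # foreground in every frame -> GEI value 1
cycle[0, 1:3, 2] = 1.0  # foreground in one of three frames -> GEI value 1/3
gei = gait_energy_image(cycle)
```

Pixels that are always foreground reach 1, rarely visited pixels take small values, and background stays at 0. This is the "energy" interpretation described above.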

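The Gabor function was introduced by Dennis Gabor in 1946. Its two-dimensional form was later shown by Daugman [5] to extract local orientation information at different scales effectively, which is useful for simulating the receptive fields of human vision and for localizing image structures spatially. Gabor features make gait recognition less sensitive to external factors such as lighting, walking direction, speed and posture. The two-dimensional Gabor wavelet is defined as:

\[ \psi_{\mu,\nu}(z) = \frac{\lVert k_{\mu,\nu} \rVert^2}{\sigma^2} \exp\!\left( -\frac{\lVert k_{\mu,\nu} \rVert^2 \lVert z \rVert^2}{2\sigma^2} \right) \left[ e^{i k_{\mu,\nu} \cdot z} - e^{-\sigma^2/2} \right] \]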

where μ and ν denote the orientation and scale of the Gabor kernel, z = (x, y) denotes the image coordinates, and ‖·‖ is the Euclidean norm. The wave vector \( k_{\mu,\nu} \) is defined as:

\[ k_{\mu,\nu} = k_{\nu} e^{i \varphi_{\mu}} \]

where \( k_{\nu} = \frac{k_{\max}}{f^{\nu}} \) and \( \varphi_{\mu} = \frac{\pi \mu}{8} \); \( k_{\max} \) is the maximum frequency, f is the spacing factor between kernels in the frequency domain, and σ determines the ratio of the Gaussian window width to the wavelength. Let I(z) = I(x, y) denote the gray-level distribution of the image; its convolution with the Gabor kernel is defined as:

\[ G_{\mu,\nu}(z) = I(z) \otimes \psi_{\mu,\nu}(z) \]

where z = (x, y), ‘⊗’ denotes convolution, and \( G_{\mu,\nu}(z) \) is the response of the image to the Gabor kernel at orientation μ and scale ν. In this study, Gabor wavelets with five scales and eight orientations are used, so the set of extracted Gabor feature images is:

\[ S = \left\{ G_{\mu,\nu}(z) : \mu \in \{0, \dots, 7\},\ \nu \in \{0, \dots, 4\} \right\} \]

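According to the convolution theorem, \( G_{\mu,\nu}(z) \) can be computed efficiently with the fast Fourier transform (FFT):

\[ G_{\mu,\nu}(z) = \zeta^{-1}\left\{ \zeta\{ I(z) \}\, \zeta\{ \psi_{\mu,\nu}(z) \} \right\} \]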

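where ζ and \( \zeta^{-1} \) denote the Fourier transform and the inverse Fourier transform. Since \( G_{\mu,\nu}(z) \) is complex-valued, Equations (4) to (7) can be combined to write it in polar form as:

\[ G_{\mu,\nu}(z) = M_{\mu,\nu}(z)\, e^{i \theta_{\mu,\nu}(z)} \]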

where \( M_{\mu,\nu}(z) \) and \( \theta_{\mu,\nu}(z) \) are the amplitude and the phase, respectively. The amplitude \( M_{\mu,\nu}(z) \) reflects local energy variations in the image, and the amplitude spectra of the gait energy images are selected as gait features. The Gabor parameters are set to σ = 2π, \( k_{\max} = \pi/2 \) and \( f=\sqrt{2} \). Figure 4 depicts the 40 amplitude spectra obtained with the Gabor kernels (five scales and eight orientations).
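The kernel, the FFT-based convolution and the amplitude extraction can be sketched in NumPy. This is a minimal sketch under stated assumptions, not the authors' code. The parameters σ = 2π, k_max = π/2, f = √2 and the 5 × 8 bank follow the text. The 31 × 31 kernel window is an assumption, since the window size is not given. Both the image and the kernel are zero-padded so that the FFT's circular convolution equals a linear one, and the result is cropped back to the image size. The names `gabor_kernel` and `gabor_amplitudes` are hypothetical.

```python
import numpy as np

def gabor_kernel(mu, nu, size=31, k_max=np.pi / 2, f=np.sqrt(2), sigma=2 * np.pi):
    """Complex Gabor kernel psi_{mu,nu} sampled on a size x size grid (size is assumed)."""
    k = (k_max / f ** nu) * np.exp(1j * np.pi * mu / 8)  # wave vector k_{mu,nu}
    kx, ky = k.real, k.imag
    k2 = kx ** 2 + ky ** 2
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    envelope = (k2 / sigma ** 2) * np.exp(-k2 * (x ** 2 + y ** 2) / (2 * sigma ** 2))
    carrier = np.exp(1j * (kx * x + ky * y)) - np.exp(-sigma ** 2 / 2)  # DC-compensated wave
    return envelope * carrier

def gabor_amplitudes(img, size=31):
    """Return a (40, H, W) stack of amplitudes M_{mu,nu}: 5 scales x 8 orientations."""
    img = np.asarray(img, dtype=np.float64)
    H, W = img.shape
    ph, pw = H + size - 1, W + size - 1  # full linear-convolution size
    F_img = np.fft.fft2(img, s=(ph, pw))  # zero-padded FFT of the image
    half = size // 2
    out = []
    for nu in range(5):
        for mu in range(8):
            F_ker = np.fft.fft2(gabor_kernel(mu, nu, size), s=(ph, pw))
            G = np.fft.ifft2(F_img * F_ker)  # convolution theorem
            G = G[half:half + H, half:half + W]  # crop to the input size ("same")
            out.append(np.abs(G))  # amplitude M_{mu,nu}(z); phase is discarded
    return np.stack(out)
```

For a 64 × 64 GEI, `gabor_amplitudes` returns 40 arrays of size 64 × 64, one amplitude spectrum per (scale, orientation) pair. A larger window would reduce truncation of the coarse-scale kernels at the cost of more computation.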

3.2 Dimension reduction using (2D)2PCA

Dimension reduction extracts the most important information from the high-dimensional Gabor wavelet feature space and removes the correlations between features. It is an important step for reducing the computational cost of the overall framework. In this study, an extension of conventional principal component analysis, called (2D)2PCA, is proposed for this purpose.

First, two-dimensional principal component analysis (2DPCA) is introduced. 2DPCA operates directly on two-dimensional image matrices rather than on vectorized images.

Assume that an image (here, an amplitude spectrum) A is of size m × n. An m × d matrix Y is obtained by projecting A onto a matrix \( Q \in \mathbb{R}^{n \times d} \) (n ≥ d):

\[ Y = AQ \]

where Q is the projection matrix and Y is the feature matrix of image A. In 2DPCA, the total scatter J(Q) of the projected samples Y is used to evaluate the projection matrix Q:

\[ J(Q) = \operatorname{tr}(S_Y), \qquad S_Y = E\left[ (Y - EY)^{T} (Y - EY) \right] \]

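The image covariance (scatter) matrix is defined as:

\[ G_t = \frac{1}{M} \sum_{k=1}^{M} \left( A_k - \overline{A} \right)^{T} \left( A_k - \overline{A} \right) \]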

where \( A_k \) is the k-th m × n training image and M is the number of training samples. The average image matrix is defined as:

\[ \overline{A} = \frac{1}{M} \sum_{k=1}^{M} A_k \]

Substituting Y = AQ into the total scatter criterion, J(Q) simplifies to:

\[ J(Q) = \operatorname{tr}\left( Q^{T} G_t Q \right) \]

The optimal Q is the orthonormal matrix that maximizes J(Q). The optimal projection matrix \( \widehat{Q} \) is composed of the eigenvectors of the covariance matrix \( G_t \) corresponding to its d largest (nonzero) eigenvalues, one eigenvector per column:

\[ \widehat{Q} = \left[ q_1, q_2, \dots, q_d \right] \]

where \( q_i^{T} q_j = 0 \) for \( i, j \in [1, d] \) and i ≠ j, and each \( q_i \) has unit norm.

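The optimal projection vectors are then used to extract features from the amplitude spectra for classification. For a given image A, the k-th projected feature vector is:

\[ Y_k = A q_k, \quad k = 1, 2, \dots, d \]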

The projected vectors \( Y_1, Y_2, \dots, Y_d \) are called the principal component vectors. 2DPCA collects these d principal component vectors into an m × d matrix \( B = [Y_1, Y_2, \dots, Y_d] \), which is called the feature matrix of A.
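The 2DPCA steps above can be sketched in NumPy. This minimal sketch assumes the training images (for example, amplitude spectra) are stacked into an array of shape (M, m, n). The function names are hypothetical. `np.linalg.eigh` is used because G_t is symmetric. It returns eigenvalues in ascending order, so they are re-sorted to keep the d largest.

```python
import numpy as np

def fit_2dpca(images, d):
    """Return the n x d projection matrix Q whose columns are the top-d eigenvectors of G_t."""
    A = np.asarray(images, dtype=np.float64)  # shape (M, m, n)
    D = A - A.mean(axis=0)  # A_k minus the average image
    # G_t = (1/M) * sum_k (A_k - A_bar)^T (A_k - A_bar), shape (n, n)
    G_t = np.einsum("kij,kil->jl", D, D) / A.shape[0]
    eigvals, eigvecs = np.linalg.eigh(G_t)  # ascending eigenvalues, orthonormal vectors
    order = np.argsort(eigvals)[::-1][:d]  # indices of the d largest eigenvalues
    return eigvecs[:, order]  # columns q_1, ..., q_d

def project_2dpca(A, Q):
    """Feature matrix B = [A q_1, ..., A q_d] = A Q, shape (m, d)."""
    return np.asarray(A, dtype=np.float64) @ Q
```

Column k of `project_2dpca(A, Q)` is exactly \( Y_k = A q_k \). Only an n × n eigenproblem is solved, which is the source of 2DPCA's efficiency relative to vectorized PCA.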

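The (2D)2PCA algorithm applies 2DPCA in two directions, along both the rows and the columns of the image, and combines the results to extract gait features. In the row direction, \( A_k \) and \( \overline{A} \) are written in terms of their row vectors:

\[ A_k = \begin{bmatrix} a_k^{(1)} \\ a_k^{(2)} \\ \vdots \\ a_k^{(m)} \end{bmatrix}, \qquad \overline{A} = \begin{bmatrix} \overline{a}^{(1)} \\ \overline{a}^{(2)} \\ \vdots \\ \overline{a}^{(m)} \end{bmatrix} \]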

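where \( a_k^{(i)} \) and \( {\overline{a}}^{(i)} \) denote the i-th row vectors of \( A_k \) and \( \overline{A} \). The image covariance matrix \( G_t \) can then be rewritten as:

\[ G_t = \frac{1}{M} \sum_{k=1}^{M} \sum_{i=1}^{m} \left( a_k^{(i)} - \overline{a}^{(i)} \right)^{T} \left( a_k^{(i)} - \overline{a}^{(i)} \right) \]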

The optimal projection matrix Q consists of the eigenvectors of \( G_t \) corresponding to its d largest eigenvalues. Because \( G_t \) is accumulated from outer products of the centered row vectors of the images, 2DPCA works in the row direction of the images.

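Similarly, in the column direction, \( A_k \) and \( \overline{A} \) are written in terms of their column vectors:

\[ A_k = \left[ a_k^{(1)}, a_k^{(2)}, \dots, a_k^{(n)} \right], \qquad \overline{A} = \left[ \overline{a}^{(1)}, \overline{a}^{(2)}, \dots, \overline{a}^{(n)} \right] \]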

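where \( a_k^{(j)} \) and \( {\overline{a}}^{(j)} \) denote the j-th column vectors of \( A_k \) and \( \overline{A} \). The column-direction image covariance matrix is:

\[ G_t' = \frac{1}{M} \sum_{k=1}^{M} \sum_{j=1}^{n} \left( a_k^{(j)} - \overline{a}^{(j)} \right) \left( a_k^{(j)} - \overline{a}^{(j)} \right)^{T} \]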

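Let \( Z \in \mathbb{R}^{m \times q} \) consist of the eigenvectors of this column-direction covariance matrix corresponding to its q largest eigenvalues. Projecting an m × n image A onto Q yields an m × d matrix Y = AQ. Projecting Y further onto Z gives the q × d feature matrix C of (2D)2PCA:

\[ C = Z^{T} A Q \]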

After projecting each training image \( A_k \) (k = 1, 2, …, M) onto Z and Q, the feature matrices \( C_k = Z^{T} A_k Q \) (k = 1, 2, …, M) of the training images are obtained.
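The two-directional procedure can be sketched the same way. This minimal NumPy sketch assumes each amplitude spectrum is treated as one m × n training image. The text does not say how the 40 spectra of one GEI are arranged, so this is an assumption. All names are hypothetical.

```python
import numpy as np

def _top_eigvecs(S, k):
    """Eigenvectors of the symmetric matrix S for its k largest eigenvalues, as columns."""
    w, V = np.linalg.eigh(S)
    return V[:, np.argsort(w)[::-1][:k]]

def fit_2d2pca(images, q, d):
    """Learn Z (m x q, column direction) and Q (n x d, row direction)."""
    A = np.asarray(images, dtype=np.float64)  # shape (M, m, n)
    D = A - A.mean(axis=0)
    M = A.shape[0]
    G_row = np.einsum("kij,kil->jl", D, D) / M  # (n, n): outer products of row vectors
    G_col = np.einsum("kij,klj->il", D, D) / M  # (m, m): outer products of column vectors
    return _top_eigvecs(G_col, q), _top_eigvecs(G_row, d)

def features_2d2pca(A, Z, Q):
    """(2D)2PCA feature matrix C = Z^T A Q, shape (q, d)."""
    return Z.T @ np.asarray(A, dtype=np.float64) @ Q
```

The two eigenproblems are only m × m and n × n. For a 64 × 64 amplitude spectrum, this means two 64 × 64 decompositions instead of the single 4096 × 4096 one required by PCA on vectorized images.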

To evaluate the efficiency of the proposed (2D)2PCA algorithm, its training time is compared with that of traditional PCA on Dataset B of the CASIA gait database. The experiments were run on an Intel Core i5 3.2 GHz CPU with 8 GB of Kingston DDR4 2400 MHz RAM. Python (version 2.73) and OpenCV (version 2.4.9) are used to implement the PCA and (2D)2PCA algorithms. Fig. 5 plots training time against the number of videos and shows that the proposed (2D)2PCA method is much more efficient than traditional PCA.

3.3 Gait recognition using multi-class SVM

The support vector machine (SVM) is a powerful machine learning technique based on statistical learning theory [25]. In this study, a multi-class SVM is used to classify the reduced gait features.

The traditional SVM is a two-class classifier, and there are two common approaches to extending it to an n-class problem: the one-against-one approach and the one-against-rest approach [34, 35]. Since the dimension of the selected feature subset is relatively small, the one-against-rest approach is adopted to implement the multi-class SVM. The detailed gait recognition algorithm is described in Algorithm 1.
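A one-against-rest Gaussian-kernel SVM can be sketched with scikit-learn. This assumes scikit-learn is available; the paper does not describe its own SVM implementation. It also assumes that each (2D)2PCA feature matrix \( C_k \) is flattened into a vector before classification. Both are assumptions, and the function names are hypothetical. `OneVsRestClassifier` trains one binary `SVC` per subject and assigns a sample to the subject whose classifier gives the highest decision value.

```python
import numpy as np
from sklearn.multiclass import OneVsRestClassifier
from sklearn.svm import SVC

def flatten_features(feature_matrices):
    """Stack (q x d) feature matrices C_k into an (M, q*d) design matrix."""
    return np.asarray([np.ravel(c) for c in feature_matrices], dtype=np.float64)

def train_one_vs_rest_svm(feature_matrices, labels, gamma=1.0, C=1.0):
    """Fit one binary Gaussian-kernel SVM per subject (one-against-rest)."""
    clf = OneVsRestClassifier(SVC(kernel="rbf", gamma=gamma, C=C))
    return clf.fit(flatten_features(feature_matrices), labels)

# Toy usage: synthetic 4 x 5 "feature matrices" for three subjects.
rng = np.random.default_rng(0)
centers = rng.random((3, 4, 5))
labels = np.repeat([0, 1, 2], 10)
feats = centers[labels] + 0.05 * rng.standard_normal((30, 4, 5))
model = train_one_vs_rest_svm(feats, labels)
pred = model.predict(flatten_features(feats))
```

The default `gamma` and `C` are placeholders. As the text notes, both must be tuned for each training set.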

The SVMs established in Step 4 are Gaussian-kernel SVMs, with the Gaussian kernel function:

\[ K(x_i, x_j) = \exp\left( -\gamma \lVert x_i - x_j \rVert^2 \right), \quad \gamma > 0 \]
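For reference, the Gaussian kernel can be evaluated for whole sets of feature vectors at once. This NumPy sketch (function name hypothetical) uses the expansion ‖x − y‖² = ‖x‖² + ‖y‖² − 2xᵀy. The same form, exp(−γ‖x − y‖²), is what scikit-learn's `rbf` kernel computes.

```python
import numpy as np

def gaussian_kernel(X, Y, gamma):
    """Kernel matrix with K[i, j] = exp(-gamma * ||x_i - y_j||^2) for rows of X and Y."""
    X = np.asarray(X, dtype=np.float64)
    Y = np.asarray(Y, dtype=np.float64)
    sq = (X ** 2).sum(axis=1)[:, None] + (Y ** 2).sum(axis=1)[None, :] - 2.0 * X @ Y.T
    return np.exp(-gamma * np.maximum(sq, 0.0))  # clip tiny negatives caused by round-off
```

Larger γ makes the kernel more local, so each training sample influences a smaller neighborhood. This is why γ must be tuned jointly with C.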

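The objective function (soft-margin SVM) is:

\[ \min_{w, b, \xi} \ \frac{1}{2} \lVert w \rVert^2 + C \sum_{i=1}^{l} \xi_i \quad \text{s.t.} \quad y_i \left( w^{T} \phi(x_i) + b \right) \ge 1 - \xi_i, \ \ \xi_i \ge 0, \ \ i = 1, \dots, l \]

where φ is the feature mapping induced by the Gaussian kernel, i.e., \( K(x_i, x_j) = \phi(x_i)^{T} \phi(x_j) \), l is the number of training samples, and C is the penalty parameter.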

The parameters γ and C in Eqs. (21) and (22) must be tuned to achieve accurate classification results in Algorithm 1. The overall tuning process is shown in Fig. 6. Along the horizontal direction, C is fixed and γ is varied from 0.1 to 100. Along the vertical direction, γ is fixed and C is varied from 1 to 10. Note that the optimal combination of the two parameters must be re-tuned for each new training set.
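The text tunes one parameter at a time while holding the other fixed. A common alternative, swapped in here, is an exhaustive cross-validated grid over the same ranges using scikit-learn's `GridSearchCV`. The grid spacing (7 log-spaced γ values and 10 integer C values), the 3-fold cross-validation and the function name are assumptions.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV
from sklearn.multiclass import OneVsRestClassifier
from sklearn.svm import SVC

def tune_gamma_and_C(X, y, cv=3):
    """Cross-validated search over gamma in [0.1, 100] and C in [1, 10]."""
    param_grid = {
        "estimator__gamma": np.logspace(-1, 2, 7),  # 0.1, 0.316, 1, 3.16, 10, 31.6, 100
        "estimator__C": np.linspace(1.0, 10.0, 10),  # 1, 2, ..., 10
    }
    search = GridSearchCV(OneVsRestClassifier(SVC(kernel="rbf")), param_grid, cv=cv)
    search.fit(X, y)
    return search.best_params_, search.best_score_
```

The `estimator__` prefix routes each parameter to the `SVC` wrapped inside `OneVsRestClassifier`. A joint grid costs more fits than the one-dimensional sweeps in Fig. 6, but it can find interactions between γ and C that fixed-parameter sweeps miss.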

4 Experiments

The open-access CASIA gait database, provided by the Institute of Automation, Chinese Academy of Sciences, is used to verify the effectiveness of the proposed algorithm [40]. The database consists of three sub-datasets: Dataset A, Dataset B and Dataset C. Samples from the three sub-datasets are shown in Fig. 7. Dataset A contains 240 image sequences of 20 persons, covering three walking lanes and four different conditions. Dataset B is a large multi-view gait database containing 13,640 gait videos. In it, 124 persons are recorded from 11 viewing angles, from 0° to 180° in steps of 18°. For each viewing angle of each person, 10 gait videos are recorded: 6 normal, 2 carrying a bag and 2 wearing a coat. Dataset C is another large-scale gait video collection, recorded with infrared cameras at night and covering 153 persons. Each person walks under four conditions: normal walking, fast walking, slow walking and walking with a bag.
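As a quick consistency check, the sequence counts stated above multiply out as follows:

\[ \underbrace{124}_{\text{persons}} \times \underbrace{11}_{\text{angles}} \times \underbrace{10}_{\text{videos}} = 13\,640 \ \text{videos (Dataset B)}, \qquad \underbrace{20}_{\text{persons}} \times \underbrace{3}_{\text{lanes}} \times \underbrace{4}_{\text{conditions}} = 240 \ \text{sequences (Dataset A)} \]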

Four experiments are performed in this section. Experiments 1 and 2 are based on Dataset B, Experiment 3 on Dataset A, and Experiment 4 on Dataset C. Each experiment consists of a training phase and a testing phase. In the training phase, the GEI is calculated for each video sequence, and the amplitude spectra of the Gabor wavelets are calculated as gait features. (2D)2PCA is then applied to reduce the dimension of the feature matrix, which yields the optimal projection matrices. In the testing phase, each test gait sequence is mapped from the high-dimensional feature matrix to the low-dimensional feature space using these projection matrices, and the multi-class SVM classifies the gaits.

The correct classification rate (CCR) is used to measure classification accuracy:

\[ \mathrm{CCR} = \frac{TP + TN}{TP + TN + FP + FN} \]

where TP, TN, FP and FN denote the numbers of true positive, true negative, false positive and false negative samples, respectively [27, 28].
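The CCR formula can be computed directly from predicted and true labels. This small sketch treats one subject as the positive class, which is a one-vs-rest view. The text does not specify how counts are aggregated over subjects, so that step is left out. The function names are hypothetical.

```python
import numpy as np

def confusion_counts(y_true, y_pred, positive):
    """Return (TP, TN, FP, FN) with `positive` treated as the positive class."""
    t = np.asarray(y_true) == positive  # sample truly belongs to the positive class
    p = np.asarray(y_pred) == positive  # sample predicted as the positive class
    return (int(np.sum(t & p)), int(np.sum(~t & ~p)),
            int(np.sum(~t & p)), int(np.sum(t & ~p)))

def ccr(tp, tn, fp, fn):
    """Correct classification rate (TP + TN) / (TP + TN + FP + FN)."""
    return (tp + tn) / (tp + tn + fp + fn)

tp, tn, fp, fn = confusion_counts([0, 0, 1, 1, 2], [0, 1, 1, 1, 2], positive=1)
rate = ccr(tp, tn, fp, fn)  # 2 TP, 2 TN, 1 FP, 0 FN -> 4 / 5 = 0.8
```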

4.1 Experiment 1

In Experiment 1, the first three normal gait sequences (no coat, no bag) in Dataset B are used as the training set, and the remaining three normal sequences are used as the testing set. The training and test sets each contain 372 samples. Seven dimension reduction techniques are compared in the dimension reduction step: conventional PCA, LDA, KPCA [7], KSPP [15], PCA + SPP [23], 2DPCA and (2D)2PCA. All other gait recognition steps are the same for every approach. The CCRs of the seven algorithms in Experiment 1 are listed in Table 1. All classification rates are rank-1 rates. The complete cumulative match characteristic (CMC) curves of the seven algorithms for ranks 1 to 30 are shown in Fig. 8.
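The rank-k rates behind a CMC curve can be computed from a probe-by-gallery score matrix. In this sketch, `scores[i, j]` is assumed to be a similarity between probe i and gallery identity j, where higher means a better match. For the SVM pipeline, per-class decision values are one possible source, which is an assumption. The rank-1 value is the rank-1 rate reported in the tables. The function name is hypothetical.

```python
import numpy as np

def cmc_curve(scores, probe_labels, gallery_labels, max_rank):
    """Fraction of probes whose true identity is among the top-r gallery matches, r = 1..max_rank."""
    scores = np.asarray(scores, dtype=np.float64)
    gallery_labels = np.asarray(gallery_labels)
    ranked = gallery_labels[np.argsort(-scores, axis=1)]  # identities sorted best-first per probe
    hits = ranked == np.asarray(probe_labels)[:, None]  # True where the true identity sits
    # 0-based rank of the first correct match; probes with no match get a rank past the end.
    first_hit = np.where(hits.any(axis=1), hits.argmax(axis=1), scores.shape[1])
    return np.array([(first_hit < r).mean() for r in range(1, max_rank + 1)])

scores = [[0.9, 0.1, 0.0],   # probe of identity 0: correct at rank 1
          [0.2, 0.3, 0.5],   # probe of identity 0: correct only at rank 3
          [0.1, 0.6, 0.3]]   # probe of identity 2: correct at rank 2
cmc = cmc_curve(scores, [0, 0, 2], [0, 1, 2], max_rank=3)  # -> [1/3, 2/3, 1]
```

A CMC curve is non-decreasing in r by construction, which is why the curves in the figures rise toward 100% as the rank grows.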

Table 1 and Fig. 8 show that the proposed method achieves the highest classification rates among all approaches. In the SPP-based methods, the coefficient matrix is calculated by sparse projection, which preserves the local information of the original data and can make the gait features more distinguishable. Moreover, the CCRs of the traditional methods are lower than those of the hybrid methods. For example, combining PCA and SPP yields higher classification rates than PCA or KPCA alone.

4.2 Experiment 2

In Experiment 2, three normal sequences, one bag-carrying sequence and one coat-wearing sequence recorded at the 90° viewing angle in Dataset B are used as the training set, and the other sequences in Dataset B are used as the testing set. The rank-1 CCRs of the same seven algorithms as in Experiment 1 are listed in Table 2.

At the 18°, 36° and 54° viewing angles, the classification rates of PCA are significantly lower than those of the proposed Gabor + (2D)2PCA method. Moreover, training (2D)2PCA takes about 40 min, compared with 2–3 h for PCA. The multi-class SVM processes matrices of size 64 × 64, whereas the PCA method processes a matrix of dimension 4096 × 4096 (a 64 × 64 image vectorized to 4096 dimensions).
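A rough count shows why these matrix sizes matter. Dense symmetric eigendecomposition scales roughly with the cube of the matrix order. Ignoring constants and the cost of building the covariance matrices, one 4096 × 4096 eigenproblem for PCA compares with the row- and column-direction 64 × 64 eigenproblems of (2D)2PCA as follows:

\[ \underbrace{4096^2}_{\text{PCA covariance entries}} = 16\,777\,216 \quad \text{vs.} \quad \underbrace{2 \times 64^2}_{\text{(2D)2PCA covariance entries}} = 8\,192 \]

\[ \frac{4096^3}{2 \times 64^3} = \frac{68\,719\,476\,736}{524\,288} = 131\,072 \]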

Figure 9 shows the CMC curves of the seven algorithms in Experiment 2. Since Gabor wavelets extract scale and orientation information effectively, the Gabor features derived from the GEI contain more detailed scale information in all directions. Therefore, the proposed method produces higher classification rates than methods that use GEIs directly as features. The classification rates of PCA + SPP, KSPP and the proposed method are close. However, the former two methods rely on linear programming in the sparse reconstruction process, so their training time is much longer than that of the proposed method. Table 2 and Fig. 9 show that the proposed method offers lower complexity, shorter training time and higher classification rates. Moreover, the robustness of the proposed algorithm is demonstrated in two respects:

1) Stable classification rates under different viewing angles. The proposed algorithm achieves classification rates above 90% at all 11 viewing angles.

2) Similar classification rates with or without carrying bags. Gait sequences recorded with and without a carried bag differ considerably, yet the proposed method achieves high classification rates in both cases.

4.3 Experiment 3

In Experiment 3, two image sequences in Dataset A are selected as the training set, and the other sequences form the testing set. Figure 10 shows how the overall classification rate varies with rank for the proposed algorithm, PCA, Tang et al.’s 3D partial similarity matching (3DPSM) method [24] and Aggarwal et al.’s Zernike moment invariants (ZMI) method [1].

In Fig. 10, for ranks below 20, the proposed (2D)2PCA-based method has a higher classification rate than the other three methods. Figure 10 also shows that both the proposed method and the PCA approach perform considerably better than in Experiments 1 and 2. This is because Dataset A contains no interference factors such as bags and coats.

4.4 Experiment 4

In Experiment 4, half of the image sequences are evenly selected from Dataset C as the training set, and the remaining sequences form the testing set. Figure 11 compares the CMC curves of the proposed method, PCA, the 3DPSM method [24] and the ZMI method [1].

As Fig. 11 shows, the classification rate of the proposed method is again higher than those of the other methods for ranks below 25. In addition, compared with Experiment 3, the overall classification rates decrease because Dataset C contains more interference factors, such as the poor quality of the night-time infrared videos.

5 Conclusion, discussion and future work

In this study, a novel gait recognition algorithm based on Gabor wavelets and (2D)2PCA is proposed. Compared with existing gait recognition methods, the proposed algorithm has lower complexity, shorter training time, better robustness and higher classification rates. The GEI preserves important information such as walking frequency, contour and phase. The (2D)2PCA algorithm significantly reduces the feature space dimension and thus the training time, and a multi-class SVM is used to classify the gait sequences. Experimental results show that the proposed method achieves higher recognition accuracy than six existing approaches with less computational time. Its robustness is demonstrated across various viewing angles and rank numbers.

One limitation of the proposed approach is that the GEIs lose some dynamic information, since they are computed by averaging a series of images. In future work, other features, such as geometric and texture information, will be integrated into the GEI to improve its quality.

References

Aggarwal H, Vishwakarma D (2017) Covariate conscious approach for Gait recognition based upon Zernike moment invariants. IEEE Transactions on Cognitive and Developmental Systems

Arora P, Srivastava S (2015, February) Gait recognition using gait Gaussian image. In: IEEE 2015 2nd International Conference on Signal Processing and Integrated Networks (SPIN), pp 791–794

Chen Y, Yang J, Wang C, Liu N (2016) Multimodal biometrics recognition based on local fusion visual features and variational Bayesian extreme learning machine. Expert Syst Appl 64:93–103

Choudhury SD, Tjahjadi T (2015) Robust view-invariant multiscale gait recognition. Pattern Recogn 48(3):798–811

Daugman JG (1985) Uncertainty relation for resolution in space, spatial frequency, and orientation optimized by two-dimensional visual cortical filters. JOSA A 2(7):1160–1169

Donohue L (2012) Technological leap, statutory gap, and constitutional abyss: remote biometric identification comes of age. Minnesota Law Review 97:407–559

Ekinci M, Aykut M (2007) Human gait recognition based on kernel PCA using projections. J Comput Sci Technol 22(6):867–876

Guan Y, Wei X, Li CT, Keller Y (2014) People identification and tracking through fusion of facial and gait features. In: Biometric Authentication. Springer International Publishing, pp. 209–221

Gupta JP, Singh N, Dixit P, Semwal VB, Dubey SR (2013) Human activity recognition using gait pattern. International Journal of Computer Vision and Image Processing (IJCVIP) 3(3):31–53

Han J, Bhanu B (2006) Individual recognition using gait energy image. IEEE Trans Pattern Anal Mach Intell 28(2):316–322

Hossain E, Chetty G (2013, November) Multimodal Feature Learning For Gait Biometric Based Human Identity Recognition. In: Neural Information Processing. Springer Berlin Heidelberg, pp. 721–728

Huang DY, Lin TW, Hu WC, Cheng CH (2013) Gait recognition based on Gabor wavelets and modified gait energy image for human identification. J Electron Imaging 22(4):043039

Islam MS, Islam MR, Hossain MA, Ferworn A, Molla MKI (2017) Subband entropy-based features for clothing invariant human gait recognition. Advanced Robotics 1–12

Iwama H, Muramatsu D, Makihara Y, Yagi Y (2013) Gait verification system for criminal investigation. IPSJ Transactions on Computer Vision and Applications 5:163–175

Kejun W, Tao Y, Zhuowen L, Mo T (2013) Kernel sparsity preserving projections and its application to gait recognition. Journal of Image and Graphics 18(3):257–263

Liu N, Tan YP (2010, March) View invariant gait recognition. In: 2010 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, pp 1410–1413

Lu J, Wang G, Moulin P (2014) Human identity and gender recognition from gait sequences with arbitrary walking directions. IEEE Trans Inf Forensics Secur 9(1):51–61

Luo C, Xu W, Zhu C (2015, September) Robust gait recognition based on partitioning and canonical correlation analysis. In: 2015 IEEE International Conference on Imaging Systems and Techniques (IST). IEEE, pp 1–5

Milovanovic M, Minovic M, Starcevic D (2012, November) New gait recognition method using Kinect stick figure and CBIR. In: IEEE 2012 20th Telecommunications Forum (TELFOR), pp 1323–1326

Nandy A, Chakraborty P (2015, August) A new paradigm of human gait analysis with Kinect. In: IEEE 2015 Eighth International Conference on Contemporary Computing (IC3), pp 443–448

Preis J, Kessel M, Werner M, Linnhoff-Popien C (2012, June) Gait recognition with kinect. In: 1st international workshop on kinect in pervasive computing. New Castle, pp. P1–P4

Raheja JL, Chaudhary A, Nandhini K, Maiti S (2015) Pre-consultation help necessity detection based on gait recognition. SIViP 9(6):1357–1363

Ren Y, Wang Z, Chen Y, Zhao W (2015) Sparsity preserving discriminant projections with applications to face recognition. Math Probl Eng 501:203290

Tang J, Luo J, Tjahjadi T, Guo F (2017) Robust arbitrary-view gait recognition based on 3D partial similarity matching. IEEE Trans Image Process 26(1):7–22

Ukil A (2007) Support Vector Machine Computer Science 1.3:1303–1308

Veeraraghavan A, Roy-Chowdhury AK, Chellappa R (2005) Matching shape sequences in video with applications in human movement analysis. IEEE Trans Pattern Anal Mach Intell 27(12):1896–1909

Vishwakarma DK, Singh K (2016) Human activity recognition based on spatial distribution of gradients at sub-levels of average energy silhouette images. IEEE Transactions on Cognitive and Developmental Systems

Vishwakarma DK, Kapoor R, Dhiman A (2016) Unified framework for human activity recognition: an approach using spatial edge distribution and ℜ-transform. AEU-Int J Electron Commun 70(3):341–353

Wu Z, Huang Y, Wang L, Wang X, Tan T (2016) A comprehensive study on cross-view gait based human identification with deep cnns. IEEE transactions on pattern analysis and machine intelligence

Yam CY, Nixon MS (2009) Model-based gait recognition. In: Encyclopedia of Biometrics. Springer, New York, pp 633–639

Yam CY, Nixon MS, Carter JN (2004) Automated person recognition by walking and running via model-based approaches. Pattern Recogn 37(5):1057–1072

Yan C, Zhang Y, Dai F, Li L (2013, March) Highly parallel framework for HEVC motion estimation on many-core platform. In: 2013 Data Compression Conference (DCC). IEEE, pp 63–72

Yan C, Zhang Y, Xu J, Dai F, Zhang J, Dai Q, Wu F (2014) Efficient parallel framework for HEVC motion estimation on many-core processors. IEEE Trans Circuits Syst Video Technol 24(12):2077–2089

Yan K, Shen W, Mulumba T, Afshari A (2014) ARX model based fault detection and diagnosis for chillers using support vector machines. Energ Buildings 81:287–295

Yan K, Zhiwei J, Shen W (2017) Online fault detection methods for chillers combining extended kalman filter and recursive one-class SVM. Neurocomputing 228:205–212

Yoo JH, Hwang D, Moon KY, Nixon MS (2008, November) Automated human recognition by gait using neural network. In: 2008 First Workshops on Image Processing Theory, Tools and Applications (IPTA 2008). IEEE, pp 1–6

Yu S, Tan T, Huang K, Jia K, Wu X (2009) A study on gait-based gender classification. IEEE Trans Image Process 18(8):1905–1910

Zeng W, Wang C, Li Y (2014) Model-based human gait recognition via deterministic learning. Cogn Comput 6(2):218–229

Zhang Y, Pan G, Jia K, Lu M, Wang Y, Wu Z (2015) Accelerometer-based gait recognition by sparse representation of signature points with clusters. IEEE Transactions on Cybernetics 45(9):1864–1875

Zheng S (2011) CASIA Gait Database collected by Institute of Automation, Chinese Academy of Sciences, CASIA Gait Database

Acknowledgements

This work is supported by the National Natural Science Foundation of China (Nos. 61303146 and 61602431) and is performed under the auspices of the AQSIQ of China (No. 2010QK407).

Ethics declarations

Conflict of interests

The authors declare that there is no conflict of interest regarding the publication of this manuscript.

About this article

Cite this article

Wang, X., Wang, J. & Yan, K. Gait recognition based on Gabor wavelets and (2D)2PCA. Multimed Tools Appl 77, 12545–12561 (2018). https://doi.org/10.1007/s11042-017-4903-7
