Abstract

This paper introduces a novel framework for user identification by analyzing neuro-signals. Studies regarding Electroencephalography (EEG) revealed that such bio-signals are sensitive, hard to forge, confidential, and unique which the conventional biometric systems like face, speaker, signature and voice lack. Traditionally, researchers investigated the neuro-signal patterns by asking users to perform various imaginary, visual or calculative tasks. In this work, we have analyzed this neuro-signal pattern using audio as stimuli. The EEG signals are recorded simultaneously while user is listening to music. Four different genres of music are considered as users have their own preference and accordingly they respond with different emotions and interests. The users are also asked to provide music preference which acts as a personal identification mechanism. The framework offers the benefit of uniqueness in neuro-signal pattern even with the same music preference by different users. We used two different classifiers i.e. Hidden Markov Model (HMM) based temporal classifier and Support Vector Machine (SVM) for user identification system. A dataset of 2400 EEG signals while listening to music was collected from 60 users. User identification performance of 97.50 % and 93.83 % have been recorded with HMM and SVM classifiers, respectively. Finally, the performance of the system is also evaluated on various emotional states after showing different emotional videos to users.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Over many years, biometric is used to deal with the unique and identifiable characteristics of the human being. Due to its reliability, biometric technology has been widely adopted for security purposes. Existing technologies of biometrics mostly use hand, palm, face, eyes, and signature as a base and are termed as Human-Computer-Interaction (HCI) for person identification and verification [46]. An identification system answers ‘ w h a t y o u h a v e’ and works on one-to-N matching criteria of identifying a given person out of a pool of persons. In contrast, verification [41] system solves ‘ w h a t y o u a r e’ and involves one-to-one matching of the claimed identity.

Existing biometric systems suffer from various security attacks including impersonation, forging and shoulder-surfing etc. Therefore, a robust biometric system is required against such security risks. Acknowledging such challenging issues involved, recently a number of work was developed through brainwave based biometric systems using electroencephalography (EEG) signals. Various Brain-Computer-Interface (BCI) applications have been successfully developed using EEG signals. Such applications include gaming [23], security [42] and healthcare systems [47]. Also, the potential of EEG signals has been used for rehabilitation purpose in-case of traumatic diseases like strokes [18]. Moreover, the uniqueness, low cost, high temporal resolution, portability, easy setup and handling of the EEG signals imposed high potential to incorporate these signals for the development of biometric systems.

With the help of EEG signals, attempts are being made to analyze and detect various changes in psychological and physiological behavior. Emotions, which constantly modulate individual’s rationality, vary on instant basis. The detection of progressive and intricate changes in EEG signals have led researchers to study the importance of affective computing [37]. Breitwieser et al. [9] proposed the use of smart phone to monitor bio-signals. The objective was to interpret the bio-signals through multimedia application in real time.

To measure specific patterns of brain, researchers have used various stimuli like mental activity task [32], motor imaginary movement [6], Visual Evoked Potential (VEP) [50]. Stickel et al. [44] used abstract and realistic pictures as stimuli to study EEG recordings for the assessment of software learning. It was shown that users with alpha frequency depict easy learning whereas users with high beta waves resulted in hard learning of software. In these studies, it was observed that users’ data was collected using various stimuli by superimposing the tasks but no clear evidence was given to prove the unique characteristic of the brain.

In this work, we have used a multimedia approach for person identification, where neuro-signals are evoked by listening to music. From the experiment, it was noticed that music has high impact on user’s behavior. Lin et al. [25] proposed an emotion recognition framework using EEG signals while listening music. Four basic emotions, namely joy, anger, sadness and pleasure were recognized by extracting spectral power features using SVM classifier. However, the impact of music on neuro-signal depends on individual choice of music-genre. A study [16] was done to predict preference from four different types of music, namely rock pop, electronic, jazz, and classical. Inspired with these work, we have selected four music categories for stimuli while recording EEG signals. The main contributions of the paper are as follows:

-

1.

We proposed a novel user identification framework based on EEG signals while listening to music.

-

2.

User’s identity validation is performed using four different genres of music i.e. devotional, electronic, classical and rock.

-

3.

A comparative performance analysis of two different classifiers, namely Hidden Markov Model (HMM) and Support Vector Machine (SVM) are presented for user identification purpose.

-

4.

We also studied the impact of various emotions in identification process after showing different emotional videos to users.

Rest of the paper is systematically organized as follows. In Section 2, we present the related work of user identification system. Section 3 discusses the system setup for the proposed framework, preprocessing techniques employed for smoothing the EEG signals, feature extraction methods, and the classifiers used. Experimental results are presented in Section 4. Finally, conclusion and future possibilities of the work are discussed in Section 5.

2 Related work

Based on the various physiological or behavioral features, researchers have worked on biometric identification techniques that varies from fingerprint [5], palm vein [3], signature [27, 40], voice [15], iris [14] to a novel EEG signals [10, 43] based approaches. In this section, we present a comprehensive review of the work done by researchers in biometric applications.

Fingerprints are commonly used for identity-verification biometric because of its distinct features. A Vaulted Fingerprint Verification (VFV) protocol was used [5] where triangle features were proposed for identity verification. The protocol was to verify remote users so that their privacy remain conserved. With the same feature extraction technique [38] a touch-less fingerprint authentication system was proposed and attained an accuracy of 93.6 %. In [21], the image of palm veins structure were recorded and performed an Equal Error Rate (EER) of 1.20 %, whereas [24] encoded the 2D image into a bit string for identification with ERR of 0.4 %. Another palm vein based verification system was proposed [3] using Gabor filter and cosine distance as features for classification. The authors in [52] used lines of the palm for authentication purpose by using directional line detector with an ERR of 0.6 %.

Because of the day-to-day financial transactions and official communication of documents, signature based verification system is widely accepted. In [35], an offline signature verification system using contourlet transform and textural features has been discussed. The performance of the system was evaluated using HMM classifier with a success rate of 93.4 %. A character recognition was presented [19] to check behavioral identity of the users using Artificial Neural Network (ANN) classifier with an EER of 6.9 %.

Making use of brain signals as a potential biometric authentication system, can also help in identifying the people with various disabilities. In [41 ], a person authentication system using EEG signals has been presented. They have recorded the signals of 9 subjects while performing imaginative tasks of self-paced left-hand or right-hand movement. A Gaussian Mixture Model (GMM) and Maximum-a-Posteriori (MAP) model based statistical framework has been used to evaluate the system performance with an accuracy of 80.7 %. Palaniappan et al. [33] have proposed a VEP-based person identification framework by recording EEG signals of 102 subjects based on mental task. Subjects have to recognize and remember the picture that was shown earlier. A classification rate of 98.12 % was achieved on gamma band VEP signals using Elman neural network classifier. The repeatability of EEG signals over time for the same user has been addressed in [22] by acquiring signal dataset of 9 subjects in two different sessions. An accuracy of 90.53 % has been recorded using auto-regression features and linear classifier based on a Minimum-Mean-Square-Error (MMSE) criterion. In [1], a person authentication system has been proposed using EEG signals generated from mental writing as passwords. Multivariate Autoregressive (MVAR) and Power Spectral Density (PSD) features were extracted, where a Half Total Error Rate (HTER) of 5 % and 3 % have been recorded with SVM classifier whereas in [2], auto regression feature extractor and linear discriminator classifier have been proposed for person identification. Zúquete et al. [54] have proposed a person identification using EEG signals by analyzing VEPs, which are brain’s response to a visual stimulus. The energy of differential EEG signals of 8 occipital electrodes was extracted as features. The system was tested using k-NN with 1-NN and Support Vector Data Description (SVDD) with a Radial Basis Function (RBF) kernel classifiers where an accuracy of 93.7 % was recorded for 70 subjects. In [13], an accuracy of 99 % was achieved for authentication by using single-channel EEG signal with 15 subjects.

Researchers also worked on combination of EEG, Electrocardiography (ECG) and other physiological signals to study the state of emotions like excitation, calmness, stress, happiness and neutral etc. Soleymani et al. [43] proposed a user-independent emotion recognition system to recover affective tags for videos using EEG signals and eye-gaze data while showing emotional video clips. They used logarithms of the PSD as EEG features for 32 electrodes. An accuracy of 68.5 % and 76.4 % were achieved with three valence and arousal scores when tested using SVM classifier with RBF kernel. Emotions through EEG brain signals were measured using music to distinguish various rhythms [10]. The melody and beat-histogram as rhythm feature vector were used to classify four emotions. Linear regression and C4.5 were used for building emotion models whereas [25] the SVM classifier is employed to investigate four basic emotions i.e. joy, anger, sadness, pleasure using EEG signals while listening music with an accuracy of 92.73 %. In [34], the authors have proposed a user authentication system using acoustical monitoring of keyboard while typing the pre-defined word. Mel-Frequency Cepstral Coefficients (MFCC) features were extracted from the input data and HMM classifier was used to evaluate the performance. The authors have achieved EER between 9.4 % and 14.8 % for authentication task whereas an accuracy of 99.33 % was achieved in person identification.

3 System setup

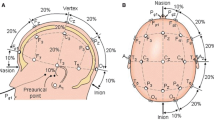

In this section, we describe the proposed multimedia framework for user identification using EEG signals while listening instrumental music. The block diagram of the proposed framework is shown in Fig. 1. The activity of capturing brain signals while listening to music has been done by wearing EEG sensor and earphones simultaneously. To capture the signals, a consumer graded non-invasive EEG based neuro-headset Emotiv Epoc+ with 14 sensors +2 references is used [8]. More details can be found in [45]. A graphical user interface (GUI) provided by EmotivXavier Test Bench 3.1 tool at a sampling rate of 128 Hz is used to analyze the signals while recording. In order to build a robust identification system all 14 channels (i.e. AF3, AF4, F3, F4, F7, F8, FC5, FC6, P7, P8, T7, T8, O1, O2) are considered. Four different types of music were selected for the study, namely devotional, electronic, classical and rock. In our experiment, all four music genres were of 20 seconds. These four music genres were merged into a single audio file with 10 seconds of silence interval between each music as depicted in Fig. 2. The silence interval has been kept for resetting emotional state of the user between ending and starting of a music genre. After hearing the music genres, the users were asked to give the preference of music enjoyed most that will act as their password. The vocal music has not been included in the study for avoiding unwanted variation in the brain signals due to lyrics processing.

3.1 Signal preprocessing using Savitzky-Golay filter

Recorded bio-signals are usually non-stationary due to continuous change over time. They are also contaminated with several noise such as unwanted noise from hair, muscle activity, heart pulse and possible changes in the impedance of the electrodes [48]. Moreover, the recording equipment is very sensitive and easily pick interferences from the surrounding. Hence the input signal with high signal-to-noise ratio (SNR) is expected. Thus, in order to achieve an acceptable SNR, signal smoothing technique has been applied to extract necessary information from signals. In this study, the signals are filtered by Savitzky-Golay filter to attenuate its short-term variations [7].

This digital filter works for the signals captured in time domain and designed to formulate data smoothing problems [20]. The filter is defined as a weighted coefficients (C −n ,C −(n−1)...,C n−1,C n ) to carry smoothing operation. These weighted coefficients are also known as convolution integers for fitting data to a polynomial of degree d. For the raw signal [ R = R 1,R 2...,R N ], the smoothed data points (S k ) g are computed using (1).

In this work, a degree size of 2 and cut-off frequency of 100 Hz are used to filter the captured data of each electrode. By this filter we avoid noise and capture the important trend. Since contraction of muscles lead signal frequencies greater than 100 Hz, so suppression of frequencies above 100 Hz will remove movement artifacts in the acquired signal [39]. The result of preprocessing is shown in Fig. 3, where Fig. 3a shows the captured raw signal, and Fig. 3(b) shows the outcome of the corresponding signal after applying Savitzky-Golay filter.

3.2 Hidden Markov model based user identification

In this section, the features extracted from EEG signals and classification using Hidden Markov Model (HMM) is discussed. Time-frequency based wavelet transform features are used to extract the gamma band frequency. These are described below.

3.2.1 Wavelet transform features

Wavelet transform based features have been considered as an important tool for signal analysis. Wavelet Transform has the ability to represent signals in both time and frequency domains [36]. Fourier transform can be applied on stationary signals. However, EEG signals are non-stationary signal and contain a time varying frequency content. Thus, Fourier transform are not applied to such signals. The use of Daubechies-4 (DB4) wavelets in the analysis of EEG signals has been used successfully [49]. In DB4, the discrete wavelet coefficients (DWT) for different scales are obtained. Here, the output of the high pass filter provides the set of DWT coefficients called detail-coefficients that capture the high frequency energy of the signal. Similarly, low pass filter encompasses the low energy of the signal and provides approximation-coefficients. The mathematical formulation and wavelet expansion of signal \(x\left (t\right )\) with ‘ detail’ and ‘ approximation’ coefficients can be described using (2).

where \(\sum C_{j0k}\varphi _{j0k}\left (t\right )\) denotes the ‘ approximation’ and \({\sum }_{j=k0} {\sum }_{k}d_{ik}\psi _{jk}\left (t\right )\) represents the ‘ detail’ coefficients. The ‘ detail’ coefficients are represented by using (3)

where \(\psi _{jk}\left (t\right )dt\) defines the wavelet function. It is given by using (4),

The ‘ approximation’ coefficient can be described using (5),

whereas \(\varphi \left (t\right )\) is described as the scaling function in DB4 and can be computed using (6).

The electrical activity measured from the brain surface is divided into specific frequencies. As certain frequencies are more prominent in different state of emotions, hence measured in different ranges. The standard EEG frequency bands to measure brain signals are (0−4 Hz) Delta, (4−8 Hz) Theta, (8−16 Hz) Alpha, (16−32 Hz) Beta, and (32−100 Hz) Gamma. It has been shown that oscillatory neural activity in the gamma frequency is sensitive to attenuated tones in a rhythmic sequence [29]. The magnitude of Steady State Evoked Potentials (SSEPs) changes when the subject hear rhythmic sequence [30]. Therefore, using DB4 the signals are decomposed into 5-levels as shown in Fig 4.

Palaniappan and Mandic considered the variations in Gamma band for person identification using EEG signals [33]. In our study, after initial experiments on all band waves (alpha, beta, gamma, theta and delta) individually, we also obtained highest performance using Gamma band. Therefore, only the variation in gamma band waves are considered in evaluating the performance of the system using HMM and is denoted by (G F ) that can be computed using (7). An illustration of extracted gamma band wave feature corresponding to a preprocessed signal is shown in Fig 5.

where \(G_{f^{1}}\), \(G_{f^{2}}\) are the gamma band wave features corresponding to first, second electrodes and so on.

3.2.2 Classification using HMM

HMM classifier is a discrete statistical model used to process non-stationary signals. The model is successfully used for modeling bio-signals [31], speech signals [12] and image sequences [26]. It is a double layered process with hidden layer controlling the state of an observable layer and shows the time variation of random signals with Markovian chain. The basic HMM model consists of three tuples defined by initial, transition and observation probability states and is denoted by { π, A, B} with π as the initial probability distribution, A represents the transition state matrix as A = [a i j ], i, j = 1, 2,…N shows the transition probability from state i to state j, and B denotes the observation probability b j (O k ) for observing the sequence O k from state j. A Gaussian Mixture Model (GMM) has been defined for each state of the model and the output probability density function b j (x) is computed by using (8).

where x is the k dimensional feature vector, M j is the number of Gaussian assigned to state j. ℵ(x, μ,Σ) denotes the Gaussian with mean μ. Co-variance matrix denoted by \(\sum \) and c j k gives weight coefficient of the Gaussian with component k to state j. For a model λ, if O is an observation sequence O = (O 1,O 2,…O T ) and is assumed to be generated by a state sequence Q = Q 1,Q 2,…S T of length T, then the probability of observation or likelihood for the observed sequence can be calculated using (9), where \(\pi _{q_{1}}\) denotes the initial probability of start state.

In this work, Bakis topology which works from left-to-right has been used for experiments performed using HTKFootnote 1 (HMM toolkit) tool. The extracted gamma feature vector defined in (7) has been used to train EEG signals model and re-estimation of the initial output probability b i (O) has been done using Baum-Welch algorithm. The identification process has been performed using Viterbi decoding algorithm that finds a signal sequence having best likelihood.

3.3 SVM based user identification

User Identification using SVM classifier is performed using two statistical features (S F ) that are derived from the gamma band feature vector (G F ). The details of the features and the classifier are discussed below.

3.3.1 Statistical features

We have extracted two statistical features for each \(G_{F^{i}}\) where (i=1, 2..., 14), namely, Root Mean Square (RMS) and Standard Deviation denoted by S R and S D , respectively. Hence a new feature vector S F of 28 dimensions is computed using (10).

-

1.

Root Mean Square:RMS is a one-dimensional feature correspond to S R in our feature vector for each electrode. It’s a quantity of the square root of the mean value of the squared values of the quantity taken over an interval [17]. The value can be computed using (11)

$$ RMS=\sqrt {\frac{1}{N}\sum\limits_{i=1}^{N}{\left| X_{i}\right|^{2}}} $$(11)where N is the number of samples and X is the input vector for the i th input’s amplitude.

-

2.

Standard Deviation:Standard deviation (σ) based feature in the EEG signals has been successfully reported [4] for security and emotion recognition applications. Standard deviation is used to show the variation exists in the signals and can be computed by using (12).

$$ \sigma = \left( \frac{1}{n-1} \sum\limits_{i=1}^{n}\left( x_{i} - \bar x \right)^{2}\right)^{\frac{1}{2}} $$(12)where x is the signal corresponding to one electrode and \(\bar x\) is the mean defined in (13)

$$ \bar x=\frac{1}{n}\sum\limits_{i=1}^{n}\left( x_{i}\right) $$(13)and n is the number of elements presents in the signal. The standard deviation corresponds to our feature vector S D .

3.3.2 Classification using SVM

SVM classifier aims to maximize the distance between support vectors by avoiding the misclassification error and over-fitting of the data. As there are large number of predictor values, SVM deals with these values by mapping them to a new dimensional space [53]. In this study, linearly separable multi-class SVM classifier has been used that separates the data without overlapping and mis-classification. For a labelled training set {(x 1,y 1),(x 2,y 2)......(x l ,y l )}, where x i with i = 1, 2...l represents a vector of length d and y i ∈ {1, 2,....k} that represents the class of a sample signal. The formulation of the process can be done by minimizing (14).

with constraints defined in (15)

where \({\xi _{i}^{m}}\geq , i=1,.......l\) and m∈1,........k∖y i . Then the decision can be computed by using (16)

where, the weight vector \(\left (\omega \right )\), bias (b), number of support vectors \(\left (m\right )\), k is the k th classifier, C is the regularization parameter and ξ is the slack variable. In this work, we have used LIBSVMFootnote 2 (A library for SVM) to conduct experiments on SVM classifier.

4 Results and discussions

This section presents the results that were obtained after performing various experiments on the collected dataset. The performance of the proposed framework has been evaluated in two phases using 5-fold cross validation, where 80 % of the dataset has been used in training EEG signals and the rest 20 % used for testing. First, we discuss the classification results using HMM and then, the classification results using SVM have been presented.

4.1 Dataset description

In this study, real time data for user identification was collected from 60 users using Emotiv Epoc+ based wireless EEG neuro-sensor at Indian Institute of Technology Roorkee, India. The volunteers were asked to listen to the music with their eyes closed while their electrical activity was being recorded through EEG device. Data from all 14 channels at a sampling rate of 128 Hz was recorded. The users were instructed to remain calm during the whole process with clear thoughts. Four different types of instrumental music were selected that includes devotional, electronic, classical and rock. Each music was of 20 seconds with 10 seconds of silence-gap so that the user can come back in normal emotional state. The detailed parameters of the 4 music genres are shown in Table 1. These parameters are the properties of MP3 music files correspond to four music genres. Here, the music act as stimuli to evoke brain signals that were used for identification purpose. The EEG signals for all music were recorded in a single trial using the music framework described in Fig. 2. By using this, we have collected 240 (i.e. 60x4) EEG signals of 60 users corresponding to 4 music (20 sec each). Next, out of these 240 collected signals, each signal is divided into 10 equal parts with 2 seconds each that results a total of 2400 (i.e. 240x10) distinct signals. Hence a dataset of 2400 signals was recorded for 60 users listening to 4 music genres with 10 samples of each music with duration of 2 seconds.

After getting the first preference of music from each subject, 600 (i.e. 60x10) samples out of 2400 signals were selected from the dataset and these were used to evaluate the performance of the system. Large variations in the EEG signals of multiple users were observed even with the same music as their first preference. Such variation are shown in Fig. 6, where four different users opted the same music (i.e. electronic) as their first preference. Moreover, a large variation in response to the four music was recorded in the EEG signals of the same user while listening. Such variation can be seen in Fig. 7, where each sub-plot of the EEG signal corresponds to one of the four music. These variations are due to different level of involvement and emotions by different subjects.

In addition to this, data from 10 users were also collected to study the impact of emotions on the EEG signals. For this, four different 2−3 minutes videos were shown to the users to incite the emotions according to Valence-Arousal model. Four different kinds of emotions are studied namely happiness, sadness, anger and scare. The emotion state data was captured in various sessions. The key goal is to test the impact on user’s EEG signals for user identification system while going through various emotional states. Videos were shown one session at a time and data was collected after the end of the video. For further research in this area the dataset has been made onlineFootnote 3.

4.2 Identification results using HMM

HMM has been considered for modeling temporal sequences. Hence the 14 dimensional feature vector G F defined in (7) has been used for evaluating the performance of person identification process using HMM. The feature vector sequence has been processed using left-to-right continuous density HMMs. The results have been computed by varying HMM states, S t ∈ {3, 4, 5, 6} and also the number of Gaussian components per state i.e. from 1 to 64 with an incremental step power of 2. An accuracy of 97.50 % has been recorded at 32 Gaussian mixtures when tested with varied GMM components with 3 HMM states as shown in Fig. 8. The identification rate has also been computed by varying HMM states with 32 Gaussian mixtures as shown in Fig. 9. From the experiment, an accuracy of 97.50 % has been recorded with 3 HMM state and 32 Gaussian mixtures. The confusion matrix corresponding to the HMM based results for all 60 subjects in the form of heat-map is shown in Fig. 10.

Error Analysis:

Here, we present the details of the users whose signals were not correctly identified by the proposed framework by consulting the confusion matrix shown in Fig. 10. It is because of the noisy signals and different emotional states of the subjects that created confusion and wrongly matched with the EEG signals of other subjects. The signals of the three subjects were not correctly identified by the system, even though they have different music choice as their passwords. One reason could be the electromagnetic noise due to surrounding devices or ongoing University Examinations which caused the stress and led to wrong interpretation of the signals. Hence a different variation in the same signal has been recorded that creates the confusion and were identified wrongly.

4.3 Identification results using SVM

Since SVM classifier performs better in non-temporal sequence related problems. Thus, we have used the 28 dimensional feature vector S F as defined in (10). The performance of the system has been evaluated with linear kernel based SVM classification and by varying the regularization parameter (C) between 1 to 10 for multi-class classification as shown in Fig. 11. An average accuracy of 93.83 % has been recorded at regularization parameter C = 6.

4.4 User identification by varying signal with time

The performance of the system was also evaluated by varying testing signals with recorded duration of 20 seconds. As defined in Section 4.1, the EEG signals were divided into 10 samples with equal time duration of 2 seconds. The experiments were conducted by picking 2 consecutive signals for testing and by keeping rest of the samples for training. The results were computed for all 60 subjects, where accuracies of 100 % and 96.88 % were recorded at signals that belongs to the time duration of 10 to 16 seconds as shown in Fig. 12, when tested with HMM and SVM classifiers, respectively. In HMM, the maximum accuracies were recorded at 3 HMM states with 32 Gaussian mixture components whereas in SVM, the accuracies were recorded using Linear kernel and by varying regularization parameter (C) from 1 to 10 where maximum results were achieved with C=6. It was noted that the performance of the system is high in the signals that lie between 10-16 seconds (in middle) instead of signals in the starting and ending time durations. It might be due to the focus achieved by the users at the middle part of the music in comparison to the starting and ending parts.

4.5 User identification with varying emotional states

In this section, we present the details of the performance of the proposed framework with varying emotional state. The EEG signals were recorded while the subjects were in different emotional states after watching different videos as described in Section 4.1. In order to peruse the performance of the system four different emotional states have been selected namely, ‘happiness’, ‘sadness’, ‘anger’ and ‘scare’. The experiment was conducted for 10 subjects by keeping their previous training models obtained from HMM and SVM classifiers defined in Sections 4.2 and 4.3, respectively. EEG signals of a user with different emotional states are depicted in Fig. 13. It can be noted from Fig. 13 that different emotions generated variation in EEG signals. A comparative performance of the system for different emotional states in comparison to the ‘calm’ state (recorded later without showing any videos) is shown in Fig. 14. It can be noted that the maximum accuracy has been achieved as 100 % in the calm state in comparison to other emotional states. So it can be analyzed that the emotions play an important role in EEG signals. Thus, leading to variation in the performance level of the identification system.

4.6 User identification results for different music choice

This section presents the user identification results for different music genres i.e. devotional, electronic, classical and rock when tested separately with HMM and SVM classifiers for all 60 users. An average accuracy of the performance in the form of bar chart is shown in Fig. 15. Though, the identification results on different music are slightly less than the proposed music choice based framework, but could be used to allow the user to set multiple secret passwords based on different music.

4.7 Comparative performance analysis

A comparative analysis of our proposed framework with previous studies is shown in Table 2 for user identification. An accuracy of 96.98 % was reported [11] with resting state when the number of subjects is 48. With the same state [28] an accuracy of 96.2 % is calculated with 11 subjects. While using VEP as an activity maximum accuracy of 86.1 % is shown with 10 subjects [53]. It can be observed from the Table 2 that the experimental results of the proposed approach with 60 user while listening music outperforms the previously reported studies for user identification.

5 Discussion and conclusion

In this paper, we have proposed a novel user identification framework using EEG signals recorded while the users listened to 4 genres of music. Two types of experiments were performed. First, we tried to avoid emotional influence of previous music by including a pause of 10 seconds in between two successive music genres. Second, videos of different emotions were shown to the user before recording EEG signals to check the influence of emotion in authentication system. These samples were tested with classifier models generated from 1st experiment. We noted that the results from experiment 1 were better than experiment 2. This suggests us that previous emotion could influence the user during authentication.

A large dataset comprising of 60 users was used in this system. The performance of the system was evaluated using EEG signals based on the music choice reported by the users. In experiment 1, user identification rates of 97.50 % and 93.83 % were recorded using HMM and SVM classifiers, respectively. Next for experiment 2, in emotional state based performance evaluation an identification rate of 100 % was achieved in ‘calm’ state when tested with HMM and SVM, respectively. In the future, the study can be extended to better estimate the performance with different emotional states by developing emotional state models. Since there is always a scope of improvement, other hierarchical and classifier combination approach can be used for further enhancement in identification performance.

References

Abdulkader SN, Atia A, Mostafa M-SM (2015) Single trial authentication with mental password writing. In: Human Aspects of Information Security, Privacy, and Trust, pp 3–12

Abo-Zahhad M, Ahmed SM, Abbas SN (2015) A new multi-level approach to EEG based human authentication using eye blinking. Pattern Recogn Lett

Al-Juboori AM, Wu X, Zhao Q (2013) Biometric authentication system based on palm vein. In: International Conference on Computer Sciences and Applications, pp 52–58

Alomari MH, Abubaker A, Turani A, Baniyounes AM, Manasreh A (2014) EEG mouse: A machine learning-based brain computer interface. Int J Adv Comput Sci Appl 5(4):193–198

Alzahrani H, Boult TE (2014) Remote authentication using vaulted fingerprint verification. In: SPIE Defense+ Security, pp 90750K–90750K

Astigarraga A, Arruti A, Muguerza J, Santana R, Martin JI, Sierra B (2016) User adapted motor-imaginary brain-computer interface by means of EEG channel selection based on estimation of distributed algorithms. Math Probl Eng

Azami H, Mohammadi K, Bozorgtabar B (2012) An improved signal segmentation using moving average and savitzky-golay filter. Journal of Signal and Information Processing

Badcock NA, Mousikou P, Mahajan Y, de Lissa P, Thie J, McArthur G (2013) Validation of the emotiv EPOC®; EEG gaming system for measuring research quality auditory ERPs. PeerJ 1:e38

Breitwieser C, Terbu O, Holzinger A, Brunner C, Lindstaedt S, Müller-putz GR (2012) iscope–viewing biosignals on mobile devices. In: Joint International Conference on Pervasive Computing and the Networked World, pp 50–56

Cabredo R, Legaspi RS, Inventado PS, Numao M (2012) An emotion model for music using brain waves. In: International Society of Music Information Retrieval, pp 265–270

Campisi P, Scarano G, Babiloni F, Fallani FD, Colonnese S, Maiorana E, Forastiere L (2011) Brain waves based user recognition using the eyes closed resting conditions protocol. In: International Workshop on Information Forensics and Security, pp 1–6

Champion C, Houghton S (2016) Application of continuous state hidden markov models to a classical problem in speech recognition. Comput Speech Lang 36:347–364

Chuang J, Nguyen H, Wang C, Johnson B (2013) I think, therefore i am: Usability and security of authentication using brainwaves. In: International Conference on Financial Cryptography and Data Security, pp 1–16

de Mira Jr. J, Neto HV, Neves EB, Schneider FK (2015) Biometric-oriented iris identification based on mathematical morphology. Journal of Signal Processing Systems 80(2):181–195

Dustor A, Kłosowski P (2013) Biometric voice identification based on fuzzy kernel classifier. In: International Conference on Computer Networks, pp 456–465

Hadjidimitriou SK, Hadjileontiadis LJ (2012) Toward an EEG-based recognition of music liking using time-frequency analysis. IEEE Trans Biomed Eng 59(12):3498–3510

Hariadi M, Purnomo MH et al (2014) EEG signal identification based on root mean square and average power spectrum by using backpropagation. J Theor Appl Inf Technol 66(3)

Holzinger A, Scherer R, Seeber M, Wagner J, Müller-Putz G (2012) Computational sensemaking on examples of knowledge discovery from neuroscience data: towards enhancing stroke rehabilitation. In: International Conference on Information Technology in Bio-and Medical Informatics, pp 166–168

Iranmanesh V, Ahmad SMS, Adnan WAW, Yussof S, Arigbabu OA, Malallah FL (2014) Online handwritten signature verification using neural network classifier based on principal component analysis. Sci World J:2014

Krishnan SR, Seelamantula CS (2013) On the selection of optimum savitzky-golay filters. IEEE Transactions on Signal Processing 61(2):380–391

Kumar A, Hanmandlu M, Madasu VK, Vasikarla S (2011) A palm print authentication system using quantized phase feature representation. In: Applied Imagery Pattern Recognition Workshop, pp 1–8

La Rocca D, Campisi P, Scarano G (2013) On the repeatability of EEG features in a biometric recognition framework using a resting state protocol. In: BIOSIGNALS, pp 419–428

Lalor EC, Kelly SP, Finucane C, Burke R, Smith R, Reilly RB, Mcdarby G (2005) Steady-state VEP-based brain-computer interface control in an immersive 3d gaming environment. EURASIP journal on applied signal processing 2005:3156–3164

Lee JC (2012) A novel biometric system based on palm vein image. Pattern Recogn Lett 33(12):1520–1528

Lin Y-P, Wang C-H, Wu T-L, Jeng S-K, Chen J-H (2008) Support vector machine for EEG signal classification during listening to emotional music. In: 10th Workshop on Multimedia Signal Processing, pp 127–130

Mahajan K, Rai M (2014) Watermarking scheme for colour images using hidden markov model. International Journal of Computer Science and Mobile Computing

Mandal R, Roy PP, Pal U, Blumenstein M (2015) Spatial pyramid matching-based multi-script off-line signature identification. Journal of the American Society of Questioned Document Examiners 18(1):3–9

Mao C, Hu B, Wang M, Moore P (2015) EEG-based biometric identification using local probability centers. In: International Joint Conference on Neural Networks, pp 1–8

Nozaradan S, Peretz I, Missal M, Mouraux A (2011) Tagging the neuronal entrainment to beat and meter. J Neurosci 31(28):10234–10240

Nozaradan S, Peretz I, Mouraux A (2012) Selective neuronal entrainment to the beat and meter embedded in a musical rhythm. J Neurosci 32(49):17572–17581

Obermaier B, Guger C, Neuper C, Pfurtscheller G (2001) Hidden markov models for online classification of single trial EEG data. Pattern Recogn Lett 22(12):1299–1309

Palaniappan R (2008) Two-stage biometric authentication method using thought activity brain waves. Int J Neural Syst 18(01):59–66

Palaniappan R, Mandic DP (2007) Biometrics from brain electrical activity: A machine learning approach. IEEE Transactions on Pattern Analysis and Machine Intelligence 29(4):738–742

Pleva M, Kiktova E, Viszlay P, Bours P (2016) Acoustical keystroke analysis for user identification and authentication. In: 2016 26th International Conference Radioelektronika (RADIOELEKTRONIKA), pp 386–389

Pushpalatha KN, Prajwal SS, Gautam AK, Shiva Kumar KB (2014) Offline signature verification based on contourlet transform and textural features using hmm. In: Recent Advances and Innovations in Engineering, pp 1–6

Rafiee J, Rafiee M, Prause N, Schoen M (2011) Wavelet basis functions in biomedical signal processing. Expert Syst Appl 38(5):6190–6201

Ramirez R, Palencia-Lefler M, Giraldo S, Vamvakousis Z (2015) Musical neurofeedback for treating depression in elderly people. Front Neurosci 9

Ravi H, Sivanath SK (2013) A novel method for touch-less finger print authentication. In: International Conference on Technologies for Homeland Security, pp 147–153

Repovš G (2010) Dealing with noise in eeg recording and data analysis. In: Informatica Medica Slovenica, vol 15, pp 18–25

Roy PP, Bhowmick S, Pal U, Ramel JY (2012) Signature based document retrieval using ght of background information. In: International Conference on Frontiers in Handwriting Recognition, pp 225–230

Sebastien M, JosR D (2007) Person authentication using brainwaves EEG and maximum a posteriori model adaptation. IEEE Transactions on Pattern Analysis and Machine Intelligence 29(4):743–752

Shah PG, Vastare KC, Huang X, Srikumar A, Sreenivasa SM, Kumar APRM, Kodada KR (2015) Development of a novel EEG wave controlled security system. In: Seventh International Conference on Intelligent Computing and Information Systems, pp 116–120

Soleymani M, Pantic M, Pun T (2012) Multimodal emotion recognition in response to videos. IEEE Trans Affect Comput 3(2):211–223

Stickel C, Fink J, Holzinger A (2007) Enhancing universal access–EEG based learnability assessment. In: International Conference on Universal Access in Human-Computer Interaction, pp 813–822

Stytsenko K, Jablonskis E, Prahm C (2011) Evaluation of consumer eeg device emotiv epoc. In: MEi: CogSci Conference 2011, Ljubljana

Sundararajan A, Pons A, Sarwat AI (2015) A generic framework for EEG-based biometric authentication. In: 12th International Conference on Information Technology-New Generations, pp 139–144

Swaminathan R, Prasad S (2016) Brain computer interface used in health care technologies. In: Next Generation DNA Led Technologies, pp 49–58

Tatum IV WO (2014) Handbook of EEG interpretation. Demos Medical Publishing

Ting W, Guo-zheng Y, Bang-hua Y, Hong S (2008) EEG feature extraction based on wavelet packet decomposition for brain computer interface. Measurement 41 (6):618–625

Wang Y, Gao X, Hong B, Jia C, Gao S (2008) Brain-computer interfaces based on visual evoked potentials. IEEE Engineering in medicine and biology magazine 27(5):64–71

Weston J, Watkins C (1998) Multi-class support vector machines. Technical report

Wu X, Zhang D, Wang K (2006) Palm line extraction and matching for personal authentication. IEEE Transactions on Systems, Man and Cybernetics, Part A: Systems and Humans 36(5):978–987

Yeom S-K, Suk H-I, Lee S-W (2013) Person authentication from neural activity of face-specific visual self-representation. Pattern Recogn 46(4):1159–1169

Zquete A, Quintela B, da Silva Cunha JP (2010) Biometric authentication using brain responses to visual stimuli. In: BIOSIGNALS, pp 103–112

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Kaur, B., Singh, D. & Roy, P.P. A Novel framework of EEG-based user identification by analyzing music-listening behavior. Multimed Tools Appl 76, 25581–25602 (2017). https://doi.org/10.1007/s11042-016-4232-2

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-016-4232-2