Abstract

In this paper, aiming at the drawback of the popular dimensionality reduction method Discriminant Sparse Neighborhood Preserving Embedding(DSNPE), i.e. the construction of its between-class scatter is too complexity, a novel algorithm Discriminant Locality Preserving Projection (DSLPP) is proposed. Our proposal retains the local sparse reconstructive relationships of DSNPE and constructs a novel between-class scatter by using all mean faces as sparse representation dictionary. In particular, DSLPP preserves the sparse reconstructive relationship of mean face, so then it can not only efficiently reduce the between-class scatter computation complexity of DSNPE, but also increase the discriminant power. In the experiment, we compare DSLPP with classic and state-of-the-art dimensionality reduction methods on the publicly available data sets such as ORL, Yale, UMIST, and AR, and further apply the Gabor feature into DSLPP on AR face database to further improve its performance. The experimental results show that DSLPP is able to obtain a better representation of the class information and achieve much higher recognition accuracy.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Since pattern recognition tasks, such as data mining, information retrieval, and text categorization, often suffer from the low efficiency brought by the huge dimensionality of the feature space, Dimensionality Reduction (DR) technology has attracted more and more attention. The objective of dimensionality reduction is to find a low-dimensional feature representation for data compression and to enhance the discrimination for subspace classification [18]. With respect to the DR methods that have been proposed recently, they can generally be categorized into two classes, i.e. linear and nonlinear methods. In the first class, two most popular ones are Principal Component Analysis (PCA) [14] and Linear Discriminant Analysis (LDA) [1]. However, LDA cannot resolve Small Sample Size (SSS) problem [23] because the within-class compactness matrix is singular, and both PCA and LDA consider only the global Euclidean structure of the data and fail to take into account the manifold structure of the data [41]. Furthermore, to avoid the SSS problem of LDA, several algorithms, such as Maximum Margin Criterion(MMC) [17], were further proposed.

In contrast to linear DR approaches, techniques based on nonlinear manifold learning can effectively discover the intrinsic nonlinear structures of data [45]. Recently, Many nonlinear manifold learning methods have been proposed, such as locally linear embedding (LLE) [24], Multidimensional Scaling(MDS)[6], Least Square Projection(LSP) [22], Local Affine Multidimensional Projection [13], isometric feature mapping (ISOMAP) [26], Laplacian Eigenmap (LE) [2], Local Tangent Space Alignment (LTSA) [40] and Maximum Variance Unfolding (MVU) [28]. However, all these approaches yield maps that are defined only on the training data and there is no clear approach for the evaluation of these maps on new testing data [11]. To settle this problem, several representative manifold learning algorithms have been proposed, such as Neighborhood Preserving Embedding (NPE) [10] and Locality Preserving Projections (LPP) [9]. However, both NPE and LPP are unsupervised learning methods and do not consider the underlying class information, which limits the classification performance of them. To improve the classification performance of LPP, Marginal Fisher Analysis (MFA) [32], Local Discriminant Embedding(LDE) [3], Supervised Locality Preserving Projection (SLPP) [15], Discriminant Locality Preserving Projection (DLPP) [39] and Orthogonal Discriminant Locality Preserving Projections (ODLPP) [46] are presented. Besides, graph construction is a key step for different dimensionality reduction algorithms, and two most popular methods for graph construction are k-nearest-neighbor and ε-ball-neighborhood. However, the sample neighborhood size k and the ball radius ε are sensitive to noise and difficult to determine in many real-world problems [5, 43, 44].

Recently, sparse representation, which is a powerful tool for signal processing and pattern classification [29, 30], has been utilized to design feature extraction methods [35]. M Yang et al. [38] proposed a sparse representation based Fisher Discrimination Dictionary Learning (FDDL) approach to image classification. Cheng et al. [4, 31, 33, 36] made use of sparse representation to construct an adapting graph to avoid tuning the model parameters. Qiao et al. extended NPE and further proposed Sparsity Preserving Projection (SPP) [19] to avoid selecting the neighborhood parameters. However, SPP is based on unsupervised learning and neglects the label information, which may degrade its performance [16]. Fei et al. [43] proposed a Discriminative Learning by Sparse representation Projection for classification (DLSP) by incorporates the merits of both local between-class geometrical structure and spasity property. Gui et al. [20] proposed a Discriminant Sparse Neighborhood Preserving Embedding (DSNPE) by combining SPP and Maximum Margin Criterion (MMC) [17].

In this paper, aiming at the drawback of DSNPE that the construction of its between-class scatter is too complexity, a novel supervised dimensionality reduction algorithm called Discriminant Sparse Locality Preserving Projection (DSLPP) is proposed. To our best knowledge, this is the first time that a between-class scatter by using all mean faces as sparse representation dictionary is constructed and the sparse reconstructive relationship of the mean face is preserved. In particular, our proposed DSLPP can not only efficiently reduce the between-class scatter computation complexity of DSNPE, but also increase the discriminant power. Moreover, comparing with LPP, NPE, and DLPP, the determination of the within-class and between-class similarities is also improved by using the sparse representation and the class information, and comparing with SPP, DSLPP is a supervised learning method which considers the class information. The mainly advantages of DSLPP are: (1) it is able to efficiently reduce the between-class scatter computation complexity which could be very high in DSNPE; (2) it constructs its between-class scatter by sparse representation without redundant dictionary, which can maximize the between-class distance. By contrast, the representation dictionary of DSNPE is redundant when constructing the between-class scatter. (3) it increases the discrimination capacity and improves the classification performance.

The remainder of this paper is organized as follows. In Section 2, we briefly review LPP and SPP. In Section 3, we introduce the proposed method in detail. In Section 4, we present the results of tests on the ORL, Yale, UMIST and AR face databases to demonstrate the effectiveness of the new method. Some conclusions are drawn in Section 5.

2 Outline of locality preserving projection and sparsity preserving projection

2.1 Locality preserving projection

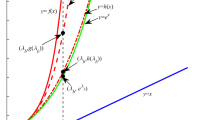

LPP is a popular manifold learning algorithm, which aims to find the optimal low-dimensional projections that preserve the local geometry of the observation data set. Given a set of training samples \(\{\boldsymbol {x}_{\boldsymbol {i}}\}_{i=1}^{n}\), where x i ∈ R m, let X = [x 1 , x 2 ,⋯ , x n ] ∈ R m×n be the data matrix consisting of all the training samples. In practice, the feature dimension m is often very high. The goal of linear dimensionality reduction is to transform the data from the original high-dimensional space to a low-dimensional space, that is y = A T x ∈ R d for any x ∈ R m with d≪m where A = (α 1, α 2,⋯ , α d ) and α i (i = 1,⋯ , d) is an m-dimensional column vector. The objective function of LPP is defined as follows:

where \(\boldsymbol {y}_{\boldsymbol {i}}=\mathbf {A}^{T}\boldsymbol {x}_{\boldsymbol {i}}(i=1,2,\cdots ,n)\) and Tr(⋅) denotes the trace of a matrix, D is a diagonal matrix, D i i is the column (or row) sum of W: \(\mathbf {D}_{ii}=\sum \limits _{j}\mathbf {W}_{ij}\), and the affinity weight matrix W is defined as

or, in a simple 0 −1 way [12],

where σ is the heat kernel parameter and N k (x i ) denotes the k nearest neighbors of x i . By imposing the constraint A T X D X T A = 1 the transformation matrix A can be obtained by solving the generalized eigenvalue problem:

2.2 Sparsity preserving projection

SPP first seeks a sparse reconstructive weight vector s i for each x i through the ℓ 1 minimization problem:

where s i = [s i,1,⋯ , s i, i−1,0, s i, i+1,⋯ , s i, n ]T is an n-dimensional vector and 1 is a vector of all ones. In practice, (5) is usually transformed into a regularization problem, which is also called the Lasso [27]:

Then, the optimal solution, denoted by \(\boldsymbol {s}_{\boldsymbol {i}}^{*}\) is used to construct the following objective function:

where \(\mathbf {S}=\left [\boldsymbol {s}_{\mathbf {1}}^{*},\boldsymbol {s}_{\mathbf {2}}^{*},\cdots ,\boldsymbol {s}_{\boldsymbol {n}}^{*}\right ]^{T}\), α is the projection matrix. Finally, the optimal α of SPP can be obtained by solving the generalized eigenvalue problem:

3 Discriminant sparse locality preserving projection

In this section, we present the DSLPP algorithm in detail. First, we design a sparse local scatter to characterize the within-class compactness, and propose a novel between-class scatter by preserving the sparse reconstructive relationship of the mean face. On this basis, we then present a criterion to maximize the ratio of the between-class scatter to the within-class compactness. After maximizing the criterion function of DSLPP, the samples from the same class will be close to each other while the samples from different classes will be far away from each other.

3.1 The within-class compactness

The within-class compactness is an index which is used to characterize the compactness between samples in the same class. In order to characterize the within-class compactness, we suppose the sample set X = [X 1, X 2,⋯ , X i ⋯ , X c ]∈R m×n, where \(\mathbf {X}_{i}=[\mathbf {x}_{i,1},\mathbf {x}_{i,2},\cdots ,\mathbf {x}_{i,n_{i}}]\), n i is the number of samples in the i-th class, n 1 + n 2+⋯ , + n c = n. Suppose x i belongs to the k-th class, the corresponding dictionary X k is composed only of the samples with the same label as x i . Similar to DSNPE, the within-class sparse coefficient vector \(\mathbf {s}_{i}^{w}\) can be obtained by solving the following optimization problem:

where \(\mathbf {X}_{k}=[\mathbf {x}_{k,1},\mathbf {x}_{k,2},\cdots ,\mathbf {x}_{k,n_{k}}]\in R^{m\times n_{k}}\), and \(\mathbf {s}_{i}^{w}=[s_{i,1}^{w},s_{i,2}^{w},\cdots ,s_{i,i-1}^{w},0,s_{i,i+1}^{w},\cdots ,s_{i,n_{k}}^{w}]^{T}\in R^{n_{k}}\),

where r k−1 = n 1 + n 2+⋯ + n k−1, the W w denote the within-class weight matrix. In order to illustrate the construction of W w, an example is as follows: Suppose c = 3, n 1 = 3, n 2 = 4, n 3 = 3, n = n 1 + n 2 + n 3 = 10, t h e n

The numerator of objective function can be reduced to:

where L w = D w−W w, \(\mathbf {D}^{w}=\sum \limits _{j}w_{ij}^{w}\). The trace Tr(A T X L w X T A) is called the within-class compactness.

3.2 The between-class scatter

The between-class scatter is an index which is used to characterize the separability between different classes. In order to characterize the between-class scatter, we suppose f i and f j are the mean weight vectors for the i-th class and the j-th class and that m i and m j are separately the mean faces of the i-th class and the j-th class in projection space. W b denotes the between-class weight matrix. The between-class sparse coefficient vector \(\mathbf {{s_{i}^{b}}}\) can be obtained by solving the following optimization problem:

where D = [f 1, f 2,⋯ , f i−1, f i , f i+1⋯ , f c ]∈R m×c, and c is the number of classes. \(\mathbf {s}_{i}^{b}=[s_{i,1}^{b},s_{i,2}^{b},\cdots ,s_{i,i-1}^{b},0,s_{i,i+1}^{b},\cdots ,s_{i,c}^{b}]^{T}\in R^{c}\), \(\sum \limits _{j}s_{i,j}^{b}=1\). \(w_{i,j}^{b}=s_{i,j}^{b}\). The numerator of objective function can be reduced to:

where L b = D b−W b, \(\mathbf {D}^{b}=\sum \limits _{j}w_{ij}^{b}\), F = [f 1, f 2,⋯ , f c ], and \(\mathbf {f_{i}}=\frac {1}{n_{i}}\sum \limits _{k=1}^{n_{i}}\mathbf {{x_{k}^{i}}}\). The trace Tr(A T F L b F T A) is called the between-class scatter.

3.3 Discriminant sparse locality preserving projection algorithm

To improve recognition, we seek a projection matrix A that maximizes the between-class scatter and minimizes the within-class compactness. Therefore, these dual criteria can be re-written as a trace ratio optimization problem, namely

Note that the trace ratio problem does not have a closed optimum solution directly [5, 34, 42]. In this paper, we further simplify (14) and define the objective function of DSLPP as

From above we can see that both L w and L b are Laplacian operators. Because L w is a positive semi-definite and symmetrical matrix, X L w X T is also a positive semi-definite and symmetrical matrix. If the X L w X T is nonsingular, the optimization problem (15) can be directly obtained by solving the eigenvectors of (X L w X T)−1(F L b F T). However, for many classification tasks, X L w X T is singular because of the small number of the training samples and large number of data dimension. To avoid the singularity of X L w X T, we propose to use PCA to reduce the dimension of the training samples. The main algorithmic procedure of DSLPP is summarized as follows:

- Step 1::

-

Use PCA to project the data set to a lower dimensional subspace. The PCA projection matrix is denoted by A p . For the sake of simplicity, the data set in the PCA subspace is still denoted by X.

- Step 2::

-

Construct the within-class weight matrix W w and X L w X T using (9–11).

- Step 3::

-

Compute the between-class weight matrix W b and F L b F T using (12) and (13).

- Step 4::

-

Work out the optimal projection matrix \(\mathbf {A}^{*}=[\mathbf {\alpha }_{\mathbf {1}},\mathbf {\alpha }_{\mathbf {2}},\cdots ,\mathbf {\alpha }_{\boldsymbol {d}}]\), corresponding to the first d largest nonzero eigenvalues of (X L w X T)−1(F L b F T).

- Step 5::

-

The final projection matrix is \(\mathbf {A}=\mathbf {A}_{p}\mathbf {A}^{*}\).

4 Experimental results

To evaluate the proposed DSLPP algorithm, we compare it with LDA [1], LPP [9], DLPP [39], NPE [10], SPP [19] and DSNPE [20] on the ORL [25], Yale[25], UMIST [7] and AR [21] face databases. In these databases, there exists a wide range of variations, including facial expression, pose, and lighting direction. In our experiments, images from each class are selected randomly for training, and the remainder are used for testing. In order to make the comparison fair, we used PCA as a preprocessing step by retaining 98% energy for all the compared algorithms on ORL, Yale and UMIST databases, and by setting the maximum reduce dimension as 300 for all the compared algorithms on AR database, respectively. Noting that in the LDA, the maximal number of dimension is c−1, where c is the number of individuals [1]. For SPP and DSNPE, the parameter λ of l 1-regularizer(5) was set to 1 in all the experiments. Note that \(\boldsymbol {s}_{\boldsymbol {i}}^{\boldsymbol {w}}\) in (9) and \(\boldsymbol {s}_{\boldsymbol {i}}^{\boldsymbol {b}}\) in (12) are no longer expected to be sparse because X k consists only of k-th class samples and D consists only of other mean samples except for k-th class. Hence, for DSLPP, the parameter λ of the l 1-regularizer (5) was set to 0 in all experiments. For LPP, DLPP and NPE, the k-NN criterion was used to construct the graphs, and the 0–1 method was used to construct the adjacency matrices. The neighbor parameter K in the locality-based methods was varied from 1 to T−1 in step 1 to search for the best performance, where T is the number of training samples. The experiments were completed in Matlab 7.14 (R2012a) and performed on a computer with an Intel(R) Xeon (R) CPU, 2.53 GHz and Windows 7 Professional.

4.1 Experiments on ORL database

The ORL [25] face database consists of 40 subjects, 10 different face images for each subject. In our experiment, all the images were cropped and resized to 32 ×32 pixels with 256 gray level per pixel. All images are rendered with continuous variations of pose and lighting direction. Some sample images are shown in Fig. 1.

For each individual, l(=3,4,5,6) images are randomly selected for training and the rest are used for testing. We repeat the experiment 20 times for each given l. The maximal average recognition rates of each method and the corresponding standard deviations are given in Table 1. In Table 1, it can be seen that DSLPP obtains higher recognition rates in every case. Figure 5 shows the best classification accuracy versus the variation of the size of the training set.

4.2 Experiments on Yale database

The Yale [25] database contains 165 gray scale images of 15 individuals. The images have variations in their illumination conditions and their facial expressions (normal, with glasses, without glasses, happy, sad, sleepy, surprised, and wink). In our experiments, each image was manually cropped and resized to 64 ×64 pixels. Some sample images are shown in Fig. 2. For each individual, l(=4,5,6,7) images are randomly selected for training and the rest are used for testing. We repeat the experiment 20 times for each given l. The maximal average recognition rates of each method and the corresponding standard deviations are given in Table 2. In Table 2, it can be seen that DSLPP obtains the highest recognition rates in every case. Figure 6 shows the best classification accuracy versus the variation of the size of the training set.

4.3 Experiments on UMIST database

In this section, we demonstrate that our algorithm’s classification performance is superior to that of the other subspace learning methods concerning pose changes. The UMIST database [7] contains 575 images of 20 individuals, each covering a wide range of poses, from profile to frontal views. We use a cropped version of the UMIST database [7] with 112 ×92. Figure 3 shows some images of an individual. For each individual, l(=5,7,9,11) images are randomly selected for training and the rest are used for testing. We repeat the experiment 20 times for each given l. The maximal average recognition rates of each method and the corresponding standard deviations are given in Table 3. Figure 7 shows the best classification accuracy versus the variation of the size of the training set. It was found that the DSLPP method significantly outperforms LDA, LPP, DLPP, NPE, SPP and DSNPE. That is to say, the DSLPP algorithm is not sensitive to the changes in pose.

4.4 Experiments on AR database

AR face database contains over 4000 color images which respectively corresponds to 126 people’s faces (70 men and 56 women) with different facial expressions, illumination conditions, and occlusions. The pictures of most persons are taken in two sessions, and each section contains 13 color images. In our experiments, we use a subset of the AR face database provided and pre-processed by Martines [21]. As [8], we chose a subset (only with illumination changes and expressions) of AR database [21] consisting of 50 male subjects and 50 female subjects. The original resolution of the images is 165 ×120. Here, for computational convenience, we manually cropped the face portion of the image and then normalized it to 60 ×43. The sample images of AR face database are shown in Fig. 4. We also apply the Gabor feature into DSLPP on AR face database to further improve the performances of DSLPP, and the dimension of Gabor-feature vector is 5600. In particular, the method of Gabor filter is the same as in [37]. The maximal recognition rate of each method and the corresponding reduced dimension are listed in Table 4. The recognition rate curves of some representative algorithms versus the variation of dimensions are shown in Fig. 8. From the experimental results, we can see that DSLPP gets the higher recognition rate than other methods, DSLPP based on Gabor (GDSLPP) also archives the highest recognition.

4.5 Discussion

In Tables 1–4, Figs. 5, 6, 7 and 8, we can see that:

-

1.

The maximal average recognition of DSLPP is superior to LDA, which is due to the fact that LDA work with the hypothesis that the samples follow a gauss distribution that restricts its application for some complex distribution data. By contrast, DSLPP works without any hypothesis, and in addition, since the sparse representation coefficients of DSLPP can incorporate the intrinsic discrimination construction of data sets, by preserving the sparse reconstructive relationship, DSLPP is able to obtain better recognition results than LDA.

-

2.

The maximal average recognition of DSLPP is superior to LPP and NPE. This is likely due to the fact that LPP and NPE only encode the local discriminative information and do not explicitly consider the global discriminative information. This impairs their recognition accuracy.

-

3.

DSLPP is also superior to SPP. This is likely due to the fact that SPP uses only unsupervised learning, neglecting the label information.

-

4.

DSLPP is also superior to DLPP. Although DLPP considers both the global and local geometrical structures of the data, it constructs the similarity matrix by adopting the Euclidean measure of distance, which is not applicable to the complex manifold structure of the data. Unlike DLPP, DSLPP constructs the similarity matrix by sparse representation methods. In Figs. 5–8, the recognition accuracy of DSLPP is obviously better than DLPP. This is probably because DLPP measures the similarity of two points by the Euclidean distance.

-

5.

DSLPP is superior to the DSNPE which owes to the fact that representation dictionary of DSNPE is redundant when constructing the between-class scatter, while DSLPP constructs its between-class scatter by sparse representation without redundant dictionary, which can maximize the between-class distance. Therefore, DSLPP has more discriminant power.

-

6.

GDSLPP is superior to DSLPP which owes to the fact that DSLPP is directly applied on the raw gray intensity, while GDSLPP is directly applied on Gabor features.

5 Conclusion

In this paper, we propose the DSLPP algorithm, which is a new dimensionality reduction method that preserves the sparse reconstructive relationship of the mean face. The advantages of DSLPP are that DSLPP can not only efficiently reduce the between-class scatter computation complexity which could be very high in DSNPE, but also increase the discriminant power. Moreover, comparing with LPP, NPE, and DLPP, the determination of the the within-class and between-class similarities is also improved by using the sparse representation and the class information, and comparing with SPP, DSLPP is a supervised learning method which considers the class information. Furthermore, we also apply the Gabor feature into DSLPP on AR face database to further improve its performance. The experimental results show the effectiveness of our proposal. We will extend DSLPP to a nonlinear method using the kernel trick. Meanwhile, we also want to improve our proposal by introducing the method based on Bayes and the probability theory into the matching recognition process.

References

Belhumeur PN, Hespanha JP, Kriengman DJ (1997) Eigenfaces vs. Fisherfaces: Recognition using class specific linear projection. IEEE Trans Pattern Anal Mach Intell 19(7):711–720

Belkin M, Niyogi P (2003) Laplacian eigenmaps for dimensionality reduction and data representation. Neural Comput 15(6):1373–1396

Chen HT, Chang HW, Liu TL (2005) Local discriminant embedding and its variants. In: Proceedings of the IEEE computer visual and pattern recognition (CVPR), pp 846– 853

Cheng B, Yang J, Yan S, Fu Y, Huang T (2010) Learning with L1-graph for image analysis. IEEE Trans Image Process 19(4):858–866

Chun D, Shi-Lin Z, Ji-Xiang S, Hao S, Liang-Liang W (2013) Discriminant embedding by sparse representation and nonparametric discriminant analysis for face recognition. J Cent South Univ 20:3564–3572

Cox TF, Cox MAA (2000) Multidimensional Scaling, 2nd edn. Chapman and hall/CRC

Graham DB, Allinson NM (1998) Characterizing virtual eigensignatures for general purpose face recognition. In: Face recognition: From theory to applications, vol 163, pp 446– 456

Gui J, Sun Z, Jia W, Hu R, Lei Y, Ji S (2012) Discriminant Sparse Neighborhood Preserving Embedding for Face Recognition. Pattern Recogn 45 (8):2884–2893

He X, Niyogi P (2003) Locality preserving projections. In: Proceedings of Conference on Advances in Neural Information Processing Systems (NIPS)

He X, Cai D, Yan S, Zhang H (2005) Neighborhood preserving embedding. In: Proceedings of the IEEE international conference on computer vision (ICCV), pp 1208–1213

He X, Yan S, Hu Y, Niyogi P, Zhang H-J (2005) Face recognition using Laplacianfaces. IEEE Trans Pattern Anal Mach Intell 27(3):328–340

Huang P, Tang Z, Chen C, Yang Z (2014) Local maximal margin discriminant embedding for face recognition. J Vis Commun Image R 25:296–305

Joia P, Paulovich F, Coimbra D, Cuminato J, Nonato L (2011) Local affine multidimensional projection. IEEE Trans Vis Comput Graph 17(12):2563–2571

Jolliffe IT (1986) Principal component analysis. Springer, New York

Kong J, Wang S, Wang J, Ma L, Fu B, Lu Y (2009) A novel approach for face recognition based on supervised locality preserving projection and maximum margin criterion. International Conference on Computer Engineering and Technology ICCET 2009(1):419–423

Lai Z, Li Y, Wan M, Jin Z (2013) Local sparse representation projections for face recognition. Neural Comput Applic 23:2231–2239

Li HF, Jiang T, Zhang KS (2006) Efficient and robust feature extraction by maximum margin criterion. IEEE Trans Neural Netw 17(1):157–165

Li W, Ruan Q, Wan J (2014) Dimensionality reduction using graph-embedded probability-based semi-supervised discriminant analysis. Neurocomputing 138:283–296

Lishan Q, Songcan C, Xiaoyang T (2010) Sparsity preserving projections with applications to face recognition. Pattern Recogn 43:331–341

Lu G-F, Jin Z, Zou J (2012) Face recognition using discriminant sparsity neighborhood preserving embedding. Knowl-Based Syst 31:119–127

Martinez AM, Benavente R (1998) The AR face database, CVC, Univ. Autonoma Barcelona, Barcelona, Spain, Technical Report, 24

Paulovich F, Nonato L, Minghim R, Levkowitz H (2008) Least square projection: a fast high-precision multidimensional projection technique and its application to document mapping. IEEE Trans Visual Comp Graph 14(3):564–575

Raudys SJ, Jain AK (1991) Small sample size effects in statistical pattern recognition: Recommendations for practitioners. IEEE Trans Pattern Anal Mach Intell 13(3):252–264

Roweis ST, Saul LK (2000) Nonlinear dimension reduction by locally linear embedding. Science 290(5500):2323–2326

See (http://www.cad.zju.edu.cn/home/dengcai/Data/FaceData.html)

Tenenbaum JB, da Silva V, Langford JC (2000) A global geometric framework for nonlinear dimensionality reduction. Science 290:2319–2323

Tibshirani R (1996) Regression shrinkage and selection via the lasso. J R Statist Soc Ser B (Methodological) 58(1):267–288

Weinberger KQ, Saul LK (2006) An introduction to nonlinear dimensionality reduction by maximum variance unfolding. In: Proceedings of the 21st National Conference on Artificial Intelligence, vol 2, pp 1683–1686

Wright J, Yang AY, Ganesh A, Sastry SS, Ma Y (2009) Robust face recognition via sparse representation. IEEE Trans Pattern Anal Mach Intell 31 (2):210–227

Wright J, Ma Y, Mairal J, Sapiro G, Huang T-S, Yan S (2010) Sparse representation for computer vision and pattern recognition. In: Proceedings of the IEEE, Special Issue on Applications of Compressive Sensing and Sparse Representation, vol 98, pp 1031–1044

Wright J, Ma Y, Mairal J, Sapiro G, Huang T, Yan S (2010) Sparse representation for computer vision and pattern recognition. Proc IEEE 98(6):1031–1044

Yan S, Xu D, Zhang B, Zhang H, Yang Q, Lin S (2007) Graph embedding and extensions: a general framework for dimensionality reduction. IEEE Trans Pattern Anal Mach Intell 29(1):40–51

Yan S, Wang H (2009) Semi-supervised learning by sparse representation. In: SIAM International Conference on Data Mining (SDM), pp 792–801

Yang-Qing J, Fei-Ping N, Chang-Shui Z (2009) Trace ratio problem revisited. IEEE Trans Neural Netw 20(4):729–735

Yang M, Zhang L (2010) Gabor feature based sparse representation for face recognition with gabor occlusion dictionary. Computer Vision-ECCV:448–461

Yang J, Chu D, Zhang L, Xu Y, Yang J (2013) Sparse representation classifier steered discriminative projection with applications to face recognition. IEEE Trans Neural Netw Learn Syst 24(7):1023–1035

Yang M, Zhang L, shiu S, Zhang D (2013) Gabor feature based robust representation and classification for face recognition with Gabor occlusion dictionary 46(7):1865–1878

Yang M, Zhang L, Feng X, Zhang D (2014) Sparse representation based fisher discrimination dictionary learning for image classification. Int J Comput Vis 109 (3):209–232

Yu W, Teng X, Liu C (2006) Face recognition using discriminant locality preserving projections. Image Vis Comput 24(3):239–248

Zhang Z, Zha H (2005) Principal manifolds and nonlinear dimensionality reduction via tangent space alignment. SIAM J Sci Comput 26(1):313–338

Zhao H, Sun S, Jing Z, Yang J (2006) Local structure based supervised feature extraction. Pattern Recogn 39:1546–1550

Zhao M-B, Zhang Z, Chow TW (2012) Trace ratio criterion based generalized discriminative learning for semi-supervised dimensionality reduction. Pattern Recogn 45:1482–1499

Zang F, Zhang J (2011) Discriminative learning by sparse representation for classification. Neurocomputing 74:2176–2183

Zhen-Yue Z, Jing W, Hong-Yuan Z (2012) Adaptive manifold learning. IEEE Trans Pattern Anal Mach Intell 34(2):253–265

Zhong F, Zhang J, Li D (2014) Discriminant locality preserving projections based on L1-norm maximization. IEEE Transactions on Neural Networks and Learning Systems 25(11):2065–2074

Zhu L, Zhu S (2007) Face recognition based on orthogonal discriminant locality preserving projections. Neurocomputing 70(7-9):1543–1546

Acknowledgments

This work was supported by the National Natural Science Foundation of China under Grant No. 61472297, U1404622 and 61503082.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Yang, Y., Wang, Y. & Xue, X. Discriminant sparse locality preserving projection for face recognition. Multimed Tools Appl 76, 2697–2712 (2017). https://doi.org/10.1007/s11042-015-3212-2

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-015-3212-2