Abstract

This study presents an optimization-based image watermarking scheme with integrated quantization embedding. First, the peak signal-to-noise ratio (PSNR) is rewritten as a performance index in matrix form. To guarantee robustness, the watermark is embedded into the low-frequency coefficients of the discrete wavelet transform (DWT). Unlike traditional single-coefficient quantization, this study applies amplitude quantization to embed the watermark and rewrites this amplitude quantization as a constraint with an embedding state. An optimization problem connecting the performance index and the amplitude-quantization constraint is then obtained. Second, the Lagrange Principle is used to solve this problem, and the optimal solution is applied to embed the watermark. In detection, the hidden watermark can be extracted without the original image. Finally, the performance of the proposed scheme is evaluated by PSNR and bit error ratio (BER).


1 Introduction

Digital watermarking has become an important technique for copyright protection in recent years. In general, watermarking in the frequency domain [4, 5, 11, 15, 16, 21, 24–28] performs better than watermarking in the spatial domain [1, 2, 7, 9, 12, 18, 20]. Hence, most watermarking schemes are implemented in a frequency domain, such as the discrete Fourier transform [24], the discrete cosine transform [15, 26], and the discrete wavelet transform [4, 5, 11, 16, 21, 25, 27, 28].

In [7], Chen and Wornell introduced a framework for analyzing the inherent tradeoffs among robustness, degradation of the host image, and embedding capacity. They optimized the tradeoffs between rate, distortion, and robustness in an information-embedding system, namely quantization index modulation. In [18], the authors proposed a data-hiding scheme with distortion tolerance. Their scheme both prevents the quality of the processed image from being seriously degraded and achieves distortion tolerance. However, the associated embedding system operates on single bits in the spatial domain and therefore does not exploit the advantages offered by the wavelet transform or other transforms. This study instead uses DWT low-frequency coefficients to embed each watermark bit in the host image. Moreover, their optimization technique is complicated. To improve its efficiency, this study optimizes the tradeoff between peak signal-to-noise ratio (PSNR) and bit error ratio (BER) by using the Lagrange Principle.

First, the PSNR and the amplitude-quantization equation are rewritten as a performance index in matrix form and a constraint with an embedding state, respectively. An optimization equation is then proposed to connect the performance index and the constraint. Second, the Lagrange Principle is used to derive the optimal solution, which is then applied to embed the watermark. In other words, this study proposes an optimization-based formula for modifying the low-frequency amplitude. Finally, the performance of the proposed scheme is evaluated by PSNR and BER. Simulation results indicate that the proposed scheme achieves good image quality at high embedding capacity and is robust to JPEG compression.

The rest of this paper is organized as follows. Section 2 reviews some mathematical preliminaries. Section 3 rewrites the PSNR and the amplitude-quantization equation as a performance index in matrix form and a constraint with an embedding state, and proposes an optimization-based equation that connects them. The Lagrange Principle is then used to solve this optimization problem, and the optimal solution is applied to embed the watermark; in detection, the watermark can be extracted without the original image. Section 4 presents experiments that test the performance of the proposed scheme, and Section 5 draws conclusions.

2 Mathematical preliminaries

Before introducing the proposed optimization-based image watermarking, this section reviews the DWT, matrix operations, and the Lagrange Principle.

2.1 Discrete wavelet transform (DWT)

In the conventional discrete cosine transform or Fourier transform, sinusoids are used as basis functions. These transforms provide only frequency information; temporal information is lost in the transformation. Some applications require frequency and temporal information at the same time. For a musical score, for example, we want to know not only which notes (frequencies) to play but also when to play them. The fast Fourier transform (FFT) efficiently decomposes a signal with uniform resolution. It is suitable for analyzing wide-sense-stationary signals but not non-stationary ones. Unlike the Fourier transform or the FFT, wavelet transforms are based on small waves, called wavelets, and can provide both frequency and temporal information, even for non-stationary signals. The wavelet transform maps a function in the functional space \( L^2(\mathbb{R}) \) onto a scale-space plane. The wavelets are obtained from a single prototype function ψ(x), which is regulated by a scaling parameter and a shift parameter [22]. In any discretized wavelet transform, there is only a finite number of wavelet coefficients for each bounded rectangular region. Still, each coefficient requires the evaluation of an integral. To avoid this numerical complexity, one needs an auxiliary function, the basic scaling function φ(⋅). The basic scaling function and the wavelet basis function are as follows.

where t ∈ ℝ, n ∈ ℤ. From these two functions, one can construct two subspaces as follows.

where j and n are the dilation and translation parameters, respectively. One then requires that the sequence

form a multiresolution analysis of \( L^2(\mathbb{R}) \) and that the subspaces \( \cdots, W_1, W_0, W_{-1}, \cdots \) are the orthogonal differences of this sequence; that is, \( W_j \) is the orthogonal complement of \( V_j \) inside the subspace \( V_{j-1} \). The orthogonality relations then imply the existence of sequences \( h=\{h_n\}_{n\in\mathbb{Z}} \) and \( g=\{g_n\}_{n\in\mathbb{Z}} \) that satisfy the following identities:

where \( h=\{h_n\}_{n\in\mathbb{Z}} \) and \( g=\{g_n\}_{n\in\mathbb{Z}} \) are the low-pass and high-pass filter sequences, respectively [10, 19, 23].
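For reference, in the standard dyadic convention of Daubechies [10], which matches the nesting \( V_j\subset V_{j-1} \) described above and is assumed here to be the one intended, the scaled and translated functions, the subspaces, and the two-scale identities read as follows.

$$ \phi_{j,n}(t)=2^{-j/2}\,\phi\left(2^{-j}t-n\right),\qquad \psi_{j,n}(t)=2^{-j/2}\,\psi\left(2^{-j}t-n\right),\qquad j,n\in\mathbb{Z} $$

$$ V_j=\overline{\operatorname{span}}\left\{\phi_{j,n}\right\}_{n\in\mathbb{Z}},\qquad W_j=\overline{\operatorname{span}}\left\{\psi_{j,n}\right\}_{n\in\mathbb{Z}},\qquad \cdots\subset V_1\subset V_0\subset V_{-1}\subset\cdots,\qquad V_{j-1}=V_j\oplus W_j $$

$$ \phi(t)=\sqrt{2}\sum_{n\in\mathbb{Z}}h_n\,\phi(2t-n),\qquad \psi(t)=\sqrt{2}\sum_{n\in\mathbb{Z}}g_n\,\phi(2t-n),\qquad g_n=(-1)^n h_{1-n} $$

For the Haar wavelet, \( h_0=h_1=1/\sqrt{2} \), so \( g_0=1/\sqrt{2} \) and \( g_1=-1/\sqrt{2} \).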

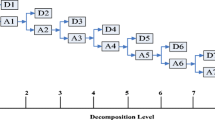

In this paper, we use the wavelet bases in (1) and (2) to transform the host image into the orthogonal DWT domain by four-level decomposition. The DWT can be implemented with a filter bank that provides perfect reconstruction. The DWT analyzes frequency locally in space and time and extracts multi-scale image details step by step. As the scale becomes smaller, each part is represented more accurately, and ultimately all image details can be localized accurately. Applying the DWT to an image produces high-frequency parts, middle-frequency parts, and a lowest-frequency part, which is a square coefficient matrix. Figure 1 shows the one-level decomposition of the 2D DWT.
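The filter-bank view can be made concrete with a minimal NumPy sketch of one level of the 2D DWT. It assumes the orthonormal Haar filters purely for illustration (the text does not fix the wavelet here), and the function names `haar_split` and `dwt2_haar` are hypothetical. Because the transform is orthonormal, the energy of the image equals the total energy of its four subbands, which is the property relied on when PSNR is expressed through coefficient changes.

```python
import numpy as np

def haar_split(x, axis):
    """One level of the orthonormal Haar analysis filter bank along `axis`.

    Returns (low, high); each has half the length of x along `axis`.
    """
    even = np.take(x, np.arange(0, x.shape[axis], 2), axis=axis)
    odd = np.take(x, np.arange(1, x.shape[axis], 2), axis=axis)
    low = (even + odd) / np.sqrt(2.0)    # low-pass  h = [1, 1] / sqrt(2)
    high = (even - odd) / np.sqrt(2.0)   # high-pass g = [1, -1] / sqrt(2)
    return low, high

def dwt2_haar(img):
    """One-level 2D Haar DWT of an image with even height and width.

    Naming used here: first letter = filter along rows (axis 1),
    second letter = filter along columns (axis 0). Texts differ on LH vs HL.
    """
    img = np.asarray(img, dtype=float)
    row_low, row_high = haar_split(img, axis=1)   # filter every row
    LL, LH = haar_split(row_low, axis=0)          # then filter every column
    HL, HH = haar_split(row_high, axis=0)
    return LL, LH, HL, HH

rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(8, 8)).astype(float)
subbands = dwt2_haar(img)
# Orthonormality: the total energy is preserved across the four subbands.
print(np.isclose(np.sum(img ** 2), sum(np.sum(b ** 2) for b in subbands)))  # True
```

Applying `dwt2_haar` again to `LL` gives the second-level subbands, such as the LH2 band used in Section 3.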

2.2 Mathematical definitions and theorems

To find the extremum of a matrix function, some optimization methods for matrix functions are introduced in [3, 6, 8, 17]. First, the differentiation rules for matrix functions are reviewed in Theorem 1 and Theorem 2.

Theorem 1.

If W is an n × n matrix, and \( \tilde{\mathbf{C}} \) is an n × 1 column vector, then

Theorem 2.

If \( \tilde{\mathbf{C}} \) is an n × 1 column vector, and C is an n × 1 constant vector, then
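Theorems 1 and 2 are standard matrix-calculus identities. In the form assumed here, with the derivative with respect to a column vector taken as the column vector of partial derivatives, they read as follows (W in this subsection is the n × n matrix of Theorem 1, not the 1 × k weighting matrix of Section 3).

$$ \frac{\partial}{\partial\tilde{\mathbf{C}}}\left(\tilde{\mathbf{C}}^T\mathbf{W}\tilde{\mathbf{C}}\right)=\left(\mathbf{W}+\mathbf{W}^T\right)\tilde{\mathbf{C}},\qquad\text{which reduces to } 2\mathbf{W}\tilde{\mathbf{C}}\text{ when }\mathbf{W}=\mathbf{W}^T $$

$$ \frac{\partial}{\partial\tilde{\mathbf{C}}}\left(\mathbf{C}^T\tilde{\mathbf{C}}\right)=\frac{\partial}{\partial\tilde{\mathbf{C}}}\left(\tilde{\mathbf{C}}^T\mathbf{C}\right)=\mathbf{C} $$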

In order to apply the Lagrange Principle, we have to introduce the gradient of a matrix function \( f\left(\tilde{\mathbf{C}}\right) \) as follows.

Definition 2.

Suppose that \( \tilde{\mathbf{C}}={\left[{\tilde{c}}_1,{\tilde{c}}_2,\cdot \cdot \cdot, {\tilde{c}}_n\right]}^T \) is an n × 1 matrix and \( f\left(\tilde{\mathbf{C}}\right) \) is a matrix function. Then the gradient of \( f\left(\tilde{\mathbf{C}}\right) \) is
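Definition 2 takes the gradient to be the column vector \( \nabla f=\left[\partial f/\partial\tilde{c}_1,\cdots,\partial f/\partial\tilde{c}_n\right]^T \). As a sanity check, the minimal sketch below approximates this gradient by central differences and compares it with the standard identities assumed for Theorems 1 and 2, \( \nabla(\tilde{\mathbf{C}}^T\mathbf{W}\tilde{\mathbf{C}})=(\mathbf{W}+\mathbf{W}^T)\tilde{\mathbf{C}} \) and \( \nabla(\mathbf{C}^T\tilde{\mathbf{C}})=\mathbf{C} \). The helper name `numerical_gradient` is hypothetical.

```python
import numpy as np

def numerical_gradient(f, c, eps=1e-6):
    """Central-difference approximation of the gradient in Definition 2:
    the column vector of partial derivatives df/dc_i."""
    c = np.asarray(c, dtype=float)
    grad = np.zeros_like(c)
    for i in range(c.size):
        step = np.zeros_like(c)
        step[i] = eps
        grad[i] = (f(c + step) - f(c - step)) / (2 * eps)
    return grad

rng = np.random.default_rng(1)
n = 4
W = rng.normal(size=(n, n))    # arbitrary, non-symmetric n x n matrix
C = rng.normal(size=n)         # constant vector
c_tilde = rng.normal(size=n)   # point at which the gradient is evaluated

# Theorem 1 (assumed form): gradient of c^T W c is (W + W^T) c
g1 = numerical_gradient(lambda c: c @ W @ c, c_tilde)
print(np.allclose(g1, (W + W.T) @ c_tilde, atol=1e-5))
# Theorem 2 (assumed form): gradient of C^T c is C
g2 = numerical_gradient(lambda c: C @ c, c_tilde)
print(np.allclose(g2, C, atol=1e-5))
```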

Now we consider the problem of minimizing (or maximizing) the matrix function \( f\left(\tilde{\mathbf{C}}\right) \) subject to a constraint \( g\left(\tilde{\mathbf{C}}\right)=0 \). This problem can be described as follows

In order to solve (11), Lagrange Principle is applied as follows.

Theorem 3.

Suppose that g is a continuously differentiable function of \( \tilde{\mathbf{C}} \) on a subset of the domain of a function f. Then if \( {\tilde{\mathbf{C}}}_0 \) minimizes (or maximizes) \( f\left(\tilde{\mathbf{C}}\right) \) subject to the constraint \( g\left(\tilde{\mathbf{C}}\right)=0 \), \( \nabla f\left({\tilde{\mathbf{C}}}_0\right) \) and \( \nabla g\left({\tilde{\mathbf{C}}}_0\right) \) are parallel. That is, if \( \nabla g\left({\tilde{\mathbf{C}}}_0\right)\ne 0 \), then there exists a scalar λ such that

Based on Theorem 3, if we let

then the original problem (11) becomes the unconstrained problem of finding an extremum of the matrix function \( J\left(\tilde{\mathbf{C}},\lambda \right) \). The necessary conditions for J to have an extremum are

and

Finally, we use Theorem 3 and the techniques in (13)-(15) to propose an optimization-based image watermarking scheme in the next section.
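In standard notation, and assuming the Lagrangian is formed by adding \( \lambda g \) to f, the constrained problem (11), the parallel-gradient condition of Theorem 3, the function J, and its necessary conditions read as follows. Adding rather than subtracting \( \lambda g \) only flips the sign of λ and does not change the stationary point.

$$ \min_{\tilde{\mathbf{C}}}\ f\left(\tilde{\mathbf{C}}\right)\quad\text{subject to}\quad g\left(\tilde{\mathbf{C}}\right)=0 $$

$$ \nabla f\left(\tilde{\mathbf{C}}_0\right)=\lambda\,\nabla g\left(\tilde{\mathbf{C}}_0\right) $$

$$ J\left(\tilde{\mathbf{C}},\lambda\right)=f\left(\tilde{\mathbf{C}}\right)+\lambda\,g\left(\tilde{\mathbf{C}}\right) $$

$$ \frac{\partial J}{\partial\tilde{\mathbf{C}}}=\nabla f\left(\tilde{\mathbf{C}}\right)+\lambda\,\nabla g\left(\tilde{\mathbf{C}}\right)=\mathbf{0},\qquad \frac{\partial J}{\partial\lambda}=g\left(\tilde{\mathbf{C}}\right)=0 $$

The first condition is Theorem 3 with λ replaced by −λ; the second simply recovers the constraint.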

3 Proposed watermarking technique

Since a tradeoff exists between image quality, measured by PSNR, and robustness, measured by BER, the scalar parameter λ is introduced to connect the performance index obtained from PSNR with the amplitude-quantization equation. The Lagrange Principle in Theorem 3 is then applied to find the optimal solution, which is used to embed the watermark. This section also introduces the extraction technique.

3.1 Proposed embedding technique

Before the proposed embedding technique is applied, the watermark bits \( B=\{b_i\} \) are randomly generated as a binary sequence, so each bit belongs to the set {0, 1}. The watermark is embedded into the coefficients of the DWT middle-frequency sub-band LH2. Unlike traditional single-coefficient quantization, the proposed technique quantizes the amplitude of k consecutive coefficients as follows.

- If bit \( b_i=1 \) is embedded into k consecutive coefficients \( \{|c_1|,|c_2|,\cdots,|c_k|\} \), the amplitude \( \sum_{i=1}^k|c_i| \) is modified to

$$ A_1=\left\lfloor \frac{\sum_{i=1}^k|c_i|}{S}\right\rfloor S+\frac{3}{4}S $$(16)

- If bit \( b_i=0 \) is embedded into k consecutive coefficients \( \{|c_1|,|c_2|,\cdots,|c_k|\} \), the amplitude \( \sum_{i=1}^k|c_i| \) is modified to

$$ A_0=\left\lfloor \frac{\sum_{i=1}^k|c_i|}{S}\right\rfloor S+\frac{1}{4}S $$(17)

where \( \{c_i\} \) are the LH2 coefficients of the DWT; ⌊⋅⌋ denotes the floor function; the number of consecutive coefficients k is adopted as the first secret key \( KY_1 \); and S is the quantization size (QS), which is adopted as the second secret key \( KY_2 \). To combine Eqs. (16) and (17) into a single equation, the k absolute values of the middle-frequency LH2 coefficients are collected into the vector

$$ \mathbf{C}_k=\left[|c_1|,|c_2|,\cdots,|c_k|\right]^T $$(18)

which will be used in the optimization procedure.
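The quantization rule in (16) and (17) moves the group amplitude to the point 3S/4 (bit 1) or S/4 (bit 0) of the cell \( [\lfloor A/S\rfloor S,\ \lfloor A/S\rfloor S+S) \) that it currently occupies. A minimal sketch follows; the function name `quantized_amplitude` and the example values are hypothetical.

```python
import math

def quantized_amplitude(coeffs, S, bit):
    """Target amplitude A_1 (bit 1, Eq. 16) or A_0 (bit 0, Eq. 17).

    coeffs: the k consecutive LH2 coefficients of one group; S: quantization size.
    """
    amplitude = sum(abs(c) for c in coeffs)      # sum_{i=1}^k |c_i|
    cell_start = math.floor(amplitude / S) * S   # floor(amplitude / S) * S
    return cell_start + (0.75 * S if bit == 1 else 0.25 * S)

# Hypothetical group of k = 3 coefficients with S = 15: amplitude 60 lies in [60, 75).
print(quantized_amplitude([10.0, -20.0, 30.0], 15, 1))  # 71.25
print(quantized_amplitude([10.0, -20.0, 30.0], 15, 0))  # 63.75
```

Whatever the current amplitude, the target stays in the same cell of width S, so the amplitude changes by at most 3S/4.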

Based on Eqs. (16), (17) and (18), the embedding can be rewritten as an equation that incorporates an embedding state, that is

$$ \mathbf{W}\tilde{\mathbf{C}}_k=\alpha A_1+\left(1-\alpha\right)A_0 $$

where \( \tilde{\mathbf{C}}_k \): \( \tilde{\mathbf{C}}_k=\left[|\tilde{c}_1|,|\tilde{c}_2|,\cdots,|\tilde{c}_k|\right]^T \) is the watermarked (unknown) wavelet-coefficient vector corresponding to \( \mathbf{C}_k \).

W: \( \mathbf{W}=\left[w_1\;w_2\;\cdots\;w_k\right] \) is a 1 × k weighting matrix whose scalar entries can be chosen freely by the encoder. To prevent any entry from becoming arbitrarily large, the sum of the entries is assumed, without loss of generality, to equal k. For example, \( \mathbf{W}=\left[0.7\;1.1\;\cdots\;0.8\right] \).

α: the embedding state. If bit 1 is embedded, α = 1; otherwise, α = 0.

To obtain the best image quality under the embedding constraint (20), we now discuss the calculation of PSNR and the corresponding optimization problem for modifying the amplitude. Since the DWT is implemented with orthogonal wavelet bases, PSNR can be rewritten in the following form.

where M and N are the height and width of the image, respectively. To optimize the quality of the watermarked image, Eq. (22) is rewritten as a performance index.

or

Since \( 255^2 MN \) is a constant, Eq. (24) can be rewritten in a simpler form as the performance index for the optimization.
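Assuming 8-bit images (peak value 255) and an orthonormal DWT, so that by Parseval's relation the pixel-domain squared error equals the squared change of the DWT coefficients, the chain from (22) to (25) can be written as below. Here I and Ĩ are symbols introduced for illustration, denoting the host and watermarked images, and each modified coefficient is assumed to keep its sign, so that a change in magnitude equals the change in the coefficient. Because the coefficient groups are disjoint, the total error is a sum of per-group terms, and maximizing PSNR amounts to minimizing each group's f independently.

$$ \mathrm{PSNR}=10\log_{10}\frac{255^2MN}{\sum_{x=1}^{M}\sum_{y=1}^{N}\left[\tilde{I}(x,y)-I(x,y)\right]^2}=10\log_{10}\frac{255^2MN}{\sum_{\text{groups}}\left(\tilde{\mathbf{C}}_k-\mathbf{C}_k\right)^T\left(\tilde{\mathbf{C}}_k-\mathbf{C}_k\right)} $$

$$ f\left(\tilde{\mathbf{C}}_k\right)=\left(\tilde{\mathbf{C}}_k-\mathbf{C}_k\right)^T\left(\tilde{\mathbf{C}}_k-\mathbf{C}_k\right) $$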

Based on the performance index \( f\left({\tilde{\mathbf{C}}}_k\right) \) in (25) and the constraint \( g\left({\tilde{\mathbf{C}}}_k\right) \) in (20), the optimization-based quantization problem is in the following form.

To embed the watermark B, we need to solve the optimization problem (26). By Theorem 3, we introduce a Lagrange multiplier λ to combine (26a) and (26b) into the following matrix function.

or

The necessary conditions for existence of the minimum of \( J\left({\tilde{\mathbf{C}}}_k,\lambda \right) \) are

Multiplying (29a) by W gives

Since \( \mathbf{W}{\tilde{\mathbf{C}}}_k=\alpha {A}_1+\left(1-\alpha \right){A}_0 \) from (29b), Eq. (30) is rewritten as

Hence, the optimal value of the scalar parameter λ is

Substituting (32) into (29a), the optimal modified coefficients are
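Assuming the performance index is \( f(\tilde{\mathbf{C}}_k)=(\tilde{\mathbf{C}}_k-\mathbf{C}_k)^T(\tilde{\mathbf{C}}_k-\mathbf{C}_k) \) and the constraint is \( g(\tilde{\mathbf{C}}_k)=\mathbf{W}\tilde{\mathbf{C}}_k-\alpha A_1-(1-\alpha)A_0=0 \), the steps from the Lagrangian to the optimal coefficients can be carried out as follows. The gradients follow from Theorems 1 and 2, and \( \mathbf{W}\mathbf{W}^T=\sum_{i=1}^k w_i^2 \) is a scalar because W is 1 × k.

$$ J\left(\tilde{\mathbf{C}}_k,\lambda\right)=\left(\tilde{\mathbf{C}}_k-\mathbf{C}_k\right)^T\left(\tilde{\mathbf{C}}_k-\mathbf{C}_k\right)+\lambda\left[\mathbf{W}\tilde{\mathbf{C}}_k-\alpha A_1-(1-\alpha)A_0\right] $$

$$ \frac{\partial J}{\partial\tilde{\mathbf{C}}_k}=2\left(\tilde{\mathbf{C}}_k-\mathbf{C}_k\right)+\lambda\mathbf{W}^T=\mathbf{0}\;\Longrightarrow\;\tilde{\mathbf{C}}_k=\mathbf{C}_k-\frac{\lambda}{2}\mathbf{W}^T,\qquad \frac{\partial J}{\partial\lambda}=\mathbf{W}\tilde{\mathbf{C}}_k-\alpha A_1-(1-\alpha)A_0=0 $$

$$ \alpha A_1+(1-\alpha)A_0=\mathbf{W}\mathbf{C}_k-\frac{\lambda}{2}\mathbf{W}\mathbf{W}^T\;\Longrightarrow\;\lambda=\frac{2\left[\mathbf{W}\mathbf{C}_k-\alpha A_1-(1-\alpha)A_0\right]}{\mathbf{W}\mathbf{W}^T} $$

$$ \tilde{\mathbf{C}}_k^{*}=\mathbf{C}_k+\frac{\alpha A_1+(1-\alpha)A_0-\mathbf{W}\mathbf{C}_k}{\mathbf{W}\mathbf{W}^T}\,\mathbf{W}^T $$

Since f is convex and the constraint is linear, this stationary point is the minimum. The change is the minimum-norm vector along \( \mathbf{W}^T \) that moves \( \mathbf{W}\tilde{\mathbf{C}}_k \) onto the target amplitude; with \( \mathbf{W}=[1\;\cdots\;1] \) it adds the same amount to every coefficient.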

In Eq. (33), α = 1 when bit 1 is embedded and α = 0 when bit 0 is embedded. The encoder can therefore choose the weighting matrix W freely, obtain the optimal modified coefficients \( \tilde{\mathbf{C}}_k^{*} \), and complete the embedding process. Figure 2 shows the proposed embedding process.
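A minimal NumPy sketch of embedding one bit into one group of k coefficients follows. It assumes that (33) has the minimum-norm form \( \tilde{\mathbf{C}}_k^{*}=\mathbf{C}_k+\mathbf{W}^T[\alpha A_1+(1-\alpha)A_0-\mathbf{W}\mathbf{C}_k]/(\mathbf{W}\mathbf{W}^T) \) obtained from the Lagrange conditions (29a) and (29b). The function names are hypothetical, the original signs are restored after the magnitudes are modified, and the case in which a modified magnitude would become negative is not handled.

```python
import numpy as np

def target_amplitude(amplitude, S, bit):
    """A_1 (bit 1, Eq. 16) or A_0 (bit 0, Eq. 17) for the cell containing `amplitude`."""
    return np.floor(amplitude / S) * S + (0.75 * S if bit == 1 else 0.25 * S)

def embed_group(coeffs, W, S, bit):
    """Embed one bit into k consecutive DWT coefficients (hypothetical helper).

    Assumed closed form (33): C~ = C + W^T (A - W C) / (W W^T), where
    C = [|c_1|, ..., |c_k|]^T and A = alpha*A_1 + (1 - alpha)*A_0 with alpha = bit.
    """
    coeffs = np.asarray(coeffs, dtype=float)
    W = np.asarray(W, dtype=float)
    C = np.abs(coeffs)
    A = target_amplitude(C.sum(), S, bit)      # amplitude from Eq. (16) or (17)
    C_new = C + W * (A - W @ C) / (W @ W)      # minimum-norm change with W C~ = A
    if np.any(C_new < 0):
        raise ValueError("a modified magnitude would be negative; not handled in this sketch")
    signs = np.where(coeffs < 0, -1.0, 1.0)    # keep each coefficient's original sign
    return signs * C_new

# Hypothetical group with k = 3, S = 15, and equal weights W = [1, 1, 1]
marked = embed_group([10.0, -20.0, 30.0], [1.0, 1.0, 1.0], 15, bit=1)
print(marked)
```

Because W is a 1 × k row vector, \( \mathbf{W}\mathbf{W}^T \) is a scalar, so the update needs no matrix inversion; with equal weights the amplitude change is split evenly across the k coefficients.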

To illustrate the embedding process in detail, this study takes k = 3 as an example. Every three consecutive coefficients in DWT level four are grouped into the vector \( \mathbf{C}_3=\left[|c_1|,|c_2|,|c_3|\right]^T \). If the weighting matrix W is

then the embedding process is

Substituting \( \mathbf{C}_3 \) into (33) yields the optimal coefficient vector \( \tilde{\mathbf{C}}_3^{*}=\left[|\tilde{c}_1^{*}|,|\tilde{c}_2^{*}|,|\tilde{c}_3^{*}|\right]^T \).
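As a worked illustration with hypothetical values (not taken from the paper), let S = 15, embed bit 1 (α = 1), and take \( \mathbf{C}_3=[10\;20\;30]^T \) with \( \mathbf{W}=[0.9\;1.2\;0.9] \), whose entries sum to k = 3. Assuming the closed form \( \tilde{\mathbf{C}}_3^{*}=\mathbf{C}_3+\mathbf{W}^T\left(A_1-\mathbf{W}\mathbf{C}_3\right)/\left(\mathbf{W}\mathbf{W}^T\right) \):

$$ A_1=\left\lfloor\frac{60}{15}\right\rfloor 15+\frac{3}{4}\cdot 15=71.25,\qquad \mathbf{W}\mathbf{C}_3=60,\qquad \mathbf{W}\mathbf{W}^T=3.06 $$

$$ \tilde{\mathbf{C}}_3^{*}=\begin{bmatrix}10\\20\\30\end{bmatrix}+\frac{71.25-60}{3.06}\begin{bmatrix}0.9\\1.2\\0.9\end{bmatrix}\approx\begin{bmatrix}13.3088\\24.4118\\33.3088\end{bmatrix} $$

The resulting amplitude \( \sum_i|\tilde{c}_i^{*}|\approx 71.0294 \) leaves a remainder of about 11.03 modulo 15, which is at least S/2 = 7.5, so the extraction rule (36) returns bit 1.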

3.2 Extraction technique

Because the watermark is carried by quantization, the extraction process is blind: the original image is not needed. To extract the hidden watermark, the decoder groups every k (the first secret key \( KY_1 \)) consecutive coefficients into \( \tilde{\mathbf{C}}_k^{*}=\left\{|\tilde{c}_1^{*}|,|\tilde{c}_2^{*}|,\cdots,|\tilde{c}_k^{*}|\right\} \), where \( \tilde{c}_i^{*} \) denotes an optimized watermarked coefficient. The decoder then uses the second secret key \( KY_2 \) to extract the watermark with the following rules:

- If the sum of the elements of \( \tilde{\mathbf{C}}_k^{*}=\left\{|\tilde{c}_1^{*}|,|\tilde{c}_2^{*}|,\cdots,|\tilde{c}_k^{*}|\right\} \) satisfies

$$ \sum_{i=1}^k\left|\tilde{c}_i^{*}\right|-\left\lfloor\frac{\sum_{i=1}^k\left|\tilde{c}_i^{*}\right|}{S}\right\rfloor S\ge\frac{S}{2}, $$(36)

then the extracted value is \( \hat{b}_i=1 \).

- If the sum of the elements of \( \tilde{\mathbf{C}}_k^{*}=\left\{|\tilde{c}_1^{*}|,|\tilde{c}_2^{*}|,\cdots,|\tilde{c}_k^{*}|\right\} \) satisfies

$$ \sum_{i=1}^k\left|\tilde{c}_i^{*}\right|-\left\lfloor\frac{\sum_{i=1}^k\left|\tilde{c}_i^{*}\right|}{S}\right\rfloor S<\frac{S}{2}, $$(37)

then the extracted value is \( \hat{b}_i=0 \).

By repeating rules (36) and (37), all hidden watermark bits can be extracted as \( \hat{B}=\{\hat{b}_i\} \). The detailed extraction process is shown in Fig. 3.
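Rules (36) and (37) amount to comparing the remainder of the group amplitude modulo S with S/2. A minimal sketch, with hypothetical function names, that groups the received coefficients using the key k and decodes one bit per complete group:

```python
import math

def extract_bit(marked_coeffs, S):
    """Decode one bit from k consecutive watermarked coefficients, rules (36)-(37)."""
    amplitude = sum(abs(c) for c in marked_coeffs)
    remainder = amplitude - math.floor(amplitude / S) * S
    return 1 if remainder >= S / 2 else 0

def extract_watermark(coeffs, k, S):
    """Group the coefficients into consecutive blocks of k (key KY_1) and
    decode one bit per complete block using S (key KY_2)."""
    n_groups = len(coeffs) // k
    return [extract_bit(coeffs[i * k:(i + 1) * k], S) for i in range(n_groups)]

# Two hypothetical groups (k = 3, S = 15) with amplitudes 71.25 and 63.75
print(extract_watermark([13.75, -23.75, 33.75, 11.25, -21.25, 31.25], 3, 15))  # [1, 0]
```

The reconstruction points S/4 and 3S/4 each lie S/4 away from the decision threshold S/2 and from the cell boundaries, so any perturbation of the group amplitude smaller than S/4 in magnitude leaves the decoded bit unchanged.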

4 Experimental results

This section describes experiments that evaluate the performance of the proposed image watermarking method. As examples of the weighting matrix, this study considers \( \mathbf{W}_1=\left[1\;1\;1\;1\right] \) and \( \mathbf{W}_2=\left[0.7\;1.2\;1.3\;0.8\right] \). In the experiments, 100 images of size 512 × 512, including Lena, Jet, Peppers, and Cameraman, are used [13, 14]. Each host image is decomposed into three levels using the DWT, and the watermark is embedded into the middle-frequency LH2 coefficients. Because the Lagrange Principle yields the optimal quantization of the LH2 amplitude, the PSNR can exceed 40 dB at high embedding capacity when all entries of the weighting matrix are one. Table 1 presents the average PSNR and embedding capacity under different numbers of consecutive coefficients (NCC), quantization sizes (QS), and weighting matrices. Figure 3 presents the original images, and Figs. 4, 5, 6 and 7 display the watermarked images for different NCC, QS, and weighting matrices.

To illustrate how adjusting the weights affects PSNR, Figs. 8 and 9 show the relationship between PSNR and the weights for k = 4, S = 60, and a weighting matrix \( \mathbf{W}=\left[w_1\;w_2\;w_3\;w_4\right] \) with \( w_1+w_2=2 \), \( w_3=1 \), and \( w_4=1 \). The PSNR drops as \( w_2 \) decreases and is maximized at \( w_2=1 \), which corresponds to \( w_1=1 \). In other words, large variation among the weighting coefficients reduces PSNR.

Following the embedding process, several attacks are applied to the watermarked image to test the robustness of the embedded watermark. Robustness is measured using the bit error ratio (BER); see also Fig. 10.

$$ \mathrm{BER}=\frac{B_{\mathrm{error}}}{B_{\mathrm{total}}}\times 100\,\% $$

where \( B_{\mathrm{error}} \) and \( B_{\mathrm{total}} \) denote the number of error bits and the total number of bits, respectively. Because it also relies on quantization, we compare our technique with the one proposed by Lin et al. [18]. We also compare it with the traditional single-coefficient quantization index modulation method, i.e., k = 1 and S = 15. The robustness tests support the following conclusions.

(1) Additive noise: Table 2 presents the experimental results of adding Gaussian noise. The table also indicates that different parameters k and S and different weighting matrices yield similar BER. The method of Lin et al. [18] is slightly less robust than ours.

Table 2 Average BER after Gaussian noise

(2) JPEG compression: As shown in Table 3, the watermarked image is compressed with JPEG at different quality factors. The proposed method is robust against this compression and is much more robust than the method of Lin et al. [18]. The table also shows that the weighting matrices \( \mathbf{W}_1 \) and \( \mathbf{W}_2 \) yield similar BER, whereas BER decreases rapidly as k and S increase.

Table 3 Average BER after JPEG compression

(3) Median filtering: Table 4 presents the effect of a circular median filter with radii 3 and 4. The results indicate that the proposed method outperforms the method of Lin et al. [18], and that different parameters k and S and different weighting matrices yield similar BER.

Table 4 Average BER after median filtering

(4) Rotation: Rotation attacks with different angles are applied to the watermarked image. The results in Table 5 show that our method is much more robust than the method of Lin et al. [18], and that different parameters k and S and different weighting matrices yield similar BER.

Table 5 Average BER after rotation

(5) Scaling: Scaling attacks with different factors are applied to the watermarked image. Table 6 shows the experimental results: the BER is around 38 %. The proposed method still performs better than the method of Lin et al. [18], and different parameters k and S and different weighting matrices yield similar BER.

Table 6 Average BER after scaling
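The BER measurement can be reproduced in miniature. The sketch below is a toy rather than the paper's experimental setup: it uses synthetic stand-in coefficients instead of real LH2 subbands, equal weights W = [1, …, 1] with the assumed closed form for (33), and additive white Gaussian noise applied directly to the coefficients (for an orthonormal DWT, white Gaussian pixel noise maps to white Gaussian coefficient noise of the same variance). All function names are hypothetical, and no printed value should be read as a result from the paper.

```python
import numpy as np

def embed(coeffs, bits, k, S):
    """Embed one bit per group of k consecutive coefficients.

    Uses Eqs. (16)-(17) for the target amplitude and the assumed closed form (33)
    with equal weights W = [1, ..., 1], which spreads the change evenly.
    """
    out = np.asarray(coeffs, dtype=float).copy()
    for i, bit in enumerate(bits):
        group = out[i * k:(i + 1) * k]
        mags = np.abs(group)
        amp = mags.sum()
        target = np.floor(amp / S) * S + (0.75 * S if bit else 0.25 * S)
        signs = np.where(group < 0, -1.0, 1.0)
        out[i * k:(i + 1) * k] = signs * (mags + (target - amp) / k)
    return out

def extract(coeffs, n_bits, k, S):
    """Blind extraction, rules (36)-(37): remainder of the amplitude mod S vs S/2."""
    groups = np.abs(np.asarray(coeffs, dtype=float)[:n_bits * k]).reshape(n_bits, k)
    return (np.mod(groups.sum(axis=1), S) >= S / 2).astype(int)

def ber(original_bits, extracted_bits):
    """Bit error ratio B_error / B_total as a fraction (multiply by 100 for percent)."""
    original_bits = np.asarray(original_bits)
    extracted_bits = np.asarray(extracted_bits)
    return np.count_nonzero(original_bits != extracted_bits) / original_bits.size

# Synthetic stand-in for LH2 coefficients (hypothetical values, not real image data).
rng = np.random.default_rng(0)
k, S, n_bits = 3, 15.0, 1000
coeffs = rng.choice([-1.0, 1.0], size=n_bits * k) * rng.uniform(20.0, 100.0, size=n_bits * k)
bits = rng.integers(0, 2, size=n_bits)
marked = embed(coeffs, bits, k, S)

# Additive white Gaussian noise applied directly to the coefficients.
for sigma in (0.0, 1.0, 3.0):
    noisy = marked + rng.normal(0.0, sigma, size=marked.shape)
    print(f"sigma={sigma}: BER={100 * ber(bits, extract(noisy, n_bits, k, S)):.1f} %")
```

Drawing magnitudes from [20, 100] keeps every modified magnitude positive, since with S = 15 and k = 3 each coefficient changes by at most 3S/(4k) = 3.75.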

This study uses the Lagrange Principle to optimize the tradeoff efficiently, since only differentiation is needed to obtain the optimal solution in closed form. The experimental results in Tables 1, 2, 3, 4, 5 and 6 indicate that the proposed method achieves high PSNR and better robustness than the method of Lin et al. [18].

5 Conclusion

This paper presents an optimization-based amplitude-quantization technique for image watermarking. To enhance robustness, the watermark is embedded in the middle-frequency LH2 coefficients of the DWT. From an equation that connects the watermarking performance index with the amplitude-quantization constraint, we obtained an optimization-based formula for image watermarking. The experimental results show that the proposed method achieves high PSNR and embedding capacity. In the proposed formula, the weighting matrix W affects performance; for example, large variation among the weighting coefficients reduces PSNR. In future work, we will study how to choose W to improve performance.

References

Alghoniemy M, Tewfik AH (1999) Progressive quantized projection watermarking scheme. In: Proc. 7th ACM Int. Multimedia Conf., Orlando, FL, Nov. 1999, pp 295–298

Alghoniemy M, Tewfik AH (2000) Geometric distortion correction in image watermarking. In: Proc. SPIE Security and Watermarking of Multimedia Contents II, vol 3971, San Jose, CA, Jan. 2000, pp 82–89

Anderson B, Moore JB (1990) Optimal control: linear quadratic methods. Prentice-Hall

Bao P, Ma X (2005) Image adaptive watermarking using wavelet domain singular value decomposition. IEEE Trans Circ Syst Video Technol 15(1):96–102

Chae JJ, Manjunath BS (1998) A robust embedded data from wavelet coefficients. Proc SPIE: Storage and Retrieval for Image and Video Databases 3312:308–317

Chen ST, Huang HN, Chen CC, Tseng KK, Tu SY (2013) Adaptive audio watermarking via the optimization point of view on wavelet-based entropy. Digit Signal Process, pp 971–980

Chen B, Wornell GW (2001) Quantization index modulation: a class of provably good methods for digital watermarking and information embedding. IEEE Trans Inf Theory 47(4):1423–1443

Chen ST, Wu GD, Huang HN (2010) Wavelet-domain audio watermarking scheme using optimization-based quantization. IET Signal Process 4(6):720–727

Chung KL, Yang WN, Huang YH, Wu ST, Hsu YC (2007) On SVD-based watermarking algorithm. Appl Math Comput 188:54–57

Daubechies I (1988) Orthonormal bases of compactly supported wavelets. Commun Pure Appl Math 41:909–996

Bhatnagar G, Raman B (2009) A new robust reference watermarking scheme based on DWT-SVD. Comput Stand Interfaces, pp 1–12

Hartung F, Kutter M (1999) Multimedia watermarking techniques. Proc IEEE 87(7)

http://www.imageprocessingplace.com/root_files_V3/image_databases.htm

http://www.petitcolas.net/watermarking/image_database/index.html

Kumsawat P, Attakitmongcol K, Srikaew A (2005) A new approach for optimization in image watermarking using genetic algorithms. IEEE Trans Signal Process 53(12):4707–4719

Lai CC, Tsai CC (2010) Digital image watermarking using discrete wavelet transform and singular value decomposition. IEEE Trans Instrum Meas 59(11):3060–3063

Lewis FL (1986) Optimal control. John Wiley and Sons, New York

Lin LC, Lin YB, Wang CM (2009) Hiding data in spatial domain images with distortion tolerance. Comput Stand Interfaces 31:458–464

Liu Z, Abbas A, Jing BY, Gao X (2012) WaVPeak: picking NMR peaks through wavelet-based smoothing and volume-based filtering. Bioinformatics 28(7):914–920

Liu R, Tan T (2002) An SVD-based watermarking scheme for protecting rightful ownership. IEEE Trans Multimed 4(1):121–128

Maity SP, Kundu MK (2004) A blind CDMA image watermarking scheme in wavelet domain. In: Proc. IEEE ICIP 2004, Singapore, Oct. 2004, pp 2633–2636

Mallat S (1989) A theory for multiresolution signal decomposition: the wavelet representation. IEEE Trans Pattern Anal Mach Intell 11:674–693

Rao RM, Bopardikar AS (2000) Wavelet transforms: introduction to theory and applications. Addison-Wesley

Ó Ruanaidh JJK, Csurka G (1999) A Bayesian approach to spread spectrum watermark detection and secure copyright protection for digital image libraries. In: Proc. CVPR'99, Fort Collins, CO, vol 1, pp 207–212

Sharkas M, Youssef B, Hamdy N (2006) An adaptive image-watermarking algorithm employing the DWT. In: Proc. 23rd National Radio Science Conference, March 2006, pp 14–16

Su F, Ma G, Wu J (2003) A digital watermarking algorithm with robust for image cropping. J Electron Inf Technol 25(3):295–299

Xiao L, Wu H, Wei Z (2003) Multiple digital watermarks embedding in wavelet domain with multiple-based number. J Comput Aided Des Comput Graph 15(2):200–204

Yingkun H, Xiangcai Z, Lili Z, Mingxia L. Image with less information watermarking algorithm based on DWT. In: Eighth ACIS International Conference on Software Engineering, Artificial Intelligence, Networking, and Parallel/Distributed Computing


About this article

Cite this article

Chen, ST., Huang, HN., Kung, WM. et al. Optimization-based image watermarking with integrated quantization embedding in the wavelet-domain. Multimed Tools Appl 75, 5493–5511 (2016). https://doi.org/10.1007/s11042-015-2522-8
