Abstract

Computational load of motion estimation in advanced video coding (AVC) standard is significantly high and its more true for HDTV sequences. In this paper, video processing algorithm is mapped onto a learning method to improve machine to machine (M2M) architecture, namely, the parallel reconfigurable computing (PRC) architecture, which consists of multiple units, First, we construct a directed acyclic graph (DAG) to represent the video coding algorithms comprising motion estimation. In the future trillions of devices are connected (M2M) together to provide services and that time power management would be a challenge. Computation aware scheme for different machine is reduced by dynamically scheduling usage of multi-core processing environment for video sequence depending up complexity of the video. And different video coding algorithm is selected depending upon the nature of the video. Simulation results show the effectiveness of the proposed method.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

For the past 20 years several video coding standards such as MPEG-1, MPEG-2/4, H.263, H.264 [6], have been playing a significant role in digital media revolution. The recent advancement in H.264 significantly increases coding efficiency and processor computation power with inclusion of variable block motion estimation and many other coding features [23], and that is very true for HDVT sequences and there is a need to efficiently process the video. Recently, the computation-aware (CA) concept is getting attention of video processing researchers. In software implementations, processors may have to support video coding of different frame rates, frame sizes, and search ranges. In hardware implementations, even if the frame rate, frame size, and search range have been clearly determined, the computation resource (e.g. operating frequency) may still be adjusted according to the battery power for portable devices.

The ability to enable and disable components, as well as tuning their performance to the workload (e.g., user’s requests), is important in achieving energy efficient utilization. In this work, we will present new approaches for lowering energy consumption in both system design and utilization. The Policy presented in this thesis has been experimented using an event driven simulator, which demonstrated the effectiveness in power savings with less impact on performance or reliability [8].

The fundamental premise for the applicability of power management schemes is that systems, or system components, experience non-uniform workloads during normal operation time [4, 19]. Non-uniform workloads are common in communication networks and in almost any interactive system.

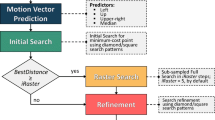

Dynamic power management (DPM) techniques achieve energy efficient utilization of systems by selectively placing system components into low-power states when they are idle [1]. As illustrated in Fig. 1. The goal of CA BMA [Block Matching Algorithm]s is to find the best block matching results in a computation-limited and computation-variant environment. The authors of [21] are pioneers of CA BMAs. They contributed a novel scheme. But it was intended for software and not feasible in hardware [3, 22].

With M2M, machines could not only retrieve information from other devices but also, to some extent, take action based on the information. Sensors that gather the information that some M2M systems transmit are becoming more widely used and thus are driving demand for the technology especially in power management area. The vision of an M2M and Internet of Things built from smart devices raises several important research questions in terms of system architecture, design and development, and human involvement [7]. The lower cost of sensors and initiatives for integrating them into larger systems are also increasing the approach’s popularity. The biggest new trend is that vendors are expanding M2M into wireless technology, using radio chips or modules they can attach to almost any device or machine. Thus, M2M is gearing up for exponential

Collocated smart objects with local rule databases (1–5) form an ad hoc reasoning system in which logical queries are sent from object to object and results are passed back along the inference chain(refer Fig. 2). In this example, smart object 1 contains one rule, A if B; to evaluate B, it sends a query to objects 2 and 3, which in turn asks object 4. Reasoning chains can be limited to physical areas around the originating object. In this example, smart object 5 is beyond the distance limit set by smart object 1 (the circle indicates the maximum distance from object 1).

Peer- to peer reasoning with smart object [7]

2 Mobile multimedia from M2M context

In order to evaluate the performance of video compres-sion coding, it is necessary to define a measure to compare the original video and the video after compressed. Most video compression systems are designed to minimize the mean square error (MSE) between two video sequences Ψ1 and Ψ2, which is defined as

where N is the total number of frames in either video sequences.

Instead of the MSE, the peak-signal-to-noise ratio (PSNR) in decibel (dB) is more often used as a quality measure in video coding, which is defined as

It is worth noting that one should compute the MSE between corresponding frames, average the resulting MSE values over all frames, and finally convert the MSE value to PSNR.

If you are expected to be late, our mobile device inform our audience automatically telling them approximately how long they have to wait. Moreover, our planner can look up the traffic condition in advance and suggests what time you should leave. Sensors can monitor the traffic conditions along the routes to your destination so that you are able to select the best route to get to the venue on time [2].

2.1 Predictive coding

However, the performance is not efficient to compress the fast changing video, so other methods to remove the spatiotemporal redundancy are proposed for video compression. The most famous method is the block matching algorithm, or motion estimation and motion compensation method. The block matching algorithm divides the current frame and the previous frame into several macroblocks, comparing the blocks in the two frames and trying to search for the best matched pairs for each block [12].

The dissimilarity D(s,t) (sometimes referred to as error, distortion, or distance) between two images Ψn andΨn-1 is defined as follows

where M(u,v) is a metric that measure the dissimilarity between the two arguments u and v.

There are several types of matching criteria and two most frequently used is MSE and MAD, which is defined as follows:

-

Mean square error (MSE):

$$ M\left(u,v\right)={\left(u-v\right)}^2 $$(4) -

Mean absolute difference (MAD):

$$ M\left(u,v\right)=\left|u-v\right| $$(5)

A study based on experimental works reported that the matching criterion does not significantly affect the search. Hence, the MAD is preferred due to its simplicity in implementation [12].

These two measures (MSE/MAD) give us a clear idea about the video frame. And MSE/MAD of the previous frame gives more information about the current frame and its complexity. If the current frame of a video sequence is fast moving (complex) then more processor core are allotted to process else only one processor core is assigned.

2.2 Background

From our previous work, even though Deterministic Markov Non-Stationary Policies (DMNSP) gives best power optimization, we can find out other policies than DMNSP of DPM also give best power optimization with respect to different arrival of request in embedded system model [17, 18]. For example, when the requests of requester are coming in long time interval, the greedy policy can give best power optimization compare with others and our DMNSP policies. When the requests are coming in continuously without inter arrival time worst policy (always on) can give best result. To allow further improvement in the battery life of portable devices, one new energy reduction schemes will be needed which ought to predict a best and suitable policy amidst existing policy online. This call for the use of intelligent controllers which can learn itself to predict a best policy that balance workload against power.

The number of levels in a DAG defined as the “depth” of a DAG. Maximizing the speed of a DAG is equivalent to minimizing the depth of the DAG. Thus partitioning and optimization methods for the fine grained DRL/M hardware architecture will be considered. For this DRL architecture, consideration of parallel processing in temporal partitioning may improve execution time. Many designers are interested in exploiting the inherent parallelism often present in large signal processing applications, so the trade-off between speed and silicon area with various levels of parallelism becomes an important design issue. Hence, a partitioning technique is developed with this parallel processing consideration in mind. The ability to find a minimum depth solution with duplication is necessary. Thus, a graph is partitioned into sub-graphs with a minimum depth solution. Each sub-graph consists of a set of clustering sub-graphs. Each sub-graph is selected by the greedy method, one at a time. This method will be shown to find the minimum depth partitioning of an application.

2.3 Complexity analysis

The whole partitioning procedure has a low time complexity. Before finding the MFC, all the nodes are processed by topological sorting. Hence during portioning, the nodes are visited in topological order. The time complexity of visiting the nodes is O(V.logV) where V is the number of nodes in the given graph. The task of finding each MFC includes calculating the area, sorting the area and labeling nodes. Each floor cone area is calculated by the depth first search (DFS) methodology. Hence, the time complexity of calculating the area is O(V.logV). In sorting the area, the number of floor cones is no more than the number of nodes V. The time complexity is O(V). When labeling nodes, a node is visited once. The complexity of labeling nodes is O(V). In the MFC-processing, to apply FFD the number of MFC is no more than the number of nodes V. The time complexity is O(V.logV). The minimum-depth partitioning solution selects k-MMG’s in each greedy method iteration. Therefore, depth determining takes O(V2.logV) time. In conclusion, the total time complexity is bounded by O(V2.logV).

The reference pixel block is generated by displacement from the current block’s location in the reference frame. The displacement is provided by the Motion Vector (MV). MV consists of is a pair (x, y) of horizontal and vertical displacement values. There are various criteria available for calculating block matching. The popular criteria of sum of absolute difference (SAD) is listed bellow

where c ij is a sample of the template macroblock and r ij is a sample of the candidate block. The search range(SR) is 16 × 16, and macroblock size is 16 × 16. Figure 3 illustrates the SAD calculation. Current and reference block candidate are represented by c and r.

As it is required to search the whole search range to find the correct MV. First say, the search starts in x direction, Fig. 3 shows the DFG of the macroblock (MB). In this graph, there are 256 (16 × 16) inputs and 1 output. However, in the HDVT there are 1920 × 1080/16 = 8100 MBs.

3 Mobile multimedia for intelligent M2M

There are also computing requirements that need to be taken into account when it comes to SDTV and HDTV, assuming we want to encode 720 p at 30 frames per second, this would require to handle 1,280 × 720 pixels per frame which gives us 27,648,000 pixels each second to process. That’s over 27 million pixels to encode and another 27 million pixels to decode. High definition is usually done using the H.264 standard (in order to lower the bandwidth required while keeping high quality) and H.264 is quite complex to compute [13].

3.1 Slice partition for HDTV

Advanced video encoding techniques take each frame, split it into smaller section called slice and then analyze and encode each macro block [15]. In other words, multi-core processors can be utilized in a fairly simple way, we split the pictures into regions and encode the regions in parallel. This is not trivial because sometimes the actual processing of a macro block is dependent on previous images or its neighbor macro blocks (especially for motion estimation algorithms), but other than that, it is quite straightforward. Each core processes each slice, we use a scheduling algorithm to switch between core when video is fast and smooth [11, 14]. For illustration we use Fig. 4 (Irene-sign language sequence), though slice are not exact rectangular split.

3.2 Requester model

A special entity called “requester” that generates workloads including IO requests and computation needs. Request modeling is one essential part of power management because policies predict future workloads based on their requester models. We consider two requester models for designing policies: single requester, multiple requesters. These models are increasingly complex and close to the programs running on realistic interactive systems like a laptop computer.

Figure 5 depicts the concept of the single-request model. The requester generates requests for the device; meanwhile, the power manager observes the requests. Based on this observation, the power manager issues commands to change the power states of the device. Some policies explicitly use this model in determining their rules to change power states [5, 20]; some other policies implicitly assume a single requester [10].

3.3 Reinforcement learning (RL) for M2M systems

Figure 6 shows how the RL agent interacts with the process. Nearly all RL methods currently in use are based on the Temporal Differences (TD) technique [16]. The fundamental idea behind it is prediction learning: when the agent receives reinforcement, it must somehow propagate it backwards in time so that states leading to that condition and formerly visited may be associated with a prediction of future consequences. This is based on an important assumption on the process’ dynamics, called the Markov condition [9]: the present observation must be perfectly a conditional probability on the immediate past observation and input action. In practical terms, this means that the agent’s sensors must be good enough to produce correct and unambiguous observations of the process states.

Power consumption of IBM Hard Disk Drive under different DPM polices are shown in Figs. 7, 8, and 9. In Tables 1, 2, and 3 Columns 2 to 4 respectively show the power consumption implemented by general purpose processor (GPP), parallel processing elements without the local memory for various DPM scheme.

4 Experimental results

Our proposed algorithm can compute a theoretically unlimited number of parallel FPGA modules (DRPPU) but, for reasons of simple comparison for core to core communication, we perform simulations for parallel arrays from one to 5 DRPPU and for DRPPU areas (A DRPPU ) from 1,536, 2,304, 2,688, 4,992, 6,144, 6,656 to 9,280 CLB. Thus we are demonstrating the ability of our algorithm to help design massively parallel architecture. In fact, FPGA is intrinsically capable of such function but application up to the present time has been largely linear, due to lack of design tools and the habitual persistence of traditional thinking.

On the other hand as regards to dynamic power management policies are concern, all the policies suggested so far [9] have either under prediction or over prediction by which they pay performance or power penalty. Our policy makes sure that server is ON, when there is an event in the Service Requester and Service Queue. Which means that under prediction or over prediction will never occur. Performance penalty will never occur by the proposed scheme.

5 Conclusion

The addition of parallel processing techniques to reconfigurable computing has the potential to improve DSP applications. Therefore, multiple processing units arranged according to traditional parallel processing techniques are being applied for high computation and data intensive applications such as HMM. This paper has presented a minimum-depth partitioning algorithm for parallel reconfigurable computing. It is shown that application speed can be improved by increasing parallelism of the parallel DRC units. The resulting high-parallelism design has somewhat higher total chip area because of redundancy between parallel units. The proposed algorithm can accept arbitrary chip area constraints or maximum parallelism constraint and then optimize for speed

Good predictors should minimize the number of mispredictions. We call over prediction (under prediction) a predicted idle period longer (shorter) than the actual one. Over predictions give rise to a performance penalty, while under predictions imply power waste but no performance penalty. To represent the quality of a predictor we define two figures: safety that is the complement of the risk of making over predictions, and efficiency, that is the complement of the risk of making under predictions.

All the policies suggested so far have either under prediction or over prediction by which they pay performance or power penalty. Our policy makes sure that server is ON, when there is an event in the Service Requester and Service Queue. Which means that under prediction or over prediction will never occur. Performance penalty will never occur by our policy.

References

Benini L, Paleologo G, Bogliolo A, De Micheli G (1999) Policy optimization for dynamic power management. IEEE Trans Comput Aided Des 18(6):813–833

Chen Y-K (2012) Challenges and opportunities of internet of things. ASP-DAC, 2012, 17th Asia-South Pacific Conference Proceedings, Page 383–388, Jan 30th–Feb 2nd 2012

Chen LF, Lai YK (2004) VLSI architecture of the reconfigurable computing engine for digital signal processing applications. IEEE Circuits and Systems Conference, ISCAS ’04. pp 937–40

Chung E, Benini L, De Micheli G (1999) Dynamic power management for nonstationary service requests. Design, Automation and Test in Europe, pp 77–81

Johnson RA (2001) Probability and statistics for engineers. Prentice hall of India

Joint Video Team of ITU-T and ISODEC JTC I (2003) Draft ITU-T recommendation and final draft international standard of joint video specification. (ITU-T Kec. H.264 ISO/IEC 14496.10 AVC) JVT of ISO/IEC MPEG and ITU-T VCEG , JVT – GO05

Kortuem G, Kawar F, Fitton D, Sundramoorty V (2010) Smart object as building blocks for the internet of things. IEEE Internet Comput 44–51

Lu Y, Chung E, Simuníc T, Benini L, De Micheli G (2000) Quantitative comparison of PM algorithms. Design, Automation and Test in Europe, pp 20–26

Lu Y-H, De Micheli G (2001) Comparing system-level power management policies. Stanford University, IEEE

Maestre R, Kurdahi FJ, Fernández M, Hermida R, Bagherzadeh N, Singh H (2001) Kernel scheduling techniques for efficient solution space exploration in reconfigurable computing. Special issue on modern methods and tools in digital system design. J Syst Archit 47:277–292

Paul A (2013) High performance for adaptive deblocking filter in H.264/AVC system. IETE Tech Rev

Paul A, Bharanitharan K, Wu J (2013) Algorithm and architecture for adaptive motion estimation in video processing. IETE Tech Rev 30(1):24–30

Paul A, Chen B-W, Bharanitharan K, Wang J-F (2013) Video search and indexing with reinforcement agent for interactive multimedia services. ACM Trans Embed Comput Syst 12(2)

Paul A, Jiang YC, Wang JF, Yang JF (2012) Parallel reconfigurable computing based mapping algorithm for motion estimation in advanced video coding. ACM Trans Embed Comput Syst 11(S2)

Paul A, Wu J, Yang J-F, Jeong J (2011) Gradient-based edge detection for motion estimation in H.264/AVC. IET Image Process 323–327

Qiu Q, Pedram M (1999) Dynamic power management based on continuous-time Markov decision processes. Design Automation Conference

Schmit H et al (2002) PipeRench: a virtualized programmable datapath in 0.18 micron technology. IEEE Custom Integrated Circuits Conference, pp 63–66

Singh H, Lu G, Lee M, Kurdahi FJ, Bagherzadeh N, Filho E, Maestre R (2000) MorphoSys: case study of a reconfigurable computing system targeting multimedia applications. Proceedings Design Automation Conference (DAC’00), pp 573–578, Los Angeles, California

Stallings W (2003) Computer organization and architecture: designing for performance. Pearson Education

Sutton RS, Barto AG (1998) Reinforcement learning—an introduction. MITPress, Cambridge, A Bradford Book

Tsai PL, Huang SY, Liu CT, Wang JS (2003) Computationaware scheme for software-based block motion estimation. IEEE Trans Circuits Syst Video Technol 13(9):901–913

Vissers KA (2003) Parallel processing architectures for reconfigurable systems. Design, Automation and Test in Europe Conference and Exhibition, pp 396–397

Wiegand T, Sullivan GJ, Bjontegaard G, Luthra A (2003) Overview of the H.264/AVC video coding standard. IEEE Trans Circuits Syst Video Technol 13(7):560–576

Acknowledgments

This research is support by Kyungpook National University Research Fund 2012. This work was partially supported by URP-CEST 2013 [Undergraduate Research Program - Center for Embedded Software Technology], Kyungpook National University, Korea.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Paul, A., Rho, S. & Bharnitharan, K. Interactive scheduling for mobile multimedia service in M2M environment. Multimed Tools Appl 71, 235–246 (2014). https://doi.org/10.1007/s11042-013-1490-0

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-013-1490-0