Abstract

One of the major challenges in the content-based information retrieval and machine learning techniques is to-build-the-so-called “semantic classifier” which is able to effectively and efficiently classify semantic concepts in a large database. This paper dealt with semantic image classification based on hierarchical Fuzzy Association Rules (FARs) mining in the image database. Intuitively, an association rule is a unique and significant combination of image features and a semantic concept, which determines the degree of correlation between features and concept. The main idea behind this approach is that any image visual concept has some associated features, so that, there are strong correlations between the concepts and their corresponding features. Regardless of the semantic gap, an image concept appears when the corresponding features emerge in an image and vice versa. Specially, this paper’s contribution was to propose a novel Fuzzy Association Rule for improving traditional association rules. Moreover, it was concerned with establishing a hierarchical fuzzy rule base in the training phase and setup corresponding fuzzy inference engine in order to classify images in the testing phase. The presented approach was independent from image segmentation and can be applied on multi-label images. Experimental results on a database of 6000 general-purpose images demonstrated the superiority of the proposed algorithm.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Rapid growth of multimedia information leads to the appearance of content management as a strategic requirement. Content based information retrieval is one approach for content management which contains a set of techniques for classifying and retrieving information [9]. The focus of the paper was on image classification as an important field in information retrieval and machine vision extensive research efforts have been devoted in the field of image classification in the recent years [10, 11, 13]. Most famous techniques in image classification include support vector machine (SVM), Decision Tree, Naïve Bayes, etc. Association rule based classification is a new approach in image classification [1, 3, 5, 19]. An association rule is a combination of low-level visual features and semantic concept which are unique and significant for describing the semantic concept. In [20], association classification was proven to have higher classification accuracy than other approaches. The main goal of image classification using association rules is to find a set of rules in order to identify the classes of undetermined image feature vectors. Generally, designing an image classifier based on association rules includes the following steps: (a) extracting image features, (b) mining frequently repetitive pairs of features and concepts to generate association rules, and (c) selecting rules with the best evaluation measures. In order to enhance the predictability of the association rules, different papers have been published which presented classification systems based on Fuzzy Association Rules (FAR)[1, 5]. The FAR can be considered to be a classification rule if the antecedent part contains fuzzy feature sets and the consequent part contains a class label [1]. The FAR is an implication, ‘If X is A then Y is B’, to deal with quantitative attributes. X and Y are sets of attributes and A, B are fuzzy sets which describe X and Y, respectively [17]. Chan Man Kuok et al. proposed an algorithm which used a fuzzy technique for mining association rules and the algorithm solved the problem of sharp boundary [8]. Jing et al. used a cluster technique to extract fuzzy association rules and proposed CBFAR algorithm so that this approach outperformed a known Apriori-based fuzzy association rules mining algorithm [6]. In 2008, P. Santhi Thilagam et al. proposed an algorithm to extract and optimize fuzzy association rules using a multi-objective genetic algorithm. This method was efficient in many scenarios [16].

Class hierarchies are a common way for reducing the complexity of the classification problem, especially when dealing with a large number of classes because some classes are more closely related than others [12]. While flat classification techniques might reach the minimum performance in dealing with a large number of classes, the hierarchical classification techniques are able to overcome the problem by training in a hierarchical structure in a stepwise manner [4, 12]. Given a hierarchy of classes, standard machine learning approaches may find it harder to discern similar classes than the classes that are unrelated according to the classification system. Thus, it would be beneficial to apply a recursive top-down approach to hierarchical classification: first, to discriminate the subsets of classes at the top level of the hierarchy and then to recursively separate the classes (or sets of classes) in those subsets [21]. Figure 1 illustrates an example of hierarchical image classification. The problem consists of five classes including sea, waterfall, sunset, mountain and snow. The given hierarchy depicts some classes as strongly related to each other. For instance, the Sea class is related to Waterfall class but not to Sunset. So, in the first step, images are classified in two classes: Waterscape and Mainland. Then, in the second step, the Waterscape class is divided into two sub-classes. Furthermore, the Mainland class is divided to three sub-classes. Zimek [21] investigated why predictive performance can be improved by exploiting the class hierarchy. Recently, several systems have successfully applied hierarchical classification on large databases [4, 12, 18, 21].

1.1 Paper approach

Many approaches have proposed association rule mining based on image content [19]. In this paper, the major contribution was to present a novel Fuzzy Association Rule (FAR) for improving traditional association rules. Intuitively, an association rule is a unique and significant combination of image features and a semantic concept which determines the degree of correlation between features and concepts. The main idea behind this approach is that any image visual concept has some associated features and there are strong correlations between the image concept and its corresponding features. Regardless of the semantic gap, an image concept appears when the corresponding features emerge in an image and vice versa. FAR was used to build a semantic classifier for demonstrating the effectiveness of the proposed approach of FAR mining. In order to build the semantic classifier, a fuzzy rule base was established in the training phase, which contained the best FAR with the highest evaluation measure. Classification was performed in the testing phase using fuzzy inference engines. The experimental results showed the significant improvement of FAR against traditional Association Rules.

Moreover, the minor contribution was a handmade hierarchical database structure IN ORDER to reach better performance in image classification. The fuzzy rule base and fuzzy inference engines were organized according to the database hierarchical structure. All hierarchical structures (database, fuzzy rule base and fuzzy inference engines) were handmade and no algorithm was used for their set up. The test bed of this study was a part of COREL image database. No prior image segmentation was required for feature extraction.

1.2 Outline of the paper

The rest of the paper is organized as follows: in Section 2, the system framework of the proposed approach is presented. Then, feature extraction and fuzzification of low-level visual features were described in the next section. The innovation of this paper is given in Sections 4 and 5. Fundamental concepts of FAR mining are presented in Section 4 and the fuzzy rule base is introduced in Section 5. Hierarchical fuzzy classification is described in the next section. The experimental results were presented and the advantages of this approach over other state-of-the-art papers were demonstrated in Section 7; concluding remarks were given in Section 8.

2 The system framework

Figure 2 demonstrates the framework of the presented approach in two phases: training phase and testing phase. In the former, after feature extraction, low level features were extracted. Fuzzification of feature vectors was done; then, FAR was mined for each concept in all layers and a fuzzy rule base was made up. In the latter, for a new image, a semantic image classifier was built using a hierarchical fuzzy inference system after feature extraction.

Association rules determine the degree of correlation between a feature and a semantic concept [10]. This paper presented a novel FAR that led to more effectiveness than non-fuzzy association rules. Figure 3 shows the value of a feature versus 1000 images with different concepts. Clearly, the feature value for the image subset of 500-600 was very different from other images. If the image subset of 500-600 included the concept C k , then there was a very strong correlation between feature F i and concept C k which could be presented by F i → C k . The features like Fi were looked for in order to establish a fuzzy rule base. However, if no such predictive features could be found because of its challenges, building a fuzzy rule base would be continued by fewer predictive features. Evaluation measures of FARs determined the productivity power of a rule which was investigated in more details later.

3 Feature extraction and fuzzification

In this paper, the feature extraction was performed based on feature availability in the training phase [2] and feature correlation in the testing phase. Feature extraction was performed in HSV and La*b* color space. In fact, each descriptor was extracted from six color matrixes: H, S, V, L, a* and b*. Seven types of descriptors were extracted from each color matrix: two types of color feature, four types of texture feature and one type of shape feature. The color moments and fuzzy color histogram [7] were color descriptors of feature vector, the former of which took six places (the fifth moment until the tenth moment) and the latter took ten places in the feature vector. Generally the fuzzy features lead to better results [14]. The fuzzy color histogram extracted ten colors from the image: red, blue, green, yellow, brown, cyan, magenta, white, dark and black. Thus, 16 color descriptors were extracted from each color matrix. Four types of exploited texture descriptors were contrast, correlation, energy and homogeneity. Co-occurrence matrix, which is necessary for computing texture descriptors, were extracted in four directions of 0, 45, 90 and 135 degrees by two distances of 5 and 10 pixels; thereby, eight co-occurrence matrixes were obtained from each color matrix. In this way, 32 texture descriptors were extracted from each color matrix because four texture descriptors were extracted from each co-occurrence matrix. Edge detector descriptor was used to extract shape features. Lines in four directions (0, 45, 90 and 135) were extracted using sobel filter. Lines with less than 10 pixels and more than 100 pixels were omitted. The sum length of horizontal lines was one descriptor and the sum length of vertical lines was other descriptor; also two other descriptor values were computed in this way. So, four places of feature vector were dedicated for shape features. After performing feature extraction in the spatial domain, the color matrixes were be transformed to five frequency domains (Fourier, wavelet, Radon, Z and cos domain). Then, the mentioned descriptors were extracted again.

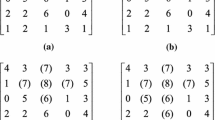

The features with strong correlation with concepts were searched for since the features with such property were not known; thus, many features was inevitably extracted in the training phase and the value of correlation of each feature was computed; finally, the ones with the most correlation were selected in order to build the rule base. It appeared that, in the testing phase, just the most correlated features, which were used to build the rule base, were extracted. Normalization and fuzzification of feature vector were done after feature extraction. Figure 4 shows the triangular membership functions used in the fuzzification. The original feature values were replaced with the relative degree of features in all MFs (Low, Mid, High); thus, fuzzy feature vector tripled the dimension of the original feature vector. Note that, dimension of fuzzy feature vector decreased after FAR mining. Table 1 gives an example of original feature value consisting of three low level features, named F1, F2 and F3, which were extracted from 10 images including three concepts of Beach, Sky and Grass. Table 2 represents the fuzzy feature vectors based on the value of Table 1. For more explanation, the original value of the feature F1 of the first image was 0.1 and the relative degrees of 0.1 to Low, Mid, and High membership functions were 0.8, 0.2 and 0 respectively (\( {\mu_{Low}}({F_1}) = 0.8 \), \( {\mu_{Mid}}({F_1}) = 0.2 \) and \( {\mu_{High}}({F_1}) = 0 \)). Hence, the original feature value (0.1) was replaced with three values in the fuzzy feature vector (0.8, 0.2, and 0). This process was done for all the low level original feature values.

4 Novel fuzzy association rule

As mentioned before, association rules address the value of correlation between a feature and a concept [5]. In this section, the paper contribution of FAR mining was presented. The presented FAR was more predictive than non-fuzzy Association Rule. For the beginning, given the sample set U = {S 1 ,…,S n } and the semantic concepts C = {c 1 ,…,c k }, a concept in layer l was shown as \( C_i^l \). An image is demonstrated as I = (c, F) where c is the image concept and F is the feature vector. For each concept c∈ C, the image set can be split in two categories: positive samples (\( S_c^{+} \)) and negative samples (\( S_c^{-} \)). The former contained samples with the concept c and the latter contained samples without concept c. In this paper, rules were shown as:

Table 3 describes these variables. As follows, the primary concepts of paper contribution are presented.

FuzzyCount of a rule in the image set D, specified the total degree of membership of the feature f to the \( MF_i^l \) in images containing the concept \( C_k^l \).

where the term f i refers to a feature of i th image sample \( S_c^{+} \) in D.

-

FuzzySupport of a rule in the image set D addresses fuzzy percentage of images including both the feature f i and concept \( C_k^l \).

where |D| is the number of images in D.

For example, FuzzySupport of a rule, f is \( M{F_i}^l \to C_k^l \), equaled 0.9, meaning that 90 % of images had both the feature f and concept \( C_k^l \).

-

FuzzyConfidence of a rule addressed the fuzzy percentage of images containing both the feature f and concept \( C_k^l \) toward the total of images containing the feature f. In fact, it calculated the probability of finding concept c in the image set.

FuzzyConfidence presented the implication of a rule and addresses the accuracy of its prediction. A given rule was called confident if the value of FuzzyConfidence exceeds a minimum threshold. For example, supposing two image sets, one with 1000 images and the other with 100 images, both would have five images with the feature f and concept c. The confidence of the given rule, f is \( MF_i^l \to C_{^k}^l \), was 1 for both data sets; so, it can classify five images of these classes. It should be noted that five images formed 5 % of the first class while forming 0.5 % of the second class. Although the confident rules had enough predictive power to build the semantic classifier, to reach more predictive power, the stronger rules had to be extracted.

-

FuzzyLift calculates the prediction power of a rule. It is the ratio of FuzzyConfidence to Expected Confidence, which is equal to the distribution of concept c in image set.

FuzzyLift addressed the importance of a rule. If the value of FuzzyLift was greater than one, then it would indicate that there was an association between the image feature and image concept. On the other hand, if the value of FuzzyLift was less than one, it would indicate a negative relationship between the feature and concept. If the value of FuzzyLift was equal to 1, then the rule had no effect [17].

For example, 20 percent of images in a dataset can be supposed to contain the concept c. If an image |was selected randomly by the probability of 20 percent, the image concept would be c (confidence of 0.2). In cases resembling the one explained, if the confidence was 0.1, the probability of selection would be weaker than the random selection. Generally, the rule confidence must be more than the concept distribution in the dataset.

Table 4 shows all FARs and their objective measures (FuzzySupport, FuzzyConfidence and FuzzyLift) which were extracted from Table 2 based on the proposed algorithm. For further explanation, in the first row, f1 is Low → beach expresses that, if the feature f1 was a member of the low fuzzy set, then the image concept would the beach. The rule confidence was one because the value of \( {\mu_{Low}}({f_1}) \) was zero for non-beach concepts. Therefore, \( {\mu_{Low}}({f_1}) \)did not emerge for any non-beach concepts. So, if the concept of input image was the beach, the f1 feature was sufficient for its classification. Some rules such as 2, 3 had weak FuzzySupport and FuzzyConfidence, so they were not used in the Fuzzy Rule base. There were several methods for developing association rule mining. One of the famous methods called Apriori, which has been applied in a wide range of tasks [5, 8], was used here to extract association rules.

5 Building fuzzy rule base

Obviously, prediction power of one FAR is not enough to classify large image databases. So, a number of the most qualitative FARs should be exploited for building a semantic classifier. The prediction power of a rule depends on three factors [19]: 1) covering the rate of positive samples, \( S_c^{+} \), 2) capability for separating the positive sample from the negative sample (\( S_c^{+} \) from \( S_c^{-} \)), and 3) the value of rule functionality improvement versus random classification. The first and third factors were explained by FuzzySupport and FuzzyLift, respectively. The second factor was covered by GrowthRate, as defined below:

The GrowthRate is the ratio of positive to negative samples of a rule, which evaluates the separating power \( S_c^{+} \) from \( S_c^{-} \).

The overall quality of a rule, called rule weight, is measured by combining mentioned triple factors:

The approach for weighting rules was somewhat similar to [19]. Table 5 summarizes all the concepts about FARs. The weight of a rule specifies the effectiveness of the rule in classification. Since the rule base must be composed of the most effective rules, in this paper, the rules with the highest weight were inserted to the rule base.

6 Hierarchical fuzzy classification

In recent years, hierarchical classification has been successfully used for the classification of large databases [4, 13]. Hierarchical classification has some advantages compared with flat classification such as using posterior probability of each concept, exploiting association between concepts (ontological information), capability of performing parallel classification and improving the accuracy of classification [4, 13]. In this paper, the image database was organized in four layers as a hierarchical structure. Table 6 shows the hierarchy of images. A number of Mamdani fuzzy inference engines were exploited to perform the classification [17]. They were organized in a hierarchical structure corresponding to the hierarchical structure of image database. Figure 5 shows a part of fuzzy inference engines. Figure 6 demonstrates the trapezoidal output fuzzy sets organized corresponding to the hierarchical structure of image database. Defuzzification was performed using the central average method, which has three advantages of simplicity, understandably and continuously [17].

Training phase and testing phase of the proposed algorithm are summarized in Figure 7. The first step of the training phase was feature extraction and fuzzification. Apriori algorithm was used for rule mining in each layer. The most qualitative FARs with the highest weight were used to build the fuzzy rule base. The output of the training phase was the fuzzy rule base. The next step was classification. Adaptive feature extraction and fuzzification of test image were the first part of classification. Adaptive feature extraction meant that the feature vector of input test image was composed of the features that built the rule base in the training phase. Afterwards, the hierarchical fuzzy inference engines in partnership with the fuzzy rule base determined the concepts of test image.

7 Evaluation and experimental results

The proposed algorithm was implemented and run in a system of Pentium IV 2.8 GHZ CPU with 512 M memory. The image database contained 6000 general-purpose images from COREL including 48 semantic concepts (class), which were manually organized in four layers (http://imagedb.persiangig.com). Each class contained about 100 images 60 images of which were dedicated to training and 40 for testing phases. To certify the characteristic of generality, 1000 unknown concept images were injected into the database. The unknown concept’s class was not used in the training phase. In other words, the unknown concepts were not learned by the system. It was utilized in the testing phase for evaluating the generality of the algorithm against unknown concepts. MATLAB was used for programming. In this section, the following cases were studied:

-

A brief review ofevaluation measures of classifiers

-

Performance analysis

-

Fuzzy rule base

-

Weight threshold

-

Effectiveness

-

Efficiency

-

Hierarchical performance

-

-

Discussing the effectiveness of fuzzification

-

The quality and quantity of FAR

-

Fuzzy association rules against non-fuzzy association rules

-

-

System performance in dealing with multi-label images

-

Comparison with the related works

Finally, in order to evaluate the performance objectively, the results of the approach were compared with the two works in the literature: CFAR [13] and FARCHD [1], both of which performed classification using Fuzzy Association Rules. To equalize the test bed for comparison, seven real-world datasets were developed in the experiments.

7.1 A brief review of evaluation measures of classifiers

There are some common measures to evaluate systems in information retrieval. In this part, a brief review of some is presented and some others will be reviewed using these criteria in order to evaluate the suggested algorithm. Before anything, some primary concepts must be introduced. According to Figure 8:

-

True Positive (TPi): number of images correctly assigned to class i

-

False Positive (FPi): number of images incorrectly assigned to class i but actually did not belong to that

-

False Negative (FNi): number of images did not assign to class i but actually belonged to that

-

True Negative (TNi): number of images did not assign to class i but actually did not belong to that

Now, familiarity with evaluation measures can be done. The most commonly used performance measure in classification is precision and recall. Precision i means the percentage of correctly classified images among all the images assigned to class i. Recall i means the percentage of correctly classified images among all the images, which must be assigned to class i [15]:

F-measure is another popular criterion for evaluating the classification quality. Since it makes a trade-off between recall and precision, it is widely used for evaluating the classifiers. F-measure expresses the harmonic mean of recall and precision. It is defined for class i as:

This is also known as the F1-measure because recall and precision have equal weight.

While the above measures are used for evaluating the classification quality of each class, there are other measures to evaluate the overall quality of the classifier in multi-label classification. Micro-average and macro-average are two classifier evaluation measures, which are derived from F-measure. They make more sense of the strength and weakness of systems under evaluation. The micro-average gives equal weight to all images so it is considered as the average over all other classes [15]:

where M is the number of classes. In the same way, it is possible to compute the overall micro-average of the classifier. It is the harmonic mean of micro-average precision and micro-average recall :

where precision and recall are micro-average precision and micro-average recall , respectively.

The macro-average gives equal weight to each class [15]:

where M is the number of classes. In the same way, it is possible to compute the overall macro-average of classifier. It is the average of F-measure over all the classes:

where precision i and recall i are the value of precision and recall for the class I, respectively

Table 7 shows the result of classification in detail. For more clarity, the measures of spoon class are explained. As appeared in the spoon row, the classifier assigns 86 images to this class correctly (TP) but it assigned 14 spoon images to other classes incorrectly (FN). After classification, there were six images in the spoon class which actually did not belong to this class (FP).

According to the formula 8, 9, the evaluation measures were computed as:

7.2 Performance analysis

7.2.1 Fuzzy rule base

The fuzzy rule base should be composed of the most qualitative rules which have a big weight value. In this paper, the rules with the weight of more than ten were selected to build the rule base (weight threshold = 10). Regarding Formula 7, the weight of a rule is a combination of three factors: FuzzyConfidence, GrowthRate and FuzzyLift. Therefore, more value of these measures leads to the more value of rules weight. So, most qualitative rules have the greatest value of these measures. Figure 9 shows the histogram of these measures for rules in the fuzzy rule base. The maximum value of FuzzyConfidence is one while the values of FuzzyLift and GrowthRate should be more than one to be beneficial for classification. Figure 10 shows the number of rules in each layer. Classes of layer n had a smaller number of rules against the classes of layer n-1 because the number of images in each layer was less than the number of images in the previous layer. Classification of a few images requires less number of rules. For example, in layer one, 6000 images should be classified while, in layer three, the fuzzy inference engine of seat concept should classify only 200 images. Classification of 6000 images requires a greater number of rules than that of 200 images.

7.2.2 Weight threshold

In this part, the discussion of weight threshold tuning and its effectiveness on system performance and time complexity is presented. If the weight threshold is a small number, then the rule base contains a great number of rules; subsequently, the time of classification increases because the fuzzy inference engines have to process a great number of rules. Figure 11a shows the average time of classification over different values of weight threshold. Decrease of the weight threshold leads to a greater number of rules inserted to the rule base. Thus, the system effectiveness increases because a greater number of rules can classify images more precisely. Figure 11b shows the system performance over different values of weight threshold. If the weight threshold is equal to n, then all rules with the weight of more than or equal to n will be inserted to the rule base. The selected weight threshold is only one possible choice and authors have no claims about the optimal value of weight threshold.

7.2.3 Effectiveness

The overall quality of rule base plays the most important role in system effectiveness.

Fuzzy inference engines with the partnership of fuzzy rule base assign each image to an appropriate class. Figure 12 shows the precision and recall rate over each layer. The overall precision of layer n was less than the performance of layer n-1 because, if an image was classified incorrectly, then it would be incorrectly classified in the next layer, inevitably. Table 8 shows the overall performance in each layer. All images would be classified correctly if all the rules had absolute confidence, meaning that: FuzzyConfidence = 1. Since the rules were somewhat confident, FuzzyConfidence < 1, some images were incorrectly classified. Figure 13 shows the number of misclassified images in all the layers. The misclassified images of concept Artificial were those that must be assigned to the Nature class but they were wrongly assigned to Artificial class.

Recall and precision behavior in the hierarchical structure. a Performance of layer 1; percision average is 90; recall average is 81. b) Performance of layer 2; precision average is 90; recall average is 85. a) Performance of layer 3; precision average is 87; recall average is 82. b Performance of layer 4; precision average is 85; recall average is 81

Due to evaluating the generality of the algorithm against new concepts, 1000 unknown concept images were inserted in the image database. The unknown concepts were not learned by the system. Figure 14 shows the number of misclassified unknown concept images. About 20 % of unknown concept images were wrongly assigned to other concepts and 80 % were correctly classified as unknown concepts. Table 9 shows the system performance after inserting 1000 unknown concept images to the database. As can be observed, the classifier had good persistence against unknown concepts. In order to evaluate the rate of performance reduction against unknown concepts, the unknown concept images were injected into the image database step by step. Figure 15 shows the micro-averagePrecision and micro-averageRecall in ten steps. In each step, 100 images were inserted to the database. System performance decreased gradually when unknown concept images were inserted to the database.

7.2.4 Efficiency

The time of classification was in correlation with the number of rules in fuzzy rule base because the fuzzy inference engines had to process the rules and rule processing was time consuming. Figure 16 shows the average time of classification over each layer. This figure had meaningful correlation with Figure 10 which showed the number of rules in each layer because a greater number of rules led to greater classification time.

Fuzzy inference engines in layer n classified images more quickly than inference engines of layer n-1 because the number of rules in layer n was less than that in layer n-1. For example, the fuzzy inference engine in layer one should classify 6000 images into two classes of Artificial and Nature using 105 rules while, in layer 3, the fuzzy inference engine of seat concept classified only 200 images in two classes using 15 rules. Obviously, processing 15 rules would take less time that processing 105 images.

7.2.5 Hierarchical performance

To demonstrate the performance of hierarchical classification versus flat classification, a flat classifier was developed, which classified images of layer 4 without considering the three first layers. In other words, it tried to classify the test image in 48 classes. Table 10 shows the overall performance of hierarchical and flat classifier. The hierarchical performance was more than flat classification because it could use the similarity between the classes [12]. Figure 17 shows the efficiency and effectiveness of flat classifier versus hierarchical classifier. Generally, hierarchical classifiers are more time consuming because an image should be classified several times in layers. The result of time spending was to reach better performance in recall and precision.

7.3 Discussing the effectiveness of fuzzification

Fuzzification of feature vector plays an important role in the prediction power of FAR. In other words, the rules extracted from fuzzy feature vector had higher values of weight versus the rules extracted from non-fuzzy feature vector. Fuzzification of feature vector was applied after feature extraction. In this part, different aspects of fuzzification are discussed.

-

The quantity and quality of FAR

In order to evaluate the effect of membership function, two different fuzzy sets were tested: triangular and Gaussian fuzzy sets. Figure 18 compares the quantity and quality of extracted rules with two fuzzy sets. The triangular fuzzy set generated a greater number of rules against Gaussian fuzzy set. The average rules generated by Triangular fuzzy set was 3600 rules while the average rules generated by Gaussian fuzzy set was 3000 rules.

The quality of rules is the most important thing for classifier performance. Figure 18.b shows that the triangular fuzzy set generates more qualitative rules because of producing a greater number of rules with the weight threshold of six. Table 11 compares the system performance using triangular and Gaussian fuzzy sets. As was expected, the triangular fuzzy set built more qualitative classifier than Gaussian fuzzy set. In this paper, all the experiments were performed using the triangular membership function.

-

Fuzzy association rules against non-fuzzy association rules

Non-fuzzy association rules were extracted from quantized feature data. Quantization led to the change of the real value of data and replaced unrealistic valued in the dataset. This problem led to the reduction in the effectiveness of non-fuzzy association rules. To evaluate the suggested fuzzy association rule against non-fuzzy association rules, the feature vectors were discritized; then the association rules were extracted like in [19]. Table 12 shows the overall performance of the suggested algorithm and non-fuzzy association rules.

7.4 System performance in dealing with multi-label images

No special experiment was developed for evaluating the system performance in dealing with multi-label images because most of the images in the database were multi-label; thus, all the results implicated the system behavior in dealing with multi-label images. However, the process of handling multi-label images was given here. Consider a multi-label image with two concepts, for example, sunset and mountain. So, it belonged to these two classes (sunset and mountain) altogether. In the training phase, the sunset rules were extracted from the image when the image was placed in the sunset class; also the mountain rules were extracted from the image when it was placed in the mountain class. Therefore, the rules of each image concept were extracted separately from image when the image belonged to each class. In the testing phase, adaptive feature extraction was applied on the test image and the feature vector was constructed; subsequently, the fuzzy inference engines started the matching process between feature vector and fuzzy rule base. In this process, perhaps some rules with different concepts were matched with the feature vector; meaning that the input image contained more than one concept. For example, if some sunset rules and some mountain rules were matched with the feature vector, then fuzzy inference engine would classify the test image in two classes of sunset and mountain.

7.5 Comparison with the related works

To evaluate the proposed algorithm, these results were compared with the findings of some other state-of-the-art similar paper: CFAR [13] and FARCHD [1], both of which dealt with fuzzy association rule in order to establish a semantic classifier. Table 13 indicates that the proposed approach reached0 more precision with a less numbers of rules compared with two other methods. Due to equalizing the test bed of the comparison, six real-world datasets were considered in the experiments. All the datasets were multivariate, real attributes and dedicated for the classification task.

8 Conclusion

In this paper, a new approach was proposed to improve image classification using fuzzy association rules (FAR). The FAR was a combination of low-level visual features and semantic concept, which was unique and significant for describing the semantic concept. Fuzzy rule base was constructed using the most qualitative FARs after feature extraction and fuzzification of feature vector. A hierarchical fuzzy inference engine was developed for building the semantic classifier.

In this study, a part of COREL image database was used. It had about 6000 images with 48 categories. To investigate the generality characteristic of the database, it was enriched by injecting 1000 unknown concept images, which were not used in the training phase but participated in the testing phase. The experimental results demonstrated that the proposed classifier had the factors of an outstanding classifier. Despite the impressive advantages of the suggested classifier such as the independence of image segmentation, solving dimensionality problem and efficiency in run-time, one of its limitations was being time consuming in FAR mining.

For future works, the methodology might be improved in the following directions:

The application of the developed methodology could be changed to a dynamic image database environment (i.e. support insertion and deletion of images and concepts in the database).

Besides the semantic classification, this methodology may be also applied to solving other problems such as automatic image annotation, image retrieval, object recognition and image database summarization.

Fuzzifier and defuzzifier are static fuzzy sets and can be dynamic to different concepts because some semantic concepts are more consistent with some special fuzzy sets.

References

Alcal J, Alcal A, Herrera F (2011) A fuzzy association rule based classification model for high-dimensional problems with genetic rule selection and lateral tuning. IEEE Trans Fuzzy Syst. doi:10.1109/TFUZZ.2011.2147794

Chang B, Hang H, Huang H (2003) Research friendly MPEG-7 software testbed. IS&T/SPIE Symposium on Electronic Imaging Science and Technology, pp 890–901

Chen Z, Chen G (2008) Building an associative classifier based on fuzzy association rules. Int J Comput Intell Syst 1:262–273

Fan J, Gao Y, Luo H, Jain R (2008) Mining multilevel image semantics via hierarchical classification. IEEE Trans Multimed 10:167–187

Hua Y, Chena S, Tzengb G (2002) Mining fuzzy association rules for classification problems. Comput Ind Eng 43:735–750

Jing W, Huang L, Luo Y et al (2006) An algorithm for privacy-preserving quantitative association rules mining. Proceedings of the 2nd IEEE International Symposium, pp 315–324

Konstantinidis K, Gasteratos A, Andreadis I (2005) Image retrieval based on fuzzy color histogram processing. Opt Commun 248:375–386

Kuok CM, Fu A, Wong MH (2008) Mining fuzzy association rules in databases. ACM SIGMOD 27:159–168

Liu Y, Zhang D, Lu G, Ma W (2007) A survey of content-based image retrieval with high-level semantics. Pattern Recogn 40:262–282

Lu D, Weng Q (2007) A survey of image classification methods and techniques for improving classification performance. Rem Sen 28:823–870

Malathi A, Shanthi V (2011) Statistical measurement of ultrasound placenta images complicated by gestational diabetes mellitus using segmentation approach. j. of information Hiding and Multimedia Signal Process 2:332–343

Marsza M, Schmid C (2008) Constructing category hierarchies for visual recognition. European Conference on Computer Vision (ECCV '08), pp 479–491

Silaa C, Freitas A (2011) A survey of hierarchical classification across different application domains. Data min. and knowl. Discovery 22:31–72

Singh C (2011) Fuzzy rule based median filter for gray-scale images. j. of information Hiding and Multimedia Signal Process 2:108–122

Sun A, Lim E (2001) Hierarchical text classification and evaluation. In proc. ICDM, pp 521–528

Thilagam S, Ananthanarayana P et al (2008) Extraction and optimization of fuzzy association rules using multi-objective genetic algorithm. Pattern Anal Appl 11:315–324

Wang LX (1996) A course in fuzzy systems and control. Prentice-Hall, New Jersey

Wang F, Kan M (2006) NPIC: Hierarchical synthetic image classification using image search and generic features. in Proc. CIVR, pp 473–482

Wang W, Zhang A (2006) Extracting semantic concepts from images: a decisive feature pattern mining approach. Multimedia Syst. doi:10.1007/s00530-006-0029-x

Xiaoyun C, Wei C (2006) Text categorization based on classification rules tree by frequent patterns. Softw 17:1017–1025

Zimek A, Buchwald F, Frank E, Kramer S (2008) A study of hierarchical and flat classification of proteins. IEEE Trans Comput Biol Bioinformatics 7:563–571

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Tazaree, A., Eftekhari-Moghadam, AM. & Sajjadi-Ghaem-Maghami, S. A semantic image classifier based on hierarchical fuzzy association rule mining. Multimed Tools Appl 69, 921–949 (2014). https://doi.org/10.1007/s11042-012-1123-z

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-012-1123-z