Abstract

Facial expressions of emotion (FEEs) have been portrayed as potent (dis-)incentives for power-motivated perceivers, because they signal the strength of a sender’s dominance (Stanton et al. in Implicit motives. Oxford University Press, New York, pp 245–278, 2010). Here, we tested the hypothesis that individuals with a high implicit power motive (nPower), who have a disposition to seek (emotional) impact on others, would be faster at recognizing FEEs than individuals low in nPower. In a task employing videos of morphed FEEs that gradually change from neutral to either anger, joy or surprise, higher nPower predicted faster recognition of the displayed emotion as well as a tendency to misidentify joy as anger. Our findings suggest that one way through which people high in nPower are socially influential is their enhanced sensitivity to emotional signals in their social environment.

Introduction

The implicit need for power (or nPower) is defined as a disposition for feeling affective pleasure from having impact on others or the world at large, and for feeling aversion for others’ influence on oneself (Schultheiss 2008). It is commonly assessed using standardized content coding of imaginative stories that individuals tell about picture cues (Schultheiss and Pang 2007). Because coding systems for nPower reflect effects of experimentally aroused power motivation on story-writing responses (e.g., Winter 1988, 1994), they fulfil a critical requirement for a test’s validity (see Borsboom et al. 2004). nPower is implicit in the sense that picture-story measures of the need for power and other motives consistently fail to substantially overlap with questionnaire measures designed to explicitly assess the same motivational needs (Köllner and Schultheiss 2014; Rawolle et al. 2013; Schultheiss et al. 2009; Spangler 1992). Thus, nPower’s influence on behaviour bypasses people’s conscious views of themselves.

One of the ways in which nPower is assumed to affect behaviour is through orienting attention towards salient cues in the environment that imply either successful influence on others (motive-relevant incentive) or impending influence of others on themselves (motive-relevant disincentive; McClelland 1987; Schultheiss and Hale 2007). Recent research tested this idea based on a theoretical framework of social information processing that views facial expressions of emotions (FEEs) as salient cues for interpersonal motives like nPower (see Stanton et al. 2010). According to Stanton et al. (2010), FEEs are important nonverbal means of communication in social interactions because they signal the sender’s high or low dominance to the perceiver, which has consistently been demonstrated in rating studies of FEEs (e.g. Hess et al. 2000; Knutson 1996). However, the effect that a sender’s FEE has on a perceiver should also depend on the perceiver’s implicit motives (Stanton et al. 2010). Because high-nPower individuals want to secure their own influence in social interactions, FEEs signalling high dominance, such as anger, joy, and disgust (see Hess et al. 2000; Knutson 1996), should be disincentives for high-nPower perceivers. In contrast, FEEs signalling a sender’s low dominance, such as fear, sadness, or surprise (see Hess et al. 2000; Knutson 1996), should serve as incentives for high-nPower individuals, since they confirm the perceiver’s relative dominance in relation to the sender. Thus, nPower is assumed to influence perceivers’ reactions to both types of FEEs (dominant and submissive), as both represent motive-relevant cues in social interactions.

This assumption has been corroborated in several studies. Schultheiss and Hale (2007) studied attentional orienting using a dot-probe task and found that high-nPower individuals oriented their attention towards FEEs signalling low dominance (surprise) and away from FEEs signalling high dominance (anger, joy). Schultheiss et al. (2005) used an implicit visuomotor sequence learning task with FEEs as reinforcers. They found that high-nPower individuals showed enhanced learning of sequences that were reliably paired with low-dominance FEEs and impaired learning of sequences that were reliably paired with high-dominance FEEs. A functional magnetic resonance imaging (fMRI) study conducted by Schultheiss et al. (2008) revealed that nPower predicts activation of brain areas critical for motivated behaviour (e.g., the striatum) in response to angry and, to a lesser extent, surprised FEEs. Wang et al. (2011) conducted an event-related potential (ERP) study and found a higher P3-component in high-nPower individuals in response to anger faces, suggesting increased recruitment of attentional resources for processing facial dominance signals. In a more recent ERP study using an oddball task, Wang et al. (2014) could further demonstrate that high-nPower individuals, compared to low-nPower individuals, were better able to identify anger FEEs, particularly if anger was displayed with low intensity. These studies consistently show that both the positive and negative incentive value of low- and high-dominance FEEs increases with the strength of individuals’ nPower.

In the present study we wanted to replicate and extend these findings by testing whether nPower predicts enhanced sensitivity towards dominance-related dynamic changes in FEEs. Using morphing technology, we created video stimuli that display gradual transitions in the eye region from a neutral to an emotional FEE (joy, anger, and surprise) and had participants categorize FEEs as quickly as possible (i.e. as soon as they recognized the first signs of a specific FEE). This approach represents an adaptation of previously validated measures of emotional sensitivity and social competence (Baron-Cohen et al. 2001; Ekman and O’Sullivan 1991). We thus tested how sensitive participants were to slight, gradual changes in muscular activation indicative of a specific emotion, with earlier responses corresponding to less muscular activation needed to detect and categorize an FEE. Earlier detection at lower intensities of muscular activation therefore equals faster reaction speed. We also assessed accuracy rates, because faster detections can only be interpreted as higher emotional sensitivity if they do not come at the expense of lower categorization accuracy.

In our paradigm we selectively focused on the eye portion of FEEs for several reasons. First, the eye portion of FEEs, especially the gaze direction, might be critical for eliciting motivation-driven emotion processing. Gaze direction signals the self-relevance of a sender’s FEE to the perceiver (N’Diaye et al. 2009). It signals whether a perceiver is the source or the target of a sender’s FEE and thereby whether an FEE has to be evaluated against the perceiver’s motivational needs. Second, the ability to read emotions from the eye portion of a face has been found to detect subtle individual differences in social competence (Baron-Cohen et al. 2001). Third, full-face FEEs are easy to recognize and might result in ceiling effects with little variation in emotion recognition, which would artificially blur effects of nPower (see also Wang et al. 2014). Excluding indications of muscular activity in the mouth portion of the face, which is very distinct for prototypical joy (AU12), anger (AU22/23), and surprise (AU26/27) expressions (Ekman et al. 2002), results in stimuli that are more difficult to recognize and thus potentially more sensitive to motive effects.

Based on the research described above we predicted that high-nPower individuals, in comparison to low-nPower individuals, should show enhanced sensitivity towards FEEs and should therefore be able to recognize them at lower intensities as reflected in quicker detection. Additionally, we assumed that quicker detections in high-nPower individuals are not due to lower accuracy rates for these participants.

Methods

Participants

Seventy-four participants (35 female) were recruited via flyers at Friedrich-Alexander University, Erlangen, Germany for a study on “Motivation and Emotion” and received €15 for their participation. Sample size was pre-determined solely based on the requirements for master’s thesis projects at Friedrich-Alexander University and the a priori expectation of obtaining a medium-sized effect (i.e., r ≈ .30) for the association between nPower and emotion recognition. Two participants were excluded from all analyses because they did not follow task instructions (see below). This resulted in a net N of 72 (33 female). Participants’ mean age was 23.5 (SD = 3.65, range = 19–34). Psychology majors were not allowed to participate in the study.

Procedure

After giving informed consent, participants were seated at data-collection computers in one-person test cubicles. All tasks were presented using the experimental software Inquisit 3.0 (Millisecond Software LLC, Seattle, WA) and all instructions were provided on the computer screen ensuring highly standardized test situations across participants. Participants worked on a Picture Story Exercise (PSE; Schultheiss and Pang 2007) measure of nPower and an emotion recognition task, which is described below. They answered demographic questions and worked on additional tasks unrelated to the hypothesis tested here. The PSE was administered at the beginning of each session to minimize influences of the test situation on motive measures. Upon completion of the tasks participants were paid and debriefed.

Implicit power motive

nPower was assessed with the PSE, using standardized instructions described in Schultheiss and Pang (2007). Participants wrote imaginative stories about six pictures (Women in Laboratory, Ship Captain, Nightclub Scene, Couple by River, Trapeze Artists, and Boxer) that were presented in a random order for 10 s each. Participants had 4 min to write each story before they moved on to the next picture. Stories were later coded for motivational imagery using Winter’s (1994) Manual for Scoring Motive Imagery in Running Text. According to this manual, power imagery is scored whenever a story character shows a concern with having impact on others by strong forceful actions, persuading and convincing others, manipulating and controlling them, providing unsolicited help, impressing others, or eliciting strong non-reciprocal emotions in others. Two scorers, who had previously attained at least 85 % agreement with training material pre-scored by experts, independently coded each participant’s stories. Their inter-rater reliability across all stories was .83 as indicated by Pearson correlation of overall power imagery counts per participant. Participants wrote, on average, 540 words (SD = 161) with an average of 5.29 (SD = 2.61) power images. Participants’ power scores were square-root transformed and corrected by regression for total word count, which correlated significantly with power scores (r = .49, p < .001). The residuals were z-transformed and represented our measure of nPower in all subsequent analyses.
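The scoring steps described above (square-root transformation, residualization on word count, z-standardization) can be sketched in a few lines. This is a minimal illustration using only the Python standard library, not the authors' analysis script; the function name `residualized_npower` and the example counts are hypothetical.

```python
import math
import statistics

def residualized_npower(power_counts, word_counts):
    """Square-root transform raw power-imagery counts, remove the linear
    effect of total word count, and z-standardize the residuals."""
    y = [math.sqrt(c) for c in power_counts]
    x = [float(w) for w in word_counts]
    mean_x, mean_y = statistics.fmean(x), statistics.fmean(y)
    # Ordinary least-squares slope and intercept for y ~ x
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    # z-standardize the residuals (sample standard deviation)
    mean_res = statistics.fmean(residuals)
    sd_res = statistics.stdev(residuals)
    return [(r - mean_res) / sd_res for r in residuals]

# Hypothetical example data: power images and words written per participant
power_counts = [2, 5, 7, 4, 9, 3]
word_counts = [380, 520, 610, 450, 760, 500]
npower_z = residualized_npower(power_counts, word_counts)
```

By construction, the resulting scores have a mean of 0, a standard deviation of 1, and are uncorrelated with word count, so verbal fluency cannot masquerade as motive strength.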

Stimulus development

To measure participants’ ability to recognize emotions from subtle FEEs, we created videos of gradually changing FEEs of increasing emotion intensity (e.g. from neutral to 100 % anger). We selected static pictures of six different actors (3 female; stimulus persons p0304, p0305, p0402, p0505, p0606, and p1206) displaying the standardized FEEs anger, joy and surprise or a neutral face in full frontal orientation from the SMoFEE (Standardized and Motivated Facial Expression of Emotion) stimulus set (Rösch and Schultheiss, n.d.) based on high recognition rates in an evaluation study. For stimulus descriptions in terms of muscular activity (according to Ekman et al. 2002) and recognition rates, please see Table 1. The grand means of rated emotional intensities of the selected FEEs were high [anger: 5.45 (mean SD = 1.46), joy: 6.04 (mean SD = 1.70), surprise: 5.34 (mean SD = 1.88); rating scale: 0 (no emotion) to 8 (maximum)], while the rated intensity of neutral faces was very low [neutral: 0.29 (mean SD = 0.36)]. We cropped all images with a fixed frame size of a 1:0.47 ratio ranging from the tip of the nose to the hairline. This was done because the ability to read emotions from the eye portion of a face has been found to detect subtle individual differences in social sensitivity (Baron-Cohen et al. 2001). We further matched the position of the eyes in all photos within an actor to minimize motion cues in the resulting morphed videos (see Fig. 1). Morphs were then created using the software VidMorph Pro (GeoVid, Berkeley, CA). Corresponding facial landmarks were manually marked on the original static pictures of each morph pair (between 200 and 350 landmarks per pair) focusing in particular on facial features relevant for the expression of emotions like the eyes’ corrugator supercilii and orbicularis oculi muscles (Ekman et al. 2002). 
For each of the 18 morph pairs up to 1075 frames were interpolated between the morph end points at a rate of 25 frames/s, resulting in smooth transitions. Videos with four different durations (13, 23, 33 and 43 s) were created as four different levels of difficulty, with longer videos representing slower changes in muscular activity and therefore stimuli that are more difficult to detect quickly. We also created 24 additional control-stimulus videos in which the neutral expression remained fixed. In so doing, we guarded against reaction biases caused by identical onset and temporal dynamics of FEEs and made the non-changing faces of the neutral condition less obvious. The final morphing video stimulus pool consisted of 72 emotional video clips (4 durations × 3 emotions × 6 actors), plus 24 control videos.
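The arithmetic of the stimulus pool can be checked with a short sketch. The constants are taken from the description above; the helper `intensity_at_response` is hypothetical and rests on two assumptions that the text does not state explicitly: that expression intensity increases linearly over the clip, and that reaction time is measured from morph onset.

```python
from itertools import product

FRAME_RATE = 25                      # frames per second
DURATIONS_S = (13, 23, 33, 43)       # four difficulty levels
EMOTIONS = ("anger", "joy", "surprise")
ACTORS = ("p0304", "p0305", "p0402", "p0505", "p0606", "p1206")
N_CONTROL = 24                       # clips with a fixed neutral expression

# One neutral-to-emotion morph pair per actor and emotion
morph_pairs = list(product(EMOTIONS, ACTORS))
# One emotional clip per duration, emotion, and actor
emotional_clips = list(product(DURATIONS_S, EMOTIONS, ACTORS))
# Number of frames shown in a clip of each duration
frames_per_duration = {d: d * FRAME_RATE for d in DURATIONS_S}

def intensity_at_response(rt_s, duration_s):
    """Percent emotion intensity on screen at the moment of the response.
    Assumption: intensity rises linearly from 0 % to 100 % over the clip."""
    proportion = min(max(rt_s / duration_s, 0.0), 1.0)
    return proportion * 100.0
```

Under these assumptions, a response after 6.5 s in a 13-s clip would correspond to an expression at 50 % intensity, whereas the same reaction time in a 43-s clip would correspond to roughly 15 %.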

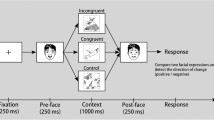

Emotion recognition task

In the emotion recognition task, participants first saw a fixation cross for 2 s followed by the neutral start frame of each video for 1 s and then viewed the morphed video clip until they indicated via mouse click that they either recognized an FEE or thought that no change in expression had occurred. Upon a mouse click, the video was interrupted and participants were asked to choose between the alternatives “anger”, “joy”, “surprise” or “no expression”. Participants were instructed that some trials would display static pictures and they should terminate the trial once they were sure that no change in expression was occurring. The data from these trials were not included in the final analyses, since they reflect a decision to terminate a trial rather than the ability to recognize FEEs. Reaction times and accuracy in these trials did not correlate significantly with nPower (r = −.15 and −.12, both ps > .20).

Results

We examined participants’ reaction times and accuracy on the emotion recognition task and found two participants who were both exceptionally inaccurate and fast (accuracy: 26.04 and 20.83 %, reaction times: 0.77 and 0.41 s), suggesting that they did not follow task instructions. We excluded them from the following analyses. Average accuracy in the remaining sample was 76.60 % (SD = 13.27, range = 44.44–97.22 %), average reaction time was 7.94 s (SD = 3.04, range of median reaction times = 2.96–16.59 s). Across participants, reaction times and accuracy correlated positively (r = .54, p < .001).

To determine whether performance on the emotion recognition task depended on individuals’ nPower, we ran repeated-measures regression analyses with reaction time as dependent variable, nPower as continuous between-subjects predictor, FEE type as a three-level within-subjects factor and Duration as a four-level within-subjects factor. In this analysis, we used median reaction times for each combination of FEE, duration, and target. We found a significant main effect of nPower on reaction times, F(1, 70) = 4.84, p = .03, r = −.27, which indicated that individuals high in nPower were overall faster at recognizing FEEs than individuals low in nPower. We also obtained significant main effects of FEE, F(2, 140) = 80.95, p < .001, with response times differing as follows: Surprise < Anger < Joy (p = .05, Bonferroni-corrected), and of Duration, F(3, 210) = 115.35, p < .001, with response times differing as follows: 13 < 23 < 33 < 43 s (p = .05, Bonferroni-corrected). We did not obtain significant effects for the nPower × FEE interaction, F(2, 140) = 0.99, n.s., or the nPower × Duration interaction, F(3, 210) = 1.22, n.s. (see Fig. 2 for scatterplots).
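As an illustration of the aggregation step that precedes such an analysis, the following sketch computes median reaction times per design cell. The trial records, field names, and the function `median_rt_by_cell` are hypothetical; which variables define a cell is passed in as a parameter, because the exact cell structure used by the authors is not reproduced here.

```python
from collections import defaultdict
from statistics import median

def median_rt_by_cell(trials, cell_keys=("participant", "fee", "duration")):
    """Group trials by the given keys and return the median RT per cell."""
    cells = defaultdict(list)
    for trial in trials:
        cells[tuple(trial[k] for k in cell_keys)].append(trial["rt"])
    return {cell: median(rts) for cell, rts in cells.items()}

# Hypothetical trials (reaction times in seconds)
trials = [
    {"participant": 1, "fee": "anger", "duration": 13, "actor": "p0304", "rt": 4.2},
    {"participant": 1, "fee": "anger", "duration": 13, "actor": "p0305", "rt": 5.0},
    {"participant": 1, "fee": "anger", "duration": 13, "actor": "p0402", "rt": 9.1},
    {"participant": 1, "fee": "joy", "duration": 13, "actor": "p0304", "rt": 6.3},
]
cell_medians = median_rt_by_cell(trials)
```

Medians are less sensitive than means to single very slow responses, such as the 9.1-s trial in the example, which is a common reason for preferring them with reaction-time data.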

We repeated this analysis with recognition accuracy (proportion of correct responses for each combination of FEE, duration and target) as dependent variable. There was no significant main effect of nPower, F(1, 70) = 2.41, n.s., but a significant main effect of FEE, F(2, 140) = 79.83, p < .001, with response accuracy scores differing as follows: Surprise = Anger > Joy (p = .05, Bonferroni-corrected). We also obtained a significant effect of Duration, F(3, 210) = 86.18, p < .001, with response accuracy scores differing as follows: 13 > 23 > 33 > 43 s (p = .05, Bonferroni-corrected). Finally, we obtained a significant nPower × FEE interaction, F(2, 140) = 3.01, p = .05, but no significant nPower × Duration interaction, F(3, 210) = 0.96, n.s.

To explore the significant nPower × FEE interaction on accuracy, we performed follow-up regression analyses to calculate the simple main effects of nPower separately for each FEE. nPower was associated with marginally lower accuracy on trials displaying joy [F(1, 70) = 3.74, p = .06], but not on trials displaying anger [F(1, 70) = 0.03, n.s.] or surprise [F(1, 70) = 1.50, n.s.]. To explain this reduced accuracy, we analysed how FEEs shown in joy-face trials were labelled. We found that nPower was associated with a marginally increased likelihood of labelling the FEE joy as anger (r = .22, p = .06) but no increased likelihood of labelling it as surprise (r = .02, n.s.).

Discussion

In this study, we aimed to replicate and extend earlier observations of an association between nPower and sensitivity to dominance-related FEEs by using morphing methodology and examining if people high in nPower, compared to those low in nPower, recognize an unfolding emotional expression at an earlier stage, reflecting greater emotional sensitivity. We tested this hypothesis by having participants identify the specific emotion displayed in dynamic morphs, changing from neutral to full emotion, of the eye region of target persons. Our data support our hypothesis by showing that higher nPower predicted significantly faster reaction times. Thus, high-nPower individuals were able to recognize FEEs at lower intensities, whereas low-nPower individuals needed longer inspection times and thus more intense expressions to recognize FEEs. Although recognition speed and accuracy were positively correlated in the overall sample, suggesting that those who waited longer for an emotion to emerge also categorized it more accurately, the effect of nPower on recognition speed did not generally come at the expense of lower accuracy. Unlike for recognition speed, there was no main effect of nPower on accuracy.

Our analyses suggested that to the extent that a speed-accuracy trade-off existed, it was very localized: an nPower × FEE interaction on accuracy indicated that high-nPower individuals were less accurate than low-nPower individuals in recognizing the FEE joy. Intriguingly, they tended to misclassify joy as anger. Misclassifications between the FEEs joy and anger might partly be due to our paradigm, because our morphed videos displayed only the eye region of the face. The expression of the emotions joy and anger involves some overlapping muscle activations around the eyes (Ekman et al. 2002), which could lead to misclassifications on the emotion recognition task. In particular, the inner part of the orbicularis oculi (action unit 7) is activated during the expression of anger and partly activated during the expression of joy.

This cannot, however, explain why high-nPower individuals should be more likely than low-nPower individuals to show this specific misclassification. We rather think that these misclassifications might be due to the negative incentive value of the FEE joy for high-nPower individuals. Joy, like anger, has been theorized to be energized by the behavioural approach system, which regulates behaviour in such divergent categories as aggression and social attachment (cf. Keltner et al. 2003). The fact that joy is also energized by the approach system might be the reason why joy has been consistently reported to signal a sender’s high dominance (Hareli et al. 2009; Hess et al. 2000; Knutson 1996). This would make it as much of a disincentive for high-nPower individuals as the high-dominance anger expression (see also Schultheiss et al. 2005; Stanton et al. 2010). The negative affective reaction to the FEE joy could have acted as a cue to classify it as the negative emotion anger, which was the only negative emotion among our response alternatives. Another interpretation of the misclassification effect we observed is based on a study by Fodor et al. (2006), who showed that a sender’s dominant behaviour leads to stronger activation of the corrugator supercilii (frown muscle, action unit 4) in high-nPower perceivers. We speculate that the onset of angry or joyful expressions in a sender leads to corrugator activation in high-nPower individuals and that this response, via facial feedback (see Adelmann and Zajonc 1989), may (a) help these individuals to quickly detect the emotion, but also (b) lead to the observed misclassifications between joy and anger, because both trigger a negative affective response. Besides that, the rather atypical finding of lower overall recognition rates for joy compared to anger or surprise can be explained by the region of the face used for our stimuli. While other studies usually find joy to be most easily recognized, these studies usually also incorporate full-face FEEs. To test the validity of the facial-feedback account of our findings, future research should test whether corrugator activation mediates the relationship between nPower and emotion recognition speed and accuracy.

In summary, our findings provide a differentiated picture of the relation between nPower and emotion recognition ability. They suggest that high-nPower individuals are generally faster than low-nPower individuals at recognizing FEEs signalling both high and low dominance. This speed advantage leads to some inaccuracies in the classification of high-dominance FEEs (joy vs. anger), while the discrimination between high- and low-dominance FEEs was unaffected. Thus, the process of emotion recognition in high-nPower people seems to be selectively improved in a way that facilitates efficient scanning of the social environment for possible signals of others’ dominance or submission. Taken together, our results provide further support for the notion of higher emotional sensitivity for high-nPower individuals and are well in line with previous findings by Wang et al. (2014).

Additionally, our results might in part explain why a high degree of nPower alone (e.g. McClelland and Burnham 1976) or in combination with a high degree of activity inhibition (e.g. McClelland and Boyatzis 1982) leads to social success in everyday life in the long term (for an overview see Winter 2010). Picture-story measures of (inhibited) nPower have repeatedly been shown to predict successful outcomes like creative problem solutions (Fodor and Carver 2000), persuasive communication (Schultheiss and Brunstein 2002) and effective leadership and management (McClelland and Boyatzis 1982). The fast recognition of FEEs by high-nPower individuals might be one facet of social competence that mediates the effect of (inhibited) nPower on social success in the long run. Support for this notion comes from a meta-analysis by Hall et al. (2009) that examined studies employing a wide range of behavioural interpersonal sensitivity tasks (testing perception of nonverbal cues in face, voice, body). Significant positive correlations were found, among others, with self- and other-rated social competencies like workplace effectiveness or relationship quality. In the present study we tested emotion recognition in a computerized morphing task. While this can be viewed as an artificial situation, it provides us with an objective behavioural measure, contrary to widely used self-report measures. However, in order to establish a causal link between nPower and social success that is mediated by higher emotional sensitivity, future research has to combine the approaches of our present study with additional measures of social success e.g. in the workplace.

One limitation of this study is the correlational nature of the association between nPower and emotion recognition. To test whether nPower has a causal effect on emotion recognition, future studies could aim to arouse nPower experimentally (perhaps with PSE changes in nPower scores as a manipulation check; see Schultheiss et al. 2004) and test whether this has an effect on emotion recognition. Another limitation is the restriction of the stimulus set to three FEEs and to the eye region of the face. Thus, future research needs to examine whether the effects reported here can also be observed with other FEEs that vary on the dimension of dominance (e.g., disgust, fear) and extend to nonverbal cues encoded by other parts of the face and to the perception of emotional changes in the entire face. We would like to note, though, that by restricting the field of view to the eye region, we were successful in avoiding ceiling effects for recognition, particularly in the case of joy. Finally, although the results we obtained are consistent with other studies that have examined the role of nPower in responses to FEEs, replication studies with higher statistical test power are needed to provide further corroboration for an association between nPower and emotion recognition. More specifically, given the observed effect size in this study (r = −.27 for recognition speed), we recommend sample sizes of N ≥ 110 in future studies to replicate our findings with a probability exceeding 80 %.
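The sample-size recommendation can be illustrated with a rough power calculation. The sketch below uses the Fisher z approximation for a two-tailed test of a correlation at α = .05; this is one common approximation and not necessarily the procedure the authors used, and the function names `power_for_correlation` and `required_n` are hypothetical.

```python
import math
from statistics import NormalDist

def power_for_correlation(r, n, alpha=0.05):
    """Approximate two-tailed power to detect a population correlation r
    with n participants (Fisher z approximation)."""
    normal = NormalDist()
    z_crit = normal.inv_cdf(1 - alpha / 2)
    # Expected test statistic: Fisher-transformed r divided by its standard error
    expected_z = math.atanh(abs(r)) * math.sqrt(n - 3)
    return normal.cdf(expected_z - z_crit) + normal.cdf(-expected_z - z_crit)

def required_n(r, power=0.80, alpha=0.05):
    """Smallest n whose approximate power reaches the requested level."""
    n = 4
    while power_for_correlation(r, n, alpha) < power:
        n += 1
    return n

power_current = power_for_correlation(0.27, 72)       # sample size of this study
power_recommended = power_for_correlation(0.27, 110)  # recommended sample size
n_needed = required_n(0.27)
```

Under this approximation, a sample of 110 exceeds the 80 % threshold for an effect of |r| = .27, whereas a sample of 72 falls short of it.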

References

Adelmann, P. K., & Zajonc, R. B. (1989). Facial efference and the experience of emotion. Annual Review of Psychology, 40(1), 249–280.

Baron-Cohen, S., Wheelwright, S., Hill, J., Raste, Y., & Plumb, I. (2001). The “Reading the Mind in the Eyes” test revised version: A study with normal adults, and adults with Asperger syndrome or high-functioning autism. Journal of Child Psychology and Psychiatry, 42(2), 241–251.

Borsboom, D., Mellenbergh, G. J., & van Heerden, J. (2004). The concept of validity. Psychological Review, 111, 1061–1071.

Ekman, P., Friesen, W. V., & Hager, J. C. (2002). Facial action coding system. The manual on CD ROM. Salt Lake City, UT: Research Nexus.

Ekman, P., & O’Sullivan, M. (1991). Who can catch a liar? American Psychologist, 46(9), 913–920.

Fodor, E. M., & Carver, R. A. (2000). Achievement and power motives, performance feedback, and creativity. Journal of Research in Personality, 34(4), 380–396. doi:10.1006/jrpe.2000.2289.

Fodor, E. M., Wick, D. P., & Hartsen, K. M. (2006). The power motive and affective response to assertiveness. Journal of Research in Personality, 40(5), 598–610.

Hall, J., Andrzejewski, S., & Yopchick, J. (2009). Psychosocial correlates of interpersonal sensitivity: A meta-analysis. Journal of Nonverbal Behavior, 33(3), 149–180.

Hareli, S., Shomrat, N., & Hess, U. (2009). Emotional versus neutral expressions and perceptions of social dominance and submissiveness. Emotion, 9(3), 378–384.

Hess, U., Blairy, S., & Kleck, R. E. (2000). The influence of facial emotion displays, gender, and ethnicity on judgments of dominance and affiliation. Journal of Nonverbal Behavior, 24, 265–283.

Keltner, D., Gruenfeld, D. H., & Anderson, C. (2003). Power, approach, and inhibition. Psychological Review, 110(2), 265–284.

Knutson, B. (1996). Facial expressions of emotion influence interpersonal trait inferences. Journal of Nonverbal Behavior, 20(3), 165–182.

Köllner, M. G., & Schultheiss, O. C. (2014). Meta-analytic evidence of low convergence between implicit and explicit measures of the needs for achievement, affiliation, and power. Frontiers in Psychology, 5, 826.

McClelland, D. C. (1987). Human motivation. New York: Cambridge University Press.

McClelland, D. C., & Boyatzis, R. E. (1982). Leadership motive pattern and long-term success in management. Journal of Applied Psychology, 67, 737–743.

McClelland, D. C., & Burnham, D. H. (1976). Power is the great motivator. Harvard Business Review, 54, 100–110.

N’Diaye, K., Sander, D., & Vuilleumier, P. (2009). Self-relevance processing in the human amygdala: Gaze direction, facial expression, and emotion intensity. Emotion, 9(6), 798–806.

Rawolle, M., Schultheiss, M., & Schultheiss, O. C. (2013). Relationships between implicit motives, self-attributed motives, and personal goal commitments. Frontiers in Psychology, 4, 923.

Rösch, A. G., & Schultheiss, O. C. (n.d.). The standardized and motivated facial expression of emotion (SMoFEE) stimulus set: I. Evaluation of expressive and perceptual validity (in preparation).

Schultheiss, O. C. (2008). Implicit motives. In O. John, R. Robins, & L. Pervin (Eds.), Handbook of personality: Theory and research (3rd ed., pp. 603–633). New York: Guilford.

Schultheiss, O. C., & Brunstein, J. C. (2002). Inhibited power motivation and persuasive communication: A lens model analysis. Journal of Personality, 70(4), 553–582.

Schultheiss, O. C., & Hale, J. A. (2007). Implicit motives modulate attentional orienting to facial expressions of emotion. Motivation and Emotion, 31(1), 13–24.

Schultheiss, O. C., & Pang, J. S. (2007). Measuring implicit motives. In R. W. Robins, C. Fraley, & R. Krueger (Eds.), Handbook of research methods in personality psychology (pp. 322–344). New York: Guilford.

Schultheiss, O. C., Pang, J. S., Torges, C. M., Wirth, M. M., & Treynor, W. (2005). Perceived facial expressions of emotion as motivational incentives: Evidence from a differential implicit learning paradigm. Emotion, 5(1), 41–54.

Schultheiss, O. C., Wirth, M. M., & Stanton, S. J. (2004). Effects of affiliation and power motivation arousal on salivary progesterone and testosterone. Hormones and Behavior, 46(5), 592–599.

Schultheiss, O. C., Wirth, M. M., Waugh, C. E., Stanton, S. J., Meier, E. A., & Reuter-Lorenz, P. (2008). Exploring the motivational brain: effects of implicit power motivation on brain activation in response to facial expressions of emotion. Social Cognitive and Affective Neuroscience, 3(4), 333–343.

Schultheiss, O. C., Yankova, D., Dirlikov, B., & Schad, D. J. (2009). Are implicit and explicit motive measures statistically independent? A fair and balanced test using the picture story exercise and a cue- and response-matched questionnaire measure. Journal of Personality Assessment, 91(1), 72–81.

Spangler, W. D. (1992). Validity of questionnaire and TAT measures of need for achievement: Two meta-analyses. Psychological Bulletin, 112(2), 140–154.

Stanton, S. J., Hall, J. L., & Schultheiss, O. C. (2010). Properties of motive-specific incentives. In O. C. Schultheiss & J. C. Brunstein (Eds.), Implicit motives (pp. 245–278). New York: Oxford University Press.

Wang, J., Liu, L., & Yan, J. H. (2014). Implicit power motive effects on the ERP processing of emotional intensity in anger faces. Journal of Research in Personality, 50, 90–97.

Wang, J., Liu, L., & Zheng, Y. (2011). Effects of implicit power motive on the processing of anger faces: An event-related potential study. Journal of Research in Personality, 45(5), 441–447.

Winter, D. G. (1988). The power motive in women—And men. Journal of Personality and Social Psychology, 54(3), 510–519.

Winter, D. G. (1994). Manual for scoring motive imagery in running text (4th ed.). Department of Psychology, University of Michigan, Ann Arbor: Unpublished manuscript.

Winter, D. G. (2010). Political and historical consequences of implicit motives. In O. C. Schultheiss & J. C. Brunstein (Eds.), Implicit motives (pp. 407–432). New York: Oxford University Press.

Acknowledgments

This work was supported by Deutsche Forschungsgemeinschaft [grant SCHU 1210/3-1] awarded to Oliver C. Schultheiss and Andreas G. Rösch and a McClelland Fellowship of the McClelland Center for Research and Innovation (Hay Group) awarded to Andreas G. Rösch.

Cite this article

Donhauser, P.W., Rösch, A.G. & Schultheiss, O.C. The implicit need for power predicts recognition speed for dynamic changes in facial expressions of emotion. Motiv Emot 39, 714–721 (2015). https://doi.org/10.1007/s11031-015-9484-z

DOI: https://doi.org/10.1007/s11031-015-9484-z