Abstract

Purchase intention and willingness-to-pay (WTP) questions are often analyzed without considering that a respondent's utility maximizing answer need not correspond to a truthful answer. In this paper, we argue that individuals act, at least partially, in their own self-interest when answering survey questions. Consumers are conceptualized as thinking along two strategic dimensions when asked hypothetical purchase intention and WTP questions: (a) whether their response will influence the future price of a product and (b) whether their response will influence whether a product will actually be offered. Results provide initial evidence that strategic behavior may exist for some goods and some people.

A fundamental tenet of modern economics, confirmed in countless empirical studies, is that individuals respond to incentives. If incentives matter, their effects are not likely relegated to the marketplace, but permeate behavior in all facets of life including how individuals respond to survey questions. As long as individuals believe their responses might influence actions taken by businesses, they might respond to surveys in such a way as to maximize their own utility. A utility maximizing answer need not correspond to a truthful answer.

For example, if a person is asked what they would pay for a product, they might deliberately state a dollar amount lower than their actual willingness-to-pay (WTP) so that the brand manager will reduce the price. Several authors have noted that people may answer surveys in an attempt to manipulate price (e.g., Gibson, 2001; Jedidi et al., 2003; Monroe, 1990; Wertenbroch and Skiera, 2002). Although strategic responses to survey questions have received little attention in the marketing literature, the assumption that humans behave with strategic intent is maintained in many disciplines, such as game theory, economics, and political science.

In this paper, we argue that individuals act, at least partially, in their own self-interest when answering survey questions. We define a strategic survey response as one in which a person indicates a preference or intention different from their actual preference or intention so as to benefit themselves in some way. Seeking to bridge the gap in knowledge regarding the role of strategic responses in purchase intention and WTP measures, we develop a conceptual framework from which we derive testable hypotheses about strategic responses to purchase intention questions. We then conduct two experiments to test the hypotheses. By identifying the extent to which strategic behavior affects responses to purchase intention questions, we hope to reduce some of the uncertainty in forecasting new product success.

Background and conceptual model

Systematic error in purchase intentions

Despite the pervasive view that stated intentions are perhaps the best predictors of actual behavior (e.g., Fishbein and Ajzen, 1975), it has long been recognized that answers to stated intention questions are not perfectly correlated with actual purchases (see Morwitz, 1997, 2001). As a result, a variety of calibration techniques have been proposed to adjust stated purchase intentions to better reflect actual purchasing behavior (e.g., Bemmaor, 1995; Jamieson and Bass, 1989; Morrison, 1979; Urban et al., 1983). Such empirically based calibrations could be improved by a more thorough, theory-based understanding of strategic responses to survey questions.

A variety of explanations for the discrepancy between stated and actual behavior have been put forth. Such explanations have focused on changes in preferences between the survey and the purchase decision, perhaps caused by the survey itself (e.g., Chandon et al., 2005), the inability to predict future preferences (e.g., Loewenstein and Adler, 1995), the effect of information acquisition (Manski, 1990), and a variety of other biases in measuring and reporting intentions (e.g., Fisher, 1993; Greenleaf, 1992; Mittal and Kamakura, 2001).

Many of the above explanations are based on the notion that systematic error is introduced when measuring purchase intentions. In this study, we investigate a systematic error in measured purchase intentions that we posit arises from strategic responses. In fact, many biases, such as social desirability bias (see Fisher, 1993), can be viewed as specific forms of strategic response bias. Untruthful answers could result from anticipated positive or negative reciprocity from the surveyor that would benefit the interviewee. Social desirability bias need not result from a desire to please the interviewer per se, but from the benefits that potentially accrue to the interviewee as a result of a happier interviewer.

Despite the possibility that strategic responses could introduce systematic bias in purchase intentions, the concept has not been systematically investigated. In fact, when summarizing the extant literature, Morwitz (1997) identifies three factors that affect how accurately respondents represent their intentions, none of which include strategic response bias. More recently, Sun and Morwitz (2005) proposed a “unified model” to predict purchase behavior from stated intentions, and while they discuss systematic biases in intentions, their model did not incorporate strategic survey responses.

Model of strategic survey responses

Carson et al. (2001) argue that people have an incentive to strategically overstate their preferences for goods in hypothetical questioning so as to increase the likelihood that the goods will ultimately be offered. Because the actual purchase decision need not be made until a later date, and because indicating a high purchase intent in a hypothetical survey is costless, an individual has an incentive to state a high purchase intent in case they want the goods at a future date, regardless of whether they want the goods at the time of questioning. In contrast, others have argued that people have an incentive to understate their true preferences in hypothetical purchase intention questions. For example, Monroe (1990) argued that individuals have a strategic incentive to understate their true preferences in hypothetical scenarios because “consumers feel that it is in their best interest to keep prices down.” Similarly, Gibson (2001) argued that repeated questioning about the desirability of products “reveals the purpose of the study to respondents” and “makes the respondents self-conscious, resulting in a systematic undervaluation of price.”

As outlined, different views exist regarding the incentives consumers have when responding to a hypothetical purchase intention and WTP question. Wertenbroch and Skiera (2002) succinctly summarize the situation as follows (p. 230), “If subjects believe that their responses will be used to set long-term prices, they have an incentive to understate their WTP. If they believe that their responses will determine the introduction of a desirable new product, they may perceive reasons to overstate their WTP.” These ideas are formalized in Fig. 1, which presents a model of consumer behavior when responding to hypothetical stated intention questions.

When confronted with a hypothetical purchase intention question, an individual must assess the extent to which their response will ultimately influence (a) the price charged for the product and (b) whether the goods will ultimately be offered for sale. Individuals who perceive their response to have a substantial influence on product price but little influence on product offering have an incentive to understate their true purchase intentions. Because such individuals believe their response will primarily influence the future price of the product, and not whether the product is offered, understating their true preferences sends a “signal” to marketers to set a low price, which of course benefits the respondent. By contrast, an individual who believes his or her response will have very little influence on price but a large influence on whether the product is offered has an incentive to overstate their actual purchase intentions. Because such a person believes they have little influence on the ultimate price and because their response is purely hypothetical, the overstatement comes at no cost but potentially yields a future reward. Individuals who believe their influence is high in both domains, or low in both, must balance two competing considerations: a higher future price and the likelihood that the product will never be offered in the marketplace.
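The four cells of the model can be summarized as a simple lookup from perceived influence to the predicted direction of misstatement. The Python sketch below is illustrative only: the function name, the `Bias` labels, and the reduction of each perceived-influence dimension to a high/low boolean are our assumptions, not part of the original model in Fig. 1.

```python
from enum import Enum


class Bias(Enum):
    """Direction of strategic misstatement predicted by the two-dimensional model."""
    UNDERSTATE = "understate"  # signal marketers to set a low price
    OVERSTATE = "overstate"    # raise the chance the product is offered
    AMBIGUOUS = "ambiguous"    # competing incentives must be balanced


def predicted_bias(high_price_influence: bool, high_offering_influence: bool) -> Bias:
    """Map perceived influence on price and on offering to the predicted bias.

    Hypothetical helper: each boolean stands in for a high/low split of one
    perceived-influence dimension.
    """
    if high_price_influence and not high_offering_influence:
        return Bias.UNDERSTATE
    if high_offering_influence and not high_price_influence:
        return Bias.OVERSTATE
    # High on both or low on both: the two incentives pull in opposite directions.
    return Bias.AMBIGUOUS
```

For example, `predicted_bias(True, False)` returns `Bias.UNDERSTATE`, the cell that H1 addresses.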

Although it is difficult, if not impossible, to measure an individual's true purchase intention, the model in Fig. 1 lends itself to the development of two testable hypotheses.

- H1: Individuals with a high perceived influence on product price will state a lower purchase-intent/WTP than individuals with a low perceived influence on product price, ceteris paribus.

- H2: Individuals with a high perceived influence on product offering will state a higher purchase-intent/WTP than individuals with a low perceived influence on product offering, ceteris paribus.

Although H1 and H2 are general in the sense that they are posited to hold for any private good, there may be moderating factors that affect the extent to which individuals respond strategically. One such factor is product involvement (i.e., the level of interest a consumer finds in a product class), which has been found to influence choice behavior, usage frequency, the extensiveness of decision-making processes, and responses to persuasive messages, among other outcomes (e.g., Laurent and Kapferer, 1985; Mittal and Lee, 1989; Sherif and Cantril, 1947; Zaichkowsky, 1985, 1994). Of relevance to this study, the incentives for strategic behavior should be stronger for higher involvement goods: an individual has little incentive to attempt to influence outcomes for goods they care nothing about. This leads to Hypothesis 3:

- H3: Individuals with higher levels of involvement will exhibit more strategic behavior as compared with individuals with low involvement levels.

There are a variety of other factors that may also moderate the relationship between price/offering perceptions and strategic responses; one additional factor we consider in this study is honesty. Scales have been developed to measure integrity and personality-based honesty, and such scales have been shown to predict employee theft, among other outcomes (e.g., Bernardin and Cooke, 1993). Ones et al. (1993) demonstrated that honesty appears to be a stable factor across different situations, implying that honesty may relate not just to theft but also to survey responses. In this regard, an honest individual is unlikely to give untruthful answers and as such is unlikely to respond strategically. This observation leads to the fourth hypothesis.

- H4: More honest people will exhibit less strategic behavior than dishonest people.

Study 1

Procedures

Study 1 was designed to test the first two hypotheses. One hundred twenty-eight undergraduate students at Oklahoma State University participated in the study. The study used a 2 (price influence: low vs. high) × 2 (offering influence: low vs. high) between-subjects design less one treatment: the price:high/offering:high treatment. This design permits a separate test of the effect of changing price influence from low to high while holding offering influence constant at low (H1) and a test of the effect of changing offering influence from low to high while holding price influence constant at low (H2).

In all treatments, participants were asked to indicate the highest amount of money they would be willing to pay for a bottle of Jones Soda, a specialty soda that comes in many flavors, at a booth that would (or might) be set up outside the classroom 1 week after the experiment. Jones Soda was used because most participants were somewhat familiar with it but had limited opportunities to purchase it outside the experiment: it was not sold in any local grocery store and was available in only one local restaurant (about 24% of the 128 participants indicated that they had previously bought Jones Soda). In the price:low/offering:low treatment, participants were told, “I am going to sell Jones Soda at a booth set up outside this classroom prior to your next class meeting on Thursday at a price of $1.50 per bottle.” In the price:high/offering:low treatment, participants were told, “I am going to sell Jones Soda at a booth set up outside this classroom prior to your next class meeting on Thursday. I am debating what price to charge for the Jones Soda. In a moment you will answer a question indicating how much you are willing to pay for Jones Soda. After you complete the surveys, I will collect them, look at your responses, and decide on the price I will charge for the sodas at the booth that will be set up on Thursday.” In the price:low/offering:high treatment, participants were told, “I am debating whether to sell Jones Soda at a booth set up outside this classroom prior to your next class meeting on Thursday. In a moment you will answer a question indicating how much you are willing to pay for Jones Soda. After you complete the surveys, I will collect them, look at your responses, and decide whether or not to make Jones Soda available for sale after class at a booth set up outside this classroom. If the decision is made to sell the sodas, they will be offered for sale at $1.50 per bottle.”

After being provided a written version of the above statements and completing the WTP question, participants were asked to indicate the extent to which they agreed (1 = strongly disagree; 7 = strongly agree) with the statements: (1) The answer I gave on the previous page will influence the price charged for Jones Soda; and (2) The answer I gave on the previous page will influence whether Jones Soda is available for sale after class on Thursday.

Results

Mean WTP in the price:high/offering:low treatment was significantly lower than mean WTP in the price:low/offering:low treatment, a finding that supports H1 (M=$1.14 vs. $1.27; t-statistic = 1.82; p<0.10; Wilcoxon rank-sum statistic = 1525.5; p<0.02). However, the data do not support H2. Mean WTP in the price:low/offering:high treatment was $1.20/bottle, which is not significantly different from the mean WTP of $1.27 in the price:low/offering:low treatment (t-statistic = 0.65; p>0.10; Wilcoxon rank-sum statistic = 1745; p>0.10).
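Each comparison in this section is reported with both a parametric t-statistic and a nonparametric Wilcoxon rank-sum statistic. The standard-library Python sketch below shows how both can be computed for two treatment groups of stated WTP. Which t-test variant the authors used is not stated, so the pooled-variance (Student) form here is an assumption, as are reporting the rank sum for the first sample and using a tie-corrected normal approximation for its z-score.

```python
import math
from statistics import mean, variance


def pooled_t(x, y):
    """Two-sample Student t statistic with pooled variance (n1 + n2 - 2 df)."""
    n1, n2 = len(x), len(y)
    sp2 = ((n1 - 1) * variance(x) + (n2 - 1) * variance(y)) / (n1 + n2 - 2)
    return (mean(x) - mean(y)) / math.sqrt(sp2 * (1 / n1 + 1 / n2))


def average_ranks(values):
    """Ranks 1..N, with tied values sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def rank_sum(x, y):
    """Wilcoxon rank-sum W for sample x and its tie-corrected normal z-score."""
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    n1, n2 = len(x), len(y)
    n = n1 + n2
    w = sum(ranks[:n1])
    expected = n1 * (n + 1) / 2
    # Tie correction: sum of t^3 - t over each group of t tied values.
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    ties = sum(t ** 3 - t for t in counts.values())
    var = n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1)))
    return w, (w - expected) / math.sqrt(var)
```

p-values would then come from the t distribution with n1 + n2 − 2 degrees of freedom and from the standard normal distribution, respectively.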

As a manipulation check, it is also useful to investigate how the statements of perceived influence on price and offering varied across treatments. As expected, participants stated a significantly higher level of perceived influence on offering in the price:low/offering:high treatment than in the price:low/offering:low treatment (M=5.07 vs. 4.05; t-statistic = 9.36; p<0.01; Wilcoxon rank-sum statistic = 2201.5; p<0.01). Participants also stated a higher level of perceived influence on price in the price:high/offering:low treatment than in the price:low/offering:low treatment, but the difference is not statistically significant (M=4.08 vs. 3.85; t-statistic = 0.31; p>0.10; Wilcoxon rank-sum statistic = 1807; p>0.10). To explore the issue further, we created, for each individual, a variable representing the difference between perceived influence on price and on offering (i.e., difference = perceived price influence rating − perceived offering influence rating). Consistent with expectations, the mean difference was 0.025, −0.707, and −0.02 in the price:high/offering:low, price:low/offering:high, and price:low/offering:low treatments, respectively. Results of the parametric ANOVA and nonparametric Kruskal–Wallis tests reject the hypothesis that the price/offering influence difference is the same across all three treatments [F(2, 125) = 2.30, p<0.10; χ2(2) = 6.45, p<0.04].
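The manipulation check above compares a per-respondent difference score (price rating minus offering rating) across the three treatments with a one-way ANOVA and a Kruskal–Wallis test. A stdlib-only Python sketch of both statistics follows; the tie-corrected H is the textbook form and is an assumption about what the authors' software computed. With 128 participants in three treatments, the ANOVA degrees of freedom are (3 − 1, 128 − 3) = (2, 125), matching the reported F(2, 125).

```python
from statistics import mean


def one_way_anova_f(groups):
    """One-way ANOVA F statistic and its (between, within) degrees of freedom."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand = mean([v for g in groups for v in g])
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    df_b, df_w = k - 1, n - k
    return (ss_between / df_b) / (ss_within / df_w), (df_b, df_w)


def kruskal_wallis_h(groups):
    """Tie-corrected Kruskal-Wallis H; approximately chi-square with k - 1 df."""
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    # Map each distinct value to the average of the 1-based positions it occupies.
    sorted_vals = sorted(pooled)
    rank_of = {}
    i = 0
    while i < n:
        j = i
        while j + 1 < n and sorted_vals[j + 1] == sorted_vals[i]:
            j += 1
        rank_of[sorted_vals[i]] = (i + j) / 2 + 1
        i = j + 1
    h = 12 / (n * (n + 1)) * sum(
        sum(rank_of[v] for v in g) ** 2 / len(g) for g in groups
    ) - 3 * (n + 1)
    ties = sum(t ** 3 - t for t in (pooled.count(v) for v in set(pooled)))
    return h / (1 - ties / (n ** 3 - n))
```

Each element of `groups` would hold the difference scores for one treatment.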

In addition to using the perceived price and offering statements as a manipulation check, it is prudent to investigate whether the measures themselves are related to stated WTP. Combining data across all three treatments, we find that the Pearson correlation coefficient between the price/offering influence difference and stated WTP is −0.19, which is statistically different from zero at the p<0.04 level. A similar result is found for the Spearman rank correlation, which is −0.26 and significantly different from zero at the p<0.01 level. Thus, consistent with H1 and H2, these correlation coefficients indicate that as perceived price influence increases relative to perceived offering influence, stated WTP falls.
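A minimal Python sketch of the two correlations, computed between the per-respondent price/offering influence difference and stated WTP. Spearman's coefficient is taken here as the Pearson correlation of average ranks, which handles tied Likert-type responses, and `t_for_r` is the usual t test of zero correlation with n − 2 degrees of freedom. The function names are ours.

```python
import math
from statistics import mean


def pearson(x, y):
    """Pearson product-moment correlation."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def average_ranks(values):
    """Ranks 1..N; tied values get the mean of the first and last positions they occupy."""
    first, last = {}, {}
    for pos, v in enumerate(sorted(values), start=1):
        first.setdefault(v, pos)
        last[v] = pos
    return [(first[v] + last[v]) / 2 for v in values]


def spearman(x, y):
    """Spearman rank correlation as the Pearson correlation of average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def t_for_r(r, n):
    """t statistic with n - 2 df for testing H0: population correlation = 0."""
    return r * math.sqrt((n - 2) / (1 - r ** 2))
```

For example, taking n = 128 (all three Study 1 treatments combined), `t_for_r(-0.19, 128)` is about −2.17 on 126 degrees of freedom, in line with the reported p<0.04.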

Study 2

Procedures

The aim of study 2 was to perform a joint test of H1 and H2 and to investigate H3 and H4. Specifically, in study 2, the factors of price and offering were simultaneously manipulated in a way that, as shown in Fig. 1, provides the “best shot” at identifying whether strategic responses exist. As shown in study 1, the effect of price perceptions on stated WTP need not be symmetric to the effect of offering perceptions on stated WTP; however, for study 2 it suffices to manipulate price and offering perceptions in a way that makes the direction of strategic bias clear. To this end, study 2 used a 2 (good: parking pass vs. t-shirt) × 2 (price/offering perception: price:high/offering:low vs. price:low/offering:high) between-subjects design with 290 undergraduate students from Purdue University.

The choice of the two goods was driven by a desire to select goods that differed primarily in their level of involvement, although the two goods likely differ on several other dimensions as well. The parking pass refers to a pass for a new parking garage being built on campus; it was chosen because we anticipated that students would be very interested in and involved with it and would believe they had some influence over the manner in which it was introduced. The second good was a new naturally colored cotton t-shirt, produced without dyes, to be sold by a local bookstore. Relative to the parking pass, students' level of product involvement was anticipated to be lower for t-shirts.

For the parking pass, subjects were told the following in the price:high/offering:low treatment: “Purdue Parking Facilities has decided to build a new student-only parking garage near campus. However, they have not yet determined the price to charge for the parking passes. Currently, there is a debate over the price to be charged for the passes. Some people believe the passes should be priced relatively cheap to benefit the students, while others believe passes should be more expensive and cover the full cost of construction. For this reason, Parking Facilities has asked us to help them in determining a price to charge for parking passes to the new garage. Please answer the next two questions about your preferences for the new parking garage.”

For the parking pass, subjects were told the following in the price:low/offering:high treatment: “Purdue Parking Facilities is considering whether or not to build a new student-only parking garage near campus. Currently, there is a debate over whether or not the garage should be built. Some people think the garage should be built to ease the parking burden on students, while others believe the garage should not be built because it would be too expensive and the money would be better spent elsewhere. For this reason, Parking Facilities has asked us to help them in determining whether or not to build the garage. If a new garage is built, prices for parking passes will be the same as they are now. Please answer the next two questions about your preferences for the new parking garage.”

The preambles in the colored cotton t-shirt treatments were similarly worded; in the price:low treatment, the price of t-shirts was stated as $20. Following these introductory statements, individuals were asked to complete two stated purchase intention questions. The first was a standard 5-point purchase intention scale that asked, for example, “How likely are you to purchase a parking pass for this new garage?” Subjects responded on a 5-point scale with 1 being “Definitely Will Buy” and 5 being “Definitely Will Not Buy.” In subsequent data analysis, the scale is reverse coded such that a larger number implies higher purchase intent. The second purchase intention question was a dichotomous choice question: “If these parking passes are sold for $75, will you purchase one?” Subjects responded by circling Yes or No.
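Reverse coding maps each response r on the 1 to 5 scale to 6 − r, so that “Definitely Will Buy” (1) becomes 5. A small Python sketch follows; the helper names are hypothetical, and `share_yes` simply computes the proportion of “Yes” answers to the dichotomous choice question.

```python
def reverse_code(response, scale_min=1, scale_max=5):
    """Reverse a rating so that larger numbers mean higher purchase intent."""
    if not scale_min <= response <= scale_max:
        raise ValueError(f"response {response} outside {scale_min}..{scale_max}")
    return scale_max + scale_min - response


def share_yes(answers):
    """Proportion of 'Yes' answers to the dichotomous choice question."""
    return sum(a == "Yes" for a in answers) / len(answers)
```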

Following the purchase intention questions, individuals completed the 10-item semantic differential product involvement scale used and validated by Zaichkowsky (1994). Participants circled the number (on a scale of one to seven) that best described their feelings about either the parking garage or the t-shirts. The endpoints of the 10 items were: (1) important, unimportant, (2) boring, interesting, (3) relevant, irrelevant, (4) exciting, unexciting, (5) means nothing, means a lot, (6) appealing, unappealing, (7) fascinating, mundane, (8) worthless, valuable, (9) involving, uninvolving, and (10) needed, not needed.
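The 10 items are summed into a single involvement score ranging from 10 to 70. Because the positive pole is listed first on some items and second on others, the items must share a common direction before summing. The Python sketch below assumes, as the paper does not state, that 1 corresponds to the first-listed endpoint; under that assumption the seven items with the positive pole listed first are reversed (8 − r). The `POSITIVE_POLE_FIRST` flags are our reading of the item list, not a published scoring key.

```python
# Assumed orientation: True where the first-listed (high-involvement) pole sits
# at 1, so the item is reversed for a higher score to mean higher involvement.
# Order follows items (1)-(10) as listed in the text.
POSITIVE_POLE_FIRST = [True, False, True, True, False, True, True, False, True, True]


def involvement_score(responses):
    """Summated 10-item involvement score (10 = lowest, 70 = highest)."""
    if len(responses) != len(POSITIVE_POLE_FIRST):
        raise ValueError("expected 10 item responses")
    total = 0
    for r, reverse in zip(responses, POSITIVE_POLE_FIRST):
        if not 1 <= r <= 7:
            raise ValueError(f"response {r} outside 1..7")
        total += (8 - r) if reverse else r
    return total
```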

Participants were then asked a series of questions regarding their perceived influence on product price and offering. The four questions relating to perceived influence on offering (for the parking garage) were: (1) My opinions will be taken into consideration when deciding whether a new student-only parking garage is eventually built at Purdue; (2) By stating that I find the new parking garage more or less desirable, I can have an effect on whether Purdue will build a new student-only garage; (3) My opinions will not matter when Purdue chooses whether to build a new student-only parking garage; and (4) Overall, I believe my opinions will be taken into consideration by Purdue Parking Facilities when they decide whether to build a new parking garage. The four questions relating to perceived influence on price (for the parking garage) were: (1) By stating that I find the new parking garage more or less desirable, I can have an effect on the final price charged for parking passes; (2) My opinions will be taken into consideration when deciding the price that Parking Facilities will charge for passes to the new garage, (3) Overall, I believe my opinions will be taken into consideration by Purdue when they decide what price to charge for parking passes to the new garage; and (4) My opinions will not matter when Parking Facilities decides what price to charge for passes to the new garage. These eight questions were intermingled with one another on the survey and individuals were asked to respond on a 7-point Likert scale (1 = strongly disagree; 7 = strongly agree).
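Offering item (3) and price item (4) are negatively worded (“will not matter”). The paper later refers to summed 4-item measures without describing how these items were scored, so the Python sketch below assumes they were reverse-coded (8 − r) before summing; each summed measure then ranges from 4 to 28, and their difference from −24 to 24. All names are hypothetical, and items are assumed to be stored in the order listed above.

```python
# 0-based positions of the negatively worded items (assumed to be reverse-coded).
OFFERING_NEGATIVE = {2}  # offering item (3)
PRICE_NEGATIVE = {3}     # price item (4)


def summed_scale(responses, negative_items, scale_max=7):
    """Sum 1..scale_max Likert items after reverse-coding negatively worded ones."""
    return sum(
        (scale_max + 1 - r) if i in negative_items else r
        for i, r in enumerate(responses)
    )


def influence_gap(price_items, offering_items):
    """Summed price influence minus summed offering influence (range -24..24)."""
    return summed_scale(price_items, PRICE_NEGATIVE) - summed_scale(
        offering_items, OFFERING_NEGATIVE
    )
```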

Finally, subjects were asked to respond to several statements relating to honesty. Two items were drawn from the Marlowe–Crowne Social-Desirability Scale Personal Reaction Inventory (Crowne and Marlowe, 1964), and four were developed for this study. The items were: (1) I consider myself to be an honest person, (2) I do not lie, (3) It is OK to use a fake I.D. to get into a bar, (4) I would tell a lie about myself to impress a date, (5) I would embellish facts on my resume, and (6) I can remember “playing sick” to get out of doing something I didn't want to do. These questions were intermingled with several unrelated items. Individuals again responded on a 7-point Likert scale (1 = strongly disagree; 7 = strongly agree).

Results

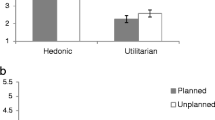

Based on H1 and H2, we would expect stated purchase intentions to be higher in the price:low/offering:high treatments than in the price:high/offering:low treatments. Data from the parking passes are consistent with these hypotheses. Parking pass purchase intentions were significantly higher in the price:low/offering:high treatment than in the price:high/offering:low treatment (M=3.11 vs. 2.53; t-statistic = 7.34, p<0.01; Wilcoxon rank-sum statistic = 5119, p<0.01). Regarding the dichotomous choice purchase intention question, the share of individuals who said “yes” they would purchase a parking pass was almost 16 percentage points higher when they were told that they had an influence over whether the new parking garage was built than when they were told that the price was uncertain and that the garage was being built, a difference that is statistically significant (M=45.6% vs. 29.7%; Chi-square test of independence χ2(1) = 4.07, p<0.04; Fisher's exact test, p<0.05). However, the same findings do not hold for the t-shirt treatments. For t-shirts, purchase intentions were not significantly different in the price:low/offering:high and the price:high/offering:low treatments (M=3.00 vs. 3.11; t-statistic = 0.68, p>0.10; Wilcoxon rank-sum statistic = 4429.5, p>0.10). Further, there was no significant difference in the percentage of people saying “yes” they would purchase a t-shirt at $20 in the price:low/offering:high and the price:high/offering:low treatments (M=22.1% vs. 19.1%; Chi-square test of independence χ2(1)=0.18, p>0.10; Fisher's exact test, p>0.10).
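The dichotomous choice comparisons rest on a 2 × 2 table of treatment (rows) by Yes/No answer (columns). Cell counts are not reported, so the Python sketch below only shows the computations; the uncorrected Pearson chi-square and the common two-sided Fisher rule (summing the probabilities of all tables with the same margins that are no more likely than the observed one) are assumptions about the exact variants used.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    return n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact p-value for [[a, b], [c, d]] with fixed margins."""
    row1, col1, n = a + b, a + c, a + b + c + d
    denom = math.comb(n, col1)

    def prob(x):
        # Hypergeometric probability that the top-left cell equals x.
        return math.comb(row1, x) * math.comb(n - row1, col1 - x) / denom

    observed = prob(a)
    lo, hi = max(0, col1 - (n - row1)), min(row1, col1)
    # Small relative tolerance so tables tied with the observed one are included.
    return sum(p for p in (prob(x) for x in range(lo, hi + 1))
               if p <= observed * (1 + 1e-7))
```

Here `a` and `b` would be the Yes and No counts in one treatment, and `c` and `d` those in the other.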

Taken together, these findings support the joint hypothesis of H1 and H2 for the parking pass data but not for the t-shirt data. One reason for this difference may be differences in the level of involvement across the goods. The mean summated score on the 10-item product involvement scale was 44.23 for parking passes and 36.94 for t-shirts (the α coefficient of internal reliability exceeded 0.90 for both goods). Both a t-test and a Wilcoxon rank-sum test strongly reject the hypothesis that mean involvement levels were the same across goods (p<0.01). Thus, individuals were more involved with the parking passes than with the t-shirts. This result provides indirect evidence for H3; however, parking passes and t-shirts likely differ on a variety of other dimensions in addition to product involvement.

To delve into this issue further, for each good we estimated an ordinary least squares regression in which purchase intent was regressed on a dummy variable identifying the price:high/offering:low treatment (relative to the price:low/offering:high treatment), the involvement and honesty scales, and interaction effects between the treatment dummy variable and the involvement and honesty scales. In this regression framework, the treatment effect depends on (or is moderated by) individuals' levels of involvement and honesty. Regression results for each good and for a joint model combining data from both goods are presented in Table 1. Results indicate that higher levels of involvement are associated with higher purchase intent. Of further interest are the interaction effects between the price:high/offering:low treatment dummy variable and the involvement and honesty scales. Results indicate, most notably for the joint model, that the price:high/offering:low treatment effect becomes more pronounced in the directions predicted by H3 and H4: the effect becomes more negative the more involved and the less honest the participant.
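Our reading of the single-good specification can be written as follows. The symbol names are ours, and the joint model may include additional terms (for example, an indicator for the good) that the text does not detail. Here $PI_i$ is reverse-coded purchase intent, $D_i = 1$ for the price:high/offering:low treatment and 0 for price:low/offering:high, and $INV_i$ and $HON_i$ are the involvement and honesty scales.

```latex
PI_i = \beta_0 + \beta_1 D_i + \beta_2 INV_i + \beta_3 HON_i
     + \beta_4 (D_i \times INV_i) + \beta_5 (D_i \times HON_i) + \varepsilon_i

\frac{\partial PI_i}{\partial D_i} = \beta_1 + \beta_4 INV_i + \beta_5 HON_i
```

Under this reading, and assuming a higher $HON_i$ means more honest, H3 corresponds to $\beta_4 < 0$ and H4 to $\beta_5 > 0$.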

Table 2 uses the regression coefficients reported in Table 1 to calculate the price:high/offering:low treatment effect at various levels of involvement and honesty. As shown in Table 2, at the mean levels of involvement and honesty, the difference in purchase intent between the two treatments in the joint model is −0.08, which implies that individuals stated lower purchase intent in the price:high/offering:low treatment than in the price:low/offering:high treatment, a finding consistent with H1 and H2. However, in this regression context, the treatment effect is not statistically significant for any of the three models at the mean levels of involvement and honesty. By contrast, in the joint model and in the parking garage model, the price:high/offering:low treatment effect is statistically significant in the direction predicted by the theoretical model for high involvement individuals. This finding supports H3. Consistent with H4, Table 2 also shows that the price:high/offering:low treatment effect is statistically significant for individuals who scored low on the honesty scale (i.e., relatively dishonest individuals) in the t-shirt and joint models.
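Evaluating the treatment effect at chosen levels of involvement and honesty is a linear combination of regression coefficients, so its standard error follows from the coefficient covariance matrix. The Python sketch below assumes the effect takes the form b_D + b_DxINV · INV + b_DxHON · HON (our reading of the model, not the authors' code) and takes the corresponding 3 × 3 block of the OLS covariance matrix as input. The evaluation points used in Table 2 are not reproduced here; any numbers passed in are placeholders.

```python
import math


def treatment_effect(b_d, b_d_inv, b_d_hon, vcov, inv, hon):
    """Treatment effect at (inv, hon), its standard error, and the z/t ratio.

    vcov is the 3 x 3 covariance matrix of (b_d, b_d_inv, b_d_hon), in that
    order, e.g. the matching block of the OLS coefficient covariance matrix.
    """
    w = (1.0, inv, hon)  # weights of the linear combination
    b = (b_d, b_d_inv, b_d_hon)
    effect = sum(wi * bi for wi, bi in zip(w, b))
    # Var(w'b) = w' V w
    var = sum(w[i] * w[j] * vcov[i][j] for i in range(3) for j in range(3))
    se = math.sqrt(var)
    return effect, se, effect / se
```

Including the off-diagonal covariances matters: the interaction coefficients are typically correlated with the main treatment coefficient, so summing only the variances would misstate the standard error.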

While the point estimates related to the effects of involvement and honesty on the price:high/offering:low treatment effect are all in the expected direction, it is important to note that these effects are not always statistically significant in the individual-good regressions. This may be due to the low internal reliability of the honesty scale (Cronbach's alpha = 0.64) or because truly dishonest individuals may answer survey questions about honesty as if they were honest. In addition, the relatively low variability in responses to the honesty scale (a coefficient of variation of only 18%) and the low level of involvement in the t-shirt treatment may make it difficult to discern treatment effects given the sample size used in this study.
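Cronbach's alpha and the coefficient of variation quoted above follow standard formulas: α = k/(k − 1) · (1 − sum of item variances / variance of the summed score), and CV = standard deviation / mean. A Python sketch using only the standard library follows; it assumes negatively worded honesty items (for example, item (3) on fake I.D.s) have already been reverse-coded, a step the paper does not describe.

```python
from statistics import mean, stdev, variance


def cronbach_alpha(items):
    """Cronbach's alpha.

    items: one list of responses per item, with respondents in the same order.
    """
    k = len(items)
    totals = [sum(resp) for resp in zip(*items)]  # summed score per respondent
    item_var = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_var / variance(totals))


def coefficient_of_variation(scores):
    """Sample standard deviation divided by the mean."""
    return stdev(scores) / mean(scores)
```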

Finally, we investigate whether individuals' stated levels of perceived influence on offering and price were affected by the experimental manipulation. Again, we focus on the difference between perceived price and offering influence. The difference between the summed 4-item measure of price influence and the summed 4-item measure of offering influence was not significantly different in the price:high/offering:low and price:low/offering:high treatments (M=−0.85 vs. 0.48; t-statistic = 0.62; p>0.10; Wilcoxon rank-sum statistic = 20,874; p>0.10). It is curious that the manipulation check was as expected in study 1 but not in study 2. One possible reason for the discrepancy is that in study 1 it was fairly straightforward for participants to see that the experimenter had direct control over the price and availability of the product, whereas in study 2 price and availability were ultimately controlled by another party (i.e., Parking Facilities or the bookstore).

Conclusions

The ability to predict consumer behavior is important for a variety of academic disciplines, including marketing, psychology, and economics. There is considerable evidence that inconsistencies often exist between what people say they will do and what they actually do. In this paper, we offer one explanation for this inconsistency: individuals answer purchase intention questions strategically to benefit themselves by altering future outcomes.

Consumers are conceptualized as thinking along two strategic dimensions when asked hypothetical purchase intention questions: (a) whether their survey response will influence the future price of the new product and (b) whether their survey response will influence whether the new product will actually be offered. Our empirical analyses indicate that strategic behavior exists for some goods and some individuals. Study 1 showed that manipulating people's influence on a product's price had a larger effect on stated WTP than manipulating their influence on product availability. Study 2 showed that individuals with a high influence on product price and a low influence on product offering stated lower purchase intentions than people with a low perceived influence on price and a high influence on offering. Study 2 also showed that the degree of bias caused by strategic responses depends on the nature of the good (a parking pass versus a t-shirt) and on individual characteristics such as product involvement and honesty.

Given that our results support the notion that people, in some cases, respond strategically to purchase intention questions, the question is what should be done. One possibility is to use preference elicitation devices that create incentives for truthful preference revelation. For example, Hoffman et al. (1993) proposed using incentive-compatible experimental auctions to determine individuals' preferences for new products (see also Lusk and Shogren, in press). Another option is to use nonhypothetical choices as in Ding et al. (2005) or Lusk and Schroeder (2004), which also provide incentives for truthful preference revelation. In addition to these approaches, there may be more direct and less intrusive ways of removing strategic biases in purchase intentions. For example, Cummings and Taylor (1999) used a “cheap talk script,” which describes the potential for strategic responses and asks subjects to avoid strategic responses when answering stated intention questions.

Another possibility for dealing with strategic behavior is to investigate its individual determinants. A variety of variables might influence individuals' propensity to respond strategically, including the extent to which an individual is a strategic thinker and whether the individual places importance on the virtue of altruism. Strategic thinkers and those who place less importance on altruism would be expected to state purchase intentions that deviate most from their true intentions. Measuring these constructs, in addition to others such as involvement and honesty, might allow researchers to net out the effect of strategic responses on purchase intentions.
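One way such measures might be used, sketched here under strong assumptions, is a calibration step: in a sample where both stated intentions and actual purchases are observed, regress the stated-minus-actual gap on measured traits (here, hypothetical involvement and honesty scores), then subtract the predicted gap from stated intentions in a new sample. The function names, the linear form, and the simulated coefficients are all hypothetical and carry no empirical meaning; the sketch uses ordinary least squares via `numpy.linalg.lstsq`.

```python
import numpy as np


def fit_gap_model(stated, actual, covariates):
    """Fit OLS of the stated-minus-actual gap on covariates (plus an intercept).

    Assumes a calibration sample in which actual behavior is observed.
    Returns [intercept, coef_1, ..., coef_k].
    """
    stated = np.asarray(stated, dtype=float)
    X = np.column_stack([np.ones(len(stated)), covariates])
    gap = stated - np.asarray(actual, dtype=float)
    coef, *_ = np.linalg.lstsq(X, gap, rcond=None)
    return coef


def adjust_stated(stated, covariates, coef):
    """Subtract the gap predicted from respondent traits from stated intentions."""
    stated = np.asarray(stated, dtype=float)
    X = np.column_stack([np.ones(len(stated)), covariates])
    return stated - X @ coef


if __name__ == "__main__":
    # Simulated calibration data on 1-7 scales; every number here is invented.
    rng = np.random.default_rng(0)
    n = 200
    involvement = rng.uniform(1, 7, n)
    honesty = rng.uniform(1, 7, n)
    actual = rng.uniform(1, 7, n)
    traits = np.column_stack([involvement, honesty])
    # Hypothetical noise-free gap that depends linearly on the two traits.
    stated = actual + (-1.0 + 0.05 * involvement + 0.12 * honesty)

    coef = fit_gap_model(stated, actual, traits)
    print("estimated gap coefficients:", np.round(coef, 3))
    adjusted = adjust_stated(stated, traits, coef)
    print("max abs error after adjustment:", np.abs(adjusted - actual).max())
```

With noise-free simulated data the fit recovers the invented coefficients up to floating-point error; with real data the adjustment would be only as good as the calibration sample and the assumed linear form.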

On a final note, it is important to recognize that this study represents an initial investigation into the issue of strategic responses to survey questions; many more issues merit investigation. In particular, it would be beneficial to further develop scales that measure individuals' perceived influence on product price and offering, to determine whether the experimental manipulations are having the desired effect. Further, rather than manipulating the dimensions of price and offering through the preamble to the purchase intention question, it would be interesting to study natural variation in these constructs in the marketplace. There are many situations in survey research in which one of these dimensions, price or offering, is likely to be more salient in the mind of a consumer. For example, if a consumer is asked how likely they are to purchase a new variant of a well-known existing brand (e.g., Diet Cherry Coca-Cola with Lime), they are likely to know they will not influence price (all sodas tend to sell for the same price in vending machines), so product offering becomes the salient issue. By contrast, if a consumer is asked to evaluate a well-known product and brand (e.g., a Whirlpool washing machine), they can be quite confident their response will not influence whether the product continues to be offered, and thus price is likely to be the salient feature. Results of Study 1 suggest strategic responses might be more problematic when people believe their response might influence product price. We are optimistic that further investigations along these lines may help refine the theory of strategic survey responses, resolve some of the unexplained discrepancies between stated intentions and actual behavior, and improve sales forecasts.

References

Bemmaor, A. C. (1995). Predicting behavior from intention-to-buy measures: The parametric case. Journal of Marketing Research, 32, 176–192.

Carson, R., Flores, N. E., & Meade, N. F. (2001). Contingent valuation: Controversies and evidence. Environmental and Resource Economics, 19, 173–210.

Chandon, P., Morwitz, V. G., & Reinartz, W. J. (2005). Do intentions really predict behavior? Self-generated validity effects in survey research. Journal of Marketing, 69, 1–14.

Crowne, D. P., & Marlowe, D. (1964). The approval motive: Studies in evaluative dependence. New York: Wiley.

Cummings, R. G., & Taylor, L. O. (1999). Unbiased value estimates for environmental goods: A cheap talk design for the contingent valuation method. American Economic Review, 89, 649–665.

Ding, M., Grewal, R., & Liechty, J. (2005). Incentive-aligned conjoint analysis. Journal of Marketing Research, 42, 67–83.

Fishbein, M., & Ajzen, I. (1975). Belief, attitude, intention and behavior. Reading, MA: Addison-Wesley.

Fisher, R. J. (1993). Social desirability bias and the validity of indirect questioning. Journal of Consumer Research, 20, 303–326.

Gibson, L. D. (2001). What's wrong with conjoint analysis? Marketing Research, 16–19.

Greenleaf, E. A. (1992). Improving rating scale measures by detecting and correcting bias components in some response styles. Journal of Marketing Research, 29, 176–189.

Hoffman, E., Menkhaus, D., Chakravarti, D., Field, R., & Whipple, G. (1993). Using laboratory experimental auctions in marketing research: A case study of new packaging for fresh beef. Marketing Science, 12, 318–338.

Jamieson, L., & Bass, F. (1989). Adjusting stated intention measures to predict trial of new products: A comparison of models and methods. Journal of Marketing Research, 26, 336–345.

Jedidi, K., Jagpal, S., & Manchanda, P. (2003). Measuring heterogeneous reservation prices for product bundles. Marketing Science, 22, 107–130.

Laurent, G., & Kapferer, J. N. (1985). Measuring consumer involvement profiles. Journal of Marketing Research, 22, 41–53.

Loewenstein, G., & Adler, D. (1995). A bias in predictions of tastes. The Economic Journal, 105, 929–937.

Lusk, J. L., & Schroeder, T. C. (2004). Are choice experiments incentive compatible? A test with quality differentiated beef steaks. American Journal of Agricultural Economics, 86, 467–482.

Lusk, J. L., & Shogren, J. F. (in press). Experimental auctions: Methods and applications in economic and marketing research. Cambridge, UK: Cambridge University Press.

Manski, C. F. (1990). The use of intentions data to predict behavior: A best-case analysis. Journal of the American Statistical Association, 85, 934–940.

Mittal, V., & Kamakura, W. A. (2001). Satisfaction, repurchase intent, and repurchase behavior: Investigating the moderating effect of customer characteristics. Journal of Marketing Research, 38, 131–142.

Mittal, B., & Lee, M. (1989). A causal model of consumer involvement. Journal of Economic Psychology, 10, 363–389.

Monroe, K. B. (1990). Pricing: Making profitable decisions. New York: McGraw-Hill.

Morrison, D. G. (1979). Purchase intentions and purchase behavior. Journal of Marketing, 43, 65–74.

Morwitz, V. G. (1997). Why consumers don't always accurately predict their own future behavior. Marketing Letters, 8, 57–70.

Morwitz, V. G. (2001). Methods for forecasting from intentions data. In J. S. Armstrong (Ed.), Principles of forecasting: A handbook for researchers and practitioners (pp. 34–56). Norwell, MA: Kluwer Academic.

Ones, D. S., Viswesvaran, C., & Schmidt, F. L. (1993). Meta-analysis of integrity test validities: Findings and implications for personnel selection and theories of job performance. Journal of Applied Psychology, 78, 679–703.

Sherif, M., & Cantril, H. (1947). The psychology of ego involvement. New York: Wiley.

Sun, B., & Morwitz, V. G. (2005). Predicting purchase behavior from stated intentions: A unified model. Working paper, Department of Marketing, Carnegie Mellon University.

Urban, G. L., Hatch, G. E., & Silk, A. J. (1983). The ASSESSOR pre-test market evaluation system. Interfaces, 13, 38–59.

Wertenbroch, K., & Skiera, B. (2002). Measuring consumers' willingness to pay at the point of purchase. Journal of Marketing Research, 39, 228–241.

Zaichkowsky, J. L. (1985). Measuring the involvement construct. Journal of Consumer Research, 12, 341–351.

Zaichkowsky, J. L. (1994). The personal involvement inventory: Reduction, revision, and application to advertising. Journal of Advertising, 23, 59–71.

Cite this article

Lusk, J.L., McLaughlin, L. & Jaeger, S.R. Strategy and response to purchase intention questions. Market Lett 18, 31–44 (2007). https://doi.org/10.1007/s11002-006-9005-7


DOI: https://doi.org/10.1007/s11002-006-9005-7