Abstract

The identification of a universal law that can predict the spatiotemporal structure of any entity at any scale has long been pursued. Thermodynamics has targeted this goal, and the concept of entropy has been widely applied in various disciplines and for various purposes, including landscape ecology. Within this discipline, however, the uses of the entropy concept and its underlying assumptions are varied and are seldom described explicitly. In addition, the link between this concept and thermodynamics is unclear. The aim of this paper is to review the various interpretations and applications of entropy in landscape ecology and to sort them into clearly defined categories. First, a retrospective study of the genesis of the concept, from thermodynamics to landscape ecology, was conducted. Then, 50 landscape ecology papers that use or discuss entropy were surveyed and classified by the keywords, variables and metrics identified as related to entropy. In particular, the thermodynamic component of entropy in landscape ecology and its various interpretations related to landscape structure and dynamics were considered. From the survey results, three major definitions associated with the entropy concept in landscape ecology were identified: spatial heterogeneity, the unpredictability of pattern dynamics and pattern scale dependence. The thermodynamic interpretations of these definitions are based on different theories. The thermodynamic interpretation of spatial heterogeneity is not considered relevant, and the thermodynamic interpretation related to scale dependence is questioned by complexity theory. Only unpredictability can be thermodynamically relevant, provided that appropriate measurements are used to test it.

Introduction

The search for a universal law that applies from physics to the social sciences has challenged scientists since the rise of reductionist theories. In this context, the laws of thermodynamics are expected to explain any process at any scale (Li et al. 2004), and the term entropy is now used in a wide variety of disciplines. In landscape ecology, however, entropy has numerous interpretations and uses: it serves as a descriptor of patterns or of processes, with or without reference to thermodynamics.

In addition, the current thermodynamic interpretations of landscape entropy can be questioned in the light of complexity theory (Li 2000b; Wu and Marceau 2002), while the meaning of entropy is often not discussed, or even mentioned, when the term is used in landscape ecology (Bolliger et al. 2005). The interpretations of entropy in landscape ecology (landscape entropy) can even be contradictory, because entropy is associated with disorder in some interpretations and with its opposite in others. This ambiguity causes confusion when metrics related to entropy are used and interpreted.

Therefore, this review aims to distinguish amongst the various applications of landscape entropy and analyse their consistency. We address four questions: (1) What are the links between landscape entropy and the origins of the concept? (2) How can we quantify landscape entropy? (3) What are the relevant interpretations of entropy? (4) Can thermodynamics predict landscape spatiotemporal structure?

To answer these questions, we first explore the origin of the entropy concept in thermodynamics, explain how a parallel concept developed in information theory, and discuss the link between these two origins. We then describe how these two concepts were adopted in ecology and, through a bibliographic survey, examine how various interpretations evolved in landscape ecology. Finally, we explore the various metrics used to quantify entropy. The discussion questions the validity of the thermodynamic interpretation of landscape entropy according to complexity theory and briefly examines the relevance and limitations of the metrics. We conclude with recommendations for a transparent use of the term.

Origins: thermodynamics

The notion of entropy originates from classical thermodynamics: it was developed by Clausius in 1850 as a system state function (Fig. 1). Entropy was originally used to quantify the degree of irreversibility of a thermodynamic transformation in an isolated system. According to the second law of thermodynamics, a system spontaneously evolves towards thermodynamic equilibrium, which corresponds to its maximal entropy level; hence, the entropy of an isolated system never decreases and increases with every irreversible transformation it undergoes (Benson 1996; Benatti 2003; Harte 2011). As entropy could not be measured directly in the mid-nineteenth century, it was defined by its variation during a theoretically reversible transformation within a closed system, as in Eq. (1):

$$\Delta S = \frac{\Delta Q}{T} \qquad (1)$$

where $\Delta S$ is the entropy variation (J/K), $\Delta Q$ is the heat transfer between the system and its surroundings, and $T$ is the equilibrium temperature (Harte 2011).
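A purely illustrative application of the Clausius relation $\Delta S = \Delta Q / T$, using hypothetical round numbers rather than data from the cited works: if 300 J of heat is transferred reversibly to a system held at 300 K,

$$\Delta S = \frac{\Delta Q}{T} = \frac{300\ \mathrm{J}}{300\ \mathrm{K}} = 1\ \mathrm{J/K},$$

whereas the same 300 J transferred at 600 K would raise the entropy by only 0.5 J/K: a given heat transfer changes entropy less at a higher temperature.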

Fig. 1 Summary of the development of the entropy concept and relevant relationships from its foundations to landscape ecology. The arrows indicate the direction of the evolution. No arrow is drawn from thermodynamics to information theory, since our analysis concluded that there was no relationship. The dashed arrow shows that the thermodynamic interpretation of ecological succession needs to be further investigated; the outcome will influence the relevance of the thermodynamic interpretation of unpredictability and scale influence.

In approximately 1875, Boltzmann formulated a probabilistic interpretation of the second law of thermodynamics using atomic theory (Benson 1996; Harte 2011). He introduced the macrostate and microstate concepts. The former concept describes the general state of a system at a macroscopic level, characterised by state functions (e.g., temperature, pressure, volume). The latter concept takes the configuration of each system element (position and movement of each particle) into account. Boltzmann demonstrated that one given macrostate could correspond to numerous different microstates and stated that, according to the second law of thermodynamics, a system spontaneously evolves to the most probable macrostate, i.e., the state that would result from the largest number of different microstates: the state of maximum entropy (Depondt 2002; Harte 2011). Following this approach, entropy was defined as follows:

$$S = k_B \ln W \qquad (2)$$

where $S$ is the system entropy (J/K), $k_B$ is the Boltzmann constant ($1.38062 \times 10^{-23}$ J/K), and $W$ is the number of different microstates corresponding to a given macrostate.

The most probable macrostate has the most homogeneous (i.e., undifferentiated or uniform) configuration (Benson 1996; Harte 2011). Boltzmann justified the correspondence with Clausius' theory by stating that an isolated system spontaneously loses its structure and becomes a homogeneous mixture of all its molecules (Forman and Godron 1986a; Benson 1996; Harte 2011). This definition explains why entropy is associated with disorder, as opposed to a differentiated structure in which the various elements would be sorted into separate locations instead of being evenly distributed.
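The link between microstate counting and homogeneity can be made concrete with a toy model of our own, not one taken from the cited works: $N$ distinguishable particles, each sitting in either the left or the right half of a box. A macrostate is defined by the number $k$ of particles on the left, and it is realised by $W = \binom{N}{k}$ microstates. The sketch below, assuming this two-compartment model and Boltzmann's relation $S = k_B \ln W$, shows that the evenly mixed macrostate has the largest $W$ and therefore the largest entropy.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact value in the 2019 SI)


def macrostate_multiplicities(n_particles):
    """W for each macrostate k = 'k of the n particles are in the left half'.

    Each particle is independently left or right, so a macrostate with k
    particles on the left is realised by C(n, k) microstates.
    """
    return {k: math.comb(n_particles, k) for k in range(n_particles + 1)}


def boltzmann_entropy(w):
    """S = k_B ln W, in J/K."""
    return K_B * math.log(w)


if __name__ == "__main__":
    ws = macrostate_multiplicities(10)
    most_probable = max(ws, key=ws.get)
    print(most_probable, ws[most_probable])  # 5 252: the evenly mixed macrostate
    print(ws[0], boltzmann_entropy(ws[0]))   # 1 0.0: all particles on one side
```

As $N$ grows, the peak at $k = N/2$ becomes ever sharper relative to the $2^N$ possible microstates, which is the statistical basis for the spontaneous drift towards the homogeneous macrostate.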

Parallel development in information theory

An alternative use of the term entropy was developed in 1948 by Claude Shannon for information theory (Fig. 1). Shannon studied the way information contained in messages such as telegrams was degraded during transmission (Shannon and Weaver 1948; Harte 2011). In this context, entropy (H) is defined as follows:

$$H = -\sum_{i=1}^{n} p_i \log p_i \qquad (3)$$

where $n$ is the number of forms that an elementary message component can assume, indexed by $i$, and $p_i$ is the probability of occurrence of form $i$. $H$ varies from 0 to $\log n$ (Shannon and Weaver 1948).
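A minimal sketch of the Shannon entropy $H = -\sum_i p_i \log p_i$, assuming natural logarithms by default (the base only changes the unit: bits for base 2, nats for base $e$). It illustrates the stated bounds: $H = 0$ when a single form is certain, and $H = \log n$ when all $n$ forms are equally probable.

```python
import math


def shannon_entropy(probabilities, base=math.e):
    """H = -sum(p_i * log(p_i)); zero probabilities contribute nothing (0 log 0 = 0)."""
    total = sum(probabilities)
    if not math.isclose(total, 1.0, rel_tol=1e-9):
        raise ValueError(f"probabilities must sum to 1, got {total}")
    return sum(-p * math.log(p, base) for p in probabilities if p > 0)


if __name__ == "__main__":
    print(shannon_entropy([1.0, 0.0, 0.0, 0.0]))      # 0.0: one certain form
    print(shannon_entropy([0.25] * 4), math.log(4))   # both about 1.386: the maximum, log n
    print(shannon_entropy([0.5, 0.5], base=2))        # 1 bit for two equally likely forms
```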

Here, information is a function of the ratio between the number of possible contents before and after the information is received (Margalef 1958). Entropy represents the missing information, i.e., the amount of information that could be gained by receiving a supplementary message component (Shannon and Weaver 1948; Margalef 1958; Harte 2011). Entropy production represents the information lost during signal transmission (Moran et al. 2010); this metric is used to determine the degree of redundancy required in the message to preserve its meaning unaltered upon reception (Depondt 2002). The more elaborate the emitted signal and the less information contained in the received signal, the higher the entropy production (Moran et al. 2010). Indeed, if the emitted signal is elaborate ($n$ is high and the various $p_i$ are small), a single message component $i$ provides only a small part of the total message content. Negentropy, the negative of entropy, is the information contained in the received message, i.e., the degree of organisation (Margalef 1958; Harte 2011).

A functional connection between thermodynamics and information theory can be drawn. For an ideal gas in which molecules with the same properties are grouped into classes $i$, the number of possible spatial arrangements (the number of microstates) is larger when the various $p_i$ are smaller and more numerous; when all microstates are equally probable, the Boltzmann entropy (Eq. 2) is directly proportional to the Shannon entropy (Eq. 3). Information entropy (entropy as applied in information theory) can therefore be read as a measure of our uncertainty regarding the state of the system (Shannon and Weaver 1948; Benatti 2003; Harte 2011). Stonier (1996) even asserted that energy and information were interconvertible. Shannon himself noticed this similarity (Shannon and Weaver 1948), even though his theory was not derived from Boltzmann's theory (Benatti 2003).
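The proportionality can be made explicit with a short derivation, under the standard assumption that all $W$ microstates compatible with a macrostate are equally probable, so that each has probability $p_i = 1/W$. Substituting into the Shannon formula with natural logarithms gives

$$H = -\sum_{i=1}^{W} \frac{1}{W}\ln\frac{1}{W} = \ln W, \qquad \text{hence} \qquad S = k_B \ln W = k_B H.$$

Under this assumption, the Boltzmann entropy equals the Shannon entropy of the microstate distribution, in nats, scaled by $k_B$; the identity is a formal one and does not by itself establish that the two quantities describe the same physical reality.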

However, the hypothesis of a physical correspondence between thermodynamic and information entropies is questionable for three reasons. First, thermodynamic entropy ($S$) depends on the microstates of a system at the molecular level, a level more strongly related to the law of large numbers (Sanov 1958), whereas in information theory the number of possible message components varies across an entirely different range (Depondt 2002; Benatti 2003; Maroney 2009). Second, despite their shared formalism, information entropy and thermodynamic entropy rest on clearly divergent theoretical assumptions: according to Boltzmann, entropy corresponds to a homogeneous structure, whereas Shannon's entropy corresponds to elaborate (heterogeneous) signals (Ricotta 2000; Depondt 2002; Harte 2011). Third, although the irreversibility of transformations, which is fundamental to entropy variation in classical thermodynamics, can be compared to the irreversible degradation of the received signal, the redundancy of message content that allows the meaning of a message to be reconstructed has no counterpart in thermodynamic entropy (Depondt 2002). In conclusion, there is no confirmation that any thermodynamic interpretation of information theory is relevant. Information entropy is, therefore, merely formally parallel to thermodynamic entropy (Renyi 1961).

Thermodynamics and information theory applied to ecology

Ecologists rapidly applied information entropy to assess biological diversity with the Shannon diversity index (MacArthur 1955; Margalef 1958; Ulanowicz 2001). These metrics later formed the basis for the analysis of landscape heterogeneity (Romme 1982). Diversity is understood as the interaction between the number of species and their relative abundances. It can be expressed as the probability that two individuals sampled at random will not belong to the same species (Pielou 1975). The majority of information entropy-related metrics are derived from the Shannon index (Eq. 3), where $p_i$ represents the relative abundance of individuals of species $i$ in an ecosystem containing $n$ species. Before Stonier (1996), Margalef (1958) had proposed that information theory as applied to ecology and evolution could have a thermodynamic meaning. However, thermodynamic interpretations in ecology do not refer to information theory (Wurtz and Annila 2010; Chakraborty and Li 2011). Pielou (1975) even highlighted the absence of an ecological meaning of information theory. Conversely, authors currently referring to information theory do not generally refer to thermodynamics (Ulanowicz 2001), with a few exceptions, such as Ricotta (2000).

As for the thermodynamic heritage of entropy in ecology (Fig. 1), it is mainly used to describe the evolution of a food web through ecological succession (Wurtz and Annila 2010). Ecosystems, like living organisms, can be described as dissipative structures, i.e., open systems that consume available energy. These are non-equilibrium systems (Li et al. 2004): the possible equilibrium state achieved by the ecosystem is not the thermodynamic equilibrium but, rather, a situation of stability ("metastability", "dynamic equilibrium", "homeostasis" or "stationary state") in the ecosystem structure (Yarrow and Salthe 2008; Parrott 2010; Chakraborty and Li 2011; Ingegnoli 2011). This energy originates more or less directly from the sun (external source) and is transformed by organisms at various trophic levels following non-linear dynamics. This process is associated with an entropy increase outside the system and an entropy decrease inside the system. This phenomenon is called self-organisation, an emergent property of complex systems (Li 2000b; Li et al. 2004; Green and Sadedin 2005; Parrott 2010; Chakraborty and Li 2011). Various studies have examined the roles of these processes in determining biodiversity and resilience, which can be related to entropy in terms of species diversity (Parrott 2010; Chakraborty and Li 2011), but these studies do not explicitly establish such a link. During ecological succession, ecological communities are highly effective at minimising the entropy increase through the food web (Wurtz and Annila 2010; Hartonen and Annila 2012). Note that this use of entropy in ecology, although described with thermodynamic equations, does not provide any quantitative measurement of entropy production or energy fluxes (Maldague 2004), most likely because such measurements would require considerable infrastructure (Ulanowicz 2001). It has even been stated that classical thermodynamics cannot predict the evolution of ecological systems, because these are non-equilibrium systems that follow non-linear dynamics (Li 2000b; Li et al. 2004; Ulanowicz 2004).

Methods

This review consists of a quantitative survey of the uses and interpretations of entropy in landscape ecology, based on a selection of representative papers. The bibliographic search covered journal articles, conference proceedings and books published or in press as of 2012, using a joint search of the ScienceDirect, Scopus and Google Books databases. To select documents applying or discussing entropy concepts in landscape ecology, search filters were applied to the term "entropy" in the full text and to "landscape ecology" in the full text or "landscape" in the source title. As Google Books did not provide these search tools, only books with "landscape ecology" in their subject and "entropy" as well as "land" or "landscape ecology" in the full text were selected. This search returned 297 publications: 215 papers from ScienceDirect, 60 from Scopus (11 in common with ScienceDirect) and 37 books from Google Books. Papers citing entropy only in their references, as well as book reviews, were excluded, leaving fewer than 200 papers. Fifty of these were selected as the most representative, i.e., the journal articles published in the journals with the highest impact factors and the most cited books or conference proceedings according to Google Scholar. This selection aimed to encompass the widest possible range of metrics and interpretations of entropy in landscape ecology.

Each selected document was analysed regarding the interpretation, use and metrics of landscape entropy. The results were listed, and similarities were grouped for further description and comparison. The representativeness of each quantification method was studied, and the documents were classified according to the interpretation of entropy and its links with thermodynamics.

Results

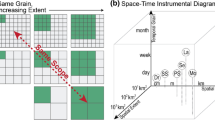

Amongst the various discussions, metrics and uses contained in the 50 selected papers, three interpretations of landscape entropy could be distinguished: (1) spatial pattern heterogeneity, (2) unpredictability of pattern dynamics and (3) scale dependence of spatial and temporal patterns (Fig. 1; Table 1). The interpretations mentioning a thermodynamic relationship generally describe processes in a qualitative way, whereas the non-thermodynamic interpretations are quantitative and describe patterns. As the same quantification methods can be used in various ways, these methods are presented in a separate subsection.

Entropy in space: heterogeneity

The use of entropy concepts to quantify landscape heterogeneity was reported in half of the selected references (Table 1), although a link with thermodynamics was rarely discussed. In these papers, entropy represents the intricacy of the landscape pattern, either compositionally (numerous land covers present in even proportions) or configurationally (numerous patches of tortuous forms) (Fahrig and Nuttle 2005). This use of entropy was inherited from information theory. Within this interpretation, the majority of authors consider the link between entropy and thermodynamics as simply a formal parallelism, associating entropy with disorder at the landscape level (Li and Reynolds 1993; Joshi et al. 2006; Leibovici 2009). Zhang et al. (2006) add that interactions within the landscape and with its surroundings cannot strictly be evaluated from a thermodynamic point of view, though a few authors do provide thermodynamic interpretations (Table 1).

There are two opposing thermodynamic interpretations of heterogeneity (Table 1). In the first, the correlation is direct: higher heterogeneity means higher entropy (Bogaert et al. 2005). This interpretation applies information theory at the landscape level: the landscape is treated as a signal, and its heterogeneity is measured as entropy. In the second, the correlation is inverse: higher entropy corresponds to higher homogeneity. This homogeneity is understood as an undifferentiated structure covering the entire landscape (the "macrostate"), following Boltzmann's theory (see "Origins: thermodynamics") applied at the landscape level (Forman and Godron 1986a; Benson 1996; Harte 2011).

Spatial heterogeneity is used indirectly to assess species distributions (Cale and Hobbs 1994; Farina 2000; Johnson et al. 2001; Cushman and McGarigal 2003; Tews et al. 2004; Fahrig et al. 2011) and the effects of disturbances such as urban sprawl (Sudhira et al. 2004; Rahman et al. 2011) or habitat loss through fragmentation (Wilkinson 1999; Tews et al. 2004; Fahrig et al. 2011).

Entropy in time: unpredictability

The concept of entropy is also used to describe the instability of landscape evolution. Two approaches are employed for this application. The first approach applies a thermodynamic interpretation of unpredictability (Table 1) and aims to describe landscape evolution in energetic terms. Here, as with ecosystems in ecology, landscapes are considered as dissipative structures composed of living organisms that consume energy from the sun directly or indirectly to increase their inner structure (and thus decrease their inner entropy) while the entropy of their surroundings increases (McHarg 1981; Naveh 1982, 1987; Li 2000b; Leuven and Poudevigne 2002; Zhang et al. 2006; Gobattoni et al. 2011). In the (theoretical) absence of a disturbance, landscapes tend to evolve towards a condition of metastability over time, and the dissipative processes only maintain the inner structure of the landscape system. In this case, the inner entropy decrease compensates for the entropy increase in its surroundings (Naveh 1987; Li 2000b; Ingegnoli 2011). Several authors have associated any instability (“transition phase”) and change with an increase in outer entropy production (Dorney and Hoffman 1979; McHarg 1981; Lee 1982; Corona 1993; Wilkin 1996; Newman 1999).

In contrast, the second approach consists of estimating unpredictability by applying information entropy metrics to landscape patterns. This approach is essentially quantitative and does not refer to thermodynamics, instead employing an analogy between signal transmission and landscape structure evolution. From this perspective, entropy is referred to as unpredictability because the irregularity of landscape change is measured using entropy metrics. This view depicts the evolution of spatial patterns, or biophysical gradients, such as those in meteorological data or the Normalised Difference Vegetation Index (NDVI). The data are generally obtained from remote-sensing image time series. Such time series can now be chosen according to the desired temporal scale of observation (Zaccarelli et al. 2013). The data are analysed using information theory-derived metrics and interpreted in relation to disturbance and stability (Mander and Jongman 1998; Martín et al. 2006; Zaccarelli et al. 2013; Zurlini et al. 2013). Some of the authors following this approach have criticised the thermodynamic approach to assessing unpredictability, stating that a change in the energy, matter and information fluxes between a landscape system and its surroundings does not necessarily imply unpredictability because the system may return to its previous metastable state (see “Thermodynamics and information theory applied to ecology”) after such a disturbance (Zaccarelli et al. 2013).

According to both approaches, however, a minor disturbance temporarily interrupts the stationary state. When there is a high level of landscape resistance, the landscape spatial structure is not modified and the landscape processes progressively return to their previous state. Alternatively, when there is a high level of landscape resilience, the landscape spatial structure can be modified but progressively returns to the same stationary state as before the disturbance (Pimm 1984; Ingegnoli 2011). Unpredictability arises when a disturbance is sufficiently severe to disrupt the within-landscape processes and patterns to a degree that the system resilience cannot overcome the disturbance. This state is described as transitive and is linked with the terms “phase transition”, “bifurcation”, “perturbing transitivity”, “critical threshold”, “severe outside disturbance” or “instability” (Naveh 1987; Li 2000b; Li et al. 2004; Ulanowicz 2004; Zhang et al. 2006; Chakraborty and Li 2011; Ingegnoli 2011). At this stage, it is not possible to predict the new metastable state into which the landscape will evolve, either thermodynamically or structurally. The level of unpredictability can be used to assess landscape resilience under various types of pressures, including those caused by humans (Naveh 1987; Zaccarelli et al. 2013; Zurlini et al. 2013).

Entropy over space and time: pattern scale dependence

The use of entropy concepts to study the effect of scale on spatial and temporal patterns is the least frequent usage (Table 1) (O’Neill et al. 1989; Riitters et al. 1995; Johnson et al. 2001). This usage emerged in the literature shortly after the linkage of entropy to heterogeneity and unpredictability.

Typically, this approach examines irregularities in pattern measurements across a gradient of scales by employing disorder metrics derived from information theory (Johnson et al. 1999). Decreasing the spatial resolution can obscure ecologically relevant contrasts along ecological gradients such as rainfall distribution or species abundance, since this can influence the shape and size of habitat patches and merge or even erase patches when their sizes are smaller than the pixel or when they cross multiple pixels (Turner et al. 1989). This scale dependency may have an important influence on the identification of patterns and, therefore, on inferences of underlying ecological processes (Cale and Hobbs 1994).
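The resolution effect described above can be reproduced on a toy raster. The sketch below is a hypothetical illustration rather than a method from the cited studies: it coarsens a categorical grid by majority rule (one of several possible resampling rules; here ties go to the smallest class label, and the grid size is assumed to be a multiple of the aggregation factor) and compares the Shannon entropy of land-cover composition before and after. Patches smaller than the new pixel disappear, and the compositional entropy drops with them.

```python
import math
from collections import Counter


def composition_entropy(grid):
    """Shannon entropy (nats) of the class proportions in a categorical raster."""
    counts = Counter(v for row in grid for v in row)
    total = sum(counts.values())
    return sum(-(c / total) * math.log(c / total) for c in counts.values())


def coarsen_majority(grid, factor):
    """Aggregate factor x factor blocks by majority rule; ties go to the smallest label."""
    rows, cols = len(grid), len(grid[0])
    if rows % factor or cols % factor:
        raise ValueError("grid dimensions must be multiples of factor")
    coarse = []
    for r0 in range(0, rows, factor):
        new_row = []
        for c0 in range(0, cols, factor):
            block = Counter(grid[r][c]
                            for r in range(r0, r0 + factor)
                            for c in range(c0, c0 + factor))
            # sorted() puts labels in ascending order, so max() keeps the smallest tied label
            new_row.append(max(sorted(block), key=block.__getitem__))
        coarse.append(new_row)
    return coarse


if __name__ == "__main__":
    landscape = [  # hypothetical: class 0 matrix with two one-pixel patches (1 and 2)
        [0, 0, 0, 0],
        [0, 1, 0, 0],
        [0, 0, 2, 0],
        [0, 0, 0, 0],
    ]
    print(composition_entropy(landscape))                       # about 0.46
    print(composition_entropy(coarsen_majority(landscape, 2)))  # 0.0: small patches erased
```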

Only one paper studying landscape entropy as pattern scale dependence mentioned thermodynamics (O'Neill et al. 1989). In this context, scale dependence is discussed in terms of hierarchy and complexity theory (Wu and Marceau 2002; Li et al. 2004; Green and Sadedin 2005). It should be noted, however, that Cushman et al. (2010) highlighted the difference between considerations of scale and of hierarchical levels: scale refers to a continuous property measured in common units, whereas hierarchical level refers to a discrete property with various entities studied at each level.

Hierarchy theory states that emergent properties, which arise from the nonlinear interactions of a system's components with each other and with external constraints, prevent the behaviour of the system from being predicted from the properties of its components alone (Wu and Marceau 2002; Li et al. 2004; Green and Sadedin 2005). Hence, the inner and outer constraints applied to the studied system need to be described. In landscape ecology, the level of focus is the landscape. The immediately higher level, the system environment or surroundings, is the region; this level represents the outer constraints encountered by the landscape. The immediately lower level, comprising the components or holons (sub-systems) of the landscape, is formed by the ecosystems (Wu and Marceau 2002; Ingegnoli 2011). According to O'Neill et al. (1989), entropy is considered in terms of the laws of thermodynamics applied to living systems at various levels, recognising that living systems spontaneously tend towards minimal entropy production.

We stress here that the study of scale dependence in landscape ecology extends beyond the sole usage of the term entropy within this framework. The majority of the research studying this issue is conducted within the framework of complexity theory but does not refer to thermodynamics. This research highlights the influences of scale and hierarchical levels of observation on the explanatory power of observed patterns and processes (Levin 1992; Wu and Marceau 2002; Green and Sadedin 2005; Yarrow and Salthe 2008; Cushman et al. 2010).

Quantification methods

The present section provides a short description of the indexes that are referred to as “entropy indexes” in landscape ecology. As previously explained, these indexes are not connected with thermodynamics. Only the fundamental quantification methods (Fig. 2) are presented, beginning with those inherited from ecology. Note that the majority of these metrics are included in the Fragstats landscape pattern analysis software (McGarigal et al. 2012).

Fig. 2 Number of citations and use of the most cited (at least twice) entropy quantification methods in landscape ecology, classified by entropy type, based on a survey of 50 journal articles, conference proceedings and reference books within the scope of landscape ecology (see the symbols in the references section for the detailed list). General statistics are not included, as they can represent any kind of statistic. "Shannon and analogous" includes the Shannon, Simpson, diversity, evenness and conditional entropy indexes.

Metrics inherited from ecology

The oldest and still most widely used metrics are the Shannon index and its analogous forms (Antrop 1998; Antrop and Van Eetvelde 2000; Palang et al. 2000; Ricotta 2000; Antrop 2004). When evaluating compositional or configurational heterogeneity, $p_i$ in Table 2, Eq. (4), represents the areal proportion of either land cover or patch $i$ (Yeh and Li 1999; Carranza et al. 2007). When evaluating unpredictability, $p_i$ can also represent the proportion of an ecological factor divided into classes, such as NDVI, precipitation or the distance from a town; in this case, the index is calculated over a time series rather than a spatial series. The Simpson and Shannon diversity indexes are analogous. The Simpson diversity index (Table 2, Eq. (5)) is used in statistics and ecology. Like the Shannon index, it considers relative abundances, but it is computed using the arithmetic mean rather than the geometric mean and is normalised (Pielou 1975). The Brillouin index (Table 2, Eq. (6)), used in physics and ecology, evaluates the diversity of a fully censused area, whereas the Simpson and Shannon indexes are better suited to samples (Pielou 1975; Orloci 1991; Bogaert et al. 2005).
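Because the formulas of Table 2 are not shown here, the sketch below assumes the standard textbook forms of the three indexes, computed from hypothetical pixel counts per land-cover class: the Shannon index $-\sum p_i \ln p_i$, the Gini-Simpson form $1 - \sum p_i^2$ (one of several normalisations of Simpson's index, and the one matching the "two random draws differ" reading, here with replacement), and the Brillouin index $(\ln N! - \sum_i \ln n_i!)/N$. The Brillouin value is always somewhat lower than the Shannon value for the same counts.

```python
import math


def _proportions(counts):
    total = sum(counts)
    return [c / total for c in counts if c > 0]


def shannon_index(counts):
    """H' = -sum p_i ln p_i over the classes present."""
    return sum(-p * math.log(p) for p in _proportions(counts))


def gini_simpson_index(counts):
    """1 - sum p_i^2: probability that two draws with replacement fall in different classes."""
    return 1.0 - sum(p * p for p in _proportions(counts))


def _log_factorial(n):
    return math.lgamma(n + 1)  # ln(n!) via the gamma function, safe for large n


def brillouin_index(counts):
    """HB = (ln N! - sum ln n_i!) / N, intended for a fully censused collection."""
    n_total = sum(counts)
    return (_log_factorial(n_total) - sum(_log_factorial(c) for c in counts)) / n_total


if __name__ == "__main__":
    pixels_per_cover = [50, 30, 20]  # hypothetical pixel counts for three land covers
    print(shannon_index(pixels_per_cover))       # about 1.03
    print(gini_simpson_index(pixels_per_cover))  # about 0.62
    print(brillouin_index(pixels_per_cover))     # a little below the Shannon value
```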

These metrics were later grouped into a generic form: the Renyi generalised entropy index (Table 2, Eq. (7)) (Renyi 1961; Pielou 1975). By this definition, 0 < α < ∞, and depending on its value, the Renyi index may correspond to one of the indexes cited above. $H_1$ equals the Shannon index, while $H_2$ is a logarithmic version of the Simpson index (Pielou 1975). The Brillouin index can also be approximated using this formula (Orloci 1991). Evenness (Table 2, Eq. (8)) is a component of diversity that considers only the relative abundances of the measured elements (Pielou 1975; Forman and Godron 1986b; Johnson et al. 1999; Martín et al. 2006; Proulx and Fahrig 2010).
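The sketch below assumes the usual Renyi form $H_\alpha = \ln(\sum_i p_i^\alpha)/(1-\alpha)$ for $\alpha > 0$, with $\alpha = 1$ handled as its limit (the Shannon index), and, as one common evenness measure, Pielou's $J = H_1 / \ln n$; Eqs. (7) and (8) of Table 2 may be written differently. It shows that $H_\alpha$ decreases as $\alpha$ grows and that $H_2 = -\ln \sum p_i^2$.

```python
import math


def renyi_entropy(probabilities, alpha):
    """H_alpha = ln(sum p_i**alpha) / (1 - alpha), for alpha > 0.

    alpha = 1 is handled as its limit, the Shannon index.
    """
    if alpha <= 0:
        raise ValueError("alpha must be positive")
    ps = [p for p in probabilities if p > 0]
    if math.isclose(alpha, 1.0):
        return sum(-p * math.log(p) for p in ps)
    return math.log(sum(p ** alpha for p in ps)) / (1.0 - alpha)


def pielou_evenness(probabilities):
    """J = H_1 / ln n, where n is the number of classes present."""
    n = sum(1 for p in probabilities if p > 0)
    if n < 2:
        raise ValueError("evenness needs at least two classes")
    return renyi_entropy(probabilities, 1.0) / math.log(n)


if __name__ == "__main__":
    p = [0.5, 0.3, 0.2]  # hypothetical land-cover proportions
    for a in (0.5, 1.0, 2.0):
        print(a, renyi_entropy(p, a))  # values decrease as alpha grows
    print(pielou_evenness(p), pielou_evenness([1 / 3] * 3))  # below 1, and about 1.0
```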

Conditional entropy, also associated with the Shannon and analogous indexes (Table 2, Eqs. (4) to (8)), is used to measure the unpredictability or scale dependence of the spatial heterogeneity of a landscape. When part of the entropy of a random variable can be attributed to another, known random variable, conditional entropy is the entropy that remains once that other variable is known (Shannon and Weaver 1948; Legendre and Legendre 2012). This concept is based on conditional probability (Shannon and Weaver 1948; Jost 2006; Martín et al. 2006). The $p_i$ in Eq. (3) are then themselves spatial pattern indexes applied to an entire landscape at different times or resolutions $i$ (Pablo et al. 1988; Patil et al. 2000; Martín et al. 2006). A particular use of conditional entropy and the Shannon index for measuring unpredictability has also been proposed: normalised spectral entropy, which combines the frequency at which a given pattern or gradient recurs in a time series with its Fourier power spectrum (Johnson et al. 1999; Zaccarelli et al. 2013; Zurlini et al. 2013).
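A minimal sketch of normalised spectral entropy, assuming one common formulation: the Shannon entropy of the normalised periodogram, divided by the logarithm of the number of frequency bins. The studies cited above may differ in detail (for example in spectral estimation or detrending). It uses NumPy's `numpy.fft.rfft`; the two input series are hypothetical stand-ins for, say, an NDVI time series. Values near 0 indicate a regular, predictable series whose variance is concentrated at one frequency; values near 1 indicate an irregular series.

```python
import numpy as np


def normalised_spectral_entropy(series):
    """Shannon entropy of the normalised power spectrum, divided by ln(number of bins).

    Near 0: variance concentrated at a single frequency (regular, predictable series).
    Near 1: variance spread evenly over all frequencies (irregular series).
    """
    x = np.asarray(series, dtype=float)
    if x.size < 4:
        raise ValueError("need at least four observations")
    x = x - x.mean()                     # remove the mean so the zero-frequency term vanishes
    power = np.abs(np.fft.rfft(x)) ** 2  # unscaled periodogram; the scaling cancels below
    power = power[1:]                    # drop the zero-frequency bin
    total = power.sum()
    if total == 0.0:
        return 0.0                       # constant series: no variability to distribute
    p = power / total
    p = p[p > 0]                         # 0 * log(0) is taken as 0
    return float(-(p * np.log(p)).sum() / np.log(power.size))


if __name__ == "__main__":
    t = np.arange(512)
    seasonal = np.sin(2 * np.pi * 8 * t / 512)         # hypothetical, perfectly regular cycle
    noisy = np.random.default_rng(0).normal(size=512)  # hypothetical, irregular series
    print(normalised_spectral_entropy(seasonal))  # close to 0
    print(normalised_spectral_entropy(noisy))     # much closer to 1
```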

Another application of conditional entropy was adapted from statistics to ecology and landscape ecology to measure the α, β and γ diversities (Table 2, Eq. (9)). These indices evaluate the mean habitat diversity at the landscape level (α) and the differences between distinct thematic layers (β) representing the landscape (e.g., land cover, human activities, soil types); α + β = γ (Pablo et al. 1988; Ernoult et al. 2003). These metrics are calculated using Shannon derivatives (Shannon and Weaver 1948; Whittaker 1960; Jost 2006; Wurtz and Annila 2010).
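Eq. (9) of Table 2 is not reproduced here, so the sketch below assumes the common additive partitioning of Shannon diversity with equally weighted units (for example, hypothetical sub-areas of a landscape sharing one set of land-cover classes). Under that assumption, α is the mean within-unit entropy, which is exactly the conditional entropy of class given unit; γ is the entropy of the pooled proportions; and β = γ − α is the mutual information between unit and class, which is never negative. How the survey's thematic-layer version maps onto this is not shown in the text.

```python
import math


def shannon(proportions):
    """Shannon entropy (nats) of a list of proportions summing to 1."""
    return sum(-p * math.log(p) for p in proportions if p > 0)


def additive_partition(units):
    """Return (alpha, beta, gamma) for equally weighted units.

    units: one list of class counts per unit, all indexed by the same classes.
    alpha = mean within-unit entropy = conditional entropy H(class | unit)
    gamma = entropy of the pooled class proportions
    beta  = gamma - alpha = mutual information between unit and class
    """
    props = []
    for counts in units:
        total = sum(counts)
        props.append([c / total for c in counts])
    alpha = sum(shannon(p) for p in props) / len(props)
    pooled = [sum(column) / len(props) for column in zip(*props)]
    gamma = shannon(pooled)
    return alpha, gamma - alpha, gamma


if __name__ == "__main__":
    print(additive_partition([[5, 5], [5, 5]]))    # identical units: beta is 0
    print(additive_partition([[10, 0], [0, 10]]))  # fully distinct units: alpha is 0
```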

As a particular case, the MaxEnt (Maximal Entropy) method uses entropy as a tool rather than measuring it for its own sake. Inherited from ecology, the versions adapted for landscape ecology estimate the geographic distributions of species or habitats from a sample by finding the most uniform distribution compatible with the applied constraints (i.e., the measured distribution parameters), which is obtained by maximising Shannon entropy with Lagrange multipliers (Phillips et al. 2006; Powell et al. 2010; Harte 2011).
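The maximum-entropy principle behind MaxEnt can be illustrated with a classic textbook case rather than the species-distribution software of Phillips et al. (2006), which is considerably more elaborate: find the most uniform distribution over a few values (here the six faces of a die, a hypothetical example) whose mean equals a measured value. Maximising $-\sum p_i \ln p_i$ subject to $\sum p_i = 1$ and $\sum p_i x_i = \mu$ with Lagrange multipliers gives $p_i \propto e^{\lambda x_i}$; the sketch solves for the single multiplier $\lambda$ by bisection. The bracket $[-50, 50]$ is an assumption that suits moderate targets on small supports.

```python
import math


def maxent_with_mean(values, target_mean, tol=1e-12):
    """Maximum-entropy distribution over `values` whose mean equals `target_mean`.

    The Lagrangian solution is p_i proportional to exp(lam * x_i); the mean
    increases monotonically with lam, so lam is found by bisection.
    """
    if not min(values) < target_mean < max(values):
        raise ValueError("target_mean must lie strictly between min(values) and max(values)")

    def distribution(lam):
        shift = max(lam * x for x in values)  # subtract the largest exponent to avoid overflow
        weights = [math.exp(lam * x - shift) for x in values]
        z = sum(weights)
        return [w / z for w in weights]

    def mean(lam):
        return sum(p * x for p, x in zip(distribution(lam), values))

    lo, hi = -50.0, 50.0  # assumed bracket for the multiplier
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mean(mid) < target_mean:
            lo = mid
        else:
            hi = mid
    return distribution(0.5 * (lo + hi))


if __name__ == "__main__":
    faces = [1, 2, 3, 4, 5, 6]
    print(maxent_with_mean(faces, 3.5))  # close to uniform: no constraint beyond the fair mean
    print(maxent_with_mean(faces, 4.5))  # probabilities rise smoothly towards the high faces
```

With a mean of 3.5 the constraint is already satisfied by the uniform distribution, so MaxEnt returns it; any other mean tilts the distribution exponentially, never more than the constraint requires.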

Metrics developed in landscape ecology and non-ecological disciplines

With regard to landscape entropy indexes not inherited from ecology, contagion indexes are the most widely used (Table 2; Fig. 2). Contagion indexes measure configurational heterogeneity or, more precisely, the spatial distribution and intermixing of patch types (Riitters et al. 1996). These indexes are also computed and compared for landscapes at various scales (Johnson et al. 1999; Gaucherel 2007) but are not indexes of scale dependence per se (Benson and Mackenzie 1995). Contagion represents the relative importance of adjacencies between pixels of different patch types in a landscape, hence the level of aggregation of the patch types. The most common contagion index is the Shannon contagion index (McGarigal et al. 2012). Juxtaposition represents the relative importance of the edge lengths shared between patches and also uses an adaptation of the Shannon index (Johnson and Patil 2007; McGarigal et al. 2012).
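Table 2 is not reproduced in this section, so the sketch below assumes a Shannon-type relative contagion computed from ordered rook (four-neighbour) adjacencies between pixels, in the spirit of Riitters et al. (1996): $RC = 1 + \sum_{i,j} q_{ij} \ln q_{ij} / (2 \ln m)$, where $q_{ij}$ is the proportion of ordered neighbouring pairs with classes $(i, j)$ and $m$ is the number of classes. It is not guaranteed to reproduce the CONTAG values reported by Fragstats, whose adjacency rules may differ. $RC$ is 0 when all $m^2$ adjacency types are equally frequent and rises towards 1 as adjacencies concentrate on one type. The helper `random_landscape` is a hypothetical reference generator.

```python
import math
import random
from collections import Counter


def relative_contagion(grid):
    """1 + sum_ij q_ij ln q_ij / (2 ln m), from ordered rook adjacencies.

    Each adjacency between neighbouring pixels is counted in both directions.
    """
    rows, cols = len(grid), len(grid[0])
    pairs = Counter()
    for r in range(rows):
        for c in range(cols):
            here = grid[r][c]
            for dr, dc in ((0, 1), (1, 0)):  # east and south neighbours
                rr, cc = r + dr, c + dc
                if rr < rows and cc < cols:
                    there = grid[rr][cc]
                    pairs[(here, there)] += 1
                    pairs[(there, here)] += 1
    m = len({v for row in grid for v in row})
    if m < 2:
        raise ValueError("contagion needs at least two classes")
    total = sum(pairs.values())
    entropy = sum(-(n / total) * math.log(n / total) for n in pairs.values())
    return 1.0 - entropy / (2.0 * math.log(m))


def random_landscape(rows, cols, n_classes, seed=0):
    """Hypothetical reference landscape: each pixel drawn independently and uniformly."""
    rng = random.Random(seed)
    return [[rng.randrange(n_classes) for _ in range(cols)] for _ in range(rows)]


if __name__ == "__main__":
    halves = [[0] * 10 + [1] * 10 for _ in range(20)]       # two large aggregated patches
    print(relative_contagion(halves))                       # about 0.41
    print(relative_contagion(random_landscape(20, 20, 2)))  # close to 0
```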

Fractal dimension is a widely used measure of patch shape tortuosity that is reported to relate to entropy (Fig. 2) (Kenkel and Walker 1996). A patch edge has one topological dimension and a surface has two, whereas the fractal dimension of a patch edge varies from 1 (straight line) to 2. It approaches 2 when the edge is so tortuous that it nearly fills a surface (Mandelbrot 1983; Kenkel and Walker 1996). The fractal dimension of a single patch cannot be properly calculated, because the required multi-scale information is then unavailable (Krummel et al. 1987). Therefore, the fractal dimension of a population of patches, preferably of similar shapes, is calculated by linear regression of Eq. (9) (Table 2). In this equation, k is an unknown constant, D is the fractal dimension, P is the patch perimeter and A is the patch area (Krummel et al. 1987). In our survey, the most recent use of the fractal dimension was reported in 2007, compared with 2013 for the Shannon index and the MaxEnt method. A variant, the similarity dimension, evaluates scale dependence (Patil et al. 2000). The fractal dimension is also frequently used to assess fragmentation, but most interpretations do not explicitly mention entropy, and its various calculation methods are still debated (Krummel et al. 1987; Turner et al. 1989; Li 2000a; Halley et al. 2004).
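Assuming the standard perimeter-area relation P = k A^(D/2), taking logarithms gives ln P = ln k + (D/2) ln A. D can then be estimated as twice the slope of an ordinary least-squares fit of ln P on ln A across a population of patches. The sketch below assumes that perimeters and areas are already measured in consistent units. It is not tied to any particular software.

```python
import math


def perimeter_area_fractal_dimension(perimeters, areas):
    """Estimate D from P = k * A**(D/2): OLS of ln P on ln A, D = 2 * slope."""
    if len(perimeters) != len(areas) or len(areas) < 2:
        raise ValueError("need at least two patches with matching P and A")
    x = [math.log(a) for a in areas]
    y = [math.log(p) for p in perimeters]
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("patch areas must differ to fit a slope")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return 2 * sxy / sxx


# Squares: P = 4 * sqrt(A), so the estimate should be D = 1 (smooth edges).
areas = [1.0, 4.0, 9.0, 16.0, 25.0]
print(perimeter_area_fractal_dimension([4 * math.sqrt(a) for a in areas], areas))
```

Regressing ln A on ln P instead gives a different estimate whenever the points scatter around the line. Implementations differ on this choice, which is one source of the calculation debates mentioned above.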

Several other metrics are less frequently used in studies of landscape entropy (Fig. 2). These include simple indexes such as the Largest Patch Index (LPI) and edge density (Johnson and Patil 2007; McGarigal et al. 2012), and statistics such as semivariance (Ernoult et al. 2003). A number of metrics have been adapted from other disciplines, e.g., the Gini coefficient from social statistics (Gini 1921), which is used and interpreted similarly to Shannon-based indexes (Jaeger 2000; Kilgore et al. 2013).
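The Gini coefficient can be computed as the mean absolute difference between all pairs of values, divided by twice the mean. Applying it to patch areas, as below, is an illustrative assumption. The cited studies may apply it to other quantities.

```python
def gini(values):
    """Gini coefficient: mean absolute pairwise difference / (2 * mean).

    0 means all values are equal; the maximum for n values is (n - 1) / n.
    """
    n = len(values)
    mean = sum(values) / n
    if mean <= 0:
        raise ValueError("values must have a positive mean")
    abs_diffs = sum(abs(a - b) for a in values for b in values)
    return abs_diffs / (2 * n * n * mean)


# Hypothetical patch areas (ha): one large patch dominating several small ones.
print(gini([120.0, 5.0, 3.0, 2.0]))
```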

Discussion and conclusion

The theoretical review of how the entropy concept evolved from its origins to landscape ecology (Fig. 1) has revealed that the various interpretations of this term are not consistent. The term entropy as used in thermodynamics and in information theory does have functional similarities, but the two concepts represent different realities. The term is therefore used more as a formal analogy than as a physical correspondence.
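The formal nature of the analogy can be seen by writing the Gibbs form of thermodynamic entropy next to Shannon's information entropy for the same probabilities p_i:

```latex
S = -k_B \sum_{i} p_i \ln p_i ,
\qquad
H = -\sum_{i} p_i \log_2 p_i
\quad\Longrightarrow\quad
S = (k_B \ln 2)\, H ,
\qquad
\text{and for } W \text{ equiprobable states: } S = k_B \ln W ,\; H = \log_2 W .
```

The two expressions differ only by the constant k_B ln 2 and the base of the logarithm. However, in the first the p_i are probabilities of physical microstates, whereas in landscape applications they are proportions of classes or adjacencies. Nothing in the shared form implies that the two quantities measure the same thing.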

Spatial heterogeneity: contested thermodynamic correspondence

When reconsidering the links between thermodynamics and spatial heterogeneity, two opposing interpretations must be distinguished. The majority of authors implicitly assume an analogy with (particle) disorder that works well descriptively: higher landscape entropy reflects greater spatial heterogeneity. This analogy can be observed at various levels, such as species or habitat diversity. However, the authors employing this interpretation do not necessarily attribute thermodynamic properties to the systems they study: greater spatial heterogeneity does not necessarily indicate greater (or lower) thermodynamic entropy.

However, the most detailed link between spatial heterogeneity and entropy is the inverse correlation proposed by Forman and Godron (1986a), based on conceptual arguments that connect the Boltzmann equation to landscape patterns and dynamics (Forman and Godron 1986a; Forman 1995; Depondt 2002; Harte 2011). Notably, the apparent direction of this link also depends on the form taken by the spatial heterogeneity metric: metric values are generally most variable for landscapes with intermediate levels of class dominance and aggregation (Neel et al. 2004).

Forman’s conceptual framework, in which heterogeneity is produced through dissipative structures, assumes that the pattern properties existing at the particle level are the same at the levels of the living organism, habitat and landscape (Forman and Godron 1986a). However, this assumption can be questioned on the basis of the complexity and hierarchy theories described above: the processes, patterns and entities at play differ from one hierarchical level to another (Baas 2002; Wu and David 2002; Green and Sadedin 2005). By the same logic used to evaluate the link between information theory and thermodynamics, this conflation of the molecular and landscape levels appears inappropriate (O’Neill et al. 1989; Maroney 2009). Indeed, the possible patch arrangements in a landscape are far less numerous than the possible microstates. Moreover, the time frames at which processes occur differ considerably between organisational levels. Finally, if landscape spatial structure is strictly equated with (thermodynamic) entropy, neither the irreversibility of thermodynamics nor the signal redundancy of information theory (which allows reconstruction after transmission) holds. Indeed, when landscape structure changes, for example because of a disturbance, the landscape can in certain instances return to its previous structure through resilience and ecological succession (see “Entropy in time: unpredictability”).
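A rough count makes the difference in magnitude concrete. Assume a raster landscape is treated as N labelled cells, and every reassignment of the given class counts is a distinct arrangement. This assumption overstates the ecologically meaningful arrangements. The number of arrangements is then the multinomial coefficient W = N!/Π n_i!, and by Stirling's approximation ln W / N approaches the Shannon entropy of the class proportions. For a hypothetical million-cell raster, ln W is of the order of 10^6. By contrast, S/k_B for a thermodynamic entropy of just 1 J/K is about 7 × 10^22.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact in the 2019 SI)


def log_arrangements(class_counts):
    """ln W with W = N! / prod(n_i!): ways to assign class counts to N cells."""
    n = sum(class_counts)
    return math.lgamma(n + 1) - sum(math.lgamma(c + 1) for c in class_counts)


def shannon_from_counts(class_counts):
    """Shannon entropy (natural log) of the class proportions."""
    n = sum(class_counts)
    return -sum((c / n) * math.log(c / n) for c in class_counts if c > 0)


# Hypothetical 1000 x 1000 raster with five equally abundant classes.
counts = [200_000] * 5
ln_w = log_arrangements(counts)
print(f"ln W          = {ln_w:.3e}")
print(f"N * H         = {sum(counts) * shannon_from_counts(counts):.3e}")  # Stirling limit
print(f"S/k_B (1 J/K) = {1.0 / K_B:.3e}")
```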

In addition, no method for quantifying (thermodynamic) entropy at the landscape level has been proposed. Measurements of energy fluxes at the landscape level, which require an enormous recording infrastructure, have been reported in rare cases, such as Ryszkowski and Kędziora (1987). To date, however, no study has provided sufficiently integrative results to evaluate the link between spatial heterogeneity at the landscape scale and entropy. Therefore, any statement asserting a link, direct or inverse, between spatial heterogeneity and thermodynamic entropy should be treated with caution. Most authors who use the term entropy to mean spatial heterogeneity do not even mention a thermodynamic interpretation. Hence, in this context, the use of the term entropy may simply be an abuse of language.

Unpredictability: incomplete thermodynamic framework

Thermodynamic descriptions of landscape evolution in terms of unpredictability are more frequent than those in terms of spatial heterogeneity (Table 1). However, to date, none of these studies has been able to predict landscape stability or instability from entropy production and energy exchanges (Li 2000b; Ingegnoli 2011). Such attempts are unlikely to succeed because, like ecosystems, landscapes are complex systems that exist far from equilibrium and exhibit non-linear dynamics (see “Entropy over space and time: pattern scale dependence”). Hence, landscape evolution cannot be described using classical (equilibrium) thermodynamics (Li 2000b; Li 2002; Li et al. 2004; Ulanowicz 2004). In this case, unpredictability cannot simply be associated with an increase or decrease in entropy, whether within or outside the landscape system. Even for a stable landscape, the entropy produced in the surroundings exceeds the entropy decrease within the landscape because of the irreversible transformations carried out by organisms; therefore, the exchanges described by Ingegnoli (2011) are irrelevant (Benson 1996). Li (2000b) reported numerous misinterpretations of landscape thermodynamics by (landscape) ecologists. Evolutionary processes and unpredictability have been described to some extent, but entropy variations have neither been quantified during phase transitions nor compared between old and new (meta)stable states.
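The point about a stable landscape follows from the standard entropy balance of an open system. This balance splits the change in a system's entropy into an exchange term and a non-negative internal production term. The sketch below treats the landscape as the system, which is an assumption:

```latex
\begin{aligned}
\mathrm{d}S &= \mathrm{d}_e S + \mathrm{d}_i S, \qquad \mathrm{d}_i S \ge 0,\\
\mathrm{d}S = 0 \;(\text{steady state}) &\;\Rightarrow\; \mathrm{d}_e S = -\,\mathrm{d}_i S \le 0,\\
\Delta S_{\text{landscape}} + \Delta S_{\text{surroundings}} &\ge 0
\;\Rightarrow\; \Delta S_{\text{surroundings}} \ge -\,\Delta S_{\text{landscape}} .
\end{aligned}
```

Maintaining or increasing order within the landscape must therefore be paid for by an entropy export to the surroundings that is at least as large. This is why the internal entropy balance alone cannot characterise a stable landscape.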

Moreover, because the energy exchanges within the system and with its environment are not measured, how thermodynamics relates to landscape dynamics remains poorly understood. This shortcoming might be overcome by measuring spatiotemporal variations of albedo in the infrared channels of passive remote sensing images, in order to test the aforementioned theories.

Scale, hierarchy and complexity: how thermodynamics fails to predict landscape spatiotemporal dynamics

The majority of the mentions of entropy as a measure of scale dependence did not refer to thermodynamics. The use of the term entropy there can therefore be viewed as an abuse of language arising from metrics first defined as entropy measures in information theory. As for a thermodynamic interpretation of the influence of scale, measuring energy exchanges at the level of matter or living organisms to infer thermodynamic behaviour at the landscape level appears inappropriate and insufficient because of the complexity of landscapes. Thermodynamic laws apply at every organisational level, but complex interactions within and amongst levels imply that structures and processes are not self-similar across levels (Wu and Marceau 2002). This has two consequences. First, measuring such exchanges appears unfeasible in practice because of the number of interactions. Second, even if such a computation could be performed, a given set of initial conditions could generate various spatiotemporal structures (Green and Sadedin 2005). Currently, the spatiotemporal structure of a landscape is better predicted by studying the processes and interactions at the immediately lower (ecosystem) and higher (region) organisational levels, which set the initial conditions and constraints applied to landscapes. Nondeterministic behaviours are also observed at those levels, especially because human influences on landscape structure are hard to predict. Therefore, simulation models are used to evaluate trajectory scenarios (Green and Sadedin 2005; Ingegnoli 2011).

Self-similar structures do exist across multiple scales in nature, resulting from self-organised criticality and often displaying fractal patterns or distributions (e.g., in coastal geomorphology or in body size along a food web), but these are special cases (Baas 2002; Wu and David 2002; Green and Sadedin 2005; Parrott 2010).

Notably, the perception of processes is strongly influenced by the observation scale, whether spatial or temporal. What seems unpredictable at a given spatiotemporal scale, e.g., the variation in albedo across a landscape during a year, can become predictable at a larger scale, e.g., when seasonal variations recur regularly across multiple years (Zurlini et al. 2013).
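One way to quantify this kind of (un)predictability in a time series is a normalised spectral entropy, in the spirit of Zaccarelli et al. (2013). Their exact formulation may differ, for example in detrending, windowing or frequency range. The sketch below computes the Shannon entropy of the normalised periodogram of a mean-removed series and divides it by its maximum possible value. The series are synthetic, hypothetical stand-ins for monthly albedo over ten years.

```python
import cmath
import math
import random


def normalized_spectral_entropy(series):
    """Shannon entropy of the normalised periodogram divided by its maximum.

    Uses the Fourier frequencies k = 1..n//2 of the mean-removed series (DC
    term excluded). Values near 0: power concentrated in few periodicities
    (predictable); values near 1: power spread evenly (noise-like).
    """
    n = len(series)
    if n < 4:
        raise ValueError("series too short")
    mean = sum(series) / n
    centred = [v - mean for v in series]
    power = []
    for k in range(1, n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(centred))
        power.append(abs(coeff) ** 2)
    total = sum(power)
    if total == 0:
        raise ValueError("constant series has no spectrum")
    h = -sum((p / total) * math.log(p / total) for p in power if p > 0)
    return h / math.log(len(power))


# Hypothetical monthly albedo anomalies over ten years (120 values).
seasonal = [math.sin(2 * math.pi * t / 12) for t in range(120)]
rng = random.Random(42)
noise = [rng.gauss(0.0, 1.0) for _ in range(120)]
print(normalized_spectral_entropy(seasonal), normalized_spectral_entropy(noise))
```

A strictly seasonal signal concentrates its power in a single frequency and scores close to 0. Noise spreads its power across frequencies and scores close to 1.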

Metrics, terminology and insufficiencies

The contrast between how rarely the thermodynamic meaning of entropy is addressed and how many landscape entropy metrics have been proposed is unexpected. Most of these metrics remain marginal (Fig. 2): only three appear to be commonly used. The Shannon index is clearly the most persistent and versatile. Its use and formula have evolved, but its interpretation still relies on the same basis (Renyi 1961; Phipps 1981; Ricotta 2000; Johnson et al. 2001; Zaccarelli et al. 2013). This stability allows comparisons amongst studies and may explain the success of this family of metrics. As a consequence of its wide use, however, the Shannon index has also been misused with regard to its interpretation and purpose (Pielou 1975; Bogaert et al. 2005).
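One documented evolution of the Shannon formula is Rényi's generalised entropy (Renyi 1961), H_α = ln(Σ p_i^α)/(1 − α). It recovers the Shannon index as α → 1, gives the logarithm of class richness at α = 0, and weights dominant classes more heavily as α grows. The minimal sketch below works over class proportions and assumes natural logarithms. Studies such as Carranza et al. (2007) may use another base or normalisation.

```python
import math


def renyi_entropy(proportions, alpha):
    """Renyi entropy H_alpha = ln(sum p_i**alpha) / (1 - alpha), natural log.

    alpha = 0 gives ln(richness), alpha = 1 (the limit) gives the Shannon
    index, and larger alpha gives more weight to the dominant classes.
    """
    p = [q for q in proportions if q > 0]
    if abs(sum(p) - 1.0) > 1e-9 or alpha < 0:
        raise ValueError("need proportions summing to 1 and alpha >= 0")
    if alpha == 1:
        return -sum(q * math.log(q) for q in p)
    return math.log(sum(q ** alpha for q in p)) / (1 - alpha)


# Hypothetical land-cover proportions: a diversity profile over alpha.
cover = [0.6, 0.25, 0.1, 0.05]
print([round(renyi_entropy(cover, a), 3) for a in (0, 0.5, 1, 2, 4)])
```

Reading the whole profile rather than a single value shows whether a change in "landscape entropy" comes from rare classes (low α) or from dominant ones (high α).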

Contagion and juxtaposition indexes are the second most used landscape entropy metrics, although they are employed about five times less often than the Shannon index and its analogues (Fig. 2). These indexes are mainly used to address configurational heterogeneity specifically (Ricotta et al. 2003; McGarigal et al. 2012). The use of the fractal dimension appears to have decreased recently. This is most likely because of the practical difficulty and lack of specificity of its calculation methods, and the lack of relevance of its interpretation in terms of landscape entropy (Xu et al. 1993; Li 2000a; Halley et al. 2004). The fractal dimension is, therefore, not recommended for assessing landscape entropy. Note that some authors have identified fractal dynamics in interactions amongst system components and power-law scaling of frequency distributions at various levels, and linked these to thermodynamic processes in dissipative structures (Li 2002). However, this interpretation is not related to spatial structure. It is important to note that the aforementioned metrics do not cover every existing heterogeneity, unpredictability and scale dependence metric, but only those associated with the term entropy. These metrics use the term entropy in a non-thermodynamic sense.
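The following is a minimal sketch of a Shannon-based interspersion and juxtaposition index computed from shared edge lengths on a raster: IJI = 100 · [−Σ_{i<k} (e_ik/E) ln(e_ik/E)] / ln[m(m − 1)/2]. Here e_ik is the length of edge shared by classes i and k, E is the total length of such edges, and m is the number of classes. The sketch assumes unit-length cell edges, rook adjacency, no background class, and an ignored landscape border. The FRAGSTATS IJI, as far as we know, follows the same Shannon form but handles borders and background explicitly. The index is undefined for fewer than three classes.

```python
import math
from collections import Counter


def interspersion_juxtaposition(grid):
    """IJI = 100 * Shannon entropy of shared-edge lengths / ln(m(m-1)/2).

    Edges are the unit-length boundaries between rook-adjacent cells of
    different classes; the landscape border and background are ignored.
    """
    rows, cols = len(grid), len(grid[0])
    edges = Counter()
    for r in range(rows):
        for c in range(cols):
            for rr, cc in ((r, c + 1), (r + 1, c)):  # right and down neighbours
                if rr < rows and cc < cols and grid[r][c] != grid[rr][cc]:
                    edges[tuple(sorted((grid[r][c], grid[rr][cc])))] += 1
    m = len({v for row in grid for v in row})
    if m < 3:
        raise ValueError("IJI is undefined for fewer than three classes")
    total = sum(edges.values())
    h = -sum((e / total) * math.log(e / total) for e in edges.values())
    return 100 * h / math.log(m * (m - 1) / 2)


# Every pair of the three classes shares the same edge length: maximal IJI.
print(interspersion_juxtaposition([[0, 1, 2], [1, 2, 0], [2, 0, 1]]))
```

Unlike contagion, this index ignores like adjacencies. It therefore describes how evenly the patch types border one another, not how aggregated each type is.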

As the term entropy is used in various ways with the same metrics, and often without an explicit interpretative framework, we recommend using it more precisely and explicitly by employing the following three expressions. “Spatial heterogeneity” is proposed to describe the intricacy of the spatial pattern. “Unpredictability” should describe the irregularity in the pattern of change over time. “Scale dependence” should assess the effect of spatial resolution on the observed patterns. The expression “spatial heterogeneity” is already widespread in landscape ecology with this meaning. “Scale dependence” is used slightly less frequently but is often conveyed by similar expressions: scale influence, influence of scale, or scale impact. The term “unpredictability” is still rarely used, and no specific, unambiguous term has yet been adopted for this concept. It is instead referred to as entropy, stability or persistence, or described in sentences unsuitable for a keyword search.

This quantitative survey also highlights limitations of its sampling methodology. Searching only for mentions of the term entropy was not sufficient to understand its meaning, which required complementary research. Nevertheless, the survey revealed a lack of justification for the use and interpretations of entropy in landscape ecology, as if the link between entropy and landscape dynamics were a well-established fact. This review demonstrates that this is not the case.

In addition, note that a change in entropy results from a process. In ecology, studies of ecosystem processes rarely explain pattern formation in landscapes (Levin 1992; Cushman et al. 2010; Chakraborty and Li 2011). Landscape ecology, for its part, more often infers the impact of spatial patterns on ecological processes than the reverse (Turner 1989; Baudry 1991). A gap therefore remains to be filled in the pattern/process paradigm.

References

The marked references indicate the 50 papers used for the survey: * spatial heterogeneity; # unpredictability; ° scale dependence; ‘ thermodynamic relationship.

* Antrop M (1998) Landscape change: Plan or chaos? Landsc Urban Plan 41:7

* Antrop M (2004) From holistic landscape synthesis to transdisciplinary landscape management. In: Frontis Workshop, 2004. Springer, The Netherlands

* Antrop M, Van Eetvelde V (2000) Holistic aspects of suburban landscapes: visual image interpretation and landscape metrics. Landsc Urban Plan 50:16

Baas ACW (2002) Chaos, fractals and self-organization in coastal geomorphology: simulating dune landscapes in vegetated environments. Geomorphology 48:309–328

Baudry J (1991) Ecological consequences of grazing extensification and land abandonment: role of interactions between environment, society and techniques. Options Mediterraneennes. Serie A: Seminaires Mediterraneens (CIHEAM)

Benatti F (2003) Classical and quantum entropies: dynamics and information. In: Greven A, Keller G, Warnecke G (eds) Entropy. Princeton University Press, Princeton, pp 279–298

* Benson MJ, Mackenzie MD (1995) Effects of sensor spatial resolution on landscape structure parameters. Landscape Ecol 10:8

Benson H (1996) Entropy and the second law of thermodynamics. In: University physics. Wiley, New York, pp 417–439

‘* Bogaert J, Farina A, Ceulemans R (2005) Entropy increase of fragmented habitats: a sign of human impact? Ecol Indic 5:6

*# Bolliger J, Lischke H, Green DG (2005) Simulating the spatial and temporal dynamics of landscapes using generic and complex models. Ecol Complex 2:107–116

Cale P, Hobbs RJ (1994) Landscape heterogeneity indices: problems of scale and applicability, with particular reference to animal habitat description. Pac Conserv Biol 1:183–193

* Carranza ML, Acosta A, Ricotta C (2007) Analyzing landscape diversity in time: the use of Renyi’s generalized entropy function. Ecol Indic 7:6

Chakraborty A, Li BL (2011) Contribution of biodiversity to ecosystem functioning: a non-equilibrium thermodynamic perspective. J Arid Land 3:71–74

‘# Corona P (1993) Applying biodiversity concepts to plantation forestry in northern Mediterranean landscapes. Landsc Urban Plan 24:23–31

Cushman SA, Littell J, McGarigal K (2010) The problem of ecological scaling in spatially complex, nonequilibrium ecological systems. In: Spatial complexity, informatics, and wildlife conservation. Springer, New York, pp 43–63

Cushman SA, McGarigal K (2003) Landscape-level patterns of avian diversity in the Oregon Coast Range. Ecol Monogr 73:259–281

Depondt P (2002) L’entropie et tout ça: le roman de la thermodynamique. Cassini, Paris

‘# Dorney RS, Hoffman DW (1979) Development of landscape planning concepts and management strategies for an urbanizing agricultural region. Landsc Plan 6:151–177

* Ernoult A, Bureau F, Poudevigne A (2003) Patterns of organisation in changing landscapes: implications for the management of biodiversity. Landscape Ecol 18:13

Fahrig L, Baudry J, Brotons L (2011) Functional landscape heterogeneity and animal biodiversity in agricultural landscapes. Ecol Lett 14:101–112

Fahrig L, Nuttle WK (2005) Population ecology in spatially heterogeneous environments. In: Lovett GM, Turner MG, Jones CG, Weathers KC (eds) Ecosystem function in heterogeneous landscapes. Springer, New York, pp 95–118

Farina A (ed) (2000) Methods in landscape ecology. In: Principles and methods in landscape ecology. Kluwer Academic, Dordrecht, p 65

‘* Forman RTT (1995) Land mosaics: the ecology of landscapes and regions. Cambridge University Press, Cambridge, MA

‘* Forman RTT, Godron M (1986a) Heterogeneity and typology. In: Forman RTT, Godron M (eds) Landscape ecology. Wiley, New York, pp 463–493

Forman RTT, Godron M (1986b) Overall structure. In: Forman RTT, Godron M (eds) Landscape ecology. Wiley, New York, pp 191–225

* Gaucherel C (2007) Multiscale heterogeneity map and associated scaling profile for landscape analysis. Landsc Urban Plan 82:8

Gini C (1921) Measurement of inequality of incomes. Econ J 31:124–126

‘# Gobattoni F, Pelorosso R, Lauro G, Leone A, Monaco R (2011) A procedure for mathematical analysis of landscape evolution and equilibrium scenarios assessment. Landsc Urban Plan 103:289–302

Green DG, Sadedin S (2005) Interactions matter—complexity in landscapes and ecosystems. Ecol Complex 2:117–130

Halley J, Hartley S, Kallimanis A, Kunin W, Lennon J, Sgardelis S (2004) Uses and abuses of fractal methodology in ecology. Ecol Lett 7:254–271

Harte J (2011) Entropy, information and the concept of maximum entropy. In: Harte J (ed) Maximum entropy and ecology: a theory of abundance, distribution, and energetics. Oxford University Press, Oxford, pp 117–129

Hartonen T, Annila A (2012) Natural networks as thermodynamic systems. Complexity 18:53–62

‘# Ingegnoli V (2011) Non-equilibrium thermodynamics, landscape ecology and vegetation science. In: Moreno-Piraján JC (ed) Thermodynamics—systems in equilibrium and non-equilibrium. InTech, Rijeka, pp 139–172. http://www.intechopen.com/books/thermodynamics-systems-in-equilibrium-and-non-equilibrium/non-equilibrium-thermodynamics-landscape-ecology-and-vegetation-science

* Jaeger JAG (2000) Landscape division, splitting index, and effective mesh size: new measures of landscape fragmentation. Landscape Ecol 15:115–130

° Johnson GD, Myers WL, Patil GP, Taillie C (1999) Multiresolution fragmentation profiles for assessing hierarchically structured landscape patterns. Ecol Model 116:9

° Johnson GD, Myers WL, Patil GP, Taillie C (2001) Characterizing watershed-delineated landscapes in Pennsylvania using conditional entropy profiles. Landscape Ecol 16:597–610

°* Johnson GD, Patil GP (2007) Methods for quantitative characterization of landscape pattern. Landscape pattern analysis for assessing ecosystem. Springer, Berlin, pp 13–22

* Joshi PK, Lele N, Agarwal SP (2006) Entropy as an indicator of fragmented landscape. Curr Sci 91:3

Jost L (2006) Entropy and diversity. Oikos 113:363–375

Kenkel N, Walker D (1996) Fractals in the biological sciences. Coenoses 11:77–100

* Kilgore MA, Snyder SA, Block-Torgerson K, Taff SJ (2013) Challenges in characterizing a parcelized forest landscape: Why metric, scale, threshold, and definitions matter. Landsc Urban Plan 110:36–47

Krummel J, Gardner R, Sugihara G, O’Neill R, Coleman P (1987) Landscape patterns in a disturbed environment. Oikos 321–324

‘# Lee BJ (1982) An ecological comparison of the McHarg method with other planning initiatives in the Great Lakes Basin. Landsc Plan 9:147–169

Legendre P, Legendre L (2012) Numerical ecology. Elsevier Science, Amsterdam

* Leibovici DG (2009) Defining spatial entropy from multivariate distributions of co-occurrences. In: Hornsby KS, Claramunt C, Denis M, Ligozat G (eds) 9th international conference on spatial information theory, 2009 Aber Wrac’h. Springer, Berlin

‘# Leuven RSEW, Poudevigne I (2002) Riverine landscape dynamics and ecological risk assessment. Freshw Biol 47:845–865

Levin SA (1992) The problem of pattern and scale in ecology: the Robert H. MacArthur award lecture. Ecology 73:1943–1967

Li B-L (2002) A theoretical framework of ecological phase transitions for characterizing tree-grass dynamics. Acta Biotheor 50:141–154

Li B-L (2000a) Fractal geometry applications in description and analysis of patch patterns and patch dynamics. Ecol Model 132:33–50

‘# Li B-L (2000b) Why is the holistic approach becoming so important in landscape ecology? Landsc Urban Plan 50:27–41

* Li H, Reynolds J (1993) A new contagion index to quantify spatial patterns of landscapes. Landscape Ecol 8:155–162

Li J, Zhang J, Ge W, Liu X (2004) Multi-scale methodology for complex systems. Chem Eng Sci 59:1687–1700

MacArthur R (1955) Fluctuations of animal populations and a measure of community stability. Ecology 36:533–536

Maldague M (2004) Le deuxième principe de la thermodynamique et la gestion de la biosphère. Application à l’environnement et au développement. In: Eraift MM, Unesco (eds) Traité de gestion de l’environnement tropical. Les Classiques des Sciences Sociales, Saguenay, pp 10.1–10.21

Mandelbrot BB (1983) The fractal geometry of nature. Henry Holt and Company

# Mander Ü, Jongman RHG (1998) Human impact on rural landscapes in central and northern Europe. Landsc Urban Plan 41:149–153

Margalef R (1958) Information theory in ecology. Gen Syst 3:36–72

Maroney O (2009) Information processing and thermodynamic entropy. In: Zalta EN (ed) The Stanford Encyclopedia of Philosophy, Stanford University, Stanford. http://stanford.library.usyd.edu.au/entries/information-entropy/

# Martín MJJ, Pablo CL, Agar PM (2006) Landscape changes over time: comparison of land uses, boundaries and mosaics. Landscape Ecol 21:1075–1088

McGarigal K, Cushman SA, Ene E (2012) FRAGSTATS v4: spatial pattern analysis program for categorical and continuous maps. University of Massachusetts, Amherst

‘# McHarg IL (1981) Human ecological planning at Pennsylvania. Landsc Plan 8:109–120

Moran MJ, Shapiro HN, Boettner DD, Bailey M (2010) Using entropy. Fundamentals of engineering thermodynamics. Wiley, Hoboken, pp 281–358

‘# Naveh Z (1982) Mediterranean landscape evolution and degradation as multivariate biofunctions: theoretical and practical implications. Landsc Plan 9:125–146

‘# Naveh Z (1987) Biocybernetic and thermodynamic perspectives of landscape functions and land use patterns. Landscape Ecol 1:75–83

Neel MC, McGarigal K, Cushman SA (2004) Behavior of class-level landscape metrics across gradients of class aggregation and area. Landscape Ecol 19:435–455

‘# Newman PWG (1999) Sustainability and cities: extending the metabolism model. Landsc Urban Plan 44:219–226

‘° O’Neill RV, Johnson AR, King AW (1989) A hierarchical framework for the analysis of scale. Landscape Ecol 3:13

Orloci L (1991) Entropy and information. The Hague, SPB Academic Publishing

* Pablo CL, Agar PM, Sal AG, Pineda FD (1988) Descriptive capacity and indicative value of territorial variables in ecological cartography. Landscape Ecol 1:203–211

* Palang H, Mander Ü, Naveh Z (2000) Holistic landscape ecology in action. Landsc Urban Plan 50:1–6

Parrott L (2010) Measuring ecological complexity. Ecol Indic 10:1069–1076

° Patil GP, Myers WL, Luo Z, Johnson GD, Taillie C (2000) Multiscale assessment of landscapes and watersheds with synoptic multivariate spatial data in environmental and ecological statistics. Math Comput Model 32:257–272

* Phillips SJ, Anderson RP, Schapire RE (2006) Maximum entropy modeling of species geographic distributions. Ecol Model 190:29

* Phipps M (1981) Entropy and community pattern analysis. J Theor Biol 93:253–273

Pielou EC (1975) Ecological diversity. Wiley, New York

Pimm SL (1984) The complexity and stability of ecosystems. Nature 307:321–326

* Powell M, Accad A, Austin MP, Choy SL, Williams KJ, Shapcott A (2010) Predicting loss and fragmentation of habitat of the vulnerable subtropical rainforest tree Macadamia integrifolia with models developed from compiled ecological data. Biol Conserv 143:12

* Proulx R, Fahrig L (2010) Detecting human-driven deviations from trajectories in landscape composition and configuration. Landscape Ecol 25:1479–1467

* Rahman A, Aggarwal SP, Netzband M, Fazal S (2011) Monitoring urban sprawl using remote sensing and GIS techniques of a fast growing urban centre, India. IEEE J Sel Top Appl Earth Obs Remote Sens 4:56–64

Renyi A (1961) On measures of entropy and information. In: Fourth Berkeley symposium on mathematical statistics and probability, 1961 Berkeley. University of California Press

* Ricotta C (2000) From theoretical ecology to statistical physics and back: self-similar landscape metrics as a synthesis of ecological diversity and geometrical complexity. Ecol Model 125:245–253

Ricotta C, Corona P, Marchetti M (2003) Beware of contagion! Landsc Urban Plan 62:173–177

* Riitters KH, O’Neill RV, Wickham JD, Jones KB (1996) A note on contagion indices for landscape analysis. Landscape Ecol 11:197–202

° Riitters KH, O’Neill RV, Hunsaker CT, Wickham JD, Yankee DH, Timmins SP, Jones KB, Jackson BL (1995) A factor analysis of landscape pattern and structure metrics. Landscape Ecol 10:23–39

Romme WH (1982) Fire and landscape diversity in subalpine forests of Yellowstone National Park. Ecol Monogr 52:199–221

Ryszkowski L, Kędziora A (1987) Impact of agricultural landscape structure on energy flow and water cycling. Landscape Ecol 1:85–94

Sanov IN (1958) On the probability of large deviations of random variables. Math Sb 42:11–44

Shannon CE, Weaver W (1948) A mathematical theory of communication. American Telephone and Telegraph Company

Stonier T (1996) Information as a basic property of the universe. BioSystems 38:135–140

* Sudhira HS, Ramachandra TV, Jagadish KS (2004) Urban sprawl: metrics, dynamics and modelling using GIS. Int J Appl Earth Obs Geoinf 5:29–39

Tews J, Brose U, Grimm V, Tielbörger K, Wichmann M, Schwager M, Jeltsch F (2004) Animal species diversity driven by habitat heterogeneity/diversity: the importance of keystone structures. J Biogeogr 31:79–92

Turner MG (1989) Landscape ecology: the effect of pattern on process. Annu Rev Ecol Syst 20:171–187

Turner MG, O’Neill RV, Gardner RH, Milne BT (1989) Effects of changing spatial scale on the analysis of landscape pattern. Landscape Ecol 3:153–162

Ulanowicz RE (2001) Information theory in ecology. Comput Chem 25:393–399

Ulanowicz RE (2004) On the nature of ecodynamics. Ecol Complex 1:341–354

Whittaker RH (1960) Vegetation of the Siskiyou mountains, Oregon and California. Ecol Monogr 30:279–338

‘# Wilkin DC (1996) Accounting for sustainability: challenges to landscape professionals in an increasingly unsustainable world. Landsc Urban Plan 36:217–227

Wilkinson DM (1999) The disturbing history of intermediate disturbance. Oikos 84:145–147

Wu J, David JL (2002) A spatially explicit hierarchical approach to modeling complex ecological systems: theory and applications. Ecol Model 153:7–26

Wu J, Marceau D (2002) Modeling complex ecological systems: an introduction. Ecol Model 153:1–6

Wurtz P, Annila A (2010) Ecological succession as an energy dispersal process. Biosystems 100:70–80

Xu T, Moore ID, Gallant JC (1993) Fractals, fractal dimensions and landscapes—a review. Geomorphology 8:245–262

Yarrow MM, Salthe SN (2008) Ecological boundaries in the context of hierarchy theory. BioSystems 92:233–244

* Yeh AGO, Li X (1999) An entropy method to analyze urban sprawl in a rapid growing region using TM images. In: Asian conference on remote sensing, 1999 Hong Kong. GISdevelopment

# Zaccarelli N, Li B-L, Petrosillo I, Zurlini G (2013) Order and disorder in ecological time-series: Introducing normalized spectral entropy. Ecol Indic 28:22–30

‘# Zhang Y, Yang Z, Li W (2006) Analyses of urban ecosystem based on information entropy. Ecol Model 197:1–12

# Zurlini G, Petrosillo I, Jones KB, Zaccarelli N (2013) Highlighting order and disorder in social–ecological landscapes to foster adaptive capacity and sustainability. Landscape Ecol 28:1161–1173

Acknowledgments

Isabelle Vranken is a research fellow at the FNRS, Belgium. We thank Prof. G. Zurlini and Dr N. Zaccarelli for their additional explanations, and the Editor for thoughtful remarks.


About this article

Cite this article

Vranken, I., Baudry, J., Aubinet, M. et al. A review on the use of entropy in landscape ecology: heterogeneity, unpredictability, scale dependence and their links with thermodynamics. Landscape Ecol 30, 51–65 (2015). https://doi.org/10.1007/s10980-014-0105-0
