Abstract

The literature on kinetics in thermal analysis deals mainly with models that consist of a single reaction equation. However, most samples of practical importance are too complex for such an oversimplified description. There is no universal way to overcome the difficulties, though there are well-established models that can express the complexity of the studied reactions for several important types of samples. The assumption of more than one reaction increases the number of unknown parameters. Their reliable estimation requires the evaluation of a series of experiments. The various linearization techniques cannot be employed in such cases, while the method of least squares can be carried out at any model complexity by proper numerical methods. It is advantageous to evaluate experiments with linear and nonlinear temperature programs simultaneously because a set of constant heating rate experiments is frequently not sufficient to distinguish between different models or model variants. It is well worth including modulated and constant reaction rate temperature programs in the evaluated series whenever they are obtainable. Sometimes different samples share some common features. In such cases one can try to describe their reactions by assuming part of the kinetic parameters to be common for the samples. The obtained models and parameter values should be based, in a reliable way, on a sufficiently large amount of experimental information. This article is based on the authors’ experience in the indicated directions from 1979 to the present. Though the examples shown are taken from biomass research, the models and methods shown in the article are hoped to be relevant also for other materials that have a complicated structure or exhibit complicated thermal reactions, or both.


Introduction

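The literature of non-isothermal kinetics is dominated by models that consist of a single equation of the form

\[ \frac{d\alpha}{dt} = A \exp\left(-\frac{E}{RT}\right) f(\alpha) \qquad (1) \]

Here α is the reacted fraction, E is the activation energy, A is the pre-exponential factor, R is the gas constant, T is the temperature, and f(α) is either derived from some theory or is an empirical function. The so-called model-free approaches are also based on this type of equation.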

Unfortunately, the samples with practical importance are usually too complex for such an oversimplified description because different sorts of reactive species participate in the studied processes. Sometimes backward reactions or other secondary reactions influence the measured signals. Impurities with catalytic activities may also complicate the picture. There is no universal way to overcome the difficulties, though there are well-established models that can express the complexity of the studied reactions for several important types of samples.

The assumption of more than one reaction increases the number of unknown parameters. Their reliable estimation requires the evaluation of a series of experiments. The traditional evaluation methods (i.e., the various linearization techniques) cannot be employed in such cases because they can handle only one kinetic equation of type (1). Besides, they are restricted to constant heating rates (linear T(t) programs), their sensitivity to the experimental errors is unfavorable, and the empirical version of the reacted fraction (α) frequently cannot be read from the TG curves. The latter problem arises whenever the decomposition of an organic sample is followed by the slow carbonization of the formed chars.

The present work is based on the authors’ experience in the indicated directions from 1979 till the present. Though the examples shown are taken from biomass research, the treatment is also hoped to be relevant for other materials that have complicated structure or exhibit complicated thermal reactions, or both.

Evaluation of a series of experiments by the method of least squares (LSQ)

As mentioned above, the traditional linearization techniques of non-isothermal kinetics cannot be employed when the model consists of more than one reaction. Besides, a complex model contains too many unknown parameters compared to the information content of a single thermal analysis experiment. In such cases the simultaneous evaluation of several experiments can be carried out by the method of nonlinear least squares. The present stage of development of computers and numerical methods facilitates this.

Let us use the following notation:

\(X^{\text{obs}}\): observations (TG, DTG, DSC or MS signals normalized by the initial sample mass)

\(X^{\text{calc}}\): their counterparts simulated from the model

Then the following objective function is minimized:

\[ S = \sum_{j=1}^{N_{\text{exper}}} w_j \sum_{i=1}^{N_{\text{points}}} \left[ X_j^{\text{obs}}(t_i) - X_j^{\text{calc}}(t_i) \right]^2 \qquad (2) \]

where \(N_{\text{exper}}\) is the number of experiments evaluated together, \(N_{\text{points}}\) is the number of \(t_i\) time values in experiment j, and the \(w_j\) weight factors express the different uncertainties of the different experimental curves. They also involve a division by \(N_{\text{points}}\), as explained later. The minimization of the above objective function can be carried out numerically. The equations of the model are solved for different sets of parameters; the parameter values are changed by a proper algorithm, and the process is repeated until a minimum of the objective function is reached. The proper choice of the initial parameters is important here. Usually, the results of an earlier work can be employed as initial parameters.
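The objective function described above can be sketched in a few lines of Python. This is only an illustration, assuming that the objective function is the weighted sum of squared differences between the observed and simulated signals over all points of all experiments; the function name `objective` and the list-of-lists data layout are hypothetical choices, not taken from the cited works.

```python
def objective(observed, calculated, weights):
    """Weighted least squares sum over a series of experiments.

    observed, calculated: one list of signal values X_j(t_i) per experiment j
    weights: one weight factor w_j per experiment
    """
    total = 0.0
    for x_obs, x_calc, w in zip(observed, calculated, weights):
        if len(x_obs) != len(x_calc):
            raise ValueError("observed and calculated curves must have equal length")
        total += w * sum((o - c) ** 2 for o, c in zip(x_obs, x_calc))
    return total


# Two toy "experiments" with 2 and 1 points: 0.5 * (0 + 1) + 2.0 * 4 = 8.5
print(objective([[1.0, 2.0], [3.0]], [[1.0, 1.0], [1.0]], [0.5, 2.0]))
```

In a real evaluation the `calculated` curves would be produced by solving the model equations for the current parameter set, and a minimization routine would call such a function repeatedly.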

History of the LSQ evaluation of series of experiments in the non-isothermal kinetics from 1979 till 1996

The kinetic evaluation of thermal analysis experiments is published in a wide range of journals and conference proceedings; hence, a general survey of the field is difficult. To our knowledge the first paper dealing with the least squares evaluation of more than one non-isothermal thermoanalytical experiment was published by the first author of the present article nearly 40 years ago [1]. The work contained a section entitled “Least squares evaluation of more than one thermoanalytical curve.” The objective function in this section was identical to Eq. (2) without the \(w_j\) weight factors. A detailed description was given of the employed numerical methods. A parameter transformation was also described to reduce the compensation effects between the variables. The outline of the algorithm ended with a note: “the resulting program can be run on minicomputers of 64 K bytes of total memory. With careful programming the required memory can be diminished below 32 K bytes and the computation can be carried out on desk-top computers.” The quoted sentences reflected the possibilities of the seventies. Note that the memory of a present-day desktop computer is between 2 and 16 GB, which shows an increase of around 5 orders of magnitude in the past four decades. The computational speed of computers has also increased greatly.

The method was tested by simulated experiments which were constructed from two partial reactions at a linear and a stepwise heating program [1]. The simulated experiments of this early work have now been reconstructed and are shown here in Fig. 1. As the last row of Table 1 in that work [1] indicates, the simultaneous evaluation of the noisy curves of Fig. 1c resulted in an error of 3.6 kJ mol−1 in the determination of the activation energies, while the error of the reaction order was about 0.03. Smaller errors were obtained when the evaluations were repeated for the purpose of the present paper: the root mean square errors of E and n were found to be 1.1 kJ mol−1 and 0.006, respectively. The smaller errors are probably due to the improved minimization of the objective function by the computers and numerical methods of the twenty-first century.

Construction of simulated experiments in 1979 from two partial reactions at a constant heating rate (a) and a stepwise heating program (b). A random noise of Gaussian distribution was added to the obtained mass loss rate curves (c). (These plots were reconstructed from the data of Ref. [1])

The next paper in this direction appears to be the work of Braun & Burnham [2]. They presented a method that can be employed at any temperature program and applied it to simulated experiments with constant heating rates. In their Fig. 6 the evaluation of simulated experiments with heating rates of 0.56, 5.6 and 56 °C min−1 is shown. This choice of heating rates is very reasonable because the lowest value, 0.56 °C min−1, corresponds to a practical limit (ca. 18 h per experiment), while thermoanalytical experiments above 56 °C min−1 are frequently influenced by heat and/or mass transfer limitations. The studied models included a distributed activation energy model (DAEM), which has been a useful tool for the description of complex decomposition reactions for more than 40 years [3]. Burnham et al. [4] employed this evaluation method for studying the thermal decomposition of kerogens (the portion of organic materials in sedimentary rocks) in 1987, and for coal pyrolysis in 1989 [5]. More than one DAEM was used in their works. Sundararaman et al. [6] also studied the thermal decomposition of kerogens in 1992 by assuming different DAEMs and elaborating a complex algorithm for the evaluation.

Várhegyi et al. employed the LSQ evaluation of a series of non-isothermal experiments for biomass research in 1993 and 1994 [7, 8] and for the study of the controlled combustion of cokes and other chars in 1996 [9]. The article of 1993 [7] dealt with the behavior of cellulose in hermetically closed sample holders where the initial moisture and the volatile products of the pyrolysis remain together with the decomposing sample. The corresponding kinetic model contained competitive and consecutive reactions and included the catalytic effect of the water. Nine DSC experiments were evaluated simultaneously by the method of least squares. The experiments differed in the amount of cellulose and water enclosed in the sample holder. The corresponding DSC curves had different heights, which were compensated by the \(w_j\) weight factors in Eq. (2). The number of digitized points, \(N_{\text{points}}\), also differed in the experiments, and accordingly, the weights had the following form:

\[ w_j = \frac{1}{N_{\text{points}}\, h_j^{2}} \qquad (3) \]

Here \(h_j\) is the height of the experiment denoted by index j. The division by \(h_j^{2}\) serves as a normalization for the differential curves (which were normalized DSC signals in the given work). Without such a normalization the curves with higher magnitudes would dominate the objective function and a good fit for the smaller curves would not be ensured. Note that the experimental errors in thermal analysis are not statistical in nature. The random, independent noises of the digitized points are usually filtered out either by the instrument itself or by the data acquisition software. Accordingly, a higher number of digitized points does not result in a higher precision; that is why a division by \(N_{\text{points}}\) is needed. Without this division the experiments containing more digitized data would be overrepresented in the least squares sum. When the experiments have high reaction rates, the kinetics is frequently influenced by heat or mass transport limitations. On the other hand, the experiments with low reaction rates may be distorted by baseline uncertainties. The use of Eq. (3) means that the relative precision of the experiments is regarded to be roughly the same, which is a compromise. We have used the above weight factors from 1993 [7] to the present. Obviously, the mean values of the observations can also be used for normalization instead of the peak maximum. The difference between these two approaches is mainly practical: the mean value strongly depends on the interval selected for the evaluation, while the peak maximum is a better defined quantity.
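The effect of this weighting can be illustrated by a short Python sketch. It assumes that the weight of experiment j is the reciprocal of the number of its digitized points times the square of its peak height, as the text describes; the function name `experiment_weight` and the toy curves are hypothetical.

```python
def experiment_weight(curve):
    """Weight 1 / (N_points * h^2), where h is the peak height of the curve."""
    if not curve:
        raise ValueError("the curve must contain at least one point")
    height = max(abs(x) for x in curve)
    if height == 0.0:
        raise ValueError("the curve must have a nonzero peak height")
    return 1.0 / (len(curve) * height ** 2)


# Curve b has the same shape as curve a, but is three times higher and is
# digitized twice as densely. Both are fitted with the same 10% relative error.
a = [0.0, 1.0, 2.0, 1.0, 0.0]
b = [3.0 * x for x in a for _ in range(2)]
for curve in (a, b):
    misfit = sum((x - 0.9 * x) ** 2 for x in curve)
    print(experiment_weight(curve) * misfit)
```

Both weighted contributions come out equal, so neither the higher curve nor the more densely digitized one dominates the least squares sum.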

Shortly afterward further authors started to evaluate their non-isothermal experiments simultaneously [10, 11]. Várhegyi et al. [12] tried to draw the attention of the thermal analysis community to the importance of the simultaneous LSQ evaluation of more than one experiment in an article in the Journal of Thermal Analysis in 1996. This work was entitled “Application of complex reaction kinetic models in thermal analysis. The least squares evaluation of series of experiments.”

What temperature programs should be used for a series of experiments?

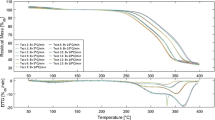

A dependable kinetic model should be based on an ample amount of experimental information. The usual way is to employ constant heating rate temperature programs with different heating rates. However, the experimental curves obtained in this way are frequently very similar to each other. Figure 2 illustrates this similarity for the normalized mass loss rate curves of wood samples by plotting experiments from a recent work [13]. Therefore, adding more heating rates to an experimental series does not really increase the amount of available experimental information. In our experience a set of constant heating rate experiments is frequently not sufficient to discern between different models or model variants.

Normalized mass loss rate curves of a spruce sample showing that the experiments obtained at different heating rates are usually very similar to each other. (Experiments from a recent work [13] are plotted in this figure)

A straightforward way would be the inclusion of isothermal experiments. However, we can seldom produce entirely isothermal experiments in thermal analysis because there is a transient period before reaching the isothermal part, during which important parts of the reactions might occur. A better way is to heat up the sample in a controlled way and to include the heat-up period, too, in the kinetic evaluation. Besides, it is well worth continuing the heating after the isothermal section to study the continuation of the processes. In other words, the isothermal experiments should be handled as experiments at a stepwise T(t), and the kinetic equations should be solved numerically from low to high temperatures, as was done by Várhegyi [1].

In our opinion, a wide variety of temperature programs should be used. If a model is good, it should describe well the observations at any T(t). In thermal analysis we can easily construct multistep temperature programs from linear sections including heat up, cool down and isothermal sections, as shown in Fig. 3a. If a kinetic model is good, it should describe the experimental data at such temperature programs, too.
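A multistep temperature program built from linear sections can be represented as a piecewise-linear function of time. The following Python sketch shows one way to do this; the function name `make_program` and the breakpoint values are hypothetical and do not correspond to the programs of Fig. 3a.

```python
import bisect


def make_program(breakpoints):
    """Return T(t) that interpolates linearly between (time_s, temp_C) breakpoints."""
    times = [t for t, _ in breakpoints]
    if len(times) < 2 or any(t2 <= t1 for t1, t2 in zip(times, times[1:])):
        raise ValueError("need at least two breakpoints with increasing times")

    def temperature(t):
        if t <= times[0]:
            return breakpoints[0][1]
        if t >= times[-1]:
            return breakpoints[-1][1]
        i = bisect.bisect_right(times, t) - 1      # section containing t
        (t0, temp0), (t1, temp1) = breakpoints[i], breakpoints[i + 1]
        return temp0 + (temp1 - temp0) * (t - t0) / (t1 - t0)

    return temperature


# Hypothetical program: heat-up, isothermal section, cool-down, final heat-up
program = make_program([(0, 100.0), (900, 250.0), (2700, 250.0),
                        (3000, 200.0), (4800, 500.0)])
print(program(450), program(1000), program(2850))   # 175.0 250.0 225.0
```

A model can then be solved numerically along any such T(t), which is what makes the heat-up, isothermal and cool-down sections usable in the same least squares evaluation.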

Nowadays several thermoanalytical apparatuses have special built-in temperature program features which can also add valuable information to a series of experiments. Figure 3b displays heating programs that were used for studying the thermal decomposition of wood [14]. The wavy line across Fig. 3b is a modulated T(t): sinusoidal waves with an amplitude of 5 °C and a period of 200 s were superposed on a 2 °C min−1 linear T(t) function. They served to increase the rather limited information content of the linear T(t) experiments. In the “constant reaction rate” (CRR) experiments the equipment regulated the heating of the samples so that the reaction rate would oscillate around a preset limit. The CRR experiments aimed at getting low mass loss rates in the entire domain of the reaction. The highest mass loss rate was found to be 0.8 μg s−1 in these experiments. The T(t) needed to keep the reaction rate around a preset limit obviously depends on the reactivity of the given sample. Figure 3b displays the T(t) programs that the instrument produced for the spruce (•••) and the birch (---) samples of the study.
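The modulated program described above can be written down explicitly. The Python sketch below uses the values quoted in the text (5 °C amplitude, 200 s wavelength, i.e., period, and a 2 °C min−1 underlying heating rate); the starting temperature and the zero phase of the sine wave are assumptions, and the function names are hypothetical.

```python
import math


def modulated_program(t_start_c, rate_per_min=2.0, amplitude=5.0, period_s=200.0):
    """T(t) in deg C: a sine wave superposed on a linear heating program."""
    def temperature(t):
        linear = t_start_c + rate_per_min / 60.0 * t
        return linear + amplitude * math.sin(2.0 * math.pi * t / period_s)
    return temperature


def heating_rate_range(rate_per_min=2.0, amplitude=5.0, period_s=200.0):
    """Lowest and highest instantaneous heating rate in deg C per minute."""
    swing = 2.0 * math.pi * amplitude / period_s * 60.0   # from d/dt of the sine term
    return rate_per_min - swing, rate_per_min + swing


program = modulated_program(100.0)          # 100 deg C start is an assumed value
print(program(0.0), program(50.0))
print(heating_rate_range())
```

Differentiating the sine term shows that the instantaneous heating rate swings by about ±9.4 °C min−1 around the 2 °C min−1 mean, so the sample is periodically cooled as well as heated. This is one way to see why such a program carries information that a purely linear T(t) does not.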

Note that the modulated and the CRR temperature programs have been available for a long time. (The CRR method was invented, under a different name, by the Paulik brothers nearly 50 years ago.) Their evaluation together with the linear and stepwise heating programs does not need extra effort: the numerical solution of the model can easily be carried out at any T(t) function. Still, this approach is not yet popular. In the field of biomass research we found the simultaneous LSQ evaluation of modulated, CRR and other types of experiments together only in works in which we participated [14,15,16,17,18,19].

An example: the controlled combustion of charcoals

Charcoals are usually made from wood or other lignocellulosic materials. These feedstocks have rather complicated chemical and physical structures. Accordingly, the charcoals are not homogeneous; they contain more reactive and less reactive parts. A simple approach for the kinetic description of the parts with different reactivity is the assumption of pseudo-components. The use of pseudo-components in biomass research has a long history, though the early investigators did not emphasize by the adjective “pseudo” that their components are not well-defined chemical compounds.

In our work we approximated the charcoal combustion by three pseudo-components. The figures presented here belong to the simultaneous least squares evaluation of 18 experiments on six different charcoal samples [19]. The samples were prepared from wood (spruce) and forest residue in three different ways. More details about the samples, the experiments and their evaluation can be found in Ref. [19]. We show here unpublished figures from the evaluation called “Model Variant II” in that work. Figure 4 shows the behavior of the pseudo-components during heating at 10 °C min−1. Figure 5 shows how the pseudo-components approximate the experimental mass loss rates at a modulated and a CRR heating program.

In this work each pseudo-component was described by Eq. (1). The formula selected for f(α) has two adjustable parameters and can approximate the self-acceleration caused by the increase in the internal pore surface area of the sample during charcoal combustion [9, 19]. Fifty-two unknown parameters were determined for the six samples from 18 experiments. Hence \(N_{\text{param}}/N_{\text{exper}}\) was 2.9 in this evaluation, meaning that fewer than three parameters were determined from each experimental curve.

Another example: the thermal decomposition of woods

Woods consist of three major components (cellulose, hemicellulose and lignin) and several minor components. Accordingly, the description of their thermal decomposition requires at least three pseudo-components. Here examples follow from a recent work of Barta-Rajnai et al. [13]. The thermal decomposition of the cellulose component is relatively simple under the usual conditions of thermal analysis. Usually first-order kinetics gives an adequate approximation, though self-accelerating kinetics frequently gives a somewhat better fit. We followed the latter approach in our recent works: the cellulose component was described by Eq. (1) with the same type of f(α) function that was used in our combustion and gasification studies [9, 13, 14, 16,17,18,19].

The thermal decomposition of the hemicellulose and lignin is more complex. There are several partial reactions. In our opinion the best available way is the use of a distributed activation energy model (DAEM). This approach was elaborated for coals more than 40 years ago [3] and has been used in biomass research since 1985 [20]. A DAEM approximates the decomposition kinetics of many reacting species. The reactivity differences are described by different activation energies. To keep the number of unknown parameters at a reasonable level, a distribution function can be assumed for the activation energies. See more details in the literature, e.g., in the classical work of Anthony et al. [3].
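For orientation, a commonly used form of the DAEM can be written as follows. This is a sketch under stated assumptions, not necessarily the exact variant used in the cited works: each reacting species is assumed to decompose by first-order kinetics with a common pre-exponential factor A, and the activation energies are assumed to follow a Gaussian distribution with mean \(E_0\) and standard deviation \(\sigma\).

\[ \alpha(t) = 1 - \int_0^\infty D(E)\, \exp\left[ -A \int_0^t \exp\left( -\frac{E}{R\,T(u)} \right) du \right] dE, \qquad D(E) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left[ -\frac{(E - E_0)^2}{2\sigma^2} \right] \]

With these assumptions a pseudo-component has only three kinetic parameters (A, \(E_0\) and \(\sigma\)), however many reacting species it stands for.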

The examples presented here belong to Evaluation 2 in the work of Barta-Rajnai et al., in which 18 experiments on one spruce and two torrefied spruce samples were evaluated together. Part of the parameters were chosen to be common for the three samples, as outlined in the next section. Twenty-one unknown parameters were determined for the three samples from 18 experiments; accordingly, \(N_{\text{param}}/N_{\text{exper}}\) was about 1.2. Figure 6 shows the pseudo-components at a constant heating rate. It may be interesting to note that we cannot simply call the first pseudo-component hemicellulose; the term “mainly hemicellulose” is more correct. The interpretation of the third pseudo-component particularly needs caution. The thermal decomposition of lignin occurs in a particularly wide temperature range under the experimental conditions of thermal analysis: from 200 to 800 °C. (See e.g., the work of Jakab et al. [21], which shows the thermal decomposition of 16 carefully prepared lignin samples at 20 °C min−1 heating in inert atmosphere.) This wide interval illustrates why we need a DAEM for the thermal decomposition kinetics of this material. Besides, the lignin decomposition overlaps with several other reactions that arise somewhere between 200 °C and the final temperature of the experiments. That is why a longer text is given for the third pseudo-component in Fig. 6. Figure 7 shows the pseudo-components and the curve fitting at a stepwise temperature program.

The number of the unknown parameters

There are many publications which employ Eq. (1) and regard the activation energy as a function of the reacted fraction, α. (See e.g., the ICTAC Kinetic Project [22].) In practice this means a graphical or tabular presentation of 20–100 E − A data pairs as a function of α. In this way 40–200 kinetic parameters are determined from a few simple experimental curves measured at constant heating rates. In reality, however, the information content of such an experimental series is much smaller.

Figure 8 shows −dm/dt curves simulated at 1, 10 and 100 °C min−1 heating rates, assuming two parallel first-order reactions. The same kinetic parameters were used in Fig. 1, where the overlap of the partial curves is illustrated at a 4 °C min−1 heating rate as well as at a stepwise program. This series of experiments is constructed from eight parameters: each partial curve has an E (146.44 and 167.36 kJ mol−1), an A (\(0.8167 \times 10^{11}\) and \(6.467 \times 10^{11}\) s−1), an n (= 1), and an area parameter (= 0.5). These parameter values were taken from Ref. [1]. Note that the true information content of the experiments shown in Fig. 8 is eight parameters. Similarly, the information content of the experiments shown in Fig. 3 is 12 kinetic parameters, or less. The experiments in Fig. 3 are part of a larger series of experiments from the work of Barta-Rajnai et al. [13]. The corresponding evaluation, outlined briefly in the previous section, assumed three pseudo-components and resulted in a reasonable fit for a wide range of temperature programs. Each of the pseudo-components has four parameters, of which three are kinetic parameters and one determines the magnitude of the given partial curve.
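Curves of this kind can be regenerated from the eight parameters alone. The Python sketch below simulates the overall −dm/dt of two parallel first-order reactions with the E, A and area values quoted in the text, reading the pre-exponential factors as multiples of 10^11 s−1. The temperature range, the step size and the integration scheme (an exact exponential update with the rate constant frozen at the midpoint of each small temperature step) are assumptions of this sketch and are not taken from Ref. [1].

```python
import math

R = 8.314  # gas constant, J mol^-1 K^-1

# (E in J/mol, A in 1/s, area parameter) of the two partial reactions
REACTIONS = [(146.44e3, 0.8167e11, 0.5), (167.36e3, 6.467e11, 0.5)]


def simulate(beta_per_min, t_start=400.0, t_end=800.0, d_temp=0.1):
    """Return (temperatures in K, overall -dm/dt in 1/s) at a constant heating rate."""
    dt = d_temp / (beta_per_min / 60.0)          # time needed for one temperature step
    unreacted = [1.0 for _ in REACTIONS]
    temps, rates = [], []
    for step in range(int(round((t_end - t_start) / d_temp))):
        t_mid = t_start + (step + 0.5) * d_temp
        rate = 0.0
        for i, (e_act, pre_exp, area) in enumerate(REACTIONS):
            k = pre_exp * math.exp(-e_act / (R * t_mid))
            remaining = unreacted[i] * math.exp(-k * dt)   # first-order decay over dt
            rate += area * (unreacted[i] - remaining) / dt
            unreacted[i] = remaining
        temps.append(t_mid)
        rates.append(rate)
    return temps, rates


for beta in (1.0, 10.0, 100.0):
    temps, rates = simulate(beta)
    top = max(rates)
    print(beta, round(temps[rates.index(top)] - 273.15, 1), top)
```

Whatever the heating rate, each simulated curve is fully determined by the same eight numbers, which is the point made above about the information content of such a series.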

However, the application of the Friedman method [23], or other model-free approaches [22], or the Miura–Maki method [24] for a DAEM evaluation would result in a very high number of kinetic parameter values for the experiments shown in Fig. 3 or 8. Note that a computing algorithm almost always results in some numbers; the question is the meaning, the reliability and the uniqueness of these numbers.

Toward the determination of kinetic parameters that are more reliable than the ones filling the literature nowadays

There is no general recipe to achieve this goal. There are a few pieces of advice that might be useful, as listed above. Among others, the experiments should cover a wide range of experimental conditions (as wide as the properties of the given samples, reactions and equipment permit). Frequently several samples are available which share some common features. If so, one can try to describe their reactions by assuming several common parameters. The goal is to base the obtained parameter values on a large amount of experimental information. In the work of Barta-Rajnai et al. [13] the ratio of the determined parameter values to the evaluated experiments, \(N_{\text{param}}/N_{\text{exper}}\), was close to one, meaning that each parameter value was based on nearly one TGA experiment. This was achieved by a systematic investigation to find which parameters could be assumed identical for the samples without a considerable worsening of the fit quality.

A cross section of recent works that use non-isothermal kinetics

An increasing number of kinetic works are published in thermal analysis. In the last 2 years the Journal of Thermal Analysis and Calorimetry published more than 200 articles containing the word “kinetic” or “kinetics” in their titles. We selected 60 of these articles for a closer look to obtain a cross section on the present state of the field. The selection was based on the relevance of the titles to the subjects of the present work. A quarter of the selected papers were found to be closely related to our treatment, as shown below.

Four papers employed the simultaneous least squares evaluation of more than one constant heating rate experiment. Conesa et al. [25] studied the shredder residues of motor vehicles in this way. Three heating rates (5, 15 and 30 °C min−1) and three different atmospheres (N2 with 0, 10 and 20% O2) were used. The complexity of the studied feedstock was described by assuming three pseudo-components. Their thermal reactions were described by a distributed activation energy model. The model assumed a Gaussian distribution of the activation energies. As a comparison the pseudo-components were also described by first-order kinetics. We think that this work was the closest match to the considerations outlined in the present article. In a subsequent work Conesa and Soler [26] studied biomass, electronic wastes and their mixture by similar means. In that work the reactions of the pseudo-components were described by first-order and n-order kinetics. Yang et al. [27] examined the combustion properties of peats by the simultaneous least squares evaluation of experiments at five heating rates. Three partial reactions were considered: pyrolysis, fuel oxidation and char burn. The partial reactions were described by n-order kinetics. Plis et al. [28] studied the combustion behavior of furniture wood wastes. One of their samples was the untreated waste, while four other samples were made from the original feedstock by thermal pre-treatments (torrefaction). The torrefaction served to improve the fuel properties. A simple kinetic model was used that consisted of two first-order partial reactions. The evaluation was based on the simultaneous evaluation of experiments at 5, 10 and 20 °C min−1 heating rates.

Two further works evaluated the experiments one-by-one by the method of least squares. This procedure is not sufficiently safe, as shown in the next section.

Four articles employed a formal deconvolution of experimental DTG curves that consisted of overlapping peaks. For the deconvolution each experiment was regarded as a sum of some artificial functions. Gaussian, Weibull, Pearson and Fraser–Suzuki functions were used for the approximation of the overlapping peaks in these works. The method is well illustrated by Fig. 10 in the article of Nishikawa et al. [29]. The theory and practice of the deconvolution method are explained in the book of Arhangelskii et al. [30]. However, we do not recommend this type of evaluation for the following reasons:

1. In our opinion there is no need for artificial functions in the deconvolution because the kinetic models themselves can serve for the description of the partial peaks, and the kinetic evaluation of the experiments can directly lead to a deconvolution. (See e.g., Figures 1–7 in the present work.)

2. We think that this method introduces artifacts into the evaluation. If Gaussian curves are used, for example, then the obtained kinetics will reflect the properties of the Gaussian curves.

3. The deconvolution is applicable only to constant heating rate measurements and is not suitable for the simultaneous evaluation of more than one experiment.

Four articles divided the complex TGA curves into smaller temperature domains and assumed a kinetic equation of type Eq. (1) in each domain. In these works the kinetic evaluation was carried out separately in each domain by a traditional evaluation method. However, the separation of the overlapping processes cannot be carried out by such simple means. Let us regard Fig. 1 in the work of Cruz and Crnkovic [31] as an example, which shows the oxidative decomposition of a lignocellulosic biomass sample. Here the border between the first and second reaction steps is around 305 °C. However, the thermal decomposition of the cellulose is far from being terminated at this temperature, while the reactions of the cellulose start earlier in this material. (That is why the two partial peaks overlap.) Besides, the thermal decomposition reactions of the lignin component take place everywhere between 200 and 600 °C at a considerable reaction rate [21]. This example illustrates why we cannot deduce the reacted fractions of the partial processes from the experimental TGA curves by this method.

Why one experiment is not enough for a dependable kinetic evaluation

Numerous works in the literature have shown that a single TGA experiment can be described in many ways; accordingly, a kinetic evaluation based on only one experiment is ill-defined. Here we add a new example that shows the similarities of n-order kinetics and DAEM kinetics with very different activation energies in a non-isothermal experiment.

A DTG curve was simulated at a 5 °C min−1 heating rate by a DAEM assuming a mean activation energy, \(E_0\), of 200 kJ mol−1 and a Gaussian distribution of the activation energy with a standard deviation, σ(E), of 10 kJ mol−1. This curve is represented by circles (○○○) in Fig. 9a. The pre-exponential factor was chosen so that the peak maximum would be close to that of a typical biomass decomposition at this slow heating rate. Afterward, this curve was approximated by another DAEM curve where σ(E) was fixed at 20 kJ mol−1. The method of least squares was used for this approximation. Though very different \(E_0\) and A values were obtained, the two curves are indistinguishable at 5 °C min−1, as Fig. 9a shows. Finally the original curve of \(E_0\) = 200 and σ(E) = 10 kJ mol−1 was approximated by simple n-order kinetics, and a good fit was obtained again. However, the high similarity of these curves is restricted to one heating rate. When the simulations were carried out at 50 °C min−1 with exactly the same kinetic parameter values, the three curves differed markedly from each other, as shown in Fig. 9b. Table 1 shows the kinetic parameters, the peak maxima and the peak widths (full width at half maximum, FWHM) belonging to Fig. 9.
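A DAEM curve of this kind can be simulated with a short Python sketch. The mean activation energy (200 kJ mol−1) and the standard deviation (10 kJ mol−1) follow the text, but the pre-exponential factor of 10^15 s−1 is only an assumed, hypothetical value because the text does not quote it here. The sketch further assumes first-order kinetics for every activation energy, discretizes the Gaussian on 41 energies within ±4σ, and freezes the rate constants at the midpoint of each small temperature step; it does not attempt to reproduce Fig. 9 or Table 1.

```python
import math

R = 8.314  # gas constant, J mol^-1 K^-1


def daem_rate_curve(e_mean, sigma, pre_exp, beta_per_min,
                    t_start=450.0, t_end=850.0, d_temp=0.5, n_energies=41):
    """Return (temperatures in K, d(alpha)/dt in 1/s) of a Gaussian DAEM."""
    dt = d_temp / (beta_per_min / 60.0)
    # Discretize the Gaussian distribution on e_mean +/- 4 sigma
    energies = [e_mean + sigma * (-4.0 + 8.0 * i / (n_energies - 1))
                for i in range(n_energies)]
    raw = [math.exp(-0.5 * ((e - e_mean) / sigma) ** 2) for e in energies]
    weights = [w / sum(raw) for w in raw]
    unreacted = [1.0] * n_energies
    temps, rates = [], []
    for step in range(int(round((t_end - t_start) / d_temp))):
        t_mid = t_start + (step + 0.5) * d_temp
        rate = 0.0
        for i, e_act in enumerate(energies):
            k = pre_exp * math.exp(-e_act / (R * t_mid))
            remaining = unreacted[i] * math.exp(-k * dt)   # first-order decay over dt
            rate += weights[i] * (unreacted[i] - remaining) / dt
            unreacted[i] = remaining
        temps.append(t_mid)
        rates.append(rate)
    return temps, rates


for beta in (5.0, 50.0):
    temps, rates = daem_rate_curve(200e3, 10e3, 1.0e15, beta)   # A is assumed
    print(beta, round(temps[rates.index(max(rates))] - 273.15, 1))
```

Running such a simulation at a second heating rate is the cheap part; the comparison described above additionally requires fitting the alternative models to the 5 °C min−1 curve by least squares before re-simulating them at 50 °C min−1.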

Conclusions

The non-isothermal kinetics of complex processes is not an easy field if it aims at well defined, reliable results. This work discussed some aspects of it based on several decades of experience. The main points were:

- The materials of practical importance seldom have simple thermal behavior.

- The traditional models and evaluation methods of non-isothermal kinetics are usually not suitable for materials with complicated chemical and/or physical structure.

- One should look for models that reflect, at least approximately, the complexity of the studied processes.

- The evaluation should be based on an ample amount of experimental information.

- It is advantageous to evaluate simultaneously experiments with linear and nonlinear temperature programs because a set of linear temperature programs (constant heating rate experiments) is frequently not sufficient to distinguish between different models or model variants.

- The method of least squares is highly advisable for the evaluation of series of experiments because, at the present level of computers and numerical methods, it can be carried out for any model complexity and any sort of temperature program.

- Sometimes different samples share some common features. In such cases one can try to describe their reactions by assuming part of the kinetic parameters to be common for the samples.

- The points listed above aim to base the obtained models and parameter values on a large amount of experimental information in a reliable way.
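The simultaneous least squares evaluation of a series of experiments with common kinetic parameters can be illustrated by the objective function below. It is a hedged sketch rather than the procedure used in this work: it assumes a single first-order reaction, uses synthetic "observed" curves generated from the same model instead of measured data, and normalizes each experiment by its number of points and squared peak height. The parameter values, the normalization and all names are assumptions made for the example.

```python
import math

R = 8.314462618  # gas constant, J mol^-1 K^-1


def first_order_rates(e, a, beta_c_per_min,
                      t_start_c=150.0, t_end_c=500.0, d_temp=1.0):
    """-dX/dt (s^-1) of a first-order reaction at a constant heating rate."""
    dt = d_temp / (beta_c_per_min / 60.0)  # seconds per temperature step
    n_steps = int(round((t_end_c - t_start_c) / d_temp))
    x = 1.0
    rates = []
    for i in range(n_steps):
        t_mid_k = t_start_c + (i + 0.5) * d_temp + 273.15
        x_new = x * math.exp(-a * math.exp(-e / (R * t_mid_k)) * dt)
        rates.append((x - x_new) / dt)
        x = x_new
    return rates


def objective(params, experiments):
    """Sum of normalized squared residuals over all experiments.

    params      : (E in J/mol, A in s^-1), common to every experiment
    experiments : list of (heating rate in deg C/min, observed rate curve)
    """
    e, a = params
    total = 0.0
    for beta, observed in experiments:
        calculated = first_order_rates(e, a, beta)
        height = max(observed)  # peak height used for normalization
        residual = sum((o - c) ** 2 for o, c in zip(observed, calculated))
        total += residual / (len(observed) * height ** 2)
    return total


# Synthetic "observations" at three heating rates (not experimental data)
TRUE_PARAMS = (200e3, 1e15)
experiments = [(beta, first_order_rates(*TRUE_PARAMS, beta))
               for beta in (5.0, 20.0, 50.0)]

fit_at_true = objective(TRUE_PARAMS, experiments)
fit_at_shifted = objective((190e3, 1e15), experiments)
print(fit_at_true, fit_at_shifted)
```

Any general-purpose numerical minimizer could be applied to such an objective function. Nonlinear temperature programs would only require replacing the constant heating rate by the actual temperature versus time data of each experiment.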

Acknowledgements

The authors acknowledge the financial support from the Research Council of Norway and a number of industrial partners through the project BioCarb+ (“Enabling the Biocarbon Value Chain for Energy”).

Cite this article

Várhegyi, G., Wang, L. & Skreiberg, Ø. Towards a meaningful non-isothermal kinetics for biomass materials and other complex organic samples. J Therm Anal Calorim 133, 703–712 (2018). https://doi.org/10.1007/s10973-017-6893-0

DOI: https://doi.org/10.1007/s10973-017-6893-0