Abstract

The minimum detectable dose (MDD) limit was examined in four different ways for groups of LiF:Mg,Ti thermoluminescent dosimeters, and two ways for CaF2:Dy, CaF2:Tm, CaF2:Mn, and CaSO4:Dy dosimeters. All types were irradiated and read out at dose intervals from 8.8 μGy to 6.6 mGy. Dose response linearity was never lost even for the lowest dose tested. As an ideal MDD, the signal arising from a zero applied dose readout was compared to calibration from true doses, resulting in signal corresponding to 0.04–0.1 μGy. The effects of fading and high ambient radon exposure on the MDD were examined.

Theory

Different detector types can be compared through their ability to determine a minimum amount of radioactive material or dose. This ability depends on the background radiation level, absolute detection efficiency, radiation source properties, and time of deployment, the last of which is the only one fully under user control. The same holds for thermoluminescent dosimeters (TLDs), and a study was conducted to identify and quantify as many of these factors as possible.

There are various definitions of the minimum dose limit, or detection threshold, available in the literature. Piesch and Burgkhardt proposed that the detection threshold \( D_{LDL} \) is three times the standard deviation \( s_{{\bar{E}}} \) of unirradiated dosimeters [1]:

\( D_{LDL} = 3\,s_{{\bar{E}}} \)  (1)

Based upon Eq. (1), in one of the studies carried out by Ranogajec-Komor [2, 3], \( D_{LDL} \) was found to be 0.2, 7 and 61 μGy for LiF:Mg,Cu,P; CaF2:Mn; and LiF:Mg,Ti dosimeters respectively.
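As a minimal illustration of the threshold described above (three times the standard deviation of unirradiated dosimeters), the sketch below evaluates it for a small set of zero-dose readings. The readings are hypothetical values chosen for illustration, not data from this study; the function name and the use of the sample (n − 1) standard deviation are assumptions.

```python
import statistics

def detection_threshold(zero_dose_readings_uGy):
    """Three times the sample standard deviation of unirradiated readings (assumed n-1 form)."""
    return 3.0 * statistics.stdev(zero_dose_readings_uGy)

# Hypothetical evaluated values (µGy) from five unirradiated dosimeters.
readings = [10.0, 12.0, 11.0, 9.0, 13.0]
d_ldl = detection_threshold(readings)
print(f"D_LDL = {d_ldl:.2f} µGy")
```

With these made-up readings the sample standard deviation is about 1.58 µGy, so the threshold is about 4.74 µGy.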

In its 1991 standard, revised in 2006, the International Electrotechnical Commission defined the detection threshold for environmental dosimeters as [4]:

where \( t_{n} \) is the Student’s t factor for n dosimeters, \( s_{{\bar{E}}} \) is the standard deviation of the evaluated value \( \bar{E} \) for all n unirradiated dosimeters, and H = 10 μGy for the purposes of environmental monitoring. However, the 2006 standard was withdrawn in 2011 in favor of a more general standard, most recently revised in 2012, which does not contain a test of detection threshold for passive environmental dosimeters [5].

A general treatment of the lower limits of any measurement process involving radiation defines the detection limit \( L_{D} \) and determination limit \( L_{Q} \). These were defined by Currie [6] as the “true” net signal level which may be expected to lead to detection, and the level at which the measurement precision will be satisfactory for quantitative determination, respectively. In the same work, the detection limit for any detector was given as [6]:

where α and β are the false positive and false negative probabilities, respectively, and \( k_{\alpha} \) and \( k_{\beta} \) come from the standard normal distribution and correspond to one-sided confidence levels of 1−α and 1−β.

For a TLD, however, which quantifies radiation in terms of delivered dose rather than detected counts, further work was required to determine the detection and determination limits. Hirning reworked Currie’s equation for the detection limit \( L_{D} \) for a TLD as follows [7]:

where \( t_{m} \) and \( t_{n} \) are Student’s t factors for sample sizes of m and n dosimeters respectively at the required confidence level, \( s_{b} \) is the sample standard deviation of n background dosimeters, \( s_{\mu} \) is the relative standard deviation of m dosimeters exposed to “high kerma” (i.e., higher than background), and \( \bar{K}_{b} \) is the measured background air kerma. However, the detection limit is only the boundary at which a TLD can be determined to have had any non-background exposure at all. To specify the minimum air kerma that can be measured with a given precision, the determination limit \( L_{Q} \) can be used [7]:

where \( s_{b} \), \( s_{\mu} \), and \( \bar{K}_{b} \) are as before, and \( k_{Q} \) is the inverse of the maximum relative standard deviation desired for the measurement.

Other minimum detectable dose limits inherent to the reader and dosimeters also exist in addition to the statistical limits given above. Even if other factors inherent to the dosimeters were completely negligible, the dark current present in the reader’s light collection device, such as a photomultiplier tube (PMT), would serve as a theoretical lower limit to any dose measurement, as no signal weaker than the dark current would appear on readout. However, as a practical matter, the actual lower limit will be higher than the dark current signal due to background and the continuous clearing of energy traps, i.e. fading.

Additionally, accurate measurement of dose is also reliant upon it falling within the dosimeters’ linear dose–response region, in which the signal emitted upon heating is directly proportional to dose. Outside this region, accurate dose quantification is hindered by supralinearity, or over-response in proportion to dose, and sublinearity, or under-response in proportion to dose. Although deviations from linearity can be corrected for with detailed measurement protocols and additional in-depth calibration [8], the breakdown of dose–response linearity at low doses is an important factor to consider when quoting a minimum detectable dose. If additional calibration is not possible or practical, the minimum value of the linear region would provide another minimum detectable dose limit. This value has been reported to be as high as 100 μGy for LiF:Mg,Ti, and as low as 1 μGy for CaF2:Mn and 0.1 μGy for CaF2:Dy [9].

Realistic TLD deployments for personnel monitoring last between 30 and 90 days, possibly longer still for environmental monitoring, and as such deployment time needs to be considered. Additional time can increase the minimum detectable dose limit, as longer deployments increase the background signal \( \bar{K}_{b} \) linearly with time and the sample standard deviation of background dosimeters \( s_{b} \) with the square root of time, following the statistics of accumulation times [10].

In addition to accumulated background signal, however, long deployment times may cause the effect of fading to be significant. In the case of LiF:Mg,Ti over a typical personnel monitoring deployment period of 30 to 90 days, the sum of peaks 4 and 5 does not fade significantly [11, 12], even at high ambient temperatures up to 40 °C [13]. If peaks 2 and 3 are considered as well, their fading could cause the signal actually seen to drop below the minimum detectable signal level. Known fading rates could be used to augment a time-sensitive minimum detectable dose function with the time it takes for a certain prompt dose to fade below the limit.

Finally, at low doses, individual glow curve peaks may not be discernible to computerized glow curve analysis software. Some dosimetry applications depend on such a program; for example, determination of post-irradiation time in LiF:Mg,Ti requires the measured areas of peaks 2, 3, 4, and 5 [14]. If no other limit applies, such applications may no longer be possible once the individual peaks in a glow curve can no longer be separated by the computer.

Experimental

Dose–response linearity experiment

Twenty TLD chips each of LiF:Mg,Ti, CaF2:Dy, CaF2:Tm, CaF2:Mn, and CaSO4:Dy (BICRON/Harshaw, 6801 Cochran Road, Solon, OH 44139, USA) were used in an experiment to determine the minimum detectable dose for each type. All chips were of dimensions 3.2 × 3.2 × 0.9 mm. These were calibrated to a dose of 4.4 mGy using a 320 GBq 137Cs source facility [15] while mounted to a polytetrafluoroethylene calibration phantom [16].

Each TLD was calibrated three times, deemed adequate for characterization of individual chip sensitivities [17, 18], then read out with a standard TLD reader with hot gas and hot planchet capabilities (Model 4500 TLD Reader, BICRON/Harshaw, 6801 Cochran Road, Solon, OH 44139, USA). Readout cycles were performed using nitrogen gas (Pre-purified compressed nitrogen cylinder 300, Metro Welding Supply Corporation, 12620 Southfield Road, Detroit, MI 48223, USA) flowing through the chamber to suppress light effects that occur only with oxygen present, such as triboluminescence [19]. Annealing was performed using a standard readout cycle, during which the planchet was heated to 50 °C, then heated to 300 °C at a rate of 10 °C s−1. The planchet was held at 300 °C until 33.3 s after the cycle started, then cooled to 50 °C where the TLD chip could then be replaced.

After calibration, the TLDs were exposed to 6.6 mGy, a relatively high dose compared to the manufacturer’s stated minimum detectable dose of 100 µGy. To see if dose response linearity failed at doses lower than the manufacturer’s stated minimum detectable dose, the process was then repeated for incrementally lower doses of 4.4 and 2.2 mGy; and 880, 660, 440, 220, 180, 130, 90, 40, and 8.8 µGy, in keeping with previous experiments (e.g. [20]). Higher doses were not tested as the TLD materials used were previously shown to exhibit dose–response linearity to at least 1 Gy [11]. Lower doses could not be tested due to the relatively high strength of the source and limitations in the minimum irradiation time.

A maximum of 24 h of pre-irradiation and 1 h of post-irradiation fading was allowed to occur between annealing and readout. Fading effects were compensated for using the results of a previous experiment [11]. The glow curve data were obtained using the packaged TLD reader software (WinREMS version PL-26732.8.0.0.0, BICRON/Harshaw, 6801 Cochran Road, Solon, OH 44139, USA) and the glow curve separated into individual glow peaks using a computerized glow curve analysis (GCA) program [20] for a numerical computing package (MATLAB R2010b, The MathWorks Inc., 3 Apple Hill Drive, Natick, MA 01760).

At the lowest dose levels, the dark current was observed and compared to the maximum glow peak height. An approximate dose was determined from the dark current signal, in order to determine the ideal minimum detectable dose if this were solely determined by the dark current. Fading and background radiation dose were ignored for this experiment.

During all other experiments, the behavior of the GCA program was observed during analysis of low dose glow curves to determine whether it had more difficulty separating individual glow peaks from the glow curve at low doses, or was unable to do so at all. This was done in order to determine whether a lower dose limit applies should GCA be necessary for the desired dosimetry application.

Detection and determination limits

In order to obtain the detection and determination limits proposed by Hirning and presented as Eqs. 2 and 3, influences on the three main factors present in both equations were determined. These are the sample standard deviation \( s_{b} \), the measured background air kerma \( \bar{K}_{b} \), and the relative standard deviation of high kerma dosimeters \( s_{\mu} \).

Several factors inherent to the reader, dosimeters, and calibration contribute to dosimeter signal precision, increasing \( s_{\mu} \). These include reader drift, TLD readout history, and source and dosimeter positioning if calibrating multiple dosimeters at once. Though difficult to quantify separately, these can be corrected for to result in an \( s_{\mu} \) of 10 % or less for new or nearly new dosimeters. An increase in the number of calibrations beyond that which is normally necessary for dosimetry can decrease the statistical variance for high kerma dosimeters as well, reducing \( s_{\mu} \) accordingly.

Factors not involving irradiation, such as reader drift, may contribute to \( s_{b} \). However, previous work has shown \( s_{b} \) to be dominated by background signal, varying with time according to the statistics of accumulation times as background signal is accumulated [10].

Factors contributing to background signal, increasing \( \bar{K}_{b} \), will cause \( s_{b} \) to increase as well, as it is not a relative value. In addition to background radiation at the storage location, radon can also increase background signal for TLDs [21]. For personnel dosimetry, movement through multiple areas may change background signal with time dramatically, though for simplicity this effect was ignored, with background signal assumed to be constant with time.

Data from a previous work [17] were used to apply fading to a previous study [10] of the effect of deployment time on the detection and determination limits. Though fading did not significantly affect \( s_{\mu} \), \( s_{b} \) or \( \bar{K}_{b} \), it may cause the TLD signal to drop below the signal required for a minimum detectable dose with a prompt measurement. All of the above factors were manipulated individually in order to determine their practical effects on the detection and determination limits.

Results and discussion

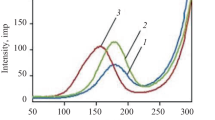

Linearity was confirmed in all five tested TLD materials from 8.8 μGy to 6.6 mGy, as shown in Fig. 1. \( R^{2} \) values were 0.997 or greater over the entire dose range for all TLD materials tested, confirming dose–response linearity. The high range exhibited strong linearity as expected, and the dose response remained linear down to the lowest tested dose level, with \( R^{2} \) values of 0.987 or greater over the low dose range 8.8 μGy to 0.88 mGy; results in that range may be seen more clearly in Fig. 2.
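The kind of linearity check described above can be sketched as an ordinary least-squares fit of reader signal against delivered dose, followed by the coefficient of determination. The dose levels are those quoted in the text; the signal values are hypothetical (a made-up sensitivity of 2.0 units per µGy with a small alternating offset), and the function name is an assumption rather than the analysis code used in this study.

```python
def linear_fit_r2(doses, signals):
    """Least-squares line through (dose, signal) pairs; returns slope, intercept, R^2."""
    n = len(doses)
    mean_x = sum(doses) / n
    mean_y = sum(signals) / n
    sxx = sum((x - mean_x) ** 2 for x in doses)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(doses, signals))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(doses, signals))
    ss_tot = sum((y - mean_y) ** 2 for y in signals)
    return slope, intercept, 1.0 - ss_res / ss_tot

# Dose levels quoted in the text, in µGy.
doses = [8.8, 40, 90, 130, 180, 220, 440, 660, 880, 2200, 4400, 6600]
# Hypothetical reader signals (arbitrary units), not measured data.
signals = [2.0 * d + (1.5 if i % 2 == 0 else -1.5) for i, d in enumerate(doses)]

slope, intercept, r2 = linear_fit_r2(doses, signals)
print(f"slope = {slope:.4f}, R^2 = {r2:.6f}")
```

Restricting `doses` and `signals` to the entries at or below 880 µGy gives the corresponding low-range fit.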

Below 180 μGy, the individual glow peaks of LiF:Mg,Ti blended together, with the GCA program requiring as many as ten times more iterations to separate the glow curve accurately. Below 88 μGy, the GCA program became ineffective, unable to separate the glow peaks at all. An example of this peak blending seen at 44 μGy is shown in Fig. 3. Despite this, the total glow curve area remained linear with dose. Glow curves from all other TLD types could still be separated accurately down to 8.8 μGy, the lowest dose tested.

In addition, the simple use of a GCA program causes an increase in \( s_{\mu} \). As the sum of the fitted functions for the individual glow peaks does not exactly match the original glow curve data, a small error is imparted when using peak areas computed by the program instead of the total glow curve area measured directly from the TLD reader’s original light sensor data. A typical measure of the goodness of fit of a GCA program, the figure of merit (FOM), quantifies this effect [22]:

\( \text{FOM} = \sum\limits_{j = j_{start}}^{j_{stop}} \frac{100\,\left| y_{j} - y(x_{j}) \right|}{A} \)

where A is the area under the individual peak or the entire glow curve, \( j_{start} \) and \( j_{stop} \) are the starting and ending temperatures in the fit region respectively, \( y_{j} \) is the light sensor current (in this case, from a photomultiplier tube) at temperature j, and \( y(x_{j}) \) is the value of the fit function at temperature j. A value of 1.75 % or less is typical of GCA programs. The effect of a larger FOM on \( s_{\mu} \) is difficult to quantify in general, as it depends on GCA program parameters, the number of peaks in the TLD material studied, and the high kerma dosimeter set size at a minimum. If the absolute minimization of dose limits is desired, a computerized GCA method should be avoided.
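A figure of merit of this kind can be computed as in the sketch below, which assumes the commonly used definition: 100 times the summed absolute residuals between measured and fitted values over the fit region, divided by the area A. Here A is approximated by the sum of the fitted values with unit channel width, which is an assumption, and the glow curve samples are hypothetical numbers chosen only to make the arithmetic easy to follow.

```python
def figure_of_merit(measured, fitted):
    """FOM in percent: 100 * sum(|y_j - y(x_j)|) / A over the fit region.

    A is approximated as the sum of fitted values (unit channel width),
    which is an assumption made for this sketch.
    """
    if len(measured) != len(fitted):
        raise ValueError("measured and fitted must have the same length")
    area = sum(fitted)
    residual = sum(abs(y - f) for y, f in zip(measured, fitted))
    return 100.0 * residual / area

# Hypothetical light sensor samples and fitted values across a fit region.
measured = [10.0, 20.0, 40.0, 20.0, 10.0]
fitted = [11.0, 19.0, 40.0, 21.0, 9.0]
fom = figure_of_merit(measured, fitted)
print(f"FOM = {fom:.2f} %")
```

In this made-up case the residuals sum to 4 and the fitted area is 100, giving an FOM of 4 %, which would be a poor fit by the 1.75 % guideline mentioned above.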

A thermoluminescent signal from a TLD can be obscured by dark current. One way of defining the minimum detectable dose is that which is perceptible above the dark current level. For the TLD reader used for this study with LiF:Mg,Ti, the PMT dark current’s equivalent dose level is stated to be less than 1 µGy by the manufacturer. This was verified by comparing values of the dark current level to the maximum of the tallest peak of the glow curve for each TLD material tested. Each was found to be less than 1 µGy, with results summarized in Table 1.

The presence or absence of radon, though previously found to affect background signal, and hence \( \bar{K}_{b} \), in bare LiF:Mg,Ti TLDs [11], was not found to significantly affect \( s_{b} \). Even in TLD groups not exposed to additional radon, \( s_{b} \) fluctuated by as much as 20 % from group to group. Background signal showed an increase of 2.16 µGy for each MBq m−3 h of integrated radon. The effect of high radon concentrations up to 37 kBq m−3 for a 7 day exposure, using a fixed radiation background air kerma rate of 0.131 µGy h−1, is shown in Fig. 4. The values used in Eqs. 2 and 3 to generate Fig. 4 were \( s_{\mu} \) = 0.0519, \( s_{b} \) = 4.71 µGy, \( \bar{K}_{b} \) = 14.7 µGy, and \( k_{Q} \) = 10.

As variance in \( \bar{K}_{b} \) alone has little effect on the detection and determination limits, very large radon concentrations are required before a significant difference is seen. A radon concentration of 18.5 kBq m−3 for 7 days is required to cause a 10 % increase in the 71 µGy determination limit, and no significant difference was found in the detection limit. Compared to the United States Environmental Protection Agency’s action level of 148 Bq m−3 [23], a very high radon concentration is required to have a significant effect on minimum detectable dose, though quantities as high as 410 kBq m−3 have been measured in homes [24]. If extrapolated to this value, the determination limit becomes 230 µGy, a clearly non-trivial increase. The detection limit only increases from 16 to 18 µGy.
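To give a sense of scale, the radon contribution to background signal can be estimated from the response quoted above (2.16 µGy per MBq m−3 h of integrated radon). The sketch below assumes that response stays linear over the whole concentration range, which is an extrapolation for the higher values; the function and constant names are hypothetical.

```python
# Response quoted in the text: µGy of background signal per MBq m^-3 h of integrated radon.
RADON_RESPONSE_UGY_PER_MBQ_M3_H = 2.16

def radon_background_uGy(concentration_kBq_m3, exposure_days):
    """Added background signal (µGy), assuming a linear response to integrated radon."""
    integrated_MBq_m3_h = (concentration_kBq_m3 / 1000.0) * exposure_days * 24.0
    return RADON_RESPONSE_UGY_PER_MBQ_M3_H * integrated_MBq_m3_h

# 7 day exposures at the EPA action level and at the two concentrations discussed in the text.
for label, conc in [("EPA action level", 0.148), ("18.5 kBq m^-3", 18.5), ("37 kBq m^-3", 37.0)]:
    print(f"{label}: {radon_background_uGy(conc, 7):.3f} µGy")
```

Under this assumption a week at the action level adds roughly 0.05 µGy, while a week at 18.5 kBq m−3 adds about 6.7 µGy, which can be compared with the 14.7 µGy background air kerma used for Fig. 4.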

In order to determine the effect of deployment time on the minimum dose limits, modifications were made to Eqs. 2 and 3 to replace the background air kerma \( \bar{K}_{b} \) and the sample standard deviation of dosimeters exposed to background \( s_{b} \) with parameters that account for the accumulation of background radiation dose with time. In so doing, the background air kerma was found to be \( \bar{K}_{b} = \tau \cdot \dot{\bar{K}}_{b} \), where \( \tau \) is the deployment time measured in days and \( \dot{\bar{K}}_{b} \) is the average daily background air kerma rate. The sample standard deviation of the background dosimeters was found to be \( s_{b} = \sqrt {s_{0}^{2} + \tau \cdot \alpha_{b} } \), where \( s_{0} \) is the sample standard deviation with no deployment time and \( \alpha_{b} \) is the statistical variance of the daily air kerma value, so that the accumulated contribution to \( s_{b} \) varies with the square root of \( \tau \) based on the statistics of accumulation times. Using data from a previous experiment and methodology from Traino et al. [10], \( \dot{\bar{K}}_{b} \) for this dosimetry system was found to be 2.10 µGy day−1, \( s_{0} \) to be 4.71 µGy and \( \alpha_{b} \) to be 9.7 × 10−4 µGy2 day−1.
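The two time-dependent parameters can be evaluated directly from the values quoted above. The sketch below does so for a few deployment times; the function and constant names are hypothetical, and only the numerical inputs come from the text.

```python
import math

K_RATE_UGY_PER_DAY = 2.10          # average daily background air kerma rate (from the text)
S0_UGY = 4.71                      # sample standard deviation with no deployment time
ALPHA_B_UGY2_PER_DAY = 9.7e-4      # variance of the daily air kerma value

def background_kerma(tau_days):
    """Accumulated background air kerma (µGy) after tau days."""
    return tau_days * K_RATE_UGY_PER_DAY

def background_sigma(tau_days):
    """Sample standard deviation of background dosimeters (µGy) after tau days."""
    return math.sqrt(S0_UGY ** 2 + tau_days * ALPHA_B_UGY2_PER_DAY)

for tau in (7, 30, 90):
    print(f"tau = {tau:2d} d: K_b = {background_kerma(tau):6.1f} µGy, "
          f"s_b = {background_sigma(tau):.4f} µGy")
```

For a 7 day deployment this reproduces the 14.7 µGy background air kerma used earlier, and it shows that with these inputs \( s_{b} \) grows only slightly over 90 days while \( \bar{K}_{b} \) grows to 189 µGy.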

Though fading could not be introduced into the time-sensitive detection and determination limits, as fading does not significantly change any of the included parameters, fading data from a previous experiment were used to determine the storage time necessary before irradiations to known low doses fell below the detection and determination limits. The time value at which a limit curve and a fading curve intersect is the maximum storage time allowed before fading causes a signal to be undetectable.
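Finding such an intersection can be sketched as a simple scan over storage time. The fading function used below is a hypothetical single exponential with a made-up rate, and the limit is held constant for simplicity; neither is the fading function or the time-dependent limit used in this study. All names are assumptions.

```python
import math

def crossing_time(signal, limit, t_max_days, step_days=0.01):
    """First time t in [0, t_max_days] at which signal(t) < limit(t), else None."""
    steps = int(round(t_max_days / step_days))
    for i in range(steps + 1):
        t = i * step_days
        if signal(t) < limit(t):
            return t
    return None

# Hypothetical fading of a 100 µGy prompt dose (illustrative rate only).
def faded_signal(t_days):
    return 100.0 * math.exp(-0.02 * t_days)

# Hypothetical constant limit (µGy); a time-dependent limit could be passed instead.
def limit(t_days):
    return 71.0

t_cross = crossing_time(faded_signal, limit, 90.0)
print(f"signal falls below the limit after about {t_cross:.2f} days")
```

Because both arguments are functions of time, measured fading curves and limits that grow with deployment time can be substituted without changing the search.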

Pre-irradiation and post-irradiation fading functions for the entire LiF:Mg,Ti glow curve were compared to the detection and determination limits for various prompt doses over a typical 90 day deployment period, using the same statistical values as before. Graphical results for a prompt dose of 100 μGy are shown in Fig. 5. The time axis value of the crossing point between a fading function and a limit indicates the time necessary for fading to push the signal below a limit. For 100 μGy, approximately 12 days of post-irradiation fading are required for the signal to fall below the determination limit, while 32 days of pre-irradiation fading are required. The sharp drop in the post-irradiation fading function is due to the rapid fading of peaks 2 and 3. Excluding these peaks renders the effect of fading on minimum detectable dose trivial, however, as the fading of the sum of peaks 4 and 5 is negligible over 90 days. Additional results for other prompt dose levels are given in Table 2. Prompt doses of 25 µGy are detectable for at least 4 days even with fading, and surpass the determination limit at 75 µGy. At 200 µGy, fading does not cause signal loss below the determination limit for at least 52 days of deployment.

Fig. 5 Pre-irradiation and post-irradiation fading functions for LiF:Mg,Ti compared to the detection and determination limits for a prompt dose of 100 μGy. Crossing points between fading functions and limits denote the time required for fading to push the signal below a limit and are expanded upon for other prompt doses in Table 2.

Previous work found \( s_{\mu} \) to improve from 4.2 % after a single calibration to 3.2 % after three calibrations and 3.0 % after five calibrations. The effectiveness of additional calibration drops sharply after three calibrations, with the effect on \( s_{\mu} \) becoming insignificant after five calibrations [12]. A reduction in \( s_{\mu} \) from 5 to 4 % has only a small effect on the detection limit, but a profound effect on the determination limit at high radiation background values. For example, at a background kerma of 200 µGy, the determination limit is reduced from 165 to 110 µGy when \( s_{\mu} \) is reduced from 5 to 4 %.

Conclusions

The dose response remains linear for doses as low as 8.8 μGy, much less than the manufacturer-stated minimum of 100 μGy, for the five TLD materials studied. An ideal minimum detectable dose can be determined by comparison to the PMT dark current, which was found to range from an equivalent dose of 0.04 μGy in CaF2:Dy to 0.1 μGy in CaF2:Tm and CaSO4:Dy. However, this holds true only with background radiation and fading neglected, an impractical assumption. Also, if a different TLD readout system with a higher dark current level is used, such as one based on a CCD or photodiode array, the dark current may become limiting instead of the detection and determination limits if its equivalent dose is higher than the detection limit from the TLD material statistics alone.

If the dosimetry application requires computerized GCA, blending of adjacent peaks in LiF:Mg,Ti at low doses causes difficulty for the computer below 180 μGy. At 88 μGy and below, the GCA program tested is unable to separate the peaks of the LiF:Mg,Ti glow curve. CaF2:Dy, CaF2:Tm, CaF2:Mn, and CaSO4:Dy could still be analyzed by the computer down to 8.8 μGy, however, and the blended LiF:Mg,Ti glow curves could still be analyzed by an integrating region of interest method to confirm dose–response linearity at low doses.

Radon can affect the minimum detection and determination limits for LiF:Mg,Ti by increasing the background air kerma \( \bar{K}_{b} \), though other statistical parameters are unaffected. A very high radon concentration of 18.5 kBq m−3 for 7 days was required to increase the determination limit by 10 % from an original value of 70 µGy. The detection limit remained nearly unaffected. Though a longer deployment time would increase the integrated radon level, it would increase the background air kerma \( \bar{K}_{b} \) as well. Unless the radon concentration is very high, the dose attributed to it alone will be small compared to radiation background. Still, LiF:Mg,Ti dosimeters left in an area with a high radon concentration may experience a slightly increased minimum detectable dose as a result of radon exposure. Before making a correction, the planned badge enclosure should be tested for radon permeability, as at least one common environmental dosimeter badge has been shown to be impervious to radon over short time periods [21].

Although fading does not affect the standard deviation of background or high kerma dosimeter groups, fading functions can be used to determine the amount of time in storage required for the signal from various prompt doses to fall below the detection and determination limits. When using the entire integrated glow curve, it should be noted that peaks 2 and 3 in LiF:Mg,Ti fade very quickly post-irradiation, causing a much faster approach to the limits. This may be remedied by considering peaks 4 and 5 only, though this would cause problems with dosimetry applications requiring other peaks, such as the determination of post-irradiation fading time, which requires peak 2 [14]. In general, over a 90 day deployment period, a prompt dose of below 75 µGy caused crossover with the detection limit within 90 days, while a prompt dose of above 75 µGy did not cross the detection limit but did cross the determination limit within 90 days. Fading data from other dosimeter types could be studied similarly in order to determine realistic detection and determination limits for more common materials.

References

1. Piesch E, Burgkhardt B (1984) Environmental monitoring, European interlaboratory test programme for integrating dosemeter systems. Commission of the European Communities, Luxemburg (EUR 8932)

2. Ranogajec-Komor M (2009) In: Aycik GA (ed) New techniques for the detection of nuclear and radioactive agents. Springer, Dordrecht, pp 97–111

3. Ranogajec-Komor M, Knežević Ž, Miljanić S, Vekić B (2008) Characterisation of radiophotoluminescent dosimeters for environmental monitoring. Radiat Meas 43:392–396

4. International Electrotechnical Commission (2006) Thermoluminescence dosimetry systems for personal and environmental monitoring. IEC, Geneva (61066:1991)

5. International Electrotechnical Commission (2012) Radiation protection instrumentation - passive integrating dosimetry systems for environmental and personal monitoring. IEC, Geneva (EC 62387-1:2012)

6. Currie LA (1968) Limits for qualitative detection and quantitative determination: application to radiochemistry. Anal Chem 40:586–593

7. Hirning CR (1992) Detection and determination limits for thermoluminescence dosimetry. Health Phys 62:223–227

8. Knoll GF (2010) Radiation detection and measurement. Wiley, New York, pp 733–778

9. Busuoli G (1981) In: Oberhofer M, Scharmann A (eds) Applied thermoluminescence dosimetry. CRC Press, Bristol, pp 83–96

10. Traino AC, Perrone F, Luperini C, Tana L, Lazzeri M, d’Errico F (1998) Influence of background exposure on TLD minimum dose detection and determination limits. Radiat Prot Dosim 78:257–262

11. Harvey JA, Haverland NP, Kearfott KJ (2010) Characterization of the glow-peak fading properties of six common thermoluminescent materials. Appl Radiat Isot 68:1988–2000

12. Harvey JA, Kearfott KJ (2011) Reproducibility of glow peak fading characteristics of thermoluminescent dosimeters. Radiat Meas 46:319–322

13. Harvey JA, Kearfott KJ (2012) Effects of high ambient temperature on glow-peak fading properties of LiF:Mg,Ti thermoluminescent dosemeters. Radiat Prot Dosim 149:109–115

14. Weinstein M, German U, Dubinsky S, Alfassi ZB (2003) On the determination of the postirradiation time from the glow curve of TLD100. Radiat Prot Dosim 106:121–130

15. Studenski MT, Haverland NP, Kearfott KJ (2007) Simulation, design, and construction of a 137Cs irradiation facility. Health Phys 92:S78–S86

16. Parker LW, Harvey JA, Kearfott KJ (2011) An integrated system for the beta, gamma and neutron calibration and storage of thermoluminescent dosimeters for a research laboratory. Health Phys 100:S43–S49

17. Harvey JA, Thomas EM, Kearfott KJ (2011) Quantification of various factors influencing the precision of thermoluminescent detector calibrations for new and used chip sets. Health Phys 100:S79–S91

18. Simpkins RW, Kearfott KJ (1997) The minimum number of observations necessary to develop an average thermoluminescent dosimeter element correction factor. Radiat Prot Manag 13:55–61

19. McKeever SWS, Moscovitch M, Townsend PD (1995) Thermoluminescence dosimetry materials: properties and uses. Ramtrans Publishing, Ashford

20. Harvey JA, Rodrigues ML, Kearfott KJ (2011) A computerized glow curve analysis (GCA) method for WinREMS thermoluminescent dosimeter data using MATLAB. Appl Radiat Isot 69:1282–1286

21. Harvey JA, Kearfott KJ (2011) The effects of high ambient radon on thermoluminescence dosimetry readings. Radiat Prot Dosim 147:491–497

22. Bos AJJ, Piters TM, Gomez Ros JM, Delgado A (1994) An intercomparison of glow curve analysis computer programs: II. Measured glow curves. Radiat Prot Dosim 51:257–264

23. United States Environmental Protection Agency (2012) A citizen’s guide to radon. EPA 402/K-12/002. United States Environmental Protection Agency, Washington

24. Kearfott KJ (1989) Preliminary experiences with Rn222 in Arizona homes. Health Phys 56:169–179

Acknowledgments

Muhammad Rafique is grateful to the Higher Education Commission of Pakistan for providing his postdoctoral fellowship through Grant No. Ref: 2-6(22)/PDFP/HEC/2013/14.

Cite this article

Harvey, J.A., Kearfott, K.J. & Rafique, M. Dose response linearity and practical factors influencing minimum detectable dose for various thermoluminescent detector types. J Radioanal Nucl Chem 303, 1711–1718 (2015). https://doi.org/10.1007/s10967-014-3794-6

DOI: https://doi.org/10.1007/s10967-014-3794-6