Abstract

This paper proposes a new decision making/optimization paradigm, the decision making/optimization in changeable spaces (DM/OCS). The unique feature of DM/OCS is that it incorporates human psychology and its dynamics as part of the decision making process and allows the restructuring of the decision parameters. DM/OCS is based on Habitual Domain theory, the decision parameters, the concept of competence set, and the mental operators 7-8-9 principles of deep knowledge. The covering and discovering processes are formulated as DM/OCS problems. Some illustrative examples of challenging problems that cannot be solved by traditional decision making/optimization techniques are formulated as DM/OCS problems and solved. In addition, some directions of research related to innovation dynamics, management, artificial intelligence, artificial and e-economics, scientific discovery, and knowledge extraction are provided in the conclusion.


1 Introduction

Decision making pervades human life. The quality of life of any individual, family, group, organization, or society depends directly on the quality of the decisions made by the mandated decision makers (DMs). Therefore, a sound theoretical framework is essential for deriving sound decisions. There are two approaches to decision making: qualitative and quantitative. In this paper, we focus on the quantitative approach, which went through two major stages. The early approach was essentially deterministic. It assumed that a fixed set X of decisions or alternatives is exactly known and unchangeable, and that a utility (or value) function u(⋅) representing the preferences of the DMs can be exactly determined. Each decision x in X is associated with a value u(x); a decision x is preferred to a decision y if u(y)<u(x). The problem of finding the best or optimal decision is then formulated as a mathematical programming problem in which the utility function u(x) is maximized over the set X (see [1] and references therein). Numerous solution methods were developed and implemented for linear programming (see [2] and references therein) and nonlinear programming (see [3] and references therein). This model was extended to decision making problems involving multiple criteria $u_1(x), u_2(x), \ldots, u_m(x)$. Here, too, many advances were made (see [4, 5] and references therein).
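To make the classical formulation concrete, the following minimal sketch maximizes a utility function over a fixed, fully known, finite set of alternatives. The alternatives, the utility values, and the names `alternatives`, `utility_table`, and `best_decision` are illustrative assumptions and do not come from the paper.

```python
# Classical (fixed-space) decision model: maximize u(x) over a known set X.
# All names and numbers here are hypothetical illustrations.

def best_decision(alternatives, utility):
    """Return an alternative x in X that maximizes u(x)."""
    return max(alternatives, key=utility)

# X is assumed to be fixed and fully known in advance.
alternatives = ["a", "b", "c"]

# u is assumed to be exactly known; here it is a simple lookup table.
utility_table = {"a": 2.0, "b": 5.0, "c": 3.5}

def utility(x):
    return utility_table[x]

x_star = best_decision(alternatives, utility)
print(x_star)  # b
```

The sketch only works because X and u are given up front; the examples that follow in the Introduction are precisely situations where neither is available at the start.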

In the second stage, the researchers became aware that in order to better model real decision making problems one has to take into account the uncertainties inherent in human judgment and the environment. The traditional deterministic model (single or multiple criteria) was extended to models incorporating partially or completely probabilistic or fuzzy (see [6–9] and references therein) or fuzzy-probabilistic inputs [10]. It is important to note that in probabilistic and/or fuzzy models it is assumed that the uncertain parameters vary within a certain range and have well-known shapes of probability distributions or fuzzy membership functions.

Despite the tremendous theoretical and practical results achieved in the quantitative approach, some important aspects of decision making have not been incorporated in the existing models. Indeed, in real decision making, some parameters may even be intangible. Without special efforts, we may not be aware of their existence. Even when they are noticed, their dimensions, ranges, and shapes may not be easily predetermined or assumed as in probabilistic and/or fuzzy models. Often, real-life decision making problems also involve parameters that are changeable, including the set of alternatives, the criteria, and the DMs, as situations and psychological states of the DMs change. Discovering and controlling the change of these parameters is a vital part of the process of solving challenging decision making problems. A decision making problem involving changeable parameters is called a decision making in changeable spaces (DMCS) problem. The following examples illustrate this new class of problems.

Example 1.1

(Game of silence [11])

Silence fell over a young couple after a family quarrel. They did not talk to each other for two days. The situation became uneasy for both husband and wife. Neither wanted to break the silence first for fear of losing face.

This problem cannot be formulated in the framework of traditional mathematical programming because the utility functions of the couple, and its set of alternatives, are not known. Indeed, the couple is in a stressful state and the solutions or strategies for solving the problem (return to normal life), have yet to be generated. Here, the couple faces a discovering-covering (dis/covering for short) problem. They want to return to normal life (covering a target), but they do not know how to achieve this target (discovering solutions).

Example 1.2

(Horse Race [12])

A retiring corporate chairman invited to his ranch two finalists (A and B) from whom he would select his replacement using a horse race. A and B, equally skillful in horseback riding, were given a black and white horse, respectively. The chairman laid out the course for the horse race and said, “Starting at the same time now, whoever’s horse is slower in completing the course will be selected as the next chairman!” After a puzzling period, A jumped on B’s horse and rode as fast as he could to the finishing line, while leaving his own horse behind. By the time B realized what was going on, it was already too late. Naturally, A became the new chairman.

Here, we have a dis/covering problem: the two finalists were puzzled at the beginning because it was not immediately apparent what strategy was needed to win the race. They had to discover a winning strategy themselves.

Example 1.3

(Prisoner Dilemma)

A businessman appointed two of his sons as managers of two of his companies, which produced the same products in the same region. After a period of good relations and cooperation, the two brothers started to compete for market share by cutting their prices. Both brothers were caught in a decision trap from which they could not escape. The situation became difficult for both because, after cutting their prices so many times, they were approaching their production costs. The one whose price reached his production cost first would naturally have to leave the market, with all the consequences that this implies in terms of losses, which generally lead to bankruptcy.

Here also, being trapped in a price war, the sons had to generate new ideas to solve the conflict satisfactorily. In other words, they faced a discovering problem.

Another example is the well-known Nokia case. Innovation is a key to success in a competitive environment. Nokia lost a large part of its market share when it failed to create new products that satisfied the needs of its customers. Innovation is a discovering process that essentially depends on decision making. Nokia failed to discover the smart phone in time because its decision makers did not see the trend in customer needs. As we see, the discovery process cannot be formulated as a traditional optimization problem, because the set of needs of customers is a changeable space, which Nokia failed to discover. Furthermore, product design requires generating new ideas and solutions that do not exist or are not known a priori.

From Examples 1.1–1.3, it appears clearly that the traditional theoretical framework or paradigm for decision making ignores many important aspects of the decision making process, such as the DMs’ psychological states dynamics and uncertain parameters with unknown range and/or dimensions. In fact, a nontrivial decision making process involves at least two groups of parameters, namely, decision elements and decision environmental facets, which include the human behavioral system [13]. A decision making model that incorporates these two components and DMs’ psychological states would be sounder and closer to reality. Habitual Domain (HD) theory [14–16] is an adequate framework for the development of such a comprehensive model. In this paper, we focus on modeling covering and discovering as DMCS problems, which include a large class of decision making problems. In Sects. 3 and 5, we will present the situations of Examples 1.1–1.3 as DMCS problems. Moreover, we will analyze them and provide their solutions.

When DMs face a problem E, they have a relevant set of skills, competences, and resources, called a competence set [17], to handle it (we analyze this set later). It often happens that the current competence set is not adequate for solving the problem, in which case its transformation into a suitable competence set is necessary. This transformation is known as the covering process. Covering can be defined as “how to transform a given set of skills, competences, and resources to cover a targeted set of skills, competences, and resources”.

Given a competence set, what is the best way to make use of it to solve unsolved problems or to create value? The process underlying this problem solving or value creation involves discovering. A discovering process can be defined as identifying how to use available tangible and intangible skills, competences, and resources to solve an unsolved problem or to produce new ideas, concepts, products, or services that satisfy some newly-emerging needs of people. Usually, a nontrivial covering problem involves discovering and vice versa. These two processes are complementary and contrasting. Indeed, covering requires the generation of new ideas and/or concepts, i.e., discovering, while discovering requires some target or objective that the DMs want to reach or achieve, that is, to cover.

Since DMCS problems represent a new class of decision making problems, we introduce a new appropriate optimization paradigm that we call Optimization in Changeable Spaces (OCS), to handle them. The unique characteristic of OCS is that it incorporates human psychology and its dynamics and allows the restructuring of a DMCS to solve it.

The rest of the paper is organized as follows. Section 2 briefly presents the decision making process from HD theory perspective and the decision parameters. Section 3 presents competence set analysis. Section 4 introduces OCS and provides necessary and sufficient conditions for dis/covering completion. Section 5 provides some applications of Sect. 4’s results. Section 6 provides potential applications and research directions. Section 7 concludes the paper.

2 Habitual Domains and Decision Making in Changeable Spaces

As mentioned in the Introduction, the traditional framework for decision making is not appropriate for the formulation and resolution of DMCS problems because it does not take into account the psychological states of the DMs, does not handle parameters with unknown shapes and ranges, and does not allow the restructuring of the decision parameters during the decision process. In this paper, we demonstrate that Habitual Domain (HD) theory [12, 14] can be used as a basis for developing a comprehensive framework for DMCS problems. Thus, we essentially use HD theory as a general framework to develop our model of dis/covering. Because of space constraints, this section introduces HD theory only briefly. For more details, we refer the reader to the books of Yu [12, 14] and [18].

The collection of ideas and actions (including ways of perceiving, thinking, responding, acting, and memory) in our brain, together with their formation, dynamics, and basis in experience and knowledge, is called our Habitual Domain (HD) [18]. Over time, unless extraordinary or purposeful effort is exerted, our HD will become stabilized within a certain domain. This can be proven mathematically [19]. As a consequence, we observe that each of us has habitual ways of eating, dressing, speaking, reacting to specific situations, etc.

The concept of an individual’s HD can be extended to other living entities, such as companies, social organizations, and groups in general. The following are the basic elements of HD.

- (i) The potential domain (PD): the collection of ideas and actions that can potentially be activated to occupy our attention.
- (ii) The actual domain (AD): the set of ideas and actions that are actually activated or which occupy our attention.
- (iii) The activation probabilities (AP): the probabilities that ideas or actions in PD also belong to AD.
- (iv) The Reachable Domain (RD): the set of ideas and actions that can be attained from a given set in AD.
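The four elements listed above can be sketched as a small data structure. This is only an illustration under simplifying assumptions: ideas are plain strings, the reachable domain is computed in a single step from a hypothetical `reach` map, and the RD is assumed to include the AD itself. The class name, the field names, and the sample ideas are not taken from HD theory.

```python
from dataclasses import dataclass, field

@dataclass
class HabitualDomain:
    """Snapshot of a habitual domain at one time t (illustrative only)."""
    potential: set          # PD: ideas/actions that could be activated
    actual: set             # AD: ideas/actions currently activated
    activation_prob: dict   # AP: idea -> probability of belonging to AD
    # Hypothetical helper: idea -> ideas attainable from it.
    reach: dict = field(default_factory=dict)

    def reachable(self):
        """RD: ideas attainable from the current AD (assumed to include AD)."""
        rd = set(self.actual)
        for idea in self.actual:
            rd |= self.reach.get(idea, set())
        return rd

hd = HabitualDomain(
    potential={"wait", "save face", "talk", "apologize"},
    actual={"wait", "save face"},
    activation_prob={"wait": 0.9, "save face": 0.8, "talk": 0.2, "apologize": 0.1},
    reach={"wait": {"talk"}},
)
print(sorted(hd.reachable()))  # ['save face', 'talk', 'wait']
```

In this toy snapshot, "apologize" lies in the potential domain but outside the reachable domain, which is the kind of idea the HD tools of Sect. 3.2 are meant to bring within reach.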

Thus, the habitual domain can be formally formulated as

$$ \mathit{HD}_{t} = \{ \mathit{PD}_{t}, \mathit{AD}_{t}, \mathit{AP}_{t}, \mathit{RD}_{t} \}, $$(1)

where t represents time. The theory of HD is based on eight hypotheses H1–H8: (H1) Circuit Pattern Hypothesis, (H2) Unlimited Capacity Hypothesis, (H3) Efficient Restructuring Hypothesis, (H4) Analogy and Association Hypothesis, (H5) Goal Setting and State Evaluation Hypothesis, (H6) Charge Structure and Attention Allocation Hypothesis, (H7) Discharge Hypothesis, and (H8) Information Input Hypothesis.

The hypotheses H1–H8 are explained in the Appendix. Note that hypotheses H1–H4 describe how the brain operates, while hypotheses H5–H8 describe how the mind operates. Moreover, a high level of charge can be a drive for active problem solving or can create a mental stress if no action is taken. For more details on the hypotheses H1–H8 and their interaction, we refer the reader to [12, 15, 16].

In fact, it is humans that make decisions; therefore, understanding the human behavioral system plays a vital role in making good decisions. The complex processes of human behaviors have a common denominator, resulting from a common behavior mechanism. The mechanism depicts the dynamics of human behavior. Based on the literature of psychology, neural physiology, dynamic optimization theory, and system science, Yu [12, 14, 15] described the dynamic human behavior mechanism as presented in Fig. 1, which is briefly explained below:

- (i) Box (1) is our brain and its extended nervous system. Its functions may be described by the four hypotheses (H1–H4) in the Appendix.
- (ii) Boxes (2)–(3) represent two basic functions of our mind, Goal Setting and State Evaluation, explained by H5 in the Appendix.
- (iii) Boxes (4)–(6) represent how we allocate our attention to various events, described by H6 in the Appendix.
- (iv) Boxes (8)–(9), (10), and (14) represent the least resistance principle, which humans use to release their charges (precursors of mental stress), described by H7 in the Appendix.
- (v) Boxes (7), (12)–(13), and (11) represent the information input into our information processing center (Box (1)). Boxes (10) and (11) are two important functions of human thinking and information processing. Boxes (7), (12)–(13) represent external information inputs, an important parameter in decision making, which are explained by H8 in the Appendix.

2.1 Decision Making Parameters

Dis/covering problems are fundamentally DMCS problems. Therefore, a complete description of the real decision making process is a prerequisite to formulation of a model of dis/covering problems. Decision making in nontrivial situations is a complex process that involves two interacting groups of parameters, namely, the decision elements and environmental facets. Moreover, many nontrivial decision problems involve uncertainty and the unknown, which can lead to judgmental fuzziness and decision failure. The unknown and uncertainty may be due to the changing nature and/or unawareness of the relevant parameters. In the next section, we describe briefly the decision parameters.

2.1.1 Decision Elements

In general, there are five basic elements involved in a decision making process: (i) decision alternatives, (ii) decision criteria, (iii) decision outcomes, (iv) decision preferences, and (v) decision information inputs. In existing decision theories, these elements are implicitly assumed to be fixed. In real decision making problems, however, they are not fixed; they change over time depending on events, information input, and the psychological states of DMs, especially when the decision process is in a transition state. In other words, each of these elements is a changeable space. They can also be considered as HDs: they tend to stabilize if no relevant event and/or information arrives. For more details, we refer the reader to [13].

2.1.2 Decision Environmental Facets

Decision environments may be described by four facets: (i) decisions as a part of the human behavior mechanism, (ii) stages of the decision making processes, (iii) players in the decision making processes, and (iv) unknowns in decision making processes. Here also, the existing decision theories implicitly assume that facets (ii)–(iv) are more or less fixed; however, in real decision making problems, they change over time depending on the psychological states of DMs and the arriving events and information. As for (i), most decision theories do not incorporate it. Let us elaborate on the unknowns in the decision making process. For more details on the other facets, we refer the reader to [13].

Knowing the unknowns and how to manage them may add satisfaction to our decision processes; otherwise, they may create fear, frustration and bitterness. Unknowns may exist in any decision element. Because of HDs and being unaware of the decision parameters and their changing nature, people would easily have decision blinds or even get into decision traps. When blinds or traps occur, it is hard to see the problem clearly, let alone to solve it effectively and efficiently. Note that the blinds and traps can cause fuzziness or unknown in decision making. Conversely, fuzziness and unknowing about the nature of problems can lead to decision blinds, traps, and wrong decisions.

Formally, a DMCS problem can be represented, from the perspective of one decision maker, by the collection $\{X_t, C_t, F_t, D_t, I_t, HD_t, J_t, U_t\}$, where the time t varies in a certain interval [0,L] representing the allowable time for solving the problem; $X_t$ is the available alternative set at time t; $C_t$ is the criteria set at time t; $F_t$ is the outcome measured in terms of the criteria at time t; $D_t$ is the preference of the DM at time t; $I_t$ is the information input at time t; $HD_t$ is the Habitual Domain of the DM at time t; $J_t$ is the set of the involved DMs at time t; and $U_t$ is the set of unknowns at time t. It is important to note that the described decision parameters not only vary with time, but also mutually interact with each other through time. Time-optimal and time-satisficing solutions (optimal or satisficing as perceived by DMs during a certain period of time, see Chaps. 7–8 of [12, 14]) become important solution concepts.
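As an illustration of how the collection above might be held in code, the sketch below stores one snapshot per time step and derives the next snapshot when an unknown is noticed. It borrows the setting of Example 1.2, but the field types, the `discover` helper, and all strings are simplifying assumptions made for this sketch, not part of the formal model.

```python
from dataclasses import dataclass, replace

@dataclass
class DMCSState:
    """One snapshot {X_t, C_t, F_t, D_t, I_t, HD_t, J_t, U_t} (illustrative)."""
    X: set    # available alternatives at time t
    C: set    # criteria
    F: dict   # outcomes: alternative -> outcome measured on the criteria
    D: str    # preference, kept as a plain description here
    I: set    # information inputs
    HD: set   # habitual domain, simplified to a set of active ideas
    J: set    # decision makers involved
    U: set    # unknowns

def discover(state, unknown, new_alternative, outcome):
    """Return the next snapshot after an unknown is noticed and turned
    into a new alternative; several spaces change at once, not only X."""
    return replace(
        state,
        X=state.X | {new_alternative},
        F={**state.F, new_alternative: outcome},
        I=state.I | {unknown},
        U=state.U - {unknown},
    )

s0 = DMCSState(
    X={"ride own horse slowly"},
    C={"own horse finishes last"},
    F={"ride own horse slowly": "stalemate"},
    D="prefer outcomes where own horse finishes last",
    I=set(),
    HD={"a rider rides his own horse"},
    J={"A", "B"},
    U={"riders may swap horses"},
)
s1 = discover(s0, "riders may swap horses",
              "ride rival's horse fast", "own horse finishes last")
print(len(s0.X), len(s1.X))  # 1 2
```

Keeping each snapshot immutable in practice (a new object per time step) makes the trajectory of the changeable spaces easy to inspect after the fact.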

3 Competence Set Analysis

Competence Set Analysis began with Yu in 1989 [20], as a derivative of HD theory. Its mathematical foundation was built by Yu and Zhang [21–23]. The competence set (CS) for a given decision problem is defined as a collection of ideas, knowledge, skills and resources for its effective resolution. Therefore, knowing the characteristics and dynamics of this set is essential for successfully solving challenging problems. When the decision maker thinks he/she has already acquired and mastered the CS as perceived, he/she would feel comfortable making the decision and/or undertaking the challenge. For more details on competence set, we refer the reader to [13].

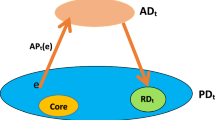

3.1 Decision Blinds and Decision Traps from a Competence Set Perspective

The competence set CS of a problem E is, in fact, a projection of the DMs’ HDs onto the problem. Implicitly, it contains the actual domain, potential domain, reachable domain, and activation probabilities (Eq. (1)). For simplicity, assume that CS(E) is constant, and denote by $CS_t(E)$ the competence set of the DMs at time t. Then $CS(E) \setminus CS_t(E)$ is the decision blind: the set of all the competences required but not seen by the decision makers at time t. See the illustration in Fig. 2.

Note that the larger the decision blind is, the more likely it is that the DMs might make important mistakes. For more details, we refer the reader to [13].

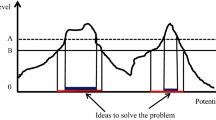

Suppose that $CS_t(E)$ is fixed for all t in a certain domain and $CS(E) \setminus CS_t(E)$ is large. Then we tend to make mistakes in decisions, and we are in a decision trap. Note that $CS_t(E)$ being fixed or trapped in a certain domain is equivalent to the corresponding actual domain (AD) and reachable domain (RD) being fixed or trapped in a certain domain. This can occur when we are in a very highly charged state of mind or when we are overconfident, which makes us respond quickly and unthinkingly and habitually repeat the behavior that leads to decision traps. In Fig. 3 below, one can see that decision blinds shrink as we move our AD from A to B and then to C. By changing our actual domain (AD), we can change and expand our reachable domain (RD). We can reduce decision blinds and/or avoid decision traps by systematically changing the AD. For illustration, assume that CS(E) and RD are given, as depicted in Fig. 3.

Then, as we move the AD from A to B, then to C, our decision blinds reduce progressively from CS(E)∖RD(A) to CS(E)∖(RD(A)∪RD(B)) then CS(E)∖(RD(A)∪RD(B)∪RD(C)).
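The progressive reduction of decision blinds described above can be reproduced with plain set operations. In the sketch below, the competence items `c1` to `c6` and the contents of RD(A), RD(B), and RD(C) are made-up placeholders; only the set expressions follow the text.

```python
# Hypothetical competence items; CS(E) and the RD sets are invented.
required = {"c1", "c2", "c3", "c4", "c5", "c6"}   # CS(E)

reachable_from = {                                 # RD(A), RD(B), RD(C)
    "A": {"c1", "c2"},
    "B": {"c2", "c3", "c4"},
    "C": {"c5"},
}

def blinds_along_path(required, reachable_from, path):
    """Yield the decision blind after each move of the actual domain."""
    covered = set()
    for point in path:
        covered |= reachable_from[point]   # union of the RDs visited so far
        yield point, required - covered    # CS(E) minus that union

history = list(blinds_along_path(required, reachable_from, ["A", "B", "C"]))
for point, blind in history:
    print(point, sorted(blind))
# A ['c3', 'c4', 'c5', 'c6']
# B ['c5', 'c6']
# C ['c6']
```

The blind can only shrink or stay the same along the path, since each move adds a reachable domain to the union; here `c6` remains unseen, so further moves of the AD would still be needed.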

For challenging decision problems, we can treat the 9 decision parameters of Sect. 2.1 as 9 points of AD. Systematically moving over these 9 parameters and pondering their possible RDs can expand our RD for dealing with the challenging problems. As a consequence, $CS_t(E)$ is expanded and our decision blind, $CS(E) \setminus CS_t(E)$, is reduced. In the next section, we will introduce HD tools to enrich and expand our HD and $CS_t(E)$ to reduce the decision blinds and avoid decision traps.

3.2 Clarifying Fuzziness and Unknown in Decision Making Using HD Tools

To reduce the blinds and avoid traps, in addition to being aware of the decision parameters and their changing nature, we need HD tools to expand and enrich our actual and reachable domains and to look into the depth of our potential domains. The HD tools can also expand and enrich our perception of the decision problem and its related parameters. Here, we present three toolboxes: the seven empowering operators, the eight basic methods for expanding HD (Eq. (1)), and the nine principles of deep knowledge. We call them the 7-8-9 principles of deep knowledge (the 7-8-9 principles for short). Note that DMs are free to use or ignore any of the HD tools in the three toolboxes. In fact, the more we use them, the more powerful they become in our brain and the more readily they help us expand and enrich our HD. These basic general principles can be used individually or in combination to create new ideas for solving dis/covering problems. The list is derived from HD theory, and we do not claim that it is exhaustive; the reader may propose other general principles, as well as more specific principles for specific dis/covering problems.

3.2.1 Seven Empowerment Operators

The seven empowering operators listed in Table 1 can make our minds think positively, with great hope and confidence to explore our world so as to achieve our goals. As a result, whenever these operators occupy our minds, we can expand and enrich our HD.

3.2.2 Eight Basic Methods for Expanding HD

The eight basic principles, as listed in Table 2, are almost self-explanatory. They can expand our HDs. These principles, usually, through self-suggestion, will enable us to generate new ideas, new concepts and, consequently, to expand our HDs.

3.2.3 Nine Principles of Deep Knowledge

The nine principles of deep knowledge, as listed in Table 3, not only allow us to understand and expand our HDs, but also help us use our own HDs and other people’s HDs to solve our problems.

For more details on the 7-8-9 principles, we refer the reader to [12, 13, 16].

Remark 3.1

One can, by analogy, see the cognitive process of a DM as an internal light of the mind that illuminates part of the DM’s Potential Domain. The efficiency and effectiveness of solving a DMCS problem then depend on the brightness, intensity, and orientation (flexibility) of this internal light. It is important to note that the 7-8-9 principles of deep knowledge are tools that the DM can use to go deeper into his/her potential domains and to change and enlarge the scope of his/her Reachable and Actual domains. Therefore, these principles have a direct effect on the guidance and control of the DM’s internal light. The more skillfully and frequently they are used, the brighter, more intense, and more flexible (in terms of direction or orientation) the DM’s internal light becomes. The brightness of the internal light illuminates the area it points to, its intensity helps it penetrate into the depth of the potential domains in the direction it focuses on, and its flexibility makes it possible to change the direction it points to. For instance, consider the problem of covering the points A, B, and C of the targeted competence set CS(E) in Fig. 3. By using one or more of the 7-8-9 principles, the DM may increase the brightness of the light to enlarge the scope of his/her RD and reach the point A. Next, using some of the 7-8-9 principles, he/she may increase the intensity of the light so as to reach deeper parts of the Potential Domain, and then reach the point B. The DM may also use the 7-8-9 principles to increase the brightness and intensity and to change the direction (flexibility) of the internal light at the same time, so as to reach the point C. Indeed, referring to Fig. 2, a person who diligently and repeatedly uses the 7-8-9 principles increases the brightness, intensity, and flexibility of his/her internal light, thereby enlarging his/her reachable domain in all directions. Thus, enlarging the HDs by the 7-8-9 principles increases the potential of DMs to cover a larger number of the ideas, skills, and concepts that are part of the targeted competence set CS(E), that is, to reduce the decision blinds and avoid decision traps.

The cognitive process in dis/covering may be described by the situation of a person who enters a dark, open area for the first time, equipped with a torch of variable brightness and intensity, to look for something he/she believes is there. The dark area would be the Ω 1 space, the area covered by the torch would be the Reachable Domain, and the torch’s light would be his/her internal light. Falling into a decision trap can be similarly represented by a person who enters a cave and does not know how to get out. He/she has a torch of variable brightness and intensity to look for the way out. Thus, completing dis/covering depends essentially on the brightness, intensity, and flexibility of the torch’s light.

3.2.4 Competence Set Analysis of Dis/Covering

To illustrate how competence set analysis can be used to study real-world dis/covering problems, we now discuss Example 1.1.

Example 3.1

In Example 1.1, the husband and wife were, in fact, facing a covering problem in which the initial competence set $CS^*$ consisted of hostile thoughts, ideas, and behavior, and the targeted competence set CS consisted of cooperative ideas, thoughts, and behavior, i.e., normal family life. They could not solve this covering problem because neither wanted to be the first to break the silence, for fear of losing face. In terms of competence set analysis, they were in a decision trap. The situation was worsening, and the charge level of both was increasing. Staying in such a situation for a long time may lead to divorce, as often happens. Fortunately, the couple got out of the decision trap and reached CS as follows. The wife started opening and closing drawers while approaching her husband, who was watching TV. This action made the husband ask, “What are you looking for?” She attentively answered, “Your voice.” The husband felt his wife’s love. They hugged each other, and a return to normal life followed naturally.

Let us analyze the dis/covering process that took place using competence set analysis. We describe the transition from the initial competence set $CS^*$ to the final competence set CS in steps.

- Step 1. (Discovering) Since both husband and wife stuck to their positions, each waiting for the other to break the silence, they were caught in a decision trap. In terms of HD theory, we may say that their actual domains (ADs) and/or reachable domains (RDs) were exclusively occupied by the thoughts of “not losing face,” “waiting for the other to act,” and related thoughts. We now show how they got out of the decision trap by using the 7-8-9 principles. First, note that the situation considerably increased the charge level of both. As mentioned in Sect. 2, a high charge level can be a drive for problem solving and action. Therefore, the wife started to apply the “Deep and Down Principle,” M16, to reduce her charge level so as to reach deeper parts of her HD and allow good ideas and thoughts to surface, i.e., to be activated into the AD or to enter the Reachable Domain, RD. At the same time, the wife applied the “Contradiction Principle,” M22, the “Projecting from Higher Position Principle,” M9, and “Our remaining lifetime is our most valuable asset. I will enjoy it fully and make a 100 % contribution to society in each moment of my remaining life,” M7, from the 7-8-9 principles, which made her compare their current atmosphere of conflict with the ideal situation of good family life and see that the latter should be much more important. Further, applying the principles M1 and M3 of the Seven Empowering Operators, “Everyone is a priceless living entity. We are all unique creations who carry the spark of the divine” and “There are reasons for everything that occurs; one major reason is to help us grow and develop,” she came to the conclusion that this quarrel was just a test of the strength of their relationship, one that they had to overcome. Thus, by using the six operators mentioned, the wife considerably expanded the initial competence set $CS^*$ into a new competence set $CS^1$ consisting of thoughts related to the desire to return to normal family life, not losing face, and waiting for the other to break the silence. To achieve the transition from $CS^*$ to $CS^1$, the wife used the six operators M16, M22, M9, M7, M1, and M3. One may express this transition as a compound operation as follows:

$$ \mathrm{M}_{3} \mathbin{\mathrm{o}}\mathrm{M}_{1} \mathbin{\mathrm{o}}\mathrm{M}_{7} \mathbin{\mathrm{o}}\mathrm{M}_{9} \mathbin{\mathrm{o}}\mathrm{M}_{22} \mathbin{\mathrm{o}}\mathrm{M}_{16}\bigl(\mathit{CS}^*\bigr)= \mathit{CS}^{1} . $$(2)

With $CS^1$, the wife has considerably improved her competence set, but she still cannot get the couple out of the decision trap. Here and in the rest of the paper, the symbol “o” in Eq. (2) does not denote the usual composition of two functions; it reflects the order of operations.

- Step 2. :

-

Applying the “Alternating Principle,” M17, the wife came to the idea to change from waiting the husband to break the silence to the idea of making him break the silence without losing face. This mental operation changed her competence set from CS 1 to CS 2. With CS 2 including CS 1 and making the husband break the silence without losing face, the wife felt that she is close to be able to solve the problem. One may express this transition as follows:

$$ \mathrm{M}_{17}\bigl(\mathit{CS}^{1}\bigr)= \mathit{CS}^{2} . $$(3) - Step 3. :

-

(Covering) Next, she has concentrated on finding the ways she can push the husband break the silence without losing face. In order to find such ways, she applied the “Deep and Down Principle,” M16, to go deeper into her potential domain PD and the “Inner Connection Principle,” M20. Then she came to the ideas of raising the husband curiosity because curiosity satisfaction is one of the most basic goals of human being, and activating one of the most important elements of his Habitual Domain core, his love for her. Thus, she arrived at a new competence set CS 3. With CS 3 including CS 2, the husband’s curiosity goal and his love for her, she felt that now she is able to solve the problem, it remains to generate strategies raising husband’s curiosity and activating his love for her. One may express this transition as compound operation as follows:

$$ \mathrm{M}_{20} \mathbin{\mathrm{o}}\mathrm{M}_{16} \bigl( \mathit{CS}^{2}\bigr)= \mathit{CS}^{3}. $$(4)

- Step 4.

To arouse the husband’s curiosity and activate his love for her, she applied the “Alternating Principle,” M17, again, together with the “Active Association Principle,” M10. Thus, she could generate a set of strategies {c 1,c 2,…,c l } that could arouse curiosity and activate feelings of love. Among them was the strategy of “approaching him by opening and closing the drawers” to arouse his curiosity, and of responding that “she is looking for his voice” when he asks what is going on, to activate his love for her. With the new competence set CS 4=CS 3∪{c 1,c 2,…,c l }, the wife became confident that the problem would be solved, that is, that CS 4 contains the competence set CS required to solve the problem. She chose to implement the “opening and closing drawers” strategy. One may express this transition as a compound operation as follows:

$$ \mathit{CS} \subset \mathit{CS}^{4} = \mathrm{M}_{10} \mathbin{\mathrm{o}}\mathrm{M}_{17}\bigl(\mathit{CS}^{3}\bigr) . $$(5)

One may summarize the whole operation (Eq. (2))–(Eq. (5)) of transforming the initial competence set CS ∗ to the final competence set CS 4 as follows:

$$ \mathit{CS} \subset \mathit{CS}^{4} = \mathrm{M}_{10} \mathbin{\mathrm{o}}\mathrm{M}_{17}\mathbin{\mathrm{o}}\mathrm{M}_{20} \mathbin{\mathrm{o}}\mathrm{M}_{16} \mathbin{\mathrm{o}}\mathrm{M}_{17} \mathbin{\mathrm{o}}\mathrm{M}_{3} \mathbin{\mathrm{o}}\mathrm{M}_{1} \mathbin{\mathrm{o}}\mathrm{M}_{7} \mathbin{\mathrm{o}}\mathrm{M}_{9} \mathbin{\mathrm{o}}\mathrm{M}_{22} \mathbin{\mathrm{o}}\mathrm{M}_{16}\bigl(\mathit{CS}^*\bigr) . $$(6)

Remark 3.2

Referring to Remark 3.1, Example 3.1 shows clearly that different people may get different outcomes when they use the same 7-8-9 principles to solve the same problem, depending on how skillfully they use them. Indeed, we have seen that by using the “Deep and Down Principle,” M16, the wife could come up with new ideas and move from a hostile state of mind to a cooperative state of mind to solve the problem, while the husband could not change his mind even though he too was making considerable mental effort, including the use of the “Deep and Down Principle,” M16.

4 Optimization in Changeable Spaces

In this section, we introduce a new optimization paradigm to formulate and solve DMCS, the optimization in changeable spaces (OCS) or Second Order Optimization. The OCS model introduces psychological and cognitive aspects and the possibility to restructure the decision parameters into optimization, which have never been considered in existing optimization models. OCS is formulated in the framework of HD theory (Sect. 2). The fundamental elements of OCS are the decision parameters including the five decision making elements, the four decision making environmental facets (see Sect. 2), the 7-8-9 principles of deep knowledge (see Sect. 3.2) and the concept of competence set presented in Sect. 3.

In our formulation of OCS problems, we focus on one general problem, namely, the dis/covering problem. Before that, we need to introduce some mathematical notations and tools. The 7-8-9 principles, M 1,M 2,…,M 24, can help generate new ideas to solve problems; therefore, mathematically, they can be thought of as operators that transform ideas into other ideas. Thus, the domain of these operators is the Ω 1-space of all the knowledge and skills that the whole of humanity has reached so far. The operators M 1,M 2,…,M 24 are set-to-set functions with domain Ω 1-space and range Ω-space, such that for any subset A of Ω 1, M i (A)⊂Ω. The space Ω is the space of all the knowledge and skills that the whole of humanity has reached so far together with the knowledge and skills it will reach in the future. The Ω-space is not a set in the traditional sense because its boundaries are not known.

For presentation convenience, denote by M:={M 1,M 2,…,M 24} the set of 7-8-9 principles. In the decision making process, the DMs may apply these principles individually at some times or use a sequence of them at other times; an individual principle may also be applied repeatedly over some period of time. A finite compound of principles M i(1)oM i(2)o⋯oM i(s) from M is called an ideas generation operator or IG-operator. For any part A of the Ω 1-space, the DMs could generate new ideas by the operation M i(1)oM i(2)o⋯oM i(s)(A), which we call an ideas generation operation or IG-operation. Let us denote by

the set of all IG-operators based on M.
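To make the notion of an IG-operator concrete, the following minimal sketch models each principle as a set-to-set function on a finite set of ideas and builds a compound operator in which the rightmost principle is applied first, as in Eq. (2). The helper names `make_operator` and `ig_operator`, and the toy rules attached to M16 and M17, are illustrative assumptions and not definitions taken from HD theory.

```python
from functools import reduce
from typing import Callable, Dict, FrozenSet, Iterable

CompetenceSet = FrozenSet[str]
Operator = Callable[[CompetenceSet], CompetenceSet]


def make_operator(new_ideas: Dict[str, Iterable[str]]) -> Operator:
    """Build a toy principle: each idea already held may trigger new ideas."""
    def apply(cs: CompetenceSet) -> CompetenceSet:
        generated = set(cs)  # acquired ideas are kept
        for idea in cs:
            generated.update(new_ideas.get(idea, ()))
        return frozenset(generated)
    return apply


def ig_operator(*principles: Operator) -> Operator:
    """Compound operator written left to right as M_a o M_b o ...;
    the rightmost principle is applied first."""
    def apply(cs: CompetenceSet) -> CompetenceSet:
        return reduce(lambda acc, m: m(acc), reversed(principles), cs)
    return apply


# Hypothetical stand-ins for two principles (toy rules, assumed for illustration).
M16 = make_operator({"silence": ["wish for normal life"]})
M17 = make_operator({"wish for normal life": ["make him speak first"]})

H = ig_operator(M17, M16)        # reads as M17 o M16: M16 acts first
cs_star = frozenset({"silence"})
cs_new = H(cs_star)              # contains all three ideas
cs_other = ig_operator(M16, M17)(cs_star)  # reversed order yields fewer ideas
```

In this toy setting the order of the principles matters: applying M17 before M16 generates nothing new at the first step, which mirrors the remark that “o” records the order of operations.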

4.1 Covering

In Sect. 1, we defined the covering problem verbally as “how to transform a given competence set CS ∗ into a set that contains a targeted competence set CS”. In fact, at any time, the competence set is just a projection of the DMs’ Habitual Domains (Eq. (1)); hence, it has an Actual Domain, a Reachable Domain, a Potential Domain, and Activation Probabilities. In this paper, we focus only on the reachable domain part of the competence set, that is, the skills and resources that can be reached from the actual domain. Generally speaking, at any time, the competence set of the DMs may include part or all of the decision parameters, skills, and resources related to the decision making problem. The transformation from one competence set to another can occur when there is a change in the actual domain, when the reachable domain is expanded to deeper parts of the potential domain, or when new ideas are acquired from outside the DMs’ habitual domains. The 7-8-9 principles of deep knowledge are very useful for realizing such a transformation. In this paper, IG-operators will be used as tools of competence set transformation.

4.1.1 Feasibility and Minimum Time and/or Cost Covering

Assume that a time frame [0,L] for completing the covering is given. The first question that arises is: Is there an IG-operator H that can complete covering within the allowed time? In other words, is the covering problem feasible? This problem can be formulated as follows:

here and in the rest of the paper, t(H) is the duration of the transformation of the given competence set, including the time spent finding or selecting the IG-operator H. We will deal with the covering feasibility problem in Sect. 4.3. Assume that the covering problem is feasible, i.e., there exists at least one IG-operator that can lead to covering CS within the allowed time. Then the minimum time covering problem can be formulated as follows:

In the constraints of (9), the set CS ∗ can be replaced by the Ω 1-space. As far as the authors know, such a problem has not been discussed in the literature. The unique feature of this problem is that it involves the mental operator H in CM, which is defined on a domain that is not endowed with a known mathematical structure that would make it tractable by traditional optimization methods. New mathematical structures may be needed to solve this problem in its general form; this could be a worthy direction of research. For the time being, in order to make the problem accessible to traditional methods, some further restrictive assumptions should be made. The optimization problem (9) can be formulated in a way that takes into account the stages or steps of the decision making process as follows. Assuming that there are p+1 stages, we have

where H(i) is the IG-operator implemented at stage i. This problem looks like an optimal control problem; however, there is a fundamental difference: H(i) is not a traditional control function but an ideas generation operator, and the dynamics of CS t are not governed by a differential or difference equation.

Assume that the DMs can provide an estimate c(H) of the cost of any IG-operator H∈CM, then the minimum cost covering problem can be formulated as follows:

When the DMs are interested in time and cost efficiency at the same time, a multiple criteria formulation is more suitable

The reader may derive more OCS problems from the previous models. In Sect. 5, we present some applications of the models (8)–(12).
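As a rough illustration of the minimum time covering problem, the sketch below reads it as “minimize t(H) subject to CS ⊂ H(CS ∗) and t(H)≤L” and solves a toy instance by enumerating all sequences of at most `max_len` principles. The principle rules, their durations, and the bound on the sequence length are hypothetical assumptions introduced only to make the search finite; real IG-operators act on the Ω 1-space and admit no such enumeration.

```python
from itertools import product

# Hypothetical toy data: each principle maps a trigger idea to new ideas
# and has an assumed duration; none of these values comes from the paper.
PRINCIPLES = {
    "M16": ({"a": {"b"}}, 2.0),
    "M17": ({"b": {"c"}}, 1.0),
    "M10": ({"a": {"b", "c"}}, 4.0),
}


def apply_principle(name, cs):
    """Apply one toy principle; ideas already acquired are never lost."""
    rules, _ = PRINCIPLES[name]
    out = set(cs)
    for idea in cs:
        out |= rules.get(idea, set())
    return frozenset(out)


def run(sequence, cs_star):
    """Apply principles in order of application; return (result, duration)."""
    cs, total = frozenset(cs_star), 0.0
    for name in sequence:
        cs = apply_principle(name, cs)
        total += PRINCIPLES[name][1]
    return cs, total


def min_time_covering(cs_star, target, limit, max_len=3):
    """Return the fastest sequence whose result contains `target` within
    `limit`, or None if the toy covering problem is infeasible."""
    target = frozenset(target)
    best = None
    for length in range(1, max_len + 1):
        for seq in product(PRINCIPLES, repeat=length):
            cs, total = run(seq, cs_star)
            if target <= cs and total <= limit:
                if best is None or total < best[1]:
                    best = (seq, total)
    return best


best = min_time_covering({"a"}, {"b", "c"}, limit=5.0)
infeasible = min_time_covering({"a"}, {"b", "c"}, limit=2.5)
```

Here `best` is the two-step sequence M16 then M17 with total duration 3.0, which beats the single but slower M10, while the tighter limit of 2.5 makes the toy problem infeasible. Replacing the duration by an assumed cost c(H) gives the analogous minimum cost search.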

Remark 4.1

In traditional optimization, the term “minimization” is about finding the absolute minimum of some objective function subject to some constraints. Absolute minimum may not be reached when a problem involves human psychology and changeable spaces. Therefore, in HD theory “minimization” is about reducing the charge level of the decision maker to a satisfactory or acceptable level. Thus, minimization in problems (9)–(12) and in the problems that appear in the rest of the paper should be understood in the HD theory sense not in traditional sense.

4.2 Discovering

In this section, we present the discovering problem as an OCS problem. In Sect. 1, we have seen that discovering is the transformation of a given competence set CS ∗ into a new competence set so as to solve an unsolved problem. In terms of HD theory, discovering contributes to reducing the charge level (see the Appendix and Sect. 2) or relieving the pain of some targeted people. Thus, the general formulation of discovering as an OCS problem is

where ch(H(CS ∗)) is the resulting charge level after implementation of the IG-operator H. When discovery time is limited, a multiple criteria formulation is more suitable

where t(H) is the duration of the operation H(CS ∗). In fact, the traditional mathematical programming problem

$$ \min f(x) \quad \mbox{subject to} \quad g_{i}(x) = b_{i},\ i=1,2,\ldots,m,\ x \in X $$(15)

is a special case of the problem (13). Indeed, in (15) the objective function f(x) expresses cost or profit in general, which is a special expression of charge level because maximizing profit or minimizing costs reduces the charge level of the DMs; the constraints of (15), generally, express the available resources and how they are used for a given decision x. In other words, the constraints express the existing resources (competence set) and how they are used or transformed to create value for a given decision x.

Remark 4.2

The problem (15) could be an optimal control problem, i.e., f could be an integral functional, the constraints could be a system of differential or difference equations, and x could be a time dependent control function u(t).

The traditional optimization problem reduces to a dis/covering problem. Formulating (15) as an OCS problem, we obtain the problem

where CS ∗:={x/x∈X,g i (x)=b i , i=1,2,…,m} is the fixed set of available alternatives derived from the constraints of (15); \(\mathit{CS}: = \{ x^{*}/x^{*} \in \mathit{CS}^{*}, f(x^{*}) = \min_{x \in \mathit{CS}^{*}}f(x)\}\) is the set of optimal solutions of (15); H is an IG-operator; and c(H) is the cost of finding and implementing H, e.g., time cost. In terms of the problem (15), the operator H in (16) may be interpreted as an optimization method or algorithm, e.g., a gradient method. Here, covering CS means covering at least one element from CS. A solution H of (16) generally involves mathematical transformations that can be represented mainly by the principle M21, the “Transforming and Changing principle,” and the other 7-8-9 principles. Thus, the formulation of a traditional optimization problem alone is not an OCS problem because its decision parameters are fixed. However, once the search for its optimal solution is considered, it becomes an OCS problem. In general, (15) may appear at the late stages of the resolution process of an OCS problem, when the decision blinds and decision traps have been eliminated.

4.3 Necessary and Sufficient Conditions for Covering

In this subsection, we present the dis/covering process in a way that allows us to derive some necessary and sufficient conditions for its completion within the allowable time [0,L]. We consider an approach based on the cardinality of the competence set CS t at any time t.

The resolution of the covering problem depends on the DMs’ awareness of the decision parameters related to the problem, the 7-8-9 principles, and the dynamics of their HDs. Naturally, in covering problems, the HDs and competence sets of the DMs should not stay trapped for a long time in some area before covering is completed, especially when the available time is limited and short. Thus, by avoiding long-lasting decision traps within the allowed time [0,L], the covering problem could be solved. Generally speaking, if the allowed time is large enough, the covering problem could be solved when there is continuous acquisition (up to some period Δ) of new elements from the targeted competence set CS. In order to get some practical results, we make the following general assumptions.

Assumption 4.1

At any time t of the dis/covering process, the DMs have a correct perception about their actual competence set and the actual targeted competence set. Moreover, the targeted competence set CS is constant.

Assumption 4.2

One may generally assume that when ideas, skills, or resources are acquired, they are not lost in the future, i.e., the sequence of competence sets CS t is nondecreasing: CS t⊂CS t′ for all t, t′ such that t<t′.

4.3.1 Cardinality Approach to Covering

Let us assume that the initial competence set CS ∗ and the targeted competence set CS are finite (as is generally the case), i.e., CS ∗:={a 1,a 2,…,a n } and CS:={b 1,b 2,…,b m }.

Definition 4.1

Let CS 0:=CS ∗, then the covering problem can be formulated as follows:

where L is the maximum time allowed to solve the covering problem.

Denote by AQ t:=CS t∩CS the acquired set of ideas, skills, and competences from the targeted competence set CS at time t and let q t :=Card{AQ t} be the cardinality of AQ t. Then the covering problem (17) can be simply formulated as follows:

Definition 4.2

(Decision trap)

We say that the decision makers are in a decision trap iff there exists some time t 0 such that q t is constant for all t in the interval [t 0,t 1], where t 1>t 0+Δ. The time Δ, 0<Δ<L, depends on the allowable covering time [0,L]; it can be set subjectively by the decision makers. Δ is called the decision trap threshold.

Definition 4.2 means that the DMs cannot acquire any new elements from \(\mathit{CS}\backslash \mathit{CS}^{t^{0}}\) to be added to CS t during the period [t 0,t 1]. In terms of HD theory, Δ depends on the DMs’ charge level. If it is high, the DMs tend to take a small value for Δ, whereas when it is low, the DMs tend to take a large value for Δ. It is important to note that the DMs often fall into a decision trap without being aware of it. In this case, the period Δ is not relevant and the covering process may not be completed within the allowed time. In this paper, we assume that when the DMs are in a decision trap, they are aware of it. A covering process that does not involve decision traps can be formally defined as follows.

Definition 4.3

We say that the covering process is operational iff for all t such that CS∖CS t≠∅, there exists some period r(t)≤Δ such that t+r(t)≤L and q t+r(t)−q t ≥1.

In other words, starting from any time t, the competence set CS t does not stop acquiring additional elements from CS for a period longer than Δ, which means that an operational covering process is a process that never falls into a decision trap. The condition CS∖CS t≠∅ means that the covering process is not completed at time t, while t+r(t)≤L and q t+r(t)−q t ≥1 mean that at least one element from CS∖CS t is acquired by the DMs within the remaining time [t,L], without falling into a decision trap. Consequently, we have the following necessary conditions for covering completion within the allowable time.
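Definitions 4.2 and 4.3 can be checked mechanically once the sequence q t is observed at discrete time steps. The sketch below is one such discrete-time reading, under the assumptions that time is measured in integer steps, that `q[t]` stores q t for t=0,…,L, and that a plateau at q t =m (covering already completed) is not counted as a trap; the function names are hypothetical.

```python
def has_decision_trap(q, m, delta):
    """True if q stays constant below m for more than `delta` consecutive
    steps (discrete-time reading of Definition 4.2)."""
    run_length = 0
    for prev, cur in zip(q, q[1:]):
        if cur == prev and cur < m:
            run_length += 1
        else:
            run_length = 0
        if run_length > delta:
            return True
    return False


def is_operational(q, m, delta):
    """Discrete-time reading of Definition 4.3 with L = len(q) - 1: whenever
    covering is incomplete at step t, at least one new element must be
    acquired within r <= delta steps and no later than step L."""
    horizon = len(q) - 1
    for t, q_t in enumerate(q):
        if q_t >= m:
            continue  # covering already completed at step t
        gained = any(
            q[t + r] - q_t >= 1
            for r in range(1, delta + 1)
            if t + r <= horizon
        )
        if not gained:
            return False
    return True


# Worst case with m = 3 and delta = 2: one element every delta steps.
worst_case = [0, 0, 1, 1, 2, 2, 3]
# A process stuck at q = 0 for three steps before progressing.
trapped = [0, 0, 0, 0, 1, 2, 3]
```

With these toy sequences, `worst_case` is operational and trap-free and completes exactly at step mΔ=6, whereas `trapped` contains a decision trap and is therefore not operational.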

Necessary Conditions

There are two necessary conditions for completing the covering.

(i) In case the covering process is expected to be operational, a necessary and sufficient condition is that t ∗:=max{t(b i ), i=1,2,…,m}≤L, where \(m := \operatorname{Card}\{\mathit{CS}\}\) and t(b i ) is the smallest time t such that b i ∈CS t, i.e., the first time at which b i ∈CS is acquired by the DMs. Let us assume that the acquisition of elements from CS takes place sequentially, that is, at any time t the covering process is fully dedicated to the acquisition of only one element from CS. Consequently, since \(m = \operatorname{Card}\{\mathit{CS}\}\), in the extreme case when each element of CS requires the maximum time period Δ to be covered in an operational covering process, the necessary condition is mΔ≤L (in Proposition 4.1 below, we prove that it is also, in general, a sufficient condition). Thus, the period of time mΔ can be taken as an upper bound for completing the covering process on time, provided that mΔ≤L. In case the DMs expect only a certain number s of problems to take the maximum resolution period Δ in an operational covering process, the necessary condition to complete the covering process is sΔ≤L. It is important to note that this necessary condition is not valid if the acquisition of elements from CS is not sequential (i.e., it can be parallel).

(ii) In case the DMs expect to fall in a certain number of decision traps (challenging problems), then the necessary condition to complete the covering process is t max<L, where

$$t_{\max} := \max\{t \mid \mbox{the DMs are in a decision trap at time}\ t\}. $$

Sufficient Conditions

The following two propositions establish the feasibility or sufficient condition for a covering within allowable time, when the process is operational.

Proposition 4.1

Assume that the acquisition of elements from CS is sequential, mΔ≤L and the covering process is operational. Then the covering of CS can be achieved within the allowable covering time [0,L].

Proof

Let us recall that, based on (18), the covering problem is solved when q t =m for some time t. Assume the worst case, namely that r(t)=Δ for all t≤L−Δ. Since the process is operational, we have q t+Δ−q t ≥1 for all t≤L−Δ at which the covering is not yet completed. Two cases are possible. Either q sΔ=m for some s<m, and then q mΔ=m because q t is nondecreasing by Assumption 4.2 and cannot exceed m; or the process continues until the time mΔ, and then the telescoping sum \(q_{m\Delta} = (q_{m\Delta} - q_{(m-1)\Delta}) + (q_{(m-1)\Delta} - q_{(m-2)\Delta}) + \cdots + (q_{\Delta} - q_{0}) + q_{0} \ge m\), together with q t ≤m, gives q mΔ=m. Since mΔ≤L, in both cases the covering is achieved within [0,L]. □

Let us now turn to the difficult case when the process encounters decision traps. Assume that the DMs enter a decision trap at some time t 0 and consider the problem at some time t 1>t 0+Δ. As stated above, this means that the DMs are stuck and recognize that they cannot reach or acquire any additional point from CS after trying during the period Δ. In terms of HD theory, this means that the Actual Domains (ADs) and/or the RDs of the DMs are trapped in some area. In order to reach an additional element from CS, the DMs need to change their ADs and/or expand their RDs toward CS. To change their ADs, the DMs have to consider the decision parameters (see Sects. 2.1.1–2.1.2) and go deeper into their potential domains (PDs) to expand their RDs and come up with new ideas and concepts. For this purpose, the 7-8-9 principles presented in Sect. 3.2 can be very useful.

Definition 4.4

At any time t 1, an IG-operation using an IG-operator H is said to be successful in advancing the covering process if there exists a subset A of the Ω 1-space such that \(H(A) \cap(\mathit{CS}\backslash \mathit{CS}^{t^{1}}) \ne\emptyset\). Otherwise, it is said to be unsuccessful.

Most likely, the subset A of the Ω 1-space could be \(\mathit{CS}^{t^{1}}\) itself or part of \(\mathit{CS}^{t^{1}}\) or contain only part of it. If H is successful, the new competence set is \(\mathit{CS}^{t^{2}} = \mathit{CS}^{t^{1}} \cup(H(A) \cap(\mathit{CS}\backslash \mathit{CS}^{t^{1}}))\), which is larger than \(\mathit{CS}^{t^{1}}\). In terms of the sequence q t introduced above, an IG-operation that starts at time t 1 and finishes at time t 2 is said to be successful if \(q_{t^{1}} < q_{t^{2}}\). Thus, we have the following sufficient condition for solving the covering problem.

Proposition 4.2

Assume that the following conditions are satisfied:

(i) t max≤L

(ii) \(\Delta(m - q_{t_{\max}}) \le L - t_{\max}\)

Then the covering problem can be solved within the given time frame [0,L].

Proof

The condition (i) implies that, each time the DMs get into a decision trap, they are able to get out of it by acquiring at least one additional element from CS within the time frame. After the time t max, the covering process becomes operational, i.e., no decision trap is expected. Then the DMs will acquire at least one new element from CS within a period of time not exceeding Δ. Since at time t max the number of acquired elements from CS is \(q_{t_{\max}}\), the number of uncovered elements is \(m - q_{t_{\max}}\). Taking into account Assumption 4.2, this number of elements would require a maximum time of \(\Delta(m - q_{t_{\max}})\). Therefore, to complete the covering process, the time that remains after t max, i.e., (L−t max), should be at least \(\Delta(m - q_{t_{\max}})\), which is guaranteed by (ii). □
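The two conditions of Proposition 4.2 are simple arithmetic checks, which the following minimal sketch spells out. The function names and the numerical values are hypothetical and serve only to illustrate how the bound \(\Delta(m - q_{t_{\max}})\) is compared with the remaining time L−t max.

```python
def worst_case_completion(m, q_at_tmax, t_max, delta):
    """Latest completion time after the last decision trap, assuming one
    element of CS is acquired every `delta` time units (sequential case)."""
    return t_max + delta * (m - q_at_tmax)


def covering_guaranteed(m, q_at_tmax, t_max, delta, L):
    """Check conditions (i) and (ii) of Proposition 4.2."""
    cond_i = t_max <= L
    cond_ii = delta * (m - q_at_tmax) <= L - t_max
    return cond_i and cond_ii


# Hypothetical numbers: 5 target elements, 2 already acquired when the
# last trap ends at time 4, trap threshold 2.
ok = covering_guaranteed(m=5, q_at_tmax=2, t_max=4, delta=2, L=10)
too_tight = covering_guaranteed(m=5, q_at_tmax=2, t_max=4, delta=2, L=9)
bound = worst_case_completion(m=5, q_at_tmax=2, t_max=4, delta=2)
```

In this example the three remaining elements need at most 6 time units, so the covering is guaranteed for L=10 but not for L=9; the conditions are sufficient, so a failed check does not by itself mean that covering is impossible.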

5 Applications

In Sects. 1–3, we have seen that a DMCS problem is a challenging problem that involves decision parameters that the DMs may ignore or have to discover. Moreover, we have pointed out that such problems cannot be solved by existing optimization techniques. In Sect. 3, the basic mental operators, the 7-8-9 principles, were presented as tools that can help expand the habitual domain of the DMs so as to reduce the blinds and get out of decision traps to solve dis/covering problems. In Sect. 4, the covering and discovering problems were formulated as OCS problems. In this section, to illustrate this new class of optimization problems, we formulate the DMCS problems presented in Examples 1.2–1.3 as OCS problems using the models (8)–(14). Moreover, we provide a mathematical expression of some IG-operators from Eq. (7) to show the possibility of solving OCS problems mathematically.

Example 5.1

In Example 1.2 (Horse Race), at the beginning, the decision making parameters of the problem, including the decision elements and decision environment facets as described in Sect. 2, are as follows. As for the decision elements, the set of alternatives is empty for both players because they do not know how to solve the problem. There is only one criterion in this game situation, the ranking of the candidate’s horse when it crosses the finishing line. The possible outcomes of the race for each candidate are that his horse crosses the finishing line first or second. Each candidate prefers that his own horse cross the finishing line last. Finally, the information input consists of the rules of the race given by the president of the company and any information that each candidate could get about the other. As for the environmental facets, the situation involves two players, candidates A and B, who are involved in a horse race with very specific rules; therefore, each of them needs to understand and monitor the behavior of the other and devise strategies accordingly.

This game involves two stages. The first stage is the decision trap period, when the two candidates did not know what to do. The second stage covers the resolution process to get out of the decision trap implemented by the candidate A. The unknown in such situations is the behavior and the competence set of the other candidate. Here, the use of the eight hypotheses H1–H8 of habitual domain and the derived behavioral mechanism [12] (see Fig. 1) is essential to understand the behavior of the involved DMs. In order to win the race, a candidate has to understand the decision elements and the environmental facets of the race situation, then based on his understanding of this situation, he needs to evaluate his competence set CS ∗ and the required competence set CS, if possible. Finally, by using the 7-8-9 principles, he may expand his competence set CS ∗ to cover CS or discover a CS (in case CS is unknown) that will make him feel confident to win the race.

Let us now formulate the problem as an OCS problem from the perspective of candidate A; a similar OCS problem can be formulated for candidate B. Once the details and the rules of the game had been explained to the two candidates, they were puzzled, i.e., they were in a decision trap. The reason is that the rules of the game are not the commonly known rules of a horse race: generally, the rule is that the first candidate crossing the finishing line is the winner. The candidates faced a discovery problem. Thus, the initial competence sets CS ∗(A) and CS ∗(B) of A and B, respectively, consisted of the traditional knowledge about the rules of horse races and the individual skills of riding a horse. Here, since the candidates are in a game situation, staying puzzled for a few seconds may be considered as being in a decision trap. In game situations, falling into a decision trap generally leads to losing the game. The targeted competence set CS of each candidate consists of the competences needed to win over the other candidate by making sure that his own horse crosses the finishing line last. Thus, we obtain the following OCS problem for candidate A

where t A(H) is the duration of the IG-operation H(CS ∗(A)) from A’s perspective. In this problem, there is no specific time limitation. The game ends when one of the two horses crosses the finishing line. Let us now see how the candidate A developed a solution of (19) in steps.

- Step 1.

Candidate A analyzed the rules of the game using the “Deep and Down Principle,” M16, and “Projecting from a Higher Position,” M9, to activate new ideas from his potential domain, PD, to his Actual Domain, AD, or to his Reachable Domain, RD. He then determined the most important objects involved in the horse race to be the two pairs (A, H1) and (B, H2), that is, candidate A and his horse and candidate B and his horse. This operation resulted in a new competence set \(\mathit{CS}^{t_{1}}(\mathrm{A})\) consisting of CS ∗(A) and the pairs (A, H1) and (B, H2) as the main focus. The transformation from CS ∗(A) to \(\mathit{CS}^{t_{1}}(\mathrm{A})\) by using the operators M16 and M9 can be expressed as follows:

$$ \mathrm{M}_{9} \mathbin{\mathrm{o}}\mathrm{M}_{16} \bigl( \mathit{CS}^*(\mathrm{A})\bigr) =\mathit{CS}^{t_{1}}(\mathrm{A}). $$(20)

- Step 2.

Next, applying the “Alternating Principle,” M17, to the pairs (A, H1) and (B, H2), candidate A alternated the horses to obtain the pairs (A, H2) and (B, H1) by jumping on B’s horse. This operation changed the rule of the game to “whoever crosses the finishing line first will be the winner”. By riding B’s horse as fast as he could to the finishing line, candidate A ensured that his own horse would be the last to cross it, because candidate B was still trapped in CS ∗(B) and did not understand in time what was going on. When B realized what was going on, it was too late! Candidate A won the race. The alternating operation resulted in a new competence set \(\mathit{CS}^{t_{2}}(\mathrm{A})\) for candidate A that obviously includes the competence set CS needed to solve the game, which completes the discovering problem. The alternating operation can be represented as follows:

$$ \mathit{CS} \subset \mathit{CS}^{t_{2}}(\mathrm{A}) = \mathrm{M}_{17}\bigl(\mathit{CS}^{t_{1}}(\mathrm{A})\bigr). $$(21)

One may summarize the whole discovery process (Eq. (20))–(Eq. (21)) of the solution as follows:

$$\mathit{CS} \subset \mathit{CS}^{t_{2}}(\mathrm{A}) = \mathrm{M}_{17}\mathbin{\mathrm{o}}\mathrm{M}_{9}\mathbin{\mathrm{o}}\mathrm{M}_{16} \bigl(\mathit{CS}^*(\mathrm{A})\bigr). $$

Now, let us mathematically formulate the two steps of the resolution process, then derive the specific OCS problem of the form (19). In terms of Habitual Domains, the operation of Step 1 means that candidate A has brought the two pairs (A, H1) and (B, H2) into his actual domain. In terms of the activation probability from the potential domain to the actual domain, this means that the activation probability of the pairs (A, H1) and (B, H2) became 1. Mathematically, one can formulate the operation (20) as follows. Assume that the initial competence set of candidate A is CS ∗(A):={a 1,a 2,…,a n }, and let P 0(a j ), j=1,2,…,n be the initial activation probabilities of a 1,a 2,…,a n , respectively, from his reachable domain RD to his actual domain AD. Generally speaking, the activation probability can be seen as a time dependent function with domain as the potential domain and range [0,1], that is, P t(⋅):PD→[0,1]. Then the operation (Eq. (20)) reduces to the transformation of the initial activation probabilities P 0(a j ), j=1,2,…,n to a new set of probabilities \(P^{t_{1}}(a_{j})\), j=1,2,…,n such that \(P^{t_{1}}((\mathrm{A},\mathrm{H}1))=P^{t_{1}}((\mathrm{B},\mathrm{H}2))=1\), where t 1 is the duration of operation (Eq. (20)). This transformation can be expressed as a function S:{P 0(a j ), j=1,2,…,n}→[0,1], such that \(S(P^{0}(a_{j}))=P^{t_{1}}(a_{j})\), j=1,2,…,n.

Next, one may mathematically formulate the operation (Eq. (21)) as follows. Let us represent the elements A, H1, B, and H2 by the numbers 1, 2, 3, and 4, respectively, and let d=(1,2,3,4). Then alternating horses through the “Alternating Principle,” M17, by candidate A, can be identified with the following linear transformation Fd=d′, where d=(1,2,3,4), d′=(1,4,3,2) and F is the matrix

Clearly, d′=(1,4,3,2) expresses the desired change of horses. Thus, by alternating the horses, candidate A is now confident that he can win the race, that is, he has covered the required competence set CS, i.e., \(\mathit{CS} \subset M_{17}(\mathit{CS}^{t_{1}}(\mathrm{A})) = \mathit{CS}^{t_{2}}(\mathrm{A})\), where t 2−t 1 is the duration of operation \(M_{17}(\mathit{CS}^{t_{1}}(\mathrm{A}))\). Therefore, min t A(H)=t A(M 17oM 9oM 16)=t 2; the discovery problem (Eq. (20))–(Eq. (21)) can be formulated mathematically as follows:

where t(Fof) is the duration of the operation Fof, V={f∣f:PD→[0,1]} is the set of functions that assign activation probability from the potential domain to the actual domain and

the elements of W are similar to the matrix F in Eq. (22). The functions in V help to identify or select the elements of CS ∗ on which the DMs should concentrate their attention.
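The transformation Fd=d′ with d=(1,2,3,4) and d′=(1,4,3,2) can be checked numerically. One matrix that satisfies it is the permutation matrix exchanging the second and fourth entries; this particular choice of F is an assumption made here for illustration, since any linear map sending d to d′ would formally fit the description.

```python
def mat_vec(matrix, vector):
    """Multiply a square matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]


# Assumed form of F: a permutation matrix swapping positions 2 and 4,
# i.e., exchanging the horses H1 and H2 between candidates A and B.
F = [
    [1, 0, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 0],
    [0, 1, 0, 0],
]

d = [1, 2, 3, 4]               # (A, H1, B, H2)
d_prime = mat_vec(F, d)        # [1, 4, 3, 2], i.e., (A, H2, B, H1)
d_back = mat_vec(F, d_prime)   # swapping twice restores the original pairing
```

Reading the result in pairs, (1,4) and (3,2) correspond to (A, H2) and (B, H1), the pairing obtained by candidate A when he jumps on B’s horse.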

Example 5.2

In Example 1.3, the situation can be described as a game that takes place in two stages: the price war stage, then the resolution stage. The price war phase can be described by traditional game theory as a prisoner’s dilemma game. At some time t during the price war, for illustration and simplicity, by the “Active Association Principle,” M10, one may represent the situation as a prisoner’s dilemma game with a payoff matrix of the form

where the elder son plays the rows and the younger son plays the columns; CP means keep the current price, while DP means decrease the price. In each entry of the matrix A, the first number represents the gain of the elder brother while the second number represents the gain of the younger brother. It is obvious that if one of the brothers maintains CP, the other brother has a high incentive to decrease the price (DP). The one who decreases the price will considerably increase his payoff, while the other will incur a loss of 50 %. Therefore, the strategy profile (CP, CP) is unstable. It is also obvious that both strategy profiles (CP, DP) and (DP, CP) are unstable because at least one player gets the lowest payoff he can get in the game. Most likely, both players will adopt the strategy profile (DP, DP), that is, remain in the price war, which is a Nash equilibrium. However, (DP, DP) is not a satisfactory solution for the players in the long run. Staying in the Nash equilibrium would lead one of the players to lose the price war and leave the market. Therefore, such a game cannot be solved satisfactorily in the framework of traditional game theory. In order to consider the second phase of the game, one has to detach from the restrictive traditional game theory framework and adopt a more general and flexible framework. OCS could be such a framework. Indeed, if one looks at the problem as a DMCS problem, one can see that, being trapped in the strategy profile (DP, DP), the players face a discovery problem. They have to generate new ideas to solve the game satisfactorily, including the restructuring of the game by calling for external intervention via Hypothesis H8 of HD theory (see the Appendix).
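The stability claims about the four strategy profiles can be verified by a direct best-response check. The sketch below uses hypothetical payoffs chosen only to match the verbal description (a unilateral price cut raises the cutter’s payoff and halves the other’s); they are not the entries of the matrix A.

```python
from itertools import product

STRATEGIES = ("CP", "DP")

# Hypothetical payoffs (elder, younger), assumed for illustration only.
PAYOFF = {
    ("CP", "CP"): (100, 100),
    ("CP", "DP"): (50, 150),
    ("DP", "CP"): (150, 50),
    ("DP", "DP"): (70, 70),
}


def is_nash(profile):
    """A profile is a pure Nash equilibrium if neither brother can gain
    by changing his own strategy while the other keeps his."""
    row, col = profile
    u_row, u_col = PAYOFF[profile]
    row_ok = all(PAYOFF[(r, col)][0] <= u_row for r in STRATEGIES)
    col_ok = all(PAYOFF[(row, c)][1] <= u_col for c in STRATEGIES)
    return row_ok and col_ok


pure_nash = [p for p in product(STRATEGIES, repeat=2) if is_nash(p)]
```

With these assumed numbers the only pure equilibrium is (DP, DP), even though both brothers would be better off at (CP, CP), which is the prisoner’s dilemma structure described above.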

Let us now analyze how the elder son and the father developed a solution to this problem. The starting competence set CS ∗ of both players for solving the problem was trapped in a very narrow area characterized by hostility and related thoughts and behaviors, which showed up in the form of a price war. In fact, their charge level was so high that their actual domains (ADs) were almost exclusively occupied by the price war and related ideas and thoughts. Their reachable domains were very narrow too. The competence set of both players for solving the problem was almost constant over this first stage of the game. Over time, when the prices had decreased significantly, the danger of bankruptcy started looming on the horizon. The charge level of the elder brother started to decrease and his mind started to shift from the idea of pursuing short term gains to the idea of long term benefits and sustainability. To reach this state, he used the “Deep and Down Principle,” M16. This principle makes it possible to expand the current stagnant competence set of the elder son, denoted by CS ∗(EL), to a larger one. Then, using the “Contradiction Principle,” M22, he came to the conclusion that the price war had to be stopped by shifting from short term profit maximization to long term sustainability and benefits. However, he did not know how to achieve this. Then, using the “Changing and Transforming Principle,” M21, and Hypothesis H8 (information input hypothesis, see Sect. 2 and the Appendix) of HD theory, the elder son changed one of the parameters of the game situation (see Sect. 2.1.1), namely the set of players, by appealing to the father as a new player with the potential to solve the problem. In terms of HD theory, a union or integration of two habitual domains has taken place to solve the same problem. Naturally, the resulting competence set would be larger than the individual competence sets. This phase of the game can be characterized by the following transformation:

where F stands for father and CS 1(EL∪F) is the competence set of the elder son and the father. Here, the superscripts indicate the stage of the game, not time. CS 1(EL∪F) is much larger than CS ∗(EL) because it includes the father’s competence set, which is richer in experience and more powerful in terms of decision making.

In the second stage, when the father came to know the situation through the elder son’s report, his charge level increased sharply because, being the actual owner of both companies, he did not want to see his sons waging a price war against each other. At first, the father did not know how to stop the price war, so he used the “Deep and Down Principle,” M16, to open up the possibility of generating new ideas. He wanted to stop the price war but, since he was dealing with his own sons, he wanted to be fair as well. Thus, using the “Changing and Transforming Principle,” M21, he changed one of the parameters of the game, the criteria, by introducing fairness as a new criterion for selecting strategies or solutions. Then, as the owner of both companies who was not involved in the price war, he used the “Projection from Higher Position Principle,” M9, and could see that the problem can be solved if the incentive to deviate from the CP strategy is eliminated. Then, using the “Changing and Transforming Principle,” M21, again, he changed the rules of the game in terms of outcomes as follows: whoever deviates from the strategy CP has to pay a penalty of 15=(30−20)+5 to the other. The new game obtained from Eq. (23), in traditional form, is

Clearly, the strategy profile (CP, CP) is now stable individually and collectively. Indeed, no player would have an incentive to unilaterally decrease his price, because he would end up with a lower payoff of 15, while collectively the two players would not move to the profile (DP, DP), where both decrease the price, because they would end up with the very low payoff (13, 13). Thus, in the new game, (CP, CP) is a solution that is individually and collectively stable and fair at the same time. The second phase can be characterized by the following operations:

where CS 2(EL∪F) is the discovered competence set that includes the required competence set CS to solve the price war problem. It includes the payoff matrix A′. It is interesting to note that the payoff matrix A′ of the new game is obtained from the payoff matrix A of the previous game by applying the “Changing and Transforming Principle,” M21. Therefore, it can be represented by the following linear transformations:

One can summarize the whole process of dis/covering (Eq. (24))–(Eq. (25)) by the following operation:

Let us now formulate the dis/covering process as an OCS problem of the form (9)

where t(H) is the duration of implementing the IG-operator H, CS ∗ is the initial competence set of the sons that was trapped in the price war, the allowable time L is short, perhaps a few weeks, and CS is the required competence set to stop the price war fairly.

Let us now formulate mathematically the solution of the problem (28). Here, the allowed time is short; it is the time remaining before either of the two companies goes bankrupt. We have seen in Eq. (24) that by applying the operation M21∘M22∘M16(CS ∗(EL)), the elder son called on the father to intervene and solve the problem. As a result, a larger competence set was obtained. Mathematically, this operation can be formulated as a union of two competence sets as follows: CS ∗(EL)∪CS ∗(F)=CS 1(EL∪F). Next, the father and the son addressed the question: How can the payoff matrix A in Eq. (23) be transformed to cover CS? By the operation (Eq. (26)), the father arrived at the idea of a penalty, which can be mathematically formulated as follows. Find a matrix K of type A such that the matrix

satisfies a>d, b<a and c>d. The resulting payoff matrix A′ is the solution of the dis/covering problem. A solution to this problem is

This solution is equivalent to the father’s solution, which consists of imposing a penalty of 15 units on the one who deviates from the current price strategy. The new payoff matrix A′=A+K stops the price war in a fair way, that is, the father could get his sons out of their decision trap by covering the required competence set CS before either of his companies went bankrupt. Thus, we can formulate the problem (28) as follows:

where t(F∘f) is the duration of the operation F∘f, V={f ∣ f:PD(EL)→[0,1]} is the set of functions that assign activation probabilities from the potential domain (PD) to the actual domain (AD), and W={F ∣ F is a linear transformation of type (Eq. (29))}. A solution to this problem is F ∗∘f ∗, where f ∗ is the function that assigns probability 1 to the father. Indeed, by appealing to the father, the elder son brought him into his actual domain. F ∗ is a transformation of type (Eq. (29)), where K is given in Eq. (30). The functions involved in the constraints of the OCS problem (31) show that it is possible to mathematically solve OCS problems under some reasonable assumptions.
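The penalty transformation A′=A+K can be illustrated numerically. The following is a minimal sketch under stated assumptions: the entries of A are inferred from the figures quoted in the text and may differ from Eq. (23), K is built directly from the verbal rule "whoever deviates from CP pays 15 to the other" and may differ in layout from Eq. (30), and the helper names penalty_matrix, add_games and is_nash are hypothetical.

```python
STRATEGIES = ("CP", "DP")  # CP: keep current price, DP: decrease price
PENALTY = 15               # stated in the text: 15 = (30 - 20) + 5

# ASSUMED payoffs (elder, younger), inferred from the text; Eq. (23) may differ.
A = {
    ("CP", "CP"): (20, 20),
    ("CP", "DP"): (10, 30),
    ("DP", "CP"): (30, 10),
    ("DP", "DP"): (13, 13),
}


def penalty_matrix(penalty):
    """Build K: each player who plays DP transfers `penalty` to the other."""
    K = {}
    for row in STRATEGIES:
        for col in STRATEGIES:
            elder_pays = penalty if row == "DP" else 0
            younger_pays = penalty if col == "DP" else 0
            K[(row, col)] = (younger_pays - elder_pays, elder_pays - younger_pays)
    return K


def add_games(game, shift):
    """Entrywise sum of two bimatrix games, i.e. A' = A + K."""
    return {p: (game[p][0] + shift[p][0], game[p][1] + shift[p][1]) for p in game}


def is_nash(game, profile):
    """True if neither player gains from a unilateral deviation at `profile`."""
    row, col = profile
    elder, younger = game[profile]
    return (all(game[(r, col)][0] <= elder for r in STRATEGIES)
            and all(game[(row, c)][1] <= younger for c in STRATEGIES))


A_prime = add_games(A, penalty_matrix(PENALTY))
for profile in A_prime:
    print(profile, A_prime[profile], "Nash" if is_nash(A_prime, profile) else "")
```

With these assumed values, a unilateral deviator ends up with 15 instead of 20, the mutual price cut stays at (13, 13) because the two penalties cancel, and (CP, CP) becomes the only pure Nash equilibrium of the new game.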

6 Potential Applications and Further Research Directions

The new optimization models (8)–(14) presented in this work open new directions of research and have potential applications in economics and management, such as (i) the formulation and analysis of the innovation process using DMCS and OCS; (ii) the use of DMCS and OCS models in management, conflict resolution, game theory, planning, and decision making; (iii) the use of DMCS and OCS in artificial intelligence, and the introduction of the new optimization models (8)–(14) into the emerging discipline of artificial economics [24] and e-economy; (iv) the use of DMCS and OCS in scientific discovery; and (v) the use of DMCS and OCS in knowledge extraction (data mining). In the subsections below, we provide more details on (i)–(v).

6.1 DMCS and OCS in Innovation Dynamics

In the field of corporate management, “corporate competitiveness” has always been a hot topic. To be competitive, corporations must continually innovate to provide good products or services that satisfy the needs of customers faster and more effectively than their competitors, allowing them to create value and distribute the value to all stakeholders. Clearly, innovation is a process which involves a number of decision parameters and challenging problems in changeable spaces. By checking the list of these potential challenging problems and decision parameters therein, we could reduce the decision blinds and avoid decision traps; and by deliberating the possible occurrence in the potential domain of each possible problem, we could avoid or reduce the possible decision shocks when we are confronted with the challenging problems. Scientific discovery and knowledge extraction are an essential part of the innovation process. We discuss these later in Sects. 6.4–6.5, respectively.

6.1.1 Innovation Dynamics Anatomy

According to HD theory and competence set analysis, producing new products or services can be regarded as a transformation of the existing competence set CS ∗ to a new competence set CS. With this concept, we can depict a comprehensive and integrated framework of Innovation Dynamics, as in Fig. 4.

The innovation dynamics can be interpreted step by step, clockwise and counterclockwise. Let us briefly discuss its clockwise interpretation according to the indices (i)–(vi) of Fig. 4.

(i) According to HD theory, when there exist unfavorable discrepancies between the current states and the ideal goals of individuals or organizations (for instance, the corporations are losing money instead of making money, or they are technologically behind instead of being ahead of the competitors), these discrepancies create charges which can prompt the individuals or corporations to work harder to reach their ideal goals;

(ii) The transformation of CSs will appear in visible or invisible ways, which results in a new set of products or services produced by the corporations;

(iii) The products or services produced by corporations must be capable of relieving or releasing the pains and frustrations of targeted customers. Note that there are actual domains, reachable domains, and potential domains for the targeted customers, and for their pains, frustrations, and problems;

(iv) Besides discharge, corporations or organizations can create charges in the targeted customers by means of marketing, advertisement or promotion, and vice versa;

(v) The targeted customers will experience the change of charges. When their pains and frustrations are relieved, the customers become happy. When the customers buy the products or services, the products and services create their value;

(vi) The value will be distributed to the participants such as employees, stakeholders, suppliers, society, etc. In addition, to gain a competitive edge, products and services have to be continuously upgraded and improved. Reinvestment is therefore needed in order to develop and produce new products and services.

For the counterclockwise interpretation, we refer the reader to [17].

6.1.2 Challenging Decision Making Problems Related to Innovation Dynamics

Note that the activities over each link of the Innovation Dynamics in Fig. 4 involve DMCS problems in the form of competence set transformation problems related to different fields of management. Therefore, in order to solve them, one may formulate them as OCS problems using models (8)–(12). For example, the process of transforming competence sets (refer to (i) and (D) in Fig. 4) consists of acquiring, adjusting and allocating resources (human resources, skills, technologies, etc.) and transforming resources into products/services. The corporation’s internal and external charge will be released when the transformation of CSs succeeds. The related management fields are human resource management, organization management, production management, research and development (R&D), and procurement. For more details on the fields of management related to the different processes of innovation dynamics, see [17]. Such relations can be seen as a set of new research directions for the various fields of management, as well as a possible checklist for reducing decision blinds and avoiding decision traps.

6.2 DMCS and OCS in Management and Game Situations

Nowadays, business or institution managers face various challenges in financial, human resources, operations, and marketing management as competition and environmental uncertainties increase due to globalization and frequent crises. Most such problems can be formulated as DMCS problems, particularly dis/covering problems. The parameters involved in changeable spaces may be demand; the price of raw materials such as oil; exchange rates between currencies, such as the dollar–euro rate; the behavior of competitors; etc. The survival and sustainability of a business or institution depend on how it deals with DMCS problems. Since decision making in management is essentially based on interaction, psychology and competence, the OCS models (8)–(14) presented in Sect. 4 could be very useful for deriving satisfactory solutions to DMCS management-related problems.