Abstract

We consider a general network of harmonic oscillators driven out of thermal equilibrium by coupling to several heat reservoirs at different temperatures. The action of the reservoirs is implemented by Langevin forces. Assuming the existence and uniqueness of the steady state of the resulting process, we construct a canonical entropy production functional \(S^t\) which satisfies the Gallavotti–Cohen fluctuation theorem. More precisely, we prove that there exists \(\kappa _c>\frac{1}{2}\) such that the cumulant generating function of \(S^t\) has a large-time limit \(e(\alpha )\) which is finite on a closed interval \([\frac{1}{2}-\kappa _c,\frac{1}{2}+\kappa _c]\), infinite on its complement and satisfies the Gallavotti–Cohen symmetry \(e(1-\alpha )=e(\alpha )\) for all \(\alpha \in {\mathbb {R}}\). Moreover, we show that \(e(\alpha )\) is essentially smooth, i.e., that \(e'(\alpha )\rightarrow \mp \infty \) as \(\alpha \rightarrow \tfrac{1}{2}\mp \kappa _c\). It follows from the Gärtner–Ellis theorem that \(S^t\) satisfies a global large deviation principle with a rate function I(s) obeying the Gallavotti–Cohen fluctuation relation \(I(-s)-I(s)=s\) for all \(s\in {\mathbb {R}}\). We also consider perturbations of \(S^t\) by quadratic boundary terms and prove that they satisfy extended fluctuation relations, i.e., a global large deviation principle with a rate function that typically differs from I(s) outside a finite interval. This applies to various physically relevant functionals and, in particular, to the heat dissipation rate of the network. Our approach relies on the properties of the maximal solution of a one-parameter family of algebraic matrix Riccati equations. It turns out that the limiting cumulant generating functions of \(S^t\) and its perturbations can be computed in terms of spectral data of a Hamiltonian matrix depending on the harmonic potential of the network and the parameters of the Langevin reservoirs. 
This approach is well adapted to both analytical and numerical investigations.


1 Introduction

Boundary driven mechanical systems are paradigmatic in nonequilibrium statistical mechanics. Existence and uniqueness of nonequilibrium steady states have been extensively studied for a variety of such systems: harmonic [47] and anharmonic [5] crystals, 1-dimensional chains of anharmonic oscillators [6, 8, 21–24, 65], rotors [11, 12] and other Hamiltonian systems [9, 26, 49, 50]. More general Hamiltonian networks have been considered in [10, 27, 52]. In this paper, we shall study stochastically driven networks of harmonic oscillators which are the simplest models in the last category. The question of existence and uniqueness of the steady state is well understood in such systems. Estimates of the rate of relaxation to the steady state are also available [1, 66]. The focus of this work is on the concept of entropy production and its fluctuations, although our approach can be extended to cover the fluctuations of energy/entropy fluxes between individual heat reservoirs and the network. The universal fluctuation relations satisfied by the entropy production rate (or phase-space contraction rate) in transient [20, 25] and stationary [31, 32] processes have been one of the central issues in the recent developments of nonequilibrium statistical mechanics. Various approaches to these relations have been proposed in the literature and we refer the reader to [13, 14, 39, 40, 48, 51, 61, 69] for reviews and detailed discussions. The interested reader should also consult [67], where fluctuation relations are derived for boundary driven anharmonic chains, and [41] for a discussion of these topics in the framework of Gaussian dynamical systems. For theoretical and experimental works dealing specifically with mechanically driven harmonic systems we refer the reader to [36, 37, 44].

In this paper we follow the scheme advocated in [39, 40] and fully elaborated in [38]. The details are as follows.

Consider a probability space \((\Omega ,\mathcal{P},\mathbb {P})\) equipped with a measurable involution \(\Theta :\Omega \rightarrow \Omega \). Suppose that the measures \(\mathbb {P}\) and \(\widetilde{\mathbb {P}}=\mathbb {P}\circ \Theta \) are equivalent. We define the canonical entropic functional of the quadruple \((\Omega ,\mathcal{P},\mathbb {P},\Theta )\) by

$$\begin{aligned} S=\log \frac{\mathrm {d}\mathbb {P}}{\mathrm {d}\widetilde{\mathbb {P}}}, \end{aligned}$$ (1.1)

and denote by P the law of this random variable under \(\mathbb {P}\). Since

$$\begin{aligned} S\circ \Theta =-S, \end{aligned}$$ (1.2)

the support of P is symmetric w.r.t. the origin. It reduces to \(\{0\}\) whenever \(\widetilde{\mathbb {P}}=\mathbb {P}\). In the opposite case the symmetry \(\Theta \) is broken and the well known fact that the relative entropy of \(\mathbb {P}\) w.r.t. \(\widetilde{\mathbb {P}}\), given by

is strictly negative (it vanishes iff \(\mathbb {P}=\widetilde{\mathbb {P}}\)) shows that the law P favors positive values of S. To obtain a more quantitative statement of this fact, it is useful to consider Rényi’s relative \(\alpha \)-entropy

Note that \(\mathrm{Ent}_0(\mathbb {P}|\widetilde{\mathbb {P}})=\mathrm{Ent}_1(\mathbb {P}|\widetilde{\mathbb {P}})=0\), and since the function \({\mathbb {R}}\ni \alpha \mapsto \mathrm{Ent}_\alpha (\mathbb {P}|\widetilde{\mathbb {P}})\) is convex by Hölder’s inequality, one has \(\mathrm{Ent}_\alpha (\mathbb {P}|\widetilde{\mathbb {P}})\le 0\) for \(\alpha \in [0,1]\). It is straightforward to check that \(\mathrm{Ent}_\alpha (\mathbb {P}|\widetilde{\mathbb {P}})\) is a real-analytic function of \(\alpha \) on some open interval containing ]0, 1[ and infinite on the (possibly empty) complement of its closure. In particular, it is strictly convex on its analyticity interval.

From the definition of \(\widetilde{\mathbb {P}}\) and Relation (1.2) we deduce

and the definition of S yields

It follows that Rényi’s entropy satisfies the symmetry relation

which, in applications to dynamical systems, will turn into the so-called Gallavotti–Cohen symmetry. The second equality in Eq. (1.3) allows us to express Rényi’s entropy in terms of the law P as

Note that, up to the sign of \(\alpha \), \(e(\alpha )\) is the cumulant generating function of the random variable S. Denoting by \(\widetilde{P}\) the law of \(-S\) under \(\mathbb {P}\), the symmetry (1.4) leads to

from which we obtain

on the common support of P and \(\widetilde{P}\). Thus, negative values of S are exponentially suppressed by the universal weight \(\mathrm {e}^{-s}\). In the physics literature such an identity is called a fluctuation relation or a fluctuation theorem for the quantity described by S. Most often S is a measure of the power injected in a system or of the rate at which it dissipates heat in some thermostat. The equivalent symmetry of the cumulant generating function \(e(\alpha )\) of S which follows from the symmetry (1.4) of Rényi’s entropy

$$\begin{aligned} e(1-\alpha )=e(\alpha ) \end{aligned}$$ (1.6)

is referred to as the Gallavotti–Cohen symmetry. The name symmetry function is sometimes given to

In terms of this function, the fluctuation relation is expressed as

The above-mentioned fact that

rewritten as

constitutes the associated Jarzynski identity and the strict negativity of relative entropy

becomes Jarzynski’s inequality.
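The general scheme above can be checked by hand on a finite probability space. The following Python sketch is only an illustration: the three-point space, its weights and the involution are hypothetical choices, and it takes \(S=\log (\mathrm {d}\mathbb {P}/\mathrm {d}\widetilde{\mathbb {P}})\) and \(e(\alpha )=\log \int \mathrm {e}^{-\alpha S}\mathrm {d}\mathbb {P}\) as working definitions. It evaluates the Rényi entropy, the mean of S and the Jarzynski average.

```python
import math

# Hypothetical toy model: Omega = {0, 1, 2}, the involution swaps 0 and 2.
prob = {0: 0.5, 1: 0.3, 2: 0.2}                 # the measure P
theta = {0: 2, 1: 1, 2: 0}                      # involution Theta
prob_rev = {w: prob[theta[w]] for w in prob}    # P~ = P o Theta

# Working definition of the canonical entropic functional: S = log(dP/dP~).
S = {w: math.log(prob[w] / prob_rev[w]) for w in prob}


def renyi(alpha):
    """e(alpha) = log E_P[exp(-alpha * S)], the Renyi relative alpha-entropy."""
    return math.log(sum(prob[w] * math.exp(-alpha * S[w]) for w in prob))


# Mean of S under P (minus the relative entropy in the sign convention used here).
mean_S = sum(prob[w] * S[w] for w in prob)

# Jarzynski average E_P[exp(-S)], which equals the total mass of P~.
jarzynski = sum(prob[w] * math.exp(-S[w]) for w in prob)
```

With these weights, `renyi(0.3)` and `renyi(0.7)` agree up to rounding, `jarzynski` equals 1, and `mean_S` is strictly positive because the chosen measure is not invariant under the involution.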

In all known applications of the above scheme to nonequilibrium statistical mechanics, the space \((\Omega ,\mathcal{P},\mathbb {P})\) describes the space-time statistics of the physical system under consideration over some finite time interval [0, t] (in the following, we shall denote by a superscript or a subscript the dependence of various objects on the length t of the considered time interval). The involution \(\Theta ^t\) is related to time-reversal and the canonical entropic functional \(S^t\) to entropy production or phase space contraction. The fluctuation relation (1.5) is a fingerprint of time-reversal symmetry breaking and the strict inequality in (1.8) is a signature of nonequilibrium.

The practical implementation of our scheme to nonequilibrium statistical mechanics requires 4 distinct steps which will structure our treatment of thermally driven harmonic networks. In order to clearly formulate the purpose of each of these steps, we illustrate the procedure at hand on a very simple model of electrical RC-circuit described in Fig. 1. We shall not provide detailed proofs of our claims in this example since they all reduce to elementary calculations. We refer the reader to [74] for a detailed physical analysis and to [30] for experimental verification of the fluctuation relations for this system.

Step 1: Construction of the canonical entropic functional

The internal energy of the circuit of Fig. 1 is stored in the electric field within the capacitor and is given by

where z denotes the charge on the plate of the capacitor and C is the capacitance. The equation of motion for z is

where I is the constant current fed into the circuit and \(V_t\) the electromotive force (emf) generated by the Johnson–Nyquist thermal noise within the resistor R. Integrating the equation of motion gives

To simplify our discussion (and to avoid stochastic integrals and the technicalities related to time-reversal), we shall assume that \(V_t\) has the form

where \(\tau \ll \tau _0=RC\) and \(\xi _k\) denotes a sequence of i.i.d. centered Gaussian random variables with variance \(\sigma ^2\). Sampling the charge at times \(n\tau +0\) yields a sequence \(z_0,z_1,z_2,\ldots \) satisfying the recursion relation

where \({\overline{z}}=I\tau _0\) and \(\eta =\mathrm {e}^{-\tau /\tau _0}\). According to (1.10), the charge between two successive kicks is given by

Assuming \(z_0\) to be independent of \(\{\xi _k\}\), the sequence \(z_0,z_1,z_2\ldots \) is a Markov chain with transition kernel

One easily checks that the unique invariant measure for this chain has the pdf

In the case \(I=0\) (no external forcing), according to the zero\({}^\mathrm{th}\) law of thermodynamics, the system should relax to its thermal equilibrium at the temperature T of the heat bath. Thus, in this case the invariant measure should be the equilibrium Gibbs state of the circuit at temperature T which, by (1.9), has the pdf

\(k_B\) denoting Boltzmann’s constant. This requirement fixes the value of the variance of the \(\xi _k\)’s and

One can show (see Sect. 8 in [3]) that, in the limit \(\tau \rightarrow 0\), the covariance of the fluctuating emf \(V_t\) converges to

in accordance with the Johnson–Nyquist formula ([55], see also [73, Sect. IX.2]). For \(I\not =0\), Eq. (1.13) describes a nonequilibrium steady state (NESS) of the system. In the following, we shall consider the stationary Markov chain started with the invariant measure and denote by \(\langle \,\cdot \,\rangle _\mathrm{st}\) the corresponding expectation.
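The sampled charge dynamics can be explored numerically. The sketch below is a minimal illustration under stated assumptions, not the authors' computation: it assumes that between kicks the charge relaxes exponentially towards \({\overline{z}}\) with factor \(\eta \), that each kick adds \(\xi _k\) directly to the charge (any physical prefactor is absorbed in the hypothetical parameter `sigma`), and that the circuit energy is \(z^2/2C\), so that the Gibbs state has variance \(Ck_BT\). The function names are ours.

```python
import math
import random


def simulate_charge(n_steps, eta, z_bar, sigma, z0, seed=0):
    """Iterate z_{k+1} = z_bar + eta * (z_k - z_bar) + xi_{k+1}.

    Assumption: the kick xi_k ~ N(0, sigma^2) is added directly to the charge.
    Returns the list [z_0, z_1, ..., z_n].
    """
    rng = random.Random(seed)
    z = z0
    path = [z]
    for _ in range(n_steps):
        z = z_bar + eta * (z - z_bar) + rng.gauss(0.0, sigma)
        path.append(z)
    return path


def stationary_variance(eta, sigma):
    """Variance of the Gaussian invariant law of the recursion above."""
    return sigma ** 2 / (1.0 - eta ** 2)


def sigma_for_gibbs(eta, capacitance, kT):
    """Kick standard deviation for which the invariant law at I = 0
    has the Gibbs variance C * kT (assuming the energy z^2 / (2 C))."""
    return math.sqrt(capacitance * kT * (1.0 - eta ** 2))
```

For instance, `simulate_charge(100000, 0.9, 1.0, sigma_for_gibbs(0.9, 2.0, 1.5), 1.0)` produces a path whose empirical mean and variance should be close to \({\overline{z}}=1\) and \(Ck_BT=3\) in these arbitrary units.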

The pdf of a finite segment \(Z_n=(z_0,\ldots ,z_n)\in {\mathbb {R}}^{n+1}\) of the stationary process is given by

which is the Gaussian measure on \({\mathbb {R}}^{n+1}\) with mean and covariance

We choose the involution \(\Theta :{\mathbb {R}}^{n+1}\rightarrow {\mathbb {R}}^{n+1}\) to be the composition of charge conjugation \(z\mapsto -z\) with time-reversal of the Markov chain,

The time-reversed process is the Markov chain which assigns the weight (1.14) to the reversed segment \(\Theta (Z_n)\). Thus, the transition kernel \({\tilde{p}}(z'|z)\) and invariant measure \({\tilde{p}}_\mathrm{st}(z)\) of the time-reversed process must satisfy

for all \(n\ge 1\) and \(Z_n\in {\mathbb {R}}^{n+1}\). For \(n=1\), this equation becomes

Integrating both sides over \(z_1\) gives

from which we further deduce

One then easily checks that (1.15) is indeed satisfied for all \(n\ge 1\). Note that in the case \(I=0\) one has

and it follows that \({\tilde{p}}(z'|z)=p(z'|z)\), Eq. (1.16) turning into the detailed balance condition. In this case, the time-reversed process coincides with the direct one: in thermal equilibrium, the time-reversal symmetry holds. However, in the nonequilibrium case \(I\not =0\), time-reversal invariance is broken and \({\tilde{p}}_\mathrm{st}(z)\not =p_\mathrm{st}(z)\).

We are now ready to describe the canonical entropic functional. Applying our general scheme to the marginal \(\mathbb {P}^{n\tau }\) of the finite segment \(Z_n\) (which has the pdf \(p_n\)), we can write (1.1) as

from which we deduce

Step 2: Deriving a large deviation principle

From a more mathematical point of view, as stressed by Gallavotti–Cohen [31, 32], the interesting question is whether the entropic functional \(S^t\) satisfies a large deviation principle in the limit \(t\rightarrow \infty \). More precisely, is it possible to control the large fluctuations of \(S^t\) by a rate function \({\mathbb {R}}\ni s\mapsto I(s)\) such that

as \(t\rightarrow \infty \) for any open set \(\mathcal{S}\subset {\mathbb {R}}\)? Moreover, does this rate function satisfy the relation

$$\begin{aligned} I(-s)-I(s)=s, \end{aligned}$$ (1.17)

which is the limiting form of (1.5), for all \(s\in {\mathbb {R}}\)? Finally, can one relate this rate function to the large-time asymptotics of Rényi’s entropy via a Legendre transformation

as suggested by the theory of large deviations? To illustrate these points, we return to our simple example.
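Before turning to the circuit, the three questions can be made concrete on the simplest Gaussian case, where \(S^t\) has mean \(\mathrm{ep}\cdot t\) and variance \(2\,\mathrm{ep}\cdot t\) for some rate \(\mathrm{ep}>0\). The sketch below is an illustration under assumptions: the symbol `ep` is ours, the limiting cumulant generating function is taken as \(e(\alpha )=\lim t^{-1}\log \langle \mathrm {e}^{-\alpha S^t}\rangle \), and the Legendre transform is taken in the form \(I(s)=\sup _\alpha (-\alpha s-e(\alpha ))\). It compares a numerical Legendre transform with the closed-form quadratic rate function.

```python
def cgf(alpha, ep):
    """Limiting cumulant generating function of a Gaussian S^t with
    mean ep * t and variance 2 * ep * t: e(alpha) = -alpha * (1 - alpha) * ep."""
    return -alpha * (1.0 - alpha) * ep


def rate_closed_form(s, ep):
    """Rate function of the same Gaussian family: I(s) = (s - ep)^2 / (4 ep)."""
    return (s - ep) ** 2 / (4.0 * ep)


def rate_legendre(s, ep, lo=-50.0, hi=50.0, iters=200):
    """I(s) = sup_alpha (-alpha * s - e(alpha)), by ternary search.

    The objective is concave in alpha, so ternary search converges as long
    as the maximizer (ep - s) / (2 ep) lies inside [lo, hi].
    """
    def objective(a):
        return -a * s - cgf(a, ep)

    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if objective(m1) < objective(m2):
            lo = m1
        else:
            hi = m2
    return objective(0.5 * (lo + hi))
```

In this Gaussian case the two rate functions coincide, `cgf` is symmetric under \(\alpha \mapsto 1-\alpha \), and `rate_closed_form(-s, ep) - rate_closed_form(s, ep)` equals `s`, which is the fluctuation relation for the rate function stated in the abstract.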

For this very particular system, the fluctuation relation (1.5) essentially fixes the law of the random variable \(S^{n\tau }\). Indeed, since \(S^{n\tau }\) is Gaussian under the law of the stationary process (as a linear combination of Gaussian random variables \(\xi _k\)), its pdf \(P^{n\tau }\) is completely determined by the mean \({\overline{s}}_n\) and variance \(\sigma _n^2\) of \(S^{n\tau }\). A simple calculation based on (1.5) shows that \(\sigma _n^2=2{\overline{s}}_n\), whence it follows that

where we set

We conclude that

and hence

A direct calculation using (1.18) implies that, for any open set \(\mathcal{S}\subset {\mathbb {R}}\),

where the rate function

satisfies the fluctuation relation (1.17). The large-time symmetry function for \(S^{n\tau }\) is

Step 3: Relating the canonical entropic functional to a relevant dynamical or thermodynamical quantity

Denoting by \(U_t=z_t/C\) the voltage and using (1.10), the work performed on the system by the external current I in the period \(]k\tau ,(k+1)\tau [\) is equal to

Thus, we can rewrite

where

\(W^{n\tau }\) is the work performed by the external current during the period \([0,n\tau ]\). Accordingly, \(w_n\) is the average injected power and \({\overline{w}}\) is its expected stationary value. It follows from the first law of thermodynamics that the heat dissipated by the resistor R in the thermostat during the interval \([0,n\tau +0[\) is given by

and so we may also write

where

denote the average dissipated power and its expected stationary value.

Thus, up to a multiplicative and additive constant and a “small” (i.e., formally \(\mathcal{O}(n^{-1})\)) correction, \(S^{n\tau }/n\tau \) is the time averaged power injected in the system by the external forcing and the time averaged power dissipated into the heat reservoir during the time period \([0,n\tau +0[\).

Step 4: Deriving a large deviation principle for physically relevant quantities

The problem encountered here stems from the fact that the relation between \(S^t\) and a physically relevant quantity (denoted by \(\mathfrak {S}^t\)) typically involves some “boundary terms”, which depend on the state of the system at the initial time 0 and final time t. In cases where these boundary terms are uniformly bounded as \(t\rightarrow \infty \), one finds that \(\mathfrak {S}^t\) satisfies the same large deviation principle as \(S^t\). This is what happens, for example, in strongly chaotic dynamical systems over a compact phase space (e.g., under the Gallavotti–Cohen chaotic hypothesis); we refer the reader to [40, Sect. 10] for a discussion of this case. However, unbounded boundary terms can compete with the tails of the law of \(S^t\), which may lead to complications, as our example shows.

Given the Gaussian nature of \(w_n\), it is an easy exercise to show that the entropic functional directly related to work and defined by

has a cumulant generating function which satisfies

for all \(\alpha \in {\mathbb {R}}\). It follows that \(\mathfrak {S}_\mathrm{w}^{n\tau }\) satisfies the very same large deviation estimates as \(S^{n\tau }\). However, note that unlike function (1.19), the finite-time cumulant generating function \(\log \langle \mathrm {e}^{-\alpha \mathfrak {S}_\mathrm{w}^{n\tau }}\rangle _\mathrm{st}\) does not satisfy the Gallavotti–Cohen symmetry (1.6). Only in the large time limit do we recover this symmetry. A simple change of variable allows us to write down the cumulant generating function of the work \(W^{n\tau }\),

We conclude that the work \(W^{n\tau }\) satisfies the large deviations estimate

for all open sets \(\mathcal{W}\subset {\mathbb {R}}\) with the rate function

The symmetry function for work is thus

Note that, as the kick period \(\tau \) approaches zero, we recover the universal fluctuation relation (1.17), i.e., \(\mathfrak {s}_\mathrm{work}(w)=w\).

Consider now the entropic functional

related to the dissipated heat. The explicit evaluation of a Gaussian integral shows that its cumulant generating function is given by

where \(a_n\) and \(b_n\) are bounded (in fact converging) sequences and

The divergence of the cumulant generating function for \(|\alpha |\ge \alpha _n\) is of course due to the competition between the tail of the Gaussian law \(p_n\) and the quadratic terms in \(\mathfrak {S}^{n\tau }_\mathrm{h}\).

Note that the sequence \(\alpha _n\) is monotone decreasing to its limit

and it follows that

The unboundedness of the boundary terms involving \(z_0^2\) and \(z_n^2\) in (1.20) leads to a breakdown of the Gallavotti–Cohen symmetry for \(|\alpha -\frac{1}{2}|>|\alpha _\mathrm{c}-\frac{1}{2}|\). More dramatically, the limiting cumulant generating function is not steep, i.e., its derivative fails to diverge as \(\alpha \) approaches \({\pm }\alpha _\mathrm{c}\). Under such circumstances, the derivation of a global large deviation principle for nonlinear dynamical systems is a difficult problem which remains largely open and deserves further investigations. For linear systems, however, as shown in [41], it is sometimes possible to exploit the Gaussian nature of the process to achieve this goal. Indeed, following the strategy developed in Sect. 3.4, one can show that \(\mathfrak {S}_\mathrm{h}^{n\tau }\) satisfies a large deviation principle with rate function

where

Performing a simple change of variable, we conclude that the cumulant generating function of the heat \(Q^{n\tau }\) satisfies

The corresponding large deviations estimate reads

for all open sets \(\mathcal{Q}\subset {\mathbb {R}}\) with the rate function

which satisfies what is called in the physics literature an extended fluctuation relation [15, 16, 28, 29, 33–35, 54, 72] with the symmetry function

where

Thus, the linear behavior persists for small fluctuations \(|q|\le |q_{-}|\), but saturates to the constant values \({\mp }({q_{+}}+{q_{-}})\) for \(|q| > q_{+}\), the crossover between these two regimes being described by a parabolic interpolation. Note also that, as the kick period \(\tau \) approaches zero, \(q_{\mp }\rightarrow (1\mp 2){\overline{q}}/k_{B}T\). In this limit the symmetry function \({\mathfrak {s}_\mathrm{heat}}(q)\) agrees with the conclusions of [74] (see Fig. 2). \(\square \)

Fig. 2 The symmetry functions (i.e., twice the odd part of the rate function) of work and heat for the circuit of Fig. 1 in the limit \(\tau \rightarrow 0\) (the unit on the abscissa is \(RI^{2}/k_{B}T\))

As this example shows, the main problem in understanding the mathematical status and physical implications of fluctuation relations in oscillator networks and other boundary driven Hamiltonian systems stems from the lack of compactness of phase space and its consequence: the unboundedness of the observable describing the energy transfers between the system and the reservoirs [i.e., the last term in the right-hand side of Eq. (1.20)]. We will show that one can achieve complete control of these boundary terms by an appropriate change of drift (a Girsanov transformation) in the Langevin equation describing the dynamics of harmonic networks. This change is parametrized by the maximal solution of a one-parameter family of algebraic Riccati equations naturally associated to deformations of the Markov semigroup of the system. For a network of N oscillators, our approach reduces the calculation of the limiting cumulant generating function of the canonical functional \(S^t\) and its perturbations by quadratic boundary terms to the determination of some spectral data of the \(4N\times 4N\) Hamiltonian matrix of the above-mentioned Riccati equations. Combining this asymptotic information with Gaussian estimates of the finite time cumulant generating functions, we are able to derive a global large deviation principle for arbitrary quadratic boundary perturbations of \(S^t\). We stress that our scheme is completely constructive and well suited to numerical calculations.

The remaining parts of this paper are organized as follows. In Sect. 2 we introduce a general class of harmonic networks and the stochastic processes describing their nonequilibrium dynamics. Section 3 contains our main results. There, we consider a more general framework and study the large time asymptotics of the entropic functional \(S^t\) canonically associated to stochastic differential equations with linear drift satisfying some structural constraints (fluctuation–dissipation relations). We prove a global large deviation principle for this functional and show, in particular, that it satisfies the Gallavotti–Cohen fluctuation theorem. We then consider perturbations of \(S^t\) by quadratic boundary terms and show that they also satisfy a global large deviation principle. This applies, in particular, to the heat released by the system in the reservoirs. We turn back to harmonic networks in Sect. 4 where we apply our results to specific examples. Finally, Sect. 5 collects the proofs of our results.

2 The Model

We consider a collection of one-dimensional harmonic oscillators indexed by a finite set \(\mathcal{I}\). The configuration space \({\mathbb {R}}^\mathcal{I}\) is endowed with its Euclidean structure and the phase space \(\Xi ={\mathbb {R}}^\mathcal{I}\oplus {\mathbb {R}}^\mathcal{I}\) is equipped with its canonical symplectic 2-form \(\mathrm {d}p\wedge \mathrm {d}q\). The Hamiltonian is given by

where \(|\cdot |\) is the Euclidean norm and \(\omega : {\mathbb {R}}^\mathcal{I}\rightarrow {\mathbb {R}}^\mathcal{I}\) is a non-singular linear map. Time-reversal of the Hamiltonian flow of h is implemented by the anti-symplectic involution of \(\Xi \) given by

We consider the stochastic perturbation of the Hamiltonian flow of h obtained by coupling a non-empty subset of the oscillators, indexed by \(\partial \mathcal{I}\subset \mathcal{I}\), to Langevin heat reservoirs. The reservoir coupled to the ith oscillator is characterized by two parameters: its temperature \(\vartheta _i>0\) and its relaxation rate \(\gamma _i>0\). We encode these parameters in two linear maps: a bijection \(\vartheta :{\mathbb {R}}^{\partial \mathcal{I}}\rightarrow {\mathbb {R}}^{\partial \mathcal{I}}\) and an injection \(\iota :{\mathbb {R}}^{\partial \mathcal{I}}\rightarrow {\mathbb {R}}^\mathcal{I}={\mathbb {R}}^{\partial \mathcal{I}}\oplus {\mathbb {R}}^{\mathcal{I}\setminus \partial \mathcal{I}}\) defined by

The external force acting on the ith oscillator has the usual Langevin form

where the \(\dot{w}_i\) are independent white noises.

In mathematically more precise terms, we shall deal with the dynamics described by the following system of stochastic differential equations

where \({}^*\) denotes conjugation w.r.t. the Euclidean inner products and w is a standard \({\mathbb {R}}^{\partial \mathcal{I}}\)-valued Wiener process over the canonical probability space \((W,\mathcal{W},\mathbb {W})\). We denote by \(\{\mathcal{W}_t\}_{t\ge 0}\) the associated natural filtration.

To the Hamiltonian (2.1) we associate the graph \(\mathcal{G}=(\mathcal{I},\mathcal{E})\) with vertex set \(\mathcal{I}\) and edges

To avoid trivialities, we shall always assume that \(\mathcal{G}\) is connected.

As explained in the introduction, we shall construct the canonical entropic functional of the process (p(t), q(t)) and relate it to the heat released by the network into the thermal reservoirs. We end this section with a calculation of the latter quantity.

Applying Itô’s formula to the Hamiltonian h we obtain the expression

which describes the change in energy of the system. The ith term on the right-hand side of this identity is the work performed on the network by the ith Langevin force (2.3). Since these Langevin forces describe the action of heat reservoirs, we shall identify

with the heat injected in the network by the ith reservoir. A direct application of the fundamental thermodynamic relation between heat and entropy leads to consider \(\mathrm {d}S_i(t)=-\vartheta _i^{-1}\delta Q_i(t)\) as the entropy dissipated into the ith reservoir. Accordingly, the total entropy dissipated in the reservoirs during the time interval [0, t] is given by the functional

For lack of a better name, we shall call the physical quantity described by this functional the thermodynamic entropy (TDE), in order to distinguish it from various information theoretic entropies that will be introduced later.

3 Abstract Setup and Main Results

It turns out that a large part of the analysis of the process (2.4) and its entropic functionals is independent of the details of the model and relies only on a few of its structural properties. In this section we recast the harmonic networks in a more abstract framework, retaining only the structural properties of the original system which are necessary for our analysis.

Notations and Conventions Let E and F be real or complex Hilbert spaces. L(E, F) denotes the set of (continuous) linear operators \(A:E\rightarrow F\) and \(L(E)=L(E,E)\). For \(A\in L(E,F)\), \(A^*\in L(F,E)\) denotes the adjoint of A, \(\Vert A\Vert \) its operator norm, \(\mathrm{Ran}\,A\subset F\) its range and \(\mathrm{Ker}\,A\subset E\) its kernel. We denote the spectrum of \(A\in L(E)\) by \(\mathrm{sp}(A)\). A is non-negative (resp. positive), written \(A\ge 0\) (resp. \(A>0\)), if it is self-adjoint and \(\mathrm{sp}(A)\subset [0,\infty [\) (resp. \(\mathrm{sp}(A)\subset ]0,\infty [\)). We write \(A\ge B\) whenever \(A-B\in L(E)\) is non-negative. The relation \(\ge \) defines a partial order on L(E). The controllable subspace of a pair \((A,Q)\in L(E)\times L(F,E)\) is the smallest A-invariant subspace of E containing \(\mathrm{Ran}\,Q\). We denote it by \(\mathcal{C}(A,Q)\). If \(\mathcal{C}(A,Q)=E\), then (A, Q) is said to be controllable. We denote by \({\mathbb {C}}_\mp \) the open left/right half-plane. \(A\in L(E)\) is said to be stable/anti-stable whenever \(\mathrm{sp}(A)\subset {\mathbb {C}}_\mp \).
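In finite dimension the controllable subspace can be computed explicitly: by the Cayley–Hamilton theorem, \(\mathcal{C}(A,Q)\) is spanned by the columns of the Kalman matrix \([Q,AQ,\ldots ,A^{n-1}Q]\), where \(n=\dim E\). The following NumPy sketch illustrates this standard rank test. The function names and the tolerance are our own choices, and a numerical rank is only reliable for well-conditioned matrices.

```python
import numpy as np


def controllable_dim(A, Q, tol=1e-10):
    """Dimension of the controllable subspace C(A, Q), computed as the
    numerical rank of the Kalman matrix [Q, AQ, ..., A^{n-1} Q]."""
    n = A.shape[0]
    blocks = [Q]
    for _ in range(n - 1):
        blocks.append(A @ blocks[-1])
    return int(np.linalg.matrix_rank(np.hstack(blocks), tol=tol))


def is_controllable(A, Q):
    """True when C(A, Q) is the whole space."""
    return controllable_dim(A, Q) == A.shape[0]


# A rotation generator mixes the two coordinates, so noise acting on the
# first coordinate alone reaches the whole plane.
A_rot = np.array([[0.0, 1.0], [-1.0, 0.0]])
# A diagonal generator leaves each coordinate axis invariant.
A_diag = np.diag([-1.0, -2.0])
Q_first = np.array([[1.0], [0.0]])
```

Here `is_controllable(A_rot, Q_first)` holds, whereas `controllable_dim(A_diag, Q_first)` is 1 because the first coordinate axis is already invariant under `A_diag`.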

We start by rewriting the equation of motion (2.4) in a more compact form. Setting

Equation (2.4) takes the form

and functional (2.6) becomes

Note that the vector field Ax splits into a conservative (Hamiltonian) part \(\Omega x\) and a dissipative part \(-\Gamma x\) defined by

These operators satisfy the relations

The solution of the Cauchy problem associated to (3.2) with initial condition \(x(0)=x_0\) can be written explicitly as

This relation defines a family of \(\Xi \)-valued Markov processes indexed by the initial condition \(x_0\in \Xi \). This family is completely characterized by the data

where \(\Xi \) and \(\partial \,\Xi \) are finite-dimensional Euclidean vector spaces and \((A,Q,\vartheta ,\theta )\) is subject to the following structural constraints:

In the remaining parts of Sect. 3, we shall consider the family of processes (3.7), which are strong solutions of SDE (3.2), associated with the data (3.8) satisfying (3.9).

Remark 3.1

The concrete models of the previous section fit into the abstract setup defined by (3.2), (3.8), and (3.9) with \(\mathrm{Ker}\,(A-A^*)=\{0\}\) and \(\theta Q=-Q\). We have weakened the first condition and included the case \(\theta Q=+Q\) in (3.9) in order to encompass the quasi-Markovian models introduced in [23, 24]. There, the Langevin reservoirs are not directly coupled to the network, but to additional degrees of freedom described by dynamical variables \(r\in {\mathbb {R}}^\mathcal{J}\), where \(\mathcal{J}\) is a finite set. The augmented phase space of the network is \(\Xi ={\mathbb {R}}^\mathcal{J}\oplus {\mathbb {R}}^\mathcal{I}\oplus {\mathbb {R}}^\mathcal{I}\), and \(\partial \,\Xi ={\mathbb {R}}^\mathcal{J}\). The equations of motion take the form (3.2) with

where \(\iota :{\mathbb {R}}^\mathcal{J}\rightarrow {\mathbb {R}}^\mathcal{J}\) is bijective and \(\Lambda :{\mathbb {R}}^\mathcal{J}\rightarrow {\mathbb {R}}^\mathcal{I}\) injective. The time reversal map in this case is given by

Writing the system internal energy as \(H(x)=\frac{1}{2}|p|^2+\frac{1}{2}|\omega q|^2+\frac{1}{2}|r|^2\), the calculation of the previous section yields the following formula for the total entropy dissipated into the reservoirs

where \(\mathfrak {S}^t\) is given by (3.3).

Let \(\mathcal{P}(\Xi )\) be the set of Borel probability measures on \(\Xi \) and denote by \(P^t(x,\,\cdot \,)\in \mathcal{P}(\Xi )\) the transition kernel of the process (3.7). For bounded or non-negative measurable functions f on \(\Xi \) and \(\nu \in \mathcal{P}(\Xi )\) we write

so that \(\nu (f_t)=\nu _t(f)\). A measure \(\nu \) is invariant if \(\nu _t=\nu \) for all \(t\ge 0\). We denote the actions of time-reversal by

so that \(\nu (\widetilde{f})=\widetilde{\nu }(f)\). A measure \(\nu \) is time-reversal invariant if \(\widetilde{\nu }=\nu \). The generator L of the Markov semigroup \(P^t\) acts on smooth functions as

where

We further denote by \(\mathbb {P}_{x_0}\) the induced probability measure on the path space \(C({\mathbb {R}}^+,\Xi )\) and by \(\mathbb {E}_{x_0}\) the associated expectation. Considering \(x_0\) as a random variable, independent of the driving Wiener process w and distributed according to \(\nu \in \mathcal{P}(\Xi )\), we denote by \(\mathbb {P}_\nu \) and \(\mathbb {E}_\nu \) the induced path space measure and expectation. In the language of statistical mechanics, functions f on \(\Xi \) are the observables of the system, \(\nu \) is its initial state, and the flow \(t\mapsto \nu _t\) describes its time evolution. Invariant measures thus correspond to steady states of the system.

The following result is well known (see Chapter 6 in the book [18] and the papers [27, 52]). For the reader’s convenience, we provide a sketch of its proof in Sect. 5.1.

Theorem 3.2

-

(1)

Under the above hypotheses, the operator

$$\begin{aligned} M:=\int _0^\infty e^{sA}Be^{sA^*}\mathrm {d}s \end{aligned}$$is well defined and non-negative, and its restriction to \(\mathrm{Ran}\,M\) satisfies the inequality

$$\begin{aligned} \vartheta _\mathrm{min}=\min \mathrm{sp}(\vartheta ) \le M\big |_{\mathrm{Ran}\,M}\le \max \mathrm{sp}(\vartheta )=\vartheta _\mathrm{max}. \end{aligned}$$(3.13)Moreover, the centred Gaussian measure \(\mu \) with covariance M is invariant for the Markov processes associated with (3.2).

-

(2)

The invariant measure \(\mu \) is unique iff the pair (A, Q) is controllable. In this case, the mixing property holds in the sense that, for any \(f\in L^1(\Xi ,\mathrm {d}\mu )\), we have

$$\begin{aligned} \lim _{t\rightarrow +\infty }P^tf=\mu (f), \end{aligned}$$where the convergence holds in \(L^1(\Xi ,\mathrm {d}\mu )\) and uniformly on compact subsets of \(\Xi \).

-

(3)

Let x(t) be defined by relation (3.7), in which the initial condition \(x_0\) is independent of w and is distributed as \(\mu \). Then x(t) is a centred stationary Gaussian process. Moreover, its covariance operator defined by the relation \((\eta _1,K(t,s)\eta _2)=\mathbb {E}_\mu \bigl \{(x(t),\eta _1)(x(s),\eta _2)\bigr \}\) has the form

$$\begin{aligned} K(t,s)= e^{(t-s)_+A}M e^{(t-s)_-A^*}. \end{aligned}$$(3.14)
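Part (1) of the theorem can be explored numerically. The following is a minimal sketch, not the construction used in the proofs. It assumes the drift has the structure \(A=\Omega -\frac{1}{2}Q\vartheta ^{-1}Q^*\) with \(\Omega ^*=-\Omega \) and \(B=QQ^*\) (this structure is not restated in the present section), and it uses a hypothetical two-oscillator model whose coupling block `K` and coupling strength `g` are illustrative choices. For stable A, the integral defining M is the unique solution of the Lyapunov equation \(AM+MA^*+B=0\), which the sketch solves by vectorisation.

```python
import numpy as np

def toy_network(temps, g=1.0):
    """Hypothetical 2-oscillator model on Xi = R^4, coordinates (p1, p2, q1, q2)."""
    K = np.array([[1.0, 0.5], [0.0, 1.0]])    # invertible coupling block (illustrative)
    Z = np.zeros((2, 2))
    Omega = np.block([[Z, -K], [K.T, Z]])     # antisymmetric Hamiltonian part
    Q = g * np.vstack([np.eye(2), Z])         # the noise acts on the momenta only
    theta_inv = np.diag(1.0 / np.asarray(temps, dtype=float))
    A = Omega - 0.5 * Q @ theta_inv @ Q.T     # ASSUMED drift structure
    B = Q @ Q.T
    return A, B

def stationary_covariance(A, B):
    """Solve A M + M A^T + B = 0 by vectorisation (row-major vec)."""
    n = A.shape[0]
    I = np.eye(n)
    L = np.kron(A, I) + np.kron(I, A)         # matrix of M -> A M + M A^T
    M = np.linalg.solve(L, -B.reshape(-1)).reshape(n, n)
    return 0.5 * (M + M.T)                    # remove rounding asymmetry

A, B = toy_network(temps=(1.0, 3.0))
M = stationary_covariance(A, B)
print("spectrum of M:", np.linalg.eigvalsh(M))
```

For this toy model the spectrum of M should lie between the two reservoir temperatures, in line with (3.13), and equal temperatures should give a multiple of the identity.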

Remark 3.3

In the harmonic network setting, if \(\vartheta =\vartheta _0 I\) for some \(\vartheta _0\in ]0,\infty [\) (i.e., the reservoirs are in a joint thermal equilibrium at temperature \(\vartheta _0\)), then it follows from (3.13) that \(M=\vartheta \), which means that \(\mu \) is the Gibbs state at temperature \(\vartheta _0\) induced by the Hamiltonian h.

In the sequel, we shall assume without further notice that process (3.7) has a unique invariant measure \(\mu \), i.e., that the following hypothesis holds:

Assumption (C) The pair (A, Q) is controllable.

Remark 3.4

To make contact with [52], note that in terms of Stratonovich integral the TDE functional (3.3) is given by

This identity is a standard result of stochastic calculus (see, e.g., Sect. II.7 in [58]) and is used as a definition of the entropy current in [52].

3.1 Entropies and Entropy Production

In this section we introduce information theoretic quantities which play an important role in our approach to fluctuation relations. We briefly discuss their basic properties and in particular their relations with the TDE \(\mathfrak {S}^t\).

Let \(\nu _1\) and \(\nu _2\) be two probability measures on the same measurable space. If \(\nu _1\) is absolutely continuous w.r.t. \(\nu _2\), the relative entropy of the pair \((\nu _1, \nu _2)\) is defined by

We recall that \(\mathrm{Ent}(\nu _1|\nu _2)\in [-\infty ,0]\), with \(\mathrm{Ent}(\nu _1|\nu _2)=0\) iff \(\nu _1=\nu _2\) (see, e.g., [56]).

Suppose that \(\nu _1\) and \(\nu _2\) are mutually absolutely continuous. For \(\alpha \in {\mathbb {R}}\), the Rényi [60] relative \(\alpha \)-entropy of the pair \((\nu _1, \nu _2)\) is

The function \({\mathbb {R}}\ni \alpha \mapsto \mathrm{Ent}_\alpha (\nu _1|\nu _2)\in ]-\infty ,\infty ]\) is convex. It is non-positive on [0, 1], vanishes for \(\alpha \in \{0,1\}\), and is non-negative on \({\mathbb {R}}\setminus ]0,1[\). It is real analytic on ]0, 1[ and vanishes identically on this interval iff \(\nu _1=\nu _2\). Finally,

for all \(\alpha \in {\mathbb {R}}\).
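The properties listed above can be checked on finite probability vectors. The sketch below assumes the standard definitions \(\mathrm{Ent}(\nu _1|\nu _2)=-\int \log \frac{\mathrm {d}\nu _1}{\mathrm {d}\nu _2}\,\mathrm {d}\nu _1\) and \(\mathrm{Ent}_\alpha (\nu _1|\nu _2)=\log \int \bigl (\frac{\mathrm {d}\nu _1}{\mathrm {d}\nu _2}\bigr )^\alpha \mathrm {d}\nu _2\), which are consistent with the sign conventions and properties stated in this section but are an assumption here; the vectors `p` and `q` are arbitrary illustrative data.

```python
import math

def rel_entropy(p, q):
    """Relative entropy with the non-positive sign convention (assumed)."""
    return -sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))

def renyi(alpha, p, q):
    """Renyi relative alpha-entropy of the pair (p, q) (assumed standard form)."""
    return math.log(sum(pi ** alpha * qi ** (1.0 - alpha) for pi, qi in zip(p, q)))

p = [0.5, 0.3, 0.2]
q = [0.2, 0.2, 0.6]

for a in (-0.5, 0.0, 0.25, 0.5, 1.0, 1.5):
    print(a, renyi(a, p, q), renyi(1.0 - a, q, p))   # the two columns agree
print("Ent(p|q) =", rel_entropy(p, q))
```

The two printed columns illustrate the symmetry under \(\alpha \mapsto 1-\alpha \) combined with the exchange of the two measures; the values are non-positive for \(\alpha \in [0,1]\) and non-negative outside \(]0,1[\).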

Let \(\nu \in \mathcal{P}(\Xi )\) be such that \(\nu (|x|^2)<\infty \) (recall that in our abstract framework the Hamiltonian is \(h(x)=\frac{1}{2}|x|^2\)). The Gibbs–Shannon entropy of \(\nu _t=\nu P^t\) is defined by

The Gibbs–Shannon entropy is finite for all \(t>0\) (see Lemma 5.4 (1) below) and is a measure of the internal entropy of the system at time t.

To formulate our next result (see Sect. 5.2 for its proof) we define

Note that any Gaussian measure on \(\Xi \) belongs to \(\mathcal{P}_+(\Xi )\).

Proposition 3.5

Let a non-negative operator \(\beta \in L(\Xi )\) be such that

Define the quadratic form

and a reference measure \(\mu _\beta \) on \(\Xi \) by

Then the following assertions hold.

-

(1)

\(\mu _\beta \Theta =\mu _\beta \) and \(\Theta \sigma _\beta =-\sigma _\beta \).

-

(2)

Let \(L^\beta \) denote the formal adjoint of the Markov generator (3.11) w.r.t. the inner product of the Hilbert space \(L^2(\Xi ,\mu _\beta )\). Then

$$\begin{aligned} \Theta L^\beta \Theta =L+\sigma _\beta . \end{aligned}$$(3.20)

-

(3)

The TDE (3.3) can be written as

$$\begin{aligned} \mathfrak {S}^t=-\int _0^t\sigma _\beta (x(s))\mathrm {d}s +\log \frac{\mathrm {d}\mu _{\beta }}{\mathrm {d}x}(x(t)) -\log \frac{\mathrm {d}\mu _{\beta }}{\mathrm {d}x}(x(0)). \end{aligned}$$(3.21)

-

(4)

Suppose that Assumption (C) holds. Then for any \(\nu \in \mathcal{P}_+(\Xi )\) the de Bruijn relation

$$\begin{aligned} \frac{\mathrm {d}\ }{\mathrm {d}t}\mathrm{Ent}(\nu _t|\mu ) =\tfrac{1}{2} \nu _t\left( |Q^*\nabla \log \frac{\mathrm {d}\nu _t}{\mathrm {d}\mu }|^2\right) \end{aligned}$$(3.22)

holds for t large enough. In particular, \(\mathrm{Ent}(\nu _t|\mu )\) is non-decreasing for large t.

-

(5)

Under the same assumptions

$$\begin{aligned} \frac{\mathrm {d}\ }{\mathrm {d}t}\left( S_\mathrm{GS}(\nu _t)+\mathbb {E}_{\nu }[\mathfrak {S}^t]\right) =\tfrac{1}{2}\nu _t \left( |Q^*\nabla \log \frac{\mathrm {d}\nu _t}{\mathrm {d}\mu _\beta }|^2\right) \end{aligned}$$(3.23)

holds for t large enough.

Remark 3.6

Part (2) states that our system satisfies a generalized detailed balance condition as defined in [24] (see also [6]).

Let us comment on the physical interpretation of Part (3) in the harmonic network setting. Let \(\mathcal{I}=\cup _{k\in K}\mathcal{I}_k\) be a partition of the network and denote by \(\pi _k\) the orthogonal projection on \({\mathbb {R}}^\mathcal{I}\) with range \({\mathbb {R}}^{\mathcal{I}_k}\). Defining

for \(k,l\in K\), we decompose the network into |K| clusters \(\mathcal{R}_k\) with internal energy \(h_k\), interacting through the potentials \(v_{k,l}\). Denote by

the total energy stored in \(\mathcal{R}_k\). Assume that all the reservoirs attached to \(\mathcal{R}_k\), if any, are at the same temperature, i.e.,

and for \(k\in K\) let \(\beta _k\ge 0\) be such that \(\beta _k=\vartheta _i^{-1}\) whenever \(i\in \mathcal{I}_k\cap \partial \mathcal{I}\) (see Fig. 3). Defining the non-negative operator \(\beta \) by

we observe that (3.17) holds as a consequence of (3.24) and the time-reversal invariance of \({\tilde{h}}_k\). The corresponding reference measure \(\mu _\beta \) is, up to irrelevant normalization, a local Gibbs measure where each cluster \(\mathcal{R}_k\) is in equilibrium at the inverse temperatures \(\beta _k\).

Itô’s formula yields the local energy balance relation

where \(\delta Q_i(t)\) is given by (2.5). The last term on the right-hand side of this identity is the total heat injected into subsystem \(\mathcal{R}_k\) by the reservoirs attached to it. Thus, we can identify

with the total flux of energy flowing out of \(\mathcal{R}_k\) into its environment which is composed of the other subsystems \(\mathcal{R}_{l\not =k}\). Multiplying Eq. (3.26) with \(\beta _k\), summing over k, integrating over [0, t] and comparing the result with (2.6) we obtain

Comparison with (3.21) yields

which, according to the heat-entropy relation, is the total inter-cluster entropy flux. Two different ways of partitioning the system and assigning reference local temperatures to each subsystem lead to total entropy dissipations which differ only by a boundary term

provided the local inverse temperatures \(\beta _k\), \(\beta _k'\) are consistent with the temperatures of the reservoirs.

Equation (3.23) can be read as an entropy balance equation. Its left-hand side is the sum of the rate of increase of the internal Gibbs–Shannon entropy of the system and of the TDE flux leaving the system. Thus, the quantity on the right-hand side of Eq. (3.23) can be interpreted as the total entropy production rate of the process. Using Eqs. (3.16) and (3.21), we can rewrite Eq. (3.23) as

where the entropy production functional \(\mathrm{Ep}\) is defined by

In the physics literature, the quantity

is sometimes called stochastic entropy (see, e.g., [69, Sect. 2.4]). In the case \(\nu =\mu \), i.e., for the stationary process, stochastic entropy does not contribute to the expectation of \(\mathrm{Ep}(\mu ,t)\), and Eq. (3.28) yields

so that (3.27) reduces to

where the right-hand side is the steady state entropy production rate. In the following, we set

By (3.29) this quantity is independent of the choice of \(\beta \in L(\Xi )\) satisfying Conditions (3.17). The relation (3.30) shows that \(\mathrm{ep}\ge 0\). Computing the Gaussian integral on the right-hand side of (3.30) yields

where \(\Vert \cdot \Vert _2\) denotes the Hilbert–Schmidt norm. Thus, \(\mathrm{ep}>0\) iff \(MQ-Q\vartheta \not =0\). By Remark 3.3, the latter condition implies in particular that the eigenvalues of \(\vartheta \) (i.e., the temperatures \(\vartheta _i\)) are not all equal. Part (2) of the next proposition provides a converse. For the proof see Sect. 5.3.

Proposition 3.7

-

(1)

\(\mathrm{ep}=0\Leftrightarrow MQ=Q\vartheta \Leftrightarrow [\Omega ,M]=0\Leftrightarrow \mu \Theta =\mu .\) In particular, the steady state entropy production rate vanishes iff the steady state \(\mu \) is time-reversal invariant and invariant under the (Hamiltonian) flow \(\mathrm {e}^{t\Omega }\).

-

(2)

Let \(\vartheta _1,\vartheta _2\) be two distinct eigenvalues of \(\vartheta \) and denote by \(\pi _1,\pi _2\) the corresponding spectral projections. If \(\mathcal{C}(\Omega ,Q\pi _1)\cap \mathcal{C}(\Omega ,Q\pi _2)\not =\{0\}\), then \(\mathrm{ep}>0\).
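The equivalences of Part (1) can be observed on a small example. The sketch below is illustrative only: it assumes the drift structure \(A=\Omega -\frac{1}{2}Q\vartheta ^{-1}Q^*\), \(B=QQ^*\), a hypothetical two-oscillator model (the coupling block `K` is an arbitrary choice), and the momentum-flip matrix `theta` as time reversal. It evaluates the three defects \(MQ-Q\vartheta \), \([\Omega ,M]\) and \(\theta M\theta -M\), the last one using the characterization of \(\mu \Theta =\mu \) in terms of the covariance M.

```python
import numpy as np

def toy_network(temps, g=1.0):
    """Hypothetical 2-oscillator model on R^4, coordinates (p1, p2, q1, q2)."""
    K = np.array([[1.0, 0.5], [0.0, 1.0]])    # illustrative coupling block
    Z = np.zeros((2, 2))
    Omega = np.block([[Z, -K], [K.T, Z]])
    Q = g * np.vstack([np.eye(2), Z])
    vartheta = np.diag(np.asarray(temps, dtype=float))
    return Omega, Q, vartheta

def stationary_covariance(Omega, Q, vartheta):
    A = Omega - 0.5 * Q @ np.linalg.inv(vartheta) @ Q.T   # ASSUMED drift structure
    B = Q @ Q.T
    n = A.shape[0]
    L = np.kron(A, np.eye(n)) + np.kron(np.eye(n), A)     # Lyapunov operator
    M = np.linalg.solve(L, -B.reshape(-1)).reshape(n, n)
    return 0.5 * (M + M.T)

def reversibility_defects(temps):
    """Norms of MQ - Q*vartheta, [Omega, M] and theta M theta - M."""
    Omega, Q, vartheta = toy_network(temps)
    M = stationary_covariance(Omega, Q, vartheta)
    theta = np.diag([-1.0, -1.0, 1.0, 1.0])               # flips the momenta
    return (np.linalg.norm(M @ Q - Q @ vartheta),
            np.linalg.norm(Omega @ M - M @ Omega),
            np.linalg.norm(theta @ M @ theta - M))

print("unequal temperatures:", reversibility_defects((1.0, 3.0)))
print("equal temperatures:  ", reversibility_defects((2.0, 2.0)))
```

In this toy model the three defects vanish together at equal temperatures and are simultaneously non-zero otherwise, as Proposition 3.7 predicts.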

Remark 3.8

The time-reversal invariance \(\mu \Theta =\mu \) of the steady state is equivalent to \(\theta M\theta =M\). For Markovian harmonic networks, the latter condition is easily seen to imply

i.e., the statistical independence of simultaneous positions and momenta. In the quasi-Markovian case, \(\theta M\theta =M\) implies

3.2 Path Space Time-Reversal

Given \(\tau >0\), the space-time statistics of the process (3.7) in the finite period \([0,\tau ]\) is described by \((\mathfrak {X}^\tau ,\mathcal{X}^\tau ,\mathbb {P}_\nu ^\tau )\), where \(\mathbb {P}_\nu ^\tau \) is the measure induced by the initial law \(\nu \in \mathcal{P}(\Xi )\) on the path-space \(\mathfrak {X}^\tau =C([0,\tau ],\Xi )\) equipped with its Borel \(\sigma \)-algebra \(\mathcal{X}^\tau \). Path space time-reversal is given by the involution

of \(\mathfrak {X}^\tau \). The time reversed path space measure \(\widetilde{\mathbb {P}}_\nu ^\tau \) is defined by

Since

\(\widetilde{\mathbb {P}}_\nu ^\tau \) describes the statistics of the time reversed process \(\varvec{\tilde{x}}\) started with the law \(\nu P^\tau \Theta \). It is therefore natural to compare it with \(\mathbb {P}_{\nu P^\tau \Theta }^\tau \). The following result (proved in Sect. 5.4) provides a connection between the functional \(\mathrm{Ep}(\,\cdot \,,\tau )\) and time-reversal of the path space measure.

Set

Proposition 3.9

For any \(\tau >0\) and any \(\nu \in \mathcal{P}^1_{\mathrm {loc}}(\Xi )\), \(\widetilde{\mathbb {P}}_\nu ^\tau \) is absolutely continuous w.r.t. \(\mathbb {P}_{\nu P^\tau \Theta }^\tau \) and

Remark 3.10

The above result is a mathematical formulation of [52, Sect. 3.1] in the framework of harmonic networks. Rewriting (3.34) as

we obtain Eq. (3.12) of [52]. Proposition 3.9 is a consequence of the Girsanov formula, the generalized detailed balance condition (3.20), and the fact that the time-reversed process \(\varvec{\tilde{x}}\) is again a diffusion. Apart from the last fact, which was proven in [57], the main technical difficulty in its proof is to check the martingale property of the exponential of the right-hand side of (3.34).

Remark 3.11

It is an immediate consequence of Eq. (5.13) below that \(\nu P^\tau \in \mathcal{P}^1_{\mathrm {loc}}(\Xi )\) for any \(\nu \in \mathcal{P}(\Xi )\) and \(\tau >0\).

Equipped with Eq. (3.34), it is easy to transpose the relative entropy formulas of the previous section to path space measures. As a first application, let us compute the relative entropy of \(\mathbb {P}_{\eta \Theta }^\tau \) w.r.t. \(\widetilde{\mathbb {P}}_\nu ^\tau \):

If \(\nu \in \mathcal{P}_+(\Xi )\) then (3.27) yields

which, according to the previous section, is the entropy produced by the process during the period \([0,\tau ]\). Setting \(\nu =\mu \), we obtain

Together with Proposition 3.7(1), this relation proves

Theorem 3.12

The following statements are equivalent:

-

(1)

\(\mathbb {P}_{\mu }^\tau \circ \Theta ^\tau =\mathbb {P}_\mu ^\tau \) for all \(\tau >0\), i.e., the stationary process (3.7) is reversible.

-

(2)

\(\mathbb {P}_{\mu }^\tau \circ \Theta ^\tau =\mathbb {P}_\mu ^\tau \) for some \(\tau >0\).

-

(3)

\(\mathrm{ep}=0\).

3.3 The Canonical Entropic Functional

We are now in a position to deal with the first step in our scheme: the construction of the canonical entropic functional \(S^\tau \) associated with \((\mathfrak {X}^\tau ,\mathcal{X}^\tau ,\mathbb {P}_\mu ^\tau ,\Theta ^\tau )\). By Proposition 3.9, Rényi’s relative \(\alpha \)-entropy per unit time of the pair \((\mathbb {P}_\mu ^\tau , \widetilde{\mathbb {P}}_\mu ^\tau )\),

is the cumulant generating function of

In the following, we shall set

which, by construction, satisfies the Gallavotti–Cohen symmetry \(e_\tau (1-\alpha )=e_\tau (\alpha )\).

Before formulating our main result on the large time asymptotics of \(e_\tau (\alpha )\), we need several technical facts which will be proved in Sect. 5.5.

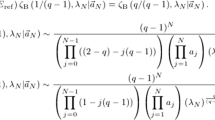

Theorem 3.13

Suppose that Assumption (C) holds.

-

(1)

For \(\beta \in L(\Xi )\) satisfying Conditions (3.17), the map

$$\begin{aligned} {\mathbb {R}}\ni \omega \mapsto E(\omega ) =Q^*(A^*-\mathrm {i}\omega )^{-1}\Sigma _\beta (A+\mathrm {i}\omega )^{-1}Q \end{aligned}$$(3.37)

takes values in the self-adjoint operators on the complexification of \(\partial \Xi \). As such, it is continuous and independent of the choice of \(\beta \).

-

(2)

Set

$$\begin{aligned} \varepsilon _-=\min _{\omega \in {\mathbb {R}}}\min \mathrm{sp}(E(\omega )),\qquad \varepsilon _+=\max _{\omega \in {\mathbb {R}}}\max \mathrm{sp}(E(\omega )),\qquad \kappa _c=\frac{1}{\varepsilon _+}-\frac{1}{2}. \end{aligned}$$

The following alternative holds: either \(\kappa _c=\infty \) in which case \(E(\omega )=0\) for all \(\omega \in {\mathbb {R}}\), or \(\frac{1}{2}<\kappa _c<\infty \), \(\varepsilon _-<0\), \(0<\varepsilon _+<1\), and

$$\begin{aligned} \frac{1}{\varepsilon _-}+\frac{1}{\varepsilon _+}=1. \end{aligned}$$

-

(3)

Set \(\mathfrak {I}_c=]\frac{1}{2}-\kappa _c,\frac{1}{2}+\kappa _c[\, =\,]\frac{1}{\varepsilon _-},\frac{1}{\varepsilon _+}[\). The function

$$\begin{aligned} e(\alpha )= -\int _{-\infty }^{\infty }\log \det \left( I-\alpha E(\omega )\right) \frac{\mathrm {d}\omega }{4\pi } \end{aligned}$$(3.38)

is analytic on the cut plane \(\mathfrak {C}_c=({\mathbb {C}}\setminus {\mathbb {R}})\cup \mathfrak {I}_c\). It is convex on the open interval \(\mathfrak {I}_c\) and extends to a continuous function on the closed interval \({\overline{\mathfrak {I}}}_c\). It further satisfies

$$\begin{aligned} e(1-\alpha )=e(\alpha ) \end{aligned}$$(3.39)

for all \(\alpha \in \mathfrak {C}_c\),

$$\begin{aligned} \left\{ \begin{array}{l@{\quad }l} e(\alpha )\le 0&{}\text{ for } \alpha \in [0,1];\\ e(\alpha )\ge 0&{}\text{ for } \alpha \in {\overline{\mathfrak {I}}}_c\setminus ]0,1[;\\ \end{array} \right. \end{aligned}$$

and in particular \(e(0)=e(1)=0\). Moreover

$$\begin{aligned} \mathrm{ep}=-e'(0)=e'(1), \end{aligned}$$

and either \(\mathrm{ep}=0\), \(\kappa _c=\infty \), and \(e(\alpha )\) vanishes identically, or \(\mathrm{ep}>0\), \(\kappa _c<\infty \), \(e(\alpha )\) is strictly convex on \({\overline{\mathfrak {I}}}_c\), and

$$\begin{aligned} \lim _{\alpha \downarrow \frac{1}{2}-\kappa _c}e'(\alpha )=-\infty , \qquad \lim _{\alpha \uparrow \frac{1}{2}+\kappa _c}e'(\alpha )=+\infty . \end{aligned}$$(3.40)

-

(4)

If \(\mathrm{ep}>0\), then there exists a unique signed Borel measure \(\varsigma \) on \({\mathbb {R}}\), supported on \({\mathbb {R}}{\setminus }\mathfrak {I}_c\), such that

$$\begin{aligned} \int \frac{|\varsigma |(\mathrm {d}r)}{|r|}<\infty , \end{aligned}$$

and

$$\begin{aligned} e(\alpha )=-\int \log \left( 1-\frac{\alpha }{r}\right) \varsigma (\mathrm {d}r). \end{aligned}$$

-

(5)

For \(\alpha \in {\mathbb {R}}\) define

$$\begin{aligned} K_\alpha =\left[ \begin{array}{c@{\quad }c} -A_\alpha &{}QQ^*\\ C_\alpha &{}A_\alpha ^*\end{array}\right] , \end{aligned}$$(3.41)

where

$$\begin{aligned} A_\alpha =(1-\alpha )A-\alpha A^*,\qquad C_\alpha =\alpha (1-\alpha )Q\vartheta ^{-2}Q^*. \end{aligned}$$(3.42)

For all \(\omega \in {\mathbb {R}}\) and \(\alpha \in {\mathbb {R}}\) one has

$$\begin{aligned} \det (K_\alpha -\mathrm {i}\omega )=|\det (A+\mathrm {i}\omega )|^2\det (I-\alpha E(\omega )). \end{aligned}$$

Moreover, for \(\alpha \in \mathfrak {I}_c\),

$$\begin{aligned} e(\alpha ) =\frac{1}{4}\mathrm{tr}(Q\vartheta ^{-1}Q^*) -\frac{1}{4}\sum _{\lambda \in \mathrm{sp}(K_\alpha )}|\mathrm{Re}\,\lambda |\, m_\lambda , \end{aligned}$$(3.43)

where \(m_\lambda \) denotes the algebraic multiplicity of \(\lambda \in \mathrm{sp}(K_\alpha )\).
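Formula (3.43) lends itself to direct numerical evaluation. The following sketch evaluates it for a hypothetical two-oscillator model; the drift structure \(A=\Omega -\frac{1}{2}Q\vartheta ^{-1}Q^*\) and the specific matrices are assumptions made for illustration, and the formula is only used for \(\alpha \in [0,1]\subset \mathfrak {I}_c\).

```python
import numpy as np

def toy_network(temps, g=1.0):
    """Hypothetical 2-oscillator model on R^4, coordinates (p1, p2, q1, q2)."""
    K = np.array([[1.0, 0.5], [0.0, 1.0]])    # illustrative coupling block
    Z = np.zeros((2, 2))
    Omega = np.block([[Z, -K], [K.T, Z]])
    Q = g * np.vstack([np.eye(2), Z])
    vartheta = np.diag(np.asarray(temps, dtype=float))
    return Omega, Q, vartheta

def e_spectral(alpha, Omega, Q, vartheta):
    """Evaluate the right-hand side of (3.43) from the spectrum of K_alpha."""
    ti = np.linalg.inv(vartheta)
    A = Omega - 0.5 * Q @ ti @ Q.T             # ASSUMED drift structure
    A_al = (1.0 - alpha) * A - alpha * A.T     # (3.42)
    C_al = alpha * (1.0 - alpha) * Q @ ti @ ti @ Q.T
    K_al = np.block([[-A_al, Q @ Q.T], [C_al, A_al.T]])   # (3.41)
    lam = np.linalg.eigvals(K_al)              # repeated by algebraic multiplicity
    return 0.25 * np.trace(Q @ ti @ Q.T) - 0.25 * np.sum(np.abs(lam.real))

Omega, Q, vartheta = toy_network((1.0, 3.0))
for a in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(a, e_spectral(a, Omega, Q, vartheta))
```

On this example the computed values vanish at \(\alpha =0\) and \(\alpha =1\), are symmetric about \(\alpha =\frac{1}{2}\) and negative in between, in agreement with Part (3).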

Remark 3.14

We shall prove, in Proposition 5.5(11), that

This lower bound is sharp, i.e., there are networks for which equality holds [see Theorem 4.2(3)].

Remark 3.15

It follows from (3.44) that \(\kappa _c=\infty \) for harmonic networks at equilibrium, i.e., whenever \(\vartheta _\mathrm{min}=\vartheta _\mathrm{max}=\vartheta _0>0\). Up to the controllability assumption of Proposition 3.7(2), these are the only examples with \(\kappa _c=\infty \) (see also Remark 5.6 and Sect. 4).

Remark 3.16

Remark 2 after Theorem 2.1 in [41] applies to Part (4) of Theorem 3.13.

In the sequel it will be convenient to consider the following natural extension of the function \(e(\alpha )\).

Definition 3.17

The function

is given by (3.38) for \(\alpha \in {\overline{\mathfrak {I}}}_c\) and \(e(\alpha )=+\infty \) for \(\alpha \in {\mathbb {R}}{\setminus }{\overline{\mathfrak {I}}}_c\).

This definition makes \({\mathbb {R}}\ni \alpha \mapsto e(\alpha )\) an essentially smooth closed proper convex function (see [62]).

The main result of this section relates the spectrum of the matrix \(K_\alpha \), through the function \(e(\alpha )\), to the large time asymptotics of the Rényi entropy (3.36) and the cumulant generating function of the canonical entropic functional \(S^t\).

Proposition 3.18

Under Assumption (C) and with Definition 3.17 one has

for all \(\alpha \in {\mathbb {R}}\).

A closer look at the proof of Proposition 3.18 in Sect. 5.7 gives more. For any \(x\in \Xi \) and \(\alpha \in {\overline{\mathfrak {I}}}_c\)

see [53, Sect. 20.1.5] and references therein. The functions \(\alpha \mapsto c_\alpha \in [0,\infty [\) and \(\alpha \mapsto T_\alpha \in L(\Xi )\) are real analytic on \(\mathfrak {I}_c\), continuous on \({\overline{\mathfrak {I}}}_c\), \(c_\alpha >0\) for \(\alpha \in \mathfrak {I}_c\), and \(T_\alpha >M^{-1}\) for \(\alpha \in {\overline{\mathfrak {I}}}_c\). Moreover, the convergence also holds in \(L^1(\Xi ,\mathrm {d}\mu )\) and is exponentially fast for \(\alpha \in \mathfrak {I}_c\). For \(\alpha \in {\overline{\mathfrak {I}}}_c\) and as \(\tau \rightarrow \infty \), one has

where \(\epsilon (\alpha )>0\) for \(\alpha \in \mathfrak {I}_c\). However, \(c_\alpha \) vanishes on \(\partial \mathfrak {I}_c\) and hence the “prefactor” \(g_\tau (\alpha )\) diverges as \(\alpha \rightarrow \partial \mathfrak {I}_c\). Nevertheless, (3.45) holds because

As in our introductory example, the occurrence of singularities in the “prefactor” \(g_\tau (\alpha )\) is related to the tail of the law of \(S^t\). This phenomenon was observed by Cohen and van Zon in their study of the fluctuations of the work done on a dragged Brownian particle and its heat dissipation [15] (see also [16, 72] for a more detailed analysis). In their model, which is closely related to ours, the cumulant generating function of the dissipated heat \(e_\tau (\alpha )\) diverges for \(\alpha ^2\ge (1-\mathrm {e}^{-2\tau })^{-1}\) and hence

This leads to a breakdown of the Gallavotti–Cohen symmetry and to an extended fluctuation relation. We will come back to this point in the next section and see that this is a general feature of the TDE functional \(\mathfrak {S}^t\) [see Eq. (3.64) below]. Proposition 3.18 and Theorem 3.13(3) show that the canonical entropic functional \(S^t\) does not suffer from this defect: its limiting cumulant generating function \(e(\alpha )\) satisfies Gallavotti–Cohen symmetry for all \(\alpha \in {\mathbb {R}}\).

3.4 Large Deviations of the Canonical Entropic Functional

We now turn to Step 2 of our scheme. We recall some fundamental results on the large deviations of a family \((\xi _t)_{t\ge 0}\) of real-valued random variables (the Gärtner–Ellis theorem, see, e.g., [17, Theorem V.6]). We shall focus on the situations relevant for our discussion of entropic fluctuations. We refer the reader to [17, 19] for a more general exposition.

By Hölder’s inequality, the cumulant generating function

is convex and vanishes at \(\alpha =0\). It is finite on some (possibly empty) open interval and takes the value \(+\infty \) on the (possibly empty) interior of its complement.

Remark 3.19

The above definition follows the convention used in the mathematical literature on large deviations. Note, however, that in the previous section we have adopted the convention of the physics literature on entropic fluctuations where the cumulant generating function of an entropic functional \(\xi _t\) is defined by \(\alpha \mapsto t^{-1}\log \mathbb {E}[\mathrm {e}^{-\alpha \xi _t}]\). This clash of conventions is the origin of various minus signs occurring in Theorems 3.20 and 3.28 below.

The function

is convex and vanishes at \(\alpha =0\). Let D be the interior of its effective domain \(\{\alpha \in {\mathbb {R}}\,|\,\Lambda (\alpha )<\infty \}\), and assume that \(0\in D\). Then D is a non-empty open interval, \(\Lambda (\alpha )>-\infty \) for all \(\alpha \in {\mathbb {R}}\), and the function \(D\ni \alpha \mapsto \Lambda (\alpha )\) is convex and continuous. The Legendre transform

is convex and lower semicontinuous, as supremum of a family of affine functions. Moreover, \(\Lambda (0)=0\) implies that \(\Lambda ^*\) is non-negative. The large deviation upper bound

holds for all closed sets \(C\subset {\mathbb {R}}\).

Assume, in addition, that on some finite open interval \(0\in D_0=]\alpha _-,\alpha _+[\subset D\) the function \(D_0\ni \alpha \mapsto \Lambda (\alpha )\) is real analytic and not linear. Then \(\Lambda \) is strictly convex and its derivative \(\Lambda '\) is strictly increasing on \(D_0\). We denote by \(x_\mp \) the (possibly infinite) right/left limits of \(\Lambda '(\alpha )\) at \(\alpha =\alpha _\mp \). By convexity,

for any \(\alpha _0\in D_0\) and \(\alpha \in {\mathbb {R}}\), and

Since \(\Lambda ^*\) is non-negative, it follows that \(\Lambda ^*(\Lambda '(0))=0\). One easily shows that (3.47) also implies

for \(x\in E=]x_-,x_+[\). If the limit

exists for all \(\alpha \in D_0\), then it coincides with \(\Lambda (\alpha )\), and the large deviation lower bound

holds for all open sets \(O\subset {\mathbb {R}}\). Note that in cases where \(x_-=-\infty \) and \(x_+=+\infty \) one has \(E={\mathbb {R}}\) and convexity implies \(\Lambda (\alpha )=+\infty \) for \(\alpha \in {\mathbb {R}}\setminus [\alpha _-,\alpha _+]\).

We shall say that the family \((\xi _t)_{t\ge 0}\) satisfies a local LDP on E with rate function \(\Lambda ^*\) if (3.46) holds for all closed sets \(C\subset {\mathbb {R}}\) and (3.48) holds for all open sets \(O\subset {\mathbb {R}}\). If the latter holds with \(E={\mathbb {R}}\), we say that this family satisfies a global LDP with rate function \(\Lambda ^*\).

By the above discussion, Proposition 3.18 and Theorem 3.13(3) immediately yield:

Theorem 3.20

Suppose that Assumption (C) holds. Then, under the law \(\mathbb {P}_\mu \), the family \((S^t)_{t\ge 0}\) satisfies a global LDP with rate function (see Fig. 4)

It follows from the Gallavotti–Cohen symmetry (3.39) that the function \({\mathbb {R}}\ni s\mapsto I(s)+\frac{1}{2} s\in [0,\infty ]\) is even, i.e., the universal fluctuation relation

holds for all \(s\in {\mathbb {R}}\).
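The mechanism behind the fluctuation relation can be made concrete with a toy computation. The sketch below assumes that the rate function is the Legendre transform \(I(s)=\sup _\alpha (\alpha s-e(-\alpha ))\), which is consistent with the sign convention of Remark 3.19, and it replaces the actual \(e(\alpha )\) by a hypothetical quadratic `e_toy` that merely shares the symmetry \(e(1-\alpha )=e(\alpha )\). The parameters `a` and `kappa` are illustrative.

```python
def e_toy(alpha, a=0.5):
    """Hypothetical convex function with e(0) = e(1) = 0 and e(1 - x) = e(x)."""
    return a * alpha * (alpha - 1.0)

def rate_function(s, kappa=2.0, n=4001):
    """Grid approximation of sup over alpha of (alpha * s - e_toy(-alpha)).

    The grid is chosen so that -alpha ranges over [1/2 - kappa, 1/2 + kappa];
    it is symmetric about alpha = -1/2, which mirrors the symmetry of e_toy.
    """
    best = -float("inf")
    for i in range(n):
        alpha = -0.5 - kappa + 2.0 * kappa * i / (n - 1)
        best = max(best, alpha * s - e_toy(-alpha))
    return best

for s in (0.3, 1.0, 2.5):
    print(s, rate_function(-s) - rate_function(s))   # close to s in each case
```

The difference \(I(-s)-I(s)\) reproduces s because the substitution \(\alpha \mapsto -1-\alpha \) maps the grid onto itself and leaves \(e(-\alpha )\) invariant; only the symmetry of e is used, not its specific form.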

Remark 3.21

If \(\mathrm{ep}>0\), then the strict convexity and analyticity of the function \(e(\alpha )\) stated in Theorem 3.13(3) imply that the rate function I(s) is itself real analytic and strictly convex. Denoting by \(s\mapsto \ell (s)\) the inverse of the function \(\alpha \mapsto -e'(-\alpha )\), we derive

and the Gallavotti–Cohen symmetry translates to \(\ell (-s)+\ell (s)=-1\).

3.5 Intermezzo: A Naive Approach to the Cumulant Generating Function of \({{\mathfrak {S}}^t}\)

Before dealing with perturbations of the functional \(S^t\), we briefly digress from the main course of our scheme in order to better motivate what will follow. We shall try to compute the cumulant generating function of the TDE functional \(\mathfrak {S}^t\) by a simple Perron-Frobenius type argument.

By Itô calculus, for any \(f\in C^2(\Xi )\) one has

where

is the deformation of the Fokker–Planck operator (3.11), and \(A_\alpha \), B, \(C_\alpha \) are given by (3.12), (3.42). Note that the structural relations (3.9) imply

where \(L_\alpha ^*\) denotes the formal adjoint of \(L_\alpha \). Assuming \(L_\alpha \) to have a non-vanishing spectral gap, a naïve application of the Girsanov formula leads to

where \(\Psi _\alpha \) is the properly normalized eigenfunction of \(L_\alpha \) to its dominant eigenvalue \(\lambda _\alpha \). It follows that

the Gallavotti–Cohen symmetry \(\lambda _{1-\alpha }=\lambda _\alpha \) being a direct consequence of (3.51).

Given the form of \(L_\alpha \), the Gaussian Ansatz

is mandatory. Insertion into the eigenvalue equation \(L_\alpha \Psi _\alpha =\lambda _\alpha \Psi _\alpha \) leads to the following equation for the real symmetric matrix \(X_\alpha \),

while the dominant eigenvalue is given by

There are two difficulties with this naïve argument. The first one is that it is far from obvious that the Girsanov theorem applies here. The second one is again related to the “prefactor” problem. In fact we shall see that Eq. (3.53) does not have positive definite solutions for \(\alpha \le 0\), making the right-hand side of (3.52) infinite for \(\alpha \ge 1\). Nevertheless, the above calculation reveals Eqs. (3.53) and (3.54), which will play a central role in what follows.

3.6 More Entropic Functionals

In this section we deal with Step 3 of our scheme. The main result, Proposition 3.22 below, concerns the large time behavior of cumulant generating functions of the kind

where \(\Phi \) and \(\Psi \) are quadratic forms on the phase space \(\Xi \),

and the initial measure \(\nu \in \mathcal{P}(\Xi )\) is Gaussian. We then apply this result to some entropic functionals of physical interest:

-

(1)

The steady state TDE (recall Eq. (3.35)),

$$\begin{aligned} \mathfrak {S}^t=S^t+\log \frac{\mathrm {d}\mu }{\mathrm {d}x}(\theta x(t)) -\log \frac{\mathrm {d}\mu }{\mathrm {d}x}(x(0)), \end{aligned}$$(3.56)

with \(\nu =\mu \).

-

(2)

The steady state TDE for quasi-Markovian networks (3.10) which we can rewrite as

$$\begin{aligned} \mathfrak {S}^t_\mathrm{qM} =\mathfrak {S}^t+\tfrac{1}{2}|\vartheta ^{-1/2}\pi _Q x(t)|^2 -\tfrac{1}{2}|\vartheta ^{-1/2}\pi _Q x(0)|^2, \end{aligned}$$(3.57)

where \(\pi _Q\) denotes the orthogonal projection to \(\mathrm{Ran}\,Q=\partial \Xi \), with \(\nu =\mu \).

-

(3)

Transient TDEs, i.e., the functionals \(\mathfrak {S}^t\) and \(\mathfrak {S}_\mathrm{qM}^t\), but in the transient process started with a Dirac measure \(\nu =\delta _{x_0}\).

-

(4)

The steady state entropy production functional

$$\begin{aligned} \mathrm{Ep}(\mu ,t)=S^t+\log \frac{\mathrm {d}\mu \Theta }{\mathrm {d}\mu }(x(t)) \end{aligned}$$

with \(\nu =\mu \).

-

(5)

The canonical entropic functional for the transient process, started with the non-degenerate Gaussian measure \(\nu \in \mathcal{P}(\Xi )\),

$$\begin{aligned} S^t_\nu \!=\!\log \frac{\mathrm {d}\mathbb {P}_\nu ^t}{\mathrm {d}\widetilde{\mathbb {P}}_\nu ^t} \!=\!\log \frac{\mathrm {d}\mathbb {P}_\mu ^t}{\mathrm {d}\widetilde{\mathbb {P}}_\mu ^t} +\log \frac{\mathrm {d}\mathbb {P}_\nu ^t}{\mathrm {d}\mathbb {P}_\mu ^t} -\log \frac{\mathrm {d}\widetilde{\mathbb {P}}_\nu ^t}{\mathrm {d}\widetilde{\mathbb {P}}_\mu ^t} =S^t-\log \frac{\mathrm {d}\nu }{\mathrm {d}\mu }(\theta x(t))+\log \frac{\mathrm {d}\nu }{\mathrm {d}\mu }(x(0)). \end{aligned}$$

To formulate our general result, we need some facts about the matrix equation (3.53).

Define a map \(\mathcal{R}_\alpha :L(\Xi )\rightarrow L(\Xi )\) by

where \(A_\alpha \), B and \(C_\alpha \) are defined by (3.12) and (3.42). The equation \(\mathcal{R}_\alpha (X)=0\) is an algebraic Riccati equation for the unknown self-adjoint \(X\in L(\Xi )\). We refer the reader to the monographs [2, 46] for an in-depth discussion of such equations.

A solution X of the Riccati equation is called minimal (maximal) if it is such that \(X\le X'\) (\(X\ge X'\)) for any other solution \(X'\) of the equation. We shall investigate the Riccati equation in Sect. 5.6. At this point we just mention that, under Assumption (C), it has a unique maximal solution \(X_\alpha \) for any \(\alpha \in {\overline{\mathfrak {I}}}_c\), with the special values

Proposition 3.22

Suppose that Assumption (C) is satisfied and let \(\nu \) be the Gaussian measure on \(\Xi \) with mean a and covariance \(N\ge 0\). Denote by \(P_\nu \) the orthogonal projection on \(\mathrm{Ran}\,N\) and by \(\widehat{N}\) the inverse of the restriction of N to its range. Let \(F,G\in L(\Xi )\) be self-adjoint and define \(\Phi \), \(\Psi \) by (3.55).

-

(1)

For \(t>0\) the function

$$\begin{aligned} {\mathbb {R}}\ni \alpha \mapsto g_t(\alpha )= \frac{1}{t}\log \mathbb {E}_\nu \left[ \mathrm {e}^{-\alpha (S^t+\Phi (x(t))-\Psi (x(0)))}\right] \end{aligned}$$

is convex. It is finite and real analytic on some open interval \(\mathfrak {I}_t=]\alpha _-(t),\alpha _+(t)[\ni 0\) and infinite on its complement. Moreover, the following alternatives hold:

-

Either \(\alpha _-(t)=-\infty \) or \(\lim _{\alpha \downarrow \alpha _-(t)}g_t'(\alpha )=-\infty \).

-

Either \(\alpha _+(t)=+\infty \) or \(\lim _{\alpha \uparrow \alpha _+(t)}g_t'(\alpha )=+\infty \).

-

-

(2)

Set

$$\begin{aligned} \mathfrak {I}_+&=\{\alpha \in {\overline{\mathfrak {I}}}_c\,|\, \theta X_{1-\alpha }\theta +\alpha (X_1+F)>0\},\\ \mathfrak {I}_-&=\{\alpha \in {\overline{\mathfrak {I}}}_c\,|\, \widehat{N}+P_\nu (X_\alpha -\alpha (G+\theta X_1\theta ))|_{\mathrm{Ran}\,N}>0\}, \end{aligned}$$

with the proviso that \(\mathfrak {I}_-={\overline{\mathfrak {I}}}_c\) whenever \(N=0\). Then \(\mathfrak {I}_\infty =\mathfrak {I}_-\cap \mathfrak {I}_+\) is a (relatively) open subinterval of \({\overline{\mathfrak {I}}}_c\) containing 0.

-

(3)

If \(X_1+F>0\) and either \(N=0\) or \(\widehat{N}+P_\nu (X_1-\theta X_1\theta -G)|_{\mathrm{Ran}\,N}>0\), then \([0,1]\subset \mathfrak {I}_\infty \).

-

(4)

For \(\alpha \in \mathfrak {I}_\infty \) one has

$$\begin{aligned} \lim _{t\rightarrow \infty }g_t(\alpha )=e(\alpha ). \end{aligned}$$(3.60)

-

(5)

Set \(\alpha _-=\inf \mathfrak {I}_\infty <0\) and \(\alpha _+=\sup \mathfrak {I}_\infty >0\). Then,

$$\begin{aligned} \lim _{t\rightarrow \infty }\alpha _\pm (t)=\alpha _\pm , \end{aligned}$$(3.61)

and for any \(\alpha \in {\mathbb {R}}\setminus [\alpha _-,\alpha _+]\),

$$\begin{aligned} \lim _{t\rightarrow \infty }g_t(\alpha )=+\infty . \end{aligned}$$(3.62)

Remark 3.23

The existence and value of the limit (3.60) for \(\alpha \in \partial \mathfrak {I}_\infty \) is a delicate problem whose resolution requires additional information on the two subspaces

at the points \(\alpha \in \partial \mathfrak {I}_\infty \). Since, as we shall see in the next section, this question is irrelevant for the large deviations properties of the functional \(S^t+\Phi (x(t))-\Psi (x(0))\), we shall not discuss it further.

Remark 3.24

We shall see in Sect. 5.6 that the maximal solution \(X_\alpha \) of the Riccati equation is linked to the function \(e(\alpha )\) through the identity \(e(\alpha )=\lambda _\alpha \), where \(\lambda _\alpha \) is given by Eq. (3.54). Thus, the large time behavior of the function \(\alpha \mapsto g_t(\alpha )\) is completely characterized by the maximal solution \(X_\alpha \) through this formula and the two numbers \(\alpha _\pm \). Riccati equations play an important role in various areas of engineering mathematics, e.g., control and filtering theory. For these reasons, very efficient algorithms are available to numerically compute their maximal/minimal solutions. Hence, our approach is well designed for numerical investigation of concrete models.
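As an illustration of the numerical route mentioned in Remark 3.24, the sketch below computes a candidate for the maximal solution \(X_\alpha \) from the spectral subspace of \(K_\alpha \) associated with eigenvalues of positive real part. Several ingredients are assumptions rather than statements of this section: the form \(\mathcal{R}_\alpha (X)=XBX-XA_\alpha -A_\alpha ^*X-C_\alpha \) of the Riccati map, the drift structure \(A=\Omega -\frac{1}{2}Q\vartheta ^{-1}Q^*\), and the hypothetical two-oscillator model. The invariant subspace method is a standard textbook technique and is not claimed to be the one used in Sect. 5.6.

```python
import numpy as np

def toy_network(temps, g=1.0):
    """Hypothetical 2-oscillator model on R^4, coordinates (p1, p2, q1, q2)."""
    K = np.array([[1.0, 0.5], [0.0, 1.0]])    # illustrative coupling block
    Z = np.zeros((2, 2))
    Omega = np.block([[Z, -K], [K.T, Z]])
    Q = g * np.vstack([np.eye(2), Z])
    vartheta = np.diag(np.asarray(temps, dtype=float))
    return Omega, Q, vartheta

def maximal_riccati_solution(alpha, Omega, Q, vartheta):
    """Return (X, residual norm) for the ASSUMED Riccati map R_alpha."""
    ti = np.linalg.inv(vartheta)
    A = Omega - 0.5 * Q @ ti @ Q.T             # ASSUMED drift structure
    B = Q @ Q.T
    A_al = (1.0 - alpha) * A - alpha * A.T
    C_al = alpha * (1.0 - alpha) * Q @ ti @ ti @ Q.T
    K_al = np.block([[-A_al, B], [C_al, A_al.T]])
    lam, V = np.linalg.eig(K_al)
    n = A.shape[0]
    sel = lam.real > 0                         # n eigenvalues expected here
    U, W = V[:n, sel], V[n:, sel]              # subspace written as graph of X
    X = np.real(W @ np.linalg.inv(U))
    X = 0.5 * (X + X.T)
    residual = X @ B @ X - X @ A_al - A_al.T @ X - C_al
    return X, np.linalg.norm(residual)

Omega, Q, vartheta = toy_network((1.0, 3.0))
X, res = maximal_riccati_solution(0.3, Omega, Q, vartheta)
print("residual:", res)
print("spectrum of X:", np.linalg.eigvalsh(X))
```

In practice one would rather rely on a dedicated Riccati solver based on an ordered Schur decomposition, which is numerically more robust than a plain eigenvector computation when \(\alpha \) approaches \(\partial \mathfrak {I}_c\).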

Steady State Dissipated TDE. According to Eqs. (3.56) and (3.59), the case of TDE dissipation in the stationary process corresponds to the choice

and it follows directly from Proposition 5.5(2) and (4) below that

Setting \(\alpha _-=\inf \{\alpha \in {\overline{\mathfrak {I}}}_c\,|\,X_\alpha +\theta X_1\theta >0\}\), we have either \(\alpha _-\in ]\tfrac{1}{2}-\kappa _c,0[\) and

or \(\alpha _-=\tfrac{1}{2}-\kappa _c\) and

Suppose that \(\tfrac{1}{2}-\kappa _c\le -1\) and let \(\alpha \in [\tfrac{1}{2}-\kappa _c,-1]\). From Proposition 5.5(10) we deduce that \(X_\alpha \le \alpha X_1\). Since \(X_1=\theta M^{-1}\theta >0\), it follows that

Observe that the right-hand side of this inequality is odd under conjugation by \(\theta \). Moreover, Proposition 3.7(1) implies that it vanishes iff \(\mathrm{ep}=0\). It follows that \(\mathrm{sp}(X_\alpha +\theta X_1\theta )\cap ]-\infty ,0]\not =\emptyset \). Thus, we can conclude that one always has \(\alpha _+=1\) and \(\alpha _-\ge -1\), with strict inequality whenever \(\mathrm{ep}>0\).

By Proposition 3.22,

An explicit evaluation of the resulting Gaussian integral further shows that

The Gallavotti–Cohen symmetry is broken in the sense that it fails outside the interval \(]0,1[\); in particular, \(e_\mathrm{TDE,st}(0)=e(0)=0<e_\mathrm{TDE,st}(1)\). Note also that

i.e., the limiting cumulant generating function for TDE dissipation rate in the stationary process is neither lower semicontinuous nor upper semicontinuous.

Remark 3.25

We shall see in Sect. 5.6 (see Remark 5.6) that in the case of thermal equilibrium, i.e., \(\vartheta =\vartheta _0I\) for some \(\vartheta _0\in ]0,\infty [\), one has \(X_\alpha =\alpha \vartheta _0I\) and hence \(X_{-1}+\theta X_1\theta =0\). Thus, in this case, \(\alpha _-=-1\) and since \(e(\alpha )\) vanishes identically by Proposition 3.13(3),

Remark 3.26

According to Eq. (3.57), for quasi-Markovian networks the steady-state TDE dissipation corresponds to

Since \(\theta \pi _Q=\pm \pi _Q=\pi _Q\theta \), one has

provided \(\partial \Xi \not =\Xi \). The inequality (3.63) yields

for \(\tfrac{1}{2}-\kappa _c\le \alpha \le -1\). From the Lyapunov equation (5.4) one easily deduces that

iff \(\theta M\theta =M\) so that the above argument still applies and (3.64) holds with \(\mathfrak {S}^t\) replaced by \(\mathfrak {S}_\mathrm{qM}^t\) and \(\alpha _-\ge -1\) with strict inequality whenever \(\mathrm{ep}>0\).

Transient Dissipated TDE. Consider now the functional \(\mathfrak {S}^t\) for the process started with the Dirac measure \(\nu =\delta _{x_0}\) for some \(x_0\in \Xi \). This corresponds to

and in this case

and hence \(\mathfrak {I}_\infty =[\tfrac{1}{2}-\kappa _c,1[\). Proposition 3.22 yields a cumulant generating function

which does not depend on the initial condition \(x_0\).

Remark 3.27

For quasi-Markovian networks it may happen that \(\mathfrak {I}_\infty =]\alpha _-,1[\) with \(\alpha _->\tfrac{1}{2}-\kappa _c\). For later reference, let us consider the case \(\kappa _c=\kappa _0\) (see footnote 2 and recall Remark 3.14). We deduce from Proposition 5.5(12) that

for \(\alpha \in [\tfrac{1}{2}-\kappa _0,0]\). Thus, in this case we have \(\mathfrak {I}_\infty =[\tfrac{1}{2}-\kappa _c,1[\) as in the Markovian case.

Steady State Entropy Production Rate. Motivated by [52], where the functional \(\mathrm{Ep}(\mu ,t)\) plays a central role, we shall also investigate the large time asymptotics of its cumulant generating function

in the stationary process. We observe that this function coincides with a Rényi relative entropy, namely

so that the symmetry (3.15) yields

The large time behavior of \(e_{\mathrm{ep},t}(\alpha )\) follows from Proposition 3.22 with the choice

Thus,

and since we can write \(X_\alpha +(1-\alpha )\theta X_1\theta =\theta (Y_{1-\alpha }+W_{1-\alpha })\theta \) with \(Y_{1-\alpha }=X_{1-\alpha }+\theta X_\alpha \theta \) and \(W_{1-\alpha }=(1-\alpha )X_1-X_{1-\alpha }\), it follows from Proposition 5.5(10) that

In particular the limit

coincides with \(e(\alpha )\) for all \(\alpha \in {\mathbb {R}}\) iff the following condition holds:

Condition (R) \(X_{1-\alpha }+\alpha X_1>0\) for all \(\alpha \in {\overline{\mathfrak {I}}}_c\).

This condition involves maximal solutions of two algebraic Riccati equations. Except in some special cases [see Proposition 5.5(12)], its validity is not ensured by general principles (the known comparison theorems for Riccati equations do not apply) and we shall leave it as an open question. We will come back to it in Sect. 4 in the context of concrete examples.

Transient Canonical Entropic Functional. Assuming for simplicity that the covariance \(N\) of the initial condition \(\nu \in \mathcal{P}(\Xi )\) is positive definite, Proposition 3.22 applies to the cumulant generating function of \(S_\nu ^t\) with

It follows that

so that \(\alpha _-=1-\alpha _+=\tfrac{1}{2}-\kappa _\nu \) for some \(\kappa _\nu >\tfrac{1}{2}\) and

Note that by the construction of \(S_\nu ^t\) the Gallavotti–Cohen symmetry holds for all times. One has \(\kappa _\nu =\kappa _c\) and hence \(e_\nu (\alpha )=e(\alpha )\) for all \(\alpha \in {\mathbb {R}}\), provided

3.7 Extended Fluctuation Relations

We finally deal with the fourth and last step of our scheme: we derive an LDP for the entropic functionals considered in the previous section and illustrate its use in obtaining extended fluctuation relations for various physical quantities of interest. We start with a complement to the discussion of Sect. 3.4.

In most cases relevant to entropic functionals of harmonic networks, the generating function \(\Lambda \) is real analytic and strictly convex on a finite interval \(D_0=]\alpha _-,\alpha _+[\), is infinite on \({\mathbb {R}}\setminus [\alpha _-,\alpha _+]\), and the interval \(E=]x_-,x_+[\) is finite. In such cases \(\Lambda _\pm \) are both finite and (3.47) implies that the Legendre transform of \(\Lambda \) is given by

where \(\ell :E\rightarrow D_0\) is the inverse function of \(\Lambda '\). Thus, \(\Lambda ^*\) is real analytic on \(E\), affine on each connected component of \({\mathbb {R}}\setminus E\) and \(C^1\) on \({\mathbb {R}}\). The Gärtner–Ellis theorem only provides a local LDP on \(E\) for which the affine branches of \(\Lambda ^*\) are irrelevant. However, exploiting the Gaussian nature of the underlying measure \(\mathbb {P}\), it is sometimes possible to extend this local LDP to a global one, with the rate function \(\Lambda ^*\). Inspired by the earlier work of Bryc and Dembo [4], we have recently obtained such an extension for entropic functionals of a large class of Gaussian dynamical systems [41]. The next result is an adaptation of the arguments in [4, 41] and applies to the functional

under the law \(\mathbb {P}_\nu \), with the hypothesis and notations of Proposition 3.22. We set (recall (3.40))

Theorem 3.28

-

(1)

If Assumption (C) holds then, under the law \(\mathbb {P}_\nu \), the family \((\xi _t)_{t\ge 0}\) satisfies a global LDP with the rate function

$$\begin{aligned} J(s)=\left\{ \begin{array}{ll} I(\eta _-)-(s-\eta _-)\alpha _+= -s\alpha _+-e(\alpha _+)&{}\quad \text{ for } s\le \eta _-;\\ I(s)&{} \quad \text{ for } s\in ]\eta _-,\eta _+[;\\ I(\eta _+)-(s-\eta _+)\alpha _-= -s\alpha _--e(\alpha _-)&{}\quad \text{ for } s\ge \eta _+; \end{array} \right. \end{aligned}$$(3.66)

where \(I(s)\) is given by (3.49). In particular, if \(\mathrm{ep}>0\), then it follows from the strict convexity of \(I(s)\) that

$$\begin{aligned} J(-s)-J(s)<I(-s)-I(s)=s, \end{aligned}$$

for \(s>\max (-\eta _-,\eta _+)\).

-

(2)

Under the same assumptions, the family \((\xi _t)_{t\ge 0}\) satisfies the Central Limit Theorem: For any Borel set \(\mathcal{E}\subset {\mathbb {R}}\),

$$\begin{aligned} \lim _{t\rightarrow \infty }\mathbb {P}_\nu \left[ \frac{\xi _t-\mathbb {E}_\nu [\xi _t]}{\sqrt{ta}}\in \mathcal{E}\right] ={\mathrm n}_1(\mathcal{E}), \end{aligned}$$

where \(a=e''(0)\) and \(\mathrm {n}_1\) denotes the centered Gaussian measure on \({\mathbb {R}}\) with variance 1.

If \(\mathfrak {I}_\infty ={\overline{\mathfrak {I}}}_c\), then we are in the same situation as in Sect. 3.4 and \(\xi _t\) has the same large fluctuations as the canonical entropic functional \(S^t\). In particular, it also satisfies the Gallavotti–Cohen fluctuation theorem. However, in the more likely event that \(\mathfrak {I}_\infty \) is strictly smaller than \({\overline{\mathfrak {I}}}_c\), the function \(g(\alpha )=\limsup _{t\rightarrow \infty }g_t(\alpha )\) coincides with \(e(\alpha )\) only on \(]\alpha _-,\alpha _+[\) and the rate function \(J(s)\) differs from \(I(s)\) outside the closure of the interval \(]\eta _-,\eta _+[\) (see Fig. 5). Unless \(\alpha _-=1-\alpha _+\) (in which case \(\eta _-=-\eta _+\) and \(J(-s)-J(s)=s\) for all \(s\in {\mathbb {R}}\)), the Gallavotti–Cohen symmetry is broken and the universal fluctuation relation (3.50) fails. The symmetry function \(\mathfrak {s}(s)=J(-s)-J(s)\) then satisfies an “extended fluctuation relation”.

Fig. 5 The cumulant generating function \(g(\alpha )=\limsup _{t\rightarrow \infty }g_t(\alpha )\) and the rate function \(J(s)\) for the functionals \((\xi _t)_{t\ge 0}\) of Theorem 3.28
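The mechanism behind Theorem 3.28(1) can be tested on a toy model. The sketch below assumes the quadratic function \(e(\alpha )=\mathrm{ep}\,\alpha (\alpha -1)\), which satisfies \(e(1-\alpha )=e(\alpha )\) and has the rate function \(I(s)=(s-\mathrm{ep})^2/(4\,\mathrm{ep})\), and cuts it off by hand to the interval \([\alpha _-,\alpha _+]=[-\tfrac{1}{2},1]\). These choices, the convention \(I(s)=\sup _\alpha (-\alpha s-e(\alpha ))\) suggested by (3.66), and all function names are illustrative assumptions; they do not come from a harmonic network. The code compares the closed form (3.66) with a brute-force Legendre transform over the cut-off interval and evaluates the symmetry function \(\mathfrak {s}(s)=J(-s)-J(s)\).

```python
EP = 1.0                              # toy entropy production rate (assumption)
ALPHA_MINUS, ALPHA_PLUS = -0.5, 1.0   # toy cut-off interval (assumption)


def e(alpha):
    """Toy limiting cumulant generating function, symmetric under alpha -> 1 - alpha."""
    return EP * alpha * (alpha - 1.0)


def e_prime(alpha):
    return EP * (2.0 * alpha - 1.0)


def rate_I(s):
    """Legendre transform sup_alpha(-alpha*s - e(alpha)) over the whole real line."""
    return (s - EP) ** 2 / (4.0 * EP)


ETA_MINUS = -e_prime(ALPHA_PLUS)    # lower end of the interval where J = I
ETA_PLUS = -e_prime(ALPHA_MINUS)    # upper end of the interval where J = I


def rate_J(s):
    """Rate function with affine branches, following the pattern of (3.66)."""
    if s <= ETA_MINUS:
        return -s * ALPHA_PLUS - e(ALPHA_PLUS)
    if s >= ETA_PLUS:
        return -s * ALPHA_MINUS - e(ALPHA_MINUS)
    return rate_I(s)


def legendre_on_interval(s, n=20001):
    """Brute-force sup of -alpha*s - e(alpha) over a grid on [ALPHA_MINUS, ALPHA_PLUS]."""
    best = float("-inf")
    for k in range(n):
        alpha = ALPHA_MINUS + (ALPHA_PLUS - ALPHA_MINUS) * k / (n - 1)
        best = max(best, -alpha * s - e(alpha))
    return best


def symmetry(s):
    """Symmetry function J(-s) - J(s)."""
    return rate_J(-s) - rate_J(s)
```

In this toy model `symmetry(s)` equals `s` for small `|s|` and falls strictly below `s` once `s` exceeds `max(-ETA_MINUS, ETA_PLUS)`, which is the qualitative behavior stated in the theorem.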

Combining Theorem 3.28 with the results of Sect. 3.6 we obtain global LDPs for steady state and transient dissipated TDE. Let us discuss their features in more detail.

Steady State Dissipated TDE. Assuming \(\mathrm{ep}>0\), we have \(-1<\alpha _{\mathrm {TDE,st}-}<0\) and \(\alpha _{\mathrm {TDE,st}+}=1\), hence \(\eta _{\mathrm {TDE,st}-}=-e'(1)=-\mathrm{ep}\) and \(\eta _{\mathrm {TDE,st}+}=-e'(\alpha _{\mathrm {TDE,st}-})>\mathrm{ep}\). In this case, the symmetry function is

and in particular \(\mathfrak {s}_\mathrm{TDE,st}(s)<s\) for \(s>\mathrm{ep}\). The slope of the affine branch of \(\mathfrak {s}_\mathrm{TDE,st}\) satisfies

so that \(s\mapsto \mathfrak {s}_\mathrm{TDE,st}(s)\) is strictly increasing.

In the equilibrium case (\(\vartheta _\mathrm{min}=\vartheta _\mathrm{max}\)) one has \(\alpha _{\mathrm {TDE,st}\mp }=\mp 1\) and \(e(\alpha )\) vanishes identically. Hence the rate function for steady state dissipated TDE is the universal function

and \(\mathfrak {s}_\mathrm{TDE,st}(s)=0\) for all \(s\in {\mathbb {R}}\).
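As a consistency check, one can substitute the equilibrium data into (3.66). This is only a sketch: it assumes that Theorem 3.28(1) applies with an empty open interval \(]\eta _-,\eta _+[\) and reads \(\eta _\mp =-e'(\alpha _\pm )\) off the formulas used above for steady state dissipated TDE. Since \(e\equiv 0\), one gets \(\eta _-=\eta _+=0\), and with \(\alpha _\mp =\mp 1\),

$$\begin{aligned} J(s)=\left\{ \begin{array}{ll} -s\alpha _+-e(\alpha _+)=-s&{}\quad \text{ for } s\le 0;\\ -s\alpha _--e(\alpha _-)=s&{}\quad \text{ for } s\ge 0, \end{array} \right. \end{aligned}$$

that is, \(J(s)=|s|\). This function is even, which is consistent with the vanishing of the symmetry function \(J(-s)-J(s)\).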

Transient Dissipated TDE. Assuming again \(\mathrm{ep}>0\), we have \(\alpha _{\mathrm {TDE,tr}-}=\frac{1}{2}-\kappa _c\) and \(\alpha _{\mathrm {TDE,tr}+}=1\), so that \(\eta _{\mathrm {TDE,tr}-}=-e'(1)=-\mathrm{ep}\) and \(\eta _{\mathrm {TDE,tr}+}=-e'(\tfrac{1}{2}-\kappa _c)=+\infty \). The symmetry function reads

which coincides with the symmetry function \(\mathfrak {s}_\mathrm{TDE,st}(s)\) of the steady state dissipated TDE for \(0\le s\le \eta _{\mathrm {TDE,st}+}\). However, the strict concavity of the function \(s-I(s)\) implies

for all \(s>\eta _{\mathrm {TDE,st}+}\). By Remark 3.21,

iff \(s=-e'(-1)>-e'(0)=\mathrm{ep}\). Thus, whenever \(\tfrac{1}{2}-\kappa _c<-1\) (see footnote 3), the function \([0,\infty [\ni s\mapsto \mathfrak {s}_\mathrm{TDE,tr}(s)\) has a unique maximum at \(s=-e'(-1)\), and the concavity of \(s-I(s)\) implies that \(\mathfrak {s}_\mathrm{TDE,tr}\) becomes negative for large enough \(s\). In the opposite case where \(\tfrac{1}{2}-\kappa _c>-1\), the symmetry function \(\mathfrak {s}_\mathrm{TDE,tr}\) is strictly monotone increasing (see Fig. 7 in Sect. 4.1 for an explicit example of this somewhat surprising fact).
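The role of the function \(s-I(s)\) in this discussion can be traced back to (3.66). The following is a sketch, assuming that (3.49) defines \(I(s)=\sup _\alpha (-\alpha s-e(\alpha ))\), as the affine branches of (3.66) suggest. With \(\alpha _+=1\), \(e(1)=e(0)=0\), \(\eta _-=-\mathrm{ep}\) and \(\eta _+=+\infty \), one has \(J(-s)=s\) for \(s\ge \mathrm{ep}\) and \(J(s)=I(s)\) for all \(s>-\mathrm{ep}\), so that

$$\begin{aligned} J(-s)-J(s)=\left\{ \begin{array}{ll} I(-s)-I(s)=s&{}\quad \text{ for } 0\le s\le \mathrm{ep};\\ s-I(s)&{}\quad \text{ for } s\ge \mathrm{ep}. \end{array} \right. \end{aligned}$$

Under the same convention, \(I'(s)=-\alpha (s)\), where \(\alpha (s)\) solves \(e'(\alpha )=-s\). Hence

$$\begin{aligned} \frac{\mathrm {d}}{\mathrm {d}s}\bigl (s-I(s)\bigr )=1+\alpha (s) \end{aligned}$$

vanishes iff \(\alpha (s)=-1\), i.e., iff \(s=-e'(-1)\), which requires \(-1\) to lie in the interior of the domain of \(e\).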

4 Examples

In this section we turn back to harmonic networks in the setup of Sect. 2. We denote by \(\{\delta _i\}_{i\in \mathcal{I}}\) the canonical basis of the configuration space \({\mathbb {R}}^\mathcal{I}\).

We start with two general facts which reduce the phase space controllability condition (C) and the non-vanishing of \(\mathrm{ep}\) to configuration space controllability (see Sect. 5.10 for a proof).

Lemma 4.1

-

(1)

If \(\mathrm{Ker}\,\omega =\{0\}\), then (A, Q) is controllable iff \((\omega ^*\omega ,\iota )\) is controllable.

-

(2)

Denote by \(\pi _i\), \(i\in \partial \mathcal{I}\), the orthogonal projection on \(\mathrm{Ker}\,(\vartheta -\vartheta _i)\). Let \(\mathcal{C}_i=\mathcal{C}(\omega ^*\omega ,\iota \pi _i)\). If there exist \(i,j\in \partial \mathcal{I}\) such that \(\vartheta _i\not =\vartheta _j\) and \(\mathcal{C}_i\cap \mathcal{C}_j\not =\{0\}\), then \(\mathrm {ep}(\mu )>0\).
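Configuration space controllability of a pair such as \((\omega ^*\omega ,\iota )\) can be checked mechanically with the Kalman rank criterion: a pair \((K,B)\) with \(K\) of size \(n\times n\) is controllable iff the block matrix \([B,KB,\ldots ,K^{n-1}B]\) has rank \(n\). The sketch below is a minimal exact-arithmetic implementation. The matrix `K` (a chain of three oscillators standing in for \(\omega ^*\omega \)), the two choices of `B` (standing in for \(\iota \)) and all function names are hypothetical illustrations, not the triangular network of Sect. 4.1.

```python
from fractions import Fraction


def mat_mul(a, b):
    """Product of two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def rank(rows):
    """Rank by Gaussian elimination over the rationals (exact, no round-off)."""
    m = [[Fraction(x) for x in row] for row in rows]
    n_rows, n_cols = len(m), len(m[0])
    r = 0
    for c in range(n_cols):
        pivot = next((i for i in range(r, n_rows) if m[i][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(r + 1, n_rows):
            f = m[i][c] / m[r][c]
            m[i] = [x - f * y for x, y in zip(m[i], m[r])]
        r += 1
        if r == n_rows:
            break
    return r


def is_controllable(k, b):
    """Kalman criterion: rank [B, KB, ..., K^(n-1) B] == n."""
    n = len(k)
    blocks = [b]
    for _ in range(n - 1):
        blocks.append(mat_mul(k, blocks[-1]))
    # Concatenate the blocks horizontally, row by row.
    kalman = [sum((blk[i] for blk in blocks), []) for i in range(n)]
    return rank(kalman) == n


# Toy chain of three oscillators with nearest-neighbour coupling (assumption).
K = [[2, -1, 0], [-1, 2, -1], [0, -1, 2]]
end_site = [[1], [0], [0]]      # reservoir attached to the first oscillator
middle_site = [[0], [1], [0]]   # reservoir attached to the middle oscillator
```

Driving the chain from one end yields a controllable pair, whereas driving only the middle site does not, because the antisymmetric normal mode has no component on that site.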

4.1 A Triangular Network

Consider the triangular network of Fig. 6 where \(\mathcal{I}={\mathbb {Z}}_6\) and \(\partial \mathcal{I}={\mathbb {Z}}_6\setminus 2{\mathbb {Z}}_6\) (index arithmetic is modulo 6). The potential

is positive definite provided \(|a|<\frac{1}{2}\) and \(2a^2-\frac{1}{2}<b<1-4a^2\). One easily checks that \(a\not =0\) implies \(\mathrm{Ran}\,\iota \vee \mathrm{Ran}\,\omega ^2\iota ={\mathbb {R}}^\mathcal{I}\). Thus Assumption (C) is verified under these conditions. Noting that \(\delta _2\in \mathcal{C}_1\cap \mathcal{C}_3\), we conclude that \(\mathrm{ep}>0\) if \(\vartheta _1\not =\vartheta _3\). By symmetry, \(\mathrm{ep}>0\) iff

We shall fix the parameters of the model to the following values

the “relative temperatures” being parametrized by