Abstract

We investigate large deviations of the free energy in the O’Connell–Yor polymer through a variational representation of the positive real moment Lyapunov exponents of the associated parabolic Anderson model. Our methods yield an exact formula for all real moment Lyapunov exponents of the parabolic Anderson model and a dual representation of the large deviation rate function with normalization \(n\) for the free energy.

1 Introduction

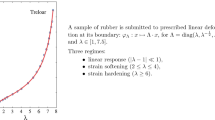

This paper studies the model of a 1 + 1 dimensional semi-discrete directed polymer in a random environment due to O’Connell and Yor [25]. Directed polymers in random environments were introduced in the statistical physics literature in [17], with mathematical work following in [5, 18]. A physically motivated mathematical introduction to this family of models can be found in the survey article [9]. These models have attracted substantial attention recently, in part due to the conjecture that under suitable regularity assumptions they lie in the KPZ universality class. For a discussion of this conjecture and further references, we refer the reader to [10].

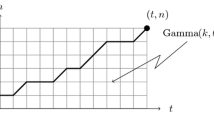

Our study will focus on the point-to-point polymer partition function of this model, which [25] defines for \(n \in \mathbb {N}\) and \(\beta > 0\) as

where \(\{B_i\}_{i=1}^\infty \) is a family of i.i.d. standard Brownian motions. This partition function is the normalizing constant for a quenched polymer measure on non-decreasing càdlàg paths \(f:\mathbb {R}_+ \rightarrow \mathbb {N}\) with \(f(0) = 1\) and \(f(n) = n\). Up to a constant factor, \(Z_n(\beta )\) is the conditional expectation of a functional of a Poisson path on the event that the path is at \(n\) at time \(n\). More precisely, let \(\pi (\cdot )\) be a unit rate Poisson process on \(\mathbb {R}_+\) which is independent of the family \(\{B_i\}_{i=1}^\infty \) and denote by \(\mathcal {E}\) the expectation with respect to the law of this Poisson process. With the notation

we have

The prefactor of \(|A_{n,n}|^{-1}\) accounts for the fact that the ordered jump points of \(\pi \) on \([0,n]\) conditioned on \(\pi (n) = n\) are uniformly distributed on the Weyl chamber \(A_{n,n}\).

The O’Connell–Yor polymer model was originally introduced in [25] in connection with a generalization of the Brownian queueing model. Based on the work of Matsumoto and Yor [21], O’Connell and Yor were able to show the existence of a stationary version of this model satisfying an analogue of Burke’s theorem for M/M/1 queues. The Burke property makes the O’Connell–Yor polymer one of the four polymer models considered exactly solvable, the others being the continuum directed polymer studied in [1], the log-gamma polymer introduced by Seppäläinen in [28], and the strict-weak gamma polymer studied in [11] and [24]. A precise statement of the Burke property for this model and an outline of how this property leads to the main result of this paper is given in Sect. 2.2. Subsequent work on the representation theoretic underpinnings of the exact solvability of these models can be found in the work of Borodin and Corwin on Macdonald processes [6] and the work of O’Connell connecting this model to the quantum Toda lattice [23].

As one of the few tractable models in the KPZ universality class, this polymer model has been extensively studied: Moriarty and O’Connell [22] rigorously computed the free energy; Seppäläinen and Valkó [29] identified the scaling exponents; Borodin, Corwin, and Ferrari [8] showed that the model lies in the KPZ universality class by proving the Tracy–Widom limit for the free energy fluctuations; and Borodin and Corwin [7] proved a contour integral representation for the integer moments and computed their large \(n\) asymptotics.

The main results of this paper are Theorems 2.2 and 2.3, which compute the moment Lyapunov exponents and large deviation rate function with normalization \(n\) for the free energy respectively. Theorem 2.2 can be thought of as an extension of the asymptotics studied in [7]. There, the authors use a contour integral representation for the integer moments of \(Z_n(\beta )\) to compute the limit

for any \(k \in \mathbb {N}\). In [7, Appendix A.1], as part of a replica computation of the free energy, they conjecture an analytic continuation of their formula to \(k >0\). We are able to compute the above limit for all \(k \in \mathbb {R}\), confirming this conjecture.

The proofs of Theorems 2.2 and 2.3 follow an approach introduced by Seppäläinen in [27]. Georgiou and Seppäläinen used this method to compute the large deviation rate function with normalization \(n\) for the free energy in the log-gamma polymer in [14]. The key technical condition making this scheme tractable is the independence provided by the Burke property, which the log-gamma polymer shares with the O’Connell–Yor polymer. It is therefore natural to expect that the techniques of [14] should also apply in this setting; we take this as our starting point.

Physically, we can view the parameter \(\beta \) as an inverse temperature. In this sense, we can think of directed polymer models as positive temperature analogues of directed percolation. This leads to a natural coupling between the directed polymer and directed percolation models, which we take advantage of throughout the paper. The directed percolation model associated to the O’Connell–Yor polymer is Brownian directed percolation; see [16, 25] for a discussion of this model. A distributional equivalence between the last passage time in Brownian directed percolation and the largest eigenvalue of a standard GUE matrix was discovered independently by Baryshnikov [2, Theorem 0.7] and Gravner, Tracy, and Widom [15], both in 2001. The known large deviations [20] for top eigenvalues of GUE matrices give useful estimates in several of the proofs that follow. The precise results we use are collected in the ‘Bounds from the GUE connection’ section of the appendix.

This polymer and the log-gamma polymer are the only positive temperature polymer models for which precise large deviations have been studied. Precise left tail large deviations, which have non-universal scalings [3], remain open for both models. The lattice Gaussian directed percolation and polymer models in one spatial dimension have left tail large deviations with normalization \(\frac{n^2}{\log (n)}\) [12, Theorem 1.2], [3, p. 774], while the Brownian directed percolation model has left tail large deviations with normalization \(n^2\) [20, (1.26)]. It is natural to expect that the left tail large deviations for this model should follow those of Brownian directed percolation rather than the Gaussian lattice models, but we do not currently have a proof of this result.

Notation When \(b(\cdot )\) is Brownian motion and \(s \le t\), we adopt the convention \(b(s,t) = b(t) - b(s)\). For \(a,b \in [-\infty ,\infty ]\), we set \(a \vee b = \max (a,b)\) and \(a \wedge b = \min (a,b)\). The polygamma functions are denoted by \(\Psi _k(x)\), where \(\Psi _0(x) = \frac{d}{dx}\log \Gamma (x)\) and \(\Psi _n(x) = \frac{d}{dx} \Psi _{n-1}(x)\).

For \(f,g:\mathbb {R} \rightarrow (-\infty ,\infty ]\), the Legendre–Fenchel transform is defined by \(f^*(\xi ) = \sup _{x \in \mathbb {R}}\{x \xi - f(x)\}\) and the infimal convolution is defined by \(f \square g(x) = \inf _{y \in \mathbb {R}}\{f(x-y) + g(y)\}\). For properties of these operators, we refer the reader to [26].
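The duality between infimal convolution and addition, \((f \square g)^* = f^* + g^*\), is the property of these operators used most heavily in Sect. 3. The following is a minimal numerical sketch of that identity, not part of the original argument: it approximates both operators by brute force on a uniform grid. The test functions `f` and `g`, the grid, and the helper names are illustrative assumptions.

```python
def legendre(f, xi, grid):
    """Approximate f*(xi) = sup_x {x*xi - f(x)} by a maximum over grid points."""
    return max(x * xi - f(x) for x in grid)


def inf_conv(f, g, grid):
    """Return x -> inf_y {f(x - y) + g(y)}, with the infimum taken over grid points."""
    return lambda x: min(f(x - y) + g(y) for y in grid)


# Hypothetical test functions: two convex quadratics with known transforms.
f = lambda x: 0.5 * x * x   # f*(xi) = xi^2 / 2
g = lambda x: x * x         # g*(xi) = xi^2 / 4

# Uniform grid on [-5, 5]; wide enough to contain all optimizers used below.
grid = [-5.0 + 0.05 * i for i in range(201)]

xi = 1.0
lhs = legendre(inf_conv(f, g, grid), xi, grid)        # (f box g)*(xi)
rhs = legendre(f, xi, grid) + legendre(g, xi, grid)   # f*(xi) + g*(xi)
print(lhs, rhs)
```

For these quadratics one can check by hand that \(f \square g(x) = x^2/3\), so both sides equal \(3\xi ^2/4\). The grid approach is only meant for illustration; it is quadratic in the grid size and says nothing about the properness or closedness hypotheses needed in general.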

A random variable has a \(\Gamma (\theta ,1)\) distribution if it has density \(\Gamma (\theta )^{-1}x^{\theta - 1}e^{-x}1_{\{x>0\}}\) with respect to the Lebesgue measure on \(\mathbb {R}\).
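Ratios of Gamma functions such as \(\Gamma (\theta + p)\Gamma (\theta )^{-1}\) recur in the estimates below because they are the power moments of a \(\Gamma (\theta ,1)\) random variable: integrating the density gives \(E[X^{p}] = \Gamma (\theta + p)/\Gamma (\theta )\) for \(p > -\theta \), and the moment is infinite otherwise. The sketch below compares this closed form with a Monte Carlo estimate; the function names, sample size, and seed are illustrative choices.

```python
import math
import random


def gamma_moment(theta, p):
    """E[X^p] for X ~ Gamma(theta, 1); finite exactly when p > -theta."""
    if p <= -theta:
        return math.inf
    return math.exp(math.lgamma(theta + p) - math.lgamma(theta))


def gamma_moment_mc(theta, p, samples=200_000, seed=0):
    """Monte Carlo estimate of E[X^p] using the standard library sampler."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        total += rng.gammavariate(theta, 1.0) ** p
    return total / samples


# Example: theta = 3, p = -1, where the exact value is Gamma(2)/Gamma(3) = 1/2.
exact = gamma_moment(3.0, -1.0)
estimate = gamma_moment_mc(3.0, -1.0)
print(exact, estimate)
```

The restriction \(p > -\theta \) is the reason exponents are confined to a bounded interval such as \((0,\theta )\) whenever a negative power of a Gamma variable appears.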

2 Preliminaries and Statement of Results

2.1 Definition of the Polymer Model and Statement of Results

Let \(\{B_i\}_{i=0}^\infty \) be a family of independent two-sided standard Brownian motions. Define partition functions for \(j,n \in \mathbb {Z}_+\) with \(j < n\) and \(u,t \in (0,\infty )\) with \(u < t\) by

For the case \(j = n\), we define

We will refer to the \(j,n\) variables as space and the \(u,t\) variables as time. Translation invariance of Brownian motion and our assumption that the environment is i.i.d. immediately imply that the distribution of the partition function is shift invariant.

It follows from Brownian scaling that for \(\beta > 0\) and \(n > 1\) we have

For the remainder of the paper, we will only consider partition functions of the form \(Z_{j,n}(u,t)\); results for these partition functions can be translated into results for \(Z_n(\beta )\) using this distributional identity.

Next, we argue that the partition function is supermultiplicative: that is, for \(j, n,m \in \mathbb {Z}_+\), \(v \ge 0\), and \(u,t > 0\),

For notational convenience, we will consider the case \(j= v = 0\). For \(t,u > 0\), we have

For \(m,n \in \mathbb {N}\) and \(t,u > 0\), we have

When \(m < n\) and \(t,u > 0\), we decompose \(\log Z_{m,n}(u,t)\) as follows:

where

is strictly increasing in \(t\) and strictly decreasing in \(u\). It follows that

The free energy for (1) was computed in [22]. We mention that, as in [14, Lemma 4.1], once one knows the existence and continuity of the free energy, a variational problem similar to the one we study for the rate function in this paper can be used to compute the value of the free energy. We have

Lemma 2.1

([22]) Fix \(s,t \in (0,\infty )\). Then the almost sure limit

exists and is given by

The main result of this paper is a computation of the real moment Lyapunov exponents of the parabolic Anderson model associated to (4) and, through an application of the Gärtner-Ellis theorem, the large deviation rate function with normalization \(n\) for the free energy of the polymer. Specifically, we have

Theorem 2.2

Let \(s,t \in (0,\infty )\) and \(\xi \in \mathbb {R}\). Then

and \(\Lambda _{s,t}(\xi )\) is a differentiable function of \(\xi \in \mathbb {R}\).

Theorem 2.3

Fix \(s,t \in (0,\infty )\). The distributions of \(n^{-1}\log Z_{1,\lfloor ns \rfloor }(0,nt)\) satisfy a large deviation principle with normalization \(n\) and convex good rate function

Remark 2.4

The function being minimized for \(\xi > 0\) in Theorem 2.2 is convex and coercive, so the minimum is attained. The details of this fact are worked out in the proof of Corollary 3.11.
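Since the minimum in Theorem 2.2 is attained, the exponents can be evaluated numerically. The following is a minimal sketch under two stated assumptions: that the free energy of Lemma 2.1 has the form \(\rho (s,t) = \min _{\theta > 0}\{t\theta - s\Psi _0(\theta )\}\), and that for \(\xi > 0\) the exponent is \(\Lambda _{s,t}(\xi ) = \min _{\mu > 0}\{t(\frac{\xi ^2}{2} + \xi \mu ) - s \log \frac{\Gamma (\mu + \xi )}{\Gamma (\mu )}\}\), with \(\Lambda _{s,t}(\xi ) = \xi \rho (s,t)\) for \(\xi \le 0\). These forms should be checked against the statements of Lemma 2.1 and Theorem 2.2; the helper names and the search bracket are hypothetical.

```python
import math


def digamma(x):
    """Psi_0(x) for x > 0 via upward recurrence and an asymptotic series."""
    acc = 0.0
    while x < 6.0:
        acc -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    series = inv2 * (1.0 / 12.0 - inv2 * (1.0 / 120.0 - inv2 / 252.0))
    return acc + math.log(x) - 0.5 / x - series


def argmin_unimodal(fun, lo=-20.0, hi=10.0, iters=200):
    """Ternary search for the minimizer of a unimodal fun on (0, inf).

    The search runs over u = log(x) in [lo, hi]; the bracket is an assumption
    and must contain the minimizer.
    """
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if fun(math.exp(m1)) < fun(math.exp(m2)):
            hi = m2
        else:
            lo = m1
    return math.exp(0.5 * (lo + hi))


def free_energy(s, t):
    """Assumed form: rho(s, t) = min over theta > 0 of t*theta - s*Psi_0(theta)."""
    obj = lambda th: t * th - s * digamma(th)
    return obj(argmin_unimodal(obj))


def lyapunov(s, t, xi):
    """Assumed form of the xi-th moment Lyapunov exponent Lambda_{s,t}(xi)."""
    if xi <= 0.0:
        return xi * free_energy(s, t)
    obj = lambda mu: (t * (0.5 * xi * xi + xi * mu)
                      - s * (math.lgamma(mu + xi) - math.lgamma(mu)))
    return obj(argmin_unimodal(obj))


print(free_energy(1.0, 1.0), lyapunov(1.0, 1.0, 1.0))
```

As a sanity check on the assumed formula, for \(\xi = 1\) the objective reduces to \(\frac{t}{2} + t\mu - s\log \mu \), whose minimum \(\frac{t}{2} + s + s\log \frac{t}{s}\) agrees with the first moment computed directly from \(E[e^{B(a,b)}] = e^{(b-a)/2}\) and the volume of the simplex of jump times. Ternary search is used only because the objectives are unimodal; any one-dimensional convex solver would serve.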

2.2 Definition of the Stationary Model and Proof Outline

Let \(B(t)\) be a two-sided Brownian motion independent of the family \(\{B_i\}_{i=0}^\infty \) and for \(\theta >0\), \(t \in \mathbb {R}\) and \(n \in \mathbb {Z}_+\) define point-to-point partition functions by

with the convention that

We can think of \(Z_n^\theta (t)\) as a modification of the polymer in the previous subsection where we add a spatial dimension and start in the infinite past. For \(s,t > 0\) and \(n\) sufficiently large that \(ns\ge 1\), we obtain a decomposition of \(Z_{\lfloor ns \rfloor }^\theta (nt)\) into terms that involve the partition functions we are studying by considering where paths leave the potential of the Brownian motion \(B\):

This expression also leads to the interpretation of \(Z_n^\theta (t)\) as a modification of the point-to-point partition function discussed in the previous subsection where we have added boundary conditions.

We will refer to this model as the stationary polymer, where the term stationary comes from the fact that it satisfies an analogue of Burke’s theorem for M/M/1 queues. This fact is one of the main contributions of [25] and we refer the reader to that paper for a more in-depth discussion of the connections to queueing theory. We follow the notation of [29], which contains the version of the Burke property that will be used in this paper. Define \(Y_0^\theta (t) = B(t)\) and for \(k \ge 1\) recursively set

then we have

Lemma 2.5

[29, Theorem 3.3] Let \(n \in \mathbb {N}\) and \(0 \le s_n \le s_{n-1} \le \cdots \le s_1 < \infty \). Then over \(j\), the following random variables and processes are all mutually independent.

Furthermore, the \(X_j\) and \(Y_j\) processes are standard Brownian motions, and \(e^{-r_j(s_j)}\) is \(\Gamma (\theta ,1)\) distributed.

An induction argument shows that

As we will see shortly, expression (9) would lead to a variational formula for the right tail rate function we are looking for in terms of the right tail rate function of \(Z_{\lfloor ns \rfloor }^\theta (nt)\). This right tail rate function would be tractable using (11) if \(B(nt)\) were independent of \(\sum _{k=1}^{\lfloor ns \rfloor } r_k^\theta (nt)\); as this is not the case, it is convenient to rewrite (9) in a form that separates these two terms:

We now briefly outline the proof of Theorem 2.2. In order to compute the positive moment Lyapunov exponents, we consider the dual problem of establishing right tail large deviations for the free energy. As is typically the case, existence and regularity of the right tail rate function follows from subadditivity arguments. It then follows from (12) that this right tail rate function solves a variational problem in terms of computable rate functions coming from the stationary model. Taking Legendre–Fenchel transforms brings us back to the study of moment Lyapunov exponents and gives the variational problem a linear structure which makes it tractable.

For non-positive exponents, we use crude estimates on the partition function to identify the limit. We are able to do this because the left tail probabilities for the free energy decay faster than exponentially, while the moment Lyapunov exponents are only sensitive to large deviations at the exponential scale.

3 A Variational Problem for the Right Tail Rate Function

3.1 Definitions and Notation

The goal of this subsection is to introduce the right tail rate function for the free energy, which we will denote \(J_{s,t}(x)\), and the rate functions coming from the stationary model which appear in the variational expression for \(J_{s,t}(x)\). We will defer some of the proofs of technical results about the existence and regularity of these rate functions to Appendix 1. We begin by defining these functions and addressing existence.

Theorem 3.1

For all \(s\ge 0\), \(t > 0\) and \(r \in \mathbb {R}\), the limit

exists and is \(\mathbb {R}_+\) valued. Moreover, \(J_{s,t}(r)\) is continuous, convex, subadditive, and positively homogeneous of degree one as a function of \((s, t,r) \in [0,\infty ) \times (0,\infty ) \times \mathbb {R}\). For fixed \(s\) and \(t\), \(J_{s,t}(r)\) is increasing in \(r\) and \(J_{s,t}(r) = 0\) if \(r \le \rho (s,t)\).

The proof of Theorem 3.1 can be found in the ‘Existence and Structure of the Right Tail Rate Function’ section of Appendix 1.

Next, we define the computable rate functions from the stationary model. By the Burke property for the stationary model, the first limit below can be computed as the right branch of a Cramér rate function. For \(s,t > 0\), we set

We may continuously extend \(U_s^\theta (x)\) to \(s = 0\) by setting

We record the Legendre–Fenchel transforms of these functions below:

The next lemma implies existence of the rate functions which will appear when we use equation (12) to prove that \(J_{s,t}(x)\) satisfies a variational problem in the next subsection. Versions of this result appear in several other papers, so we elect not to re-prove it. The exact statement we need appears in [14].

Lemma 3.2

[14, Lemma 3.6] Suppose that for each \(n\), \(X_n\) and \(Y_n\) are independent, that the limits

exist, and that \(\lambda \) is continuous. If there exist \(a_\lambda \) and \(a_\phi \) so that \(\lambda (a_\lambda ) = \phi (a_\phi ) = 0\), then

We define rate functions corresponding to the two parts of the decomposition in (12) as follows: for \(a \in [0,t)\), \(u\in (0,s]\), \(v \in [0,s)\), and \(x \in \mathbb {R}\) set

Recall that \(\log Z_j^\theta (0) = \sum _{k=1}^j r_k^\theta (0)\) is measurable with respect to the sigma algebra \(\sigma (B(s), B_k(s) : 1 \le k \le j; s \le 0)\) and that for \(0 \le u < nt\), \(\log Z_{j,\lfloor ns \rfloor }(u,nt)\) is measurable with respect to the sigma algebra \(\sigma (B_k(s_k) : j \le k \le \lfloor ns\rfloor , u \le s_k \le nt)\). Combining the independence of the environment with the computations above, Theorem 3.1 and Lemma 3.2 imply that \(G_{a,s,t}^\theta (x)\) and \(H_{u, v, s, t}^\theta (x)\) are well-defined. In particular, we immediately obtain

Corollary 3.3

For \(a\in [0,t)\), \(u \in (0,s]\), and \(v \in [0,s)\),

In order to show that (12) leads to a variational problem, we need some regularity on \(G_{a,s,t}^\theta (x)\) and \(H_{u,v,s,t}^\theta (x)\). The three results that follow are purely technical, so we defer their proofs to the ‘Regularity for the Stationary Right Tail Rate Functions’ section of Appendix 1. Lemma 3.4 gives a strong kind of local uniform continuity of \(H_{u,v,s,t}^\theta (x)\) and Lemma 3.5 gives the same for \(G_{a,s,t}^\theta (x)\). The difference between the two statements comes from Lemma 3.6, which shows that \(G_{a,s,t}^\theta (x)\) degenerates to infinity locally uniformly near \(a = t\).

Lemma 3.4

Fix \(\theta , s, t > 0\) and a compact set \(K \subseteq \mathbb {R}\). Then

Lemma 3.5

Fix \(\theta , s, t > 0\) and \(0 < \delta \le t\) and a compact set \(K \subseteq \mathbb {R}\). Then

Lemma 3.6

Fix \(\theta , s, t > 0\) and \(K \subset \mathbb {R}\) compact. Then

3.2 Coarse Graining and the Variational Problem

Fix \(a \in [0,t)\) and \(0 < \delta \le t - a\). Then (12) implies the following lower bounds

For any partition \(\{a_i\}_{i=0}^{N}\) of \([0,t]\), we also have

Our goal is now to show that estimates (14), (15), and (16) above lead to a variational characterization of the right tail rate function \(J_{s,t}(x)\):

To improve the presentation of the paper, we have moved some of the estimates in the proofs that follow to Appendix 2.

Lemma 3.7

Fix \(\theta > 0\), \((s,t) \in (0,\infty )^2\) and \(x \in \mathbb {R}\). Then

Proof

For \(a \in [0,s)\), taking \(j = \lfloor a n \rfloor \) in inequality (15) above immediately implies

Fix \(\delta \in (0,t)\); then for all \(a \in [0, t - \delta )\) and all \(u \in [0, a+\delta ]\), we have

It then follows that

Fix \(\epsilon >0\). By independence of the Brownian environment, we find that

Applying the lower bound obtained by considering the minimum of the Brownian increments on the interval \([a, a+\delta ]\) allows us to show that as \(n \rightarrow \infty \) the probability in line (21) tends to one. Then taking \(\limsup \) and recalling inequality (14), we obtain

By Lemma 3.5, we may take \(\delta ,\epsilon \downarrow 0\) in (22). Optimizing over \(a\) in the resulting equation and in (18) gives the result. \(\square \)

Lemma 3.8

Fix \(\theta > 0\), \((s,t) \in (0,\infty )^2\) and \(x \in \mathbb {R}\). Then

Proof

Fix a large \(p > 1\) and small \(\epsilon , \gamma > 0\). Consider uniform partitions \(\{a_i\}_{i=0}^{M}\) of \([0,t]\) and \(\{b_i\}_{i=0}^{N}\) of \([0,s]\) of mesh \(\nu = \frac{t}{M+1}\) and \(\delta = \frac{s}{N+1}\) respectively. We will add restrictions on these parameters later in the proof. Take \(n\) sufficiently large that \(\lfloor b_i n \rfloor < \lfloor b_{i+1} n\rfloor \) for all \(i\).

Fix \(j < \lfloor ns \rfloor \) not equal to any of the partition points \(\lfloor b_i n \rfloor \) and consider \(i\) so that \(\lfloor b_i n \rfloor < j < \lfloor b_{i+1} n \rfloor \). Notice that \(Z_0^\theta (nt)\) is \(\sigma (B(nt))\) measurable and \( Z_j^\theta (0)\) is measurable with respect to \(\sigma (B(s),B_1(s), \ldots , B_j(s) : s \le 0)\), so these random variables and \(Z_{j, \lfloor ns \rfloor }(u,v)\) are mutually independent if \(0\le u < v\). It follows from translation invariance and this independence that

We have

It then follows that

Using the moment bound in Lemma 6.2 with \(\xi = -p\) for \(p > 1\) and the exponential Markov inequality gives the bound

For the last inequality, we first require \(\gamma < \frac{\epsilon }{4p}\) and then take \(\delta \) small enough that \(\delta \log \frac{\delta }{\gamma } < \frac{\epsilon }{4}\). The exponential Markov inequality and the known moment generating function of the i.i.d. sum give the bound

where in the last step we additionally require \(\delta < \frac{\epsilon p}{4} \log \left( \Gamma (\theta + p) \Gamma (\theta )^{-1}\right) ^{-1}\). For the case that \(j\) is a partition point, we have

and the same error bound as above applies. We now turn to the problem of estimating the integral

By Lemma 6.5 we have

where we require \(\nu < \frac{\epsilon }{\theta }\).

Take \(n\) sufficiently large that \(\log (ns + N) \le n \epsilon \). It follows from (16) and union bounds that

Combining this with the previous estimates, multiplying by \(-1\) and sending \(n \rightarrow \infty \) gives

We first send \(\delta \downarrow 0\), then \(\gamma \downarrow 0\), then \(\nu \downarrow 0\), then \(p \uparrow \infty \). By Lemma 3.6, there is \(\eta > 0\) so that for all \(\epsilon \in [0,1]\), we have

Now, take \(\epsilon \downarrow 0\) and use Lemmas 3.4 and 3.5. This gives the desired bound

\(\square \)

We now turn the variational problem for the right tail rate functions into a variational problem involving Legendre–Fenchel transforms.

Lemma 3.9

For any \(\theta > 0\) let \(\xi \in (0,\theta )\). Then \(J_{s,t}^*(\xi )\) satisfies the variational problem

Proof

Lemmas 3.7 and 3.8 imply (17). Infimal convolution is Legendre–Fenchel dual to addition for proper convex functions [26, Theorem 16.4], so we find

If \(\xi \in (0, \theta )\), then \((U_s^\theta )^*(\xi ) < \infty \), so we may subtract \((U_s^\theta )^*(\xi )\) from both sides. Substituting in the known Legendre–Fenchel transforms gives the result. \(\square \)

3.3 Solving the Variational Problem

Next, we show that the variational problem in Lemma 3.9 identifies \(J_{s,t}^*(\xi )\) for \(\xi > 0\). To show the analogous result in [14], the authors followed the approach of rephrasing the variational problem as a Legendre–Fenchel transform in the space-time variables and appealing to convex analysis. We present an alternate method for computing \(J_{s,t}^*(\xi )\) in the next proposition, which has the advantage of allowing us to avoid some of the technicalities in that argument. This direct approach is the main reason we are able to appeal to the Gärtner-Ellis theorem to prove the large deviation principle.

Proposition 3.10

Let \(I \subseteq \mathbb {R}\) be open and connected and let \(h,g:I \rightarrow \mathbb {R}\) be twice continuously differentiable functions with \(h'(\theta ) > 0\) and \(g'(\theta ) < 0\) for all \(\theta \in I\). For \((x,y) \in (0,\infty )^2\), define

and suppose that \(\frac{d^2}{d\theta ^2}f_{x,y}(\theta ) > 0\) for all \((x,y) \in (0,\infty )^2\) and that \(f_{x,y}(\theta ) \rightarrow \infty \) as \(\theta \rightarrow \partial I\) (which may be a limit as \(\theta \rightarrow \pm \infty \)). If \(\Lambda (x,y)\) is a continuous function on \((0,\infty )^2\) with the property that for all \((x,y) \in (0,\infty )^2\) and \(\theta \in I\) the identity

holds, then

Proof

Fix \((x,y) \in (0,\infty )^2\) and call \(\nu = \frac{y}{x}\). Under these hypotheses, there exists a unique \(\theta _{x,y}^* = \arg \min _{\theta \in I} f_{x,y}(\theta ) = \theta _{1,\nu }^ *\). Identity (23) implies that for all \(a \in [0,x)\) and \(b \in [0,y)\) we have

and therefore for any \(\theta \in I\), \(a \in [0,x)\) and \(b \in [0,y)\),

Uniqueness of minimizers implies that \(f_{x-a,y}(\theta _{x-a,y}^*) - f_{x-a,y}(\theta ) < 0\) unless \(\theta = \theta _{x-a,y}^*\) and similarly \(f_{x,y-b}(\theta _{x,y-b}^*) - f_{x, y-b}(\theta ) < 0\) unless \(\theta = \theta _{x,y-b}^*\). Notice that \(\theta _{1,\nu }^*\) solves

By the implicit function theorem, we may differentiate the previous expression with respect to \(\nu \) to obtain

Now, set \(\theta = \theta _{x,y}^*\) in (23). Equality (27) implies that for \(a \in (0,x)\) and \(b \in (0,y)\), \(\theta _{x,y-b}^* < \theta _{x,y}^* < \theta _{x-a,y}^*\). Then (24) and (25) give us the inequalities

Notice that (23) implies that there exists either a sequence \(a_n \rightarrow a \in [0,x]\) or a sequence \(b_n \rightarrow b \in [0,y]\) so that one of the following holds:

Our goal is to show that the only possible limits are \(a_n \rightarrow 0\) or \(b_n \rightarrow 0\), in which case the result follows by continuity. Continuity and inequalities (28) and (29) rule out the possibilities \(a \in (0,x)\) and \(b \in (0,y)\) respectively. It therefore suffices to show that

We will only write out the proof of (30), since the proof of (31) is similar. For any fixed \(a \in (0,x)\), we have

It suffices to show that the previous expression is decreasing in \(a\). Differentiating the previous expression and using (26) and the fact that \(\theta _{x,y}^* < \theta _{x-a,y}^*\), we find

\(\square \)

Corollary 3.11

For all \(\xi > 0\),

Proof

It follows from the variational representation in Lemma 3.9 that \(J_{s,t}^*(\xi )\) is not infinite for any choice of the parameters \(\xi ,s,t>0\). It then follows from Lemma 5.4 and [26, Theorem 10.1] that \(J_{s,t}^*(\xi )\) is continuous in \((s,t) \in (0,\infty )^2\).

Fix \(\xi \) and set \(I = \{ \theta : \theta > \xi \}\). For \(\theta \in I\) and \(s,t \in (0,\infty )\), define

Lemma 3.9 shows that with these definitions \(J_{s,t}^*(\xi )\) solves the variational problem in Proposition 3.10. Because \(\Psi _1(x) > 0\) and \(\Psi _2(x) < 0\), we see that for \(\theta \in I\)

It then follows that \(\frac{d^2}{d\theta ^2}f_{s,t}(\theta ) > 0\). Moreover, since \(\log \frac{\Gamma (\theta - \xi )}{\Gamma (\theta )}\) grows like \(-\xi \log (\theta )\) at infinity and \(-\log (\theta - \xi )\) at \(\xi \), \(f_{s,t}(\theta )\) also tends to infinity at the boundary of \(I\) and the result follows.

The second equality is the substitution \(\mu = \theta - \xi \). \(\square \)
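The hypotheses of Proposition 3.10 verified in this proof can also be checked numerically. The sketch below assumes the choices \(h(\theta ) = -\frac{\xi ^2}{2} + \xi \theta \) and \(g(\theta ) = \log \frac{\Gamma (\theta - \xi )}{\Gamma (\theta )}\) on \(I = (\xi ,\infty )\), so that \(f_{s,t}(\theta ) = t\,h(\theta ) + s\,g(\theta )\) and \(\frac{d^2}{d\theta ^2}f_{s,t}(\theta ) = s(\Psi _1(\theta - \xi ) - \Psi _1(\theta ))\). These choices are assumptions consistent with the growth rates and the substitution \(\mu = \theta - \xi \) quoted above; the function names are hypothetical.

```python
import math


def trigamma(x):
    """Psi_1(x) for x > 0 via upward recurrence and an asymptotic series."""
    acc = 0.0
    while x < 6.0:
        acc += 1.0 / (x * x)
        x += 1.0
    inv = 1.0 / x
    inv2 = inv * inv
    # Series 1/x + 1/(2x^2) + 1/(6x^3) - 1/(30x^5) + 1/(42x^7).
    tail = inv * inv2 * (1.0 / 6.0 - inv2 * (1.0 / 30.0 - inv2 / 42.0))
    return acc + inv + 0.5 * inv2 + tail


def f_theta(s, t, xi, theta):
    """Assumed objective t*h(theta) + s*g(theta) on theta > xi."""
    h = -0.5 * xi * xi + xi * theta
    g = math.lgamma(theta - xi) - math.lgamma(theta)
    return t * h + s * g


def f_mu(s, t, xi, mu):
    """The same objective after the substitution mu = theta - xi."""
    return (t * (0.5 * xi * xi + xi * mu)
            - s * (math.lgamma(mu + xi) - math.lgamma(mu)))


def second_derivative(s, xi, theta):
    """d^2/dtheta^2 of f_theta; positive because Psi_1 is decreasing."""
    return s * (trigamma(theta - xi) - trigamma(theta))


s, t, xi = 1.0, 1.0, 1.0
for theta in (1.1, 1.5, 2.5, 10.0):
    print(theta, f_theta(s, t, xi, theta), second_derivative(s, xi, theta))
```

When \(\xi = 1\) the recurrence \(\Psi _1(x) = \Psi _1(x+1) + x^{-2}\) gives \(\frac{d^2}{d\theta ^2}f_{s,t}(\theta ) = s(\theta - 1)^{-2}\), which provides an exact value to compare against.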

4 Moment Lyapunov Exponents and the LDP

The next result would be Varadhan’s theorem if \(J_{s,t}(x)\) were a full rate function, rather than a right tail rate function. The proof is somewhat long and essentially the same as the proof of Varadhan’s theorem, so we omit it. Details of a similar argument for the stationary log-gamma polymer can be found in [14, Lemma 5.1]. The exponential moment bound needed for the proof follows from Lemma 6.2.

Lemma 4.1

For \(\xi > 0\),

and in particular the limit exists.

Remark 4.2

Lemma 4.1 shows that \(J_{s,t}^*(\xi )\) is the \(\xi \) moment Lyapunov exponent for the parabolic Anderson model associated to this polymer. With this in mind, the second formula in the statement of Corollary 3.11 above agrees with the conjecture in [7, Appendix A.1].

To see this, we first observe that the partition function we study differs slightly from the partition function \(Z_\beta (t,n)\) studied in [7] (defined in equation (3) of that paper). As we saw was the case for \(Z_n(\beta )\) in equation (3), up to normalization constants both \(Z_{0,\lfloor ns \rfloor }(0,nt)\) and \(Z_\beta (t,n)\) are conditional expectations of functionals of a Poisson path. The normalization constant for \(Z_{0, \lfloor ns \rfloor }(0,nt)\) is given by the Lebesgue measure of the Weyl chamber \(A_{\lfloor ns \rfloor +1, nt}\), while the normalization constant for \(Z_\beta (t,n)\) is \(P_{\pi (0)=0}\left( \pi (t) = n\right) \) where \(\pi (\cdot )\) is again a rate one Poisson process. There is a further difference in that [7] adds a pinning potential of strength \(\frac{\beta }{2}\) at the origin to the definition of \(Z_\beta (t,n)\), which introduces a multiplicative factor of \(e^{-\frac{\beta }{2}t}\). Combining these changes and restricting to the parameters studied in [7, Appendix A.1], we have the relation

Since \(P_{\pi (0) = 0}\left( \pi (n) = \lfloor n \nu \rfloor \right) |A_{\lfloor n \nu \rfloor +1,n}|^{-1} = e^{-n}\), Corollary 3.11 and Lemma 4.1 then imply that for any \(k > 0\),

which is the extension of the moment Lyapunov exponent \(H_k(z_k^0)\) conjectured in the middle of page 24 of [7].
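The identity \(P_{\pi (0) = 0}\left( \pi (n) = \lfloor n \nu \rfloor \right) |A_{\lfloor n \nu \rfloor +1,n}|^{-1} = e^{-n}\) used in this remark can be confirmed directly. The sketch below assumes that \(A_{m,t}\) denotes the set of ordered \((m-1)\)-tuples \(0< s_1< \cdots< s_{m-1} < t\), whose Lebesgue measure is \(t^{m-1}/(m-1)!\); the function names are hypothetical.

```python
import math


def weyl_volume(m, t):
    """Lebesgue measure of {0 < s_1 < ... < s_{m-1} < t}, namely t^(m-1)/(m-1)!."""
    return t ** (m - 1) / math.factorial(m - 1)


def poisson_pmf(k, t):
    """P(pi(t) = k) for a unit rate Poisson process started at 0."""
    return math.exp(-t) * t ** k / math.factorial(k)


n, nu = 10.0, 0.7
k = math.floor(n * nu)                      # number of jumps by time n
ratio = poisson_pmf(k, n) / weyl_volume(k + 1, n)
print(ratio, math.exp(-n))
```

The powers of \(n\) and the factorials cancel exactly, which is why the two normalizations differ only by the factor \(e^{-n}\).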

Our next goal is to show that the left tail large deviations are not relevant at the scale we consider. This proof is based on the proof of [14, Lemma 4.2], which contains a small mistake; as currently phrased, the argument in that paper only works for \(s,t \in \mathbb {Q}\). This problem can be fixed by altering the geometry of the proof, but doing this adds some technicalities which can be avoided in the model we study. We will follow an argument similar to the original proof for \(s \in \mathbb {Q}\), then show that this implies what we need for all \(s\).

Proposition 4.3

Fix \(s,t >0\). For all \(\epsilon > 0\)

Proof

First we consider the case \(s \in \mathbb {Q}\). There exists \(M \in \mathbb {N}\) large enough that \(M(s \wedge t) \ge 1\) and for all \(m \ge M\) we have

Fix \(m \ge M\) so that \(ms \in \mathbb {N}\). We will denote coordinates in \(\mathbb {R}^{\lfloor ns \rfloor - 1}\) by \((u_1, \ldots , u_{\lfloor ns \rfloor -1})\). For \(a,b,s,t \in (0,\infty )\) and \(n,k,l \in \mathbb {N}\), define a family of sets \(A_{k,a}^{l,b} \subset \mathbb {R}^{\lfloor ns \rfloor - 1}\) by

For \(j,k \in \mathbb {Z}_+\), set

For each \(n\) sufficiently large that the expression below is greater than one, define

so that we have

With this choice of \(N\), for \(0 \le k \le \lfloor \sqrt{n} \rfloor \) and \(0 \le j \le N-1\), \(A_j^k\) is nonempty. Then for \(0 \le k \le \lfloor \sqrt{n} \rfloor \), define

To simplify the formulas that follow, we introduce the notation \(s_j = j ms\) and \(t_j^k = (j+k)mt\). In words, we can think of \(D_k\) as the collection of paths from \(0\) to \(nt\) which traverse the bottom line until \(t_0^k\), then for \(0 \le j \le N-1\) move from \(t_j^k\) to \(t_{j+1}^k\) along the next \(ms\) lines. The path then moves from \(t_N^k\) to \(t\left( n - \frac{m}{2}\right) \) along the next \(ms\) lines and finally proceeds to \(nt\) along the remaining lines. Observe that \(\{D_k\}_{k=0}^{\lfloor \sqrt{n} \rfloor }\) is a pairwise disjoint, non-empty family of sets. With the convention \(u_0 = 0\) and \(u_{\lfloor ns \rfloor } = nt\), we have the bound

In the integral over \(D_k\), for each \(0 \le j \le N\) we add and subtract \(B_{s_j}(t_j^k)\) in the exponent. Similarly, add and subtract \(B_{s_{N+1}}\left( t\left( n - \frac{m}{2}\right) \right) \). The reason for this step is that this will make the product of integrals coming from the definition of \(D_k\) into a product of partition functions, as when we showed supermultiplicativity of the partition function in (7). Introduce

and observe that \(H_0^n \le B_{s_N}(t_N^0, t_N^k) + H_k^n\). Let \(C> 0\) be a uniform lower bound in \(n\) (recall that \(m\) is fixed) on the Lebesgue measure of the Weyl chamber in the definition of \(H_{\lfloor \sqrt{n} \rfloor }^n\). Such a bound exists by (32). Set \(I_n = \max _{t_{N-1}^0 \le u \le t_{N-1}^{\lfloor \sqrt{n}\rfloor }}\{B_{s_N}(t_{N-1}^0, u)\}\). We have the lower bound

We therefore have the upper bound

It follows from translation invariance, Lemma 6.6, and (33) that

We have

for some \(c_1 > 0\). The first equality comes from the fact that the terms in the maximum are i.i.d. and the second comes from large deviation estimates for an i.i.d. sum once we recall that \(N = \frac{n}{m} + o(n)\).

Recall that by (32), \(t\left( n - \frac{m}{2}\right) - t_N^0 = O(\sqrt{n})\). It follows from Lemma 6.4 that there exist \(c_2, C_2 > 0\) so that

The remaining two terms can be controlled with the reflection principle and are \(O\left( e^{-\frac{1}{2}n^{\frac{3}{2}}}\right) \).

Now let \(s\) be irrational. For each \(k\), fix \(\tilde{s}_k < s\) rational with \(e^{-k} < |\tilde{s}_k - s| < 2 e^{-k}\) and set \(\tilde{t}_k = t -\frac{1}{k} \). Call \(\alpha _k = s - \tilde{s}_k\) and \(\beta _k = t - \tilde{t}_k = \frac{1}{k}\). Subadditivity gives

Since \(\tilde{s}_k\) is rational, we have already shown that the first term is negligible. Take \(k\) sufficiently large that \(\rho (s,t) - \rho (\tilde{s}_k, \tilde{t}_k) - \frac{\epsilon }{2} < -\frac{\epsilon }{4}\). By Lemma 6.3, we find

Using formula (38), \(J_{GUE}(r) = 4 \int _0^r \sqrt{x(x+2)}dx\), it is not hard to see that as \(k \rightarrow \infty \), this lower bound tends to infinity. \(\square \)
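As a numerical aside on the formula \(J_{GUE}(r) = 4 \int _0^r \sqrt{x(x+2)}dx\) used above, the following minimal sketch compares a closed form, obtained here by the substitution \(u = x + 1\), with a midpoint-rule quadrature. The closed form \(2(r+1)\sqrt{r(r+2)} - 2\log \left( r + 1 + \sqrt{r(r+2)}\right) \) and the function names are not taken from the paper; they are included only to illustrate that \(J_{GUE}\) is increasing and unbounded.

```python
import math


def j_gue_closed_form(r: float) -> float:
    """Closed form of 4 * int_0^r sqrt(x (x + 2)) dx for r >= 0.

    Derived via u = x + 1 (an illustration, not quoted from the paper).
    """
    root = math.sqrt(r * (r + 2.0))
    return 2.0 * (r + 1.0) * root - 2.0 * math.log(r + 1.0 + root)


def j_gue_quadrature(r: float, panels: int = 20000) -> float:
    """Midpoint-rule approximation of the same integral, as a sanity check."""
    h = r / panels
    total = 0.0
    for i in range(panels):
        x = (i + 0.5) * h
        total += math.sqrt(x * (x + 2.0))
    return 4.0 * h * total


if __name__ == "__main__":
    for r in (0.1, 1.0, 5.0, 50.0):
        print(r, j_gue_closed_form(r), j_gue_quadrature(r))
```

The two columns printed by the script should agree to several digits, and the values grow without bound as \(r\) increases.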

Lemma 4.4

Fix \(s,t >0\) and \(\xi < 0\). Then

Proof

Fix a small \(\epsilon > 0\) and recall that Lemma 6.2 and Jensen’s inequality imply that for any \(\xi < 0\), \(\sup _n\left\{ \frac{1}{n} \log E\left[ e^{\xi \log Z_{1, \lfloor ns \rfloor }(0,nt)}\right] \right\} < \infty \). The lower bound is immediate from

once we recall that \(P(\log Z_{1,\lfloor ns \rfloor }(0,nt) \le n(\rho (s,t) + \epsilon )) \rightarrow 1\).

For the upper bound, we decompose the expectation as follows

Recalling that \(P\left( \log Z_{1,\lfloor ns \rfloor }(0,nt) > n(\rho (s,t) - \epsilon )\right) \rightarrow 1\), this leads to

By Cauchy–Schwarz and Proposition 4.3

\(\square \)

Combining the previous results, we are led to the proof of Theorem 2.2, from which we immediately deduce Theorem 2.3.

Proof of Theorem 2.2

Lemmas 4.1 and 4.4 give the limit for \(\xi \ne 0\) and the limit for \(\xi = 0\) is zero.

Note that \(\Lambda _{s,t}(\xi )\) is differentiable for \(\xi < 0\) and that the left derivative at zero is \(\rho (s,t)\). For \(\xi > 0\), there is a unique \(\mu (\xi )\) solving

This \(\mu (\xi )\) is given by the unique solution to

which can be rewritten as

By the mean value theorem, there exists \(x \in [0,\xi )\) so that

Using this, we see that \(\Lambda _{s,t}(\xi )\) is continuous at \(\xi = 0\). The implicit function theorem implies that \(\mu (\xi )\) is smooth for \(\xi > 0\). Differentiating (34) with respect to \(\xi \) and applying (35), we have

Substituting in for \(\mu (\xi )\), appealing to continuity, and taking \(\xi \downarrow 0\) gives

which implies differentiability at zero and hence at all \(\xi \). \(\square \)

Proof of Theorem 2.3

The large deviation principle holds by Theorem 2.2 and the Gärtner-Ellis theorem [13, Theorem 2.3.6]. \(\square \)
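To illustrate the mechanism behind the Gärtner-Ellis theorem, the sketch below computes a Legendre transform \(\sup _\xi \{\xi x - \Lambda (\xi )\}\) numerically on a grid. It uses a toy function \(\Lambda (\xi ) = \xi ^2/2\) (the log-moment generating function of a standard normal), which is an assumption made purely for illustration and is not the \(\Lambda _{s,t}\) of Theorem 2.2; the function names and grid parameters are likewise hypothetical.

```python
import math


def legendre_transform(cgf, x, xi_min=-10.0, xi_max=10.0, steps=20001):
    """Approximate sup over xi of xi * x - cgf(xi) on a uniform grid."""
    best = -math.inf
    for i in range(steps):
        xi = xi_min + (xi_max - xi_min) * i / (steps - 1)
        best = max(best, xi * x - cgf(xi))
    return best


def gaussian_cgf(xi):
    """Toy limiting log-moment generating function: xi^2 / 2."""
    return 0.5 * xi * xi


if __name__ == "__main__":
    # For the toy example the transform is x^2 / 2.
    for x in (0.0, 0.5, 1.0, 2.0):
        print(x, legendre_transform(gaussian_cgf, x), 0.5 * x * x)
```

For a differentiable limiting function, the rate function in the large deviation principle is this transform; the grid search is only adequate when the maximizer lies inside the chosen range of \(\xi \).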

References

Amir, G., Corwin, I., Quastel, J.: Probability distribution of the free energy of the continuum directed random polymer in 1 + 1 dimensions. Commun. Pure Appl. Math. 64, 466–537 (2011)

Baryshnikov, Y.: GUEs and queues. Probab. Theory Relat. Fields 119, 256–274 (2001)

Ben Ari, I.: Large deviations for partition functions of directed polymers in an IID field. Ann. Inst. H. Poincaré Probab. Stat. 45(3), 770–792 (2009)

Ben Arous, G., Dembo, A., Guionnet, A.: Aging of spherical spin glasses. Probab. Theory Relat. Fields 120, 1–67 (2001)

Bolthausen, E.: A note on the diffusion of directed polymers in a random environment. Commun. Math. Phys. 123, 529–534 (1989)

Borodin, A., Corwin, I.: Macdonald processes. Probab. Theory Relat. Fields 158(1–2), 225–400 (2014)

Borodin, A., Corwin, I.: Moments and Lyapunov exponents for the parabolic Anderson model. Ann. Appl. Probab. 24(3), 1172–1198 (2014)

Borodin, A., Corwin, I., Ferrari, P.: Free energy fluctuations for directed polymers in random media in 1 + 1 dimension. Commun. Pure Appl. Math. 67, 1129–1214 (2014)

Comets, F., Shiga, T., Yoshida, N.: Probabilistic analysis of directed polymers in a random environment: a review. Adv. Stud. Pure Math. 39, 115–142 (2004)

Corwin, I.: The Kardar–Parisi–Zhang equation and universality class. Random Matrices Theory Appl. 1(1), 1130001 (2012)

Corwin, I., Seppäläinen, T., Shen, H.: The Strict-Weak Lattice Polymer. arXiv:1409.1794 (2014)

Cranston, M., Gauthier, D., Mountford, T.S.: On large deviation regimes for random media models. Ann. Appl. Probab. 19(2), 826–862 (2009)

Dembo, A., Zeitouni, O.: Large Deviations Techniques and Applications. Applications of Mathematics, vol. 38. Springer, New York (1998)

Georgiou, N., Seppäläinen, T.: Large deviation rate functions for the partition function in a log-gamma distributed random potential. Ann. Probab. 41(6), 4248–4286 (2013)

Gravner, J., Tracy, C.A., Widom, H.: Limit theorems for height fluctuations in a class of discrete space and time growth models. J. Stat. Phys. 102(5–6), 1085–1132 (2001)

Hambly, B.M., Martin, J.B., O’Connell, N.: Concentration results for a Brownian directed percolation problem. Stoch. Process. Appl. 102, 207–220 (2002)

Huse, D.A., Henley, C.L.: Pinning and roughening of domain walls in Ising systems due to random impurities. Phys. Rev. Lett. 54, 2708–2711 (1985)

Imbrie, J.Z., Spencer, T.: Diffusion of directed polymers in a random environment. J. Stat. Phys. 52, 609–626 (1988)

Kuczma, M., Gilányi, A. (eds.): An Introduction to the Theory of Functional Equations and Inequalities. Birkhäuser, Basel (2009)

Ledoux, M.: Deviation Inequalities on Largest Eigenvalues. Lecture Notes in Math, vol. 1910, pp. 167–219. Springer, New York (2007)

Matsumoto, H., Yor, M.: A relationship between Brownian motions with opposite drifts via certain enlargements of the Brownian filtration. Osaka Math J. 38, 1–16 (2001)

Moriarty, J., O’Connell, N.: On the free energy of a directed polymer in a Brownian environment. Markov Process. Relat. Fields 13, 251–266 (2007)

O’Connell, N.: Directed polymers and the quantum Toda lattice. Ann. Probab. 40(2), 437–458 (2012)

O’Connell, N., Ortmann, J.: Tracy–Widom asymptotics for a random polymer with gamma-distributed weights. Electron. J. Probab. 20(25), 1–18 (2015)

O’Connell, N., Yor, M.: Brownian analogues of Burke’s theorem. Stoch. Process. Appl. 96(2), 285–304 (2001)

Rockafellar, R.T.: Convex Analysis. Princeton Mathematical Series, vol. 28. Princeton University Press, Princeton, NJ (1970)

Seppäläinen, T.: Large deviations for increasing sequences in the plane. Probab. Theory Relat. Fields 112, 221–224 (1998)

Seppäläinen, T.: Scaling for a one-dimensional directed polymer with boundary conditions. Ann. Probab. 40(1), 19–73 (2012)

Seppäläinen, T., Valkó, B.: Bounds for scaling exponents for a 1 + 1 dimensional directed polymer in a Brownian environment. ALEA 6, 1–42 (2010)

Acknowledgments

The author would like to thank Timo Seppäläinen for suggesting this problem; Benedek Valkó for many helpful conversations; and the anonymous referees for their feedback, which helped to improve the presentation of the paper. The author also benefited greatly from attending Ivan Corwin’s summer course on KPZ at MSRI.

Appendices

Appendix 1: Right Tail Rate Functions

1.1 Existence and Structure of the Right Tail Rate Function

We now turn to the problem of showing the existence and regularity of the right tail rate function for the polymer free energy. Our main goal in this subsection will be to prove Theorem 3.1. As is typical for right tail large deviations, existence and regularity follow from (almost) subadditivity arguments. Because the partition function degenerates for steps with no time component and we do not restrict attention to integer \(s\), it is necessary to tilt time slightly in this argument. We break the proof of Theorem 3.1 into two parts: first we show the result with time tilted, then we show that this change does not matter.

Theorem 5.1

For all \(s\ge 0\), \(t > 0\) and \(r \in \mathbb {R}\), the limit

exists and is \(\mathbb {R}_+\)-valued. Moreover, \(J_{s,t}(r)\) is continuous, convex, subadditive, and positively homogeneous of degree one as a function of \((s, t,r) \in [0,\infty ) \times (0,\infty ) \times \mathbb {R}\). For fixed \(s\) and \(t\), \(J_{s,t}(r)\) is increasing in \(r\) and \(J_{s,t}(r) = 0\) if \(r \le \rho (s,t)\).

Proof

Define the function \(T: [0,\infty ) \times (1,\infty ) \times \mathbb {R} \rightarrow \mathbb {R}_+\) by

Lemma 6.1 in the appendix implies that \(P \left( \log Z_{0, \lfloor x \rfloor }(0,y - 1) \ge z \right) \ne 0\) and therefore that this function is well-defined.

Take \((x_1, y_1, z_1),(x_2, y_2, z_2) \in [0,\infty ) \times (1,\infty ) \times \mathbb {R}\) and call \(x_{1,2} = \lfloor x_1 + x_2 \rfloor - \lfloor x_1 \rfloor - \lfloor x_2 \rfloor \in \{0,1\}\). By (6), we have

Independence and translation invariance then imply

If \(x_{1,2} = 0\) then, recalling that \(\log Z_{\lfloor x_2\rfloor , \lfloor x_2 \rfloor }(u,t) = B_{\lfloor x_2 \rfloor }(u,t)\), we find

Similarly, when \(x_{1,2} = 1\) we have

Setting \(C = \max \{\log (2), - \log P \left( \log Z_{0, 1}(0,1) \ge 0\right) \} < \infty \), we find that

\(T(x,y,z)\) is therefore subadditive with a bounded correction. Non-negativity and Lemma 6.1 imply that \(T(x,y,z)\) is bounded for \(x,y,z\) in a compact subset of its domain. The proof of [19, Theorem 16.2.9] shows that we may define a function on \([0,\infty ) \times (0,\infty ) \times \mathbb {R}\) by

and that this function satisfies all of the regularity properties in the statement of the theorem except continuity and monotonicity. Monotonicity in \(r\) for fixed \(s\) and \(t\) follows from monotonicity in the prelimit expression. Convexity and finiteness imply continuity on \((0,\infty )^2 \times \mathbb {R}\) [26, Theorem 10.1]. Moreover, [26, Theorem 10.2] gives upper semicontinuity on all of \([0,\infty ) \times (0,\infty ) \times \mathbb {R}\). It therefore suffices to show lower semicontinuity at the boundary; namely, we need to show

Fix \((t, r) \in (0,\infty ) \times \mathbb {R}\) and a sequence \((s_k,t_k,r_k) \in [0,\infty ) \times (0,\infty ) \times \mathbb {R}\) with \((s_k, t_k, r_k) \rightarrow (0,t, r)\). Recall that \(\log Z_{0,0}(0,t) = B_0(t)\), so we may compute with the normal distribution to find \(J_{0,t}(r) = \frac{r^2}{2t}1_{\{r \ge 0\}}\). From this we can see that if \(s_k = 0\) for all sufficiently large \(k\), we have \(J_{s_k, t_k}(r_k) \rightarrow J_{0, t}(r)\). We may therefore assume without loss of generality that \(s_k > 0\) for all \(k\). First observe that if \(r \le 0\), then \(J_{0,t}(r) = 0\) and the lower bound follows from non-negativity.

If \(r > 0\), we may assume without loss of generality that there exists \(c >0\) with \(r_k > c\) for all \(k\). By Lemma 6.3 in the appendix, for all sufficiently large \(k\) we have

where \(J_{GUE}(r) = 4\int _0^r \sqrt{x(x+2)}dx\). Using this formula and calculus, we find that

and therefore continuity follows. Lemma 2.1 implies that \(J_{s,t}(r) = 0\) for \(r \le \rho (s,t)\). \(\square \)
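The boundary value \(J_{0,t}(r) = \frac{r^2}{2t}1_{\{r \ge 0\}}\) used in the proof above can be checked numerically from the Gaussian tail. The sketch below evaluates \(-\frac{1}{n}\log P(B_0(nt) \ge nr)\) exactly through the complementary error function and compares it with the limit; the function names are hypothetical, and the normalization \(-\frac{1}{n}\log P(\cdot \ge nr)\) is assumed to match the limit in Theorem 5.1.

```python
import math


def gaussian_right_tail_rate(n: int, t: float, r: float) -> float:
    """Return -(1/n) log P(B(n t) >= n r) for a standard Brownian motion B."""
    # B(n t) ~ N(0, n t), so the tail equals 0.5 * erfc(n r / sqrt(2 n t)).
    tail = 0.5 * math.erfc(n * r / math.sqrt(2.0 * n * t))
    return -math.log(tail) / n


def limiting_rate(t: float, r: float) -> float:
    """The limit r^2 / (2 t) for r >= 0, and 0 for r < 0."""
    return r * r / (2.0 * t) if r >= 0 else 0.0


if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(n, gaussian_right_tail_rate(n, 1.0, 1.0), limiting_rate(1.0, 1.0))
```

The prelimit values approach the limit from above at rate \(O(\log (n)/n)\); much larger \(n\) would underflow in floating point, so the check is restricted to moderate \(n\).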

Remark 5.2

Note that we only address the spatial boundary in the previous result. The reason for this is that the right tail rate function is not continuous at \(t = 0\) for any \(s > 0\) and \(r \in \mathbb {R}\). To see this, we can use the lower bound for \(J_{s,t}(r)\) coming from Lemma 6.3. As \(t \downarrow 0\), this lower bound tends to infinity.

Lemma 5.3

Fix \((s,t,r) \in (0,\infty )^2 \times \mathbb {R}\). For any sequence \((s_n, t_n)\in \mathbb {N} \times (0,\infty )\) with \(\frac{1}{n}(s_n, t_n) \rightarrow (s,t)\) we have

Proof

Fix \(0 < \epsilon < \min (s,t)\). We will assume that \(n\) is large enough that the following conditions hold:

We have

It follows that

Call \(s(n) = s_n - \lfloor (s - \epsilon ) n \rfloor \) and \(t(n) = t_n - (t - \epsilon ) n + 1\) and divide the interval \((0, t(n))\) into \(s(n)\) uniform subintervals. We may bound \(Z_{0, s(n)}(0,t(n))\) below by a product of i.i.d. random variables:

Therefore,

Notice that \(\lim \frac{s(n)}{n} = \epsilon \) and \(\lim \frac{t(n)}{n} = \epsilon \), so we may further assume without loss of generality that \(\frac{1}{2} < \frac{t(n)}{s(n)} < 2\) for all \(n\). We have

so that

Therefore for \(C = \log (2) - \log P\left( \log Z_{0, 1}\left( 0,\frac{1}{2}\right) \ge 0 \right) \) and all \(\epsilon < \min (s,t)\) we have

sending \(\epsilon \downarrow 0\) and applying continuity of the rate function gives one inequality. A similar argument gives the \(\liminf \) inequality. \(\square \)

The next lemma follows from convexity of \(J_{s,t}(x)\) in \((s,t,x) \in (0,\infty )^2\times \mathbb {R}\). Details can be found in the first few lines of the proof of [14, Lemma 4.6].

Lemma 5.4

For all \(\xi > 0\), \(J_{s,t}^*(\xi )\) is convex as a function of \((s,t) \in (0,\infty )^2\).

1.2 Regularity for the Stationary Right Tail Rate Functions

Next, we turn to regularity for \(H_{u,v,s,t}^\theta (x)\) and \(G_{a,s,t}^\theta (x)\), which are defined in (13). We begin with the proof of Lemma 3.4. This result is the only point in the paper where we directly use the continuity up to the boundary in Theorem 3.1.

Proof of Lemma 3.4

Notice that \(\theta t, a\Psi _0(\theta ),\) and \(\rho (s-b,t + \gamma )\) are bounded for \(a,b \in [0,s]\) and \(\gamma \in [0,t]\). Using this fact and the formula for \(H_{a,b,s,t + \gamma }^\theta (r)\) coming from Corollary 3.3 and Lemma 3.2, there exists a compact set \(K'\) containing \(K\) so that for all \(r \in K\), \(a,b \in [0,s]\), and \(\gamma \in [0,t]\)

Note that \((a,x) \mapsto R_t^\theta \square U_a^\theta (x)\) is continuous on \([0,s] \times \mathbb {R}\). By Theorem 3.1, for any compact set \(K'\) we have joint uniform continuity of \((a, b, \gamma , r,x) \mapsto R_t^\theta \square U_a^\theta (x) + J_{b, t + \gamma }(r-x)\) on the compact set \([0,s]^2 \times [0,t] \times K' \times K'\) and so the result follows. \(\square \)

The proof of Lemma 3.5 is similar to the proof of Lemma 3.4, so we omit it. Next, we turn to the proof of Lemma 3.6, which shows that \(G_{a,s,t}^\theta (x)\) tends to infinity locally uniformly near \(a = t\).

Proof of Lemma 3.6

We have

Fix \(\epsilon > 0\). The formula in Lemma 2.1 shows that \(\rho (s,t-a) \rightarrow -\infty \) as \(a \uparrow t\), so that for all \(x \in \mathbb {R}\) and \(a\) sufficiently close to \(t\), \(x > - \theta (t-a) + \rho (s, t-a)\). For \(a\) sufficiently large that this holds for all \(x \in K\), we have

By Lemma 6.3, for all \(x \in K\) and \(a\) sufficiently large, we have

Combining this with the exact formula for \(R_{t-a}^\theta (x)\) and optimizing the lower bounds over \(x \in K\) shows that the infimum over \(x \in K\) of the minimum of these three lower bounds tends to infinity, giving the result. \(\square \)

Appendix 2: Technical Estimates

To reduce the clutter elsewhere in the paper, we collect a number of useful estimates in this appendix.

1.1 A Lower Bound on the Probability of Being Large

Lemma 6.1

Let \(K \subset [0,\infty ) \times (0,\infty ) \times \mathbb {R}\) be compact. Then there exists \(C_K > 0\) so that for all \((x,y,z) \in K\)

Proof

Since \(\lfloor x \rfloor \) takes only finitely many values on any compact set, we may fix \(\lfloor x \rfloor \). If \(\lfloor x \rfloor = 0\), then \(\log Z_{0, \lfloor x \rfloor }(0,y) = B_0(y)\) and the result follows. For \(\lfloor x \rfloor \ge 1\), we bound below by an i.i.d. product: \(Z_{0, \lfloor x \rfloor }(0,y) \ge \prod _{i=0}^{\lfloor x \rfloor -1} Z_{i, i+1}\left( i \frac{y}{\lfloor x \rfloor },(i+1)\frac{y}{\lfloor x \rfloor }\right) \). It follows that

Jensen’s inequality applied to \(\log Z_{0,1}(0,t)\) gives

where \(\frac{1}{t}\int _0^t B_0(u) du\) and \(\frac{1}{t}\int _0^t B_1(u,t) du\) are i.i.d. mean zero normal random variables with variance \(\frac{t}{3}\). Applying this lower bound to the expression in (36) gives the result. \(\square \)
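The two ingredients of this proof can be sanity-checked numerically. The sketch below first recovers the variance \(\frac{t}{3}\) of \(\frac{1}{t}\int _0^t B_0(u) du\) from the covariance \(\min (u,v)\) by a midpoint rule, and then verifies the Jensen lower bound pathwise on a discretized sample. It assumes, as is not restated in this appendix, that \(Z_{0,1}(0,t) = \int _0^t e^{B_0(u) + B_1(u,t)}du\) with \(B_1(u,t) = B_1(t) - B_1(u)\); the function names and the discretization are hypothetical.

```python
import math
import random


def averaged_bm_variance(t: float, cells: int = 200) -> float:
    """Midpoint-rule value of (1/t^2) * integral of min(u, v) over [0, t]^2.

    Cov(B(u), B(v)) = min(u, v), so this approximates
    Var((1/t) * int_0^t B(u) du), whose exact value is t / 3.
    """
    h = t / cells
    mids = [(i + 0.5) * h for i in range(cells)]
    total = sum(min(u, v) for u in mids for v in mids)
    return total * h * h / (t * t)


def jensen_gap(t: float, steps: int, rng: random.Random) -> float:
    """Discretized log Z_{0,1}(0, t) minus its Jensen lower bound.

    Assumed definition: Z_{0,1}(0, t) = int_0^t exp(B_0(u) + B_1(u, t)) du.
    """
    h = t / steps
    b0, b1 = [0.0], [0.0]
    for _ in range(steps):
        b0.append(b0[-1] + rng.gauss(0.0, math.sqrt(h)))
        b1.append(b1[-1] + rng.gauss(0.0, math.sqrt(h)))
    # Exponent of the integrand at the left endpoint of each grid cell.
    exponents = [b0[i] + (b1[-1] - b1[i]) for i in range(steps)]
    log_z = math.log(h * sum(math.exp(e) for e in exponents))
    lower_bound = math.log(t) + sum(exponents) / steps
    return log_z - lower_bound


if __name__ == "__main__":
    print(averaged_bm_variance(2.0), 2.0 / 3.0)
    print(jensen_gap(1.0, 500, random.Random(0)))
```

The gap returned by `jensen_gap` is non-negative up to rounding for every sampled path, since the discrete average of exponentials dominates the exponential of the discrete average.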

1.2 Moment Estimate for the Partition Function

Lemma 6.2

Fix \(t > 0\), \(n \in \mathbb {N}\) and \(\xi \in \mathbb {R}\) with \(|\xi | > 1\). Then there is a constant \(C > 0\) depending only on \(\xi \) so that

Proof

Recall the definition of \(A_{n,t}\) in (2). By Jensen’s inequality with respect to the uniform measure on \(A_{n,t}\) and Tonelli’s theorem we find

where we have used independence of the Brownian increments and the moment generating function of the normal distribution to compute the last line. The remainder of the statement of the lemma comes from the identity \(|A_{n,t}| = \frac{t^{n-1}}{(n-1)!}\) and Stirling’s approximation to \(n!\). \(\square \)
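The last step of this proof combines the volume identity \(|A_{n,t}| = \frac{t^{n-1}}{(n-1)!}\) with Stirling's approximation. The following sketch computes the logarithm of that volume and compares the leading Stirling formula for \(\log n!\) with the exact value; the function names are hypothetical, and the two-sided error bound used in the check is the classical Robbins bound, which is not quoted in the paper.

```python
import math


def log_simplex_volume(n: int, t: float) -> float:
    """Logarithm of t^(n-1) / (n-1)!, the stated value of |A_{n,t}|."""
    return (n - 1) * math.log(t) - math.lgamma(n)


def stirling_log_factorial(n: int) -> float:
    """Leading Stirling approximation: n log n - n + 0.5 log(2 pi n)."""
    return n * math.log(n) - n + 0.5 * math.log(2.0 * math.pi * n)


def stirling_error(n: int) -> float:
    """Exact log n! minus the Stirling approximation."""
    return math.lgamma(n + 1) - stirling_log_factorial(n)


if __name__ == "__main__":
    for n in (1, 5, 50, 500):
        # Robbins: 1 / (12 n + 1) < error < 1 / (12 n).
        print(n, stirling_error(n), 1.0 / (12 * n + 1), 1.0 / (12 * n))
```

Because the error in \(\log n!\) is \(O(1/n)\), replacing \((n-1)!\) by its Stirling approximation changes \(\log |A_{n,t}|\) only by a bounded amount, which is the sense in which the approximation is used for moment bounds.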

1.3 Bounds from the GUE Connection

Let \(\lambda _{GUE,n}\) be the top eigenvalue of an \(n\times n\) GUE random matrix with entries that have variance \(\sigma ^2 = \frac{1}{4n}\). Then [2, Theorem 0.7] and [15] give

The right tail rate function of \(\lambda _{GUE,n}\) can be computed ([20, (1.25)], [4]) for \(r>0\) to be

Lemma 6.3

Suppose that \(r,s,t>0\) and \((s_n, t_n, r_n) \in \mathbb {N} \times (0,\infty ) \times \mathbb {R}\) satisfy \(n^{-1}(s_n,t_n,r_n) \rightarrow (s,t,r)\). If \(r - s \log t- s + s\log s > 2 \sqrt{t s}\), then

and if \(r + s \log t + s - s\log s > 2 \sqrt{t s}\), then

Proof

Recall the definition of \(A_{n,t}\) in (2) and observe that

Using this fact and bounding \(Z_{0,n}(0,t)\) as defined in (4) above with the maximum value of the Brownian increments, we obtain

The result then follows from the inequality

The proof of the second bound follows a similar argument: we bound the partition function below with the minimum of the Brownian increments, apply the upper bound from Stirling’s approximation to \(n!\), and appeal to Brownian reflection symmetry. \(\square \)

Lemma 6.4

Fix \(\epsilon > 0\) and let \(s \in \mathbb {N}\) and \(t_n = O(n^\alpha )\) for some \(\alpha < 1\). Then there exist \(c,C > 0\) so that

Proof

Large deviation estimates for largest eigenvalues give the result. For example, by [20, (2.7)], there exist \(C,c>0\) such that

\(\square \)

1.4 Upper Tail Coarse Graining Estimate

Lemma 6.5

Fix \(a \in [0,t)\) and \(\epsilon > 0\). Then for \(\nu < \min (\epsilon , t-a)\)

Proof

By (6), we have for all \(u \in (a, a+ \nu )\)

so it follows that

where the last inequality comes from Brownian translation invariance, symmetry, and scaling. Recall that \(B + B_1\) has the same process level distribution as \(\sqrt{2} B\). The result follows from the reflection principle. \(\square \)
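The reflection principle step can be made concrete as follows. For a standard Brownian motion \(B\) and a level \(a \ge 0\), \(P(\max _{0 \le u \le T} B(u) \ge a) = 2P(B(T) \ge a)\), and the scaling identity for \(B + B_1\) replaces \(B\) by \(\sqrt{2}B\). The sketch below evaluates this tail and the elementary Gaussian bound \(e^{-a^2/(2\sigma ^2 T)}\); the function names and the `scale` parameter are hypothetical, and the specific level and horizon appearing in the lemma are not reproduced here.

```python
import math


def max_tail_reflection(level: float, horizon: float, scale: float = 1.0) -> float:
    """P(max over [0, horizon] of scale * B(u) >= level), for level >= 0.

    By the reflection principle this equals 2 * P(scale * B(horizon) >= level),
    which is erfc(level / (scale * sqrt(2 * horizon))).
    """
    return math.erfc(level / (scale * math.sqrt(2.0 * horizon)))


def gaussian_bound(level: float, horizon: float, scale: float = 1.0) -> float:
    """Upper bound exp(-level^2 / (2 scale^2 horizon)), valid for level >= 0."""
    return math.exp(-level * level / (2.0 * scale * scale * horizon))


if __name__ == "__main__":
    for a in (0.5, 1.0, 3.0):
        print(a, max_tail_reflection(a, 1.0, math.sqrt(2.0)),
              gaussian_bound(a, 1.0, math.sqrt(2.0)))
```

Passing `scale=math.sqrt(2.0)` corresponds to the process \(B + B_1\), and is equivalent to evaluating the standard tail at level \(a/\sqrt{2}\).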

1.5 Left Tail Error Bound

Lemma 6.6

Take sequences \(t_n, s_n, r_n\) such that there exist \(a,b > 0\) with \(a < t_n < b\), \(r_n \rightarrow r > 0\) and \(s_n \in \mathbb {N}\) satisfies \(s_n \log (s_n) = o(n)\). Then there exist constants \(c,C>0\) such that

Proof

We have \(Z_{0,s_n}(0,t_n)\ge \prod _{i=0}^{s_n -1}Z_{i, i+1}\left( i \frac{t_n}{s_n}, (i+1) \frac{t_n}{s_n}\right) \) where the \(Z_{i, i+1}\left( i \frac{t_n}{s_n}, (i+1) \frac{t_n}{s_n}\right) \) are i.i.d. As above in (37), there exist i.i.d. random variables \(X_i \sim N\left( \log \left( \frac{t_n}{s_n} \right) , \frac{2 t_n}{3 s_n}\right) \) with \(\log Z_{i, i+1}\left( i \frac{t_n}{s_n}, (i+1) \frac{t_n}{s_n}\right) \ge X_i\). It follows that

Recall that \(\frac{r_n}{\sqrt{3 t_n}} + \frac{s_n}{n\sqrt{3 t_n}}\log \left( \frac{t_n}{s_n}\right) \) is a bounded sequence and without loss of generality is bounded away from zero. The result follows from normal tail estimates. \(\square \)
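The normal tail estimates invoked here are of the standard Mills-ratio type: for \(x > 0\), \(\frac{x}{1+x^2}\varphi (x) \le P(N(0,1) \ge x) \le \frac{1}{x}\varphi (x)\), where \(\varphi \) is the standard normal density. The sketch below checks these bounds numerically; which precise form of the estimate the proof uses is not stated, so this is an illustration of the kind of bound involved, with hypothetical function names.

```python
import math


def normal_density(x: float) -> float:
    """Standard normal density."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def normal_tail(x: float) -> float:
    """P(N(0, 1) >= x), computed through the complementary error function."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def mills_lower(x: float) -> float:
    """Lower bound x / (1 + x^2) * density(x), valid for x > 0."""
    return x / (1.0 + x * x) * normal_density(x)


def mills_upper(x: float) -> float:
    """Upper bound density(x) / x, valid for x > 0."""
    return normal_density(x) / x


if __name__ == "__main__":
    for x in (0.5, 1.0, 3.0, 10.0):
        print(x, mills_lower(x), normal_tail(x), mills_upper(x))
```

Both bounds decay like \(e^{-x^2/2}\) up to polynomial factors, which is what produces exponential decay in \(n\) when the argument grows like a multiple of \(\sqrt{n}\) or faster.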

Cite this article

Janjigian, C. Large Deviations of the Free Energy in the O’Connell–Yor Polymer. J Stat Phys 160, 1054–1080 (2015). https://doi.org/10.1007/s10955-015-1269-y

DOI: https://doi.org/10.1007/s10955-015-1269-y