Abstract

This paper considers a two-agent scheduling problem in which each agent has a set of jobs that competes with that of another agent for the use of m unrelated parallel machines. Each agent aims to minimize a certain scheduling criterion related to the completion times of its jobs. The overall objective is to minimize the total completion time of the jobs of one agent while keeping the weighted number of tardy jobs of another agent within a given limit. We introduce a novel column generation scheme, called in-out column generation, to maintain the stability of dual variables and then embed this scheme into a branch-and-price framework. A greedy heuristic is used to obtain a set of initial columns to start the in-out column generation. The pricing subproblem in the column generation scheme is formulated as a single-machine scheduling problem that can be solved using dynamic programming techniques. An efficient branching strategy that is compatible with pricing subproblems is also proposed. The extensive computational results that are obtained by using randomly generated data demonstrate that our branch-and-price algorithm is singularly efficient and promising.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Multi-agent scheduling has received substantial research interest in the last decade because of its importance in many application domains. Consider the example in which production and engineering jobs compete for a set of expensive bottleneck machines in a semiconductor wafer fabrication facility (Ramacher and Mönch 2016). The engineering department, which runs the engineering jobs for test purposes, would prefer short flow times, while the production control department, which is responsible for the production of all jobs, aims to achieve a high throughput and meet the due dates of all jobs. A similar situation can arise in preventive maintenance scheduling where some machines must undergo maintenance at regular intervals. One can imagine maintenance and manufacturing as two distinct activities (agents), with each activity having several operations (jobs) that compete for resources as well as a number of maintenance tasks that must be performed in given time windows that are specified by a release date and a due date (Leung et al. 2010).

This paper addresses a two-agent scheduling problem in which unrelated parallel machines are used by two agents. One of these agents aims to minimize the total completion time of his jobs, while the other agent tries to keep the weighted number of his tardy jobs within a specified limit. The complete information about the agents’ jobs (tasks) is available to a “central” coordinator, whose goal is to develop a schedule that can accommodate the objectives of the two agents. Such objective has been previously proposed in multi-agent scheduling settings (see Agnetis et al. 2004; Leung et al. 2010 and Ng et al. 2006 for a single-machine setting). The considered model, whether in its current form or with simple modifications, can also be applied to healthcare problems that involve two surgery types, namely, elective surgeries that must be planned in advance and semi-urgent surgeries that compete for the use of multiple operating rooms over a planning horizon (a week or a month). In a public healthcare system, semi-urgent surgeries must typically be performed by healthcare authorities within a specific time window for clinical considerations, that is, before a “due date” (i.e., within 4 weeks). In the meantime, the average waiting times for elective surgeries are expected to be minimized to increase patient satisfaction. However, efficiently solving a two-agent scheduling problem with unrelated parallel machines is a challenging task. In fact, an algorithm that can determine the optimal or approximation solutions for such a problem is yet to be proposed. Therefore, the main purpose of this paper is to fill this gap, which we achieve by developing a branch-and-price algorithm.

The branch-and-price method has been proven to be a powerful tool for solving otherwise intractable scheduling problems (Bard and Rojanasoonthon 2006; Brunner et al. 2010; Chen and Powell 1999; Ng and Johnson 2008; Pessoa et al. 2010; van den Akker et al. 1999, 2012). This approach consists of a branch-and-bound method where the lower bounds in the search tree are computed by a column (or variable) generation algorithm that incorporates with a series of pricing subproblems to identify the promising columns. In this paper, the branch-and-price algorithm is implemented through several enhancement components that enhance the efficiency of the computation, including (i) the execution of a heuristic algorithm for finding good initial feasible solutions; (ii) the use of a novel column generation scheme, which we refer to as the in-out column generation scheme, to keep the stabilization of dual variables; (iii) the use of an efficient branching strategy that is compatible with the pricing subproblems to accelerate the convergence of the algorithm; and (iv) the use of an efficient heuristic algorithm for constructing a “good” feasible integer solution that can prune nodes from the best available integral solution (or current incumbent) and for proposing an optimal fractional solution that corresponds to a node in the branch-and-bound tree. Components (i) and (iv) borrow the ideas from van den Akker et al. (1999) and Vanderbeck (2000), (iii) follows the strategy developed in Wolsey (1998) and Keller and Bayraksan (2009), (ii) applies the in-out column generation scheme that was introduced by Ben-Ameur and Neto (2007) to solve the multicommodity min-cost flow problem. To the best of our knowledge, this paper is the first to embed the in-out column generation scheme into a branch-and-price procedure. Extensive computational experiments were performed on randomly generated instances to evaluate and assess the efficiency of the proposed algorithm. With the aforementioned implementation, problems with up to 80 jobs can be solved to optimality within a reasonable running time.

The pioneering work in this field can be traced to Agnetis et al. (2004) and Baker and Smith (2003), who consider the scheduling problems that arise when two agents, each with a nonpreemptive job set and a set of performance criteria to optimize, compete with each other in processing their respective jobs on a shared processing facility. To better position the contribution of this paper in the multi-agent scheduling literature, we review the related literature, which topics may be classified into: (1) multi-agent scheduling problems in a parallel machine environment and (2) multi-agent scheduling problems that are formulated as constrained optimization models. The reader may refer to Agnetis (2012), Agnetis et al. (2014) and Perez-Gonzalez and Framinan (2014) for comprehensive surveys on multi-agent scheduling.

Similar to most studies on multi-agent scheduling problems, this paper is on the constrained optimization model framework. The literature on multi-agent scheduling in a parallel machine environment is considered the most relevant to this work. Given the intrinsic complexity of such problems, only a limited number of papers have been published so far. Elvikis et al. (2011) examine the two-agent scheduling problems with equal processing times on uniform parallel machines, where one agent must perform job scheduling to minimize the general cost function while the other agent must minimize the makespan of his jobs. These agents consider different min-max and min-sum versions of the cost function as well as provide special properties and polynomial time algorithms. Gerstl and Mosheiov (2013) consider just-in-time (JIT) scheduling with unit-time jobs in an identical and uniform parallel machine setting, in which the goal is to minimize the weighted sum of the earliness-tardiness values for one agent and keeps the weighted sum of deviations from a common due date by no greater than a given limit for the other agent. An optimal solution in all cases can be obtained in polynomial time by solving a number of linear assignment problems. Leung et al. (2010) study the scheduling problems with two agents on identical parallel machines where jobs have release dates and job preemption is allowed. The optimality criteria include the maximum of regular functions, total completion, and number of tardy jobs. Polynomial and pseudo-polynomial time algorithms are provided to solve various combinations of the considered criteria. Yin et al. (2016) consider JIT scheduling on unrelated parallel machines, in which both agents aim to maximize the weighted number of their JIT jobs that are completed exactly on their due dates. They provide a pseudo-polynomial time solution algorithm for finding an optimal Pareto solution, which establishes that it is NP-hard in the ordinary sense when the number of machines is fixed, and show that it admits a fully polynomial time approximation scheme for finding an approximate Pareto solution. However, the aforementioned papers do not consider the two-agent scheduling problem in a non-identical parallel machine setting. The reader may refer to Gerstl and Mosheiov (2014), Yin et al. (2016), Li and Yuan (2012), Kovalyov et al. (2015), Lee et al. (2009) and Wan et al. (2010) for studies on models with non-parallel machines and different criteria.

The rest of the paper is organized as follows. Section 2 describes our two-agent scheduling problem and initially formulates this problem as a mixed integer linear programming model and then as a set-partitioning model. Section 3 introduces the in-out column generation scheme. Section 4 develops the branch-and-price algorithms and presents the implementation details. Section 5 reports the computational results by using randomly generated data. Section 6 concludes the paper and suggests some topics for future research.

2 Problem definition and formulations

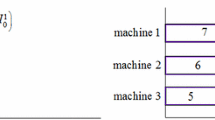

We consider two agents, A and B, with each agent having his own set of nonpreemptive jobs to be processed on m unrelated parallel machines \(M=\{M_1,M_2,\ldots ,M_m\}\), all of which are continuously available from time zero onward. All jobs are available for processing at time zero, each job must be processed on only one machine, and each machine is capable of processing any job but only one job at a time. Agent A possesses the job set \(J^A = \{J^A_1, J^A_2 ,\ldots , J^A_{n_A} \}\), while agent B possesses the job set \(J^B = \{J^B_1, J^B_2 ,\ldots , J^B_{n_B} \}\). We refer to these jobs as A-jobs and B-jobs, respectively. Each job \(J_j^A\) is associated with a processing time \(p_{ij}^A\) if processed on machine \(M_i\), while each job \(J_k^B\) is associated with a processing time \(p_{ik}^B\) if processed on machine \(M_i\), a weight \(w_k^B\), and a due date \(d_k^B\). The completion time of job \(J_j^A\) (resp., \(J_k^B\)) in a schedule is denoted by \(C_j^A\) (resp., \(C_k^B\)).

Given a schedule, a B-job is said to be on time if it is completed before or exactly on its due date; otherwise, this job is considered tardy. We assume that all parameters are integers, and let \(P=\sum _{j=1}^{n^A}p_j^A+\sum _{k=1}^{n^B}p_k^B\) be the total processing time of all \(n=n_A+n_B\) jobs.

The goal of this study is to minimize the total completion time of A-jobs, i.e., \(\sum _{j=1}^{n^A}C_j^A\), while keeping the weighted number of tardy B-jobs, i.e., \(\sum _{k=1}^{n^B}w_k^BU_k^B\), no larger than a pre-fixed threshold \(V^B\), where \(U_k^B =1\) if \(C_k^B> d_k^B\) and \(U_k^B =0\) otherwise.

The single-machine case with a unit weight of B-jobs, i.e., \(w_k^B=1\) for \(k=1,\ldots ,n_B\), is studied in Leung et al. (2010) and Ng et al. (2006) who show that the problem is \({\mathcal {NP}}\)-hard in the ordinary sense and develop pseudo-polynomial time algorithms to solve such problem. This current paper focuses on the case with arbitrary weights for the B-jobs as well as on scheduling the jobs on unrelated parallel machines, which is a very challenging task. In “Appendix”, we show that the problem is \({\mathcal {NP}}\)-hard in the strong sense even for the case in which all parallel machines are identical.

By using the three-field notation for two-agent scheduling introduced by Agnetis et al. (2014), we denote our problem by \(R||\sum _{j=1}^{n^A}C_j^A:\sum _{k=1}^{n^B}w_k^BU_k^B\). A schedule for the problem is said to be feasible if the constraint imposed on the B-jobs is satisfied.

For convenience in the developments, we re-index the A-jobs in an arbitrary order from \(J_1\) to \(J_{n_A}\) and the B-jobs in the order of earliest due date (EDD) from \(J_{n_A+1}\) to \(J_{n}\). There exists an optimal schedule for the problem in which the tardy B-jobs are assigned to the end of sequence in any order on the machines. For notational convenience, we may assume, without loss of generality, that tardy B-jobs are rejected and thus do not need to be processed on any machine. Thus, the mathematical programming representation of the scheduling problem can be formulated as follows:

where

- \(C_j\)::

-

the earliest completion time of job \(J_j\), \(j=1,\ldots ,n\);

- \(x_{jr}^i\)::

-

equal to 1 if job \(J_j\) is scheduled (resp., scheduled on time) at position r on machine \(M_i\) for \(j=1,\ldots ,n_A\) (resp., \(j=n_A+1,\ldots ,n\)), and equal to 0 otherwise, \(i=1,\ldots ,m\), \(r=1,\ldots ,n\);

- \(U_{j}\)::

-

equal to 1 if job \(J_j\) is tardy, and equal to 0 otherwise, \(j=n_A+1,\ldots ,n\); and

- M::

-

a very large positive number, which can be settled as P.

The objective function (1) seeks to minimize the total completion time of the A-jobs. Constraint set (2) guarantees that each A-job is assigned exactly to one position on exactly one machine. Constraint set (3) ensures that each B-job is either assigned exactly to one position on exactly one machine or scheduled as tardy. Constraint set (4) relays that there is at most one job scheduled at each position on a machine. Constraint (5) ensures that the weighted number of tardy B-jobs does not exceed the limit bound \(V^B\). Constraint set (6) defines the completion times for the A-jobs. Constraint set (7) guarantees that if a B-job is scheduled on a machine, then this job will be completed before or on its due date and is therefore considered on time, which becomes redundant when \(x_{jr}^i=1\) incorporates a very large positive number M. Constraint set (8) guarantees that if one is job assigned to the \((r+1)\)th position on a machine, then the rth position on the machine must have a job. In other words, we do not allow empty positions between two jobs. Constraint set (9) specifies the nonnegativity of \(C_j\) and sets up the binary restrictions for \(U_j\) and \(x_{jr}^i\).

The MILP formulation clearly shows that the existence of big-M coefficients makes the problem very difficult to solve. The difficulty lies in the fact that the linear programming relaxation of the MILP model typically leads to a very poor solution due to the presence of the big-M coefficients. In addition, the MILP model contains decision variables with differing effects on the global problem and objective function (e.g., the assignment of a job to be processed at a position on a machine versus the identification of tardy B-jobs), and the number of these variables increases considerably along with the number of jobs and machines. Our first attempt at solving the problem was simply to use the state-of-the-art MIP solver CPLEX to solve the MILP model. Unfortunately, CPLEX was able to solve the problems with up to 15 jobs and could not find any optimal solutions for most instances when \(n\ge 15\) due to the rapid increase in variables in the MILP model and the intrinsic complexity of the problem. However, after determining the tardy B-jobs and the job-assigned machines, we can easily observe that the job-to-position decisions on each machine are independent. In fact, these decisions form independent single-machine scheduling problems, thereby suggesting that a hierarchy algorithm that decomposes the problem offers a promising direction. In this case, we resort to the branch-and-price method by applying Dantzig–Wolfe decomposition, which involves a column (or variable) generation strategy that decomposes the problem into a set-partitioning (main) problem and several subproblems.

In what follows, we show how the original problem can be reformulated as a set-partitioning problem by applying Dantzig–Wolfe decomposition to the MILP model.

To perform the decomposition, we define a partial schedule as a schedule on a single machine, which is formed by a subset of all jobs. To develop the set-partitioning problem, we use the following notation:

Parameters

- \({\hat{x}}^i_{pj}\)::

-

equal to 1 if job \(J_j\) is covered in a partial schedule p for machine \(M_i\), i.e., scheduled (resp., scheduled on time) on machine \(M_i\) for \(j=1,\ldots ,n_A\) (resp., \(j=n_A+1,\ldots ,n\)), and equal to 0 otherwise;

- \(\hat{x}^i_p\)::

-

\((\hat{x}^i_{p1},\hat{x}^i_{p2},\ldots ,\hat{x}^i_{pn})\), the vector representing a partial schedule p for machine \(M_i\);

- \(P^i\)::

-

the set of all partial schedules for machine \(M_i\), which is huge yet finite; and

- \({\hat{c}}^i_p\)::

-

the cost of the partial schedule \(\hat{x}^i_p\) induced by agent A, i.e., the total completion time of the A-jobs covered by \(\hat{x}^i_p\).

The relationship between the parameter \({\hat{x}}^i_{pj}\) and the original variables \(x^i_{jr}=1\) is evident: \({\hat{x}}^i_{pj}=1\) if and only if \(\sum \limits _{r=1}^{n} x^i_{jr}=1\).

Decision variables

- \(\lambda ^i_p\)::

-

equal to 1 if the partial schedule p is selected for machine \(M_i\), and equal to 0 otherwise. This variable is also referred to as the master variable.

The mathematical programming model for the problem \(R||\sum _{j=1}^{n^A}C_j^A:\sum _{k=1}^{n^B}w_k^BU_k^B\) can now be reformulated as follows:

Constraint sets (11)–(13) correspond to the original constraints (2)–(4) and guarantee that each job is covered by exactly one partial schedule and that each machine is assigned at most one partial schedule.

To convert the master variables in the MP back into the original variables in the MILP model, the following relationship can be used:

The U-variable in the MP is the same as that in the MILP model, where \(\mathrm{e}_{jr}^p=1\) if and only if job \(J_j\) is scheduled at position r in the partial schedule p. Obviously, the original variable solution x, i.e., (\(x_{jr}^i\), \(j,r=1,\ldots ,n\), \(i=1,\ldots ,m\)), is integral if and only if the corresponding master variable solution \(\lambda \), i.e., (\(\lambda ^i_{p}\), \(i=1,\ldots ,m\), \(p\in P_i\)), is integral.

The problem is reformulated by applying Dantzig–Wolfe decomposition because the linear relaxation of the MP is tighter than that of the MILP model because the fractional solutions that are not convex combinations of 0–1 solutions to the original constraints are not feasible to the MP. However, for real instances, the MP cannot be solved directly because enumerating all partial schedules, variables, or columns is impractical. Moreover, the number of columns may increase exponentially along with the number of machines and jobs. To efficiently compute a lower bound, we solve the linear relaxation of the MP by removing the 0–1 restrictions on the binary variables and including only a subset \(\overline{P}^i\subset P^i\), \(i=1,\ldots ,m\). The resulting problem, which is denoted by RMP, is known as the restricted master problem. The dual of the RMP takes the following form:

where \(\pi _j\), \(j=1,\ldots ,n\), and \(\rho _i\), \(i=1,\ldots ,m+1\), are the dual variables associated with constraint sets (11) and (12) and constraint sets (13) and (14) where \(P^i\) is replaced by \(\overline{P}^i\), respectively.

3 In-out column generation

As discussed in the previous section, solving the MP directly is impractical. In this case, we apply the column generation algorithm, which starts with a subset of columns and solves the resulting RMP. This algorithm then tries to identify a new column with the most negative reduced cost by using the dual optimal solution of the current RMP. The inclusion of the new column may lead to an improved solution. The implicit search for the column with the most negative reduced cost leads to the optimization of a subproblem, which we refer to as the pricing subproblem. After including these newly found columns to the current RMP, the resulting RMP is resolved and the process is repeated until no other column with negative reduced costs is proven to be non-existent. The algorithm is usually embedded in a branch-and-bound framework or the so-called branch-and-price framework.

To efficiently apply the column generation algorithm in the branch-and-bound context, four critical issues must be addressed.

-

(i)

How to generate an initial set of columns? The column generation algorithm must start with a feasible primal solution, i.e., an initial set of columns to form the initial RMP. Good initial columns can decrease the number of iterations of column generation. We design a greedy randomized adaptive search algorithm that relies on the structural properties of the problem to generate several feasible solutions.

-

(ii)

What dual multipliers (vector) are used to construct pricing subproblems and to search for partial schedules with negative reduced costs? In each iteration of the classical column generation method, the dual optimal solution of the current RMP is used to form the pricing subproblems. However, the optimal solution (obtained by Simplex) of the RMP contains mostly nonbasic columns and a limited number of “useful” columns. Therefore, what should we do to avoid generating many unnecessary columns? The dual optimal solution of the linear relaxation of the MP can be used to deduce the optimal basic columns. Thus, to generate “good” columns, we must avoid using many “bad” dual variables. Based on this observation, we adopt the in-out column generation method developed by Ben-Ameur and Neto (2007). This algorithm uses a convex combination of the dual optimal solution of the current RMP and a feasible solution of the dual of the linear relaxation of the MP to form the pricing subproblems, to overcome the aforementioned shortcomings of the classical column generation method, and maintain the stability of the dual variables.

-

(iii)

How should the pricing subproblem be solved in the branch-and-bound context? In the column generation method, an important issue associated with branching is that the resulting pricing subproblems should not become significantly more difficult to solve after branching. In our implementation of branch-and-bound, we choose to branch on the original variables instead of the master variables and then impose additional branching restrictions, which we refer to as job-position restrictions, on the pricing subproblems. We have decided against branching on the master variables because restricting the master variable \(\lambda _p^i\) to 0 means that the partial schedule p is excluded and no such schedule can be generated in the subsequent pricing subproblems on machine \(M_i\). However, a partial schedule cannot be easily excluded when solving pricing subproblems. In the following subsection, we show that the resulting pricing subproblem is \({\mathcal {NP}}\)-hard and then develop a dynamic programming algorithm that is compatible with the job-position restrictions and runs in pseudo-polynomial time for the subproblems.

-

(iv)

How many columns with negative reduced costs should be added to the current RMP each time a pricing subproblem is solved? The more columns we add per iteration, the fewer linear programs we need to solve, and the more columns we add per iteration, the bigger the linear programs become. According to the preliminary experiments, the best option is adding the three columns with the most negative reduced costs.

In what follows, we show how to initialize the master problem and how each subproblem can be solved by using dynamic programming techniques. Afterward, we discuss the in-out column generation algorithm in detail.

3.1 Master problem initialization

To generate several initial feasible solutions, we design a greedy randomized adaptive search algorithm that is analogous to the approach developed in van den Akker et al. (1999). We also propose an iterative local improvement method for identifying the \(\gamma \) best solutions.

This greedy randomized adaptive search algorithm generates feasible schedules and can be formally described as follows.

3.2 The pricing subproblems

The goal of solving pricing subproblems is to find promising columns to add to the current RMP. The MILP model can be decomposed into m independent pricing subproblems, one for each machine. To be precise, given the dual vector \((\pi ,\rho )=(\pi _1,\ldots ,\pi _n,\rho _1,\ldots ,\rho _{m+1})\), the reduced cost \(r_p^i\) of the column corresponding to \(p\in P^i\) is given as

That is to say, when solving the current RMP, the decomposition yields m subproblems, with each subproblem its own convexity constraints [constraint sets (6)–(9)] and associated dual vector \((\pi ,\rho )\): \(\forall i=1,\ldots ,n\)

In the sequel, we use \(\chi _{(j,i)}\), \(i=1,\ldots ,m, j=1,\ldots ,n\), to denote the set of possible scheduled positions of job \(J_j\) on machine \(M_i\). This formulation can be regarded as as a job-position restriction on job \(J_j\) for the ith pricing subproblem, where \(\chi _{(j,l)}=\emptyset \), \(j=n_A+1,\ldots ,n\), \(l=1,\ldots ,m\), denotes the scenario that job \(J_{j-n_A}^B\) is scheduled tardy. In this case our pricing subproblem on some machines is to find a subset of all jobs and to determine a schedule for these jobs that satisfies the job-ordering restrictions in order to efficiently incorporate the branching restrictions described in the following section. As a consequence, the total completion time of the A-jobs minus the total dual variable value of these jobs is minimized. As shown in “Appendix”, the ith pricing subproblem is \({\mathcal {NP}}\)-hard even if there are no job-ordering restrictions are imposed.

3.2.1 Dynamic programming algorithm for the ith pricing subproblem

This subsection presents a forward dynamic programming algorithm for the ith pricing subproblem by using the trial vector representation.

The following structural properties of the optimal schedules of the ith pricing subproblem are used in our dynamic programming algorithm.

Lemma 3.1

For the ith pricing subproblem, there exists an optimal schedule such that

-

(1)

all scheduled jobs are processed consecutively on machine \(M_i\) without idle time and the first job starts at time 0;

-

(2)

the scheduled A-jobs are sequenced in order of the shortest processing time (SPT) on machine \(M_i\); and

-

(3)

the scheduled B-jobs are sequenced in EDD order.

Proof

The proof is supported by the job interchange and insertion arguments that are analogous to those used in Yin et al. (2016). \(\square \)

In what follows, we refer to the partial schedule as the feasible partial schedule if it fulfills the properties stated in Lemma 3.1.

Lemma 3.2

Let \(S=(J_{[1]},\ldots ,J_{[j]},\ldots ,J_{[\mathrm {r}]})\) be a feasible schedule for the ith pricing subproblem. Then, the following properties hold:

-

(1)

if \(J_{[j]}\) is an A-job such that \(C_{[j]}(S)-\pi _{[j]}>0\), and \(l\in \chi _{[l+1]}\) for \(l=j,\ldots ,\mathrm {r}-1\), then deleting job \(J_{[j]}\) yields a better solution; and

-

(2)

if \(J_{[j]}\) is a B-job such that \(\pi _{[j]}<0\), and \(l\in \chi _{[l+1,i]}\) for \(l=j,\ldots ,\mathrm {r}-1\), then deleting job \(J_{[j]}\) yields a better solution.

Proof

We prove only (1), while the proof of (2) is analogous. Create from S a new schedule \(S'\) by deleting job \(J_{[j]}\) and leaving the other jobs unchanged in S. Note that the completion time of each job scheduled before job \(J_{[j]}\) in S is not affected, while each job processed after job \(J_{[j]}\) is scheduled early. Therefore, based on Eq. (22) and the assumptions that \(C_{[j]}(S)-\pi _{[j]}>0\) and \(l-1\in \chi _{[l,i]}\) for \(l=j+1,\ldots ,\mathrm {r}\), we can easy see that \(S'\) is feasible and better than S, as required. \(\square \)

Based on Lemma 3.1, we re-index the A-jobs and B-jobs in SPT and EDD orders such that \(p_{i1}^A\le \ldots \le p_{in_A}^A\) and \(d_{1}^B\le \ldots \le d_{n_B}^B\), respectively, and then re-index the dual variables \(\pi _j,j=1,\ldots ,n_A\), such that \(\pi _j\) is associated with the constraint corresponding to job \(J_j^A\). Under this presentation, the dual variable \(\pi _j\) in the DRMP corresponds to job \(J_{j-n_A}^B\) for \(j=n_A+1,\ldots ,n\).

We now discuss how to determine the optimal solution strategy for the pricing subproblem. In our dynamic programming algorithm, a state is defined as a quadruple (j, k, t, r) that encodes a feasible partial schedule for jobs \(\{J_1^A,\ldots ,J_j^A,J_1^B,\ldots ,J_k^B\}\) such that the total processing time of the covered jobs is t and the last job is scheduled at position r. F(j, k, t, r) denotes the minimum objective function value (total completion time of the covered A-jobs minus the total dual variable values of the covered A-jobs and B-jobs) corresponding to the state (j, k, t, r). Given a state (j, k, t, r), we know that job \(J_j\), job \(J_k\), or one of the jobs in \(\{J_1^A,\ldots ,J_{j-1}^A,J_1^B,\ldots ,J_{k-1}^B\}\) is scheduled at the rth position. Thus, to form the state (j, k, t, r), the following cases must be considered:

-

Case 1 The job \(J_j^A\) is scheduled at the rth position, which is possible only if \(t-\pi _j\le 0\) or there exists \(l\in \{j+1,\ldots ,n_A,n_A+k+1,\ldots ,n\}\) such that \(\{r,\ldots ,r+n-j-k-1\}\varsubsetneq \chi _{(l,i)}\) due to Lemma 3.2 and \(r\in \chi _{(j,i)}\) due to the job-position restriction on \(J_j^A\). In this case, job \(J_j^A\) generates a cost \(t-\pi _j\), and we have \(F(j,k,t,r)=F(j-1,k,t-p_{ij}^A,r-1)+t-\pi _j\).

-

Case 2 The job \(J_k^B\) is scheduled at the rth position. In this case, job \(J_k^B\) must be scheduled on time, which is possible only if \(r\in \chi _{(k+n_A,i)}\), \(t\le d_k^B\), and \(t-\pi _{k+n_A}\le 0\) or there exists \(l\in \{j+1,\ldots ,n_A,n_A+k+1,\ldots ,n\}\) such that \(\{r,\ldots ,r+n-j-k-1\}\varsubsetneq \chi _{(l,i)}\). The contribution of \(J_k^B\) to the objective function is \(-\pi _{k+n_A}\). Thus, we have \(F(j,k,t,r)=F(j,k-1,t-p_{ik}^B,r-1)-\pi _{k+n_A}\).

-

Case 3 The job scheduled at the rth position is one of the jobs in \(\{J_1^A,\ldots ,J_{j-1}^A,J_1^B,\ldots ,J_{k-1}^B\}\). In this case, both jobs \(J_j^A\) and \(J_k^B\) do not generate any cost. Thus, we have \(F(j,k,t,r)=F(j-1,k,t,r)\) or \(F(j,k,t,r)=F(j,k-1,t,r)\).

For notational convenience, let \(\Delta _{jkr}\) indicate that there exists \(l\in \{j+1,\ldots ,n_A,n_A+k+1,\ldots ,n\}\) such that \(\{r,\ldots ,r+n-j-k-1\}\varsubsetneq \chi _{(l,i)}\). Our algorithm for solving the pricing problem can be formally described as follows.

The effectiveness of a dynamic programming algorithm depends largely on domination rules that make some states fathomable. During the implementation of Algorithm 2, we adopt the following domination rule to further reduce the state space:

Domination rule The state (j, k, t, r) dominates \((j,k,t',r)\) if \(t\le t'\), \(F(j,k,t,r)\le F(j,k,t',r)\), and at least one of the inequalities is strict.

Theorem 3.3

Algorithm 2 solves the pricing subproblem in \(O(n_An_B\max \{n_A,n_B\}P)\) time.

Proof

Given that Algorithm 2 exploits the structural properties developed in Lemmas 3.1 and 3.2 as well as compares the costs of all possible state transitions, this algorithm can generate the optimal schedules for the pricing subproblem. We now analyze the time complexity of the algorithm. The two sorting algorithms take \(O(n_A\log n_A)\) and \(O(n_B\log n_B)\) times, respectively. The number of different pairs of \(\{j,k,t,r\}\) is upper bounded by \(O(n_An_BnP)\), because j, k, t, and r have there are at most \(n_A+1\), \(n_B+1\), \(P+1\), and \(n+1\) at most, respectively. Thus, the overall time complexity of Algorithm 2 is indeed \(O(n_An_B\max \{n_A,n_B\}P)\). \(\square \)

When applying Algorithm 2 to solve the pricing subproblem at the root node of the searching tree of the branch-and-bound, we can drop the variable r because no job-position restrictions are imposed on the pricing subproblem at the root node. Moreover, in this special case, the time complexity reduces to \(O(n_An_BP)\).

3.3 In-out column generation implementation

This subsection presents a stepwise description of our iterative in-out column generation algorithm for solving the current RMP.

The main idea of the in-out column generation algorithm is as follows: define \((\pi ^{\mathrm{in}},\rho ^{\mathrm{in}})\) as a feasible solution to the dual of the linear relaxation of the MP, i.e., constraint set (18) is valid for all \(p\in P^i\), \(i=1,\ldots ,m\), and define \((\pi ^{\mathrm{out}},\rho ^{\mathrm{out}})\) as the dual optimal solution of the current RMP. Here, \((\pi ^{\mathrm{in}},\rho ^{\mathrm{in}})\) and \((\pi ^{\mathrm{out}},\rho ^{\mathrm{out}})\) denote the dual interior and external points, respectively. The new columns are still added to the current RMP based on reduced cost considerations, but this reduced cost is computed by using a dual vector \((\pi ^{\mathrm{sep}},\rho ^{\mathrm{sep}})=\alpha (\pi ^{\mathrm{out}},\rho ^{\mathrm{out}})+(1-\alpha )(\pi ^{\mathrm{in}},\rho ^{\mathrm{in}})\) instead of \((\pi ^{\mathrm{out}},\rho ^{\mathrm{out}})\), where \(\alpha \in (0,1]\) is a real valued scalar. The columns added in this way are expected to be more efficient. When \(\alpha =1\), the in-out column generation scheme reduces to the classical case.

Let \(\bar{z}^{\mathrm{sep}}=\sum \nolimits _{j=1}^{n}\pi _j^{\mathrm{sep}}+\sum \nolimits _{i=1}^{m}\rho _i^{\mathrm{sep}}+\rho _{m+1}^{\mathrm{sep}}V^B\), \(\bar{z}^{\mathrm{out}}=\sum \nolimits _{j=1}^{n}\pi _j^{\mathrm{out}}+\sum \nolimits _{i=1}^{m}\rho _i^{\mathrm{out}}+\rho _{m+1}^{\mathrm{out}}V^B\), and \(c^{i*}=\min \Big \{0;\hat{c}^i_p-\)\(\sum \nolimits _{j=1}^{n}\pi _j^{\mathrm{sep}}\hat{x}^i_{pj}-\rho _i^{\mathrm{sep}}|p\in P_i\Big \}\). We then obtain the following bounds in each iteration at the root node:

Lemma 3.4

Let \(z^{*}_{\mathrm{LRMP}}\) be the optimal objective function value of the linear relaxation of the MP. Then, \(\bar{z}^{\mathrm{sep}}+\sum \nolimits _{i=1}^{m}c^{i*}\le z^{*}_{\mathrm{LRMP}}\le \bar{z}^{\mathrm{out}}\).

Proof

See “Appendix”. \(\square \)

Given that \(z^{*}_{\mathrm{LRMP}}\le z^{*}\), where \(z^{*}\) is the optimal objective function value of the MP, \(\bar{z}^{\mathrm{sep}}+\sum \nolimits _{i=1}^{m}c^{i*}\) is also a lower bound on \(z^{*}\). In general, \(\bar{z}^{\mathrm{out}}\) is not a valid upper bound on \(z^{*}\) except if the current master variables satisfy the integrity constraint.

Unlike the seminal in-out column generation algorithm developed in Ben-Ameur and Neto (2007), we dynamically update the lower bound in the iteration process. The lower bound may be used to prove the optimality of the current best increment or fathom the current node of the branch-and-bound tree. If \(z_U-z_L<1\), where \(z_L\) and \(z_U\) are the current lower and upper bounds, respectively, then we can stop the in-out column generation algorithm. In this case, we find a provably optimal solution for the problem under consideration. Moreover, if \(\lceil z_L\rceil \ge z_U\), then we can fathom the current node because exploring such node cannot lead to an optimal solution. The whole process of the in-out column generation algorithm can be descried by Algorithm 3. This algorithm terminates if the gap between the objective function values of the dual interior and exterior points is inferior to a precision \(\varepsilon \).

4 Branch-and-price implementation

In-out column generation terminates with a relaxed solution to the MP. If the solution is considered integral, then it is optimal; otherwise, branch-and-bound is used to find integer solutions. The resulting branch-and-price algorithm is illustrated as follows. The major components of this algorithm, such as how to branch, how to construct a feasible solution from the farcical solution of the RMP and how a node is selected from the pool of active nodes, are discussed in detail in the following subsections.

4.1 Branching strategy

The branching rule should be easily integrable into the in-out column generation scheme. To this end, we branch on the original variables than on the master variables. To be more precise, after exploring a branch-and-bound node such as node l, if the solution \((\widetilde{\lambda },\widetilde{U})\) of the corresponding RMP is fractional and the corresponding solution value is not larger than \(z_U-1\), then the corresponding x-variable solution is computed by using Eq. (16). Based on this original variable solution (x, U), which must be fractional, the appropriate fractional variables to be branched on next are selected by following the generalized upper bound branching strategy developed in Wolsey (1998).

For ease of presentation, we let \(x_{j,n+1}^{i}=U_j\), \(i=1,\ldots ,m\), \(j=n_A+1,\ldots ,n\) and define \(G_j^i=\{r:0<x_{jr}^i<1,1\le r\le n\}\) for \(i=1,\ldots ,m\), \(j=1,\ldots ,n_A\) and \(G_j^i=\{r:0<x_{jr}^i<1,1\le r\le n+1\}\) for \(i=1,\ldots ,m\), \(j=n_A+1,\ldots ,n\). The standard branching rule is to split the branch-and-bound tree into one partition with \(x_{jr}^i=0\) and another partition with \(x_{jr}^i=1\) for some fractional variable \(x_{jr}^i\). However, this will lead to an unbalanced tree, because nonzero variables have roughly \(n-1\) possibilities in \(G_j^i\) in one branch and have only one possibility in another branch. Instead, we search for the \(G_j^i\) that is most fractional, i.e., we search for the i and j indices which \(\sum \nolimits _{r\in G_j^i} x_{jr}^i\) is closest to 0.5. Given such \(G_j^i\), we must consider the following cases:

-

(i)

There are at least two elements in \(G_j^i\). In this case, we let \(\sigma (1),\sigma (2),\ldots ,\sigma (a)\) denote some ordering of the values of \(x_{jr}^i\), \(r\in G_j^i\) to branch (to keep these two nodes balanced, we use the \(\wedge \)-shaped order in our implementation). We can create node \(l_1\) with \(x_{jr_{\sigma (k)}}^{i}=0, k=1,\ldots ,b\) and node \(l_2\) with \(x_{jr_{\sigma (k)}}^{i}=0, k=b+1,\ldots ,a\), where \(b=\min \left\{ k: \sum \limits _{v=1}^kx_{j,r_{\sigma (v)}}^{i}\ge 1/2\sum _r x_{jr}^i\right\} \).

-

(ii)

There is only one element \(x_{jr}^i\) in \(G_j^i\). We can create one branch with \(x_{jr}^{i}=0\) and another branch with \(x_{jr}^{i}=1\).

This branch strategy creates a more balanced tree and can greatly improve the solution time (Wolsey 1998). In what follows, we describe how to set the job-position restriction sets \(\chi _{(j,i)}\) for node \(l_1\), which we denote as \(\chi _{(j,i)}^{l_1}\). The case for node \(l_2\) is analogous.

If a variable \(x_{jr}^i\), \(i=1,\ldots ,m, j,r=1,\ldots ,n\), is fixed to 0 at node \(l_1\), then the initial RMP of node \(l_1\) includes all columns of node l except for the ones in which job \(J_j\) is scheduled at position r on machine \(M_i\). At the same time, the job-position restrictions are updated such that \(\chi _{(j,i)}^{l_1}=\chi _{(j,i)}^{l}{\setminus } \{r\}\), \(\chi _{(j,i')}^{l_1}=\chi _{(j,i')}^{l}\) (\(i'\ne i\)) and \(\chi _{(j',i')}^{l_1}=\chi _{(j',i')}^{l}\) (\(i'=1,\ldots ,n\), \(j'\ne j\)), thereby guaranteeing that no feasible partial schedule is generated when job \(J_j\) is scheduled at position r on machine \(M_i\). However, if a variable \(x_{jr}^i\), \(i=1,\ldots ,m, j,r=1,\ldots ,n\), is fixed to 1 at node \(l_1\), then job \(J_j\) must be scheduled at position r on machine \(M_i\). However, this restriction cannot be enforced efficiently in the pricing subproblem. Therefore, we modify the subproblem by imposing the restrictions \(x_{jr'}^{i'}=0\) (\(i'\ne i\), \(r'=1,\ldots ,n\)) and \(x_{jr'}^{i}=0\) (\(r'\ne r\)). In this case, the initial RMP of node \(l_1\) includes all columns of node l except those in which job \(J_j\) is not scheduled at position r on machine \(M_i\). At the same time, the job-position restrictions are updated such that \(\chi _{(j,i)}^{l_1}= \{r\}\), \(\chi _{(j,i')}^{l_1}=\emptyset \) (\(i'\ne i\)) and \(\chi _{(j',i')}^{l_1}=\chi _{(j',i')}^{l}\) (\(i'=1,\ldots ,m, j'\ne j\)), thereby guaranteeing that no feasible partial schedule is generated when job \(J_j\) is not scheduled at position r on machine \(M_i\).

If a variable \(U_j\), i.e., \(x_{j,n+1}^{i}\), \(j=n_A+1,\ldots ,n\), is fixed to 0 at node \(l_1\), then the initial RMP of node \(l_1\) includes all columns of node l except those in which job \(J_j\) is scheduled as tardy. At the same time, the job-position restrictions are updated such that \(\chi _{(j',i')}^{l_1}=\chi _{(j',i')}^{l}\) (\(i'=1,\ldots ,m\),\(j'=1,\ldots ,n\)). However, if a variable \(U_j\), is fixed to 1 at node \(l_1\), then the initial RMP of node \(l_1\) includes all columns of node l except those in which job \(J_j\) is scheduled as early. The job-position restrictions are updated such that \(\chi _{(j,i')}^{l_1}=\emptyset \) (\(i'=1,\ldots ,m)\) and \(\chi _{(j',i')}^{l_1}=\chi _{(j',i')}^{l}\) (\(i'=1,\ldots ,m, j'\ne j\)).

4.2 Constructing a feasible solution

The use of quick heuristic methods is crucial in the branch-and-bound method, especially for identifying feasible integer solutions that can be used to prune nodes. However, note that, unless the optimal solution to the RMP is integral, the solution provided by the in-out column generation algorithm is not feasible for the master problem, and the corresponding solution for the original mixed integer programming model is also infeasible. This subsection develops two heuristic methods to construct a “good” feasible solution based on the optimal solution of the current RMP, and then proposes a local optimization method to improve this solution.

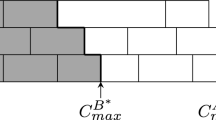

When exploring a branch-and-bound tree, two solutions are typically at our disposal. One is the current incumbent \(\overline{\kappa }=(\overline{\lambda },\overline{U})\) that is feasible in relation to the integrality constraints but has a possibly high objective function value, while the other is the solution of the current RMP at the current node \(\widetilde{\kappa }=(\widetilde{\lambda },\widetilde{U})\) that is not integral but has a possibly low objective function value.

The main idea of the first heuristic is to collect all columns corresponding to the master variables in \(\overline{\kappa }\) which values are equal to 1 in addition to those columns in the current RMP. The resulting RMP with integrity constraints is referred to as a sub-ILP that is solved by an MIP solver without any further column generation, thereby increasing the chances of obtaining higher quality feasible solutions. The resulting sub-ILP may also be potentially large and difficult to solve, so we truncate its exploration by setting a node limit nl. Any integer solution found in the sub-ILP is also a solution to the global master problem.

The second heuristic relies on a simple residual rounding heuristic, which can be briefly described as follows. Assume that we have a fractional solution \(\widetilde{\kappa }=(\widetilde{\lambda },\widetilde{U})\). We set the fractional value to 1 for those components which are already taking a value of 1 or close 1. When a fractional variable is fixed at 1, we update the job-position restrictions accordingly. Specifically, when a variable \(\widetilde{\lambda }_{p}^i\) is fixed at 1, the partial schedule \(\hat{x}_{p}^i\) is covered in the optimal schedule. Let \(J_{p}^i=\{j|\hat{x}_{pj}^i=1\}\) and r(j) denote the scheduled position of job \(J_j\) in \(\hat{x}_{p}^i\). For each \(j\in J_{p}^i\), we update \(\chi _{(j,i')}\): \(\chi _{(j,i')}=\{r\}\) if \(i'=i\). Otherwise, we update \(\chi _{(j,i')}=\emptyset \). We then delete the columns in which job \(J_j\) is not scheduled at position r on machine \(M_i\). When \(\widetilde{U}_{j}\) is fixed at 1, we update \(\chi _{(j,i)}\): \(\chi _{(j,i)}=\emptyset \), \(i=1,\ldots ,n\). We then delete the columns in which job \(J_j\) is scheduled on time. The resulting problem, called residual RMP, is solved again by using the developed in-out column generation algorithm. This process is repeated until an integer solution is obtained, when the residual RMP is considered infeasible, or when no feasible (integer) solution can be found by the heuristic. Afterward, if the algorithm returns that the residual RMP is infeasible, we fix the next fractional variable which value is closest to 1 and then solve the resulting residual RMP.

We experiment with the running times of the two heuristics on our test problems and find that the average solution time for the first heuristic ranges from 0.04 to 6 s, which is much shorter compared with the overall running time. Meanwhile, that the second heuristic consumes much time. Based on this observation, we set our algorithm as follows to call the heuristics. The first heuristic is called at every node. If the first heuristic cannot find a better feasible solution at every f node of the branch-and-bound tree, then the second heuristic is called. In case a better feasible solution is found, the local improvement strategy described in Algorithm 4 is applied to improve this solution further.

4.3 Node selection strategy

We explore the search tree by applying a hybrid strategy that combines the depth-first rule [also known as last-in-first-out (LIFO)] and the best-bound rule. This method is computationally inexpensive and can quickly find good feasible solutions. If the current branch-and-bound node is not fathomed, then the depth-first rule is applied such that one of the two son nodes of the current branch-and-bound node is selected as the next node to be solved. If the current branch-and-bound node is fathomed, then the best-bound rule is applied such that the active node with the smallest local lower bound is selected as the next node to be explored.

5 Computational experiments

To assess their performance, we applied the proposed algorithms to solve various sizes of randomly generated problem instances. We implemented the linear programming models inside the branch-and-price algorithm in the YALMIP/CPLEX 12.6 optimization solver and in the dynamic programming algorithms in MATLAB. We performed all computational experiments on a personal computer with 4 GB memory and 3.40-GHz CPU. We generated the data for the computational experiments according to the following scheme.

5.1 Datasets

To the best of our knowledge, no standard test datasets are available for the problem under consideration. Therefore, we adapted an existing standard data setting that was originally set up to test the unrelated parallel machine scheduling with the objective of minimizing the weighted number of tardy jobs (Chen and Powell 1999). This setting has been used in many computational experiments as a benchmark. To create the problems with two competing agents, we randomly assigned one of three numbers \(\lfloor n/3\rfloor \), \(\lfloor n/2\rfloor \) to \(n_A\), which denotes the number of A-jobs, where n is the number of jobs. Different sets of parameter values were generated as follows:

-

(i)

the number of jobs was \(n\in \{10, 20,\ldots ,60,80\}\), while the number of A-jobs was randomly chosen from the set \(\{\lfloor n/3\rfloor , \lfloor n/2\rfloor ,\lfloor 2n/3\rfloor \}\);

-

(ii)

the number of machines was \(m\in \{2,3,4,6,8,10\}\);

-

(iii)

the job processing times \(p_{ij}\) were integers that were uniformly distributed in [1, 100], which is the most commonly used distribution in the parallel machine scheduling literature;

-

(iv)

the job weights of agent B were integers that were uniformly distributed in [1, 100];

-

(v)

the due date of job \(J_j^B\) was set to \(\max \{\alpha _j^B,\beta _q\}\), where \(\alpha _j^B=\min \limits _{i=1,\ldots ,m}p_{ij}^B\) and \(\beta _q\) was an integer that was uniformly distributed in [1, 100r / q], with \(r=n/m\) and \(q\in \{1,\ldots ,5\}\) being a controllable parameter indicating the congestion level of the scheduling system;

-

(vi)

the controllable parameter \(V^B\) was randomly chosen from \(\{\lfloor W^B/3\rfloor , \lfloor W^B/2\rfloor ,\lfloor 2W^B/3\rfloor \}\);

-

(vii)

the controllable parameter \(\alpha \) was chosen from \(\{0.3,0.5,0.7,0.9,0.99,1\}\); and

-

(viii)

the other parameters were set as follows: \(\gamma =10\), \(\xi _1^A= 0.8, \xi _2^A=0.15, \xi _1^B=\xi _2^B=0.4\), \(\xi _3^B=0.15\), \(\delta _1=1,500\), \(\delta _2=2,500\), \(\varepsilon =10^{-6}\), \(nl=1,000\), \(f=3\), and \(\varepsilon =10^{-6}\).

We randomly generated 20 test instances for each problem characterized by the given numbers of jobs and machines.

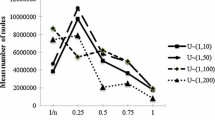

5.2 Computational results

Our experiments were divided into two parts. In the first experiment, we tested the effect of parameter \(\alpha \) in the in-out column generation algorithm on the overall performance of the branch-and-price algorithm. Based on the results of the preliminary computational tests, \(\alpha \) takes six different values, namely, 0.3, 0.5, 0.7, 0.9, 0.99 and 1. The results are presented in Table 1, where “(n, m)” denotes the combination of numbers of jobs and machines, “\(\alpha \)” represents the control parameter, and “Time (s)” measures the average CPU time (in seconds) consumed for each combination of “(n, m)” and “\(\alpha \)”. Table 1 clearly demonstrates that \(\alpha =0.99\) outperforms the other \(\alpha \) values by far. Specifically, the case with \(\alpha =0.99\) outperforms the case with \(\alpha =1\) except when \(m=2\) and \(n=10\). In terms of CPU time, \(\alpha =0.99\) is 40% faster than \(\alpha =1\) for most of the tested instances. Based on this observation, we set \(\alpha =0.99\) in the following experiments.

The second experiment assessed the performance of the proposed branch-and-price algorithm in solving randomly generated problem instances as well as measures the influence of the heuristic that is developed in Sect. 3.1 on such performance. The computational results are presented in Table 2, where column “LP-IP” shows the average percentage gap between the solution value of the linear relaxation of MP at the root node and the optimal objective value, which reflects the tightness of the obtained lower bound. In addition, column “P-R-N” indicates the number of problems (out of 20) that are solved at the root node, i.e., without any branching, column “N-E” shows the average number of nodes explored, column “C-G” show the average number of columns generated, columns “P-T (s)” and “T-T (s)” represent the average CPU time (in seconds) spent looking for columns with negative reduced cost and the average CPU time (in seconds) consumed to solve the problem, respectively, and columns “N-W-HA” and “T-W-HA (s)” present the number of nodes visited and the average CPU time (in seconds) consumed to solve the problem by using the branch-and-price algorithm without the heuristic, respectively. From Table 2, we can make the following observations:

-

(i)

The lower bound given by the solution value of the linear relaxation of the MP that was solved by using the in-out column generation algorithm was extremely close to the optimal solution value. Branching was often not required. Specifically, 54.5% of the 400 tested problems were solved at the root node, respectively. For each set of 20 test problems with the same size, the average gap between the lower bound and the optimal solution value was less than 8%, and this value fell below 0.6% when \(m\ge 6\).

-

(ii)

For a fixed n, the problem becomes easy as m increased in most tested instances. Not only do we need less time to solve the linear relaxation of the MP but also we need slightly less search nodes and tighter lower bounds. Specifically, the gap between the lower bound and the optimal solution value fell below 0.01% in most tested instances when \(m\ge 6\). This phenomenon may be explained by the fact that the more machines involved, the fewer jobs we expected to appear in the optimal partial schedules and the smaller the relevant solution space becomes.

-

(iii)

As m increased, the number of problems solved at the root node decreased. However, the lower bound was more often tight, implying that more optimal solutions were fractional. This assumption is plausible due to the fact that the position of a job in the final feasible schedule was not predetermined when m increased.

-

(iv)

For a fixed m, as n increased, the number of problems solved at the root node decreased, the average number of nodes explored for solving the problem increased, and the average number of columns generated to solve the problem increased in most of the tested instances. These findings indicate that the problem becomes more difficult to solve as n increased. These observations can be partially explained by the fact that the number of optimal fractional solutions increased along with n and that the chance of “hitting” an integral solution decreased accordingly.

-

(v)

The average number of nodes explored to solve the problem was below 6.9, thereby suggesting that our branch-and-price algorithm performs extremely well. This finding also confirms the effectiveness of the upper-bounding, lower-bounding, and branching algorithms.

-

(vi)

The pricing time was responsible for more than 90% of the total execution time, on average, over all instances.

-

(vii)

Our algorithm was able to deal with instances with \(n\le 80\) within two hours, as long as the number of machines was not too small.

-

(viii)

Without embedding the heuristic developed in Sect. 3.1 into the branch-and-price algorithm, this algorithm would visit about twice as many nodes for most of the tested instances, thereby consuming more time. In this case, the heuristic can significantly reduce the overall running time of our branch-and-price algorithm for most of the tested instances.

6 Conclusions

This paper addresses the scheduling problem with two competing agents on m unrelated parallel machines. The objective is to minimize the total completion time of the jobs of one agent while keeping the weighted number of tardy jobs of the other agent at a predetermined level. We show that the problem is NP-hard in the strong sense and develop a branch-and-price algorithm to solve such a problem. A number of enhancements to the standard branch-and-price algorithm are also introduced to accommodate large-scale issues. The experiment results show that the branch-and-price approach is a very effective and powerful solution to our problem.

The main findings of this work are be summarized as follows:

-

(i)

Embedding the in-out column generation scheme into a branch-and-price framework to maintain the stability of dual variables is shown to be a more efficient strategy than the classical column generation scheme.

-

(ii)

By incorporating the desired structural properties into dynamic programming techniques to solve the pricing subproblems, the developed column generation approach provides a low bound that is singularly strong and is able to solve most of the tested instances with only a small number of columns and iterations.

From a modeling standpoint, the following extension can be readily addressed. Sometimes, certain machines may not be able to perform certain jobs. The current model can easily be modified to handle such an extension. Specifically, in constraint sets (11) and (12), “the range of index i from 1 to m” can be changed to “\(i \in M_j\)”, where \(M_j\) denotes the set of machines that can process job \(J_j\). This change does not affect the model structure, and the solution developed in this paper remains valid.

Several issues must be further addressed to enhance the real-life application of our approach. First, given that the pricing subproblem is solved via a pseudo-polynomial time dynamic programming algorithm, the state space at each node can grow exponentially along with the input size. Therefore, for larger instances, the implemented subproblems may not be efficient. Our experiments also show that much of the time has been spent on solving the pricing subproblems. Therefore, a more effective heuristic, instead of an exact algorithm, for solving the pricing subproblems must be designed to save a substantial amount of computational time. Second, several enhancement strategies, such as identifying cuts that can be added to the master problem, must also be developed to improve the performance of the branch-and-price algorithm. Third, hybrid column generation algorithms that combine mathematical programming and constraint logic programming may also be developed. These algorithms have been proven effective in solving intractable combinatorial optimization problems. Future research may also investigate cooperative scheduling games with multiple agents, in which one agent is usually interested in certain structural properties of the game (e.g., the conditions under which a game is balanced or convex).

References

Agnetis, A. (2012). New directions in: Informatics, optimization, logistics, and production. In P. B. Mirchandani, J. C. Smith, & H. J. Greenberg (Eds.), Multiagent scheduling problems, tutorials in operations research (pp. 151–170). Catonsville: INFORMS.

Agnetis, A., Billaut, J. C., Gawiejnowicz, S., Pacciarelli, D., & Soukhal, A. (2014). Multiagent scheduling: Models and algorithms. Heidelberg: Springer.

Agnetis, A., Mirchandani, P. B., Pacciarelli, D., & Pacifici, A. (2004). Scheduling problems with two competing agents. Operations Research, 52, 229–242.

Baker, K. R., & Smith, J. C. (2003). A multiple-criterion model for machine scheduling. Journal of Scheduling, 6, 7–16.

Bard, J. F., & Rojanasoonthon, S. (2006). A branch-and-price algorithm for parallel machine scheduling with time windows and job priorities. Naval Research Logistics, 53, 24–44.

Ben-Ameur, W., & Neto, J. (2007). Acceleration of cutting-plane and column generation algorithms: Applications to network design. Networks, 49(1), 3–17.

Brunner, J. O., Bard, J. F., & Kolisch, R. (2010). Midterm scheduling of physicians with flexible shifts using branch and price. IIE Transactions, 43, 84–109.

Chen, Z. L., & Powell, W. B. (1999). Solving parallel machine scheduling problems by column generation. INFORMS Journal on Computing, 11, 78–94.

Elvikis, D., Hamacher, H. W., & T’kindt, V. (2011). Scheduling two agents on uniform parallel machines with makespan and cost functions. Journal of Scheduling, 14, 471–481.

Gerstl, E., & Mosheiov, G. (2013). Scheduling problems with two competing agents to minimized weighted earliness–tardiness. Computers and Operations Research, 40, 109–116.

Gerstl, E., & Mosheiov, G. (2014). Single machine just-in-time scheduling problems with two competing agents. Naval Research Logistics, 61, 1–16.

Keller, B., & Bayraksan, G. (2009). Scheduling jobs sharing multiple resources under uncertainty: A stochastic programming approach. IIE Transactions, 42, 16–30.

Kovalyov, M. Y., Oulamara, A., & Soukhal, A. (2015). Two-agent scheduling with agent specific batches on an unbounded serial batching machine. Journal of Scheduling, 18, 423–434.

Lee, K., Choi, B.-C., Leung, J. Y. T., & Pinedo, M. L. (2009). Approximation algorithms for multi-agent scheduling to minimize total weighted completion time. Information Processing Letters, 109, 913–917.

Leung, J. Y. T., Pinedo, M., & Wan, G. (2010). Competitive two agents scheduling and its applications. Operations Research, 58(2), 458–469.

Li, S. S., & Yuan, J. J. (2012). Unbounded parallel-batching scheduling with two competitive agents. Journal of Scheduling, 15, 629–640.

Mor, B., & Mosheiov, G. (2010). Scheduling problems with two competing agents to minimize minmax and minsum earliness measures. European Journal of Operational Research, 206, 540–546.

Ng, C., Cheng, T. C. E., & Yuan, J. (2006). A note on the complexity of the problem of two-agent scheduling on a single machine. Journal of Combinatorial Optimization, 12, 387–394.

Ng, T. S. A., & Johnson, E. L. (2008). Production planning with flexible customization using a branch-price-cut method. IIE Transactions, 40, 1198–1210.

Perez-Gonzalez, P., & Framinan, J. M. (2014). A common framework and taxonomy for multicriteria scheduling problem with interfering and competing jobs: Multi-agent scheduling problems. European Journal of Operational Research, 235, 1–16.

Pessoa, A., Uchoa, E., Poggi de Aragao, M., & Rodrigues, R. (2010). Exact algorithm over an arc-time-indexed formulation for parallel machine scheduling problems. Mathematical Programming Computation, 2, 259–290.

Ramacher, R., & Mönch, L. (2016). An automated negotiation approach to solve single machine scheduling problems with interfering job sets. Computers and Industrial Engineering, 99, 317–329.

van den Akker, J. M., Hoogeveen, J. A., & Van de Velde, S. L. (1999). Parallel machine scheduling by column generation. Operations Research, 47, 862–872.

van den Akker, J. M., Hoogeveen, J. A., & van Kempen, J. W. (2012). Using column generation to solve parallel machine scheduling problems with minmax objective functions. Journal of Scheduling, 15, 801–810.

Vanderbeck, F. (2000). Exact algorithm for minimising the number of setups in the one-dimensional cutting stock problem. Operations Research, 48, 915–926.

Wan, G., Vakati, R. S., Leung, J. Y. T., & Pinedo, M. (2010). Scheduling two agents with controllable processing times. European Journal of Operational Research, 205, 528–539.

Wolsey, L. (1998). Integer programming. New York: Wiley.

Yin, Y., Cheng, S. R., Cheng, T. C. E., Wang, D., & Wu, C. C. (2016). Just-in-time scheduling with two competing agents on unrelated parallel machines. Omega, 63, 41–47.

Yin, Y., Wang, D., Wu, C. C., & Cheng, T. C. E. (2016). CON/SLK due date assignment and scheduling on a single machine with two agents. Naval Research Logistics, 63(5), 416–429.

Acknowledgements

We thank the AE and two anonymous referees for their helpful comments on an earlier version of our paper. This paper was supported in part by the National Natural Science Foundation of China (Nos. 11561036, 71501024, 71871148), by Research Grants Council of Hong Kong (T32-102/14-N), by China Postdoctoral Science Foundation (Nos. 2018T110631, 2017M612099), and by Sichuan University (No. 2018hhs-47).

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

1.1 A.1 The complexity of the problem \(P||\sum _{j=1}^{n^A}C_j^A:\sum _{k=1}^{n^B}w_k^BU_k^B\)

We show that the problem under consideration is \({\mathcal {NP}}\)-hard in the strong sense even for the case of identical parallel machines, i.e., \(p_{ij}^A=p_j^A\) and \(p_{ik}^B=p_k^B\) for \(i=1,\ldots ,m,j=1,\ldots ,n_A,k=1,\ldots ,n_B\), which is denoted by \(P||\sum _{j=1}^{n^A}C_j^A:\sum _{k=1}^{n^B}w_k^BU_k^B\) via a reduction from 3-PARTITION.

3-PARTITION: Given an integer E, a finite set A of 3r positive integers \(a_{j}\), \(j=1,\ldots ,3r\), such that \(E/4<a_j<E/2\) for \(j=1,\ldots ,3r\), and \(\sum \nolimits _{j=1}^{3r}a_j=rE\), can the set \(I=\{1,\ldots ,3r\}\) be partitioned into r disjoint subsets \(I_1,\ldots ,I_r\) such that \(\sum \nolimits _{j\in I_h}a_j=E\) for \(h=1,\ldots ,r\)?

Given an instance of 3-PARTITION, consider the following instance of the schedule problem \(P||\sum _{j=1}^{n^A}C_j^A\le V^A,\sum _{k=1}^{n^B}w_k^BU_k^B\le V^B\) with 4r jobs and r machines. Specifically, agent A has \(n_A=3r\) jobs, which are associated with processing times \(p_{j}^A =a_j\) for \(j = 1,\ldots , 3r\). Meanwhile, agent B has r jobs, which are associated with processing times \(p_{k}^B=3rE+1\), weights \(w_{k}^B=1\) and due dates \(d_{k}^B=(3r+1)E+1\) for \(k= 1,\ldots , r\). The thresholds \(V^A =3rE\) and \(V^B =0\) are set.

Assume that the 3-PARTITION instance has a solution, and let us prove that there exists a feasible schedule for the constructed instance of the schedule problem. Let \(A_h\), \(h=1,\ldots ,r\), be the set of A-jobs corresponding to the set \(I_h\) of the solution for the 3-PARTITION instance. Consider a schedule in which the jobs in \(A_h\), \(h=1,\ldots ,r\), are scheduled on machine \(M_h\) in the first E time units, followed by the B-job \(J_h^B\). In this schedule, the completion time of each A-job is at most E, and each B-job is completed exactly on its due date. Consequently, the total completion time of A-jobs is at most 3rE, while the weighted number of tardy B-jobs is exactly equal to zero. Therefore the obtained schedule is feasible for the constructed instance of the schedule problem.

Conversely, if there exists a feasible schedule for the constructed instance of the schedule problem, then the 3-PARTITION instance has a solution. For this schedule, \(I_h\), \(h=1,\ldots ,r\) denotes the set of indices of the A-jobs that are scheduled on machine \(M_h\). The following assertions hold:

-

(1)

There is exactly one B-job scheduled on each machine. Otherwise, there exists at least one tardy B-job assigned on some machine. Therefore, the weighted number of tardy B-jobs will be larger than \(V^B\).

-

(2)

The A-jobs are scheduled on each machine before the B-job. Otherwise, the completion time of the A-job scheduled after the B-job is after time \(3rE+1\), which is larger than \(V^A\).

-

(3)

The disjoint subsets \(I_1,\ldots ,I_r\) form a partition of set I such that \(\sum \nolimits _{j\in I_h}a_j=E\) for \(h=1,\ldots ,r\). The disjoint subsets \(I_1,\ldots ,I_r\) are partitions of set I. By combing (1) and (2), we conclude that all B-jobs are completed exactly on their due dates, say \((3r+1)E+1\), thereby implying that \(\sum \nolimits _{j\in I_h}p_j^A=\sum \nolimits _{j\in I_h}a_j=E\) for \(h=1,\ldots ,r\).

In sum, there is a solution to the 3-PARTITION instance.

1.2 A.2 The proof that the ith pricing subproblem is \({\mathcal {NP}}\)-hard even if there are no job-ordering restrictions

We prove the above assumption through a reduction from 2-PARTITION. For ease of presentation, we drop the index i.

2-PARTITION: Given an integer E, a finite set A of 2r positive integers \(a_{j}\), \(j=1,\ldots ,2r\), such that \(\sum \nolimits _{j=1}^{2r}a_j=2E\), can the set \(I=\{1,\ldots ,2r\}\) be partitioned into two disjoint subsets \(I_1\) and \(I_2\), such that \(\sum \nolimits _{j\in I_h}a_j=E\) for \(h=1,2\)?

Given an instance of 2-PARTITION, consider the following instance of the decision version of the pricing subproblem with \(2r+1\) jobs and threshold U. To be more specific, agent A has one job, which is associated with a processing time \(p^A_1=1\) and a dual variable value \(\pi _1=-E\). Agent B has 2r jobs, which are associated with processing times \(p_k^B=a_k\), due dates \(d_k^B=d^B=E\), and dual variable values \(\pi _{k+1}=a_k\) for \(k=1,\ldots ,2r\), where the dual variable \(\pi _{k+1}\) corresponds to job \(J_k^B\). Set the threshold \(U=-E\) and the dual variable value \(\rho =0\).

Assume that the 2-PARTITION instance has a solution, and let us prove that there exists a feasible solution for the constructed instance of the decision version of the pricing subproblem. Let \(B_1\) be the set of B-jobs corresponding to the set \(I_1\) of the solution for the 2-PARTITION instance. Consider a schedule in which only the jobs in \(B_1\) are scheduled on the machine. In this schedule, each scheduled B-job is completed on time, and the reduced cost of this partial schedule p is \(r_p=-\sum \nolimits _{j\in I_1}\pi _{j+1}=-\sum \nolimits _{j\in I_1}a_{j}=-E\). Consequently, the obtained schedule is feasible for the constructed instance of the decision version of the pricing subproblem.

Conversely, we show that if there exists a feasible partial schedule p for the constructed instance of the decision version of the pricing subproblem, then the 2-PARTITION instance has a solution. For this schedule, \(I_1\) denotes the set of indices of the B-jobs that are scheduled on the machine. The following assertions hold:

-

(1)

The A-job is not scheduled on the machine. Otherwise, the reduced cost of this partial schedule is \(r_p=C_1^A-\pi _1-\sum \nolimits _{j\in I_1}\pi _{j+1}\ge 1+E-2E>-E=U\).

-

(2)

\(\sum \nolimits _{j\in I_1}a_j=E\). Given that the B-jobs that are covered in this partial schedule are scheduled on time, by following (1), we have \(\sum \nolimits _{j\in I_1}p_j^B=\sum \nolimits _{j\in I_1}a_j\le d^B=E\). Meanwhile, given that this partial schedule is feasible, we have \(r_p=-\sum \nolimits _{j\in I_1}\pi _{j+1}=-\sum \nolimits _{j\in I_1}a_j\le -U=-E\). Consequently, \(\sum \nolimits _{j\in I_1}a_j=E\).

In sum, a solution for the 2-PARTITION instance exists. \(\square \)

1.3 A.3 Proof of Lemma 3.4

Clearly, \(z^{*}_{\mathrm{LRMP}}\le \bar{z}^{\mathrm{out}}\). Let \(\kappa ^{*}=(\lambda ^{*},U^{*})\) be an optimal solution to the linear programming relaxation of the MP. Thus, we have

where the second inequality follows from \(c^{i*}\le 0\), \(\rho _i^{\mathrm{sep}}\le 0\) and constraint set (13), the third inequality follows from constraint set (19), and the last inequality follows from \(\rho _{m+1}\le 0\) and constraint set (14). \(\square \)

Rights and permissions

About this article

Cite this article

Yin, Y., Chen, Y., Qin, K. et al. Two-agent scheduling on unrelated parallel machines with total completion time and weighted number of tardy jobs criteria. J Sched 22, 315–333 (2019). https://doi.org/10.1007/s10951-018-0583-z

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10951-018-0583-z