Abstract

Objectives

Estimate the frequency of self-reported factual innocence in non-capital cases within a state population of prisoners.

Methods

We conducted a survey of a population sample of state prisoners who were asked to anonymously report their involvement in the crimes for which they were most recently convicted. To assess the validity of verifiable responses, prisoner self-report data were compared to aggregate conviction and demographic information derived from administrative records. To assess the validity of unverifiable responses, we developed a non-parametric test to estimate the probability of false innocence claims.

Results

We estimate that wrongful convictions occur in 6% of criminal convictions leading to imprisonment in an intake population of state prisoners. This estimate masks a considerable degree of conviction-specific variability ranging from a low of 2% in DUI convictions to a high of 40% in rape convictions. Implausible or false innocence claims are estimated to occur in 2% of cases.

Conclusions

The present investigation demonstrates that survey methods can provide bounded estimates of factual innocence claims within a discrete and known population. The resulting estimates, the first to formally separate claims of legal and factual innocence and to incorporate a formal measure of response plausibility, suggest that prisoners themselves are very often willing to self-report the correctness of their convictions. At the same time, a considerable minority indicate that procedural weaknesses in the administration of justice occurred in their cases. And a distinct minority, with considerable variation by offense, maintain that they are completely innocent of the charges against them.

Introduction

Each year U.S. criminal courts produce over 1 million felony convictions, with just under half of these convictions resulting in imprisonment (Rosenmerkel et al. 2010). While the criminal justice system provides many legal protections to the accused, wrongful convictions do occur, and scholars have long recognized the need for a measure of their prevalence as a first step in understanding, and ultimately remedying, their underlying causes (Borchard 1932; Lustgarten 1950; Gardner 1952; Frank 1957; Radin 1964; Bedau and Radelet 1987; Gross 1998; Poveda 2001; Spencer 2007; Gould and Leo 2010; Gross 2013). Legal exonerations in recent years provide one basis for estimating this number. The rate of exonerations suggests that between 2 and 5% of capital or otherwise serious cases are wrongful convictions (Bedau and Radelet 1987; Risinger 2007; Gross and O’Brien 2008; Gross et al. 2014). However, there are reasons to believe that the measurement of wrongful convictions in capital and non-capital cases merits further scholarly effort.

First, estimates of the frequency of wrongful convictions in capital cases are the result of considerable effort to prove to a legal authority that individuals have been erroneously convicted. This often requires meeting a very high burden of proof, and because a legal outcome (i.e., exoneration) serves as the basis for counting wrongful convictions, an unknown number of erroneous convictions that cannot meet this burden have been left uncounted. This risk is even greater in the vast majority of criminal cases, which are not subject to the vigorous post-conviction relief efforts observed in many capital cases (e.g., murder, rape), leaving open the question of whether these findings reflect what is happening in non-capital criminal convictions. Second, because the observed frequency of wrongful convictions is the starting point for exploring their predictors, the absence of a prevalence measure in the non-capital caseload makes it difficult to know whether the causes of wrongful convictions in this broader population are shared with those in capital cases. And finally, without a measure of wrongful convictions in non-capital case populations, it is currently unknown whether proposed remedies for errors leading to exonerations will be effective in reducing the number and causes of wrongful convictions in cases unlikely to lead to exonerations.

Previous attempts to measure the frequency of wrongful convictions in non-capital criminal cases have taken a number of different forms. Scholars have surveyed criminal justice practitioners, asking them to report their perception of the prevalence of wrongful convictions in their experience, under the assumption that practitioner experience might provide some insight into a quantity previously thought to be unmeasurable (Ramsey and Frank 2007; Zalman et al. 2008). Other scholars have analyzed judge-jury agreement studies to estimate how often a judge believes that a jury erred in convicting a defendant (Gastwirth and Sinclair 1998; Poveda 2001; Spencer 2007). Finally, scholars have proposed surveying convicted persons directly to gather their self-assessments of their involvement in the crimes for which they were convicted (Blumberg 1967; Peterson et al. 1980; Poveda 2001). All of these diverse methodologies have generated broadly consistent prevalence estimates in the low single-digit to low double-digit ranges. However, three empirical challenges of these survey methodologies remain only partially resolved. First, surveys of criminal justice practitioners have not asked respondents to estimate the likelihood of errors in discrete populations of cases with which they have had personal experience; instead, respondents have been asked to state their belief about the error rate for the criminal justice system as a whole, making their answers difficult to interpret and to generalize to specific populations of cases. Second, surveys of judges (in judge-jury agreement studies) and surveys of convicted persons have yet to establish exactly what respondents mean when they indicate that someone has been erroneously or wrongfully convicted.
Finally, in the context of a self-report measure, the relationship between self-reported innocence and actual innocence has not been fully established, just as the relationship between practitioner perceptions of wrongful conviction rates and actual wrongful conviction rates has not.

In order to begin to address these limitations of the existing empirical literature, this paper reports the results of a new self-report data collection effort designed to estimate the prevalence of wrongful convictions in a population sample of state-level prisoners. Prison populations, unlike capital case populations, include a much wider variety of conviction offenses, spanning lower-seriousness crimes such as larceny, forgery, and drug possession up to higher-seriousness crimes such as murder, rape, armed robbery, and aggravated assault. To achieve representativeness of a criminal justice population of convicted persons, the Criminal Justice Experience Survey (CJES) was administered to the intake population of convicted and imprisoned individuals in a state correctional system. To assess the meaning of self-reported claims of wrongful conviction and actual innocence among this population, the CJES developed and fielded a multi-part questionnaire that situates claims of wrongful conviction within a larger distribution of respondent legal grievances with the criminal justice system. Finally, to assess the plausibility of innocence claims, a new method of estimating response bias for sensitive questions was developed and implemented within the survey.

Based on the results of this survey administration, we provide estimates of how often individuals are likely to be wrongfully imprisoned and, by extension, how often wrongful convictions are likely to occur in less serious but more common criminal cases, an area of increasing scholarly interest (Natapoff 2012, 2015; National Registry of Exonerations 2015; Gross 2015). We also demonstrate that this newly developed instrument produces estimates of wrongful convictions among prisoners that are both consistent with past estimates and robust to previously unaddressed concerns of representativeness and response bias. The resulting estimates indicate that, in a general population of prisoners, two-thirds of prisoners self-report being correctly convicted and a third self-report being wrongfully convicted, with 8% making a claim of factual innocence and 6% making a consistent and plausible claim. We consider this last figure to be an upper bound on the rate of factual innocence within this population; it aggregates offense-specific point estimates ranging from a low of 2% in DUI convictions to a high of 40% in rape convictions.

Estimating the Frequency of Wrongful Convictions

Legal scholars have been interested in the problem of wrongful convictions since at least the 1930s. Early research in this area sought to catalogue instances in which legal verdicts were called into question after substantial new post-conviction evidence emerged (Borchard 1932; Lustgarten 1950; Gardner 1952; Frank 1957). These studies, often written by legal scholars and journalists, examined the particular procedural paths by which those subsequently determined to be innocent were convicted in the first place—frequently focusing on perpetrator misidentification, ineffective counsel or even the reappearance of missing persons thought to have been murdered (Leo 2005). Since demonstrating the occurrence and features of wrongful convictions was their primary focus, studies in this sub-literature rarely considered how representative their samples were, how often less egregious errors might be missed, or how to go about placing a relevant denominator underneath the numerator formed by their catalogues of catastrophic errors. Instead, the majority of these studies, as well as their more recent counterparts, have principally focused their attention on describing notable case features, their relative frequency, and their potential role as contributing causes of wrongful convictions (Borchard 1932; Lustgarten 1950; Gardner 1952; Frank 1957; Radin 1964; Macnamara 1969; Brandon and Davies 1973; Rattner 1988; Garrett 2008, 2011). It is only relatively recently that scholars have begun to use these samples of observed and legally corrected errors to estimate the frequency of wrongful convictions based on the subset of these cases for which the legal system has a developed infrastructure for investigating claims of innocence, most often capital or otherwise serious cases involving rape and murder.

Like their cataloging counterparts, including modern studies examining the predictors of wrongful convictions (see, for example, Gross and O’Brien 2008; Garrett 2011; Gould et al. 2014), wrongful conviction prevalence studies often begin by recording instances in which individuals convicted of capital or other serious crimes have had their convictions overturned or otherwise been exonerated by the legal process (Bedau and Radelet 1987; Radelet et al. 1996; Gross 1998; Gross and O’Brien 2008). However, prevalence studies depart from the cataloging sub-literature in two key respects. First, due to improvements in information technology, as well as the creation of organizations devoted to litigating and identifying these cases (Dwyer 2000; Gross 2016), the search for cases is likely to be much more systematic than those conducted earlier in the twentieth century. While networks of like-minded lawyers and colleagues still play a role in facilitating the collection of cases, database searches of case management and legal news information systems by permanently staffed organizations almost certainly produce much more comprehensive coverage of exonerations and other findings of innocence within covered legal systems. Second, scholars working in this area have sought to solve the denominator problem by focusing their attention on capital or other serious cases, whose frequency, and to a lesser extent whose features, are understood. Since most exonerations happen in this subset of cases, counting the total number of capital or otherwise serious cases that could give rise to a rightful or wrongful conviction makes it possible to measure the prevalence of this phenomenon.

Within the population of capital and serious cases, wrongful conviction rates have been estimated to be between 2.3% and 5%. At the low end of this range, Gross and O’Brien report that wrongful convictions, in which factual innocence was later uncovered, occurred in an estimated 2.3% of death penalty cases (Gross and O’Brien 2008). A more recent estimate from Gross and colleagues puts the number closer to 4.1%, after adjusting for censoring in persons executed or otherwise removed from death row prior to either their exoneration or the exhaustion of all legal proceedings (Gross et al. 2014). Risinger (2007) offers a similar, if slightly higher, estimate derived from a sample of capital murder and rape cases (3–5%). Lastly, Roman and colleagues, examining a sample of rape cases with previously untested DNA, estimate that the testing would have produced exculpatory evidence for individuals convicted of rape in 3% of cases (Roman et al. 2012).

While these estimates provide an empirical starting point for considering the problem of wrongful convictions, several of their features suggest the need for additional inquiries. Virtually all of these studies rely on a legal finding of innocence as their measure of wrongful conviction.Footnote 1 This necessarily excludes from consideration any cases where the quality of exculpatory evidence or the vigor of post-conviction relief efforts is insufficient to exonerate or otherwise clear an erroneously convicted person. In addition, as Gross (2016) has noted, “[w]e have reliable statistical evidence that the rate of false convictions among death sentences in the United States is about 4%, but we don’t have comparable information about non-capital convictions.”Footnote 2 The reasons for this lack of comparable information are threefold. First, it is unlikely that the capital case or the exonerated populations are representative of either the prison population or the broader population of individuals with criminal convictions (Brandon and Davies 1973; Garrett 2011). In 2013, capital cases represented only 0.2% of all prisoners in state and federal custody (Carson 2014; Snell 2014). They composed an even smaller fraction (0.02%) of the admissions to prisons in the same year, reflecting the secular decline in the use of the death penalty in the United States (Hood and Hoyle 2015; Garrett 2017). In addition, due to differing legal requirements and an active advocacy community for death penalty and life imprisonment cases, the commitment of legal resources to these cases far exceeds what is available in non-capital cases. Finally, the analysis of DNA evidence, which has played an important role in detecting errors in capital cases, is less likely to be a factor in non-capital cases, where forensics have historically played a much smaller role (Peterson et al. 1987; Roman et al. 2009; Peterson et al. 2013). 
As a result, factual and legal errors are much more likely to be discovered in capital cases than in non-capital cases (Gross 2013). This confluence of trends, with death penalty cases declining as a percentage of all convictions and wrongful convictions becoming less identifiable in non-capital cases, has produced an expanding dark figure of wrongful convictions.

Social scientists have made a number of attempts to quantify this dark figure. They include surveys of criminal justice practitioners to ascertain their estimates of the frequency of wrongful convictions (Huff et al. 1986; Ramsey and Frank 2007; Zalman et al. 2008). In the earliest such survey, Huff and colleagues estimated that the probability of a wrongful conviction in the United States was approximately 0.5% (Huff et al. 1986). Subsequent replications by others have put the number at between 1 and 3% (Ramsey and Frank 2007; Zalman et al. 2008) and extended this approach to citizen estimates (Zalman et al. 2012). The use of convenience samples of criminal justice practitioners, coupled with the challenge of determining exactly what and whose experiences respondents are drawing upon when they state their beliefs regarding the frequency of wrongful convictions, makes it difficult to know to what population of cases, in what jurisdictions, and over what years these estimates apply.

An alternative methodology would be to survey criminal justice practitioners regarding their most recent case. This would allow for an estimate over a known population of cases during a specific time period. A version of this approach has been tried with judges overseeing jury trials. Using this methodology, scholars have queried the judiciary, typically asking judges how often they would have acquitted defendants in criminal jury trials in which juries voted to convict (Gastwirth and Sinclair 1998; Poveda 2001; Spencer 2007). The resulting estimates, which have run as high as 10% of cases, leverage the fact that judges are present for the fact-finding phases of the trials over which they preside. This methodology rests upon the twin assumptions that judges are more accurate fact-finders than juries, likely due to a combination of training and experience, and that all errors can be detected by fact-finders. Neither of these assumptions has yet been directly tested. Moreover, beyond the issue of relative or absolute fact-finding accuracy, jury trials account for a small (4%) and diminishing share of felony convictions (Rosenmerkel et al. 2010; Weiser 2016), limiting the ongoing utility of this particular estimator.

Finally, surveys of convicted felons have been intermittently conducted (Blumberg 1967; Peterson et al. 1980) and criminologists have used survey methods to gather information on criminal acts for nearly 100 years.Footnote 3 Beginning with Robison (1936), if not earlier, researchers have attempted to understand the characteristics of delinquent and criminal behavior among adolescents and young adults using social surveys (Murphy et al. 1946; Nye and Short 1957, 1959; Reiss and Rhodes 1961; Erickson and Empey 1963; Hirschi 1969; Gold 1970). While many of these surveys captured a large volume of seemingly innocuous behavior (e.g., smoking, curfew violations, petty theft), they also demonstrated that respondents would report both deviant and illegal acts, including quite serious ones (e.g., assaults, robberies). More recently, scholars have adapted this methodology to study the predictors and frequency of false confessions among the mentally ill, juveniles, and adult prisoners (Gudjonsson and Sigurdsson 1994; Sigurdsson and Gudjonsson 1996; Gudjonsson et al. 2006; Redlich et al. 2010).

The challenges inherent in validating self-reported measures of delinquency and offending against official records have long been recognized (Hindelang et al. 1979; Cernkovich et al. 1985; Huizinga and Elliott 1986). Although no measurement is perfect, the reliability and validity of self-report data have been subjected to high levels of scrutiny, especially for behaviors not widely recognized as acceptable, including offending (Thornberry and Krohn 2000).Footnote 4 When self-reported delinquency is compared to matched official records of police contacts, high degrees of correspondence have been observed, ranging from a 68% correlation between YLS data and self-reports (Huizinga and Elliott 1986), to a 78% correlation with official data (Hardt and Peterson-Hardt 1977), to 94% agreement between self- and peer-reports of delinquency (Farrington 1977). More recent work reinforces the robust relationship between self-reports and official records, but offers caveats regarding variation by offense history, race, gender and age (Kirk 2006; Piquero et al. 2014).

Beginning with Blumberg (1967), scholars have queried convicted persons directly to gauge their satisfaction or agreement with the process that led to their conviction. By asking inmates or other convicted persons to report whether they committed the crimes for which they were convicted, these surveys theoretically allow researchers to measure factual innocence.Footnote 5 Peterson, Braiker and Polich (1980), in the first RAND Inmate Study, reported that 13% of inmates failed to report committing any of the 11 most common commitment crimes about which they were surveyed (Peterson et al. 1980). Because this measure likely included some respondents who were incarcerated for crimes beyond this list or who otherwise did not believe that their commitment conviction reflected their level of involvement, the authors adjusted their estimate down to 6%, the percentage of persons who completely denied all involvement in all possible crimes. In the absence of validation information, they interpreted this number as reflecting a combination of wrongfully convicted and lying respondents. Poveda (2001), in a re-analysis of the second RAND Inmate Survey (Marquis and Ebener 1981; Chaiken et al. 1982), estimated that 15.4% of surveyed prisoners reported committing none of the crimes for which they were convicted. Like the authors of the first RAND Inmate Survey, he adjusted his estimate for respondent indications elsewhere in the survey that they had in fact committed a crime. This less comprehensive adjustment altered his estimate only slightly, reducing it by 1.5 percentage points to 13.9% (Poveda 2001). In related work, Gudjonsson and Sigurdsson (1994) have used a similar technique to measure the frequency of false confessions among Icelandic prisoners.Footnote 6

An essential assumption that underlies each of these prisoner self-report studies is the proposition that convicted felons are uniquely well-situated to comment on the quality of the conviction that they most recently received since they possess information on both their involvement and their conviction status, something that no other individual is likely to possess. This holds true across all types of cases, ranging from theft to murder. Convicted felons also can be sampled such that their responses, if un- or minimally-biased, readily generalize onto discrete populations (e.g., all convicted persons in a jurisdiction or all imprisoned felons).

Although inmate self-report data offer numerous potential advantages, this sub-literature faces a number of practical limitations. First, past administrations have not achieved adequately representative samples. The average response rate in the first RAND Inmate Survey was 57% among California prisoners (Peterson et al. 1980). The second RAND Inmate Survey achieved a considerably higher response rate for Texas prisoners (82%), but a similarly low response rate (50%) for prisoners in Michigan and California (Peterson et al. 1982). While such response rates are not intrinsically cause for concern (Groves and Lyberg 2010), they are particularly problematic here given a considerable body of survey research showing that non-response is a more common reaction to sensitive survey questions than biased or false response (Tourangeau and Yan 2007). Such a pattern, especially if non-response were correlated with the probability of reporting either innocence or guilt, could bias self-report estimates. Second, for those felons who do respond, the verifiability of responses, particularly on the potentially sensitive question of their own guilt, has not been assessed. And finally, the comparability of survey-derived estimates, which do not normally separate factual from legal innocence, to wrongful conviction measures in the capital case literature, which normally do, has yet to be resolved. Still, in contrast to the limitations of other non-capital wrongful conviction estimators, each of these three problems is potentially addressable.

Data and Methods

The Criminal Justice Experience Survey (CJES) was developed to test whether self-report data gathered from a post-conviction population could be used to estimate the frequency and correlates of wrongful convictions. Modeled on earlier surveys of convicted and imprisoned persons (Blumberg 1967; Chaiken et al. 1982), the CJES asked respondents to anonymously report the crimes for which they were most recently convicted and, in a subsequent item, the subset of these crimes that they believe they actually committed. Due to concerns that this previously employed metric might conflate wrongful conviction claims stemming from factual and legal innocence, several additional questions were asked in order to better understand respondent intent. These included a forced-choice question on whether the respondent believed themselves to have been wrongfully or properly convicted and a follow-up item, asked only of those claiming a wrongful conviction, to clarify exactly why they believed themselves to be wrongfully convicted (see Fig. 1). This follow-up item was developed from the most frequently provided open-ended responses received during an extensive pre-testing and pilot phase. The available choices were discrete, with each representing a distinctive type of claim regarding the respondent’s grievance with their conviction, and they were ordered from the strongest to the weakest claim of factual innocence. Respondents were instructed to check all responses that applied.Footnote 7 This construct allowed respondents to situate themselves along a continuum of levels of involvement and types of grievances formed by the twin issues of personal responsibility and legal due process that underlie many wrongful conviction claims.

Administration of the survey took place at the primary reception facility for the Pennsylvania Department of Corrections (PA DOC). The facility accepts male inmates from across the entire state and is the primary site responsible for the intake and classification of incoming prisoners prior to their assignment to a non-reception prison for the duration of their sentences. The existing assessment process required that prisoners complete a number of screening instruments during their first day at the facility. The CJES administration was integrated into this process and took place in a classroom-like setting in groups with a median size of 36 individuals. This administration format was selected in order to maximize response rates and respondent confidence in survey anonymity, mirroring the approach used in the original RAND Survey (Peterson et al. 1980).

Prior to receiving the standard PA DOC screening instruments, prisoners were asked to open a distinct and labeled CJES envelope that contained the survey, completion instructions and the informed consent documents. The instructions, reiterated by a session moderator, communicated that the survey was being conducted by researchers independent from the PA DOC, that all responses were anonymous and confidential, and that participation was voluntary. Completion of the instrument itself was self-paced and, after approximately 10 min, all surveys (completed and blank) were resealed by prisoners in their original envelopes and collected.Footnote 8 The CJES administration began on November 2, 2015 and ran for 5 months. During that period 2846 surveys, representing the entire intake population at the facility, were administered. In practice, the unit response rate was 94.1%, with 2678 complete or partially complete responses received. Since the vast majority of male offenders newly committed to the PA DOC system go through the screening process at this facility, this high response rate supports the assumption that the CJES administration achieved representativeness of the statewide PA DOC male intake population.
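The unit response rate is the share of administered surveys that came back complete or partially complete. A minimal sketch of that arithmetic with the counts reported above (the function name is ours, for illustration only):

```python
def unit_response_rate(returned: int, administered: int) -> float:
    """Share of administered surveys returned complete or partially complete."""
    if administered <= 0:
        raise ValueError("administered must be positive")
    return returned / administered


# Counts reported for the CJES administration.
rate = unit_response_rate(returned=2678, administered=2846)
print(f"{rate:.1%}")  # → 94.1%
```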

To assess the general reliability of prisoner self-reports, responses on several offense and demographic variables were compared to PA DOC administrative records. Because responses were anonymous, prisoner self-reports were aggregated and compared to group-level administrative data for all inmates moving into the reception unit on the days when the surveys were administered. On most verifiable questions, including age, race, commitment offense, and county of commitment, self-report responses closely mirror administratively recorded values (see Table 1). Most differences between measures are statistically insignificant. Larger observed departures, particularly for a subset of commitment offenses and race sub-categories, likely reflect a mixture of non-random unit and item non-response as well as differential classification by prisoners, the DOC, or within the CJES item. This final possibility is an especially likely explanation for discrepancies in the classification of commitment charges and race/ethnicity responses into categorical groupings. Age responses are likely to be subject to the same unit- and item-non-response problems as race and offense responses, but not to categorization issues.Footnote 9 Given the nearly identical age distributions across self-report and administrative records, the observed differences in race and offense are most readily understood as reflecting differences in categorization by officials and individuals. These results, consistent with past validation exercises of prisoner self-report data, suggest a high degree of external validity for respondent answers while simultaneously revealing the inherent ambiguities in aligning self-report and administrative records.
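The text reports that most self-report/administrative differences are statistically insignificant but does not name the test used. As an assumption, a pooled two-sample z-test for proportions is one common way to compare a category's share in the aggregated self-reports with its share in the administrative records. The sketch below uses toy counts, not CJES data, and treats the two samples as independent, which is only approximately true here because both describe overlapping groups of inmates.

```python
import math


def two_proportion_z(x1: int, n1: int, x2: int, n2: int) -> tuple[float, float]:
    """Two-sided z-test for equality of two proportions (pooled variance).

    x1/n1: category count and total in the self-reports (hypothetical here).
    x2/n2: category count and total in administrative records (hypothetical here).
    Returns (z statistic, two-sided p-value) under a normal approximation.
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


z, p = two_proportion_z(60, 100, 40, 100)  # toy counts, not CJES data
print(round(z, 3), round(p, 4))  # → 2.828 0.0047
```

With real data, this comparison would be repeated for each category in Table 1, ideally with an adjustment for multiple comparisons.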

Binomial Estimation of Implausible Responses

Unlike many common sensitive questions involving income, sexual behavior, or many criminal acts, which can be at least partially validated against administrative records, a wrongful conviction is not a status that lends itself to verification. Just as criminal acts that do not lead to contact with police cannot be verified against official records, many claims of innocence cannot be similarly verified. This is particularly the case if the lack of verifiable evidence of innocence is part of the reason why an individual would be wrongfully convicted and thus available to report factual innocence in a survey. This leaves surveys of wrongful convictions with a paradox: they are perhaps the only means of gathering a representative measure of wrongful convictions, but the accuracy of the information they gather seemingly cannot be verified, especially on the measure of interest.

In other situations where survey responses cannot be verified, a common survey design method is to minimize the probability that a respondent would be motivated to give a false response during the survey. This could take the form of limiting what data are collected or making general promises of anonymity. The potential for each of these methods to reduce response bias rests on the assumption that respondents will have greater confidence in the integrity of the research at the time of administration and, therefore, will answer more honestly than they otherwise would have in the absence of these measures. Another example of this approach can be seen in randomized response methods (RRM) as proposed by Warner (1965).Footnote 10 However, these approaches do not actually verify that respondents are being truthful in their responses. To provide a more direct measure of respondent truthfulness, especially under either strict or relaxable assumptions required in surveys of sensitive topics, we consider a somewhat different approach.
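To make concrete what a randomized response method does, and why it protects respondents without verifying any individual answer, here is a minimal sketch of Warner's (1965) estimator. The CJES did not use RRM; the function name and counts below are hypothetical.

```python
def warner_estimate(yes: int, n: int, p: float) -> float:
    """Warner (1965) randomized-response estimate of a sensitive-trait prevalence.

    A private randomizing device directs each respondent, with probability p,
    to answer "I have the trait" and, with probability 1 - p, "I do not have
    the trait". Only yes/no is recorded, so P(yes) = p*pi + (1 - p)*(1 - pi);
    solving for pi gives the estimator returned here.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    if p == 0.5:
        raise ValueError("p = 0.5 makes the prevalence unidentifiable")
    lam = yes / n  # observed share of "yes" answers
    return (lam - (1 - p)) / (2 * p - 1)


# Hypothetical counts: 380 "yes" answers from 1,000 respondents with p = 0.7.
print(round(warner_estimate(yes=380, n=1000, p=0.7), 3))  # → 0.2
```

Because no single "yes" reveals which statement a respondent answered, the design shields individuals; the estimate can also fall outside [0, 1] in small samples.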

Our proposed method of estimating this quantity relies on the fact that when respondents are asked about the number of times they have experienced a particular sensitive state, it is possible to estimate the plausibility of a given response based on the number of times that respondent and all other respondents have experienced this state. In any series of repeated trials with a fixed probability of success, the binomial distribution can be used to predict the number of successes and failures that should be observed. This simple approach is the basis of many introductory statistics problems and has occasionally been used to detect unlikely patterns in other real-world problems (Kaplowitz et al. 1982). The binomial distribution and its various approximations are useful because they quantify how likely a respondent or set of respondents would be to generate a particular distribution of successes and failures. In our application, the trials are the convictions each respondent has previously received, and the outcomes are whether the respondent reports each conviction as correct or wrongful.
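As a minimal sketch of the binomial calculation described above, the probability that a respondent with m convictions reports exactly k of them as correct, under an illustrative fixed per-conviction accuracy p (here p = 0.9 and m = 3, chosen for illustration), is:

```python
from math import comb


def binom_pmf(k: int, m: int, p: float) -> float:
    """P(X = k) for X ~ Binomial(m, p): k correct out of m convictions."""
    return comb(m, k) * p**k * (1 - p) ** (m - k)


# Distribution of correct convictions for a respondent with m = 3 convictions,
# assuming (for illustration only) a fixed per-conviction accuracy p = 0.9.
for k in range(3, -1, -1):
    print(k, round(binom_pmf(k, 3, 0.9), 3))
# prints: 3 0.729 / 2 0.243 / 1 0.027 / 0 0.001
```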

If all respondents in the CJES were subject to a single, uniformly acting justice system in which the probability of a correct conviction (\(p\)) were fixed, a single binomial distribution could be used to compare self-reported conviction histories and self-reported innocence histories to their expected values. Such a model would assume that for each of the \(n\) respondents, \(x_i\), the number of convictions that respondent \(i\) reports as correct, is drawn from a Binomial\((m_i, p)\), where \(m_i\) is the total number of convictions respondent \(i\) has and \(p\) is the probability of reporting a correct conviction. For example, if the probability of a correct conviction is \(p = 0.9\), the binomial distribution predicts that, among respondents with three prior convictions, 72.9% would have all three convictions correct, 24.3% would have two of the three correct, 2.7% would have one of the three correct, and 0.1% would have none correct. With a known \(p\), we could then check whether the observed data match this theoretical distribution, for instance by verifying that approximately 72.9% of respondents with three convictions report all three as correct (\(0.729 = 0.9^3\)), and so on. Since \(p\) is unknown in practice, it can be estimated using the maximum likelihood estimator \(\hat{p} = \sum_i x_i / \sum_i m_i\), the total number of convictions reported as correct divided by the total number of convictions across all respondents. By extending this logic beyond the simplest case, in which the justice system operates with a fixed level of accuracy for all people and all cases, to the more realistic scenario in which its accuracy varies, it is possible to quantify the excess fraction of respondents who report complete innocence (see “Appendix” for details).
This estimator is necessarily limited to characterizing the magnitude of this departure from the expected value. However, it offers a means of assessing the plausibility of a given distribution of claims even for those individuals who do not themselves have large numbers of prior convictions.
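The three-conviction example and the pooled estimator \(\hat{p}\) can be checked with a few lines of Python. This is a minimal sketch; the respondent data passed to `mle_p` are hypothetical.

```python
from math import comb


def binomial_pmf(x: int, m: int, p: float) -> float:
    """P(X = x) for X ~ Binomial(m, p)."""
    return comb(m, x) * p**x * (1 - p) ** (m - x)


def expected_shares(m: int, p: float) -> dict:
    """Expected share of respondents with m convictions reporting x of them correct."""
    return {x: binomial_pmf(x, m, p) for x in range(m, -1, -1)}


def mle_p(correct: list, total: list) -> float:
    """Pooled MLE of p: convictions reported correct / all convictions."""
    return sum(correct) / sum(total)


for x, share in expected_shares(3, 0.9).items():
    print(f"{x} of 3 correct: {share:.1%}")
# 3 of 3 correct: 72.9%
# 2 of 3 correct: 24.3%
# 1 of 3 correct: 2.7%
# 0 of 3 correct: 0.1%

# Hypothetical respondents: reported-correct counts and total convictions
print(mle_p([3, 2, 1], [3, 3, 2]))  # 0.75
```

Comparing these expected shares with the observed shares for each value of \(m_i\) is the simplest form of the plausibility check described above.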

While this estimator provides a useful test of whether the frequencies observed in the data match the estimated \(p\) of a single binomial distribution, it is less useful for characterizing departures from the binomial. Respondents may report conviction histories that are inconsistent with the binomial distribution for multiple reasons. These departures have the potential to be informative, especially if an unexpectedly high number of respondents report that none of their convictions are correct. Using an expanded model with additional parameters that drops the assumption that all respondents share a common \(p\), for example by assuming that some respondents report a correct conviction with probability 0 while others do so with probability \(p\), it is possible to characterize the magnitude of these departures from the binomial. The resulting distribution of the \(x_i\) is a two-component mixture: some fraction of the population, α, always claims innocence (and reports \(x_i = 0\)), and the remaining fraction, 1 − α, reports its number of correct convictions as a draw from a Binomial\((m_i, p)\). The parameter α captures the excess frequency of innocence claims beyond what the binomial distribution would predict. Under this model, the probability that a respondent reports all of their convictions as wrongful is \(P\left( {x_{i} = 0} \right) = \alpha + \left( {1 - \alpha } \right)\left( {1 - p} \right)^{{m_{i} }}\), where the first term is contributed by respondents who always report that they were wrongfully convicted and the second by the Binomial\((m_i, p)\) component. For this model to work in practice, it requires a good estimate of α, so that the respondents who will never admit to a correct conviction can be separated from other claims of wrongful conviction. The maximum likelihood estimators of α and \(p\) have no closed form, but an EM algorithm (Dempster et al. 1977) converges to them.
This two-component model, however, is still too restrictive. Obtaining a good estimate of α depends on our ability to adequately model the responses of respondents who have \(P(x_i = 0) < 1\). If the binomial component of the two-component model is misspecified, our estimate of α will also be incorrect. To accommodate this possibility, we relax the restriction that respondents have either zero probability or a shared probability \(p\) of reporting a correct conviction.
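A minimal sketch of the EM algorithm for the two-component model follows, assuming each respondent contributes a pair (\(x_i\), \(m_i\)) with \(m_i \ge 1\). It illustrates the standard E- and M-steps for a binomial with an extra point mass at \(x = 0\); it is not the authors' code, and the simulated parameter values are hypothetical.

```python
import random
from collections import Counter


def em_two_component(data, alpha=0.1, tol=1e-10, max_iter=10_000):
    """EM for P(x_i) = alpha * 1[x_i = 0] + (1 - alpha) * Binomial(x_i; m_i, p).

    data: iterable of (x, m) pairs with 0 <= x <= m and m >= 1.
    Returns (alpha_hat, p_hat).
    """
    counts = Counter((x, m) for x, m in data if m >= 1)
    n = sum(counts.values())
    # Start p at the single-binomial MLE, sum(x) / sum(m).
    total_x = sum(c * x for (x, _), c in counts.items())
    total_m = sum(c * m for (_, m), c in counts.items())
    p = total_x / total_m
    for _ in range(max_iter):
        # E-step: posterior probability that a respondent belongs to the
        # always-innocent component (only possible when x = 0).
        w = {}
        for (x, m) in counts:
            if x == 0:
                den = alpha + (1 - alpha) * (1 - p) ** m
                w[(x, m)] = alpha / den if den > 0 else 0.0
            else:
                w[(x, m)] = 0.0
        # M-step: alpha is the mean membership; p is the binomial MLE
        # weighted by membership in the binomial component.
        new_alpha = sum(c * w[k] for k, c in counts.items()) / n
        num = sum(c * (1 - w[k]) * k[0] for k, c in counts.items())
        den = sum(c * (1 - w[k]) * k[1] for k, c in counts.items())
        new_p = num / den
        done = abs(new_alpha - alpha) < tol and abs(new_p - p) < tol
        alpha, p = new_alpha, new_p
        if done:
            break
    return alpha, p


def simulate(n, alpha, p, max_m=5, seed=0):
    """Hypothetical respondents: m ~ Uniform{1..max_m}; x drawn from the mixture."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        m = rng.randint(1, max_m)
        x = 0 if rng.random() < alpha else sum(rng.random() < p for _ in range(m))
        data.append((x, m))
    return data


alpha_hat, p_hat = em_two_component(simulate(20_000, alpha=0.05, p=0.85, seed=3))
print(f"alpha_hat = {alpha_hat:.3f}, p_hat = {p_hat:.3f}")
```

Grouping respondents by their (x, m) pair keeps each iteration cheap, because the E-step posterior depends only on that pair. Respondents with larger \(m_i\) carry most of the information about α, since a binomial draw rarely produces \(x_i = 0\) when \(m_i\) is large.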

For this more flexible model, we treat the number of reported correct convictions, \(x_i\), as a draw from a Binomial\((m_i, p_i)\), where \(m_i\) is the reported number of convictions and \(p_i\) is the probability that a conviction of respondent \(i\) is reported to be correct. Within each respondent, we assume that the probability of reporting a correct conviction is the same \(p_i\) for each of their convictions. Variation in the \(p_i\) from respondent to respondent reflects variation in features such as the kinds of crimes they commit, the courts that adjudicated their cases, and the respondents’ views of their cases. Each \(p_i\) can take any value in [0,1], and we place no constraint on the distribution of the \(p_i\) across respondents. Instead, we let \(F(p)\) be any distribution function on [0,1] and estimate it non-parametrically from the data. The two-component model is the special case that puts one point mass at 0 and another at a single value greater than 0. For the flexible model, we place no restrictions on the shape of \(F(p)\) and instead find the function \(F\) that maximizes the likelihood function

\[ L(F) = \prod_{i = 1}^{n} \int_{0}^{1} \binom{m_i}{x_i} p^{x_i} (1 - p)^{m_i - x_i} \, dF(p) \tag{1} \]

where the integral inside the product integrates the binomial probability of the number of reported correct convictions with respect to \(F(p)\). If we fixed \(F(p)\) to be a beta distribution, a commonly used distribution for probabilities, the integral in (1) would yield the beta-binomial distribution, a generalization of the binomial whose variance can exceed the standard binomial variance \(m_{i} p_{i} \left( {1 - p_{i} } \right)\). Again, we place no restrictions on \(F(p)\), as even the beta distribution might be too strong an assumption. We compute the non-parametric maximum likelihood estimate (NPMLE) of \(F\) by maximizing (1) over all possible distributions on [0,1]. We do not constrain \(F(p)\) to be discrete, continuous, beta, or any other specific class of distribution; the only restrictions are the standard properties of a distribution function, \(0 \le F(p) \le 1\) and \(F(p)\) non-decreasing. A simulation study (see “Appendix” for details) demonstrates that the most flexible approach, the NPMLE, yields the least biased estimate of the fraction of respondents who always report innocence. Unadjusted results and results adjusted using this estimator are reported in the next section.
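The NPMLE described above maximizes the likelihood over all distributions on [0,1]. A common practical approximation, shown here only as an illustrative sketch and not necessarily the authors' algorithm, fixes a fine grid of candidate support points (including 0 and 1) and runs EM on the grid weights; each EM step cannot decrease the likelihood. The grid size, iteration count, and simulated three-point mixture are assumptions.

```python
import math
import random
from collections import Counter


def binom_pmf(x, m, p):
    return math.comb(m, x) * p**x * (1 - p) ** (m - x)


def npmle_grid_em(data, grid_size=51, n_iter=1000):
    """Approximate NPMLE of F on [0, 1] by EM over a fixed grid of support points.

    data: iterable of (x, m) pairs with m >= 1.
    Returns (grid, weights, loglik_history); weights[k] is the mass at grid[k].
    """
    counts = Counter((x, m) for x, m in data if m >= 1)
    n = sum(counts.values())
    grid = [k / (grid_size - 1) for k in range(grid_size)]  # includes 0.0 and 1.0
    weights = [1.0 / grid_size] * grid_size  # uniform starting F
    # Binomial likelihood of each observed (x, m) pattern at every grid point.
    f = {key: [binom_pmf(key[0], key[1], g) for g in grid] for key in counts}
    history = []
    for _ in range(n_iter):
        new_w = [0.0] * grid_size
        loglik = 0.0
        for key, c in counts.items():
            joint = [w * fk for w, fk in zip(weights, f[key])]
            marginal = sum(joint)  # integral of the binomial pmf against current F
            loglik += c * math.log(marginal)
            for k in range(grid_size):
                new_w[k] += c * joint[k] / marginal  # posterior responsibility
        history.append(loglik)  # log-likelihood of the weights before this update
        weights = [w / n for w in new_w]
    return grid, weights, history


def simulate(n, support, masses, max_m=5, seed=0):
    """Hypothetical respondents whose p_i is drawn from a discrete mixing distribution."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        m = rng.randint(1, max_m)
        p = rng.choices(support, weights=masses)[0]
        data.append((sum(rng.random() < p for _ in range(m)), m))
    return data


data = simulate(5_000, support=[0.0, 0.7, 1.0], masses=[0.05, 0.40, 0.55], seed=5)
grid, w, hist = npmle_grid_em(data)
print(f"estimated mass at p = 0: {w[0]:.3f}")
```

Because respondents report only a handful of convictions, the data pin down only low-order features of \(F\); mass that truly sits at 0 may be spread over nearby grid points, which is one reason the text emphasizes simulation-based checks of bias.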

Results

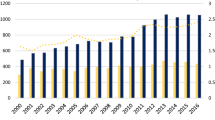

The CJES incorporated a near-replication of RAND Inmate Surveys’ two-item enumerated list of conviction offenses and claimed offenses. After checking off all offenses in their most recent conviction from a list of the most common conviction offenses (with an “other” option for free responses), respondents in the CJES were instructed to indicate which of these conviction offenses they believed themselves to have committed. Comparing these self-reported conviction offenses to self-reported committed offenses, 66.7% (± 1.7) of inmates indicate that they committed all of the offenses in their most recent conviction (See Fig. 2).Footnote 11 Another 22.2% (± 1.6) of inmates report committing some offenses related to their most recent conviction, and 11.1% (± 1.3) of inmates report committing none of the offenses in their most recent conviction.

Confirming the hypothesized ambiguity of partial matches between convicted and claimed conduct, the figure of 22% of respondents reporting partial responsibility for their most recent conviction can be further split into two groups—13% of respondents indicating responsibility for one or more lesser included offenses and 9% of respondents listing offenses that were not obviously constituent offenses. Examples of lesser included offenses include individuals reporting responsibility for drug possession rather than drug distribution or individuals reporting responsibility for trespass rather than burglary. An example of a claim of partial responsibility for a non-constituent offense is the case of a respondent self-reporting a recent conviction for burglary while self-reporting having actually committed drug distribution. These partial responsibility results likely reflect some degree of respondent confusion, but they also illustrate the challenges of enumeration-based correspondence metrics such as these. These limitations aside, with a clear majority of inmates indicating complete responsibility for their most recent convictions and a substantial minority indicating partial responsibility for their most recent conviction, these estimates, like those reported previously in the RAND Inmate Surveys, dispel the widespread misconception that all or even most prisoners claim that they are innocent.

For a more detailed comparison to those results, we examined the offense-by-offense breakdown of conviction/commission matches. For most offenses in Fig. 3, including the most common conviction offenses, between 60 and 90% of respondents indicated that they committed all of the crimes they were convicted of. Theft, DUI, possession with intent to distribute (PWID), burglary, robbery, drug possession, and weapon offenses all follow this pattern. Likewise, these same categories typically have between 3 and 10% of respondents indicating that they did not commit any of the crimes they were convicted of. The variance in responses is driven largely by individuals self-reporting that they committed only some of their most recent conviction offenses, rather than by individuals self-reporting that they committed none of them. The only categories that do not follow this pattern are aggravated assault, simple assault, murder/manslaughter, and rape. Interestingly, all of these offenses are assaultive in nature, rather than acquisitive or substance abuse-related. With the exception of rape, which has far higher rates of respondents denying any responsibility, murder and aggravated assault have considerably higher rates of respondents reporting partial involvement rather than complete noninvolvement.Footnote 12 This pattern, again mirroring findings from the RAND survey, reinforces the idea that most prisoners report responsibility for their convictions and that, for convictions they do not fully acknowledge, they frequently claim some degree of responsibility. The correlation between property, substance abuse, and distribution crimes and relatively low rates of unclaimed convictions is also notable. These are the crimes for which the majority of prisoners are incarcerated (Krause et al. 2016).

While these patterns are broadly consistent with the RAND Survey results, especially as reported in Poveda (2001), respondents in the CJES were more likely to report having committed only some of the crimes for which they were most recently convicted. For example, in the CJES, 65% of inmates convicted of robbery reported committing that offense, 26% reported committing another offense (including a lesser included one), and 9% reported not committing any crimes whatsoever. By contrast, in the RAND Inmate Survey, 75% of inmates convicted of robbery reported committing that offense, 13.5% reported committing some other offense, and 11.5% reported not committing any crimes. For this offense, the subgroups claiming complete innocence are quite similar despite the spatial and temporal distance between the two administrations. The observed differences appear to be driven by the greater number of individuals in the CJES who reported committing some other crime, which depresses the number reporting having committed all crimes in their most recent conviction. Similar results can be seen for murder, as well as other serious offense types.

To generate the evidence necessary to distinguish claims of legal and factual innocence, respondents were then asked to indicate if they believed themselves to have been wrongfully convicted, which was immediately followed by a question asking respondents to provide an explanation for their selected binary response to this forced choice question (Fig. 1). Consistent with the results reported in Fig. 2, just over two-thirds (69.8% ± 1.8) of prisoners indicated that they were properly convicted and just under one-third (30.2% ± 1.8) indicated that a wrongful conviction occurred in their most recent case (See Table 2). Conditional on indicating a wrongful conviction, 25.8% (8.2% ± 1.1 of the overall sample) indicated that they “didn’t do any of the things they convicted me of.” 25.7% (8.8% ± 1.1 of the overall sample) indicated that they “didn’t do everything they convicted me of.” 14% (8.2% ± 1.1 of the overall sample) indicated a missing legal element. Several distinct legal or procedural issues surfaced in these responses. For 5.0% (1.6% ± 1.1 of the overall sample) the legal issue was a lack of intent. For 7.2% (2.3% of the total sample) the issue was a charged and convicted level of personal involvement that did not align with the respondent’s self-assessed conduct. Another 13% reported a flawed legal process of some kind while still acknowledging responsibility for their crimes. This category was split almost equally between individuals indicating they “didn’t get a fair trial” (2.0% of the total sample) and individuals indicating that the case against them was weak (2.3% of the total sample). The remaining respondents indicated another reason for their wrongful conviction, often involving police or witness misconduct (4.1% of the total sample) or failed to provide a further explanation for their self-reported wrongful conviction (0.9% of the total sample). 
These results confirm the findings from the RAND survey that most prisoners are willing to report acceptance of their convictions and that, even among those who report being wrongfully convicted, the majority accept partial responsibility. Unlike the RAND instrument, the CJES instrument captures a wider range of the respondents’ reasons for reporting a lack of responsibility for a conviction offense.

To better understand the measurement properties of the basic RAND construct relative to the CJES instrument, Table 3 compares the number of persons reporting complete, partial, or non-innocence via the RAND construct with responses to the CJES binary measure of wrongful/rightful conviction. This comparison confirms that when individuals report “did no crime” (the RAND formulation) or “no crimes committed” (the CJES reformulation), most of them are in fact indicating that they have been wrongfully convicted. The RAND instrument also does a reasonable job of capturing respondents who claim complete innocence: only a small number of respondents indicated that they did not commit their most recent conviction offense yet reported on the CJES instrument that they were properly convicted. A larger discrepancy between the two measures can be seen in the number of individuals (267) who reported committing all of the offenses they were convicted of on the RAND construct and yet indicated that they were wrongfully convicted in the CJES forced-choice question. Most likely, these individuals retained some additional legal grievance while still acknowledging their involvement in their conviction offenses.

Table 4 cross-tabulates a binary CJES measure of complete innocence, derived from respondents’ explanations of their wrongful conviction claims, against a binary version of the RAND classification that groups respondents according to whether or not they indicated “did no crime.” The two measures are only moderately correlated (r = 0.46, α = 0.62), and the off-diagonal cells suggest that the existing RAND Inmate Instrument is overly inclusive, capturing legal grievances that the more detailed CJES instrument can distinguish from factual innocence.
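Assuming the reported r is the ordinary Pearson correlation computed on two 0/1 indicators, it equals the phi coefficient, which can be computed directly from the four cells of a 2 × 2 cross-tabulation such as Table 4. The sketch below uses hypothetical cell counts, not the Table 4 values, and checks the cell formula against a direct Pearson computation on the expanded 0/1 data.

```python
import math


def phi_from_table(a: int, b: int, c: int, d: int) -> float:
    """Phi coefficient for a 2x2 table laid out (hypothetically) as
        rows    = CJES complete-innocence indicator (1, 0)
        columns = RAND "did no crime" indicator (1, 0)
        [[a, b],
         [c, d]]
    """
    num = a * d - b * c
    den = math.sqrt((a + b) * (c + d) * (a + c) * (b + d))
    return num / den


def pearson(xs, ys):
    """Plain Pearson correlation of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def expand(a, b, c, d):
    """Turn the four cell counts back into paired 0/1 observations."""
    pairs = [(1, 1)] * a + [(1, 0)] * b + [(0, 1)] * c + [(0, 0)] * d
    return [x for x, _ in pairs], [y for _, y in pairs]


print(phi_from_table(40, 10, 10, 40))  # 0.6
```

Large off-diagonal cells (b and c) pull phi toward zero, which is how disagreement between the two instruments shows up as a moderate correlation.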

Returning to Table 2, in which 8.2% of respondents reported complete innocence of their most recent crimes of conviction, we estimated the distribution of individual inmates’ probabilities of reporting correct convictions using the non-parametric maximum likelihood estimator described above. For individuals reporting prior convictions, the individual fractional probabilities of specific numbers of wrongful and correct convictions were calculated (see Table 5), producing

\[ \widehat{F}(p) = \begin{cases} 0.02 & 0 \le p < 0.7 \\ 0.44 & 0.7 \le p < 1 \\ 1 & p = 1 \end{cases} \tag{2} \]

The distribution \(\widehat{F}(p)\) in (2) is the distribution that maximizes (1) over all possible distributions on [0,1]. The data suggest that 2% of sample inmates will report that all of their convictions were erroneous, 56% will report being correctly convicted in all of their cases, and 42% will report that 70% of their cases were correct and 30% were in error. These results suggest that a small fraction of respondents with prior convictions (2%) were likely to state that they were innocent no matter how many prior convictions they had and reported. A 95% bootstrap confidence interval around this estimate ranges from 0.001 to 0.032. This estimate of implausible responses forms the basis of a corrected prevalence estimate. With 8.2% of respondents claiming factual innocence in their most recent conviction, and an estimated 2% of inmates who will always claim innocence no matter how many convictions they have, we estimate an adjusted rate of factual innocence of 6.2% of all respondents (8.2% − 2.0%). This is our most comprehensive estimate of the number of factually innocent respondents in this administration of the CJES.
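Under the discrete estimate of \(F\) described in the text (mass 0.02 at \(p = 0\), 0.42 at \(p = 0.7\), and 0.56 at \(p = 1\)), the predicted share of respondents who report every conviction as wrongful shrinks toward 2% as the number of convictions grows. That persistent floor is why the 2% component is treated as implausible responding. The sketch below reproduces this calculation and the 8.2% − 2% adjustment using only figures reported in the text; the function name is illustrative.

```python
# Discrete F-hat as reported in the text: mass at each value of p, where p is
# the probability that a respondent reports a given conviction as correct.
F_HAT = {0.0: 0.02, 0.7: 0.42, 1.0: 0.56}


def prob_all_reported_wrongful(m: int, mixing: dict = F_HAT) -> float:
    """P(x = 0 | m) = sum over support points of mass * (1 - p) ** m."""
    return sum(mass * (1 - p) ** m for p, mass in mixing.items())


for m in (1, 2, 3, 5, 10):
    print(f"m = {m:2d}: {prob_all_reported_wrongful(m):.4f}")

raw_innocence_rate = 0.082    # Table 2: complete innocence, most recent conviction
always_innocent = F_HAT[0.0]  # mass of F-hat at p = 0
adjusted = raw_innocence_rate - always_innocent
print(f"adjusted factual-innocence rate: {adjusted:.1%}")  # 6.2%
```

For one conviction the mixture predicts about 14.6% all-wrongful reports, mostly from the \(p = 0.7\) component; by ten convictions essentially only the \(p = 0\) mass remains.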

With a point estimate of self-reported innocence for all inmates in the survey in the mid-to-high single digits, we return to the conviction offense variation observed in Fig. 3, now substituting self-reported innocence, as measured by the binary CJES factual innocence measure, for the modified RAND construct. Interestingly, the approximate rank order of the two measures is quite similar, with DUI and other theft anchoring the low end of the distribution and murder, other sexual assault, and rape anchoring the high end. The previously observed pattern of lower rates of self-reported innocence in acquisitive crimes still holds, while purely assaultive crimes continue to show the highest rates of self-reported innocence. Once again, the highest-volume offense categories show the lowest levels of self-reported innocence, while the lower-volume but most serious offense categories show the highest rates. However, this is just one way of categorizing the observed rank order. It is equally true that crimes that have elicited the greatest concerns about wrongful convictions [e.g., rape,Footnote 13 murder, arson (not shown)] cluster near the top of self-reported wrongful conviction rates, while crimes that rarely receive attention in discussions of wrongful convictions cluster near the bottom of the list [e.g., DUI, other theft, forgery (not shown)]. Consideration of additional correlates may shed light on this recurring pattern, but this is beyond the scope of the current work (Fig. 4).

Applying the binomial adjustment to each offense category separately, Fig. 5 shows that the approximate rank order of self-reported wrongful conviction rates is again retained, and departures from this order likely fall within the overlapping 95% confidence intervals of adjacent categories. However, three subgroups can clearly be observed. Several offenses, including possession with intent to distribute, burglary, robbery, and rape, are left unchanged by the binomial adjustment. The range of unadjusted self-reported rates across these categories suggests that this is not simply a function of very high expected error rates. The second group includes offenses whose reported rates receive some adjustment. These include most assaultive crimes, drug possession, and other theft. Finally, DUI and theft are both adjusted to zero, as the binomial adjustment suggests that all reports for these categories are implausible.

This could reflect the longer criminal histories of individuals imprisoned for these offenses. Conversely, the absence of adjustments to several categories, such as rape, may reflect the shorter criminal histories in those populations. As noted previously, the binomial adjustment depends on the presence of a non-zero criminal history within the adjusted population. However, this interpretation is unlikely to be a sufficient explanation for all of the observed patterns. Another possibility is that the key determinant of the size of the binomial adjustment is the reported rate of wrongful convictions among individuals with one prior conviction. As implemented, the binomial adjustment is re-estimated separately for each offense category, and in each category this will be the largest group of cases. If these cases have a low reported rate of wrongful convictions and subsequent groups (e.g., 2 priors, 3 priors, etc.), which will virtually always be smaller, have higher rates of reported wrongful convictions, then the binomial estimator will, by design, estimate that the entire category warrants a larger correction. Conversely, if one-prior cases in an offense category have a high rate of reported wrongful convictions and subsequent groups have low reported rates, then the category will likely be subject to a much smaller adjustment. Rape and murder usefully illustrate this pattern. Respondents with a current rape conviction and one prior conviction self-report a correct conviction rate of 70%, while the next largest group (2 prior convictions) has a correct conviction rate of 100%. For murder, by contrast, 92% of one-prior cases self-report a correct conviction, and just 81% of two-prior cases report that both of their prior convictions were correct.
Whether or not this interpretation is correct, it is clear that just as the self-reported innocence rate varies considerably by offense, so too does the rate of implausible responses, reinforcing the need to estimate and incorporate these adjustments.

Discussion

The dark figure of wrongful convictions is not the first seemingly unmeasurable quantity involving the legal system. For many years, the specter of an analogous figure for unreported and unrecorded crime haunted scholars, who were aware of the limitations of relying on police and court statistics to assess not only the contemporaneous volume of crime, but also changes in crime over time in response to changing social conditions, laws, and police practices (Biderman 1967; Biderman and Reiss 1967). However, beginning in the mid-20th century, the first self-report surveys of illegal behavior (Robison 1936; Huizinga and Elliott 1986) and the first household criminal victimization surveys (Biderman 1967; Skogan 1981), shed light on the frequency of crime and victimization not known by legal authorities. Many design and administration issues needed to be resolved, including recall errors, willingness to participate, and even the rarity of victimization, which was originally thought to make such victimization surveys practically impossible to administer at a reasonable cost (Hindelang 1981). Eventually these problems were resolved and the fundamental utility of the information for resolving key theoretical questions in the study of delinquency became clear.

The scientific study of wrongful convictions is at a similar crossroads. Years of efforts by legal scholars and practitioners have firmly established that wrongful convictions occur and have provided a quantitative and qualitative window into their frequency and contributing factors. This body of work has been influential in policy circles and continues to reshape the operation of the criminal justice system. However, the scientific knowledge contributions have been limited (so far) to the subset of conviction errors readily known to legal authorities—exonerations in mostly capital cases. Efforts by criminologists and other social scientists to examine the frequency and predictors beyond this population, including general prison populations, non-custodial felony caseloads, as well as misdemeanor convictions, have been more limited and have left scholars and policymakers with an incomplete picture of the extent of the potential problem in this wider population. Scholars hoping to solve this problem could wait for the legal community to generate sufficient numbers of exonerations in non-capital caseloads to support scientific inquiry on the basis of counting the occurrence and characteristics of these newly discovered cases. However, this approach will leave them dependent on the ability of legal practitioners to surface a representative sample of all factual errors. The alternative is for social scientists to generate measures of wrongful convictions that are independent of legal advocacy.

Although surveying prisoners and other convicted persons about their experiences of, and agreement with, their own convictions raises distinct methodological issues, the present investigation demonstrates that survey methods can provide bounded estimates when they are paired with newly developed methods for evaluating the plausibility of claims on sensitive questions and administered using a sampling frame that yields a representative sample of a known population. The resulting estimates, the first to formally separate claims of legal and factual innocence and to incorporate a formal measure of response plausibility, suggest that prisoners themselves are very often willing to self-report the correctness of their convictions. At the same time, a considerable minority indicate that procedural weaknesses in the administration of justice occurred in their cases. Lastly, a distinct subgroup, with considerable offense variation, maintain that they are completely innocent of the charges against them.

In practice, the existence of these three groups is no longer as much in question as it was nearly one hundred years ago, when scholars began debating the existence of wrongful convictions. However, the meaning of the exact size of each group as reported in this study is, and will remain, subject to considerable interpretation. A self-reported factual-innocence rate in the mid-to-high single digits can be interpreted both as quite low, since over 90% of the remaining convictions were at least partially correctly decided, and as quite high, since the affected prison population is conceivably measured in millions rather than in the hundreds, as is the case for death penalty cases. Under either interpretation, the results, though specific to Pennsylvania, suggest that errors occur with some regularity. And though the results do not imply guilt or innocence in any specific case,Footnote 14 they should serve to remind prosecutors, defenders, judges, and juries that there is some non-zero chance that the defendant before them could in fact be innocent. For this reason, it is imperative that future work both replicate these results in other populations and use the CJES and other self-report instruments to better understand the predictors of these self-reported wrongful convictions in this population of cases. There is scholarly precedent for this work, as a parallel literature has usefully employed self-report measures to study the predictors of false confessions (Gudjonsson and Sigurdsson 1994; Sigurdsson and Gudjonsson 1996; Gudjonsson et al. 2006, 2009; Redlich et al. 2010). A similar approach using measures like those in the present paper could determine whether the predictors of wrongful capital convictions are also the predictors of non-capital wrongful convictions, and whether jurisdictions differ in the frequency with which participants report being wrongfully convicted.
Before doing so, several limitations of the present investigation merit further examination.

First, the present investigation externally validated demographic and criminal history information and internally validated innocence claims, but did not attempt to externally validate the particular factual innocence claims made by respondents. This decision stemmed from two beliefs: that self-reported measures of innocence have value independent of their correspondence to official measures of guilt generated by the legal system, and that the potential benefits of anonymity, in the form of lower non-response and response biases, outweighed the possible benefits of comparing self-reported case information to administrative case information at the individual level. While at least some survey-reported claims of innocence could likely be compared with legal measures of innocence, assuming respondents were asked to provide identifying information, past attempts to compare self-report and official measures have shown that establishing the accuracy of either source of information with respect to the other can be quite difficult (Elliott 1995). Such an approach would also work much better for cases that involve disputed factual elements and much less well for cases where claimed innocence hinges on questions of intent, involvement, or identity.Footnote 15 Implementing these additional comparisons could usefully assess the degree to which the assumptions made in the present work are warranted.

Secondly, achieving a representative sample of the statewide male inmate population was the goal of this particular administration, as it significantly expanded the scope and visibility of possible factual errors within a general criminal justice population. However, doing so is unlikely to permit immediate generalization to other closely related and important populations because of differences in expected time served and/or the probability of wrongful conviction for different offenses, changes in charging patterns at the state and local levels, fluctuations in sentencing and punishment practices over time, and intra-jurisdictional variation in procedural rules and legal protections. These factors suggest that the total group of incarcerated individuals in this jurisdiction may differ somewhat from the intake population during the CJES administration. For the felony-convicted population, of which prisoners are a significant but not exclusive subset, differences are quite likely to emerge if the probability of reporting a wrongful conviction is correlated with case characteristics that are themselves correlated with the probability of being sentenced to state prison. At a still broader level, the same logic applies to convictions involving misdemeanors and non-custodial sentences. Addressing these limitations in generalizability requires expanding the scope of offender surveys. This includes a horizontal expansion within the state prison system to include subgroups of offenders not captured in this sample. Women, non-English speakers, and capital offenders, for example, are under- or unrepresented here, as they are within the overall custodial population. A vertical expansion, encompassing a broader swath of the adjudication process, is also needed, as offenders diverted away from state incarceration (here, to county jails) or from all forms of incapacitation (e.g., community supervision) are also absent from this sample.
This type of expansion could focus on convicted persons sentenced to probation as well as those defendants who have accepted or are considering a plea deal. These measures would provide complimentary estimates to those from this first administration of the CJES, further specifying the systematic rates of self-reported wrongful conviction.

Finally, while many of the observed differences in offense-specific rates are likely not statistically significant, the range, spanning more than an order of magnitude, clearly indicates the need for additional research exploring these large differences. Exoneration-based estimates for capital cases are in the low single digits, whereas CJES-based estimates for overlapping but mostly non-capital cases (e.g., murder, rape) are in the low to mid-double digits. Similarly, even within the extremely large error bounds found for most assaultive crimes, the mid-double-digit estimates for rape and other sexual assaults are notable. Identifying the specific reasons for these differences will likely require larger samples of cases. Future work could also usefully record finer-grained gradations and fact-patterns in these cases to identify the subset that most closely resembles the fact-patterns often observed in capital cases.

Pending the completion of this additional work, several preliminary conclusions appear warranted. First, the initial estimates from the CJES are quite similar to prior estimates that used a variety of different methodologies and samples. The latest estimate from the capital case literature is that 4% of death penalty cases involve innocent persons, just 2 percentage points below the estimate derived from the CJES. Similarly, recent surveys of criminal justice practitioners put their estimates at 1–3% of convictions. And the last administration of a self-report survey of innocence reported a 6% estimate, suggesting that concerns about non-response bias in self-report surveys of innocence may be less serious than previously thought. Given the inherent imprecision of all existing methodologies for estimating the prevalence of wrongful convictions, the CJES included, these estimates suggest that wrongful convictions occur with at most single-digit frequency for most prisoners. This conclusion assumes that estimates generated by self-report are, at a minimum, an upper bound on the rate of actual innocence.

Second, the variation observed in offense-specific innocence rates derived from inmate self-report data reinforces the importance of offense-specific innocence estimates. It also highlights the possibility that convictions not well represented in the capital case literature could have much higher or much lower wrongful conviction rates. The extent of this variation is another reason why new measurement tools are needed.

Finally, even when participants in social surveys are given assurances of anonymity for reporting sensitive information, it is critical to assess the quality of the information reported, especially when only some of it can be externally validated. Methods such as those reported in this paper suggest one pathway for assessing the magnitude of this problem. In contrast to prior approaches to problems of self-report response bias, the binomial estimation of implausible responses provides an explicit estimate of the magnitude of the problem. Future surveys of wrongful convictions, as well as studies of other topics that could elicit a mix of credible and non-credible self-reported responses, could also benefit from these tools.
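The paper's own plausibility test is not reproduced in this section, so the sketch below should be read only as a generic illustration of what an explicit binomial estimate of implausible responses can look like. It assumes, hypothetically, that some number `k` of `n` innocence claims has already been flagged as implausible by some rule, and it reports the share `k / n` with an exact (Clopper-Pearson) interval. The function names and the counts in the usage line are illustrative and are not taken from the CJES.

```python
from math import exp, lgamma, log


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p), summed in log space to avoid overflow."""
    if k < 0:
        return 0.0
    if k >= n:
        return 1.0
    if p <= 0.0:
        return 1.0
    if p >= 1.0:
        return 0.0
    log_nf = lgamma(n + 1)
    total = 0.0
    for i in range(k + 1):
        log_pmf = (log_nf - lgamma(i + 1) - lgamma(n - i + 1)
                   + i * log(p) + (n - i) * log(1.0 - p))
        total += exp(log_pmf)
    return min(total, 1.0)


def clopper_pearson(k: int, n: int, alpha: float = 0.05, iters: int = 100):
    """Exact two-sided (1 - alpha) interval for a binomial proportion k / n."""

    def bisect(f, target, increasing):
        # Solve f(p) = target on [0, 1] for a monotone f.
        lo, hi = 0.0, 1.0
        for _ in range(iters):
            mid = (lo + hi) / 2
            if (f(mid) < target) == increasing:
                lo = mid
            else:
                hi = mid
        return (lo + hi) / 2

    # Lower bound: P(X >= k | p) = alpha / 2; this tail probability increases with p.
    lower = 0.0 if k == 0 else bisect(
        lambda p: 1.0 - binom_cdf(k - 1, n, p), alpha / 2, True)
    # Upper bound: P(X <= k | p) = alpha / 2; this tail probability decreases with p.
    upper = 1.0 if k == n else bisect(
        lambda p: binom_cdf(k, n, p), alpha / 2, False)
    return lower, upper


if __name__ == "__main__":
    # Hypothetical counts: 20 flagged claims among 1,000 innocence claims.
    print(20 / 1000, clopper_pearson(20, 1000))
```

Bisection on the binomial CDF keeps the sketch within the standard library; where SciPy is available, `scipy.stats.beta.ppf` gives the same bounds directly.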

Notes

Liebman et al. (1999) provide estimates of U.S. legal errors beyond factual innocence in capital cases.

Assuming exonerations only occur in cases where the defendant was actually innocent (which may not be the case), prevalence estimates in this sub-literature are likely to be lower bounds on the actual rate of wrongful conviction in capital cases, since they are samples of surfaced wrongful conviction cases rather than samples of all wrongful convictions. The degree of bias in this estimator will be a function of the bias in the “surfacing” mechanism, which is a combination of post-adjudication legal effort and publicity as well as the discoverability of new information bearing on the guilt or innocence of the convicted person or his/her alternates.

A similar commitment of effort can be seen in the health literature on self-reporting on disease and treatment (U.S. National Center for Health Statistics 1965a, b, 1966, 1967; Hill and Ross 1982; Harlow and Linet 1989; Bergmann et al. 1998) as well as earnings and employment history (Borus 1966; Weiss 1968).

Scholars of wrongful convictions have maintained the distinction between two forms of innocence: factual and legal. [For a non-binary typology based on the legal concept of burden of proof, see Laufer (1995).] Factual innocence, also referred to as actual innocence (Zalman et al. 2008), generally refers to the situation in which a defendant is convicted of a crime that he or she did not actually commit. In the simplest and most extreme case, the factually innocent and wrongfully convicted person was not present and had no involvement in or responsibility for the crime. Cases of mistaken identity, in which the charged defendant is erroneously identified as the perpetrator despite not having been present, are a typical example of true factual innocence. However, factual innocence is not limited to cases of mistaken identity. By contrast, legal innocence, also known as procedural innocence (Findley 2010), is commonly understood to refer to the situation in which a defendant is at least partially, if not wholly, responsible for the crime of which he or she was tried and convicted, but some element of mandatory legal procedure was not properly followed during the investigation or prosecution of the case. Examples of these factually guilty and legally innocent persons include cases in which the state relied on illegally gathered evidence, access to counsel was denied, or the impartiality of the jury was compromised.

In subsequent work they have expanded this approach to non-custodial populations (Sigurdsson and Gudjonsson 1996; Gudjonsson et al. 2006, 2009). In the U.S., Redlich and colleagues have also employed this methodology to understand the predictors of false confessions (see, for example, Redlich et al. 2010).

Pre-testing revealed that altering the order of the categories had little effect on the number of factual innocence claims but did redistribute other claims across similar categories. For analysis purposes, if respondents checked multiple categories, they were categorized by the highest level of responsibility they reported.
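The multiple-check coding rule can be sketched as follows. The three category labels and their ordering are hypothetical stand-ins chosen for illustration; the actual CJES response options are not reproduced in this section.

```python
# Hypothetical response categories, ordered from least to most self-reported
# responsibility (illustrative labels, not the CJES item wording).
RESPONSIBILITY_LEVELS = (
    "not_involved",        # claims complete (factual) innocence
    "partially_involved",  # claims some, but not full, involvement
    "fully_responsible",   # acknowledges full responsibility
)
_RANK = {label: rank for rank, label in enumerate(RESPONSIBILITY_LEVELS)}


def classify(checked):
    """Collapse a multi-checked item to the highest level of responsibility reported.

    Returns None for item non-response; raises KeyError for an unknown label.
    """
    if not checked:
        return None
    return max(checked, key=lambda label: _RANK[label])


if __name__ == "__main__":
    # A respondent who checked both of these boxes is coded as partially involved.
    print(classify({"not_involved", "partially_involved"}))
```

Resolving ties toward the higher level of responsibility means a multi-checked response can only lower, never raise, the count of factual innocence claims.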

While Spanish language copies of the instrument were available upon request, no effort beyond pre-testing was made to address other issues of respondent comprehension. This was intentional, as the other approaches considered for solving reading, language, or other comprehension problems would likely have undermined assurances of anonymity.

The DOC relies on demographic information provided by committing courts. Courts often classify groups such as Dominican individuals as Black while these individuals frequently self-identify as Hispanic on classification forms.

Randomized response methods (RRM), and newer alternatives (Tan et al. 2009), rely on purposefully and transparently adding noise to each individual respondent's data at the time of collection. Although this necessarily increases the variance of the estimator, measures of central tendency can still be recovered. However, these approaches do not actually verify that respondents are being truthful. Instead, they promise respondents that no individual answer can be interpreted with certainty. If respondents understand and believe these promises, the methods have the potential to produce more truthful responses. The trade-off, however, is increasingly complex surveys, which could theoretically lead to lower response rates or lower-quality responses if respondents are confused by or distrustful of the more elaborate procedures (see, for example, Berman et al. 1977; Boruch 1979). Putting aside this possible trade-off, at a more fundamental level, researchers' confidence in RRM or similar methods when used to measure an unverifiable quantity can be no stronger than their belief that respondents no longer have any incentive to mislead. As long as a residual incentive to mislead remains, these methods cannot guarantee an unbiased estimator. For more on randomized response methods, see generally (Warner 1965; Greenberg et al. 1971; Boruch 1972; Raghavarao and Federer 1979; Fox and Tracy 1980, 1984; Tracy and Fox 1981; Miller 1981; Tan et al. 2009; Coutts and Jann 2011).
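For readers unfamiliar with randomized response, the sketch below simulates Warner's (1965) original design, one member of the RRM family cited above; it was not used in the CJES. It assumes a hypothetical true prevalence and a randomizing device that presents the sensitive statement with probability `p` (and its negation otherwise). It illustrates both points made in this note: the prevalence can still be recovered from the noisy answers, but only at the cost of extra variance, and nothing in the procedure detects a respondent who lies regardless of the randomization.

```python
import random


def simulate_warner(true_prevalence: float, p: float, n: int, seed: int = 0) -> float:
    """Simulate Warner's randomized response design and return the prevalence estimate.

    With probability p a respondent is shown "I belong to group A", otherwise
    "I do not belong to group A", and answers that statement truthfully. The
    researcher sees only the yes/no answer, not which statement was shown.
    """
    if p == 0.5:
        raise ValueError("p = 0.5 makes the prevalence unidentifiable")
    rng = random.Random(seed)
    yes = 0
    for _ in range(n):
        member = rng.random() < true_prevalence
        shown_direct = rng.random() < p
        answers_yes = member if shown_direct else not member
        yes += answers_yes
    lam = yes / n  # observed share of "yes" answers
    # P(yes) = p * pi + (1 - p) * (1 - pi)  =>  pi = (lam - (1 - p)) / (2p - 1)
    return (lam - (1 - p)) / (2 * p - 1)


def warner_variance(pi: float, p: float, n: int) -> float:
    """Sampling variance of the Warner estimator: direct-question term plus noise term."""
    return pi * (1 - pi) / n + p * (1 - p) / (n * (2 * p - 1) ** 2)


if __name__ == "__main__":
    print(simulate_warner(0.10, 0.75, 200_000, seed=1))
    # Variance with randomization versus asking the question directly (n = 1,000).
    print(warner_variance(0.10, 0.75, 1_000), 0.10 * 0.90 / 1_000)
```

The second term of `warner_variance` is the price of the privacy guarantee; it grows without bound as `p` approaches 0.5.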

All reported confidence intervals were computed using bootstrapping (Efron and Tibshirani 1986). While incidence estimates reflect the population and are therefore not subject to sampling error, these intervals are particularly useful when interpreting estimates conditional on covariates such as those reported in Figs. 3 and 4. For an alternative view of population statistics as samples from super-populations, see Deming and Stephan (1941) and Thygesen and Ersbøll (2014). In addition, non-sampling error bounds were computed to account for the small degree of unit and item non-response in the survey administration. These bounds were computed following Manski (2003), under the assumption that all non-respondents would have given each possible response with a probability of 1. The resulting bounds (± 8%) were considerably wider than the intervals generated by the bootstrap. We chose not to report these bounds after an examination of the correlation between response rates and population estimates by survey batch revealed only a weakly positive relationship, contradicting the much stronger assumption underlying the Manski bounds.
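The two kinds of interval described in this note can be contrasted in a minimal sketch, assuming a single binary (0/1) survey item and hypothetical counts: 950 respondents, 57 of whom give the response of interest, and 50 non-respondents. The percentile bootstrap resamples respondents with replacement; the Manski-style worst-case bounds assume that non-respondents would all have given, or all have withheld, that response. The function names are illustrative and this is not the authors' code.

```python
import random


def bootstrap_ci(values, reps=2000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for the mean of 0/1 values (a proportion)."""
    rng = random.Random(seed)
    n = len(values)
    stats = sorted(sum(rng.choices(values, k=n)) / n for _ in range(reps))
    k = int(round(alpha / 2 * reps))  # replicates trimmed from each tail
    return stats[k], stats[reps - 1 - k]


def manski_bounds(n_yes, n_respondents, n_nonrespondents):
    """Worst-case bounds on the population share giving a particular response.

    Lower bound: no non-respondent would have given that response.
    Upper bound: every non-respondent would have given it (probability 1).
    """
    total = n_respondents + n_nonrespondents
    return n_yes / total, (n_yes + n_nonrespondents) / total


if __name__ == "__main__":
    respondents = [1] * 57 + [0] * 893  # hypothetical: 57 of 950 answer "yes"
    print(bootstrap_ci(respondents))
    print(manski_bounds(57, 950, 50))  # (0.057, 0.107)
```

The worst-case interval always has a width equal to the non-response share (here 5 percentage points) no matter how large the sample is, which is why it can be much wider than a bootstrap interval even when non-response is modest.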

In many jurisdictions, including the setting for this study, crimes such as murder, manslaughter and rape may encompass a wide range of conduct linked by a common outcome (e.g., death of a victim, sexual contact without legal consent). These complex scenarios, often requiring the subjective testimony of witnesses or participants, may contribute to the higher rate of claims of partial involvement observed here. While beyond the scope of this analysis, this supposition warrants additional inquiry.

Many of the rape convictions involve case circumstances quite different from those addressed by the Urban Institute study referred to earlier in this paper. In that study, stranger rape and the resulting blind search of a DNA database were the basis of the 3% prevalence estimate. The self-reported rape and sexual assault cases in the present study can be assumed to include a substantial number of non-stranger or non-disputed sexual contact cases. These involve a claim not of complete non-presence or non-involvement, which would trigger the collection and eventual analysis of DNA, but instead that the sexual contact was not criminal because a legal element was missing. While these cases are necessarily murkier than those described in the Virginia study, they are nonetheless claims of factual innocence.

Similarly, these results do not address the probability or truthfulness of claims that any given individual defendant, whether within the jurisdiction in which these data were collected or beyond, has been wrongfully convicted or denied due process.

References

Bedau HA, Radelet ML (1987) Miscarriages of justice in potentially capital cases. Stanford Law Rev 40:21–179

Bergmann MM, Byers T, Freedman DS, Mokdad A (1998) Validity of self-reported diagnoses leading to hospitalization: a comparison of self-reports with hospital records in a prospective study of American adults. Am J Epidemiol 147:969–977

Berman J, McCombs H, Boruch R (1977) Notes on the contamination method: two small experiments in assuring confidentiality of responses. Soc Methods Res 6:45–62

Biderman AD (1967) Surveys of population samples for estimating crime incidence. Ann Am Acad Pol Soc Sci 374:16–33

Biderman AD, Reiss AJ (1967) On exploring the “dark figure” of crime. Ann Am Acad Pol Soc Sci 374:1–15

Blumberg AS (1967) The practice of law as confidence game: organizational cooptation of a profession. Law Soc Rev 1:15–39

Borchard E (1932) Convicting the innocent; errors of criminal justice. Yale University Press, New Haven

Boruch RF (1972) Relations among statistical methods for assuring confidentiality of social research data. Soc Sci Res 1:403–414

Boruch RF (1979) Assuring the confidentiality of social research data. University of Pennsylvania Press, Philadelphia

Borus ME (1966) Response error in survey reports of earnings information. J Am Stat Assoc 61:729–738

Brandon R, Davies C (1973) Wrongful imprisonment: mistaken convictions and their consequences. Allen and Unwin, Crows Nest

Carson EA (2014) Prisoners in 2013. Bureau of Justice Statistics, Washington, D.C.

Cernkovich SA, Giordano PC, Pugh MD (1985) Chronic offenders: the missing cases in self-report delinquency research. J Crim Law Criminol 76:705–732

Chaiken JM, Chaiken MR, Peterson JE (1982) Varieties of criminal behavior. RAND Corporation, Santa Monica

Coutts E, Jann B (2011) Sensitive questions in online surveys: experimental results for the randomized response technique (RRT) and the unmatched count technique (UCT). Soc Methods Res 40:169–193

Deming WE, Stephan FF (1941) On the interpretation of censuses as samples. J Am Stat Assoc 36:45–49

Dempster AP, Laird NM, Rubin DB (1977) Maximum likelihood from incomplete data via the EM algorithm. J R Stat Soc Ser B Methodol 39:1–38

Dwyer J (2000) Actual innocence: five days to execution and other dispatches from the wrongly convicted, 1st edn. Doubleday, New York

Efron B, Tibshirani R (1986) Bootstrap methods for standard errors, confidence intervals, and other measures of statistical accuracy. Stat Sci 1:54–75

Elliott DS (1995) Lies, damn lies, and arrest statistics. Center for the Study and Prevention of Violence, Boulder

Erickson ML, Empey LT (1963) Court records, undetected delinquency and decision-making. J Crim Law Criminol Police Sci 54:456–469

Farrington DP (1977) The effects of public labelling. Br J Criminol 17:112–125

Findley KA (2010) Defining innocence. Albany Law Rev 74:1157–1173