Abstract

Objective

This paper presents a new quasi-experimental approach to assessing place based policing to encourage the careful evaluation of policing programs, strategies, and operations for researchers to conduct retrospective evaluations of policing programs.

Methods

We use a synthetic control model to reduce the bias introduced by models using non-equivalent comparison groups to evaluate High Point’s Drug Market Intervention and demonstrate the method and its versatility for evaluating programs retrospectively.

Results

The synthetic control method was able to identify a very good match across all socio-demographic and crime data for the intervention and comparison area. Using a variety of statistical models, the impact of High Point Drug Market Intervention on crime was estimated to be larger than previous evaluations with little evidence of displacement.

Conclusions

The synthetic control method represents a significant improvement over the earlier retrospective evaluations of crime prevention programs, but there is still room for improvement. This is particularly important in an age where rigorous scientific research is being used more and more to guide program development and implementation.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Evidence-based crime prevention is a part of a larger and increasingly expanding movement in social policy to use scientific research evidence to guide program development and implementation. In general terms, this movement is dedicated to the improvement of society through the utilization of the highest quality scientific evidence on what works best (Farrington 2003). The reality in the field, unfortunately, is that program evaluation, at best, is an afterthought. Current approaches to program development and implementation do not well position police departments to determine whether the adopted strategies are actually generating the desired outcomes. More broadly, it hinders the police profession from developing a scientifically-based body of knowledge on “what works” in crime control and prevention (Weisburd and Neyroud 2011). Ideally, place-based police interventions should be evaluated using randomized controlled trials (see, e.g., Weisburd and Gill 2013). However, randomized experiments require considerable a priori planning that is often not possible in many police departments (Braga 2010).

Recent methodological developments, fortunately, can be opportunistically applied to conduct rigorous ex-post-facto evaluation designs of place-based police interventions (see, e.g., Braga et al. 2011). When elements of randomization through experimental manipulation or variation in the natural environment are not feasible, other quasi-experimental methods have been used successfully to reduce confounds. For interventions confined to limited and well-defined geographic areas, interrupted time series and difference-in-difference methods, in conjunction with neighboring control neighborhoods can be useful to remove many, but not all threats to validity. These methods have been used to evaluate gang injunctions (Grogger 2002), combined with propensity score matching to evaluate focused deterrence programs (Corsaro et al. 2012), and used to assess whether business improvement districts reduce crime (MacDonald et al. 2010).

This paper presents an application of a relatively new quasi-experimental method of synthetic controls to measure the impact of a placed-based policing intervention, taking advantage of the unique characteristics of the geography in which it is conducted. The synthetic control approach has been used successfully in macro-level studies in political science to measure the economic impact of terrorist conflict in Basque Country (Abadie and Gardeazabal 2003) and tobacco prevention legislation in California (Abadie et al. 2010). We modify the synthetic control approach developed by Abadie and colleagues to better suit smaller scaled place-based interventions, which can be used in criminology and other social sciences. We use the synthetic control quasi-experimental design to evaluate the impact of a well-known place-based focused deterrence strategy—the High Point Drug Market Intervention program to reduce disorderly narcotics sales and violent behavior by drug sellers in local illicit overt drug markets. Our analyses build on earlier evaluations (Corsaro 2013; Corsaro et al. 2012; Frabutt et al. 2009; Kennedy and Wong 2009) and examine the program in a variety of ways, including its impact on different dependent variables across different time periods and how it may displace crime to other drug markets or areas with similar criminogenic environmental factors.

Drug Market Intervention as a Guiding Example of Synthetic Controls

The Emergence of Focused Deterrence and the Drug Market Intervention

A recent innovation in policing that capitalizes on the growing evidence of the effectiveness of police deterrence strategies is the “focused deterrence” framework, often referred to as “pulling-levers” policing (Kennedy 1996). Pioneered in Boston as a problem-oriented policing project to halt serious gang violence during the 1990s (Braga et al. 2001; Kennedy et al. 1996), focused deterrence strategies honor core deterrence ideas, such as increasing risks faced by offenders, while finding new and creative ways of deploying traditional and non-traditional law enforcement tools to do so, such as directly communicating incentives and disincentives to targeted offenders. In its simplest form, the focused deterrence approach consists of selecting a particular crime problem, such as youth homicide; convening an interagency working group of law enforcement practitioners; conducting research to identify key offenders, groups, and behavior patterns; framing a response to offenders and groups of offenders that uses a varied menu of sanctions (i.e., “pulling levers”) to stop them from continuing their violent behavior; focusing social services and community resources on targeted offenders and groups to match law enforcement prevention efforts; focusing community normative expressions on targeted offenders and groups; and directly and repeatedly communicating with offenders to make them understand why they are receiving this special attention and what the special attention comprises (Kennedy 1996, 2009a).

The Drug Market Intervention (DMI) is a pulling-levers, focused deterrence intervention designed to close down open-air drug markets, modeled after a series of similar interventions in Boston and other cities with slightly different aims. The DMI was first implemented in High Point, North Carolina, a city comprised of some 104,000 residents in 2010 and covering some 50 square miles. In 2003, the High Point Police Department (HPPD), along with the Middle District of North Carolina United States Attorney’s Office, began working with David Kennedy to develop a different approach to the violence associated with their chronic drug problems (Kennedy and Wong 2009). They developed a focused deterrence strategy aimed at eliminating public forms of drug dealing such as street markets and crack houses by warning dealers, buyers, and their families that enforcement is imminent (Kennedy and Wong 2009). With individual “overt” drug markets as the unit of work, the project employed a joint police-community partnership to identify individual offenders; notify them of the consequences of continued dealing; provide supportive services through a community-based resource coordinator; and convey an uncompromising community norm against drug dealing.

The DMI seeks to shut down overt drug markets entirely (Kennedy 2009a). Enforcement powers are used strategically and sparingly, employing arrest and prosecution only against violent offenders and when nonviolent offenders have resisted all efforts to get them to desist and to provide them with help. Through the use of “banked” cases,Footnote 1 the strategy makes the promise of law enforcement sanctions against dealers extremely direct and credible, so that dealers are in no doubt concerning the consequences of offending and have good reason to change their behavior. The strategy also brings informal social control to bear on dealers from immediate family and community figures. The strategy organizes and focuses services, help, and support on dealers so that those who are willing have what they need to change their lives. In the wake of an enforcement operation, the law enforcement—community coalition hold a “call-in” where partnering agencies communicate enforcement threats and make offers of social services to targeted drug offenders. Each operation also includes a maintenance strategy to prevent the drug market from returning. For a detailed description of the DMI, see (Frabutt et al. 2009; Kennedy and Wong 2009).

Prior Impact Evaluations of DMI Programs

Frabutt et al. (2009) evaluated the High Point DMI using multiple methods and found that it was associated with a decrease in drug offenses and both property and violent crime. In a simple pre–post treatment-group-only evaluation, Kennedy and Wong (2009) reported that violent crime decreased 39 % and drug crime decreased by 30 % in the West End. Corsaro et al. (2012) recognized limits in the rigor of prior DMI evaluations and attempted to build stronger comparisons into their analysis of the DMI in High Point. The authors first examined the effects of the intervention in a way similar to the previous set of studies, identifying the difference in violent crime rates before and after the intervention using a HGLM with a Poisson distribution with separate analyses in intervention areas and adjacent areas. They then built on this approach by creating a comparison group using propensity score matching. Propensity score matching techniques generally attempt to create equivalent treatment and comparison groups by summarizing relevant pre-treatment characteristics of each subject into a single-index variable (the propensity score) and then matching subjects in the untreated comparison pool to subjects in the treatment group based on values of the single-index variable (Rosenbaum and Rubin 1983, 1985).

These two approaches used by Corsaro et al. (2012) provided similar results—the decrease in violent crime in targeted areas was 18 % in the unweighted model and 13 % in the matched model. Following this, they also added a group-based trajectory analysis. This approach found that the intervention was associated with a 17 % decrease in violent crime in chronically high crime areas. Conversely, the intervention was also associated with a 67 % increase in violent crime in negligible crime areas, but since this increase was based on a negligibly low initial rate it was much smaller than the decrease in high crime areas and was viewed as substantively unimportant. These approaches represent a great methodological step forward in evaluating place-based crime prevention interventions. However, there is still a question as to whether they represent the best comparison groups.

In a follow-up study, Corsaro examined differences between the sequential intervention effects and outcomes (Corsaro 2013). He found that the first intervention was driving the significant results of DMI on violent crime and that the rest of the interventions had impacts on property offenses, but not violence. None of the interventions had significant impacts on drugs/disorder offenses.

High Point DMI as a Case Study for the Synthetic Control Approach

Future evaluations of DMI focused deterrence strategies would ideally develop randomized block experimental designs similar to those used by place-based policing evaluations to test the impact of the DMI intervention on drug market places (see, e.g., Braga and Bond 2008; Weisburd and Gill 2013; Weisburd and Green 1995). Unfortunately, most jurisdictions that adopt the DMI are responding to chronic drug market problems and do not seem amenable to the considerable a priori planning required to implement randomized field experiments. For instance, the U.S. National Institute of Justice (NIJ) is supporting a comprehensive evaluation of the DMI focused deterrence program in seven sites, each of which received technical assistance in the DMI from the U.S. Bureau of Justice Assistance via the School of Criminal Justice at Michigan State University.Footnote 2 The Rand Corporation serves as the evaluator of the NIJ-supported DMI initiative and, in their proposal, advocated the use of “randomization across and within jurisdictions will maximize our potential to draw strong inferences and make sound policy recommendations.”Footnote 3 Regrettably, not a single participating site was willing to participate in a randomization scheme either across cities or within cities.

There are many challenges when developing rigorous ex-post-facto evaluations of place-based policing interventions (for a discussion, see Braga et al. 2011). Comparing a high crime area to the entire city can be problematic—as a city will largely contain lower crime areas with a few higher crime areas, significant decreases in crime may be obscured when averaging across the entire city. An alternative to using the entire city is to use a selected neighborhood that is arguably similar in some way. Often, the comparison neighborhood is selected because of its geographic proximity to the intervention neighborhood, the adjacent neighborhood, but this can also be problematic. In practice, the adjoining areas can sometimes be different from the target areas in meaningful ways. Partly this is by design, as the target areas are selected for their crime density.

The synthetic control method of retrospectively evaluating place-based programs can potentially add a new and flexible rigorous quasi-experimental evaluation technique to criminologists’ (or any social scientists’) methodological toolbox. The synthetic control approach, to our knowledge, has not been used in tests of criminological theory or in the evaluation of crime and justice programs. The High Point DMI provides a useful opportunity to demonstrate a new synthetic control method for comparative case studies because there are several published research studies already to allow for comparisons between the older and newer methods clear.

Abadie and colleagues developed a comparative case study method that creates a “synthetic” control where traditional regression methods are not sufficient to control for differences in treatment and comparison sites (Abadie et al. 2010; Abadie and Gardeazabal 2003). They use a data-driven approach to selectively weight candidate comparison areas using observed characteristics so that the weighted collection of comparison areas have features that match those the treatment group’s features. The main advantage of this method over those used previously is that the weights allow for greater flexibility than simply inclusion or exclusion of candidate comparison areas. Comparison areas that closely resemble the treatment area can have large weights. If the treatment area has some special features, such as a high rate of auto theft or a unique demographic composition, comparison areas that share those features can receive more weight, enough weight to align with the treatment area.

Using this method, researchers can report the relative contribution of each comparison unit and test how similar (or different) the intervention and synthetic control groups are to understand how other variables may be biasing effect size estimates. Of course, as with any statistical method to overcome the bias introduced with non-comparable comparison units, the matching is only as good as the data on which it is based, which means that the selection of the variables that go into the model must be defensible.

In this paper, we will first describe the method we used to create a synthetic control drug market and the statistics we will use to measure the impact of the DMI on the target market, and then examine possible displacement using a trait-based substitution—meaning that we looks to see if the “crime” associated with one overt drug market is displaced to different overt drug market. We will provide the results of our analyses, starting with very simple descriptive statistics and moving into the more complex modeling and sensitivity analyses using our synthetic controls, and explore displacement. We will discuss the benefits and challenges of using this technique, and conclude with a summary of both the program’s impact and the potential benefit of using synthetic control designs when conducting post hoc evaluations of place-based policing interventions.

Methods

Creating a Synthetic Control for Comparative Case Studies

The development of an effective synthetic comparison area depends on deriving weights for the candidate comparison areas so that collectively they resemble the treatment area. Our approach can balance on both cross sectional and longitudinal trends to create a control group that looks as similar to the intervention group as possible during the pre-intervention period. We have modified the SYNTH function (Abadie et al. 2011) to reduce both the differences in averages and standard deviations across much smaller geographic units that experience a great deal of variation over time in features that we want to match. See the Appendix in ESM for R code with simulated data with comments on how it works.

Let x 0 represent a vector of features for the treatment area (e.g., number of male residents ages 15–24, number of reported drug crimes) and n 0 are appropriate normalizing features for x 0 (e.g., total residential population). Similarly, let x i and n i represent the features and normalizing features for candidate comparison area i. Mathematically, our aim is to solve for weights, w i for i = 1, …, N, to minimize the difference between \(\frac{{{\mathbf{x}}_{0} }}{{n_{0} }}\) and \(\sum\nolimits_{i = 1}^{N} {w_{i} {\mathbf{x}}_{i} } /\sum\nolimits_{i = 1}^{N} {w_{i} n_{i} }\) as measured by some objective function. In our example we use the sum of the absolute differences between \(\frac{{{\mathbf{x}}_{0} }}{{n_{0} }}\) and \(\sum\nolimits_{i = 1}^{N} {w_{i} {\mathbf{x}}_{i} } /\sum\nolimits_{i = 1}^{N} {w_{i} n_{i} }\) as our objective function. Solving for the optimal weights involves an iterative numeric search that increases or decreases the w i that offers the greatest decrease in the objective function. That is, we manipulate the weights so that at each step the weighted comparison areas look more and more like the treatment area.

This is an adaptation of the approach described in (Abadie et al. 2011). Their approach, which conceptually provides the framework we use here, would compute weighted averages of candidate comparison neighborhoods’ rates. However, some rates have high variance especially for small areas. Some crimes, such as certain property crime, occur with relative frequency, while others, such as homicide, occur infrequently (especially when examining them at the monthly block level).Footnote 4 Rather than average rates as \(\sum\nolimits_{i = 1}^{N} {w_{i} \frac{{{\mathbf{x}}_{i} }}{{n_{i} }}}\), per the Abadie et al. (2011) approach, we create a single synthetic control area, compute a weighted total crime counts, and then normalize by the weighted total residential population.

For a given treatment area, the set of weights, w i s, defines the synthetic control. The weights were calculated on data from two years prior to each of the interventions. We matched of both reported crime and arrest rates for: (1) homicide, (2) rape, (3) robbery, (4) assault, (5) burglary, (6) larceny, (7) motor vehicle theft, (8) arson, (9) all UCR part I criminal offenses, (10) drug offenses, (11) all violent offenses, and (12) all criminal offenses, and demographics: (1) number of households, (2) families, (3) race, (4) ethnicity, (5) population of males, and (6) population of males between 18 and 25. Therefore, our matches include 585 variables (24 months × 12 crime incident variables × 12 arrest variables + nine + demographic controls) to capture both time invariant and variant features.

Checking the Weights

The HPPD provided geocoded crime data from 1997 through 2011 on crime incidents (n = 223,917) and calls for service (n = 965,447) to test the new method of creating a synthetic comparison market for the purpose of evaluating DMI. The dataset categorizes the crime incidents into violent and drug crimes. This data was merged with block-level Census data from 2000 (n = 1,835). During the period of study, High Point held five interventions in different neighborhoods: (1) West End, May 2004, 36 blocks (2) Daniel Brooks, April, 2005, 25 blocks (3) Southside, June 2006, 31 blocks (4) East Central, August 2007, 29 blocks, and (5) Washington, February 2010, 24 blocks.

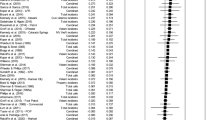

Table 1 and Fig. 1 demonstrate the quality of the synthetic control. The first column represents High Point as a total, which could be considered an unweighted comparison, and the bolded numbers represent where the intervention areas differed from High Point as a whole. For all features, the synthetic control was closely aligned with the features of the treatment area and there were few significant differences. For example, each of the intervention areas are less white than the metropolitan area as a whole. The Daniel Brooks, Southside, East Central, and Washington neighborhoods have a higher percentage of black residents as compared to the city as a whole, while the West End and Southside neighborhoods have a higher percentage of Hispanic residents. The weighting corrects for these demographics as well as differences in crime levels. After the weighing, there were only three variables that were found to be significantly different between the intervention zone and its synthetic comparison—only one more than would be expected by chance, see Table 1.

If the synthetic control is effective, then in the year prior to the weighting the synthetic control and the treatment area should still match on features and outcomes since no intervention was put in place. Therefore, we examined the crime rates in the year prior to the weighting period (e.g., 3 years before the intervention) and found that the synthetic control was essentially statistically indistinguishable from the intervention sites on all available variables, which included the socio-demographics, crime levels, and crime trends (a total of 295 variables). Figure 1 demonstrates the quality of the synthetic control most strikingly. Not only does the rate of calls for service match, but the entire calls for service trend is a close match over a 3 year period. The ability to match, not just averages and percentages, but entire trends demonstrates that this method is a substantial methodological improvement in development of comparison areas. We created similar graphs to Fig. 1 for all 24 time-varying features we matched on and the levels and trends matched les for each of the five treatment areas and their synthetic controls.

Multivariate Analysis

Difference-in-Difference Analysis

Differences-in-Differences (DD) estimation has become an increasingly popular way to estimate causal relationships (Bertrand et al. 2004; Campbell and Stanely 1963; Card and Krueger 1995). DD estimation consists of identifying a specific intervention or treatment (e.g., passage of a law) and comparing the difference in outcomes after and before the intervention for groups affected by the intervention to the same difference for unaffected groups. The DD estimator is defined as the difference in average outcome in the treatment group before and after treatment minus the difference in average outcome in the control group before and after treatment. We incorporated the synthetic control weights in a DD estimator to estimate the impact of the intervention on the four crime outcomes: calls for service, all criminal incidents reported to the police, all violent incidents, and all drug crimes.

The ordinary least squares (OLS) difference in difference model is estimated as a fixed effects regression model, as shown in Eq. (1):

where y it represents the outcome y variable for case i at time t, β is the coefficient that estimates the treatment effect, x it is the treatment status for case i at time t (i.e., it is equal to zero pre-intervention for both intervention and control groups, and equal to zero post-intervention for the control group, and equal to one post-intervention for the intervention group). There are two error terms in the model, α i is the case level error term and represents an error for each individual, and therefore represents the combined effects of all unobserved variables that are time invariant. \(\epsilon_{it}\) is the error term representing random variation at each time.

The outcome of interest is a count variable (e.g., monthly number of crimes per block), and hence the OLS estimator is inappropriate. The Poisson regression model is often used when the outcome is a count variable, but the Poisson model makes an assumption that conditional variance is equal to the conditional mean, and this assumption is rarely satisfied. After careful sensitivity testing reviewing recommended assumptions (Berk and MacDonald 2008), we decided, we estimate the model with the negative binomial regression model, which can be considered an extension of the Poisson regression model (Long 1997).

In the Poisson regression model the conditional mean μ i is given by Eq. (2):

In the negative binomial model, the random variable \(\tilde{\mu }_{i}\) is estimated instead, Eq. (3):

where ε is a random error, uncorrelated with x. Variation in \(\tilde{\mu }\) is considered to be due to x and other sources of randomness. δ i is an additional heterogeneity term, with mean assumed equal to 1.0 for identification purposes. The model parameters are estimated with maximum likelihood. The parameters for the independent variables were expressed as incidence rate ratios. Incidence rate ratios are interpreted as the rate at which things occur; for example, an incidence rate ratio of 0.90 would suggest that, controlling for other independent variables, the selected independent variable was associated with a 10 % decrease in the rate at which the dependent variable occurs.

Meta Analysis

The difference-in-difference analysis provides a separate estimate of the treatment effect (plus standard error) for each of the intervention areas. To improve the interpretability of these estimates we combine them using meta-analysis, as implemented in the Meta for package for R (Viechtbauer 2010) In a fixed effects meta-analysis, we assume that the effect in each study (y) is a function of the true effect size (θ) plus random variation (e), see Eq. (4).

It is possible that there are additional sources of heterogeneity in the effect sizes to be pooled. If this is the case, a random effects meta-analysis is appropriate. In a random effects analysis, see Eqs. (5) and (6):

where:

If τ 2 = 0 then there is considered to be heterogeneity in the effects. Fixed effects meta-analysis is appropriate when the goal is only to make an inference about the effect sizes that are combined in the meta-analysis. If the goal is to generalize beyond these effects, then random effects meta-analysis should be used (Hedges and Vevea 1998). The i 2 statistic describes the proportion of unexplained variance.

Displacement Analysis

Traditional displacement analyses examine whether or not criminal activity “moves,” or occurs in another location due to a place-based intervention (Reppetto 1976) or whether there is a “diffusion” of crime control benefits due to criminals overestimating the reach of the place-based intervention (Clarke and Weisburd 1994). Most place-based policing programs have limited their analyses of displacement/diffusion effects to examining crime levels in areas immediately proximate to the targeted location (see, e.g., (Weisburd et al. 2006). Several analyses have failed to find evidence of this type of displacement with the DMI (e.g., Corsaro et al. 2012; Kennedy and Wong 2009). However, displacement may not simply be geographic—criminals may also change the types of crimes they engage in and/or move to areas outside their surrounding area of operation. As suggested by Cornish and Clarke (1987), criminals may be more likely to displace to locations that share similar criminal opportunity structures to the locations where a crime prevention program has been implemented.

We focused our investigation of displacement to the movement of crime between the five overt drug markets since the first DMI in West End. Activity associated with one drug market may be displaced to a neighborhood across town where another drug market is already established. This is an example of trait-based displacement associated with substitution to another neighborhood with similar characteristics. According to the police in High Point, these five areas represent all the overt drug markets in their jurisdiction.

These other overt drug markets may be natural substitutions for the intervention markets (if they are truly shut down). We model this trait-based substitution in two ways. First, we ran a series of 40 regressions to determine if crime goes up or down in later intervention areas when earlier ones happen. Then we run 40 additional regressions, modeling difference-in-difference models between each subsequent intervention area against their synthetic control. These analyses test for different mechanisms of potential displacement because the synthetic comparison does not represent crime “moving around the corner” but to places elsewhere in High Point with similar criminogenic features to treated locations.

Results

Bivariate Analysis

In general, the four selected crime indicators indicate lower levels of crime post-intervention in the target areas—with calls for service, all crime incidents, and violent crime dropping in four of the five intervention zones in the 1 year following the intervention, and drug crime dropping in three zones. This is initial evidence that the intervention is associated with a drop in crime in raw number and a significant drop in calls for service in the intervention group; however, multivariate statistical tests are required to draw causal inference and more precise estimates and associated significance testing, see Table 2.

Multivariate Analysis

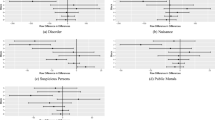

Using the weights derived from the creation of the synthetic control, a difference-in-difference estimator using a negative binomial regression was calculated for the measures of crime in each DMI target markets.Footnote 5 The difference between the difference in crimes rates in the intervention site and the difference in crime rates in the control for the period of 12 months after the intervention were compared.Footnote 6 Only 2 of the 20 outcome variables/neighborhood pairs show an effect at the 5 % confidence level. However, they do present consistent trends, with 16 of the 20 outcome variable/neighborhood pairs showing a decrease in rates in the intervention zones relative to their synthetic controls. If the effects are combined together using a meta-analytic pooling of random effects, the DMI interventions were associated with overall statistically significant decreases in calls for service and violent crimes, but not for drug crimes or crime reports more generally. The magnitude of the effect corresponds to a drop of 16 % for the call for service rate and 34 % for the violent crime rate, see Table 3. To calculate the approximate number of calls for service and violent crime avoided due to DMI, we multiply the percent reduction due to the intervention by the baseline count of crimes. This results in an estimated reduction of calls for service of 1,191 and 40 avoided fewer crimes across all intervention zones, or an average difference of 239 calls and 8 crimes, in the year after DMI, compared to their synthetic control.

Intervention Impact over Time

The DMI is touted as a sustainable solution to an overt drug market (Kennedy 2009b). The next set of analyses assesses the impact over different time periods, from 3 to 60 months post-intervention. These sensitivity analyses also provide greater statistical power. The table below compares the difference coefficient for different locations for different time periods for calls for service as an example, see Table 4.

When comparing the results by neighborhood, the decreases in the West End relative to the control generally become larger over time, but there are no clear trends for other locations. When comparing across offenses, the differences associated with all crimes and drug crimes become larger over time, but there are no apparent trends for calls for service and violent crimes. Additionally, the first months after the intervention see increased drug and all crime offenses relative to their synthetic controls (which could be consistent with increased enforcement) before the crime rates begin to drop. This can be seen more prominently graphically across each of the four dependent variables, see Figs. 2, 3, 4 and 5.

Examining the data across all available time periods, instead of just the 12 months following the intervention, there were decreases in crime on all measures in some places, and decreases in some measures in most places relative to their synthetic control areas, see Table 5. The effects are the clearest in the West End, where the intervention is associated with a decrease in all dependent variables. The effects in Washington are also clear, showing a decrease in counts for the intervention site for three of the four dependent variables. For the three other sites, the results are less clear, with most of the parameters being negative, but statistically insignificant.

When the effects are combined in random effects meta-analyses, the DMI is associated with decreases in calls for service, all crimes, and drug crimes, but not violent crimes, compared to the synthetic control market. For most of these offenses, the magnitude of the drop corresponds to offense rates that are 20–30 % lower relative to the synthetic control. However, the effect is larger for drug offenses, where the pooled coefficient corresponds to difference of 54 % from the control markets. Overall, DMI was associated with 1,593 fewer calls for service, 331 crimes, and 87 drug crimes, in the target zones compared to their controls, in each subsequent year after the intervention.

Displacement

The following analyses test this trait-based substitution effect by examining whether an intervention in one site (e.g., West End) had an effect on the crime rates in a subsequent site (e.g., Daniel Brooks) in the year following the intervention. These were examined both as the difference of one area pre- and post-intervention and as a difference-in-difference compared to the weighted controls for each area.

Crime increased in the Southside neighborhood after the West End intervention—calls for service increased by 19 % and drug crimes went up by 88 %. After the Daniel Brooks intervention, drug crime increased 71 % in the Southside area, but decreased by 42 % in Washington. After the Southside intervention, drug crime went up by 250 %. Three of four interventions coincided with increases in subsequent intervention areas, which were the only other overt drug markets. However, only the last coefficient is significant if we use Bonferroni-adjusted p value to account for testing the same hypothesis multiple times. These estimates, however, are simple pre-post examinations of crime trends and do not include any other controls, see Table 6.

The next set of analysis estimate the difference in the difference between each of the subsequent intervention sites and their synthetic controls. Once these controls are included, there are only two significant regression coefficients—there was an increase in calls for service and drug crimes in Southside compared to its synthetic control after the West End intervention, by 27 and 240 %, respectively. However, we would expect to find two significant findings just by chance and they are not significant using Bonferroni-adjusted p values. While a lack of evidence cannot prove that there is no effect, these analyses provide little to no evidence of a substitution effect at work (Table 7).

Discussion

Program Impact

Our analyses, which are based on strong and rigorous evidence, show that DMI’s impact may actually be larger than previously estimated. However, it is important to take these results in context. DMI’s impact on crime in High Point has received a great deal of attention and the Bureau of Justice Assistance has been investing in training new sites—Michigan State University and the National Network for Safe Communities are both providing training and technical assistance on DMI. Several researchers have suggested that the strategy may not work in different types of drug markets (Reuter and Pollack 2012) and that further replications are necessary to understand if and how it might work elsewhere.

The intervention effect is not the same across the five implementations, which mirrors the findings of an earlier study (Corsaro 2013). Many earlier analyses of the DMI have pooled these effects (Corsaro et al. 2012), and none included the final intervention area (Washington). Using meta-analysis, we find that in the year following a DMI, calls for service fell 16 % and violent crimes fell 34 % on average, while drug crimes and crimes generally initially rose then dropped with no net effect compared to a synthetic control market, see Table 8. Over a longer time period, both overall and drug crime generally continued to drop, with most measures of offense showing a 20–30 % decrease in crime rates generally and a 50 % drop in reported drug crimes rates compared to the synthetic control. The pooled effects are not very precisely estimated, due to high variability in effect size and low sample size, but they are associated with decreases in general crime of up to 50 % and decreases in drug crime between 25 and 72 %.

These results, which use stronger statistical controls than earlier work, find larger effect sizes and more significant effects, probably due to the fact that our estimates are more precise. Using matched groups, Corsaro and colleagues (Corsaro et al. 2012) found a 16 % reduction in violent crime across the pooled first four intervention zones, with a larger effect in high crime trajectory blocks. When the author investigated the differential impact of DMI across the intervention zones, he found that the effect was only significant within the first intervention zone (Corsaro 2013). Corsaro’s second set of analyses found a 20 % reduction in violent crime in West End whereas our analyses found a 70 % reduction. We also estimated a 65 % reduction in violent crime in Washington, an intervention that was not included in his paper. In addition, we found a much larger impact on drug crimes than previous evaluations (Corsaro 2013)—we found a 79 % drop in drug crime in West End (compared to a 41 % drop estimated previously), and decreases in the other intervention areas: 61 % in Southside, 39 % in East Central, and 72 % in Washington, resulting in an overall estimate of 54.2 % reduction in drug crime compared to their synthetic comparisons. Using this different, and arguably more comparable, control unit, we are finding larger and more precise intervention impacts than prior evaluations.

These analyses cannot determine which part of DMI led to its success and which of the underlying mechanisms are responsible for its impact. Is it mainly an incapacitation effect since the violent drug dealers are arrested and prosecuted? Is it mainly a deterrence effect because there is increased law enforcement after the call-in? Or, is it from increased informal social control and neighborhood collective efficacy? It is likely that it is some combination of a lot of different factors, but without accompanying qualitative data, it is difficult to draw concrete conclusions about how and why it may be working.

These analyses may indicate that some of the effects of the DMI come indirectly, through neighborhood effects, rather than reflecting the crime of the drug market directly. The evidence for this mechanism is that the full impact of the DMI does not appear immediately after the intervention, but in fact, grows over time—in some cases, for several years. This would suggest that the apparent decreases in crime do not reflect those crimes that directly result from the open air drug market but are acting through some indirect mechanism, possibly through creating a healthier, more resilient neighborhood. Many researchers have hypothesized about and identified effects of a DMI through setting norms and developing informal social controls are consistent with other evaluations of the DMI (Corsaro and Brunson 2013; Corsaro et al. 2011; Frabutt et al. 2009; Kennedy and Wong 2009). This suggests that DMI has a long term impact and did in fact, shut down the overt drug markets.

It does not appear that the DMI caused crime to be displaced to other markets, at least in the year after the call-in. As many observers suggest, measuring crime displacement and diffusion effects is very complex and it is possible that no study, no matter how well designed, will provide definitive answers on its diverse manifestations (Barr and Pease 1990; Weisburd et al. 2006). Examining the differences in the crime rates in other overt drug markets pre and post-intervention do not provide evidence of trait-based substitution, but this is not conclusive. Currently, there is little research that examines how drug markets, and the violent crime associated with drug markets, may change without an intervention, which would be an important baseline for the understanding of natural market movement and movement due to external pressures. Until it is established how these local overt markets remain geographically stable when left undisturbed, we will not have a normal distribution of movement against which to test displacement due to an intervention.

There is also heterogeneity in the effect, which was established in the meta-analysis. The first intervention and the last intervention have the largest effect while the second, third, and fourth interventions have a small or no effect. It is possible that these variations in the decreases in crime come from factors unrelated to the particular intervention, that the intervention cannot be replicated with the same impact across different settings, or even that the intervention is not sustainable over time. However, it is also possible that the sequence of the interventions does matter, or that there were variations in implementation across the target zones that explain their differential effects. With the first intervention, the crime from the West End drug market could be displaced to similar areas of the city and the difference between the West End and the controls would appear large due to an increase in the controls. As subsequent markets are shut down, the number of similar areas available for displacement would decrease, so the effects of the intervention would appear smaller. If, however, there are finally too few similar areas into which the crime could be displaced, then the decrease in crime would re-emerge as an actual decrease in crime rather than only a relative decrease in crime. This sequence of events would suggest the need to implement the DMIs widely, to eliminate not only the initial overt drug markets but subsequent markets to which displacement could occur. While this explanation does fit the pattern of findings, it is only one of a number of possible explanations.

Utility of Synthetic Control for Comparative Case Studies Applied to Policing Program Evaluation

The High Point DMI experience highlights how the realities of police program development and implementation are indeed compatible with an evidence-based policing model (see also Braga et al. 2011). While policing is making strides towards developing a rigorous knowledge base on effective crime prevention practices (Weisburd and Neyroud 2011), the profession is often limited in its ability to pair the implementation of new programs with rigorous evaluation designs. To overcome the challenges of evaluation in real world settings, evaluators need to continuously develop innovative approaches that take advantage of new theoretical and methodological approaches. Retrospective evaluations can be devised well after a program has been implemented, and if they are methodologically sound, such evaluations can offer important information on the success of a program. This method provides strong evidence to aid policymakers and practitioners make decisions.

As presented above, we applied the synthetic control method to evaluate the main effects of the DMI on violent crime and explore possible crime displacement and diffusion of crime control benefits effects. However, given that this approach has not been used to evaluate crime and justice interventions, it is important to review here the benefits and challenges of the method in our application and how it might be used in other place-based intervention evaluations.

Using the proposed method to calculate weights to create a synthetic control resulted in a comparison area that made the intervention and control areas practically indistinguishable on sociodemographics, crime levels, and crime trends leading up to the intervention. While the new weights create synthetic comparisons that have almost identical measurable environmental criminogenic characteristics during their pre-intervention periods, a concern is that the comparison area does not represent a contiguous drug market. Instead it is made up of small units spread across the city, see Fig. 6. If there is something essential about a contiguous geographic unit, then a modified approach that encourages weight to accumulate on contiguous units may be developed. However, there might not exist such a synthetic control that could maintain the close alignment we achieved in this example.

There is also some reason to be concerned about the overlap of the blocks that make up the synthetic comparison markets when there are multiple interventions in the same area. In this case, five interventions were conducted in a single city in a period of several years. The DMI zones ranged from 24 to 36 square blocks and their synthetic comparison markets were created from 43 to 68 weighted blocks. Many of those blocks are included in multiple synthetic comparison groups or in other intervention zones, see Table 9. High Point is a relatively small city and there are only so many geographic areas with high enough crime rates to be included in the synthetic controls, so four blocks were included in each of the synthetic controls. Also, between 9 and 33 % of the weighted units in any given synthetic control area is a part of anther intervention area, from either a previous and subsequent targeted areas, so they are intervened upon at some point in time.

To deal with this problem of interdependence, a variety of overlapping and non-overlapping time periods were examined. The time between interventions ranged from 8 months to over 2 years, with most being approximately 1 year apart. To deal with this issue, the effect was examined over multiple time periods, using 1 year as a standard post-intervention window, since there was very little overlap during this time frame. Using a larger number of months increases the potential for one intervention to impinge on another, but using a smaller number of months limits the analyses both in statistical power and in identifying trends that grow over time. By limiting the observation period to the initial 12 months, there is little temporal overlap between the pre- and post-intervention periods between the five different interventions.

Conclusions

These results of our test of the impact of DMI in High Point, which use stronger statistical controls than earlier work, find larger effect sizes and more significant effects, probably due to the fact that our estimates are more precise. After 10 years, we estimate that in 2011, the reduction of 21 % overall crime from those five intervention areas resulted only in a 4 % decrease in city-wide crime, and the 54 % decrease in drug crime in the target zone resulted in a 16 % drop in drug crimes for the entire city. While these numbers are impressive, they are a far cry from a crime panacea. Rigorous evaluations of subsequent sites are essential to understanding if the program can be replicated and if it provides a good return on investment. The results of our High Point DMI evaluation contribute to the growing body of evidence that the more focused and specific the strategies of the police, and the more tailored to the problems they seek to address, the more effective the police will be in controlling crime and disorder (Braga and Weisburd 2010; National Research Council 2004; Weisburd and Eck 2004).

As more police agencies adopt evidence-based policing ideals, program evaluators will need a broad range of methodological and statistical approaches, such as those described in this article, in their “tool kit” to address the wide variety of opportunities and challenges they will face. This paper presents an application of a new quasi-experimental methodology to evaluate a place-based policing crime reduction strategy that is flexible enough to be used in small geographic areas with no natural counterfactual. The synthetic control method attempts to create the best comparison area currently available, essentially generating a data-driven counterfactual that mimics the sociodemographics, crime levels, and historical crime trends, to more precisely estimate the intervention’s effect. This method allows for a better and more precise estimate of program impact in flexible geographic levels of aggregation.

Notes

A “banked” case refers to a potential prosecution for narcotics sales, supported by audio and video evidence usually obtained through a controlled buy that is held in inactive status unless the subject of the prosecution continues dealing, at which point an arrest warrant is issued and prosecution proceeds.

Therefore, when an infrequent event, such as homicide occurs, it raises the monthly incidence level so high that it is exerts undue influence on the matching algorithm.

Weights were applied as probability/survey weights in STATA VERSION 12.

These initial models were limited to twelve month pre-post tests.

References

Abadie A, Gardeazabal J (2003) The economic costs of conflict: a case study of the basque country. Am Econ Rev 93:113–132

Abadie A, Diamond A, Hainmueller J (2010) Synthetic control methods for comparative case studies: estimating the effect of California’s tobacco control program. J Am Stat Assoc 105:493–505

Abadie A, Diamond A, Hainmueller J (2011) Synth: an R package for synthetic control methods in comparative case studies. J Stat Softw 42:1–17

Barr R, Pease K (1990) Crime placement, displacement, and deflection. Crime Justice 12:277–318

Berk R, MacDonald JM (2008) Overdispersion and poisson regression. J Quant Criminol 24:269–284

Bertrand M, Duflo E, Mullainathan S (2004) How much should we trust differences-in-differences estimates? Q J Econ 119:249–275

Braga AA (2010) Setting a higher standard for the evaluation of problem-oriented policing initiatives. Criminol Public Policy 9:173–182

Braga AA, Bond BJ (2008) Policing crime and disorder hot spots: a randomized controlled trial. Criminology 46:577–607

Braga AA, Weisburd DL (2010) Policing problem places. Oxford University Press, Oxford

Braga AA, Kennedy DM, Waring EJ, Piehl AM (2001) Problem-oriented policing, deterrence, and youth violence: an evaluation of Boston’s operation ceasefire. J Res Crime Delinq 38:195–225

Braga AA, Hureau DM, Papachristos AV (2011) An ex post facto evaluation framework for place-based police interventions. Eval Rev 35:592–626

Campbell D, Stanely J (1963) Experimental and quasi-experimental designs for research. Hougton Mifflin Company, USA

Card D, Krueger AB (1995) Time-series minimum-wage studies: a meta-analysis. Am Econ Rev 85:238–243

Clarke RV, Weisburd D (1994) Diffusion of crime control benefits: observations on the reverse of displacement. Crime Prevent Stud 2:165–184

Cornish DB, Clarke RV (1987) Understanding crime displacement: an application of rational choice theory. Criminology 25:933–948

Corsaro N (2013) The high point drug market intervention: examining impact across target areas and offense types. Vict Offenders 8:416–445

Corsaro N, Brunson RK (2013) Are suppression and deterrence mechanisms enough? Examining the “pulling levers” drug market intervention strategy in Peoria, Illinois, USA. Int J Drug Policy 24:115–121

Corsaro N, Brunson RK, Gau J, Oldham C (2011) The Peoria pulling levers drug market intervention: a review of program process, changes in perceptions, and crime impact. Report submitted to the Illinois Criminal Justice Information Authority

Corsaro N, Hunt ED, Hipple NK, McGarrell EF (2012) The impact of drug market pulling levers policing on neighborhood violence. Criminol Public Policy 11:167–199

Farrington DP (2003) Methodological quality standards for evaluation research. Ann Am Acad Polit Soc Sci 587:49–68

Frabutt JM, Shelton TL, Di Luca KL, Harvey LK, Kefner MK (2009) A collaborative approach to eliminating street drug markets through focused deterrence. Final Report submitted to the National Institute of Justice (#2006-Ij-Cx-0034)

Grogger J (2002) The effects of civil gang injunctions on reported violent crime: evidence from Los Angeles County*. J Law Econ 45:69–90

Hedges LV, Vevea JL (1998) Fixed-and random-effects models in meta-analysis. Psychol Methods 3:486

Kennedy DM (1996) Pulling levers: chronic offenders, high-crime settings, and a theory of prevention. Val UL Rev 31:449

Kennedy DM (2009a) Deterrence and crime prevention: reconsidering the prospect of sanction. Routledge, New York

Kennedy DM (2009b) Drugs, race and common ground: reflections on the high point intervention. NIJ J 262:12–17

Kennedy DM, Wong SL (2009) The high point drug market intervention strategy. US Department of Justice, Office of Community Oriented Policing Services, USA

Kennedy DM, Piehl AM, Braga AA (1996) Youth violence in Boston: gun markets, serious youth offenders, and a use-reduction strategy. Law Contemp Prob 59:147–196

Long JS (1997) Regression models for categorical and limited dependent variables, vol 7. Sage, Beverly Hills

MacDonald J, Golinelli D, Stokes RJ, Bluthenthal R (2010) The effect of business improvement districts on the incidence of violent crimes. Inj Prevent 16:327–332

National Research Council (2004) Fairness and effectiveness in policing: the evidence. Committee to Review Research on Police Policy and Practices. In: Skogan W, Frydl K (eds) Committee on Law and Justice, Division of Behavioral and Social Sciences and Education. The National Academies Press, Washington, DC

Reppetto TA (1976) Crime prevention and the displacement phenomenon. Crime Delinquency 22:166–177

Reuter P, Pollack HA (2012) Good markets make bad neighbors. Criminol Public Policy 11:211–220

Rosenbaum PR, Rubin DB (1983) The central role of the propensity score in observational studies for causal effects. Biometrika 70:41–55

Rosenbaum PR, Rubin DB (1985) Constructing a control group using multivariate matched sampling methods that incorporate the propensity score. Am Stat 39:33–38

Viechtbauer W (2010) Conducting meta-analyses in r with the Metafor package. J Stat Softw 36:1–48

Weisburd D, Eck JE (2004) What can police do to reduce crime, disorder, and fear? Ann Am Acad Polit Soc Sci 593:42–65

Weisburd D, Gill C (2013) Block randomized trials at places: rethinking the limitations of small N experiments. J Quant Criminol 30:1–16

Weisburd D, Green L (1995) Policing drug hot spots: the Jersey city drug market analysis experiment. Justice Q 12:711–735

Weisburd D, Neyroud P (2011) Police science: toward a new paradigm. Harvard Kennedy School Program in Criminal Justice Policy and Management, Cambridge

Weisburd D, Wyckoff LA, Ready J, Eck JE, Hinkle JC, Gajewski F (2006) Does crime just move around the corner? A controlled study of spatial displacement and diffusion of crime control benefits*. Criminology 44:549–592

Acknowledgments

This work was supported by the National Institute of Justice (Grant Number 2010-DJ-BX-1572). We would like to thank the three anonymous reviewers for their insightful comments, and Beau Kilmer and Allison Ober who provided helpful reviews of earlier versions. We offer additional thanks to our scientific steering committee: Anne Piehl, Rodney Brunson, Scott Decker, Jonathan Caulkins, and George Tita.

Author information

Authors and Affiliations

Corresponding author

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

About this article

Cite this article

Saunders, J., Lundberg, R., Braga, A.A. et al. A Synthetic Control Approach to Evaluating Place-Based Crime Interventions. J Quant Criminol 31, 413–434 (2015). https://doi.org/10.1007/s10940-014-9226-5

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10940-014-9226-5