Abstract

The study reported here follows the suggestion by Caplan et al. (Justice Q, 2010) that risk terrain modeling (RTM) be developed by doing more work to elaborate, operationalize, and test variables that would provide added value to its application in police operations. Building on the ideas presented by Caplan et al., we address three important issues related to RTM that set it apart from current approaches to spatial crime analysis. First, we address the selection criteria used in determining which risk layers to include in risk terrain models. Second, we compare the “best model” risk terrain derived from our analysis to the traditional hotspot density mapping technique by considering both the statistical power and overall usefulness of each approach. Third, we test for “risk clusters” in risk terrain maps to determine how they can be used to target police resources in a way that improves upon the current practice of using density maps of past crime in determining future locations of crime occurrence. This paper concludes with an in-depth exploration of how one might develop strategies for incorporating risk terrains into police decision-making. RTM can be developed to the point where it may be more readily adopted by police crime analysts and enable police to be more effectively proactive and identify areas with the greatest probability of becoming locations for crime in the future. The targeting of police interventions that emerges would be based on a sound understanding of geographic attributes and qualities of space that connect to crime outcomes and would not be the result of identifying individuals from specific groups or characteristics of people as likely candidates for crime, a tactic that has led police agencies to be accused of profiling. In addition, place-based interventions may offer a more efficient method of impacting crime than efforts focused on individuals.

Introduction

There has been an increased interest in developing techniques using spatial analysis programs to identify and target areas where crime concentrates. The most popular approach has been mapping based on high spatial crime density to direct police attention to certain locations to suppress crime and deter offenders. Sherman et al. (1989a, b) advocated this approach in a seminal paper where they suggested that we consider different types of crime occurrence in terms of the ways in which they concentrate in hotspots. Sherman (1995) examined this phenomenon further and concluded that what is important in understanding crime outcomes are onset, recurrence, frequency, desistence, and intermittency, in the context of how these processes influence the concentration of crime. This perspective, drawing together routine activities, rational choice, and crime careers approaches, has been the gold standard of crime analysis for years.

However, while hotspot mapping has allowed police to address the concentration of crime, it has generally turned attention away from the social contexts in which crime occurs. Predictions about crime occurrence are then based on what happened before in locations rather than on the behavioral or physical characteristics of places within communities. This has detached crime analysis from early work done in criminology on the effects that different factors had on the social disorganization of communities and, in turn, on crime. So, while research has been conducted on community structure and crime since the advent of hotspot analysis (see Taylor 2000; Sampson and Raudenbush 1999; Eck et al. 2005; Brantingham and Brantingham 1995), this research has not made its way into the strategic analysis of crime patterns conducted by law enforcement agencies.

More recently, as better data and more sophisticated mapping techniques have become available, opportunities have emerged to move beyond approaches that rely on density mapping to empirical and evidence-based strategies that forecast where crime will emerge in the future (Weisburd and Braga 2006; Ratcliffe 2006; Wortley and Mazerolle 2008). Still, much of the recent work in this area has continued the singular focus on crime concentration by improving on the analytical techniques that incorporate past crime data in the forecasting process (Johnson et al. 2008; Groff and La Vigne 2002; Berk 2009). While important, this research has not explicitly addressed the social contexts of crime emergence in a way that would tie hotspot analysis into a larger crime analysis framework. The research presented here on the social factors that contribute to shootings tests an approach, Risk Terrain Modeling (Caplan et al. 2010), that moves toward an analytical strategy that more directly addresses the role of place-based context in crime forecasting, tying us back to the basic premises in environmental criminology that social and physical characteristics of communities influence how crime emerges, concentrates, and evolves (Brantingham and Brantingham 1981).

Literature Review

Crime at Places and Hotspot Analysis in Crime Mapping

That crime concentrates at specific, select places or “hotspots” is well supported by research (Sherman et al. 1989a, b; Harries 1999; Eck 2001; Eck et al. 2005; Ratcliffe 2006) and comports with the daily experiences of crime analysts in law enforcement agencies across the nation (Weisburd 2008). Brantingham and Brantingham (1981) initially provided important conceptual tools for understanding relationships between space and crime, such as with the term “environmental backcloth”. Groff (2007a, b) pointed out that there remain definite tendencies for crime to concentrate and congregate in certain areas according to the structure and features of the underlying study areas. This observation provides support for the notion that the presence or absence of criminal activity in particular areas is enabled by the unique combination of certain factors that make these places opportune or inopportune locations for crime (Eck 1995; Mazerolle et al. 2004); that is, where the potential for crime comes as a result of all the characteristics found at these places. This could be one reason why opportunities for crime are not equally distributed across places, or “small micro units of analysis” (Weisburd 2008: p. 2), and why the analytical approach to studying criminogenic places plays a critical role in the reliability and validity of efforts to forecast future crime locations (Weisburd et al. 2009). In a way, the concept of cognitive mapping (Zurawski 2007), as introduced by psychologists and behavioral geographers, is operationalized by risk terrain modeling to show the most opportune places for offenders to commit crimes.

We cannot assume that because a location has one or more high risk factors for crime, illegal behavior will always ensue. Similarly, just because a location had a cluster of crime incidents before does not mean that high crime clusters will continue at the same location indefinitely, which is a basic assumption of retrospective hotspot mapping. “What is more likely to occur,” explain Caplan et al. (2010), “is that the risk of crime in places that share criminogenic attributes is higher than other places as these locations attract offenders (or more likely concentrate them in close locations) and are conducive to allowing certain events to occur. This is different from saying that crime concentrates in highly dense hotspots. Rather, it suggests that individuals at greater risk to committing crime will congregate in riskier locations.” (p. 18) This phenomenon will be true at many analytical extents: nationwide, one state might have the highest crime rates compared to all other states; statewide, one city might have the most shootings; but, citywide, some locations in that urban area will be more crime prone than others (Eck and Weisburd 1995).

The identification of crime hotspots through mapping, and the targeting of police activity to these places, has been recognized in high-quality evaluation research as an effective crime-fighting technique (Sherman et al. 1989a, b; Braga 2004). Despite the evident success of this technology in operational policing, there is a manifest disconnect between the conventional practice of mapping and the demands by police agencies to be responsive to the dynamic nature and needs of the communities they serve. Mapping applications in police agencies are restricted to fairly simple density maps based on the retrospective analysis of crime data (Groff and La Vigne 2002). This reactive approach makes analysts less attuned to the idea of crime risk or potential, and it essentially assumes that crime will most likely always occur precisely where it did in the past—even if police intervene (Kennedy and Van Brunschot 2009; Johnson et al. 2008).

Among other things, this poses what Brantingham and Brantingham (1981) referred to as the “stationarity fallacy” that emphasizes the fact that hotspots are combinations of unrelated incidents that occurred over time and are plotted in hotspots as though they are somehow connected beyond sharing a common geography. In overcoming this fallacy, the identification of risky areas should permit police to intervene and allocate resources to reduce risk without analysts having to fear (paradoxically) that the positive impact of intelligence-led interventions will avert negative events to the point that crime counts will be reduced and there are none (or too few) past crimes to be used in traditional inferential statistical forecasting models (e.g., see Berk 2009; Gorr and Olligschlaeger 2002).

In real-world settings, as locations evolve and as police respond to problems in them, crime patterns change. Improving on hotspot analysis, a risk-based approach involves identifying factors that enhance or reduce the likelihood of future crimes in particular locations. This analytical strategy sets out to operationalize the ideas put forth by ecologists concerning the formation of natural areas in cities that contain characteristics that promote crime (Shaw and McKay 1969) and the thinking of criminologists and practitioners concerning the importance of the “environmental backcloth” that has features that attract or generate crime (Brantingham and Brantingham 1995). This backcloth can be considered in terms of the concentration or clustering of risk factors across the urban terrain, which then serves as the metric by which forecasts of crime behavior are made (Kennedy and van Brunschot 2009). Individual risk factors are important, such as those owned by motivated offenders or potential victims (Cohen and Felson 1979), but environmental risk factors are also very important in forecasting crime. “[T]he risk of criminal victimization varies dramatically among the circumstances and locations in which people place themselves and their property”, explained Cohen and Felson (1979, p. 595). It is plausible that circumstances abound daily with motivated offenders, suitable victims, and the absence of capable guardians; but crimes do not always occur. Why? Because these must simultaneously exist at criminogenic (i.e., enabling, high-risk) places to yield criminal events. Given the interest in crime concentration and the focus on the factors that might be used to predict crime occurrence, an effort to address the efficacy of these approaches is warranted. It is within this context that we apply a process whereby crime analysts can identify one or more risk factors to incorporate within a risk terrain model.

A few other researchers have connected environmental factors to crime through simulation models, including the recent work by Brantingham and Tita (2008), Tita and Griffiths (2005), and Groff (2007a, b), suggesting that the criminal justice community may be closer to being able to show not just that these factors are important in creating criminogenesis, but also to explain how these processes interact to produce the crime outcomes that appear. In these ecological approaches to crime analysis, there is a dichotomy developing in the way in which we attempt to forecast crime that is relevant to the emerging discussions concerning predictive and place-based policing. One approach suggests that the best way to look at predictors is to use past behavior, either in terms of actual incidents or collections of incidents (hotspots), as indicators of future behavior. An alternative approach is to consider the context in which crimes are occurring and identify factors that would be conducive to crime. Conventionally, this “contextual” approach has identified environmental factors (beyond past crime incidents) that might increase the opportunities for new crimes or support new offending. It is consistent with the ideas that were popular among ecologists, was repeated by environmental criminologists when Brantingham and Brantingham (1995) talked about “environmental backcloths”, and is now appearing in terms of risk terrains or opportunity structures. It also is a theme in situational prevention, although the focus there is less on risk patterns and more on opportunity reduction (Clarke 1997). The study presented here advances earlier efforts by validating a method for selecting some risk factors from a pool of many to include in a risk terrain model that yields the best predictive validity and significantly outperforms conventional approaches to hotspot mapping.

Risk Terrain Modeling

Caplan et al. (2010) have proposed that risk terrain modeling (RTM) offers a way of looking at criminality as less determined by previous events and more a function of a dynamic interaction between social, physical and behavioral factors that occurs at places. They suggest that the ways in which these variables combine can be studied to reveal consistent patterns of interaction that can facilitate and lead to crime. The computation of the conditions that underlie these patterns is a key component of RTM, with the ability to weigh the importance of different factors at different geographic points in enabling crime events to occur. These attributes themselves do not create the crime. As Caplan et al. suggest, they simply point to locations where, if the conditions are right, the risk of crime or victimization will go up. This offers an approach that provides a means of testing for the most appropriate qualities of space (i.e. risk factors) that contribute to these outcomes through a statistically valid selection process. It also promotes the idea of the concentration of risk leading to these problems, in a way that these “risk clusters” can be used to help forecast future crime and direct interventions, such as police patrols, to these high risk locations. This strategy can also be used to support the resiliency and expansion of the mitigating attributes that are in the low risk areas. This analysis of risk needs to be considered in light of the extensive research that has been produced around crime hot spots.

The technical approach to risk terrain modeling is straightforward and is described in detail by Caplan and Kennedy (2010). They identify, through meta-analysis or other empirical methods, literature review, professional experience, and practitioner knowledge (Ratcliffe and McCullagh 2001), all factors that are related to a particular outcome for which risk is being assessed, and then operationalize each risk factor to a common geography. Essentially, RTM assigns a value signifying the presence, absence, or intensity of each risk factor at every place throughout a given geography. Each factor is represented by a separate terrain (risk map layer) of the same geography. When all map layers are combined in a GIS, they produce a composite map, called a risk terrain map, where every place throughout the geography is assigned a composite risk value that accounts for all factors associated with the particular crime outcome. The higher the risk value, the greater the likelihood of a crime event occurring at that location. Risk terrain modeling of crimes produces maps that show places with the greatest risk or likelihood of becoming spots for crime to occur in the future (Caplan and Kennedy 2009; Groff and La Vigne 2002). This occurs not just because police statistics showed that reported crimes occurred there yesterday, but because the environmental conditions are ripe for crime to occur there tomorrow.
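
The layer-combination step described above can be illustrated with a minimal sketch. It assumes binary layers (1 = risk factor present, 0 = absent) and an unweighted cell-by-cell sum; the function name `composite_risk`, the 3 × 3 grid, and the layer values are hypothetical and are not taken from the Newark data.

```python
import numpy as np


def composite_risk(layers):
    """Sum equally sized risk layers cell by cell (unweighted map algebra)."""
    # np.stack raises an error if the layers do not share the same grid shape.
    stacked = np.stack([np.asarray(layer, dtype=float) for layer in layers])
    return stacked.sum(axis=0)


# Hypothetical 3 x 3 study area; 1 = risk factor present in the cell, 0 = absent.
drug_arrests = np.array([[1, 0, 0], [1, 1, 0], [0, 0, 0]])
risky_facilities = np.array([[0, 0, 0], [1, 1, 0], [0, 1, 0]])
gang_activity = np.array([[0, 0, 0], [0, 1, 1], [0, 0, 0]])

# Each cell of the composite holds the number of risk factors present there.
risk_terrain = composite_risk([drug_arrests, risky_facilities, gang_activity])
```

In this toy example the center cell receives the highest composite value because all three hypothetical factors coincide there. A weighted sum would be a natural variation where factors are judged to differ in importance.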

Risk terrain modeling uses a grid of cells to represent a continuous surface, so aggregations to “arbitrary” geopolitical boundaries, police sectors, ZIP codes, census tracts, etc., are not necessary and the modifiable areal unit problem (MAUP) is less of an issue (Harries 1999). RTM will only be of value, however, if it can be shown to be more accurate, complete, and flexible in its application than current analytical strategies adopted in policing. Risk is defined as the likelihood of an event occurring given what is known about the correlates of that event, and it can be quantified with positive, negative, low, or high ordinal values. A terrain is a grid of the study area made up of equally sized cells that represent a continuous surface of places where values of risk exist. Raster data are used to represent terrains in RTM. Places are defined by cells of size x² (e.g., 100 ft × 100 ft). Modeling broadly refers to the abstraction of the real world at certain places. Specifically within the context of RTM, modeling refers to the acts of attributing the presence, absence, or intensity of qualities of the real world to places within a terrain, and combining multiple terrains together using map algebra (Tomlin 1994) to produce a single composite map where the new derived value of each place represents the aggregated (synthesized or collinear) qualities of those places irrespective of all other places within the terrain.
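
As a rough illustration of how point data might be attributed to a terrain of equally sized cells, the sketch below marks each 100 ft × 100 ft cell that contains at least one point. The function name `rasterize_presence`, the coordinates, and the study-area extent are hypothetical, and the origin is assumed to sit at the lower-left corner of the study area, so row 0 is the southernmost row.

```python
import numpy as np


def rasterize_presence(points_ft, extent_ft, cell_ft=100.0):
    """Return a binary grid: 1 where at least one point falls inside the cell."""
    width_ft, height_ft = extent_ft
    n_cols = int(np.ceil(width_ft / cell_ft))
    n_rows = int(np.ceil(height_ft / cell_ft))
    grid = np.zeros((n_rows, n_cols), dtype=int)
    for x, y in points_ft:
        col = int(x // cell_ft)
        row = int(y // cell_ft)
        # Points outside the study area are ignored.
        if 0 <= row < n_rows and 0 <= col < n_cols:
            grid[row, col] = 1
    return grid


# Hypothetical incident coordinates in feet from the study-area origin.
points = [(50.0, 50.0), (60.0, 20.0), (250.0, 130.0)]
layer = rasterize_presence(points, extent_ft=(300.0, 200.0))
```

Recording intensity rather than presence would only require incrementing the cell value instead of setting it to 1.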

Risk terrain modeling (RTM) is an approach to spatial risk assessment to aid in event forecasting by incorporating underlying causes of events, such as crimes, and standardizing all of those factors to common geographic units over a continuous surface. It can be seen as a variation of conventional offender-based risk assessment whose principles were established many decades ago as research began to demonstrate that the characteristics of offenders were correlated with their subsequent behavior (Burgess 1928; Glueck and Glueck 1950). RTM, however, is place-based and combines actuarial risk prediction with environmental criminology to assign risk values to places according to their particular attributes.

Outline of the Current Research

The study reported here follows the suggestion that RTM be developed by doing more work to elaborate, operationalize, and test variables that would provide added value to risk terrain models. Building on the ideas presented by Caplan et al. (2010), we examine how the RTM approach to spatial risk assessment can be implemented in police operations by addressing three important issues that bear on the validity of RTM and that set it apart from current approaches to spatial crime analysis. First, we address the selection criteria used in determining which risk layers to include in risk terrain models. This is an important step that addresses how we integrate the conceptual framework used in the explanation of crime into models used to predict where it is likely to occur.

Second, we compare the “best model” risk terrain derived from our analysis to the traditional hotspot density mapping technique by considering both the statistical power and overall usefulness of each approach. The retrospective mapping of past crimes is a key element in CompStat programs and has value in tactical assessment of police intervention strategies but it has limited application in forecasting (see Willis et al. 2004). Third, we test for “risk clusters” in risk terrain maps to determine how they can be used to target police resources in a way that improves upon the current practice of using density maps of past crime in determining future locations of crime occurrence.

This paper concludes with an in-depth exploration of how one might develop strategies for incorporating risk terrains into operational policing. RTM can be developed to the point where it may be more readily adopted by police crime analysts and enable police to be more effectively proactive and identify areas with the greatest probability of becoming locations for crime in the future. The targeting of police interventions that emerges would be based on a sound understanding of geographic attributes and qualities of space that connect to crime outcomes and would not be the result of identifying individuals from specific groups or characteristics of people as likely candidates for crime. Police interventions addressing specific geographies have been shown to address crime more efficiently than efforts focused on individuals. In Seattle, for example, Weisburd (2008) found that police would have to target four times as many offenders as they would places to account for 50% of the city’s total number of crime incidents.

Methods and Data

Setting

Newark is the largest city in the State of New Jersey, covering 26 square miles, with an estimated 2009 population of over 280,000 persons (U.S. Census Bureau) and the largest municipal police force in the state, with more than 1,300 sworn officers as of 2009. Newark has a long standing reputation as a tumultuous urban environment. The city gained national attention during the riots of 1967, which negatively impacted the city in a way that resonated throughout the following decades (Parks 2007; Tuttle 2009). In 1996 CNN’s Money Magazine named Newark the most dangerous city in America (Fried 1996, November). The latter portion of the 1990s brought about a reduction in violence, mirroring the experience of many U.S. cities at the time. The tide seemingly turned in the new millennium with both murders and non-fatal shootings increasing every year from 2000 to 2006, according to police department figures. Mayor Cory Booker took office in 2006, elected on a platform placing crime reduction as the chief priority of his administration. Under the direction of newly appointed Police Director Garry McCarthy, formerly the lead crime strategist at the NYPD, the mission and structure of the department changed to better address issues of quality of life, violent crime, and the illicit drug trade. The Newark Police Department adopted an intelligence-led policing mantra and re-organized many units to provide increased coverage during evenings and weekends. The city simultaneously made large investments to upgrade many of their technological capabilities.

Since these initiatives went into effect, Newark has experienced a significant reduction in violent crime. According to department figures, overall crime decreased 19% from 2006 through 2009, with murders and shootings decreasing 28 and 40% respectively. While some may instinctively think otherwise, cities experiencing reductions in violence such as Newark can benefit from analytical methods like risk terrain modeling, as much as jurisdictions facing crime increases or stagnation. As violent crime trends downward, so does the margin of error for police (especially police with limited resources). Public desire for crime reduction is ever-present, as is the political motivation for maximizing public safety. For these reasons, a technique that accurately forecasts the locations of future incidents (not just future totals in the aggregate of the city) can be a valuable tool for agencies looking to build upon their recent successes.

We focus our attention on gun violence due to the obvious seriousness of the offense and its (still) high level of occurrence in Newark. Despite ending 2008 with its second lowest murder total since 1965 (67), Newark’s murder rate of 23.9 (per 100,000 residents) is more than double the national average (11.5) for cities with populations greater than 250,000 (UCR 2009). Firearm usage directly dictates the murder rate in Newark, with police data showing 84% of the city’s murders resulting from gunshot wounds from 2007 through May 2010. While recognizing recent successes in violent crime reduction, city officials consider current levels of gun violence to be unacceptable. Citizens share this sentiment, according to the results of a recent survey (Center for Collaborative Change 2010), with Newark residents ranking violent crime along with the related issues of gangs and drugs as the most serious issues facing the city. We also decided to limit our focus to the singular issue of shootings in recognition of the empirical evidence demonstrating that tailoring responses towards specific crime problems heightens the effectiveness of police interventions (Weisburd and Eck 2004).

Identifying Correlates of Shootings in Newark and Operationalizing Them to Risk Map Layers

The Newark Police Department maintains an extensive Geographic Information System (GIS) encompassing numerous data layers. The digitized fields include Part I crime incidents, officer activity (such as arrests and summonses), persons of interest (e.g. “Known Burglars” and “Confidential Informants”), locations of interest (e.g. “Gang Territory”), and business/retail establishments and infrastructure (e.g. Public Housing and Liquor Stores). Such a system presents a unique situation: The abundance of data allows for the creation of numerous risk models, each utilizing a different combination of data layers. At the same time, the analyst faces the daunting task of identifying the “best” combination that produces the model with the strongest predictive capabilities. The more layers accessible within a GIS, the more frustrating this situation can be (and as shown below, more layers do not always make a better model).

Given the size of Newark PD’s GIS (as of the date of this writing, 50 separate layers appeared in the system), we first attempted to get a general sense of the factors Newark police officials believed to contribute most to incidents of gun violence and shootings. We held several discussions with police supervisors and officers with experience in units primarily tasked with combating violence. Such units included the Operations Bureau, Gang and Narcotics squads, the “Safe City Task Force,” robbery squad, and homicide squad. One of the authors of this paper, also a crime analyst at the Newark Police Department, spoke to about a dozen officers and simply asked “Why do people get shot in this city? If you had to identify the factors leading to these incidents, what would they be?” During these conversations with individuals and groups of two or three, officers predominantly identified five factors, all of which enjoy support from empirical literature: Open-Air Drug Markets (Blumstein 1995; Harocopos and Hough 2005; Lum 2008), Gang Members and other “High Risk” offenders (Kennedy et al. 1996; Topalli et al. 2002; Braga 2004), Public Housing and other Large-Scale Complexes (Newman 1972; Eck 1994; U.S. Department of Housing and Urban Development 2000; Poyner 2006), “Risky Facilities,” such as bars and dance halls (Brantingham and Brantingham 1995; Block and Block 1995; Clarke and Eck 2005; Eck et al. 2007), and past incidents of gun crime, specifically shootings and armed robbery (Ratcliffe and Rengert 2008). The professional insight of experienced police officers in conjunction with a review of relevant empirical research led us to operationalize seven risk layers that we believed would accurately forecast the locations of shooting incidents in Newark: locations of drug arrests, proximity to “at-risk” housing developments, “risky facilities,” locations of gang activity, known home addresses of parolees previously incarcerated for violent crimes and/or violations of drug distribution laws, locations of past shooting incidents, and locations of past gun robberies.

Locations of drug arrests were geocoded to street centerline shapefiles to identify areas with high levels of drug activity. While a well run drug ring may be able to avoid detection, this technique is commonly used as a measure of drug activity (Weisburd and Green 1994; Weisburd and Green 1995; Jacobson 1999). Capturing the “at-risk” housing complexes necessitated a multi-pronged approach. A GIS file of public housing parcels, obtained and updated through the Newark Police Department’s partnership with the Newark Housing Authority, provided the foundation for this dataset. We also accounted for privately owned crime-prone complexes in recognition of their role in gun violence and the illicit drug trade in Newark (Zanin et al. 2004). To gain insight into such complexes, the analyst surveyed and held discussions with the Captain of the Police Department’s Operations Bureau and the four Precinct Commanders. Initially he emailed Precinct Commanders and the Operations Bureau Captain asking them to identify high-crime housing complexes within their commands or the city as a whole, respectively. As a follow up, he sat down with each of them after a CompStat meeting to go over their email replies and make sure no pertinent information was left out. The private complexes identified through this process that were not already within the public housing dataset were added to comprise the final at-risk housing complexes.

In order to operationalize “risky facilities”, we consulted two lists of city businesses. The first contained all establishments in possession of a liquor license. We selected bars, social clubs, and liquor stores for inclusion and excluded traditional dine-in restaurants because they were considered unrelated to Newark’s shooting incidents. Two additional facility types were added to the “risky facilities” dataset. The first were discotheques. Colloquially referred to as “dance halls” in many areas, Newark’s discotheques are small establishments rented for the purpose of throwing parties at the location. Given the low (and sometimes nonexistent) levels of security at such locations, these events have been seen to generate violence in Newark. Takeout eateries comprised the Police Department’s final location of concern. High foot traffic and late hours of operation often characterize these establishments, making it difficult to distinguish legitimate customers from those loitering for unlawful purposes, such as drug dealing or purchasing. Due to the relative lack of guardianship and concentration of potential offenders and victims, such eateries can create ideal environments for crime commission (Brantingham and Brantingham 1995; Cohen and Felson 1979; Felson 2002). While research has shown such establishment types to frequently experience crime, risk levels are not evenly distributed amongst all facilities. Eck et al. (2007) demonstrated that a small portion of facilities accounted for a majority of reported incidents in each establishment type included in the study: bars, retail shops, apartment complexes, and motels. Given the role of place managers in crime prevention (Felson 1995), it makes sense that certain establishments generate more crime than others. Certain managers may be more capable of incorporating crime control methods into their business operations than others. Unfortunately, such information was not available for the Newark data, leading us to include all establishments in the risky facilities layer (which may have slightly hindered the predictive capability of this variable, as demonstrated later in the paper).

Newark Police personnel identified two offender types as playing prominent roles in shootings: gang members and parolees previously incarcerated for violent crime and/or drug distribution. In the case of gangs, officers considered areas of gang activity, where members congregate to engage in illegal activity, to be more at-risk of violence than gang member residences. The concentration of gang members creates territory in which numerous factions operate. While cooperation amongst offenders may be necessary for the maintenance of a criminal enterprise, Newark’s gang situation is a volatile one. McGloin (2005) found Newark’s gang members to predominantly operate in loosely organized sets as opposed to well-organized gangs with established hierarchies. McGloin also noted the transient nature of Newark’s gangs, with individuals belonging to multiple sets during the study period. Within this context, cooperating sets can evolve into rivals competing over control of drug markets and other valuable assets, with intra-gang violence (e.g. “Blood on Blood”) being as common as or more common than inter-gang violence (e.g. “Blood on Crip”), a phenomenon observed by Decker and Curry (2002) in their analysis of gangs in St. Louis, MO.

Newark Police Department’s Gang Unit maintains an extensive database of known gang members operating within the city. Individuals arrested or field-interrogated by the unit are debriefed for gang affiliation. The database contains information on all those identified as gang members, through self-admission or other criteria established by the state Attorney General’s Office. The database captures up to three locations for each entry, denoting the areas the gang member frequents for the purpose of engaging in illegal activity.

The parolee information was generated from the Newark Police Department’s file of prisoners recently paroled into the city of Newark. Through a partnership with the NJ Parole Board, the Newark Police Department is notified of prisoners returning to Newark post-incarceration. Similarly, notifications are sent when parolees move, are re-incarcerated, reach the end of their parole sentence, or otherwise are removed from a caseload. Upon receipt of this information, the Newark Police Department updates its database accordingly.

The parolee data are geocoded according to the residence of each individual parolee. Subjects previously incarcerated for drug distribution or violent crime violations were included in this analysis. Those incarcerated for property crime or administrative (e.g. failure to pay child support) offenses were excluded, due to their criminal histories being free of violence or drug dealing (which is seen to contribute to gun violence). While gang members were geocoded based on their frequented areas, rather than residence, data did not exist for the same approach to be taken with parolees. The parole layer is limited in this sense, which may have compromised its potential value to the risk terrain model, as will be seen below.

The final two risk variables are locations of shooting and gun robbery incidents. The Newark Police Department geocodes crime incident data on a daily basis. These daily files are separated by crime type and merged with “year-to-date” crime layers which contain all incidents occurring during the calendar year. To maintain the accuracy of the “year-to-date” layers, crime incidents for the previous 4 weeks are geocoded every Monday. These data replace the cases from the same time period within the year-to-date layers. This exercise takes place in order to capture changes in crime types and to ensure that each incident is contained within the appropriate crime type layer. For example, follow-up investigations may lead police to re-classify a crime by either upgrading (e.g. a theft is re-classified as a robbery) or downgrading (e.g. a shooting is discovered to be self-inflicted and is accordingly removed from the Aggravated Assault layer) the incident. While most crimes are correctly classified at the time of reporting, this process provides an added mechanism which maximizes the accuracy of the data.

Each of the aforementioned files was converted into a raster layer via the Density Tool in ArcView’s Spatial Analyst extension. Each raster map contained equally sized 145′ × 145′ cells to reflect the average street length in Newark, as measured within a GIS. Each cell received a count of points falling within its boundaries, with those falling near the center of the cell being weighted more heavily than those near the edges (see Note 1). Final density values represented the total concentration of points.
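The distance weighting described above can be illustrated with a minimal sketch. It assumes a quartic kernel, which is a common choice for kernel density estimation in GIS software; the paper does not state which kernel the Density Tool applied, so the kernel, the function names, and the sample coordinates are all assumptions. Only the 1,000-foot bandwidth and the 145-foot cell size come from the study.

```python
import math

BANDWIDTH_FT = 1000.0  # search radius reported in the study's notes
CELL_SIZE_FT = 145.0   # cell edge length reported in the study


def quartic_weight(distance, bandwidth=BANDWIDTH_FT):
    """Quartic kernel weight (assumed kernel): largest at distance 0,
    falling to zero at or beyond the bandwidth."""
    if distance >= bandwidth:
        return 0.0
    scaled = 1.0 - (distance / bandwidth) ** 2
    return 3.0 / (math.pi * bandwidth ** 2) * scaled ** 2


def cell_density(cell_center, points, bandwidth=BANDWIDTH_FT):
    """Sum the kernel weights of all points around one cell center (feet)."""
    cx, cy = cell_center
    return sum(
        quartic_weight(math.hypot(px - cx, py - cy), bandwidth)
        for px, py in points
    )


def density_grid(points, n_rows, n_cols,
                 cell_size=CELL_SIZE_FT, bandwidth=BANDWIDTH_FT):
    """Density value for every cell of a grid whose origin is (0, 0)."""
    return [
        [
            cell_density(((c + 0.5) * cell_size, (r + 0.5) * cell_size),
                         points, bandwidth)
            for c in range(n_cols)
        ]
        for r in range(n_rows)
    ]


# Hypothetical incident coordinates, in feet from the grid origin.
incidents = [(100.0, 120.0), (130.0, 90.0), (900.0, 950.0)]
grid = density_grid(incidents, n_rows=8, n_cols=8)
```

Cells close to several incidents receive the largest values, and an incident farther than the bandwidth from a cell center contributes nothing to that cell.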

Cells were then classified into four groups based on standard deviational breaks of density values. Each classification carried a risk score from 0 (below average) to 3 (more than 2 standard deviations above the mean). In an effort to maximize validity of the risk terrain model, all cells not intersecting streets were excluded before applying the risk values, because all Newark PD point data are geocoded to street centerlines regardless of where the incident actually occurs. Cells in areas such as parks, ponds, and cemeteries have a 0% chance of containing a point because of the absence of streets, so the inclusion of such cells would have negatively skewed the data set and biased the statistical tests.
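A minimal sketch of the standard deviational classification follows. The end points (0 for values below the mean, 3 for values more than 2 standard deviations above it) follow the text; the two intermediate breaks, the use of the population standard deviation, and the function names are assumptions made for illustration.

```python
import statistics


def sd_break_score(value, mean, sd):
    """Map a density value to a 0-3 risk score using standard deviational breaks."""
    if value < mean:
        return 0  # below average
    if value > mean + 2 * sd:
        return 3  # more than 2 SD above the mean
    if value > mean + sd:
        return 2  # assumed break: more than 1 SD, up to 2 SD, above the mean
    return 1      # assumed break: from the mean up to 1 SD above it


def classify_cells(densities):
    """Score every cell; densities should already exclude cells off the street grid."""
    mean = statistics.fmean(densities)
    sd = statistics.pstdev(densities)  # population SD (an assumption)
    return [sd_break_score(v, mean, sd) for v in densities]


# Hypothetical density values for ten street-intersecting cells.
scores = classify_cells([0, 0, 0, 0, 0, 0, 0, 0, 0, 10])
```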

As the lone polygonal variable, “At-Risk” housing parcels underwent a slightly different process to operationalize their influence on the surrounding geography and the risk they posed for shootings nearby. As these areas were thought to be crime attractors/generators, we considered the risk of a shooting to be highest on the immediate grounds of the complex, with risk diminishing as the distance from the complex increased. To operationalize this as a risk map layer, a multi-ring buffer was drawn around each complex. Areas on or within 145 feet of complex grounds were assigned a value of 3, while areas within 141–280 feet and 281–420 feet received values of 2 and 1, respectively. We assigned a risk value of 0 to all areas outside of the 420 foot buffer. This captured the surrounding area’s proximal risk level up to three blocks away; the closer to a housing complex, the more at-risk the area was for gun violence. The coded file was then converted to a raster layer, enabling it to be combined with other risk map layers within a composite risk terrain.

Research Question 1: How Do We Select Layers for RTM?

The first aim of this study is to maximize the level of shooting incident locations forecasted by risk terrain modeling in Newark. While discussions with Newark Police Personnel led to the identification of seven variables related to shootings, we sought to further improve upon the model by including only those that were most significant and influential. Chi-Squared tests were conducted to identify the place-based risk factors most significantly associated with shooting locations. The seven risk factors that existed during Period 1 (July–September 2008), and the shooting incidents that occurred during Period 2 (October–December 2008) were spatially joined to a blank (vector) grid map of Newark so that each grid cell (of 145′ × 145′) contained eight dichotomous variables in its attributes table: Presence of each risk factor in question, respectively, during Period 1 (Yes or No), and presence of a shooting incident during Period 2 (Yes or No). Results of a series of Chi-Squared tests on 2 × 2 tables allowed us to categorize these variables into four risk models. Model 1 included all of the seven risk factors (Drug Arrests, Gang Territory, At-Risk Housing, Risky Facilities, Shootings, Gun Robberies, and Parolees). Model 2 included risk factors that were significant at p < 0.05 (Drug Arrests, Gang Territory, At-Risk Housing, Risky Facilities, Shootings, and Gun Robberies). Model 3 included risk factors that were significant at p < 0.01 (Drug Arrests, Gang Territory, At-Risk Housing, and Risky Facilities). Model 4 included risk factors that were significant at p < 0.01 and whose proportions of cells experienced 20% or more shootings at places with each risk factor, respectively (Drug Arrests, Gang Territory, and At-Risk Housing) (see Table 1).
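Each screening test reduces to a Pearson Chi-Squared statistic on a 2 × 2 table of grid cells. The sketch below shows that computation under stated assumptions: no continuity correction is applied (the text does not say whether one was used), and the function name and the counts are hypothetical rather than taken from Table 1.

```python
import math


def chi_squared_2x2(a, b, c, d):
    """Pearson Chi-Squared test (no continuity correction) for the table

        [[a, b],    risk factor present: shooting / no shooting
         [c, d]]    risk factor absent:  shooting / no shooting

    Returns (statistic, p_value) for 1 degree of freedom.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("every row and column total must be positive")
    statistic = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With 1 degree of freedom, P(X > s) equals erfc(sqrt(s / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2.0))
    return statistic, p_value


# Hypothetical cell counts for one risk factor.
stat, p = chi_squared_2x2(a=30, b=970, c=40, d=16480)
significant_at_01 = p < 0.01
```

A risk factor would enter Model 2 if its p-value fell below 0.05 and Model 3 if it fell below 0.01.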

For each model, risk map layers (one for each risk factor) were combined using the “Raster Calculator” function in the Spatial Analyst extension. The end result was a composite risk terrain map with each cell exhibiting the summed risk value of all risk map layers. Risk values for cells in the individual risk map layers ranged from 0 to 3, so the risk values for cells in “composite” risk terrain maps varied as follows: Model 1 (7 variables) 0–21, Model 2 (6 variables) 0–18, Model 3 (4 variables) 0–12, Model 4 (3 variables) 0–9.
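The map algebra behind the composite is a cell-by-cell sum, which can be sketched as follows. The tiny 2 × 2 layers and the function names are hypothetical; only the 0 to 3 scoring and the equal weighting of layers come from the text.

```python
def composite_risk(layers):
    """Cell-by-cell sum of equally sized risk layers (each cell scored 0-3)."""
    n_rows, n_cols = len(layers[0]), len(layers[0][0])
    for layer in layers:
        if len(layer) != n_rows or any(len(row) != n_cols for row in layer):
            raise ValueError("all layers must share the same grid dimensions")
    return [
        [sum(layer[r][c] for layer in layers) for c in range(n_cols)]
        for r in range(n_rows)
    ]


def max_composite_value(n_layers, max_layer_score=3):
    """Upper bound of the composite range, e.g. 3 layers give a 0-9 range."""
    return n_layers * max_layer_score


# Three hypothetical 2 x 2 layers, standing in for the Model 4 risk factors.
drug_arrests = [[3, 1], [0, 0]]
gang_territory = [[2, 0], [0, 1]]
at_risk_housing = [[3, 2], [0, 0]]
terrain = composite_risk([drug_arrests, gang_territory, at_risk_housing])
```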

We hypothesized that: (1) Model 4, with the highest level of significant correlations between each risk factor and shooting incident locations would have better predictive validity than all risk factors at once (Model 1) or Models 2 and 3; and (2) the Model 4 risk terrain map using data from the first period would forecast higher proportions of shooting incident locations during the second period when compared to retrospective hotspot maps of the first period shootings alone.

Data were divided into five three-month time periods: July to September 2008 (Period 1), October to December 2008 (Period 2), January to March 2009 (Period 3), April to June 2009 (Period 4), and July to September 2009 (Period 5). These time periods enabled us to do four tests of predictive validity spanning the course of a full year. This approach differed from the 6 month time intervals (and only two tests) used by Caplan et al. (2010) in Irvington, NJ. More and shorter time periods are possible in Newark because it is a much larger and more populous city and it experiences more shootings per quarter (approx. 73 vs. 10). Other factors, such as Newark Police Department’s emphasis on the “timely and accurate intelligence” mantra of Compstat (Bratton 1998; Maple 1999) and Newark’s public release of crime data on a quarterly (three-month) basis also influenced the decision to use three-month time intervals.

For each time Period 1 through 4, risk terrain maps were produced with risk map layers from each of the four Models—totaling 12 separate maps. Each risk terrain map was spatially joined to shooting incidents from the immediately subsequent time period, resulting in grid cells having two attribute values: (1) “composite” risk value and (2) count of shootings.

A Binary Logistic regression analysis, with “Risk Value” as the independent variable and “Presence of Any Shooting” (Yes or No) as the dependent variable, was used to determine the extent to which shootings occurred in higher risk cells. Regressions were run for each of the twelve risk terrain maps and odds ratios were then compared among the different models; the model having consistently higher odds ratios than the other models was dubbed the “best”. This method is consistent with that used by Caplan et al. (2010). All of the models significantly predicted (p < 0.01) locations of future shootings, with observed odds ratios being at least 1.236. However, the models varied in effectiveness. In three out of four time periods, Model 1 (all 7 risk factors) achieved the lowest odds ratio. The exception was Period 2, in which Model 1 was the second strongest, behind Model 4. Predictive efficacy of the models improved from Model 1 to 2, 2 to 3, and 3 to 4. Model 4, which included only the risk factors that were the most correlated with shootings (i.e., Drug Arrests, Gang Territory, and At-Risk Housing), had the highest odds ratios and was the best model across all four time periods (see Table 2). As hypothesized, all possible crime correlates that are initially identified as risk factors might not yield the best risk terrain model. Including only those factors with relatively high and significant correlations to the outcome event (in this case, shootings) will produce the best place-based risk assessment of future events.
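To make the odds ratio concrete, the following sketch fits a one-predictor logistic regression by Newton-Raphson and returns exp(b1), the multiplicative change in the odds of a shooting for each one-unit increase in risk value. It is an illustration only: the study used a statistical package, and the function name and the sample data here are hypothetical.

```python
import math


def fit_logistic_odds_ratio(x, y, iterations=50, tol=1e-10):
    """Fit logit P(y = 1) = b0 + b1 * x by Newton-Raphson and return exp(b1)."""
    b0 = b1 = 0.0
    for _ in range(iterations):
        g0 = g1 = 0.0          # gradient of the log-likelihood
        h00 = h01 = h11 = 0.0  # information matrix (negative Hessian)
        for xi, yi in zip(x, y):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * xi)))
            w = p * (1.0 - p)
            g0 += yi - p
            g1 += (yi - p) * xi
            h00 += w
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        if det == 0:
            raise ValueError("predictor has no variation; model is not identified")
        # Solve the 2 x 2 system (information matrix) * step = gradient.
        d0 = (h11 * g0 - h01 * g1) / det
        d1 = (h00 * g1 - h01 * g0) / det
        b0 += d0
        b1 += d1
        if abs(d0) + abs(d1) < tol:
            break
    return math.exp(b1)


# Hypothetical cells: composite risk value and whether any shooting followed.
risk_value = [0, 0, 0, 0, 1, 1, 1, 2, 2, 2, 3, 3, 3]
any_shooting = [0, 0, 1, 0, 0, 1, 0, 0, 1, 1, 1, 0, 1]
odds_ratio = fit_logistic_odds_ratio(risk_value, any_shooting)
```

An odds ratio above 1 indicates that higher-risk cells were more likely to contain a shooting in the following period, which is the quantity compared across the four models.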

Research Question 2: How Do Risk Terrain Maps Compare to Retrospective Hotspot Maps?

The Model 4 risk terrain was compared to retrospective density maps of shooting incidents because, if RTM does not outperform retrospective hotspot maps, then their operational value to police is negligible and, thus, not worth the effort that the production of “best model” risk terrains mandates. To make this comparison, separate retrospective maps were created for Periods 1 through 4. Retrospective mapping was defined by using the locations of past events to predict locations of future similar events. “In police work,” Caplan et al. (2010) explain, “this univariate analysis assumes a static environment—that the locations of crimes do not change over time and is operationalized as the production of density maps for visual analysis.” For this study, a retrospective map of shootings was a density map calculated from the locations of shooting incidents from Period 1, 2, 3 and 4, respectively, that would then be used to forecast the locations of shootings that will occur during immediately subsequent time periods. This type of procedure is widely used by crime analysis units in police departments across the country (Harries 1999).

Just like the risk terrain maps, cells of retrospective maps were categorized using standard deviational breaks and coded with a “risk” value from 0 (less than the mean) to 3 (greater than +2 SD). Retrospective maps were then spatially joined with shooting incidents from their subsequent time period. Retrospective maps covered the same geography as risk terrain maps and they had exactly the same size and number of cells. The only difference was the range of risk values: Retrospective maps ranged from 0 to 3; Risk terrain maps ranged from 0 to 9.

Retrospective maps were compared to the Model 4 risk terrain maps using 2 × 2 cross tabulations and Chi-Squared tests. It was hypothesized that “high risk” cells would have significant levels of future shootings in both the retrospective maps and risk terrain maps, but that risk terrain maps would have relatively higher proportions of correct predictions. Consistent with the approach used by Caplan et al. (2010), four categories of “high risk” cells were designated as “highest risk” for each map and tested (see Note 2): the top 10% (n = 1,752), top 20% (n = 3,504), top 30% (n = 5,262) and top 40% (n = 7,009). This allowed for a numerically equal comparison of cells designated as “high risk” between the two methods: retrospective density maps and risk terrain maps. It also added pragmatic operational value to the results by identifying the coverage area (10 through 40% of the city) needed to maximize any observed benefits so that finite police resources can be allocated most efficiently.
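The designation of “high risk” cells can be sketched as a sort with random tie-breaking, as described in the paper's notes, followed by a cut at the chosen percentage. The function names, the seed parameter, the rounding down at the cut point, and the sample data are assumptions; the study performed this step in SPSS.

```python
import random


def top_percent_cells(risk_values, percent, seed=None):
    """Indices of the cells in the top `percent` by risk value.

    A random number is attached to every cell so that cells sharing a risk
    value have an equal chance of falling above or below the cut point.
    """
    rng = random.Random(seed)
    keyed = [(-risk, rng.random(), idx) for idx, risk in enumerate(risk_values)]
    keyed.sort()  # highest risk first; ties ordered by the random number
    n_top = len(risk_values) * percent // 100  # rounds down (an assumption)
    return [idx for _, _, idx in keyed[:n_top]]


def share_of_shootings_captured(high_risk_idx, shooting_counts):
    """Proportion of next-period shootings that fell inside the selected cells."""
    total = sum(shooting_counts)
    hits = sum(shooting_counts[i] for i in high_risk_idx)
    return hits / total if total else 0.0


# Hypothetical map of 100 cells in which 11 cells share the top risk value.
risk = [8] * 11 + [1] * 89
shootings = [1] * 11 + [0] * 89
high_risk = top_percent_cells(risk, percent=10, seed=1)
captured = share_of_shootings_captured(high_risk, shootings)
```

Applying the same function to a retrospective map and to a risk terrain map yields equally sized sets of cells, so the captured shares can be compared directly.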

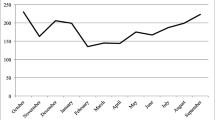

As shown in Table 3 and Fig. 1, the risk terrain model outperformed retrospective maps across each high risk cell designation method and across all time periods. As much as 36% more shootings occurred in high-risk cells identified by the risk terrain model compared to the retrospective map; the top 40% of high-risk cells in the Period 3 risk terrain map correctly predicted the locations of 84% of the shootings during Period 4 (the retrospective maps only predicted as much as 53%). Within the top 10% of high risk cells, RTM outperformed retrospective maps by 5%, on average, with two time periods having differences in the double-digits (Period 2 = 11%; Period 3 = 15%). The top 20 and 30% of high risk cells further exemplified RTM’s superiority over traditional methods, with retrospective maps being outperformed by an average of 12.5 and 18.0% respectively (see Note 3).

With nearly 300 shootings annually in Newark, even a seemingly small 5% improvement over existing analytical practices equates to 15 shootings that will probably occur within known 145′ × 145′ places. In the context of shootings, which run a high risk of fatality, there could be a significant dividend if police take adequate preemptive measures to mitigate one or more risk factors in these places. Herein lies the next question addressed by this study: How can the intelligence produced by RTM be communicated in a meaningful way to police and used for strategic decision-making and tactical operations?

Research Question 3: How Can Risk Terrain Maps be Used as Spatial Intelligence?

Hotspot density mapping is a proven technology that aids police operations. If police want to target areas with existing high crime, then using simple retrospective density maps of these crimes will show the general areas where crime is most concentrated and suitable for common suppression methods (e.g. increase police patrols, ordinance enforcement, curfews, zero-tolerance enforcement of ordinances and misdemeanors, knock and talks). But RTM grounds police plans and operations in ecological theories of crime and the mathematical theory of sparsity (Silverman 2010), which justifies the modeling of criminogenic places rather than requiring absolute knowledge about every incident that already happened.

Consider the example of testing for syphilis during WWII. Military recruiters had to very quickly test every new recruit, but they knew that individually testing everyone would be time consuming and costly. Instead, they drew blood from each recruit and mixed the samples among groups of 10 recruits and tested that one sample of mixed blood. Most of the time those tests were negative because syphilis was sparse among the general population; but if a positive sample was found, they knew it belonged to at least one of the 10 recruits in the mixed-blood group. This procedure was grounded in the theory of sparsity because syphilis was relatively sparse in the general population, so using the groups-of-10 model to “compress” the samples for faster testing worked well. In fact, it worked just as well as testing every recruit separately would have, but without the added costs.

Violent crime is also relatively sparse in the population. Not everyone is a violent criminal and violent crime does not happen everywhere. Even in cities where crime occurs more frequently than other cities, it tends to occur most often at certain places within the city and not at other places (Eck and Weisburd 1995). For this reason, hotspot mapping tends to be the operational default for directing police. Consider robbery, for example, which (though generally sparse) occurs frequently around major transit hubs. Criminological theory suggests that these transient places with a lot of suitable victims/targets are more likely to have robberies, and past crime statistics show that. But, robberies also happen elsewhere in the city. How should police allocate the remaining limited resources to other parts of the city? If they direct resources to other areas with many past crimes, they run the risk that these areas might not have similar amounts of future crimes. The challenge that police agencies have is to allocate resources to areas with high crimes in order to suppress them, and also to areas that pose the highest risk for crimes to occur in the future. Risk terrain modeling especially permits the latter.

This encouraged us to think more about using “risk clusters” instead of crime hotspots to allocate police resources, and we used Local Indicators of Spatial Autocorrelation (LISA), or Local Moran’s I, to test whether RTM could generate this type of information. For the purposes of operational policing, LISA is preferred to other hotspot analysis methods such as the Getis-Ord Gi* statistic (i.e. the “Hotspot Analysis” tool in ArcGIS) because it can identify clusters of places with values similar in magnitude as well as features that are spatial outliers. For example, whereas the resultant Z score from the Getis-Ord Gi* statistic tells where features with either high or low values cluster spatially surrounded by other similarly valued features, LISA can distinguish between statistically significant clusters of high values surrounded by high values (HH), low values surrounded by low values (LL), high values surrounded by low values (HL), and low values surrounded by high values (LH).
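The four cluster types can be illustrated with a minimal sketch of Local Moran’s I on a grid. It assumes queen contiguity with row-standardized weights and omits the permutation-based significance test that a GIS package would apply, so it labels every cell rather than only the statistically significant ones. The function name and the sample grid are hypothetical.

```python
def local_moran(grid):
    """Local Moran's I and quadrant label for each cell.

    grid maps (row, col) -> risk value for the cells included in the analysis.
    Returns a dict mapping (row, col) -> (I_i, label), where label is one of
    "HH", "LL", "HL", "LH".
    """
    n = len(grid)
    mean = sum(grid.values()) / n
    z = {cell: value - mean for cell, value in grid.items()}
    m2 = sum(d * d for d in z.values()) / n  # second moment of the deviations
    if m2 == 0:
        raise ValueError("all values are identical; the statistic is undefined")
    results = {}
    for (r, c), zi in z.items():
        # Queen contiguity: up to eight surrounding cells that are in the grid.
        neighbors = [
            z[(r + dr, c + dc)]
            for dr in (-1, 0, 1)
            for dc in (-1, 0, 1)
            if (dr, dc) != (0, 0) and (r + dr, c + dc) in z
        ]
        if not neighbors:
            continue  # an isolated cell has no spatial lag
        lag = sum(neighbors) / len(neighbors)  # row-standardized spatial lag
        label = ("H" if zi > 0 else "L") + ("H" if lag > 0 else "L")
        results[(r, c)] = (zi * lag / m2, label)
    return results


# Hypothetical 3 x 3 composite risk values; zero-valued cells are dropped first.
raw = {
    (0, 0): 9, (0, 1): 9, (0, 2): 1,
    (1, 0): 9, (1, 1): 9, (1, 2): 1,
    (2, 0): 1, (2, 1): 1, (2, 2): 1,
}
included = {cell: value for cell, value in raw.items() if value > 0}
clusters = local_moran(included)
```

The first letter of each label describes the cell itself and the second describes its neighbors, so "HL" marks a high-risk cell surrounded by lower-risk cells.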

This kind of information can be especially useful to police strategists within the context of risk terrain modeling because it allows for categorizing (and ultimately prioritizing) the most risky, most vulnerable, or least risky places. Furthermore, without additional research well beyond the scope of this paper, risk values produced by risk terrain modeling remain ordinal. That is, we may say that Place A with a value of 4 is higher risk than Place B with a value of 2, but we cannot say that Place A is twice as risky as Place B. While risk may never truly be absolutely zero, we can reasonably say that places with risk values of zero are at no greater risk for crime occurrence than any other place at random, under normal circumstances. For police commanders, this empirical evidence of zero risk at places could translate to: “Continue with standard practices in these places; no specific resources or interventions are immediately needed.”

For the purposes of cluster analysis, places with zero risk values should be excluded so that they do not lower the expected mean or affect the Z scores in a less-than-meaningful way. In police practice, this issue can be stated as: “Areas with lower risk can be covered through standard practices, such as routine patrol, deployment, and criminal investigations; but where should additional resources and interventions be directed to first in the most efficacious way?” RTM helps answer this question by identifying cells with risk values greater than zero and statistically significant clusters of HH, HL, and LH places since none of these places are (statistically) completely isolated from high-risk places and their influences. Time and other resources permitting, further attention may be given to LL places. Figure 2 shows results of a Local Moran’s I test performed on the Period 1 Model 4 risk terrain map with all zero-valued cells excluded.

It is apparent from this map that risk can, in fact, cluster and that the nature of these clusters can better inform plans for police response. For example, officers might seek to leverage the social and human capital and other strengths of low-risk places that are near high-risk places in their efforts to mitigate one or more of the risk factors in both “risk cluster” spots. Or, because lower-risk clusters still have some criminogenic risk factors in them, police can monitor these places as they target nearby high-risk clusters to preempt any displacement or dispersion of risk factors (or new crime incidents) that could occur.

Discussion of Results

The risk clustering approach can direct police to anticipate crime problems early and address the correlates of crime outcomes (note that these can vary by the type of crime being studied). This is not a ridiculous supposition. Aspects of community-oriented policing, problem-oriented policing, and even CompStat programs, have tried to get police to think proactively and take preemptive action. Since risk can cluster in meaningful ways, RTM can ground risk-based policing much more into the contexts in which police operate rather than concentrating police on behavior that they are trying to control.

Risk terrain modeling has specific implications for crime analysis functions of police agencies. Indeed, the role of the crime analyst is central in contemporary policing (Clarke and Eck 2005). Strategic innovations in policing hinge on the work and insight of crime analysis units. Hotspots policing relies on the identification, primarily through GIS analysis, of distinct places experiencing crime concentrations. Problem Oriented Policing relies on intricate “scanning” and “analysis” of crime problems in the development of strategies and stresses rigorous “assessment” of program impact. Each of these aforementioned tasks is most often the direct responsibility of the crime analysts (Boba 2003). CompStat is particularly dependent on the crime analysis function, with meetings unable to take place without data produced by the analysts (Boba 2009; Ratcliffe 2004). We offer Risk Terrain Modeling as an effective analytical framework to be added to the crime analysts’ toolbox. We do not surmise that RTM ought to replace longstanding techniques, but rather offer the approach as a method by which analytical and operational efforts of law enforcement can possibly be enhanced (for a practical example, see Baughman and Caplan 2010).

For RTM to be effectively incorporated into law enforcement, pertinent “risk” variables must be collected and processed in an expedited manner. While crime and officer activity (e.g. arrest) data are routinely collected and updated by law enforcement, it is currently unclear how often police agencies collect risk-related data, or to what extent risk variables are considered at all. In order to undertake risk terrain modeling, police leadership must place an emphasis on the importance of producing risk data on a frequent basis, similar to how the introduction of CompStat led to the more accurate and timely production of crime data. New York City Police officials who instituted CompStat tell of how infrequently crime information was traditionally produced within the department, with crime statistics being generated exclusively for UCR reporting and quarterly press releases, and seldom influencing law enforcement policy and strategy (Bratton 1998; Maple 1999). The introduction of the CompStat model, which held police commanders directly accountable for devising strategies to address real-time crime patterns, made crime analysis a daily, and very necessary, function for law enforcement, both in New York and throughout the United States (Silverman 2006). While such a drastic paradigm shift is not necessary for risk terrain modeling to be incorporated into the crime analysis function, the value of the technique would be heightened by police agencies focusing efforts towards better understanding and measuring place-based factors which accurately forecast incidents of crime.

Such an approach has specific implications for cities like Newark. Since 2006, the Newark Police Department has incorporated a large number of place-based interventions in its efforts against violence. These approaches typically incorporate increased officer presence, high levels of foot patrols, situational-crime prevention techniques (such as street closures and surveillance activities), and proactive narcotics enforcement. Such steep manpower increases require a substantial dedication of resources. Managing finite resources in urban environments is a challenge for police leaders, especially during the recent economic downturn which has led to operating budgets being substantially reduced for most law enforcement agencies. Identification of “risk clusters” can aid police leaders in their resource allocation by identifying high-priority areas both currently receiving and in need of additional coverage. In the case of Newark, a number of risk clusters fall within existing target areas of the Police Department’s various place-based interventions. However, other high-risk places currently receive no more than basic police attention in the form of patrol, retroactive investigations, and ad hoc suppression efforts. With such data in hand, police managers can more precisely select areas for future proactive, place-based interventions.

Summary and Conclusions

This paper offers a number of advances to thinking about spatial analysis of crime. It serves as a replication of risk terrain modeling as offered by Caplan et al. (2010) but adds to it by addressing both the analytical and practical aspects of this approach. This study proposes a procedure for selecting some variables out of many to include in the risk terrain model; it compares RTM to hotspot mapping and suggests a way to utilize both in concert, rather than having RTM replace density mapping; and it considers how risk clusters can be used in strategic decision-making in police organizations.

In future applications of this approach, now that a procedure has been tested to isolate some risk factors from many to include in a risk terrain model, the next step is to develop a reliable method for weighting these factors relative to one another. To date, all risk map layers have carried equal weights. In addition, interventions that reduce risk of ensuing criminal acts can be evaluated by regularly re-assessing risk, and then measuring changes in risk values among different risk terrain maps at micro or macro levels using inferential statistics. For example, when evaluating the impact of a police intervention in reaction to assessed risk, subsequent risk terrain maps might be expected to show certain results, such as an overall reduction in risk values throughout the intervention area. They may also point to a fragmentation or shift of high-risk clusters. It is also possible that interventions could lead to an equalization (or smoothing) of risk throughout the study area, with a decreased intensity of high-risk spots and a slightly increased or constant intensity of risk at previously low-risk spots. All of these possibilities would argue for the development of a coordinated risk assessment model that can suggest effective interventions and a clear definition of “success” at the outset of any intervention program. Through this form of evidence-based approach, the likelihood of success in reducing and preventing crime increases.

Notes

The specific parameters for density calculations used in this study were a bandwidth of 1,000 feet and a cell size of 145 feet. A 1,000 foot bandwidth was selected because it seemed a reasonable sphere of influence for shooters—the median blockface is approximately 290 feet (Felson 1995; Taylor 1997). 145 × 145 foot cells were the smallest area that our computers could process reasonably fast and, for this study, if a Risk Terrain Model could predict locations of shootings at the smallest (but reasonable) geographic units, it would best exemplify the utility of RTM for operational policing compared to larger, less specific, units of analysis.

Rows in the attribute tables of each map were sorted in descending order in SPSS by their risk values after random numbers were assigned to each cell. Random numbers were necessary to randomize the sorting of cells with the same risk values. For example, if 11 out of 100 cells had a risk value of eight, and they were sorted in descending order, the top 10% of cells to be designated as “high risk” would all have values of eight. But the 11th cell would be excluded due to a rather arbitrary sorting algorithm. The random number ensured that every cell had an equal chance of being sorted above or below each cut point.

As is evident from Fig. 1, RTM’s superiority over the retrospective maps declined dramatically in Period 4. This may be the result of numerous place-based operations of the Newark Police Department. Starting in early 2008, the Newark Police began systematic, intensive street level enforcement in various “hot zones” around the city. When an area is designated as a “hot zone,” a vast amount of resources is dedicated to the area. City-wide and precinct-level squads (such as the central narcotics unit and precinct level “conditions” unit) are tasked with conducting operations in these areas on a daily basis. Police patrols are also significantly heightened. Commonly, police units and foot patrols are stationed at these places 24 hours a day. These operations are ongoing; resources are not pulled from an area when crime levels drop. Instead, the heightened level of enforcement remains steady even as additional areas are designated as “hot zones.” As of the date of this writing, each of Newark’s four police precincts designates five areas as “hot zones,” according to department documents. In addition, the Newark Police Department has formed a partnership with various federal and state agencies active within the city. This “Violent Enterprise Strategy Team (VEST),” as it is referred to, targets criminal gangs seen to significantly contribute to violence. VEST operations are conducted throughout numerous areas of the city. Target areas of these aforementioned efforts happen to be “high-risk” areas as denoted by our Risk Terrain Model. Because the number of such specialized zones has increased over time, more “high risk” areas received higher levels of police attention and enforcement in Period 4 than during the previous study periods. Thus, in Period 4, the true “risk” of violence is most likely lower than the Risk Terrain suggests, which obviously impacts the model’s predictive capability. In light of this observation, future research on the Risk Terrain approach should incorporate police activity in the model as a mitigating factor.

References

Baughman J, Caplan J (2010) Applying risk terrain modeling to a violent crimes initiative in Kansas City, Missouri. RTM Insights 1. http://rutgerscps.org/rtm/KCPD_RTMinAction_InsightsBrief_1.pdf. Accessed 10 March 10

Berk R (2009) Asymmetric loss functions for forecasting in criminal justice settings. Unpublished manuscript

Block R, Block C (1995) Space, place, and crime: hotspot areas and hot places of liquor related crime. In: Eck JE, Weisburd D (eds) Crime and place. Crime prevention studies, vol 4. Criminal Justice Press, Monsey, NY

Blumstein A (1995) Youth violence, guns, and the illicit-drug industry. J Crim Law Criminol 86:10–36

Boba R (2003) Problem analysis in policing. Police Foundation, Washington, DC

Boba R (2009) Crime analysis with crime mapping, 2nd edn. Sage Publications, Thousand Oaks

Braga A (2004) Gun violence among serious young offenders. Problem-oriented guides for police. Problem-specific guides series: no. 23. U.S. Department of Justice. Office of Community Oriented Policing Services, USA

Brantingham PJ, Brantingham PL (1981) Environmental criminology. Sage, Beverly Hills, CA

Brantingham PJ, Brantingham PL (1995) Criminality of place: crime generators and crime attractors. Eur J Crim Policy Res 3:1–26

Brantingham PJ, Tita G (2008) Offender mobility and crime pattern formation from first principles. In: Liu L, Eck JE (eds) Artificial crime analysis systems: using computer simulations and geographic information systems. Idea Press, Hershey, PA, pp 193–208

Bratton W (1998) Turnaround: How America’s top cop reversed the crime epidemic. Random House, New York

Burgess EW (1928) Factors determining success or failure on parole. In: Bruce AA, Burgess EW, Harno AJ (eds) The workings of the indeterminate sentence law and the parole system in Illinois. Illinois State Board of Parole, Springfield, pp 221–234

Caplan JM, Kennedy LW (2010) Risk terrain modeling manual. Rutgers Center on Public Security, Newark

Caplan JM, Kennedy L (2009) Drug arrests, shootings, and gang residences in Irvington, NJ: an exercise in data discovery. Paper presented at threat assessments: innovations and applications in data integration and analysis Conference at the Regional Operations Intelligence Center, West Trenton, NJ

Caplan JM, Kennedy LW, Miller J (2010) Risk terrain modeling: brokering criminological theory and GIS methods for crime forecasting. Justice Q (online first)

Center for Collaborative Change (2010) Neighborhood Survey, Newark, NJ. Retrieved online at http://newarkchange.org/survey/

Clarke RV (1997) “Introduction” in situational crime prevention: successful case studies. Harrow and Heston, Guilderland, NY

Clarke R, Eck JE (2005) Crime analysis for problem solvers in 60 small steps. U.S. Department of Justice Office of Community Oriented Policing Services, USA

Cohen L, Felson M (1979) Social change and crime rate trends: a routine activity approach. Am Sociol Rev 44:588–605

Decker S, Curry G (2002) Gangs, gang homicides, and gang loyalty: organized crimes or disorganized criminals. J Crim Justice 30:343–352

Eck JE (1994) Drug markets and drug places: a case-controlled study of spatial structure of illicit dealing. Unpublished PhD dissertation, University of Maryland, College Park

Eck JE (1995) A general model of the geography of illicit retail marketplaces. In: Eck JE, Weisburd D (eds) Crime and place: crime prevention studies, vol 4. Willow Tree Press, Monsey, NY, pp 67–95

Eck JE (2001) Policing and crime event concentration. In: Meier R, Kennedy L, Sacco V (eds) The process and structure of crime: criminal events and crime analysis. Transactions, New Brunswick, pp 249–276

Eck JE, Weisburd D (eds) (1995) Crime and place: crime prevention studies, vol 4. Willow Tree Press, Monsey, NY

Eck JE, Chainey S, Cameron JG, Leitner M, Wilson RE (2005) Mapping crime: understanding hot spots. National Institute of Justice, Washington, DC

Eck JE, Clarke R, Guerette R (2007) Risky facilities: crime concentration in homogeneous sets of establishments and facilities. Crime Prev Stud 21:225–264

Felson M (1995) Those who discourage crime. In: Eck JE, Weisburd D (eds) Crime and place: crime prevention studies, vol 4. Willow Tree Press, Monsey, NY, pp 349–359

Felson M (2002) Crime and everyday life, 3rd edn. Sage Publications, Thousand Oaks

Fried C (1996) America’s Safest City: Amherst, NY; The Most Dangerous: Newark, N.J. CNN Money Magazine

Glueck S, Glueck E (1950) Unraveling juvenile delinquency. Commonwealth Fund, New York

Gorr W, Olligschlaeger A (2002) Crime hot spot forecasting: modeling and comparative evaluation. National Institute of Justice, Washington, DC

Groff ER (2007a) Simulation for theory testing and experimentation: an example using routine activity theory and street robbery. J Quant Criminol 23:75–103

Groff ER (2007b) ‘Situating’ simulation to model human spatio-temporal interactions: an example using crime events. Trans GIS 11(4):507–530

Groff ER, La Vigne NG (2002) Forecasting the future of predictive crime mapping. Crime Prev Stud 13:29–57

Harocopos A, Hough M (2005) Drug Dealing in Open Air Markets. Problem-Oriented Guides for Police. Problem-Specific Guides Series: No. 31. U.S. Department of Justice. Office of Community Oriented Policing Services, USA

Harries K (1999) Mapping crime: principles and practice. National Institute of Justice, Washington, DC

Jacobson J (1999) Policing drug hotspots. Police research series. Number 9. Home Office. Policing and Reducing Crime Unit

Johnson SD, Bowers KJ, Birks D, Pease K (2008) Prospective mapping: the importance of the environmental backcloth. In: Weisburd D, Bernasco W, Bruinsma G (eds) Putting crime in its place: units of analysis in geographic criminology. Springer, New York

Kennedy LW, Van Brunschot E (2009) The risk in crime. Rowman and Littlefield, Lanham

Kennedy D, Piehl A, Braga A (1996) Youth violence in Boston: gun markets, serious youth offenders, and a use-reduction strategy. Law Contemp Probl 59:147–197

Lum C (2008) The geography of drug activity and violence: analyzing spatial relationships of non-homogenous crime event types. Subst Use Misuse 43:179–201

Maple J (1999) The crime fighter: how you can make your community crime-free. Broadway Books, New York

Mazerolle L, Kadleck C, Roehl J (2004) Differential police control at drug-dealing places. Secur J 17:1–69

McGloin J (2005) Policy and intervention considerations of a network analysis of street gangs. Criminol Public Policy 4(3):607–636

Newman O (1972) Defensible space: crime prevention through urban design. Macmillan, New York

Parks B (2007) The Newark riots: 40 years later. A four part series. The Newark Star Ledger. 8–11 July 2007

Poyner B (2006) Crime-free housing in the 21st century. Jill Dando Institute of Crime Science, University College of London. Brook House Publishing, London

Ratcliffe JH (2004) Crime mapping and the training needs of law enforcement. Eur J Crim Policy Res 10:65–83

Ratcliffe JH (2006) A temporal constraint theory to explain opportunity-based spatial offending patterns. J Res Crime Delinq 43(3):261–291

Ratcliffe JH, McCullagh MJ (2001) Chasing ghosts? Police perception of high crime areas. Br J Criminol 41:330–341

Ratcliffe JH, Rengert G (2008) Near repeat patterns in Philadelphia shootings. Secur J 21(1–2):58–76

Sampson RJ, Raudenbush S (1999) Systematic social observation of public spaces: a new look at disorder in urban neighborhoods. Am J Sociol 105:603–651

Shaw C, McKay H (1969) Juvenile delinquency and urban areas. University of Chicago Press, Chicago

Sherman LW (1995) Hotspots of crime and criminal careers of places. In: Eck JE, Weisburd D (eds) Crime and place. Crime prevention studies, vol 4. Criminal Justice Press, Monsey, NY, pp 35–52

Sherman LW, Gartin PR, Buerger ME (1989a) Hot spots of predatory crime: routine activities and the criminology of place. Criminology 27:27–55

Sherman LW, Gartin PR, Buerger ME (1989b) Hot spots of predatory crime: routine activities and the criminology of place. Criminology 27:821–849

Silverman E (2006) CompStat’s innovation. In: Weisburd D, Braga A (eds) Police innovations: contrasting perspectives. Cambridge studies in criminology. Cambridge University Press, UK

Silverman A (2010) The path from syphilis to faster MRIs. Retrieved from http://www.npr.org/blogs/alltechconsidered/2010/05/24/127088641/the-path-from-syphilis-to-faster-mris

Taylor RB (1997) Order and disorder of streetblocks and neighborhoods: ecology, microecology and the systemic model of social organization. J Res Crime Delinq 34:113–155

Taylor R (2000) Breaking away from broken windows: Baltimore neighborhoods and the nationwide fight against crime, grime, fear, and decline. Perseus, New York

Tita G, Griffiths E (2005) Traveling to violence: the case for a mobility-based spatial typology of homicide. J Res Crime Delinq 42:275–308

Tomlin C (1994) Map algebra: one perspective. Landsc Urban Plan 30:3–12

Topalli V, Wright R, Fornango R (2002) Drug dealers, robbery, and retaliation: vulnerability, deterrence, and the contagion of violence. Br J Criminol 42:337–351

Tuttle B (2009) How Newark became Newark: the rise, fall, and rebirth of an American City. Rivergate Books, New Brunswick

Uniform Crime Report (2009) Crime in the United States 2008. U.S. Department of Justice, Federal Bureau of Investigation, Washington, DC

U.S. Department of Housing and Urban Development (2000) In the crossfire: the impact of gun violence on public housing communities. U.S. Department of Housing and Urban Development, Washington, DC

Weisburd D (2008) Place-based policing. Ideas in policing series. Police Foundation, Washington, DC

Weisburd D, Braga AA (2006) Police innovation: contrasting perspectives. Cambridge University Press, Cambridge, UK

Weisburd D, Eck JE (2004) What can police do to reduce crime, disorder, and fear? Ann Am Acad Pol Soc Sci 593:42–65

Weisburd D, Green L (1994) Defining the street-level drug market. In: Mackenzie D, Uchida C (eds) Drugs and crime: evaluating public policy initiatives. Sage, Thousand Oaks

Weisburd D, Green L (1995) Policing drug hot spots: the Jersey City drug market analysis experiment. Justice Q 12:711–736

Weisburd D, Bernasco W, Bruinsma G (2009) Units of analysis in geographic criminology: historical development, critical issues, and open questions. In: Weisburd D, Bernasco W, Bruinsma G (eds) Putting crime in its place: units of analysis in geographic criminology. Springer, New York, pp 3–33

Willis JJ, Mastrofski SD, Weisburd D (2004) Compstat and bureaucracy: a case study of challenges and opportunities for change. Justice Q 21:463–496

Wortley R, Mazerolle L (eds) (2008) Environmental criminology and crime analysis. Willan Publishers, London, UK

Zanin N, Shane J, Clarke R (2004) Reducing drug dealing in private apartment complexes in Newark, New Jersey. A final report to the U.S. Department of Justice, Office of Community Oriented Policing Services on the field applications of the problem-oriented guides for police project

Zurawski N (2007) Video surveillance and everyday life: assessments of closed-circuit television and the cartography of socio-spatial imaginations. Int Crim Justice Rev 17:269–288


Cite this article

Kennedy, L.W., Caplan, J.M. & Piza, E. Risk Clusters, Hotspots, and Spatial Intelligence: Risk Terrain Modeling as an Algorithm for Police Resource Allocation Strategies. J Quant Criminol 27, 339–362 (2011). https://doi.org/10.1007/s10940-010-9126-2


DOI: https://doi.org/10.1007/s10940-010-9126-2