Abstract

Although program adaptation is a reality in community-based implementations of evidence-based programs, much of the discussion about adaptation remains theoretical. The primary aim of this study was to replicate two coding systems to examine adaptations in large-scale, community-based disseminations of the Strengthening Families Program for Parents and Youth 10–14, a family-based substance use prevention program. Our second aim was to explore intersections between various dimensions of facilitator-reported adaptations from these two coding systems. Our results indicate that only a few types of adaptations and a few reasons accounted for a majority (over 70 %) of all reported adaptations. We also found that most adaptations were logistical, reactive, and not aligned with the program’s goals. In many ways, our findings replicate those of the original studies, suggesting the two coding systems are robust even when applied to self-reported data collected from community-based implementations. Our findings on the associations between adaptation dimensions can inform future studies assessing the relationship between adaptations and program outcomes. Studies of local adaptations, like the present one, should help researchers, program developers, and policymakers better understand the issues faced by implementers and guide efforts related to program development, transferability, and sustainability.

Introduction

Despite significant progress in demonstrating the efficacy of health behavior education programs (Botvin & Griffin, 2004; Catalano et al., 2012; Durlak, Weissberg, Dymnicki, Taylor, & Schellinger, 2011; Spoth, Randall, Trudeau, Shin, & Redmond, 2008), the gap between research, practice, and meaningful public health impact remains substantial (Glasgow, Lichtenstein, & Marcus, 2003; Rohrbach, Grana, Sussman, & Valente, 2006; Spoth et al., 2013; Wandersman et al., 2008). Some suggest that this gap occurs because when evidence-based programs (EBPs) are transported into the real world, they are modified to fit local contexts and implemented with less than optimal levels of fidelity (Cohen et al., 2008; Dusenbury, Brannigan, Hansen, Walsh, & Falco, 2005; Miller-Day, Pettigrew, Hecht, Shin, Graham, & Krieger, 2013), and several empirical studies show a positive association between fidelity (i.e., program delivery as designed) and participant outcomes (Breitenstein, Gross, Garvey, Hill, Fogg, & Resnick, 2010b; Byrnes, Miller, Aalborg, Plasencia, & Keagy, 2010; Durlak & DuPre, 2008; Hamre et al., 2010; Hill & Owens, 2013; Pettigrew, Graham, Miller-Day, Hecht, Krieger, & Shin, 2015). Others argue that adaptation (i.e., program modification) may be as important as fidelity in assuring effective and sustainable implementation (August, Gewirtz, & Realmuto, 2010; Bopp, Saunders, & Lattimore, 2013). However, it is difficult to document and evaluate adaptations of EBPs as they are disseminated in real-world settings, and much of the discussion about adaptation remains theoretical. Our goal was to replicate two coding schemes used to examine adaptations in the Strengthening Families Program for Parents and Youth 10–14 (SFP 10–14). SFP 10–14 has a strong evidence base and is one of the most widely disseminated family-focused substance use prevention programs for middle-school-age youth. This study extends our knowledge about the multidimensional nature of adaptation in the field, provides direction for possible measurement strategies, and adds to the growing research base on SFP 10–14, a program that is already widely implemented in diverse communities across the United States and abroad.

Adaptation Types and Reasons

Few studies have systematically examined types of adaptations in health behavior interventions (Hansen, 2013). The primary adaptation types reported in those few studies are changes to program content, format, and delivery context. Studies also commonly report that facilitators provide additional information or resources; change the program’s target population; add rewards, prizes, or celebrations; and modify training or evaluation processes (Cohen et al., 2008; Lara et al., 2011; Miller-Day et al., 2013; Moore, Bumbarger, & Cooper, 2013; Stirman, Miller, Toder, & Calloway, 2013; Veniegas, Kao, & Rosales, 2009).

Studies that have reported reasons given for adaptations indicate that they often occur in response to group attributes. For example, Hill and Owens (2013) found that in community-based (non-research) implementations of SFP 10–14, the program was delivered to a more heterogeneous population than that for which it was initially designed. Thus, facilitators reported making adaptations to better meet the needs of that population. Facilitators have also reported deleting activities due to insufficient time to complete them or changing delivery styles because they believed that a different format or process would be better. Disagreement with content, clarification of or emphasis on specific content, and technical difficulties have also been reported as common reasons for adaptations (Cohen et al., 2008; Hill, Maucione, & Hood, 2007; Miller-Day et al., 2013; Moore et al., 2013; Ozer, Wanis, & Bazell, 2010). Our study aims to extend our knowledge of types of and reasons for program adaptation in community settings through the replication of two multidimensional coding systems.

Multidimensional Adaptation Coding Systems

Some recent studies of evidence-based program adaptations have utilized coding systems that categorize the multiple dimensions of program adaptations (Berkel, Murray, Roulston, & Brody, 2013; Cohen et al., 2008; Hansen et al., 2013; Hill et al., 2007; Lara et al., 2011; Miller-Day et al., 2013; Ozer et al., 2010; Moore et al., 2013; Stirman et al., 2013). For example, Stirman and colleagues developed a system to classify modifications (i.e., adaptations) made to a variety of mental and behavioral health interventions according to who made the modification (e.g., individual practitioner, administrator), what was modified (e.g., content, context), at what level of delivery the modification was made (e.g., individual patient, group), the types of context modifications made (e.g., format, setting), and the nature of the content modifications made (e.g., adding or removing elements). This system highlights the importance of considering multiple dimensions of adaptations within and across different studies, intervention types, and settings (Stirman et al., 2013).

In this study we compared the Moore et al. (2013) and Hill et al. (2007) coding schemes. We selected these for several reasons. First, and most importantly, both systems have been previously applied to the SFP 10–14 program and were developed to assess similar aspects of adaptations (i.e., the types of and reasons for adaptations). Each of the previous studies used data from statewide implementations of SFP 10–14, but in different states; use of the two schemes with the same program across states enabled us to examine the generalizability of the two approaches across settings. Second, both were designed to be completed by program facilitators rather than observers, and thus were directly comparable. However, the two systems also contribute unique perspectives; whereas the Moore et al. (2013) system assesses more global aspects of adaptation, which are relevant across different types of EBPs, Hill et al. (2007) developed their system to capture information on the adaptation types and reasons specific to the SFP 10–14 program. For example, the Moore et al. (2013) system uniquely contributes information about the timing of adaptation (proactive vs. reactive) and the alignment with program theory (positive vs. negative). By examining the intersections of these more global codes with the more specific types of SFP 10–14 adaptations, as coded by the Hill et al. (2007) system, we will gain valuable information about whether those few most frequent reasons for adaptation in SFP 10–14 are usually in alignment with the program’s logic model, or whether certain types of adaptation are likely to be planned rather than reactive. These insights have important implications for SFP 10–14 program developers and for training.

The Hill et al. (2007) coding system was developed based on interviews with 41 SFP 10–14 facilitators in Washington State about the types of adaptations they made on a regular basis and their reasons for making those adaptations. The goal of this coding system was to determine the most common types and reasons for program adaptations. Using a grounded theory approach to identify emergent themes, the authors identified 13 types and 15 reasons for adaptation. Hill et al. (2007) examined whether the Pareto principle (Bookstein, 1990) applied to these data. The Pareto principle suggests that in program implementation, a few types of and reasons for adaptations should account for the majority of adaptations. Their analyses showed that just a few types of adaptations accounted for 70 % of all reported adaptations, and similarly, just a few reasons for adaptation accounted for 70 % of all reasons provided. Thus, it appeared that identifying and addressing those vital few types and reasons could substantially increase fidelity.

The Moore et al. (2013) coding system assessed qualitative descriptions of adaptations from 68 respondents implementing a variety of school, community, and family-based programs in a variety of community settings. Similar to the Hill et al. (2007) codes, the goal of the Moore et al. (2013) system was to capture information on the nature of the adaptations and the reasons why they were being made. Unlike Hill et al. (2007), this system also aimed to assess when adaptations were being made and how they aligned with the program’s theory. Specifically, the system was designed to assess adaptations along three dimensions: fit (philosophical vs. logistical), timing (proactive vs. reactive), and valence or alignment (positive, negative, or neutral). This system makes important contributions because it provides valuable information about whether adaptations are likely to have negative consequences on program outcomes and insight into why adaptations are happening. This system is also easily applied across different EBPs, whereas the Hill et al. (2007) system is likely specific to SFP 10–14, at least for specific types of adaptation.

Our Study

Our study has two primary aims. First, we sought to replicate two multidimensional coding systems that were previously applied to the analysis of SFP 10–14 and to determine whether the proportions of adaptation types and reasons were similar to those in the original studies (Hill et al., 2007; Moore et al., 2013). Based on previous findings, our hypotheses with regard to this aim are: (1a) only a few types of adaptations and a few reasons will account for the bulk of adaptations (Hill et al., 2007); (1b) facilitators will report similar types, reasons, and proportions of specific adaptations to those initially reported by Hill et al. (2007); and (1c) a majority of adaptations will be inconsistent with the program logic model (i.e., negatively aligned), logistical, and reactive, as initially reported by Moore et al. (2013). Because these coding systems were originally applied separately, our second aim was to explore the intersections between dimensions of facilitator-reported adaptations from these two coding schemes. Given the exploratory nature of the second aim, we did not specify any hypotheses; however, based on theory and previous research we expected that adaptations made to superficial aspects of the program (e.g., games) would be logistical in nature, reactive, and either neutral or negatively aligned with the program’s theory. Conversely, we expected that adaptations made to core elements of the program (e.g., group process) would be philosophical in nature and proactive.

Methods

Participants and Procedures

Since 2000, Washington State University (WSU) Extension faculty (in collaboration with state and community partners) have promoted the statewide dissemination of SFP 10–14, an internationally recognized evidence-based family skills training program for youth ages 10–14 and their parents (Spoth et al., 2008; Spoth, Redmond, & Lepper, 1999; Spoth, Reyes, Redmond, & Shin, 1999). The goals of SFP 10–14 are to reduce youth problem behaviors by enhancing parenting and youth coping skills. SFP 10–14 is delivered in 2-h sessions held once a week for seven consecutive weeks. Parents and youth each receive a 1-h separate, concurrent training session followed by a 1-h family session in which they practice skills learned during their separate sessions. The majority of programs (70 %) were implemented in school buildings and the remaining took place in religious, government, or some other public facility.

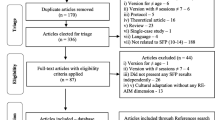

In Washington State, Extension faculty train SFP 10–14 facilitators and help support local implementations. As of 2014, the team has trained over 800 facilitators who have implemented approximately 550 programs reaching over 10,000 parents and youth across the state. Facilitators for these programs submit implementation and outcome data to WSU and in return receive a local outcome report for each program. Starting in 2008, we began collecting information on adaptations. A total of 167 programs submitted these data to WSU from 2008 to 2012; these programs were comparable to the complete sample of programs that have submitted data to WSU since 2003 in terms of program (e.g., number of facilitators, average program cost, language) and participant characteristics (e.g., caregiver average age, race and ethnicity). Fifty-eight percent (N = 97 programs) included a written description for at least one adaptation and therefore were included in our analyses. Because some facilitators in the study reported more than one adaptation during a single program implementation, we first reviewed the qualitative data to identify each distinct instance of an adaptation. We identified 154 unique adaptations reported by the facilitators.

For the 42 % who did not provide a written description, we have no way to know for sure whether this was because they in fact made no adaptations or that they simply omitted information about the adaptations they did make (i.e., that this is missing data). Assuming the former, 58 % is slightly higher than in the Moore et al. (2013) study where only 43 % reported making adaptations. However, it is important to note that the Moore et al. (2013) study included SFP 10–14 programs among a variety of other EBPs as well. It is also important to note that among the 97 programs with reported adaptations, there were 12 facilitators who submitted information on multiple programs in a single year (ranging from two to six programs). Out of the 12 facilitators, eight reported making at least one adaptation coded in the same category across different programs (e.g., change due to group process). These repeat adaptations comprise 17.5 % of the 154 unique adaptations coded.

This research was reviewed and determined to be exempt by the Washington State University Institutional Review Board.

Measures

Adaptation Data

For each 7-week program implemented, the lead facilitator completed an implementation survey which included the following open-ended questions regarding adaptation: “If the program was substantially modified: (1) please tell us the nature of the changes that were made (leaving out program material, adding or changing materials, other); and (2) please take a moment to tell us why (not enough time, high-activity youth, families couldn’t relate to scenarios, etc.).”

Four members of the research team (all authors on this paper) independently coded each unique adaptation (N = 154) using the two coding schemes described below. Once all adaptations were independently coded by each member of the research team according to the three Hill et al. (2007) dimensions (addition/change/deletion, type, reason) and the three Moore et al. (2013) dimensions (fit, timing, alignment), we discussed and resolved disagreements. For the Hill et al. (2007) dimensions, the initial agreement was as follows: 74 % for addition/change/deletion, 66 % for type, and 64 % for reason. For the Moore et al. (2013) dimensions, the initial agreement was as follows: 81 % for fit, 78 % for timing, and 71 % for alignment. For a detailed description of the two coding systems, see the original papers (Hill et al., 2007; Moore et al., 2013). Below we provide brief descriptions of the primary codes as they were utilized in our study.
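As a rough illustration of how an initial agreement figure like those above could be computed, the sketch below treats agreement as the share of adaptations on which all four coders independently assigned the same code. That definition is an assumption (the text does not state the exact formula), and the function name `percent_agreement` and the sample codes are hypothetical, not study data.

```python
from typing import Sequence


def percent_agreement(codes_by_coder: Sequence[Sequence[str]]) -> float:
    """Return the percentage of items on which every coder assigned the same code.

    Assumption: agreement means unanimous agreement across coders.
    """
    n_items = len(codes_by_coder[0])
    if any(len(codes) != n_items for codes in codes_by_coder):
        raise ValueError("every coder must code the same number of items")
    # zip(*...) regroups the data item by item: one tuple of codes per adaptation.
    unanimous = sum(
        1 for item_codes in zip(*codes_by_coder) if len(set(item_codes)) == 1
    )
    return 100.0 * unanimous / n_items


# Hypothetical 'fit' codes from four coders for five adaptations (not study data).
fit_codes = [
    ["logistical", "logistical", "philosophical", "logistical", "philosophical"],
    ["logistical", "logistical", "philosophical", "logistical", "logistical"],
    ["logistical", "logistical", "philosophical", "logistical", "philosophical"],
    ["logistical", "philosophical", "philosophical", "logistical", "philosophical"],
]
agreement = percent_agreement(fit_codes)
```

Other definitions, such as average pairwise agreement or a chance-corrected statistic, would give different values for the same codes.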

Hill et al. (2007) Coding System

The first coding dimension included: addition (adding content or process that was not in the original curriculum), change (modifying a portion of the original content or process in concordance with the spirit of what they modified), or deletion (deleting a portion of the original content or process and not replacing it with anything in the same spirit). The second coding dimension was ‘type of adaptation’ which included the following categories: games (entertainment or amusement), activities/icebreakers (educational process or procedure intended to stimulate learning), adaptation to random content (content was deleted/modified/added to, but no clarification provided), group process (changed the method of program delivery or matched delivery to participants in some way), time (added or subtracted time for reasons other than those that fell into other coding categories), videos (added/deleted or substituted something for the video), and adaptation to specific content (specific content was deleted/modified/added to). The third coding dimension was ‘reason for adaptation’ which included the following categories: not enough time (changed/added/deleted content or amount of time because there wasn’t enough time), group attribute (attribute of the group including culture was a reason for change), number of participants (modified a part of the program due to size of the group such as low attendance), need to clarify material (modified something for clarification/emphasis), and unforeseen circumstances (technical difficulties or circumstances beyond facilitator’s control). The code ‘number of participants,’ which was not in the original Hill et al. (2007) coding system, was added because it was reported frequently by the SFP 10–14 facilitators in our study.

Moore et al. (2013) Coding Scheme

The first coding dimension was ‘fit,’ which included the following categories: philosophical (adaptation was made because the program activities or theory did not match or align well with the values or philosophy of the implementing organization or program participants; or adaptation was made to assure the program’s logic and content are delivered in a way that meets the needs or characteristics of the participants), and logistical (adaptation was made because the program’s design does not match or align well with the capacity or context of the implementing organization). The second coding dimension was ‘timing,’ which included the following categories: proactive (adaptation was made in anticipation of a potential barrier or problem prior to program implementation or fairly early in implementation), and reactive (adaptation was made during the course of program implementation, often due to unanticipated obstacles such as running out of time). The third coding dimension was ‘alignment with program theory,’ which included the following categories: positive (adaptation was aligned with the program’s theory or logic model), negative (adaptation was not aligned with the program’s theory or logic model), and neutral (adaptation was neither in concordance with nor a deviation from the program’s theory or logic model).
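One way to picture a single adaptation coded under both systems is as a record with six fields, three per coding system. The sketch below is illustrative only: the class and field names are hypothetical, the use of `None` for a dimension that could not be coded is an assumption, and the example record is invented rather than taken from the study data.

```python
from dataclasses import dataclass, fields
from enum import Enum
from typing import Optional


class Fit(Enum):
    PHILOSOPHICAL = "philosophical"
    LOGISTICAL = "logistical"


class Timing(Enum):
    PROACTIVE = "proactive"
    REACTIVE = "reactive"


class Alignment(Enum):
    POSITIVE = "positive"
    NEGATIVE = "negative"
    NEUTRAL = "neutral"


@dataclass(frozen=True)
class CodedAdaptation:
    """One facilitator-reported adaptation coded under both systems (hypothetical layout)."""

    description: str
    # Hill et al. (2007) dimensions, kept as free-text labels in this sketch.
    action: Optional[str]           # "addition", "change", or "deletion"
    adaptation_type: Optional[str]  # e.g., "games", "group process"
    reason: Optional[str]           # e.g., "not enough time"
    # Moore et al. (2013) dimensions.
    fit: Optional[Fit]
    timing: Optional[Timing]
    alignment: Optional[Alignment]

    def is_fully_coded(self) -> bool:
        """True when no dimension was left uncoded (None is an assumed marker)."""
        return all(getattr(self, f.name) is not None for f in fields(self))


# Invented example record, not taken from the study data.
example = CodedAdaptation(
    description="Skipped the closing game because the session ran long",
    action="deletion",
    adaptation_type="games",
    reason="not enough time",
    fit=Fit.LOGISTICAL,
    timing=Timing.REACTIVE,
    alignment=Alignment.NEGATIVE,
)
```

Keeping the two systems side by side in one record makes it straightforward to cross-tabulate any Hill et al. (2007) dimension against any Moore et al. (2013) dimension.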

Results

Aim 1: Comparison of Facilitator-Reported Adaptations to Original Studies

Hypothesis 1a

Our first hypothesis under Aim 1 was that the Pareto principle would hold true using the same coding scheme but different data collection methods and with a different sample. This hypothesis was supported: Fig. 1 shows that four types of adaptations (games, activities, random content, group process) accounted for 76 % of all adaptation types reported and that three reasons for adaptations (not enough time, group attribute, number of participants) accounted for 79 % of all adaptation reasons. The Hill et al. (2007) study found similar results with four types (games, specific content, random content, and activities) and four reasons (not enough time, clarify, group attribute, and disagree with content), each accounting for about 70 % of the reported adaptations.

Fig. 1 Pareto diagrams showing reported frequencies of each type (top) and reason (bottom) of program adaptation (left axis). Markers on the curved line indicate cumulative percentages (right axis). The dotted line indicates the cumulative frequency of the four most frequent types of (top) and three most frequent reasons for (bottom) adaptation

Hypothesis 1b

Our second hypothesis was that facilitators would report similar proportions of types and reasons for adaptation as found in Hill et al. (2007). This hypothesis was partially supported. Out of 154 reported adaptations, 130 provided enough information to be coded for addition/change/deletion, 134 for type, and 118 for reason (see Table 1). We found that most adaptations were either changes (45 %) or deletions (43 %), with a much smaller proportion coded as additions (9 %) or some combination of changes/additions/deletions (3 %). In contrast, Hill et al. (2007) found that most adaptations were deletions followed by about equal proportions of additions and changes.

For type of adaptation, adaptations to games (28 %) and activities (25 %) were the most common, followed by adaptations to random content (13 %) and to group process (10 %), time (6 %), videos (5 %), and specific content (5 %). The remaining five types of adaptations (i.e., personality/personal expertise, resource/added information, translation, additional session, rewards/prizes/celebrations) were reported two or fewer times. This is somewhat in line with Hill et al. (2007), who found that games (25 %), activities (12 %), and random content (15 %) were among the most commonly reported types.
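The cumulative share behind the Pareto result can be reproduced from the rounded type percentages given above. The following is a minimal sketch: the function name `pareto_table` is hypothetical, and because the inputs are the rounded percentages from the text rather than raw counts, totals can differ from the published figures by a point or so.

```python
from itertools import accumulate
from typing import Dict, List, Tuple

# Rounded percentages of adaptation types as reported in the text.
# The five rarely reported types are omitted, so the shares do not sum to 100.
type_pct: Dict[str, int] = {
    "games": 28,
    "activities": 25,
    "random content": 13,
    "group process": 10,
    "time": 6,
    "videos": 5,
    "specific content": 5,
}


def pareto_table(shares: Dict[str, int]) -> List[Tuple[str, int, int]]:
    """Rank categories by share (descending) and attach a running cumulative share."""
    ranked = sorted(shares.items(), key=lambda kv: kv[1], reverse=True)
    cumulative = accumulate(pct for _, pct in ranked)
    return [(name, pct, cum) for (name, pct), cum in zip(ranked, cumulative)]


table = pareto_table(type_pct)
top_four_cumulative = table[3][2]  # 28 + 25 + 13 + 10 = 76
```

The fourth row of the table gives the cumulative share of the four most frequent types, which matches the 76 % reported under Hypothesis 1a.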

With regard to reasons for adaptation, facilitators reported not enough time (37 %) and group attributes (32 %) as the two most common reasons for adaptations, followed by number of participants (9 %), need to clarify material (6 %), and unforeseen circumstances (4 %). The remaining eight adaptation reasons (i.e., group process/dynamics, lack of organization, clarification/emphasis, content didn’t make sense, adjusted for some reason, unforeseen circumstances, translation, participant suggestion) were reported only once or twice. This approximates the findings of Hill et al. (2007) that not enough time (23 %) and group attribute (14 %) were commonly reported reasons; number of participants was not reported in their facilitator interviews and therefore not included in their coding system.

In sum, although there was considerable overlap between the earlier and present studies in the most frequently reported codes, there were notable differences in their proportions.

Hypothesis 1c

Our final hypothesis was that the majority of adaptations would be logistical, reactive, and negatively aligned, as found by Moore et al. (2013). This hypothesis was strongly supported. Out of the 154 reported adaptations, 127 provided enough information to be coded for fit, 124 for timing, and 127 for alignment. In our study, 57 % were coded as adaptations made due to issues of logistical fit as compared to 42 % made due to issues of philosophical fit and 1 % made due to both. For timing, 54 % were coded as reactive and 46 % as proactive. Finally, for alignment, 61 % were coded as negatively aligned, 26 % were positively aligned, and 13 % were neutral. For each dimension, the proportions found in this study follow patterns similar to those in Moore et al. (2013), who also found that the majority of adaptations were made due to logistical issues, were reactive, and were negatively aligned with the program’s goals.

Aim 2: Intersection of Adaptation Codes

The second aim of this study was to examine the statistical association of the three Hill et al. (2007) dimensions with the three Moore et al. (2013) dimensions using chi-square analyses. These analyses were exploratory rather than hypothesis driven.
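For readers less familiar with the test, the sketch below computes a Pearson chi-square statistic and its degrees of freedom for a cross-tabulation of two coded dimensions. It is a generic illustration, not the authors' analysis script: the function name is hypothetical and the counts are invented, so the result does not correspond to any value reported in this paper.

```python
from typing import List, Tuple


def chi_square_independence(table: List[List[float]]) -> Tuple[float, int]:
    """Pearson chi-square statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    if 0 in row_totals or 0 in col_totals:
        raise ValueError("empty rows or columns make expected counts zero")
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of the two dimensions.
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(row_totals) - 1) * (len(col_totals) - 1)
    return stat, df


# Invented counts (not study data): rows are addition, change, deletion;
# columns are philosophical fit, logistical fit.
observed = [
    [8, 4],
    [30, 20],
    [12, 38],
]
stat, df = chi_square_independence(observed)
```

The p value is then obtained by comparing the statistic with a chi-square distribution with `df` degrees of freedom, typically through a statistics package.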

Figure 2 shows the intersections between the Hill et al. (2007) add/change/deletion codes and the Moore et al. (2013) fit, alignment, and timing dimensions. The top panel shows that additions and changes were most likely adaptations due to philosophical issues (72 and 58 %, respectively) and deletions were most likely due to logistical issues (77 %): χ²(df = 12, N = 154) = 127.52, p < .001. The middle panel shows that additions and changes were also most likely proactive adaptations (91 and 54 %, respectively) and deletions were most likely reactive (71 %): χ²(df = 8, N = 154) = 103.59, p < .001. Finally, the bottom panel shows that additions and changes were about equally likely to be positively (36 and 42 %, respectively) as negatively aligned (36 and 37 %, respectively), whereas deletions were most likely to be negatively aligned with the program’s theory and goals (82 %): χ²(df = 12, N = 154) = 130.75, p < .001.

Figure 3 shows the intersections between the Hill et al. (2007) type codes and the Moore et al. (2013) fit, alignment, and timing dimensions. As the top panel shows, we found that adaptations to games, activities, and random content were more likely to be due to logistical fit issues (54, 62, and 67 %, respectively), but nearly all (92 %) adaptations to group process were due to philosophical issues: χ²(df = 9, N = 102) = 24.08, p < .01. The middle panel shows that adaptations to games, activities, and random content were also more likely to be negatively aligned (65, 68, and 61 %, respectively), whereas adaptations to group process were more likely to be positively aligned (69 %): χ²(df = 9, N = 102) = 31.86, p < .001. As the bottom panel shows, there was no significant association between adaptation types and timing (i.e., proactive vs. reactive): χ²(df = 6, N = 102) = 4.16, ns.

Figure 4 shows the intersections between the Hill et al. (2007) reason codes and the Moore et al. (2013) fit, alignment, and timing dimensions. As the top panel shows, we found that adaptations due to insufficient time and number of participants were most likely due to logistical fit issues (93 and 91 %, respectively), and adaptations due to group attributes were most likely due to philosophical issues (74 %): χ²(df = 6, N = 93) = 54.62, p < .001. The middle panel shows that adaptations due to insufficient time were also most likely to be negative (66 %), and adaptations due to group attribute and number of participants were about equally likely to be negative as positive (53 and 55 % were negative, respectively): χ²(df = 6, N = 93) = 21.25, p < .01. Finally, the bottom panel shows that adaptations due to not enough time were almost exclusively reactive (98 %) and adaptations due to group attribute were most likely to be proactive (84 %): χ²(df = 4, N = 93) = 64.75, p < .001.

Discussion

Program adaptation is a reality in community-based implementations of EBPs, and it may also be essential to program success (August et al., 2010; Breitenstein, Fogg, Garvey, Hill, Resnick, & Gross, 2010a; Daele, Audenhove, Hermans, Bergh, & Broucke, 2014; Lara et al., 2011). Practitioners need practical, research-based guidance on how to implement programs in accordance with program models but still be flexible enough to meet the needs of diverse populations and settings (Bopp et al., 2013; Card, Solomon, & Cunningham, 2011; Harn, Parisi, & Stoolmiller, 2013). Thus, we must develop a more comprehensive understanding of the multidimensional nature of adaptations in real-world contexts, and be able to measure them reliably in order to determine their effects on outcomes (Lillehoj, Griffin, & Spoth, 2004; Pankratz, Jackson-Newsom, Giles, Ringwalt, Bliss, & Bell, 2006; Segrott et al., 2014).

Replication of Adaptation Coding Systems

Our goal was to expand the limited knowledge of adaptation through the replication of two established coding systems (Hill et al., 2007; Moore et al., 2013). Replicating the two systems provided insights about their reliability across different contexts, populations, and programs. In many ways our findings replicate those of the original studies, suggesting that the two coding systems are robust even when applied to self-reported data collected from community-based implementations in uncontrolled, non-research settings.

Consistent with Hill et al. (2007), we found a pattern of only a few types of adaptations and a few reasons comprising the bulk (over 70 %) of all reported adaptations. There was even considerable overlap as to which specific types and reasons were most commonly reported by SFP 10–14 facilitators. Two of the four most common types, games and activities, and two of the three most common reasons, not enough time and group attribute, were the same as in the original study (Hill et al., 2007). Similarly, the Moore et al. (2013) system had remarkably similar findings for the proportions of adaptations coded as positive (about a third) versus negative (about a half) versus neutral alignment (slightly less than one-fifth). For philosophical/logistical fit and proactive/reactive timing codes, the patterns (more adaptations due to logistical reasons than philosophical; more reactive adaptations than proactive) across both studies were similar but with slightly different proportions. The replication of the Moore et al. (2013) findings is especially compelling given the difference in samples. In the original study, Moore and colleagues applied their coding system to data from ten different youth and family-focused EBPs, one of which was SFP 10–14. Their system transported well to our sample, with data from only SFP 10–14. Furthermore, the proportions in each category were the same across the two samples, indicating that the system is especially robust across settings and programs.

Intersections Between Multiple Adaptation Dimensions

We also extended previous work by exploring intersections between the two coding systems. Even though these analyses were exploratory, our results revealed meaningful associations that can inform implementation theory and future research. We found that adaptations made to superficial aspects of the program, like games, were logistical in nature, reactive, and either neutral or negatively aligned with the program’s theory. However, this pattern was similar for adaptations to activities, which may also appear to be a superficial aspect of the program, but are in fact designed to stimulate learning through experience. Adaptations to group process were much more likely to be due to philosophical reasons as expected, but were about equally likely to be proactive as reactive.

Another important contribution of our findings is the information they provide about the global nature (i.e., timing, fit, alignment) of those few, most frequent program-specific types and reasons for adaptation to SFP 10–14. For example, we found that adaptations made due to insufficient time were almost exclusively rated as logistical, negatively aligned, and reactive in nature. We also found that deletions to curriculum, games, activities, and random content were almost exclusively due to logistical issues and negatively aligned with the program’s model. These findings could inform the development of efficient program monitoring tools like implementation checklists, which could be used to guide facilitator decisions regarding adaptation. Running out of time, which often leads to modifications to the program that involve deleting important curriculum content, was a significant issue in our study and aligns with previous findings (Hill et al., 2007; Miller-Day et al., 2013; Moore et al., 2013; Ozer et al., 2010). By noting this and other commonly reported types and reasons for adaptations, particularly those that are more likely to be made reactively and those negatively aligned with the program’s theory, facilitators can proactively brainstorm strategies to handle common issues in ways that adhere to the program model.

Evidence suggests that programs can have high levels of adaptation while maintaining high levels of fidelity (Hansen, 2013). Another strategy, therefore, would be for developers to use the results of our study to provide a menu of “built-in” adaptations to the program model that address the most commonly cited reasons for adaptations (e.g., if running out of time, eliminate this activity, but be sure to keep that one; Castro, Barrera, & Martinez, 2004). If program developers provide the option of several possible modifications, all of which align with the program model, facilitators can cover the core program components while flexibly addressing local needs (Card et al., 2011; Daele et al., 2014; Harn et al., 2013; Pettigrew et al., 2013). Overall, our findings should be of interest to program developers and could be used to improve the format, design, and delivery of the program to reduce the need for adaptations that deviate from the program’s theory.

Our findings do suggest that some adaptations may enhance program delivery in community-based settings, although additional studies linking adaptations to program outcomes are necessary to determine this empirically (Blakely et al., 1987; Hansen, 2013; Hill & Owens, 2013). For example, our results indicate that when facilitators modify group processes or make changes because of the number of participants in their group, the resulting adaptations appear to be thoughtful, proactively planned, and positively aligned with the program’s logic model. Theoretical and empirical evidence suggests that adaptations that increase participant engagement and improve program delivery are likely to improve participant outcomes (Berkel, Mauricio, Schoenfelder, & Sandler, 2011; Berkel et al., 2013; Hamre et al., 2010; Pettigrew et al., 2015). Again, future research is needed to determine whether these more positively aligned adaptations do in fact lead to increased participant engagement and thus improved behavioral outcomes.

Although our study examined adaptations of one specific family-based preventive intervention in community-based settings, we believe these findings also inform the implementation of behavioral health preventive interventions more generally. For example, we suspect these findings would hold true across similar universal, multi-session, multi-component programs, although previous work examining predictors of sustainment does suggest there may be important implementation differences to account for across program types (Cooper, Bumbarger, & Moore, 2015). Because of the apparent robustness of these coding schemes across settings (and across program types in Moore et al., 2013) in our study and previous ones (Hill et al., 2007; Moore et al., 2013), we believe that even if the proportions and intersections of adaptation types and reasons look different across program types, the validity of the coding schemes is likely to generalize.

Contributions, Limitations and Future Directions

To advance the study of adaptation in real-world implementations of EBPs, we must develop valid, reliable classification systems that can be used effectively and efficiently across a variety of settings (Breitenstein et al., 2010a, 2010b; Hansen, 2013; Hill & Owens, 2013). By replicating coding systems and demonstrating descriptive findings similar to those of the original studies, our study takes an important step toward this goal. Stirman et al. (2013), who developed a system for classifying modifications reported in published research articles describing health behavior interventions in community settings, are among the few researchers to apply a coding system across multiple studies. They found that their coding system could be reliably applied across studies. Future research could examine the consistency of the systems described here when used by observers (as in Stirman et al., 2013) or, conversely, the consistency of the Stirman et al. (2013) results across raters (e.g., using facilitator self-reports).

Our study is also one of the few adaptation studies to systematically examine multiple dimensions (e.g., types, reasons, timing) of adaptations and their intersections within the context of a widely disseminated, family-based substance use prevention program (Foxcroft, Ireland, Lister-Sharp, Lowe, & Breen, 2003). Most adaptation studies have not taken into account alignment with program theory or the timing of the adaptation, focusing instead on categorizing types of adaptations (Cohen et al., 2008; Lara et al., 2011; Miller-Day et al., 2013; Ozer et al., 2010). Hansen et al. (2013) included valence, which is similar to our conceptualization of alignment, and Miller-Day et al. (2013) included timing. However, the Moore et al. (2013) system is the only one to incorporate all three dimensions (fit, timing, and alignment) into a single coding system.

It is important to consider these contributions alongside the study’s limitations. Although we believe that facilitator self-reports are the most efficient strategy for collecting adaptation data in the real world, and we are confident these data can be reliably coded, some research suggests self-reported implementation data are biased (Hansen & McNeal, 1999; Hardeman et al., 2008). Facilitators who know they are being monitored may not fully and accurately report modifications to the program, although some studies have shown that facilitators report similar patterns of fidelity but at systematically higher levels than those observed by a third party (Segrott et al., 2014). Also, our self-report measure relied on facilitators’ perception of what a ‘substantial modification’ to the SFP 10–14 curriculum looked like, and on their ability to accurately report that information in an open-ended format. While the open-ended format allowed facilitators to provide a rich description of the adaptations, the depth of the descriptions varied (in some cases, substantially). It is likely that the reliability of the coding system is affected by this variability; that is, more detailed, better written descriptions are easier to code.

Related to limitations associated with self-report measures are limitations associated with missing data. Fifty-eight percent of the sample included a written description for at least one adaptation and was therefore included in the present analyses. For the 42 % who did not provide a written description, we have no way to know for sure whether this was because they in fact made no adaptations or because they simply omitted information about the adaptations they did make. One might expect that programs that deviated greatly from the curriculum would be less likely to admit this, and therefore, our sample of adaptations may be biased. However, in our experience, facilitators are quite comfortable with sharing the changes they make to the program. Those who make the most changes often write the most because they use it as an opportunity to express their concerns about the challenges they encountered with the curriculum, and, in some cases, use it as an opportunity to explain and rationalize poor program results. Another possible limitation is that repeat adaptations comprise 17.5 % of the 154 unique adaptations coded. Although these repetitions could be interpreted as inflating the total number of those types of and reasons for adaptations, we believe it was appropriate to count them as unique because they were reported for different 7-week programs.
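To make the scale of the repeat adaptations concrete, the reported figures can be converted into approximate counts. The short sketch below uses only the two numbers given above (154 coded adaptations, 17.5 % repeats); the variable names are illustrative, and the "remaining" count assumes, purely for illustration, that every repeat would be dropped entirely, which is not an analysis reported in the study.

```python
# Figures reported in the text: 154 coded adaptations, 17.5 % of them repeats.
total_coded = 154
repeat_share = 0.175

# Approximate number of repeat adaptations implied by the reported percentage.
repeat_count = round(total_coded * repeat_share)

# Illustrative assumption (not from the study): if every repeat were dropped,
# this many coded adaptations would remain.
non_repeat_count = total_coded - repeat_count

print(repeat_count, non_repeat_count)
```

Under these assumptions, roughly 27 of the 154 coded adaptations were repeats, so even the most conservative handling would leave about 127 adaptations in the analysis.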

In our study, we coded for the alignment of the adaptations with the program’s logic model, and therefore one could hypothesize that negatively aligned adaptations might result in poorer program outcomes. However, we did not directly examine the impact of adaptations on participant engagement or other outcomes, which is a limitation of our study and an important area for future research. Finally, although this study represents a replication of two adaptation coding schemes, and the similarities in findings across studies give us some confidence in their generalizability, further work is needed to validate them. For example, future studies should consider using these coding systems with both observation and self-report data to ensure that the dimensions accurately represent the modifications being made in the real world.

Conclusions

Our findings suggest that the Hill et al. (2007) and Moore et al. (2013) systems are robust ways of conceptualizing and categorizing adaptations. In addition to providing detailed information about the nature of adaptations, our findings on the associations between adaptation dimensions can be combined with future studies assessing the relationship between adaptations and program outcomes. Researchers, program developers, and policymakers must understand the issues faced by implementers on the front lines of prevention if we are going to support them. Studies of local adaptations like the present one should help stakeholders better understand the context in which programs are being implemented, guide development of new interventions, and inform issues related to program transferability, generalizability, and sustainability.

Notes

The Extension system, a part of the land-grant university present in each of the United States, is a disseminated network of state university personnel. The mission of Extension is to identify community needs and to support the development of community practices, informed by university research, to meet those needs. County-based personnel serve as the link between campus-based researchers and community agencies, translating research to practice on the one hand and informing the research agenda of the university on the other.

References

August, G. J., Gewirtz, A., & Realmuto, G. M. (2010). Moving the field of prevention from science to service: Integrating evidence-based preventive interventions into community practice through adapted and adaptive models. Applied and Preventive Psychology, 14(1–4), 72–85. doi:10.1016/j.appsy.2008.11.001.

Berkel, C., Mauricio, A. M., Schoenfelder, E., & Sandler, I. N. (2011). Putting the pieces together: An integrated model of program implementation. Prevention Science, 12(1), 23–33. doi:10.1007/s11121-010-0186-1.

Berkel, C., Murry, V. M., Roulston, K. J., & Brody, G. H. (2013). Understanding the art and science of implementation in the SAAF efficacy trial. Health Education, 113(4), 297–323. doi:10.1108/09654281311329240.

Blakely, C. H., Mayer, J. P., Gottschalk, R. G., Schmitt, N., Davidson, W. S., Roitman, D. B., & Emshoff, J. G. (1987). The fidelity-adaptation debate: Implications for the implementation of public sector social programs. American Journal of Community Psychology, 15(3), 253–268. doi:10.1007/BF00922697.

Bookstein, A. (1990). Informetric distributions, part 1: Unified overview. Journal of the American Society for Information Science, 41(5), 368–375. doi:10.1002/(SICI)1097-4571(199007)41:5<368:AID-ASI8>3.0.CO;2-C.

Bopp, M., Saunders, R. P., & Lattimore, D. (2013). The tug-of-war: Fidelity versus adaptation throughout the health promotion program life cycle. The Journal of Primary Prevention, 34(3), 193–207. doi:10.1007/s10935-013-0299-y.

Botvin, G. J., & Griffin, K. W. (2004). Life skills training: Empirical findings and future directions. Journal of Primary Prevention, 25(2), 211–232. doi:10.1023/B:JOPP.0000042391.58573.5b.

Breitenstein, S. M., Fogg, L., Garvey, C., Hill, C., Resnick, B., & Gross, D. (2010a). Measuring implementation fidelity in a community-based parenting intervention. Nursing Research, 59(3), 158–165. doi:10.1097/NNR.0b013e3181dbb2e2.

Breitenstein, S. M., Gross, D., Garvey, C. A., Hill, C., Fogg, L., & Resnick, B. (2010b). Implementation fidelity in community-based interventions. Research in Nursing & Health, 33(2), 164–173. doi:10.1002/nur.20373.

Byrnes, H. F., Miller, B. A., Aalborg, A. E., Plasencia, A. V., & Keagy, C. D. (2010). Implementation fidelity in adolescent family-based prevention programs: Relationship to family engagement. Health Education Research, 25(4), 531–541. doi:10.1093/her/cyq006.

Card, J. J., Solomon, J., & Cunningham, S. D. (2011). How to adapt effective programs for use in new contexts. Health Promotion Practice, 12(1), 25–35. doi:10.1177/1524839909348592.

Castro, F. G., Barrera, M., & Martinez, C. R. (2004). The cultural adaptation of prevention interventions: Resolving tensions between fidelity and fit. Prevention Science, 5(1), 41–45. doi:10.1023/B:PREV.0000013980.12412.cd.

Catalano, R. F., Fagan, A. A., Gavin, L. E., Greenberg, M. T., Irwin, C. E., Jr., Ross, D. A., & Shek, D. T. (2012). Worldwide application of prevention science in adolescent health. The Lancet, 379(9826), 1653–1664. doi:10.1016/S0140-6736(12)60238-4.

Cohen, D. J., Crabtree, B. F., Etz, R. S., Balasubramanian, B. A., Donahue, K. E., Leviton, L. C., et al. (2008). Fidelity versus flexibility: Translating evidence-based research into practice. American Journal of Preventive Medicine, 35(5, Supplement), S381–S389. doi:10.1016/j.amepre.2008.08.005.

Cooper, B. R., Bumbarger, B. K., & Moore, J. E. (2015). Sustaining evidence-based prevention programs: Correlates in a large-scale dissemination initiative. Prevention Science, 16, 145–157. doi:10.1007/s11121-013-0427-1.

Daele, T. V., Audenhove, C. V., Hermans, D., Bergh, O. V. D., & Broucke, S. V. D. (2014). Empowerment implementation: Enhancing fidelity and adaptation in a psycho-educational intervention. Health Promotion International, 29(2), 212–222. doi:10.1093/heapro/das070.

Durlak, J. A., & DuPre, E. P. (2008). Implementation matters: A review of research on the influence of implementation on program outcomes and the factors affecting implementation. American Journal of Community Psychology, 41(3–4), 327–350. doi:10.1007/s10464-008-9165-0.

Durlak, J. A., Weissberg, R. P., Dymnicki, A. B., Taylor, R. D., & Schellinger, K. B. (2011). The impact of enhancing students’ social and emotional learning: A meta-analysis of school-based universal interventions. Child Development, 82(1), 405–432. doi:10.1111/j.1467-8624.2010.01564.x.

Dusenbury, L., Brannigan, R., Hansen, W. B., Walsh, J., & Falco, M. (2005). Quality of implementation: Developing measures crucial to understanding the diffusion of preventive interventions. Health Education Research, 20(3), 308–313. doi:10.1093/her/cyg134.

Foxcroft, D. R., Ireland, D., Lister-Sharp, D. J., Lowe, G., & Breen, R. (2003). Longer-term primary prevention for alcohol misuse in young people: A systematic review. Addiction, 98(4), 397–411. doi:10.1046/j.1360-0443.2003.00355.x.

Glasgow, R. E., Lichtenstein, E., & Marcus, A. C. (2003). Why don’t we see more translation of health promotion research to practice? Rethinking the efficacy-to-effectiveness transition. American Journal of Public Health, 93(8), 1261–1267. Retrieved from http://ajph.aphapublications.org/doi/pdfplus/10.2105/AJPH.93.8.1261.

Hamre, B. K., Justice, L. M., Pianta, R. C., Kilday, C., Sweeney, B., Downer, J. T., & Leach, A. (2010). Implementation fidelity of MyTeachingPartner literacy and language activities: Association with preschoolers’ language and literacy growth. Early Childhood Research Quarterly, 25(3), 329–347. doi:10.1016/j.ecresq.2009.07.002.

Hansen, W. B. (2013). Introduction to the special issue on adaptation and fidelity. Health Education, 113(4), 260–263. doi:10.1108/09654281311329213.

Hansen, W. B., & McNeal, R. B. (1999). Drug education practice: Results of an observational study. Health Education Research, 14(1), 85–97. doi:10.1093/her/14.1.85.

Hansen, W. B., Pankratz, M. M., Dusenbury, L., Giles, S. M., Bishop, D. C., Albritton, J., et al. (2013). Styles of adaptation: The impact of frequency and valence of adaptation on preventing substance use. Health Education, 113(4), 345–363. doi:10.1108/09654281311329268.

Hardeman, W., Michie, S., Fanshawe, T., Prevost, A. T., Mcloughlin, K., & Kinmonth, A. L. (2008). Fidelity of delivery of a physical activity intervention: Predictors and consequences. Psychology & Health, 23(1), 11–24. doi:10.1080/08870440701615948.

Harn, B., Parisi, D., & Stoolmiller, M. (2013). Balancing fidelity with flexibility and fit: What do we really know about fidelity of implementation in schools? Exceptional Children, 79(2), 181–193. doi:10.1177/001440291307900204.

Hill, L. G., Maucione, K., & Hood, B. K. (2007). A focused approach to assessing program fidelity. Prevention Science, 8(1), 25–34. doi:10.1007/s11121-006-0051-4.

Hill, L. G., & Owens, R. W. (2013). Component analysis of adherence in a family intervention. Health Education, 113(4), 264–280. doi:10.1108/09654281311329222.

Lara, M., Bryant-Stephens, T., Damitz, M., Findley, S., Gavillán, J. G., Mitchell, H., et al. (2011). Balancing “fidelity” and community context in the adaptation of asthma evidence-based interventions in the “real world”. Health Promotion Practice, 12(6 suppl 1), 63S–72S. doi:10.1177/1524839911414888.

Lillehoj, C. J., Griffin, K. W., & Spoth, R. (2004). Program provider and observer ratings of school-based preventive intervention implementation: Agreement and relation to youth outcomes. Health Education & Behavior, 31(2), 242–257. doi:10.1177/1090198103260514.

Miller-Day, M., Pettigrew, J., Hecht, M. L., Shin, Y., Graham, J., & Krieger, J. (2013). How prevention curricula are taught under real-world conditions: Types of and reasons for teacher curriculum adaptations. Health Education, 113(4), 324–344. doi:10.1108/09654281311329259.

Moore, J. E., Bumbarger, B. K., & Cooper, B. R. (2013). Examining adaptations of evidence-based programs in natural contexts. The Journal of Primary Prevention, 34(3), 147–161. doi:10.1007/s10935-013-0303-6.

Ozer, E. J., Wanis, M. G., & Bazell, N. (2010). Diffusion of school-based prevention programs in two urban districts: Adaptations, rationales, and suggestions for change. Prevention Science, 11(1), 42–55. doi:10.1007/s11121-009-0148-7.

Pankratz, M., Jackson-Newsom, J., Giles, S., Ringwalt, C., Bliss, K., & Bell, M. (2006). Implementation fidelity in a teacher-led alcohol use prevention curriculum. Journal of Drug Education, 36(4), 317–333. doi:10.2190/H210-2N47-5X5T-21U4.

Pettigrew, J., Graham, J. W., Miller-Day, M., Hecht, M. L., Krieger, J. L., & Shin, Y. J. (2015). Adherence and delivery: Implementation quality and program outcomes for the seventh-grade keepin’it REAL program. Prevention Science, 16(1), 90–99. doi:10.1007/s11121-014-0459-1.

Pettigrew, J., Miller-Day, M., Shin, Y., Hecht, M. L., Krieger, J. L., & Graham, J. W. (2013). Describing teacher–student interactions: A qualitative assessment of teacher implementation of the 7th grade keepin’it REAL substance use intervention. American Journal of Community Psychology, 51(1–2), 43–56. doi:10.1007/s10464-012-9539-1.

Rohrbach, L. A., Grana, R., Sussman, S., & Valente, T. W. (2006). Type II translation: Transporting prevention interventions from research to real-world settings. Evaluation and the Health Professions, 29(3), 302–333. doi:10.1177/0163278706290408.

Segrott, J., Murphy, S., Morgan-Trimmer, S., Scourfield, J., Holliday, J., Thomas, C., et al. (2014). Programme fidelity in a large pragmatic trial: Findings from a process evaluation of the Strengthening Families 10–14 UK Programme (SFP 10–14 UK). Presented at the Society for Prevention Research.

Spoth, R. L., Randall, G. K., Trudeau, L., Shin, C., & Redmond, C. (2008). Substance use outcomes 5 1/2 years past baseline for partnership-based, family-school preventive interventions. Drug and Alcohol Dependence, 96(1–2), 57–68. doi:10.1016/j.drugalcdep.2008.01.023.

Spoth, R., Redmond, C., & Lepper, H. (1999a). Alcohol initiation outcomes of universal family-focused preventive interventions: One- and two-year follow-ups of a controlled study. Journal of Studies on Alcohol and Drugs, Supplement, 13, 103–111. doi:10.15288/jsas.1999.s13.103.

Spoth, R., Reyes, M. L., Redmond, C., & Shin, C. (1999b). Assessing a public health approach to delay onset and progression of adolescent substance use: Latent transition and log-linear analyses of longitudinal family preventive intervention outcomes. Journal of Consulting and Clinical Psychology, 67(5), 619–630. doi:10.1037/0022-006X.67.5.619.

Spoth, R., Rohrbach, L. A., Greenberg, M., Leaf, P., Brown, C. H., Fagan, A., et al. (2013). Addressing core challenges for the next generation of Type 2 translation research and systems: The translation science to population impact (TSci Impact) framework. Prevention Science, 14(4), 319–351. doi:10.1007/s11121-012-0362-6.

Stirman, S. W., Miller, C. J., Toder, K., & Calloway, A. (2013). Development of a framework and coding system for modifications and adaptations of evidence-based interventions. Implementation Science, 8(1), 65. doi:10.1186/1748-5908-8-65.

Veniegas, R. C., Kao, U. H., & Rosales, R. (2009). Adapting HIV prevention evidence-based interventions in practice settings: An interview study. Implementation Science: IS, 4(1), 76. doi:10.1186/1748-5908-4-76.

Wandersman, A., Duffy, J., Flaspohler, P., Noonan, R., Lubell, K., Stillman, L., et al. (2008). Bridging the gap between prevention research and practice: The interactive systems framework for dissemination and implementation. American Journal of Community Psychology, 41(3–4), 171–181.

Acknowledgments

Portions of this research were supported by two grants from the National Institute on Drug Abuse of the U.S. National Institutes of Health (R21 DA025139-01A1, R21 DA19758-01). Our thanks to the program providers and families who participated in this research and to our undergraduate and graduate research assistants.

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Cite this article

Cooper, B.R., Shrestha, G., Hyman, L. et al. Adaptations in a Community-Based Family Intervention: Replication of Two Coding Schemes. J Primary Prevent 37, 33–52 (2016). https://doi.org/10.1007/s10935-015-0413-4

DOI: https://doi.org/10.1007/s10935-015-0413-4