Abstract

Background: The literature examining the effects of workstation, eyewear and behavioral interventions on musculoskeletal and visual symptoms among computer users is large and heterogeneous. Methods: A systematic review of the literature used a best evidence synthesis approach to address the general question “Do office interventions among computer users have an effect on musculoskeletal or visual health?” This was followed by an evaluation of specific interventions. Results: The initial search identified 7313 articles which were reduced to 31 studies based on content and quality. Overall, a mixed level of evidence was observed for the general question. Moderate evidence was observed for: (1) no effect of workstation adjustment, (2) no effect of rest breaks and exercise and (3) positive effect of alternative pointing devices. For all other interventions mixed or insufficient evidence of effect was observed. Conclusion: Few high quality studies were found that examined the effects of interventions in the office on musculoskeletal or visual health.

Introduction

The most common occupational health problems among computer users are visual and musculoskeletal symptoms and disorders [12]. Health problems include eye discomfort, sustained pain in the neck and upper extremities and regional disorders, such as wrist tendonitis, epicondylitis and trapezius muscle strain. The workplace risk factors include hours of computer use, sustained awkward head and arm postures, poor lighting conditions, poor visual correction, and work organizational factors [5, 8, 13, 16–18, 20, 21, 23]. The Institute of Medicine recently called for more intervention research to provide scientifically credible evidence to practitioners who are responsible for risk reduction among computer users [19].

The literature describing interventions intended to prevent or alleviate visual and musculoskeletal symptoms and disorders among computer users has grown in recent years. A broad literature search for participatory ergonomic interventions revealed a twofold increase in the number of articles from 1990 to 2004 [6]. However, the studies conducted to evaluate the effects of workstation, eyewear and behavioral interventions on upper body disorders and visual symptoms are of mixed quality [14]. The methodological heterogeneity challenges researchers attempting to synthesize the evidence. The systematic review process provides a structured approach for evaluating the literature and synthesizing evidence regarding prevention strategies [7, 9, 24]. Furthermore, systematic reviews provide an opportunity to critically reflect on research methods and identify fruitful directions for future research.

The purpose of the systematic review was to identify published studies that evaluated the effects of workplace interventions on visual or upper body musculoskeletal symptoms or disorders among computer users. Studies which met a priori design and quality criteria were evaluated in detail and results were extracted and synthesized. The review included both primary and secondary prevention studies. Based on the evidence synthesis, recommendations were made for primary and secondary prevention and for future intervention studies.

Materials and methods

Primary and secondary intervention studies were systematically reviewed using a consensus process developed by Cochrane [4] and Slavin [24] and adapted by the review team. A review team of 9 researchers from North America (paper co-authors) was identified and invited to participate. Each member was selected based on his/her expertise in conducting epidemiologic or intervention studies related to musculoskeletal or visual disorders among computer users or his/her experience conducting systematic reviews. The expertise covered the fields of epidemiology, ergonomics, occupational medicine, safety engineering and optometry.

The basic steps of the systematic review were:

- Step 1 – Formulate research question and search terms
- Step 2 – Identify articles relevant to the research question expected to be found by the search
- Step 3 – International experts contacted to identify key articles
- Step 4 – Conduct literature search and pool articles with those submitted by experts
- Step 5 – Level 1 review: Select articles for inclusion based on relevance to the review question and quality using 11 screening criteria
- Step 6 – Level 2 review: Assess quality of relevant articles with scoring on 19 criteria
- Step 7 – Level 3 review: Extract data from relevant articles for summary tables
- Step 8 – Evidence synthesis

The rules or actions for each review step were established through a consensus process. For step 1, the review team reached consensus on the primary question “Do office interventions among computer users have an effect on musculoskeletal or visual health?” The review team also considered studies focused on the effects of specific intervention types (e.g., training, alternative keyboards, glasses). Three terms from the primary question, “Office”, “Intervention” and “Health”, were defined and used to develop literature search criteria.

“Office” was defined according to work setting and technology. The definition was limited to traditional office settings where computers (either desktop or laptop) were used to process information. Studies set in non-traditional offices (such as airports, rent-an-office facilities, home offices or the traveling offices of salespeople) or in settings where the work primarily involved manufacturing or material handling were excluded. Laboratory-based experimental studies were also excluded.

“Intervention” was broadly defined by using the traditional hazard control tiers of engineering controls, administrative controls and personal protective equipment.

“Health” was defined broadly to include musculoskeletal and visual symptoms as well as clinical musculoskeletal and visual disorders or diagnoses. Visual diagnoses included binocular disorders, accommodative disorders and conditions related to dry eye if specific to computer use in office environments. Visual diagnoses excluded were cataracts, retinal disorders (e.g., diabetic retinopathy), infection (e.g., conjunctivitis) and inflammation (e.g., uveitis). Studies which reported only health outcome data from OSHA 200/300 logs or workers’ compensation records were excluded. While muscle loading research (e.g., electromyography) was recognized as defining a plausible pathway, field studies with only muscle loading as the outcome were excluded.

The review was limited to articles published or in press in the English language, peer-reviewed, scientific literature from 1980 forward. This year corresponds to the time when computers began to be used widely in office settings. Book chapters and conference proceedings were excluded. The primary reasons for the limitations were language proficiency of the team and time to complete the review steps.

Literature search

Based on the research question, literature search terms were identified and combined to search the following databases: Medline, Embase, CINAHL and Academic Source Premier. The search terms fell into three broad categories: intervention, work setting and health outcomes (Table 1). Overall the search categories were chosen to be inclusive. However, within the work settings category some terms were exclusive (e.g., non-office based). The specific disease terms: cataract, conjunctivitis, uveitis, diabetic retinopathy, neoplasms and the term muscle loading were used to exclude articles. The search strategy combined the three categories using the AND Boolean operator, while the terms within each category were combined with the Boolean OR operator.
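The combination logic described above can be sketched in a few lines of Python. This is an illustration only: the term lists are placeholders (the actual terms are in Table 1), `build_query` is a hypothetical helper, and expressing the exclusion terms as a `NOT` clause is an assumption, since the text does not state how exclusions were entered in each database.

```python
def build_query(categories, exclusions=()):
    """OR the terms within each category, AND the categories together,
    then (by assumption) exclude records matching any exclusion term."""
    groups = [
        "(" + " OR ".join(f'"{term}"' for term in terms) + ")"
        for terms in categories.values()
    ]
    query = " AND ".join(groups)
    if exclusions:
        query += " NOT (" + " OR ".join(f'"{term}"' for term in exclusions) + ")"
    return query


# Placeholder terms, not the review's actual search terms.
categories = {
    "intervention": ["intervention", "ergonomics training"],
    "work_setting": ["office", "computer user"],
    "health_outcome": ["musculoskeletal", "eye strain"],
}
exclusions = ["cataract", "muscle loading"]

query = build_query(categories, exclusions)
print(query)
```

Keeping each category as its own list makes it easy to widen one category, as was done for the work setting terms, without touching the others.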

A list of 28 relevant articles was identified by the review team prior to the literature search to test the sensitivity of the literature search procedure. A preliminary literature search missed 13 of the 28 articles due primarily to the absence of key words in the ‘work setting’ category (Table 1). The search was expanded to include the terms ‘computer’ and ‘computer user.’ The second search captured 25 of the 28 key articles identified by the team and was considered evidence of adequate search sensitivity.
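As a worked check of the sensitivity test, the proportion of key articles captured by each search can be computed directly from the counts reported above. The function name `search_sensitivity` is illustrative and does not come from the review.

```python
def search_sensitivity(captured, total_key_articles):
    """Fraction of pre-identified key articles retrieved by a search."""
    return captured / total_key_articles


KEY_ARTICLES = 28

# Preliminary search missed 13 of the 28 key articles.
preliminary = search_sensitivity(KEY_ARTICLES - 13, KEY_ARTICLES)
# Expanded search captured 25 of the 28 key articles.
expanded = search_sensitivity(25, KEY_ARTICLES)

print(f"preliminary: {preliminary:.1%}, expanded: {expanded:.1%}")
```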

International experts were identified and asked to submit relevant published articles or articles in press. The request also included articles accepted for review and from the grey literature (e.g., technical reports, book chapters, theses or dissertations, and conference presentations). The purpose for obtaining the grey literature was to review the bibliographies for relevant peer-reviewed articles. Twenty-eight articles identified by four outside experts were added to the articles reviewed after duplicate references were removed.

Level 1: Selection for relevance

The broad search strategy captured many non-relevant studies and the Level 1 review was designed to exclude them. The Level 1 review required reading of the article title and abstract and, if necessary, the full article. For efficiency, the Level 1 review was divided into two steps, Level 1a and Level 1b. Articles were screened for relevance at Level 1a using three criteria: 1) an intervention occurred, 2) the study took place in an office setting, and 3) the intervention was related to computer use. Articles not meeting Level 1a criteria were excluded from further review. The Level 1b review was then used to screen for 8 article characteristics or qualities (Table 2). One research team member reviewed each article at Level 1a, while two members reviewed and reached consensus on each article at Level 1b. Articles not meeting Level 1b criteria were excluded from further review.

Since the Level 1a review was done by a single reviewer, biases could be introduced. Therefore, a quality control (QC) check of the Level 1a screen was done by an independent reviewer (QC reviewer) who had methodological and content expertise. Ten studies were randomly chosen from each of the eight reviewers and evaluated by the QC reviewer; five of the ten were among those that had been accepted by the reviewer and the remaining five had been excluded. The QC reviewer agreed with the reviewers’ classifications of 70 of 80 articles. The QC reviewer identified four articles for inclusion that the review team had excluded. Three of these articles [15, 25, 26] would have been excluded at Level 1a if the QC reviewer had been involved in group discussions regarding interpretation and application of the Level 1a screening criteria. The fourth article [22] was excluded at Level 1b. The QC reviewer also identified six articles for exclusion that the review team had included after Level 1a screening. Of these six articles, five were excluded by the review team at Level 1b. Overall, we considered the quality of the Level 1a review process acceptable.

Level 2: Quality assessment

Articles that passed the Level 1 review were scored for quality in the Level 2 review. The team developed a list of 19 methodological criteria (Table 3) to assess article quality. Each article was independently reviewed by two team members and rated as either meeting or not meeting each of these criteria. To reduce bias, review pairs were rotated randomly with at least two other team members. The reviewer pairs were required to reach consensus on quality criteria. Team members did not review articles they had consulted on, authored or co-authored.

Reviewer pair disagreements were identified and reviewers discussed their differences to reach resolution. In cases where agreement could not be reached, a third reviewer was consulted to assist in obtaining consensus.

Summary quality scores for each article were based on a weighted sum score of the 19 criteria. The weighting values assigned to each of the 19 criteria ranged from “somewhat important” (1) to “very important” (3) based on an a priori group consensus process (see Table 4). The highest possible weighted score for an article was 43. Each article received a quality ranking by dividing the weighted score by 43 and multiplying by 100. For evidence synthesis, articles were grouped into high (86% to 100%), medium (50% to 85%) and low (0% to 49%) quality categories. The categories were determined by team consensus with reference to review methodology literature [1, 4, 24].
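The scoring arithmetic can be illustrated with a short Python sketch. The weights below are hypothetical: they were chosen only so that 19 weights between 1 and 3 sum to 43, and the actual per-criterion weights are those in Table 4. The function names are likewise illustrative.

```python
# Hypothetical weights: 7 criteria at 3, 10 at 2, 2 at 1 (sum = 43).
WEIGHTS = [3] * 7 + [2] * 10 + [1] * 2


def quality_ranking(criteria_met, weights=WEIGHTS):
    """Weighted score of the criteria met, as a percentage of the maximum."""
    if len(criteria_met) != len(weights):
        raise ValueError("one met/not-met rating is needed per criterion")
    score = sum(w for met, w in zip(criteria_met, weights) if met)
    return 100.0 * score / sum(weights)


def quality_category(ranking):
    """Map a percentage ranking to the high/medium/low groups in the text."""
    if ranking >= 86:
        return "high"
    if ranking >= 50:
        return "medium"
    return "low"


# A study failing two weight-3 criteria scores 37 of 43 (about 86%).
study_a = [False, False] + [True] * 17
# A study failing three weight-3 criteria scores 34 of 43 (about 79%).
study_b = [False, False, False] + [True] * 16

print(quality_category(quality_ranking(study_a)))
print(quality_category(quality_ranking(study_b)))
```

Because scores are whole numbers out of 43, no achievable ranking falls between 85% and 86% or between 49% and 50%, so the simple thresholds above reproduce the published category boundaries.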

Level 3: Data extraction

The data extracted from each study were used to build summary tables to enable evidence synthesis and the development of overall conclusions. Data extraction for each paper was performed independently by two reviewers and, again, reviewer pairs were rotated to reduce bias. Team members did not review articles they consulted on, authored or co-authored. Differences between reviewers were identified and resolved by consensus. Standardized data extraction forms were developed by the review team based on existing forms and data extraction procedures [9]. Reviewer pairs extracted data on: study design, intervention, musculoskeletal and visual outcome measures, statistical analyses and study findings (see Table 5). During data extraction, reviewers also re-evaluated the Level 2 methodological quality ratings. Changes made to the Level 2 quality ratings required approval by the entire review team.

Evidence synthesis

The evidence synthesis was based on a best evidence synthesis approach [7, 9, 24]. Studies reviewed were heterogeneous: they came from different countries; employed different kinds of interventions; used different study designs; focused on different health outcomes (visual or musculoskeletal); used different health measures; and conducted substantially different kinds of statistical analyses. Such a high level of heterogeneity required a synthesis approach most commonly associated with Slavin and known as “best evidence synthesis” [24]. The team's approach was adapted from systematic reviews of workplace-based return to work interventions [9] and prevention incentives of insurance and regulatory mechanisms for occupational health and safety [27].

The best evidence synthesis approach considers article quality, quantity of evidence and the consistency of the findings among the articles (Table 6) to classify the evidence as strong, moderate, mixed, partial or insufficient [24]. The synthesis approach first answered the general question about all office ergonomic interventions and then, in a series of post-hoc evaluations, summarized the evidence for each specific intervention category (e.g., VDT glasses). Where specific data values were not reported, the team abstracted data from figures. When multiple findings were reported, the team indicated whether appropriate multiple comparisons were considered. Finally, both significant and non-significant trends were considered and reported. Initially, the plan was to calculate effect sizes for each article in order to apply a uniform method to evaluating the strength of associations. However, this plan was abandoned due to the heterogeneity of outcome measures and study methods and the failure of many articles to present the data necessary to calculate effect sizes. Synthesis conclusions were accepted by review team consensus. The review team classified a study with any positive results and no negative results as a positive effect study. That is, a study with both positive effects and no effects (i.e., no differences between groups) was classified as a positive effect study. A study with only no effects was classified as a no effect study.
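The study-level classification rule stated at the end of this paragraph can be written as a small Python function. This is a sketch under stated assumptions: the labels `"positive"`, `"none"` and `"negative"` are illustrative encodings of individual findings, and the handling of a study with any negative finding is an assumption, because the text defines only the positive effect and no effect cases.

```python
ALLOWED = {"positive", "none", "negative"}


def classify_study(findings):
    """Classify a study from the findings of its individual comparisons."""
    observed = set(findings)
    if not observed:
        raise ValueError("a study needs at least one finding")
    if not observed <= ALLOWED:
        raise ValueError(f"unknown finding labels: {observed - ALLOWED}")
    if "negative" in observed:
        # Assumption: the text gives no rule for studies with negative results.
        return "not covered by the stated rule"
    if "positive" in observed:
        # Any positive result and no negative results.
        return "positive effect"
    # Only no-effect findings.
    return "no effect"


print(classify_study(["positive", "none", "none"]))
print(classify_study(["none", "none"]))
```

Note that this rule favors a positive classification: a single positive finding among many null findings is enough to label the study as a positive effect study.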

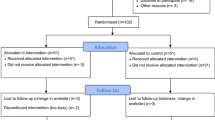

Fig. 1 Flowchart of the systematic review process up to data extraction, with tracking of the number of articles associated with each step. While 33 articles were moved forward, the two Aaras articles (1999 and 2002) were combined since the second paper was determined to be supplemental to the primary paper. The Martin, 2004 and Gatty, 2003 articles were combined since the papers reported results from the same study. Thus, the count of 31 reflects studies, not articles.

Results

Literature search and selection for relevance

The literature search, using the terms in Table 1, identified 7313 articles after the results from the different databases were merged and duplicates were removed (Fig. 1). The Level 1a review resulted in exclusion of 6948 articles. The remaining 365 articles were then subjected to Level 1b review. The team excluded 332 articles, leaving 33 to be reviewed for methodological quality at Level 2. Four of these articles, Gatty [51] and Martin et al. [50], as well as Aaras, 1999 and Aaras, 2002, were treated as two studies because each pair of articles reported findings from the same study. This left 31 studies for Level 2 review. The 31 studies were each reviewed by two reviewers using the quality assessment questions in Table 3. The team completed data extraction for all studies evaluated for quality to give a complete picture of the state of the literature.
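The article flow reported above can be verified with simple arithmetic. The variable names in this sketch are illustrative; the counts are those given in the text.

```python
identified = 7313        # articles after merging databases and removing duplicates
excluded_level_1a = 6948
excluded_level_1b = 332
merged_pairs = 2         # two pairs of articles each reported a single study

after_level_1a = identified - excluded_level_1a
articles_to_level_2 = after_level_1a - excluded_level_1b
# Each merged pair turns two articles into one study.
studies_to_level_2 = articles_to_level_2 - merged_pairs

print(after_level_1a, articles_to_level_2, studies_to_level_2)
```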

Methodological quality assessment

The 31 studies that met the relevance criteria were assessed for methodological quality and assigned a quality ranking score. The studies were placed into three quality categories: high (86–100%), medium (50–85%) and low (0–49%).

Nine studies were classified as meeting criteria for high quality [32, 35, 39, 42, 48, 57, 58, 60, 61]. All but one [32] were randomized trials. Despite classification as high quality, most of these studies did not state a primary hypothesis (7 of 9), describe potential contamination between groups (4 of 9) or compare the differences between those who remained in the study and those who dropped out (6 of 9).

The remainder of the studies (22) were classified as medium quality. The most common differences between medium and high quality studies were related to random allocation (15 of 22), descriptions of inclusion/exclusion criteria (14 of 22), reporting a participation rate over 40% (6 of 22), reporting loss to follow up (15 of 22) and adjustment for the effect of covariates/confounders (4 of 22). The medium quality studies did not score as well as the high quality studies on the criteria: having a follow up longer than 1 month; describing baseline characteristics by experimental group; reporting loss to follow up; confirming the intervention took place; and describing the effect of the intervention on an exposure parameter (e.g. changes in posture). The medium quality studies generally scored well on the criteria: stating the research question, having concurrent comparison groups, presenting baseline characteristics, describing the intervention implementation, ascertaining covariates/confounders and describing statistical methods.

No studies were classified as low quality. Having no low quality studies was not surprising given that the Level 1b review included some quality criteria which resulted in the lower quality studies not progressing past Level 1.

Data extraction and evidence synthesis

Data were extracted for synthesis from the 31 studies rated for methodological quality. Data extraction results are presented by 15 consensus intervention categories. The 15 intervention categories and a detailed description of the interventions for each study are presented in Table 7. Additional data from the studies reviewed can be found in a detailed report of this review (http://www.iwh.on.ca/research/sr-wie.php).

The most common interventions were training (9 of 31) and workstation adjustments (6 of 31). Studies that added new equipment such as arm supports, viewing screen filters, keyboards or pointing devices were not considered workstation adjustments. In many studies, participants receiving the intervention were compared to members of a control group who received either basic ergonomic training or a handout. For example, most of the workstation adjustment intervention groups also received an ergonomic training, while the control groups received just the ergonomic training. Fewer studies (two each) reported on the effectiveness of lenses/VDT glasses, arm supports, eye drops, keyboards, and screen filters. The remaining interventions were evaluated by single studies (see Table 7). Importantly, substantial heterogeneity was observed within the intervention categories for the specific equipment employed, training methods used, workstation adjustments made and intervention protocols.

Some of the study characteristics that were considered important when examining comparability and generalizability are shown in Table 8. The studies originated in various continents: Europe (n=9), Asia (n=2), Australia (n=1), and North America (n=19). A variety of industries and job titles were represented with no one industry or job title being dominant across the studies. However, most of the study participants’ primary job duties involved data entry.

The study designs were predominantly randomized trials (n=23); eight (of 9) high quality studies and 15 (of 22) medium quality studies were randomized trials. The sample sizes tended to be small but varied from 15 [52] to 577 [54]. The length of observation varied from 5 days [59] to 18 months [36]. The level of statistical analysis varied across studies; 12 studies (8 high quality and 4 medium quality) adjusted for one or more covariates in the final analysis.

A summary of the intervention effects is presented in Table 9. The Brisson et al. [35], Mekhora et al. [53], and Horgren et al. [46] studies were removed from consideration in the evidence synthesis because they analyzed only within-group differences, not between-group differences. The review team did not find a negative or adverse effect for any intervention. The evidence is summarized by intervention category.

Exercise training

One medium quality study evaluated exercise training administered by a neck school approach [47]. No effect on musculoskeletal outcomes was found. There was insufficient evidence to determine whether exercise training has an effect on musculoskeletal outcomes since there was only one study.

Stress management training

One medium quality study found no effect on musculoskeletal outcomes for a stress management training intervention [39]. There was insufficient evidence to determine whether stress management training has an effect on musculoskeletal outcomes since there was only one study.

Ergonomics training

Four studies examined ergonomics training: one of high quality [32] and three of medium quality [34, 43, 55]. The high quality study and one medium quality study [43] found no effect, while the other two medium quality studies [34, 55] found both positive and no effects. The four studies implemented different types of training, ranging from a one hour lecture on ergonomics to multiple participatory training sessions totaling four hours. The studies assessed different musculoskeletal endpoints. The four studies provided mixed evidence of the effect of ergonomics training on musculoskeletal outcomes. One medium quality study [55] examined visual outcomes. There was insufficient evidence with this single study to determine whether ergonomics training has an effect on visual outcomes.

Ergonomics training and workstation adjustment

One medium quality study examined training plus workstation adjustments and found a positive effect on musculoskeletal outcomes [50]. There was insufficient evidence to conclude that training and workstation adjustments together have an effect on musculoskeletal outcomes with only one study.

New chair

One high quality study [32] found a positive effect on musculoskeletal outcomes with the implementation of a highly adjustable new chair with ergonomics and chair training. There was insufficient evidence to conclude that a new chair with training has an effect on musculoskeletal outcomes with only one study.

Workstation adjustments

Two high quality [42, 48] and two medium quality studies [38, 56] examined the effects of a variety of workstation adjustments. The control groups received ergonomics training or no intervention. None of the studies found an effect of workstation adjustments on musculoskeletal or visual outcomes. The studies provide moderate evidence for no effect of workstation adjustments on musculoskeletal outcomes. Two medium quality studies [48, 56] examined visual outcomes and found no effect on visual/eye discomfort. There was moderate evidence that workstation adjustments have no effect on visual outcomes.

Lighting, workstation adjustment and VDT glasses

One medium quality study evaluated the effects of new lighting, workstation adjustment and VDT glasses [30] and found both positive and no effects. There was insufficient evidence to conclude that lighting, workstation adjustment and VDT glasses have an effect on musculoskeletal or visual outcomes with only one study.

Arm supports

There were two studies on arm supports: the one of high quality [58] found positive effects and the one of medium quality [49] found no effects on musculoskeletal outcomes. These studies provide mixed evidence that arm supports have an effect on musculoskeletal outcomes.

Alternative pointing devices

Two studies examined the effect of alternative pointing devices on musculoskeletal outcomes in comparison to a conventional mouse. The one high quality study [58] found both positive effects and no effects for a trackball compared to a conventional mouse. The one medium quality study [28] found positive effects on musculoskeletal outcomes for an alternative mouse compared to a conventional mouse. These studies provide moderate evidence that alternative pointing devices have a positive effect on musculoskeletal outcomes.

Alternative keyboards

Two high quality studies examined the effect of alternative keyboards on musculoskeletal outcomes [57, 60]. Tittiranonda et al. [60] found positive effects for one of three alternative geometry keyboards when compared to a conventional keyboard. Rempel et al. [57] found positive effects for a keyboard with a modified keyswitch force displacement profile. Although positive effects were found in both studies, the Tittiranonda study found no effects for two keyboards in independent comparisons with a placebo keyboard. Thus, two alternative keyboards in two different studies were shown to have positive effects, and two keyboards from a single study were shown to have no effect. As a result, the team considered these results to represent a mixed level of evidence that alternative keyboards have an effect on musculoskeletal outcomes.

Rest breaks

Four studies, one of high quality [61] and three of medium quality [41, 44, 52], evaluated rest breaks. The high quality study and one medium quality study [44] found no effect on musculoskeletal outcomes. The two other medium quality studies [41, 52] found both positive and no effects, depending on the time between rest breaks and the musculoskeletal outcome examined. There was mixed evidence about the effect of breaks on musculoskeletal outcomes. Evidence was insufficient to conclude that rest breaks have an effect on visual outcomes, with only one study examining this association [41] and finding both positive and no effects.

Rest breaks and exercise

Two studies, one of high quality and one of medium quality, examined the effects of rest breaks with stretching exercises [44, 61]. Neither study reported an effect on musculoskeletal outcomes. There was moderate evidence that rest breaks together with stretching exercises have no effect on musculoskeletal outcomes.

New office

A single medium quality study evaluated a new office as an intervention [54]. The intervention included a new office, new lighting, new equipment and ergonomics training. There was insufficient evidence to conclude that a new office has an effect on musculoskeletal outcomes since there was only one study.

Screen filters

Two medium quality studies examined the effects of screen filters; one [45] found a positive effect and one [40] found no effect on musculoskeletal and visual outcomes. There was mixed evidence that screen filters have an effect on musculoskeletal or visual outcomes.

VDT glasses

One medium quality study examined the effects of VDT glasses on musculoskeletal and visual outcomes [37]. The study compared VDT glasses to usual glasses. There was insufficient evidence to conclude that VDT glasses have an effect on musculoskeletal or visual outcomes when compared to usual glasses since there was only one study.

Lens types

One medium quality study evaluated the effects of lens type on musculoskeletal and visual outcomes [36]. One occupational lens design was compared to another occupational lens design. The single study provided insufficient evidence to conclude that a specific lens design has an effect on musculoskeletal or visual outcomes when compared to another lens type.

Herbal eye drops

One medium quality study evaluated the effect of herbal eye drops in comparison to two other types of eye drops [33]. There was insufficient evidence to conclude that herbal eye drops have an effect on visual outcomes when compared to conventional eye drops since there was only one study.

OptiZen™ eye drops

One medium quality study evaluated the effect of OptiZen™ eye drops in comparison to another type of eye drop [59]. The single study provided insufficient evidence to conclude that OptiZen™ eye drops have an effect on visual outcomes compared to conventional eye drops.

Discussion

This systematic review sought to answer the question, “Do office interventions among computer users have an effect on musculoskeletal or visual health status?” and to consider the evidence for effectiveness of specific intervention categories. One major observation was that the office ergonomic intervention literature is heterogeneous in the interventions tested, the study designs employed, and the outcomes measured. Across the 31 studies evaluated in detail, the results suggested a mixed level of evidence for the effects of ergonomic interventions on musculoskeletal and visual outcomes. A mixed level of evidence means there were medium to high quality studies with inconsistent findings. The mixed level of evidence finding may be due to the broad range of interventions included in the review. Importantly, no evidence was found that any office ergonomic intervention had a negative or deleterious effect on musculoskeletal or visual health. The above conclusions do not change when considering only high quality studies.

When specific intervention categories were examined, no category showed a strong level of evidence that the intervention improved musculoskeletal or visual health outcomes. The breadth of phrases like “workstation adjustment” and “office equipment”, which aggregate diverse interventions, coupled with the variety of operational definitions of musculoskeletal and visual outcome measures, may preclude strong conclusions.

A moderate level of evidence was found for three intervention categories.

- Moderate evidence was found that workstation adjustments as implemented in the studies reviewed have NO effect on musculoskeletal or visual outcomes.
- Moderate evidence was found that rest breaks together with exercise during the breaks have NO effect on musculoskeletal outcomes.
- Moderate evidence was found that alternative pointing devices have a positive effect on musculoskeletal outcomes.

It should be noted that the workstation adjustment interventions were usually compared to ergonomic training. Based on these findings, care should be taken in making any generalizations about a positive role for either workstation adjustments or rest breaks together with exercises in improving musculoskeletal or visual health. However, the results should not discourage researchers and practitioners from continuing to develop and test new workstation adjustments or rest break patterns in combination with exercises.

While moderate evidence was found that alternative pointing devices improved musculoskeletal health, the team considered the devices studied (a trackball and the Anir (3M) mouse) to be very different input devices. Although both were designed to reduce wrist pronation, Rempel et al. [58] found positive effects only for the left side of the body. Given the predominance of right-handedness, the team gave these health effects less weight than it would have if the effects had occurred on the right side of the body. Clearly, more high quality studies of alternative pointing devices are required.

Considerable diversity of office ergonomic interventions and of musculoskeletal and visual endpoints was observed in the literature. The range of workplaces, countries and industries where the interventions were implemented was also diverse. The team found a mixed level of evidence (moderate and high quality studies with inconsistent findings) for a number of interventions.

- Evidence was mixed that ergonomics training, arm supports, alternative keyboards, and rest breaks have a positive effect on musculoskeletal outcomes.
- Evidence was mixed that viewing screen filters have a positive effect on visual outcomes.

The team considered the mixed evidence group of intervention categories to be of particular importance to researchers, funding agencies, organized labor, and employers participating in research. For several intervention categories, one or two additional high quality studies might allow for more definitive conclusions.

Finally, many office ergonomic interventions were unique (e.g., new chair) or a unique combination of interventions (e.g., lighting, workstation adjustment, VDT glasses) and were evaluated in just one study. With single studies, evidence was insufficient to make conclusions about intervention effectiveness.

- Evidence was insufficient to conclude that exercise training, stress management training, ergonomics training together with workstation adjustment, new chair, lighting plus workstation adjustment plus VDT glasses, new office, lens type or VDT glasses had effects on musculoskeletal outcomes.
- Evidence was insufficient to conclude that ergonomics training, rest breaks, lighting plus workstation adjustment plus VDT glasses, lens type, VDT glasses, herbal eye drops or OptiZen™ eye drops had an effect on visual outcomes.

Many interventions could provide fertile ground for additional high quality studies. Researchers, funders, employers and labor should attend to the effects (Table 9) and study quality (Table 3) when determining interest and investment in research topics.

The high quality studies reviewed shared common threads regardless of the intervention or outcome. All had concurrent comparison groups and all but one were randomized trials. Each was designed to limit threats to internal and external validity. However, dissimilar musculoskeletal and visual outcomes make it difficult to integrate findings and calculate effect sizes for the interventions. For musculoskeletal outcomes, the review group recommends that studies be 4 to 12 months in duration to allow the persistence of effects to be examined. For visual outcomes, the time required to observe effects is uncertain. It may be that short duration studies are adequate to determine long-term health effects. When multiple changes are introduced with an intervention, it is a challenge to identify the component of the intervention that is driving the observed effects. For example, simultaneous implementation of lighting, workstation adjustment, and use of VDT glasses [30] does not allow determination of which intervention component contributes to the symptom improvement. One potential action that stakeholders could take is to convene a conference or commission a series of position papers advocating standards for office ergonomic intervention research.

The review team considers it important to continue to develop the office ergonomics systematic review literature in several ways. First, non-English language articles and the grey literature may be valuable to the process. Second, contacting the authors of published articles to clarify findings may also be useful. When possible, studies where between group comparisons were not made should be re-analyzed to provide evidence that can be included in data extraction. In an effort to calculate effect sizes, necessary data not provided in the articles should be obtained from researchers, if possible.
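To illustrate why missing summary data matter for effect size calculation, the following is a minimal sketch of one common effect size, the standardized mean difference (Cohen's d with a pooled standard deviation). The review does not state which effect size measure it would use, so the choice of Cohen's d, the function name `cohens_d`, and all numbers below are assumptions for illustration only. The sketch shows that each group's mean, standard deviation and sample size are all required.

```python
import math


def cohens_d(mean_i, sd_i, n_i, mean_c, sd_c, n_c):
    """Standardized mean difference between intervention (i) and comparison (c).

    Assumption: Cohen's d with a pooled standard deviation; the review itself
    does not specify an effect size measure.
    """
    # Pooled variance weights each group's variance by its degrees of freedom.
    pooled_var = ((n_i - 1) * sd_i ** 2 + (n_c - 1) * sd_c ** 2) / (n_i + n_c - 2)
    return (mean_i - mean_c) / math.sqrt(pooled_var)


# Hypothetical symptom scores (lower is better); not data from any reviewed study.
d = cohens_d(mean_i=2.0, sd_i=1.0, n_i=10, mean_c=3.0, sd_c=1.0, n_c=10)
print(d)
```

If an article reports only a p-value or a statement of significance, none of the six inputs can be recovered, which is why obtaining the underlying data from researchers would be needed.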

Recommendations

In the opinion of the review team, policy recommendations should be based on strong levels of evidence. A strong level of evidence requires consistent findings from a number of high quality studies. The review did not find this level of evidence. The team felt that with moderate levels of evidence it was possible to make recommendations for “practices to consider.” For two of the intervention categories for which a moderate level of evidence was found, that evidence showed NO effect of the interventions on musculoskeletal or visual outcomes. The third finding of a moderate level of evidence suggested that alternative pointing devices have a positive effect on musculoskeletal outcomes. However, the category of pointing devices is broad, and the aggregation of results from an alternative mouse study and a trackball study makes issuing practice recommendations difficult.
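The decision rule described above can be sketched as a simple lookup from evidence level to the kind of message the team considered appropriate. This is only an illustration: the names `Evidence` and `message_type` are hypothetical, the wording of the returned strings is an assumption, and the example findings are a subset of those reported in this review.

```python
from enum import Enum


class Evidence(Enum):
    STRONG = "strong"
    MODERATE = "moderate"
    MIXED = "mixed"
    INSUFFICIENT = "insufficient"


def message_type(level: Evidence) -> str:
    """Map an evidence level to the kind of message it can support."""
    if level is Evidence.STRONG:
        return "policy recommendation"
    if level is Evidence.MODERATE:
        return "practice to consider"
    # Mixed or insufficient evidence supports no recommendation.
    return "no recommendation; more high quality studies needed"


# A subset of the review's findings, keyed by intervention category.
findings = {
    "workstation adjustment": Evidence.MODERATE,      # evidence of NO effect
    "rest breaks with exercise": Evidence.MODERATE,   # evidence of NO effect
    "alternative pointing devices": Evidence.MODERATE,
    "screen filters": Evidence.MIXED,
    "new chair": Evidence.INSUFFICIENT,
}

for category, level in findings.items():
    print(f"{category}: {level.value} -> {message_type(level)}")
```

The lookup captures only the level of evidence. As noted above, the direction of the effect and the breadth of a category (for example, pointing devices) still have to be weighed before any practice is actually recommended.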

An important message to all stakeholders is that the current state of the peer reviewed literature provides relatively few high quality studies of the effects of office ergonomic interventions on musculoskeletal or visual health.

References

AHRQ Guidelines. http://www.ahrq.gov/; 2005.

Andersen JH, Thomsen JF, Overgaard E. Computer use and carpal tunnel syndrome: A 1-year follow-up study. JAMA 2003;289:2963–69.

Bergqvist U, Wolgast E, Nilsson B, Voss M. The influence of VDT work on musculoskeletal disorders. Ergonomics 1995;38:754–62.

Cochrane Manual. http://www.cochrane.org/admin/manual.html; 2005.

Cole BL. Do video display units cause visual problems? A bedside story about the processes of public health decision-making. Clin Exp Optom 2003;86:205–20.

Cole D, Rivilis I, VanEerd D, Cullen K, Irvin E, Kramer D. Effectiveness of Participatory Ergonomic Interventions: A Systematic Review. Toronto: Institute for Work & Health; 2005.

Côté P, Cassidy JD, Carroll L, Frank JW, Bombardier C. A systematic review of the prognosis of acute whiplash and a new conceptual framework to synthesize the literature. Spine 2001;26(19):E445–58.

Daum KM, Clore KA, Simms SS, Wilczek DD, Vesely JW, Spittle BM, Good GW. Productivity associated with visual status of computer users. Optometry 2004;75:33–47.

Franche R, Cullen K, Clarke J, Irvin E, Sinclair S, Frank J, Institute for Work & Health Workplace-Based RTW Intervention Literature Review Research Team. Workplace-based return-to-work interventions: A systematic review of the quantitative literature. J Occup Rehabil 2005;15(4):607–31.

Gerr F, Marcus M, Ensor C. A prospective study of computer users: I. Study design and incidence of musculoskeletal symptoms and disorders. Am J Ind Med 2002;41:222–35.

Gerr F, Marcus M, Monteilh C. Epidemiology of musculoskeletal disorders among computer users: lesson learned from the role of posture and keyboard use. J Electromyogr Kinesiol 2004;14(1):25–31.

Hagberg M, Rempel D. Work-related disorders and the operation of computer VDT’s. Chapter 58 in Handbook of Human-Computer Interaction, 2nd Edition. M. Helander, T.K. Landauer, P. Prabhu (eds.), Elsevier Science BV; 1997.

Hales TR, Sauter SL, Peterson MR. Musculoskeletal disorders among visual display terminal users in a telecommunications company. Ergonomics 1994;37:1603–21.

Karsh B, Moro FBP, Smith MJ. The efficacy of workplace ergonomic interventions to control musculoskeletal disorders: A critical examination of the peer-reviewed literature. Theoret Issues Ergon Sci 2001;2(1):3–96.

Kemmlert K. Economic impact of ergonomic intervention – Four case studies. J Occup Rehabil 1996;6(1):17–32.

Kryger AI, Andersen JH, Lassen CF. Does computer use pose an occupational hazard for forearm pain; from the NUDATA study. Occup Environ Med 2003;60:e14.

Lassen CF, Mikkelsen S, Kryger AI, Brandt L, Overgaard E, Thomsen JF, Vilstrup I, Andersen JH. Elbow and wrist/hand symptoms among 6943 computer operators: a 1-year follow-up study (the NUDATA study). Am J Ind Med 2004;46:521–33.

Marcus M, Gerr F, Monteilh C. A prospective study of computer users: II. Postural risk factors for musculoskeletal symptoms and disorders. Am J Ind Med 2002;41:236–49.

NRC and IOM Report. Musculoskeletal Disorders and the Workplace. National Research Council and Institute of Medicine. Washington, DC: National Academy Press; 2001.

Palmer KT, Cooper C, Walker-Bone K, Syddall H, Coggon D. Use of keyboards and symptoms in the neck and arm: evidence from a national survey. Occup Med 2001;51:392–5.

Punnett L, Bergqvist U. Visual display unit work and upper extremity musculoskeletal disorders, A review of epidemiological findings. National Institute for Working Life – Ergonomic Expert Committee Document 1997;1:1–173.

Schena S, Paradiso V, Schinosa L. Heartbreaking roadwork. Circulation 2000;101(22):2669–70.

Sheedy JE, Shaw-McMinn PG. Diagnosing and treating computer-related vision problems. Philadelphia: Butterworth-Heinemann; 2003, p. 288.

Slavin RE. Best-evidence synthesis: An intelligent alternative to meta-analysis. J Clin Epidemiol 1995;48:9–18.

Starck J. Preface. Noise Health 2005;7(26):1.

Stein SC, Lieberman J, Pasquale M. Letter to the Editor [3] (multiple letters). J Trauma-Injury Infect Crit Care 2004;56(2):457.

Tompa E, Trevithick S, McLeod C. Working Paper #213. A systematic review of the prevention incentives of insurance and regulatory mechanisms for occupational health and safety. Toronto: Institute for Work & Health; 2004.

Aaras A, Ro O, Thoresen M. Can a more neutral position of the forearm when operating a computer mouse reduce the pain level for visual display unit operators? A prospective epidemiological intervention study. Int J Human Comput Interact 1999;11(2):79–94.

Aaras A, Dainoff M, Ro O, Thoresen M. Can a more neutral position of the forearm when operating a computer mouse reduce the pain level for VDU operators? Int J Ind Ergon 2002;30(4-5):307–24.

Aaras A, Horgen G, Bjorset HH, Ro O, Walsoe H. Musculoskeletal, visual and psychosocial stress in VDU operators before and after multidisciplinary ergonomic interventions. A 6 years prospective study-Part II. Appl Ergon 2001;32(6):559–71.

Aaras A, Horgen G, Bjorset HH, Ro O, Thoresen M. Musculoskeletal, visual and psychosocial stress in VDU operators before and after multidisciplinary ergonomic interventions. Appl Ergon 1998;29(5):335–54.

Amick BC III, Robertson MM, DeRango K, Bazzani L, Moore A, Rooney T, Harrist R. Effect of office ergonomics intervention on reducing musculoskeletal symptoms. Spine 2003;28(24):2706–11.

Biswas NR, Nainiwal SK, Das GK, Langan U, Dadeya SC, Mongre PK, Ravi AK, Baidya P. Comparative randomised controlled clinical trial of a herbal eye drop with artificial tear and placebo in computer vision syndrome. J Indian Med Assoc 2003;101(3):208–9, 212.

Bohr PC. Efficacy of office ergonomics education. J Occup Rehabil 2000;10(4):243–55.

Brisson C, Montreuil S, Punnett L. Effects of an ergonomic training program on workers with video display units. Scand J Work Environ Health 1999;25(3):255–63.

Butzon SP, Eagels SR. Prescribing for the moderate-to-advanced ametropic presbyopic VDT user. A comparison of the Technica Progressive and Datalite CRT trifocal. J Am Optom Assoc 1997;68(8):495–502.

Butzon SP, Sheedy JE, Nilsen E. The efficacy of computer glasses in reduction of computer worker symptoms. Optometry 2002;73(4):221–30.

Cook C, Burgess-Limerick R. The effect of forearm support on musculoskeletal discomfort during call centre work. Appl Ergon 2004;35(4):337–42.

Feuerstein M, Nicholas RA, Huang GD, Dimberg L, Ali D, Rogers H. Job stress management and ergonomic intervention for work-related upper extremity symptoms. Appl Ergon 2004;35(6):565–74.

Fostervold KI, Buckmann E, Lie I. VDU-screen filters: Remedy or the ubiquitous Hawthorne effect? Int J Ind Ergon 2001;27(2):107–18.

Galinsky TL, Swanson NG, Sauter SL, Hurrell JJ, Schleifer LM. A field study of supplementary rest breaks for data-entry operators. Ergonomics 2000;43(5):622–38.

Gerr F, Marcus M, Monteilh C, Hannan L, Ortiz D, Kleinbaum D. A randomized controlled trial of postural interventions for prevention of musculoskeletal symptoms among computer users. Occup Environ Med 2005;62:478–87.

Greene BL, DeJoy DM, Olejnik S. Effects of an active ergonomics training program on risk exposure, worker beliefs, and symptoms in computer users. WORK: J Prev Assess Rehabil 2005;24(1):41–52.

Henning RA, Jacques P, Kissel GV, Sullivan AB, Alteras-Webb SM. Frequent short rest breaks from computer work: effects on productivity and well-being at two field sites. Ergonomics 1997;40(1):78–91.

Hladky A, Prochazka B. Using a screen filter positively influences the physical well-being of VDU operators. Cent Eur J Public Health 1998;6(3):249–53.

Horgen G, Aaras A, Thoresen M. Will visual discomfort among Visual Display Unit (VDU) users change in development when moving from single vision lenses to specially designed VDU progressive lenses? Optom Vis Sci 2004;81(5):341–9.

Kamwendo K, Linton SJ. A controlled study of the effect of Neck School in Medical Secretaries. Scand J Rehabil Med 1991;23:143–52.

Ketola R, Toivonen R, Hakkanen M, Luukkonen R, Takala EP, Viikari-Juntura E, Expert Group. Effects of ergonomic intervention in work with video display units. Scand J Work Environ Health 2002;28(1):18–24.

Lintula M, Nevala-Puranen N, Louhevaara V. Effects of Ergorest arm supports on muscle strain and wrist positions during the use of the mouse and keyboard in work with visual display units: a work site intervention. Int J Occup Safety Ergon 2001;7(1):103–16.

Martin SA, Irvine JL, Fluharty K, Gatty CM. Students for WORK. A comprehensive work injury prevention program with clerical and office workers: phase I. WORK: J Prev Assess Rehabil 2003;21(2):185–96.

Gatty CM. A comprehensive work injury prevention program with clerical and office workers: Phase II. Work 2004;23(2):131–7.

Mclean L, Tingley M, Scott RN, Rickards J. Computer terminal work and the benefit of microbreaks. Appl Ergon 2001;32(3):225–37.

Mekhora K, Liston CB, Nanthavanij S, Cole JH. The effect of ergonomic intervention on discomfort in computer users with tension neck syndrome. Int J Ind Ergon 2000;26(3):367–79.

Nelson NA, Silverstein BA. Workplace changes associated with a reduction in musculoskeletal symptoms in office workers. Hum Factors 1998;40(2):337–50.

Peper E, Gibney KH, Wilson VE. Group training with healthy computing practices to prevent repetitive strain injury (RSI): A preliminary study. Appl Psychophysiol Biofeedback 2004;29(4):279–87.

Psihogios JP, Sommerich CM, Mirka GA, Moon SD. A field evaluation of monitor placement effects in VDT users. Appl Ergon 2001;32(4):313–25.

Rempel D, Tittiranonda P, Burastero S, Hudes M, So Y. Effect of keyboard keyswitch design on hand pain. J Occup Environ Med 1999;41(2):111–19.

Rempel D, Krause N, Goldberg R, Benner D, Hudes M, Goldner GU. A randomized controlled trial evaluating the effects of two workstation interventions on upper body pain and incident musculoskeletal disorders among computer operators. Occup Environ Med 2006;63(5):300–6.

Skilling FC Jr, Weaver TA, Kato KP, Ford JG, Dussia EM. Effects of two eye drop products on computer users with subjective ocular discomfort. Optometry 2005;76(1):47–54.

Tittiranonda P, Rempel D, Armstrong T, Burastero S. Effect of four computer keyboards in computer users with upper extremity musculoskeletal disorders. Am J Ind Med 1999;35(6):647–61.

van den Heuvel SG, De Looze MP, Hildebrandt VH, The KH. Effects of software programs stimulating regular breaks and exercises on work-related neck and upper-limb disorders. Scand J Work Environ Health 2003;29(2):106–16.

Acknowledgements

This project was sponsored in part by the Institute for Work & Health, an independent not-for-profit research organization. The Institute receives ongoing support and received direct funding for this review from the Ontario Workplace Safety & Insurance Board. The authors wish to thank Donald Cole for the quality control check; Jonathan Tyson for comments on the report; Quenby Mahood, Krista Nolan, and Dan Shannon for assistance in obtaining bibliographic information and other materials; Jane Gibson, Tony Culyer, Evelyne Michaels, Kiera Keown, and Cameron Mustard for their editorial advice; and Shanti Raktoe for administrative support. Shelley Brewer is supported by an Occupational Injury Prevention Training Grant (T42 OH008421) from the National Institute for Occupational Safety and Health.

Cite this article

Brewer, S., Eerd, D.V., Amick III, B.C. et al. Workplace interventions to prevent musculoskeletal and visual symptoms and disorders among computer users: A systematic review. J Occup Rehabil 16, 317–350 (2006). https://doi.org/10.1007/s10926-006-9031-6

DOI: https://doi.org/10.1007/s10926-006-9031-6