Abstract

Speakers routinely produce gestures when conveying verbal information such as route directions. This study examined developmental differences in spontaneous gesture and its connection with speech when recalling route directions. Children aged 3–4 years and adults were taken on a novel walk around their preschool or university and asked to verbally recall this route, as well as a route they take regularly (e.g., from home to university, or home to a park). Both children and adults primarily produced iconic (enacting) and deictic (pointing) gestures, as well as gestures that contained both iconic and deictic elements. For adults, deictic gestures typically accompanied phrases both with description (e.g., go around the green metal gate) and without description (e.g., go around the gate). For children, phrases with description were more frequently accompanied by iconic gestures, and phrases without description were more frequently accompanied by deictic gestures. Furthermore, children used gesture to convey additional information not present in speech content more often than did adults, particularly for phrases without description.


Introduction

Verbal and nonverbal communication enable us to share knowledge such as where to find the best food and how to avoid danger (e.g., Tversky 2011). While verbal communication allows us to directly convey a wealth of information such as physical object structure, form, and position, as well as abstract meanings, mood, and attitude, it does not occur in isolation (Tversky 2011). Whether we are communicating face to face or over the phone, verbal communication is often accompanied by nonverbal behaviors such as hand gestures (Alibali et al. 2001; Wesp et al. 2001). These hand gestures can both reflect and affect thinking by externalizing our thought processes (Segal et al. 2014; Wilson 2002). Gestures produced by speakers can, therefore, provide speakers with feedback on their own thought processes, as well as provide listeners with important information about a speaker’s thoughts beyond the content of speech (Goldin-Meadow and Alibali 2013; Madan and Singhal 2012).

The embodiment of thought processes through gesture may serve two functions: (1) help speakers translate ideas into speech and/or (2) eliminate the need to translate ideas into speech at all (Hostetter and Alibali 2007; Wilson 2002). If a gesture is used to help a speaker translate an idea into speech, then there is likely to be a large degree of overlap in the content of the gesture and the associated speech phrase. On the other hand, if a gesture removes the need to translate ideas into speech at all, then the gesture is likely to present additional information, beyond that contained in the speech phrase. Although these two possibilities are not mutually exclusive, there is limited research examining the degree of additional information conveyed by gesture beyond speech, or the relationship between types of gesture and speech content (Hostetter and Alibali 2007). Examining the relationship between speech and gesture will enhance our understanding of the role gesture plays in cognition and communication, and the construction of meaning beyond speech (Tversky 2011).

Gesture Definitions

Gestures can represent and communicate aspects of thought to listeners more directly than can words (Tversky 2011). For example, iconic gestures, such as shaping a tall box, can convey object characteristics such as height, thereby disambiguating the target object from other objects through hand shape (Cassell et al. 2007). Deictic gestures, such as pointing to the left, convey movement, direction, or relational information to the listener (Cassell et al. 2007). Metaphoric gestures, such as enacting “balancing” accompanying dialogue about comparisons, convey abstract concept characteristics (Allen 2003; McNeill 1992). In contrast, beat gestures are simple rhythmic hand movements which go along with the rhythm of speech but have no apparent semantic meaning (Allen 2003; McNeill 1992).

Iconic, deictic and metaphoric gestures can be further separated into gestures which allow individuals to express information both consistent with (i.e., redundant) and beyond (i.e., non-redundant) the phrases they accompany (Alibali et al. 2000; Tversky 2011). Redundant gestures reflect the meaning of the words they accompany (Alibali et al. 2000). For example, “the grass area is flat” accompanied by a gesture indicating a flat surface, conveys the same information in gesture as in speech. Conversely, non-redundant gestures (sometimes called gesture-speech mismatches) go beyond the words they accompany, communicating additional information more directly and offering a second window into the speaker’s thinking (Alibali et al. 1997; McNeill et al. 1994). For example, “go past the front of the building” with a gesture indicating a large rectangular box to the right conveys information beyond the speech content, namely that a rectangular building is on the right.

Previous research has shown that gestures can convey information beyond speech content, with some gestures conveying more information than others (Beattie and Shovelton 1999). This has been demonstrated with iconic gestures relating to particular semantic features (e.g., relative position and size of objects), which can convey information beyond speech content (Beattie and Shovelton 1999; Cassell et al. 1999). Similarly, Cassell et al. (2007) reported a relationship between the form of a gesture and the characteristics of the entity to which it refers. While these findings suggest that some gestures relate meaningfully to the speech they accompany and that they can convey information beyond speech content, the literature has yet to address the relationship between other gesture types (e.g., deictic and metaphoric) and the speech they accompany. These findings also suggest, however, that the commonly used practice of binary coding (i.e., placing gestures into redundant and non-redundant categories) does not pick up differences in the extent to which gestures communicate additional information (i.e., extent of redundancy; Alibali et al. 2000; Beattie and Shovelton 1999; Cassell et al. 1999). Examining the relationship between speech and a range of gestures, as well as the degree of redundancy between the information conveyed in speech and in gesture, will further enhance our understanding of gestures as an embodiment of speakers’ mental representations.

Gesture Production in Adults

To date, research has primarily focused on the rate, type, and frequency of gestures, and not investigated the relationship between types of gesture (e.g., iconic, deictic) and the content of the phrases they accompany (Hostetter and Alibali 2007; Hostetter and Skirving 2011; Morsella and Krauss 2004; Tversky 2011; Wesp et al. 2001). For instance, Hostetter and Skirving (2011) reported that adults presented with an animated cartoon of an event and a verbal description of the event produced more iconic and deictic gestures accompanying verbal recall than those who did not watch the cartoon. Similarly, adults presented with images of abstract or recognizable objects, produced more gesture when describing images from memory compared with visually accessible images, and when describing difficult to encode images than easy to encode images (Morsella and Krauss 2004). In addition, the frequency of adults’ gesture production changes depending on task demands/conditions, as adults produced fewer gestures when retelling a story to a listener who has heard the story before than when conveying it to a listener for the first time (Galati and Brennan 2013). While these studies demonstrate that adults produce a range of gestures when recalling information and that the rate of gestures increases when memory is challenged or when conveying novel information to listeners, they do not shed light on gesture’s relationship with speech content. Examining the extent of gesture redundancy (i.e., degree of additional information conveyed by gesture beyond speech) and the relationship between types of gesture and speech content will clarify the role of different types of gesture in externalizing thought processes and conveying meaning.

The Function of Gesture Production for Adults

Gestures accompanying verbal communication reflect and affect a speaker’s cognition by externalizing thought processes (Wilson 2002), providing benefits to the speaker themselves. On one hand, the form and shape gestures take reflect speakers’ thought processes, providing them with feedback on their thoughts (Goldin-Meadow and Alibali 2013; Madan and Singhal 2012). On the other hand, producing gestures reduces the overall load on cognitive resources by reducing the cognitive workload of speech, thereby freeing resources for other cognitive processes such as memory (e.g., word retrieval; Goldin-Meadow et al. 2001; Just and Carpenter 1992; Wilson 2002). In this way, the embodiment of speakers’ cognition through gesture benefits speakers’ thought processes and helps communicate information. Producing gestures may benefit speakers for some tasks more than others, with existing research reporting that speakers produce more gestures when communicating spatial information than non-spatial information (Rauscher et al. 1996).

Gesture Production, Spatial Tasks and Adults

Communicating spatial information such as describing an object’s location, or providing route directions, enables us to interact with and communicate about the physical world (Taylor and Tversky 1996). For instance, verbal route directions are narratives, comprised primarily of propositions containing spatial information such as prescriptive movements (e.g., go left), locations (e.g., the gate on your right), movement descriptions (e.g., take the sharp left), and location descriptions (e.g., the green metal gate on your right; Daniel et al. 2009). Route knowledge comes from processing perceptual input as we move through environments which we then transform into verbal route directions (Allen 2000; Cassell et al. 2007).

Adults accompany verbal route directions with gestures, particularly deictic and iconic gestures (Allen 2003). The visual characteristics of these gestures often reflect the spatial features of the object referred to in speech (e.g., a flat hand shape vertically orientated might refer to objects with an upright plane), or the relationship between two locations (e.g., using one hand to symbolize a location and the other hand to convey a landmark relative to that location; Cassell et al. 2007; Emmorey et al. 2000). Together these studies demonstrate, through the rate, synchrony with words, and types of gestures produced, both that adults produce gestures accompanying spatial route information and that hand shape may act to disambiguate a location (Allen 2003; Cassell et al. 2007). While this suggests the existence of an informational relationship between speech and gesture, the dimensions of the information conveyed in speech and gesture, independently or together, have not been established. Furthermore, existing research does not indicate the effect of task purpose during spatial communication on the characteristics of gestures accompanying spatial information. Importantly, existing research does not indicate the characteristics of gestures accompanying spatial information produced by children.

Gesture Production in Children

As noted above, gestures are a valuable source of information about a speaker’s thoughts. It is important therefore to clarify the role of gesture in cognition and communication as language and gesture production develop, i.e., during early childhood (Alibali et al. 1997; Church and Goldin-Meadow 1986). Children as young as 8 months produce gestures, allowing them to engage with and communicate about their immediate world before they have the words to do so (Bates 1976; Bates et al. 1979; Capirci and Volterra 2008; Goldin-Meadow and Alibali 2013; Iverson and Goldin-Meadow 2005). While children reach linguistic milestones at different rates, gesture and language development are thought to be related, with infants transitioning from simple pointing gesture only to single word utterances, to single word plus gesture combinations as their vocabulary develops (Adams et al. 1999; Iverson and Goldin-Meadow 2005; Özçalışkan and Goldin-Meadow 2010). In addition, the number of speech plus gesture combinations children produce increases significantly between 14 and 22 months of age, with gestures increasing in variety and complexity over time (Behne et al. 2014; Özçalışkan and Goldin-Meadow 2005). Producing gestures enables infants to communicate multiple pieces of information and meanings before they have the vocabulary to do so, thereby facilitating language learning through exposure to new words via social interactions (Goldin-Meadow and Alibali 2013; Iverson and Goldin-Meadow 2005; McNeill 1992).

The association between gestures and the context of spoken words grounds language learning in the physical world (Yu et al. 2005). Between the ages 10 and 24 months children observed during play and meal time often used gesture to refer to objects, transitioning with age from using gesture only to using speech plus gesture or speech only, to refer to objects (Iverson and Goldin-Meadow 2005). Consistent with this change, children’s early word meanings are perceptual (i.e., based on the concrete environment) and not functional (i.e., based on abstract relatedness) with children learning nouns (e.g., bed, cat) faster than verbs (e.g., painting; Gentner 1978; Tomasello et al. 1997; Yu et al. 2005). Children’s vocabulary continues to expand and by age 2, children’s vocabulary repertoire has between 50 and 600 words (Cameron and Xu 2011). Between ages 3 and 5 years children’s ability to communicate and comprehend speech and gesture further improves, as the foundations of adult syntax and sentence structure are acquired (Cameron and Xu 2011; O’Reilly 1995).

The Function of Gesture Production for Children

During the language learning years gestures may perform a functional role in the construction of phrases and the expression of meaning (Capirci and Volterra 2008; Iverson and Goldin-Meadow 2005). For example, producing the iconic gesture: both hands flapping, to indicate the animal “bat”, disambiguates it from a sport based “bat” which they might accompany with a striking motion (Kidd and Holler 2009). Producing gestures provides preschoolers with a means to communicate ideas beyond their current vocabulary and provides their listeners with insight into the content and extent of their knowledge and cognitive development (Goldin-Meadow and Alibali 2013; Hostetter and Alibali 2007; Madan and Singhal 2012; Wilson 2002). Language, and therefore gesture, also plays a fundamental role in the development and communication of theory of mind and spatial perspective taking, an important conceptual change taking place between ages of 2½ and 5 years (Astington and Jenkins 1999; Flavell et al. 1981; Schober 1993; Selman 1971; Wellman et al. 2001).

Theory of mind is the understanding that others and oneself have mental states (e.g., desires, emotions, intentions), and the realization that these mental states may or may not reflect reality (i.e., false beliefs; Astington and Jenkins 1999; Wellman et al. 2001). Similarly, spatial perspective taking is the ability to infer or recognize that the other person may see an object that the child does not see, or vice versa, and that an object may have a different appearance depending on distance and the side the object is seen from (Flavell et al. 1981; Schober 1993). Cognitive abilities, such as theory of mind and spatial perspective taking skills, undergo considerable development during the preschool years, with children expressing their knowledge and understanding through both speech and gesture (Bretherton et al. 1981). The gestures children produce indicate to others the extent and content of children’s current knowledge available for communication before they have the words to do so (Bretherton et al. 1981). Due to limitations in language ability, we may be able to more accurately assess children’s cognitive development through examining their gestures over and above their speech content. However, existing research has failed to investigate the extent of additional information provided in the gestures children produce. Examining the gestures children produce during tasks such as spatial communication, which require children to apply their conceptual understanding of theory of mind and perspective taking skills, will provide a clearer picture of how their speech and gestures combine to convey information they cannot express through speech alone.

Gesture Production, Spatial Tasks and Children

During early childhood, gestures offer a way for speakers to organize and package ideas, as well as a way to convey semantically complex information beyond their current language skills (Özçalışkan and Goldin-Meadow 2010). In this way, the gestures preschoolers produce become an important source of information about changes in spatial cognition as language develops (Just and Carpenter 1992; Nicoladis et al. 2008). Children transition between the ages of 2–5 years from pointing to the route to an object’s location, to pointing to the location of the room in which the object is located (Nicoladis et al. 2008). This suggests that children’s conceptualization and communication of space changes as language skills and cognition develop. Examining differences between preschoolers’ and adults’ communication of environmental knowledge will provide insight into early conceptualization of space and how these change during language development.

When communicating environmental knowledge, children aged 8, 9, and 10 years and adults rarely conveyed information in speech without also communicating that information in gesture, but they also conveyed a lot of information in gesture that was not present in speech (Sauter et al. 2012). Looking at younger children, when recalling the route from nursery school to home, 4-year-old children omitted important movement and location information compared with 6-year-olds (Sekine 2009). For example, children aged 4 years did not use left/right terms, conveying the actual route directly and continuously through gesture. By 6 years of age, 50% of children used left/right terms and produced more speech-only route direction phrases than phrases containing both speech and gesture (Sekine 2009). The overall number of turns reported and gestures produced increased as children got older, with 6-year-olds less likely to fail to mention a turn compared with younger children (Sekine 2009). This age difference suggests that as vocabulary and cognition develop, the ability to effectively communicate route directions improves. It does not, however, account for the role different types of gestures play in the communication of route directions. If gestures offer a way for young children to communicate spatial concepts that they do not yet have the words to express, then it is important to examine the types of gestures preschool-aged children produce when conveying route directions, the degree of gestural redundancy, and how those gestures accompany the verbal communication of route directions (Iverson and Goldin-Meadow 2005).

The Current Study

The preschool years constitute a critical time for language development. Gestures, therefore, become an important source of information about a child’s developing spatial knowledge and cognitive processes. Gestures are also an important source of information about adults’ spatial conceptualization and cognitive processes. The current study was designed to examine the gestures produced by children and adults while conveying spatial route directions, thereby examining the communication of environmental thinking as language and cognition develop. It is important to note there is some evidence that task purpose influences adults’ gesture production (e.g., Galati and Brennan 2013) and how adults process and subsequently recall spatial information (e.g., Taylor et al. 1997). To account for potential differences in speech and gesture production due to task instruction, two adult conditions were included in the study design. Some adults were given the same task instructions as children and some adults were given task instructions in language more appropriate to adults. Therefore, the current study was designed to explore differences in adults’ speech and gesture production depending on task instruction.

Consequently, there are five aims of this study: (1) to document the types of spontaneous gestures preschoolers and adults produce when recalling route directions (descriptions); (2) to compare the types of gestures produced by children versus adults; (3) to examine the additional information presented through gesture (level of redundancy) in relation to different speech phrases; (4) to examine the relationship between the types of gesture produced and the associated phrase content; and (5) to examine whether any developmental differences noted above in aims 1–4 hold when adults are a) given the same task instructions as children compared with b) given task instructions in language more appropriate to adults.

If gestures allow individuals to convey information beyond their current vocabulary, then we would expect the gestures produced by children (who have a more limited vocabulary) to depict information beyond speech content to a greater extent than gestures produced by adults (who have a more complete vocabulary). Differences in gesture redundancy between children and adults would imply differences in cognitive processing of environmental information. In addition, if gestures help speakers translate ideas into speech and/or eliminate the need to translate ideas into speech at all, then we would expect deictic (pointing) gestures to accompany speech without descriptive content to a greater extent than speech with descriptive content, and iconic gestures (which can depict concrete descriptive information) to accompany speech with descriptive content to a greater extent than speech without descriptive content. Finally, due to the exploratory nature of the effect of task instruction on adults’ speech and gesture production, there were no directional hypotheses for the effect of task purpose on adults’ speech and gesture production.

Method

Participants

Thirty-three children were recruited from preschools in Sydney, Australia (19 boys and 14 girls, mean age = 4 years 7 months, SD = 5 months, range = 3 years 10 months to 5 years 5 months). Seventy-five adults were recruited from an introductory psychology unit at Macquarie University (38 males and 37 females, mean age = 21 years, SD = 5, range 18–52). Two children were removed from analysis due to a failure to provide either verbal or gestural responses. The final sample comprised 31 children and 75 adults. This study was approved by the Macquarie University Faculty of Human Sciences, Human Research Ethics Committee (HREC). Preschool directors and parents/guardians of the children received an information sheet describing the purpose and procedure of the study as well as a consent form. Only children whose parents had returned a completed form were allowed to participate in the study. Each child was asked at the beginning of their session if they would like to participate. University participants were also given an information sheet and consent form indicating the purpose and procedure of the study. Children were given stickers for participating and adults were given course credit.

Materials and Procedure

Prior to participation, informed consent was obtained from all individual participants included in the study. Adults went on a short walk in and around a building on the university campus and children went on a short walk within the grounds of their preschool. In a quiet room at the university campus and at the preschools, the experimenter sat directly opposite participants and maintained eye contact throughout participants’ descriptions. Adults were allocated to either the detail condition or the describe condition. Adults in the detail condition were asked, “can you now describe for me the path that we took at the beginning of the session, in as much detail as possible, as if you’re describing it to someone who hasn’t taken the path but will be taking the path?” Adults in the describe condition and children were asked, “can you tell me everything you remember about the walk we took outside?”

Participants were also asked to recall a route that they take regularly. Adults in the detail condition were asked, “can you tell me about the path that you take from home to university as if you’re describing it to someone who hasn’t taken the path but will be taking the path?” Adults in the describe condition were asked, “can you tell me about a walk that you go on from home to university?” Children were asked, “can you tell me about a walk that you go on from home to a park?” Initially, the instructions presented to adult participants in the detail condition were piloted on 12 children to ensure the wording of instructions was appropriate for preschoolers. The instructions regarding detailed description of routes, however, failed to elicit a response from the children. As a result, the wording of instructions for preschoolers was revised to be age appropriate. If any participant took too long before continuing to give the description, the experimenter said, “and where did you/we go after that?” and/or “did you/we go anywhere else?” There are no reasons to expect differences between the two tasks (routes: familiar and unfamiliar). Both tasks were used to establish a richer data set, with greater scope for participants to produce a variety of gestures and speech phrases. The order of route recall was counterbalanced between participants.

Because participants’ spontaneous actions were of interest, specific instructions regarding gesturing were explicitly avoided. As the participants’ verbal route descriptions and accompanying gestures were of interest, no measure of correct recall was included. No directional feedback or confirmation was provided by the experimenter. Responses were videotaped for later analysis. The entire procedure took 10–15 min per participant and was completed in a single session.

Coding

Speech was transcribed verbatim, including filled pauses (e.g., “ummm”) and hesitations. Speech content was divided into propositions following Daniel et al. (2009). Propositions were minimal information units combining a predicate and one or two arguments. For example, “we turned left out of the building—right out of the building—I’m really bad at my lefts and rights”, was divided into a succession of statements: “we turned left out of the building”; “right out of the building”; “I’m really bad at my lefts and rights”. Propositions were then classified according to five categories outlined by Daniel et al. (2009). Three additional categories (movements with description, location and movement with description, and cardinal directions) were included to capture the descriptive and cardinal information conveyed by participants during route direction communication reported by Allen (2000) and Ward, Newcombe, and Overton (1986). See Table 1 for a full list of phrase categories and their associated characteristics.

Gestures were segmented into individual gestures and only included hand and arm movements using the format described in McNeill (1992). Individual gestures were described in terms of hand shape, placement and motion, including identifying the speech content they accompanied. Incidental and irrelevant hand movements (e.g., fiddling with hair) were not coded. Gestures were classified according to the categories outlined by McNeill (1992), Alibali et al. (2000), Cassell et al. (2007) and Thompson et al. (1998). See Table 2 for a full list of each gesture type and associated characteristics.

Finally, the redundancy of each gesture was assessed based on the information expressed in gesture and the information expressed in speech (Alibali et al. 2000). Binary categorization of a gesture as either redundant or non-redundant, however, serves only to simplify naming and does not designate “more” or “less” the extent of additional information conveyed by a gesture (Gregory 2004). Gestures differ in the extent to which they communicate additional information, and as such, these differences are not picked up by the commonly used practice of binary coding, placing gestures into redundant and non-redundant categories (Beattie and Shovelton 1999). In order to maximize the information obtained about the extent a gesture conveys information consistent with or beyond speech content, a summative/Likert redundancy rating scale was developed for measurement purposes (Gregory 2004). Gestures were rated according to the degree to which they contained information not expressed in speech content on a scale from 1 (Redundant, e.g., “go straight along the wide path” accompanied by a gesture pointing forward, i.e., information about direction present in speech) to 9 (Non-redundant, e.g., “follow the road” accompanied by a gesture indicating the road turns to the right, i.e., novel information about the direction of the road not present in speech). A rating of 5 indicates that the gesture conveys some information present in speech and some novel information (e.g., “turn at the end of the corridor” accompanied by a gesture indicating a turn to the right, conveying turning information present in speech, while also conveying novel information that the direction of the turn is to the right).
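To illustrate why a graded scale retains information that binary coding discards, the following minimal Python sketch stores the three anchor examples given above with their 1–9 ratings. The class name `CodedGesture`, the function `binary_label`, and in particular the collapse rule (a rating of 1 counts as redundant, anything higher as non-redundant) are assumptions made for illustration only and are not part of the coding scheme reported here.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class CodedGesture:
    """One coded gesture (hypothetical record layout)."""
    phrase: str      # speech phrase the gesture accompanied
    redundancy: int  # 1 = redundant ... 9 = non-redundant

    def __post_init__(self):
        # The scale described in the text runs from 1 to 9.
        if not 1 <= self.redundancy <= 9:
            raise ValueError("redundancy rating must be between 1 and 9")


def binary_label(gesture):
    """Collapse a rating to two categories (assumed rule, illustration only)."""
    return "redundant" if gesture.redundancy == 1 else "non-redundant"


# The three anchor examples from the rating scale description.
examples = [
    CodedGesture("go straight along the wide path", 1),
    CodedGesture("turn at the end of the corridor", 5),
    CodedGesture("follow the road", 9),
]

labels = [binary_label(g) for g in examples]
mean_rating = mean(g.redundancy for g in examples)
```

Under the assumed collapse rule, the gestures rated 5 and 9 receive the same binary label even though they differ in how much novel information they carry, which is the distinction the graded scale is intended to preserve.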

Reliability

A second coder independently coded 25% of the spoken and gesture transcripts. Inter-rater reliability was evaluated through single-rater intraclass correlations (ICCs), with a consistency model. When comparative judgements are made about objects of measurement, a consistency model takes the ratings of two or more judges and through an additive transformation serves to equate them to determine the extent of agreement between them (McGraw and Wong 1996; Shrout and Fleiss 1979). Using a consistency definition, an ICC value of 1.00 reflects perfect agreement while ICC values closer to 0.00 reflect imperfect agreement (i.e., a large discrepancy between judges’ ratings; McGraw and Wong 1996). For speech coding, intraclass correlations were obtained for phrases without description (ICC = .861, p < .001), and phrases with description (ICC = .978, p < .001; see below for the collapsing of speech categories). For gesture coding, intraclass correlations were obtained for iconic gesture (ICC = .876, p < .001), deictic gesture (ICC = .983, p < .001), metaphoric gesture (ICC = .614, p < .001), beat gesture (ICC = .746, p = .001), and iconic–deictic gesture (ICC = .692, p < .001). An intraclass correlation was similarly obtained for the redundancy rating scale for gestures (ICC = .798, p < .001).
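As a rough illustration of the consistency definition, the sketch below computes a single-rater consistency ICC from a two-way layout using the mean-square form ICC(C,1) = (MS_rows - MS_error) / (MS_rows + (k - 1) MS_error) given by McGraw and Wong (1996). The function name and the ratings are hypothetical, and the two-way model is an assumption; this is not the software or data used in the study.

```python
def icc_consistency_single(ratings):
    """ICC(C,1): two-way model, consistency definition, single rater.

    ratings: list of rows, one row per coded item, one column per rater.
    """
    n = len(ratings)     # number of coded items
    k = len(ratings[0])  # number of raters
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Sums of squares for items (rows), raters (columns), and residual.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error)


# Hypothetical counts: the second coder is always exactly 2 higher.
shifted = [[1, 3], [4, 6], [7, 9], [2, 4]]
icc_shifted = icc_consistency_single(shifted)

# Hypothetical counts: the two coders rank the items in opposite order.
reversed_order = [[1, 2], [2, 1]]
icc_reversed = icc_consistency_single(reversed_order)
```

In the first hypothetical data set the coders differ only by a constant, so the rater variance is removed and the consistency ICC is 1; in the second the coders disagree on ordering and the value falls to -1.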

Results

Analysis Plan

Descriptive statistics were used to assess the distribution of each dependent variable. Multilevel modelling (MLM; Peugh 2010) was then used for analyses due to the nested nature of the data, with speech phrases and gestures nested within participants. Naturally nested data structures, such as children within classrooms, or repeated observations within participants as in the current study, violate the assumption of independence of observations needed for traditional statistical analyses (e.g., Analysis of Variance; Peugh 2010). Ignoring this violation results in biased parameter estimates and may inflate error rates. Here, participants produced different numbers of speech phrases, precluding the use of traditional repeated measures analyses. For the current analyses, therefore, individual speech phrases and gestures were treated as the Level 1 unit, with participants as the Level 2 unit. Where appropriate, follow-up tests of simple effects were Bonferroni adjusted to control the family-wise error rate at alpha = .05. Where appropriate, Cohen’s d has been reported as a measure of effect size. There are currently no effect sizes for multinomial logistic multilevel model main effects and interactions; however, odds ratios for simple effects are reported. Because individuals who talk for longer have greater opportunities to produce gesture, the total number of words produced was entered as a covariate to assess the differences in gesture production between adults and children in relation to the number of words produced during recall.
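The Bonferroni adjustment mentioned above can be sketched as follows. The function name `bonferroni` and the p-values are hypothetical and chosen only to show the arithmetic; they are not results from this study, and the sketch does not reproduce the statistical software used.

```python
def bonferroni(p_values, alpha=0.05):
    """Bonferroni-adjust a family of p-values.

    Each p-value is multiplied by the number of comparisons (capped at 1)
    and then compared with the family-wise alpha.
    """
    m = len(p_values)
    adjusted = [min(1.0, p * m) for p in p_values]
    return [(p_adj, p_adj < alpha) for p_adj in adjusted]


# Hypothetical unadjusted p-values for three follow-up comparisons.
results = bonferroni([0.003, 0.020, 0.610])
```

With three comparisons, a hypothetical unadjusted p of .020 becomes .060 and is no longer significant at .05, which shows how the adjustment guards against inflated family-wise error.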

Preliminary Analyses

A preliminary analysis was conducted to determine which distribution was appropriate for each analysis. Given the skew or categorical nature of some variables, a negative binomial, a multinomial, or a normal distribution was applied as appropriate. Few metaphoric or beat gestures were produced by participants during recall. They were therefore excluded from inferential analyses but are included in the descriptive statistics below. The phrase types “comment” and “cardinal directions” were similarly excluded due to low production rates by both adults and children. Phrases were then converted into a binary variable indicating phrases with or without description. Phrases and gestures were pooled across the two routes recalled, as no significant main effects or interactions for route type were found. Similarly, no effects of gender were found for any analyses, and gender was excluded from the analyses below.

Main Analyses

Frequency of Each Type of Gesture at Recall

Descriptive statistics were used to examine the types of gestures produced by adults and children at recall. Both children and adults primarily produced iconic, deictic, and iconic–deictic gestures (see Fig. 1). The mean phrase to gesture ratio was 3.34 for children, such that gestures accompanied 33.4% of phrases. For adults asked to convey detailed directions, the phrase to gesture ratio was 2.34, such that gestures accompanied 23.4% of phrases. For adults asked to describe directions, the phrase to gesture ratio was 3.42, such that gestures accompanied 34.2% of phrases.

Differences in Gesture Production at Recall

A negative binomial multilevel model analysis with two predictors, group (child, adult describe, adult detail) and type of gesture (iconic, deictic, iconic–deictic), was carried out to examine differences in the rate of gesture production at route direction recall between children and adults and between the two adult conditions. The total number of words produced by each participant was entered as a covariate to control for differences in the amount of speech produced by children and adults. Group significantly predicted the number of gestures produced at recall, F(2, 626) = 5.59, p = .004. Examination of simple effects revealed that adults produced more gestures than children, whether adults were asked to describe the route, F(1, 626) = 8.83, p = .003, d = .70, or convey the route in detail, F(1, 626) = 9.02, p = .003, d = .76. There was no difference in the number of gestures produced between adults asked to describe the route and those asked to convey detailed directions, F(1, 626) = 0.26, p = .610, d = .12. There was a main effect of type of gesture, F(2, 626) = 71.92, p < .001, such that more deictic gestures, F(1, 626) = 69.17, p < .001, d = .82, and iconic–deictic gestures, F(1, 626) = 76.35, p < .001, d = .97, were produced than iconic gestures, but there was no difference in the number of deictic and iconic–deictic gestures produced, F(1, 626) = .16, p = .686, d = .04.

There was a significant interaction between age group and type of gesture, F(4, 626) = 13.74, p < .001. Simple effects analysis revealed that children produced more iconic gestures than adults asked to describe the route, F(1, 626) = 9.33, p = .002, d = .62. However, adults asked to describe the route produced more deictic, F(1, 626) = 28.47, p < .001, d = .85, and iconic–deictic gestures, F(1, 626) = 9.45, p = .002, d = .63, than children. There was no significant difference in the number of iconic, F(1, 626) = 3.67, p = .056, d = .45, and iconic–deictic gestures, F(1, 626) = 6.01, p = .015, d = .41, produced between children and adults asked to convey detailed directions. Adults asked to convey detailed directions produced significantly more deictic gestures, F(1, 626) = 25.44, p < .001, d = .79, than children. There was no significant difference between adults asked to describe the routes and adults asked to convey detailed directions in the production of iconic, deictic, and iconic–deictic gestures (all ps > .05; see Fig. 1).

The Informational Relationship Between Speech and Gesture

A linear multilevel model analysis with two predictors, group (child, adult describe, adult detail) and type of phrase (with description, without description), was carried out to examine the amount of additional information gesture conveyed beyond the associated phrase content, as measured by the redundancy rating scale. Group significantly predicted the redundancy of gestures produced at recall, F(2, 2298) = 3.43, p = .033. Examination of simple effects revealed that children’s gestures depicted information beyond speech content to a greater extent than did those of adults asked to describe the route, F(1, 2298) = 6.85, p = .009, d = .65. There was no significant difference between children and adults asked to convey the route in detail, F(1, 2298) = 2.54, p = .111, d = .39, or between adults asked to describe the route and adults asked to convey detailed directions, F(1, 2298) = 1.86, p = .172, d = .32. There was a main effect of type of phrase, F(1, 2298) = 6.85, p = .009, d = .06, such that gestures accompanying phrases with description conveyed information beyond speech content to a greater extent than gestures accompanying phrases without description.

There was a significant two-way interaction between group and type of phrase, F(2, 2298) = 4.85, p = .008. Simple effects analysis revealed that children’s gestures depicted information beyond speech content to a greater extent than did those of adults who were asked to describe the route for phrases without description, F(1, 2298) = 19.80, p < .001, d = 1.13. For phrases with description there was no significant difference between children and adults asked to describe the routes, F(1, 2298) = .06, p = .803, d = .06. When adults were asked to convey detailed directions, for phrases without description, children’s gestures depicted information beyond speech content to a greater extent than adults’, F(1, 2298) = 8.56, p = .003, d = .74. For phrases with description, there was no significant difference between adults and children, F(1, 2298) = 0.02, p = .887, d = .04; see Fig. 2. There was no significant difference between adults asked to describe the route and adults asked to convey detailed directions for both phrases without description, F(1, 2298) = 5.30, p = .021, d = .53, and phrases with description, F(1, 2298) = .20, p = .656, d = .10.

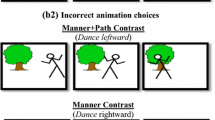

The Relationship Between Types of Gesture and Phrase Content

A multinomial logistic multilevel model analysis (see Notes) with two predictors, group (child, adult describe, adult detail) and type of phrase (with description, without description), was carried out to examine the relationship between the types of gesture produced (iconic, deictic, iconic–deictic) and phrase type and group. There was a main effect of group, F(2, 2292) = 16.65, p < .001, and a main effect of type of phrase, F(1, 2292) = 39.94, p < .001.

There was a significant two-way interaction between group and type of phrase, F(2, 2292) = 5.11, p < .001. Examination of simple effects revealed that children were less likely to produce deictic than iconic gestures for phrases with description than phrases without description (OR 0.15, 95% CI [.06, .20], p < .001), and less likely to produce iconic–deictic than iconic gestures for phrases with description than phrases without description (OR 0.15, 95% CI [.05, .49], p = .002) than are adults asked to describe the route. There was no effect of type of phrase on the production of deictic compared with iconic–deictic gestures (p > .05; see Fig. 3).

Adults asked to describe the route, on the other hand, were more likely to produce deictic than iconic–deictic gestures for phrases without description than phrases with description (OR 3.26, 95% CI [1.72, 6.19], p < .001), and more likely to produce deictic than iconic gestures for phrases without description than phrases with description (OR 5.40, 95% CI [2.79, 10.48], p < .001) than are children. There was no effect of type of phrase on the production of iconic–deictic compared with iconic gestures (p > .05). This pattern of results was replicated when comparing the relationship between gesture types and types of phrase between children and adults asked to convey detailed directions.

Adults asked to describe the route were more likely to produce deictic than iconic–deictic gestures for phrases without description than phrases with description (OR 3.25, 95% CI [1.71, 6.17], p < .001), and more likely to produce deictic than iconic gestures for phrases without description than phrases with description (OR 5.45, 95% CI [2.81, 10.55], p < .001) than were adults asked to convey detailed directions. There was no effect of type of phrase on the production of iconic–deictic compared with iconic gestures (p > .05).
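
To make the odds ratios above easier to interpret, the following sketch shows how an odds ratio and its 95% confidence interval relate to a logistic regression coefficient: OR = exp(b), with limits exp(b ± 1.96 × SE). It assumes the reported intervals are Wald-type intervals, which the text does not state, and the function names are hypothetical. The worked values use the reported OR of 3.26 with 95% CI [1.72, 6.19].

```python
import math


def log_odds_from_or(or_value, ci_low, ci_high, z=1.96):
    """Recover the coefficient b and its standard error from an OR and CI.

    Assumes a Wald interval, i.e. one that is symmetric on the log scale.
    """
    b = math.log(or_value)
    se = (math.log(ci_high) - math.log(ci_low)) / (2 * z)
    return b, se


def or_with_ci(b, se, z=1.96):
    """Convert a coefficient and standard error back to an OR with its CI."""
    return math.exp(b), math.exp(b - z * se), math.exp(b + z * se)


# Reported values for deictic versus iconic-deictic gestures (adults, describe).
b, se = log_odds_from_or(3.26, 1.72, 6.19)
odds_ratio, low, high = or_with_ci(b, se)
```

An odds ratio above 1 with an interval that excludes 1 indicates that the first gesture type was reliably more likely than the reference type in the stated comparison; the round trip above reproduces the reported limits to within rounding.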

Discussion

Externalized thought processes, such as hand gestures, provide insight into environmental thinking, particularly as language and cognition develop. Here, we captured some of the complex role that gesture plays in relaying route direction information by preschool children and adults. While not all phrases were accompanied by gesture, most participants produced a range of gestures when conveying route directions from memory. Three kinds of gestures dominated, however: iconic, deictic, and iconic–deictic gestures. In relation to the number of words produced during recall, adults produced more gestures than children, with the biggest difference found in the production of deictic and iconic–deictic gestures.

Differences in Gesture Production at Recall

When describing route directions, children produced more iconic gestures than adults but adults produced more deictic and iconic–deictic gestures than children. One possibility is that children’s greater production of iconic gestures reflects developmental differences in the conceptualization of space. That is, it may be that children’s cognitive limitations in both theory of mind/spatial perspective taking, as well as in language (e.g., the meaning of the words left and right), result in a more concrete conceptualization of space that is reflected in the greater production of enacting gestures. Adults, with a wider vocabulary and greater capacity for spatial perspective taking, generate a more abstract mental representation of space, reflected in the greater production of pointing gestures. Developmental differences in the production of gestures, therefore, imply that processing environmental information and thinking about space changes as language and cognitive capacity increase with age.

Given that task purpose influences the processing and subsequent recall of environmental information, it is important to examine whether developmental differences in gesture and speech production hold when adults are given task instructions in language more appropriate to adults (Taylor et al. 1997). Adults asked to convey route directions in detail continued to produce more deictic gestures than children; however, adults asked to convey the route in detail and children produced similar amounts of iconic and iconic–deictic gestures. In other words, the developmental difference in deictic gesture production holds both when adults are given the same task instructions as children and when they are given task instructions in language more appropriate to adults. The developmental difference in gesture production is further supported by the finding that adults asked to convey the route in detail produced similar amounts of each type of gesture as adults asked to describe the route. For adults, changing task instructions from describing the route to conveying it so that someone can follow the route based on their directions does not change how they use gesture when communicating about space. Taken together, these findings provide further evidence of developmental differences in gesture production during the communication of spatial information.

The Informational Relationship Between Speech and Gesture

Given that gestures derive semantic meaning from the words and phrases they accompany, it is important to view the differences in adult and child gesture production within the context of the phrases they accompany (Blades and Medlicott 1992; Capirci and Volterra 2008; Tversky 2011). Children’s gestures depicted information beyond speech content to a greater extent than did adults’ for phrases with and without description. For example, a child accompanied the phrase “I went over there” with a gesture pointing to the right, while an adult produced the same gesture accompanying the phrase “we turned right”. This suggests that children may compensate for limitations in their verbal capacity by using their hands to extend the meaning of their verbal output.

While children’s gestures depicted a similar amount of information beyond speech for both phrases with and without description, when phrases did not include a description, children’s gestures depicted information beyond speech content to a greater extent than did those of adults asked to convey detailed directions. It is possible that compared with children, adults’ language capacity to convey detailed route directions reduces the need for gestures to accompany speech when description is not included in the phrase content. Furthermore, adults asked to convey detailed directions produced gestures which depicted information beyond speech content to a similar extent as adults asked to describe the route regardless of the type of phrase. In other words, the request for detailed route directions elicits gestures which extend the information conveyed in speech content to a similar extent as instructions to describe a path through the environment. These findings are important because they reflect qualitative differences in gesture production as a function of development and qualitative similarities as a function of task purpose.

The Relationship Between Types of Gesture and Phrase Content

Closer examination of the types of gestures accompanying phrases with and without description revealed that children conveyed direction information (deictic) with their hands when phrases did not include descriptive content and depicted concrete images of referents (iconic) when phrases did include a description. In contrast, adults used their hands to convey direction information for phrases both with and without description regardless of the spatial task instructions. These results suggest that while adults use their hands to convey direction information, children use their hands to enrich their verbal description of space and to convey direction information when not describing space. In this way, children use gestures to convey environmental characteristics of movements and locations required for successful route completion, which may be beyond the limitations of their vocabulary. The similarity in adults’ production of different types of gestures accompanying phrases with and without description for the different task purposes suggests that the purpose of the task does not change the way we communicate about space.

In Context

To this point, the frequency, type, and timing of gestures accompanying route directions have remained the focus of studies examining adult and early childhood spatial communication (Allen 2003; Sekine 2009). Allen (2003) reported that adults primarily produced deictic and iconic gestures when conveying route directions from memory, with only one other study reporting that the visual characteristics of gestures correspond to the spatial features of spoken referents (Cassell et al. 2007). Looking at preschool-aged children, 4-year-olds, not yet able to give coherent and accurate route directions, produced proportionally more gestures than 6-year-olds (Sekine 2009). Together with the current study, these findings suggest that gesture provides a valuable resource for information about a child’s mental representations of environmental space, particularly when children do not yet have the verbal resources to provide descriptions as detailed as those of adults.

Task instructions and purpose also influence the way we think and communicate information (Emmorey et al. 2000; Galati and Brennan 2013; Gelman et al. 2013). To date, the impact of task instructions on spatial communication, in particular gesture production, has been somewhat overlooked. There is some evidence that speakers adapt their gestures as a function of the perceived knowledge state of their listener (Galati and Brennan 2013; Kang et al. 2015). For example, when explaining a topic to a novice, speakers first establish a common knowledge base, relying on a paper diagram as well as producing gestural diagrams to facilitate communication (Kang et al. 2015). When explaining a topic to an expert, however, speakers begin with and rely on the paper diagram to facilitate communication (Kang et al. 2015). Furthermore, gestures can reflect the spatial perspective taken by the speaker, with adults producing gestures in 3D space when communicating about space (route perspective) and gestures along a 2D plane when describing space from a single viewpoint (survey perspective; Emmorey et al. 2000). Similarly, other domains demonstrate that speech content changes as a function of the task purpose, with adults and children aged 5 and 6 years using more general language terms in teaching contexts and when in a teacher role than in narrative contexts (Gelman et al. 2013). The influence of test conditions on speech and gesture has been tested with other content, but not for gesture production in relation to speech content, and not for route information. In the current study, the similarity in the ways adults produced gestures and their connection to speech content despite differences in spatial task purpose suggests that the verbal and nonverbal way we communicate about space is qualitatively and quantitatively stable, regardless of how it is elicited.

The Function of Gesture Production When Communicating Spatial Information

At all ages, thinking and communicating takes place within the context of the environment and as such, the production of gesture and its function for speakers needs to be understood in that context (Wilson 2002). In any environment, our ability to process, store, and convey information ultimately depends on our cognitive parameters (Just and Carpenter 1992). Embodied cognition theory suggests that we use the environment, through the production of gestures, to hold and/or manipulate information in order to overcome the limitations in our ability to simultaneously process information (e.g., attention and working memory; Wesp et al. 2001; Wilson 2002). In this way, externalizing or embodying mental representations of our thoughts through the production of gestures during thinking and communication can extend the limitations of our cognitive parameters and thereby facilitate the thinking process (Wilson 2002).

Despite existing only momentarily in the external environment, gestures ground thought processes and communication in the environment and, in so doing, allow speakers to offload cognitive workload (Tversky 2011; Wilson 2002). For adults, producing deictic gestures reduces the overall load on cognitive resources by conveying route elements through pointing, thereby reducing the workload of the speech production system and making more resources available for concurrent cognitive processes (e.g., memory; Goldin-Meadow et al. 2001; Just and Carpenter 1992; Wilson 2002). In other words, producing deictic gestures accompanying spatial route information reduces the resources needed by the speech production process and makes more resources available for creating and maintaining spatial mental representations, thereby facilitating route direction communication (Cameron and Xu 2011). At the same time, by reflecting speakers’ mental representations, gestures also provide speakers with feedback on their thought processes (Goldin-Meadow and Alibali 2013; Madan and Singhal 2012; Wilson 2002). In this way, gestures clarify and emphasize aspects of spatial messages during the communication process, helping to maintain mental representations of environments and routes, such as position and movement. As extensive cognitive changes occur during development, the function of gesture may also change.

During early childhood, gestures perform a functional role in the construction of phrases and the expression of meaning as vocabulary develops (Capirci and Volterra 2008; Iverson and Goldin-Meadow 2005). For example, producing the iconic gesture: both hands with fingers pointing down pendulum from left to right, accompanying the descriptive phrase “there is a big zebra crossing”, tells the listener how the child conceptualizes the crossing as a location as well as the movement associated with it. In this way, the gestures preschoolers produce provide those around them with insight into the content and extent of their spatial knowledge. On the other hand, adults communicating the same phrase might accompany it with a simple pointing gesture indicating to the listener the location of the crossing in relation to the self. The degree of overlap in the content of gestures and the associated speech phrase suggests that gestures primarily help adults translate ideas into speech and, to a lesser extent, reduce the need to translate ideas into speech at all. Conversely, the degree of additional information present in gesture beyond that contained in speech phrases suggests that while children use gestures to transform ideas into speech, gestures are primarily used to reduce the need to translate ideas into speech at all. In the current study, the differences in the ways preschoolers and adults produce gestures and their connection to speech content suggest that the verbal and nonverbal way we communicate about space changes as language and cognition develop.

As well as playing a functional role in the construction of phrases and, therefore, the communication of meaning, gestures may also facilitate the management and allocation of limited mental resources during development (Capirci and Volterra 2008; Iverson and Goldin-Meadow 2005). For example, producing a gesture enacting climbing a tree to indicate a location disambiguates it from another location along the route which might be accompanied by a gesture enacting height. In this way, producing iconic gestures provides preschoolers with a means to communicate spatial knowledge beyond the limitations of their current vocabulary and cognitive capacity (Goldin-Meadow and Alibali 2013; Hostetter and Alibali 2007; Madan and Singhal 2012; Wilson 2002). Importantly, this suggests that the embodiment of thought processes through gesture production may have multiple functions for speakers throughout the lifespan which are not mutually exclusive.

Through form, position, and movement, gestures function to reference and represent objects in speech, convey space and movement, and facilitate cognition, either separately or concurrently through a single gesture (Kok et al. 2016). That is, gesture provides the cognitive system with a stable external physical and visual presence that can provide speakers with a means through which to think and communicate (Pouw et al. 2014). Gestures re-represent action in the form of abstracted action, and as such, offloading cognitive work into the environment through abstracted actions is particularly relevant for tasks involving actions (i.e., spatial tasks; Wilson 2002). As with other tasks, gesture may serve two functions for speakers performing spatial tasks: helping speakers translate spatial knowledge/information into speech and/or directly communicating spatial location/movement information, thereby eliminating the need to translate this information into speech (Hostetter and Alibali 2007; Wilson 2002).

Limitations and Future Research

It should be noted that the accuracy of verbal recall, as well as the relationship between the form of the gesture and the spatial feature of the entity to which it refers, could not be assessed. Existing research suggests that the gestures adults produce relate meaningfully to the visual characteristics of spatial features of the entity to which they refer (Cassell et al. 2007). In this study, children and adults were asked to recall the short walk taken at the beginning of the session, as well as a walk they take regularly. While this method allows us to examine developmental differences in gesture production, gesture redundancy, and the relationship between types of gesture and phrase content, it does not allow us to examine the role gestures play in accurate route communication during development. It is possible that the developmental differences in gesture production found in the current study also extend to developmental differences in the relationship between gesture and accurate route communication, as well as the correspondence between the morphological features of gestures and the visual aspects of referents. Future research could determine whether children’s speech and gesture when reporting route direction information are accurate, and whether the morphological features of children’s gestures correspond to the visual aspects of referents. Continued research into verbal and nonverbal spatial communication, such as an examination of description accuracy and gesture morphological correspondence, will prove integral to increasing our understanding of gesture as a way to extend the mind through embodying thought processes.

Language not only enables us to directly communicate what we think about space, but it also shapes how we think about it (Tversky 2011). Throughout development, our vocabulary for describing space expands as we interact with the world and with others, from single word-gesture utterances as infants, until as adults, our vocabulary allows us to effectively communicate spatial knowledge through words alone (Bates 1976; Blades and Medlicott 1992; Capirci and Volterra 2008; Daniel et al. 2009; Iverson and Goldin-Meadow 2005; Tversky 2011). The findings from the current study suggest that preschoolers’ spatial knowledge is deeper than that which their limited vocabulary allows them to verbally express. By using gestures to represent visual characteristics and directions, preschoolers can convey the physical qualities of the route and the movements required beyond the limitations of their developing vocabulary. By examining differences between adults’ and preschoolers’ use of gesture in relation with speech we are able to capture some developmental changes in spatial cognition and language, beyond that which speech alone allows. These differences highlight the need to include measures of gesture when examining cognition and language during development.

Notes

There are no effect sizes for multinomial logistic multilevel model main effects and interactions at this time; however, odds ratios for simple effects are reported.

References

Adams, A., Bourke, L., & Willis, C. (1999). Working memory and spoken language comprehension in young children. International Journal of Psychology, 34, 364–373. https://doi.org/10.1080/002075999399701.

Alibali, M., Flevares, L., & Goldin-Meadow, S. (1997). Assessing knowledge conveyed in gesture: Do teachers have the upper hand? Journal of Educational Psychology, 89, 183–193. https://doi.org/10.1037/0022-0663.89.1.183.

Alibali, M., Heath, D., & Myers, H. (2001). Effects of visibility between speaker and listener on gesture production: Some gestures are meant to be seen. Journal of Memory and Language, 44, 169–188. https://doi.org/10.1006/jmla.2000.2752.

Alibali, M., Kita, S., & Young, A. (2000). Gesture and the process of speech production: We think, therefore we gesture. Language and Cognitive Processes, 15, 593–613. https://doi.org/10.1080/016909600750040571.

Allen, G. (2000). Principles and practices for communicating route knowledge. Applied Cognitive Psychology, 14, 333–359. https://doi.org/10.1002/1099-0720(200007/08)14:4<333:AID-ACP655>3.0.CO;2-C.

Allen, G. (2003). Gestures accompanying verbal route directions: Do they point to a new avenue for examining spatial representations? Spatial Cognition & Computation: An Interdisciplinary Journal, 3, 259–268. https://doi.org/10.1207/s15427633scc0304_1.

Astington, J., & Jenkins, J. (1999). A longitudinal study of the relation between language and theory-of-mind development. Developmental Psychology, 35, 1311–1320. https://doi.org/10.1037/0012-1649.35.5.1311.

Bates, E. (1976). Language and context: The acquisition of pragmatics. New York: Academic Press.

Bates, E., Benigni, L., Bretherton, I., Camaioni, L., & Volterra, V. (1979). The emergence of symbols: Cognition and communication in infancy. New York: Academic Press.

Beattie, G., & Shovelton, H. (1999). Do iconic hand gestures really contribute anything to the semantic information conveyed by speech? An experimental investigation. Semiotica, 123, 1–30. https://doi.org/10.1515/semi.1999.123.1-2.

Behne, T., Carpenter, M., & Tomasello, M. (2014). Young children create iconic gestures to inform others. Developmental Psychology, 50, 2049–2060. https://doi.org/10.1037/a0037224.

Blades, M., & Medlicott, L. (1992). Developmental differences in the ability to give route directions from a map. Journal of Environmental Psychology, 12, 175–185. https://doi.org/10.1016/S0272-4944(05)80069-6.

Bretherton, I., McNew, S., & Beeghly-Smith, M. (1981). Early person knowledge as expressed in gestural and verbal communication: When do infants acquire a “theory of mind”. In M. Lamb & L. Sherrod (Eds.), Infant social cognition: Empirical and theoretical considerations (pp. 333–373). East Sussex: Psychology Press.

Cameron, H., & Xu, X. (2011). Representational gesture, pointing gesture, and memory recall of preschool children. Journal of Nonverbal Behavior, 35, 155–171. https://doi.org/10.1007/s10919-010-0101-2.

Capirci, O., & Volterra, V. (2008). Gesture and speech: The emergence and development of a strong and changing partnership. Gesture, 8, 22–44. https://doi.org/10.1075/gest.8.1.04cap.

Cassell, J., Kopp, S., Tepper, P., Ferriman, K., & Striegnitz, K. (2007). Trading spaces: How humans and humanoids use speech and gesture to give directions. In T. Nishida (Ed.), Conversational informatics: An engineering approach (pp. 133–160). New York: Wiley. https://doi.org/10.1002/9780470512470.ch8.

Cassell, J., McNeill, D., & McCullough, K. (1999). Speech-gesture mismatches: Evidence for one underlying representation of linguistic and nonlinguistic information. Pragmatics & Cognition, 7, 1–34. https://doi.org/10.1075/pc.7.1.03cas.

Church, R., & Goldin-Meadow, S. (1986). The mismatch between gesture and speech as an index of transitional knowledge. Cognition, 23, 43–71. https://doi.org/10.1016/0010-0277(86)90053-3.

Daniel, M.-P., Przytula, E., & Denis, M. (2009). Spoken versus written route directions. Cognitive Processing, 10, 201–203. https://doi.org/10.1007/s10339-009-0297-4.

Emmorey, K., Tversky, B., & Taylor, H. (2000). Using space to describe space: Perspective in speech, sign, and gesture. Spatial Cognition and Computation, 2, 157–180. https://doi.org/10.1023/A:1013118114571.

Flavell, J., Everett, B., Croft, K., & Flavell, E. (1981). Young children’s knowledge about visual perception: Further evidence for the level 1–level 2 distinction. Developmental Psychology, 17, 99–103. https://doi.org/10.1037/0012-1649.17.1.99.

Galati, A., & Brennan, S. (2013). Speakers adapt gestures to addressees’ knowledge: Implications for models of co-speech gesture. Language, Cognition and Neuroscience, 29, 435–451. https://doi.org/10.1080/01690965.2013.796397.

Gelman, S., Ware, E., Manczak, E., & Graham, S. (2013). Children’s sensitivity to the knowledge expressed in pedagogical and nonpedagogical contexts. Developmental Psychology, 49, 491–504. https://doi.org/10.1037/a0027901.

Gentner, D. (1978). On relational meaning: The acquisition of verb meaning. Child Development, 49, 988–998. https://doi.org/10.2307/1128738.

Goldin-Meadow, S., & Alibali, M. (2013). Gesture’s role in speaking, learning, and creating language. Annual Review of Psychology, 64, 257–283. https://doi.org/10.1146/annurev-psych-113011-143802.

Goldin-Meadow, S., Nusbaum, H., Kelly, S., & Wagner, S. (2001). Explaining math: Gesturing lightens the load. Psychological Science, 12, 516–522. https://doi.org/10.1111/1467-9280.00395.

Gregory, R. (2004). Psychological testing: History, principles, and applications. Boston: Allyn & Bacon.

Hostetter, A., & Alibali, M. (2007). Raise your hand if you’re spatial: Relations between verbal and spatial skills and gesture production. Gesture, 7, 73–95. https://doi.org/10.1075/gest.7.1.05hos.

Hostetter, A., & Skirving, C. (2011). The effect of visual vs. verbal stimuli on gesture production. Journal of Nonverbal Behavior, 35, 205–223. https://doi.org/10.1007/s10919-011-0109-2.

Iverson, J., & Goldin-Meadow, S. (2005). Gesture paves the way for language development. Psychological Science, 16, 367–371. https://doi.org/10.1111/j.0956-7976.2005.01542.x.

Just, M., & Carpenter, P. (1992). A capacity theory of comprehension: Individual differences in working memory. Psychological Review, 99, 122–149. https://doi.org/10.1037/0033-295X.99.1.122.

Kang, S., Tversky, B., & Black, J. (2015). Coordinating gesture, word, and diagram: Explanations for experts and novices. Spatial Cognition & Computation, 15, 1–26. https://doi.org/10.1080/13875868.2014.958837.

Kidd, E., & Holler, J. (2009). Children’s use of gesture to resolve lexical ambiguity. Developmental Science, 12, 903–913. https://doi.org/10.1111/j.1467-7687.2009.00830.x.

Kok, K., Bergmann, K., Cienki, A., & Kopp, S. (2016). Mapping out the multifunctionality of speakers’ gestures. Gesture, 15, 37–59. https://doi.org/10.1075/gest.15.1.02kok.

Madan, C., & Singhal, A. (2012). Using actions to enhance memory: Effects of enactment, gestures, and exercise on human memory. Frontiers in Psychology, 3, 507. https://doi.org/10.3389/fpsyg.2012.00507.

McGraw, K., & Wong, S. (1996). Forming inferences about some intraclass correlation coefficients. Psychological Methods, 1, 30–46. https://doi.org/10.1037/1082-989X.1.1.30.

McNeill, D. (1992). Hand and mind: What gestures reveal about thought. Chicago: University of Chicago Press.

McNeill, D., Cassell, J., & McCullough, K.-E. (1994). Communicative effects of speech-mismatched gestures. Research on Language and Social Interaction, 27, 223–237. https://doi.org/10.1207/s15327973rlsi2703_4.

Morsella, E., & Krauss, R. (2004). The role of gestures in spatial working memory and speech. The American Journal of Psychology, 117, 411–424. https://doi.org/10.2307/4149008.

Nicoladis, E., Cornell, E., & Gates, M. (2008). Developing spatial localization abilities and children’s interpretation of where. Journal of Child Language, 35, 269–289. https://doi.org/10.1017/S0305000907008392.

O’Reilly, A. (1995). Using representations: Comprehension and production of actions with imagined objects. Child Development, 66, 999–1010. https://doi.org/10.1111/j.1467-8624.1995.tb00918.x.

Özçalışkan, Ş., & Goldin-Meadow, S. (2005). Gesture is at the cutting edge of early language development. Cognition, 96, 101–113. https://doi.org/10.1016/j.cognition.2005.01.001.

Özçalışkan, Ş., & Goldin-Meadow, S. (2010). Sex differences in language first appear in gesture. Developmental Science, 13, 752–760. https://doi.org/10.1111/j.1467-7687.2009.00933.x.

Peugh, J. (2010). A practical guide to multilevel modeling. Journal of School Psychology, 48, 85–112. https://doi.org/10.1016/j.jsp.2009.09.002.

Pouw, W., de Nooijer, J., van Gog, T., Zwaan, R., & Paas, F. (2014). Toward a more embedded/extended perspective on the cognitive function of gestures. Frontiers in Psychology, 5, 359. https://doi.org/10.3389/fpsyg.2014.00359.

Rauscher, F., Krauss, R., & Chen, Y. (1996). Gesture, speech, and lexical access: The role of lexical movements in speech production. Psychological Science, 7, 226–231. https://doi.org/10.1111/j.1467-9280.1996.tb00364.x.

Sauter, M., Uttal, D., Alman, A., Goldin-Meadow, S., & Levine, S. (2012). Learning what children know about space from looking at their hands: The added value of gesture in spatial communication. Journal of Experimental Child Psychology, 111, 587–606. https://doi.org/10.1016/j.jecp.2011.11.009.

Schober, M. (1993). Spatial perspective-taking in conversation. Cognition, 47, 1–24. https://doi.org/10.1016/0010-0277(93)90060-9.

Segal, A., Tversky, B., & Black, J. (2014). Conceptually congruent actions can promote thought. Journal of Applied Research in Memory and Cognition, 3, 124–130. https://doi.org/10.1016/j.jarmac.2014.06.004.

Sekine, K. (2009). Changes in frame of reference use across the preschool years: A longitudinal study of the gestures and speech produced during route descriptions. Language and Cognitive Processes, 24, 218–238. https://doi.org/10.1080/01690960801941327.

Selman, R. (1971). Taking another’s perspective: Role-taking development in early childhood. Child Development, 42, 1721–1734. https://doi.org/10.2307/1127580.

Shrout, P., & Fleiss, J. (1979). Intraclass correlations: Uses in assessing rater reliability. Psychological Bulletin, 86, 420–428. https://doi.org/10.1037/0033-2909.86.2.420.

Taylor, H., Naylor, S., & Chechile, N. (1997). Goal-specific influences on the representation of spatial perspective. Memory & Cognition, 27, 309–319. https://doi.org/10.3758/BF03211414.

Taylor, H., & Tversky, B. (1996). Perspective in spatial descriptions. Journal of Memory and Language, 35, 371–391. https://doi.org/10.1006/jmla.1996.0021.

Thompson, L., Driscoll, D., & Markson, L. (1998). Memory for visual-spoken language in children and adults. Journal of Nonverbal Behavior, 22, 167–187. https://doi.org/10.1023/A:1022914521401.

Tomasello, M., Akhtar, N., Dodson, K., & Rekau, L. (1997). Differential productivity in young children’s use of nouns and verbs. Journal of Child Language, 24, 373–387. http://journals.cambridge.org/article_S0305000997003085.

Tversky, B. (2011). Visualizing thought. Topics in Cognitive Science, 3, 499–535. https://doi.org/10.1111/j.1756-8765.2010.01113.x.

Ward, S., Newcombe, N., & Overton, W. (1986). Turn left at the church, or three miles north: A study of direction giving and sex differences. Environment and Behavior, 18, 192–213. https://doi.org/10.1177/0013916586182003.

Wellman, H., Cross, D., & Watson, J. (2001). Meta-analysis of theory-of-mind development: The truth about false belief. Child Development, 72, 655–684. https://doi.org/10.1111/1467-8624.00304.

Wesp, R., Hesse, J., Keutmann, D., & Wheaton, K. (2001). Gestures maintain spatial imagery. The American Journal of Psychology, 114, 591–600. https://doi.org/10.2307/1423612.

Wilson, M. (2002). Six views of embodied cognition. Psychonomic Bulletin & Review, 9, 625–636. https://doi.org/10.3758/BF03196322.

Yu, C., Ballard, D., & Aslin, R. (2005). The role of embodied intention in early lexical acquisition. Cognitive Science, 29, 961–1005. https://doi.org/10.1207/s15516709cog0000_40.

Ethics declarations

Ethical Standard

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

About this article

Cite this article

Austin, E.E., Sweller, N. Gesturing Along the Way: Adults’ and Preschoolers’ Communication of Route Direction Information. J Nonverbal Behav 42, 199–220 (2018). https://doi.org/10.1007/s10919-017-0271-2

DOI: https://doi.org/10.1007/s10919-017-0271-2