Abstract

This study aims to systematically review prior research on the evaluation and benchmarking of automated acute leukaemia classification tasks. The review depends on three reliable search engines: ScienceDirect, Web of Science and IEEE Xplore. A research taxonomy developed for the review considers a wide perspective for automated detection and classification of acute leukaemia research and reflects the usage trends in the evaluation criteria in this field. The developed taxonomy consists of three main research directions in this domain. The taxonomy involves two phases. The first phase includes all three research directions. The second one demonstrates all the criteria used for evaluating acute leukaemia classification. The final set of studies includes 83 investigations, most of which focused on enhancing the accuracy and performance of detection and classification through proposed methods or systems. Few efforts were made to undertake the evaluation issues. According to the final set of articles, three groups of articles represented the main research directions in this domain: 56 articles highlighted the proposed methods, 22 articles involved proposals for system development and 5 papers centred on evaluation and comparison. The other taxonomy side included 16 main and sub-evaluation and benchmarking criteria. This review highlights three serious issues in the evaluation and benchmarking of multiclass classification of acute leukaemia, namely, conflicting criteria, evaluation criteria and criteria importance. It also determines the weakness of benchmarking tools. To solve these issues, multicriteria decision-making (MCDM) analysis techniques were proposed as effective recommended solutions in the methodological aspect. This methodological aspect involves a proposed decision support system based on MCDM for evaluation and benchmarking to select suitable multiclass classification models for acute leukaemia. The said support system is examined and has three sequential phases. Phase One presents the identification procedure and process for establishing a decision matrix based on a crossover of evaluation criteria and acute leukaemia multiclass classification models. Phase Two describes the decision matrix development for the selection of acute leukaemia classification models based on the integrated Best and worst method (BWM) and VIKOR. Phase Three entails the validation of the proposed system.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

The application of automation systems is very important in complex medical cases [1]. Automated detection and classification of acute leukaemia is necessary to provide patients with suitable treatment and mitigate its dangers. The rapid and accurate diagnosis of this type of cancer plays a core role in patient treatment and recovery [2,3,4,5]. Most automation systems for complex medical problems depend on machine learning techniques, where machine learning is one of the common scientific fields based on artificial intelligence concepts. Machine learning techniques can handle many issues related to acute leukaemia, such as diagnosis, detection and classification [6,7,8]. Hence, numerous studies [1, 9,10,11,12] confirmed the necessity of adopting automated systems and methods to deal with various issues related to acute leukaemia. They stated that these systems provide precision results with fast response. Accordingly, many investigations focused on proposing or enhancing the detection and classification methods for acute leukaemia. Others studies [11, 13,14,15,16,17,18] developed automated systems to manage issues related to acute leukaemia, and all these efforts attempted to provide optimal results regarding acute leukaemia classification and detection [16, 19]. Moreover, the automated classification of acute leukaemia became common in many hospitals and cancer specialist centres to overcome the limitations of manual analysis [16, 18, 19]. Despite all the benefits obtained from these systems, however, users began facing challenges in choosing an automated system that provides highly accurate results with the highest performance among many available alternatives [20]. The vast diversity among available classification systems for acute leukaemia makes it difficult for health organisations to decide on which system to use. Therefore, the administrations of health organisations encounter difficulty in evaluating and comparing automated classification systems for acute leukaemia to select the best system, especially as no single system is superior to the rest [15, 16, 20] and many suffer from a lack of accuracy and computational efficiency [21]. Conversely, the difficulty of evaluation and comparison arises due to the multiple criteria of evaluation and the conflict among them [22]. The evaluation and benchmarking of automated classification systems for serious medical cases such as acute leukaemia are crucial in the quest for ascertaining the optimal system [10]. Such a process is critical because the wrong classification system can cost health organisations loss of patient life, legal accountability and even financial costs if the system fails to live up to expectations. For instance, if the system incorrectly identifies non-cancer cells as cancerous, that outcome may have adverse effects on the patient’s mental state, and he/she may need further surgery and diagnosis to determine whether he/she is cancer-free. The most serious case is when the system incorrectly identifies cancer cells as non-cancerous. Such an error is more important in this case because the existence of the disease will go unnoticed, appropriate therapy will not be implemented and then loss of life may transpire. Both cases will have a negative impact on the reputation and performance of healthcare organisations [189, 190, 194, 195]. Thus, identifying the most efficient technique to help health organisations in making right decisions on classification system selection is necessary [197, 201, 202, 209, 211]. Evaluating and benchmarking processes are required for selecting the best automated classification system among many available alternatives, especially since these systems are not cheap and related to human medical concerns [21]. A comprehensive review of literature is essential to highlight the automated multiclass classification systems for acute leukaemia, the benefits and characteristic of these systems from a wide bibliography and the challenges in the selection of the best classification systems resulting from the difficulty of evaluating and benchmarking these systems. The challenges and open issues in the selection of acute leukaemia classification systems need further study and analysis. The methodological aspects of providing a decision support system for the evaluation and benchmarking must also be emphasised to ensure the continuous provision of a better multiclass classification model for acute leukaemia through choosing the optimal classification system and overcoming related challenges. Figure 1 presents the framework of the literature review for this study. The remainder of this study consists of three parts. Part 2 provides a review and an in-depth and comprehensive analysis of past studies. Part 3 presents a discussion of the methodological aspects for our proposed decision support system. Part 4 provides the study conclusion.

Comprehensive review

A literature review is detailed in the following sections. All the steps and procedures in the protocol of the systematic review for evaluating and benchmarking the classification of acute leukaemia are described.

Systematic review protocol

This section presents the protocol of systematic review used in this study. The method of systematic review, information sources, selection of studies, search process, inclusion and exclusion criteria, data collection and literature taxonomy are described below.

Methods

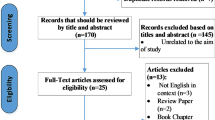

This section describes the procedures in the search, collection, filtering and reading of articles. Three reliable indexes, namely, IEEE Xplore, ScienceDirect (SD) and Web of Science (WoS) were adopted in searching for the articles. These three indexes cover a wide range of journals and conference articles related to our study field. They are also characterised by ease of use and the capability to build simple and complex search queries. The research query was used on IEEE Xplore, SD and WoS. The main keywords formed the search query (‘leukemia’ OR ‘leukemias’ OR ‘Leukaemia’) along with the main terms of evaluation (‘Evaluation’ OR ‘Assessment’ OR ‘Benchmark’ OR ‘measurement’) with computerised and AI terms to limit the scope of search to only the articles that adopted automation methods (‘AI’ OR ‘artificial intelligent’ OR ‘automated’ OR ‘Automation’ OR ‘Computerized’). The queries were run via the expert search form on these three databases. The search range covered only the articles and conference papers in the last 10 years. The query string is presented at the top of Fig. 2. Articles duplicated in the three selected databases were removed after the search process. Two iterations of intensive search were conducted in the selection of relevant articles. The first iteration focused on excluding irrelevant articles through scanning the titles and abstracts. The second one entailed intensive full-text reading for all the relevant articles. These two rounds were conducted using similar eligibility criteria. The third iteration emphasised screening the last set of studies to determine the evaluation criteria applied in the evaluation process of acute leukaemia classification tasks and excluded any study that did not utilise any of the identified evaluation criteria. The final set of articles was related to all studies which used any of the evaluation criteria for the binary and multiclass classification tasks of acute leukaemia. Note that review and survey studies that mentioned the evaluation criteria but did not actually use them were excluded in the third iteration. Important information used in writing this review which was extracted from the relevant articles during the full reading was saved into an Excel file. Only studies that fulfil the inclusion criteria listed in Fig. 2 were included. The exclusion conditions applied are as follows: non-English papers, non-English articles, studies which did not focus on acute leukaemia detection or classification, studies which focused only on segmentation of acute leukaemia images and the manual classification and analysis of acute leukaemia and articles that did not use any of the evaluation criteria. The list of relevant papers was organised in an Excel sheet file and EndNote library [198,199,200, 203, 204, 208]. Through full-text reading, the authors extracted numerous important highlights and information from the surveyed papers [183, 184, 188, 196]. The researchers were keen to extract important information and details that enabled them to reflect a detailed picture of all the features and aspects of acute leukaemia detection and classification with related evaluation and benchmarking processes. According to the purpose of this study, all relevant articles were classified into three categories and formulised into literature taxonomy. All the important highlights and information were placed on the body texts.

Results of taxonomy

This part describes all the results from the initial search until the final results were reached. The first query searches in the three databases yielded 1298 papers, the largest proportion (644 papers: 49%) were from IEEE Xplore, followed by SD (528 papers: 41%), and then WoS (126 papers: 10%). The search period covered publications in the last 10 years. In the first scan, 12 duplicate articles were excluded and 974 were excluded after reading their title and abstract, which indicated that they did not meet the inclusion criteria. A total of 312 articles remained after the exclusion of irrelevant and duplicated articles. Using the eligibility criteria, 312 irrelevant papers were excluded via full-text reading, resulting in the semi-final set of 89 papers gathered through the inclusion criteria. The final set of 83 papers involved studies that used at least one of the identified evaluation criteria. The final set was analysed and used to develop the research taxonomy, which considers a wide perspective for automated detection and classification of acute leukaemia research and reflects the trends of usage of the evaluation criteria in this field.

With the final set of articles, three groups were organised that represented the main research directions in this domain. The taxonomy was achieved in two phases, the first one (Fig. 3, left section) included three research directions: the first direction included 56 articles that focused on proposed methods, the second one covered 22 articles that presented proposals for system development and the third direction included 5 papers on evaluation and comparison. The second phase (Fig. 2, right section) demonstrated all the criteria used for evaluating acute leukaemia classification.

Proposed methods

The first category covers the research efforts on classification and feature selection/reduction. This category includes 56 articles that focused on enhancing the performance of classification or proposing new classification methods to deal with the binary or multiclass classification problem and improve feature selection/reduction. This category includes three groups of papers. The largest one has 33 articles that propose new classification methods or the enhancement of current approaches. The second one has 15 papers that deal with feature selection/reduction methods, and the last one has 8 papers that contribute to the classification tasks and the resolution of feature problems. The following section will describe these three groups of studies while emphasising the evaluation criteria used in each study.

Firstly, this study depends on average accuracy and time for measuring the performance of the flexible neural multiclass algorithm in [23]. In [24], accuracy and training were used as performance indicators for the general regression of the neural network. Moreover, the neural network depends on the association data extraction method in [25] and relies on accuracy.

Secondly, accuracy and training were used in [26] to evaluate the proposed twin-space SVM. Accuracy–time criteria were used to boost an evolutionary support vector machine [27]. This study [28] was comprehensive in using evaluation criteria, accuracy, precision, specificity, sensitivity, true positive (tp), true negative (tn), false positive (fp), false negative (fn), and time complexity as validation measures and proposed a map-reduced-based proximal SVM method.

Thirdly, FUZZY-ARTMAP neural networks used in [29] depended on two evaluation criteria, accuracy and validation, to classify the WBCs. These evaluation criteria involving accuracy, specificity, sensitivity and time were utilised to evaluate the classification tasks in [22]. Another work [30] employed accuracy and time as performance metrics of fuzzy nearest neighbours, while [31] used accuracy-specificity and sensitivity and time criteria in the evaluation of a decision tree algorithm-based fuzzy rule.

Fourthly, Bayesian and k-NN classifiers in [32, 33] were evaluated only through accuracy. Fifthly, the hybrid methods proposed in [8] were evaluated using the time complexity criterion. The accuracy criterion was the only performance criterion for evaluating the hybrid methods proposed in [34,35,36].

Lastly, the accuracy criterion was used to evaluate the proposed methods in some works [37], including the SMIG module, [38] 2,1-norm algorithm, [39, 40] geometric algorithm, [41] algorithm of rotation forest, [42] similarity-balanced discriminant neighbourhood embedding [43] and Naive-Bayes method. In [7, 44, 45], accuracy and e-validation were employed to evaluate their methods. As for other works, [46] applied the method regression model and [47] used the ant colony optimisation model, which were based on accuracy–time and validation in performance evaluation. Elastic net for simultaneous classification was measured by error rate in [48]. The study in [49] depended on sensitivity criterion in hierarchical tree method evaluation. Conversely, [2] used time and accuracy to measure the performance of the squares regression method. The last study in this group, [50], was more comprehensive in evaluation criteria terms, as it used accuracy, specificity, sensitivity, f-measure, tp, tn, fp, fn, time complexity, e-training and e-validation. An integrated method for classification was suggested in [51].

Numerous articles have proposed methods for feature selection/reduction. This section will focus on describing the classification performance evaluation criteria used in each study. The first three studies [3, 52, 53] used an accuracy criterion in the evaluation process. These three evaluation criteria (accuracy, specificity and sensitivity) are used in [54, 55]. Article [56] used five criteria: accuracy, tp, tn, fp and fn. Studies [57, 58] were based on accuracy, sensitivity and time complexity in the performance evaluation process. In [59], only two criteria were used, namely, accuracy and time, while [60] evaluated the performance of the proposed method according to accuracy, precision and specificity. Another work [61] used accuracy, precision, specificity, sensitivity and validation. The last four works used most of the available criteria. [62] depended on accuracy, tp, tn, fp, fn and time complexity in the evaluation section. [63] used accuracy, sensitivity specificity, tp, tp, tn and fn. [64] used accuracy, precision, specificity, sensitivity, tp, tn, fp, fn, training and validation. Finally, [65] conducted an evaluation based on accuracy, tp, tn, fp, fn, training, and validation.

The research efforts in the last group included studies on feature selection and classification. The evaluation criteria used in each study will be also described in this section. Accuracy criterion was used to evaluate the hybrid method proposed in [66]. Multiple fuzzy-rough sets were evaluated according to accuracy and sensitivity in [67]. Three evaluation criteria, namely, accuracy, training and validation, were used in [68]. Accuracy, time complexity, and training were used in [69]. These three studies [70] [71] [72] each used two criteria, namely, e-training and time complexity, accuracy and validation and accuracy and validation, respectively. A new approach that combines feature (gene) selection with transudative SVM was used in [73].

System development

This category includes 22 papers that focused on providing proposals for developing the classification systems of acute leukaemia. The studies in this section are described by concentrating on the evaluation criteria used in various classification systems based on supervised or unsupervised classification engines. Two types of efforts are included in this section: 1) the evaluation criteria used in systems based on a supervised classification engine and 2) the said criteria used in systems on unsupervised classification methods. The first five studies emphasised the detection or classification system according to three phases, namely, segmentation, feature extraction and classification. However, they used different criteria. Accuracy, precision, specificity and f-measure were used in [13]; accuracy and validation were used in [74]; accuracy and time were used in [9]; and accuracy and training were used in [75, 76]. The next four studies involved four phases: preprocessing, image segmentation, extraction and/or selection of the features and data classification. Accuracy, precision, specificity and sensitivity were used for the evaluation process in [11, 15, 16], while accuracy and precision, specificity, sensitivity, tp, tn, fp and fn were represented in the evaluation criteria in [77]. Accuracy, specificity, sensitivity and time complexity were used in [21]. Accuracy, precision, specificity, and f-measure were employed in [14]. Another two works [17, 18] focused on developing classification systems that encompass six phases and used accuracy as the performance measurement. [19], which also depended on accuracy, whereas [78] used time complexity in its evaluation section. [79] used accuracy and recall as measurements. Two key phases with 10 sub-phases based on game theory were used in [6], which then evaluated the resulting method using accuracy, time complexity and validation. In [80], accuracy was used to evaluate the proposed automated detection schema of lymphoblasts. Conversely, in [81], accuracy, sensitivity, training and validation were the main evaluation criteria for the gene selection and classification system. Another research effort proposed a system of classification based on the fuzzy rule concept [82] with pre-processing, fuzzy clustering and selection, rule extraction and classification and use validation in the evaluation process. A fuzzy expert system was evaluated according to accuracy in [83]. Finally, an intelligent multi-agent was used to assist in understanding the process of classification in [1].

Evaluation and comparative study

This research direction contains five articles that attempted to evaluate the classification methods or compare them according to selected evaluation criteria. The first study [84] depended on error rate criteria to compare two classification schemes, while in [10], accuracy and precision, specificity, sensitivity, f-measure, tp, tn, fp and fn were the main evaluation criteria. The remaining three [12, 20, 85] used accuracy as a main criterion.

Evaluation and benchmarking for acute leukaemia classification systems

This section describes the evaluation and benchmarking. It includes the criteria of evaluation and presents the various evaluation and benchmarking tools and their limitations. A summary of evaluation and benchmarking challenges and open issues is also reported.

Evaluation criteria

This section presents the different evaluation criteria for acute leukaemia classification tasks. These criteria were divided into two main groups: the reliability group and time complexity. Each of these groups has a subgroup.

Reliability group

The reliability group includes four subgroups of criteria (Fig. 4): the matrix of parameters, relationship of parameters [i.e. Ave Accuracy, Precision (Micro), Precision (Macro), Recall (Micro) and Recall (Macro)], the behaviour of parameters [i.e. F-score (Micro) and F-score (Macro)] and error rate. This section describes in detail the evaluation criteria in each group.

Matrix of parameters

The matrix of parameters is the main sub-category of the reliability group. This matrix is also known as the confusion matrix. It includes the key parameters of machine learning outputs. This matrix is also commonly used in the machine learning domain [36, 86]. It is widely utilised in describing the performance of classification models [20]. The values in a confusion matrix show the predicted and actual classification class achieved by the classification system [87]. The confusion matrix describes the incorrect and correct predictions in comparison to the real results of the test samples [28]. This approach allows for a more detailed analysis than the mere proportion of correct classifications.

A confusion matrix consists of two aspects. The first dimension represents the actual classification class of an object, and the other pertains to the classification class which the classification model predicts; moreover, each cell has the corresponding number of predictions achieved by the classification model that falls into that cell [60] [64]. The size of the confusion matrix is N × N, where N is the number of the various values of a label [11]. For a binary classification problem, the confusion matrix has two actual classes and two predicted classes, which mean the confusion matrix table has two rows and two columns [65]. For multiclass classification, the confusion matrix has more than two actual classes and predicted classes [36]. Figure 5 illustrates the binary classification task confusion matrix.

A confusion matrix for binary classification is formed from the four predicted outcomes (true positives, true negatives, false positives and false negatives), which are produced from the binary classification model [60]. The confusion matrix values are listed and described in Table 1.

Two of those parameters reflect the correct classification (true positive and true negative), while the false positive and false negative outcomes are two possible types of errors. Hence, this matrix allows for the identification of points which are correctly and incorrectly classified. It illustrates the performance of a classification model by displaying the actual and predicted points.

The confusion matrix is used in many of the reviewed studies to describe the performance of a binary classification model. [28] used a confusion matrix to summarise the testing results of the classification model which aimed to classify acute leukaemia into ALL and acute myelogenous leukaemia (AML). Among the test samples, 16 were in ALL and 8 were in AML. Figure 6 depicts the results of the resulting confusion matrix for binary classification. [60, 61, 64] also used confusion matrices to present the performance results of classifying acute leukaemia into AML and ALL. [11] used the confusion matrix for the results of classifying acute leukaemia into normal cells and blast cells.

The confusion matrix is also used in the performance evaluation of more general cases of multiclass classification. Multiclass evaluation is an extension of the methods used in binary evaluation, wherein multiple classes are involved instead of only two. The confusion matrix can generate multiclass cases [86]. For a multiclass classification problem, the confusion matrix is built with L classes, with L being more than two classes (Fig. 7).

In Fig. 7, the points in the grids with matching actual and predicted classes are the correct predictions. The grey grids are the grids for the correctly classified points (correct decisions made). In an ideal scenario, all other grids should have zero points or, in case of misclassifications, the values of other grids are the errors in the confusion between the various classes.

Fan et al. describes the detailed classification performance per class in the confusion matrix, and the sub-types of ALL (BCR-ABL, E2A-PBX1, MLL, T-ALL, TEL-AML1 and Others) were the classes that were used in the confusion matrix [36]. [65] used a multiclass classification technique to classify the data samples into five categories (ALL, AML, CLL, CML and Normal). Figure 8 depicts the results for multiclassification in the confusion matrix table.

In Fig. 8, the multiclass classification model successfully classified all samples correctly.

Finally, the confusion matrix describes all the results of multiclass classification tasks, and thus provides details about the correct and incorrect predictions. The parameters of the confusion matrix are the basis for evaluating the classification models, and through these parameters the rest of the evaluation metrics are calculated.

Relationship of parameters

This group of evaluation criteria includes five metrics typically used to measure the quality ratio of any multiclass classification model.

-

Accuracy

Classification accuracy is considered one of the most important metrics of evaluation. Accuracy expresses the performance or significance of the algorithms’ behaviour [30]. Classification accuracy is a commonly used metric for evaluating the quality of a classification system [59]. The value of classification accuracy changes depending on the selected datasets [30, 86].

Accuracy is measured for multiclass classification tasks in the macro level based on confusion matrix results as follows [86, 88]:

where Ci has many classes, for which tpi is the true positive, fpi is the false positive, fni is the false negative, and tni is the true negative.

Many studies have relied widely on accuracy in the performance evaluation of classification tasks, but they did not consider the differences between the types of classified classes; in real cases, especially in medicine, the distinctions between certain classified classes are very important [76].

-

Precision

Accuracy can be measured provided that a specific class has been predicted [60]. Precision (P) is defined as the sum of true positives (TP) over the sum of true positives plus the sum of false positives [16]. Precision is measured for multiclass classification tasks in the micro and macro levels according to the results of the confusion matrix [86, 88]. Table 2 describes the equations for each precision level that can be used.

Precision provides the ratio of subjects with positive outcomes that are correctly identified [60]. Thus, precision measures the classifier exactness. A low number of false positives means high precision, and vice versa [87].

-

Recall

Recall is also called sensitivity or rate of true positive, and it indicates the test’s capability to determine positive outcomes [16]. It is a measure of a classification model’s capacity to identify the instances of a specific class from a dataset [87]. Recall depicts the completeness of a classification model. A low number of false negatives means a high recall, whereas a high number of false negatives means low recall [87]. The higher the recall, the better the classification model becomes. It determines the ratio of accurately classified samples to total samples [50, 60, 89].

Recall is measured for multiclass classification tasks in the micro and macro levels according to the results of the confusion matrix [86, 88]. Table 3 describes the equations for each precision level that can be used.

Behaviour of parameters

This section describes two measures, namely, Fscore(micro) and Fscore(macro). Fscore refers to the harmonic mean of recall and precision. It gives the overall performance of a classification model [14, 16, 60]. We can examine the combined performance through this metric [50].

Fscore is measured for multiclass classification tasks in the micro and macro levels according to the results of the confusion matrix [86, 89]. Table 4 describes the equations for each precision level that can be used.

Error rate within the dataset group

This criterion measures errors made by the classification model. It is one of the main criteria in the evaluation and benchmarking of classification systems for acute leukaemia [1, 60, 66]. Roy et al. stated that determining the best classification model depends on the results of the error rate, and among some available classifiers, they chose the one that achieved the best outcome according to the error rate metric on the training and validation sets [72]. The lower the error rate, the better the classification model [64, 74]. [40] indicated that performing cancer prediction with a small error rate requires a comparatively big training sample set in the classification model learning. The importance of the error rate as a criterion in evaluation and benchmarking is supported by Shi et al., who used error rate in the comparison of their method with previous techniques [61]. The evaluation of the error rate of a classification model using the training dataset is considered an unreliable criterion. In fact, the classification model can overfit the training dataset with such a strategy. Thus, we need to use an independent dataset that differs from the one used in the training process (test data sample) to measure the error rate of the classification model. Hence, the data sample is divided into the number of parts using N-fold cross validation. The said validation is a random division of the sample of the dataset into N parts. Then, one of those parts is used for testing the classification model, and the others parts are used for the classification model learning [66].

Error rate is measured for multiclass classification tasks in the macro level according to the results of the confusion matrix using the following equation [86, 89]:

Table 5 presents the survey of the reliability group criteria used in various reviewed studies.

Table 5 provides a comprehensive review of the different evaluation metrics in evaluating and benchmarking the acute leukaemia detection and classification in all reviewed studies. The largest ratio of studies (90%) used accuracy. The other percentages are as follows: error rate (21%), recall (26%) and specificity (30%). The ratio of usage of other metrics with less than 20% are as follows: FP% (16%), FN% (13%), precision (15%), TP% (15%), TN% (3%) and fscore (1%). Variations occurred in the percentage of usage for these metrics among various reviewed studies and conflict was noted among the sub-metrics (Table 4). Additionally, no study used all this set of metrics together. The usage variance of these metrics indicates a serious challenge in using a specific set of metrics when evaluating and benchmarking the detection and classification of acute leukaemia classification. Such varying usage rates of evaluation metrics also suggest that no common guideline exists for evaluating various metrics and that each study applied the metrics that fulfils its objectives.

Time complexity

Time complexity is one of the challenges faced by researchers seeking to develop an acute leukaemia classification system. One of the main requirements for acute leukaemia classification is obtaining the least time complexity [55, 57, 72]. Time complexity is a significant metric in the evaluation and benchmarking of classification models [67]. [90] stated that one of the important requirements of acute leukaemia classification is rapid detection and classification, and the system which consumes less time, especially for medical images, can help save lives through the early detection of disease and prompt treatment. Time complexity is the time consumed by the input and output of sample images, which means the time required to complete the classification task of that algorithm [91]. One of the disadvantages of a classifier is the time-consuming processing [90, 91]. Time complexity is also vital in evaluating the performance efficiency of a system based on image processing; the best classifier is the one that achieves the highest accuracy and the least time complexity [77]. Processing time depends on the size of the dataset [70]. Table 6 illustrates the survey of time complexity criteria used in various reviewed studies.

Table 6 indicates that 24% of the reviewed studies applied this metric to measure the processing time. All remaining studies did not mention this metric. Processing time is one of the main requirements that should be measured in the classification systems of acute leukaemia. However, many of the reviewed studies focused on other metrics and overlooked time complexity.

Benchmarking tools

This section aims to review the various tools used in benchmarking different classification systems or methods. These tools compare the outputs of various classification systems according to specific criteria. The purpose of this comparison is to ascertain the quality enhancement of a new system against previous or other approaches. These tools are developed mainly to fulfil machine learning processes and activities, as well as data analysis [92], but they have also been used in many studies for benchmarking processes [8, 20, 25, 31, 93], However, benchmarking based on these tools indicate incomplete benchmarking processes.

Common benchmarking tools

Many tools can used in benchmarking. The widely used examples are described in the following sections.

Rapid miner

Rapid Miner is a software application that provides many tools and function libraries for machine learning applications. Developed by the Rapid Miner Company, it offers a comfortable and friendly environment based on a client/server model with two server options as service or as cloud. It can be run across different platforms and includes many machine learning methods and more than 100 schemes. Its methods support various analyses, such as clustering, classification and regression. In addition, it allows for flexibility regarding file formats, as it can accommodate about 22 formats. It was first developed in 2001 at the Technical University of Dortmund and was known then as YALE. Its name was later changed to Rapid Miner [93,94,95,96].

Weka

Weka is an integrated platform containing a group of methods, algorithms and visualisation possibilities for the modelling and analysis of data. It provides friendly GUI for ease of use. It supports many machine learning and data mining applications, such as association, clustering, classification and preprocessing. It was first developed in the Waikato University labs. It runs on Linux, Windows, and OS X systems. Weka was developed initially for the analysis of agricultural data. The new version based on java was first developed in 1997 and is applicable to different domains [3, 20, 31, 62, 94].

R tool

R software is a free tool that provides an integrated statistical and visualisation environment. The R Core Team developed it, and the first version was released in 1997. It supports work in multi platforms. S language was used to develop the R tool, which was deployed as open source software. Many extra packages were developed and provided as a free and publicly available resource. It supports various applications, such as statistical and data mining applications. It provides advanced and complex statistical tools and includes most formulae and mathematical symbols. This tool is considered easy to use and applicable for sampling [94,95,96].

Konstanz information miner

Konstanz Information Miner (KNIME) is an integration environment for data analysis and reporting. It was developed by KNIME AG to support various ML and DM algorithms and activities. The first version of KNIME was created in 2004 at the Konstanz University by software engineers. It works on Windows, Linux and OS X systems. It includes more than 100 methods for data cleaning and preprocessing, data mining, cleansing and data analysis and also provides different interactive visions for parallel coordinates, scatter plots and others [94, 95].

Orange

The Orange tool is one of the commonly used analytical software. It covers a wide range of DM algorithms which perform many activities, such as scoring of features and filtering. Python and C++ were the main languages used to develop this tool, so it is characterised by flexibility and robustness [95].

KEEL

KEEL is a data mining tool that includes many traditional methods and techniques for data processing and knowledge extraction. It can deal with different file formats (e.g. ARFF, CSV and XML) and provides possibilities for learning depending on intelligence and building simple, hybrid models and statistical modules. It also covers different feature processing, including selection and discretisation [96] .

Weaknesses of the reviewed benchmarking tools

Although the tools described in the previous section are widely used in the machine learning field [93], they suffer from many weaknesses in the process of evaluation and benchmarking.

Not all the tools described above were used to compare acute leukaemia classification systems. Moreover, the tools used for the evaluation and benchmarking process were utilised according to some, but not all, the evaluation criteria. Hence, such usage does not reflect all the necessary aspects of evaluation and benchmarking. The weaknesses of current tools in terms of evaluation and benchmarking include [97,98,99] failure to calculate the overall parameters of the reliability group of metrics, inability to compare among multiclass classification models simultaneously using more than one criteria, failure to match multiclass classification models and inability to rank multiclass classification models from best to worst.

Thus, a new method for evaluation and benchmarking that covers all important and required aspects is necessary. Including all the measurement criteria (reliability group and time complexity) during the evaluation and benchmarking will generate accurate results that reflect the quality of all aspects of the multiclass classification models of acute leukaemia. In turn, such accuracy would allow us to choose the appropriate classification model according to its evaluation and compare it with other models from multiple aspects and on the basis of different criteria.

Discussion

This section presents a detailed description and analysis of the challenge and open issues related to evaluation and benchmarking, in-depth analysis of the studies that attempted evaluation and benchmarking and an explanation of the automated multiclass classification of acute leukaemia. It also includes the recommended solution. The review of prior studies highlighted three serious open issues resulting in the difficulty of evaluation and benchmarking of the multiclass classification of acute leukaemia: conflicting criteria issue, criteria importance and evaluation of criteria issues. Multicriteria decision analysis is proposed as a solution. A decision support system based on MCDM is suggested for the evaluation and benchmarking of the automated multiclass classification of acute leukaemia. The recommended decision support system has three sequential phases. Phase One presents the identification procedure and the process for establishing a decision matrix based on a crossover of evaluation criteria and acute leukaemia multiclass classification models. Phase Two describes the decision matrix development for the selection of acute leukaemia classification models according to integrated BWM and VIKOR. Phase Three involves the validation of the proposed system.

Challenge and open issues related to evaluation and benchmarking

Recently, evaluation and benchmarking classification tasks associated with critical medical fields have gained growing interest to obtain high-performance classification processes. Such efforts confront problems and issues in several significant aspects. The issues and challenges are found in the evaluation and benchmarking of acute leukaemia multiclass classification systems. Benchmarking is carried out after the development of any system, with the aim of comparing the new system with other similar systems under the same conditions and metrics [9]. Evaluation and benchmarking involve verifying whether the newly developed multiclass classification systems satisfy the requirements. The main requirements for automated multiclass classification systems for acute leukaemia are high reliability versus decreased time complexity and high accuracy versus low error rate [90]. Achieving these requirements simultaneously poses a challenge [47]. Given the difficulty in overcoming this challenge, we find that most studies focused on one requirement and neglected the rest. This situation causes a conflict between criteria during the comparison process. Such conflict will be reflected in the evaluation and benchmarking. As a result, the benchmarking process is affected because benchmarking between multiple conflicting criteria is problematic [83]. In addition, the current comparison approach between the proposed systems and the previous systems in all the reviewed studies does not consider all evaluation and benchmarking criteria and instead concentrates on one aspect of the evaluation, overlooking the rest because it is not flexible enough to address the conflict between the various criteria. Therefore, multiclass classification tasks require better evaluation and benchmarking. The following subsections will explain the main issues that cause the challenge in evaluation and benchmarking. Figure 9 illustrates the main issues of evaluation and benchmarking in automated acute leukaemia classification.

Conflicting criteria issue

An important issue found in related literature is the conflict or tradeoff between different performance criteria. The tradeoff situation results in the loss of one or more aspects of the performance quality of acute leukaemia classification systems. Tradeoff requires the users to give up one requirement for another owing to the difficulty of achieving balance between all requirements. In our case, a conflict occurs among criteria that measure the basic requirements of any acute leukaemia classification system [21]. These criteria are related to identifying the strengths and weaknesses of each system, which leads to the inability to make a rational decision for evaluation and benchmarking the different alternatives and selecting the best one among them. The varying ratios among the different criteria collected in our study also showed the effect of the conflict on various criteria used by researchers. Consequently, the conflict amongst evaluation criteria for acute leukaemia classification systems constitutes a formidable challenge in our intention to create a skin cancer segmentation/classification approach. This challenge mainly arises from conflicting terms, particularly, the conflict amongst the criteria and amongst the data. The reviewed studies demonstrate conflicting criteria or tradeoff problems between reliability, time complexity of the acute leukaemia classification model and error rate within the dataset in the evaluation and benchmarking of classification systems. Reliability should be high, time complexity for conducting the output images should be low, error rate resulting from the training datasets should be low and accuracy should be high. In the development of multiclass classification systems for acute leukaemia, all development requirements must be taken into account [90]. Conflicting data are observed due to the section matrix of parameters on TP, FP, TN and FN, which show the rise in TP and TN when parameters FP and FN are reduced [13, 28, 50, 62]. By contrast, a comprehensive assessment and benchmarking methodology covering all evaluation criteria and capable of dealing with the conflict criteria should be used to ensure a successful system that achieves its objectives [22]. This status of conflicting data pointed to an obvious conflict between the probability parameters. Such parameters significantly affect the values of the rest of the metrics within the reliability group. Thus, such requirements must be considered during evaluation and benchmarking. Each reviewed study reported that evaluation and benchmarking of all criterions are independent of the general framework. Accordingly, the approach of acute leukaemia classification must be performed to standardise basic and advanced requirements, and a clear methodology must be implemented during research for testing, evaluation and benchmarking. A new and flexible evaluation and benchmarking method must be applied to address all conflicting criteria and data problems. However, to our knowledge, solutions in this aspect have not yet been suggested on these particular issues.

Issue of criterion importance

The evaluation of acute leukaemia classification systems involves a set of criteria, and the importance of each criterion varies according to the objectives for which the system is developed. In other words, the importance of one of the evaluation criteria may be increased in exchange for the low importance of another criterion according to the objectives of the system. Thus, a conflict will exist between evaluation and benchmarking criteria, due to the different importance of each criterion in different systems [47]. The conflict status among the criteria is one of the serious challenges for the evaluation process. Suitable action must be prescribed for a situation wherein the importance of a certain criterion is increased while that of others is decreased. Two key sides should be taken into account. First, the behaviour of the classification systems of acute leukaemia must be understood and achieved, thereby giving specific significance to the design. Second, the approach must be evaluated by considering the tradeoff.

The evaluator’s opinions may disagree with the designers’ aims as well, which can impact the final evaluation of the needed approach. Technically, evaluation and benchmarking of the classification systems of acute leukaemia entail taking into account multi criteria simultaneously, including rate of time complexity and reliability with their sub-criteria [10, 77, 87] and assigning the favourable weight for all aspects to benchmark the approaches of acute leukaemia classification. After comparing the scores of all approaches, those with the ‘highest balancing rate’ must receive the highest priority level, and those with the ‘least balancing rate’ must be given the lowest priority levels. Evaluation and benchmarking are difficult tasks and can be regarded as extremely challenging because all classification approaches of acute leukaemia show multiple attributes that must be taken into account. For example, error rate and rate of time complexity have been proven to be very significant in the classification of acute leukaemia because they offer an objective complement to the acute leukaemia classification decision and optimise inter-rater consistency. Consequently, for these attributes, different weights may be provided by each decision maker. On the one hand, developers who aim to give a score for an acute leukaemia classification approach might assign more weight to one feature rather than to other features that attract less interest. On the other hand, developers who aim to use benchmarking software to solve such problems will probably target various attributes as the most significant one, such as the accuracy [2, 23, 69]. Thus, evaluation and benchmarking for classification approaches of acute leukaemia suffer from highly complex attribute problems.

Issue for the evaluation criteria

Numerous critiques have been performed on the criteria of evaluation. A problematic figure exists on the variation of error rate values in dataset criticism resulting from the varying sizes of the datasets used in different acute leukaemia experiments [61, 68]. Thus, one important issue of these criteria must consider the error rate value with each experiment owing to the lack of a standard dataset; in addition, an unjustified consumption of effort and time exists caused by an unorganised collection of dataset, depending on individual studies [10]. The reason for criticising the reliability set of the criteria is that its result depends on the confusion matrix that contains four parameters, namely, TP, FP, TN and FN. The number of pixels may be lost during the cropping of the image background of acute leukaemia using an image editor when manually labelling the actual class; moreover, comparing the actual class with the predicted class to compute one of the matrices of parameters are needed [10, 18, 79, 84]. Thus, this status will affect the results from all reliability sets (behaviour, matrix and relationship) of parameters, which are considered debatable. Although the critiques for these criteria exist in many earlier studies, they are still extensively used for evaluating the various tasks of acute leukaemia detection and classification.

Critical review and analysis

The growing number of available classification models and systems of acute leukaemia is considered a major problem for health organisations and other cancer treatment centres. Health organisations specialising in cancer treatment have encountered a challenge on how to select the appropriate acute leukaemia classification system that would allow accurate and rapid detection and classification of acute leukaemia. Previous studies have clearly demonstrated that the acute leukaemia classification tasks vary in terms of the accuracy of the results they provide, apart from the overall performance disparities. In the same context [15, 16, 20], no single classification system is confirmed to be superior over the rest. According to our systematic survey on automated classification of acute leukaemia, a total of 83 studies addressed the different aspects of such a classification system. The explanation in ‘Section 2.2.1. Proposed Methods’ demonstrates that most of the reviewed studies attempted to propose developing a new classification method; alternately, other studies attempted to enhance the current methods. Most of those studies were focused on enhancing the accuracy of classification, decreasing the classification time or improving the overall performance of classification. In addition, the analysis in ‘Section 2.2.2. System development’ demonstrates that numerous studies have developed classification systems of acute leukaemia, and these systems differ in terms of classification techniques, phases and procedures of classification; different accuracies of the classification results are also provided by each system. The analyses in Sections “Evaluation criteria”. and 2.2.2. presented many of the methods; models or systems of classification of acute leukaemia have been proposed or developed in the literature. Obviously, all those works differ from one another in terms of classification techniques used and procedures followed, as well as the difference in the accuracy of the classification results from one to the other. Apart from the disparity of their overall performance, all results confirm the difficulty of making a decision to choose a better option among them.

However, no study has provided a comprehensive and integrated solution to assist in evaluating and benchmarking the multiclass classification models or systems to determine a suitable one. In ‘Section 2.2.3. Comparative and Evaluation Study’, five studies were described in Table 7, which attempted to address the evaluation and benchmarking issues. However, our analysis of their work found that they only attempted to evaluate the classification tasks of acute leukaemia on the basis of partial dimensions. Alternately, they compared among several models of acute leukaemia classification on the basis of individual criteria. Therefore, their solution cannot be used as basis for taking a complete picture that reflects all dimensions of evaluation and comparison to choose the right decision for a suitable solution of acute leukaemia classification.

As shown in Table 7, studies that focused on the evaluation and comparison of acute leukaemia dealt with a few aspects of this evaluation and neglected other aspects. By contrast, [12, 20] depended on the evaluation and benchmarking of the accuracy aspect only. At the same time, Snousy et al. confirmed that accuracy is an important criterion in cancer classification task but is not the only goal in the cancer domain; their study aimed to investigate the effectiveness of various features of the selection methods on classification accuracy among different classification models. [10, 85] attempted to evaluate and benchmark on the basis of a few of the reliability group criteria. Rota et al. depended on accuracy, precision and recall; whereas Labati et al. focused on TP, TN, FP, FN, specificity, sensitivity and accuracy. Finally, [84] dealt with the evaluation and comparison regarding error rate. To make a substantive judgment on the quality and performance of acute leukaemia classification systems, an evaluation and benchmarking method are required which covers all the main requirements and cannot be assessed from only single aspect. In the same context, Saritha et al. confirmed that the automated classification system should have high accuracy and efficacy, less processing time, small error and robust. Early identification of leukaemia yields in providing the appropriate treatment to the patient [90]. This study attempts to fill the gap in the evaluation and benchmarking of the acute leukaemia classification area. This study provides a new decision support system for the evaluation and benchmarking of multiclass classification of acute leukaemia that includes all the key evaluation and benchmarking metrics. This system shall be capable of assisting the administrations of health organisations and various users to evaluate and benchmark acute leukaemia multiclass classification solution. It can also ensure that the selected classification models meet all necessary requirements.

Automated classification of acute leukaemia

Acute leukaemia

Blood is an essential component in the human body, and it achieves many of the important functions related to maintaining the metabolism process by delivering oxygen and other vital minerals. White blood cells (WBC), red blood cells (RBC) and platelets are the essential components of blood [32]. Blood cancer is one of the most serious types of cancer [19]. Leukaemia is a type of blood cancer distinguished by an irregular or abnormal growth in the number of white cells in the blood known to be immature blasts [16, 37]. Two kinds of abnormal white cells can turn into leukaemia, namely, myeloid and lymphoid cells [90]. [14, 18, 90] described acute and chronic leukaemia as two main types of leukaemia; the first type is characterised by a quick progression unlike the second type, which grows slowly. In an acute leukaemia case, irregular white cells called immature blasts work improperly. The immature blasts increase quickly and will worsen if not controlled immediately. By contrast, in the chronic type, young blood cells are present, but only the mature ones produce functional cells. Acute leukaemia results from a rapidly increasing production of white blood cells which then results in an abnormal increase in irregular cells or for the latter to be passed into the blood stream [32, 100]. Acute leukaemia starts in the bone marrow and blood and rapidly progresses. Abnormal white cells can grow in adults and children [29]. Based on the famous model of leukaemia categorisation, acute leukaemia is divided into two types, namely, acute lymphoblastic leukaemia (ALL) and acute myeloid leukaemia (AML) [14, 53, 79]. Each type includes a number of subtypes; ALL has two subtypes (B cell ALL and T cell ALL), while AML is categorised into eight subtypes (M0, M1, M2, M3, M4, M5, M6 and M7) [79]. ALL comprises rapidly increasing infected lymphocytes. Lymphocytes are a type of white blood cells which fight infection [11]. ALL will be fatal if no remedial action is taken immediately because it is characterised by a rapid proliferation into the different body organs, especially blood circulation. Therefore, a rapid diagnosis of this type of acute leukaemia is important for the patient’s recovery [13, 16]. In AML, these immature cells do not develop and are incapable of warding off infections [101]. ALL usually occurs in young children, whereas AML infects adults more than children [14, 102]. The diagnosis of acute leukaemia is mostly difficult and requires quick treatment; thus, this type requires modern and non-traditional methods to assist the physician in making a diagnosis [15, 81, 102].

Acute leukaemia classification

Data classification is a generally common topic in various sciences, such as statistics, computer science and decision science [185]. It has many applications in medicine, engineering and management and can address many issues related to data recognition, diagnosis and detection, among others [6]. The accurate and fast detection of acute leukaemia and its classification into its subtypes are important when making appropriate remedial action [7, 8, 52]. Diagnosis and classification of acute leukaemia is a field that requires automation, especially as the manual methods for detection and classification suffer from several limitations; conversely, satisfying the need for rapid and accurate methods that can facilitate early detection and prediction of cancerous patterns [1,2,3, 80]. Automated classification is extensively applied to analyse cancer and is relatively a fledgling and an interdisciplinary technology that integrates the primary ideas of digital image processing [101].

Many studies have been conducted to automatically classify acute leukaemia and their subtypes and thus enhance early diagnosis [19]. An automatic classification of the ALL, images of blood or bone marrow are processed using image processing techniques [11]. A well-planned classification system plays a significant role in the accurate classification of acute leukaemia. Classification systems not only help experts make the right decision but also minimise possible errors [6]. A computerised system will be helpful for the analysis of stained microscopic images of the blood cells [13]. In practice, an identity from one of the known classes is utilised to assign the unknown test parameters; this task is known as classification. The classifier uses the set of features and identifies the difference between normal and diseased cells [77]. Classification is the task of associating the appropriate class label with the blood test sample by using the measurements [100].

Classification models classify a cell as normal cell or cancer-affected cell, that is, a blast cell. Alternately, they classify the cell into subtypes by comparing a few of the features [90]. Choosing the most appropriate classification methods is essential to improve classification performance [81].

When leukaemia is classified into two classes only, namely, normal and cancer cells (abnormal) or AML and ALL, this classification task is called a binary classification; whereas when the disease is classified into more than two classes, namely, normal cell, AM and ALL or, L1, L2 and L3, this classification task is called multiclass classification [8] [103].

Many machine learning methods can be employed for the multiclass classification of acute leukaemia into subtypes. The most employed classification methods will be described in the following subsections:

-

Artificial Neural Network (ANN) is one of most popular methods among the artificial intelligence fields. Numerous authors mentioned that ANN has significant capability in interpreting and analysing medical data sets. It can represent complex patterns depending on a mathematical model that works in a way that simulates the human brain [32] [187].

-

Support Vector Machine (SVM) is considered one of the oldest and most important methods of artificial intelligence. According to earlier studies, SVM is the most extensively used in the classification of acute leukaemia. It executes the procedures of classification by building hyper-planes in a multidimensional space that distributes cases of dissimilar and different class labels [102, 104] [186].

-

Decision Tree (DT) is a classical model of machine learning. The structure of this method is similar to a tree; each of its branches represents a class of sample with similar characteristics. Many decision tree extensions have been developed, such as CART, C4.5, ID3 and EG2 [41].

-

Nearest Neighbour (NN) is one of the commonly used classification algorithms. It works under supervised and nonparametric approach [30]. Classification in NN is performed by the votes of nearby neighbours. Depending on the principle of voting, objects will be identified according to their related classes [104].

-

Random Forest (RF) [41] is an ensemble machine learning method that contains a number of DTs through a random division of the feature space. It is constructed by collecting multiple DTs and works under supervised and nonparametric approach. The principle of its work is to segment the feature space into a number of subspaces and extract the most significant features; this process is repeated until the most distinguishable training dataset and the basic classification method for various feature subspaces are obtained [104].

-

Bayesian Network is a joint distribution based on the probability for a group of random variables to have a potential mutual causal relationship. In this method, the variables are represented by nodes; the causal relationship between each node pair is node edge and a conditional probability distribution in each of the nodes [33] [187].

Recommended pathway solutions for future direction

The previous sections described the existing evaluation and benchmarking approaches. Those sections described the evaluation methods, evaluation criteria and the challenges and issues of acute leukaemia multiclass classification evaluation. This section will present the new recommendation pathway solution. The supporting reviews are presented as follows. The processes of evaluation and benchmarking of multiclass classification for acute leukaemia involve considering simultaneous multiple attributes (time complexity rate, reliability). Thus, a decision support system is proposed based on the MCDM method to solve multiple criteria attributes that may increase the quality of decision making [205, 206] [193]. In the real world, beneficial methods that address MCDM issues are introduced as the recommended solutions that support decision makers in solving the problems and performing analyses, evaluation and ranking [105] [192, 207].

Multi-Criteria decision making: definition and importance

Keeney and Raiffa [106] define multi-criteria decision making (MCDM) as ‘an extension of decision theory that covers any decision with multiple objectives. A methodology for assessing alternatives on individual, often conflicting criteria, and combining them into one overall appraisal…’ In addition, Belton and Stewart [107] define MCDM as ‘an umbrella term to describe a collection of formal approaches, which seek to take explicit account of multiple criteria in helping individuals or groups explore decisions that matter’. MCDM is one of the most well-known techniques for decision making and deals with the complex decision problems in handling multiple criteria [108, 109] [191]. It presents a systematic method of solving the decision problems on the basis of multiple criteria [109]. The aim is to assist decision makers in dealing with such problems [110]. The methods and procedures of MCDM frequently depend on quantitative and qualitative approaches, and it is often focused on simultaneously dealing with multiple and conflicting criteria [111, 112] [207, 208]. Depending on the approaches, MCDM can increase the decision quality through effective and rational methods more than the traditional processes [113]. MCDM aims to attain the following: (1) categorise the suitable alternatives among a group of available alternatives, (2) rank the suitable alternatives on the basis of their performance in decreasing order and (3) select the best alternative [105] [205, 206]. Based on these goals, the suitable alternative(s) will be scored. The essential terms requiring definitions in any MCDM solution, namely, the evaluation matrix or decision matrix, are also the decision criteria [114]. A decision matrix consists of n criteria and m alternatives that must be created. The intersection of each criteria and alternative is specified as x_ij. Therefore, we have a matrix (x_ij) _ (m*n) expressed as follows:

where A_1, A_(2),….,A_m are possible alternatives the decision makers want to rank (i.e. classification models). C_1,C_(2),…,C_n are the criteria against which the performance of each alternative is evaluated. Lastly, x_ij is the rating of alternative A_i with respect to criterion C_j, and W_j is the weight of criterion C_j. Certain processes must be achieved to score the alternatives, such as normalisation, maximisation indicator, adding weights and other processes depending on the method. For example, suppose that D is the decision matrix utilised to score the performance of the alternative Ai, where based on Cj, Table 8 is an example of the multi-criteria problem described by [115].

The values in the graph are difficult to evaluate owing to the large numbers in c2 and c3 (Fig. 10).

Enhancing the decision-making process is important by involving the decision makers and stakeholders. Using proper methods for decision making is also necessary to handle multi-criteria problems [210]. MCDM is extensively used in healthcare [112, 116] [116]. Decision makers in healthcare can improve their decision making through a systematic method and attainment of the best decision, depending on the various MCDM methods [116]. In particular, many of the healthcare decisions are complex and unstructured [116].

MCDM Methods

Several MCDM theories have been explored. Figure 11 shows the most commonly used MCDM techniques, which use different notations [116,117,118,119,120,121,122,123,124,125,126,127,128,129,130,131,132,133]. Table 9 provides a brief description of each technique.

MCDM techniques are diverse, and this variety might cause difficulty in selecting the suitable techniques among the many available MCDM techniques. Each technique has its own limitations and strengths [118, 134,135,136,137]. Thus, selecting the appropriate MCDM method is important. To determine the best MCDM method, many studies have presented their advantages and limitations. Other studies presented a comparison analysis among various MCDM methods. Figure 12 illustrates the advantages and disadvantages of the common MCDM methods [116, 119, 125, 128, 130, 131, 134, 136, 138,139,140,141,142,143,144,145,146,147,148,149,150,151,152,153,154,155,156,157,158,159,160,161,162,163,164,165,166,167,168].

To the best of our knowledge, none of the analysed methods have been employed to rank the multiclass classification models for acute leukaemia. Many MCDM methods have been proposed and employed in various studies for the calculation of criteria (factors) weights, such as WSM, SWA, AHP, and BWM [134, 156,157,158,159]. The present study has employed the best and worst methods because they can provide the results with more consistency against AHP and other weighting MCDM methods; in addition, the pairwise comparisons based on BWM are lesser than the other methods [141,142,143,144]. The pairwise comparisons in BWM method focus on reference comparisons as well; meaning, it executes the preference of the most important criterion over all the other criteria and the preference of all the criteria over the least important criterion [143, 169] [162]. Conversely, the most common MCDM methods for ranking the alternatives are TOPSIS and VIKOR; these two methods employ the compromise priority approach for multiple response optimisation [140] [131] [149]. VIKOR and TOPSIS are both based on an aggregating function representing ‘closeness to the ideal’. The ranking index of VIKOR is based on a particular measure of ‘closeness’ to the ideal solution. Conversely, TOPSIS determines the selected alternative on the basis of its proximity to the (‘shortest distance’) ideal solution and the greatest distance from the ‘negative-ideal’ solution; however, it does not consider the relative importance of the distances from these points [131, 138]. In addition, VIKOR can rank the alternatives to determine the best one accurately and rapidly [140]. The recent style of VIKOR studies has changed into integrating VIKOR with another MCDM method rather than applying it alone. In the reviewed studies, numerous examples of applying VIKOR with BWM were provided to achieve consistency improvement for the subjective weights. Such integration between VIKOR with BWM also archives a robust method based in the advantages of the two methods to overcome the uncertainties associated with the problem under study [138, 163,164,165,166,167,168], VICOR and BWM are clear and easy to use for those without an MCDM background; it can also be performed in a friendly computing environment [138].

Thus, VIKOR and BWM have been adopted to resolve different real-world issues. However, VIKOR cannot elicit the weights and check the decision-making consistency. To overcome these limitations, several authors have recommended employing the BWM with VIKOR [118, 144, 166]; BWM can set weights and check the consistency, along with its flexibility to be applied with other methods. As a conclusion, evaluating and benchmarking acute leukaemia multiclass classification suggest a need to integrate the BWM to set weights for evaluation and benchmarking criteria (reliability, time complexity rate), depending on the judgments of experts. Moreover, VIKOR is recommended to supply the ranking of multiclass classification models. Figure 13 illustrates the proposed solution for the evaluation and benchmarking of acute leukaemia multiclass classification.

Best-worst method (BWM)

Determining the most important and desirable alternative is the main aim of the MCDM methods when multiple criteria for decision making exist. Weights are elicited to the decision criteria depending on a comparison among them [143]. Pairwise comparison among the attributes enables us to set the weights for the attributes in each aspect. The BWM method is one of the common multi-criteria decision-analysis methods, which perform less pairwise comparison that leads to obtaining the highest consistency in the weight obtaining process [160]. In 2015, Rezaei developed the BWM, in his method amid to weights obtaining for decision criteria and alternatives with respect to multiple various criteria through pairwise comparisons, but it requires a number of comparisons; consequently, it focused on improving the consistency for the weight setting process [169] [155]. The weight elicitation process in BWM depends on reference comparisons, which lead to less comparisons; accordingly, it focuses on determining the best criterion, the preference of this criterion over all the other criteria and the preference of all the criteria over the worst criterion [169]. BWM uses a scale from 1 to 9 to determine the preferences among the criteria. It achieves more reliable outcomes than most MCDM, is easy to use, decreases the times of comparison and ensures the results’ reliability by making fewer comparisons [143, 170]. It likewise contains an consistency index to measure the reliability of the reference comparisons among the criteria. As BWM noted, selecting the best criteria is not difficult among the available criteria, whereas the difficult part is how to determine the importance level of the best criterion over the other criteria, as well as the importance of all the criteria over the worst one. The BWM provides the comparison outcome through the numbers from f 1, 2, 3, 4, 5, 6, 7, 8 and 9 g and neglects the reciprocals of each pair to overcome the problem arising from the unequal distance between fractional comparisons [162].

In general, the BWM focuses on eliciting the weights for decision criteria based on the reference comparison for the most desirable criterion (best one) and the least desirable criterion (worst one) with the other set of decision criteria. Using BMW to elicit the weight includes five sequential steps [138, 143, 160, 166, 171, 172], as demonstrated in Fig. 13.

VIKOR method

VIKOR is one of the common MCDM methods that aim to improve the solutions of complex decisions. VIKOR was developed by Serafim Opricovic under the name ‘VlseKriterijumska Optimizacija I Kompromisno Resenje’ [88]. Ranking and selecting the alternatives are the main issues of this method, especially when difficulties arise in decision making because of multiple conflicting criteria [131] [173]. The main principle of VIKOR’s work is comparing available alternatives on the basis of the multiple criteria to rank the alternatives and select the best among them. It can deal with criteria even when different measurement units are used [174]. The compromise ranking of alternatives is conducted according to the closeness of the alternative to the ideal solution; meaning, the alternatives will be ranked from the nearest to the ideal solution to the farthest one [175]. VIKOR method must determine the items in compromise ranking, that is, the solution that considers compromising and stabilising the intervals of the weight for preference stability of the compromise solution [131]. For alternative ranking, VIKOR is considered a common method and has been employed by many studies [173, 176, 177].

In VIKOR method, the alternatives and decision criteria are arranged in a structure known as a decision matrix; the columns of this matrix depict a number of alternatives, and the rows represent a number of decision criteria [166, 174].

The steps in the VIKOR method [88, 131, 175, 176, 178] are as follows:

-

Step 1.

For each criterion, define the highest and lowest values.

-

Step 2.

Construct the weighted decision matrix.

-

Step 3.

Calculate Si and Ri in rough number.

-

Step 4.

Calculate Qi in rough number.

-

Step 5.

Perform alternative ranking.

-

Step 6.

Check the ‘acceptable advantage’ and ‘acceptable stability’ in decision making.

Figure 14 illustrates the integrated MCDM method employed as the recommended decision support solution to deal with the main issues of benchmarking/selection of the multiclass classification of acute leukaemia. BWM method is employed to elicit the weights for the evaluation criteria. The weights obtained from BWM are passed to the VIKOR method, which is responsible for the ranking among the alternatives based on weighted criteria.

Methodology aspects

This section introduces the description and explanation of the methodological aspects of the decision support system for evaluating and benchmarking the multiclass classification of acute leukaemia. The identification of the decision matrix based on the evaluation and benchmarking criteria is the first phase (Section “Identification of a decision matrix”), followed by the development phase of a new decision support system for the evaluation and benchmarking based on integrated BWM and VIKOR (Section “Development phase”). The final phase is the validation process (Section “Validation phase”). The proposed methodology is presented in Fig. 15.

Identification of a decision matrix

This phase aims to construct the decision matrix, which is the main component in our decision support system. The decision matrix components consist of decision alternatives and decision criteria. The decision alternatives in our case are multiclass classification models for acute leukaemia, and the criteria are evaluation criteria and sub-criteria identified in the previous phase. Eight multiclass classification models will be built for acute leukaemia to use these models as decision alternatives in our decision matrix.