Abstract

Variances in clinical pathways (CPs) are complex, fuzzy, uncertain, and high-risk. They can cause complications or even endanger patients’ lives if not handled effectively. To improve the accuracy and efficiency of variance handling with Takagi-Sugeno (T-S) fuzzy neural networks (FNNs), this study proposes a new variance handling method for CPs based on T-S FNNs with a novel hybrid learning algorithm. The optimal structure and parameters are obtained simultaneously by integrating the random cooperative decomposing particle swarm optimization (RCDPSO) algorithm with the discrete binary version of PSO (DPSO). A case study on liver poisoning in the osteosarcoma preoperative chemotherapy CP is used to validate the proposed method. The results show that T-S FNNs based on the proposed algorithm outperform standard T-S FNNs, Mamdani FNNs, and T-S FNNs based on other algorithms (CPSO and PSO) in efficiency, precision, and generalization ability for variance handling of CPs.

Introduction

Clinical pathways (CPs) have been accepted as an important tool for delivering high-quality, cost-efficient health care in the face of increasingly diverse health demands. A CP is a management plan that displays goals for patients and provides the sequence and timing of the actions necessary to achieve these goals with optimal efficiency [1, 2]. Most references report that CPs offer many advantages in hospital practice, such as reducing medical expenses, increasing patient satisfaction, and improving the quality of medical care [3, 4].

Although a CP predefines a predictable, standardized care process for a particular diagnosis or procedure, many variances still unavoidably occur once it is applied to patient care, owing to individual complexities and the subjective initiative of patients, families, and healthcare professionals. During treatment, variance handling is the most critical issue in managing and controlling CPs. In general, CP variances include patient, physician, system, pre-admission, and discharge variances. For example, during the osteosarcoma preoperative chemotherapy CP, uncertain variances such as decreases in white and red blood cells, decreases in platelets, liver and kidney damage, stomatitis, and neuropathy commonly occur [5]. Moreover, most variances are complex, fuzzy, uncertain, and high-risk, and may therefore cause complications or even endanger patients’ lives. It is therefore necessary to handle these variances accurately and effectively. Different uncertain variances of CPs may need different handling measures. This paper concentrates on one kind of variance, for which the type of handling measure can be determined to some extent but the degree of each measure is fuzzy. We therefore adopt a “fuzzy” method instead of a “stochastic” one. In practice, some key index parameters are strongly related to the variance handling measures of CPs, and variance handling problems are highly nonlinear, so it is hard to develop a comprehensive mathematical model.

As Table 1 shows, earlier research focused mainly on defining CP variances, whereas recent research focuses mainly on documenting, classifying, and analyzing the variances that occur during the implementation of CPs, e.g., [12].

A common difficulty in dealing with variances lies in their fuzzy and uncertain features: during CP variance handling, much of the data is imprecise and incomplete. Fuzzy models can describe vague statements as in natural language. To cope with this difficulty, a typed fuzzy Petri net extended by process knowledge (TFPN-PK) model was presented to support handling decisions for different types of CP variances (shown in Table 1), so that different types of variances can be dealt with using different strategies [13–15]. Moreover, an extended event-condition-action (ECA) rules method was proposed to model and handle CP variances [16].

However, the methods listed in Table 1 depend on fuzzy rules provided by experts, which are usually difficult to obtain. Moreover, fuzzy systems have little learning capability, and it is difficult for a human operator to tune the fuzzy rules and membership functions from training data.

Artificial neural networks (ANNs) have emerged as important tools for non-linear data mapping [17]. ANNs are useful for solving non-linear problems where the algorithm or rules for solving the problem are unknown or difficult to express. Their data structure and non-linear computations allow good fits to complex, multivariable data. ANNs process information in parallel and are robust to data errors. A disadvantage of ANNs is that they are regarded as a “black-box” technology because they lack comprehensibility. Therefore, although ANNs are capable of learning the complex nonlinear relationships between index parameters and CP variance handling, they cannot explicitly provide readable and comprehensible rules that healthcare professionals can use to make clinical decisions. Moreover, it is quite difficult to handle fuzzy and uncertain CP variances with ANNs.

Fuzzy neural networks (FNNs) combine the strength of fuzzy logic in processing vague and uncertain information with the good learning ability of neural networks, while overcoming the weaknesses of each [18]. FNNs can also handle imprecise information through linguistic expressions. For several decades, FNNs have attracted much attention and have been applied in many domains [19]. Two typical types of FNNs are the Mamdani-type and Takagi-Sugeno (T-S)-type models [20, 21]. Many studies have shown that a T-S fuzzy model is superior to a Mamdani-type fuzzy model in network size and learning accuracy [22].

The accuracy and efficiency of variance handling for CPs are very important. Given the characteristics of CP variances, T-S FNNs can be used for variance handling of CPs to assist doctors in making decisions. However, T-S FNNs trained with the commonly used back propagation (BP) algorithm with gradient descent [23] have many drawbacks: they are easily trapped in local minima, are sensitive to initial values, and have poor global search ability. Therefore, many studies use genetic algorithms (GAs) to learn fuzzy models and attain better performance than the BP algorithm [24]. For example, a hybrid of GA and Kalman filter [25] and a multi-objective hierarchical GA [26] have been proposed to optimize fuzzy models.

In [27], a GA is used to adjust the parameters of a T-S fuzzy neural network. Lin and Xu [28] built a T-S neural network with constant consequent parts. The parameters of the membership functions and the consequent part of the network were coded, and an improved GA was used to search for the optimum parameters. However, GA is characterized by long computation time and slow convergence near the optimum [29].

Recently, a new evolutionary technique, particle swarm optimization (PSO) [30], has emerged as an important optimization technique [31, 32]. Compared with GA, PSO has some attractive characteristics: (1) it converges quickly; (2) it is easy to implement; (3) it has few parameters to adjust. It retains the good knowledge learned by all particles, and it encourages cooperative co-evolution and information sharing between particles, which enhances the search for a global optimal solution. Successful applications of PSO to optimization problems such as function optimization and neural network optimization have demonstrated its potential [30, 32]. A framework for identifying fuzzy models using the PSO algorithm is proposed in [33, 34]. In addition, the authors found that, for models of the same complexity generated from the same data, PSO identifies fuzzy models better than GA in fuzzy neural network optimization.

For example, Shoorehdeli and Teshnehlab [35] reported a hybrid learning algorithm which is based on particle swarm optimization for training the antecedent part and gradient descent for training the conclusion part.

Although many studies have shown that PSO performs well in global search because it quickly finds and explores promising regions of the search space, it is relatively inefficient in local search and prone to premature convergence. Therefore, some improved approaches and variants of PSO have been reported in [36–40]. These methods improve the global search ability of PSO to some degree, but they struggle to achieve a good tradeoff between global convergence and convergence efficiency.

Cheng-Jian Lin et al. [41, 42] proposed an improved particle swarm optimization and an immune-based symbiotic particle swarm optimization for T-S fuzzy neural networks, which improve the ability to find the global solution and thus the accuracy. However, these methods require long computation time and perform weakly on high-dimensional optimization problems.

Combined with the notion of co-evolution, a cooperative particle swarm optimization (CPSO) algorithm [43] has been proposed and shown to be more effective than the traditional PSO in most optimization problems. For example, Niu et al. [44] adopted a multi-swarm cooperative PSO to optimize the parameters of fuzzy neural networks and obtained higher accuracy at a large computation cost. However, this method is weak at optimizing the structure and parameters simultaneously. The strengths and weaknesses of all the methods mentioned are summarized in Table 2.

As shown in Table 2, computational intelligence significantly enhances accuracy. However, most of the above methods have shortcomings: (1) few optimize the structure and parameters of T-S FNNs simultaneously; (2) they are inefficient at optimizing high-dimensional nonlinear parameters; (3) they are time-consuming. Furthermore, most of the methods are prone to premature convergence.

The CPSO algorithm, with its simple splitting and collaborating approach, may become trapped in sub-optimal locations in the search space, and the order in which the subgroups are optimized can influence the result. Moreover, the original PSO uses a population of n-dimensional vectors, which the CPSO algorithm can partition into n swarms of 1-D vectors. However, as the dimension of the population increases, the computing time increases greatly. If an n-dimensional vector is instead split roughly into K parts (K is called the split factor) to reduce the computing time, not every parameter in the n-dimensional vector will be optimized sufficiently. In addition, many researchers adopt random perturbation and existing mutation mechanisms, e.g., [45], to offset the comparative inefficiency of CPSO in fine-tuning the solution. However, these do not resolve the problem fundamentally.

In this study, a subgroup decomposing method is applied to the best-performing particles during cooperative evolution with a random execution sequence, and an “exponential smoothing mutation mechanism” is used for local search to avoid comparative inefficiency and further improve the quality of solutions. This method differs considerably from existing ones and is intended to increase the diversity of the swarms and avoid falling into local optima. It is called the random cooperative decomposing particle swarm optimization (RCDPSO) algorithm. Moreover, the discrete binary version of PSO (DPSO) by Kennedy and Eberhart [46] is employed to optimize the structure of T-S FNNs. A new variance handling method for CPs is then proposed, based on T-S FNNs with integrated RCDPSO/DPSO optimization, to improve the efficiency, precision, and generalization ability of T-S FNNs for variance handling of CPs. In particular, this study emphasizes using RCDPSO/DPSO to optimize the structure and parameters of T-S FNNs simultaneously, which distinguishes it from previous research.

The remainder of this paper is organized as follows. "The review of particle swarm algorithm" reviews the particle swarm algorithm. "Random cooperative decomposing particle swarm optimization algorithm" describes the principle of the RCDPSO algorithm. "A novel hybrid learning algorithm of T-S fuzzy model for variances handling of CPs" presents a novel hybrid learning algorithm of T-S FNNs for variance handling of CPs. A case study on liver poisoning in osteosarcoma preoperative chemotherapy is used to validate the proposed method in "Case study". "Conclusion" summarizes the article and sketches further research.

The review of particle swarm algorithm

The PSO algorithm is an adaptive algorithm based on a social-psychological metaphor. Each particle has its own position and velocity with which it moves around the search space. The ith particle represents a possible solution; its jth component has a position xij, a velocity vij, and the best personal position pij encountered so far by the particle. During each iteration, each particle accelerates in the direction of its own personal best position pij, as well as in the direction of the global best position pgj discovered so far by any of the particles in the population. At each time step t, the velocity of the jth component of the ith particle is updated using Eq. 1:

\[ v_{ij}(t+1) = w\,v_{ij}(t) + c_1 r_1 \left( p_{ij} - x_{ij}(t) \right) + c_2 r_2 \left( p_{gj} - x_{ij}(t) \right) \quad (1) \]

where w is the inertia weight; c1 and c2 are acceleration coefficients; r1 and r2 are uniformly distributed random numbers in the range (0, 1). The velocity vij is limited to the range [vmin, vmax]. Based on the updated velocity, the new position of the jth component of the ith particle is calculated using Eq. 2:

\[ x_{ij}(t+1) = x_{ij}(t) + v_{ij}(t+1) \quad (2) \]

Under Eqs. 1 and 2, the population of particles tends to cluster together while each particle moves in a random direction.
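As an illustration of the update rules referred to as Eqs. 1 and 2, the following minimal Python sketch applies the standard inertia-weight PSO update to a toy objective. The function names (`pso_step`, `sphere`, `minimize`), the parameter values, and the sphere objective are assumptions chosen for the example; they are not taken from the paper.

```python
import numpy as np

def pso_step(x, v, p_best, g_best, rng, w=0.7, c1=1.5, c2=1.5,
             v_min=-1.0, v_max=1.0):
    """One PSO iteration: velocity update, velocity clamp, position update."""
    r1 = rng.random(x.shape)  # uniform random numbers, one per component
    r2 = rng.random(x.shape)
    v_new = w * v + c1 * r1 * (p_best - x) + c2 * r2 * (g_best - x)
    v_new = np.clip(v_new, v_min, v_max)  # keep velocity in [v_min, v_max]
    return x + v_new, v_new

def sphere(x):
    """Toy objective (assumed for illustration): sum of squares per particle."""
    return np.sum(x ** 2, axis=1)

def minimize(n_particles=20, n_dims=5, n_iter=100, seed=0):
    rng = np.random.default_rng(seed)
    x = rng.uniform(-5, 5, (n_particles, n_dims))
    v = np.zeros_like(x)
    p_best, p_fit = x.copy(), sphere(x)
    initial_best = p_fit.min()
    for _ in range(n_iter):
        g_best = p_best[np.argmin(p_fit)]  # global best position so far
        x, v = pso_step(x, v, p_best, g_best, rng)
        fit = sphere(x)
        improved = fit < p_fit  # update personal bests only on improvement
        p_best[improved] = x[improved]
        p_fit[improved] = fit[improved]
    return initial_best, p_fit.min()
```

Because personal bests are replaced only when the fitness improves, the best fitness returned by `minimize` can never be worse than the initial one.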

Kennedy and Eberhart proposed a discrete binary version of PSO [46], which is well suited to discrete optimization problems. In DPSO, particle positions are binary vectors of length n, and the velocity represents the probability that a bit will take the value 1. The velocity updating formula Eq. 1 remains unchanged, but the velocity is mapped into the interval [0, 1] by a limiting transformation S(vij). The particle then changes its bit value by the following equations:

where vij is the jth velocity dimension of particle i, pij is the jth position dimension of particle i, S(vij) is the limiting transformation function, and rand is a random number drawn from U(0,1).
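The bit update of the binary PSO can be sketched as follows. This is a minimal example that assumes the logistic sigmoid as the limiting transformation S, as in Kennedy and Eberhart's binary PSO; the names `sigmoid` and `dpso_step`, the array name `bits` for the binary positions, and the parameter values are illustrative assumptions.

```python
import numpy as np

def sigmoid(v):
    """Limiting transformation S(v), assumed here to be the logistic function."""
    return 1.0 / (1.0 + np.exp(-v))

def dpso_step(bits, v, p_best, g_best, rng, w=1.0, c1=2.0, c2=2.0, v_max=4.0):
    """One binary-PSO iteration on 0/1 position arrays."""
    r1 = rng.random(bits.shape)
    r2 = rng.random(bits.shape)
    # Velocity update has the same form as in continuous PSO.
    v_new = w * v + c1 * r1 * (p_best - bits) + c2 * r2 * (g_best - bits)
    v_new = np.clip(v_new, -v_max, v_max)
    # Each bit becomes 1 with probability S(v), otherwise 0.
    new_bits = (rng.random(bits.shape) < sigmoid(v_new)).astype(int)
    return new_bits, v_new
```

In a structure search, each bit could, for example, switch one candidate fuzzy rule on or off; that encoding is an assumption for illustration and is not specified in this section.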

Random cooperative decomposing particle swarm optimization algorithm

The PSO algorithm may easily get trapped in a local optimum when tackling complex problems. Empirical studies have shown that combining the CPSO algorithm and the PSO algorithm gives better results. A detailed description is given in [43].

To avoid the comparative inefficiency of CPSO in fine-tuning the solution, and to further improve the solutions it obtains, the “exponential smoothing mutation mechanism” is triggered once the predefined iteration cycle is reached. That is, local search by small mutations further improves the solutions found by the RCDPSO algorithm, so that a better solution can be found. The new particle parameters are then obtained by combining the current personal information of the particles with their best personal history information.

During the process of cooperative evolution with random execution sequence, the decomposing algorithm is adopted for particles with the best performance. The method for evaluating the performance of particles is given as follows:

The evaluation is made every m iterations. During this period, the deviation between the fitness value of each particle in a subgroup and that of the best global particle in the iteration serves as the judging criterion. The subgroup with the smallest deviation is regarded as the best subgroup and is further decomposed. The decision formula is

where j indexes the subgroup, r indexes the particle, f(r, j, k) is the fitness value of the rth particle of the jth subgroup in the kth iteration of co-evolution, fgbest(j, k) is the best fitness value of the jth subgroup in the kth iteration, and εk,j is the sum of deviations from the global best particle of the jth subgroup in the kth iteration. Over a period, the average of the deviation sums of a subgroup is given as follows:

Here l denotes the period, and life is the length of the period.

The “exponential smoothing mutation” is used for local search to further improve the quality of solutions. Its principle is to guide the optimization by combining the current personal information of the particles with their best personal history information. The m best-performing current particles and the m personal best particles recorded in the history store are taken out and serve as the “base” of the exponential smoothing. A new parameter structure, named the “weight”, is then defined. The weight has the same dimensionality as the parameters to be optimized, so “posa” has the same dimensions as “pos”. It is defined as follows:

Each element of “posa” is initialized with a random number in (0, 1). Here popa_size denotes the number of particles and parts_size denotes the dimensionality of the parameters; posa(i, j) refers to the jth parameter of the ith particle.

The pseudocode of the “exponential smoothing mutation mechanism” can be given as follows:

Here, ak(i, j) can be obtained by the following exponential smoothing equation:

The pseudocode of the normalized ak(i, j) can be given as follows:

The normalized ak(i, j) is called the weight, m is the number of “base” particles taken from “pos”, and the initial value of a(i, j) is a random m × n matrix with entries in (0, 1].

After the parameters of “posa” have been optimized several times, the n best particles are taken from “posa” and the corresponding shape parameters of the trapezoid membership functions are calculated. These n particles then serve as “mutation particles” that overwrite the n worst-performing particles obtained by RCDPSO optimization.

A novel hybrid learning algorithm of T-S fuzzy model for variances handling of CPs

The Structure of T-S FNNs

For a multiple-input, single-output (MISO) system, the T-S model [20] can be given as follows. Let X = [x1, x2, ..., xn]T denote the input vector, where each variable xi is a fuzzy linguistic variable. The set of linguistic values for xi is represented by \( T\left( {{x_i}} \right) = \left\{ {A_i^1,A_i^2, \ldots, A_i^{{m_i}}} \right\},i = 1,2, \ldots, n \), where \( A_i^{{r_i}}\left( {{r_i} = 1,2, \ldots, {m_i}} \right) \) is the \( r_i \)th linguistic value of the input xi. The membership function of the fuzzy set defined on the domain of xi is \( {\mu_{A_i^{{r_i}}}}\left( {{x_i}} \right)\left( {{r_i} = 1,2, \ldots, {m_i}} \right) \). Let y denote the output. A rule takes the form

According to [20, 33, 35], the T-S FNN is composed of five layers, which are divided into two networks: antecedent and consequent. The first four layers of this T-S FNN correspond to the antecedent network, the fifth layer is the output layer, and the consequent parts are indicated with a broken line.

- Layer 1 (Input layer): No function is performed in this layer. Each node only transmits input values to layer 2.

- Layer 2 (Fuzzification layer): Each node in this layer corresponds to one linguistic label of an input variable in layer 1. That is, this layer calculates the membership value specifying the degree to which an input value belongs to a fuzzy set. In this study, the trapezoid membership function is adopted.

- Layer 3 (Rule layer): The firing strength of every rule is calculated. There are m rules. According to the premise part of each rule, the neurons representing the linguistic values of the inputs are connected to the neuron representing that rule. The degree to which the input vector x matches rule Rulej is computed by the product operator: \( {\alpha_j} = {\mu_{A_1^{j{r_1}}}}\left( {{x_1}} \right){\mu_{A_2^{j{r_2}}}}\left( {{x_2}} \right) \ldots {\mu_{A_n^{j{r_n}}}}\left( {{x_n}} \right) \). αj is called the firing strength of rule Rulej.

- Layer 4 (Normalized layer): The output of each node in this layer is the normalized firing strength. These parameters denote linear parameters, the so-called consequent parameters. Thus, the outputs of this layer are given by \( {\overline \alpha_j} = {\alpha_j}/\sum\limits_{j = 1}^m {{\alpha_j}} \).

- Layer 5 (Output layer): This layer sums all the activated values from the inference rules to generate the overall output y.
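The five layers can be traced in a short forward pass. The sketch below is a minimal illustration that assumes first-order linear consequents (n + 1 coefficients per rule) and product firing strengths; the function names `trapmf` and `ts_forward` and the data layout of `mf_params`, `rules`, and `consequents` are assumptions made for the example, not the authors' implementation.

```python
import numpy as np

def trapmf(x, a, b, c, d):
    """Trapezoid membership: 1 on [b, c], linear on (a, b) and (c, d), else 0."""
    if b <= x <= c:
        return 1.0
    if a < x < b:
        return (x - a) / (b - a)
    if c < x < d:
        return (d - x) / (d - c)
    return 0.0

def ts_forward(x, mf_params, rules, consequents):
    """
    x            : input vector of length n (layer 1 passes it through)
    mf_params[i] : list of (a, b, c, d) tuples, one per fuzzy set of input i
    rules[j]     : tuple of n indices selecting one fuzzy set per input
    consequents  : array of shape (m, n + 1), row j = (p_j0, p_j1, ..., p_jn)
    """
    x = np.asarray(x, dtype=float)
    # Layer 2: membership degree of every input in each of its fuzzy sets.
    mu = [[trapmf(x[i], *p) for p in mf_params[i]] for i in range(len(x))]
    # Layer 3: firing strength of each rule as a product of memberships.
    alpha = np.array([np.prod([mu[i][r] for i, r in enumerate(rule)])
                      for rule in rules])
    total = alpha.sum()
    if total == 0.0:
        raise ValueError("no rule fires for this input")
    # Layer 4: normalized firing strengths.
    alpha_bar = alpha / total
    # Layer 5: weighted sum of the linear rule outputs.
    y_rule = consequents[:, 0] + consequents[:, 1:] @ x
    return float(alpha_bar @ y_rule), alpha_bar
```

For example, with two rules that fire equally and constant consequents 1 and 3, the output is their average, 2.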

The principle of novel hybrid learning algorithm

T-S FNNs are optimized by adapting the structure, the antecedent parameters (membership function parameters), and the consequent parameters so that a specified objective function is minimized. In this study, a novel hybrid algorithm that integrates the RCDPSO/DPSO algorithm with the Kalman filtering algorithm is proposed to tune the structure and parameters of T-S FNNs for variance handling of CPs. This hybrid procedure is iterated until the output error is reduced to a desired goal or a user-defined maximum number of generations is reached.

Fitness function: The fitness function for measuring the performance of individuals is defined as:

where Tij is the expected output, yij is the predicted output, n is the number of training samples, and m is the number of output nodes. Thus, the fitness is the mean squared error (MSE) of the T-S FNNs model. The MSE is calculated separately for the training samples and the testing samples to evaluate the performance of the network. The mean absolute error (MAE) is the other performance index used for T-S FNNs.
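The two performance indexes can be computed as in the minimal sketch below. It assumes that both errors are averaged over all n × m entries of the target matrix; the exact normalization in the paper's fitness equation may differ, and the function names are illustrative.

```python
import numpy as np

def mse(targets, predictions):
    """Mean squared error over all samples and outputs (assumed normalization)."""
    t = np.asarray(targets, dtype=float)
    y = np.asarray(predictions, dtype=float)
    return float(np.mean((t - y) ** 2))

def mae(targets, predictions):
    """Mean absolute error over all samples and outputs."""
    t = np.asarray(targets, dtype=float)
    y = np.asarray(predictions, dtype=float)
    return float(np.mean(np.abs(t - y)))
```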

The least squares algorithm is usually adopted to estimate the consequent parameters. However, it is not feasible when the matrix is singular or nearly singular. Therefore, the Kalman filtering algorithm is employed to estimate the consequent parameters [47]. Suppose m is the number of rules and the input set is Xi = (x1i, x2i, ..., xni)T; then, according to Eq. 10, the output can be given as follows:

Let X = [x1, x2, ..., xN]T denote the input vector and Y = [y1, y2, ..., yN]T denote the output vector, and let T = [T1, T2, ..., TN]T denote the actual output vector. By matrix transformation, we get the following equation:

P is the vector of consequent parameters to be estimated. The system error is ξ = ‖T − Y‖, and the goal is to find an optimal coefficient vector P* that minimizes it. This problem can be solved by the linear least squares (LLS) method, which gives \( P = (\psi^{\top}\psi)^{-1}\psi^{\top}T \), where T is the actual output vector. To avoid calculating the inverse matrix, the Kalman filter (KF) algorithm [22, 35, 47] is used to determine P:

The initial conditions are P0 = 0 and S0 = λI. ψi+1 is the (i+1)th row of ψ, and Pi is the coefficient matrix after the ith iteration. λ is usually a large constant, here 5000, and I is the m(n + 1) × m(n + 1) identity matrix.
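The recursive estimation can be sketched with the standard recursive least-squares recursion commonly used for consequent parameters in the ANFIS literature. This is an assumption about the form of the update, since the paper's equation is not reproduced here; the function name `rls_consequents` is hypothetical, while the initial conditions P0 = 0 and S0 = λI with λ = 5000 follow the text.

```python
import numpy as np

def rls_consequents(psi, targets, lam=5000.0):
    """Estimate P row by row without forming a matrix inverse.

    psi     : array of shape (N, m * (n + 1)), one row per training sample
    targets : array of shape (N,), the actual outputs
    lam     : large constant for the initial covariance S0 = lam * I
    """
    psi = np.asarray(psi, dtype=float)
    targets = np.asarray(targets, dtype=float)
    n_params = psi.shape[1]
    P = np.zeros(n_params)         # P0 = 0
    S = lam * np.eye(n_params)     # S0 = lam * I
    for row, t in zip(psi, targets):
        S_row = S @ row
        # Covariance update (S stays symmetric, so the outer product applies).
        S = S - np.outer(S_row, S_row) / (1.0 + row @ S_row)
        # Parameter update driven by the prediction error on this sample.
        P = P + (S @ row) * (t - row @ P)
    return P
```

With a large λ the result is close to the batch least-squares solution, which is a convenient way to check an implementation.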

The hybrid learning algorithm description for T-S FNNs

- Step 1: Initialize the population size, particle size, and related parameters.

- Step 2: Randomly generate the membership function parameters of the input variables, and then estimate the consequent parameters of the T-S fuzzy model according to Eq. 14.

- Step 3: Code the parameters. The “golden section” method is used to determine the dimensions of the population division.

- Step 4: Initialize the positions and velocities of the particles of all the sub-swarms, calculate the fitness of all the particles, and initialize the personal best position and global best position of each sub-swarm.

- Step 5: Generate a random execution sequence for co-evolution, and then begin cooperative optimization of each sub-swarm.

- Step 6: When the user-defined iteration period is reached, decompose the best-performing particle according to the selection criterion; the minimum value among the decomposed offspring then replaces the value of the mother particle.

- Step 7: When the mutation mechanism criterion is reached, start the “exponential smoothing mutation” module. After mutation, the particles with good performance are chosen to replace the same number of worse particles.

- Step 8: Generate a Q group, choose a particle in the Q group randomly, and ensure that it is not the global optimal value obtained by the integrated RCDPSO/DPSO algorithm. Calculate the fitness of all the particles in evolution to find the optimal solution.

- Step 9: Stopping scheme: the stopping criterion is reached whenever one of the following conditions is fulfilled: (1) a given fitness value is achieved; (2) the total number of generations specified by the user is reached. Once the stopping criterion is reached, go to Step 10; otherwise, go to Step 5.

- Step 10: Decode and generate the T-S model parameters, output the optimal solution, and terminate the algorithm.
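The cooperative core of the steps above (Steps 4 and 5) can be sketched as follows. This is a simplified illustration only: it uses a fixed, equal split of the dimensions instead of the golden section method, and it omits the particle decomposition, the exponential smoothing mutation, the Q group, and the DPSO structure search. The function names, the toy sphere objective, and all parameter values are assumptions.

```python
import numpy as np

def sphere(x):
    """Toy objective assumed for illustration."""
    return float(np.sum(x ** 2))

def cooperative_pso(f, n_dims=6, n_groups=3, n_particles=10, n_iter=50,
                    seed=0, w=0.7, c1=1.5, c2=1.5):
    rng = np.random.default_rng(seed)
    groups = np.array_split(np.arange(n_dims), n_groups)  # fixed dimension split
    context = rng.uniform(-5, 5, n_dims)   # best full solution found so far
    best_fit = f(context)
    history = [best_fit]
    xs = [rng.uniform(-5, 5, (n_particles, len(g))) for g in groups]
    vs = [np.zeros_like(x) for x in xs]
    pbest = [x.copy() for x in xs]
    pfit = [np.full(n_particles, np.inf) for _ in groups]

    def evaluate(k, part):
        # A sub-swarm particle is scored inside the shared context vector.
        trial = context.copy()
        trial[groups[k]] = part
        return f(trial)

    for _ in range(n_iter):
        for k in rng.permutation(n_groups):  # random execution sequence
            for i in range(n_particles):
                fit = evaluate(k, xs[k][i])
                if fit < pfit[k][i]:
                    # Note: stored personal-best fitness is not re-evaluated
                    # when the context changes (a simplification).
                    pfit[k][i] = fit
                    pbest[k][i] = xs[k][i].copy()
                if fit < best_fit:
                    best_fit = fit
                    context[groups[k]] = xs[k][i]
            gbest = context[groups[k]]
            r1 = rng.random(xs[k].shape)
            r2 = rng.random(xs[k].shape)
            vs[k] = (w * vs[k] + c1 * r1 * (pbest[k] - xs[k])
                     + c2 * r2 * (gbest - xs[k]))
            xs[k] = xs[k] + vs[k]
        history.append(best_fit)
    return context, history
```

Because the context vector is overwritten only when a trial improves the best fitness, the recorded history is non-increasing, which makes the sketch easy to sanity-check.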

Case study

In this part, an example on the CP of osteosarcoma preoperative chemotherapy (a stage in which variances usually occur) is given to validate the proposed method. The aim is to improve the accuracy and efficiency of variance handling for CPs. The experiment is divided into three main stages. First, according to the variance characteristics of osteosarcoma preoperative chemotherapy and experts’ knowledge, the membership functions of the evaluation indexes and the fuzzy rule base are established. Second, the T-S FNNs model is constructed from the obtained fuzzy rules. Third, the constructed standard T-S FNNs and the T-S FNNs with CPSO, PSO, and RCDPSO/DPSO optimization are each tested with the same training and test samples to compare their accuracy and efficiency in variance handling of CPs.

Osteosarcoma preoperative chemotherapy schemes usually combine several different chemotherapy drugs. In this study, the preoperative chemotherapy scheme is simplified to “high-dose methotrexate (HDMTX) + calcium folinate (CF) rescue”. The usual variances are: 1) skin rash; 2) stomatitis; 3) gastrointestinal reactions; 4) liver damage; 5) renal damage; 6) myelosuppression [5]. During preoperative chemotherapy, liver damage occurs in a higher percentage of cases and can directly influence patients’ quality of life and the further execution of the chemotherapy scheme. Therefore, this paper concentrates on liver damage variances. The evaluation of liver damage uses two mandatory indexes, 1) alanine aminotransferase (ALT) and 2) aspartate aminotransferase (AST), together with the experience index (EI). According to these indexes, the dosage of liver protection drugs can be determined. The membership functions of these indexes are as follows:

Index 1: Alanine aminotransferase (ALT). ALT is classified into five grades, 0, I, II, III, and IV, where 0 means that liver function is normal and IV means that liver function damage is very severe. The membership function definition and parameter values of ALT are shown in Table 3. ULN is the upper limit of normal: when 0 ≤ ALT ≤ 1.5×ULN, liver function is normal; when 1×ULN ≤ ALT ≤ 2.75×ULN, liver damage is minor; when 2.25×ULN ≤ ALT ≤ 5.5×ULN, liver damage is moderate; when 4.5×ULN ≤ ALT ≤ 10.5×ULN, liver damage is severe; when 9.5×ULN ≤ ALT ≤ 15×ULN, liver damage is very severe.
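The overlapping ALT ranges can be turned into trapezoid membership functions as in the sketch below. The supports (first and last value of each tuple) come from the ranges in the text; the shoulder points (second and third value) are assumptions placed at the overlap boundaries and stand in for the actual parameters of Table 3. The names `trapmf`, `ALT_GRADES`, and `alt_memberships` are illustrative.

```python
def trapmf(x, a, b, c, d):
    """Trapezoid membership: 1 on [b, c], linear on (a, b) and (c, d), else 0."""
    if b <= x <= c:
        return 1.0
    if a < x < b:
        return (x - a) / (b - a)
    if c < x < d:
        return (d - x) / (d - c)
    return 0.0

# (a, b, c, d) in multiples of ULN. Supports [a, d] follow the text;
# shoulders b and c are ASSUMED, not taken from Table 3.
ALT_GRADES = {
    "0":   (0.0, 0.0, 1.0, 1.5),
    "I":   (1.0, 1.5, 2.25, 2.75),
    "II":  (2.25, 2.75, 4.5, 5.5),
    "III": (4.5, 5.5, 9.5, 10.5),
    "IV":  (9.5, 10.5, 15.0, 15.0),
}

def alt_memberships(alt, uln):
    """Membership degree of an ALT value in each grade, given the ULN."""
    ratio = alt / uln
    return {grade: trapmf(ratio, *p) for grade, p in ALT_GRADES.items()}
```

Under these assumed shoulders, an ALT of 1.25×ULN belongs equally to grade 0 and grade I, which reflects the overlap between the "normal" and "minor damage" ranges.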

The membership function of index 2 (AST) is the same as that of index 1 (ALT). Besides AST and ALT, experience factors (such as the patient’s age, sex, and medical history) should be considered. Therefore, according to the experience of medical experts, an experience index (EI) is constructed to account for all “other factors” comprehensively. A scoring method is adopted for the EI, which is divided into three parts, “normal”, “not good”, and “serious”, as shown in Table 3. The trapezoidal membership function is adopted.

The output variables are the dosage strengths of liver protection drugs. When liver poisoning occurs, liver protection drugs are normally used. In practice, no more than three kinds of liver protection drugs should be used during liver protection therapy. The output is therefore set to the dosages of three kinds of liver protection drugs, named “liver protection drug A”, “liver protection drug B”, and “liver protection drug C” and denoted by “Y1”, “Y2”, and “Y3”. The dosage domain is [0, 1], where “0” means no drug and “1” indicates the maximum allowable dose of that drug. The fuzzy output space is divided into five parts, namely “0-class”, “A-class”, “B-class”, “C-class”, and “D-class”. The ranges of the parameter values are shown in Table 3.

The number of fuzzy partitions of the fuzzy input space determines the maximum possible number of fuzzy rules. This fuzzy system has three inputs, namely ALT, AST, and EI, with 5, 5, and 3 fuzzy partitions respectively, so the maximum number of rules in the system is 5 × 5 × 3 = 75. The format of the fuzzy rules is as follows:

- Rule: IF the ALT level is grade I and the AST level is grade I and the experience index is normal

- THEN the strength of liver protection drug A is C-class, the strength of liver protection drug B is 0-class, and the strength of liver protection drug C is 0-class.

The fuzzy rules have three inputs and three outputs, so the system is a multiple-input, multiple-output (MIMO) system. The three output actions are mutually independent, so each rule can be split into three MISO sub-rules. Suppose the reliability of each rule is 1. According to experts’ knowledge of osteosarcoma, the fuzzy rule base is established as shown in Table 4.
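The maximum rule count of 5 × 5 × 3 = 75 can be checked by enumerating every combination of input labels. The sketch below only builds the antecedent combinations; the label lists follow the text, the variable names are illustrative, and the consequents of Table 4 are not reproduced.

```python
from itertools import product

ALT_GRADES = ["0", "I", "II", "III", "IV"]
AST_GRADES = ["0", "I", "II", "III", "IV"]
EI_LEVELS = ["normal", "not good", "serious"]

# Every (ALT, AST, EI) combination is one candidate rule antecedent.
rule_antecedents = list(product(ALT_GRADES, AST_GRADES, EI_LEVELS))

# Each MIMO rule splits into three MISO sub-rules, one per output Y1, Y2, Y3.
OUTPUTS = ["Y1", "Y2", "Y3"]
miso_sub_rules = [(ante, out) for ante in rule_antecedents for out in OUTPUTS]
```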

Experiments on T-S FNNs

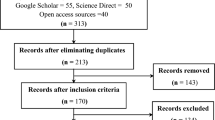

T-S FNNs has three outputs: Y1, Y2 and Y3, which should be split into three MISO systems. T-S model with three inputs and one output Y1 are used as illustrated in Fig. 1. The membership functions of input variable “ALT” is shown in Fig. 2. According to these rules depicted in Table 4, the ANFIS architecture is constructed in Fig. 3. ANFIS system was used at the MATLAB environment.

After the training samples and test samples are loaded, the fuzzy inference system is generated by the grid partition method, and the target error of 0.001 is set up. And the T-S fuzzy model is tested with varying numbers and types of input-output membership functions (MFs) of the model with constructed 75 rules. The input membership function is chosen for the trapezoid distribution (trapmf) or gaussian distribution (gaussmf) respectively. The fuzzy partition number is 5 or 3, the output membership function is linear (Linear) or constant (Constant).

The neural network performance criterion is measured by the mean absolute error (MAE) between the predicted outcome and the actual outcome. The collection of well-distributed, sufficient, and accurately measured input data is the basic requirement to obtain an accurate model. The 430 real samples have been collected from Shanghai No. 6 People’s Hospital of China. The samples had been randomly split into a training data set containing 380 samples and a test data set containing the remaining 50 samples. The experiments were carried out by using cross-validation method on all data sets, and the testing data sets were used to verify the accuracy and the effectiveness of the trained T-S model.

The model with the lowest test error is selected as the final model. This gives the Gaussian membership function (gaussmf) with a test error of 0.0297 for Y1, the trapezoidal membership function (trapmf) with a test error of 0.0243 for Y2, and gaussmf with a test error of 0.0538 for Y3. The detailed results of the final models for Y1, Y2 and Y3 are shown in Table 5.

Experiments on T-S FNNs based on RCDPSO/DPSO, CPSO and PSO optimization

The experiments are carried out on the RCDPSO/DPSO-, CPSO- and PSO-based T-S FNNs, as well as on the standard T-S fuzzy model. The same 380 training samples and 50 test samples are used, and the experiments were carried out using the cross-validation method on all data sets. The initial parameters are given in Table 6.

Each method is tested 10 times, and the mean is taken as the final result, which is given in Table 7. The learning curves (MSE and MAE) of the T-S fuzzy models based on RCDPSO/DPSO, CPSO and PSO are compared in Fig. 4.

The prediction errors and the deviations between the target output and the actual output for the 50 test samples are compared in Figs. 5 and 6.

As shown in Fig. 4, after the RCDPSO/DPSO-based T-S fuzzy model has been trained for 500 iterations, the MSE on the training samples reaches 0.00051 and the MAE reaches 0.01352, i.e. less than 2%. The MSE on the test samples is 0.0013 and the MAE is 0.0166, which shows good generalization performance. During training the error declines very quickly: the MAE falls below 0.04 within 30 iterations and then enters a stable phase, while the training MSE stabilizes after 300 iterations. In contrast, the training MAE of the PSO-based T-S fuzzy model is still 0.08 after 30 iterations and only reaches 0.0325 after 500 iterations. Under the same input conditions, its prediction accuracy is lower than that of the RCDPSO/DPSO and CPSO models. The test sample error of the RCDPSO/DPSO-based T-S method is relatively small, whereas the PSO method shows a relatively large error range. For the standard T-S fuzzy model, the mean errors of Y1, Y2 and Y3 on the training samples and test samples are 0.0348 and 0.0359, respectively. These errors are slightly higher than those of the PSO-based T-S model (0.0325 and 0.0356). The comparison of the changes in the membership functions (MFs) is shown in Fig. 7. Finally, after the optimal structure and parameters have been obtained by the RCDPSO/DPSO-based T-S fuzzy neural network, three rules extracted from the RCDPSO/DPSO-based T-S fuzzy model are listed as follows:

- Rule 1: IF x1 is μ(0.01, 0.01, 1.0, 1.5), x2 is μ(1.0, 1.5, 2.25, 2.75), x3 is μ(0, 0, 2, 3.5) THEN Y = 0.3109 x1 + 0.5264 x2 + 0.0213 x3 − 0.6728

- Rule 2: IF x1 is μ(1.0, 1.5, 2.25, 2.75), x2 is μ(1.0, 1.5, 2.25, 2.75), x3 is μ(2, 3.5, 6.5, 8) THEN Y = 0.2484 x1 + 0.1671 x2 + 0.0023 x3 + 0.0380

- Rule 3: IF x1 is μ(4.5, 5.5, 9.5, 10.5), x2 is μ(1.0, 1.5, 2.25, 2.75), x3 is μ(2, 3.5, 6.5, 8) THEN Y = −0.1015 x1 + 0.0318 x2 + 0.0156 x3 + 0.3951

where μ(a, b, c, d) is the linguistic value corresponding to the membership function.
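To make the three extracted rules concrete, the following sketch evaluates them for a given input vector. It assumes that μ(a, b, c, d) is a trapezoidal membership function with break points a ≤ b ≤ c ≤ d, that the firing strength of a rule is the product of its membership grades, and that the output is the firing-strength-weighted average of the rule consequents, as is common for T-S models. These choices, the function names, and the input values are illustrative assumptions. Only the three listed rules are included, so the result is not the output of the full trained model.

```python
def trapmf(x, a, b, c, d):
    """Trapezoidal membership grade of x for mu(a, b, c, d)."""
    if x < a or x > d:
        return 0.0
    if x < b:
        return (x - a) / (b - a)
    if x <= c:
        return 1.0
    return (d - x) / (d - c)


# Each rule: (membership parameters for x1, x2, x3), (coefficients of x1, x2, x3, constant)
RULES = [
    (((0.01, 0.01, 1.0, 1.5), (1.0, 1.5, 2.25, 2.75), (0, 0, 2, 3.5)),
     (0.3109, 0.5264, 0.0213, -0.6728)),
    (((1.0, 1.5, 2.25, 2.75), (1.0, 1.5, 2.25, 2.75), (2, 3.5, 6.5, 8)),
     (0.2484, 0.1671, 0.0023, 0.0380)),
    (((4.5, 5.5, 9.5, 10.5), (1.0, 1.5, 2.25, 2.75), (2, 3.5, 6.5, 8)),
     (-0.1015, 0.0318, 0.0156, 0.3951)),
]


def ts_output(x):
    """Weighted-average T-S output over the listed rules, or None if no rule fires."""
    numerator = 0.0
    denominator = 0.0
    for mfs, (c1, c2, c3, c0) in RULES:
        strength = 1.0
        for xi, params in zip(x, mfs):
            strength *= trapmf(xi, *params)  # product of membership grades (assumed)
        consequent = c1 * x[0] + c2 * x[1] + c3 * x[2] + c0
        numerator += strength * consequent
        denominator += strength
    return numerator / denominator if denominator > 0 else None


# Hypothetical input values chosen so that Rules 1 and 2 both fire partially.
print(ts_output((1.25, 2.0, 2.75)))
```

An input that lies outside every antecedent, such as x1 = 3.5, returns `None` here because none of the three listed rules covers it.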

Experimental comparison analysis of T-S FNNs based on RCDPSO/DPSO, CPSO and PSO optimization

To further compare and analyze the overall performance of the T-S FNNs based on RCDPSO/DPSO, CPSO and PSO optimization against the optimal solution (the actual value), the same 430 samples are used. In one trial, a certain number of samples, denoted as the training-size, are randomly selected from the data set as the training samples. And 40 samples are chosen as testing samples. The remaining samples are used as testing samples. Each neural network is then trained and tested 10 times, and the average is recorded as the final result. In this research, the size of the problem (example size) is varied over n = 30, 60, ..., 390; that is, the trials are run with the training-size ranging from 30 to 390.
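The trial protocol can be sketched as a loop over training-sizes. The sketch assumes that, in every repetition, 40 test samples are drawn first and the training samples are drawn from the rest; the source does not spell out this detail. The callable `train_and_score`, the seed, and the placeholder scorer are hypothetical stand-ins for training a network and measuring its test error.

```python
import random


def run_trials(samples, train_and_score, sizes=range(30, 391, 30),
               n_test=40, repeats=10, seed=0):
    """Return the average score per training-size over repeated random splits."""
    rng = random.Random(seed)
    results = {}
    for size in sizes:
        scores = []
        for _ in range(repeats):
            shuffled = list(samples)
            rng.shuffle(shuffled)
            test = shuffled[:n_test]                  # assumed: 40 held-out test samples
            train = shuffled[n_test:n_test + size]    # training-size samples from the rest
            scores.append(train_and_score(train, test))
        results[size] = sum(scores) / repeats
    return results


def dummy_score(train, test):
    """Placeholder scorer; a real one would train a model and return its test error."""
    return 1.0 / len(train)


results = run_trials(range(430), dummy_score)
print(sorted(results))
```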

From largest to smallest they are: T-S FNNs based on PSO, CPSO and RCDPSO/DPSO optimization. In this study, the optimal solution is known (the actual value). According to literature [48], the metric chosen is (Z-Z*)/Z* (where Z is the heuristic solution value and Z* is the actual value), which expresses the result as a proportion of the optimal solution.
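The metric (Z − Z*)/Z* can be computed as in the sketch below. The function names are illustrative, and the source does not state whether signed or absolute deviations are averaged; the sketch keeps the sign, exactly as the formula is written.

```python
def relative_deviation(z, z_star):
    """Deviation of the heuristic value z from the actual value z_star,
    expressed as a proportion of z_star."""
    if z_star == 0:
        raise ValueError("actual value Z* must be non-zero")
    return (z - z_star) / z_star


def mean_relative_deviation(predicted, actual):
    """Mean of the relative deviations over paired predicted and actual values."""
    ratios = [relative_deviation(z, zs) for z, zs in zip(predicted, actual)]
    return sum(ratios) / len(ratios)


# Hypothetical values: deviations of 10% and 20% average to 15%.
print(mean_relative_deviation([1.1, 2.4], [1.0, 2.0]))
```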

Figure 8 shows the mean value of this metric for each heuristic, again as a function of problem size n. It can be seen that for all heuristics, the metric increases nonlinearly with n.

Figure 9 shows the means of the log-transformed metric values. As with the previous figures, it appears that the T-S FNNs based on RCDPSO/DPSO optimization outperform the T-S FNNs based on CPSO optimization, which in turn outperform the T-S FNNs based on PSO optimization for all n.

The metrics of the three heuristics appear to differ from one another and to depend on n.

Figs. 8 and 9 show that the deviation of the T-S FNNs based on RCDPSO/DPSO optimization is the smallest across the different training-sizes, which means that this network is more stable and robust than the T-S FNNs based on CPSO and PSO optimization regardless of the training-size. Therefore, the T-S FNNs based on RCDPSO/DPSO optimization can achieve relatively high accuracy and provide an effective decision support tool for handling fuzzy and uncertain variances of CPs.

The comparison of experimental results with other methods

To verify the effectiveness of FNNs in handling variances of CPs, the standard Mamdani fuzzy neural network is also tested with the same training and test data. The experiments are divided into the following three scenarios:

- (1) The membership functions of the input variables are known in advance, and the FNN is trained only to adjust the “weights” so as to minimize the error. This method is labeled Mamdani_km;

- (2) The fuzzy segmentation and membership functions of the input variables are determined according to experts’ experience. However, the parameters of the membership functions can only be estimated approximately, so all these parameters and the weights need to be adjusted during training of the fuzzy neural network. This method is labeled Mamdani_am;

- (3) The fuzzy segmentation and membership functions of the input variables are known, but the parameters of the fuzzy membership functions are completely unknown and are generated randomly. This method is labeled Mamdani.

Similarly, under the same parameter conditions, the RCDPSO/DPSO-based T-S fuzzy models are labeled RCDPSO/DPSO_km, RCDPSO/DPSO_am and RCDPSO/DPSO, respectively. The results and the segmental comparison diagram are shown in Table 7 and Fig. 10. The test error of the “Mamdani” fuzzy model is 0.3098, which is too large to be shown in Fig. 10.

Experimental results can be summarized as follows:

- When the input variables all have random or approximate initial parameters, the mean square error (MSE) and the mean absolute error (MAE) of the RCDPSO/DPSO-based T-S FNNs model are the smallest. The error of the CPSO-based T-S FNNs is higher than that of the RCDPSO/DPSO-based one, but lower than those of the PSO-based T-S FNNs and the standard T-S FNNs model;

- When the membership functions of the inputs are known and determined according to experts’ experience, the RCDPSO/DPSO-based T-S fuzzy model gives the best result, but it takes a long time. In this case the standard Mamdani or T-S FNNs model is another good choice, because it can make full use of existing expert knowledge, which significantly reduces the uncertainty of the neural network.

All these results indicate that the proposed RCDPSO/DPSO-based T-S FNNs is better in efficiency and precision for variances handling of CPs. Although the RCDPSO/DPSO_km-based T-S FNNs provides smaller MSE and MAE, the initial parameters of the membership functions are often unknown, which makes it infeasible in a real-life situation.

The comparison of diagnosis accuracy rate and discussion

The liver is an important organ for metabolism and detoxification: it participates in the metabolism of protein, fat and glucose and in the detoxification of drugs. During osteosarcoma preoperative chemotherapy in particular, the dosage strength and the kinds of liver-protecting drugs are very important for patients with liver damage variances, because any excessive or unsuitable drug can increase the burden on the liver and even damage liver function. Unsuitable drugs can cause liver damage for the following reasons:

- (1) Some drugs can directly damage the liver before hepatic metabolism;

- (2) Some drugs can damage the liver during hepatic metabolism;

- (3) During osteosarcoma preoperative chemotherapy, patients are sensitive to any drug.

Therefore, for liver poisoning variances in osteosarcoma preoperative chemotherapy, too many kinds or excessive doses of liver protection drugs can aggravate the burden on the liver and even cause complicating diseases. Proper use of liver protection drugs can not only cure patients’ liver poisoning variances but also reduce the medical expense of the osteosarcoma CP.

In this paper, to validate the proposed method, the accuracy of using liver protection drugs can be determined by the computation of the diagnosis accuracy rate.

Here, a correct decision means that the predicted value and the actual value belong to the same “class”. For example, suppose the actual dosage strength of liver-protecting drugs for patient “Jack” is class “A”. If the dosage strength predicted for “Jack” by the proposed method is also class “A”, the decision is correct. By this criterion, the network model is then tested in 10 trials with the given test-set-size. The same 50 testing samples are used. The final accuracy rate for each test-set-size (10, 20 and 50) is the average accuracy rate on the testing samples over the 10 trials. Table 8 gives the diagnosis accuracy rates obtained with different train-set-sizes. From Table 8, the average diagnosis accuracy rates of the different models are 98.19%, 95.33%, 84.67%, 99.84%, 99.16%, 81.20%, 94.24%, 88.72%, 82.95%, and 81.57%, respectively. The diagnosis accuracy rate of the RCDPSO/DPSO-based T-S fuzzy model is 98%. Meanwhile, the diagnosis accuracy rate of the standard T-S fuzzy model (the average value of Y1, Y2 and Y3) is 82.95%.
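The class-matching criterion can be sketched as follows, assuming the diagnosis accuracy rate is the percentage of test cases whose predicted and actual values fall into the same class. The class boundaries, class labels, and sample values below are hypothetical; the source does not list the thresholds it uses.

```python
from bisect import bisect_right


def to_class(value, boundaries, labels):
    """Map a continuous value to a class label using sorted boundaries."""
    return labels[bisect_right(boundaries, value)]


def diagnosis_accuracy_rate(predicted, actual, boundaries, labels):
    """Percentage of cases whose predicted and actual values share a class."""
    correct = sum(
        to_class(p, boundaries, labels) == to_class(a, boundaries, labels)
        for p, a in zip(predicted, actual)
    )
    return 100.0 * correct / len(actual)


# Hypothetical class boundaries and labels for a normalized dosage strength.
BOUNDARIES = [0.33, 0.66]
LABELS = ["A", "B", "C"]

rate = diagnosis_accuracy_rate(
    predicted=[0.1, 0.5, 0.9, 0.4],
    actual=[0.2, 0.6, 0.5, 0.3],
    boundaries=BOUNDARIES,
    labels=LABELS,
)
print(rate)
```

In this toy example the first two cases match (A/A and B/B) and the last two do not (C/B and B/A), so half of the decisions count as correct.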

In particular, the accuracy of the proposed RCDPSO/DPSO-based T-S fuzzy model and of the RCDPSO/DPSO_km-based T-S fuzzy model exceeds that of the standard T-S fuzzy model and the standard Mamdani fuzzy model by more than 15%. Moreover, the T-S FNNs with PSO or improved PSO optimization achieve better results than the standard T-S FNNs and the standard BP neural network for the variances handling of CPs. Because the input variables usually have random or approximate initial parameters, the proposed RCDPSO/DPSO-based T-S FNNs model is often needed, although the RCDPSO/DPSO_km-based T-S FNNs can obtain a higher diagnosis accuracy rate. These results indicate that the proposed RCDPSO/DPSO-based T-S FNNs model is effective in handling CP variances.

Conclusion

In this paper, a new variances handling method for CPs is proposed, which is based on T-S FNNs integrated with RCDPSO/DPSO optimization. The parameter optimization consists of two phases: first, all structure and antecedent parameters are adjusted by the integrated RCDPSO/DPSO optimization; second, all consequent parameters are estimated through the Kalman filtering algorithm. During the process of cooperative evolution with a random execution sequence, a decomposing algorithm is adopted for the particles with the best performance. This not only ensures the convergence rate but also improves the global search performance of the algorithm. Moreover, the exponential smoothing mutation mechanism is adopted to further improve the solutions found by the RCDPSO optimization. Therefore, the optimal structure and parameters can be achieved simultaneously by the novel hybrid learning algorithm.

To demonstrate the performance of the proposed method, a case study on liver poisoning variances in osteosarcoma preoperative chemotherapy is tested. The results demonstrate that the RCDPSO/DPSO-based T-S FNNs achieve better accuracy, efficiency and generalization ability than other existing methods. Moreover, based on the trained RCDPSO/DPSO-based T-S FNNs, the interrelated rules can be extracted and used to provide doctors or healthcare professionals with guidelines for the management and control of complex CP variances. This method is also applicable to other CP variances (e.g. hemorrhage variances in cesarean section CPs). Thus, the RCDPSO/DPSO-based T-S FNNs is verified to be an effective decision support tool for handling fuzzy and uncertain variances of CPs.

References

Bragato, L., and Jacobs, K., Care pathways: the road to better health services. J. Health Organ. Manage. 17(3):164–180, 2003.

Cheah, J., Development and implementation of a clinical pathway programme in an acute care general hospital in Singapore. Int. J. Qual. Health Care 12:403–412, 2000.

Hunter, B., and Segrott, J., Re-mapping client journeys and professional identities: a review of the literature on clinical pathways. Int. J. Nurs. Stud. 45(4):608–625, 2008.

Wakamiya, S. J., and Yamauchi, K., What are the standard functions of electronic clinical pathways? Int. J. Med. Inform. 78(8):543–550, 2009.

Du, G., Jiang, Z. B., Diao, X. D., Ye, Y., Yao, Y., Modeling, variation monitoring, analyzing, reasoning for intelligently reconfigurable clinical pathway. Proceedings of the IEEE International Conference on Service Operations, Logistics and Informatics, 85–90, Chicago, IL, USA, 2009.

Wigfield, A., and Boon, E., Critical care pathway development: the way forward. Br. J. Nurs. 5(12):732–735, 1996.

Cheah, J., Clinical pathways: changing the face of client care delivery in the next millennium. Clinician Manag. 7(78):78–84, 1998.

Price, M. B., Jones, A., Hawkins, J. A., et al., Critical pathways for postoperative care after simple congenital heart surgery. AJMC 5:185–192, 1999.

Atwal, A., and Caldwell, K., Do multidisciplinary integrated care pathways improve interprofessional collaboration? Scand. J. Caring Sci. 16(4):360–367, 2002.

Bryan, S., Holmes, S., Prostlethwaite, D., and Carty, N., The role of integrated care pathways in improving the client experience. Prof. Nurse 18(2):77–79, 2002.

Caminiti, C., Scoditti, U., Diodati, F., and Passalacqua, R., How to promote, improve and test adherence to scientific evidence in clinical practice. BMC Health Serv. Res. 5(62):1–11, 2005.

Wakamiya, S., and Yamauchi, K., A new approach to systematization of the management of paper-based clinical pathways. Comput. Meth. Programs Biomed. 82:169–176, 2006.

Ye, Y., Jiang, Z. B., Diao, X. D., Yang, D., and Du, G., An ontology-based hierarchical semantic modeling approach to clinical pathway workflows. Comput. Biol. Med. 39(8):722–732, 2009.

Ye, Y., Jiang, Z. B., Diao, X. D., Du, G., Knowledge-based hybrid variance handling for patient care workflows based on clinical pathways. Proceedings of the IEEE International Conference on Service Operations, Logistics and Informatics, 13–18, Chicago, IL, USA, 2009.

Ye, Y., Jiang, Z. B., Diao, X. D., Du, G., A Semantics-Based Clinical Pathway Workflow and Variance Management Framework, 2008 IEEE International Conference on Service Operations and Logistics, and Informatics, 758–762, Bei Jing, China, 2008.

Du, G., Jiang, Z. B., Diao, X. D., Sun, Y. J., Ye, Y., and Yao, Y., Adaptive workflow engine based on rule for clinical pathway. Journal of Shanghai Jiao Tong University 43(7):1021–1026, 2009.

Er, O., Yumusak, N., and Temurtas, F., Chest diseases diagnosis using artificial neural networks. Expert Syst. Appl. 37(12):7648–7655, 2010.

Chen, Y., Yang, B., Abraham, A., and Peng, L., Automatic design of hierarchical Takagi–Sugeno fuzzy systems using evolutionary algorithms. IEEE Trans. Fuzzy Syst. 15(3):385–397, 2007.

Yoon, Y., Guimaraes, T., and Swales, G., Integrating artificial neural networks with rule-based expert systems. Decis. Support Syst. 11(5):497–507, 1994.

Takagi, T., and Sugeno, M., Fuzzy identification of systems and its applications to modeling and control. IEEE Trans. Syst. Man Cybern. 15:116–132, 1985.

Lin, F. J., Lin, C. H., and Shen, P. H., Self-constructing fuzzy neural network speed controller for permanent-magnet synchronous motor drive. IEEE Trans. Fuzzy Sys. 9(5):751–759, 2001.

Jang, J. S. R., ANFIS: Adaptive network based fuzzy inference system. IEEE Trans. Syst. Man Cybern. 23(3):665–684, 1993.

Pedrycz, W., and Reformat, M., Evolutionary fuzzy modeling. IEEE Trans. Fuzzy Syst. 11(5):652–665, 2003.

Oh, S. K., Pedrycz, W., and Park, H. S., Hybrid identification in fuzzy neural networks. Fuzzy Sets Syst. 138:399–426, 2003.

Wang, L., and Yen, J., Extracting fuzzy rules for system modeling using a hybrid of genetic algorithms and Kalman filter. Fuzzy Sets Syst. 101:353–362, 1999.

Wang, H., Kwong, S., Jin, Y., Wei, W., and Man, K. F., Multi-objective hierarchical genetic algorithm for interpretable fuzzy rule-based knowledge extraction. Fuzzy Sets Syst. 149(1):149–186, 2005.

Tang, A. M., Quek, C., and Ng, G. S., GA-TSKfnn: parameters tuning of fuzzy neural network using genetic algorithms. Expert Syst. Appl. 29:769–781, 2005.

Lin, C. J., and Xu, Y. J., A self-adaptive neural fuzzy network with group-based symbiotic evolution and its prediction applications. Fuzzy Sets Syst. 157:1036–1056, 2006.

Jelodar, M. S., Kamal, M., Fakhraie, S. M., Ahmadabadi, M. N., SOPC-based parallel genetic algorithm. Proceedings of the IEEE Congress on Evolutionary Computation, Vancouver, BC, Canada, 2006.

Kennedy, J., Eberhart, R. C., Particle swarm optimization. Proceedings of the IEEE International Conference on Neural Networks. Piscataway, 1942–1948, 1995.

Fourie, P. C., and Groenwold, A. A., The particle swarm optimization algorithm in size and shape. Struct. Multidiscip. O. 23(4):259–267, 2002.

Han, M., Sun, Y. N., and Fan, Y. N., An improved fuzzy neural network based on T-S model. Expert Syst. Appl. 34:2905–2920, 2008.

Khosla, A., Kumar, S., Aggarwal, K. K., A framework for identification of fuzzy models through particle swarm optimization, Proceedings of the IEEE Indicon Conference, 388–391, Chennai, India, 2005.

Khosla, A., Kumar, S., Ghosh, K. R., A comparison of computational efforts between particle swarm optimization and genetic algorithm for identification of fuzzy models, in: Annual Conference of the North American Fuzzy Information Processing Society-NAFIPS, 245–250, 2007.

Shoorehdeli, M. A., Teshnehlab, M., and Sedigh, A. K., Training ANFIS as an identifier with intelligent hybrid stable learning algorithm based on particle swarm optimization and extended Kalman filter. Expert Syst. Appl. 160:922–948, 2009.

Shi, Y., and Eberhart, R. C., Empirical study of particle swarm optimization. Proc. IEEE Int. Conf. Evolutionary Computation 3:101–106, 1999.

Ratnaweera, A., Halgamuge, S. K., and Watson, H. C., Self-organizing hierarchical particle swarm optimizer with time-varying acceleration coefficient. IEEE Trans. Evol. Comput. 8(3):240–255, 2004.

Lovbjerg, M., Rasmussen, T. K., Krink, T., Hybrid particle swarm optimizer with breeding and sub-populations. Proceedings of the Genetic and Evolutionary Computation Conference (GECCO). San Francisco, CA, July 2001.

Xie, X. F., Zhang, W. J., et al., Optimizing semiconductor devices by self-organizing particle swarm. Congress on Evolutionary Computation, Oregon, USA, 2017–2022, 2004.

He, R., Wang, Y., et al., An improved particle swarm optimization based on self-adaptive escape velocity. JSW 16(12):2036–2044, 2005.

Lin, C.-J., Wang, J.-G., and Lee, C.-Y., Pattern recognition using neural-fuzzy networks based on improved particle swam optimization. Expert Syst. Appl. 36:5402–5410, 2009.

Lin, C.-J., An efficient immune-based symbiotic particle swarm optimization learning algorithm for TSK-type neuro-fuzzy networks design. Fuzzy Sets Syst. 159:2890–2909, 2008.

Van den Bergh, F., and Engelbrecht, A. P., A cooperative approach to particle swarm optimization. IEEE Trans. Evol. Comput. 8(3):225–239, 2004.

Niu, B., Zhu, Y. L., He, X. X., and Shena, H., A multi-swarm optimizer based fuzzy modeling approach for dynamic systems processing. Neurocomputing 71:1436–1448, 2008.

Higashi, N., Iba, H., Particle swarm optimization with Gaussian mutation. Proceedings of the IEEE Swarm Intelligence Symp. Indianapolis: IEEE Inc, 72–79, 2003.

Kennedy, J., Eberhart, R. C., A discrete binary version of the particle swarm algorithm, systems, man, and cybernetics, Computational Cybernetics and Simulation IEEE International Conference, 5 (12–15): 4104–4108, 1997.

Simon, D., Training fuzzy systems with the extended Kalman filter. Fuzzy Sets Syst. 132:189–199, 2002.

Coffin, M., and Saltzman, Statistical analysis of computational tests of algorithms and heuristics. INFORMS J. Comput. 12(1):24–44, 2000.

Acknowledgement

The work described in this paper was supported by a Research Grant from the National Natural Science Foundation of China (60774103) and the Major Program Development Fund of SJTU. We would also like to thank the entire medical staff of Shanghai No. 6 People’s Hospital for collecting the real data and for helpful discussions.

The authors would like to express sincere appreciation to the journal editor and two anonymous referees for their detailed and helpful comments to improve the quality of the paper.


Cite this article

Du, G., Jiang, Z., Diao, X. et al. Variances Handling Method of Clinical Pathways Based on T-S Fuzzy Neural Networks with Novel Hybrid Learning Algorithm. J Med Syst 36, 1283–1300 (2012). https://doi.org/10.1007/s10916-010-9589-6


DOI: https://doi.org/10.1007/s10916-010-9589-6