Abstract

In this paper, we locate superconvergence points for the approximation of the Riesz derivative of order \(\alpha \). We use classical Lobatto-type polynomials when \(\alpha \in (0,1)\) and generalized Jacobi functions (GJF) for arbitrary \(\alpha > 0\). For the former, the superconvergence points are the zeros of the Riesz fractional derivative of the leading term in the truncated Legendre–Lobatto expansion. For different \(\alpha \), the convergence rate at these points is observed to be at least \(O(N^{-2})\) better than the optimal global convergence rate. Furthermore, the interpolation is generalized to Riesz derivatives of order \(\alpha > 1\) with the help of GJF, which handle the boundary singularities well. The well-posedness, convergence and superconvergence properties are analyzed theoretically. For the GJF interpolation, the gain in the convergence rate at the superconvergence points is shown to be \(O(N^{-(\alpha +3)/2})\) for \(\alpha \in (0,1)\) and \(O(N^{-2})\) for \(\alpha > 1\). Finally, we apply our findings to solving model FDEs and observe that the convergence rates are indeed much better at the predicted superconvergence points.


1 Introduction

Over the last two decades, the theory of fractional differential equations (FDEs) has been studied extensively [15, 17, 18, 28]. In particular, the Riesz fractional derivative has received wide attention, since it appears frequently in spatial fractional models such as various diffusion models. Finite-difference schemes for approximating fractional derivatives and solving linear or nonlinear FDEs are presented in [7, 25,26,27, 30, 34, 40]. Other works apply the finite element method and the discontinuous Galerkin (DG) method to Riesz FDEs. Some of them focus on theoretical analysis [3, 19, 22], while others mainly aim at improving algorithms, for example through fast solvers [11, 20].

On the other hand, spectral methods are promising candidates for solving FDEs, since their global nature fits well with the nonlocal definition of fractional operators. Spectral methods that use integer-order orthogonal polynomials as basis functions [4, 8, 10, 12, 13, 33, 37, 42] greatly reduce the memory cost of discretizing fractional derivatives. The authors of [5, 9, 35, 36] designed suitable bases to handle the singularities that typically arise in fractional problems. In particular, Mao et al. [16] proposed a spectral Petrov–Galerkin method based on generalized Jacobi functions for solving Riesz FDEs, and provided a rigorous error analysis.

In this work, we study the superconvergence phenomenon for some spectral interpolants of the Riesz fractional derivative. Superconvergence of the h-version finite element method is well studied and understood, see, e.g., [14, 29]. Superconvergence of polynomial spectral interpolation and spectral methods for integer-order derivatives has been studied more recently, see, e.g., [31, 32, 38, 39]. For fractional-order derivatives, Zhao and Zhang recently studied the Riemann–Liouville case [41]. They systematically identified superconvergence points in the spirit of earlier work on integer-order spectral methods [39].

Compared with the integer-order case, a major difficulty in investigating superconvergence of spectral methods for fractional problems lies in the nonlocality of fractional operators and the complicated form of fractional derivatives. A second challenge is the construction of a good basis for the spectral scheme. Given a suitable basis, one can then analyze the approximation error in order to locate the superconvergence points.

One objective of this work is to consider Lobatto-type polynomial interpolants of a sufficiently smooth function and to identify the points where the values of the Riesz fractional derivative of order \(\alpha \) are superconvergent. Since \(x=\pm 1\) are interpolation points for the Legendre–Lobatto interpolation, the global error does not blow up after taking a derivative of order \(\alpha \in (0,1)\). In this case, the superconvergence points are the zeros of the Riesz fractional derivative of the corresponding Legendre–Lobatto polynomial. Furthermore, a series of numerical experiments shows that the convergence rate at these points is at least \(O(N^{-2})\) better than the global convergence rate.

Compared with the Riemann–Liouville fractional derivative, the main difficulty in studying the Riesz case is that the left and right fractional derivatives must be handled simultaneously; see (2.2)–(2.3). Fractional derivatives of order \(\alpha >1\) create stronger singularities at \(x=\pm 1\), which rule out the use of Lobatto-type polynomials. To handle these stronger singularities, we introduce fractional interpolation using generalized Jacobi functions (GJF) in Sect. 4, and we analyze its well-posedness, convergence and superconvergence properties theoretically. Let \(0<\alpha <2\), and suppose the given function is GJF-interpolated at the zeros of the Jacobi polynomial \(P_{N+1}^{\frac{\alpha }{2},\frac{\alpha }{2}}(x)\). Then the superconvergence points for the fractional derivative of order \(\alpha \) are exactly the interpolation points. Moreover, the convergence rate at these points is better than the global convergence rate by \(O(N^{-\frac{\alpha +3}{2}})\) for \(0<\alpha <1\) and by \(O(N^{-2})\) for \(1<\alpha <2\).

To demonstrate the usefulness of these superconvergence points, we use the GJF as a basis to solve a model fractional differential equation by both Petrov–Galerkin and spectral collocation methods. We observe that the convergence rates at the predicted superconvergence points are indeed much better than the best possible global rates.

The organization of this paper is as follows. In Sect. 2, definitions and properties of fractional derivatives, Jacobi polynomials, generalized Jacobi functions, and Gegenbauer polynomials are introduced. Section 3 is about the Legendre–Lobatto polynomial interpolation and Sect. 4 deals with the GJF fractional interpolation, along with numerical examples. Section 5 considers some applications of superconvergence theory. Finally, we draw some conclusions in Sect. 6.

2 Preliminaries

We begin with some basic definitions and properties. Throughout the paper, \({\mathbb {Z}}^+\) denotes the set of positive integers and \({\mathbb {N}}\) the set of nonnegative integers. \({\mathcal {M}}_{n}({\mathbb {R}})\) denotes the space of real \(n \times n\) matrices.

2.1 Definitions and Properties of Fractional Derivatives

First, we recall the definitions and properties of Riesz fractional derivatives.

Definition 2.1

For \(\gamma \in (0,1)\), the left and right fractional integrals are defined, respectively, as follows:

Then for \(\alpha \in (k-1,k)\), where \(k \in {\mathbb {Z}}^{+}\), the left and right Riemann–Liouville derivatives are defined respectively by:

where \(D^k:=\frac{d^k}{dt^k}\) is the k-th (weak) derivative.
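For reference, the operators of Definition 2.1 usually take the following form, written here in a common notation that may differ from the symbols used in (2.1). We also record the power rule that follows from them. Both are standard facts, not reproduced from the paper's own displays.

\[
{}_{-1}I_x^{\gamma}u(x)=\frac{1}{\Gamma(\gamma)}\int_{-1}^{x}\frac{u(t)}{(x-t)^{1-\gamma}}\,dt,\qquad
{}_{x}I_{1}^{\gamma}u(x)=\frac{1}{\Gamma(\gamma)}\int_{x}^{1}\frac{u(t)}{(t-x)^{1-\gamma}}\,dt,
\]
\[
{}_{-1}D_x^{\alpha}u(x)=D^{k}\,{}_{-1}I_x^{k-\alpha}u(x),\qquad
{}_{x}D_{1}^{\alpha}u(x)=(-1)^{k}D^{k}\,{}_{x}I_{1}^{k-\alpha}u(x),
\]
\[
{}_{-1}D_x^{\alpha}(1+x)^{\beta}=\frac{\Gamma(\beta+1)}{\Gamma(\beta+1-\alpha)}(1+x)^{\beta-\alpha},\qquad
{}_{x}D_{1}^{\alpha}(1-x)^{\beta}=\frac{\Gamma(\beta+1)}{\Gamma(\beta+1-\alpha)}(1-x)^{\beta-\alpha},\qquad \beta>-1.
\]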

Definition 2.2

For \(\gamma \in (0,1)\), the one-dimensional Riesz potentials are defined as follows:

where sign(x) is the sign function, \(c_1=\frac{1}{2 \sin (\pi \gamma /2)}\) and \(c_2=\frac{1}{2 \cos (\pi \gamma /2)}\). Then, for \(\alpha \in (k-1,k)\), we define the Riesz fractional derivative:

Definition 2.3

For any positive real number \(\alpha \in (k-1,k)\), we define:

and

Let us recall the Leibniz rule for fractional derivatives.

Lemma 2.4

(see [21], Chap.2) Let \(\alpha \in {\mathbb {R}}^+\), \(n \in {\mathbb {Z}}^+\), and \(\alpha \in (n-1,n)\). If both f(x) and g(x) along with all their derivatives are continuous in \([-1,1]\), then the Leibniz rule for the left Riemann–Liouville fractional differentiation takes the following form

By changing variables, we can derive the Leibniz rule for the right Riemann–Liouville derivative.

Lemma 2.5

(see [23], Chap.15) Under the same conditions as Lemma 2.4, the Leibniz rule for the right Riemann–Liouville differentiation takes the following form

Lemma 2.6

(see [21], Chap.2) Let \(\gamma \), \(\eta >0\), and let u(x) be a continuous function. Then for any \(x \geqslant -1\), we have:

and

2.2 Jacobi Polynomials and Generalized Jacobi Functions

We start with the definitions of generalized Jacobi functions and Gegenbauer polynomials.

Definition 2.7

For \(\alpha >-1\), the generalized Jacobi functions (GJF) are defined as follows:

where \(P_n^{\alpha ,\alpha }(x)\) is the Jacobi polynomial of degree n, orthogonal with respect to the weight function \(\omega (x)=(1-x)^{\alpha }(1+x)^{\alpha }\).

Definition 2.8

We define the Gegenbauer polynomials in terms of Jacobi polynomials:

By [32], the Gegenbauer polynomials have the following properties:

and

where \(D_{\lambda }\) is a positive constant independent of n; and

where \({\mathcal {E}}_{\rho }\) is the Bernstein ellipse defined in (3.4). Setting \(\lambda = \frac{\alpha +1}{2}\), we have:

and

consequently

where \(C_{N+1}^{(\frac{\alpha +1}{2})}(x)=c_{(\frac{\alpha +1}{2},N+1)} P_{N+1}^{\frac{\alpha }{2},\frac{\alpha }{2}}(x)\).

The following lemma shows the connection between the GJF and Riesz fractional derivatives.

Lemma 2.9

(see [16], Theorem 2) Let \(\alpha \in (k-1,k)\), \(k \in {\mathbb {Z}}^+\), then we have

moreover, for \(m=0,1,\ldots ,k-1\),

where \(\nu =o\) and \(C(k)=(-1)^{\frac{k-1}{2}}\) if k is odd, and \(\nu =e\) and \(C(k)=(-1)^{\frac{k}{2}}\) if k is even. In particular:

3 Legendre–Lobatto Interpolation for \(0<\alpha <1\)

According to Definition 2.2, the Riesz fractional derivative of order \(\alpha \) is expressed through the left and right Riemann–Liouville fractional derivatives of the same order, which leads to singularities at \(x=\pm 1\). To remove these singularities, we interpolate u(x) by Lobatto-type polynomials, in particular the Legendre–Lobatto polynomials. Since \(x=\pm 1\) are then two of the interpolation points, they are simple zeros of the error \(u(x)-u_N(x)\). This guarantees that the global error remains finite after taking derivatives of order \(\alpha \in (0,1)\). Throughout this section, we assume that u(x) is analytic on \([-1,1]\) and can be analytically extended to a suitable Bernstein ellipse.
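The sketch below shows concretely why simple zeros at \(x=\pm 1\) suffice when \(\alpha \in (0,1)\) but not when \(\alpha >1\). It applies the standard power rule for the left Riemann–Liouville derivative to the local behavior \((1+x)^{\beta }\) of the error near \(x=-1\): \(\beta =0\) means no zero, and \(\beta =1\) means a simple zero. The function name `left_rl_power` is hypothetical, and the usual normalization of the left derivative is assumed. Near \(x=1\), the right derivative behaves the same way with \(1-x\) in place of \(1+x\).

```python
import math

def left_rl_power(beta, alpha, x):
    """Left Riemann-Liouville derivative of order alpha of (1+x)**beta, for x > -1.

    Standard power rule (assumes beta > -1 and alpha not an integer):
        D^alpha (1+x)^beta = Gamma(beta+1) / Gamma(beta+1-alpha) * (1+x)^(beta-alpha).
    """
    return math.gamma(beta + 1) / math.gamma(beta + 1 - alpha) * (1 + x) ** (beta - alpha)

x_near = -1 + 1e-8   # a point very close to the left endpoint
for alpha in (0.5, 1.5):
    for beta in (0, 1, 2):
        print(f"alpha={alpha}, beta={beta}: {left_rl_power(beta, alpha, x_near):.3e}")
```

With \(\beta =1\), the derivative tends to zero at the endpoint for \(\alpha =0.5\). For \(\alpha =1.5\), it grows like \((1+x)^{-1/2}\), which is the obstruction discussed at the start of Sect. 4.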

3.1 Interpolation of Analytic Functions

Let \(\{ x_i \}_{i=0}^N\) be the \(N+1\) interpolation points, where \(-1 \leqslant x_0<x_1< \cdots <x_N \leqslant 1\). Let \(u_N(x) \in {\mathbb {P}}_{N}([-1,1])\) be the interpolation polynomial such that

We also define

If \(\{ x_i \}_{i=0}^N\) are the zeros of the Legendre–Lobatto polynomial of degree \(N+1\), then

where \(L_{n}(x)\) represents the Legendre polynomial of degree n, and the right hand side is exactly the Legendre–Lobatto polynomial of degree \((N+1)\).
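The zeros of \(L_{N-1}-L_{N+1}\) are \(\pm 1\) together with the \(N-1\) zeros of \(L_N'\). This follows from the classical identity \((1-x^2)L_N'(x)=\frac{N(N+1)}{2N+1}(L_{N-1}(x)-L_{N+1}(x))\). The sketch below computes these \(N+1\) Legendre–Gauss–Lobatto points with the three-term recurrence and Newton's method started from Chebyshev–Gauss–Lobatto points. This is one common approach, not necessarily the one the authors used; the helper names are hypothetical.

```python
import math

def legendre(n, x):
    """Return (L_n(x), L_n'(x)) via the three-term recurrence; n >= 1."""
    p_prev, p = 1.0, x        # L_0, L_1
    dp_prev, dp = 0.0, 1.0    # L_0', L_1'
    for k in range(1, n):
        p_next = ((2 * k + 1) * x * p - k * p_prev) / (k + 1)
        dp_next = dp_prev + (2 * k + 1) * p      # L_{k+1}' = L_{k-1}' + (2k+1) L_k
        p_prev, p, dp_prev, dp = p, p_next, dp, dp_next
    return p, dp

def omega_lgl(N, x):
    """L_{N-1}(x) - L_{N+1}(x), proportional to (1 - x^2) L_N'(x); requires N >= 2."""
    return legendre(N - 1, x)[0] - legendre(N + 1, x)[0]

def lgl_nodes(N, tol=1e-15, maxit=50):
    """The N+1 Legendre-Gauss-Lobatto points: -1, the zeros of L_N', and 1."""
    nodes = [-1.0]
    for j in range(1, N):
        x = -math.cos(math.pi * j / N)            # Chebyshev-Gauss-Lobatto initial guess
        for _ in range(maxit):
            p, dp = legendre(N, x)
            d2p = (2 * x * dp - N * (N + 1) * p) / (1 - x * x)   # from the Legendre ODE
            step = dp / d2p                       # Newton step for L_N'(x) = 0
            x -= step
            if abs(step) < tol:
                break
        nodes.append(x)
    nodes.append(1.0)
    return nodes

print([round(x, 6) for x in lgl_nodes(4)])
```

For \(N=4\), the interior points are \(0\) and \(\pm \sqrt{3/7}\).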

If u(x) is analytic on \([-1,1]\), it is well known that u(x) can be analytically extended to the domain enclosed by a so-called Bernstein ellipse with foci \(\pm 1\):

where \(i=\sqrt{-1}\) is the imaginary unit, \(\rho \) is the sum of semimajor and semiminor axes. Then we have the following bounds for \({\mathcal {L}}({\mathcal {E}}_{\rho })\), the perimeter of the ellipse, and \({\mathcal {D}}_{\rho }\), the shortest distance from \({\mathcal {E}}_{\rho }\) to \([-1,1]\) respectively:

For convenience, we define:

To study superconvergence, we use Hermite's contour integral formula [1, 2, 6], which gives the following pointwise error expression:

The following analysis is based on this error expression.
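The classical Hermite formula states \(u(x)-u_N(x)=\frac{1}{2\pi i}\oint _{{\mathcal {E}}_{\rho }}\frac{\omega _{N+1}(x)\,u(z)}{\omega _{N+1}(z)(z-x)}\,dz\), with \(\omega _{N+1}(z)=\prod _j (z-x_j)\). We assume that (3.5) has this form. The sketch below checks the formula numerically. It applies the trapezoidal rule in \(\theta \) on \(z=(\rho e^{i\theta }+\rho ^{-1}e^{-i\theta })/2\) and compares the result with the directly computed interpolation error. The test function \(u=e^z\), the choice \(\rho =2\), and the use of Chebyshev–Lobatto rather than Legendre–Lobatto nodes are illustrative assumptions; the formula holds for any distinct nodes.

```python
import cmath
import math

def node_poly(nodes, z):
    """omega_{N+1}(z) = prod_j (z - x_j); works for real or complex z."""
    w = 1.0
    for xj in nodes:
        w *= z - xj
    return w

def lagrange_eval(nodes, values, x):
    """Value at x of the polynomial interpolating (nodes, values)."""
    total = 0.0
    for j, xj in enumerate(nodes):
        lj = 1.0
        for k, xk in enumerate(nodes):
            if k != j:
                lj *= (x - xk) / (xj - xk)
        total += values[j] * lj
    return total

def hermite_error(u, nodes, x, rho=2.0, M=128):
    """(1/(2 pi i)) * contour integral of omega(x) u(z) / (omega(z) (z - x)) over E_rho,
    approximated by the trapezoidal rule in theta (spectrally accurate for periodic integrands)."""
    acc = 0.0
    for m in range(M):
        e = cmath.exp(2j * math.pi * m / M)
        z = (rho * e + 1 / (rho * e)) / 2          # point on E_rho
        dz = 1j * (rho * e - 1 / (rho * e)) / 2    # dz/dtheta
        acc += u(z) / (node_poly(nodes, z) * (z - x)) * dz
    integral = acc * (2 * math.pi / M) / (2j * math.pi)
    return (node_poly(nodes, x) * integral).real

N = 6
nodes = [-math.cos(math.pi * j / N) for j in range(N + 1)]
x = 0.3
direct = math.exp(x) - lagrange_eval(nodes, [math.exp(t) for t in nodes], x)
contour = hermite_error(cmath.exp, nodes, x)
print(direct, contour)
```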

3.2 Theoretical Statements

Unfortunately, no estimate of \(^R D^{\alpha -m} \omega _{N+1}(x)\), \(m=0,1,\cdots \), is available so far. Nevertheless, under the following reasonable assumption:

we can still prove the following theorem:

Theorem 3.1

Suppose \(0<\alpha <1\). Let u(x) be analytic on and within the complex ellipse \({\mathcal {E}}_{\rho }\), where \(\rho > 3+2\sqrt{2}\), and let \(u_N\) be the spectral interpolant at the Gauss–Lobatto points. Then, under assumption (3.6), the \(\alpha \)-th Riesz fractional derivative of the error, \(^R D^{\alpha } (u(x)-u_N(x))\), is convergent and is dominated by the leading term of the infinite series.

Proof

We start from (3.5). By (2.1), (2.4) and (2.5), for all \(x \in [-1,1]\) we have:

If we denote:

and by [31], there exists \(C_{\rho }>0\), such that

Then

Next, we estimate the infinite sum. When \(m\geqslant 2\), by Lemma 2.6 and Hölder's inequality,

for all \(x \in [-1,1]\), so

which leads to

If \({\mathcal {D}}_{\rho }>2\) strictly, i.e., \(\rho >3+2\sqrt{2}\), then there exist \(c_{\rho },d_{\rho }>0\) such that

and hence:

Therefore, by (3.11), the infinite sum in (3.9) can be estimated as follows:

by the formula \((1+x)^{\alpha } = \sum _{m=0}^{\infty } {\alpha \atopwithdelims ()m} x^m\). Under the assumption (3.6),

so \(^R D^{\alpha }(u(x)-u_N(x))\) is convergent, and the leading term dominates the error. \(\square \)
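The threshold \(\rho >3+2\sqrt{2}\) comes from the geometry of \({\mathcal {E}}_{\rho }\). The semi-axes are \(a=(\rho +\rho ^{-1})/2\) and \(b=(\rho -\rho ^{-1})/2\), and the foci are \(\pm 1\). The shortest distance to \([-1,1]\) is therefore the focus-to-vertex distance \({\mathcal {D}}_{\rho }=a-1\). Hence \({\mathcal {D}}_{\rho }>2\) if and only if \(\rho +\rho ^{-1}>6\), i.e., \(\rho >3+2\sqrt{2}\). The sketch below checks this by sampling the ellipse. It also checks the standard perimeter bound \({\mathcal {L}}({\mathcal {E}}_{\rho })\leqslant \pi (\rho ^2+\rho ^{-2})^{1/2}\), which we assume is the bound used in (3.4). All helper names are hypothetical.

```python
import math

def ellipse_point(rho, theta):
    """Point of E_rho = {(rho e^{i theta} + rho^{-1} e^{-i theta}) / 2} as (x, y)."""
    a = (rho + 1 / rho) / 2        # semimajor axis
    b = (rho - 1 / rho) / 2        # semiminor axis
    return a * math.cos(theta), b * math.sin(theta)

def dist_to_interval(x, y):
    """Euclidean distance from (x, y) to the real segment [-1, 1]."""
    return math.hypot(max(abs(x) - 1.0, 0.0), y)

def sampled_D(rho, M=20000):
    """Shortest distance from E_rho to [-1, 1], estimated by sampling the ellipse."""
    return min(dist_to_interval(*ellipse_point(rho, 2 * math.pi * m / M)) for m in range(M))

def sampled_perimeter(rho, M=20000):
    """Perimeter of an inscribed polygon, a lower bound for the perimeter of E_rho."""
    pts = [ellipse_point(rho, 2 * math.pi * m / M) for m in range(M + 1)]
    return sum(math.hypot(q[0] - p[0], q[1] - p[1]) for p, q in zip(pts, pts[1:]))

rho_star = 3 + 2 * math.sqrt(2)
for rho in (2.0, 5.0, rho_star, 6.0):
    closed_D = (rho + 1 / rho) / 2 - 1           # focus-to-vertex distance a - 1
    bound_L = math.pi * math.sqrt(rho ** 2 + rho ** -2)
    print(f"rho={rho:.4f}  D={sampled_D(rho):.6f} (closed form {closed_D:.6f})  "
          f"L={sampled_perimeter(rho):.4f} <= {bound_L:.4f}")
```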

Parallel to the conclusion in [41], we have the following theorem.

Theorem 3.2

Under the same assumptions as in Theorem 3.1, the \(\alpha \)-th Riesz fractional derivative superconverges at the points \(\{ \xi _i^{\alpha } \}\), which satisfy

where \(\omega _{N+1}(x)\) is defined by (3.3).

Proof

According to the analysis in the previous theorem, the decay of the error is dominated by the leading term:

When \(x=\xi _i^{\alpha }, i=0,1,\ldots ,N\), the leading term vanishes, and the remaining terms have higher convergence rates. \(\square \)

Next, we describe a method to compute \(\{ \xi _i^{\alpha } \}_{i=0}^N\). For \(0<\mu <1\), we start from

to have:

In the numerical experiments, we observe that all zeros of \(^R D^{\alpha } (L_{N-1}-L_{N+1})(x)\) are simple and lie in \((-1,1)\), so they can be computed by Newton's iteration:

(1) Initial guesses can be obtained by inspecting a plot of the function.

(2) The function \(^R D^{\alpha } (L_{N-1}-L_{N+1})(x)\) is differentiable for \(x \ne \pm 1\), so Newton's iteration can be applied, and it converges rapidly.
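A minimal sketch of the Newton procedure above, under several stated assumptions:

- **Riesz derivative.** For \(0<\alpha <1\), the Riesz derivative is assumed to be a positive multiple of \({}_{-1}D_x^{\alpha }+{}_{x}D_1^{\alpha }\). A constant factor does not move the zeros.
- **Legendre expansions.** \(L_n\) is expanded in powers of \((1+x)/2\) and \((1-x)/2\) using the explicit formula \(L_n(x)=\sum _k \binom{n}{k}\binom{n+k}{k}\left(\frac{x-1}{2}\right)^k\) and its reflection.
- **Differentiation.** The standard power rule is then applied term by term.
- **Initial guesses.** Instead of reading guesses off a plot, an automated sign-change scan is used, followed by a bracketed (safeguarded) Newton step. This substitution is ours.

All function names are hypothetical. The endpoint power expansions cancel heavily near \(x=\pm 1\), so this is only suitable for moderate N.

```python
import math

def legendre_endpoint_coeffs(n):
    """Coefficients of L_n in powers of (1+x)/2 (left) and of (1-x)/2 (right)."""
    c = [math.comb(n, k) * math.comb(n + k, k) for k in range(n + 1)]
    left = [(-1) ** (n + k) * c[k] for k in range(n + 1)]
    right = [(-1) ** k * c[k] for k in range(n + 1)]
    return left, right

def omega_coeffs(N):
    """Endpoint-power coefficients of L_{N-1}(x) - L_{N+1}(x)."""
    lm, rm = legendre_endpoint_coeffs(N - 1)
    lp, rp = legendre_endpoint_coeffs(N + 1)
    pad = lambda v: v + [0] * (N + 2 - len(v))
    return ([p - q for p, q in zip(pad(lm), lp)],
            [p - q for p, q in zip(pad(rm), rp)])

def g_and_dg(alpha, left, right, x):
    """g = (D_L^alpha + D_R^alpha) omega at -1 < x < 1, and g'(x), via the power rule."""
    g = dg = 0.0
    for k, (al, ar) in enumerate(zip(left, right)):
        w = math.gamma(k + 1) / math.gamma(k + 1 - alpha) / 2 ** k
        e = k - alpha
        g += w * (al * (1 + x) ** e + ar * (1 - x) ** e)
        dg += w * e * (al * (1 + x) ** (e - 1) - ar * (1 - x) ** (e - 1))
    return g, dg

def superconvergence_points(N, alpha, grid=2000, tol=1e-14):
    """Zeros in (-1, 1) of (D_L^alpha + D_R^alpha)(L_{N-1} - L_{N+1}), 0 < alpha < 1."""
    left, right = omega_coeffs(N)
    f = lambda x: g_and_dg(alpha, left, right, x)
    xs = [-1 + 2 * (j + 0.5) / grid for j in range(grid)]   # open grid, avoids x = +-1
    fs = [f(x)[0] for x in xs]
    roots = []
    for a, b, fa, fb in zip(xs, xs[1:], fs, fs[1:]):
        if fa * fb > 0:
            continue                          # no sign change on [a, b]
        lo, hi, flo = a, b, fa
        x = 0.5 * (lo + hi)
        for _ in range(100):
            g, dg = f(x)
            if g == 0.0:
                break
            if flo * g < 0:
                hi = x                        # root lies in [lo, x]
            else:
                lo, flo = x, g                # root lies in [x, hi]
            x_new = x - g / dg if dg != 0 else 0.5 * (lo + hi)
            if not lo < x_new < hi:
                x_new = 0.5 * (lo + hi)       # Newton left the bracket: bisect instead
            if abs(x_new - x) < tol:
                x = x_new
                break
            x = x_new
        roots.append(x)
    return roots

print(superconvergence_points(6, 0.5))
```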

3.3 Numerical Statements and Validations

3.3.1 Numerical Validations for Superconvergence Points

In this subsection, we present numerical examples that illustrate the superconvergence.

Example 1

We consider the function \(u(x)=(1+x)^9(1-x)^9.\)

Here u(x) is interpolated at \(N+1=12\) zero points of \(\omega _{12}(x)\), where \(\omega _{12}(x)\) is the Legendre–Lobatto polynomial of degree 12. We set \(\alpha =0.1\), 0.3, 0.5, 0.7, and 0.9, respectively. Figure 1 plots \(^RD^{\alpha }{(u-u_{11})(x)}\) on \([-1,1]\) with different \(\alpha \), and the asterisks indicate the superconvergence points, i.e. the zeros of \(^RD^{\alpha } \omega _{12}(x)\), predicted by Theorem 3.2.

Example 2

We consider the function \(u(x)=\sin (4\pi x)+\cos (4.5 \pi x)\). In the left panel of Fig. 2, we set \(N=34\) and \(\alpha =0.79\). As before, the plot shows the error \(^RD^{\alpha }{(u-u_{N})(x)}\) and highlights the superconvergence points predicted by Theorem 3.2. To compare the superconvergence behavior for different values of \(\alpha \), the right panel uses a smaller \(N=14\) and \(\alpha =0.11\), 0.46 and 0.79.

In all figures, the error at these points is much smaller than the global maximal error. In addition, both the global maximal error and the errors at the superconvergence points increase as \(\alpha \) increases.

3.3.2 Numerical Observation of Superconvergent Rates

In order to quantify the superconvergence rate, we define the following ratio:

Example 1

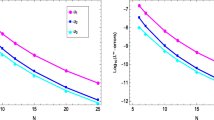

Consider \(u(x)=(1+x)^9(1-x)^9\). We plot the ratio on a log-log scale for \(N=8,10,12,14,16\): in Fig. 3 (left) for \(\alpha =0.01,0.1,0.2,0.3,0.4,0.5\), and in Fig. 3 (right) for \(\alpha = 0.5,0.6,0.7,0.8,0.9,0.99\).

Example 2

Consider \(u(x)=\sin (4\pi x)+\cos (4.5 \pi x)\). We set \(\alpha =0.1, 0.3,0.5, 0.7, 0.9\) and plot the ratio on a log-log scale for N from 12 to 30 (Fig. 4).

In both examples, the lines \(N^2\) and \(N^3\) are also plotted as reference slopes.

Fig. 2 (Example 2). Left: curves \(^RD^\alpha (u-u_{32})(x)\) for \(\alpha =0.79\); the roots of \(^RD^\alpha \omega _{33}(x)\) are highlighted by \(*\). Right: curves \(^RD^\alpha (u-u_{14})(x)\) for \(\alpha =0.11,0.46,0.79\); for each \(\alpha \), the roots of \(^RD^\alpha \omega _{15}(x)\) are highlighted by \(*\).

At the superconvergence points, the convergence rate is at least \(O(N^{-2})\) faster than the global rate.
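The gain in convergence rate can be read from the log-log plots by comparing with the reference slopes. Equivalently, it can be estimated with a least-squares fit of \(\log (\text {ratio})\) against \(\log N\). The sketch below assumes that the ratio behaves like \(CN^{p}\), so that the fitted slope p estimates the gain. The input is synthetic and for illustration only; it is not data from the paper. The function name is hypothetical.

```python
import math

def fitted_order(Ns, ratios):
    """Least-squares slope of log(ratio) versus log(N)."""
    xs = [math.log(n) for n in Ns]
    ys = [math.log(r) for r in ratios]
    xm = sum(xs) / len(xs)
    ym = sum(ys) / len(ys)
    num = sum((x - xm) * (y - ym) for x, y in zip(xs, ys))
    den = sum((x - xm) ** 2 for x in xs)
    return num / den

# Synthetic illustration only (not results from the paper): ratio = 3 * N^2.5
Ns = [8, 10, 12, 14, 16]
ratios = [3.0 * n ** 2.5 for n in Ns]
print(round(fitted_order(Ns, ratios), 6))  # 2.5
```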

4 GJF Fractional Interpolation for Arbitrary Positive \(\alpha \)

4.1 Theoretical Statements

When \(\alpha >1\), interpolation by Lobatto-type polynomials no longer works, because it only makes \(x=\pm 1\) simple zeros of the error. This cannot control the two-sided Riemann–Liouville fractional derivatives of order \(\alpha > 1\). Inspired by Lemma 2.9, we introduce the GJF fractional interpolation. On the other hand, the singularities at \(x=\pm 1\) require stricter conditions on u(x). For \(\alpha >1\) the order of the Riesz fractional derivative, we always assume that \((1-x^2)^{-\frac{\alpha }{2}}u(x)\) is analytic on \([-1,1]\) and can be analytically extended to a Bernstein ellipse with an appropriate \(\rho \). In this section, we mainly concentrate on the case \(0<\alpha <2\); the conclusions can be generalized to \(\alpha >2\). We start with the definition of GJF fractional interpolation:

Definition 4.1

Let \(\alpha \in (k-1,k)\) be a given positive real number, where \(k \in {\mathbb {Z}}^+\). Define

Suppose that \(v(x):=(1-x^2)^{-\frac{\alpha ^*}{2}}u(x) \in C[-1,1]\). The goal is to find

where \(v_N(x) \in {\mathbb {P}}_N[-1,1]\), such that

then \(u_N(x)\) is called the GJF fractional interpolant of u(x) of order \(\frac{\alpha ^*}{2}\).

In fact, (4.3) is equivalent to finding \(v_N(x) \in {\mathbb {P}}_N[-1,1]\) such that

Since \(v_N(x)\) is a polynomial interpolant, (3.5), (4.2) and (4.3) give the following expression for the error, for all \(x \in [-1,1]\):

Therefore, we may analyze the superconvergence points by using the same framework as the previous section.
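The sketch below constructs the GJF fractional interpolant of Definition 4.1 for \(0<\alpha <2\), where \(\alpha ^*=\alpha \). The nodes are the zeros of \(P_{N+1}^{\frac{\alpha }{2},\frac{\alpha }{2}}\), as in Theorem 4.2. The sketch samples \(v=(1-x^2)^{-\alpha /2}u\) at these nodes, interpolates v by a polynomial \(v_N\), and returns \(u_N=(1-x^2)^{\alpha /2}v_N\). The Jacobi polynomials are evaluated with the symmetric case of the standard three-term recurrence. Their zeros are found by Newton's method with deflation, which is one common choice and not necessarily the authors'. All names are hypothetical.

```python
import math

def jacobi_sym(n, a, x):
    """(P_n^{a,a}(x), d/dx P_n^{a,a}(x)) by the three-term recurrence, assuming a > -1/2:
    (k+1)(k+2a+1) P_{k+1} = (2k+2a+1)(k+a+1) x P_k - (k+a)(k+a+1) P_{k-1}."""
    p_prev, dp_prev = 1.0, 0.0            # P_0 and its derivative
    if n == 0:
        return p_prev, dp_prev
    p, dp = (a + 1.0) * x, a + 1.0        # P_1 = (a+1) x
    for k in range(1, n):
        c0 = (k + 1) * (k + 2 * a + 1)
        c1 = (2 * k + 2 * a + 1) * (k + a + 1)
        c2 = (k + a) * (k + a + 1)
        p_next = (c1 * x * p - c2 * p_prev) / c0
        dp_next = (c1 * (p + x * dp) - c2 * dp_prev) / c0   # differentiated recurrence
        p_prev, dp_prev, p, dp = p, dp, p_next, dp_next
    return p, dp

def jacobi_sym_zeros(m, a, tol=1e-15, maxit=100):
    """Zeros of P_m^{a,a}: Newton's method with deflation of already-found roots."""
    roots = []
    for j in range(m):
        x = -math.cos((2 * j + 1) * math.pi / (2 * m))   # Chebyshev-Gauss initial guess
        for _ in range(maxit):
            p, dp = jacobi_sym(m, a, x)
            step = p / (dp - p * sum(1.0 / (x - r) for r in roots))
            x -= step
            if abs(step) < tol:
                break
        roots.append(x)
    return sorted(roots)

def gjf_interpolant(u, alpha, N):
    """Nodes and u_N = (1-x^2)^{alpha/2} v_N, with v_N interpolating (1-x^2)^{-alpha/2} u."""
    a = alpha / 2.0
    nodes = jacobi_sym_zeros(N + 1, a)
    v_vals = [u(x) / (1.0 - x * x) ** a for x in nodes]

    def u_N(x):                           # evaluate only for -1 <= x <= 1
        v_N = 0.0
        for j, xj in enumerate(nodes):
            lj = 1.0
            for k, xk in enumerate(nodes):
                if k != j:
                    lj *= (x - xk) / (xj - xk)
            v_N += v_vals[j] * lj
        return (1.0 - x * x) ** a * v_N

    return nodes, u_N

u = lambda x: (1 - x * x) ** 0.85 * (1 + x - 2 * x ** 3)   # v is a cubic, so u_N = u
nodes, u_N = gjf_interpolant(u, 1.7, 5)
print(u(0.3), u_N(0.3))
```

Since \(P_n^{1/2,1/2}\) is proportional to the Chebyshev polynomial of the second kind, the zeros for \(a=1/2\) are \(\cos (k\pi /(n+1))\). This gives a convenient check of the node routine.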

Theorem 4.2

Suppose \(0<\alpha <2\). Let u(x) be a function such that \((1-x^2)^{-\frac{\alpha }{2}}u(x)\) is analytic on and within the complex ellipse \({\mathcal {E}}_{\rho }\), where \(\rho > 3+2\sqrt{2}\). Let \(u_N(x)\) be the GJF fractional interpolant of u(x) at \(\{ \xi _j^{\alpha } \}_{j=0}^N\), the roots of \(P_{N+1}^{\frac{\alpha }{2},\frac{\alpha }{2}}(x)\). Then the \(\alpha \)-th Riesz fractional derivative of the error, \(^R D^{\alpha } (u(x)-u_N(x))\), is convergent and is dominated by the leading term of the infinite series.

Proof

By taking the fractional derivative of (4.5), we have:

where \(\nu =o\) when k is odd and \(\nu =e\) when k is even. If we take \(\{x_i \}_{i=0}^N\) to be the zeros of \(P_{N+1}^{\frac{\alpha ^*}{2},\frac{\alpha ^*}{2}}(x)\), then we can consider

Due to the form in (2.13), for \(m \in {\mathbb {N}}\), define:

Since \(0<\alpha <2\), we have \(\alpha =\alpha ^*\).

I. When \(0<\alpha <1\), by the same analysis in Theorem 3.1, we have:

where \(c(\rho ,v) = \frac{C_{\rho }M_v \cdot {\mathcal {L}}({\mathcal {E}}_{\rho })}{2 \pi } (1+\rho ^{-2})^{\frac{\alpha +1}{2}} (\rho ^2+\rho ^{-2})^{\frac{1}{2}}\).

II. When \(1<\alpha <2\), by Lemma 2.9, we have:

Even though \(\phi _2(x)\) is not a classical Gegenbauer polynomial, we may calculate it by:

where

with \(c_2=\frac{1}{2 \cos (\pi \gamma /2)\Gamma (\gamma )}\), and \({_{N+1}^{(\frac{\alpha }{2},\frac{\alpha }{2})}c_0^{(\frac{\alpha }{2},1-\frac{\alpha }{2})}}\) represents the weighted inner product (see [24], p. 76):

We estimate it numerically (see Figs. 5, 6 and 7) and observe that:

and by (2.11), we have:

By Hölder's inequality, we have:

For \(m \geqslant 3\), \(\phi _m(x)\) are fractional integrals, so, similarly to (3.10), we have:

Hence, for the \((m+1)\)-th term in the infinite series of \(^R D^{\alpha }(u(x)-u_N(x))\), we have:

If \({\mathcal {D}}_{\rho }>2\) strictly, i.e., \(\rho >3+2\sqrt{2}\), then there exist \(c'_{\rho },d'_{\rho }>0\) such that

so we have:

Therefore,

where \(c(\rho ,v) = \frac{C_{\rho }M_v \cdot {\mathcal {L}}({\mathcal {E}}_{\rho })}{2 \pi } (1+\rho ^{-2})^{\frac{\alpha +1}{2}} (\rho ^2+\rho ^{-2})^{\frac{1}{2}}\). \(\square \)

Using the analysis in [32], we can quantify the gain in convergence rate at the superconvergence points. The result is given in the following theorem.

Theorem 4.3

Under the same assumptions as in Theorem 4.2, when \(0<\alpha <1,\) we obtain the following global error estimate:

and the following error estimate at the superconvergence points \(\{ \xi _j^{\alpha } \}_{j=0}^N\):

When \(1<\alpha <2\), the global error estimate and the error estimate at the superconvergence points \(\{ \xi _j^{\alpha } \}_{j=0}^N\) are, respectively:

where \(M_v=\sup _{z \in {\mathcal {E}}_{\rho }} | (1-z^2)^{-\frac{\alpha }{2}}u(z) |\).

Proof

When \(0<\alpha <1\), by Lemma 2.9, we have:

Hence (4.16) follows by combining the estimate of \(\phi _0\) with (4.8). If \(x=\xi _j^{\alpha }\), then the leading term vanishes, i.e.,

so that (4.17) is obtained.

When \(1<\alpha <2\), we may use (4.9), (4.10), (4.12), (4.15) and the same argument to get the result. \(\square \)

When \(\alpha >2\), since

where \(\alpha -\alpha ^*\) is an even integer, we can generalize the results.

Corollary 4.4

Let \(\alpha \in (k-1,k)\), \(k \in {\mathbb {Z}}^+\), \(k \ll N\), and let \(\alpha ^*\) be defined in (4.1). Under the same assumptions as in Theorem 4.2, we have:

when k is odd,

the superconvergence points \(\{\xi _{i}^{\alpha } \}_{i=0}^{N-k+1}\) are the zero points of \(P_{N-k+2}^{\frac{\alpha ^*}{2}+k-1,\frac{\alpha ^*}{2}+k-1}(x)\), and at those points, we have:

when k is even,

the superconvergence points \(\{\xi _{i}^{\alpha } \}_{i=0}^{N-k+2}\) are the zero points of \(P_{N-k+3}^{\frac{\alpha ^*}{2}+k-2,\frac{\alpha ^*}{2}+k-2}(x)\), and at those points, we have:

where the constants only depend on \(\alpha \), \(\rho \).

Proof

When k is odd, by (2.1), (4.7), and Lemma 2.9,

From (2.8), the leading term is

and the second term is

and \(\phi _m=o(\phi _1)\), for \(m \geqslant 2\). Therefore, the leading term vanishes at \(\{\xi _{i}^{\alpha } \}_{i=0}^{N-k+1}\), zero points of \(P_{N-k+2}^{\frac{\alpha ^*}{2}+k-1,\frac{\alpha ^*}{2}+k-1}(x)\). Similar to the proof of Theorem 4.2, the estimates (4.20) and (4.21) are derived from (4.8).

When k is even,

and the rest of the proof is similar to the case when k is odd. \(\square \)

4.2 Numerical Validations

To ensure that v(x) is sufficiently smooth, we consider in this subsection the function:

where \(v(x)=\frac{1}{1+(x+3)^2}\), and we set \(\alpha =0.4\) and 1.7. The function v(x) has two simple poles at \(z=-3 \pm i\) in the complex plane, which lie on the Bernstein ellipse with \(\rho \approx 6.198\). Hence v is analytic within \({\mathcal {E}}_{\rho }\) for some \(\rho > 3+2\sqrt{2}\approx 5.828\). In the numerical example, we set \(N=10\), so \(v_N(x)\) interpolates v at the 11 zeros \(\{ \xi _j^{\alpha } \}_{j=0}^{10}\) of \(P_{11}^{\frac{\alpha }{2},\frac{\alpha }{2}}(x)\). The exact value of \(^RD^{\alpha }u(x)\) is approximated by a sum of 40 terms. Figure 8 (left and right) depicts \(^RD^{\alpha }(u-u_{10})(x)\) for \(\alpha =0.4\) and 1.7, respectively, where \(u_N(x)\) is the GJF fractional interpolant. According to Theorem 4.2, the 11 interpolation points are predicted to be superconvergence points. As in Fig. 1, the errors at these points are significantly smaller than the global maximal error.
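Whether a singularity at \(z_0\) is compatible with a given \(\rho \) can be checked directly. Writing \(z_0=(w+w^{-1})/2\), the point \(z_0\) lies on \({\mathcal {E}}_{\rho }\) with \(\rho =|w|\), where the root satisfies \(|w|\geqslant 1\). A function whose nearest singularity is \(z_0\) is therefore analytic inside \({\mathcal {E}}_{\rho }\) for every smaller \(\rho \). The sketch below applies this to the poles \(-3\pm i\) of v(x); the function name is hypothetical.

```python
import cmath
import math

def bernstein_rho(z0):
    """Parameter rho of the Bernstein ellipse E_rho (foci +-1) passing through z0.

    Solves z0 = (w + 1/w)/2; the two solutions satisfy |w1| * |w2| = 1,
    and the one with |w| >= 1 gives rho.
    """
    s = cmath.sqrt(z0 * z0 - 1)
    return max(abs(z0 + s), abs(z0 - s))

rho_pole = bernstein_rho(-3 + 1j)            # poles of v(x) = 1/(1+(x+3)^2) at -3 +- i
print(round(rho_pole, 3), rho_pole > 3 + 2 * math.sqrt(2))   # prints: 6.198 True
```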

5 Applications

In this section, we focus on applications of superconvergence. Let \(1<\alpha <2\) and consider the following FDE:

We solve the equation with two methods, a Petrov–Galerkin method and a spectral collocation method, with the goal of observing superconvergence in the numerical solutions. In the following examples, f(x) is chosen such that \(u(x)=\frac{(1-x^2)^{\frac{\alpha }{2}}}{1+0.5x^2}\) is the exact solution. We then plot the error curve \(^RD^{\alpha }(u-u_N)\) and mark with ‘\(*\)’ its values at the superconvergence points predicted by Theorem 4.2.

5.1 Petrov–Galerkin Method

For any given \(1<\alpha <2\), we seek

such that, for all \(v \in {\mathbb {P}}_N[-1,1]\),

where the right-hand side is computed by numerical quadrature with sufficiently large M. According to (2.14), taking \(v=P_i^{\frac{\alpha }{2},\frac{\alpha }{2}}\), \(i=0,1,\ldots ,N\), shows that (5.2) is equivalent to finding \((c_0,c_1,\ldots , c_N)^T \in {\mathbb {R}}^{N+1}\) such that, for \(i=0,1,\ldots ,N\),

where \(d_j=-\frac{\Gamma (j+1+\alpha )}{\Gamma (j+1)}\). We observe that the stiffness matrix is diagonal and dominates the system.
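A minimal sketch of this diagonal solve, for illustration only, under the following assumptions:

- **Inner product.** (5.2) is posed in the weighted inner product with \(\omega =(1-x^2)^{\alpha /2}\). This is one way to make the stiffness matrix diagonal, with entries \(d_i h_i\), where \(h_i\) is the weighted norm of \(P_i^{\frac{\alpha }{2},\frac{\alpha }{2}}\).
- **Quadrature.** The right-hand side is evaluated by M-point Gauss–Jacobi quadrature for the same weight.
- **Model problem.** The model problem is taken to be \(^RD^{\alpha }u=f\).

Then \(c_i=(f,P_i)_{\omega }/(d_i h_i)\). The Gauss–Jacobi weights and the norms \(h_k\) use the standard closed forms. All names are hypothetical.

```python
import math

def jacobi_sym(n, a, x):
    """(P_n^{a,a}(x), d/dx P_n^{a,a}(x)) by the symmetric Jacobi recurrence, a > -1/2."""
    p_prev, dp_prev = 1.0, 0.0
    if n == 0:
        return p_prev, dp_prev
    p, dp = (a + 1.0) * x, a + 1.0
    for k in range(1, n):
        c0 = (k + 1) * (k + 2 * a + 1)
        c1 = (2 * k + 2 * a + 1) * (k + a + 1)
        c2 = (k + a) * (k + a + 1)
        p_next = (c1 * x * p - c2 * p_prev) / c0
        dp_next = (c1 * (p + x * dp) - c2 * dp_prev) / c0
        p_prev, dp_prev, p, dp = p, dp, p_next, dp_next
    return p, dp

def gauss_jacobi_sym(m, a, tol=1e-15, maxit=100):
    """m-point Gauss-Jacobi nodes and weights for the weight (1-x^2)^a."""
    xs = []
    for j in range(m):
        x = -math.cos((2 * j + 1) * math.pi / (2 * m))   # initial guess
        for _ in range(maxit):
            p, dp = jacobi_sym(m, a, x)
            step = p / (dp - p * sum(1.0 / (x - r) for r in xs))   # deflated Newton
            x -= step
            if abs(step) < tol:
                break
        xs.append(x)
    xs.sort()
    log_c = ((2 * a + 1) * math.log(2) + 2 * math.lgamma(m + a + 1)
             - math.lgamma(m + 1) - math.lgamma(m + 2 * a + 1))
    ws = [math.exp(log_c) / ((1 - x * x) * jacobi_sym(m, a, x)[1] ** 2) for x in xs]
    return xs, ws

def norm_sq(k, a):
    """h_k = integral over [-1, 1] of (1-x^2)^a [P_k^{a,a}(x)]^2."""
    return math.exp((2 * a + 1) * math.log(2) + 2 * math.lgamma(k + a + 1)
                    - math.lgamma(k + 1) - math.lgamma(k + 2 * a + 1)) / (2 * k + 2 * a + 1)

def pg_coefficients(f, alpha, N, M=40):
    """c_i = (f, P_i)_w / (d_i h_i) for u_N = (1-x^2)^{alpha/2} sum_i c_i P_i^{a,a}."""
    a = alpha / 2.0
    xq, wq = gauss_jacobi_sym(M, a)
    coeffs = []
    for i in range(N + 1):
        rhs = sum(w * f(x) * jacobi_sym(i, a, x)[0] for x, w in zip(xq, wq))
        d_i = -math.gamma(i + 1 + alpha) / math.gamma(i + 1)
        coeffs.append(rhs / (d_i * norm_sq(i, a)))
    return coeffs

alpha = 1.5
d = lambda j: -math.gamma(j + 1 + alpha) / math.gamma(j + 1)
# Manufactured right-hand side f = d_2 P_2 + 0.5 d_0; the expected coefficients are [0.5, 0, 1, 0, 0]
f = lambda x: d(2) * jacobi_sym(2, alpha / 2, x)[0] + 0.5 * d(0)
print([round(c, 10) for c in pg_coefficients(f, alpha, 4, M=12)])
```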

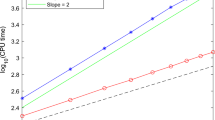

We plot the error curves \(^RD^\alpha (u-u_N)\) in Figs. 9 and 10 for \(\alpha =1.27,1.84\) and \(N=13,17\), respectively. According to Theorem 4.2, the superconvergence points are predicted to be the zeros of \(P^{\frac{\alpha }{2},\frac{\alpha }{2}}_{N+1}(x)\). The errors at these points are much smaller than the global maximal error. Moreover, both the global maximal error and the errors at the superconvergence points increase as \(\alpha \) increases. Figure 11 depicts the reciprocal of (3.13) for \(\alpha =1.1,1.52,1.9\), with \(O(N^{-1})\) plotted as a reference slope. Only three values of \(\alpha \) are shown in Fig. 11, since the curves for different \(\alpha \) are very close to each other. At the superconvergence points predicted by Theorem 4.2, the convergence rate is \(O(N^{-1})\) faster than the optimal global rate.

5.2 Spectral Collocation Method

For any given \(1<\alpha <2\), according to the definition of GJF fractional interpolation with order \(\frac{\alpha }{2}\), we have:

where \(\ell _j \in {\mathbb {P}}_N[-1,1]\) is the Lagrange basis function satisfying

Therefore, we seek \(V=(v_0,v_1, \cdots , v_N)^T \in {\mathbb {R}}^{N+1}\) such that

where \(u_N(x_i)=(1-x_i^2)^{\frac{\alpha }{2}} v_i\). Then (5.5) is equivalent to solving the linear system:

where \(D,\Lambda \in {\mathcal {M}}_{N+1}({\mathbb {R}})\), \(F \in {\mathbb {R}}^{N+1}\), and for \(i,j=0,1,\ldots ,N\),

and the differentiation matrix D can be calculated analytically by (2.14). Here, we take \(\{ x_i \}_{i=0}^N\) to be the zeros of \(P^{\frac{\alpha }{2},\frac{\alpha }{2}}_{N+1}(x)\).
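The sketch below shows one way to assemble the differentiation matrix analytically. It rests on several assumptions:

- **Matrix entries.** D is read as \(D_{ij}={}^RD^{\alpha }[(1-x^2)^{\alpha /2}\ell _j](x_i)\). The paper's normalization of D and \(\Lambda \) may differ.
- **Formula (2.14).** (2.14) is assumed to give \(^RD^{\alpha }[(1-x^2)^{\alpha /2}P_k^{\frac{\alpha }{2},\frac{\alpha }{2}}]=d_kP_k^{\frac{\alpha }{2},\frac{\alpha }{2}}\), with \(d_k=-\Gamma (k+1+\alpha )/\Gamma (k+1)\) as in (5.3).
- **Basis expansion.** Each \(\ell _j\) is expanded in Jacobi polynomials using the discrete orthogonality of the \((N+1)\)-point Gauss–Jacobi rule. This rule is exact here because all products have degree at most 2N. The formula is then applied term by term.

All names are hypothetical.

```python
import math

def jacobi_sym(n, a, x):
    """(P_n^{a,a}(x), d/dx P_n^{a,a}(x)) by the symmetric Jacobi recurrence, a > -1/2."""
    p_prev, dp_prev = 1.0, 0.0
    if n == 0:
        return p_prev, dp_prev
    p, dp = (a + 1.0) * x, a + 1.0
    for k in range(1, n):
        c0 = (k + 1) * (k + 2 * a + 1)
        c1 = (2 * k + 2 * a + 1) * (k + a + 1)
        c2 = (k + a) * (k + a + 1)
        p_next = (c1 * x * p - c2 * p_prev) / c0
        dp_next = (c1 * (p + x * dp) - c2 * dp_prev) / c0
        p_prev, dp_prev, p, dp = p, dp, p_next, dp_next
    return p, dp

def gauss_jacobi_sym(m, a, tol=1e-15, maxit=100):
    """m-point Gauss-Jacobi nodes (zeros of P_m^{a,a}) and weights for (1-x^2)^a."""
    xs = []
    for j in range(m):
        x = -math.cos((2 * j + 1) * math.pi / (2 * m))
        for _ in range(maxit):
            p, dp = jacobi_sym(m, a, x)
            step = p / (dp - p * sum(1.0 / (x - r) for r in xs))   # deflated Newton
            x -= step
            if abs(step) < tol:
                break
        xs.append(x)
    xs.sort()
    log_c = ((2 * a + 1) * math.log(2) + 2 * math.lgamma(m + a + 1)
             - math.lgamma(m + 1) - math.lgamma(m + 2 * a + 1))
    ws = [math.exp(log_c) / ((1 - x * x) * jacobi_sym(m, a, x)[1] ** 2) for x in xs]
    return xs, ws

def norm_sq(k, a):
    """h_k = integral over [-1, 1] of (1-x^2)^a [P_k^{a,a}(x)]^2."""
    return math.exp((2 * a + 1) * math.log(2) + 2 * math.lgamma(k + a + 1)
                    - math.lgamma(k + 1) - math.lgamma(k + 2 * a + 1)) / (2 * k + 2 * a + 1)

def riesz_diff_matrix(alpha, N):
    """Nodes x_i (zeros of P_{N+1}^{a,a}, a = alpha/2) and D[i][j] = RD^alpha[(1-x^2)^a l_j](x_i).

    l_j = sum_k beta_jk P_k with beta_jk = w_j P_k(x_j) / h_k, then RD^alpha acts as d_k on P_k.
    """
    a = alpha / 2.0
    xs, ws = gauss_jacobi_sym(N + 1, a)
    P = [[jacobi_sym(k, a, x)[0] for k in range(N + 1)] for x in xs]   # P[i][k] = P_k(x_i)
    d = [-math.gamma(k + 1 + alpha) / math.gamma(k + 1) for k in range(N + 1)]
    h = [norm_sq(k, a) for k in range(N + 1)]
    D = [[sum(P[i][k] * d[k] * ws[j] * P[j][k] / h[k] for k in range(N + 1))
          for j in range(N + 1)]
         for i in range(N + 1)]
    return xs, D

xs, D = riesz_diff_matrix(1.5, 6)
print(len(xs), len(D), len(D[0]))   # 7 7 7
```

As a consistency check, applying D to the nodal values of \(P_k^{\frac{\alpha }{2},\frac{\alpha }{2}}\) reproduces \(d_kP_k^{\frac{\alpha }{2},\frac{\alpha }{2}}\) at the nodes for every \(k\leqslant N\).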

Error curves \(^RD^\alpha (u-u_N)\) are plotted in Figs. 12 and 13 for \(\alpha =1.27,1.84\) and \(N=13,17\), respectively. As predicted by Theorem 4.2, the superconvergence points \(\{ x_i \}_{i=0}^N\) are the zeros of \(P^{\frac{\alpha }{2},\frac{\alpha }{2}}_{N+1}(x)\), and the errors at these points are significantly smaller than the global maximal error. Furthermore, superconvergence is much more pronounced for the collocation method than for the Petrov–Galerkin method, since its errors at the superconvergence points are closer to zero. This is shown in Fig. 14, which plots the reciprocal of the ratio (3.13) with \(O(N^{-3})\) and \(O(N^{-4})\) as reference slopes. For the collocation method, the convergence rate at the superconvergence points is about \(O(N^{-3})\) better than the optimal global rate. One possible reason is that the interpolation points and the superconvergence points of the \(\alpha \)-th Riesz derivative coincide.

6 Concluding Remarks

In this work, we investigated superconvergence of \(u-u_N\) under Riesz fractional derivatives. We identified superconvergence points and determined the improved convergence rates at those points. When \(0<\alpha <1\), \(u_N(x)\) is either the polynomial interpolant or the GJF fractional interpolant, and the improvements in the convergence rate are \(O(N^{-2})\) and \(O(N^{-\frac{\alpha +3}{2}})\), respectively. When \(\alpha >1\), only the GJF fractional interpolant is discussed because of the singularity, and the improvement in the convergence rate is \(O(N^{-2})\). In particular, when \(0<\alpha <2\), the superconvergence points of the GJF fractional interpolation coincide with the interpolation points. In addition, when we apply our superconvergence results to the numerical solution of model FDEs, our theory accurately predicts the locations of the superconvergence points. Moreover, the improvements differ between the two solvers. For the Petrov–Galerkin method, the convergence improvement at the superconvergence points is only \(O(N^{-1})\), which is inferior to the \(O(N^{-2})\) improvement for interpolation. For the spectral collocation method, it is \(O(N^{-3})\) to \(O(N^{-4})\), which is superior to the \(O(N^{-2})\) improvement for interpolation.

These results suggest that polynomial-based interpolation plays only a limited role in solving FDEs, and we believe that GJF-type fractional interpolation is preferable in fractional calculus. We hope that our findings will be useful in numerically solving FDEs, especially when data at the predicted superconvergence points are used.

References

Askey, R.: Orthogonal Polynomials and Special Functions. SIAM, Philadelphia (1975)

Bernstein, S.N.: Sur l’ordre de la meilleure approximation des fonctions continues par des polynômes de degré donné. Mém. Publ. Class Sci. Acad. Belgique (2) 4, 1–103 (1912)

Bu, W., Tang, Y., Yang, J.: Galerkin finite element method for two-dimensional Riesz space fractional diffusion equations. J. Comput. Phys. 276, 26–38 (2014)

Chen, F., Xu, Q., Hesthaven, J.S.: A multi-domain spectral method for time-fractional differential equations. J. Comput. Phys. 293, 157–172 (2015)

Chen, S., Shen, J., Wang, L.-L.: Generalized Jacobi functions and their applications to fractional differential equations. Math. Comput. 85, 1603–1638 (2016)

Davis, P.J.: Interpolation and Approximation. Dover, New York (1975)

Deng, K., Deng, W.: Finite difference/predictor-corrector approximations for the space and time fractional Fokker–Planck equation. Appl. Math. Lett. 25(11), 1815–1821 (2012)

Fatone, L., Funaro, D.: Optimal collocation nodes for fractional derivative operators. SIAM J. Sci. Comput. 37, A1504–A1524 (2015)

Huang, C., Zhang, Z., Song, Q.: Spectral methods for substantial fractional differential equations. J. Sci. Comput. 74, 1554–1574 (2018)

Ishteva, M., Boyadjiev, L., Scherer, R.: On the Caputo operator of fractional calculus and C-Laguerre functions. Math. Sci. Res. 9, 161–170 (2005)

Lei, S., Sun, H.: A circulant preconditioner for fractional diffusion equations. J. Comput. Phys. 242, 715–725 (2013)

Li, C.P., Zeng, F.H., Liu, F.: Spectral approximations to the fractional integral and derivative. Fract. Calc. Appl. Anal. 15, 383–406 (2012)

Li, X., Xu, C.J.: A space–time spectral method for the time fractional diffusion equation. SIAM J. Numer. Anal. 47, 2108–2131 (2009)

Lin, Q., Lin, J.: Finite Element Methods: Accuracy and Improvement. Math. Monogr. Ser. 1. Science Press, Beijing (2006)

Mandelbrot, B.B., Van Ness, J.W.: Fractional Brownian motions, fractional noises and applications. SIAM Rev. 10, 422–437 (1968)

Mao, Z., Chen, S., Shen, J.: Efficient and accurate spectral method using generalized Jacobi functions for solving Riesz fractional differential equations. Appl. Numer. Math. 106, 165–181 (2016)

Meerschaert, M.M., Benson, D., Baeumer, B.: Operator Lévy motion and multiscaling anomalous diffusion. Phys. Rev. E 63, 1112–1117 (2001)

Metzler, R., Klafter, J.: The random walk’s guide to anomalous diffusion: a fractional dynamics approach. Phys. Rep. 339, 1–77 (2000)

Mustapha, K., McLean, W.: Uniform convergence for a discontinuous Galerkin, time-stepping method applied to a fractional diffusion equation. IMA J. Numer. Anal. 32, 906–925 (2012)

Pang, H., Sun, H.: Multigrid method for fractional diffusion equations. J. Comput. Phys. 231, 693–703 (2012)

Podlubny, I.: Fractional Differential Equations. Academic Press, New York (1999)

Roop, J.P.: Computational aspects of FEM approximation of fractional advection dispersion equations on bounded domains in \({\mathbb{R}}^2\). J. Comput. Appl. Math. 193(1), 243–268 (2006)

Samko, S.G., Kilbas, A.A., Marichev, O.I.: Fractional Integrals and Derivatives, Theory and Applications. Gordon and Breach Science Publishers, Washington (1993)

Shen, J., Tang, T., Wang, L.-L.: Spectral Methods: Algorithms, Analysis and Applications. Springer Series in Computational Mathematics, vol. 41. Springer, Berlin (2011)

Shen, S., Liu, F., Anh, V., Turner, I.: The fundamental solution and numerical solution of the Riesz fractional advection–dispersion equation. IMA J. Appl. Math. 73, 850–872 (2008)

Shen, S., Liu, F., Anh, V., Turner, I., Chen, J.: A novel numerical approximation for the space fractional advection–dispersion equation. IMA J. Appl. Math. 79(3), 421–444 (2014)

Stynes, M., Gracia, J.L.: A finite difference method for a two-point boundary value problem with a Caputo fractional derivative. IMA J. Numer. Anal. 35, 698–721 (2015)

Sun, H.G., Chen, W., Chen, Y.Q.: Variable-order fractional differential operators in anomalous diffusion modeling. Phys. A 388, 4586–4592 (2009)

Wahlbin, L.B.: Superconvergence in Galerkin Finite Element Methods. Lecture Notes in Mathematics, vol. 1605. Springer, Berlin (1995)

Wang, H., Du, N.: Fast alternating-direction finite difference methods for three-dimensional space-fractional diffusion equations. J. Comput. Phys. 258, 305–318 (2013)

Wang, L.-L., Zhao, X.D., Zhang, Z.: Superconvergence of Jacobi–Gauss-type spectral interpolation. J. Sci. Comput. 59, 667–687 (2014)

Xie, Z., Wang, L., Zhao, X.: On exponential convergence of Gegenbauer interpolation and spectral differentiation. Math. Comput. 82, 1017–1036 (2012)

Xu, Q., Hesthaven, J.S.: Stable multi-domain spectral penalty methods for fractional partial differential equations. J. Comput. Phys. 257, 241–258 (2014)

Yang, Q., Turner, I., Liu, F., Ilić, M.: Novel numerical methods for solving the time-space fractional diffusion equation in two dimensions. SIAM J. Sci. Comput. 33, 1159–1180 (2011)

Zayernouri, M., Karniadakis, G.E.: Fractional Sturm–Liouville eigen-problems: theory and numerical approximations. J. Comput. Phys. 252, 495–517 (2013)

Zayernouri, M., Karniadakis, G.E.: Fractional spectral collocation method. SIAM J. Sci. Comput. 36, A40–A62 (2014)

Zeng, F., Liu, F., Li, C.P., Burrage, K., Turner, I., Anh, V.: Crank–Nicolson ADI spectral method for the 2-D Riesz space fractional nonlinear reaction-diffusion equation. SIAM J. Numer. Anal. 52, 2599–2622 (2014)

Zhang, Z.: Superconvergence of a Chebyshev spectral collocation method. J. Sci. Comput. 34, 237–246 (2008)

Zhang, Z.: Superconvergence points of polynomial spectral interpolation. SIAM J. Numer. Anal. 50, 2966–2985 (2012)

Zhao, X., Sun, Z., Hao, Z.: A fourth-order compact ADI scheme for two-dimensional nonlinear space fractional Schrödinger equation. SIAM J. Sci. Comput. 36, A2865–A2886 (2014)

Zhao, X., Zhang, Z.: Superconvergence points of fractional spectral interpolation. SIAM J. Sci. Comput. 38, A598–A613 (2016)

Zheng, M., Liu, F., Turner, I., Anh, V.: A novel high order space-time spectral method for the time-fractional Fokker–Planck equation. SIAM J. Sci. Comput. 37, A701–A724 (2015)

Additional information

This work is supported in part by the National Natural Science Foundation of China under grants NSFC 11871092, NSAF U1530401, 11701081 and 1186010285; the Jiangsu Provincial Key Laboratory of Networked Collective Intelligence (No. BM2017002); the Natural Science Youth Foundation of Jiangsu Province of China (No. BK20160660); the Fundamental Research Funds for the Central Universities of China (No. 2242019K40111); and the Key Project of Natural Science Foundation of China (No. 61833005).

About this article

Cite this article

Deng, B., Zhang, Z. & Zhao, X. Superconvergence Points for the Spectral Interpolation of Riesz Fractional Derivatives. J Sci Comput 81, 1577–1601 (2019). https://doi.org/10.1007/s10915-019-01054-6

DOI: https://doi.org/10.1007/s10915-019-01054-6