Abstract

In this paper, we consider the linearly constrained \(\ell _1\)–\(\ell _2\) minimization, and we propose an accelerated Bregman method for solving this minimization problem. The proposed method is based on the extrapolation technique, which is used in accelerated proximal gradient methods proposed by Nesterov and others, and on the equivalence between the Bregman method and the augmented Lagrangian method. A convergence rate of \(\mathcal{O }(\frac{1}{k^2})\) is proved for the proposed method when it is applied to solve a more general linearly constrained nonsmooth convex minimization problem. We numerically test our proposed method on a synthetic problem from compressive sensing. The numerical results confirm that our accelerated Bregman method is faster than the original Bregman method.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this paper, we consider the linearly constrained \(\ell _1\)–\(\ell _2\) minimization problem

where \(\beta \ge 0\), \(A\in \mathbb{R }^{m\times n}\), \(x\in \mathbb{R }^n\), and \(b\in \mathbb{R }^m\). The penalized form of the (1), in which the linear constraints are penalized by using a square of its \(\ell _2\)-norm,

where \(\gamma >0\), has been used in statistical analysis of the variable selection problem [23, 35], neuroimaging [5], and genome analysis [14]. It is called as the elastic net problem. In variable selection problem, \(A\) is a modeling matrix whose columns are predictors[35] and De Mol et al [15] proposed good predictors using dictionary learning based on training set. There are some algorithms for solving the elastic net problem. Zuo and Hastie [35] proposed the least angle regression and Friedman et al. [17] proposed the coordinate descent method.

In compressive sensing [1, 12], a linearly constrained \(\ell _1\) minimization problem, i.e., the problem (1) with \(\beta = 0\):

where \(m \ll n\), i.e., an underdetermined system of equations, has been used. This linearly constrained \(\ell _1\) minimization problem (3) is often called as the basis pursuit problem [13]. The main theory of compressive sensing is that a large sparse signal can be recovered from highly incomplete information. Many real-world signals can be approximated well by sparse or compressible under a suitable basis, so it can be considered that a matrix \(A\) is the product of a sensing matrix and the basis transformation matrix and the number of rows of \(A\) (i.e., \(m\)) is less than the number of columns of \(A\) (i.e., \(n\)). The theoretical results provided in [9–11] related to compressive sensing showed that the solution of basis pursuit (3) is equal to the sparsest solution in certain conditions of \(A\) with overwhelming probability. The basis pursuit problem can be recast as Linear Programming(LP) and so LP solvers, such as \(\ell _1\)-magic [8] and PDCO [29], were proposed for solving the basis pursuit. Recently, Yin et al. [34] proposed the Bregman method, which is equivalent to an augmented Lagrangian method, to solve the basis pursuit problem. Several other algorithms can also be applied to the basis pursuit problem [30, 31].

Adding the \(\Vert \cdot \Vert ^2_2\) in the basis pursuit problem yields the tractable object function \(\Vert x\Vert _1 + \frac{\beta }{2}\Vert x\Vert ^2_2\), which is a strictly convex function. Thus, the linearly constrained \(\ell _1\)–\(\ell _2\) minimization problem (1) has a unique solution and the dual problem is smooth. When \(\beta \) is sufficiently small, the solution of (1) is also a solution of the basis pursuit problem (3). This exact regularization property of \(\ell _1\)–\(\ell _2\) has been studied in [16, 33]. Problem (1) has a \(\ell _2\) norm in the regularizer term, so it is less sensitive to noise than the basis pursuit problem (3).

The linearized Bregman method [6, 7] is a well-known optimization algorithm for compressive sensing. This method actually solves the linearly constrained \(\ell _1\)–\(\ell _2\) minimization problem (1) [6, 27] instead of the basis pursuit problem (3). However, the linearized Bregman method converges slowly, so many accelerated schemes have been proposed. The kicking technique was introduced in [27]. Yin [33] proved that the linearized Bregman method is equivalent to a gradient descent method applied to the Lagrangian dual problem of (1). Based on this study, Yin [33] improved the linearized Bregman method using Barzilai-Borwein line search [2], the limited memory BFGS [24], and nonlinear conjugate gradient methods. Huang et al. [22] developed an accelerated linearized Bregman method based on the fact that the linearized Bregman method is equivalent to a gradient descent method applied to the Lagrangian dual of problem (1) and the extrapolation technique, which is used in the accelerated proximal gradient methods [3, 25] studied by Nesterov and others. Yang et al. [32] applied alternating split technique to the Lagrange dual of problem (1) for solving the linearly constraints \(\ell _1\)–\(\ell _2\) minimization problem.

Recently, He et al. [19] developed the accelerated augmented Lagrangian method for the linearly constraints minimization problem whose objective function is differentiable. Since the problem (1) has only linear constraint, in this paper, we extend the algorithm in [19] to solve the linearly constrained minimization problem in which the object function is convex and continuous but not differentiable. By using the equivalence between the Bregman method and the augmented Lagrangian method (ALM), and using the generalization of the accelerated ALM [19], we propose the accelerated Bregman method for solving the linearly constrained \(\ell _1\)–\(\ell _2\) minimization problem (1). We observe that the performance of the proposed method is similar with that of the accelerated linearized Bregman method from numerical simulations when we solve the linearly constraints \(\ell _1\)–\(\ell _2\) minimization problem (1). In [22], from the numerical tests they observed that the accelerated linearized Bregman method is faster than the algorithms discussed in [33]. Hence, we can expect that our proposed method is faster than the methods discussed in [33].

The remainder of this paper is organized as follows. In Sect. 2, we introduce the Bregman method and linearized Bregman method. In Sect. 3, we describe the accelerated ALM, propose our accelerated Bregman method, and give \(O(\frac{1}{k^2})\), where \(k\) is the iteration count, complexity of the accelerated Bregman method and the accelerated ALM. In Sect. 4, we provide the numerical tests on the linearly constrained \(\ell _1\)–\(\ell _2\) minimization problem and we compare the performance of our accelerated Bregman method with that of other methods.

2 Bregman Methods

In this section, we introduce the Bregman method and linearized Bregman method. The Bregman method [26] was proposed for solving total variation-based image restoration. The Bregman distance with respect to an \(\ell _1\)–\(\ell _2\) function \(f(\cdot ) = \Vert \cdot \Vert _1 + \frac{\beta }{2}\Vert \cdot \Vert ^2_2\) with points \(u\) and \(v\) is defined as

where \(p\) is an element in \(\partial f(v)\), i.e., a subdifferential of \(f\) at \(v\). The Bregman method for solving (1) can be expressed as follows:

starting with \(x^0 = \mathbf 0 \) and \(p^0 = \mathbf 0 .\)

By Fermat’s rule [28, Theorem 10.1] and the first step in (4), we have

Hence \(p^{k+1}\) in the second step in (4) is a subgradient of \(f\) at \(x^{k+1}.\) Note that the original Bregman method does not have the parameter \(\gamma \) (i.e., \(\gamma \) is set as \(1\)). Instead the scaling parameter \(\mu \) is used, i.e., \(f(x) = \mu (\Vert x\Vert _1+\frac{\beta }{2}\Vert x\Vert ^2_2)\). We can get the same solution by setting \(\gamma =\frac{1}{\mu }\). Now, we slightly modify the origianl Bregman method as follows:

starting with \(x^0 = \mathbf 0 \) and \(b^0 = b.\)

The following lemma gives the condition of the equivalence of the first step of the original Bregman method (4) to that of the modified Bregman method (5).

Lemma 1

\(x^{k+1}\) computed by Bregman method (4) equals \(x^{k+1}\) computed by (5) if and only if

Proof

It is obvious by just comparing \(x^{k+1}\) in (4) and \(x^{k+1}\) in (5). \(\square \)

By using Lemma 1 and mathematical induction, we can establish the following theorem.

Theorem 1

The modified Bregman method (5) is equivalent to the original Bregman method (4).

It was proved in [34] that the Bregman method is equivalent to the ALM which was proposed in [20]. The Lagrangian function of (1) is

where \(\lambda \in \mathbb{R }^m\) is the Lagrange multiplier. The augmented Lagrangian function

combines the Lagrangian function with the penalty term \(\Vert Ax-b\Vert ^2_2.\) The ALM for solving (1) is

For completeness, we provide a proof of the equivalence between the Bregman method and the ALM. By comparing \(x^{k+1}\) in (5) and \(x^{k+1}\) in (7), we obtain the following technical lemma to prove the equivalence. The proof is simple, so we omit it.

Lemma 2

\(x^{k+1}\) of the first step in (5) is equal to that of the first step of the ALM (7) if and only if

Theorem 2

The Bregman method (4) is equivalent to the augmented Lagrangian method (7) starting with \(\lambda ^0 = \mathbf 0 \).

Proof

We show that (8) holds for all integers \(k \ge 0\) using induction. If \(k =0\), (8) holds by the initial conditions \(b^0 = b\) and \(\lambda ^0 = \mathbf 0 .\) Suppose that the (8) holds for \(k\). The \(x^{k+1}\) in (5) equals the \(x^{k+1}\) in the ALM according to Lemma 2. Thus, we have

where the first equality is from \(b^{k+1}\) in (5) and the third equality is from \(\lambda ^{k+1}\) in (7). Thus, the Bregman method is equivalent to the ALM according to Theorem 1 and Lemma 2. \(\square \)

In general, the subproblem in the first step of (4) does not have the closed form solution. Hence, we have to use other iterative methods to solve the subproblem of the Bregman method. This often takes times to solve the subproblem. In order to solve this difficulty, the linearized Bregman method [7] replaces \(\frac{1}{2}\Vert Ax-b\Vert ^2_2\) with its linearization term \((A^T(Ax^k - b))^T x\) and adds the proximal term to that replacement:

Similar to the Bregman method,

by the optimality condition of \(x^{k+1}\). Hence \(p^{k+1}\) is in the subdifferential \(\partial f(x^{k+1}).\)

In [7, 27], it was proved that if \(0 < \delta < \frac{2}{\Vert AA^T\Vert _2},\) then \(x^k\) in the linearized Bregman method converges to the solution of

Recently, an accelerated version of the linearized Bregman method was proposed in [22].

where \(\alpha _k = 1+\theta _k(\theta _{k-1}^{-1} -1) \) with the setting \( \theta _{-1} = 1 \, \text{ and} \theta _k = \frac{2}{k+2} \, \text{ for} \text{ all} k\ge 0.\) Huang et al. [22] showed that the convergence rate of the accelerated linearized Bregman method is \(\mathcal{O }(\frac{1}{k^2})\), where \(k\) is the iteration count, based on the equivalence between the linearized Bregman method and the gradient descent method, and the extrapolation scheme used in Nesterov’s accelerated gradient descent method [3, 25].

3 Accelerated Bregman Method

In this section, we propose an accelerated Bregman method which has an \(O(\frac{1}{k^2})\) global convergence rate.

In Sect. 2, we show that the Bregman method is equivalent to the ALM. It was proposed in [19] that if \(f(x)\) is a differentiable convex function, the augmented Lagrangian method can be accelerated using the extrapolation scheme used in Nesterov’s accelerated method. Based on the equivalence and the results in [19], we propose the accelerated Bregman method:

where \(t_0 = 1\).

3.1 Equivalence to the Accelerated ALM

In this subsection, we prove the equivalence between the proposed accelerated Bregman method and the accelerated augmented Lagrangian method which is a generalization of the method proposed in [19] in the sense that \(f\) can be nondifferentible. The following lemma is similar to Theorem 2.

Lemma 3

The accelerated Bregman method (11) starting with \(\lambda ^0 = \mathbf 0 \) is equivalent to the following method starting with \(b^0 = b\).

Proof

We show that (6) is satisfied for all \(k\) by induction. Based on the initial condition, (6) holds for \(k=0.\) We assume that (6) holds for all \(k \le n\). For all \(k\le n\), we get

where the first equality uses the second step of (11), the second equality is derived from the induction hypothesis, and the fourth equality uses the second step of (12). Hence

By using the above equality and the third step of (12), we have the following equalities

By induction,

is satisfied for all integers \(k\ge 0.\) Thus, the accelerated Bregman method (11) is equivalent to the method (12). \(\square \)

The next lemma shows that the method (12) starting with \(b^0 = b\) is equivalent to the accelerated augmented Lagrangian method starting with \(\lambda ^0 = \mathbf 0 \).

Lemma 4

The method (12) starting with \(b^0 = b\) is equivalent to the following accelerated ALM starting with \(\lambda ^0 = \mathbf 0 \):

Proof

It is sufficient to show that (8) is satisfied for all \(k\) according to Lemma 2. We will prove this by induction. When \(k=0\), (8) is satisfied by the initial condition \(b^0=b, \lambda ^0 = \mathbf 0 .\) We assume that

is satisfied for \(k \le n\). This implies that, for all \(k\le n\), we have

where the first equality is from \(\tilde{b}^{k+1}\) in (12) and the final equality is from \(\tilde{\lambda }^{k+1}\) in (13). We find the following equalities using the induction hypothesis and the previous equality

By induction,

is satisfied for all integers \(k\ge 0.\) \(\square \)

By Lemmas 3 and 4, we get the following theorem for the equivalence between the accelerated Bregman method (11) and the accelerated ALM.

Theorem 3

The accelerated Bregman method (11) starting with \(p^0 = \mathbf 0 \) is equivalent to the accelerated augmented Lagrangian method (13) starting with \(\lambda ^0 = \mathbf 0 \).

3.2 Complexity of the Accelerated Bregman Method

In the previous subsection, we proved the equivalence of the accelerated ALM and the accelerated Bregman method. Therefore, the convergence rate of the (accelerated) Bregman method is equal to the convergence rate of the (accelerated) ALM. In this section, we will show that the convergence rate of the ALM is \(\mathcal{O }(\frac{1}{k})\) and the convergence rate of the accelerated augmented Lagrangian method (AALM) is \(\mathcal{O }(\frac{1}{k^2})\).

In [19], the authors considered an ALM (7) for the linearly constrained smooth minimization problem:

where \(h\) is differentiable and the set \(X\) is closed convex set. They showed that (7) was accelerated and the convergence rate of the accelerated version (13) was \(\mathcal{O }(\frac{1}{k^2}).\) In this paper, we extend the accelerated augmented Lagrangian method in [19] to solve the linearly constrained nonsmooth minimization problem, especially the linearly constrained \(\ell _1\)–\(\ell _2\) minimization problem (1). The key lemma is Lemma 6 and the proof of the \(\mathcal{O }(\frac{1}{k^2})\) convergence rate is similar to that in [19]. The dual problem of (1) is

where \(L(x,\lambda )\) is a Lagrangian function. Problem (1) is a convex optimization whose constraints are only a linear equation, so there is no duality gap according to Slater’s condition [4]. Let \((x^*,\lambda ^*)\) be the saddle point of the Lagrangian function. Several lemmas are required to prove the \(\mathcal{O }(\frac{1}{k^2})\) convergence rate.

The following lemma gives the bound for the difference of the Lagrangian function values at the current iterates and any point that satisfies \(A^T\lambda \in \partial f(x)\) in terms of dual variables.

Lemma 5

Let \((x^{k+1},\lambda ^{k+1})\) be generated by the augmented Lagrangian method (7). For any \((x,\lambda )\) that satisfies

we get the inequality

Proof

By using (15) and the definition of the Lagrangian function, we have the following inequalities

where the fourth equality is based on the second step of (7). \(\square \)

The next lemma shows that the condition (15) in Lemma 5 is satisfied with \((x,\lambda ) = (x^{k+1},\lambda ^{k+1}), \, \text{ or} (x^*,\lambda ^*)\) according to Lemma 6. In what follows, a point \((x^*,\lambda ^*)\) is a Karush–Kuhn–Tucker (KKT) [21] point of the problem:

if the following conditions are satisfied:

Lemma 6

The \((x^{n+1},\lambda ^{n+1})\) generated by (7) satisfies the condition (15) with \((x,\lambda ) = (x^{n+1},\lambda ^{n+1})\) and \((x^*,\lambda ^*)\) also satisfies the same condition

Proof

By Fermat’s rule [28, Theorem 10.1] and the first step in (7), we have

i.e.,

Based on \(\lambda ^{n+1}\) in (7),

According to the definition of the subdifferential, we get

Since \((x^*,\lambda ^*)\) satisfies the KKT condition,

From the first condition, we get

Thus, based on the definition of the subdifferential, we obtain

\(\square \)

By the proof of Lemma 6, it is satisfied that

By the definition of the subdifferential, we get

Hence, we also have

The following lemma is a key lemma to establish the \(\mathcal{O }(\frac{1}{k})\) convergence rate for ALM.

Lemma 7

Let \((x^{k+1},\lambda ^{k+1})\) be generated by the (7). We have

Proof

Lemma 5 with \((x,\lambda ) = (x^*,\lambda ^*)\) implies that

The above inequality yields that

\(\square \)

We have the inequality

from Lemma 7 and \(L(x^{k+1},\lambda ^{k+1}) \le L(x^*,\lambda ^*).\) Then the inequality (17) implies the global convergence of ALM (7). By summing (17) over \(k = 1,\cdots , n\) we have

which implies that

In the next theorem, we prove that the convergence rate of ALM is \(\mathcal{O }(\frac{1}{k})\).

Theorem 4

Let \((x^k,\lambda ^k)\) be generated by (7). We obtain

Proof

We get

from Lemma 7. Thus, we have

Summing this inequality over \(n = 0,\cdots ,k-1\), we have

Based on Lemma 5 for \(k=n\) and setting \((x,\lambda ) = (x^n,\lambda ^n),\) we obtain

By multiplying this inequality with \(n\) and summing it over \(n=0,\cdots ,k-1\), we have the following inequalities

It follows from adding (18) and the above inequality that

Thus, we have

\(\square \)

The iterate \((x^{n+1},\tilde{\lambda }^{n+1})\) generated by AALM (13) contents

by replacing \((x^{k+1},\lambda ^{k+1})\) with \((x^{k+1},\tilde{\lambda }^{k+1})\) in Lemma 6. Therefore, we get the following lemmas by simply changing the notation.

Lemma 8

Let \((x^{k+1},\tilde{\lambda }^{k+1})\) be generated by the AALM (13). For \((x,\lambda ) = (x^*,\lambda ^*)\) or \((x^{n+1},\tilde{\lambda }^{n+1})\) generated by the AALM, we have the inequality

Lemma 9

Let \((x^{k+1},\tilde{\lambda }^{k+1})\) be generated by the AALM (13). We obtain

Several lemmas are required to obtain our main result.

Lemma 10

The sequence \(\{t_k\}\) generated by (11) satisfies

and

Proof

The proof is based on Lemma 3.3 in [19] and the definition of \(t_k.\) \(\square \)

Lemma 11

The inequality

where \(v_{k} = L(x^*,\lambda ^*) -L({x}^{k}, \tilde{\lambda }^{k})\) and \(u_k = t_{k-1}(2\tilde{\lambda }^{k} - \lambda ^{k-1} - \tilde{\lambda }^{k-1}) + \tilde{\lambda }^{k-1} - \lambda ^*\), is satisfied for all \(k\ge 0\)

Proof

When \(k=0\), this is trivial. By Lemma 8 with \((x,\lambda ) = (x^n,\tilde{\lambda }^n), (x^*,\lambda ^*)\) and using the definition of \(v_n\), we have

By multiplying (20) by \(t_n-1\) and adding (21), we get

By multiplying the above inequality by \(t_n\) and applying lemma 10, we have

where the second equality is from the elementary identity \(x^T y = \frac{1}{4}\Vert x+y\Vert ^2_2 -\frac{1}{4}\Vert x-y\Vert ^2_2\). Based on the definition of \(\lambda ^{k}\) in AALM (13), we get

Thus, it follows that

By multiplying (22) by 4 and summing it over \(n = 1,\cdots ,k\), we have

Since \(\Vert u_{k+1}\Vert ^2_2 \ge 0,\) we get

\(\square \)

Now, we establish our main theorem.

Theorem 5

Let \((x^{k+1},\tilde{\lambda }^{k+1},\lambda ^{k+1})\) be generated by the AALM. For any \(k\ge 1,\) we have

Proof

Based on the Eq. (19), we get

This together with Lemma 10 implies that

By simple calculation and \(t_0 = 1\), we have

Lemma 9 with \(k=0\) yields that

Thus, we have

where the equality is from the identity \(2\Vert a-c\Vert ^2_{2} -2\Vert b-c\Vert ^2_{2}-2\Vert b-a\Vert ^2_{2} = \Vert a-c\Vert ^2_{2} - \Vert b-a +b-c\Vert ^2_{2}\) with \(a = \lambda ^0, b= \tilde{\lambda }^1, c=\lambda ^*\). Thus, (23) and (25) imply

\(\square \)

Remark 1

In [19], the authors considered a generalized augmented Lagrangian method (26) with a symmetric positive definite matrix penalty parameter \(H_k\) that satisfied

when the object function \(f\) was differentiable:

We can also extend the \(\mathcal{O }(\frac{1}{k^2})\) convergence rate result for this generalized method when \(f(x)\) is not necessarily differentiable.

4 Numerical Simulation

In this section, we provide numerical tests for the comparison of the performance of our accelerated Bregman method and other methods, such as the Bregman method and the accelerated linearized Bregman method, for the linearly constrained \(\ell _1\)–\(\ell _2\) minimization problem. We use the Bregman method (5) and the accelerated Bregman method (12). The subproblems for \(x^{k+1}\) in the Bregman method (5) and the accelerated Bregman method (12) are solved using the fixed point (FP) method [18]. We have implemented our accelerated Bregman method in MATLAB. All runs are performed on a Windows 7 PC with an Intel core i7-2700K 3.4 GHz CPU and 16 GB of memory and MATLAB (Version R2011b).

4.1 FP Method

In this subsection, we briefly describe the fixed point method [18] for the following minimization problem:

where \(g:\mathbb{R }^n \rightarrow \mathbb{R }\) is differentiable and convex. Note that minimizing a convex function is equivalent to finding a zero for the subdifferential. The optimal condition of the (27) is

where \(x^*\) is an optimal solution for (27) and \(x_i^*\) denotes the \(i\)th component of \(x^*\). From the optimal condition, it is easy to prove that

for any \(\tau >0.\) To solve the Eq. (28), we consider fixed point iterations

For the subproblem of the Bregman method when it is applied to solve the linearly constrained \(\ell _1\)–\(\ell _2\) minimization problem, we set

with \(\bar{\mu } = \gamma \) and

4.2 Comparison of the Bregman Method with the Accelerated Bregman Method

In this section, we compare the performance of the Bregman method with that of the accelerated Bregman method for solving the linearly constrained \(\ell _1\)–\(\ell _2\) minimization. For experiments, we set \(n = 5000, m=2500\) for the size of the Gaussian measurement matrix \(A\) whose entries are selected randomly from a standard Gaussian distribution \(\mathcal{N }(0,1)\). The sparsity \(k\), i.e., the number of nonzero elements of the original solution, is fixed at 250. The location of nonzero elements in the original solution (signal) \(\bar{x}\) is selected randomly and the nonzero elements of \(\bar{x}\) are selected from the Gaussian distribution \(\mathcal{N }(0,2^2)\) (matlab code : 2*randn(k,1)). The noise \(\bar{n}\) in

is generated by a standard Gaussian distribution \(\mathcal{N } (0,1)\) and then it is normalized to the norm \(\sigma = 0.01.\) We terminate all the methods when

is satisfied or the number of iterations exceeds 1000. The coefficient \(\gamma \) in the penalty term of the subproblem of the (accelerated) Bregman method is fixed to \(\gamma = \frac{2}{\Vert A^Tb\Vert _2}\). The stopping condition of the fixed point method is when

is satisfied or the number of iterations in FP exceeds 100.

In each test, we calculate the residual error \(\Vert Ax-b\Vert _2\), the relative error

and the signal-to-noise ratio (SNR)

where \(x\) is the recovery signal.

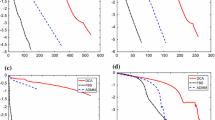

In Table 1, we report the number of iterations (and the total number of inner iterations), the CPU time, the residual error, the relative error, and SNR of the Bregman method and the accelerated Bregman method for various \(\beta \).

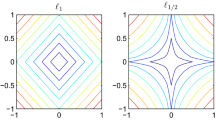

Both methods with \(\beta = 1, 1.5\) converge slower than those with \(\beta < 1\). And, based on the relative error and SNR, it is observed that the sparse original signal is well restored when \(\beta < 1\). This is also shown in Figs. 1 and 2. The accelerated Bregman method is faster than the Bregman method. In Fig. 3, we show the relation between the relative error and the number of iterations for both methods.

4.3 Comparison of the Accelerated Linearized Bregman Method with the Accelerated Bregman Method

In this subsection, we compare the performance of the accelerated Bregman method and the accelerated linearized Bregman method for solving the linearly constrained \(\ell _1\)–\(\ell _2\) minimization with the same setup for \(A\) and \(k\) as in the previous subsection but with \(\sigma =0.01,\;0.1\). The nonzero elements of \(\bar{x}\) are selected from the Gaussian distribution \(\mathcal{N }(0,2^2).\) When noise is present, we terminate all the methods when the residual error \(\Vert Ax^k-b\Vert _2\le \sigma \) or the number of iterations exceeds \(1000\). But, for a noise-free case, i.e., \(b=A\bar{x}\), we stop all the methods when the relative residual error

is satisfied. The coefficient \(\gamma \) in the penalty term of the subproblem of the accelerated Bregman method is fixed to \(\gamma = \frac{2}{\Vert A^Tb\Vert _2}\). When \(\beta = 1.5\) for \( \sigma = 0.01\) and noise-free case, we use the coefficient \(\tau =\frac{1.8\beta }{\Vert A\Vert ^2_2}\) in the accelerated linearized Bregman method. In other cases, we use the coefficient \(\tau =\frac{2\beta }{\Vert A\Vert ^2_2}\). We observed that two iterations for FP is enough to ensure the convergence of the accelerated Bregman method. Thus, we stop the FP method when

are satisfied or the number of iterations of FP exceeds \(2\).

In Tables 2, 3 and 4, we report the total number of iterations, the CPU time, the residual error, the relative error, and SNR of the accelerated linearized Bregman method and the accelerated Bregman method for various \(\beta \). Based on the relative error and SNR, we observe that the sparse original signal is well restored when \(\beta \le 0.5\).

For all \(\sigma \), the accelerated Bregman method is faster than the accelerated linearized Bregman method when \(\beta \le 0.1\). In this experiments, we observe that the accelerated Bregman method overall performs better than the accelerated linearlized Bregman method when \(\beta \) is relatively small. Both accelerated methods find the better solution when \(\beta \) is relatively small.

5 Conclusion

In this paper, we proposed an accelerated algorithm for the Bregman method to solve the linearly constrained \(\ell _1\)–\(\ell _2\) minimization. We have shown that the convergence rate of the original Bregman method is \(\mathcal{O }(\frac{1}{k})\) and that of the accelerated Bregman is \(\mathcal{O }(\frac{1}{k^2})\) for the general linearly constrained nonsmooth minimization, based on the equivalence between the Bregman method and the augmented Lagrangian method. The numerical simulation results shows that the proposed method is faster than the original Bregman method and, when the regularization parameter \(\beta \) for \(\ell _2\) is relatively small, is even faster than the accelerated linearized Bregman method.

References

Baraniuk, R.: Compressive sensing. IEEE Signal Process. Mag. 24, 118–121 (2007)

Barzilai, J., Borwein, J.: 1988 Two point step size gradient methods. IMA J. Numer. Anal. 8, 141–148 (1988)

Beck, A., Teboulle, M.: Fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2, 183–202 (2009)

Boyd, S., Vandenberghe, L.: Convex Optimization. Cambridge University Press, Cambridge (2004)

Bunea, R., She, Y., Ombao, H., Gongvatana, A., Devlin, K., Cohen, R.: Penalized least squares regression methods and applications to neuroimaging. NeuroImage 55, 1519–1527 (2011)

Cai, J., Osher, S., Shen, Z.: Convergence of the linearized Bregman iteration for \(\ell _1\)-norm minimization. Math. Comput. 78, 2127–2136 (2009)

Cai, J., Osher, S., Shen, Z.: Linearized Bregman iterations for compressed sensing. Math. Comput. 78, 1515–1536 (2009)

Candés, E., Romberg, J.: \(\ell _1\)-MAGIC : recovery of sparse signals via convex programming. Technical Report, Caltech (2005)

Candés, E., Romberg, J., Tao, T.: Robust uncertainty principles: exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 52, 489–509 (2006)

Candés, E., Tao, T.: Decoding by linear programming. IEEE Trans. Inf. Theory 51, 4203–4215 (2005)

Candés, E., Tao, T.: Near optimal signal recovery from random projections: Universal encoding strategies? IEEE Trans. Inf. Theory 52, 5406–5425 (2006)

Candés, E., Wakin, M.: An introduction to compressive sampling. IEEE Signal Process. Mag. 21, 21–30 (2008)

Chen, S., Donoho, D., Saunders, M.: Atomic decomposition by basis pursuit. SIAM J. Sci. Comput. 20, 33–61 (1999)

Cho, S., Kim, H., Oh, S., Kim, K., Park, T.: Elastic-net regularization approaches for genome-wide association studies of rheumatoid arthritis. BMC Proc. 3, S25 (2009)

De Mol, C., De Vito, E., Rosasco, L.: Elastic net regularization in learning theory. J. Complex. 25, 201–230 (2009)

Friedlander, M., Tseng, P.: Exact regularization of convex programs. SIAM J. Opt. 18, 1326–1350 (2007)

Friedman, J., Hastie, T., Hofling, H., Tibshirani, R.: Pathwise coordinate optimization. Ann. Appl. Stat. 1, 302–332 (2007)

Hale, E., Yin, W., Zhang, Y.: Fixed-point continuation applied to compressed sensing: Implementation and numerical experiments. J. Comput. Math. 28, 170–194 (2010)

He, B. and Yuan, X. : The acceleration of augmented Lagrangian method for linearly constrained optimization. optimization online. http://www.optimization-online.org/DB_FILE/2010/10/2760.pdf (2010).

Hestenes, M.: Multiplier and gradient methods. J. Opt. Theory Appl. 4, 303–320 (1969)

Hiriart-Urruty, J.-B., Lemarechal, C.: Convex Analysis and Minimization Algorithms: Part 1: Fundamentals. Springer, New York (1996)

Huang, B., Ma, S., Goldfarb, D.: Accelerated linearized Bregman method. J. Sci. Comput. (2012). doi: 10.1007/s10915-012-9592-9

Li, Q., Lin, N.: The Bayesian elastic net. Bayesian. Analysis 5, 151–70 (2010)

Liu, D., Nocedal, J.: On the limited memory method for large scale optimization. Math. Program. B 45, 503–528 (1989)

Nesterov, Y.: A method of solving a convex programming problem with convergence rate \({\cal O}(\frac{1}{k^2})\). Sov. Math. Doklady 27, 372–376 (1983)

Osher, S., Burger, M., Goldfarb, D., Xu, J., Yin, W.: An iterative regularization method for total variation-based image restoration. SIAM J. Multiscale Model. Simul. 4, 460–489 (2005)

Osher, S., Mao, Y., Dong, B., Yin, W.: Fast Linearized Bregman iteration for compressive sensing and sparse denoising. Commun. Math. Sci. 8, 93–111 (2010)

Rockafellar, R.T., Wets, R.J.-B.: Variational Analysis. Springer, New York (1998)

Saunders, M. : PDCO. Matlab software for convex optimization. Technical report, Stanford University. http://www.stanford.edu/group/SOL/software/pdco.html (2002)

Sra, S., Tropp. J. A.: Row-action methods for compressive sensing, vol 3. In ICASSP, Toulouse, pp. 868–871 (2006).

Van den Breg, E., Friedlander, M.P.: Probing the pareto frontier for basis pursuit solutions. SIAM J. Sci. Comput. 31, 890–912 (2009)

Yang, Y., Moller, M., Osher, S. : A dual split Bregman method for fast L1 minimization. Math. Comput. (to appear)

Yin, W.: Analysis and generalizations of the linearized Bregman method. SIAM J. Imaging Sci. 3, 856–877 (2010)

Yin, W., Osher, S., Goldfarb, D., Darbon, J.: Bregman iterative algorithms for \(\ell _1\)-minimization with applications to compressive sensing. SIAM J. Imaging Sci. 1, 143–168 (2008)

Zuo, H., Hastie, T.: Regularization and variable selection via the elastic net. J. R. Stat. Soc. B 67, 301–320 (2005)

Acknowledgments

Sangwoon Yun and Hyenkyun Woo were supported by the Basic Science Research Program through the National Research Foundation of Korea(NRF) funded by the Ministry of Education, Science and Technology(2012R1A1A1006406 and 2010-0510-1-3 respectively). Myungjoo Kang was supported by the NRF funded by the Ministry of Education, Science and Technology(2012001766).

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Kang, M., Yun, S., Woo, H. et al. Accelerated Bregman Method for Linearly Constrained \(\ell _1\)–\(\ell _2\) Minimization. J Sci Comput 56, 515–534 (2013). https://doi.org/10.1007/s10915-013-9686-z

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10915-013-9686-z