Abstract

Internet-delivered intervention may be an acceptable alternative for the more than 90% of problem gamblers who are reluctant to seek face-to-face support. Thus, we aimed to (1) develop a low-dropout unguided intervention named GAMBOT integrated with a messaging app; and (2) investigate its effect. The present study was a randomised, quadruple-blind, controlled trial. We set pre-to-post change in the Problem Gambling Severity Index (PGSI) as the primary outcome and pre-to-post change in the Gambling Symptom Assessment Scale (G-SAS) as a secondary outcome. Daily monitoring, personalised feedback, and private messages based on cognitive behavioural theory were offered to participants in the intervention group through a messaging app for 28 days (GAMBOT). Participants in the control group received biweekly messages only for assessments for 28 days (assessments only). A total of 197 problem gamblers were included in the primary analysis. We failed to demonstrate a significant between-group difference in the primary outcome (PGSI − 1.14, 95% CI − 2.75 to 0.47, p = 0.162) but found one in the secondary outcome (G-SAS − 3.14, 95% CI − 6.04 to − 0.24, p = 0.03). Only 6.7% of the participants dropped out during follow-up and 77% of the GAMBOT group participants (74/96) continued to participate in the intervention throughout the 28-day period. Integrating intervention into a chatbot feature on a frequently used messaging app shows promise in helping to overcome the high dropout rate of unguided internet-delivered interventions. More effective and sophisticated contents delivered by a chatbot should be sought to engage the more than 90% of problem gamblers who are reluctant to seek face-to-face support.

Introduction

Problem gambling can lead to serious mental health problems, including suicidal behaviour (Lorains et al. 2011). Problem gamblers are often troubled by debt and dishonesty, which can seriously damage their relationships with significant others (Svensson et al. 2013). According to previous studies, 0.7–6.5% of people can be classified as problem gamblers (Calado and Griffiths 2016; Svensson et al. 2013).

With regard to the definition of “problem gambling” using the Problem Gambling Severity Index (PGSI) (Ferris and Wynne 2001), the threshold was three or greater in some previous studies and eight or greater in others (Calado and Griffiths 2016). In the present study, we adopted the former threshold because less-severe forms of problem gambling can cause greater population-level harm due to greater prevalence (Browne et al. 2017). Thus, our definition of “problem gambling” encompasses both sub- and supra-threshold cases for a diagnosis of “gambling disorder”.

Despite the considerable negative impact on their lives, less than 10% of problem gamblers have sought professional support (Slutske et al. 2009; Suurvali et al. 2009), though several studies have demonstrated the effect of certain therapies, including cognitive behavioural therapy (CBT), which generally consists of identifying triggers for gambling, practising adaptive responses, and cognitive restructuring (Goslar et al. 2017). Major reasons for this treatment gap are related to psychological barriers preventing people with gambling problems from pursuing in-person support (Suurvali et al. 2009). Some self-help books and minimally therapist-guided brief interventions have already shown efficacy for problem gambling (LaBrie et al. 2012; Petry et al. 2016).

Internet treatments, especially when provided without human interference, are promising candidates for overcoming psychological barriers in seeking face-to-face support for gambling problems (Yakovenko and Hodgins 2016). However, there has been only one randomised controlled trial demonstrating a significant effect for Internet-delivered intervention without therapist contact (Casey et al. 2017). In the trial, the severity of gambling problems significantly decreased in the group for Internet-delivered CBT (I-CBT) and the group called ‘Monitoring, Feedback, Support messages’ which had access to any portion of the I-CBT contents (I-MFS), as compared to a waitlist control group.

However, this trial had limitations. First, 48% of participants dropped out of the intervention before outcome assessment, which could have caused attrition bias. A high dropout rate is a common shortcoming of internet-delivered unguided interventions (Melville et al. 2010). Second, using a waitlist control may have led to overestimation of the intervention effect relative to an assessment-only control (Furukawa et al. 2014). Study participants are usually interested in receiving the experimental intervention. Thus, those allocated to a waitlist control may tend to remain in a disordered condition so as to receive their originally desired intervention. In the field of addiction research, a meta-analysis studying low-intensity intervention for alcohol use disorder reported the effect size of studies with a waitlist control as 0.48, whereas the effect sizes of studies with ‘assessment only’ or ‘brochure only’ control were 0.15 and 0.20, respectively (Riper et al. 2014).

Thus, we planned to develop a low-dropout unguided computerised intervention program for problem gambling and aimed to investigate its effect in a randomised controlled trial (RCT). To reduce the dropout rate, we developed an intervention for problem gambling using an automated chat program (chatbot). To avoid overestimation, we used the assessment-only control instead of the waitlist control in the current RCT.

Methods

Study Design and Participation

This was a quadruple-blind RCT for problem gamblers seeking help online. Participants were adults aged 18 years or over whose PGSI total score (past 12 months) was three or greater. We excluded those who were receiving face-to-face support from mental health professionals for their gambling problem to ensure generalisability of study results to problem gamblers reluctant to seek face-to-face support.

As mentioned in the “Introduction” section, the threshold score of the PGSI can be three or greater or eight or greater, according to previous studies. We chose the lower threshold because even less problematic gambling can cause harm, and the nature of GAMBOT, an online self-help program, allows us to intervene in a broader population at limited additional cost.

All participants were recruited through online advertisements. Recruitment began on 26 March 2018 and ended on 3 August 2018. We provided study information on our open trial website, including portraits and institutional affiliations of the authors. Most visitors accessed our website through online advertisements on Google or Yahoo and read the informed consent document. The online advertisement appeared to users searching for helpful information to stop their problem gambling using keywords such as ‘stop’ and ‘gambling’. In the informed consent document, we did not provide any explicit information for visitors regarding differences between the active and control interventions. We only explained that each participant would receive messages at a different frequency via LINE, the most popular messaging app in Japan, which is similar to WhatsApp or WeChat. Visitors wanting to participate in the study answered a questionnaire via LINE for an eligibility check. Eligible participants were required to send pictures of their identification via LINE to avoid multiple participation. All interventions and assessments were also performed on LINE. This trial was reported according to CONSORT-EHEALTH (Eysenbach and Group 2011).

Intervention and Control

We developed a rule-based chatbot named GAMBOT. A ‘rule-based’ chatbot acts in accordance with predetermined rules and scenarios. Unlike a chatbot with artificial intelligence, a ‘rule-based’ chatbot cannot behave flexibly. GAMBOT can only reply with simple greetings and words of encouragement in response to free text messages from users.
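To illustrate what ‘rule-based’ means in practice, the following is a minimal Python sketch of a responder that maps free text to canned replies through a fixed rule table. The rule table, keywords, reply strings, and the `reply` function are hypothetical illustrations; they are not GAMBOT's actual scenario, nor the API of the platform on which it was built.

```python
import re

# Hypothetical rule table: (keywords, canned reply). Not GAMBOT's real content.
RULES = [
    (("hello", "hi", "good evening"), "Hello! Thank you for your message."),
    (("tired", "hard", "difficult"), "That sounds tough. You are doing well by keeping track."),
]
FALLBACK = "Thank you for your message. I will check in with you again tomorrow."


def reply(message: str) -> str:
    """Return the canned reply of the first rule whose keyword appears as a whole word."""
    text = message.lower()
    for keywords, response in RULES:
        for keyword in keywords:
            # Word boundaries stop "hi" from matching inside words such as "this".
            if re.search(r"\b" + re.escape(keyword) + r"\b", text):
                return response
    # No rule matched: a rule-based bot cannot improvise, so it falls back.
    return FALLBACK
```

Because every reply is fixed in advance, the behaviour is fully predictable and auditable, at the cost of the flexibility that the paragraph above attributes to chatbots with artificial intelligence.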

The scenario was developed by RS based on a standard workbook on group CBT for gambling disorder. The scenario was implemented for GAMBOT by Hachidori Inc., which provided a chatbot development platform. After in-house user testing, we started this RCT to evaluate the efficacy of GAMBOT. No content changes were made during the trial period.

Table 1 shows the contents sent to each group on each day. Participants in the intervention group (GAMBOT) received monitoring, personalised feedback, and messages based on cognitive behavioural theory from GAMBOT around 9 pm every day during the 28 days of the trial period.

Participants in the control group received messages only for assessments (Assessment Only, AO) every two weeks during the 28 days. GAMBOT was not publicly available so that participants in the control group were not able to use it (Table 2).

We did not send any reminder messages to encourage participants to respond more to GAMBOT, even if a participant responded only a few times during the trial period. No co-intervention was provided by the authors for either group.

Level of Human Involvement

From recruitment to outcome assessment, human involvement was required only for confirming participants’ identities and sending Amazon gift card codes via LINE after the pre- and post-intervention assessments. All participants received Amazon gift cards worth 1000 Japanese yen [US $10] at both the baseline and day 28 assessments.

Bug Fixes, Downtimes, Content Changes

There was downtime due to misconfiguration of the server for 4 days beginning 3 July 2018. We excluded from our primary analysis those who participated during downtime on the 6th, since participants in the intervention group, who received daily messages from GAMBOT, might have been affected more than those in the AO group, who only received messages three times in 28 days. As mentioned in the “Statistical Analyses” section, we performed sensitivity analyses using the data from 253 participants, including those excluded due to the downtime.

Outcome Measures

The primary outcome was the absolute change in PGSI from pre- to post-intervention, which was used as an outcome measure in several previous studies (Ferris and Wynne 2001; Goslar et al. 2017). PGSI is a self-administered questionnaire consisting of nine items to evaluate problematic gambling behaviours over the previous 12 months. Each item is scored from 0 = ‘Never’ to 3 = ‘Always’. The total score ranges between 0 and 27, with higher scores indicating greater problems. We used the Japanese version of the PGSI, whose validity and reliability were confirmed with a nationwide sample (So et al. 2019). In order to detect a change in gambling behaviours after the four-week trial period, we made a minor change in the PGSI to ask participants about their gambling behaviours over the previous four weeks.
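The scoring rule described above can be sketched as follows in Python. The function names `pgsi_total` and `is_eligible` are hypothetical; the item range (0 to 3), the number of items (nine), and the eligibility threshold (three or greater) are taken from the text.

```python
def pgsi_total(item_scores):
    """Sum nine PGSI item scores, each from 0 ('Never') to 3 ('Always')."""
    if len(item_scores) != 9:
        raise ValueError("PGSI has nine items")
    if any(score not in (0, 1, 2, 3) for score in item_scores):
        raise ValueError("each item must be scored 0, 1, 2, or 3")
    return sum(item_scores)


def is_eligible(total, threshold=3):
    """Eligibility rule used in this trial: PGSI total of three or greater."""
    return total >= threshold


def pgsi_change(pre_total, post_total):
    """Pre-to-post change; negative values indicate fewer problems at follow-up."""
    return post_total - pre_total
```

The primary outcome is then the between-group difference in `pgsi_change`, adjusted for baseline as described in the “Statistical Analyses” section.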

Secondary outcomes included between-group differences in pre-to-post intervention absolute changes in the Gambling Symptom Assessment Scale (G-SAS) (Kim et al. 2009), amounts wagered in the past month, and gambling frequency in the past month. G-SAS is a self-administered questionnaire used to assess problem gambling severity in the most recent week. It consists of 12 items (e.g. “1. If you had unwanted urges to gamble during the past week, on average, how strong were your urges?”) which are rated between 1 and 4. The total score is calculated by summing the scores for each item; therefore, it ranges from 12 to 48, with higher scores reflecting more severe problem gambling. In the present study, we used the Japanese version of G-SAS (Yokomitsu and Kamimura 2019), which demonstrated excellent internal consistency (Cronbach’s α coefficient was 0.96). Amounts wagered and gambling frequency were self-reported by participants in response to 1-item questions.

Furthermore, we obtained usage data concerning how participants in the intervention group used GAMBOT. We defined ‘use’ of GAMBOT as any reaction by participants to LINE messages on any day. For dosage of use, we measured the number of days using GAMBOT. We also asked participants in the intervention group to answer a questionnaire investigating the usability and workability of GAMBOT at post-intervention. All baseline characteristics and outcome data were self-administered by participants on LINE. No face-to-face or telephone contact was conducted.

Furthermore, we described the impressions of participants in the intervention group after using GAMBOT with the Net Promoter Score (NPS) (Reichheld 2003). NPS is often used to assess the willingness of users to recommend a product to others. Users of a product are asked to rate their willingness to do so between 0 and 10. ‘Promoters’ are those who give ratings of 9 or 10; ‘Passives’ are those who rate 7 or 8; and ‘Detractors’ rate 6 or less. The NPS of a product is calculated as the percentage of ‘Promoters’ minus the percentage of ‘Detractors’.

Randomisation and Blinding

Real-time simple randomisation by a server-side program was performed after informed consent and the eligibility check. Allocation was concealed from researchers since no random allocation sequence was prepared in advance.
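A minimal Python sketch of real-time simple randomisation is given below. The trial's actual server-side program is not described in the text, so the use of the `secrets` module, the group labels, and the `allocate` function are assumptions for illustration only.

```python
import secrets

# Group labels as used in this report; equal allocation probability is assumed.
GROUPS = ("GAMBOT", "AO")


def allocate() -> str:
    """Simple (unrestricted) randomisation: one independent fair draw per participant.

    The draw happens only at the moment of enrolment, so no allocation
    sequence exists beforehand that anyone could inspect.
    """
    return secrets.choice(GROUPS)
```

With simple randomisation, group sizes are not forced to be equal, so modest imbalances between arms can arise by chance.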

We conducted a quadruple-blind trial. Generally, there are four parties which should be blinded to allocation to avoid biases as much as possible: participants; carers and people delivering the interventions; outcome assessors; and statisticians (Higgins et al. 2011). With a careful description in the informed consent document, participants, who were also outcome assessors, were unaware whether they were allocated to the intervention of interest or not. Since researchers could potentially provide participants with additional interventions, they were also blinded owing to the automated procedure of the trial requiring minimal human involvement. Furthermore, the statistical analyst (TAF) was also blinded to the allocation until results of the analyses for primary and secondary outcomes were fixed. TAF was provided only with items collected from participants in both groups.

Sample Size

We prospectively calculated the sample size as 99 participants in each group, for a total of 198, to detect a standardised mean difference of 0.4 on the PGSI with adequate power (beta level of 0.20) at a two-sided alpha level of 0.05. Previous trials of online interventions for gambling problems demonstrated effect sizes between 0.5 and 1.0 using wait-list control. We, however, conservatively set an effect size of 0.4 because using the wait-list control may have inflated the size of effects.
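The stated sample size can be reproduced with the usual normal-approximation formula for comparing two means, n per group = 2(z₁₋α/₂ + z₁₋β)² / d². The sketch below is an illustration under that assumption; the text does not state which software or formula the authors actually used, and the function name `n_per_group` is hypothetical.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(smd: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-group n for a two-sided, two-sample comparison (normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for two-sided alpha
    z_beta = NormalDist().inv_cdf(power)           # quantile for power = 1 - beta
    return ceil(2 * (z_alpha + z_beta) ** 2 / smd ** 2)


print(n_per_group(0.4))  # 99 per group, i.e. 198 in total
```

Halving the detectable effect size roughly quadruples the required sample, which is why the conservative choice of 0.4 rather than 0.5 to 1.0 matters for feasibility.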

Statistical Analyses

TAF, blinded to the allocation, performed statistical analyses using STATA 15.1 according to the pre-specified statistical analysis plan, except for usage data. RS conducted statistical analyses related to usage data using R 3.4.1. We analysed all participants in their randomly allocated groups, except for the 56 who were excluded before starting these analyses, as described in the “Bug Fixes, Downtimes, Content Changes” section. To assess the influence of excluding the 56 participants from the main analyses, we additionally conducted sensitivity analyses for the following efficacy outcomes using data from all the participants.

Regarding the efficacy analyses, we followed the intention-to-treat basis and multiple imputations were performed for missing outcome data. We calculated the point estimate and 95% CI of the between-group difference in the change score of the PGSI total score from baseline to post-treatment (day 28) using an analysis of covariance (ANCOVA) as the primary efficacy analysis and the baseline PGSI total score as a covariate. As a secondary efficacy analysis, we compared the post-treatment G-SAS total score between the two groups with ANCOVA. We used the PGSI total score at baseline as a covariate, instead of the G-SAS total score, because we inadvertently failed to obtain the G-SAS at baseline. We also investigated the between-group difference in changes in the previous-month gambling value and frequency using the same model as the primary analysis. We did not make any adjustments for multiple testing in the secondary efficacy analyses owing to their exploratory nature.
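The ANCOVA model described above amounts to a linear regression of the change score on a group indicator and the baseline score, with the group coefficient as the adjusted between-group difference. The following is a minimal pure-Python sketch of that point estimate only. It omits multiple imputation and confidence intervals, uses made-up data, and is not the authors' STATA code; `solve` and `ancova_group_effect` are hypothetical helper names.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gauss-Jordan elimination with pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def ancova_group_effect(change, group, baseline):
    """Least-squares fit of change ~ 1 + group + baseline; returns the group coefficient."""
    rows = [[1.0, float(g), float(x)] for g, x in zip(group, baseline)]
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * y for r, y in zip(rows, change)) for i in range(3)]
    return solve(xtx, xty)[1]


# Made-up data: 1 = intervention, 0 = control; change built with a true effect of -1.5.
baseline = [10, 12, 8, 15, 9, 11, 14, 7]
group = [1, 0, 1, 0, 1, 0, 1, 0]
change = [-2.0 - 1.5 * g - 0.3 * x for g, x in zip(group, baseline)]
effect = ancova_group_effect(change, group, baseline)
```

Adjusting for the baseline score removes variance in the change score that is explained by initial severity, which generally tightens the estimate of the group effect.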

As for usage data, we compared the proportion of participants who did not provide post-intervention outcomes between the two groups with Fisher’s exact test. Using data from the participants allocated to the GAMBOT group, we depicted the frequency and recency of responses to messages from GAMBOT.
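For readers unfamiliar with Fisher’s exact test, the sketch below computes a two-sided p-value for a 2×2 table from hypergeometric probabilities. The function name and the example table are illustrative assumptions; the table is a generic textbook-style example, not the trial’s dropout counts.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact p-value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability of x in the top-left cell, margins fixed.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    observed = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Sum the probabilities of all tables no more likely than the observed one;
    # the small tolerance guards against floating-point ties.
    return sum(p for p in map(prob, range(lo, hi + 1)) if p <= observed * (1 + 1e-9))


# Illustrative table only (rows: groups; columns: outcome missing / outcome provided).
p_value = fisher_exact_two_sided(8, 2, 1, 5)
```

The exact test is a natural choice when some cell counts are small, as is typical for dropout counts in a trial with high follow-up.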

Changes from Original Protocol

As for ANCOVA with G-SAS as a secondary outcome, we inadvertently failed to obtain the G-SAS, which we had originally planned to use as a covariate, at baseline. Thus, we used the PGSI total score at baseline instead. All decisions to change the original protocol were made before any statistical analyses were conducted.

Ethical Considerations

We required all participants to provide informed consent. Informed consent procedures were conducted online. First, potential participants accessed our trial website with their smartphone to read the informed consent document. They were required to tap a button labelled ‘I read the informed consent document and consent to participate in this trial’ if they were willing to participate. Subsequently, GAMBOT was added to the ‘friend list’ of their LINE account and automatically started a conversation to confirm that they had read the informed consent document, were willing to participate in this trial, and met eligibility criteria.

To protect privacy, we printed and deleted images of identification sent electronically from the participants immediately after checking for multiple participation. Information about identifications was preserved offline separately from data recorded online.

The study protocol was approved by the ethics committees of the National Hospital Organisation Kurihama Medical and Addiction Centre and Kyoto University Graduate School of Medicine.

Trial Registration

We prospectively registered this trial with the University Hospital Medical Information Network Clinical Trial Registration (UMIN-CTR), Japan, on the 20th of March 2018 (UMIN000031836).

Results

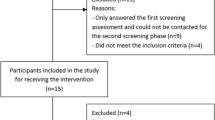

We recruited 254 participants between 26 March and 3 August 2018. Of these, we analysed data from 197 participants. We excluded 56 participants as described in the “Bug Fixes, Downtimes, Content Changes” section in “Methods” section. We also excluded a participant of the AO group during the data cleaning process upon finding they did not satisfy eligibility criteria. We obtained post-intervention assessment data from 93% of the analysed participants (185/197). Figure 1 depicts participant flow and Table 1 shows their baseline characteristics.

Participants in both groups showed decreases in PGSI total scores (GAMBOT (n = 96): − 4.38, 95% CI − 5.56 to − 3.20; AO (n = 101): − 3.24, 95% CI − 4.35 to − 2.13). However, there was no significant difference in the pre-to-post intervention changes in PGSI scores between the intervention and control groups (difference: − 1.14, 95% CI − 2.75 to 0.47, p = 0.162, effect size: 0.20, 95% CI − 0.08 to 0.48). In terms of secondary outcomes, only G-SAS at post-intervention was significantly lower in the GAMBOT group than in the AO group (between-group difference: − 3.14, 95% CI − 6.04 to − 0.24, p = 0.03). Pre-to-post intervention changes in gambling value and frequency were not significantly different between the two groups. Table 3 shows results of the primary and secondary outcomes in detail.
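As a rough consistency check on the reported intervals, a standard error and an approximate two-sided p-value can be backed out of each 95% CI. The sketch below assumes a symmetric normal-theory interval and uses the rounded figures reported above, so it should only approximately match the published p-values (about 0.17 for PGSI and about 0.03 for G-SAS under these assumptions). The function name is hypothetical.

```python
from statistics import NormalDist


def se_and_p_from_ci(estimate, lower, upper, level=0.95):
    """Back out the standard error and a two-sided normal-theory p-value from a CI."""
    z_crit = NormalDist().inv_cdf(0.5 + level / 2)
    se = (upper - lower) / (2 * z_crit)  # half-width divided by the critical value
    z = estimate / se
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return se, p


# Rounded values as reported in the text.
pgsi_se, pgsi_p = se_and_p_from_ci(-1.14, -2.75, 0.47)
gsas_se, gsas_p = se_and_p_from_ci(-3.14, -6.04, -0.24)
```

An interval that includes zero, as for PGSI, corresponds to p above 0.05, while one that excludes zero, as for G-SAS, corresponds to p below 0.05.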

We also conducted sensitivity analyses for the efficacy outcomes without excluding the 56 participants affected by downtime. As Table 4 shows, the results of the sensitivity analyses were similar to the results of the primary analyses excluding the 56 participants.

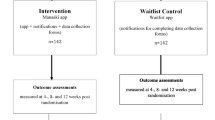

Figures 2 and 3 show usage data on how participants in the intervention group used GAMBOT. As depicted in Fig. 2, 77% of participants (74/96) continued to use GAMBOT throughout the intervention period. Figure 3 shows a detailed pattern of GAMBOT use for each participant. On average, participants responded to GAMBOT 22.6 out of 27 days, excluding day 28 which was reserved for post-intervention assessment.

We used the NPS to assess the willingness of participants in the intervention group to recommend GAMBOT to others. The numbers of ‘Promoters’, ‘Passives’, and ‘Detractors’ were 18, 18, and 51, respectively. Thus, the NPS of GAMBOT was − 33, which was calculated based on the difference between the numbers of Detractors and Promoters.
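The classification and scoring rules for the NPS can be sketched as below. The function names are hypothetical. Note that, with the counts reported here, a percentage-based calculation gives roughly − 38, whereas the reported − 33 equals the raw count difference (18 − 51); the sketch computes both so that the two conventions can be compared.

```python
def classify(rating: int) -> str:
    """Map a 0-10 willingness-to-recommend rating to its NPS category."""
    if not 0 <= rating <= 10:
        raise ValueError("rating must be between 0 and 10")
    if rating >= 9:
        return "Promoter"
    if rating >= 7:
        return "Passive"
    return "Detractor"


def nps_percentage(promoters: int, passives: int, detractors: int) -> float:
    """Percentage of Promoters minus percentage of Detractors."""
    total = promoters + passives + detractors
    return 100 * (promoters - detractors) / total


def nps_count_difference(promoters: int, detractors: int) -> int:
    """Raw difference in counts, which matches the figure reported in the text."""
    return promoters - detractors


percentage_based = nps_percentage(18, 18, 51)
count_based = nps_count_difference(18, 51)
```

Either way the score is clearly negative, because Detractors outnumber Promoters by almost three to one.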

Discussion

The purpose of this trial was to evaluate the efficacy of GAMBOT for problem gamblers seeking help online. GAMBOT was integrated into the most popular mobile messaging service in Japan as a chatbot aiming to achieve a lower dropout rate than previously reported online interventions.

As we had intended, the dropout rate was low. Around 80% of participants in the intervention group continued to use GAMBOT through the participation period and 93.4% of participants in both groups provided primary outcome data. Despite such utilisation, we failed to demonstrate a significant between-group difference in the primary outcome, the pre-to-post intervention change in PGSI scores, with a sample size predetermined to detect an effect size of 0.4. Though the point estimates of all gambling-related outcomes were in favour of the GAMBOT group, only G-SAS at post-intervention was significantly lower in the GAMBOT group than in the AO group.

Regarding the primary outcome, the point estimate of the effect size was 0.20 and not statistically significant. This point estimate is smaller than the previously reported effect sizes of I-CBT (1.19) and I-MFS (0.80) without therapist involvement (Casey et al. 2017). The first possible explanation for this is the difference in control conditions. The trial by Casey et al. used a waitlist control, which can inflate effect estimates by as much as threefold (Furukawa et al. 2014; Riper et al. 2014), whereas we used an assessment-only control. As shown in Table 3, the PGSI score on day 28 was significantly lower than at baseline in both groups. Even the biweekly assessments in the AO group might have affected participants’ gambling behaviour, which would then have made it difficult to detect a between-group difference. The second possible explanation is the difference in contents. Their I-CBT consisted of six components developed to duplicate the style of face-to-face CBT as much as possible. In contrast, our GAMBOT intervention was delivered basically as simple text messages, though their content was based on a source for face-to-face CBT and delivered somewhat interactively. More interactive interventions, such as those using artificial intelligence (Fitzpatrick et al. 2017), may result in better outcomes. Thus, our intervention can be classified as a low-intensity intervention, like motivational interviewing or personalised feedback, which is generally intended for less problematic populations (Cunningham et al. 2009; Martens et al. 2015). GAMBOT might work better as a preventive intervention for at-risk gamblers than as a therapeutic intervention for problem gamblers. The last possible explanation is the difference in dropout rates. The completion rate of our GAMBOT intervention was 77%, far higher than that of the I-CBT (37%) or I-MFS (42%) by Casey et al., possibly because our intervention was integrated into a messaging app which people use several times a day to exchange private messages. However, by retaining more participants who are generally less engaged than others, our trial may have missed possible differences between the intervention and control.

In terms of the willingness of participants to recommend GAMBOT to others, the NPS of − 33 seems unfavourable. However, NPSs in Japan are reported to be lower than those in other areas because Asian people tend to prefer intermediate scores (e.g. four to six on a 10-point Likert scale), which are regarded as ‘Detractors’ when calculating an NPS (Seth et al. 2016). In Japan, even for the service with top customer satisfaction in an industry, the NPS can often be below zero. For example, the NPS of the iPhone in Japan was − 3.1 (NTTCom Online Marketing Solutions Corporation 2017), whereas that in the U.S. was 63 (Borison 2015). The NPS of GAMBOT (− 33) was comparable to the average for the health food industry in Japan (− 31.3) (NTTCom Online Marketing Solutions Corporation 2016).

There are some limitations of our trial. First, although it was not expensive, the monetary incentive might have increased completion and follow-up rates in our trial beyond those expected in a realistic setting. However, the risk of bias in effect estimation due to this incentive would be low since participants in both groups received equal rewards. The second limitation concerns possible participation by “fake” problem gamblers motivated by the monetary incentive. Although we advertised only to those searching for helpful information to stop their problem gambling, and the total value of the incentive (2000 Japanese yen) seems not worth disclosing personal information (e.g. identity cards) for the average Japanese person, we could not rule out the possibility of including such “fake” participants. Third, we assessed the gambling problems of our participants based on their self-report without an in-person interview. Though this may limit the validity of our measurement, an in-person assessment would not be suitable for our study context because our target population was problem gamblers hesitant to contact mental health professionals. The fourth limitation is generalisability. Our results cannot be applied to all problem gamblers because all of our participants were those who searched for ways to cope with their gambling problems. Motivating problem gamblers who do not seek any help is another important issue, but it is outside the scope of GAMBOT.

Despite these limitations, our trial has several strengths, the first being low risk of bias in the effect estimation. We controlled: (1) selection bias through fully computerised random allocation; (2) performance bias with quadruple-blinding and avoiding the waitlist control; (3) attrition bias with an over 90% rate of follow-up and multiple imputation; and (4) reporting bias with a prospectively registered study protocol and prospective statistical analysis plan. The second strength is generalisability of the results to real-world settings because: (1) we did not exclude any participants except those who had received support from mental health professionals; (2) we avoided any reminders, personal involvement, or special training for participants through the trial process since these would be costly to include in a real-world setting if the program was to be widely used.

In conclusion, though we attained high completion and follow-up rates, we failed to demonstrate a significant effect of our GAMBOT intervention for problem gamblers. Our results suggest some future directions. For problem gamblers, we should make GAMBOT more flexible and sophisticated. Taking advantage of its low cost and high acceptability, adjusting GAMBOT as a preventive intervention for less problematic gamblers would be useful for decreasing population-level gambling-related harm.

References

Browne, M., Greer, N., Rawat, V., & Rockloff, M. (2017). A population-level metric for gambling-related harm. International Gambling Studies, 17(2), 163–175. https://doi.org/10.1080/14459795.2017.1304973.

Calado, F., & Griffiths, M. D. (2016). Problem gambling worldwide: An update and systematic review of empirical research (2000–2015). Journal of Behavioral Addictions, 5(4), 592–613. https://doi.org/10.1556/2006.5.2016.073.

Casey, L. M., Oei, T. P. S., Raylu, N., Horrigan, K., Day, J., Ireland, M., et al. (2017). Internet-based delivery of cognitive behaviour therapy compared to monitoring, feedback and support for Problem Gambling: A randomised controlled trial. Journal of Gambling Studies, 33(3), 993–1010. https://doi.org/10.1007/s10899-016-9666-y.

Cunningham, J. A., Hodgins, D. C., Toneatto, T., Rai, A., & Cordingley, J. (2009). Pilot study of a personalised feedback intervention for problem gamblers. Behavior Therapy, 40(3), 219–224. https://doi.org/10.1016/j.beth.2008.06.005.

Eysenbach, G., & Group, C. E. (2011). CONSORT-EHEALTH: Improving and standardising evaluation reports of web-based and mobile health interventions. Journal of Medical Internet Research, 13(4), e126.

Ferris, J. A., & Wynne, H. J. (2001). The Canadian problem gambling index. Ottawa: Canadian Centre on Substance Abuse.

Fitzpatrick, K. K., Darcy, A., & Vierhile, M. (2017). Delivering cognitive behavior therapy to young adults with symptoms of depression and anxiety using a fully automated conversational agent (Woebot): A randomised controlled trial. JMIR Mental Health, 4(2), e19.

Furukawa, T. A., Noma, H., Caldwell, D. M., Honyashiki, M., Shinohara, K., Imai, H., et al. (2014). Waiting list may be a nocebo condition in psychotherapy trials: A contribution from network meta-analysis. Acta Psychiatrica Scandinavica, 130(3), 181–192. https://doi.org/10.1111/acps.12275.

Goslar, M., Leibetseder, M., Muench, H. M., Hofmann, S. G., & Laireiter, A. R. (2017). Efficacy of face-to-face versus self-guided treatments for disordered gambling: A meta-analysis. Journal of Behavioral Addictions, 6(2), 142–162. https://doi.org/10.1556/2006.6.2017.034.

Higgins, J. P., Altman, D. G., Gøtzsche, P. C., Jüni, P., Moher, D., Oxman, A. D., et al. (2011). The Cochrane Collaboration’s tool for assessing risk of bias in randomised trials. BMJ, 343, d5928. https://doi.org/10.1136/bmj.d5928.

Kim, S. W., Grant, J. E., Potenza, M. N., Blanco, C., & Hollander, E. (2009). The Gambling Symptom Assessment Scale (G-SAS): A reliability and validity study. Psychiatry Research, 166(1), 76–84.

LaBrie, R. A., Peller, A. J., LaPlante, D. A., Bernhard, B., Harper, A., Schrier, T., et al. (2012). A brief self-help toolkit intervention for gambling problems: A randomized multisite trial. American Journal of Orthopsychiatry, 82(2), 278–289. https://doi.org/10.1111/j.1939-0025.2012.01157.x.

Lorains, F. K., Cowlishaw, S., & Thomas, S. A. (2011). Prevalence of comorbid disorders in problem and pathological gambling: Systematic review and meta-analysis of population surveys. Addiction, 106(3), 490–498.

Martens, M. P., Arterberry, B. J., Takamatsu, S. K., Masters, J., & Dude, K. (2015). The efficacy of a personalised feedback-only intervention for at-risk college gamblers. Journal of Consulting and Clinical Psychology, 83(3), 494–499. https://doi.org/10.1037/a0038843.

Melville, K. M., Casey, L. M., & Kavanagh, D. J. (2010). Dropout from internet-based treatment for psychological disorders. The British Journal of Clinical Psychology, 49(Pt 4), 455–471. https://doi.org/10.1348/014466509X472138.

Petry, N. M., Rash, C. J., & Alessi, S. M. (2016). A randomized controlled trial of brief interventions for problem gambling in substance abuse treatment patients. Journal of Consulting and Clinical Psychology, 84(10), 874–886. https://doi.org/10.1037/ccp0000127.

Reichheld, F. F. (2003). The one number you need to grow. Harvard Business Review, 81(12), 46–55.

Riper, H., Blankers, M., Hadiwijaya, H., Cunningham, J., Clarke, S., Wiers, R., et al. (2014). Effectiveness of guided and unguided low-intensity internet interventions for adult alcohol misuse: A meta-analysis. PLoS ONE, 9(6), e99912. https://doi.org/10.1371/journal.pone.0099912.

Seth, S., Scott, D., Svihel, C., & Murphy-Shigematsu, S. (2016). Solving the mystery of consistent negative/low Net Promoter Score (NPS) in cross-cultural marketing research. Asia Marketing Journal, 17(4), 43–61.

Slutske, W. S., Blaszczynski, A., & Martin, N. G. (2009). Sex differences in the rates of recovery, treatment-seeking, and natural recovery in pathological gambling: Results from an Australian community-based twin survey. Twin Research and Human Genetics, 12(5), 425–432.

So, R., Matsushita, S., Kishimoto, S., & Furukawa, T. A. (2019). Development and validation of the Japanese version of the problem gambling severity index. Addictive Behaviors, 8, 49.

Suurvali, H., Cordingley, J., Hodgins, D. C., & Cunningham, J. (2009). Barriers to seeking help for gambling problems: A review of the empirical literature. Journal of Gambling Studies, 25(3), 407–424.

Svensson, J., Romild, U., & Shepherdson, E. (2013). The concerned significant others of people with gambling problems in a national representative sample in Sweden—a 1 year follow-up study. BMC Public Health, 13, 1087. https://doi.org/10.1186/1471-2458-13-1087.

Yakovenko, I., & Hodgins, D. C. (2016). Latest developments in treatment for disordered gambling: Review and critical evaluation of outcome studies. Current Addiction Reports, 3(3), 299–306.

Yokomitsu, K., & Kamimura, E. (2019). Factor structure and validation of the Japanese version of the Gambling Symptom Assessment Scale (GSAS-J). Journal of Gambling Issues. https://doi.org/10.4309/jgi.2019.41.1.

Acknowledgements

We would like to thank E. Kamimura and K. Yokomitsu for providing us with the Japanese version of the G-SAS. We also thank R. Fujioka and A. Muraida for helping to coordinate the present study.

Funding

This trial was funded by the Japan Agency for Medical Research and Development (AMED). AMED had no role in any process of the study from development of the intervention to writing the manuscript.

Author information

Contributions

SH is the principal investigator and is responsible for the study process. SH, RS, TAF, and TB developed the study protocol. RS, SM, TM, SF, HO, and SH contributed to developing the intervention. RS designed the system for randomization and the data collection system. TAF performed the statistical analysis for the primary and secondary outcomes. RS aggregated the usage data. SH, RS, and TAF drafted the manuscript. SM and SH acquired the funding. All authors reviewed and edited the manuscript and approved the final version.

Ethics declarations

Conflict of interest

RS, SM, TM, SF, HO, and SH had been involved in the development of the workbook for face-to-face group CBT for Gambling Disorder on which the content of GAMBOT was based. GAMBOT was mainly developed by RS referring to the workbook. Both the workbook and GAMBOT were developed not for business but for academic interest. RS has received personal fees from Igaku-shoin Co., Ltd., Kagaku-hyoronsha Co., Ltd., Medical Review Co., Ltd., Otsuka Pharmaceutical Co., Ltd., and CureApp Inc. outside the submitted work. TAF reports personal fees from Meiji, grants and personal fees from Mitsubishi-Tanabe, personal fees from MSD, personal fees from Pfizer, outside the submitted work; TAF has a patent 2018-177688 pending. SH, TM, SF, HO, and TB declared no conflict of interest. The authors have received national funding from the Japan Agency for Medical Research and Development (AMED).

About this article

Cite this article

So, R., Furukawa, T.A., Matsushita, S. et al. Unguided Chatbot-Delivered Cognitive Behavioural Intervention for Problem Gamblers Through Messaging App: A Randomised Controlled Trial. J Gambl Stud 36, 1391–1407 (2020). https://doi.org/10.1007/s10899-020-09935-4

DOI: https://doi.org/10.1007/s10899-020-09935-4