Abstract

We consider in this paper a class of linear multiplicative programming problems that arise from numerous applications such as network flows and financial optimization. The problem is first transformed into an equivalent nonlinear optimization problem to provide a novel convex quadratic relaxation. A simplicial branch-and-bound algorithm is then designed to globally solve the problem, based on the proposed relaxation and simplicial branching process. The convergence and computational complexity of the algorithm are also analyzed. The results of numerical experiments confirm the efficiency of the proposed algorithm for tested instances.


1 Introduction

We consider the following linear multiplicative programming (LMP) problem:

where \(\varvec{c}_{i}, \varvec{d}_{i}\in R^n,\ c_{0i}, d_{0i}\in R\), \(\varvec{A}\in R^{m\times n}, \ \varvec{b}\in R^{m}\), and D is a nonempty bounded set.

As a special case of nonconvex programming, the LMP problem covers many significant applications, such as robust optimization [1, 2], financial optimization [3], network flows [4,5,6], and VLSI chip design [7]. Additionally, linear 0–1 programming, bilinear programming and general quadratic programming can be reformulated as LMP problems. For example, consider the following quadratic programming problem [8]

where \(\varvec{Q}\in R^{n\times n}\) is a symmetric matrix of rank p. As pointed out by Tuy [9], there exist linearly independent vectors \(\varvec{c}_1, \ldots ,\varvec{c}_p\in R^n\) and \(\varvec{d}_1, \ldots ,\varvec{d}_p\in R^n\) such that

Therefore, the applications of the LMP problem include those of these special cases.
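As a small illustration of how a quadratic form can be written as a sum of products of linear functions, the sketch below uses the spectral decomposition of a symmetric matrix. The 2×2 matrix, its eigenpairs, and the choice \(\varvec{c}_i=\lambda_i\varvec{v}_i\), \(\varvec{d}_i=\varvec{v}_i\) are assumptions made for this example only; they are one possible construction and not necessarily the one used in [9].

```python
import math

# Hypothetical example: Q = [[1, 2], [2, 1]] has eigenpairs
# (3, (1, 1)/sqrt(2)) and (-1, (1, -1)/sqrt(2)).
Q = [[1.0, 2.0], [2.0, 1.0]]
s = 1.0 / math.sqrt(2.0)
eigenpairs = [(3.0, (s, s)), (-1.0, (s, -s))]


def quad_form(x):
    """Evaluate x^T Q x directly."""
    return sum(x[i] * Q[i][j] * x[j] for i in range(2) for j in range(2))


def product_form(x):
    """Evaluate sum_i (c_i^T x)(d_i^T x) with c_i = lambda_i * v_i and d_i = v_i."""
    total = 0.0
    for lam, v in eigenpairs:
        vx = v[0] * x[0] + v[1] * x[1]
        total += (lam * vx) * vx
    return total


for x in [(1.0, 2.0), (-0.5, 3.0)]:
    print(quad_form(x), product_form(x))
```

Both columns printed by the loop agree up to rounding, which is the sense in which the quadratic objective is a sum of p = 2 linear products.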

For solving LMP problem, various algorithms have been developed, such as the outer approximation algorithm [10], parametric simplex methods [11, 12], branch-and-bound methods [13,14,15], quadratic programming algorithms [16,17,18], the monotonic approach [19], and optimal level solution methods [6, 20,21,22]. Additionally, Wang et al. [23] developed a branch-and-bound algorithm by utilizing the convex envelope of the bilinear function and constructing a linear relaxation. Shen et al. [24, 25] proposed a linear relaxation method by exploiting the characteristics of the initial problem to present a branch-and-bound algorithm for solving LMP problem. Jiao et al. [15] gave a branch-and-bound algorithm for globally solving generalized LMP problem with coefficients. Shen et al. [26] presented a rectangular branch-and-bound algorithm for LMP problem. Zhao et al. [27] introduced an efficient inner approximation algorithm for solving the generalized LMP problem with generalized linear multiplicative constraints. For solving LMP problem, Zhao et al. [28] took advantage of the two-phase relaxation technique to develop a branch-and-bound algorithm.

In this paper, a simplicial branch-and-bound algorithm (SBBA) is presented for globally solving LMP problem. In the algorithm, we first lift LMP problem to a new equivalent problem (EP), and then further transform EP to another equivalent problem (EP1), so that an underestimation of the objective function of EP1 can be constructed easily to obtain lower bounds of its optimal value. The subproblems that must be solved to obtain these lower bounds are all convex quadratic programs, so the main computational effort of the algorithm lies in solving a sequence of convex quadratic programming problems that do not grow in size from iteration to iteration. Compared with other branch-and-bound algorithms such as [23,24,25,26, 28], the main features of the proposed SBBA are as follows. (1) In the branch-and-bound search, SBBA uses simplices rather than the more complicated polytopes in [23,24,25,26] as partition elements, and its working space for branching is \(R^p\) instead of \(R^n\) [23, 26]. This reduces the required computational cost. (2) Different from the algorithms in [23, 28], we analyze the computational complexity of SBBA. (3) For the bounding technique of the branch-and-bound search, we propose a convex quadratic relaxation by exploiting the characteristics of the problem, while the authors of Refs. [23,24,25] utilized different linear relaxations to obtain lower bounds of the optimal value of the problem. (4) It can be seen from Tables 1 and 2 in Sect. 4 that the CPU time of SBBA is much less than that of the algorithms in [24, 25] and the solver BARON. In particular, the numerical results show that the proposed SBBA can efficiently find the optimal solutions for all test instances.

The content of this paper is organized as follows. In Sect. 2, LMP problem is converted into the problem EP1 and the convex quadratic programming problem is constructed to provide the lower bound of the optimal value of EP1. In Sect. 3, we present a simplicial branch-and-bound algorithm which integrates the convex quadratic relaxation and simplicial branching rule. Additionally, the convergence and complexity analysis of the proposed algorithm are established. We report numerical comparison results in Sect. 4. Finally, we conclude the paper in the last section.

2 Reformulation and underestimation

In this section, we first transform LMP problem into an equivalent problem EP1, and then construct the convex quadratic relaxation to estimate the lower bound of the optimal value of EP1.

2.1 Problem reformulation

For solving LMP problem, we lift LMP problem to a new nonlinear optimization model that is equivalent to LMP problem. To this end, for each \(\ i=1,\ldots ,p\), let us define

and introduce an initial p-simplex

with \(p+1\) vertices \(\varvec{u}^{0,i}\ (i=1,\ldots , p+1)\), where

By using the simplex \(S_{0}\) that covers the hyper-rectangle \([\varvec{y}^{min},\varvec{y}^{max}]\), LMP problem can be translated into the following problem EP

where

The equivalence result for EP and the original LMP problem is given by the following theorem.

Theorem 1

If \((\varvec{x}^{*},\varvec{y}^{*})\) is an optimal solution of EP problem, then \(\varvec{x}^{*}\) is an optimal solution of LMP problem, and \(f(\varvec{x}^*)=\varphi (\varvec{x}^*,\varvec{y}^*)\). Conversely, if \(\varvec{x}^{*}\) is an optimal solution of LMP problem, then \((\varvec{x}^{*},\varvec{y}^{*})\) is an optimal solution of EP problem, where \({y}_{i}^{*}=\varvec{d}_{i}^{T}\varvec{x}^{*}-\varvec{c}_{i}^{T}\varvec{x}^{*}+d_{0i}-c_{0i},\ i=1,\ldots ,p\).

Proof

If \((\varvec{x}^{*},\varvec{y}^{*})\) is an optimal solution of EP problem, then \(\varvec{x}^{*}\) is a feasible solution for LMP problem. Suppose that \(\varvec{x}^{*}\) is not an optimal solution of LMP problem, then there exists a feasible solution \(\overline{\varvec{x}}\in D\) such that

Since the inequalities

hold for any \(\varvec{x}\in D,\ \varvec{y}\in S_0\), \(i=1,\ldots ,p\), it follows that

for each \(i=1,\ldots ,p\). Summing up from \(i=1\) to p on both sides of the above inequalities, we get

Further, the equality in (2.3) holds if and only if \({y}_{i}=\varvec{d}_{i}^{T}\varvec{x}-\varvec{c}_{i}^{T}\varvec{x}+d_{0i}-c_{0i},\ i=1,\ldots ,p.\) Now, let \(\overline{{y}}_{i}=\varvec{d}_{i}^{T}\overline{\varvec{x}}-\varvec{c}_{i}^{T}\overline{\varvec{x}}+d_{0i}-c_{0i}\), then \((\overline{\varvec{x}},\overline{\varvec{y}})\) is a feasible solution of EP, and it follows from (2.3) that

According to \(\varvec{x}^*\in D,\ \varvec{y}^*\in S_0\) and (2.3), we have

Then, by (2.2), (2.4) and (2.5), it follows that \(\varphi (\overline{\varvec{x}},\overline{\varvec{y}})<\varphi (\varvec{x}^{*},\varvec{y}^{*}),\) which contradicts the optimality of \((\varvec{x}^{*},\varvec{y}^{*})\) for EP. Thus, \(\varvec{x}^{*}\) is an optimal solution of LMP problem. In addition, for the optimal solution \((\varvec{x}^*,\varvec{y}^*)\) of EP problem, it must hold

In fact, if the formula (2.6) is not true, that is, \({y}_{i}^{*} \ne \varvec{d}_{i}^{T}\varvec{x}^{*}-\varvec{c}_{i}^{T}\varvec{x}^{*}+d_{0i}-c_{0i}, i=1,\ldots ,p\), let \(\overline{{y}_{i}} =\varvec{d}_{i}^{T}\varvec{x}^{*}-\varvec{c}_{i}^{T}\varvec{x}^{*}+d_{0i}-c_{0i}, i=1,\ldots ,p\). Clearly, \(\overline{{y}_{i}}\ne {y}_{i}^{*}\) for each i, and \((\varvec{x}^{*},\overline{\varvec{y}})\) is a feasible solution of EP. It follows from (2.3) that

This contradicts the fact that \((\varvec{x}^{*},\varvec{y}^{*})\) is the optimal solution of EP. Additionally, since the solution of EP satisfies (2.6), by (2.3) we have \( \varphi (\varvec{x}^*,\varvec{y}^*)=f(\varvec{x}^*).\)

Conversely, assume that \(\varvec{x}^{*}\) is an optimal solution of LMP problem. Let \({y}_{i}^{*}=\varvec{d}_{i}^{T}\varvec{x}^{*}-\varvec{c}_{i}^{T}\varvec{x}^{*}+d_{0i}-c_{0i},\ i=1,\ldots ,p.\) Then \((\varvec{x}^{*},\varvec{y}^{*})\) is a feasible solution of EP. Now, suppose that EP has a feasible solution \((\overline{\varvec{x}},\overline{\varvec{y}})\) such that

From (2.3), we obtain

and

According to (2.7)–(2.9), we get

This contradicts the optimality of \(\varvec{x}^{*}\) to LMP problem. Therefore, \((\varvec{x}^{*},\varvec{y}^{*})\) is the optimal solution of EP problem. This completes the proof. \(\square \)
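The relation between \(f\) and \(\varphi \) used in the proof can be checked numerically. The sketch below assumes that \(f(\varvec{x})=\sum _{i=1}^{p}(\varvec{c}_{i}^{T}\varvec{x}+c_{0i})(\varvec{d}_{i}^{T}\varvec{x}+d_{0i})\) and that \(\varphi (\varvec{x},\varvec{y})=\sum _{i=1}^{p}\{\frac{1}{2}[(\varvec{c}_{i}^{T}\varvec{x}+c_{0i})^2+(\varvec{d}_{i}^{T}\varvec{x}+d_{0i})^2]+y_i(\varvec{c}_{i}^{T}\varvec{x}-\varvec{d}_{i}^{T}\varvec{x}+c_{0i}-d_{0i})+\frac{1}{2}y_i^2\}\). This form is inferred from the terms named in Sect. 2.2 and from the equality condition stated in Theorem 1, so it should be read as an assumption rather than a quotation of the paper's definition. Under this form, \(\varphi -f=\frac{1}{2}\sum _i(a_i-b_i+y_i)^2\ge 0\) with \(a_i=\varvec{c}_{i}^{T}\varvec{x}+c_{0i}\) and \(b_i=\varvec{d}_{i}^{T}\varvec{x}+d_{0i}\). The data in the example are hypothetical.

```python
def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def f(x, terms):
    """Sum of products (c_i^T x + c_0i)(d_i^T x + d_0i)."""
    return sum((dot(c, x) + c0) * (dot(d, x) + d0) for c, c0, d, d0 in terms)


def phi(x, y, terms):
    """Assumed lifted objective; see the lead-in for the form used here."""
    total = 0.0
    for (c, c0, d, d0), yi in zip(terms, y):
        a = dot(c, x) + c0
        b = dot(d, x) + d0
        total += 0.5 * (a * a + b * b) + yi * (a - b) + 0.5 * yi * yi
    return total


def y_star(x, terms):
    """y_i = d_i^T x - c_i^T x + d_0i - c_0i, the equality case in Theorem 1."""
    return [dot(d, x) + d0 - dot(c, x) - c0 for c, c0, d, d0 in terms]


# Hypothetical data with p = 2 and n = 2: each term is (c_i, c_0i, d_i, d_0i).
terms = [((1.0, -2.0), 0.5, (0.3, 0.7), -1.0),
         ((-0.4, 0.2), 1.0, (2.0, 1.0), 0.0)]
x = (0.8, -1.5)

print(f(x, terms), phi(x, y_star(x, terms), terms))  # equal up to rounding
print(phi(x, [0.0, 0.0], terms) >= f(x, terms))      # phi overestimates f elsewhere
```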

From Theorem 1, it is easy to see that we can solve LMP problem by solving EP problem. Next, for convenience, we further reformulate EP into the problem EP1 by

where \(\psi (\varvec{y})=\min \limits _{\varvec{x}\in D}\varphi (\varvec{x},\varvec{y}).\) The following theorem illustrates that problems EP and EP1 are equivalent.

Theorem 2

\(\varvec{y}^{*}\) is the optimal solution of EP1 if and only if \((\varvec{x}^{*},\varvec{y}^{*})\) is the optimal solution of EP with \(\varvec{x}^*=\mathop {\arg \min }_{\varvec{x}\in D}\varphi (\varvec{x},\varvec{y}^*)\), and \(\psi (\varvec{y}^*)=\varphi (\varvec{x}^*,\varvec{y}^*)\).

Proof

If \(\varvec{y}^{*}\) is an optimal solution of EP1, it follows from \(\varvec{x}^*=\mathop {\arg \min }_{\varvec{x}\in D}\varphi (\varvec{x},\varvec{y}^*)\) that

Let \((\overline{\varvec{x}},\overline{\varvec{y}})\) be the feasible solution of EP. Clearly, \(\overline{\varvec{y}}\) is a feasible solution of EP1, then we have

By the definition of \(\psi (\varvec{y})\) and \(\overline{\varvec{x}}\in D\), it follows that

From (2.10)–(2.11) we conclude that

So, \((\varvec{x}^{*},\varvec{y}^{*})\) is the optimal solution to problem EP. Additionally, by invoking the definitions of \(\varvec{x}^*\) and the function \(\psi (\varvec{y})\), we have \(\psi (\varvec{y}^*)=\varphi (\varvec{x}^*,\varvec{y}^*).\)

Additionally, if \((\varvec{x}^{*},\varvec{y}^{*})\) is an optimal solution of EP, then \(\varvec{y}^{*}\) is a feasible solution to EP1. Suppose that \(\varvec{y}^{*}\) is not the optimal solution of EP1, then there exists \(\overline{\varvec{y}}\in S_0\) such that

From the definition of the function \(\psi (\varvec{y})\) and \(\varvec{x}^{*}\in D\), we conclude

Note that \((\varvec{x}^{*},\varvec{y}^{*})\) is the optimal solution of problem EP, then

Let \(\overline{\varvec{x}}=\mathop {\arg \min }_{\varvec{x}\in D}\varphi (\varvec{x},\overline{\varvec{y}}).\) Then \((\overline{\varvec{x}},\overline{\varvec{y}})\) is a feasible solution of EP, and we have

Using (2.13)–(2.15), we obtain

This contradicts the fact that \((\varvec{x}^{*},\varvec{y}^{*})\) is the optimal solution of EP. Thus \(\varvec{y}^{*}\) is the optimal solution of EP1. The proof is finished. \(\square \)

According to Theorems 1 and 2, if \(\varvec{y}^{*}\) and \(\varvec{x}^{*}\) are the optimal solutions of problems EP1 and LMP, respectively, then we must have \(\varvec{x}^*=\mathop {\arg \min }_{\varvec{x}\in D}\varphi (\varvec{x},\varvec{y}^*)\) such that

that is, problems EP1 and LMP have the same optimal value. In the following, we will focus on how to solve EP1.

2.2 Underestimation of EP1

In this subsection, for solving EP1 we first propose a lower bounding function for the objective function \(\psi (\varvec{y})\) of EP1, then construct a convex quadratic relaxation to estimate the lower bound of the optimal value of EP1.

Let \(S=[\varvec{u}^{1},\ldots ,\varvec{u}^{p+1}]\) be \(S_{0}\) or a sub-simplex of \(S_{0}\), and define two sets of vertices and edges of S as follows:

Define the length \(\delta (S)\) of the longest side of S by

Now, the corresponding \(\textrm{EP1}\) over S can be written as

where \(\psi (\varvec{y})=\min \limits _{\varvec{x}\in D}\varphi (\varvec{x},\varvec{y})\).

We next present a result to show a lower bounding function for the objective function \(\psi (\varvec{y})\) of problem EP1(S), by relaxing \( \sum \nolimits _{i=1}^{p}\frac{1}{2}[(\varvec{c}_{i}^{T}\varvec{x} +c_{0i})^2+(\varvec{d}_{i}^{T}\varvec{x}+d_{0i})^2]\) and \(\varvec{c}_{i}^{T}\varvec{x}-\varvec{d}_{i}^{T}\varvec{x}+c_{0i}-d_{0i}\) in the function \(\varphi (\varvec{x},\varvec{y})\), to \(w\in R\) and \({z}_i\in R\), respectively.

Theorem 3

For any given \(\varvec{y}\in S=[\varvec{u}^{1},\ldots ,\varvec{u}^{p+1}]\), consider the problem \((\mathrm{{P}}_{\varvec{y}})\) by

then we have \(\psi (\varvec{y})\ge \phi (\varvec{y})\).

Proof

For any given \(\varvec{y}\in S,\) let

then we have \(\varvec{x}(\varvec{y})\in D\) and \(\psi (\varvec{y})=\varphi (\varvec{x}(\varvec{y}),\varvec{y}).\) By using \(\varvec{x}(\varvec{y})\in D\) and the definition of the function \(\varphi \), we can obtain

Now, for simplifying the right-hand side of the inequality (2.16), let us denote

Thus, it follows from (2.16) that

As a result, \((\overline{w},\overline{\varvec{z}})\) is the feasible solution of problem (\(\textrm{P}_{\varvec{y}}\)), and its corresponding objective function value is as follows:

According to the definition of \(\varphi (\varvec{x},\varvec{y})\), we get

Since \(\psi (\varvec{y})=\varphi (\varvec{x}(\varvec{y}),\varvec{y})\), we have \(\psi (\varvec{y})=g(\overline{w},\overline{\varvec{z}})\). Because \(\phi (\varvec{y})\) is the optimal value of problem (\(\textrm{P}_{\varvec{y}}\)) and \((\overline{w},\overline{\varvec{z}})\) is feasible for it, we conclude that \(\psi (\varvec{y})=g(\overline{w},\overline{\varvec{z}})\ge \phi (\varvec{y})\). The proof of the theorem is completed. \(\square \)

For any fixed \(\varvec{y}\in S\), problem (\(\textrm{P}_{\varvec{y}}\)) in Theorem 3 is a linear program, and its dual problem is as follows:

By strong duality, we get \(\phi (\varvec{y})=\overline{\phi }(\varvec{y})\). Additionally, for any \(\varvec{y}\in S\), we know that there exists a unique \(\varvec{\lambda }\in R^{p+1}\) such that

Thus problem \(\textrm{DP}_{\varvec{y}}\) has a unique feasible solution \(\varvec{\lambda }\), that is, the optimal solution to \(\textrm{DP}_{\varvec{y}}\) is \(\varvec{\lambda }\) and the optimal value is

Additionally, let us denote

and let \(\mathrm{{diag}}(\cdot )\) be a vector whose components are the diagonal elements of a square matrix \((\cdot )\). Substituting \(\varvec{y}=\sum \limits _{j=1}^{p+1}{\lambda }_{j}\varvec{u}^{j}\) into (2.18) and utilizing Theorem 3, we have

where

Note that the matrix \(M_{S}\) is a positive semi-definite matrix, and the corresponding convex quadratic programming (CQP) problem with respect to S will be considered as follows:

whose optimal value can be used as a lower bound on the optimal value of EP1(S) (see Theorem 4).
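The unique \(\varvec{\lambda }\) associated with a point \(\varvec{y}\in S\) is its vector of barycentric coordinates, i.e. the solution of \(\varvec{y}=\sum _{j=1}^{p+1}\lambda _j\varvec{u}^{j}\) together with \(\sum _{j=1}^{p+1}\lambda _j=1\). The following is a minimal sketch that computes it by Gauss-Jordan elimination; the function name and the triangle used in the example are assumptions made for illustration, and the routine presumes the vertices are affinely independent (otherwise a division by zero occurs).

```python
def barycentric(vertices, y):
    """Solve y = sum_j lam_j * u^j with sum_j lam_j = 1 for lam.

    vertices: p+1 points in R^p (affinely independent), y: point in R^p.
    """
    n = len(vertices)  # n = p + 1 unknowns
    # Rows 0..p-1 encode the coordinates, the last row encodes sum(lam) = 1.
    A = [[v[i] for v in vertices] + [y[i]] for i in range(n - 1)]
    A.append([1.0] * n + [1.0])
    for col in range(n):
        # Partial pivoting for numerical stability.
        piv = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[piv] = A[piv], A[col]
        for r in range(n):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][n] / A[i][i] for i in range(n)]


# Hypothetical 2-simplex (triangle) and an interior point.
triangle = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
lam = barycentric(triangle, (0.25, 0.25))
print(lam)
```

A point lies in S exactly when all returned coordinates are nonnegative, which is why the feasible set of the relaxation can be parameterized by \(\varvec{\lambda }\) alone.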

Theorem 4

For any \(S\subseteq S_0\), we have

where \(\widehat{\phi }_{S}\) is the optimal value of \(\textrm{CQP}(S)\) problem.

Proof

From Theorem 3 and the dual problem \(\textrm{DP}_{\varvec{y}}\), we can easily get

Next, we will show \(\min \limits _{\varvec{y}\in S}\overline{\phi }(\varvec{y})=\widehat{\phi }_{S}.\) For convenience, let us consider the following problem:

where \(\varvec{y^*}\) is the optimal solution to problem \(\mathrm{(\widehat{P}}_{S})\). Now, assume that \(S=[\varvec{u}^{1},\ldots ,\varvec{u}^{p+1}]\), then there exists the unique \(\varvec{\lambda }^{*}\in R^{p+1} \) such that

Substituting \(\varvec{y}^*\) into the objective function of problem \(\mathrm{(\widehat{P}}_{S})\) and using (2.18)–(2.19), we have

Thus, it follows from (2.20) that \(\varvec{\lambda }^{*}\) is the feasible solution of CQP(S) problem, and so \(\overline{\phi }(\varvec{y}^{*})\ge \widehat{\phi }_{S},\) i.e.,

Additionally, suppose that the optimal solution of CQP(S) problem is \(\overline{\varvec{\lambda }}\), then

and the corresponding optimal value is

Let \(\overline{\varvec{y}}=\sum \limits _{j=1}^{p+1}\overline{{\lambda }}_{j}\varvec{u}^{j},\) and it is clear that \(\overline{\varvec{y}}\) is a feasible solution of problem \(\mathrm{(\widehat{P}}_{S})\). Substituting \(\overline{\varvec{y}}\) into the objective function of problem \(\mathrm{(\widehat{P}}_{S})\) and using (2.18)–(2.19) again, we know that

This implies that \(\min \limits _{\varvec{y}\in S}\overline{\phi }(\varvec{y})\le \widehat{\phi }_{S}.\) By (2.21) we have \(\min \limits _{\varvec{y}\in S}\overline{\phi }(\varvec{y})=\widehat{\phi }_{S}.\) This completes the proof. \(\square \)

In the following, we will show that the optimal value of CQP(S) problem is sufficiently close to that of EP1(S) problem when the length of the longest side of the simplex S is sufficiently small.

Theorem 5

Given \(\varepsilon >0\) and the simplex \(S=[\varvec{u}^{1},\ldots ,\varvec{u}^{p+1}]\), let \(\delta _{0}=\max \limits _{1\le j\le p+1}\Vert \varvec{u}^{j}\Vert \). Assume that \(\overline{\psi }_{S}\) and \(\widehat{\phi }_{S}\) are the optimal values of problems \(\textrm{EP1}(S)\) and \(\textrm{CQP}(S)\), respectively. Then we have

if \(\delta (S)\le \varepsilon /\delta _{0}.\)

Proof

Let \(\psi _{0}=\min \limits _{1\le j\le p+1}\psi (\varvec{u}^j)\), and suppose that \(\Vert \varvec{u}^{j0}\Vert ^{2}=\max \limits _{1\le j\le p+1}\Vert \varvec{u}^{j}\Vert ^{2}\). From \(\varvec{u}^j\in S\) we can conclude that

In addition, Theorem 4 implies \(\widehat{\phi }_{S}=\min \limits _{\varvec{y}\in S}\overline{\phi }(\varvec{y})\). For any \(\varvec{y}\in S\), there exists the unique \(\varvec{\lambda }\) satisfying (2.17). According to (2.18), we know that

Thus

Using \(\varvec{y}=\sum \limits _{j=1}^{p+1}{\lambda }_{j}\varvec{u}^{j}\) for any \(\varvec{y}\in S\), we can derive that

which implies that

This, together with (2.23)–(2.24), yields

and so it follows from (2.22) and (2.25) that

This completes the proof. \(\square \)

3 Simplicial branch-and-bound algorithm

Based on the above discussion, by combining the CQP problem and the simplicial branching rule to be proposed, we will develop a simplicial branch-and-bound algorithm for globally solving LMP problem within a prescribed \(\varepsilon \) tolerance. We also establish the convergence of the algorithm and estimate its complexity.

The main idea of the proposed algorithm consists of two basic operations: successively refined partitioning of the simplices and estimation of bounds for the optimal value of LMP problem given by Theorem 4.

3.1 Simplicial partitioning rule

In order to give the partitioning rules needed for the proposed algorithm, we first introduce the relevant definitions and notations as follows.

Given a simplex \(S\subseteq S_0\), we say that a finite set of nonempty simplices, denoted \({\mathscr {P}}=\{S_{i}\ | \ i\in {\mathscr {I}}\}\), is a simplicial partition of S, if

where \({\mathscr {I}}\) denotes a finite index set, and \(\mathrm{{rbd}}\) stands for the relative boundary of a set.

For a simplicial partition \({\mathscr {P}}\), its vertex collection \(V({\mathscr {P}})\), the boundary set \(E({\mathscr {P}})\) and the diameter \(\delta ({\mathscr {P}})\) can be defined separately, given by

For two simplicial partitions \({\mathscr {P}}_1=\{S_{i}\ | \ i\in {\mathscr {I}}_1\},\) \({\mathscr {P}}_2=\{S_{j}\ | \ j\in {\mathscr {I}}_2\},\) we say that \({\mathscr {P}}_2\) is nested in \({\mathscr {P}}_1\), if there exists \(i\in {\mathscr {I}}_1\) such that \(S_{j}\subseteq S_{i}\), for all \(j\in {\mathscr {I}}_2\). We say that a sequence of simplicial partitions \(\{{\mathscr {P}}_k\ | \ k\in {\mathbb {N}}\}\) is a sequence of nested partitions if \({\mathscr {P}}_{k+1}\) is nested in \({\mathscr {P}}_k\) for all \(k\in {\mathbb {N}}\), where \({\mathbb {N}}\) is the set of natural numbers.

Now, we begin with the establishment of the simplicial partitioning rule to present the branch-and-bound algorithm for solving LMP. At every iteration of the algorithm, the proposed partitioning rule is adopted to subdivide simplices. The partition approach used in each iteration is that we first choose the longest edge of the current sub-simplices and then simultaneously subdivide all sub-simplices with the common selected longest edge. The detailed simplicial partitioning rule is given as follows:

Simplicial partitioning rule:

Input: An initial p-simplex \(S_0\).

Output: A sequence of nested partitions of \(S_0\), denoted \(\{{\mathscr {P}}_{k}| k \in {\mathbb {N}} \}\).

- Step 1: Let \({\mathscr {P}}_{0}=\{S_{0}\},\ k=0.\)

- Step 2: for \(k \in {\mathbb {N}}\) do: the nested simplicial partition \({\mathscr {P}}_{k+1}\) of \({\mathscr {P}}_{k}\) is generated by Steps 2.a and 2.b:

  - (Step 2.a) Select the longest edge \([\varvec{w},\varvec{v}]\) from \( E({\mathscr {P}}_{k})\), and let \(\varvec{t}=(\varvec{w}+\varvec{v})/2.\)

  - (Step 2.b) For every \(S_{i}\in {\mathscr {P}}_{k}\) satisfying \([\varvec{w},\varvec{v}]\in E(S_{i})\), let \(\{\varvec{u}^{1},\ldots ,\varvec{u}^{p-1}\}=V(S_{i})\backslash \{\varvec{w},\varvec{v}\}.\) Update \({\mathscr {P}}_{k+1}={\mathscr {P}}_{k}\backslash S_{i}\bigcup \{S_{i,1},S_{i,2}\},\) where \(S_{i,1}=[\varvec{w},\varvec{t},\varvec{u}^{1},\ldots ,\varvec{u}^{p-1}]\), \(S_{i,2}=[\varvec{t},\varvec{v},\varvec{u}^{1},\ldots ,\varvec{u}^{p-1}].\)

- Step 3: end for
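One refinement step of the partitioning rule can be sketched as follows. Simplices are represented as tuples of vertex tuples; this data layout, the function names `diameter` and `refine`, and the example triangle are assumptions made for illustration.

```python
import itertools
import math


def edges(S):
    """All vertex pairs (edges) of a simplex S given as a tuple of vertices."""
    return itertools.combinations(S, 2)


def diameter(partition):
    """Length of the longest edge over all simplices of the partition."""
    return max(math.dist(a, b) for S in partition for a, b in edges(S))


def refine(partition):
    """Bisect every simplex that contains the longest edge of the partition."""
    # Step 2.a: pick the longest edge [w, v] and its midpoint t.
    _, w, v = max((math.dist(a, b), a, b) for S in partition for a, b in edges(S))
    t = tuple((a + b) / 2 for a, b in zip(w, v))
    refined = []
    for S in partition:
        if w in S and v in S:
            # Step 2.b: replace S by the two children sharing the midpoint t.
            rest = tuple(u for u in S if u != w and u != v)
            refined.append((w, t) + rest)
            refined.append((t, v) + rest)
        else:
            refined.append(S)
    return refined


# Hypothetical initial 2-simplex.
P0 = [((0.0, 0.0), (2.0, 0.0), (0.0, 1.0))]
P1 = refine(P0)
P2 = refine(P1)
print(len(P1), len(P2), diameter(P0), diameter(P1))
```

Because all simplices sharing the selected edge are split at the same midpoint, neighbouring simplices keep matching faces after each step.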

From the above simplicial partition, the following lemma follows immediately.

Lemma 1

For an initial p-simplex \(S_0\), consider the above simplicial partition rule, then the following three statements are valid.

-

(i)

The sequence of nested partitions \(\{{\mathscr {P}}_{k}\}\) is exhaustive, i.e., \(\delta ({\mathscr {P}}_{k})\rightarrow 0, k\rightarrow \infty .\)

-

(ii)

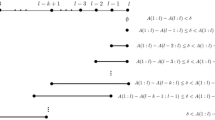

If a parameter q is selected such that \(q\in (0,\delta (S_0)]\), and let

$$\begin{aligned} \eta =\frac{\sqrt{3}}{2},\ \beta =\lceil \log _{\eta }(q/\delta (S_0))\rceil ,\ K=(p+1)^{2^{\beta }}, \end{aligned}$$then we have

$$\begin{aligned} \delta ({\mathscr {P}}_{k})\le q, \ \mathrm{for\ all }\ k\ge K. \end{aligned}$$ -

(iii)

K is a strict upper bound on the total number of vertices at the K-th iteration.

Proof

For the proof of this lemma, we can refer to Theorem 4.3 and Lemmas 4.5 and 4.9 in [29]. \(\square \)
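To get a feeling for the size of the bound in Lemma 1 (ii), the quantities \(\beta \) and K can be evaluated directly from their definitions. The helper name `iteration_bound` and the sample values p = 2, \(\delta (S_0)=1\), q = 0.5 are assumptions chosen for this illustration.

```python
import math


def iteration_bound(p, delta0, q):
    """Return (beta, K) as defined in Lemma 1 (ii).

    p: dimension of the simplex, delta0: diameter of S_0, q: target diameter.
    """
    if not 0 < q <= delta0:
        raise ValueError("q must lie in (0, delta0]")
    eta = math.sqrt(3) / 2
    # log base eta of q/delta0; both logarithms are non-positive.
    beta = math.ceil(math.log(q / delta0) / math.log(eta))
    K = (p + 1) ** (2 ** beta)
    return beta, K


beta, K = iteration_bound(p=2, delta0=1.0, q=0.5)
print(beta, K)  # beta = 5 and K = 3**32
```

Even for this small case K is of order \(10^{15}\), which shows that the bound is a worst-case guarantee rather than a prediction of practical iteration counts.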

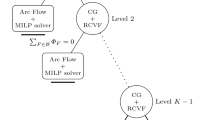

3.2 Simplicial branch-and-bound algorithm

In this subsection, we develop a simplicial branch-and-bound algorithm (SBBA) that can find a global optimal solution to LMP problem within a pre-specified \(\varepsilon \)-tolerance by combining the simplicial partitioning rule and the proposed CQP relaxation. The algorithm assumes that an initial p-simplex \(S_0\) containing \([\varvec{y}^{min},\varvec{y}^{max}]\) has been constructed by using (2.1), and it may be stated as follows.

Algorithm: SBBA

- Step 0 (Initialization): Given the convergence tolerance \(\varepsilon >0\), solve problem \(\textrm{CQP}(S_{0})\) to obtain the optimal value \(\widehat{\phi }_{S_{0}}.\) Let \(LB_{0}=\widehat{\phi }_{S_{0}}\) be the lower bound of the optimal value of \(\textrm{EP1}\). Let \(\psi (\varvec{u}^{0j_0})=\min \{\psi (\varvec{u}^{0j})\ |\ j=1,\ldots ,p+1\}\), \(\overline{\varvec{y}}^*=\varvec{u}^{0j_0}.\) Let \(\overline{UB}=\psi (\overline{\varvec{y}}^*)\) be the upper bound of the optimal value of \(\textrm{EP1}\). If \(\overline{UB}-LB_{0}\le \varepsilon \), stop, \(\overline{\varvec{y}}^*\) is the global \(\varepsilon \)-optimal solution of EP1, \(\overline{\varvec{x}}^*\) is the global \(\varepsilon \)-optimal solution of LMP problem, where \(\overline{\varvec{x}}^*=\mathop {\arg \min }_{\varvec{x}\in D}\varphi (\varvec{x},\overline{\varvec{y}}^*)\). Otherwise, set \(k=0,\ \Omega =\{S_{0}\}.\)

- Step 1: Let \(\overline{S}=\mathop {\arg \max }_{S\in \Omega }\delta (S).\) Select the longest edge \([\varvec{w},\varvec{v}]\in E(\overline{S})\) of \(\overline{S}\) satisfying \(\Vert \varvec{w}-\varvec{v}\Vert =\delta (\overline{S}).\) Let \(\varvec{t}=(\varvec{w}+\varvec{v})/2\) and calculate \(\psi (\varvec{t})\). If \(\overline{UB}>\psi (\varvec{t}),\) update \(\overline{UB}=\psi (\varvec{t}), \ \overline{\varvec{y}}^*=\varvec{t}.\)

- Step 2: For every \(S_{k}\in \Omega \) satisfying \([\varvec{w},\varvec{v}]\in E(S_{k})\), bisect each \(S_{k}\) into two new sub-simplices \(S_{k,1}, S_{k,2}\) by \(\varvec{t}\) (see details in (Step 2.b) of the simplicial partitioning rule above). Set \(H=\{S_{k,1},S_{k,2}\}.\)

- Step 3: For each simplex \(S_{k,j}(j=1,2),\) solve the corresponding problem \(\textrm{CQP}(S_{k,j})\) to obtain the optimal value \(\widehat{\phi }_{S_{k,j}}.\) Let \(LB(S_{k,j})=\widehat{\phi }_{S_{k,j}}.\) If \(LB(S_{k,j})>\overline{UB},\) set \(H=H\backslash {S_{k,j}}.\) Let \(\Omega =(\Omega \backslash S_{k})\bigcup H,\ LB_{k}=\min \{LB(S)\ | \ S\in \Omega \}.\)

- Step 4: Set \(\Omega =\Omega \backslash \{S \ |\ \overline{UB}-LB(S)\le \varepsilon ,S\in \Omega \}.\) If \(\Omega =\emptyset ,\) stop, \(\overline{\varvec{y}}^*\) is the global \(\varepsilon \)-optimal solution of EP1, and \(\overline{\varvec{x}}^*\) is the global \(\varepsilon \)-optimal solution of LMP problem, where \(\overline{\varvec{x}}^*=\mathop {\arg \min }_{\varvec{x}\in D}\varphi (\varvec{x},\overline{\varvec{y}}^*)\); Otherwise, set \(k=k+1\) and go to Step 1.
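The control flow of Steps 0 to 4 can be sketched in a few lines. This is only an illustration of the loop structure, not the authors' implementation: the callables `psi` and `lower_bound` are hypothetical stand-ins for \(\psi (\varvec{y})\) and for the optimal value of CQP(S), both of which require a QP solver in the actual algorithm. The one-dimensional toy problem at the end, with \(\psi (y)=(y-0.3)^2\) and an exact interval lower bound, is an assumption used only to exercise the loop.

```python
import itertools
import math


def longest_edge(S):
    """Return (length, w, v) for the longest edge of simplex S."""
    return max((math.dist(w, v), w, v) for w, v in itertools.combinations(S, 2))


def sbba_sketch(S0, psi, lower_bound, eps=1e-6, max_iter=10000):
    """Illustrative loop following Steps 0-4; psi and lower_bound come from the caller."""
    # Step 0: upper bound from the vertices, lower bound from the relaxation.
    ub, y_best = min((psi(u), u) for u in S0)
    omega = {S0: lower_bound(S0)}  # active simplices with their lower bounds
    if ub - omega[S0] <= eps:
        return y_best, ub
    for _ in range(max_iter):
        # Step 1: midpoint of the longest edge among the active simplices.
        _, w, v = max(longest_edge(S) for S in omega)
        t = tuple((a + b) / 2 for a, b in zip(w, v))
        value = psi(t)
        if value < ub:
            ub, y_best = value, t
        # Steps 2-3: bisect every active simplex that contains the edge [w, v].
        for S in [S for S in omega if w in S and v in S]:
            del omega[S]
            rest = tuple(u for u in S if u != w and u != v)
            for child in ((w, t) + rest, (t, v) + rest):
                lb = lower_bound(child)
                if lb <= ub:  # discard children with LB > UB
                    omega[child] = lb
        # Step 4: remove simplices whose gap is within the tolerance.
        omega = {S: lb for S, lb in omega.items() if ub - lb > eps}
        if not omega:
            break
    return y_best, ub


# Toy problem (assumption): p = 1, psi(y) = (y - 0.3)^2 on the interval [0, 1].
def psi_toy(y):
    return (y[0] - 0.3) ** 2


def lb_toy(S):
    a, b = sorted(u[0] for u in S)
    return 0.0 if a <= 0.3 <= b else min((a - 0.3) ** 2, (b - 0.3) ** 2)


y_best, ub = sbba_sketch(((0.0,), (1.0,)), psi_toy, lb_toy)
print(y_best, ub)
```

Storing the lower bound next to each active simplex avoids re-solving a relaxation when Step 4 filters \(\Omega \).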

3.3 Convergence and complexity of SBBA

In this subsection, we present the new convergence result of the proposed SBBA and estimate its computational complexity.

Lemma 2

For any \(\varvec{y}\in S_0=[\varvec{u}^{0,1},\ldots ,\varvec{u}^{0,p+1}]\), there exists a positive constant \(\alpha \) such that \(\Vert \varvec{y}\Vert \le \alpha ,\) where

Proof

For any \(\varvec{y}\in S_0=[\varvec{u}^{0,1},\ldots ,\varvec{u}^{0,p+1}]\), there exists \(\varvec{\lambda }\) satisfying

Then, for the i-th component \({y}_{i}\) of \(\varvec{y}\), \(i\in \{1,\ldots ,p\}\), we have

From (2.1), the i-th component of the \((i+1)\)-th vertex \(\varvec{u}^{0,i+1}\) of \(S_0\) satisfies

and for the i-th component of the j-th vertex \(\varvec{u}^{0,j}\) of \(S_0\), we have

According to the above relation and (3.1), it holds that

Further, we have

Thus, the proof is finished. \(\square \)

Theorem 6

Given \(\varepsilon >0\), let \(f^*\) be the optimal value of LMP problem. At the k-th iteration, if the sequence of simplicial partitions \(\{{\mathscr {P}}_{k}\}\) satisfies that \(\delta ({\mathscr {P}}_{k})\le \varepsilon /\alpha \), where \(\alpha \) is given by Lemma 2, then the proposed SBBA stops and returns an \(\varepsilon \)-optimal solution of LMP problem in the sense that

Proof

At the k-th iteration, let \(\widehat{S}_k=\mathop {\arg \min }_{S\in \Omega }LB(S)\). By Step 3 of SBBA, it follows that \(LB_k=LB(\widehat{S}_k)=\widehat{\phi }_{\widehat{S}_k}.\) Assume that the set of all vertices of the simplex \(\widehat{S}_k\) is \(\{\widehat{\varvec{u}}^{k1},\ldots ,\widehat{\varvec{u}}^{k,p+1}\}.\) From Lemma 2 we have \(\Vert \widehat{\varvec{u}}^{kj}\Vert \le \alpha \) for each \(j\in \{1,\ldots ,p+1\}\). This implies that

Similar to the proof of Theorem 5, by using (3.2) and (2.25) we obtain

Let \(\psi (\widehat{\varvec{u}}^{kj_{0}})=\min \limits _{1\le j\le p+1}\psi (\widehat{\varvec{u}}^{kj}),\) and from the above inequality it follows that

Furthermore, since \(\overline{UB}\) is updated by the value of \(\psi (\varvec{y})\) at the vertices of the simplices, we have

If the sequence of simplicial partitions \(\{{\mathscr {P}}_{k}\}\) satisfies that \(\delta ({\mathscr {P}}_{k})\le \varepsilon /\alpha \), we see from \(\widehat{S}_k\in {\mathscr {P}}_{k}\) that \(\delta (\widehat{S}_k)\le \varepsilon /\alpha \). According to Steps 3 and 4 of the algorithm, using (3.3) we get for any \(S\in \Omega \)

Thus the algorithm stops. Let \(\psi ^*\) denote the optimal value of EP1. From the algorithm we have \(\overline{UB}=\psi (\overline{\varvec{y}}^*)\), and it follows from (3.4) that

Therefore, \(\overline{\varvec{y}}^*\) is the global \(\varepsilon \)-optimal solution of EP1. Now, let us consider problems LMP and EP1 again. Denote \(\overline{\varvec{x}}^*=\mathop {\arg \min }_{\varvec{x}\in D}\varphi (\varvec{x},\overline{\varvec{y}}^*)\). The definition of \(\psi (\varvec{y})\) implies that \(\varphi (\overline{\varvec{x}}^*,\overline{\varvec{y}}^*)=\psi (\overline{\varvec{y}}^*).\) According to (2.3) in the proof of Theorem 1, for any \(\overline{\varvec{x}}^*\in D,\ \overline{\varvec{y}}^*\in S_0\), we have

Using Theorems 1 and 2 it holds that \(f^*=\psi ^*\). Further, from (3.5) we obtain

So \(\overline{\varvec{x}}^*\) is the \(\varepsilon \)-optimal solution of LMP problem, and the proof of the theorem is completed. \(\square \)

Next, by using Lemma 1 we can summarize the result on the worst-case complexity of the proposed SBBA. Let \(T(a_1,a_2)\) be the time taken to solve a CQP problem with \(a_1\) variables and \(a_2\) constraints. Based on the above analysis, we have the following results.

Theorem 7

For sufficiently small \(\varepsilon >0\) satisfying \(\varepsilon /\alpha \in (0,\delta (S_0)]\), let

then the complexity of the proposed SBBA for solving \(\textrm{LMP}\) problem is bounded above by

where \(\alpha \) is given in Lemma 2, and \(C_{\widehat{K}}^{p+1}=\widehat{K}!/((\widehat{K}-p-1)!(p+1)!).\)

Proof

Since the sufficiently small \(\varepsilon >0\) satisfies \(\varepsilon /\alpha \in (0,\delta (S_0)]\), we see from Lemma 1 that after at most \(\widehat{K}\) iterations the sequence of simplicial partitions \(\{{\mathscr {P}}_{k}\}\) satisfies \(\delta ({\mathscr {P}}_{k})\le \varepsilon /\alpha \). According to Theorem 6, SBBA finds the \(\varepsilon \)-optimal solution of LMP in at most \(\widehat{K}\) iterations. Additionally, Lemma 1 also indicates that after \(\widehat{K}\) iterations the total number of vertices of all simplices S is strictly less than \(\widehat{K}\). Note that each p-dimensional simplex has \(p+1\) vertices. Thus, after \(\widehat{K}\) iterations the number of all simplices S is strictly less than \(C_{\widehat{K}}^{p+1}\). This implies that the total number of CQP(S) problems associated with these simplices S is also strictly less than \(C_{\widehat{K}}^{p+1}\). Moreover, we also notice that the main computation cost is to estimate \(\psi (\varvec{t})\) (see Step 1) and to acquire \({\widehat{\phi }_{S}}\) (see Step 3) at each iteration of SBBA, both of which are obtained by solving different CQP problems. Hence, the complexity of the proposed SBBA for solving \(\textrm{LMP}\) is bounded above by

and the proof is completed. \(\square \)

4 Numerical experiment

In this section, we report the numerical comparison for the proposed SBBA, the two algorithms in [24, 25] and the global optimization software BARON [30]. All the experiments were implemented in Matlab (2018a) and run on a PC (Intel(R) Core(TM) i7-7700 M CPU, 3.6 GHz). The linear programming problems in [24, 25] were solved by the LP solver linprog in Matlab Optimization Toolbox, and CQP problems were solved by the QP solver in CPLEX [31].

In computational experiments, the tolerance is set as \(\varepsilon =10^{-6}\), and the average numerical results are listed in Tables 1 and 2. Some notations in Tables 1 and 2 are given as follows:

- Opt.val: the average optimal value obtained by the algorithm for five test instances;

- CPU: the average CPU time of the algorithm in seconds for five test instances;

- Iter: the average number of iterations of the algorithm for five test problems;

- “−” denotes that the algorithm fails to find the solution within 3600 s for all instances.
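The table entries defined above could be computed from per-instance results along the following lines. This is a sketch under stated assumptions rather than the authors' script: the function `summarize` and the tuple layout are hypothetical, and averaging over the solved instances when only some runs hit the time limit is an assumption, since the text specifies only the case in which all instances fail.

```python
from statistics import mean

TIME_LIMIT = 3600.0  # seconds; the cutoff stated in the text
FAILED = "−"         # table marker used when every instance hits the limit


def summarize(runs):
    """Aggregate per-instance results into one table row.

    `runs` is a list with one entry per test instance: either a tuple
    (optimal_value, cpu_seconds, iterations) or None if the run did not
    finish within TIME_LIMIT. Layout and name are illustrative only.
    """
    solved = [r for r in runs if r is not None]
    if not solved:
        # All instances failed: report the marker in every column.
        return {"Opt.val": FAILED, "CPU": FAILED, "Iter": FAILED}
    # Assumption: averages are taken over the solved instances only.
    return {
        "Opt.val": mean(r[0] for r in solved),
        "CPU": mean(r[1] for r in solved),
        "Iter": mean(r[2] for r in solved),
    }


if __name__ == "__main__":
    # Made-up numbers, used purely to exercise the function.
    print(summarize([(-1.0, 2.0, 10), (-3.0, 4.0, 20)]))
    print(summarize([None] * 5))
```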

We first consider the following rank-one nonconvex quadratic programming problem (see [32]):

where the components of \(\varvec{c}_{1}, \varvec{c}_{2}\in R^{n}\) are randomly generated in \([-1,1]\), and all elements of \(\varvec{A}\in R^{m\times n}\) and \(\varvec{b}\in R^{m}\) are random numbers in the interval [0.1, 1]. This problem is known to be NP-hard [33].

For each setting (n, m), we tested the proposed SBBA, the solver BARON and the algorithms in [24, 25] on five randomly generated instances of (P1); Table 1 lists the average performance of the four methods. As can be seen from Table 1, BARON is the least efficient of the four methods and solves only problems with \(n<200\). Also, for each solved instance, Table 1 shows that BARON takes the longest CPU time and that the algorithms in [24, 25] require much more CPU time than SBBA; their CPU time also grows much faster than that of SBBA as (n, m) increases. Moreover, the algorithm in [25] fails to find the global solutions within 3600 s for large-scale problems with \((n,m)=(5000,500)\).

Next, we further tested the four methods (SBBA, BARON and those in [24, 25]) on the following problem:

where all elements of \(\varvec{c}_{i}, \varvec{d}_{i}\in R^{n}\) and \(c_{0i}, d_{0i}\in R\) are randomly generated in the interval \([-0.5,0.5]\), and the elements of \(\varvec{A}\in R^{m\times n}\) and \(\varvec{b}\in R^{m}\) are random numbers in [0.1, 1].
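The random data described above can be drawn as in the following sketch. It is illustrative only and uses the Python standard library rather than the Matlab setup of the experiments; the names `random_instance` and `objective` are hypothetical. Uniform sampling is assumed, since the text says only "randomly generated", and the objective is assumed to be the usual LMP form \(\sum _{i=1}^{p}(\varvec{c}_{i}^{T}\varvec{x}+c_{0i})(\varvec{d}_{i}^{T}\varvec{x}+d_{0i})\).

```python
import random


def random_instance(n, m, p, coef_range=(-0.5, 0.5), seed=0):
    """Draw the data of one test instance (hypothetical helper).

    Objective coefficients are sampled uniformly from coef_range and the
    entries of A and b uniformly from [0.1, 1], as described in the text.
    Uniform sampling is an assumption.
    """
    rng = random.Random(seed)
    lo, hi = coef_range

    def vec(k, a, b):
        return [rng.uniform(a, b) for _ in range(k)]

    return {
        "c": [vec(n, lo, hi) for _ in range(p)],
        "d": [vec(n, lo, hi) for _ in range(p)],
        "c0": vec(p, lo, hi),
        "d0": vec(p, lo, hi),
        "A": [vec(n, 0.1, 1.0) for _ in range(m)],
        "b": vec(m, 0.1, 1.0),
    }


def objective(inst, x):
    """Evaluate the assumed objective sum_i (c_i^T x + c_0i)(d_i^T x + d_0i)."""
    def dot(u, v):
        return sum(a * b for a, b in zip(u, v))

    return sum(
        (dot(c, x) + c0) * (dot(d, x) + d0)
        for c, d, c0, d0 in zip(inst["c"], inst["d"], inst["c0"], inst["d0"])
    )


if __name__ == "__main__":
    inst = random_instance(n=100, m=10, p=2)
    print(objective(inst, [0.0] * 100))
```

Data for (P1) can be drawn in the same way by passing `coef_range=(-1.0, 1.0)` and keeping only the linear coefficient vectors.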

In the computational tests for problem (P2), the number of summation terms p varies from 2 to 4 and the dimension (n, m) increases from (100, 10) to (5000, 500). We observe from Table 2 that BARON takes the longest CPU time among the four methods for each solved instance. One advantage of BARON, however, is that it seems to be insensitive to p, although the largest instance of problem (P2) solved by it has \((n,m)=(800,80)\). Moreover, except for the proposed SBBA, the other methods fail to find the global optimal solution in some cases. For example, for the case \((n,m)>(1500,150)\) with \(p=3\), the algorithm in [24] fails to find the global solution within 3600 s, and the approach in [25] fails to terminate in 3600 s when \((n,m)>(3000,30)\) with \(p=2\).

By contrast, SBBA is more efficient than the other algorithms (BARON and those in [24, 25]) for instances with a small number of summation terms, say \(p<5\), and SBBA can successfully solve higher-dimensional problems when p is small. For example, SBBA solves the instances with \((n,m,p) = (5000,500,2)\) in hundreds of seconds. As with the methods in [24, 25], the disadvantage of SBBA is that its CPU time increases rapidly with the number of summation terms p.

5 Conclusions

In this paper, we first lift the LMP problem to an equivalent nonlinear optimization problem EP1 by using reformulation techniques. A new convex quadratic relaxation is then constructed to provide a lower bound on the optimal value of EP1. Combining the simplicial branching rule with the new relaxation, we present a simplicial branch-and-bound algorithm for globally solving the LMP problem. Additionally, we establish the convergence of the proposed algorithm and analyze its computational complexity. Finally, numerical results demonstrate that the proposed algorithm is more efficient than the other algorithms compared on the tested instances.

Several issues are left open. First, is it possible, and if so how, to obtain a tighter lower bound for LMP than the one provided by the CQP relaxation? A deeper investigation in this direction would help to establish the theoretical basis of nonlinear relaxations. Second, can we develop similar nonlinear relaxations for other hard optimization problems that are stronger than the CQP relaxation, and how can the resulting relaxations be solved effectively? More study is needed to address these issues.

References

Mulvey, J.M., Vanderbei, R.J., Zenios, S.A.: Robust optimization of large-scale systems. Oper. Res. 43, 264–281 (1995)

Bennett, K.P., Mangasarian, O.L.: Bilinear separation of two sets in n-space. Comput. Optim. Appl. 2, 207–227 (1993)

Maranas, C.D., Androulakis, I.P., Floudas, C.A., et al.: Solving long-term financial planning problems via global optimization. J. Econ. Dyn. Control 21, 1405–1425 (1997)

Cambini, A., Martein, L.: Generalized convexity and optimization: theory and applications. Lect. Notes Econ. Math. Syst. 616(18), 311–318 (2009)

Cambini, R., Sodini, C.: On the minimization of a class of generalized linear functions on a flow polytope. Optimization 63(10), 1449–1464 (2014)

Cambini, R., Sodini, C.: A unifying approach to solve some classes of rank-three multiplicative and fractional programs involving linear functions. Eur. J. Oper. Res. 207(1), 25–29 (2010)

Dorneich, M.C., Sahinidis, N.V.: Global optimization algorithms for chip design and compaction. Eng. Optim. 25(2), 131–154 (1995)

Benson, H.P.: Global maximization of a generalized concave multiplicative function. J. Optim. Theory Appl. 137, 105–120 (2008)

Tuy, H.: Convex Analysis and Global Optimization. Springer, Cham (2016)

Konno, H., Kuno, T., Yajima, Y.: Global minimization of a generalized convex multiplicative function. J. Glob. Optim. 4(1), 47–62 (1994)

Schaible, S., Sodini, C.: Finite algorithm for generalized multiplicative programming. J. Optim. Theory Appl. 87(2), 441–455 (1995)

Konno, H., Kuno, T.: Linear multiplicative programming. Math. Program. 56, 51–64 (1992)

Zhou, X.G., Wu, K.: A method of acceleration for a class of multiplicative programming problems with exponent. J. Comput. Appl. Math. 223, 975–982 (2009)

Wang, C.F., Liu, S.Y., Shen, P.P.: Global minimization of a generalized linear multiplicative programming. Appl. Math. Model. 36, 2446–2451 (2012)

Jiao, H.W., Liu, S.Y., Chen, Y.Q.: Global optimization algorithm for a generalized linear multiplicative programming. J. Appl. Math. Comput. 40, 551–568 (2012)

Shen, P.P., Duan, Y.P., Ma, Y.: A robust solution approach for nonconvex quadratic programs with additional multiplicative constraints. Appl. Math. Comput. 201(1–2), 514–526 (2008)

Wu, H.Z., Zhang, K.C.: A new accelerating method for global non-convex quadratic optimization with non-convex quadratic constraints. Appl. Math. Comput. 197(2), 810–818 (2008)

Luo, H.Z., Bai, X.D., Lim, G., et al.: New global algorithms for quadratic programming with a few negative eigenvalues based on alternative direction method and convex relaxation. Math. Program. Comput. 11, 119–171 (2019)

Tuy, H., Nghia, N.D.: Reverse polyblock approximation for generalized multiplicative/fractional programming. Vietnam J. Math. 31(4), 391–402 (2003)

Cambini, R., Sodini, C.: Global optimization of a rank-two nonconvex program. Math. Methods Oper. Res. 71(1), 165–180 (2010)

Cambini, R., Venturi, I.: A new solution method for a class of large dimension rank-two nonconvex programs. IMA J. Manag. Math. 32(2), 115–137 (2021)

Cambini, R.: Underestimation functions for a rank-two partitioning method. Decis. Econ. Finance 43(2), 465–489 (2020)

Wang, C.F., Bai, Y.Q., Shen, P.P.: A practicable branch-and-bound algorithm for globally solving linear multiplicative programming. Optimization 66(3), 1–9 (2017)

Shen, P.P., Wang, K.M., Lu, T.: Outer space branch and bound algorithm for solving linear multiplicative programming problems. J. Glob. Optim. 78, 453–482 (2020)

Shen, P.P., Wang, K.M., Lu, T.: Global optimization algorithm for solving linear multiplicative programming problems. Optimization 71(6), 1421–1441 (2022). https://doi.org/10.1080/02331934.2020.1812603

Shen, P.P., Huang, B.D.: Global algorithm for solving linear multiplicative programming problems. Optim. Lett. 14, 693–710 (2020)

Zhao, Y.F., Yang, J.J.: Inner approximation algorithm for generalized linear multiplicative programming problems. J. Inequalities Appl. 2018, 354 (2018). https://doi.org/10.1186/s13660-018-1947-9

Zhao, Y.F., Liu, S.Y.: An efficient method for generalized linear multiplicative programming problem with multiplicative constraints. Springerplus 5(1), 1302 (2016)

Dickinson, P.J.: On the exhaustivity of simplicial partitioning. J. Glob. Optim. 58(1), 189–203 (2014)

Sahinidis, N.V.: BARON: a general purpose global optimization software package. J. Glob. Optim. 8(2), 201–205 (1996)

IBM ILOG CPLEX. IBM ILOG CPLEX 12.3 User’s Manual for CPLEX, 89 (2011)

Goyal, V., Genc-Kaya, L., Ravi, R.: An FPTAS for minimizing the product of two non-negative linear cost functions. Math. Program. 126, 401–405 (2011)

Matsui, T.: NP-hardness of linear multiplicative programming and related problems. J. Glob. Optim. 9, 113–119 (1996)

Acknowledgements

The authors are grateful to the editor and the anonymous referees for their valuable comments and suggestions, which have helped to substantially improve the presentation of this paper.

This work is supported by NSFC (Grant Numbers: 12071133; 11871196; 11671122).

About this article

Cite this article

Shen, P., Wu, D. & Wang, K. Globally minimizing a class of linear multiplicative forms via simplicial branch-and-bound. J Glob Optim 86, 303–321 (2023). https://doi.org/10.1007/s10898-023-01277-w

DOI: https://doi.org/10.1007/s10898-023-01277-w

Keywords

- Linear multiplicative programming

- Global optimization

- Simplicial branching

- Convex quadratic relaxation

- Branch-and-bound