Abstract

We introduce a variant of the knapsack problem, in which the weights of items are also variables but must satisfy a system of linear constraints, and the capacity of knapsack is given and known. We discuss two models: (1) the value of each item is given; (2) the value-to-weight ratio of each item is given. The goal is to determine the weight of each item, and to find a subset of items such that the total weight is no more than the capacity and the total value is maximized. We provide several practical application scenarios that motivate our study, and then investigate the computational complexity and corresponding algorithms. In particular, we show that if the number of constraints is a fixed constant, then both problems can be solved in polynomial time. If the number of constraints is an input, then we show that the first problem is NP-Hard and cannot be approximated within any constant factor unless \(\mathrm{P}= \mathrm{NP}\), while the second problem is NP-Hard but admits a polynomial time approximation scheme. We further propose approximation algorithms for both problems, and extend the results to multiple knapsack cases with a fixed number of knapsacks and identical capacities.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The knapsack problem is one of the most fundamental and well-known combinatorial optimization problems. Given a set of items, each with a weight and a value, the goal of the knapsack problem is to find a subset of items whose total weight does not exceed a given capacity and the total value is maximized. In the classical problem, the weights and values are known and independent of the decision. However, in practice, the parameters could be unknown or part of the decisions.

In this paper, we introduce a variant of the knapsack problem called the “knapsack under linear constraints” (KLC) problem. We present two models in which the weights of items are part of the decisions, and they must satisfy a set of linear constraints. In the first model, the value of each item is given, while in the second model, the value-to-weight ratio of each item is given. The goal of the KLC problem is to decide the weight of each item such that the linear constraints are satisfied, and to choose a subset of items to maximize the total value while not exceeding the knapsack’s capacity. In the following, we first show a few practical applications that motivate the study of the KLC problem.

-

1.

Hiring and Salary Determination Problem Suppose that a company wants to hire some employees to meet certain objectives. The human resources department needs to determine the anticipated salaries for the candidates and make the hiring decisions. Each candidate is associated with a value according to his/her proficiency and ability, and there are several constraints on the salaries. There is also a total budget for the hiring. An example is described in Table 1. To explain this example, let \(x_i\) be the salary of candidate i. For instance, the total salaries of IT department cannot exceed 10,000: \(x_1 + x_2 + x_3 \le 10,\!000\); the salary of candidate 1 needs to be within a range: \(3500 \le x_1 \le 5000\). Furthermore, there are some other constraints, e.g., the salary of candidate 1 is at most 1 / 3 of the entire department: \(x_1/(x_1 + x_2 + x_3) \le 1/3\), or equivalently, \(2x_1 - x_2 - x_3 \le 0\). The decision maker has a value for each candidate, which is given in the last row in Table 1. After deciding the anticipated salaries, the decision maker selects some of the candidates such that the total salary is no more than \(30,\!000\) and the total value is maximized. The above-described problem can be formulated as a knapsack problem under linear constraints, in which the values of items are known and the item weights (salaries) are determined by linear constraints.

-

2.

Industrial Production Problem Suppose that a factory wants to produce several products. The decision maker needs to decide which product to produce, and also the weight/size of each product, which needs to satisfy several linear constraints. In addition, the factory has a limited space to store the products. The values of products usually depend on their weights, therefore the information available to the decision maker is the value-to-weight ratios of the potential products. A concrete example is given in Table 2. In Table 2, the first few rows represent the linear constraints that the weights of products have to satisfy. To explain the example in Table 2, let \(x_i\) be the weight of product i. For example, each unit weight of product 1, 2,...,n costs 200, 300,...,100, respectively. There is a budget limitation for the decision maker, say no more than 10,000. Therefore, the weights are required to satisfy the linear inequality: \(200x_1 + 300x_2 + \cdots + 100 x_n \le 10,000\). Also each product consumes certain ingredients, which also have limitations. For instance, each unit weight of product 1, 2,...,n needs 2, 1,...,4 units of ingredient A, respectively, while the amount of A available is 56. Therefore, the weights are required to satisfy the linear inequality: \(2x_1 + x_2 + \cdots + 4 x_n \le 56\). Also, due to the capacity of the storage, the total weights of items cannot be more than 50. The decision maker has estimated value-to-weight ratios for each product, which are described in the last row in Table 2. For example, if \(x_1\) units of product 1 are produced and selected, then a gained value of 3\(x_1\) is expected. The whole problem can be formulated as a knapsack problem under linear constraints, in which the value-to-weight ratios are known and the item weights are determined by several linear constraints.

There are some variants of the knapsack problem in the literature in which the values or weights are uncertain or unknown, e.g., the stochastic knapsack problem [3, 6] and the robust knapsack problem [2, 13]. In the stochastic knapsack problem, the weight of each item is associated with a probability distribution, while the value and the capacity are given and fixed. The goal is to maximize the value of selected items while having some probabilistic guarantee on the weights. In the robust knapsack problem, the weight of each item is unknown but belongs to a given interval, and the objective is to find a robust solution under certain performance criterions. These problems are different from the KLC problem in that the item weights in the former problems are still exogenously given, whereas they are part of decisions in the KLC problem.

In this paper, we study the two models of the KLC problem proposed earlier, and investigate their computational complexity and corresponding algorithms. First, we show that when the number of constraints is a fixed constant, both the two models can be solved in polynomial time. When the number of constraints is also an input, we show that the first model (the value of each item is given) is NP-Hard and cannot be approximated within any constant factor unless \(\mathrm{P}= \mathrm{NP}\). Nevertheless, we propose a simple \(\frac{1}{n}\)-approximation algorithm for it. For the second model (the value-to-weight ratio of each item is given), we show that it is also NP-Hard but can be approximated within some constant factors. Particularly, we propose two approximation algorithms, a \(\frac{1}{2}\)-approximation algorithm and a polynomial time approximation scheme (PTAS) for this case. Finally, we extend these results to the multiple identical knapsack case.

Although the two models of the KLC problem are seemingly similar, our results reveal that they have different computational complexity. In addition, our results suggest that the KLC problem could be a simpler or harder problem than the classical knapsack problem, depending on the setting. This is in the same spirit as the result in [14], which studied a scheduling problem where the job processing times satisfy a set of linear constraints.

The remainder of this paper is organized as follows: In Sect. 2, we give a formal definition of the KLC problem and the two models studied in this paper, and briefly review some related results. In Sect. 3, we study the case when the number of constraints is a fixed number. In Sect. 4, we discuss the case with an arbitrary number of constraints. We first study the model in which the values are given in Sect. 4.1, and then consider the model in which the value-to-weight ratios are given in Sect. 4.2. In Sect. 5, we extend the results to the case with more than one knapsack. We provide some concluding remarks in Sect. 6.

2 Preliminary

We first formally define the knapsack problem under linear constraints as follows:

Definition 1

There are n items \(I = \{1,...,n\}\), a knapsack with capacity W and k linear inequalities. Each item has a nonnegative value (or a value-to-weight ratio). The goal of the knapsack problem under linear constraints (KLC) is to determine the weights of the items satisfying the linear inequalities, and to find a subset of them with total weight at most W such that the total value is maximized.

In our research, we study two settings of the KLC problem. In the first model, the value of each item is given. We call it the KLC1 problem and denote \(x_j\), \(v_j\) as the weight and the value of item j, respectively. The weights \(\varvec{x} = (x_1, \ldots , x_n)\) should satisfy \( A\varvec{x} \le \varvec{b}, x_j \ge 0, \) where \(A \in \mathbb {R}^{k \times n}\) and \(\varvec{b}\in \mathbb {R}^{k \times 1}\). Then the KLC1 problem can be formulated as the following optimization problem:

where \(y_{j} = 1\) indicates that item j is selected to the knapsack. For ease of exposition, we assume that in the KLC1 problem, the items are sorted in a non-increasing order based on the value \(v_j\). In the second model, the value-to-weight ratio of each item is given. We call it the KLC2 problem. We denote \(u_j\) as the value-to-weight ratio of item j. Using the same notation, the value of item j is thus \(u_jx_j\), and the problem can be formulated as follows:

Similarly, we assume that the items are sorted in a non-increasing order based on the value-to-weight ratios \(u_i\) in the KLC2 problem.

It is obvious that both the two problems are NP-Hard in general, since we can choose the linear constraints such that the weights are uniquely determined. Therefore, the KLC problem is a generalization of the knapsack problem, which is known to be NP-Hard [7]. Moreover, we can see that both the two problems can be formulated as a mixed integer (binary) bilinear programming problem, which is NP-Hard and extremely hard to solve in general [1, 4, 8, 12]. Nevertheless, our problems have specific structures, and as we will show later, some cases of the KLC problem can be solved in polynomial time or be approximated.

Before we end this section, we briefly review the existing results about the knapsack problem. The knapsack problem is one of the most fundamental and well-studied problems in operations research and computer science literature. This problem is NP-Hard and can be solved in pseudo-polynomial time by dynamic programming [7, 11]. In addition, there is a well-known greedy algorithm for the knapsack problem, in which the items are selected as much as possible in a non-decreasing order of the value-to-weight ratio, and then compare it to the item with maximum value and choose the better one. This is an \(\frac{1}{2}\)-approximation algorithm and it can be improved to a PTAS by incorporating the partial enumeration technique [15]. The first fully polynomial time approximation scheme (FPTAS) is proposed in [10]. The multiple knapsack problem is a generalization of the knapsack problem in which there are more than one knapsacks. It is well-known that there is no FPTAS for the multiple knapsack problem even if the number of knapsacks is two and the capacities are identical unless \(\mathrm{P}= \mathrm{NP}\) [5]. The \(\frac{1}{2}\)-approximation algorithm for the knapsack problem can be generalized to the multiple knapsack case with the same performance ratio [11]. A natural approach is to pack the knapsack successively by the algorithm for classical knapsack problem [5, 16]. Moreover, a PTAS for the multiple knapsack problem is proposed in [5]. For more details, we refer the readers to the survey by Kellerer et al. [11]

3 Fixed number of constraints

We first consider the case where k, the number of constraints, is a fixed constant. In this case, we show that both the KLC1 and the KLC2 problem can be solved in polynomial time, although it is well-known that the knapsack problem is NP-Hard [7]. To establish this result, we first state the following lemma:

Lemma 1

Both the KLC1 and the KLC2 problems have an optimal solution in which at most \(k + 1\) items with nonzero weights are selected.

Proof

We first prove the lemma for the KLC2 problem. We show that given any optimal solution to the KLC2 problem, we can find an optimal solution that has at most \(k + 1\) items with nonzero weights. Suppose we have an optimal solution to (KLC2) with \(I'\subseteq I\) being the subset of selected items. We can fix the \(y_j\) in (KLC2), that is, \(y_j = 1\) if \(j \in I'\) and 0 otherwise. Then we obtain the following linear program:

It is clear that the optimal solution to (LP2) is also an optimal solution to the KLC2 problem, since the optimal solution to the KLC2 problem is a feasible solution to (LP2). By the property of linear programming, the optimal basic solution to (LP2) has at most \(k + 1\) nonzero \(x_j\)s, thus the lemma holds.

For the KLC1 problem, a similar argument holds. The only change is to replace the objective function \(\sum \nolimits _{j\in I'} u_{j}x_{j}\) of (LP2) by \(\sum \nolimits _{j\in I'} v_{j}x_{j}\), we denote the corresponding linear program by (LP1). \(\square \)

By Lemma 1, when the number of constraints k is a fixed constant, we can find the optimal solution to the KLC1 problem and the KLC2 problem in polynomial time by enumeration. We describe the detail in Algorithm 1. During the execution of the algorithm, we solve the linear program (LP1) for the KLC1 problem, and solve the linear program (LP2) for the KLC2 problem.

Theorem 1

Algorithm 1 returns an optimal solution to the KLC1 problem and the KLC2 problem, and its computational complexity is \(O(n^{k + 1}L)\), in which L is the input length of the problem.

Proof

The optimality follows from Lemma 1 and the fact that during the enumeration procedure there must be a set \(I'\) with the same items as an optimal solution. Then when we solve (LP1)/(LP2) with that selection, an optimal solution will be obtained.

Now we study the running time of Algorithm 1. There are at most \(O(n^{k + 1})\) enumerations. During each enumeration, we need to solve one linear program, which has \(k + 1\) variables and the same number of constraints. The running time for solving the linear program is \(O((k + 1)^{3}L)\) (see [18]). Therefore, Algorithm 1 requires \(O\left( n^{k + 1}L\right) \) operations in total.\(\square \)

4 Arbitrary number of constraints

In this section, we discuss the case where the number of constraints k is an input of instance. We first study the KLC1 problem, and then consider the KLC2 problem.

4.1 The KLC1 problem

We first present a simple \(\frac{1}{n}-\)approximation algorithm for the KLC1 problem with an arbitrary number of constraints. The algorithm simply finds the feasible solutions of some linear programs in which a single item is selected, and then returns the best result.

Theorem 2

The KLC1 problem admits an \(\frac{1}{n}\)-approximation algorithm.

Proof

The idea of the algorithm is simple. We return the item j which has the maximum value \(v_j\) among all items that are feasible to the following linear inequalities:

If there is no j such that (1) is feasible, then we claim that the problem is infeasible. By the design of the algorithm, \(v_j\) is the item that has the maximum value in any feasible solution to the KLC1 problem, thus we have \(nv_j \ge OPT\) where OPT is the optimal value of the original problem. Therefore, the KLC1 problem has an \(\frac{1}{n}\)-approximation algorithm. \(\square \)

The following theorem states that finding an approximation algorithm that is slightly better than this trivial algorithm seems to be a difficult task.

Theorem 3

There is no \(\frac{1}{n^{1-\epsilon }}\)-approximation algorithm for the KLC1 problem for any fixed \(\epsilon > 0\), unless \(P = NP\).

Proof

We establish the inapproximability result by a gap-preserving reduction from the maximum independent set problem [7].

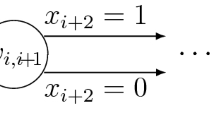

Independent Set Problem: Given a graph \(G = (V, E)\) and an integer K, to decide whether there is a vertex set \(V' \in V\) with size at least K such that no two vertices in \(V'\) are adjacent.

Given an instance of the independent set problem, we construct an instance of KLC1 with n items, where \(n = |V|\). We associate item j to vertex \(j \in V\), for \(j = 1,...,n\), and its weight is denoted by \(x_j\). The weights of items have to satisfy \(n + |E|\) linear constraints that are stated in the second and third constraints in (2). The value is \(v_j = 1\) for each item j, and the capacity of the knapsack W is 0. We will show that \(G = (V, E)\) has an independent set with size at least K if and only if there is a feasible solution to the following mathematical programming problem:

such that the total value \(\sum \nolimits ^{n}_{j = 1} y_{j} \ge K\), where \(y_j = 1\) means that the item j is selected. If this holds, then because the maximum independent set problem does not admit an \(\frac{1}{n^{1-\epsilon }}\)-approximation algorithm for any fixed \(\epsilon > 0\) unless \(P = NP\) [9, 17], the theorem will hold.

We first prove the “only if” part, assume that G has an independent set \(V'\) with size at least K, then we set \(x_j = 0\) and \(y_j = 1\) for each vertex \(j \in V'\) and \(x_j = 1\) and \(y_j = 0\) otherwise. Since the size of \(V'\) is at least K, we have the total value \(\sum \nolimits ^{n}_{j = 1} y_{j} = \sum \nolimits _{j \in V'} y_{j} \ge K\). Note that \(x_j = 1 - y_j\) and \(V'\) is an independent set, it can be verified that the constraints in (2) are satisfied. Therefore, (2) has a feasible solution with total value at least K.

Now we prove the “if” part. Assume there exists a feasible solution to (2) with total value at least K. Define the vertex set \(V' = \{j : y_j = 1, j = 1,...,n\}\subseteq V\), and it follows that the size of \(V'\) is \(\sum \nolimits ^{n}_{j = 1} y_{j}\) which is at least K. By the first and third constraints in (2), we have \(\sum _{j \in V'} x_{j} = \sum _{j \in V'} x_{j}y_{j} \le \sum ^{n}_{j = 1} x_{j}y_{j} \le 0\) and hence \(x_j = 0\) for all \(j \in V'\). By the second constraints in (2), we have \(x_k \ge 1\) for all \((j, k) \in E\). Therefore, \(V'\) is an independent set with size at least K and the theorem holds. \(\square \)

Theorem 3 implies that the KLC1 problem is very hard to approximate, even though the original knapsack problem admits an FPTAS and this problem can be solved in polynomial time when the number of constraint is fixed.

4.2 The KLC2 problem

In this section, we show that the KLC2 problem is easier to approximate than the KLC1 problem. First, note that there may be some item j that does not belong to any feasible solution, i.e., the linear system \(A\varvec{x} \le \varvec{b}, \varvec{x} \ge 0\) and \(x_j \le W\) is infeasible. This item can be easily detected by checking the feasibility of the above linear programs for all items, which requires \(O(n\cdot n^3L) = O(n^4L)\) time. For ease of exposition, we assume that such items are eliminated in the rest of our discussion.

Next, we propose a \(\frac{1}{2}\)-approximation algorithm to the KLC2 problem, which is based on the greedy algorithm for the knapsack problem. Since the value-to-weight ratio is given, we can determine the order of items which is identical to the greedy choice of the knapsack problem. Then we solve a series of linear programs. We give the detail of the algorithm in Algorithm 2.

We have the following performance guarantee for Algorithm 2.

Theorem 4

The Greedy Algorithm is a \(\frac{1}{2}\)-approximation algorithm for the KLC2 problem.

Proof

First, Algorithm 2 is a polynomial time algorithm. This is true by noting that it only requires to solve 2n linear programs in Algorithm 2, each of which takes \(O(n^3L)\) time.

Now we prove the performance of Algorithm 2. Let P be the value returned by the algorithm, and \(\varvec{x}^*\) and \(O\!P\!T\) be the weights and optimal value in an optimal solution. First, it can be seen that at least one of the linear programs (LP3) and (LP4) is feasible, since the greedy choice of the knapsack under the weights \(\varvec{x}^{*}\) is feasible to one of them. Therefore, the algorithm must return a feasible solution with total weight at most W. If the optimal solution contains a single item, then it is clear that one of the solution to (LP3) will return the optimal solution. Now suppose that the optimal solution contains more than one item. Note that the items are sorted by non-increasing order according to their value-to-weight ratios. Define \(k = \min \left\{ l: \sum \nolimits _{j = 1}^{l} x^*_j > W\right\} \). We have

where \(\varvec{x}'\) and \(\varvec{x}''\) are the weights of the optimal solution to the (LP3) and (LP4) during the execution of the algorithm when choosing the single item k and \(1, 2,...,k - 1\), respectively. Here, the first inequality follows by the definition of \(\varvec{x}^*\) and the choice of k. The second inequality follows from the fact that (LP3) and (LP4) return the optimal solutions when only the single item k, and the items \(1, 2,...,k - 1\) are chosen, respectively. The last inequality follows from the fact that the algorithm returns the solutions with maximum objective. Therefore, Algorithm 2 is a \(\frac{1}{2}\)-approximation algorithm. \(\square \)

Next, we present a PTAS for the KLC2 problem. Given an instance of the KLC2 problem, we first apply the greedy algorithm to obtain a \(\frac{1}{2}\)-approximate solution. Let LB be the returned objective value and define \(UB = 2LB\). It can be seen that LB and UB are lower and upper bounds of the optimal value respectively. Given an arbitrary precision \(\epsilon > 0\), we set \(h = \left\lceil \frac{2}{\epsilon }\right\rceil \). Note that if the optimal solution selects at most h items, then we can enumerate all the possible selections and solve the linear program (LP2) in polynomial time, which is similar to Algorithm 1. If the optimal solution selects more than h items, then we guess the highest value items and their values (approximately), and then apply Algorithm 2 to the remaining items. We describe this algorithm in Algorithm 3. We have the following result.

Theorem 5

Algorithm 3 is a PTAS for the KLC2 problem.

Proof

First, we show that Algorithm 3 will terminate in polynomial time. For each fixed \(\epsilon \), Step 2 to Step 5 enumerates at most \(O(n^h) = O(n^\frac{2}{\epsilon })\) subsets of items and possible selections. During each iteration, we solve one linear program, which can be done in polynomial time. Step 6 enumerates at most \(\genfrac(){0.0pt}0{n}{h} = O(n^\frac{2}{\epsilon })\) subsets of items. The value l satisfies \(\Delta ^{l - 2}\frac{\epsilon }{2} LB < UB\), where \(\Delta = \frac{1}{ 1 - \frac{\epsilon }{2}}\). Therefore, \(l < \log \frac{UB}{\frac{\epsilon }{2} LB}/ \log \Delta + 2 \le \frac{4 - 2\epsilon }{\epsilon } \log \frac{4}{\epsilon } + 2\), where the last inequality follows from the fact that \(\log (1 + x) \ge {x}/{2}\) when \(0< x < 1\) and \(UB = 2LB\). The number of iterations in Step 7 is \(l^{h} \le \left( \frac{4}{\epsilon } \log \frac{4}{\epsilon } + 2\right) ^h\), which is a polynomial of the input size. Solving the linear programs and other operations can be done in polynomial time, therefore Algorithm 3 is a polynomial time algorithm if \(\frac{1}{\epsilon }\) is a fixed constant.

If the optimal solution selects at most h items, then it is clear that at least one of the iterations in Step 2 to Step 5 returns the optimal solution. Otherwise, we denote the optimal item set, the weights and the value by \(I^*\), \(\varvec{x}^*\) and \(\textit{OPT}\), respectively. We focus on the iteration in Step 6 that the items of \(I^h\) are exactly the h highest value items selected in an optimal solution, and the value for each \(i \in I^{h}\), i.e., \(u_{i}x^*_{i}\) falls in \([Y_{i}, X_{i}]\). Note that the solution returned by the algorithm must have a value at least as large as that returned in this iteration.

Let \(\textit{OPT} = \textit{OPT}^{big} + \textit{OPT}^r\), in which \(\textit{OPT}^{big}\) is the value of the items in \(I^* \cap I^{big}\) and \(O\!P\!T^r\) are the values of the items in \(I^* \setminus I^{big} = I^* \cap I^r\), and \(I^{big}\), \(I^r\) are the sets defined in Step 8. Denote the value returned in the corresponding iteration by P, and the corresponding weights by \(\varvec{x}\). Note that each item in \(I^{big}\) satisfies \(\Delta u_{i}x_{i} \ge u_{i}x^*_i\), that is, \(u_{i}x_{i} \ge \left( 1 - \frac{\epsilon }{2}\right) u_{i}x^*_i\). Let \(P = P^{big} + P^{greedy}\), where \(P^{big}\), \(P^{greedy}\) are the total value of items in \(I^{big}\) and \(I^{greedy}\) respectively. It follows that \(P^{big} \ge \left( 1 - \frac{\epsilon }{2}\right) O\!P\!T^{big}\).

Now we argue that \(u_{i}x_{i} \le \frac{\epsilon }{2}\) OPT for each item \(i \in I^{r}\). Note that \(I^r = I^{small} \cup (I \setminus I^h)\). If \(i \in I^{small}\), then we have \(u_{i}x_{i} \le \frac{\epsilon }{2} LB \le \frac{\epsilon }{2}\) OPT. Otherwise, we have \(i \in I\setminus I^h\). Note that \(I^h\) are the h highest value items in the optimal solution, thus we have \(u_{i}x_{i} \le \frac{OPT}{h} \le \frac{\epsilon }{2}\) OPT and the argument holds. Let \(P'\) be the optimal value of the problem restricted to item set \(I^r\) with capacity \(W - \sum \limits _{j \in I^{big}} x_j\). We must have \(P' \ge O\!P\!T^r\) since all the items in \(I^{h}\) are selected in the optimal solution in this iteration and \(I^{big}\) is a subset of \(I^{h}\). Furthermore, since \(P^{greedy}\) is returned by Algorithm 2 on \(I^r\), according to the same argument as in (3), we have \(P^{greedy} \ge P' - \max _{k\in I^r}u_{k}x_{k} \ge O\!P\!T^r - \frac{\epsilon }{2} O\!P\!T\). Therefore, \(P = P^{big} + P^{greedy} \ge \left( 1 - \frac{\epsilon }{2}\right) O\!P\!T^{big} + O\!P\!T^r - \frac{\epsilon }{2}O\!P\!T \ge (1 - \epsilon ) O\!P\!T\). In conclusion, Algorithm 3 is a PTAS for the KLC2 problem. \(\square \)

5 Multiple knapsack under linear constraints

In this section, we extend the previous results to the multiple identical knapsack case. For the KLC1 problem, the multiple knapsack version is at least as hard as the KLC1 problem which is already hard to approximate. It is also easy to see that a similar \(\frac{1}{n}\)-approximation algorithm still works. In this section, we mainly focus on the multiple identical knapsack version of the KLC2 problem.

We can define the multiple identical knapsack under linear constraints (MIKLC2) problem similar to Definition 1 in Sect. 2. First, we discuss the case that when k and m are fixed constants. Similar to Lemma 1, given any optimal solution to the MIKLC2 problem, we can construct a linear program similar to (LP2):

where \(j \in I^i\) indicates that the item j is assigned to knapsack i in this optimal solution. Use the same approach as Lemma 1, we can see that the MIKLC2 problem has an optimal solution in which at most \(k + m\) items with nonzero weights are selected. Therefore, we can slightly modify Algorithm 1 to solve the MIKLC2 problem, by enumerating all possible assignments of these items to the m knapsacks in Step 1 of Algorithm 1. The total running time is \(O\left( \genfrac(){0.0pt}0{n}{k + m}(m + 1)^{k + m}(k + m)^3L\right) = O(n^{k + m}L)\), which is a polynomial to the input size if k and m are fixed constants. We note that this result can also be applied to the case when the capacities are non-identical, and also to the multiple knapsack extension of the KLC1 problem.

Theorem 6

The MIKLC2 problem with m knapsacks and k linear constraints can be solved in \(O(n^{k + m}L)\) time, where L is the weight of input length.

For the case when the number of constraints is arbitrary, we propose an approximation algorithm for the MIKLC2 problem. The idea is based on the \(\frac{1}{2}-\)approximaton greedy algorithm for the multiple knapsack problem in the literature (see [11]). Let us briefly review this algorithm. It is without loss of generality to assume that all the items have weights no more than the knapsack capacity. The algorithm assigns the items in the non-increasing order based on the value-to-weight ratios. If there is an item which cannot be assigned to the current knapsack, then we ignore this item and call it a “split item”. Then the algorithm moves to the next knapsack and assign the next highest value-to-weight ratio item. After all the knapsacks or the items are considered, we obtain the first feasible solution. Note that there are at most m split items, and each of them can be assigned to a single knapsack solely, this constitutes the second feasible solution. It is not hard to show that the optimal value is at most the sum of the values of these two feasible solutions, therefore returning the best of them leads to a \(\frac{1}{2}-\)approximation algorithm.

In our algorithm, we first guess these m split items, and then use the same idea of Algorithm 2 in the single knapsack case. We describe the detail procedure in Algorithm 4 and state the result in Theorem 7.

Theorem 7

Algorithm 4 is a \(\frac{1}{2}\)-approximation algorithm for the MIKLC2 problem with m knapsacks.

Proof

There are at most \(O(n^{m})\) subsets of I which have no more than m items. During each iteration, the algorithm solves (LP5) and (LP6) each once. In total, the running time is \(O(n^{m}\cdot n^{4}L)\) = \(O(n^{m + 4}L)\) which is a polynomial to the input size if m is a fixed constant.

For the performance guarantee, we consider the iteration that \(I^{s}\) is exactly the set of split items when we apply the greedy algorithm of multiple knapsack problem under the weights \(\varvec{x}^*\). Based the proof of Theorem 4 and the \(\frac{1}{2}-\)approximation algorithm of multiple knapsack problem [11], one can similarly show that Algorithm 4 returns a \(\frac{1}{2}-\)approximation solution, and we omit the detail to avoid repetition. \(\square \)

We remark that this greedy algorithm can be extended to a PTAS with a fixed number of knapsacks and identical capacities. The idea is similar to that in Algorithm 3, mainly by partial enumeration and apply Algorithm 4 to the small items. Note that there is no FPTAS for the multiple knapsack problem even if the number of knapsacks is two and the capacities are identical unless \(\mathrm{P}= \mathrm{NP}\) [5], thus it is unlikely that an FPTAS can be found for the MIKLC2 problem.

6 Conclusion

In this paper, we study the knapsack problem under linear constraints, and propose several optimal or approximation algorithms for this problem and its multiple knapsack variant. There are some open questions: the first is to design an FPTAS or prove that it does not exist for the KLC2 problem; the second is to design approximation algorithms for the MIKLC2 problem with running time independent of m, and for the more general problem with non-identical capacities. It is also interesting to consider other combinatorial optimization problems under linear constraints.

References

Adams, W.P., Sherali, H.D.: Mixed-integer bilinear programming problems. Math. Program. 59(1), 279–305 (1993)

Bertsimas, D., Sim, M.: The price of robustness. Oper. Res. 52(1), 35–53 (2004)

Bhalgat, A., Goel, A., Khanna, S.: Improved approximation results for stochastic knapsack problems. In: Proceedings of the Twenty-second Annual ACM-SIAM Symposium on Discrete Algorithms, SODA ’11, pp. 1647–1665. SIAM (2011)

Burer, S., Letchford, A.N.: Non-convex mixed-integer nonlinear programming: A survey. Surv. Oper. Res. Manag. Sci. 17(2), 97–106 (2012)

Chekuri, C., Khanna, S.: A polynomial time approximation scheme for the multiple knapsack problem. SIAM J. Comput. 35(3), 713–728 (2005)

Dean, B.C.: Approximation algorithms for stochastic scheduling problems. Ph.D. thesis, Massachusetts Institute of Technology, Boston (2005)

Garey, M.R., Johnson, D.S.: Computers and Intractability: A Guide to the Theory of NP-Completeness. W. H. Freeman and Co., San Francisco (1979)

Gupte, A., Ahmed, S., Cheon, M., Dey, S.: Solving mixed integer bilinear problems using MILP formulations. SIAM J. Optim. 23(2), 721–744 (2013)

Håstad, J.: Clique is hard to approximate within \(n^{1 - \epsilon }\). In: Proceedings 37th Annual Symposium on Foundations of Computer Science, pp. 627–636 (1996)

Ibarra, O.H., Kim, C.E.: Fast approximation algorithms for the knapsack and sum of subset problems. J. ACM 22(4), 463–468 (1975)

Kellerer, H., Pferschy, U., Pisinger, D.: Knapsack Problems. Springer, New York (2004)

Köppe, M.: On the complexity of nonlinear mixed-integer optimization. In: Lee, J., Leyffer, S. (eds.) Mixed-Integer Nonlinear Programming. IMA Volumes in Mathematics and its Applications, vol. 154, pp. 533–558. Springer, Berlin (2011)

Monaci, M., Pferschy, U.: On the robust knapsack problem. SIAM J. Optim. 23(4), 1956–1982 (2013)

Nip, K., Wang, Z., Wang, Z.: Scheduling under linear constraints. Eur. J. Oper. Res. 253(2), 290–297 (2016)

Sahni, S.: Algorithms for scheduling independent tasks. J. ACM 23(1), 116–127 (1976)

Wang, Z., Xing, W.: A successive approximation algorithm for the multiple knapsack problem. J. Comb. Optim. 17(4), 347–366 (2009)

Williamson, D.P., Shmoys, D.B.: The Design of Approximation Algorithms. Cambridge University Press, Cambridge (2011)

Ye, Y.: Interior Point Algorithms: Theory and Analysis. Wiley-Interscience, New York (1997)

Acknowledgements

We thank the anonymous reviewers for their constructive comments. Zhenbo Wang’s research has been supported by NSFC No. 11371216.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Nip, K., Wang, Z. & Wang, Z. Knapsack with variable weights satisfying linear constraints. J Glob Optim 69, 713–725 (2017). https://doi.org/10.1007/s10898-017-0540-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10898-017-0540-y