Abstract

The manufacturing industry plays a significant role in the economy of any country. In recent times, reverse engineering (RE) has become an integral part of the manufacturing set-up owing to its numerous applications. The quality of an RE product depends primarily on the quality of digitization, i.e., part measurement. A diverse range of digitization devices can be employed in RE. These machines vary in cost, accuracy, ease of use, accessibility, scanning time, etc. Therefore, selecting a suitable device is an important decision in a particular RE application. The decisions taken in the planning stage for RE can have a long-lasting impact on the functionality, quality and economics of components to be used by manufacturing industries. To accomplish the selection procedure, a comparative study of three digitization techniques has been carried out. The determination of an appropriate digitization system is basically a multi-criteria decision making (MCDM) problem. MCDM techniques are yet to be applied in the selection of digitization systems for RE, although MCDM is one of the most widely used decision methodologies in business and engineering. The aim of this work is to describe the use of various MCDM methods in the selection of digitization systems for RE. This paper employs combinations of different MCDM methods: the group eigenvalue method (GEM), analytic hierarchy process (AHP), entropy method, elimination and choice expressing reality (ELECTRE), technique for order of preference by similarity to ideal solution (TOPSIS) and simple additive weighting (SAW). GEM, AHP and the entropy method have been used to elicit weights of the selection criteria, while TOPSIS, ELECTRE and SAW have been applied to rank the alternatives. A comparative analysis has also been performed to determine the efficacy of the different approaches. The conclusion of the paper identifies the best digitization system as well as the characteristics of the different MCDM methods and their suitability in RE applications.


Introduction

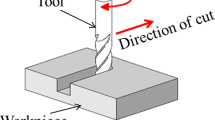

The manufacturing industry plays a pivotal role in the economic advancement of any country. Lately, reverse engineering (RE) has become an indispensable component of manufacturing industries owing to its diverse applications. In the manufacturing field, RE can be defined as a design methodology where an existing part is first digitized or measured to obtain data and then a computer aided design (CAD) model is reconstructed (Yuan et al. 2001). The newly created CAD model can be utilized in many industrial applications such as design upgradation and modification, analysis, manufacturing, inspection, etc. The basic concept behind RE is the determination of the design intent of an existing part. A general procedure for reverse engineering an object consists of three main steps: digitization (data acquisition), surface reconstruction and CAD modelling. A physical model is digitized using a suitable data acquisition method to obtain all the relevant design information.

Digitization (also referred to as scanning) is the first and most important step in the RE process. It is defined as a process of acquiring point coordinates from the part surfaces (Motavalli 1998). The outcome of the digitization process is a cloud of data points. There are many different digitization (measurement) technologies that can be used to collect 3D data of an object’s shape (Savio et al. 2007; Vezzetti 2007). The different digitization techniques are based on either contact or non-contact sensors. The techniques based on contact methods, such as scanning touch probe collect a large number of points by dragging the probe tip across the object’s surface. They provide greater advantages as compared to touch trigger probe, while measuring the free form and sculptured surfaces. The various non-contact methods use lasers, white light, moire interferometry, patterned light techniques, microwave, and radar to obtain data points without touching the measuring surface. Currently, the different types of stationary and portable arm coordinate measuring machines (CMMs) are widely employed for the RE of a large range of industrial parts and very often are equipped with either a contact type or a contactless probe or both. The laser line scanning probe and scanning touch probe can be used either with portable arm CMM or stationary CMM to digitize any surface depending on the application’s requirements. The quality of the reconstructed surface model depends on the type and accuracy of measured point data, as well as the type of measuring device (Son et al. 2002).

The efficient use of digitization systems requires users to fully understand the application and working conditions of the measurement systems. Because of the variable performance and high cost of different digitization techniques, it is important to select the most appropriate technique for a given RE application. According to Baker et al. (2001), a sound decision (or selection) can only be reached with the help of a systematically structured and well-defined methodology. Multi-criteria decision-making (MCDM) represents one such methodology and can be employed to refine decision making (Osman et al. 2016; Salomon and Montevechi 2001). It is one of the prominent branches of operations research (OR), and deals with decision problems consisting of a finite set of alternatives assessed by a number of decision criteria (Banwet and Majumdar 2014). The use of MCDM has grown remarkably over the last several decades (Velasquez and Hester 2013). It has progressed to accommodate different real-world applications such as supply chain management, banking, healthcare, warehouse location, environment assessment, manufacturing, etc. (Aruldoss et al. 2013). This paper analyses several frequently used MCDM methods and determines their applicability in the selection of digitization systems for RE. In this work, different combinations of weight computation and ranking methods have been employed. Integrating criteria weight methods with ranking methods helps to express stakeholders' preferences more precisely. Therefore, combinations of various MCDM methods such as the group eigenvalue method (GEM), analytic hierarchy process (AHP), entropy method, elimination and choice expressing reality (ELECTRE), technique for order of preference by similarity to ideal solution (TOPSIS) and simple additive weighting (SAW) have been used.

The objective of this work is the implementation of MCDM methods for the identification of the most appropriate digitization system. Therefore, the methodology in this work focuses on the performance evaluation of three different systems which are widely employed at present for data acquisition in RE. The three systems are a scanning touch probe incorporated into a stationary CMM, a laser line scanning probe mounted on a stationary CMM and a laser line scanning probe on a portable arm CMM. The performance evaluation includes the assessment of various criteria to be used in the MCDM methods. These criteria include the cost, maximum permissible error (MPE), accuracy, scanning time, ease of use, accessibility, etc. To determine the accuracy, the deviation between a number of pre-defined points on the actual mold and the corresponding points in the CAD model has been computed. This investigation has also computed the level of deviations to represent the upper bound on the surface tolerance that can be attained with the respective system. Two RE software packages have been employed for point cloud data processing and CAD generation with the intent to find the surface tolerance. To evaluate the other criteria, this investigation has also outlined digitization issues and the problems associated with each of the digitizing devices in the acquisition of point cloud data.

Literature review

The recent developments in RE methodologies and techniques have made it a necessary phase in the design and production processes (Sokovic and Kopac 2006), since it provides an efficient approach for creating a CAD model of free form or sculptured surfaces (Zhang 2003). There have been many instances where RE was successfully used to improve the efficiency of the design and production phases. For example, Mavromihales et al. (2003) utilized RE methodology to improve the production of dies for fan blades of Rolls Royce aero engines. They improved the geometry of the casting as well as provided a more accurate die design. Similarly, Ye et al. (2008) proposed an innovative RE design methodology called reverse innovative design (RID). The core of this RID methodology was the construction of feature based parametric solid models using the scanned data. Although the RE technique offers many benefits, it still has to cope with many issues. An interesting review of issues associated with the RE of geometric models was carried out by Várady et al. (1997). They discussed the capabilities of a large number of data acquisition methods for capturing different geometric shapes. Barbero and Ureta (2011) also compared several digitization systems based on laser, fringe projection and X-ray. Their aim was the selection of an appropriate system based on accuracy and the quality of the distribution of points and triangular meshes. Likewise, a comprehensive review of different techniques and sensors focusing on 3D imaging techniques in industry, cultural heritage, medicine and criminal investigation can be found in Sansoni et al. (2009). Moreover, Savio (2006) and Vrhovec and Munih (2007) outlined various sources of error that might result in measurement uncertainty of CMMs. The probe accessibility issue has been thoroughly studied by Wu et al. (2004).
The effects of probe configuration, i.e., probe length, volume and orientation were analyzed and an efficient algorithm was proposed to improve CMM probe accessibility. It is often difficult to find a complete relationship between the specified performance of a CMM and the actual results obtained because of unexplained difference between them. Harvie (1986) proposed a method of identifying these differences and discussed some sources of error. A performance evaluation test proposed by Gestel et al. (2009) allowed the determination of measurement errors of laser line scanning probes. This method, which was based on planar test artifact identified the influence of in-plane and out-of-plane angle as well as the scan depth on the performance of laser line scanning probe. Similarly, Feng et al. (2001) introduced an experimental approach to determine significant scanning process parameters and an empirical relationship to identify digitizing errors for laser scanning operations. A case study by Ali et al. (2008) revealed different scanning parameters which affected the efficiency of data processing and accuracy of the CAD model.

The comparative study of different techniques has always been a useful tool in analyzing the potential of various techniques (Gapinski et al. 2014). The study by Barisic et al. (2008) presents a very good example where, non-contact (ATOS) and contact systems were compared and analyzed to evaluate their capabilities. Accordingly, Martínez et al. (2010) studied the application of different scanning systems (laser triangulation sensor and a touch trigger probe) mounted on CMM. They investigated these technologies, identified scanning strategies and estimated deviations. An industrial comparison carried out by Hansen and De Chiffre (1999) in the Scandinavian countries determined measurement uncertainties associated with the CMM. The comparison depended on the measurement of a variety of dimensions, angles, etc., on five different workpieces. Similarly, De Chiffre et al. (2005) established a new method based on calibrated artefact for the comparison of optical CMMs and compared their capabilities with that of tactile CMMs. All these studies advocate the need of a selection technique which can be used to decide on the appropriate system. For example, according to Stefano and Enrico (2005), a proper strategy that can help in the selection of the right acquisition technology and the scanning parameters is always crucial.

MCDM provides a strong decision making approach which can be used in diverse domains including manufacturing, where selection of the best alternative is highly complex (Papakostas et al. 2012; Michalos et al. 2015). Work on the selection of digitization systems using MCDM is seldom found in the literature. However, considerable work can be listed in other domains, where MCDM has been applied using either a single technique or a hybrid of techniques. For example, Sadeghzadeh and Salehi (2011) applied an MCDM method in the automotive industry. They reviewed various guidelines in the strategic technologies of fuel cells and used TOPSIS to rank important solutions for the development of fuel cell technologies. Similarly, Ozcan and Celebi (2011) considered AHP, TOPSIS, ELECTRE and Grey theory to select the most suitable warehouse location for a retail business which involves high uncertainty and product variety. The safety evaluation of coal mines was carried out by Li et al. (2011) to establish the risk-free operation of coal mines. The weights for different criteria were obtained using the entropy weight method, while TOPSIS was used to rank the coal mines based on their safety conditions. AHP is considered one of the most commonly applied MCDM techniques (Grandzol 2005). For instance, the optimum maintenance strategies for virtual learning and textile industries were obtained by Fazlollahtabar and Yousefpoor (2008) and Ilangkumaran and Kumanan (2009) respectively, using AHP. Several other techniques such as ELECTRE (Balaji et al. 2009; Wu and Chen 2009), SAW (Al-Najjar and Alsyouf 2003; Jafari et al. 2008; Afshari et al. 2010; Adriyendi 2015) and TOPSIS (Mousavi et al. 2011) are also used from time to time in the selection of the best alternative. To enhance the performance of a Brazilian telecom company, Bentes et al. (2012) employed a combination of Balanced Scorecard (BSC) and AHP.
The BSC was utilized to prioritize different performance indexes and AHP to rank the performance of various functional units. There have been many MCDM methods with their inherent capabilities and competence. Therefore, they have to be selected or applied depending on the application requirement. Pourjavad and Shirouyehzad (2011) performed a comparative analysis of three MCDM methods TOPSIS, ELECTRE and VIKOR for the application in production lines. They analyzed the performance of parallel production lines in the mining industry and compared the effectiveness of three MCDM methods. Notice that different MCDM techniques may produce contradicting results in the same application. As a result, a solution which is repeated by several MCDM techniques should be chosen as the ideal solution. Thor et al. (2013) reviewed and compared the application of four popular MCDM techniques including the AHP, ELECTRE, SAW and TOPSIS in choosing the most appropriate maintenance procedure. In the same way, Osman et al. (2016) implemented the hybrid between simple multi-attribute rating technique (SMART), ELECTRE and the TOPSIS. The SMART method was used to compute the weights of each criterion while ELECTRE and TOPSIS were utilized to find the best alternative and rank the different alternatives respectively. Tscheikner-Gratl et al. (2017) investigated the applicability of five MCDM methods (ELECTRE, AHP, WSM, TOPSIS, and PROMETHEE) in an integrated rehabilitation management scheme. The objective was to compare different MCDM methods and determine their suitability for the management of water systems.

It can be concluded that studies pertaining to the selection of appropriate digitization systems in RE are seldom reported in the literature. Given the numerous applications of MCDM in various fields, it is reasonable to expect that decision making regarding the selection of a digitization system can become simple and effective with the application of a proper MCDM method. The subsequent sections present the application of various MCDM methods in the selection of a suitable digitization system in RE.

MCDM methods

This work aims to implement combinations of various MCDM methods such as GEM, AHP, entropy method, ELECTRE, TOPSIS and SAW. The combinations GEM–TOPSIS, AHP–TOPSIS, Entropy–TOPSIS, GEM–SAW, AHP–SAW, Entropy–SAW, GEM–ELECTRE and AHP–ELECTRE have been utilized to identify the best digitization system. A brief overview of the different MCDM methods is given below.

Determination of weights

In this work, GEM, AHP and entropy methods have been used to elicit weights of various selection criteria.

Analytic hierarchy process

One of the most effective MCDM tools developed to assess and rank various alternatives is the AHP (Grandzol 2005; Saaty 1980; Çimren et al. 2007; Ishizaka and Labib 2009). It can be very useful when a number of qualitative, quantitative, and contradictory factors are involved in decision making. This eigenvalue method was developed by Saaty (1980). This method uses paired comparisons of each criterion with each other to give a matrix for eigenvector and hence weight calculations which measures the importance of various criteria. The different steps for AHP can be described as follows:

-

Step 1 Data or rating of different criteria is gathered from various experts or stakeholders. A pairwise comparison of different criteria is carried out using a qualitative scale which is then converted to quantitative numbers shown in Table 1. The preference scale for pairwise comparisons of two items ranges from the maximum value 9 to minimum value 1/9.

-

Step 2 The pairwise comparisons of various criteria obtained in step 1 are arranged to form a square or comparison matrix. The diagonal elements of the matrix are denoted as 1. The elements in the lower triangular are reciprocal to elements in the upper triangular. The criterion with value greater than 1 is better than the criterion with value less than 1.

Suppose m alternatives \((A_{1}, A_{2}, A_{3}, \ldots ,A_{m})\) are rated by n experts \((M_{1}, M_{2}, M_{3}, \ldots ,M_{n})\). As a result, n matrices with ratings from various experts will be obtained. The square matrix \(M_{1}\) represents the ratings by expert \(M_{1}\) for m alternatives.

$$\begin{aligned} M_1= & {} \left[ {{\begin{array}{c@{\quad }c@{\quad }c@{\quad }c} 1&{} {a_{12} }&{} \cdots &{} {a_{1m} } \\ {a_{21} }&{} 1&{} {a_{23} }&{} {a_{2m} } \\ \vdots &{} \ldots &{} \ddots &{} \vdots \\ {a_{m1} }&{} {a_{m2} }&{} \ldots &{} 1 \\ \end{array} }} \right] ; \\&\hbox {where}, a_{21} =\frac{1}{a_{12}},\ldots ,a_{m1} =\frac{1}{a_{1m} } \end{aligned}$$ -

Step 3 The principal eigenvalue and the corresponding normalized eigenvector value (or the priority vector) of the comparison matrix provide the relative importance of the various criteria being compared. It is normalized, since the sum of all elements in the priority vector is 1. The elements of the normalized eigenvector are termed as weights with respect to the given criteria. The largest value in the priority weight represents the most important criterion. To get the eigenvector values, following steps can be adopted:

-

Step I Given comparison matrix (e.g., \(M_{1})\) is multiplied with itself to provide a resultant matrix \(R_{1}\)

$$\begin{aligned} R_{1}=M_{1} \times M_{1} \end{aligned}$$ -

Step II Subsequently, the sum across the rows in \(\hbox {R}_{1}\) is computed

-

Step III The normalized principal eigenvector column representing the relative ranking of the criteria is obtained by dividing each row sum with the sum of its column (i.e., row sum column).

-

Step IV Again, the resultant matrix \(R_{2 }\) is computed by multiplying the matrix \(\hbox {R}_{1 }\)with itself such that

$$\begin{aligned} R_{2 }=R_{1} \times R_{1} \end{aligned}$$ -

Step V Next, the normalized principal eigenvector values are obtained for \(R_{2}\).

This process of computation of resultant matrix and its normalized eigenvector continues until the eigenvector values are repeated.

Note that there are several methods that can be used to compute the eigenvector values. For example, the geometric mean is an alternative measure of the normalized principal eigenvector value.

-

-

Step 4 The pairwise comparisons attained with this method are subjective and need to be evaluated. Saaty (1980) proposed a random consistency index (RI), shown in Table 2, to confirm whether the judgement made by the experts is appropriate or not.

Prof. Saaty defined consistency ratio, CR as the comparison between consistency index (CI) and RI. It indicates the amount of allowed inconsistency in the judgement.

$$\begin{aligned} CR = \frac{ CI }{ RI } \end{aligned}$$

where CI is a measure of the degree of consistency among the pairwise comparisons, defined as follows:

$$\begin{aligned} CI = \frac{\lambda _{max} -n}{n-1} \end{aligned}$$

Here, \(\lambda _{max}\) represents the maximum eigenvalue of the decision matrix and n is the number of criteria. The computation of \(\lambda _{max}\) can be carried out in the following way:

-

Step (i) Compute nth root of the product of row elements across each row in the decision square matrix

-

Step (ii) The value obtained for each row in the previous step is divided by the column (nth root column) sum

-

Step (iii) A column matrix is computed by multiplying the square decision matrix with the column matrix obtained in the preceding step

-

Step (iv) Each of the elements in the previously [Step (iii)] obtained column matrix is divided by the corresponding elements in the column matrix obtained in Step (ii)

-

Step (v) The average of the column matrix computed in Step (iv) provides \(\lambda _{max}\)

If CR is less than or equal to 10%, the inconsistency is acceptable; otherwise, the subjective judgement needs to be revised.

-

Step 5 Finally, the criteria weights obtained for each decision maker are combined using the aggregation technique known as aggregate individual priorities (AIP). The AIP is adopted because it is the most recommendable aggregation technique to deal with highly complex decision problems in a small expert group (Ossadnik et al. 2016). The geometric mean (GM) (Forman and Peniwati 1998) and preference aggregation (PA) (Angiz et al. 2012) procedures are used separately to aggregate the individual weights. The Data Envelopment Analysis (DEA) based PA method (Angiz et al. 2012) selected in this work computes the group decision weights of decision makers through an optimization model.

$$\begin{aligned}&\hbox {GM (across the row)}\\&\quad = \left( {\prod _{i=1}^n M_i } \right) ^{\frac{1}{n}} (n \hbox { is the number of experts}) \end{aligned}$$ -

PA method Suppose there are m decision makers evaluating n criteria.

The weights obtained for the various decision makers are converted into a rank matrix, where each criterion is ranked according to its weight.

Next, the rank matrix is used to construct a matrix which identifies the number of times a criterion is assigned a particular rank.

A matrix representing the summation of the decision weights \((\alpha _{mr})\) of a given criterion being placed in a particular rank, is constructed

The efficiency score of each criterion is obtained using a modified (Cook and Kress 1990) model:

where \(d (i, \varepsilon )=\frac{\varepsilon }{i}\) and \(\varepsilon _{max} = 0.2993.\)

Finally, the group decision weights are computed by normalizing the efficiency scores
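The AHP weighting steps described above (the repeated-squaring estimate of the priority vector, the \(\lambda _{max}\) and CR check, and the GM aggregation) can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions, not the implementation used in this work: the function names and the example matrix are hypothetical, the RI values are the commonly quoted Saaty values (Table 2 is not reproduced here), the matrix is assumed to be at least 2 × 2, and the aggregated weights are renormalized to sum to 1, which is an added assumption. The PA method is not covered.

```python
import math

# Random consistency index by matrix size. Commonly quoted Saaty values,
# assumed here because Table 2 is not reproduced in this section.
RI = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12, 6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}


def matmul(A, B):
    """Plain matrix product of two lists of lists."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def priority_vector(M, tol=1e-6, max_iter=20):
    """Steps I-V: square the matrix and normalize its row sums until they repeat."""
    R, w_prev = M, None
    for _ in range(max_iter):
        R = matmul(R, R)
        scale = max(max(row) for row in R)
        R = [[v / scale for v in row] for row in R]  # rescaling only prevents overflow
        sums = [sum(row) for row in R]
        w = [s / sum(sums) for s in sums]
        if w_prev is not None and max(abs(a - b) for a, b in zip(w, w_prev)) < tol:
            return w
        w_prev = w
    return w_prev


def consistency_ratio(M):
    """Steps (i)-(v) for lambda_max, then CI and CR. Returns (CR, lambda_max)."""
    n = len(M)
    roots = [math.prod(row) ** (1.0 / n) for row in M]       # Step (i)
    w = [r / sum(roots) for r in roots]                      # Step (ii)
    Mw = [sum(a * b for a, b in zip(row, w)) for row in M]   # Step (iii)
    lam_max = sum(x / y for x, y in zip(Mw, w)) / n          # Steps (iv) and (v)
    ci = (lam_max - n) / (n - 1)
    cr = ci / RI[n] if RI[n] > 0 else 0.0
    return cr, lam_max


def aggregate_gm(weight_vectors):
    """Geometric mean of the experts' weights per criterion, renormalized (assumption)."""
    k = len(weight_vectors)
    gm = [math.prod(col) ** (1.0 / k) for col in zip(*weight_vectors)]
    return [g / sum(gm) for g in gm]


# Hypothetical, perfectly consistent 3 x 3 comparison matrix from one expert.
M1 = [[1, 2, 6], [1 / 2, 1, 3], [1 / 6, 1 / 3, 1]]
weights = priority_vector(M1)
cr, lam_max = consistency_ratio(M1)
print(weights, lam_max, cr)
```

Because the example matrix is perfectly consistent, the weights should come out close to (0.6, 0.3, 0.1) with \(\lambda _{max}\) close to 3 and CR close to 0; a matrix elicited from real experts would normally give a positive CR to be checked against the 10% threshold.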

Group eigenvalue method

The GEM (Qiu 1997) is used to assign attribute weights or determine their importance by establishing an expert judgement matrix. Because the knowledge and experience of a single expert are limited, a judgement matrix compiled from a group of diverse experts should be used. The aim of this method is to identify an ideal expert, who has the highest decision reliability level and the most precise evaluation, and whose evaluation is consistent with those of the other experts in the group. The steps of the GEM procedure can be described as follows.

Consider the evaluation of n attributes \(N_{1}, N_{2}, N_{3}, \ldots , N_{n}\) by a group of m experts \(M_{1}, M_{2}, M_{3},{\ldots }{\ldots },M_{m}\). Let \(x_{ij}\) be the evaluation value of jth attribute by ith expert. Consider \(x = (x_{ij})_{mxn}\) be the \(m \times n\) order matrix evaluated by a group of experts such that

The evaluation vector of an expert with highest decision level and precise evaluation can be represented as follows

The sum of intersection angles between the ideal expert evaluation vector and the evaluation vectors of the other experts should be minimum. It means \(x^{*}\) can be computed when the function \(f = \sum \nolimits _{i=1}^m \left( {b^{T}x_i } \right) ^{2}\) attains its maximum value. Therefore, the evaluation vector of an ideal expert, \(x^{*}\), can be computed when

The parameter \(b^{T}\) is an eigenvector of F which satisfies, \(\forall b = (b_{1}, b_{2}, \ldots , b_{n})^{T}\). \(\rho _{max}\) is the maximum positive eigenvalue of the matrix \(F=x^{T}x\). \(x^{*}\) is the positive eigenvector corresponding to \(\rho _{max}\). Each expert's standardized weight vector can be obtained after the eigenvector corresponding to the maximum eigenvalue is normalized. The following steps should be adopted to compute the attribute weights.

-

1.

Assignment of evaluation points to various attributes by experts

-

2.

Transpose of evaluation matrix, and then, multiply evaluation matrix with the transpose one, as follows

$$\begin{aligned} F=x^{T}.x \end{aligned}$$ -

3.

To calculate the eigenvector \(x^{*}\), the power method can be adopted as used by Qiu (1997):

-

Let \(k = 0, y_{0} = (1/n, 1/n,\ldots ,1/n)^{T}\)

$$\begin{aligned} y_{1}=F y_{0}; z_{1}=\frac{y_1}{\Vert y_1\Vert _2} \end{aligned}$$ -

For \(k = 1,2,3,\ldots , y_{k+1} = { Fz}_{k}\), and \(z_{k+1}=\frac{y_{k+1} }{\Vert y_{k+1}\Vert _2 }\)

-

Check if \(\vert z_{k\rightarrow k+1}\vert \le \varepsilon \); if yes, then \(z_{k+1}\) corresponds to \(x^{*}\), otherwise go to the previous step (where \(\varepsilon \) represents the precision and \(\vert z_{k\rightarrow k+1}\vert \) is the maximum absolute value of the difference between \(z_{k}\) and \(z_{k+1}\))

-

-

4.

Normalization of obtained eigenvector

\(w_{j}=x_{*j}/ \sum \nolimits _{j=1}^n x_{*j}\), where j = 1, 2, 3, ..., n, so that \(\sum \nolimits _{j=1}^n w_{j} = 1\) and \(w_{j}\) is the weight computed for the jth attribute using GEM
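The GEM steps above (forming \(F=x^{T}x\), running the power method until successive iterates differ by at most \(\varepsilon \), and normalizing the resulting eigenvector) can be illustrated with the following minimal Python sketch. The function name `gem_weights`, the default tolerance and the score matrix are hypothetical and are not taken from this work; the scores are assumed to be positive.

```python
import math


def gem_weights(x, eps=1e-6, max_iter=1000):
    """x[i][j] is the score given by expert i to attribute j (m experts, n attributes)."""
    n = len(x[0])
    # Step 2: F = x^T x, an n-by-n matrix
    F = [[sum(row[a] * row[b] for row in x) for b in range(n)] for a in range(n)]

    def step(v):
        """One power-method step: multiply by F, then scale to unit 2-norm."""
        y = [sum(F[a][b] * v[b] for b in range(n)) for a in range(n)]
        norm = math.sqrt(sum(c * c for c in y))
        return [c / norm for c in y]

    # Step 3: start from y0 = (1/n, ..., 1/n) and iterate until the change is <= eps
    z = step([1.0 / n] * n)
    for _ in range(max_iter):
        z_next = step(z)
        converged = max(abs(p - q) for p, q in zip(z_next, z)) <= eps
        z = z_next
        if converged:
            break

    # Step 4: normalize the eigenvector so that the weights sum to 1
    total = sum(z)
    return [c / total for c in z]


# Hypothetical scores: 3 experts (rows) rating 4 attributes (columns).
scores = [[9, 7, 5, 8], [8, 6, 6, 9], [9, 8, 4, 7]]
print(gem_weights(scores))
```

Since F is symmetric with non-negative entries when all scores are positive, the power method started from a positive vector is expected to converge to the positive eigenvector described in the text.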

Entropy weighting method

Entropy weight determination is an objective method which utilizes the inherent information of the attributes to determine their weights. As a result, noise in the resulting weights can be minimized, thus producing unbiased results (Li et al. 2011). The greater entropy weight of the attribute represents its higher significance. The entropy weight method utilizes the inherent information of attributes to make resultant weights more objective than subjective (Lotfi and Fallahnejad 2010).

Suppose there are m alternatives and n criteria, and let \(x_{ij}\) represent the value of the ith alternative for the jth criterion. The entropy weight can be obtained as follows (Li et al. 2011; Lotfi and Fallahnejad 2010):

-

1.

Normalization of attribute values, \(r_{ij}\)

$$\begin{aligned}&\hbox {Benefit attribute: } r_{ij} = \frac{x_{ij}}{\max _{i} x_{ij}}; \quad \hbox {Cost attribute: } r_{ij} = \frac{\min _{i} x_{ij}}{x_{ij}}\\&\quad (i = 1, 2, 3, 4, \ldots , m; j = 1, 2, 3, \ldots , n) \end{aligned}$$

-

2.

Calculation of entropy

$$\begin{aligned}&H_j = -\, \frac{\sum \nolimits _{i=1}^m P_{ij} \ln P_{ij} }{\ln m} \\&\quad (i = 1, 2, 3, 4, \ldots , m; j = 1, 2, 3, \ldots , n)\\&\hbox {where},\\&P_{ij} = \frac{r_{ij} }{\sum \nolimits _{i=1}^m r_{ij}}\\&\quad (i = 1, 2, 3, 4,\ldots , m; j = 1, 2, 3, \ldots , n) \end{aligned}$$ -

3.

Computation of entropy weight

$$\begin{aligned} w_j = \frac{1-H_j }{n-\sum \nolimits _{j=1}^n H_j }, \sum \limits _{j=1}^n w_j = 1, (j = 1, 2, 3, \ldots , n) \end{aligned}$$
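The three entropy-weight steps above can be sketched as follows. This is an illustrative example only: the function name `entropy_weights` and the data are hypothetical, all values are assumed to be strictly positive (so that the logarithm is defined), and at least one criterion is assumed to vary across alternatives (otherwise the denominator \(n-\sum H_j\) is zero).

```python
import math


def entropy_weights(x, benefit):
    """x[i][j]: value of alternative i on criterion j; benefit[j]: True if higher is better."""
    m, n = len(x), len(x[0])
    H = []
    for j, col in enumerate(zip(*x)):
        # Step 1: normalize the column (benefit: x / max, cost: min / x)
        if benefit[j]:
            r = [v / max(col) for v in col]
        else:
            r = [min(col) / v for v in col]
        # Step 2: entropy of the column
        total = sum(r)
        p = [v / total for v in r]
        H.append(-sum(q * math.log(q) for q in p) / math.log(m))
    # Step 3: entropy weights, which sum to 1
    denom = n - sum(H)
    return [(1 - h) / denom for h in H]


# Hypothetical data: 3 alternatives, criteria = (cost, accuracy score).
data = [[100.0, 7.0], [120.0, 9.0], [90.0, 6.0]]
print(entropy_weights(data, benefit=[False, True]))
```

A criterion whose normalized values are identical for all alternatives has entropy 1 and therefore receives zero weight, which matches the idea that it carries no information for discriminating between alternatives.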

Ranking methods

The TOPSIS, ELECTRE and SAW are well-known ranking methods developed by Hwang and Yoon (1981), Benayoun et al. (1966) and MacCrimmon (1968) respectively. These methods guide the decision makers to select the most appropriate alternative with the greatest benefit and minimum discrepancy, depending on the importance of different criteria.

Technique for order preference by similarity to ideal solution

The TOPSIS selects a near-optimal solution according to Yue (2011) and Krohlinga and Pacheco (2015). It utilizes the idea of maximizing the distance from the negative ideal solution and minimizing the distance from the positive ideal solution. It finds application in numerous areas such as supply chain management, manufacturing systems, banking, etc.

Let \(x_{ij}\) represent the value of alternative i corresponding to criterion j. A decision matrix comprising values of different alternatives with respect to their criteria can be represented as:

Let J represent the set of benefit attributes or criteria (i.e., higher is better) and \(J'\) be the set of negative attributes or criteria (lower is better). The implementation of TOPSIS is dependent on the following steps.

-

Step 1 Generation of normalized decision matrix. It transforms various attribute dimensions into non-dimensional attributes in order to carry out their comparison.

$$\begin{aligned} r_{ij}=\frac{x_{ij}}{\sqrt{\sum \nolimits _{i=1}^{m} x_{ij}^{2}}}\hbox { for }i = 1, {\ldots }, m; j = 1, {\ldots }, n \end{aligned}$$

-

Step 2 Construction of the weighted normalized decision matrix. Each column of the normalized decision matrix is multiplied by its associated weight (computed using one of the weighting methods described above, e.g., GEM). The elements of the new matrix become:

$$\begin{aligned} R_{ij}=w_{j} r_{ij} \end{aligned}$$ -

Step 3 Determination of ideal and non-ideal solution as follows:

$$\begin{aligned}&\hbox {Positive Ideal Solution (PIS)}, A^{+} = \{R_{1}^{+}, R_{2}^{+},\ldots ,R_{n}^{+}\};\\&R_{j}^{+}=\{\max \nolimits _{i} (R_{ij})\hbox { if }j \in J; \min \nolimits _{i} (R_{ij})\hbox { if }j \in J'\}\\&\hbox {Negative Ideal Solution (NIS)}, A^{-} = \{R_{1}^{-}, R_{2}^{-},\ldots ,R_{n}^{-}\};\\&R_{j}^{-} = \{\min \nolimits _{i} (R_{ij})\hbox { if }j \in J; \max \nolimits _{i} (R_{ij})\hbox { if }j \in J'\} \end{aligned}$$

-

Step 4 Computation of the Euclidean distances of each alternative from the PIS, \(A^{+}\) (benefits) and the NIS, \(A^{-}\) (cost) as follows:

$$\begin{aligned} S_{i}^{+}&= \left[ \sum \nolimits _{j=1}^{n} (R_{j}^{+}- R_{ij})^{2}\right] ^{1/2}, \quad i= 1,2,\ldots ,m\\ S_{i}^{-}&= \left[ \sum \nolimits _{j=1}^{n} (R_{j}^{-}- R_{ij})^{2}\right] ^{1/2}, \quad i= 1,2,\ldots ,m \end{aligned}$$

-

Step 5 Calculation of the relative closeness of each alternative with respect to the PIS as given by:

$$\begin{aligned} \theta _{i}=S_{i}^{-} / (S_{i}^{+}+ S_{i}^{-}), \quad 0 \le \theta _{i} \le 1 \end{aligned}$$

-

Step 6 Rank the alternatives according to the relative closeness. The best alternatives are those with a higher value of \(\theta _{i}\); they should be chosen because they are closer to the PIS.
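The TOPSIS procedure above can be sketched in Python as shown below. This is a minimal illustration, not the code used in this work: the function name `topsis` and the example data are hypothetical, the normalization divides by the square root of the column sum of squares (the standard vector normalization, assumed here), and the alternatives are assumed not to be all identical (otherwise \(S_{i}^{+}+S_{i}^{-}\) is zero).

```python
import math


def topsis(x, w, benefit):
    """x[i][j]: value of alternative i on criterion j; w[j]: criterion weight;
    benefit[j]: True if higher is better. Returns (closeness values, ranking)."""
    m, n = len(x), len(x[0])
    # Normalized, then weighted, decision matrix
    norms = [math.sqrt(sum(x[i][j] ** 2 for i in range(m))) for j in range(n)]
    R = [[w[j] * x[i][j] / norms[j] for j in range(n)] for i in range(m)]
    # Positive and negative ideal solutions, one entry per criterion
    cols = list(zip(*R))
    pis = [max(c) if benefit[j] else min(c) for j, c in enumerate(cols)]
    nis = [min(c) if benefit[j] else max(c) for j, c in enumerate(cols)]
    # Euclidean distances and relative closeness of each alternative
    theta = []
    for row in R:
        s_plus = math.sqrt(sum((p - v) ** 2 for p, v in zip(pis, row)))
        s_minus = math.sqrt(sum((q - v) ** 2 for q, v in zip(nis, row)))
        theta.append(s_minus / (s_plus + s_minus))
    # Alternatives ordered from best (largest closeness) to worst
    ranking = sorted(range(m), key=lambda i: theta[i], reverse=True)
    return theta, ranking


# Hypothetical data: 3 alternatives, criteria = (accuracy score, cost).
values = [[8.0, 120.0], [6.0, 90.0], [9.0, 150.0]]
theta, ranking = topsis(values, w=[0.6, 0.4], benefit=[True, False])
print(theta, ranking)
```

The weights passed as `w` could come from any of the weighting methods described earlier (GEM, AHP or entropy), which is how the combined approaches such as GEM–TOPSIS are formed.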

Elimination and choice expressing the reality

The ELECTRE method is based on the concept of concordance and discordance indices in order to identify the best alternative (Fulop 2005; Pang et al. 2011; Milani et al. 2006; Mojahed et al. 2013; Abedi and Ghamgosar 2013). It selects the best choice through pairwise comparison of all alternatives. Among the ELECTRE family, ELECTRE I is usually applied to selection problems. It generally finds application in water, environment and energy management. It is based on the following steps.

Step 1 Compute the normalized decision matrix.

Suppose each of the m alternatives \((A_{1}, A_{2}, A_{3}, \ldots , A_{m})\) is evaluated with respect to n attributes (or criteria) \((N_{1}, N_{2}, N_{3}, \ldots , N_{n})\). The resulting decision matrix can be denoted as \(X = (x_{ij})_{m\times n}\). The decision matrix can be normalized using the following equations

$$\begin{aligned} r_{ij }=\frac{x_{ij}}{x_{j}^{max}}\hbox { (for benefit attribute) or } \frac{x_{j}^{min} }{x_{ij}}\hbox { (for cost attribute)} \end{aligned}$$

where \(x_{j}^{max}\) and \(x_{j}^{min}\) are the largest and smallest values of the jth attribute over all alternatives.

Step 2 Calculate the weighted normalized decision matrix as follows.

$$\begin{aligned} R_{ij}=r_{ij}\times w_{j}, \quad i = 1, 2, 3, \ldots , m; \; j = 1, 2, 3, \ldots , n \end{aligned}$$

where \(w_{j}\) is the relative weight of the jth criterion and \(\sum \nolimits _{j=1}^n w_j = 1\).

Step 3 Determine the concordance set C(a, b) and the discordance set D(a, b). C(a, b) is composed of the criteria for which \(A_{a}\) is better than or equal to \(A_{b}\), while D(a, b) comprises the criteria for which \(A_{a}\) is inferior to \(A_{b}\). The two sets can be defined as follows

$$\begin{aligned} C (a, b)= & {} \{j {\vert } W_{aj }\ge W_{bj}\}; \quad D (a, b) = \{j {\vert } W_{aj }< W_{bj}\}\\ a, b= & {} 1,2,3, \ldots , m, a \ne b \end{aligned}$$

where \(W_{aj}\) is the weighted normalized value of alternative \(A_{a}\) with respect to the jth criterion (i.e., \(R_{aj}\) from Step 2) and \(W_{bj}\) is the weighted normalized value of alternative \(A_{b}\) with respect to the jth criterion.

Step 4 Calculate the concordance matrix. The concordance index of C(a, b), which is equal to the sum of the weights of the criteria in the concordance set, is obtained as

$$\begin{aligned} c_{ab }= \sum \limits _{j\in C\left( {a,b} \right) } w_j , \quad \sum \limits _{j=1}^n w_j = 1 \end{aligned}$$

The concordance matrix can be written as follows

$$\begin{aligned} C=\left[ {{\begin{array}{c@{\quad }c@{\quad }c@{\quad }c} -&{} {c_{12} }&{} \cdots &{} {c_{1m} } \\ {c_{21} }&{} -&{} \ldots &{} {c_{2m} } \\ \vdots &{} \vdots &{} \ddots &{} \vdots \\ {c_{m1} }&{} {c_{m2} }&{} \ldots &{} - \\ \end{array} }} \right] \end{aligned}$$

Step 5 Calculate the discordance matrix. The discordance index of D(a, b) is computed as

$$\begin{aligned} d_{ab }=\frac{{\max }_{j\in D \left( {a, b} \right) } \left| {W_{aj} -W_{bj} } \right| }{{\max }_{j\in J} \left| {W_{aj} -W_{bj} } \right| } \end{aligned}$$

The discordance matrix can be written as follows

$$\begin{aligned} D=\left[ {{\begin{array}{c@{\quad }c@{\quad }c@{\quad }c} -&{} {d_{12} }&{} \cdots &{} {d_{1m} } \\ {d_{21} }&{} -&{} \ldots &{} {d_{2m} } \\ \vdots &{} \vdots &{} \ddots &{} \vdots \\ {d_{m1} }&{} {d_{m2} }&{} \ldots &{} - \\ \end{array} }} \right] \end{aligned}$$

Step 6 Determine the concordance dominance matrix. Compute threshold value \((\bar{c})\) as follows

$$\begin{aligned} \bar{c}= & {} \sum \limits _{a=1}^m \sum \limits _{b=1,\, b\ne a}^m \frac{c_{ab} }{m\left( {m-1} \right) },\\&m\hbox { is the dimension of the matrix (the number of alternatives)} \end{aligned}$$

A concordance dominance matrix, O, is defined as

$$\begin{aligned} o_{ab}= 1\hbox { if }c_{ab} \ge \bar{c}, \quad o_{ab}= 0\hbox { if }c_{ab} < \bar{c} \end{aligned}$$

Step 7 Determine the discordance dominance matrix, P. Compute the threshold value \((\bar{d})\) as follows

$$\begin{aligned} \bar{d}= & {} \sum \limits _{a=1}^m \sum \limits _{b=1,\, b\ne a}^m \frac{d_{ab} }{m\left( {m-1} \right) },\\&m\hbox { is the dimension of the matrix (the number of alternatives)} \end{aligned}$$

The discordance dominance matrix, P, is defined as

$$\begin{aligned} p_{ab}= 1\hbox { if }d_{ab} < \bar{d}, \quad p_{ab} = 0\hbox { if }d_{ab} \ge \bar{d} \end{aligned}$$

Step 8 Determine the aggregate dominance matrix, S as

$$\begin{aligned} s_{ab}=o_{ab}\times p_{ab} \end{aligned}$$

The matrix S is computed by multiplying the corresponding elements of O and P.

Step 9 Obtain the best alternative. Each column of the matrix S must be analyzed. The column with the least number of 1’s should be chosen as the most preferred choice.
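Steps 3 to 9 of ELECTRE I can be illustrated with the following minimal Python sketch. It assumes that the weighted normalized matrix has already been built as in Steps 1 and 2, so that a larger value is better for every criterion. The function name `electre_i` and the demonstration data are hypothetical and serve only to show the mechanics; they are not the values used in this study.

```python
def electre_i(W, w):
    """Return the aggregate dominance matrix S and the number of 1's per column.

    W: weighted normalized decision matrix (rows = alternatives).
    w: criteria weights summing to 1.
    """
    m, n = len(W), len(w)
    pairs = [(a, b) for a in range(m) for b in range(m) if a != b]
    c, d = {}, {}
    for a, b in pairs:
        # Step 3: concordance and discordance sets.
        conc = [j for j in range(n) if W[a][j] >= W[b][j]]
        disc = [j for j in range(n) if W[a][j] < W[b][j]]
        # Step 4: concordance index.
        c[a, b] = sum(w[j] for j in conc)
        # Step 5: discordance index (0 if the discordance set is empty).
        denom = max(abs(W[a][j] - W[b][j]) for j in range(n))
        num = max((abs(W[a][j] - W[b][j]) for j in disc), default=0.0)
        d[a, b] = num / denom if denom > 0 else 0.0
    # Steps 6 and 7: thresholds are averages over the off-diagonal entries.
    c_bar = sum(c.values()) / (m * (m - 1))
    d_bar = sum(d.values()) / (m * (m - 1))
    # Step 8: element-wise product of the two dominance matrices.
    S = [[0] * m for _ in range(m)]
    for a, b in pairs:
        o_ab = 1 if c[a, b] >= c_bar else 0
        p_ab = 1 if d[a, b] < d_bar else 0
        S[a][b] = o_ab * p_ab
    # Step 9: count the 1's in each column.
    col_ones = [sum(S[a][b] for a in range(m)) for b in range(m)]
    return S, col_ones


# Hypothetical data: 3 alternatives, 2 criteria.
W_demo = [[0.60, 0.40], [0.30, 0.20], [0.45, 0.10]]
S_demo, col_ones = electre_i(W_demo, [0.6, 0.4])
best = col_ones.index(min(col_ones))
```

Here the first alternative is at least as good as the others on both criteria, so its column contains no 1's and it is the preferred choice.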

Simple additive weighting

The SAW is also known as a weighted linear combination or scoring method (Afshari et al. 2010; Adriyendi 2015). It is a simple and very commonly used MCDM method. It is based on the computation of a weighted average. In this method, an evaluation score is computed for each alternative by multiplying the normalized attribute values by the attribute weights and summing the products over all criteria. The procedure for SAW can be described as follows.

Suppose there are m alternatives and n criteria, and \(x_{ij}\) represents the value of the jth criterion for the ith alternative.

Step 1 Normalization of attribute values, \(r_{ij}\)

$$\begin{aligned}&\hbox {Benefit attribute: } r_{ij}= \frac{x_{ij}}{x_{j}^{max}}; \quad \hbox {Cost attribute: } r_{ij}= \frac{x_{j}^{min}}{x_{ij}} \\&\quad (i = 1, 2, 3, 4, \ldots , m; j = 1, 2, 3, \ldots , n) \end{aligned}$$

Step 2 Construction of weighted normalized decision matrix

$$\begin{aligned} W_{ij }=w_{j}\times r_{ij}, \quad \sum \limits _{j=1}^n w_j = 1 \end{aligned}$$

Step 3 Computation of the score for each alternative

$$\begin{aligned} S_{i}= \sum \limits _{j=1}^n W_{ij} \quad (i = 1, 2, 3, 4, \ldots ,m) \end{aligned}$$

Step 4 Selection of the best alternative. The alternative with the highest score represents the most appropriate choice, i.e.,

$$\begin{aligned} \hbox {Best Alternative }= {\max }_i S_i \quad (i = 1, 2, 3, 4, \ldots ,m) \end{aligned}$$
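The four SAW steps can be condensed into a short Python sketch. The function name `saw_scores` and the example numbers are hypothetical; the normalization assumes that the maximum and minimum are taken per criterion over all alternatives.

```python
def saw_scores(X, w, is_benefit):
    """Return the SAW score of each alternative.

    X: raw decision matrix (rows = alternatives, columns = criteria).
    w: criteria weights summing to 1.
    is_benefit[j]: True for a benefit criterion, False for a cost criterion.
    """
    m, n = len(X), len(w)
    cols = [[X[i][j] for i in range(m)] for j in range(n)]
    scores = []
    for i in range(m):
        total = 0.0
        for j in range(n):
            # Step 1: linear normalization per criterion.
            if is_benefit[j]:
                r = X[i][j] / max(cols[j])
            else:
                r = min(cols[j]) / X[i][j]
            # Steps 2 and 3: weight the value and accumulate the score.
            total += w[j] * r
        scores.append(total)
    return scores


# Hypothetical data: criterion 0 is a benefit, criterion 1 is a cost.
scores = saw_scores([[2.0, 10.0], [4.0, 40.0]], [0.5, 0.5], [True, False])
# Step 4: the alternative with the highest score is selected.
best = scores.index(max(scores))
```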

Experimentation

This section describes the procedure used to obtain the values of the different criteria. The selected part was scanned using three different digitization devices. The distinguishing features of each data capturing method are discussed in order to assess the criteria used in the MCDM methods. These criteria include cost, MPE, accuracy, part size, scanning time, ease of use, accessibility, etc.

Characteristics of different systems

The medium size stationary CMM (shown in Fig. 1) employed in this investigation can accommodate rather large size work pieces (1200 mm \(\times \) 900 mm \(\times \) 700 mm) and achieves the accuracy (1.6 \(+\) L/333) \(\upmu \hbox {m}\), where L is the length of the work piece in mm. This machine can be equipped with both the contact and non-contact scanning probes.
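As a rough illustration of the length-dependent accuracy specification quoted above, the expression (1.6 + L/333) can be evaluated for a given measured length. The function name and its default arguments are illustrative choices and are not part of any CMM software.

```python
def length_dependent_accuracy_um(length_mm, constant_um=1.6, divisor=333.0):
    """Evaluate a specification of the form (constant + L/divisor) in micrometres.

    length_mm is the measured length L in mm. The defaults reproduce the
    (1.6 + L/333) expression quoted for the stationary CMM.
    """
    return constant_um + length_mm / divisor


# For a 333 mm long feature: 1.6 + 333/333 = 2.6 micrometres.
accuracy_333 = length_dependent_accuracy_um(333.0)
```

The other specifications of the same form can be evaluated by passing different values for `constant_um` and `divisor`.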

Using the design of experiments (DOE), it was established that similar scanning accuracy is achieved with both the active and passive scanning touch probes (Fig. 2a, b). The only difference found was the shorter scanning time of the active scanning system, which operates at a higher scanning speed. These systems can provide a linear measuring tolerance between (1.7 \(+\) L/300) \(\upmu \hbox {m}\) and (3.5 \(+\) L/250) \(\upmu \hbox {m}\). The accuracy of the acquired data for these systems depends on parameters such as the scanning speed, distance between lines, distance between points, etc. The laser line scanning probe of a stationary CMM (Fig. 2c) works on the measuring principle of triangulation. The lighting system projects a laser line onto the object to be scanned and the sensor receives the reflected light from the object. The laser line scanning probe offers a measuring accuracy of \(20\, \upmu \hbox {m}\). This system can scan up to 250,000 points/s with a maximum scanning speed of 30 mm/s. The optimal distance between this probe and the surface to be scanned has been found to be between 55 and 75 mm.

The portable arm CMM provides an alternative to the stationary CMM in the RE of large work pieces. It provides greater flexibility and dexterity to the user. The laser line scanning probe incorporated in a portable arm CMM (shown in Fig. 1) provides an accuracy of up to \(40\,\upmu \hbox {m}\) under ideal conditions as specified by the manufacturer. It has a measuring range of up to 6 feet and can scan up to 19,200 points/s. The system output is compatible with a number of commercially available software packages such as Geomagics, Polyworks and Rapidform.

Procedure to evaluate digitization technologies

A mold cavity of moderate complexity was chosen as the test object in this investigation. The injection mold cavity is an ideal part for comparing the scanning technologies as it provides a freeform shape with sharp edges, fillets, radii, sharp corners and curvatures. The cavity had the shape of a plastic bottle (154 mm long and 67 mm round) and was machined to its final shape by ball end milling. The deviations measured between a number of pre-defined points on the actual mold and the corresponding points in the CAD model were used to compare the accuracy of the three probing techniques. This deviation is an indication of the accuracy and represents the total error, i.e., the sum of the digitization error and the data processing error. The same processing software and the same processing parameters were used to process each of the three point clouds for developing the CAD model, so that the contribution of the data processing error to the total error could be presumed to be the same in each case. Therefore, the accuracy of the CAD models differed largely due to the different digitization systems and the associated digitization issues.

The test object was scanned using three different digitization systems to capture the point cloud data. The same setup was ensured throughout this investigation by fixing the test object on the table of the stationary CMM as shown in Fig. 1. In this way, errors due to different setups of the object could be avoided. The data obtained from the three techniques were processed and CAD models were created using two different commercial software packages. The block diagram representing the methodology adopted in this investigation is shown in Fig. 3.

The processing of each of the three point clouds was carried out in two different commercially available processing software: RE software-I and RE software-II. The two processing software were utilized to ascertain the results of the investigation. Finally, the CAD models obtained from the point cloud measurements were compared with the actual mold at a number of pre-defined points using the touch probing system of a CMM. The analysis for all the models was performed in a common analysis software.

Data acquisition of the injection mold cavity

The selected part was scanned using three different scanning devices: two based on a stationary bridge type CMM (Fig. 4) and one based on a portable arm CMM. The common steps while scanning the surface were as follows: cleaning of the part and probing system, fixing of the part on the machine table, calibration of the scanning probes prior to conducting the experiments as per the procedures provided by the CMM vendors and conforming to international standards, and setting of the scanning parameters in the measurement software.

Scanning touch probe incorporated in the stationary CMM

There were a number of parameter settings such as pitch of scan lines, pitch of scan points, scanning speed etc., in the scanning touch probe. The pitch of scan lines defines the distance between scan lines and the pitch of scan points is the distance between points on each scan line. Using the DOE approach, the optimal values of the following parameters were established and are presented in Table 3.

Laser line scanning probe incorporated in the stationary CMM

This method of data acquisition works in a semi-automatic mode. The alignment of the sensor (and the laser beam) with reference to the machine axis and the calibration of the laser line scanning probe were performed using a matt grey reference sphere of 30 mm diameter. The part was positioned on the machine table in such a way that no information about its shape and features remained undigitized. The important parameters required to be set for effective scanning included the pitch line direction, pitch scan direction, kind of points, measuring field, laser power, shutter time, threshold of reflection, etc.

The point data were acquired as qualified surface points (QSP). The QSP is a set of points extracted from the raw scan lines. An area of interest (AOI) is defined around the intersection point using the lattice constants: pitch line direction and pitch scan direction. All the points within AOI are used to calculate QSP according to Gaussian sphere algorithm. The pitch scan direction represents the distance between scanning lines and the pitch line direction is the distance between points on each line. The output power is measured in mW while the shutter time controls the exposure time of the detector and is measured in ms. The threshold for reflection is a measure of noise while the measuring field determines the range captured by the laser line scanning probe. Using the DOE approach, the optimal values of the following parameters were established and they are shown in the Table 4.

Laser line scanning probe attached to the portable arm CMM

The data acquisition using the portable arm CMM is a manual method in which the operator moves the scanner around the object to obtain all the required scans. The articulated arm allows free orientation of the scanning device mounted at the end of the portable arm. The calibration of the laser line scanning probe involved the measurement of a calibration plate. The part was positioned in such a way that the complete geometry could be reached with the free movement of the arm and all vital information could easily be captured. The important parameters for effective scanning included the scan rate, scan density, time of exposure, noise threshold, data format, etc. The scan rate is defined as the number of scan lines per second, where 1/1 is the normal rate of scan lines per second. The scan density decides the number of points on each scan line, and 1/1 means that all of the points reflected into the sensor are recorded. The exposure setting of the laser depends on the nature of the object being scanned and is calculated automatically by the device depending on the surface morphology and reflectivity. The noise threshold is a non-dimensional entity which represents the measure of noise in the data. The value of the noise threshold was automatically selected by the software depending on the part surface and environmental conditions. The data format can be ordered data, unordered (raw) data or both. The ordered data is a point cloud of consistent density with points placed in orderly rows and columns. The unordered data is a point cloud of variable density and comprises points at every location that the laser line scanning probe detects on the object. The laser line scanning probe measures the intensity, or the return power, of all the pixels projected onto the surface by the laser line probe. All data having an intensity below the noise threshold value are considered noise or “chatter”. 
Before starting the scanning procedure, the various parameters influencing the scan needed to be selected as shown in Table 5.

Processing of the data and the creation of the CAD model

The digital point cloud data was either directly compatible with the RE software or had to be converted to a neutral format which the processing software could handle. Subsequently, the point clouds acquired using the different digitization techniques were processed and CAD models were obtained. The same data processing steps (Fig. 6) and the same parameters (such as surface approximation parameters, triangle count, noise reduction, etc.) were followed to retrieve the final CAD models. The point clouds captured by the three different measuring systems are shown in Fig. 5.

The two different processing software packages were utilized separately to process the same set of point clouds and generate the CAD models. The objective behind using two processing software packages was to study the variation of deviation caused by the slightly different processing techniques used in the software. A total of six CAD models were obtained: each of the three point clouds was processed by each of the two RE processing software packages.

Analysis of the CAD model

A total of 19 points were selected on the whole surface to find out the deviation between the RE CAD model and the actual part. Out of these 19 points, the 4 points (P9, P10, P18 and P19) were selected on the open surfaces with rather large radii of curvature, 2 points (P1 and P16) were chosen on the fillets, 3 points (P13, P14 and P15) were on the corners, 7 points (P2, P3, P5, P6, P7, P8 and P17) were selected on the vertical faces and the remaining 3 points (P4, P11 and P12) were defined on the surfaces having small curvatures and the radii. The accuracy of the CAD models was evaluated using the analysis software. The analysis consisted of measuring the deviation between a point on the actual part and the corresponding point in the CAD model.

The CAD models from the RE processing software were transformed into a neutral format (e.g., IGES) compatible with the analysis software. The CAD model comparison steps are shown in Fig. 6. The CAD model imported into the analysis software was overlaid onto the actual part in order to calculate the deviation. For the complete alignment of the part, the six points were probed manually on the test part. Among the six probed points, three points were needed to block the tilting (eliminated two rotations and one translation), two points for blocking 2D rotation (eliminated one rotation and translation) and one point to block the remaining last translation. The process of aligning the CAD model with the position of the actual part on the machine table takes place in an iterative fashion and is repeated until the achieved alignment is within the specified tolerance. Hence, the alignment process brought the actual part in the same coordinate system as that of the CAD model. Subsequently, the 19 points located on the different parts of the mold were manually defined in the CAD model. Then, an automatic measuring cycle was executed to measure the actual positions of the points selected in the CAD model. Finally, the deviation between the points in the CAD model and its counterpart on the actual mold surface was calculated as shown in Fig. 7.

All the six CAD models (three each from the two RE software) were analyzed following the same steps as mentioned above to compare the three digitization techniques.

Attributes of digitization systems

The attributes can be categorized either as benefit attributes or cost attributes. The attributes whose higher values are required, i.e., they have a positive influence on system performance when their value increases, can be defined as the benefit attributes. For example, in this work, part size, and accessibility constitute benefit attributes. Similarly, cost attributes have a negative effect, if their value increases. The cost, MPE, error, scanning time, operator involvement, no. of scanning parameters, difficulty can be categorized as cost attributes.

The cost of the system (in dollars, including the equipment, software and setup costs) was determined either through quotations or from the manufacturers' websites. Because the specifications provided by the manufacturers do not match the actual values, owing to numerous uncertainty components (such as temperature, geometric errors, workpiece characteristics, operator, evaluation software, alignment, fixturing, etc.), both the MPE (as specified by the manufacturers) and the error (i.e., RMS) obtained through comparative analysis were used as attributes. The MPE was computed under ideal conditions, while the error was calculated under real conditions. The part size is the maximum volume \((\hbox {m}^{3})\) that can successfully be measured by the respective system. The different systems could accommodate the following volumes: VAST XXT \(0.4\hbox { m}^{3 }(800\times 1000 \times 500~\hbox {mm}^{3})\), line scanner \(0.8\hbox { m}^{3} (900 \times 1200 \times 700\hbox { mm}^{3})\) and portable laser scanner \(1.7\hbox { m}^{3} (1200 \times 1200 \times 1200\hbox { mm}^{3})\). The scanning time (min), which is the time taken for digitization, was determined experimentally as described in previous sections. As the number of scanning parameters increases, the effort to find appropriate values for these parameters also increases.
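The part-size values quoted above follow from multiplying the three axis ranges and rounding to one decimal place. The short sketch below reproduces that arithmetic; the function and dictionary names are illustrative only.

```python
def measuring_volume_m3(x_mm, y_mm, z_mm):
    """Convert three axis ranges in mm to a measuring volume in cubic metres."""
    return (x_mm / 1000.0) * (y_mm / 1000.0) * (z_mm / 1000.0)


# Axis ranges (mm) as quoted in the text.
ranges_mm = {
    "VAST XXT": (800, 1000, 500),
    "line scanner": (900, 1200, 700),
    "portable laser scanner": (1200, 1200, 1200),
}
volumes = {name: measuring_volume_m3(*dims) for name, dims in ranges_mm.items()}
# Rounded to one decimal place, as reported for the part-size attribute.
rounded = {name: round(v, 1) for name, v in volumes.items()}
```

The unrounded products are about 0.400, 0.756 and 1.728 cubic metres, which round to the reported 0.4, 0.8 and 1.7.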

The measured deviations at a number of pre-defined points and their RMS (root mean square) values for the three cases mentioned before are presented in Tables 6 and 7. The RMS values quantify the measurement error of the three systems. The data in Table 6 were obtained after processing in RE software-I and the data in Table 7 were obtained after processing in RE software-II.
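The RMS value is not written out as a formula in the text. The sketch below assumes the usual definition, i.e., the square root of the mean of the squared point deviations. The function name and the sample deviations are hypothetical and are not the values of Tables 6 and 7.

```python
import math


def rms(deviations):
    """Root mean square of a list of point deviations (same unit as the input)."""
    return math.sqrt(sum(d * d for d in deviations) / len(deviations))


# Hypothetical deviations in micrometres; the sign does not affect the result.
sample_rms = rms([6.0, -8.0])  # sqrt((36 + 64) / 2) = sqrt(50)
```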

From Tables 6 and 7, it can be seen that the least RMS deviation was observed using the scanning touch probe \((9.51\, \upmu \hbox {m})\) and the highest deviation with the portable arm CMM mounted laser line scanning probe \((50.93\, \upmu \hbox {m})\). The stationary CMM provided better performance than the portable arm CMM. It was found that the laser line scanning probe on the stationary CMM resulted in an RMS deviation of \(26.02 \,\upmu \hbox {m}\), whereas the laser line scanning probe on the portable arm CMM yielded a deviation of \(50.93\,\upmu \hbox {m}\). Similar results were obtained with the CAD models processed in RE software-II. The least deviation of \(12.21\, \upmu \hbox {m}\) was observed with the scanning touch probe and the highest deviation of \(61.04\, \upmu \hbox {m}\) with the portable arm CMM mounted laser line scanning probe. It was also noticed that the laser line scanning probe on the stationary CMM provided an RMS deviation of \(25.88\,\upmu \hbox {m}\), which was lower than the RMS deviation of \(61.04 \,\upmu \hbox {m}\) delivered by the laser line scanning probe on the portable arm CMM.

The scanning time is another important performance measure, besides the accuracy of the digitizers, for comparing the different methods of part geometry digitization. The scanning time is an essential component of the total time taken by any RE process. The scanning touch probe system of the stationary CMM took the longest time, 188 min, to capture the mold surface geometry, while the portable arm CMM mounted laser line scanning probe digitized the same mold geometry in approximately 15 min. The time taken by the laser line scanning probe on the stationary CMM was almost 30 min.

The largest deviation of \(146.3\, \upmu \hbox {m}\) (point P7) was observed in the CAD model generated from the point cloud data captured with laser line scanning probe of portable arm CMM. It could also be discovered that the CAD model obtained from the point cloud data measured by the scanning touch probe of the stationary CMM had a least deviation of \(0.6 \,\upmu \hbox {m}\) at point P9 (Table 6). The similar results were observed with CAD models developed in RE software-II. In case of the point cloud data processed in RE software-II, the largest deviation of \(147.5\, \upmu \hbox {m}\) (point P7) was observed in the CAD model generated from the point cloud data captured using the laser line scanning probe of portable arm CMM whereas the least deviation was noticed in the CAD model obtained from the point cloud data measured by the scanning touch probe of stationary CMM as presented in Table 7. The data acquired with stationary CMM mounted laser line scanning probe attained better results in comparison to portable arm CMM mounted laser line scanning probe. The largest deviations were traced at points P1, P5, P6, P7 and P8, etc., while the lowest deviations were observed at points P9, P16, P18, P19 (Fig. 8).

The least deviation of \(0.6\,\upmu \hbox {m}\) was obtained by the scanning touch probe system of the stationary CMM, but the RMS value of deviation was \(9.51\,\upmu \hbox {m}\). Under ideal conditions, the uncertainty of measurement of the stationary CMM using a scanning touch probe is about \(3\,\upmu \hbox {m}\). The RMS deviation is about \(9.51\,\upmu \hbox {m}\), i.e., a difference of \(6.5 \,\upmu \hbox {m}\). In the analysis software, the CAD model was overlaid on top of the actual object and there was an error of about \(2 \,\upmu \hbox {m}\) inherent to the process. Thus, about \(5\,\upmu \hbox {m}\) of the deviation can probably be attributed to a large number of factors, among which the surface undulation of the as-milled mold might have contributed about 3-\(4 \,\upmu \hbox {m}\). The laser line scanning probe of the stationary CMM resulted in a least deviation of \(3.4 \,\upmu \hbox {m}\) and an RMS value of \(26.02\,\upmu \hbox {m}\). Similarly, the laser line scanning probe on the portable arm CMM provided a least deviation of \(9.6\,\upmu \hbox {m}\) and an RMS deviation of \(50.93 \,\upmu \hbox {m}\). The RMS value of deviation in each of the three cases is thus considerably larger than the measurement uncertainty of these devices under ideal conditions.

The operator involvement included three levels, i.e., automatic (no involvement of the operator, once the measurement begins), semi-automatic (partial engagement) and manual (complete service of the operator needed). The difficulty level defines the ease of use and user friendliness of the system while accessibility determines the ability of the system to inspect various regions on the part.

The different levels of the intangible attributes such as operator involvement, difficulty and accessibility were decided based on experience and experimentation. The intangible attributes are defined as criteria which cannot be measured on a well-defined scale. Therefore, the different levels were identified using the scale for intangibles as shown in Table 8. The different attributes identified using experiments, manufacturer data and user experience are shown in Table 9.

Selection of digitization systems

The overall benefit of selecting the most appropriate digitization system is directly related to improved RE performance. Selecting the right digitization system for the given application influences the efficiency and efficacy of the RE process. A random selection of the data acquisition system can lead to a lower benefit-to-cost ratio. Combinations of various MCDM approaches, as shown in Fig. 8, have been applied to identify the best digitization system. The methods presented in this research have their own benefits and limitations as aids to decision-making. The different approaches adopted to identify the best system for the given RE application are as follows.

GEM–TOPSIS

The GEM–TOPSIS procedure employed in this work has been inspired by machine selection method developed by Yang et al. (2016). This method was based on the alliance of two techniques, i.e., GEM (Qiu 1997) and TOPSIS (Hwang and Yoon 1981).

To compute the weights of the different attributes, a group of five experts was considered. This group comprised industrialists, an academician, a researcher and an operator. The experts were asked to rank the various attributes on a scale of 1–10, where 1 represents the lowest priority and 10 the highest priority. The ranking results thus obtained are shown in Table 10.

The ratings can be represented by the evaluation matrix x, as shown below

Assuming precision, \(\varepsilon = 0.0005\) and applying the power method, the ideal evaluation vector \(x_{*}\) can be computed from the Table 11.
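The power iteration mentioned above can be sketched as follows. This sketch assumes the common formulation of GEM in which the ideal evaluation vector is the dominant eigenvector of \(F = x^{T}x\), with x the expert-by-attribute rating matrix, and in which the iteration stops once successive normalized vectors differ by less than \(\varepsilon \). The function name `gem_weights` and the ratings in `x_demo` are hypothetical and are not the values of Table 10.

```python
import math


def gem_weights(x, eps=0.0005, max_iter=1000):
    """Return attribute weights (summing to 1) from an expert rating matrix.

    x: rows = experts, columns = attributes, all ratings positive.
    """
    n = len(x[0])
    # F = x^T x, an n-by-n symmetric matrix.
    F = [[sum(row[j] * row[k] for row in x) for k in range(n)] for j in range(n)]
    z = [1.0 / n] * n  # uniform starting vector
    for _ in range(max_iter):
        y = [sum(F[j][k] * z[k] for k in range(n)) for j in range(n)]
        norm = math.sqrt(sum(v * v for v in y))
        z_new = [v / norm for v in y]
        converged = max(abs(a - b) for a, b in zip(z_new, z)) < eps
        z = z_new
        if converged:
            break
    # Normalize the ideal evaluation vector so that the weights sum to 1.
    total = sum(z)
    return [v / total for v in z]


# Hypothetical ratings from 3 experts for 3 attributes (scale 1-10).
x_demo = [[9, 6, 3], [8, 7, 2], [10, 5, 4]]
weights = gem_weights(x_demo)
```

With these ratings, the attribute that the experts consistently rate highest receives the largest weight.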

The most important attribute among the experts is the error (computed in actual working conditions) and lowest weight was obtained for operator involvement.

After normalization, the weights obtained for different attributes can be re-written as

The implementation of TOPSIS commenced with development of decision matrix as shown in Table 12. Subsequently, the weighted normalized decision matrix (Table 13) was prepared using weights obtained from GEM procedure.

To compute PIS and NIS, the different attributes have been categorized as benefit and cost attributes.

Set of benefit attributes, \(J =\) Part size and accessibility

Set of cost attributes, \(J^{{\prime }} =\) Cost, MPE, error, scanning time, operator involvement, no. of scanning parameters, and difficulty

Consequently, the Euclidean distances from PIS \((S_{i}^{+})\) and NIS \((S_{i}^{-})\) were computed and finally, the relative closeness to PIS \((\theta _{i})\) was determined as shown in Table 14.

Among three alternatives, the system \(\hbox {S}_{1}\) which is a scanning touch probe, has highest \(\theta _{i}\), and thus, it can be reported as the best system. Hence, the three systems can be ranked as follows:

AHP–TOPSIS

The AHP–TOPSIS technique used in this work is similar to the approach adopted by Banwet and Majumdar (2014). This approach was based on the combination of AHP and TOPSIS.

The AHP was used to elicit weights by forming the pairwise comparison matrix as shown in Table 15. A total of five pairwise comparison matrices, one for each of the five stakeholders, were obtained. The objective of using multiple experts is to combine the judgements of more than one expert to eliminate any bias. The consistency ratio (CR) is computed for each expert to confirm whether the judgement made is acceptable. The inconsistency is acceptable, since the CR is less than 10% in each case as shown in Table 16.
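A consistency check of this kind can be sketched as below. The sketch assumes the standard AHP definitions, i.e., CI = \((\lambda _{max} - n)/(n - 1)\) and CR = CI/RI, with the commonly tabulated random index values of Saaty; whether exactly these RI values were used in this work is an assumption. The function name and the example matrix are hypothetical.

```python
# Commonly tabulated random index (RI) values for matrix sizes 3 to 10.
SAATY_RI = {3: 0.58, 4: 0.90, 5: 1.12, 6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def ahp_weights_and_cr(A, iters=100):
    """Return (priority weights, consistency ratio) of a pairwise comparison matrix A."""
    n = len(A)
    w = [1.0 / n] * n
    # Power iteration towards the principal eigenvector, normalized to sum to 1.
    for _ in range(iters):
        y = [sum(A[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(y)
        w = [v / total for v in y]
    # Estimate the principal eigenvalue from A w = lambda_max w.
    Aw = [sum(A[i][j] * w[j] for j in range(n)) for i in range(n)]
    lam_max = sum(Aw[i] / w[i] for i in range(n)) / n
    ci = (lam_max - n) / (n - 1)
    return w, ci / SAATY_RI[n]


# Hypothetical, perfectly consistent 3x3 matrix built from weights 0.6, 0.3, 0.1.
A_demo = [[1.0, 2.0, 6.0], [0.5, 1.0, 3.0], [1.0 / 6.0, 1.0 / 3.0, 1.0]]
w_demo, cr_demo = ahp_weights_and_cr(A_demo)
```

For a perfectly consistent matrix such as this one the CR is zero; judgements with a CR below 0.10 are treated as acceptable in the text above.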

The collective judgement was obtained through GM (Forman and Peniwati 1998) as well as PA technique (Angiz et al. 2012) as shown in Table 17.
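The geometric mean (GM) aggregation can be illustrated with the sketch below, which assumes the element-wise geometric mean of the individual pairwise comparison matrices. The PA technique is not sketched here because its details are not given in this section. The function name and the two example matrices are hypothetical.

```python
import math


def aggregate_gm(matrices):
    """Element-wise geometric mean of K pairwise comparison matrices of equal size."""
    K = len(matrices)
    n = len(matrices[0])
    return [
        [math.prod(M[i][j] for M in matrices) ** (1.0 / K) for j in range(n)]
        for i in range(n)
    ]


# Two hypothetical experts comparing two attributes.
expert_1 = [[1.0, 2.0], [0.5, 1.0]]
expert_2 = [[1.0, 8.0], [0.125, 1.0]]
group = aggregate_gm([expert_1, expert_2])
```

A useful property of this aggregation is that the group matrix remains reciprocal: in the example, the two off-diagonal entries are 4 and 0.25.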

Subsequently, the weighted normalized decision matrices were obtained as shown in Tables 18 and 20 using AHP–GM and AHP–PA, respectively.

The PIS and NIS (based on Table 18) were obtained as follows.

Consequently, the Euclidean distances from PIS \((S_{i}^{+})\) and NIS \((S_{i}^{-})\) were computed and finally, the relative closeness to PIS \((\theta _{i})\) was determined as shown in Table 19.

Among three alternatives, the system \(\hbox {S}_{1}\) which is a Scanning touch probe, has highest \(\theta _{i}\), and thus, it can be reported as the best system. Hence, the three systems can be ranked as follows.

The PIS and NIS (based on Table 20) were obtained as follows.

The Euclidean distances from PIS \((S_{i}^{+})\) and NIS \((S_{i}^{-})\) were computed and finally, the relative closeness to PIS \((\theta _{i})\) was determined as shown in Table 21.

Among three alternatives, the system \(\hbox {S}_{1}\) which is a Scanning touch probe has highest \(\theta _{i}\), and thus, it can be reported as the best system. Hence, the three systems can be ranked as follows:

Entropy–TOPSIS

The amalgamation of Entropy–TOPSIS scheme is influenced by the approach of Li et al. (2011), who applied entropy weight method and TOPSIS method in the safety evaluation of coal mines. In this approach, the weights of criteria were computed using the entropy weight method, while three digitization systems were evaluated using TOPSIS. The computation of the entropy method initiated with the computation of the weighted normalized decision matrix as shown in Table 22.

After standardization of the different attributes, the entropy \(H_{j}\) and the weights were computed for the different criteria, as shown in Table 23.
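The entropy weighting can be sketched as follows, assuming the widely used form of the method: each column is standardized to proportions \(p_{ij}\), the entropy is \(H_{j} = -k\sum _{i} p_{ij}\ln p_{ij}\) with \(k = 1/\ln m\), and the weights are proportional to \(1 - H_{j}\). The exact standardization used for Table 22 may differ, and the function name and data are hypothetical.

```python
import math


def entropy_weights(X):
    """Return (entropies H_j, weights w_j) for a positive decision matrix X.

    X: rows = alternatives (at least two), columns = criteria.
    """
    m, n = len(X), len(X[0])
    k = 1.0 / math.log(m)
    H = []
    for j in range(n):
        col_sum = sum(X[i][j] for i in range(m))
        p = [X[i][j] / col_sum for i in range(m)]
        H.append(-k * sum(v * math.log(v) for v in p if v > 0))
    # Criteria with more dispersion (lower entropy) receive larger weights.
    d = [1.0 - h for h in H]
    total = sum(d)
    return H, [v / total for v in d]


# Hypothetical data: the first criterion is identical for all alternatives,
# so it carries no information and receives (almost exactly) zero weight.
H_demo, w_demo = entropy_weights([[1.0, 1.0], [1.0, 2.0], [1.0, 3.0]])
```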

Once the entropy weights were obtained, the TOPSIS which commenced with the normalization of decision matrix was applied. The computed values of the weighted normalized decision matrix are shown in Table 24.

To compute PIS and NIS, the different attributes have to be categorized as benefit and cost attributes.

The Euclidean distances from PIS \((S_{i}^{+})\) and NIS \((S_{i}^{-})\) were computed to obtain relative closeness with PIS \((\theta _{i})\) as shown in Table 25.

Among three alternatives, the system \(\hbox {S}_{1}\) which is a Scanning touch probe, has highest \(\theta _{i}\), and thus, it can be reported as the best system. Hence, the three systems can be ranked as follows:

GEM–ELECTRE

This approach is a consolidation of the GEM and ELECTRE methods. In this method, the weights computed by GEM were used as input to the ELECTRE method.

ELECTRE commenced with the development of the weighted normalized matrix as shown in Table 26.

Consequently, the concordance and discordance sets were defined as shown in Tables 27 and 28 respectively. A concordance set signifies that one alternative is better than other alternative in terms of sum of weights. A discordance set is computed by the absolute difference of the alternative pair divided by the maximum difference over all pairs.

The intersection of the concordance and discordance sets resulted in the aggregate matrix, which was calculated as shown in Table 29.

Among the three alternatives, S1 (Scanning touch probe) was identified as the best alternative.
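The concordance, discordance and aggregate steps can be illustrated with the following sketch. It assumes the textbook ELECTRE I formulation: the concordance index of a pair is the sum of the weights of the criteria on which the first alternative is at least as good, the discordance index is the largest shortfall of the first alternative divided by the largest absolute difference between the pair, and the aggregate matrix marks pairs whose concordance is at least the average concordance and whose discordance is at most the average discordance. The function name `electre_aggregate`, the thresholds and the sample data are assumptions and may differ from the computation behind Tables 26 to 29.

```python
def electre_aggregate(V, weights, benefit):
    """Return (C, D, E) for a weighted normalized matrix V (rows = alternatives).

    C: concordance matrix, D: discordance matrix, E: aggregate dominance
    matrix, where E[a][b] == 1 means alternative a outranks alternative b.
    """
    n = len(V)
    # Flip the sign of cost criteria so that larger is better in every column.
    U = [[v if b else -v for v, b in zip(row, benefit)] for row in V]
    C = [[0.0] * n for _ in range(n)]
    D = [[0.0] * n for _ in range(n)]
    for a in range(n):
        for b in range(n):
            if a == b:
                continue
            diff = [ua - ub for ua, ub in zip(U[a], U[b])]
            C[a][b] = sum(w for w, d in zip(weights, diff) if d >= 0)
            largest = max(abs(d) for d in diff)
            worst = max((-d for d in diff if d < 0), default=0.0)
            D[a][b] = worst / largest if largest > 0 else 0.0
    pairs = [(a, b) for a in range(n) for b in range(n) if a != b]
    c_bar = sum(C[a][b] for a, b in pairs) / len(pairs)   # average concordance
    d_bar = sum(D[a][b] for a, b in pairs) / len(pairs)   # average discordance
    E = [[0] * n for _ in range(n)]
    for a, b in pairs:
        if C[a][b] >= c_bar and D[a][b] <= d_bar:
            E[a][b] = 1
    return C, D, E


# Hypothetical weighted normalized matrix: criterion 1 benefit, criterion 2 cost.
C, D, E = electre_aggregate(
    [[0.5, 0.1], [0.3, 0.2], [0.1, 0.3]], [0.6, 0.4], [True, False]
)
print(E)
```

In this formulation an alternative whose column in the aggregate matrix contains only zeros is not outranked by any other alternative, which is how a single best choice is read off without a complete ordering.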

AHP–ELECTRE

This approach was a fusion of the AHP and ELECTRE methods. The weights obtained using AHP were fed to the ELECTRE method, which then generated the weighted normalized decision matrix shown in Table 30.

Then, the concordance and discordance matrices were produced as shown in Tables 31 and 32 respectively.

The intersection of the concordance and discordance sets resulted in the aggregate set, which was calculated as shown in Table 33.

S1 (Scanning touch probe) was obtained as the best choice among the three alternatives.

Entropy–ELECTRE

This combination involved the development of a weighted normalized decision matrix (Table 34) using the weights computed by the entropy weight method.

Next, the ELECTRE method required the evaluation of two indices, the concordance (Table 35) and the discordance (Table 36) indices, defined for each pair of alternatives.

The intersection of the concordance and discordance sets provided the aggregate set as shown in Table 37.

Among the three alternatives, S1 (Scanning touch probe) was identified as the best alternative.

SAW

SAW utilizes a linear combination of weighted criteria to compute the overall final score of each alternative. In this method, the cumulative evaluation of each alternative is performed and the alternatives are then ranked according to their final scores. The weights calculated using GEM, AHP–GM, AHP–PA and Entropy were used in SAW to identify the best alternative. The final scores computed for GEM–SAW, AHP (GM)–SAW, AHP (PA)–SAW and Entropy–SAW are presented in Table 38.
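The weighted linear combination used by SAW can be sketched as follows. The sketch assumes the common linear scale transformation (value divided by the column maximum for benefit criteria, column minimum divided by value for cost criteria); the function names `saw_scores` and `rank` and the sample data are hypothetical and do not reproduce Table 38.

```python
def saw_scores(matrix, weights, benefit):
    """Return the SAW score of each alternative (row) of a positive matrix."""
    cols = list(zip(*matrix))
    # Reference value per criterion: maximum for benefit, minimum for cost.
    ref = [max(c) if b else min(c) for c, b in zip(cols, benefit)]
    scores = []
    for row in matrix:
        normalized = [x / r if b else r / x for x, r, b in zip(row, ref, benefit)]
        scores.append(sum(w * v for w, v in zip(weights, normalized)))
    return scores


def rank(scores):
    """Return alternative indices ordered from best (highest score) to worst."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)


# Hypothetical data: criterion 1 is a benefit, criterion 2 is a cost.
scores = saw_scores([[4, 10], [2, 20]], [0.5, 0.5], [True, False])
print(scores, rank(scores))
```

Running the same function with each weight vector (GEM, AHP–GM, AHP–PA, Entropy) and comparing the resulting orderings is one simple way to check whether the ranking is sensitive to the weighting method.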

In each of the four combinations, the same ranking order was obtained. Based on the final scores, the three systems were ranked as follows.

Comparative analysis

Accuracy is an important parameter in the selection of digitization systems. As shown in Fig. 9, the lowest error was obtained with the scanning touch probe system, whereas the portable arm CMM resulted in the highest error. It is also interesting to note that the RMS values of deviation from the two RE software packages differed after processing identical point cloud data. For example, the RMS value of deviation was \(9.51\,\upmu \hbox {m}\) in the case of RE software-I and \(12.21\,\upmu \hbox {m}\) in the case of RE software-II, i.e., a difference of less than \(3\,\upmu \hbox {m}\). A difference of \(10\,\upmu \hbox {m}\) was registered for the data from the laser line scanning probe of the portable arm CMM. This might be due to a number of factors, such as different modes of operation during point cleaning, surface segmentation and surface generation in the two processing software packages.

The cost of the systems is also an important consideration when choosing a system for a given application. Among the three systems, the portable arm CMM was found to be the cheapest, whereas the laser line scanning probe was the most expensive. Besides the accuracy and cost of the digitizers, the scanning time is another important performance measure when comparing different methods of part geometry digitization, since it is an essential component of the total time taken by any RE process. The scanning touch probe system of the stationary CMM takes the longest time, while the laser line scanning probe mounted on the portable arm CMM digitizes the same geometry in the shortest time. The laser line scanning probe on the stationary CMM takes almost double the time taken by the portable arm CMM.

It can also be pointed out that the largest deviations were observed in the CAD model generated from the point cloud data captured with the laser line scanning probe of the portable arm CMM. The CAD model obtained from the point cloud data measured by the scanning touch probe of the stationary CMM had the least deviation. The data acquired with the laser line scanning probe mounted on the stationary CMM gave better results than the data from the laser line scanning probe mounted on the portable arm CMM. Notice that the largest deviations were traced at points such as P1, P5, P6, P7 and P8. The points with larger deviations either lay on the vertical wall of the surface or were located at a curvature, radius or sharp corner, as shown in Fig. 7. The larger deviations at these points might have been caused by digitization issues such as poor reflection (including total reflection and multiple inner reflection), occlusion and inaccessibility of the features. The lowest deviations were observed at points P9, P16, P18 and P19, which lay on open surfaces, suggesting ideal reflection, high accessibility and no occlusion at these points.

The laser line scanning probe with the stationary CMM worked in semi-automatic mode, which reduced human intervention compared to the laser line scanning probe on the portable arm CMM, where the operation was completely manual. Therefore, the mode of operation affected the accuracy of the final CAD model. The devices mounted on the portable arm CMM, being rather bulky, caused operator fatigue. The working volume of portable arm CMM mounted devices is limited by the reach of the arm, but because the arm is portable it can be used for scanning even very large parts. The scanning touch probe and the laser line scanning probe attached to a portable arm CMM could access every corner of the part, whereas the laser line scanning probe incorporated into a stationary CMM had limited accessibility due to its limited scanning range and the obvious danger of collision with the part. In the laser line scanning probe mounted on the portable arm CMM, the exposure time was selected automatically according to the lighting conditions and reflectivity. In contrast, for the laser line scanning probe of the stationary CMM, a number of parameters such as shutter time, measuring field and laser power had to be adjusted simultaneously, which led to point cloud data with the least noise. Although selecting several parameters may be a time consuming and tedious task, it is a desirable option that invariably leads to optimal data acquisition through control over parameters such as laser power, shutter time, measuring field and threshold of reflection.

The combinations of MCDM methods adopted in this work can be regarded as rational, feasible and systematic. They can successfully be applied in the selection of digitization systems for RE applications. However, given the large number of existing MCDM techniques, each with its benefits and limitations, it becomes necessary to select the appropriate method. Different MCDM techniques demand varying levels of effort and provide different results. Therefore, the choice of the MCDM method significantly impacts the quality of the decision as well as the magnitude of the effort needed. As shown in Table 39, different MCDM methods can rank the same alternatives in a different sequence. The methods also differ in difficulty and in computational requirements. Some techniques are good for large sized problems, while others are more suited to small scale problems.

For the purpose of comparative analysis, the different methods were rated for difficulty on a scale of 1 to 10, with 10 representing the most difficult method and 1 the easiest. These ratings may vary depending on the user’s understanding and the means of execution. The AHP–ELECTRE technique was the most demanding, whereas GEM–SAW was the simplest among all the approaches used. Notice that some techniques are best suited to medium sized problems, while others are more effective and efficient in large sized problems. Problems involving more than 15 criteria were regarded as large sized, whereas small sized problems consisted of fewer than 10 criteria. A comprehensive list of benefits and limitations of the different MCDM methods is presented in Table 39. It can also be noted that ELECTRE does not provide an ordering of the alternatives; instead, it gives the best choice. Among several solutions, a choice that is reproduced by several techniques can be considered an ideal solution. Therefore, S1, the scanning touch probe mounted on the stationary CMM, can be adopted as the best choice. The primary reason for its selection is its high accuracy. Moreover, if a simple and a complex method provide similar rankings, the simpler method should be used because it saves computation time as well as effort.

In order to measure the level of ranking agreement between different MCDM methods, Kendall’s coefficient of concordance (Kendall’s W) (Habibi et al. 2014; Schmidt et al. 2001) was computed as follows. W ranges from 0 to 1, with 1 indicating the perfect match between the results obtained by different methods.

where \(r_{ij} =\) rating that MCDM method i gives to alternative \(j, m =\) number of MCDM methods and \(k =\) number of alternatives.

For this digitization selection problem, W was obtained as 0.6461, which indicates some level of agreement between the different methods.
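A minimal sketch of the computation of Kendall’s W is given below. It assumes the standard form without tie correction, \(W = 12S/\left( m^{2}(k^{3}-k)\right) \), where \(S=\sum _{j}(R_{j}-\bar{R})^{2}\), \(R_{j}=\sum _{i} r_{ij}\) is the rank sum of alternative j and \(\bar{R}\) is the mean of the rank sums. The function name `kendalls_w` and the sample rankings are hypothetical; the rankings behind the value 0.6461 are not reproduced here.

```python
def kendalls_w(ranks):
    """Kendall's coefficient of concordance for untied rankings.

    ranks[i][j] is the rank that method i assigns to alternative j
    (1 = best). Assumes every method ranks every alternative without ties.
    """
    m = len(ranks)                      # number of MCDM methods
    k = len(ranks[0])                   # number of alternatives
    rank_sums = [sum(col) for col in zip(*ranks)]
    mean_sum = sum(rank_sums) / k
    s = sum((r - mean_sum) ** 2 for r in rank_sums)
    return 12.0 * s / (m ** 2 * (k ** 3 - k))


# Hypothetical rankings of three alternatives by three methods.
print(kendalls_w([[1, 2, 3], [1, 3, 2], [2, 1, 3]]))
```

Identical rankings from all methods give W = 1, while rankings that cancel each other out give W = 0, so intermediate values can be read as partial agreement.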

Conclusion and future works

The major contribution of this study is the performance evaluation of contact and non-contact digitization devices mounted on a stationary and a portable arm CMM, as well as the application of different MCDM methods to find the best alternative. The goal is to present this information in a form from which a user can gain sufficient knowledge of the different digitization systems as well as the MCDM approaches.

This work has identified the strengths and weaknesses of different digitization systems and their specific applications, and has provided an indication of the level of error (deviation), which the authors believe will be of interest to engineers involved in different RE applications. This work also identifies opportunities for MCDM methods in the selection of digitization systems in RE.

The following conclusions can be drawn based on the comparative analysis of different digitization systems.

- The lowest RMS deviation (10–12 \(\upmu \hbox {m}\)) was obtained from the point cloud measured by the scanning touch probe of the stationary CMM. The highest value of deviation at any point on the mold did not exceed 28 \(\upmu \hbox {m}\). This suggests that a surface tolerance in the range of 30 \(\upmu \hbox {m}\) can be satisfied using a bridge type CMM with a scanning touch probe.

- The highest RMS deviation (51–61 \(\upmu \hbox {m}\)) was obtained from the point cloud data measured by the laser line scanning probe attached to the portable flexible arm CMM. The highest value of deviation at any point on the mold did not exceed 148 \(\upmu \hbox {m}\). This suggests that a surface tolerance in the range of 150 \(\upmu \hbox {m}\) can be satisfied using this probe.

- The shortest scanning time of 15 min was required for capturing the point cloud data using the laser line scanning probe attached to the portable flexible arm CMM, and the longest scanning time of 188 min was taken when capturing the surface geometry using the scanning touch probe of the stationary CMM.

- There was some difference in the RMS values of deviation obtained from the two RE software packages. For example, the RMS value of deviation was 9.51 \(\upmu \hbox {m}\) in the case of RE software-I and 12.21 \(\upmu \hbox {m}\) in the case of RE software-II, i.e., a difference of less than 3 \(\upmu \hbox {m}\). This might be due to a number of factors, such as a slightly different mode of operation during point cleaning, surface segmentation and surface generation in the two processing software packages. Although the two packages may follow similar steps, the implementation of these steps is slightly different in each.

- The laser line scanning probe of the stationary CMM operated in semi-automatic mode with much less human involvement than the portable flexible arm CMM. Therefore, the point cloud data captured by the laser line scanning probe of the stationary CMM had better quality and a more uniform distribution of points than the data from the laser line scanning probe of the portable flexible arm CMM, where the point cloud had a larger spread and was contaminated with noise and outliers. Hence, the consistent point set, low noise and smaller number of outliers in the data captured by the laser line probe of the stationary CMM were responsible for the almost zero difference between the results from the two RE software packages.

Several methods have been proposed for solving MCDM problems. The analysis of MCDM methods performed in this paper provides a guide for the selection of an MCDM method in a particular situation. Hence, the following inferences can be made with regard to the selection of a digitization system.

- A major criticism of MCDM is that different techniques may yield different results when applied to the same problem. A decision maker should look for a solution that is closest to the ideal. Therefore, a solution which is repeated by several MCDM techniques can be chosen as the ideal solution.

- This work does not suggest that any one MCDM method is better than the others, but it highlights the significance of evaluating different decision-making techniques and finding the most appropriate method for the given application.

- The results of the different methods may not be equal. This can be attributed to the different solution algorithms and to the different weights and their distributions.

- The choice of a particular MCDM method influences the quality of the decision and the level of effort required. Different methods exhibit varying difficulty levels and different computational requirements.

- It would be rational to use one of the simplest methods. However, to check for consistency and increase the reliability of the results, the application of several methods is encouraged.

- Some techniques are good for large sized problems, while others are more suited to small scale problems.

- In the present study, the AHP–ELECTRE technique was the most exhaustive, whereas GEM–SAW was the simplest among all the approaches used.

-