Abstract

Machining state monitoring is an important subject in intelligent manufacturing. Feature construction is widely regarded as the most critical procedure in a signal-based monitoring system and has attracted considerable research interest. Traditional manual feature construction is skill intensive, and its performance cannot be guaranteed. This paper presents an automatic feature construction method that reveals the inherent relationship between the input vibration signals and the output machining states (idling moving, stable cutting and chatter) in a principled mathematical way. First, a large signal set is prepared through a series of machining experiments followed by the necessary preprocessing. A deep belief network is then trained on the signal set to construct features automatically using a two-step training procedure: unsupervised greedy layer-wise pre-training and supervised fine-tuning. The automatically extracted features capture the connection between the vibration signal and the machining states. With these features, even a linear classifier achieves nearly 100% modeling accuracy, good generalization performance and good repeatability on a large, well-prepared signal set. For online application, a voting strategy that takes the machining history into account is introduced to smooth the predicted states and make the final state identification, which improves detection reliability. Experiments show that the proposed method is effective in protecting the workpiece from serious chatter damage.


Introduction

Machining is the most widely used processing method in product manufacturing. Under the pressure of labor costs and market demand, machining automation, also called unmanned manufacturing, has become the most promising way to balance economic cost against production (Altintas 2012). Effective automatic machining state monitoring is essential for protecting the machine tool, revealing equipment failures in time and reducing the risk of breakdown (Abellan-Nebot and Subirón 2010; Quintana et al. 2011; Siddhpura and Paurobally 2012; Teti et al. 2010). Thanks to advances in sensor technology, abundant information about the machining state can be obtained during machining with suitable sensors, such as accelerometers, dynamometers and microphones, which makes it possible to monitor the machining state from real-time measured signals (Aydin et al. 2015; Quintana et al. 2011). As the market develops, many sensors are becoming standard components of newly produced machine tools (Siddhpura and Paurobally 2013). Monitoring machining states from real-time measured vibration signals is therefore a very promising approach.

Over the past decades, researchers have proposed many machining state detection methods based on real-time measured vibration signals (Kuljanic et al. 2009; Lamraoui et al. 2014; Quintana et al. 2011; Siddhpura and Paurobally 2012; Tangjitsitcharoen 2011; Tangjitsitcharoen et al. 2015; Teti et al. 2010; Zhang et al. 2013). All of these methods consist of three steps: signal pretreatment, feature extraction and state identification. Feature extraction, which constructs a number of intermediate variables from the complex measured vibration signals for identifying machining states, is considered the most critical part of a state monitoring method (Sick 2002). The performance of a state monitoring model depends greatly on whether the features capture the critical properties of the monitored machining states (Quintana et al. 2011).

Many contributions have discussed the best features for machining state identification. Kuljanic et al. (2008, 2009) extracted statistical parameters from a wavelet decomposition in their multisensor chatter detection system. Schmitz (2003) found that statistical features constructed by once-per-revolution sampling could be used to identify chatter. Liu et al. (2011) introduced energy and kurtosis parameters from empirical mode decomposition in their state detection method. Tangjitsitcharoen and Pongsathornwiwat (2013) used the average variance of the cutting force signal to identify whether the machining state is stable. These signal features can be roughly divided into three categories (Siddhpura and Paurobally 2013; Teti et al. 2010): statistics of time-domain signals, such as average value, magnitude, effective value, root mean square, skewness, kurtosis and power; statistics with frequency-domain preprocessing, such as the Fourier spectrum, signal power in specified frequency ranges and spectrum bandwidth; and statistics with time-frequency preprocessing, such as wavelet features and Hilbert-Huang features.

Generally speaking, feature extraction is a skilled job that relies heavily on experience. There are so many combinations of statistics and signal processing methods that it is hardly possible to cover all candidate features and find the best one manually. The quality of the features depends entirely on human ingenuity and prior knowledge, which makes it unstable and unreliable. In addition, the threshold, a critical parameter in these detection methods, is usually set empirically, so the performance of the method cannot be guaranteed. This is why there is still no firm conclusion about which feature is best for the machining state monitoring task. Moreover, because every machine tool has its own characteristics and working conditions, it is quite common for a detection system to work well in one condition but fail in another. Teti et al. (2010) pointed out that only about 15% of the published papers reasonably explained why their features were selected, while most features were simply claimed to be appropriate. A method that constructs features automatically from the measured vibration signal and the objective of the monitoring task, without much manual intervention, may be a way to achieve more stable and better-justified performance.

Feature learning, also called representation learning, is a recently developed machine learning approach motivated by the success of deep learning in image (Bengio 2009) and speech (Mohamed et al. 2012) processing tasks. By imitating the hierarchical way in which the brain processes information (Hubel and Wiesel 1962) through a special network structure and training algorithm, feature learning methods can automatically reveal the inner connections and underlying explanatory factors between the input and the output, and construct features that represent this complex relationship (Bengio 2013; Bengio et al. 2013; Goodfellow et al. 2014). As long as the training data set is well prepared, the extracted feature set is, in the mathematical sense, the best representation for the modeling task (Bengio et al. 2013). Feature learning has achieved major breakthroughs in computer vision, natural speech processing, “Image To Text” tasks and other areas (LeCun et al. 2015). However, according to the author’s literature survey, no contributions have yet applied automatic feature learning to machining state monitoring. Given the similarity between vibration signals and sound waves, introducing feature learning to this task looks very promising.

In this paper, a deep belief network, a kind of feature learning method, is introduced to extract features automatically from the measured vibration signal, replacing the controversial manual feature extraction step. These features are then used to monitor the different machining states, including idling moving, stable cutting and chatter. In addition, a voting strategy is introduced in the final state identification step to handle the chaotic interference in the development stage of chatter (Hinton 2010). Comparisons with methods based on manual features are discussed, and experimental results show that the presented method can protect the workpiece from chatter damage, which is one of the critical objectives of machining state monitoring.

Automatic feature learning using deep belief networks

A brief introduction of automatic feature learning

The performance of a modeling method depends heavily on the choice of data representation, usually called the features. As long as the features capture the critical connection between the raw data and the modeling task, even a simple linear classifier, such as a softmax classifier, can easily achieve very good performance (LeCun et al. 2015). Traditional feature engineering for machining state monitoring relies on human knowledge and ingenuity to construct a data representation that reduces the complexity of the modeling task, using statistics and signal preprocessing methods from the time domain, the frequency domain or the time-frequency domain. This work is usually skill intensive, and its performance cannot be guaranteed. Inspired by the breakthroughs in speech and image recognition, the concept of “feature learning” or “representation learning” (Bengio 2013; Bengio et al. 2013; Goodfellow et al. 2014) was created with the aim of learning a data representation that extracts useful information and makes the modeling work much easier. Feature learning has since become an important research field in the machine learning community (Bengio et al. 2013).

Deep learning, proposed by Hinton and Salakhutdinov (2006), is the most important feature learning strategy. It applies a deep structure to extract hierarchical features and constructs a highly abstract data representation through multiple nonlinear transformations. Highly abstract features are generally invariant to most local changes in the input signals and can be connected much more easily to the machining state objective.

A common deep learning method for supervised feature learning is the deep belief network (DBN). A DBN is a hierarchical model constructed by stacking a number of restricted Boltzmann machines (RBMs). By combining the stacked RBMs with a classifier, a DBN can be used as a discriminative model for classification applications such as machining state monitoring. The top classifier layer evaluates how well the current features represent the relationship between the input signals and the output machining states and provides the direction for optimization.

Figure 1 illustrates a typical discriminative DBN model (Bengio 2009; Hinton 2010; Hinton and Salakhutdinov 2006). It is composed of two parts: a feature constructor made of a series of stacked RBMs and a linear classifier on top. The output of the last RBM (for example, L5) is the highest-level feature abstraction, and it is used as the data representation in place of the original signal in the subsequent modeling task.

Learning algorithm of deep belief networks

Training a restricted Boltzmann machine

The restricted Boltzmann machine (RBM), the most important component of the DBN model, is a generative stochastic artificial neural network based on statistical mechanics (Hinton 2010) that can learn the probability distribution represented by the training signals. It is composed of two layers of stochastic units, a visible layer and a hidden layer, as illustrated in Fig. 2a. Every visible unit is connected to every hidden unit and vice versa, but there are no connections within a layer. With binary units for both the visible and hidden layers, the network energy can be defined as
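
In the standard form for a binary RBM, writing \(a_{j}\) and \(b_{i}\) for the visible and hidden biases (symbol names assumed here, since the text does not name them), the energy reads

\[
E(\mathbf{v},\mathbf{h}) = -\sum_{i=1}^{n_H}\sum_{j=1}^{n_V} \omega_{ij}\, h_{i}\, v_{j} \;-\; \sum_{j=1}^{n_V} a_{j} v_{j} \;-\; \sum_{i=1}^{n_H} b_{i} h_{i} \tag{1}
\]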

where \(n_V\) and \(n_H\) are the numbers of visible and hidden units, respectively, \(v_{j}\) and \(h_{i}\) are the values of the j-th visible unit and the i-th hidden unit, and \(\omega _{ij}\) is the connection weight between the i-th hidden unit and the j-th visible unit. According to statistical mechanics, the joint probability distribution can be defined as
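
With \(Z\) denoting the partition function (a symbol assumed here), the standard Boltzmann form is

\[
P(\mathbf{v},\mathbf{h}) = \frac{e^{-E(\mathbf{v},\mathbf{h})}}{Z}, \qquad Z = \sum_{\mathbf{v},\mathbf{h}} e^{-E(\mathbf{v},\mathbf{h})}, \qquad P(\mathbf{v}) = \sum_{\mathbf{h}} P(\mathbf{v},\mathbf{h}) \tag{2}
\]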

The activation probabilities of any visible or hidden unit can then be derived as
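
For a binary RBM with visible biases \(a_{j}\) and hidden biases \(b_{i}\) (assumed symbol names), these take the standard logistic form, with \(\sigma(x) = 1/(1+e^{-x})\):

\[
P(h_{i}=1 \mid \mathbf{v}) = \sigma\Big(b_{i} + \sum_{j=1}^{n_V} \omega_{ij} v_{j}\Big), \qquad
P(v_{j}=1 \mid \mathbf{h}) = \sigma\Big(a_{j} + \sum_{i=1}^{n_H} \omega_{ij} h_{i}\Big) \tag{3}
\]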

Training an RBM means finding the parameter configuration that maximizes the probability \(P(\mathbf{v})\). Using maximum likelihood estimation, the log-likelihood function to be maximized is
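
In standard notation, with \(\theta\) collecting the weights and biases (\(\theta\) is shorthand assumed here):

\[
\ln L(\theta) = \sum_{s=1}^{n_S} \ln P\big(\mathbf{v}^{(s)}\big) \tag{4}
\]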

where \(n_S\) is the number of training samples and \(\mathbf{v}^{(s)}\) is the input of the s-th sample. The gradient can be derived as
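
A sketch of the standard result, for any parameter \(\theta\) of the energy \(E\) (with \(\theta\) assumed as shorthand for a weight or a bias): the first term is an expectation over the hidden units given each training sample, and the second is an expectation over the full model distribution.

\[
\frac{\partial \ln L}{\partial \theta} = \sum_{s=1}^{n_S} \left[ -\sum_{\mathbf{h}} P\big(\mathbf{h} \mid \mathbf{v}^{(s)}\big) \frac{\partial E\big(\mathbf{v}^{(s)},\mathbf{h}\big)}{\partial \theta} \;+\; \sum_{\mathbf{v},\mathbf{h}} P(\mathbf{v},\mathbf{h}) \frac{\partial E(\mathbf{v},\mathbf{h})}{\partial \theta} \right] \tag{5}
\]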

The first term in Eq. (5) is easy to obtain, whereas calculating the second term requires traversing all possible value combinations of the visible and hidden units, which is an NP-hard problem with no efficient exact solution. Hinton (2002) proposed an approximation called the contrastive divergence algorithm, which uses Gibbs sampling and is based on the Markov chain Monte Carlo strategy (Bengio 2009). The updating rules for the network parameters are then obtained as
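
In the usual CD-k form, assuming that the gradient is averaged over the \(n_S\) samples and writing \(a_{j}\), \(b_{i}\) for the visible and hidden biases (both assumptions, not confirmed by the text), the updates can be written as

\[
\begin{aligned}
\Delta \omega_{ij} &= \frac{\alpha}{n_S} \sum_{s=1}^{n_S} \Big[ P\big(h_{i}=1 \mid \mathbf{v}^{(s,0)}\big)\, v_{j}^{(s,0)} - P\big(h_{i}=1 \mid \mathbf{v}^{(s,k)}\big)\, v_{j}^{(s,k)} \Big] \\
\Delta a_{j} &= \frac{\alpha}{n_S} \sum_{s=1}^{n_S} \Big[ v_{j}^{(s,0)} - v_{j}^{(s,k)} \Big] \\
\Delta b_{i} &= \frac{\alpha}{n_S} \sum_{s=1}^{n_S} \Big[ P\big(h_{i}=1 \mid \mathbf{v}^{(s,0)}\big) - P\big(h_{i}=1 \mid \mathbf{v}^{(s,k)}\big) \Big]
\end{aligned} \tag{6}
\]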

where k is the number of Gibbs sampling steps used in the contrastive divergence algorithm, the notation \({\mathbf{v}}^{(s,k)}_{\cdot }\) indicates the result of the s-th sample after k steps of Gibbs sampling, and \(\alpha \) is the learning rate. The batch gradient ascent algorithm can be used to train the network. The parameters used in this paper are k = 1 and \(\alpha =0.1\), with momentum (Hinton 2010) ranging from 0.5 to 0.9 and a weight decay (Hinton 2010) of 0.0002 applied to the weight gradient. The network weights are initialized to random values drawn from a zero-mean Gaussian with a standard deviation of 0.1, as recommended by Hinton (2002, 2010).
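
The training step described above can be sketched in NumPy as follows. This is a minimal illustration rather than the authors' implementation: the class name `BinaryRBM`, the bias names `a` and `b`, the fixed momentum value and the use of reconstruction probabilities (instead of sampled visible states) are all assumptions. The learning rate, weight decay and Gaussian initialization follow the values quoted in the text.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class BinaryRBM:
    """Binary RBM trained with k-step contrastive divergence (illustrative)."""

    def __init__(self, n_visible, n_hidden, seed=0):
        self.rng = np.random.default_rng(seed)
        # Zero-mean Gaussian weights with standard deviation 0.1 (from the text).
        self.W = self.rng.normal(0.0, 0.1, size=(n_hidden, n_visible))
        self.a = np.zeros(n_visible)  # visible biases (assumed name)
        self.b = np.zeros(n_hidden)   # hidden biases (assumed name)
        self.vel_W = np.zeros_like(self.W)
        self.vel_a = np.zeros_like(self.a)
        self.vel_b = np.zeros_like(self.b)

    def hidden_prob(self, v):
        return sigmoid(v @ self.W.T + self.b)

    def visible_prob(self, h):
        return sigmoid(h @ self.W + self.a)

    def cd_update(self, v0, k=1, lr=0.1, momentum=0.5, weight_decay=0.0002):
        """Apply one batch update and return the mean squared reconstruction error."""
        ph0 = self.hidden_prob(v0)
        vk, phk = v0, ph0
        for _ in range(k):
            # Sample binary hidden states, then reconstruct with probabilities.
            h = (self.rng.random(phk.shape) < phk).astype(float)
            vk = self.visible_prob(h)
            phk = self.hidden_prob(vk)
        n = v0.shape[0]
        # Data statistics minus reconstruction statistics, with weight decay.
        grad_W = (ph0.T @ v0 - phk.T @ vk) / n - weight_decay * self.W
        grad_a = (v0 - vk).mean(axis=0)
        grad_b = (ph0 - phk).mean(axis=0)
        # Gradient ascent with momentum.
        self.vel_W = momentum * self.vel_W + lr * grad_W
        self.vel_a = momentum * self.vel_a + lr * grad_a
        self.vel_b = momentum * self.vel_b + lr * grad_b
        self.W += self.vel_W
        self.a += self.vel_a
        self.b += self.vel_b
        return float(np.mean((v0 - vk) ** 2))
```

Calling `cd_update` repeatedly on a batch of frames scaled to [0, 1] performs batch training; raising `momentum` toward 0.9 in later epochs would mimic the 0.5 to 0.9 range mentioned above, although the exact schedule is not given in the text.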

Training deep belief networks

A discriminative deep belief network is composed of a series of stacked restricted Boltzmann machines with a linear classifier, usually a softmax classifier, on top. Training a deep belief network takes two steps: first, unsupervised pre-training of the stacked RBMs using a large number of unlabelled signal frames; second, supervised fine-tuning on a small set of well-labelled samples using the back-propagation algorithm (Hinton 2010).

This procedure resembles the way the brain learns things without any prior knowledge (Hubel and Wiesel 1962). For example, before learning the concept of “cat”, you may have seen many different animals in life or in pictures, such as dogs, cats, pigs and mice, and have learnt many features of these animals, such as mouth, nose, legs and hair, without knowing whether the animals belong to different categories. This corresponds to the unsupervised learning step in DBN training. One day, you are shown several pictures labelled “cat”, and the brain begins to combine the learned experience with the labelled pictures, calibrating the characteristics that distinguish a cat from other animals. After learning about “cat”, you can go on to write down general characteristics that tell others what a cat is, which is what we learn from books. This corresponds to the fine-tuning step in DBN training.

Figure 2b illustrates how the DBN is trained. In the unsupervised pre-training procedure, the first RBM is trained on the raw signal samples. Once the first RBM has been trained, its output is used as the input of the next RBM, and the connection weights of the previous RBM are kept unchanged. This procedure continues until the last RBM. After all the RBMs have been trained, the fine-tuning step uses the back-propagation algorithm to slightly adjust all weights on a small set of well-labelled signal samples. A more detailed theoretical derivation can be found in Bengio (2009).
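
The greedy layer-wise pre-training loop can be sketched as below. The helper names (`train_rbm_cd1`, `pretrain_stack`, `extract_features`) are hypothetical, the per-layer trainer is a deliberately simplified CD-1 without momentum or weight decay, and the supervised fine-tuning step is not shown.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def train_rbm_cd1(data, n_hidden, epochs, lr, rng):
    """Train one binary RBM with simplified CD-1; return (W, hidden_bias)."""
    n_visible = data.shape[1]
    W = rng.normal(0.0, 0.1, size=(n_visible, n_hidden))
    a = np.zeros(n_visible)  # visible biases, only needed during training
    b = np.zeros(n_hidden)   # hidden biases
    for _ in range(epochs):
        ph0 = sigmoid(data @ W + b)
        h0 = (rng.random(ph0.shape) < ph0).astype(float)
        v1 = sigmoid(h0 @ W.T + a)
        ph1 = sigmoid(v1 @ W + b)
        W += lr * (data.T @ ph0 - v1.T @ ph1) / len(data)
        a += lr * (data - v1).mean(axis=0)
        b += lr * (ph0 - ph1).mean(axis=0)
    return W, b


def pretrain_stack(data, hidden_sizes, epochs=20, lr=0.1, seed=0):
    """Train RBMs one after another; each is frozen before the next starts."""
    rng = np.random.default_rng(seed)
    layers = []
    layer_input = data
    for n_hidden in hidden_sizes:
        W, b = train_rbm_cd1(layer_input, n_hidden, epochs, lr, rng)
        layers.append((W, b))
        # The hidden activations of this RBM become the next RBM's input.
        layer_input = sigmoid(layer_input @ W + b)
    return layers


def extract_features(data, layers):
    """Propagate frames through the stacked layers to get the top features."""
    out = data
    for W, b in layers:
        out = sigmoid(out @ W + b)
    return out
```

For the 256-256-256-100-2-3 network used later in the paper, `hidden_sizes` would presumably be `[256, 256, 100, 2]` on 256-point frames, with the final 3-unit softmax layer added only for fine-tuning.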

Methodology

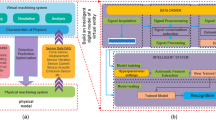

The proposed machining state monitoring method consists of the following six steps: signal acquisition, signal preparation, feature learning, classifier training, state prediction and state identification, as illustrated in Fig. 3. The function of each step is explained below:

- Step 1: Signal acquisition. Collect signals covering the different vibration states using different kinds of sensors. In this paper, four groups of vibration signals are acquired using accelerometers.

- Step 2: Signal preparation. Apply the necessary pretreatment to the signals, including windowing, selection, segmentation and normalization.

- Step 3: Feature learning. Construct features automatically from the signal samples and the objective using a feature learning model, which is a DBN in this paper.

- Step 4: Classifier training. Build the classification model on the automatically extracted features. Because the feature learning step needs the classification result to supervise the learning direction, feature learning and classifier training are usually integrated.

- Step 5: State prediction. Predict the machining state from the observed vibration signals using the trained classification model.

- Step 6: State identification. Make the final identification of the current vibration state based on the predicted state and the state history. Because the vibration always shows some chaotic behavior, especially when the machining state is changing, it is not reliable to identify the current state from the current predicted state alone. A voting strategy that takes the machining state history into account is therefore introduced to make the final identification.

Signal acquisition

To acquire vibration signals of the different machining states, a series of experiments was conducted on a TC500 Drilling and Tapping Center, which can operate at milling speeds up to 15,000 rpm. Fig. 4 illustrates the experimental setup. An accelerometer (PCB 356A15 3D 2–5k Hz ±5%) was mounted on the spindle housing to measure the real-time vibration signals. An LMS SCADAS Lab system was used to collect the signals at a sampling frequency of 20,480 Hz. Cutting experiments were conducted by milling an aluminum block in a straight line with a three-tooth end mill.

Because chatter is not a common machining state, the spindle speed, feed rate and depth of cut were all varied from low to high levels to provoke chatter. The signals for idling moving and stable cutting can be identified easily. Chatter is a resonance phenomenon (Fu et al. 2016), and its signal shows a single steep peak in the frequency domain which is much larger than the cutting frequency. In total, four groups of vibration signals containing different machining states were selected from the milling experiments; the associated machining parameters are listed in Table 1.

Signal preparation for the model training

Signal preparation, which includes segmentation and normalization, transforms the continuously measured vibration signals into a uniform data format that can be handled conveniently in the subsequent steps. Segmentation divides the continuous signal into a series of frames by shifting a sampling window of a fixed time length, as illustrated in Fig. 5a. To maintain the quality of the training samples, the signals in the state transition periods are discarded; only signals whose state can be clearly distinguished are used as training samples, as shown in Fig. 5b. In total, 47,745 frames are obtained from the four groups.

After segmentation, signal normalization is conducted. The vibration amplitude is normalized within each frame, namely

where x represents the vibration amplitude vector of a frame. The essential property of chatter is the change in the dominant vibration frequency, which appears visually as a different margin density in the vibration signal. After this normalization, the frequency information and the amplitude relationships between the different frequency components are fully preserved, while the interference from amplitude variation, which is a derived chatter phenomenon and is easily influenced by many external factors, is excluded, as illustrated in Fig. 6.
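
The two preparation steps can be sketched as follows. The exact normalization formula is not reproduced in this text, so the per-frame min-max scaling below is an assumption, chosen only because it removes the overall amplitude while keeping the waveform shape. The function names, the frame length of 256 points (inferred from the 256-unit input layer) and the shift of 128 points are likewise illustrative.

```python
import numpy as np


def segment(signal, frame_len=256, shift=128):
    """Slide a window over a 1-D signal and return the frames as rows."""
    signal = np.asarray(signal, dtype=float)
    n_frames = (len(signal) - frame_len) // shift + 1
    if n_frames <= 0:
        return np.empty((0, frame_len))
    starts = np.arange(n_frames) * shift
    return np.stack([signal[s:s + frame_len] for s in starts])


def normalize_frames(frames, eps=1e-12):
    """Scale every frame to [0, 1] on its own (ASSUMED min-max form)."""
    lo = frames.min(axis=1, keepdims=True)
    hi = frames.max(axis=1, keepdims=True)
    return (frames - lo) / (hi - lo + eps)
```

Under this assumed form, multiplying a frame by a constant gain leaves the normalized frame essentially unchanged, which matches the stated goal of excluding amplitude variation.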

After these two steps, the sample set is ready. To assess the risk of overfitting, the original sample set is randomly divided into a training set of 40,000 samples and a validation set of 7745 samples.

Automatic feature constructing and model training using deep belief networks

A DBN model with a 256-256-256-100-2-3 structure, as described in Sect. 2, is trained on the signal frame set obtained in Sect. 3.2; the fine-tuning result is displayed in Fig. 7. The plateau before epoch 6 is caused by the fine-tuning strategy, in which only the last classifier layer is updated in the first few epochs and the whole network afterwards (Hinton 2010). In only about 10 epochs, the modeling accuracy reaches 0.9999 and then slowly converges to 1. As shown later, this process converges to the same limit in every run; only the number of epochs needed differs. Another important characteristic is that the test accuracy curve closely follows the training accuracy curve, which means that the training process does not suffer from overfitting even though nothing was done specifically to guarantee the generalization performance of the model during training. The test sample set here is used only to check for overfitting.

Cutting state prediction

Once the features have been constructed and the classification model has been trained, the machining state classifier can be put to work. The real-time measured vibration signals are collected continuously using a sampling window of the same length as in the model training procedure, as illustrated in Fig. 5a. Feeding the measured vibration signal into the classifier yields the predicted machining state.

Another important issue for machining state monitoring is the time complexity of the signal processing algorithm, which directly determines the time resolution of the system. The window frame shift should be chosen according to the time resolution required by the monitoring problem and the processing speed. Once the network structure is fixed, the time complexity of predicting a state from a single signal frame is constant and can be calculated as

where \(n_{i}\) is the number of units in the i-th layer (not including the bias units), N is the number of layers in the network and T is the total number of calculations. Given the computing power of the processor, the highest time resolution of the monitoring system can be calculated before it is actually run. For the network used in this paper, the processing time is around 0.15 ms, and 0.2 ms is chosen to allow for fluctuation. Multiplying by the sampling frequency gives a minimum frame shift of 5 points, so the actual time resolution is 0.244 ms, which is small enough that the response time of the detection system is nearly negligible. After normalization, the signal frame is passed through the network to obtain the predicted cutting state.
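
The arithmetic in this paragraph can be checked with a short sketch. The operation count below uses one common convention (one multiply-accumulate per connection, plus one per bias unit); this is an assumption, since the paper's own expression for T is not reproduced here. The function names are illustrative.

```python
import math


def forward_pass_macs(layer_sizes, count_bias=True):
    """Count multiply-accumulate operations for one dense forward pass."""
    extra = 1 if count_bias else 0
    total = 0
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        total += (n_in + extra) * n_out
    return total


def min_frame_shift(processing_time_s, sampling_rate_hz):
    """Smallest whole number of samples that covers the processing time."""
    return math.ceil(processing_time_s * sampling_rate_hz)


if __name__ == "__main__":
    sizes = [256, 256, 256, 100, 2, 3]      # structure given in the text
    print(forward_pass_macs(sizes))          # operations under the assumed convention
    shift = min_frame_shift(0.2e-3, 20480)   # 0.2 ms budget at 20,480 Hz
    print(shift, shift / 20480 * 1e3)        # frame shift in points, resolution in ms
```

With the 0.2 ms budget and the 20,480 Hz sampling frequency, 0.2 ms corresponds to 4.096 samples, which rounds up to the 5-point shift and the 5 / 20,480 s ≈ 0.244 ms resolution quoted above.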

Cutting state identification using voting strategy

Many researchers have pointed out that identifying the cutting state from a single signal source or from the current signal alone is not reliable (Cho et al. 2010; Kuljanic et al. 2009; Teti et al. 2010), especially for chatter, whose development stage has a quite chaotic signal appearance (Litak et al. 2009). This uncertain signal behavior leads to confusing detection results. Unexpected external excitations, such as an impact or a hard particle in the workpiece, also influence the signal behavior. To build a timely and reliable detection system, a voting strategy is introduced that identifies the current cutting state from both the current signal behavior and several neighboring history states. Fig. 8 illustrates the voting process.

After a signal frame has been acquired and normalized, the associated predicted state is obtained by feeding the frame into the trained DBN. The process from measuring the signal frame to obtaining the predicted state is called a cycle. After each cycle, a vote is conducted by counting the occurrences of the different cutting states in the ‘voting area’, which is defined as the predicted states of the current cycle and several past cycles, as indicated by the dashed box in Fig. 8. The final state is identified according to the vote shares. An appropriate criterion for state identification is the 0.05–0.95 rule, based on the small probability hypothesis. If the vote for a state keeps increasing until it rejects the small probability hypothesis, the machining state is identified as about to change to that state. If the vote for a state keeps increasing and eventually becomes the majority, that cutting state is identified as underway. For harmful machining states, such as chatter, a warning is given as soon as the vote rejects the small probability hypothesis. For harmless machining states, such as stable cutting and idling moving, the state is identified once it has become the majority. The delay is usually less than one second, which has little influence on harmless state identification but is very important for harmful state detection.
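
A simplified sketch of this voting rule is given below. The class name `StateVoter`, the state labels and the returned strings are hypothetical. The sketch checks only the vote share against the 0.05 threshold and the majority condition; it does not test whether the vote "keeps increasing", and it divides by the full window size so that a few early predictions cannot trigger a warning before the window fills. Both simplifications are assumptions.

```python
from collections import Counter, deque

HARMFUL_STATES = {"chatter"}  # states that trigger an early warning


class StateVoter:
    """Sliding-window vote over the most recent predicted states."""

    def __init__(self, window=256, warn_fraction=0.05):
        self.window = window
        self.warn_fraction = warn_fraction
        self.votes = deque(maxlen=window)  # oldest vote drops out automatically

    def update(self, predicted_state):
        """Add one prediction and return (decision, vote_shares)."""
        self.votes.append(predicted_state)
        counts = Counter(self.votes)
        # Shares are relative to the full window (the "number of voters").
        shares = {state: c / self.window for state, c in counts.items()}
        for state in sorted(HARMFUL_STATES):
            if shares.get(state, 0.0) > self.warn_fraction:
                return "warning:" + state, shares
        state, count = counts.most_common(1)[0]
        if count / self.window > 0.5:
            return state, shares
        return "undecided", shares
```

With `window=256`, as used in the experiments reported later, a chatter warning under this sketch needs at least 13 chatter predictions among the last 256 cycles, so an isolated misprediction does not raise an alarm.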

Results and discussions

Modeling result comparison between different features and different modeling methods

Two different features and two common modeling methods are included in this comparison. The wavelet method is one of the most commonly used methods for vibration signal processing, and the wavelet features proposed by Yao et al. (2010), including the energy ratio and standard deviation, are included. The Mel-frequency cepstrum coefficient (MFCC) is the most important feature used in speech recognition (Hinton et al. 2012); it is a representation of the short-term power spectrum of a sound, based on a linear cosine transform of a log power spectrum on a nonlinear Mel scale of frequency. The feature “None” denotes the original signal frame. The two modeling methods are support vector machines (SVM) and the back-propagation neural network (BPNN). The modeling results of the different feature and modeling method combinations for classifying the three machining states are listed in Table 2.

The results of the BPNN vary considerably with the features used. With MFCC, serious overfitting occurs, with a small training error and a much larger test error, whereas the relationship between the two errors is reversed when the original signal frame is used. For SVM, hyperparameter selection leads to unsatisfactory performance because both the data dimension and the number of training samples are too large. With some dimension reduction through the MFCC or wavelet features, the results improve considerably. The DBN method, however, performs consistently well with all three feature sets, and the close training and test accuracies indicate that the method does not encounter any overfitting problem.

A repeatability comparison is also made between the DBN and the BPNN using the original signal frame. Both methods are run repeatedly, and the training error is plotted against the test error in Fig. 9. Compared with the DBN, the results of the BPNN are much more scattered, which indicates poor repeatability. The modeling accuracy is also much lower, with a maximum training error of 4.14% and a corresponding test error of 5.96%. The input space is so complicated that random initialization of the network weights cannot reliably reach the global minimum. The statistical distributions of the training error and the test error also reveal the poor repeatability. The low accuracy and precision make the BPNN modeling process unstable and unsatisfactory. In contrast, the DBN performs consistently well in both accuracy and repeatability. Although the DBN needs more time to complete model training, it is much easier to use, and its accuracy is more stable, in addition to its good generalization performance.

Feature comparison between different feature extracting methods

To find the reason for the high performance of the DBN method, the DBN features are compared with principal component analysis (PCA) features and a manually defined wavelet feature. Figure 10 illustrates the three feature spaces obtained from the same original signal frame set.

Principal component analysis (PCA) is a statistical procedure that uses an orthogonal transformation to convert a set of observations of possibly correlated variables into a set of values of linearly uncorrelated variables called principal components (Jolliffe 2002). It is a common way to visualize high-dimensional data distributions. Figure 10a shows the feature space constructed from the first two principal components. The chatter state is clearly separated from the other two states by an obvious loop-shaped margin, so a classification method with a quadratic data transformation can model the chatter state easily. However, the idling moving state and the stable cutting state remain tangled together in the center and cannot be separated intuitively.

With some human experience, Figure 10b is obtained using the wavelet method proposed by Yao et al. (2010). The chatter state has been transformed into a linearly separable region, but the other two states are still mixed together in the tail and cannot be separated. This is a consequence of the design objective: when designing the features, the authors deliberately aimed to distinguish the chatter state from the stable cutting state and did not consider the idling moving state. This is a primary limitation of the manual approach: it is not flexible when the application conditions or the target change.

Figure 10c shows the feature space constructed by the DBN method, in which the two outputs of the L5 layer are visualized in a 2D space. The three cutting states are clearly separated into three corners with a large margin. The feature space is constructed from the input signals, and the training process is automatic and self-adaptive. With the features constructed by the DBN, any kind of classification method can be expected to achieve outstanding performance. From the viewpoint of statistical learning theory, the large class margin naturally leads to good generalization performance. Although these two features have no clear physical meaning so far, this is already sufficient for the monitoring task.

Results of cutting state identification

A new signal segment that was not included in the training process is used to verify the performance of the voting strategy in identifying the chatter state and protecting the workpiece from chatter damage, which is the critical objective of a cutting state monitoring method (Siddhpura and Paurobally 2012). The number of history records used in the voting is set to 256. The votes for the different states are illustrated in Fig. 11. A comparison is made with the manual wavelet features proposed by Yao et al. (2010), namely the standard deviation (T1) and energy ratio (T2) of the sub-signal in which the chatter frequency is located. The wavelet feature curves are also displayed in the figure.

The signal segment is illustrated in Fig. 12b. A transition from stable cutting to chatter can be found between 1 and 1.5 s, and the transition signal segment is displayed in Fig. 11. A clear state transition can be seen in the votes for the different cutting states from around 1.2 to 1.4 s. Before 1.2 s, the stable cutting vote holds the majority and the vote for chatter is very low. At around 1.22 s, the vote for chatter begins to increase and the vote for stable cutting keeps decreasing. This behavior is well suited to state identification. Using the 0.05–0.95 rule, a chatter warning is given at 1.244 s. An increasing trend can also be found in the wavelet features T1 and T2, but the variation is inconspicuous and the fluctuation disturbs the state identification. The detection moment using the wavelet features is most likely around 1.327 s, when T1 and T2 begin to grow, which is considerably later than with the proposed method.

To examine the ability to protect the workpiece, the workpiece surface associated with the aforesaid signal is presented in Fig. 12a. The cutter moves in a straight line from left to right, and increasingly obvious chatter marks appear at around 1.3 s, which means that chatter is developing. Eventually it turns into serious chatter and leaves clear, regular chatter marks on the workpiece surface during 1.5–2.5 s. After that, the cutter exits the workpiece and leaves a bright band.

The predicted label is relatively stable while the cutting state is stable. It then enters the transition zone from stable cutting to chatter, where the predicted state becomes somewhat disordered because of the chaotic signal behavior during chatter development. After counting the votes, we get the final vote curve displayed in Fig. 12d. The percentage of votes for each machining state is obtained by dividing the votes by the number of voters. Based on this vote distribution, the current cutting state can be identified according to the 0.05–0.95 voting rule presented in Sect. 3.5. Because chatter is a harmful state, a warning is issued when its vote portion grows larger than 0.05. The identification result is displayed in Fig. 12e. The system gives the chatter warning at 1.244 s. The workpiece surface shows that this is the very beginning of chatter development, when the workpiece has not yet been damaged by chatter marks, which is the most important objective of chatter detection.
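The vote counting and the chatter warning described above can be sketched as follows. This is a minimal illustration under stated assumptions: the class name `VoteMonitor`, the state labels and the synthetic label stream are hypothetical, and only the chatter warning threshold of 0.05 stated above is implemented, not the complete 0.05–0.95 rule of Sect. 3.5. Until the window is full, the votes are normalized by the number of voters collected so far, which is also an assumption.

```python
from collections import Counter, deque

STATES = ("idle", "stable", "chatter")  # illustrative label names


class VoteMonitor:
    """Sliding-window vote over the most recent per-frame predictions."""

    def __init__(self, n_voters=256, warn_threshold=0.05):
        # A bounded deque drops the oldest vote automatically when full.
        self.history = deque(maxlen=n_voters)
        self.warn_threshold = warn_threshold

    def update(self, predicted_state):
        """Add one prediction; return (vote shares, chatter warning flag)."""
        self.history.append(predicted_state)
        counts = Counter(self.history)
        n = len(self.history)  # number of voters currently in the window
        shares = {s: counts[s] / n for s in STATES}
        return shares, shares["chatter"] > self.warn_threshold


# Synthetic label stream (not measured data): 300 stable frames, then chatter.
stream = ["stable"] * 300 + ["chatter"] * 100

monitor = VoteMonitor()
first_warning = None
for i, label in enumerate(stream):
    shares, warn = monitor.update(label)
    if warn and first_warning is None:
        first_warning = i  # index of the frame that triggers the warning

print(first_warning)
```

With a full window of 256 stable votes, the warning in this sketch fires on the 13th consecutive chatter prediction, since 13/256 ≈ 0.051 is the first vote share above 0.05. Isolated misclassifications therefore do not trigger a warning, while a sustained change of state does so after a short delay.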

Conclusions

Feature construction is the most important procedure in signal-based machining state monitoring. By introducing feature learning, this paper presents an effective machining state monitoring method which automatically extracts features from the vibration signals. Instead of the traditional, laborious manual feature extraction, a rigorous mathematical method is used to reveal the inherent relationship between the input vibration signals and the output machining states. Experiments show the method to be effective in protecting the workpiece from chatter damage.

The following conclusions can be drawn:

- (a) The automatically extracted signal features reveal the relationship between the vibration signal and the machining states;

- (b) Using the automatically extracted features, even a linear classifier can achieve nearly 100% modeling accuracy and good generalization performance on a large, well-prepared signal set;

- (c) The voting strategy is effective in producing stable cutting state identification and can protect the workpiece from chatter damage;

- (d) The computational cost of the proposed method is constant for each identification, and the running time is usually less than 1 ms on current CPUs, which is very important for guaranteeing the time resolution of the monitoring system and for identifying chatter at its very beginning.

References

Abellan-Nebot, J. V., & Subirón, F. R. (2010). A review of machining monitoring systems based on artificial intelligence process models. International Journal of Advanced Manufacturing Technology, 47(1–4), 237–257.

Altintas, Y. (2012). Manufacturing automation: metal cutting mechanics, machine tool vibrations, and CNC design. Cambridge: Cambridge University Press.

Aydin, I., Karakose, M., & Akin, E. (2015). Combined intelligent methods based on wireless sensor networks for condition monitoring and fault diagnosis. Journal of Intelligent Manufacturing, 26(4), 717–729.

Bengio, Y. (2009). Learning deep architectures for AI. Foundations and Trends in Machine Learning, 2(1), 1–127.

Bengio, Y. (2013). Deep learning of representations: Looking forward. In A. H. Dediu, C. Martín-Vide, R. Mitkov, & B. Truthe (Eds.), Statistical language and speech processing. First international conference, SLSP 2013, Tarragona, Spain, July 29–31, 2013. Proceedings (pp. 1–37). Springer Berlin Heidelberg.

Bengio, Y., Courville, A., & Vincent, P. (2013). Representation learning: A review and new perspectives. IEEE Transactions on Pattern Analysis and Machine Intelligence, 35(8), 1798–1828.

Cho, S. Y., Binsaeid, S., & Asfour, S. (2010). Design of multisensor fusion-based tool condition monitoring system in end milling. International Journal of Advanced Manufacturing Technology, 46(5–8), 681–694.

Fu, Y., Zhang, Y., Zhou, H., Li, D., Liu, H., Qiao, H., et al. (2016). Timely online chatter detection in end milling process. Mechanical Systems and Signal Processing, 75, 668–688.

Goodfellow, I. J., Erhan, D., Carrier, P. L., Courville, A., Mirza, M., Hamner, B., et al. (2014). Challenges in representation learning: A report on three machine learning contests. Neural Networks.

Hinton, G. (2010). A practical guide to training restricted Boltzmann machines. Momentum, 9(1), 926.

Hinton, G., Deng, L., Yu, D., Dahl, G. E., Jaitly, N., Mohamed, A. R., et al. (2012). Deep neural networks for acoustic modeling in speech recognition: The shared views of four research groups. IEEE Signal Processing Magazine, 29(6), 82–97.

Hinton, G. E. (2002). Training products of experts by minimizing contrastive divergence. Neural Computation, 14(8), 1771–1800.

Hinton, G. E., & Salakhutdinov, R. R. (2006). Reducing the dimensionality of data with neural networks. Science, 313(5786), 504–507.

Hubel, D. H., & Wiesel, T. N. (1962). Receptive fields, binocular interaction and functional architecture in the cat’s visual cortex. The Journal of Physiology, 160(1), 106.

Jolliffe, I. (2002). Principal component analysis. Wiley Online Library.

Kuljanic, E., Sortino, M., & Totis, G. (2008). Multisensor approaches for chatter detection in milling. Journal of Sound and Vibration, 312(4–5), 672–693.

Kuljanic, E., Totis, G., & Sortino, M. (2009). Development of an intelligent multisensor chatter detection system in milling. Mechanical Systems and Signal Processing, 23(5), 1704–1718.

Lamraoui, M., Thomas, M., El Badaoui, M., & Girardin, E. (2014). Indicators for monitoring chatter in milling based on instantaneous angular speeds. Mechanical Systems and Signal Processing, 44(1–2), 72–85.

LeCun, Y., Bengio, Y., & Hinton, G. (2015). Deep learning. Nature, 521(7553), 436–444.

Litak, G., Sen, A. K., & Syta, A. (2009). Intermittent and chaotic vibrations in a regenerative cutting process. Chaos, Solitons & Fractals, 41(4), 2115–2122.

Liu, H. Q., Chen, Q. H., Li, B., Mao, X. Y., Mao, K. M., & Peng, F. Y. (2011). On-line chatter detection using servo motor current signal in turning. Science China Technological Sciences, 54(12), 3119–3129.

Mohamed, A. R., Dahl, G. E., & Hinton, G. (2012). Acoustic modeling using deep belief networks. IEEE Transactions on Audio, Speech, and Language Processing, 20(1), 14–22.

Quintana, G., Ciurana, J., Altintas, Y., & Weck, M. (2011). Chatter in machining processes: A review. International Journal of Machine Tools & Manufacture, 51(5), 363–376.

Schmitz, T. L. (2003). Chatter recognition by a statistical evaluation of the synchronously sampled audio signal. Journal of Sound and Vibration, 262(3), 721–730.

Sick, B. (2002). On-line and indirect tool wear monitoring in turning with artificial neural networks: A review of more than a decade of research. Mechanical Systems and Signal Processing, 16(4), 487–546.

Siddhpura, A., & Paurobally, R. (2013). A review of flank wear prediction methods for tool condition monitoring in a turning process. International Journal of Advanced Manufacturing Technology, 65(1–4), 371–393.

Siddhpura, M., & Paurobally, R. (2012). A review of chatter vibration research in turning. International Journal of Machine Tools & Manufacture, 61, 27–47.

Tangjitsitcharoen, S. (2011). Advance in detection system to improve the stability and capability of CNC turning process. Journal of Intelligent Manufacturing, 22(6), 843–852.

Tangjitsitcharoen, S., & Pongsathornwiwat, N. (2013). Development of chatter detection in milling processes. International Journal of Advanced Manufacturing Technology, 65(5–8), 919–927.

Tangjitsitcharoen, S., Saksri, T., & Ratanakuakangwan, S. (2015). Advance in chatter detection in ball end milling process by utilizing wavelet transform. Journal of Intelligent Manufacturing, 26(3), 485–499.

Teti, R., Jemielniak, K., O’Donnell, G., & Dornfeld, D. (2010). Advanced monitoring of machining operations. CIRP Annals-Manufacturing Technology, 59(2), 717–739.

Yao, Z. H., Mei, D. Q., & Chen, Z. C. (2010). On-line chatter detection and identification based on wavelet and support vector machine. Journal of Materials Processing Technology, 210(5), 713–719.

Zhang, Z. Y., Wang, Y., & Wang, K. S. (2013). Fault diagnosis and prognosis using wavelet packet decomposition, Fourier transform and artificial neural network. Journal of Intelligent Manufacturing, 24(6), 1213–1227.

Acknowledgements

The authors would like to acknowledge financial support from the National Program on Key Basic Research Project (Grant No. 2013CB035805), National Natural Science Foundation Council of China (Grant Nos. 51675199, 51635006), Fundamental Research Funds for the Central Universities (Grant No. 2016YXZD059).

Cite this article

Fu, Y., Zhang, Y., Gao, H. et al. Automatic feature constructing from vibration signals for machining state monitoring. J Intell Manuf 30, 995–1008 (2019). https://doi.org/10.1007/s10845-017-1302-x

DOI: https://doi.org/10.1007/s10845-017-1302-x